10

Spatial Audio

Emerging Technologies in Immersive Sound

It is an exciting time for immersive technologies! And thankfully, audio has finally become part of the revolution. Breakthroughs in the recording, mixing, and playback of spatial audio have given creators new tools and workflows, allowing immersive sound to take its rightful place alongside immersive visuals. These technological changes bring opportunities in nonfiction storytelling across a range of applications: news stories, documentaries (both short and long form), museum exhibits, live immersive experiences, and training applications. Immersive nonfiction storytelling has taken advantage of its ability to place viewers in a situation, with all its accompanying emotional responses, and to bring us films that educate and inspire.

Before we continue, let’s define a few terms (see our Glossary for more details). Spatial audio can refer to a wide range of sound, from stereo to the various types of multichannel surround sound to binaural and ambisonic sound. We will focus on binaural and ambisonic sound, as they can deliver the type of soundscape that brings the viewer into a fully immersive experience. The unique qualities of immersive experiences also affect sound. In platforms such as 360 video and experiences viewed in web browsers, the viewer can look in all directions but can’t really move through the scene. The audio and video are therefore limited to what we call three degrees of freedom (3DoF): rotation around the X, Y, and Z axes. The newest iteration of immersive experience, six degrees of freedom (6DoF VR), adds movement along those same axes: forward and backward, left and right, and up and down (Figure 10.1). As a result, a 6DoF experience offers virtually unlimited viewing and listening perspectives, but that makes audio recording, rendering, and playback particularly challenging. In terms of current mixing platforms, my studio uses Dolby Atmos®, so I will spend the most time describing work on this platform. But many of its qualities, along with the production assets and workflow it requires, apply to other immersive mixing tools used to create sound imaging (how sound is placed and played back spatially). The optimal listening position for sound imaging is what sound engineers commonly call the “sweet spot.” (You can find more details on Sound Imaging and Degrees of Freedom in the Glossary.) Our goal with this chapter is to provide you with both the big picture of where immersive is headed and some practical storytelling tips on the tools and strategies you will need to successfully build the audio story for three-dimensional experiences.

FIGURE 10.1 Three degrees of freedom versus six degrees of freedom.

Though some of the science used in the creation of immersive audio is not new, the blending of earlier groundwork in ambisonic and binaural audio with new recording, listening, and mixing tools makes immersive sound cutting edge once again. One caution: while there are multiple methods of telling your story, the story should drive the method you choose. So, as we begin this chapter, we want to emphasize what we see as two primary challenges for content creators in this space. The first is shiny object syndrome. We both attend industry conferences where the excitement about a particular new piece of tech seems to become more important than the content it was designed to help create. Our goal is to provide you with a broad overview of the state of immersive audio today, rather than point you toward specific tools. The second challenge is workflow. Because new tools arrive so rapidly and seem to change almost overnight, producers are finding that their workflows may change mid-project. So again, we will give you a basic overview of workflows without locking ourselves into recommending one particular methodology. As the expression goes, we are all “changing the tires while driving.” Let’s take a look at what is emerging in spatial audio and how it affects you as a filmmaker.

What Is Old Is New Again: Ambisonic and Binaural Audio

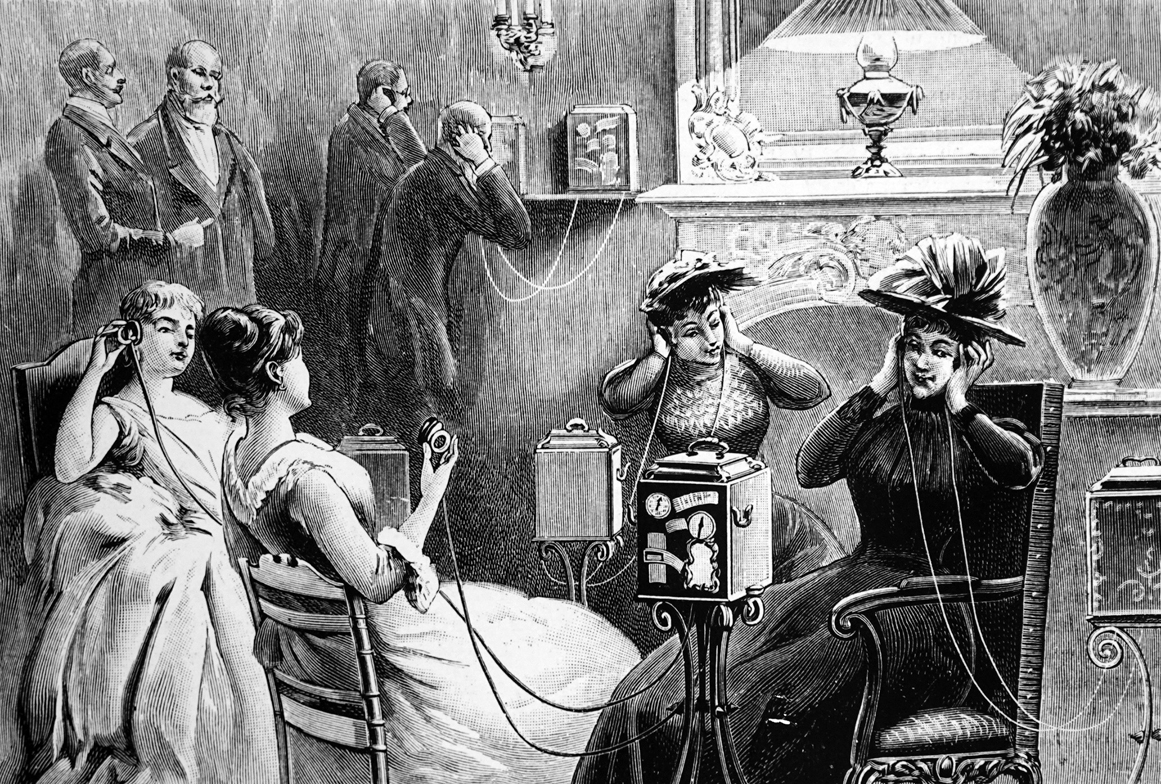

There are two primary types of audio we deal with when creating immersive experiences: binaural and ambisonic. Binaural audio, also known as 3D audio, has a long history. In many ways, binaural audio leans on stereophonic inventions of the late 19th century, most notably one by Clément Ader, who built upon Alexander Graham Bell’s telephone to create an 80-channel spatial telephone system that was exhibited in Paris in 1881. The resulting device, known as the théâtrophone, was widely used to listen to musical performances throughout Europe. Amazingly, this early binaural device also allowed people who were not in attendance to listen remotely (Figure 10.2). Perhaps this is the first case of streamed content? When amplification and loudspeakers began to be phased in during the 1930s, the thrill of these devices waned.

FIGURE 10.2 Clément Ader’s 19th-century invention, the théâtrophone.

Throughout the 20th century there were attempts to revive binaural content. But because binaural content requires specialized microphones for production and headphones for delivery, interest remained low. In some ways, the visual and audio delivery system remains the biggest obstacle to widespread use of immersive content. We are now constantly on our phones, with earbuds providing instant access to audio content; having to pull out special headgear has led to resistance to adoption among the public at large. What will radically transform immersive audio is the introduction of artificial intelligence (AI) into the equation, as XR audio expert Adam Levinson explains: “Machine learning and signal processing will transform audio for XR—devices will understand through the cloud your spatial positioning, where you go, what you do, and what you should be hearing.”

FIGURE 10.3 Neumann KU 100 binaural “head” microphone (courtesy: Sennheiser).

While we await that transformation, let’s delve into how binaural audio is currently recorded. A binaural recording setup uses two microphones spaced to replicate the distance between our ears, capturing how we perceive sound as it travels from the source to each ear (Figure 10.3). In a dummy-head microphone such as the Neumann KU 100, the capsules sit inside model ears, so the recording also captures how the world around us shapes the sound, including room shape, reflections, and even our own heads and bodies. All of these elements can filter, shape, and color the sound. Because it is a two-channel recording, binaural audio requires headphone playback for full effect. Live music, podcasts, and games can all become immersive experiences with binaural sound.
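One of the cues a binaural recording preserves is timing. A sound off to one side reaches the near ear slightly before the far ear. As an illustration only (the chapter's workflow does not require it), the Python sketch below estimates that interaural time difference using Woodworth's classic spherical-head approximation. The head radius and speed of sound are assumed typical values. Real heads, ears, and torsos also add level and spectral cues that this formula ignores.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at roughly 20 degrees C
HEAD_RADIUS_M = 0.0875      # assumed average adult head radius (about 8.75 cm)


def woodworth_itd(azimuth_deg, head_radius=HEAD_RADIUS_M, c=SPEED_OF_SOUND_M_S):
    """Return the interaural time difference (seconds) for a distant source.

    Woodworth's spherical-head approximation: ITD = (a / c) * (theta + sin(theta)),
    where theta is the azimuth in radians, from 0 (straight ahead) to
    pi/2 (directly beside one ear).
    """
    theta = math.radians(azimuth_deg)
    return (head_radius / c) * (theta + math.sin(theta))


for az in (0, 30, 60, 90):
    print(f"{az:>2} degrees -> {woodworth_itd(az) * 1000:.3f} ms")
```

A source directly to one side arrives at the far ear roughly two-thirds of a millisecond late. A binaural rig keeps this cue intact because its two microphones sit where the ears would be.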

Ambisonic audio was developed in the 1970s by Michael Gerzon and the British National Research Development Corporation. It is now getting much wider use as more tools are developed for capturing, implementing, and playing back spatial audio. Ambisonic audio differs from other surround formats because it is not tied to specific channels or speakers. Instead, it represents the full 360-degree spherical sound field using four components: W, X, Y, and Z. To deliver an immersive experience, these components carry the following information:

W: Omni information

X: Front to back directional information

Y: Left to right directional information

Z: Height—Up and down directional information

To record ambisonic audio, a sound engineer will use a tetrahedral microphone. Tetrahedral microphones employ four cardioid microphone capsules arranged in a tetrahedral array to capture the full sphere around the microphone. Some intrepid content creators have not waited for affordable tetrahedral microphones and have built their own setups. One such sound engineer is NPR’s Josh Rogosin, who put together four omnidirectional mics in order to begin exploring 360 video. But manufacturers are finally catching up. New options include Sennheiser’s Ambeo VR microphone, the TetraMic from Core Sound, and the ZYLIA ZM-1 3rd-order ambisonics microphone. The ZM-1 can be used alone for 360 audio, or in a group of ZM-1s with the company’s VR/AR Dev Kit for 6DoF sound. If you own a Zoom H2n, its firmware can now be updated to handle ambisonic audio, which means you can use the H2n as a spatial audio microphone. Zoom also just released the H3-VR, an affordable ambisonic recorder/mic combo. In reality, you may end up combining a group of microphones, some with ambisonic capabilities and some without. That way you can achieve the combination of location-specific ambience and audio sources you need to create an immersive sound experience (Figures 10.4 and 10.5).

FIGURE 10.4 Recording ambient sound for an immersive project using the Sennheiser Tetrahedral mic, a Zoom H4n, and a Rode shotgun mic.

FIGURE 10.5 The Sennheiser Ambeo 3D VR microphone (courtesy: Sennheiser).

Ambisonic audio is extremely flexible and can be decoded to many speaker and channel arrays. For example, our audio studio recently used Spat by Flux, a software tool that simulates a variety of speaker layouts and rooms. Spat also allows a traditional Digital Audio Workstation (DAW) to spatialize audio using a Dante sound card as an interface. Starting inside a traditional Pro Tools 7.1 session, we were able to use Spat to place the audio exactly where it needed to go for a recent museum project projected onto a 50-foot dome. This particular Spat setup used 16 speakers in a 360-degree sound field.
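Here is a minimal Python sketch that makes decoding to a speaker array concrete. It assumes horizontal-only first-order material in AmbiX channel order (W, Y, Z, X), azimuths measured from the front with positive values to the left, and a ring of 16 equally spaced speakers similar to the dome setup described above. It uses a simple "virtual microphone" decoder that aims a cardioid pickup at each speaker. This only illustrates the principle. It is not how Spat or any commercial decoder works internally, since production decoders use optimized weighting and also handle height and irregular layouts.

```python
import math


def encode_foa_horizontal(sample, azimuth_deg):
    """Encode a mono sample at a horizontal azimuth into first-order AmbiX (W, Y, Z, X)."""
    az = math.radians(azimuth_deg)  # 0 = front, 90 = left
    return (sample, sample * math.sin(az), 0.0, sample * math.cos(az))


def decode_virtual_cardioids(bformat, speaker_azimuths_deg):
    """Feed each speaker from a virtual cardioid microphone aimed at that speaker."""
    w, y, _z, x = bformat
    feeds = []
    for spk_az in speaker_azimuths_deg:
        phi = math.radians(spk_az)
        feeds.append(0.5 * (w + x * math.cos(phi) + y * math.sin(phi)))
    return feeds


ring_16 = [i * 360.0 / 16 for i in range(16)]  # 16 speakers, one every 22.5 degrees
feeds = decode_virtual_cardioids(encode_foa_horizontal(1.0, 45.0), ring_16)
loudest = max(range(len(feeds)), key=feeds.__getitem__)
print(ring_16[loudest], round(feeds[loudest], 3))
```

The speaker aimed at the source (45 degrees) receives full gain and its neighbors slightly less, while the speaker directly opposite receives none. Nothing in the four B-format channels names a speaker, so the same signal could be decoded to 8, 16, or 32 speakers.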

When we mix 7.1 surround sound, we may pan audio so that it jumps from speaker to speaker to create a sound image. By contrast, ambisonic audio moves seamlessly within the sound field and is not tethered to a particular speaker. In this respect it behaves much like object-based audio, although ambisonics is more precisely described as scene-based audio, because it represents the whole sound field rather than individual sounds. Sonically, ambisonic audio is generally “clean,” meaning it is largely free of phasing issues when properly decoded. These qualities make ambisonic audio a good pairing for Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR) experiences. The sound fills the sound field, and any panning or rotation within the recording is reproduced accurately, because the localization is so precise.
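Because an ambisonic signal describes the whole sound field, rotating it is a small matrix operation rather than a re-pan of every sound. This is what lets a VR player keep sources fixed in the world while the viewer turns their head. The Python sketch below assumes first-order AmbiX material (W, Y, Z, X) and rotates it about the vertical axis only (yaw). A full head tracker would also handle pitch and roll. The function names are illustrative.

```python
import math


def encode_foa(sample, azimuth_deg, elevation_deg=0.0):
    """First-order AmbiX encoding (ACN order W, Y, Z, X; SN3D) of a mono sample."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (sample,
            sample * math.sin(az) * math.cos(el),
            sample * math.sin(el),
            sample * math.cos(az) * math.cos(el))


def rotate_yaw(bformat, yaw_deg):
    """Rotate the whole sound field about the vertical (Z) axis by yaw_deg.

    Positive yaw moves every source counterclockwise (toward the left).
    W and Z do not change under a yaw rotation.
    """
    w, y, z, x = bformat
    a = math.radians(yaw_deg)
    x_rot = x * math.cos(a) - y * math.sin(a)
    y_rot = x * math.sin(a) + y * math.cos(a)
    return (w, y_rot, z, x_rot)


# Head tracking: when the listener turns 30 degrees to the left, the renderer
# rotates the scene 30 degrees to the right so sources stay put in the world.
source = encode_foa(1.0, azimuth_deg=30.0)  # a sound 30 degrees to the left
compensated = rotate_yaw(source, -30.0)     # now straight ahead of the listener
print([round(v, 3) for v in compensated])
```

All four channels are rotated together, so every sound in the recording moves as one. This is why rotation is reproduced so faithfully.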

FIGURE 10.6 The ZYLIA portable recording studio can record an entire 360-degree music scene with just one ambisonic microphone (courtesy: ZYLIA).

Ambisonic recording and playback are classified by order, which determines how much spatial detail they capture. First Order Ambisonics (FOA) uses four channels to recreate the 360-degree sphere and has a very small sweet spot. Higher Order Ambisonics (HOA) recreates an even more detailed and localized capture, allowing for better sound quality and imaging. Second Order Ambisonics uses nine channels, Third Order Ambisonics uses 16 (Figure 10.6), and Sixth Order Ambisonics uses 49. Channels in this case refer to the directional (polar) patterns that can be created. That’s why HOA offers more detail and more exact placement: there is more information to manipulate.
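The channel counts above follow a single rule. A full-sphere ambisonic signal of order N carries (N + 1)² components (spherical harmonics), so each step up in order adds another layer of directional detail. A short Python check of that arithmetic:

```python
def ambisonic_channels(order):
    """Number of channels (spherical-harmonic components) in full-sphere ambisonics."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


for order in (1, 2, 3, 6):
    print(order, ambisonic_channels(order))
# 1 4
# 2 9
# 3 16
# 6 49
```

Because the count grows with the square of the order, file sizes and processing loads rise quickly as you move to higher orders.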

Breaking Creative Barriers with Ambisonic Sound

“We’ve been in the era of ‘pre-Pong’ [the 1972 video game] when it comes to spatial audio, and there is so much more that we can do. The early experiments were done with tracking—left, right, and quadrants—and having sound track with you as you turn your head. Maybe allowing for a sense of something flying overhead. But that’s just panning. It’s not really spatial. And a few cute enhancements can assist in our sensory perception now, like adding sub-pac (vibrations). But what, really, is spatial sound? What do we think of? It is to feel proximity to the source of sound. To really give that sound design the power it ought to have is what we’re after. Do our footsteps echo behind us? How perceptually accurate can we be when we replicate that? Ambisonics are like a pointillist painting: we can put sound 45 inches from your right eye if we want to. And that’s pretty fun stuff.”

–Nancy Bennett, Chief Creative Officer, Two Bit Circus

There are a few downsides to ambisonic audio. The primary one is that with certain types of ambisonics, sound is so localized that the “sweet spot,” the optimal listening position or space, is very small. Higher Order Ambisonics have a larger sweet spot, making imaging easier to accomplish. Ambisonics also demands more care on set to place the tetrahedral microphone in the right spot to capture the sound. It is important to think about the point of view (POV) of the end user and what the focus of that POV is. If you are also using non-tetrahedral microphones, position them as you normally would, if possible. Usually, the biggest challenge in gathering sound for VR or 360 video is that there is no place to hide the microphone. Try to place the microphones on the stitch line (ideally in the area directly under the camera), so they are easier to mask later in editorial. There are other drawbacks as well. When mixed and rendered, the audio may seem out of phase or out of focus, gear can be expensive, and the files tend to be large. The good news is that more platforms have adopted ambisonics, providing better and more affordable tools for content creators. One example is Google’s spatial audio software, Resonance Audio, which works across mobile and desktop platforms.

Let’s turn our attention to what happens once spatial audio is captured. How does it become a recording you can work with in your mix? Audio captured by a tetrahedral microphone is raw ambisonic audio, known as “A” format. “A” format audio is not usable in NLEs or DAWs in the traditional sense. It must first be converted (decoded) into “B” format audio before it can be used and heard. There are two types of “B” format, depending on the decoder used. Standard decoder plug-ins such as Harpex are available, but usually the microphone comes with its own A-to-B converter.
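At its core, A-to-B conversion is a sum-and-difference matrix across the four capsules. In B-format, X carries front-to-back information, Y left-to-right, and Z up-and-down. The sketch below assumes the classic tetrahedral capsule orientation (left-front-up, right-front-down, left-back-down, right-back-up) and shows only that idealized matrix, with outputs in the traditional W, X, Y, Z order and no normalization. Real converters, including those supplied with microphones, also apply capsule calibration, frequency-dependent filtering, and output scaling. Treat this as an explanation, not a replacement for the manufacturer's tool.

```python
def a_to_b_format(lfu, rfd, lbd, rbu):
    """Convert one sample of tetrahedral A-format to unscaled first-order B-format.

    Capsules: lfu = left-front-up, rfd = right-front-down,
              lbd = left-back-down, rbu = right-back-up.
    Returns (W, X, Y, Z).
    """
    w = lfu + rfd + lbd + rbu  # all capsules together: omnidirectional pressure
    x = lfu + rfd - lbd - rbu  # front capsules minus back capsules
    y = lfu - rfd + lbd - rbu  # left capsules minus right capsules
    z = lfu - rfd - lbd + rbu  # upper capsules minus lower capsules
    return w, x, y, z


print(a_to_b_format(1.0, 1.0, 1.0, 1.0))  # equal on all capsules: W only
print(a_to_b_format(1.0, 1.0, 0.0, 0.0))  # front capsules only: W plus X
```

A sound that reaches all four capsules equally produces only W. A sound that reaches only the two front capsules appears in W and X, which is exactly the front-back information X is meant to carry.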

When decoding, it is very important to keep the channels in the proper order so that the recorded sound is replicated correctly. The order of the W, X, Y, and Z channels differs between the two types of “B” format audio. Furse-Malham, or FuMa, is an older protocol supported by a variety of plug-ins and other ambisonic processing tools. AmbiX is a more modern standard that has been widely adopted by YouTube and Facebook. Because FuMa and AmbiX follow different orders, failing to follow the correct order in post is what leads to the most problems in mixing ambisonic audio.

FuMa follows this order:

W: Omni information (or the sphere) at constant volume and phase

X: Front to back directional information

Y: Left to right directional information

Z: Height—Up and down directional information

AmbiX follows this order:

W: Omni information (or the sphere) at constant volume and phase

Y: Left to right directional information

Z: Height—Up and down directional information

X: Front to back directional information
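The two conventions differ in more than channel order. FuMa also stores W about 3 dB lower (multiplied by 1/sqrt(2)), while AmbiX uses SN3D normalization, which leaves W at full level. For first-order material, that is the only gain change, so the conversion can be sketched in a few lines of Python. This sketch assumes first-order input only. Second- and third-order FuMa material needs additional per-channel factors and is best handled by a dedicated converter plug-in.

```python
import math


def fuma_to_ambix_first_order(w, x, y, z):
    """Convert one first-order FuMa sample (W, X, Y, Z) to AmbiX (W, Y, Z, X).

    Reorders the channels and restores the 3 dB that FuMa removes from W.
    At first order, X, Y, and Z need no rescaling.
    """
    return (w * math.sqrt(2.0), y, z, x)


# A source straight ahead, as a FuMa encoder would write it: W = 1/sqrt(2), X = 1.
print(fuma_to_ambix_first_order(1 / math.sqrt(2), 1.0, 0.0, 0.0))
```

Skipping either step causes trouble. Leaving the order unchanged swaps front-back with left-right and height information, while skipping the gain makes the omni component sit about 3 dB too low relative to the directional channels.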

Audio Differences Between 2D, Surround, and Immersive Platforms

Let’s take a moment to define how the sound experience differs from 2D to immersive. Mono sound is single-channel. Traditional two-channel stereo places sound information in one channel or the other, or spreads it between them. 360 audio is spatial but passive, meaning that the sound moves around you, but you do not move around inside the sound environment. 360 video soundtracks tend to be either stereo or binaural. Traditional 5.1 surround sound requires channels that correspond to speakers: Left, Right, Center, Low Frequency Effects (LFE), Left Surround, and Right Surround. 7.1 is similar but splits the surrounds into side and rear surround speakers. With VR sound, you have six degrees of freedom and can therefore move around “inside” the space. That means sounds change as you move. Experiencing this audio content requires a special headset. VR audio combines traditional stereo, mono, binaural, and higher-order ambisonics.
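The difference between 3DoF and 6DoF listening can be shown with a small geometric sketch. The Python below makes two simplifying assumptions: it works in 2D only, and it uses a basic inverse-distance level law. Real VR audio engines also model air absorption, reflections, and occlusion. In the sketch, turning the head changes only a source's direction, while walking through the space changes both its direction and its level.

```python
import math


def heard_from(listener_xy, listener_yaw_deg, source_xy, ref_distance_m=1.0):
    """Return (azimuth_deg, gain) of a source relative to the listener.

    Coordinates are in meters, +x forward and +y to the left of the starting pose.
    Azimuth is counterclockwise from the listener's nose (positive = left), wrapped
    to [-180, 180). Gain uses a simple inverse-distance law, capped at 1.0.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    world_az = math.degrees(math.atan2(dy, dx))
    azimuth = (world_az - listener_yaw_deg + 180.0) % 360.0 - 180.0
    distance = math.hypot(dx, dy)
    gain = min(1.0, ref_distance_m / max(distance, 1e-9))
    return azimuth, gain


source = (4.0, 0.0)  # a sound 4 m straight ahead of the starting position
print(heard_from((0.0, 0.0), 0.0, source))   # starting pose
print(heard_from((0.0, 0.0), 90.0, source))  # head turned 90 degrees left (3DoF)
print(heard_from((2.0, 2.0), 0.0, source))   # walked forward and left (6DoF only)
```

Turning the head moves the source to the listener's right without changing its level. Walking closer changes both direction and level. A 3DoF experience can only ever produce the first kind of change.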

Audio Delivery Formats for Immersive Experiences

Immersive sound differs from traditional 2D audio because it employs the Z-axis or height. In an immersive audio experience, you can hear not just around you but also above you, and even below your head. Traditional surround formats, such as 5.1 and 7.1, employ speakers arranged around the sweet spot, but this does not include height. Immersive sound formats are currently either object-based or channel-based. Channel-based means audio is output through channels and is spread over the speaker array as panned; it is a more traditional approach. Object-based means that when we are building a mix, we can render it to a three-dimensional space made up of objects—as many as 128 in Dolby Atmos® and an unlimited number in DTS:X. When the film is played, these objects then adapt in real time to whatever speaker array exists in the viewing space. Most object-based audio mixes have surround sound beds (5.1, 7.1, etc.) as their foundation. Then additional elements are added using the object-based tools. Object-based audio is exciting because the format is more translatable than channel-based immersive sound systems, since the mix is adapted to the speaker array and room. When encoded, the mix carries the information of the mix suite and translates it to the end environment (Figure 10.7).
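Here is a minimal sketch of what it can mean for an object to "adapt to whatever speaker array exists." It stores an object as a direction and renders it onto two hypothetical horizontal layouts using 2D pairwise vector-base amplitude panning (VBAP), a well-known published technique. Commercial renderers such as Dolby's are proprietary and also handle height, object size, and room configuration, so this is not their algorithm. It only shows how one set of object metadata can produce different speaker feeds in different rooms.

```python
import math

# Hypothetical horizontal layouts: azimuth in degrees, 0 = front, positive = left.
# Angles loosely follow common ITU-style placements; the LFE channel is omitted
# because it carries no direction.
LAYOUT_5_1 = {"C": 0, "L": 30, "R": -30, "Ls": 110, "Rs": -110}
LAYOUT_7_1 = {"C": 0, "L": 30, "R": -30, "Lss": 90, "Rss": -90,
              "Lrs": 135, "Rrs": -135}


def _unit(az_deg):
    a = math.radians(az_deg)
    return math.cos(a), math.sin(a)


def render_object_2d(object_az_deg, layout):
    """Pan one object onto a speaker layout with 2D pairwise VBAP (constant power)."""
    sx, sy = _unit(object_az_deg)
    names = sorted(layout, key=lambda n: layout[n] % 360)
    for i, a in enumerate(names):
        b = names[(i + 1) % len(names)]  # neighboring speaker, wrapping around
        ax, ay = _unit(layout[a])
        bx, by = _unit(layout[b])
        det = ax * by - ay * bx
        if abs(det) < 1e-12:
            continue
        # Solve object_direction = ga * speaker_a + gb * speaker_b.
        ga = (sx * by - sy * bx) / det
        gb = (ax * sy - ay * sx) / det
        if ga >= -1e-9 and gb >= -1e-9:  # the object lies between a and b
            norm = math.hypot(ga, gb)
            gains = {name: 0.0 for name in layout}
            gains[a], gains[b] = ga / norm, gb / norm
            return gains
    raise ValueError("object direction is not covered by this layout")


# The same object metadata (azimuth 100 degrees) rendered to two different rooms.
for layout in (LAYOUT_5_1, LAYOUT_7_1):
    gains = render_object_2d(100.0, layout)
    print({k: round(v, 2) for k, v in gains.items() if v > 0})
```

In 5.1, the object lands mostly in the left surround with a little in the front left. In 7.1, the same metadata lands mostly in the left side surround with a touch of left rear surround. In both cases the summed power stays constant, so the object keeps the same loudness while its speaker assignment changes.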

Introduced in January 2012 with the Lucasfilm production Red Tails, Barco’s Auro-3D was the very first immersive sound technology. It is a channel-based format relying on speakers placed high on the walls along with a ceiling speaker. It is a valid format in commercial theatres around the world and has a larger presence in Asia and Europe than in the United States. Auro-3D has recently made inroads with its headphone technology for recreating immersive sound formats, and it is just now entering the home theatre market.

FIGURE 10.7 Object-based audio is rendered into three-dimensional space.

Dolby Atmos® was introduced shortly after Auro-3D, in June 2012, with the Pixar film Brave. It quickly took the lead in immersive sound (disclosure: I, Cheryl, mix Dolby Atmos® projects). Dolby Atmos® is an object-based immersive sound format that has strongholds in both commercial and home theatre settings. Content mixed in Dolby Atmos® is now streaming through platforms such as iTunes, Netflix, Hulu, and Amazon Prime, with more outlets announced regularly. Immersive audio will assume a stronger role as gaming and interactive content creators push for headphones that reproduce spatial audio. This move into reproduction for interactives is a huge step into non-linear entertainment and storytelling.

DTS:X is another object-based immersive sound format. It arrived in movie theaters in 2015 and reached consumers soon after via AV receivers and Blu-ray discs. A latecomer to the immersive sound game, it is slowly gaining ground and is supported by most home receivers, but fewer titles are encoded with it.

As the industry moves toward standardizing its formats so that equipment manufacturers can standardize their own gear, a new immersive format has emerged: MPEG-H from Fraunhofer. MPEG-H supports object-based audio, as well as channel-based and ambisonic (scene-based) content. It was first released in 2014 and was adopted by Korea as its broadcast standard in 2017. MPEG-H is starting to spread to Europe and was recently used for the broadcast of the 2018 French Open. At CES 2019, Sony announced that it will use MPEG-H as the codec for its 360 Reality Audio system for immersive music mixing and playback. The exciting part of MPEG-H is that it is an open system for both interactive and immersive sound. It is designed to go from broadcast systems and streaming platforms to home theatres, tablets, and even VR devices, and it can be played over speakers or headphones. The goal is to position MPEG-H as the future by keeping it open to developers and easier to license. It is also intended to let consumers use audio presets to control the mix, even to the point of adjusting dialogue levels. This is a big selling point, especially for networks and streaming companies who want the consumer to have the best experience. Of course, as a mixer, this scares me. We work to achieve a specific mix that pulls you into the story, and in many cases, you could argue we are creating the story with our mix. So, having the audience make changes to this mix is a bit frightening. On the flip side, it would prevent many of the playback-platform issues that we currently have little control over, letting consumers adjust listening levels to suit their systems and taste. I am sure this will be a very hot topic among content creators moving forward.

Dolby Atmos®

Dolby Atmos® is an object-based sound technology platform developed by Dolby Laboratories for the creation, delivery, and playback of immersive audio content. It is employed primarily in cinemas, streaming, home theatre, and gaming. I first experienced it in 2013 at the Sound on Film Conference in Los Angeles, and what struck me immediately as a sound designer was the opportunity to deliver story content through more than the X and Y axes. The opportunities for storytelling literally made me giddy. I could place, move, and process any sound anywhere in the sound field, including height, and it would translate from room to room and location to location, with metadata attached for proper playback. This would provide extremely localized playback, no matter the size or movement of the object. Another intriguing prospect was how it would change my workflow. For example, instead of creating a large sound by spreading it over multiple speaker channels, I would be able to adjust the size and precise placement of a sound within the full sound field of the frame. You can see right away the possibilities this technology offers for immersive storytelling.
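To picture what "metadata attached" means, the sketch below models an object's automation as keyframes of position and size and interpolates between them over time. The field names, the normalized room coordinates, and the linear interpolation are all hypothetical choices for illustration. Dolby Atmos® uses its own metadata format and renderer.

```python
from dataclasses import dataclass


@dataclass
class ObjectKeyframe:
    """One automation point for an audio object (hypothetical format, not Dolby's)."""
    time_s: float
    x: float     # -1.0 = left wall,  +1.0 = right wall
    y: float     # -1.0 = back wall,  +1.0 = front (screen) wall
    z: float     #  0.0 = ear level,   1.0 = ceiling
    size: float  #  0.0 = point source, 1.0 = spread through the whole room


def _lerp(p, q, f):
    return p + f * (q - p)


def metadata_at(keyframes, t):
    """Linearly interpolate an object's position and size at time t (seconds)."""
    if t <= keyframes[0].time_s:
        return keyframes[0]
    for a, b in zip(keyframes, keyframes[1:]):
        if a.time_s <= t <= b.time_s:
            span = b.time_s - a.time_s
            f = 0.0 if span == 0 else (t - a.time_s) / span
            return ObjectKeyframe(t, _lerp(a.x, b.x, f), _lerp(a.y, b.y, f),
                                  _lerp(a.z, b.z, f), _lerp(a.size, b.size, f))
    return keyframes[-1]


# A jet that starts behind the listener, passes overhead, and ends low at front
# right, growing larger as it approaches.
jet = [
    ObjectKeyframe(0.0, 0.0, -1.0, 0.2, 0.1),
    ObjectKeyframe(2.0, 0.0, 0.0, 1.0, 0.3),
    ObjectKeyframe(4.0, 0.8, 1.0, 0.0, 0.5),
]
print(metadata_at(jet, 1.0))
```

Because the keyframes describe the room rather than speakers, the same automation can play back in a cinema, a mix suite, or a living room. A renderer in each space turns the positions and sizes into its own speaker feeds.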

On that same trip to Los Angeles, I had an opportunity to visit an audio post house where they were adapting surround sound mixes to Dolby Atmos®. I saw the process in action, with tools I use every day. It made me a believer. This was not what we call “vaporware,” but actual working software I could use to create new content. However, I was curious about the plan to get the technology beyond commercial cinemas and into homes. As a mixer of primarily nonfiction, I felt this was critical to making a broader range of content accessible to the home viewer. After I mentioned this, I was ushered into a room where I listened to mockups of home theatre setups for Dolby Atmos®. Today, almost every receiver sold for home use has immersive sound decoders built in. Affordable sound bars and home speakers can emulate the mix by reflecting sound off ceilings and walls. Some packages even include room-tuning tools that allow the speakers to “read the room” and adjust the sound mix for that particular space. One of the more exasperating aspects of mixing traditional 5.1 or 7.1 surround for home theatre is that you never know how the system is actually set up. More often than not, the surround speakers sit on the floor with no regard for correct placement. Now that immersive sound has driven easier and better consumer setups, all mixes have a better chance of being heard as intended.

The first project I mixed in Dolby Atmos® was a test for a network looking to start streaming in this new format. The film recreated the first act of a production about air crashes. The mix took me a long time, as I worked through learning the technology and tools to deliver the sound as I heard it in my head. Looking back, I was translating my vision from surround into immersive. Now, mixing is just the opposite for me. The most exciting moment of that mix was panning the first jet from behind me, over my head, and having it crash low at front right. I had been waiting my whole career for that kind of control. The excitement continued as I put voices in the overhead speakers like intercoms on a plane and moved on to create the true chaos of a plane crash: think fire, explosions, impacts, and screams. It was so real to me that I now have difficulty flying. Satisfaction came later when the Executive Producer (EP), who has spent her whole career in nonfiction storytelling, was blown away by the experience and as excited by the possibilities as I was.

The first project I wanted to mix in Dolby Atmos® was a museum project with a 180-degree, life-sized screen and 16 speakers in a small gallery theatre, including two overhead speakers and one Low Frequency Effects (LFE) speaker. I had done large museum installations before and knew this director would want seamless movement of audio around all the speakers, placed exactly to match the images. After looking at a fine cut, I saw that it included a recreation of the Battle of Yorktown. I realized an audio panner with height and 360 spatial capabilities was a must.

After doing some research, I realized the tools existed; they just weren’t yet in Pro Tools, the DAW I use every day. Even the Facebook 360 tools were not available yet. I was surprised by this because I had seen Dolby Atmos® in action almost two years earlier in LA. I reached out to my contact at Dolby about acquiring the panning tool. The technology I had seen in LA had already been implemented in commercial cinemas, and the tools I needed were close to being released. Both Dolby and I moved quickly to implement the new tools for the project, but I had to complete the project in an unfamiliar DAW. Though the mix turned out great and the 4D Theatre has won awards, I came out of that experience determined to implement Dolby Atmos® in my own studio using Pro Tools. That effort led me to build the first near-field (home theatre) Dolby Atmos® mixing suite on the East Coast.

As a result, I have a few tips if you decide to work in the Dolby Atmos® format. As frequently discussed in this book, one of the biggest challenges any filmmaker and mixer faces is translating the mix from platform to platform and location to location. If you are having your film mixed in Dolby Atmos®, be sure to listen back to the derived mixes through the Rendering and Mastering Unit (RMU) and tweak them to achieve the surround and stereo results you intend. As with comparing surround and stereo mixes in traditional channel-based mixing, this will help you deliver the mix you want. In Dolby Atmos®, other mixes, such as 5.1 and 7.1, can be derived automatically at the time the audio is encoded, so in principle there is no need to mix or deliver the audio more than once. In practice, we have still had to tweak and output different mixes to satisfy different technical specifications as requested, similar to doing both theatrical and broadcast mixes (Figure 10.8).
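Why listen to derived mixes at all? A fold-down sums channels, and those sums can shift the balance or clip. The Python sketch below uses the widely published ITU-R BS.775 coefficients for a 5.1-to-stereo fold-down. These coefficients are an assumption for illustration, since the RMU and other renderers use their own, often configurable, downmix settings.

```python
import math

MINUS_3DB = 1.0 / math.sqrt(2.0)  # about 0.707, a 3 dB reduction


def downmix_51_to_stereo(ch):
    """Fold one sample of a 5.1 mix down to stereo (Lo/Ro).

    ch is a dict with keys "L", "R", "C", "LFE", "Ls", "Rs". Center and surrounds
    are mixed in at -3 dB; the LFE channel is discarded, as in the ITU-R BS.775
    fold-down.
    """
    lo = ch["L"] + MINUS_3DB * (ch["C"] + ch["Ls"])
    ro = ch["R"] + MINUS_3DB * (ch["C"] + ch["Rs"])
    return lo, ro


dialogue = {"L": 0.0, "R": 0.0, "C": 1.0, "LFE": 0.0, "Ls": 0.0, "Rs": 0.0}
print(downmix_51_to_stereo(dialogue))  # center dialogue lands equally in both sides

busy = {"L": 0.8, "R": 0.2, "C": 0.0, "LFE": 0.5, "Ls": 0.6, "Rs": 0.1}
lo, ro = downmix_51_to_stereo(busy)
print(lo > 1.0)  # a loud front left plus a loud left surround can clip the fold-down
```

Neither problem shows up in the full immersive mix, which is why it pays to audition the derived versions before delivery. The clipped left channel and the dropped LFE content only appear once the channels are summed.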

FIGURE 10.8 Cheryl working on a Dolby Atmos® mix (photo credit: Ian Shive).

Gathering Field Assets for Spatial Mixing

Immersive productions differ from those made for stereo and surround sound in their audio production workflow, primarily because of the need to populate the Z axis with audio. This is the axis that determines the height of sound in a spherical environment. As with any other project, preproduction planning for gathering sound assets in the field is important. When you are in the field, make ambisonic recordings of natural and sync sound (not interviews). The decoded recordings translate extremely well into Dolby Atmos® because they contain the Z axis and carry over the spatial sound image. Multiple omni microphones, placed to complement and overlap each other, are also helpful at the mix stage, especially because they can provide needed ambiance elements. For example, when working on an environmental title for the Smithsonian, the location team found that sync sound of some of the animals was not easily recordable. So, my team went out with a tetrahedral microphone (ambisonic), a Zoom H4n (quad), and a boom mic (mono). We then brought in the assets and worked in the Dolby Atmos® mixing suite so we wouldn’t have to work the whole day with headgear on. The tetrahedral microphone provided a lush, fully 360 spatial reproduction of Rock Creek Park. The recording also caught a sports car as it drove by.

The quad and mono recordings added layers and textures to the 360 base of the ambisonic recording. We chose to try out the tetrahedral microphone and used the H4n, rather than an omni, as our backup. The playback of the decoded ambisonic recording of the car in Dolby Atmos® was spectacular: you could feel the car as it zoomed around and revved its engine. This is the future of sound acquisition and mixing: making the listener/viewer fully sense the environment of the story.

Of course, there will be situations when omni or ambisonic recordings are not available. In these cases, it will take your sound designer and mixer some thought and time to create 360 sound elements from 2D assets. Good results are achievable, just as they are today when creating 5.1 or 7.1 surround from mono and stereo assets. Just remember to add extra time to your schedule to build these elements. As immersive sound becomes more mainstream, more tools and techniques will be developed to support massaging 2D assets for 3D productions.

Musical assets pose similar challenges in immersive sound. A full stereo music mix can be manipulated and used when mixing for immersive sound, but it’s not ideal. Stems from a stereo music mix are helpful in any mix configuration, but they are essential when building music in Dolby Atmos®. If time and budget allow for an original score, plan on mixing it in surround (unless Dolby Atmos® mixing is available) and providing stems. Music stems allow the mixer to spatialize the music throughout the 7.1 bed and make objects out of individual instruments. If the score or other music for your production is being recorded live, use additional microphones to help create dimensionality. Two to four upward-facing or omnidirectional mics placed above the musicians can provide natural height information. Omni mics hung and placed around the performers can also gather the information needed for an immersive sound mix. Try to place omni mics and some tight cardioids in the middle, on the sides, and at the conductor’s and listeners’ positions. As we have discussed in previous chapters, there is nothing worse than a wonderful live performance that sounds as if no audience was present. The same is true for immersive productions. So, if you are recording a live event or performance, be sure to mic the audience in the same fashion outlined above to capture reactions from multiple vantage points in the space.

Filmmakers should approach immersive sound opportunities from the very beginning, while they are capturing sound. Capturing sound as cleanly as possible should be a given no matter what, but it matters even more in immersive environments, because the immersive mixing process makes each sound more localized. When you start to mix, you will really notice poor audio quality. This is especially true for VR/AR/MR, as the encoding and rendering process can make poor audio stand out even more.

For more linear storytelling in the immersive realm, such as a documentary-style approach to a natural landscape, capturing sound with clean elements in mind is essential. Using tetrahedral and Mid-Side microphone setups goes a long way toward creating the sound field in the mix. Mid-Side setups use two microphones: the mid microphone captures direct signals, while the side microphone captures ambient signals. If specialized microphone setups cannot be used, consider an array of mics placed strategically for the scene. Much can be done in post without these sources, using a more traditional approach to production and standard assets such as mono and stereo sources. The magic can happen in post when the sound designer or mixer imagines the spatialized space and creates it. When planning for an immersive mix, it is even more important to talk with your mixer early and find where they can make a difference, even on lower-budget projects. If music is being composed, provide stems so the mixer can create the immersive music mix. Mono sound effects are easier to manipulate as objects, though stereo effects can be used as well.
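A Mid-Side pair is decoded to left and right with a simple sum and difference. This is also why M/S recordings are so flexible in the mix: you can adjust the stereo width after the fact. The sketch below assumes the side microphone is a figure-eight whose positive lobe faces left. Polarity conventions and output scaling (some tools halve or attenuate the result) vary between plug-ins.

```python
def ms_to_lr(mid, side, width=1.0):
    """Decode one Mid-Side sample pair to left/right stereo.

    mid:   the forward-facing capsule
    side:  the sideways figure-eight capsule, positive lobe assumed to face left
    width: 0.0 collapses to mono, 1.0 keeps the recorded width, >1.0 widens it
    """
    left = mid + width * side
    right = mid - width * side
    return left, right


print(ms_to_lr(1.0, 0.0))             # sound from straight ahead: equal in both sides
print(ms_to_lr(0.5, 0.5))             # sound arriving from the left: left side only
print(ms_to_lr(0.5, 0.5, width=0.0))  # width at zero folds everything to mono
```

Because the ambient side signal is kept separate until decoding, a mixer can narrow or widen it later, which makes M/S material easy to fit into a larger spatial mix.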

Your Immersive Mix

New tools and plug-ins for 360 and immersive mixing are being introduced all the time. As sound designers, we mainly use the same tools to sweeten audio in immersive formats as we do in traditional ones: EQ, compression, reverb, and sound design all cross over very easily. More specialized tools include those for panning, up-mixing, room modeling, and convolution reverb. For the most part, you will still work in your DAW of choice, such as Pro Tools, Nuendo, or Reaper. For 360, Tools for VR and Facebook 360 are excellent and free. Audio Ease’s 360Pan Suite also offers very useful tools for VR mixing, including panner, render, and rotation tools. Harpex software is used extensively for microphone conversions and modeling speaker arrays, and Flux’s Spat offers an excellent spatial modeling tool and ambisonic panner. Reverbs still tend to live in the traditional audio space, with very little available in the immersive, object-based space. Audio Ease has one of the only reverbs that supports Dolby Atmos®, its “Indoor” reverb. Altiverb, also by Audio Ease, is widely used because of its quality and ease of use, although it does not employ height. Nugen’s Halo is a popular choice for up-mixing music and ambiences to Dolby Atmos® because it does generate overhead sources. Up-mixing is the process of converting audio into formats with more channels. For example, mono audio can be up-mixed to stereo or even 5.1, though most often stereo elements are up-mixed to 5.1 or 7.1 surround. With immersive audio formats, up-mixers also add the overheads for the Z axis. However, be cautious of phase issues when using an up-mixer. Manipulating a sound to create a broader image comes at a cost: signals can be spread so that they fully or partially overlap, cancelling out audio information and producing a tinny, hollow sound. When possible, I choose to build music beds and ambiences natively instead of using up-mixers.
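The cancellation described in the previous paragraph is easiest to see in its simplest form, comb filtering. When a signal is summed with an equal-level copy of itself delayed by τ seconds, the combined magnitude at frequency f is |1 + e^(-j2πfτ)| = 2|cos(πfτ)|, which falls to zero at f = (2k + 1)/(2τ). Real up-mixers spread and decorrelate signals in more complex ways, so treat this as a simplified model; the 1 ms delay below is a hypothetical value, not a measurement of any product.

```python
import math
from typing import List


def comb_gain(freq_hz: float, delay_s: float) -> float:
    """Magnitude of a signal summed with an equal-level copy delayed by delay_s.

    |1 + e^(-j*2*pi*f*tau)| = 2 * |cos(pi * f * tau)|.
    2.0 is full reinforcement (+6 dB); 0.0 is total cancellation.
    """
    return 2.0 * abs(math.cos(math.pi * freq_hz * delay_s))


def notch_frequencies(delay_s: float, max_hz: float) -> List[float]:
    """Frequencies up to max_hz that cancel completely: f = (2k + 1) / (2 * tau)."""
    notches = []
    k = 0
    while (2 * k + 1) / (2 * delay_s) <= max_hz:
        notches.append((2 * k + 1) / (2 * delay_s))
        k += 1
    return notches


delay = 0.001  # hypothetical 1 ms offset between two overlapping copies
print(notch_frequencies(delay, 5000))  # notches at 500, 1500, 2500, 3500, 4500 Hz
```

A row of notches spaced every 1/τ Hz through the midrange is consistent with the hollow, thinned-out character that heavily overlapped signals can take on.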

When mixing in immersive sound, people tend to stay very close to their roots and not stray too far from what they know works. This is not a bad approach. However, I’ve learned to test a different approach or technique in small chunks, instead of committing to a workflow or idea over a longer period. For instance, when placing dialogue as objects in a mix, I experimented with different placements for the voice rather than anchoring it to the center, as we are accustomed to hearing. This worked very well in certain scenes, such as those with movement, intimate scenes, and very loud vocal scenes that included yelling. I mixed a few scenes this way, rendered the final mixes, and listened back in Dolby Atmos®, 7.1, 5.1, and stereo. There were no translation issues, which was great, and if there had been, we would have found them early enough in the process to solve them. Experimenting and testing as you go is essential.

When planning the schedule and budget for an immersive mix, the creative work itself should not take more time than it would for a traditional mix. As in any collaborative creative environment, add time if there are ideas you want to explore or different approaches to the story that you want to try. The really cool part about Dolby Atmos® is that all the mixes and deliverables can be derived from one render. Time does need to be added to screen the derived mixes and make sure they sound as expected, much as you would listen to the stereo down-mix of a 5.1 mix in a traditional surround workflow. Time must also be added to make all of the PCM audio deliverables. Feature films almost always mix each delivery format individually, working from the original immersive or 7.1 mix. It is not uncommon for immersive sound mixes to be expensive.
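Screening derived mixes matters because folding channels together changes levels. As a simplified illustration, the sketch below applies a conventional Lo/Ro 5.1-to-stereo down-mix (center and surrounds at -3 dB, LFE dropped, as in ITU-R BS.775). The Dolby Atmos® renderer’s own down-mix settings are configurable and may differ, so treat these coefficients as assumptions; the channel-name keys and function names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

MINUS_3DB = 1 / math.sqrt(2)  # about 0.707


def downmix_51_to_lo_ro(ch: Dict[str, List[float]],
                        center_gain: float = MINUS_3DB,
                        surround_gain: float = MINUS_3DB) -> Tuple[List[float], List[float]]:
    """Lo = L + c*C + s*Ls, Ro = R + c*C + s*Rs; the LFE channel is ignored."""
    lo = [l + center_gain * c + surround_gain * ls
          for l, c, ls in zip(ch["L"], ch["C"], ch["Ls"])]
    ro = [r + center_gain * c + surround_gain * rs
          for r, c, rs in zip(ch["R"], ch["C"], ch["Rs"])]
    return lo, ro


def clipped_samples(signal: List[float], ceiling: float = 1.0) -> int:
    """Count samples whose magnitude exceeds full scale."""
    return sum(1 for x in signal if abs(x) > ceiling)


# One loud moment: dialogue in C, music in L/R, ambience in the left surround.
frame = {"L": [0.5], "R": [0.3], "C": [0.8], "LFE": [0.9], "Ls": [0.6], "Rs": [0.1]}
lo, ro = downmix_51_to_lo_ro(frame)
print(lo, ro, clipped_samples(lo), clipped_samples(ro))
```

None of the 5.1 channels is above full scale, yet the left side of the fold-down is, which is exactly the kind of surprise a screening pass is meant to catch.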

Because the technology is newer, the rate tends to be higher. However, the trend for nonfiction is to add time at the end of the process, billed at the surround mix rate, to screen all of the derived mixes and make sure everything translates correctly, rather than raising the mix fee across the board. For an independent production, plan on adding one to two days for every 50 minutes of run time. This includes the time needed to make all the deliverables, including the print masters.
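As a worked example of that rule of thumb, the sketch below assumes the run time is rounded up to whole 50-minute blocks, which is one reasonable reading of “for every 50 minutes”; prorating instead would give slightly lower numbers for run times just over a block. The function name is hypothetical.

```python
import math
from typing import Tuple


def extra_screening_days(runtime_min: float, block_min: float = 50,
                         days_per_block: Tuple[int, int] = (1, 2)) -> Tuple[int, int]:
    """Return a (low, high) estimate of days to add for screening derived mixes
    and making deliverables, rounding the run time up to whole blocks."""
    blocks = math.ceil(runtime_min / block_min)
    low, high = days_per_block
    return blocks * low, blocks * high


print(extra_screening_days(90))  # 90-minute feature: 2 blocks -> (2, 4)
print(extra_screening_days(45))  # 45-minute episode: 1 block -> (1, 2)
```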

We tend to take the marrying of video and audio for granted in non-immersive formats, so in the immersive world it can come as a surprise that this process isn’t always easy. Platforms and tools are constantly changing, but currently there is no easily accessible tool for marrying immersive sound with HDR, HDR10, and Dolby Vision® picture; the systems that can do it cost more than six figures, which is simply too expensive for most productions. But the industry is responding to the need for affordable tools. For example, the Fairlight audio features in the latest version of Blackmagic Design’s DaVinci Resolve now include advanced spatial audio formats. If you are not able to use such spatial audio mixing tools, you will need to send your final mix and color master to another studio with these capabilities to create your final master.

There are some mistakes you can avoid when mixing for immersive. One common mistake is going too far with a sound. Think of a new driver handling a car: when faced with a curve in the road, they often jerk the steering wheel, overcompensating when all that is needed is a small adjustment. The same is true for immersive mixing, where small adjustments can make a huge impact on spatial sound. We tend to think we have to use every available object (Dolby Atmos® allows up to 128 inputs, bed channels included) and that the more movement, the better. Yes, adding these sounds is thrilling! But quiet scenes, with small amounts of ambience and a few objects, often have the most impact. There’s something very intimate about pulling the music in a tad closer, almost like a blanket, and drawing individual tones ever so slightly closer as objects. Or creating a scene where the listener can feel the atmosphere around them in the darkness of the night. These are the magical moments you can create in an immersive mix.

There are some additional problems to avoid when working in immersive. Before you start, it is even more critical than with a traditional stereo mix to have a concrete deliverables list, so that the rendering system can be set up correctly. Think ahead to your derived mixes and listen back constantly through the Dolby Atmos® RMU (Rendering and Mastering Unit). Be aware of what you are supposed to be hearing and what you are actually hearing; this is true in any mix situation. When setting up your session, make sure to build the beds and objects in numerical order. This makes it easier to move sessions from system to system.
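One way to picture building beds and objects “in numerical order” is as a session input map in which the bed occupies the first inputs and the objects follow without gaps. The sketch assumes a 7.1.2 bed (10 channels) in a common Dolby Atmos® channel order and the renderer’s limit of 128 inputs, which leaves room for up to 118 objects; confirm the exact bed layout and channel order against your own renderer configuration. The function names and track labels are hypothetical.

```python
from typing import Dict, List

MAX_INPUTS = 128  # Dolby Atmos renderer input limit (bed channels + objects)
# Assumed 7.1.2 bed order; check against your renderer's configuration.
BED_7_1_2 = ["L", "R", "C", "LFE", "Lss", "Rss", "Lrs", "Rrs", "Lts", "Rts"]


def build_input_map(objects: List[str], bed: List[str] = BED_7_1_2) -> Dict[int, str]:
    """Number bed channels first, then objects, consecutively from input 1."""
    if len(bed) + len(objects) > MAX_INPUTS:
        raise ValueError(
            f"{len(bed)} bed channels + {len(objects)} objects exceeds {MAX_INPUTS} inputs")
    labels = [f"Bed {ch}" for ch in bed] + [f"Obj {name}" for name in objects]
    return {n: label for n, label in enumerate(labels, start=1)}


def has_gaps(input_map: Dict[int, str]) -> bool:
    """True if the input numbers are not exactly 1..N with no holes."""
    return sorted(input_map) != list(range(1, len(input_map) + 1))


session = build_input_map(["Dialogue", "Bird 1", "Bird 2", "Car pass"])
for n, label in session.items():
    print(n, label)
```

Keeping the numbering contiguous and predictable is the point: a session laid out this way maps onto another room’s renderer without hunting for stray assignments.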

Nonfiction Applications for Immersive Sound

Some of the most innovative uses of spatial sound are happening now in the nonfiction space. A great example is the work of immersive sound designer Ana Monte Assis. In her shared creative studio space in Germany, this Brazilian audio engineer built original sounds for an immersive training project about the human heart for Stanford Virtual Heart. This VR experience, created by Lighthaus for Oculus Rift, was designed to help medical students and families understand common congenital heart defects. For the project, Ana and her partner David DeBoy had to analyze the frequencies of the human heart as heard through the body wall, as if through a stethoscope. They were not able to use actual heart recordings, because these recordings were either too corrupted or too “clean.” Instead, using tools from iZotope RX, they developed original sounds and then added spatial elements specific to each type of defect.

Another creative space getting involved in 360 and VR is the music industry. Nancy Bennett recently directed the “One at a Time” music video for Alex Aiono and T-Pain, which won the Lumiere VR Award. Corporations are also jumping onto the immersive train for educational applications. Walmart purchased more than 17,000 Oculus Go headsets for internal customer service training in a joint project with STRIVR. Sound is, of course, an essential tool when creating such realistic scenarios and when working toward an emotional response from the participant. VR producer Anneliene van den Boom and the team at WeMakeVR in Amsterdam have been doing just that with a series of 360 educational experiences, including one for students about bicycle safety called Beat the Street. (If you’ve never been to Amsterdam, it’s worth knowing that thousands of people bike to and from school and work on the city’s streets every day.)
Shooting with the Nokia OZO to simulate the feeling of biking, Executive Producer Avinash Changa built a frame on a small scooter (Figure 10.9). According to van den Boom, “The OZO is small and light and allowed for lengthy takes and easy battery swaps. (Today, other options would be the Obsidian or Insta360 Pro II.) For audio, the team relied on a mix of microphones, including lavaliers on all the kids doing the biking and a microphone near the camera to pull in street ambience.” The children’s voice-overs were recorded on the final shoot day in a mini sound studio set up inside a production van. Crash sounds and other effects were added in audio post.

The military is taking advantage of the medium as well, using it to recreate realistic battle scenarios. In the United States, the Army has already built simulations of cities such as San Francisco and Seoul. We don’t have the security clearance to know the audio components of these immersive experiences, but one can only imagine. One of the great innovators in immersive sound today is Metastage CEO Kristina Heller. In a joint venture with Sennheiser, Metastage has placed microphones in its volumetric capture space to create holographic recreations of real people and performers.

FIGURE 10.9 Filming a bicycle safety VR project (courtesy: WeMakeVR).

A growing area for nonfiction VR is “additional content” for 2D titles. Technologist Alexandra Hussenot, Founder of Immersionn, a VR exploration portal site allowing visitors to discover virtual reality content from multiple sources, sees this as a growing area for filmmakers: “VR can be integrated to other mediums and not consumed in isolation. This is where WebVR is useful. Most immersive documentaries only use a WebVR player to play the film. But it is possible to integrate web links inside the story so the user can explore various paths.”

Immersive has long been used in the museum and amusement space, because permanent and long-term installations allow bespoke theaters and interactives to be built. It is great to see sound design tools catching up and offering more opportunities for this type of experience. The Wizarding World of Harry Potter in Orlando combines immersive sound and mixed media in many of its experiences. The main theater at Atlanta’s College Football Hall of Fame uses multiple life-like screens and a 22.1 speaker system to recreate the thrill of game day. Just six years ago, a 22.1 mix was almost impossible on most DAWs: a modified Pro Tools system had to be used, and the panning tools were limited to seven speaker channels. Today, we can model sound arrays in 360, 180, and specialized combinations, including overhead speakers for the Z axis, with panning tools that accommodate movement in these specialized sound environments.
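Panning tools for large arrays range from simple to very sophisticated. As a minimal sketch of the underlying idea, the function below pans one source across a horizontal ring of speakers by finding the two adjacent speakers that bracket it and applying a constant-power (sine/cosine) law, so the squared gains always sum to 1 and perceived loudness stays steady as the source moves. It ignores height and speaker distance; 3D panners such as VBAP-based tools work with speaker triplets to handle overhead channels. The speaker angles and function name are hypothetical.

```python
import math
from typing import List


def ring_pan_gains(source_az_deg: float, speaker_az_deg: List[float]) -> List[float]:
    """Constant-power pan of one source between the two ring speakers that bracket it.

    Returns one gain per speaker, in the order given; all but two are 0.0.
    """
    if len(speaker_az_deg) < 2:
        raise ValueError("need at least two speakers")
    src = source_az_deg % 360.0
    # Visit speakers in angular order so each pair (a, b) are ring neighbors.
    order = sorted(range(len(speaker_az_deg)), key=lambda i: speaker_az_deg[i] % 360.0)
    gains = [0.0] * len(speaker_az_deg)
    for k, a_idx in enumerate(order):
        b_idx = order[(k + 1) % len(order)]  # wraps from the last speaker to the first
        a = speaker_az_deg[a_idx] % 360.0
        span = (speaker_az_deg[b_idx] % 360.0 - a) % 360.0
        offset = (src - a) % 360.0
        if span > 0 and offset <= span:
            p = offset / span  # 0.0 at speaker a, 1.0 at speaker b
            gains[a_idx] = math.cos(p * math.pi / 2)
            gains[b_idx] = math.sin(p * math.pi / 2)
            return gains
    raise ValueError("speaker angles must not all coincide")


quad = [0.0, 90.0, 180.0, 270.0]  # hypothetical ring of four speakers
for az in (0, 45, 135, 315):
    print(az, [round(g, 3) for g in ring_pan_gains(az, quad)])
```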

Museums such as the Atlanta History Center and the Aquarium of the Pacific are imagining immersive experiences that include life-like visuals and sound. The tools to implement sound for these installations have been driven by the dramatic transition to object-based audio in VR/AR mixing and immersive sound tools like Dolby Atmos®.

Yet while innovators continue to press forward, in many ways VR has still not lived up to its hype. Storytelling, both fiction and nonfiction, is struggling with the platform because consumers have not adopted the new technology as quickly as originally projected, due to cumbersome goggles, tethers, and the lack of a headphone standard. VR creators are still grappling with finding the right tools at affordable price points. All of these issues will likely be resolved in the next few years. Wireless products are coming onto the market, and new low-cost production tools are gaining traction. Zoom has a range of affordable, quality recorders for use in both traditional production and immersive capture; check out the HV VR 360, H4n, and H6. We mentioned Sennheiser’s Ambeo VR mic, which is quickly becoming a standard in 360 audio capture. At the same price point, another favorite is the Rode NT-SF1 ambisonic microphone. Some of the most accessible tools are Facebook’s 360 tools. They are free and work exceedingly well in most DAWs, including Pro Tools and Nuendo. They include 360 video integration into the post workflow and utilities to pan and design spatial audio, along with, of course, easy publishing tools, especially for YouTube and Facebook. Because Facebook offers its tools for free, most of the basic 360 and VR tool sets for audio are very reasonably priced; for example, Audio Ease’s 360Pan Suite is priced well under $300. Most VR hardware suppliers and integrators, such as Google and Samsung, offer free tools that are similar to Facebook’s but geared to their proprietary hardware. Flux’s Spat is at the upper end of the price range, but it offers incredible control and expanded flexibility for creating specialized speaker arrays. Zylia has released the ZYLIA Studio PRO and ZYLIA Ambisonics Converter plug-ins, which enable those working in Pro Tools to use the ZM-1 microphone.
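Microphones such as the Ambeo VR and NT-SF1 are first-order ambisonic designs: their four capsule signals (A-format) are converted to B-format, four channels (W, X, Y, Z) that describe the sound field, with Z carrying the height, or Z-axis, information. The sketch below does not perform that capsule conversion, which depends on each microphone’s calibration. Instead, it encodes a mono sample at a chosen direction into first-order B-format to show what each channel represents. It assumes the AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalization), azimuth measured counterclockwise from the front, and elevation measured upward; the function name is hypothetical.

```python
import math
from typing import Tuple


def encode_foa_ambix(sample: float, azimuth_deg: float,
                     elevation_deg: float) -> Tuple[float, float, float, float]:
    """Encode one mono sample as first-order AmbiX: (W, Y, Z, X), SN3D.

    Azimuth is counterclockwise from straight ahead (90 = left);
    elevation is upward from the horizon (90 = directly overhead).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)  # left-right
    z = sample * math.sin(el)                 # up-down: the height channel
    x = sample * math.cos(az) * math.cos(el)  # front-back
    return w, y, z, x


for label, az, el in [("front", 0, 0), ("left", 90, 0), ("overhead", 0, 90)]:
    w, y, z, x = encode_foa_ambix(1.0, az, el)
    print(f"{label:8s} W={w:+.2f} Y={y:+.2f} Z={z:+.2f} X={x:+.2f}")
```

A source on the horizon leaves Z at zero, while an overhead source puts its energy into Z; that extra dimension is what a renderer can map to overhead speakers.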
As immersive production and post-production tools become more affordable and more widely available, AR, MR, and XR are likely to become faster-growing segments of the industry. As immersive headphone development takes a leap forward, we believe immersive sound will take its rightful place as a central ingredient of these experiences, and spatial audio will come into its full glory. In the meantime, we encourage you to experiment. There are no rules, only new ideas and new ways of telling stories.

Tips

When you are in the field, make ambisonic recordings of natural and sync sound (not interviews). The decoded recordings translate extremely well into Dolby Atmos® because they contain the Z axis and carry over the spatial sound image. Multiple omni microphones, placed to complement and overlap each other, are also helpful at the mix stage, especially because they can provide needed ambience elements.

If you are having your film mixed in Dolby Atmos®, be sure to listen back through the renderer (RMU) to the derived mixes and tweak to achieve the surround and stereo mixes desired. Just like comparing surround and stereo mixes in traditional channel-based mixing, this will help you deliver the mix you want.

If the score or other musical content is being recorded live, use additional microphones to give your score more dimensionality. Two to four up-firing mics or omni mics placed above the musicians can provide natural height information. Omni mics hung and placed around the performers gather the information needed for an immersive sound mix. Try to place omni mics and some tight cardioids in the middle, on the sides, and at the conductor’s and listeners’ positions. If the event or performance is live, be sure to mic the audience in the same fashion to capture reactions.

Filmmakers should plan for immersive sound from the beginning, starting when they capture sound.

Capturing as clean a sound as possible should be a given no matter what technology you use, but it matters even more in immersive environments since we are isolating and localizing sounds.

Before you start an immersive mix, it is even more critical than with a traditional stereo mix to have a concrete deliverables list, so that the rendering system can be set up correctly.

When setting up your session, make sure to build the beds and objects in numerical order.

When mixing, test segments, render, and listen back as you go. Be aware of what you are supposed to be hearing and what you are actually hearing. This is true in any mix situation.

In the post-workflow process, once a spatialized mix is rendered, you will typically also need to re-render a stereo (and possibly 5.1 or 7.1) mix version, so plan ahead accordingly.

Remember you will need to send your outputs back to your editor to marry in an NLE. Most DAWs do not have the capability (yet) to lay back to VR formats.