Chapter 6. Evolution and Benefits of SDX and NFV Technologies and Their Impact on IoT

Topics covered in this chapter include

■ A Bit of History on SDX and NFV and Their Interplay

■ Software-Defined Networking (SDN)

■ Network Functions Virtualization (NFV)

A Bit of History on SDX and NFV and Their Interplay

The seminal concepts behind software-defined networking (SDN) can be traced back to the 1990s. The Internet grew exponentially during that period, and it marked the beginning of an inexorable journey that would transform our lives forever. The synergies between information and communication technologies found a home in the Internet, giving rise to the digital revolution. According to several historians and experts, that digital revolution will have a much bigger impact on society than the industrial revolution of the 19th century.

This was a time when everything seemed possible, so many researchers and young companies advocated for a faster pace of innovation. However, the process for bringing new architectures and protocols to the marketplace was cumbersome and slow. For instance, network control planes in the 1990s were not programmable at all, and moving new technologies into production required very slow standardization processes. In light of this, some thought leaders proposed a new model, shifting from conventional black-boxed network protocols and control planes to a more open and programmable paradigm. One initiative that gained substantial traction was active networking. It was one of the first solid steps toward making computer networks more open and flexible, by introducing programmable functions into the network itself. The research and development (R&D) efforts around active network architectures blossomed in the mid-1990s. Unfortunately, the “Everything Is Possible” slogan of the late 1990s led to excessive investment and speculation. Many companies that were developing Internet technologies hit a wall in the early 2000s with the dot-com bubble. The motto became exactly the opposite, and lack of investment drove many companies to bankruptcy. As a result, R&D efforts around programmable networks ceased almost completely by the early 2000s. Although it is fair to say that active networks have set the basis for open and programmable control planes, SDN needed to wait for better times to materialize.

In the mid-2000s, a recession took hold after the collapse of the dot-coms. The problems surrounding network programmability again attracted the attention of the research community. The work carried out by Nick McKeown and Martín Casado of Stanford University, and Scott Shenker of University of California, Berkeley, on control and data plane separation led to the development of open interfaces between these two. This initiative was a game changer in the field of network programmability, and it ultimately resulted in the design of the OpenFlow (OF) API and protocol. Many people consider the fundamental contributions of these researchers to be the beginning of modern SDN technologies. In 2011, Nick McKeown and Scott Shenker helped found the Open Networking Foundation (ONF) as a way to ensure the development of an open community centered on SDN and OF technologies. ONF is a nonprofit and operator-led consortium that focuses on leveraging the advantages of disaggregating network functions, the use of white-boxing technologies, and the development of open source software and standards (ONF is in charge of the OF standards).

The strengths of SDN reside primarily in the separation of the control and forwarding functions. By decoupling these functions, traffic flow through multiple networking devices can be managed by a central entity, called an SDN controller. This approach enables the abstraction of traffic control policies and the detachment of forwarding decisions from black-boxed network equipment and communication protocols. In other words, the devices in charge of traffic forwarding can be supplied by different vendors and be deployed in a distributed fashion, but the traffic through them can be controlled and enforced in a programmatic way through SDN controllers (for example, by a single centralized controller or a cluster for redundancy and reliability purposes). To achieve this, networking devices must support basically two things: an interface to communicate with the controller, and an internal means of translating and enforcing the control rules supplied by the SDN controller. The next section of this chapter covers these aspects.

The success of SDN laid the foundation for other initiatives that leveraged the strengths and flexibility offered by the software-defined paradigm, beyond the conventional scope of SDN. This gave rise to software-defined X (SDX), or the development of technologies whose central goal is to respond to market needs and bring innovations to production at the speed of software (instead of depending on slow cycles with hardware development or protocol standardization processes). Examples of well-established technologies in the SDX space are software-defined wide-area networks (SD-WAN), software-defined wireless networking (SDWN), and the digital transformation that we are starting to witness in many industries, gradually moving away from legacy hardware and embedded systems to adopt software-defined IoT (SD-IoT) solutions. SDN also revitalized interest in software-defined radio (SDR) and its modern variants in the context of 5G, such as the software-defined radio access network (SoftRAN).

A different effort that is often incorrectly mixed up with SDX is network functions virtualization (NFV). NFV started in the early 2010s and gained tremendous traction after an Industry Specification Group (ISG) of the European Telecommunications Standards Institute (ETSI) became the standard-bearer of this technology. Since the initial publications produced by ETSI, the technology has become a reference in both industry and the research community, to the point that is now hard to conceive of future network architectures, such as 5G networks, without NFV being part of them.

NFV relies both on IT and state-of-the-art virtualization to build virtualized network functions (VNF). These VNFs can be composed and connected (chained) to create blocks for implementing networking services. NFV should not be confused with server virtualization techniques; NFV aims to create communication services that go way beyond the scope of conventional server virtualization. One of the central objectives of NFV is to allow telecom operators and enterprises to transition from networks embodied by proprietary hardware appliances (such as traditional switches, routers, firewalls, or intrusion detection devices) to a model in which each of these functions can be implemented using one or more VNFs running on top of general-purpose servers.

The line that separates SDN and NFV is not clear to everyone. Unless you turn to specialized literature, the terms SDN and NFV are frequently used in tandem (as in SDN/NFV), which creates confusion about their respective roles, scope, and strengths. It is important to understand that SDN and NFV are not interchangeable terms. These technologies can benefit each other, but one does not depend on the other. SDN can be implemented and scale without any form of virtualization or NFV support, whereas VNFs and the virtualized communication services they support can be implemented without the need of an SDN controller. SDN and NFV address different problems and were devised with different objectives.

Although the separation of traditional SDN and NFV technologies is quite easy to illustrate, the limits between newer software-defined technologies under the SDX umbrella and NFV become fuzzier. For instance, the flexibility and benefits of leveraging NFV technologies for implementing SD-WAN solutions are substantial, so future WAN technologies will probably bet on more cohesive designs. Likewise, the advantages of leveraging SDX concepts in NFV infrastructures are incontestable. For instance, software-defined functions can more easily enable centralized orchestration, configuration, monitoring, and lifecycle management of VNFs and networking services in multivendor environments. In this sense, SDX can be seen as an enabler for NFV, whereas NFV represents one of the most promising and ambitious use cases for SDX. As Figure 6-1 shows, even though NFV can be implemented without SDX, and vice versa, their foremost strength lies in their intersection.

Figure 6-1 SDX and NFV: Two Different and Complementary Technologies That Can Benefit from Each Other

The rest of this chapter develops in more detail the strengths of SDX and NFV technologies both in isolation and combined, and also covers their roles as technology enablers for IoT, 5G networks, and the expected interplay between Fog and Cloud computing. Various aspects related to orchestration shall also be covered in this chapter, while the security facets of SDX and NFV will be addressed later in Chapter 7, “Securing SDN and NFV Environments.”

Software-Defined Networking

SDN seeks to bring the agility of modern software development and computing technologies to the networking field. This will allow IT departments to gradually decrease network architectures characterized by rigid control planes and static treatment of traffic flows, and instead use programmable control planes capable of offering the level of dynamicity and flexibility that the market is demanding. To achieve this, SDN proposes to decouple the “brain” from the “bodies” (see Figure 6-2). That is, SDN detaches the element that controls the policies and makes decisions about how traffic must be forwarded across the network infrastructure (the brain) from the elements in charge of forwarding the traffic (the bodies), based on the policies and rules supplied by the brain. This decoupling of control and forwarding functions is usually implemented using different devices: The brain is typically centralized and physically separated from the forwarding elements (the bodies), whereas the bodies can be distributed in such a way that a single brain can manage multiple forwarding elements simultaneously.

Figure 6-2 shows that the SDN architecture is stratified in three layers: the infrastructure supporting the data plane, the SDN controllers, and the Application layer.

■ Infrastructure layer (data plane): Included in this layer are the physical devices in charge of forwarding traffic across the network. These devices are endowed with processing capabilities and forwarding functions that are exposed and managed by an external SDN controller through the southbound API in Figure 6-2. The internal implementation of the data plane can vary from device to device because they might have different processing functions and traffic forwarding engines (for example, when the infrastructure consists of devices supplied by different vendors). The forwarding policy enforced in the infrastructure layer can be categorized into three groups, or forwarding modes.

Reactive mode: In this mode, the SDN controller pushes a new forwarding rule to a device whenever required. For instance, every time a packet arrives at a switch, a flow table lookup is performed. If no matching rule is configured on the switch defining how to process and forward the packet, the switch typically generates an exception and forwards either the header or the entire packet to the SDN controller using the southbound interface in Figure 6-2. The SDN controller decides the forwarding policy and pushes a rule into the switch, enabling the processing of not only the specific packet, but also subsequent ones belonging to the same traffic flow. Therefore, in this operation mode, the SDN controller basically reacts to exceptions and pushes traffic forwarding rules only when needed.

Proactive mode: The SDN controller populates in advance the necessary rules for processing and forwarding all possible traffic flows. In strict proactive mode, incoming packets either find a matching entry in the switch or are discarded. When the rules have been pushed to the switch, the role of the SDN controller is relegated merely to monitoring and performance supervision. The advantage of this mode is that all packets can be forwarded at the line rate.

Hybrid mode: This mode basically combines the best of the previous two modes. It is one of the most widely used modes because it can offer line-rate forwarding capabilities for part of the traffic without losing the flexibility of generating exceptions and pushing new rules for new (unexpected) traffic flows.

The next two sections delve into the internals of the infrastructure layer, with special focus on OpenFlow (OF) switches in general and the Open vSwitch (OVS) implementation in particular.

■ SDN control layer: This layer consists of a logically centralized entity called the SDN controller. As shown in Figure 6-2, the SDN controllers expose a northbound API to a set of specific functions (programs) running in the application layer. These applications are the ones that bring the desired level of programmability on top of the controllers, thereby offering the possibility to innovate in the networking field at the speed of software. The SDN controllers also exploit the southbound API exposed by the infrastructure layer to control the forwarding behavior on the data plane. In essence, an SDN controller implements the control plane logic and supports the northbound and southbound APIs, with the objective of performing the following actions:

Providing an abstract view of the network to the SDN application layer. The northbound API enables the SDN controller to expose an abstracted and technology-independent view of the infrastructure to higher-level applications.

Receiving requirements from the SDN application layer.

Translating the requirements received from the application layer to specific configuration rules that can be pushed down to the infrastructure layer.

Receiving and processing events from the infrastructure layer and performing monitoring of the network infrastructure.

An SDN controller is a logically centralized entity. Note that this does not preclude scenarios in which multiple controllers can coexist and be clustered for controlling a given network. The reasons for employing multiple SDN controllers can vary, but the main drivers are fundamentally the following:

■ Reliability: For example, deploying a cluster of SDN controllers can maintain the control plane operative even if one SDN controller fails or gets compromised.

■ Scalability: For instance, multiple SDN controllers can be deployed when the network is large enough that it requires some sort of segmentation in different areas of control, each managed by an individual (active) controller. These controllers can be arranged in different ways, depending on the requirements (hierarchy, mesh, partial mesh, and so on). Settings including a federation of hierarchically arranged SDN controllers, with communication interfaces between them based on open standards will become commonplace in the SDN arena.

■ SDN applications: These must not be confused with traditional applications in the TCP/IP network stack. These applications represent a set of functions that were specifically programmed to capture forwarding policies and perform surgical control on the packets and their corresponding traffic flows across the infrastructure layer. The applications express the desired behavior and policies to be applied to the SDN controller via the northbound API. To this end, the applications use the abstracted view of the infrastructure supplied by the SDN controller. SDN applications typically comprise the logical functionality behind the policies and decision-making process that must be enforced through the SDN controllers, as well as a set of northbound drivers to connect to different SDN controllers (for example, in cases where the controllers are supplied by different providers). Note that only one driver is required if the application always connects to the same controller.

■ Southbound API: This interface supports communication between the SDN controller and infrastructure devices. This API enables programmatic control of packet processing and forwarding rules across the device fabric. It also provides mechanisms for generating events (for example, an exception is sent to the controller when no matching rule is found after a lookup process), as well as for reporting monitoring information. A well-known protocol implementation supporting this southbound interface is OpenFlow, which offers an open, standardized, and vendor-agnostic interface that enables interoperability among a broad range of infrastructure devices and SDN controllers.

■ Northbound API: This interface supports communication between the SDN controller and the applications on top. As mentioned previously, the SDN controllers exploit this API to expose an abstracted (technology-agnostic) view of the network to the applications, while the applications communicate through the API the desired behavior and policies that need to be enforced. Different forums and standardization bodies are actively working on the definition of open, standardized, and vendor-agnostic northbound APIs to enable interoperability among applications and controllers.

The strengths of the model described previously are summarized as follows:

■ Highly programmable networks: Policy definition and flow control remain decoupled from forwarding functions and the inherent details of packet processing within infrastructure devices. This enables a new generation of open and highly programmable control planes, which can exercise full control over traffic processing functions and forwarding decisions with packet-level granularity.

■ Agility: Innovations in the form of new control protocols and mechanisms can be tested and brought to market at the speed of software.

■ Openness: Network owners can exploit different groups of applications, SDN controllers, and infrastructure devices that can be supplied by totally different vendors. This flexibility is possible thanks to the different levels of abstraction enabled by the proper segmentation of functions and roles and well-defined APIs and protocols. In SDN, the infrastructure is basically exposed to the application layer as a single (logical) resource fabric, allowing administrators to control or change the behavior of the network programmatically via open APIs.

■ Centralized configuration and view that simplifies operation and management (OAM): SDN architectures tend to follow simpler designs, which can potentially simplify OAM tasks substantially. The logically centralized model offered by SDN gives network administrators a single source of truth for configurations, a single policy decision point (PDP) that can push rules to a set of distributed policy enforcement points (PEP), and a unified and global view of the network (which obviously simplifies operational tasks). Moreover, the behavior and control of the traffic are managed by SDN controllers working in tandem with a pool of applications instead of vendor-specific devices and protocols. Administrators can thus initialize, configure, secure, and manage the lifecycle of network resources via applications (programs), which can be developed either by a third party or by the administrators themselves. Clearly, the strengths of centralizing the intelligence and operation also create critical dependencies: If the centralized elements fail, the whole network is at risk. Hence, SDN controllers and applications are attractive targets for attackers. Chapter 7 covers the potential vulnerabilities and security aspects of SDN architectures in detail.

OpenFlow

OpenFlow (OF) is the first widely accepted SDN implementation enabling the networking industry to open the control of L1/L2 switches, routers, mobile base stations, Wi-Fi access points, and more. Unlike the conventional black-box approach to design and control networking equipment, which ruled the networking industry for more than two decades, OF offers a programmatic interface to control the behavior and traffic flow across the network infrastructure.

A few years ago, everything seemed to indicate that OF would become omnipresent. However, OF implementations have not yet taken off commercially—at least, not at the time of this writing. But that is not the point. The strength of OF lies in its paradigm and an open implementation that the industry embraced as a reference model. It brought an unstoppable wave of SDN with it, and it has already created a second wave with SDX. Most likely, we will witness this second wave hitting the IoT space relatively soon. Overall, the model pushed by the OF community changed the mentality of many stakeholders along the networking value chain, including telecom companies, network administrators, software companies, and hardware vendors.

This critical mass surrounding the innovations brought by OF fostered the creation of the Open Networking Foundation (ONF). This organization is responsible for defining OF standards. The documents released by ONF include the specification of the OF architecture, which mainly consists of three components: an OF switch, an OF controller, and the OF protocol, which enables reliable and secure communication between the OF switch and the OF controller. Communication reliability is ensured by using TCP as the transport protocol; communication security is handled by TLS, which enables authentication and ensures the privacy and integrity of the communication between an OF controller and the OF switches. Through the OF protocol, a controller can enforce both the path followed by packets of a given flow across the network and institute a set of actions that can change the packets (such as the packets’ headers) as needed.

Figure 6-3 illustrates the three main components of the OF architecture. The OF controllers are depicted at the top. The central part of the figure shows the internals of an OF switch, which communicates with the OF controllers on the top using the OF protocol. The OF protocol enables OF controllers to create, read, update, or delete flow entries from the flow tables (entries can be matching rules or actions). As mentioned in the previous sections, this can be accomplished in three operation modes: reactive, in response to packets that do not match any of the existing rules in the tables; proactive, with the OF controller pushing all the forwarding rules in advance; or hybrid, with proactive behavior for certain flows and reactive behavior for the rest. Packets that match a rule can be forwarded at wire speed in the switch fabric, whereas packets that are unmatched need a different treatment. In the reactive and hybrid modes, the packets can be forwarded directly to the controller; in strict proactive mode, they are discarded. For packets that reach the controller, the controller can decide to add a new rule to a flow table, modify an existing one, discard the packets, or even forward the packets itself, as long as the OF switch is configured to forward the entire packets to the controller (not just the headers). This is particularly relevant when high-speed communications are required because the TCP/TLS communication back and forth between the OF switch and the controller obviously introduces non-negligible overhead.

As shown in Figure 6-3, an OF switch consists of one or more flow tables connected in series, a group table, a meter table, and one or more OF channels connecting to external controllers. For reliability reasons, an OF switch might be connected to multiple controllers. This allows an OF switch to remain operative even if the active controller or the communication with it fails. External means are obviously required to ensure coordination among controllers, especially to avoid uncoordinated control on the switch or to synchronize controller handoffs.

Each flow table in an OF switch contains a set of flow entries, which consist of a set of match fields and instructions that must be applied to any matching packet. Flow entries match packets in order of precedence, meaning that the first matching entry found in a flow table is the one that will be used. The matching process starts at the first flow table in the pipeline; depending on the outcome, it might continue to subsequent ones in the series. If a matching entry is found, the instructions associated with the specific flow entry are executed. On the other hand, if no matching entries are found, the actions to be applied on a packet depend on the operation mode configured in the OF switch (reactive, proactive, or hybrid mode).

The main function(s) of the elements shown in Figure 6-3 are summarized as follows:

■ OpenFlow channel: Element that supports the interface between an OF controller and an OF switch. An OF channel supports only one external OF controller, which the controller then uses to control the switch.

■ Forwarding process: Encompasses the actions required for processing and transferring a packet to an output port (or set of output ports, as in the case of flooding).

■ Pipeline: Set of flow tables connected in series, providing the means for examining, matching, and modifying packet fields and transferring the packets to an output port within an OF switch. Packet processing within the pipeline typically stops when a match is found and the instructions associated with the matching entry do not specify a next flow table. In that case, the packet is often modified and transferred to the corresponding output port.

■ Meter table: A meter element within an OF switch that can measure the rate of packets and enforce rate-limiting policies on a traffic flow.

■ Flow table: Entity that contains flow entries and represents one of the possible stages in the pipeline.

■ Flow entry: Element in a flow table that provides the match fields and the instruction set required to match and process packets. The instruction set in a flow entry can either contain actions or modify the pipeline processing. For instance, the actions in an instruction can describe packet-forwarding actions, modifications that need to be applied to a packet, or the need for group table processing. A flow entry can forward a packet directly to a physical port.

■ Group table: Entity that specifies additional processing, such as sets of actions that need to be applied for more complex forwarding semantics (flooding, link aggregation, multipath forwarding, and so on). As mentioned in the previous point, the actions defined in a flow entry can send packets to a group table. This level of indirection enables common output actions for different flow entries to be applied and changed efficiently. In a nutshell, a group table consists of a list of action sets and some means of selecting which of those action sets to apply on a per-packet basis. The packets sent to the group can be subject to the application of one or more action sets.

Network vendors can freely decide how to implement the internal components of an OF switch, as long as their specifications conform to the OF protocol and the semantics for matching and executing actions on packets are respected. For instance, a flow table lookup might be performed in different ways between vendors. Depending on the implementation, this lookup process could be done using software-based flow tables (as with Open vSwitch) or in hardware by implementing the flow tables directly in an ASIC.

Open Virtual Switch

The previous section outlined the main components of the OF architecture, including key features of an OF switch. This section focuses on Open vSwitch, one of the most successful and widely adopted implementations of a software-defined switch that supports OF. Open vSwitch (often abbreviated as OVS) is an open-source implementation of a multilayer, software-based switch that was especially conceived for virtual environments. It is now part of several virtualization and cloud computing platforms (including OpenStack and OpenNebula), making OVS one of the SDN implementations that is actively used in the NFV space (observe that OVS is one of the elements in the intersection of SDN and NFV in Figure 6-1).

OVS was designed to address some of the well-known limitations of existing hypervisors. On Linux-based hypervisors, the traffic between external systems and local VMs is typically bridged using built-in L2 Linux bridges. Linux bridges are very effective in single-server environments, but they are not well suited for multiserver virtualization deployments, which is typically the case in cloud computing settings. In these environments, the dynamics on the number of nodes joining or leaving the network can be high; in many cases, part of the network ends up connected to special-purpose switching hardware. OVS was designed to support virtualized networks that can be distributed across multiple physical servers.

The multilayer nature of OVS refers to the fact that the switch supports several different protocols and capabilities at different network layers, such as the following:

■ OF protocol, including specific extensions to support virtualization

■ IPv4 and IPv6

■ IPsec

■ NetFlow and IPFIX

■ Link Aggregation Control Protocol (LACP), IEEE 802.1ax

■ IEEE 802.1Q VLANs and trunking

■ GRE tunnels

■ VXLAN

■ Traffic policies per VM interface

■ Multicast snooping

■ Bonding, load balancing, active backup, and Layer 4 hashing

Figure 6-4 depicts the OVS architecture. OVS consists of three main elements:

OVS daemon, called ovs-vswitchd: Runs in user space and can control several OVS forwarding planes within one machine. A single instance of the daemon is intended to run per machine because it can handle all the necessary elements around the OVS forwarding plane.

OVS database, called ovsdb-server: Also runs in user space and offers a lightweight database to maintain the switch tables and the configuration of the flow tables in the kernel. Both the ovs-vswitchd daemon and external clients and/or controllers can communicate with ovsdb-server using the OVSDB management protocol. The protocol can be used either to issue queries about the switch configuration or to manipulate its tables.

OVS data path: Implemented at the kernel level and provides a fast path for traffic forwarding.

As Figure 6-4 shows, the OVS daemon supports a northbound OF interface, enabling external control of an Open vSwitch by means of an OF controller. In this way, a centralized OF controller can create a logical view of multiple OVSs running on separate physical servers and expose them to the upper application layer (refer to Figure 6-2). Because OVS supports OF, each OVS instance internally implements a multitable forwarding pipeline and also supports the “match-action” OF mechanisms described in the previous section. Note that an OF controller can interact both with the OVS daemon and with the database directly. The example in Figure 6-4 also shows how traffic generated by external systems is forwarded to an internal virtual machine (VM).

Figure 6-4 captures one of the first solid interplays between SDN and NFV in industry. As described previously, an OVS is basically a virtual switch that can be decomposed into three main functions (ovs-vswitchd, ovsdb-server, and OVS data path), each of which implements a specific set of network-related tasks. When we look at OVS through the lens of NFV, it becomes clear that, in the NFV terminology, each of these functions is actually a virtual network function (VNF) whose configuration and behavior can be managed by an SDN controller.

Another important aspect of OVS resides in the options available for managing traffic flows. Figure 6-4 shows one of these options for traffic received from an external endpoint that needs to be forwarded to an internal virtual machine (VM). In this case, the match-action process applied to the first packet of a new flow will yield a “match miss,” generating an event (an exception) that needs to be sent to the ovs-vswitchd daemon running in user space. When ovs-vswitchd determines the matching rules that need to be pushed to the OVS tables, it inserts the necessary entries in the OVS data path (such as to flow tables in the kernel). Subsequent packets of the flow will match these entries and be forwarded following the fast path shown in the figure.

An alternative technique is to enable traffic offloading and let the hardware chipsets take control and do the heavy lifting. This is an appealing feature because it allows OVS to control both a software-only implementation of the switch and one enabling hardware support. In this latter case, the match-action process applied in hardware to the first packet of a new flow again yields a “match miss.” As a result, an event is generated and, in this case, reaches the OVS kernel module. This yields a “match miss” as well and generates an event for the ovs-vswitchd daemon in user space. As in the earlier case, ovs-vswitchd will determine the matching rules and, based on the offload policy, it will push the corresponding entries to the flow tables implemented in programmable hardware. Subsequent packets of the flow will hit these entries and be forwarded without reaching the OVS kernel module. Note that the example illustrated in Figure 6-4 shows the basic operation of OVS, without considering OVS offloading.

Other relevant features and benefits worth highlighting for OVS include the following:

■ OVS was designed to offer a programmatic means of facilitating configuration automation in virtualized network deployments.

■ OVS offers support for VM migration, including not only static state such as network configuration (ACLs, tunnels, and so on), but also live network state.

■ The OVS database supports remote triggers, which can be used to monitor the network and generate events to an orchestration system that can instrument automated actions based on the event.

■ Since 2012, the Kernel implementation of OVS is part of the Linux kernel mainline.

■ As mentioned previously, OVS can operate both as a software-only switch and on dedicated switching hardware. Thanks to its flexibility, it has been ported to several switching chipsets and to hardware and software virtualization platforms.

Despite these strengths, OVS also has limitations. The performance of the software-only version of OVS is one of them. This is especially critical because it can become one of the first bottlenecks upon flooding attacks. In light of this, both industry and academia are actively working on mechanisms to improve the performance of software-based switches, both with and without hardware support, while also adding new techniques and tool sets for programmability. Vector Packet Processing (VPP) is one of these initiatives; it represents one the most promising advances in the evolution of software-defined switches and routers.

Vector Packet Processing

Vector Packet Processing (VPP) is a technology created by Cisco and is one of the main components of the open source initiative FD.io (Fast Data–input/output). FD.io (https://fd.io) is part of the Linux Foundation and is actively working on developing a “universal data plane.” More specifically, FD.io consists of a set of projects aimed at expanding the scope and reach of data plane programmability. The primary objective is to develop more ambitious software-defined packet processing techniques that can run on general-purpose (commodity) hardware platforms, covering bare metal, containers, and VMs. Aspects such as high throughput, low latency, and high efficiency in terms of I/O are at the heart of FD.io, and VPP is key to achieve these goals.

VPP has been implemented as an extensible open source library providing switching/routing functionality. It represents a paradigm shift in the way software-based functions can process and forward packets. Unlike traditional scalar processing methods, in which pipelines are designed for processing one packet at a time, VPP supports processing a “vector of packets” in parallel. Scalar processing techniques suffer from a number of issues, mainly caused by the context switching produced by nested calls within the routines developed to process I/O interrupts. In general, the result of these routines is one of the following actions: punt a packet (the term punt, introduced by Cisco, indicates the action of sending a packet down to the next-fastest switching level), drop the packet, or rewrite and forward the packet. The problem is that each packet incurs an identical set of steps and potential instruction cache (I-cache) misses. A cache miss while reading from an I-cache generally leads to the largest delay because the thread of execution needs to stall until the instruction is fetched and retrieved from main memory. This entails context switching and thrashing entries in the I-cache. The way to improve this in traditional scalar packet processing systems is to introduce larger caches.

VPP mitigates this problem. The larger the size of the vector, the lower the processing cost per packet because I-cache misses are amortized over a longer period of time. Clearly, there are disadvantages as well if the size of the vector is too large. For example, depending on the implementation, the process might need to wait until the vector is filled in, thus losing the merits of prefetching packets into the data cache and the time saved when I-cache misses occur.

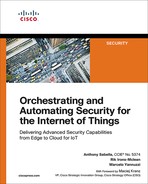

Figure 6-5 shows the fundamentals of VPP. It is based on a directed graph that consists of two different types of nodes. Input nodes are the ones used to inject packets into the directed graph; graph nodes are the ones implementing core packet processing functions. The packets are processed in batch and in parallel, and they are forwarded through the nodes in the graph in vectors. In this process, the packets are classified and then sent to different nodes in the graph, depending on their category. For instance, non-IP Ethernet packets go to the ethernet-input node and IPv6 packets go directly to the ipv6-input node, saving cycles in that way. All packets in the vector are processed together until they have all gone through the graph.

As shown in Figure 6-5, VPP offers a multilayer switch (L2–L4) that was designed to run on commodity multicore platforms. One of the main advantages versus some of its predecessors, such as OVS, is its high performance. Its current implementation can handle more than 14 million packets per second (MPPS) on a single core and support multimillion entries in its forwarding information bases (FIB), all while ensuring zero packet drops. To accomplish this, VPP can run a copy of the graph per core and exploit batching processes while efficiently caching packets in memory. It works in run-to-completion mode (avoiding context switching), and it runs in user space (also avoiding any form of mode switching, such as user mode to kernel mode). In terms of security, VPP is endowed with both stateless and stateful security features, including security groups, stateful ACLs, port security, and role-based access control (RBAC).

Figure 6-5 Directed Graph of Nodes Used for Processing a Batch of Packets (a Vector of Packets) in Parallel

The modularity of the VPP library is one of the key aspects facilitating data plane programmability. Because the VPP engine runs in user space, developers can add packet-processing capabilities and extend VPP without needing to modify code at the kernel level. Indeed, developers can insert new nodes into the VPP graph to build new packet-processing solutions. Because VPP is open source, anyone can develop new graph nodes and plug-ins for it. This approach also enables more advanced scenarios, by combining VPP with other solutions in the marketplace (for example, to build a highly efficient load balancer for OVS).

As shown in Figure 6-5, VPP leverages Intel’s Data Plane Development Kit (DPDK; see the input node at the top left in the graph). This approach has also been followed by OVS, which is being ported to DPDK as well. The union of VPP and DPDK is expected to accelerate NFV data planes and reach performances that were impossible to obtain with scalar processing techniques. VNFs requiring high I/O performance, such as virtualized deep packet inspection (DPI) systems and load balancers, are strong candidates to base their data plane designs on VPP, or at least borrow concepts from it.

Overall, VPP represents an important step in the evolution of software-defined switching and routing, enabling not only the transition from scalar-to-vector packet-processing techniques, but also the development of richer data plane programmability. The NFV community is enthusiastically looking at VPP and other recent technologies, such as P4 (Programming Protocol-Independent Packet Processors), which could have a big impact on the SDN and NFV arenas.

Programming Protocol-Independent Packet Processors (P4)

The evolution of SDN has opened a new era in the networking industry, but every big change has its challenges and side effects. Protocols such as OF were supposed to become pervasive, but OF adoption is occurring at a much slower pace than expected. In fact, other SDN projects that capitalized on its benefits, such as OVS, have gained more traction than OF itself. Part of the Internet community surmises that OF did not really take off because of the complexity associated with programmable forwarding planes. Enterprises and service providers need to evaluate the cost of such programmability and weigh the benefits of embracing OF. Pioneers in the SDN field, such as Nick McKeown, seem to have a different opinion and think that the lack of penetration is because OF fell short in its objectives and failed to take real control of the forwarding plane. Without proper means of programming and modifying the forwarding plane, changing the behavior of the packets and traffic flows remains a challenge. Whether it is because of cost and complexity or a lack of effective programming means, the reality is that OF has not reached the status that many anticipated.

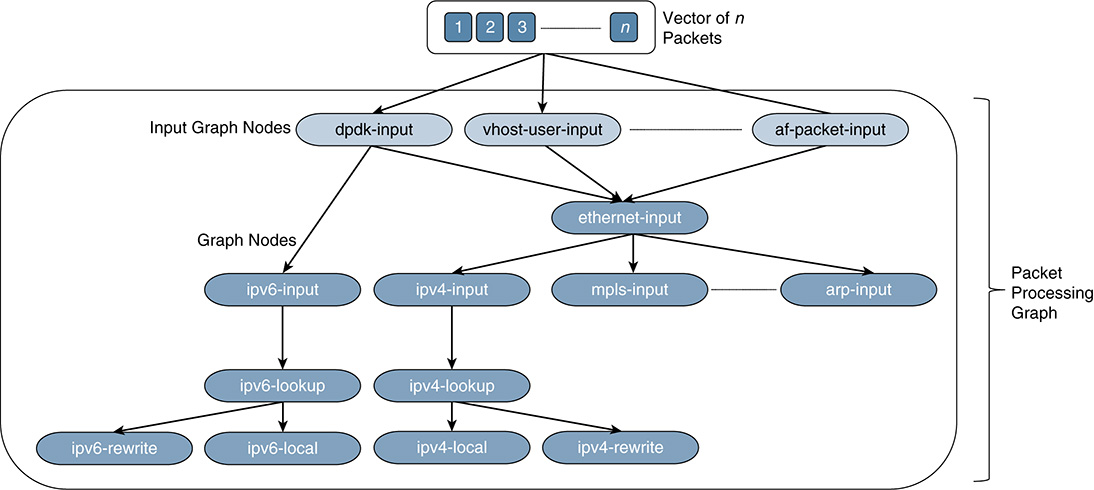

Programming Protocol-Independent Packet Processors (P4) is partly a response to increase the level of control and programmability of the forwarding plane. It remains to be seen whether P4 will succeed in reducing the complexity and overcome part of the resistance OF faced. P4 is basically a high-level (declarative) language to express rules on how packets are processed by network elements, ranging from software switches to ASIC-based appliances, in a protocol-independent way. More precisely, P4 proposes an abstract model and a common language to define how packets are processed by different technologies, including virtual switches, FPGAs, ASICs, and NPUs.

As shown at the top of Figure 6-6, P4 can work in conjunction with an SDN control plane, and developers can create P4 programs that can be mapped to different devices using a P4 compiler. Whereas OF was fundamentally designed to control and populate forwarding tables in fixed function switches, P4 increases the level of programmability, allowing SDN controllers to define how the switch should behave. For instance, OF can operate on a predefined (that is, fixed) number of protocol headers, but it lacks the capability to add and control new headers dynamically. This is the functionality required to actually develop protocol-independent switches, so P4 was designed with the following goals in mind:

■ Reconfigurability: Programmers should be able to change the way packets are processed by switches, including actions such as redefining the packet parsing and processing functions themselves.

■ Protocol independence: Switches should be tied neither to specific network protocols nor to packet/header formats. Instead, the SDN control plane should be able to specify how to parse and extract new headers and specific fields, as well as define the set of “match+action” tables required to process those fields or the entire headers (see Figure 6-6).

■ Target independence: A P4 programmer should know neither the specific details of the underlying switch hardware nor its software. The role of the compiler is to map a target-independent program written in P4 to a target-dependent binary in order to instrument a specific switch configuration.

As Figure 6-6 shows, switches that support P4 can forward packets via a programmable parser, followed by multiple stages of match+actions tables between ingress and egress that can be arranged in series, in parallel, or as a combination of both. The role of the parser is to process the header of incoming packets. The match+action tables perform lookups on header fields and apply actions and packet modifications according to the first match entry found in each table. Overall, a P4 program focuses on the specification of the parser, the match+action tables, and flow control through the pipelines, to define the switch configuration for any protocol implemented by a P4 programmer.

Unlike OF, which relies on a fixed parser, P4 supports a programmable parser. This parser allows new headers to be introduced and controlled whenever needed. Furthermore, in OF, the match+action tables operate in series, whereas in P4, they can be implemented and combined in parallel. The challenge is obviously how to find a balance between the desired level of expressiveness and programmability and the complexity of implementing it in practice across a variety of appliances and software switches.

The forwarding model in P4 is controlled by two main operations: configure and populate. The configure operations are the ones in charge of programming the pipeline (defining the set of match+action stages) and specifying the header fields that need to be processed at each stage. This determines the protocols that are supported and how the switch will process the packets. The populate operations determine the policy applied to the packets by adding or removing entries to and from the match+action tables that were defined during a configuration operation. These day 1+ configuration operations are usually referred to as runtime rules.

In a nutshell, a P4 program specifies the following elements:

■ The fields and size of each header within a packet

■ The header sequences allowed within a packet

■ The type of lookup to perform, including which input fields need to be used and the actions that can be applied

■ The dimension of each table

■ The pipeline, including the tables layout and the packet flow throughout the pipeline

■ A parsed representation on which match+action tables apply to each packet

■ The set of ports to which the packets will be sent

■ The queuing mechanisms

■ The egress pipeline and the resulting packet to be transmitted

An aspect worth highlighting is that the initial implementations in the marketplace are betting on switch silicon and optics on P4-enabled appliances instead of pure software switches. Nevertheless, the debate about whether P4 will overcome some of the challenges faced by OF will continue for the next few years.

Although the technologies analyzed so far are mainly focused on switching architectures, the evolution of SDN gave rise to much more ambitious paradigms that have gone far beyond packet processing and switching fabrics. The next three sections describe initiatives related to software-defined technologies that have certainly raised the bar of what it is possible to achieve through software in the networking and telecommunications industries.

OpenDaylight

The push for SDN and increased network programmability gave birth to a number of open source initiatives at almost every layer of the network stack. OpenDaylight (ODL) is considered a mature project that addresses the development of this new stack. Thanks to its progress, it has become a reference in the open networking industry. In a nutshell, ODL is a Linux Foundation Project that coordinates the development, distribution, and maintenance of probably the largest and most comprehensive open source SDN platform available at the time of this writing. The initiative started in 2013 and currently includes more than 50 organizations. It has a solid developer community composed of more than a thousand contributors actively working on the platform; according to the ODL foundation, the code base has around a billion subscribers, which makes it the most popular open SDN platform worldwide.

One of its central objectives is to deliver a programmable SDN framework that enables the combination and automation of protocols and networks of different sizes and shapes, while abstracting the details of the underlying network infrastructure from application developers. ODL is driven by a collaborative community that fosters openness and vendor neutrality. Its current release, called Oxygen, is the eighth version of the platform released by the ODL foundation. The last releases mainly focused on the development of extensions and enhancements to support three promising areas for SDN: IoT, Metro Ethernet, and cable operators. In this way, ODL has expanded its scope over the years and has applied automation of programmable networks to use cases beyond traditional networking settings (for example, in the IoT space).

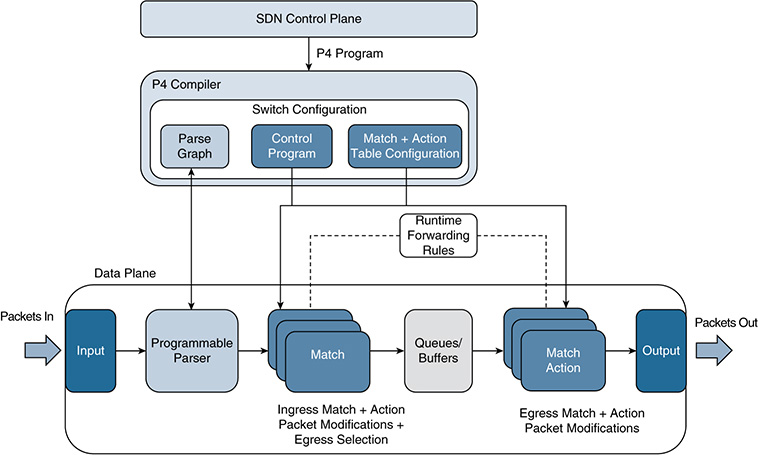

Figure 6-7 shows a simplified version of the ODL reference architecture. ODL was designed as a modular and extensible platform to support a large number of use cases combining SDN and NFV solutions. To better understand its reach, we describe it using a bottom-up approach. The architecture consists of the following layers:

■ Southbound interfaces and protocol plug-ins: As shown at the bottom of Figure 6-7, this layer interfaces with the physical and virtual infrastructure. It supports a wide variety of protocols, including different versions of OF, OVSDB, NETCONF, LISP, and BGP. It also includes specific protocols in the IoT space, such as COAP. Each of these protocols has its own plug-in, which can be dynamically linked to the Service Abstraction Layer (SAL) on top. The SAL exposes the device services to the upper layers (the SDN controllers and applications). The SAL is obviously a fundamental part of the ODL controller platform because it bridges the gap between the SDN applications located northbound the SAL and the SDN devices managed through the plug-ins mentioned previously. Observe that ODL supports OF-enabled devices as well as OVSs, along with many other physical and virtual elements (routers, access points, load balancers, and so on).

■ A Model-Driven Service Abstraction Layer (MD-SAL): In ODL, network devices and applications are all elements represented by a model—more specifically, a YANG model. The interactions between these elements occur within the SAL, which provides the adaptation mechanisms and enables data exchange between a network device and an SDN application (both of which are abstracted and represented as YANG models). This approach offers a formal and standardized specification of the capabilities of a network device or an application, in a machine-readable way. A key advantage is that SDN applications are not exposed to the specific details of the device, such as its particular CLI implementation—nor do they need those details to communicate with the device. As long as the SAL has access to the YANG models that abstract and stipulate the communications northbound with the applications and southbound with the devices, the data exchange and configurations mediated through the SAL are guaranteed. In ODL terminology, the SAL basically enables the communication between the producer and consumer models. A producer model implements an API and provides data through it. A consumer model gets data through the API. The role of a producer or consumer depends on the data to be exchanged. For instance, a protocol plug-in and its associated model act as a producer when supplying information about the underlying network to the upper layers. When the SDN applications send configuration instructions to networking elements via the SAL, the corresponding plug-ins receiving the data act as consumers. In this way, the SAL enables SDN applications to take control of the configuration of physical and virtual elements in the network. In general, the SDN applications that implement the business logic and algorithmic decisions consume the functions and resources offered by the controller platform; they make decisions based on the information gathered (in some cases, in real time) and use the controller platform again for pushing rules onto the network infrastructure. An orchestration system is key to enable all these operations, leveraging the abstractions (models) and interfaces (plug-ins) offered by a collection of dynamically pluggable modules to ODL’s SAL. The SAL and its YANG-based/model-driven nature are the secret sauce and the biggest differentiator in ODL, making it the de facto standard open source SDN control platform in industry. In summary, the SAL does the following:

■ Matches producers and consumers based on its data stores and the requests received through the different APIs running in the platform. Thanks to the SAL, consumers can find a given producer, trigger remote procedure calls (RPC) to get data from the producer, or be discovered and receive notifications or configuration commands from producers whenever needed.

■ Enables and instruments the exchange of information between producers and consumers. A producer can insert data into SAL’s storage; a consumer can read data from SAL’s storage. The SAL’s data store handles both operational and configuration data.

■ The ODL controller platform: This layer offers the control plane functions, a set of controller applications embedded in ODL itself, and the network abstractions required for controlling and managing the network infrastructure and its protocols. As outlined shortly, this layer also contains a group of modules that enable orchestration functions in ODL. For instance, this includes the integration of OpenStack Neutron, which offers functions that an orchestration system can use to automate the configuration of devices, thereby offering products such as networking as a service. More specifically, this layer has three constituent blocks:

■ Control plane functions: Modules for managing OF switches, OF forwarding rules, OF statistics, processing topologies, L2 switches such as OVS, Link Aggregation Control Protocol (LACP) and Locator/ID Separation Protocol (LISP) services, and more.

■ Embedded controller applications: An ecosystem of control applications offered by ODL. These applications can run in ODL itself. An installation of ODL does not need to pick them all, so an ODL instance can be quite lightweight, if needed. Among the applications ODL offers are Cardinal (monitoring), Controller Shield (security/anomaly detection), DOCSIS abstraction (for cable services), EMAN (energy management), NetIDE (client/server multicontroller engine that also integrates with an IDE for software development and testing), Neutron (OpenStack’s network manager), and Virtual Tenant Network (VTN) manager (enables the management of multitenant virtual networks).

■ Network abstractions: An ecosystem of applications that enable policy control, including separating the what (the intent) from the how (the instantiation process that maps to the desired intent). This block includes functions such as NEMO (domain-specific language enabling the abstraction of network models), the Application-Layer Traffic Optimization (ALTO) protocol manager, and Group-Based Policy (GBP) services (inspired by promise theory and based on the work initiated by Mike Dvorkin toward the decoupling of high-level policy definition from its specific implementation).

■ ODL APIs: These are a set of northbound APIs offered on top of the controller platform, including REST, RESTCONF, NETCONF, and AMQP interfaces.

■ Authentication, authorization, and accounting (AAA): Security is a key focus area for ODL. The platform provides a framework for AAA, as well as automatic discovery and securing of network devices and controllers. Security is part of a larger charter that, in ODL terminology, is called S3P (Security, Scalability, Stability, and Performance). The ODL community is constantly improving the code base across all its projects in the areas of S3P. Development and testing groups team together to assess how any new change impacts the S3P of the ODL platform. The ODL foundation is also working with the Open Platform for NFV (OPNFV) project to support a trial environment that could offer performance tests for SDN controllers in a realistic way.

■ Independent network applications: A set of SDN applications that could be implemented by any software developer. These leverage the abstractions and native functions offered by the ODL platform to build ambitious software-defined networks. This is the layer where the orchestration system would reside.

■ OpenDaylight User Experience (DLUX) application and the NeXT toolkit: DLUX mainly manages aspects such as allowing users to log in to the ODL platform, get node inventory, view statistics and the network topology, interact with YANG data model stores, and configure elements such as OF switches through ODL. The NeXT UI, on the other hand, is a toolkit that Cisco developed and contributed to the ODL community. It provides the means to perform multiple actions and configurations centered on the network topology. NeXT is an HTML5/JavaScript toolkit that is used to draw and display network topologies (including different layout algorithms), visualize traffic and paths, and enable user-friendly configurations. Figures 6-8 and 6-9 show snapshots of the NeXT UI for BGP and ACL configurations through ODL plug-ins.

Figure 6-9 Another Example of OpenDaylight’s NeXT UI Toolkit—in This Case, the Configuration of an ACL

ODL’s code is now part of various solutions and SDN applications that are commercially available. To implement a new SDN application, developers typically need to follow these steps:

Add/choose a set of southbound protocols plug-ins.

Select some or all of the modules offered by the ODL Controller Platform, such as control plane functions, embedded or external controller applications (recall that some of them might be contributed by the open source community and, therefore, might not necessarily become part of ODL’s distribution), and network abstractions and policies.

Build controller packages around a set of key ODL components, such as the MD-SAL and YANG tools. YANG tools offer the necessary tooling and libraries to support NETCONF and YANG.

As an open source project, ODL represents a dynamic environment. Therefore, it is essential to ensure that new software components can be deployed and tested without interfering with mature and thoroughly tested code. To this end, ODL leverages Apache Karaf. Among other functions, Apache Karaf helps coordinate the lifecycle of microservices, manage logging events related to the infrastructure, and enable the remote configuration and deployment of microservices in production environments with proper levels of isolation. ODL also uses other successful service platforms, such as the OSGi framework and Maven, to build packages that manage the features and corresponding interactions between components enabled by Apache Karaf. The modular design of the platform facilitates the reutilization of services created by other developers. This modular approach also allows developers and users to install only the ODL services and protocols they need.

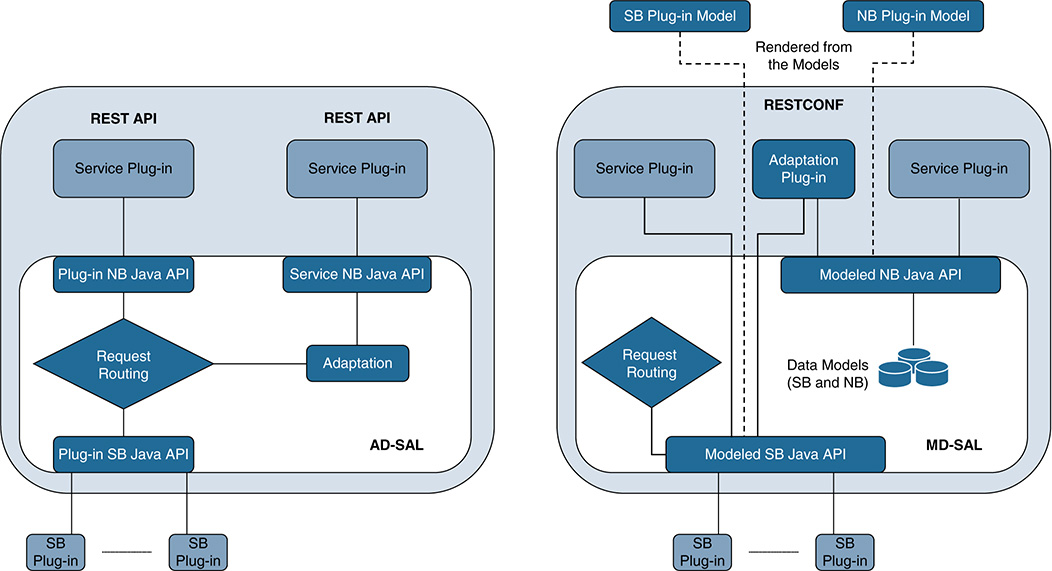

Another important aspect of ODL has to do with its contribution to model-driven SDN architectures. In particular, ODL represents a transition in industry from API-driven service abstraction layers (AD-SAL) to model-driven ones (MD-SAL), and this approach has become a distinctive factor of ODL’s SAL. Table 6-1 compares API-driven and model-driven SALs.

Table 6-1 Comparison of an API-Driven SAL (AD-SAL) and a Model-Driven SAL (MD-SAL) (Adapted from the Article “Difference Between AD-SAL and MD-SAL”)

API-Driven SAL |

Model-Driven SAL |

|---|---|

Routing requests between producers and consumers using the SAL APIs and data adaptations required are all statically defined at compile/build time. |

Routing requests between producers and consumers using the SAL APIs are defined by machine-readable models, and data adaptations are handled by internal plug-ins. The API code is generated directly from the models when a plug-in is compiled. When the plug-in OSGI bundle is loaded into the controller, the API code is loaded into the controller as well, along with the rest of the plug-in code containing the model. |

Northbound and southbound APIs are typically present in an AD-SAL even for functions and/or services that are mapped 1:1 between northbound plug-ins and southbound plug-ins. |

An MD-SAL enables the northbound and southbound plug-ins to use the same API, which is generated from a model. Any plug-ins can become an API (service) producer or consumer as needed by a specific application (captured in the model). |

In AD-SAL there is typically a dedicated REST API for each northbound and southbound plug-in. |

Instead of being needed to develop dedicated APIs, an MD-SAL can provide a common REST API to access data and functions defined by the models. |

An AD-SAL provides request routing functionality and selects a southbound plug-in based on the service type. It may also provide service adaptation for northbound (service, abstract) APIs, whenever these are different from their corresponding SB (protocol) API. |

An MD-SAL also provides request routing functionality and the infrastructure to support service adaptation. The difference is that the service adaptation functions are not provided by the SAL itself; they are provided by external adaptation plug-ins, which are implemented just as any other service plug-in in ODL. |

In an AD-SAL, request routing is resolved by plug-in/service type. An AD-SAL knows which node instance is served by which plug-in. For instance, when a northbound service plug-in requests an operation on a given node, the request is routed to the appropriate plug-in, which then routes the request to the appropriate node. |

Request routing in an MD-SAL is achieved based on both protocol type and node instances because node instance data is exported from the plug-in into the SAL. |

An AD-SAL is stateless. |

An MD-SAL can store data for models defined by plug-ins. Producer and consumer plug-ins can exchange data through the MD-SAL data store. |

In an AD-SAL, services usually provide both asynchronous and synchronous versions of the same API method. |

In an MD-SAL, the same API can be used for both synchronous and asynchronous communications. MD-SALs usually encourage an asynchronous approach to application developers, but they do not preclude synchronous calls (e.g., mechanisms are available allowing a caller to block until the message is processed and a result is sent back). |

Figure 6-10 sketches a more detailed picture for the SAL and compares both models. For example, the AD-SAL routes requests from one of the northbound (NB) service plug-ins shown at the top of Figure 6-10 to one or more southbound (SB) plug-ins at the bottom. Even when the NB and SB plug-ins and APIs are essentially the same, in an AD-SAL, they still need to be defined and deployed.

We now consider a different scenario, in which one of the NB service plug-ins is using an abstract API to access services provided by one or more SB plug-ins. As shown in Figure 6-10, an AD-SAL can provide service adaptation and perform the required translations between the abstract NB API and the SB plug-in APIs.

In the case of an MD-SAL, the adaptation plug-in shown on the right side of Figure 6-10 is just another (regular) plug-in. As with any other plug-in, it produces data that is sent to the SAL and consumes data from the SAL through APIs that can be automatically rendered from models. In an MD scenario, the role of an adaptation plug-in is to perform model-to-model translations between two APIs; the data models can contain information to aid the routing process. Thanks to the model-driven approach, the SAL can support runtime extensibility because the APIs can be extended and regenerated without needing to stop any running service. The southbound and northbound interfaces and the associated data models can be crafted and created using YANG and can be stored in a repository within the SAL.

Figure 6-10 From an AD-SAL to an MD-SAL and Interactions Involving Northbound (NB) and Southbound (SB) Plug-ins and Services (Adapted from the Official ODL Site)

An important aspect is that the work being carried out by ODL is very well aligned and is highly complementary to the vision that the ETSI ISG group on NFV is pushing forward. The NFV vision has already gathered hundreds of telecom carriers and companies around the world. It seeks to leverage the utilization of off-the-shelf technology in building and composing virtualized network functions (VNF). Aspects such as deciding where to run those VNFs; performing the corresponding instantiations of computing, networking, and storage capacity required; and chaining these VNFs to build “networks” are clearly all central to NFV. ODL is a candidate to meet a number of these goals and provide SDN-based control for NFV settings. In this regard, ODL has already begun to work with the Open Platform for NFV (OPNFV) and is starting to become adopted by some content services providers (CSP) who want to deploy and control NFV settings using SDN. In fact, multiple operators and vendors are joining forces in hopes of integrating open source components contributed by different projects and creating a reference platform to accelerate SDN/NFV deployments. For instance, OPNFV has selected ODL mainly because of the following features:

■ Its openness and vendor-neutral nature.

■ Model-driven SAL and the mechanisms offered by ODL in support of a wide range of physical and virtual network functions and technologies.

■ Policy management support and intent-based features, as well as the capability to interface with external orchestration systems (for example, the one OPNFV supplies).

■ Network virtualization and service chaining capabilities. NFV forwarding graphs and service function chaining (SFC) are explained in detail later in Figures 6-15 and 6-16.

It is worth highlighting that ODL is actively contributing to the convergence of several open source projects and standards. For example, initiatives such as ODL, OpenStack, and FD.io have joined forces to create a framework called the Nirvana Stack, presented in 2017 at one of the OpenStack Summit events. The Nirvana Stack also leverages the liaison with OPNFV to provide a common means to orchestrate, deploy, and test VNFs and SFC within OpenStack. Furthermore, ODL toolchains for cloud, NFV, and programmability are becoming not only part of the core of other open source frameworks beyond OPNFV and OpenStack (such as the Open Network and Automation Platform [ONAP]), but also part of the architectures developed by standards bodies such as the Metro Ethernet Forum (MEF).

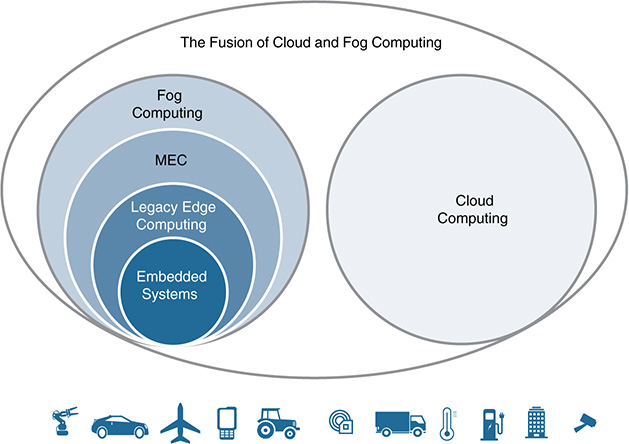

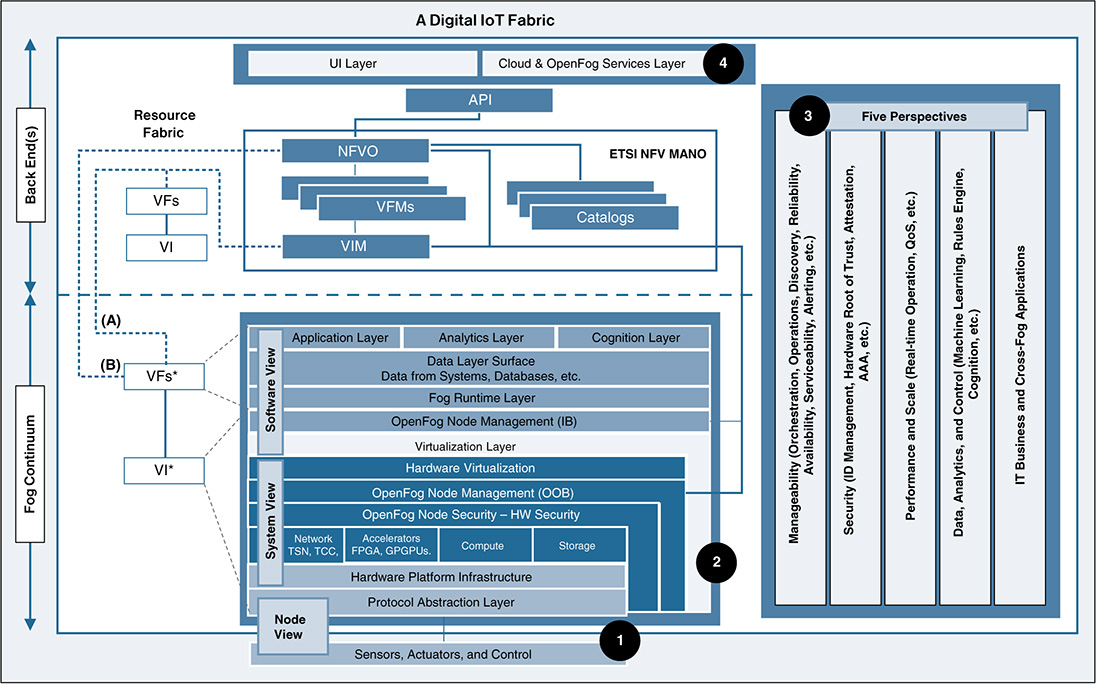

The modularity, programmability, and flexibility these new stacks offer can be applied to a growing number of use cases in the IoT space. Sectors such as smart cities, utilities, manufacturing, and transportation are undergoing a profound transformation and are transitioning from legacy systems to new IoT-centric architectures. Many of those different use cases represent a huge opportunity for SDN and NFV technologies, particularly for combined SDN/NFV architectures that can offer a uniform means to onboard, secure, and manage the lifecycle and communications of a large number of heterogeneous virtual and physical elements under a common management paradigm. ODL has accomplished part of this and is already supporting IoT-specific plug-ins, enabling its utilization in certain use cases. The architectural decisions that are being made about SDN and NFV, especially those related to orchestration and automation, will certainly influence future IoT architectures, such as those that embrace fog computing (Figure 6-21 to Figure 6-26 cover these aspects in detail).

After this succinct description of ODL’s role, its main components, and its interplay with various open source and NFV initiatives, it should become clear that ODL is the result of an industry move to accelerate and unfold the adoption of SDN, including the nascent application of SDN controllers to IoT use cases. It is worth highlighting that although ODL is a great example of industrywide collaboration and is a reference for SDN open source controllers, bringing it into production is still challenging in specific scenarios. Two of these reasons are that network service lifecycle management is lacking (it needs to be implemented at the application layer), and operationalization typically demands high skills (for example, professional services) instead of an easy-to-manage controller that most customers are looking for.

Extending the Concept of Software-Defined Networks

The success of SDN in the networking field rapidly captured the attention of the research community. Various systems that were traditionally implemented in hardware started to be re-examined, with the aim of assessing which components could be developed in software and become openly programmable. One area that embraced this approach is radio communications, with special emphasis on radio signal processing functions in software that need to interface with a hardware-based radio frequency (RF) module. The research efforts in this area keep growing steadily and lie under what is usually referred to as software-defined radio (SDR).

In a nutshell, SDR represents a paradigm shift in radio communication systems. Functions that for decades were implemented in hardware, such as digital tuners, modulators and demodulators, and multiplexers, are now being implemented in software. The idea of transitioning toward more software-oriented RF communications is older than modern SDN. Indeed, it is important to understand that SDR could live without SDN (although it is fair to say that SDN has revamped the interest in SDR and accelerated the efforts and investment around it).

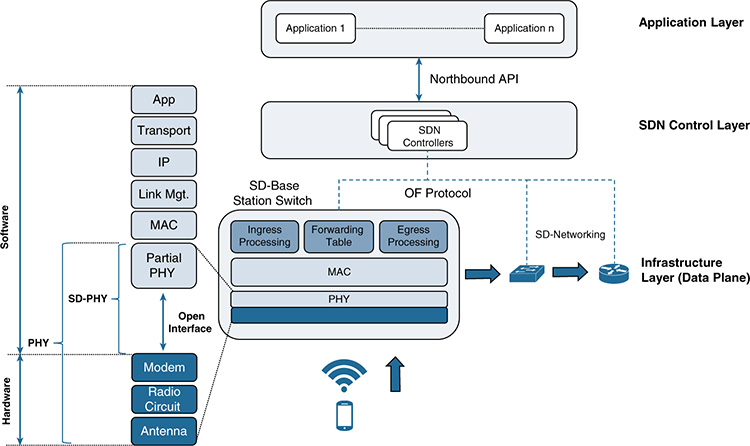

Although both technologies can coexist, there are obvious benefits when they come together. Figure 6-11 illustrates a graphical example in which a switch supporting a software-defined radio base station is managed by an SDN controller.

Figure 6-11 Example Showing a Radio Base Station Switch Integrating SDR at the PHY Layer, Managed by an SDN Controller

The central part of the figure shows a radio base station that provides services to mobile subscribers. The traditional three layers present in the SDN model are clearly visible in the figure (the switch, the SDN controller, and the applications on top). In the example, the switch that implements the radio base station supports the OF protocol. At the data plane level, it can forward traffic toward other SDN-enabled devices that also support OF (the software-defined L2/L3 devices shown on the right).

The left side of the figure shows the utilization of SDR and illustrates the differences between a traditional physical layer (PHY) and a software-defined PHY (SD-PHY). Whereas in legacy systems, basically anything below the MAC layer entails a hardware implementation, SDR gradually absorbs part of the functions in the PHY layer and implements them in software. In this particular example, the RF front end is implemented in hardware and supports the antenna, the lower-level radio circuits, and the modulation and demodulation functions. An open interface on top of it enables communication between the RF front end and a set of software-defined functions, which now implement part of the functionality of the PHY.

The combination of SDR and SDN has great potential because the software components offer much more flexibility to expose information from the RF module to an external SDN controller. Once processed by the SDN controller, the information can be used by the applications in the layer above to introduce innovations for topics such as power adjustment, cognitive radio, or capacity optimization. This example shows the synergies between SDR and SDN, but they target different problems and do not depend on each other. SDR follows a methodology that is essentially the same as the one used in SDN:

Decouple certain functionality at the data plane level from legacy (hardware-based) systems.

Implement those functions in software.

Make the implementation open and programmable so that the developer community can start innovating in the field.

Define interfaces and protocols in such a way that the data plane can be controlled and managed externally.

Another field in which software-defined technologies have gained substantial traction is wide-area networks (WAN). A WAN extends a computer network over large distances, from a few miles up to transoceanic distances. WAN technologies are largely used in the enterprise and public sectors to connect branch offices, headquarters, and data centers in different ways, depending on the requirements (for example, using a hub-and-spoke model, with the headquarters as the hub and branch offices as spokes, or a partial-mesh or even a full-mesh topology for reliability).

In traditional WAN schemes, the connection between sites requires purpose-built hardware. These devices support not only the links and protocols enabling the delivery of packets across the WAN, but also the mechanisms to guarantee the performance required by the applications and databases conducting the different business functions. In this regard, the expansion of networks across large distances poses multiple operational challenges; the larger the size of the network, the more difficult it becomes to manage the services across a WAN. Applications such as virtual desktops; streaming live media; and collaboration tools such as videoconferencing, voice, or virtual meeting rooms usually demand low latency and are sensitive to jitter and packet loss. Other applications, however, are more sensitive to bandwidth availability and network congestion, so the complexity and cost of expanding, managing, and troubleshooting an application portfolio on WAN environments tends to increase significantly as the network scales up.

These challenges became very evident as many applications started to migrate from privately owned IT infrastructures and data centers to public clouds. Before organizations adopted cloud-based solutions, a considerable part of their traffic was exchanged between branch offices and headquartered data centers and networks. With the rise of public clouds, traffic patterns changed substantially as more traffic involved the cloud directly. As a result, the rigidness of a WAN model based on dedicated and expensive links between sites, supported by specialized (proprietary) hardware, rapidly started to show its limitations. Many start-ups and vendors begun to develop a more flexible model tailored to the cloud, with the aim of offering increased agility while reducing cost. These efforts led to platforms that were better prepared to deliver services with high performance over the WAN. These new platforms are built on software-defined concepts and are categorized as SD-WAN solutions.

As with the evolution of SDR, the transition from legacy WAN technologies to modern SD-WAN followed the four steps listed previously. In the SD-WAN model, the control and configuration of the connectivity between sites are decoupled from the infrastructure devices that support the communications and protocols over the WAN. Controllers implemented in software are typically hosted in the cloud, allowing centralized configuration and operation and maintenance (OAM) tasks of a WAN in a simpler and more agile way. Again, the central idea resides in the separation (decoupling) of the control and configuration mechanisms from the devices supporting the WAN. A centralized controller is used to define and command the enforcement of a set of policies, including connectivity, reliability and failover mechanisms, security, and traffic prioritization. The SD-WAN controller can receive monitoring information about the status of the network and make decisions in real time to ensure that the applications meet the performance and service-level agreements (SLA) centrally defined.

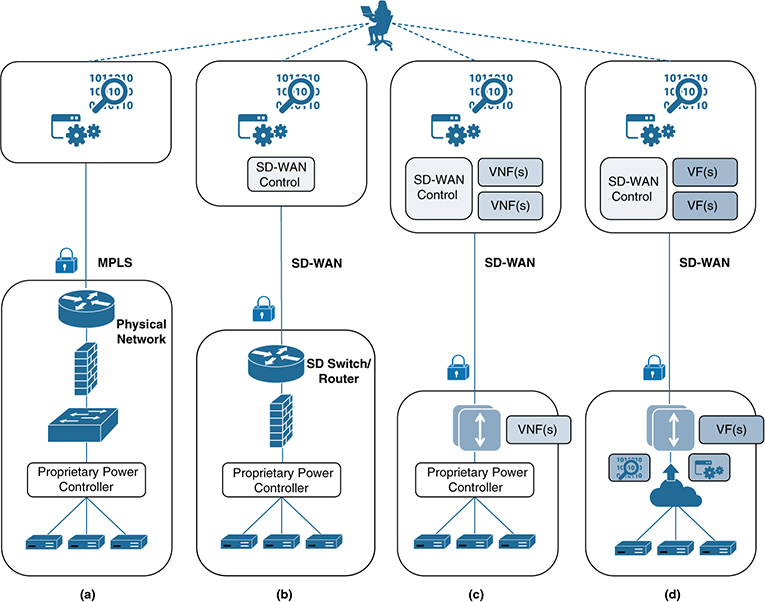

Another important step in the evolution of WAN technologies is the integration of virtualization, making SD-WAN another area in which SDN and NFV are starting to converge. With the introduction of virtualization techniques in the WAN, some organizations are starting to replace traditional branch routers and physical appliances at the network edge with virtualized elements. This approach has several advantages for end customers. First, it substitutes expensive routing/switching hardware with virtualized appliances, not only to support the communications over the WAN, but also to provide the desired level of performance for the different applications. Second, SD-WAN technologies leverage consumer-grade Internet and cellular connections, which are typically far less expensive than dedicated enterprise-grade MPLS links (see Figure 6-12). The combination of these factors contributes to a reduced CAPEX associated with the WAN, offering much more flexibility in the way headquarters and branch locations communicate with each other and also with the applications running in the cloud. Third, SD-WAN solutions give customers centralized control of their connectivity and provisioning processes, security and VPNs, communication policies, application delivery, application-level performance, and SLAs, all in a simple and intuitive way. This streamlines operational tasks substantially. This approach not only reduces the OPEX associated with maintaining and scaling the WAN, but it also increases the elasticity to absorb and manage traffic in a more dynamic and cost-effective way, compared to legacy WAN solutions. Indeed, the tendency is to move toward a WAN as a service or network as a service model, in which the WAN configuration and the services running on it become more dynamic and are better suited to support models such as software as a service (SaaS) or infrastructure as a service (IaaS) in the cloud.

Figure 6-12 shows an SD-WAN scenario that combines multiple locations, including headquarters, campus, branch and small/home offices, public and private clouds, and data centers with different communications means and centralized control to build more flexible and cost-effective WANs. SD-WAN solutions are characterized by four basic elements, according to Gartner: