Chapter 11. Data Protection in IoT

Topics covered in this chapter include

If we ask a group of technologists across different fields the question, “What is data protection?” the answer will vary considerably, depending on their area of expertise. A backup administrator will associate data protection to backups and data restoration processes, whereas storage administrators will think about data persistence, storage replication, and Redundant Array of Independent Disks (RAID). An expert on General Data Protection Regulation (GDPR) will immediately think about protecting consumers’ personal data. A security consultant will probably associate data protection with the set of mechanisms needed to secure data at different levels. In summary, data protection means different things to different people in different contexts.

For the purpose of this book, data protection is the combination of proactive and reactive technologies and mechanisms, along with best practices on securing data in an IoT platform. It is about preventing unauthorized access to data and securing its use during its entire lifecycle, including data produced at the data plane, control plane, and management and operational planes. As Preston de Guise says in his book Data Protection: Ensuring Data Availability, data protection goes beyond the responsibility of IT departments. It should be the result of close collaboration and strategic alignment among IT, OT, and the different lines of business (LoB) that make up an organization. In this chapter, our focus is obviously IoT, a field in which ensuring tight coordination among IT, OT, and LoBs is key to protecting sensitive data.

However, data protection in IoT is far from trivial. A number of technical and nontechnical factors make developing security mechanisms to protect data a challenging endeavor. For instance, several industries are currently transitioning from legacy systems toward new digital IoT platforms. Obviously, this transformation process will not occur overnight. In most cases, the transition involves the coexistence of brownfield and greenfield technologies for years. Many of the brownfield technologies that need to remain operative were developed in the 1980s, and it is not uncommon to find elements in an industry plant that were designed without any security. Additionally, some of those unsecure elements could be part of key productive processes, so the data that they use and produce might be critical for the plant. At a glance, we can think of a number of potential palliatives and add-on technologies to protect the data used and produced by unsecure elements in a data pipeline. Unfortunately, adding such elements might be impractical or simply unfeasible due to safety regulations. For example, operators of an industrial control system (ICS) might need to access the data in those elements in real time (for example, in plain text during production), but personnel in the industrial zone might not have the means, training, or time to deal with secure data. As mentioned previously, safety might be another obstacle because adding new hardware or software components to secure data on existing machines and elements in an ICS might demand the re-homologation of certain safety and quality processes.

In practice, the approach most ICSs follow is quite different. As described in Chapter 5, “Current IoT Architecture Design and Challenges,” ICSs are supported by the layered architecture in Figure 11-1. This is based on the standard IEC 62443, which is the successor of the well-known Purdue Model for Control Hierarchy and the ISA 95 standards. The ultimate goal of ISA99/IEC 62443 is the availability of ICSs (and the data), followed by data integrity. As Figure 11-1 shows, the model outlines six levels of applications and control in a manufacturing enterprise (Levels 0–5), arranged into four main zones (the cell/area zone, the industrial zone, the DMZ, and the enterprise zone.

Level 0 includes the sensors, drives, actuators, robots, and instrumentation elements that are necessary to carry out a manufacturing process. All of them produce data and, in practice, many of these elements have limited security or even no security at all. To defend against security threats on the data at Level 0, the elements at this level are typically isolated from the outside world. All communications are supervised and controlled, including short-range radio communications. Preventive mechanisms are also in place to avoid insider attacks, either intended or unintended because of negligence or involuntary mistakes. For instance, in some cases, operators cannot even access the lower levels (Levels 0–2) with their own mobile phones and need to use dedicated radios for internal communication. Increasingly, IT devices in a plant will have fewer I/O ports exposed (for example, free USB ports waiting to be used), which an attacker can potentially exploit to inject malware.

Figure 11-1 Segmentation of Responsibilities and Data in a Manufacturing Enterprise, Adhering to IEC 62443 and the Purdue Model of Control

The devices in Level 0 are controlled by the elements depicted in Level 1; they are typically responsible for batch, discrete, sequence, and hybrid control. The elements in Level 1 include programmable logic controllers (PLC), distributed control systems (DCS), remote terminal units (RTU), and so on. The next level in the hierarchy is the area of supervisory control, or Level 2. It includes the manufacturing operations equipment for an individual production area and typically covers the following components:

■ Human Machine Interfaces (HMI)

■ Alarm systems

■ Engineering and control workstations

A considerable part of the data used, produced, and exchanged at Levels 1 and 2 is critical for a manufacturing enterprise, so protecting this data is vital for the business. Therefore, similar considerations apply here.

The next level covers manufacturing operations and control (Level 3). The applications and elements at this level include the following:

■ Production control, scheduling, and reporting systems

■ Optimization control

■ Historian data

■ Domain-specific controllers

■ Remote access in a highly supervised way

■ Network file servers and IT-related functions that support the operational processes and tasks at Level 3 and below

Observe that some of the applications and elements in the industrial zone can communicate with the systems in the enterprise zone through the DMZ. The DMZ provides secure access and control, enabling data between these two zones to be exposed and delivered in a controlled way. In practice, direct communications between Levels 0–3 and the enterprise zones (Levels 4–5) are strongly discouraged, so any potential data exchange is supervised and goes through the DMZ. The DMZ segments and separates the OT domain (Levels 0–3) from the upper levels.

Levels 4 and 5 relate to site business planning and logistics and the enterprise network, respectively. Level 4 is often perceived as an extension of Level 5; it includes elements for managing inventories, handling capacity and business planning, reporting, scheduling, planning operation and maintenance (OAM) tasks, and so on. Level 5, on the other hand, is where the corporate IT infrastructure and applications reside. The elements, applications, and data in Levels 4 and 5 are usually managed and secured by corporate IT.

The hierarchical model in Figure 11-1 not only separates roles in an ICS but also segments how data flows are controlled. Its aim is to protect the data produced and used at the different levels. For instance, enterprise applications and decisions made at Levels 4 and 5 are fed from data supplied by Level 3. This is typically performed in a secure way through the control boundaries imposed by the DMZ. Although this model has historically governed ICSs and the way manufacturing enterprises were architected and operated, the divide between IT/enterprise and OT services and data is no longer seen as a must. With the advent of IoT, the separation between IT and OT is rapidly blurring. Many use cases enabled through novel IoT technologies often entail smart combinations of IT and OT services to maximize business outcomes. In general, bringing OT to IT is not an option. However, bringing IT capabilities to the OT field is perfectly feasible. This is already happening, driven by the adoption of fog computing powered by orchestration, automation, and data protection. This approach is a game changer in the desired convergence of IT and OT. IT services are becoming operationalized and deployed alongside OT services. This allows one to exploit and extract value of data produced in the field and generate business value in ways that operational technologies are not geared to produce. In summary, even in highly controlled environments, such as ICSs, the way data is being controlled and used is changing. Data protection mechanisms in ICSs will need to evolve and adapt accordingly.

Even though the convergence of IT and OT seems unavoidable, it is still too early to understand its ramifications and its potential impact on data protection techniques. In Part IV of this book, “Use Cases and Emerging Standards and Technologies,” we cover a set of transformational use cases, with special focus on security and orchestration involving a full IoT stack (covering the infrastructure, OSs, virtualized environments, applications, data, service assurance, and more). As Chapter 5 explained, under the hypothesis of a converged IT/OT scenario, the notion of a full IoT stack poses challenges regarding who owns what data and services and, therefore, who is responsible for protecting what data. The advantage of orchestration and automation techniques is that, once IT and OT departments agree upon the definition and lifecycle management of an IoT service and its data across the full stack, orchestrated transactions can also help in automating data protection; this lessens pressure on IT and OT administrators and their corresponding responsibilities. Access to data can be subject to role-based access control (RBAC), which can be automated and enforced as part of the orchestration process. This approach gives different IT and OT administrators access to different classes of data in an automated way.

Clearly, the need for data protection goes way beyond ICSs and the layered model depicted in Figure 11-1. Every industry vertical faces similar challenges, including smart cities, transportation and smart vehicles, utilities and smart grids, and smart buildings. However, the adversary forces and security needs vary a lot from one vertical to another. As described previously, the data managed at Level 0 in a manufacturing plant can be fenced so that it remains isolated from the outside world, to protect the operational processes (see Figure 11-1). However, a smart vehicle works in exactly the opposite way—that is, the operations required cannot keep the data isolated from the outside world. In light of this, data protection mechanisms that historically have been successfully implemented in one particular environment cannot be simply reutilized in other verticals.

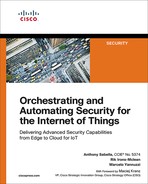

No magic formula or detailed recipe can protect data in IoT. Protecting data in a pub/sub system is one matter; in an application enablement framework (AEF) designed for IoT, it is another matter. In a full IoT stack involving fog, network, and cloud infrastructures, data protection is again a unique matter. In fact, this last scenario is very different from the previous two examples. Protecting data in a full IoT stack is more akin to an art than an exact science. So how do we cope with the challenges posed by data protection in IoT? Figure 11-2 illustrates the approach that we follow in this chapter. The methodology used for protecting data is based on the confidentiality, integrity, and availability (CIA) triad; it is supported by access control and complemented as needed with nonrepudiation capabilities. The triad applies to data produced and managed by the three relevant planes (the data plane, the control plane, and the management and operational plane). As we show later in the chapter, it applies to architectures implementing a full IoT stack.

Figure 11-2 Data Protection: The Confidentiality, Integrity, and Availability (CIA) Triad, Including Access Control and Nonrepudiation

Although the requirements related to data protection vary significantly across verticals, the methodology of starting from access control and applying the CIA triad for each of the three planes in an IoT stack offers a plausible approach. Hereafter, we assume a multitenant environment, in which entities or objects belonging to different tenants (sensors, actuators, nodes, users, and so on) produce and require secure access to data at different levels. In a nutshell, the art of data protection in IoT involves at least the following functions:

■ Access control: Refers to restricting data access to only the entities or objects that are allowed to access and manipulate the data. This encompasses the basic functions of authentication, authorization, and accounting (AAA), as well as physical access control.

■ Authentication: Determines who the object is. Unfortunately, many IoT de-vices do not have sufficient resources (for example, CPU power and memory) to support the cryptography utilized by some authentication protocols. To cope with this, new techniques are being developed that aim to build trust between a resource-constrained device and a more powerful fog node that can act as a proxy. The new techniques also seek to outsource resource-intensive computations to the fog node, including the execution of authentication protocols. Gateways provide another example that can be used to enable authentication for constrained devices.

■ Authorization: Determines what the object is allowed to do with the data (for example, the object can only read data, or can publish data, or can modify or even delete existing data). As we show in this chapter, fog also plays a key role in protecting data in multitenant systems. More specifically, fog not only can handle authentication, but also can host and support the authorization and data sharing rights among tenants. Data exchanges across tenants are critical in several verticals, and fog offers a strategic point of control at the edge to mediate and authorize the exchange of data between different tenants.

■ Accounting: Refers to keeping track of the different operations performed on the data and ensuring that those actions are time stamped and securely logged.

■ Physical access: One of the main challenges in IoT has to do with controlling the physical access to devices, especially, for assets in the field. Differently from cloud computing platforms, where gaining physical access to a data center facility is a complex endeavor, assuring that only authorized personnel can physically manipulate devices in the field and access their data is key in IoT. Thus, physical security and the development of proactive methods to restrict access to data produced by cyber-physical systems in the field also lie under the category of access control. It is worth highlighting that access control mechanisms can be complemented by intrusion detection systems (IDS) and intrusion prevention system (IPS). These can be used to discover, notify, and trigger potential actions against unauthorized access or malicious attempts to manipulate data.

Note that the scale and highly distributed nature of several IoT infrastructures makes access control a challenge. However access control is the cornerstone to ensure the confidentiality, integrity, and availability of data.

■ Confidentiality: Refers to preventing the disclosure of sensitive data to unauthorized entities or objects. Note that the terms confidentiality and privacy are often used interchangeably, but they have different meanings. Confidentiality is about keeping specific data secret, whereas privacy mainly refers to keeping secret certain attributes of the data, such as preventing the revelation of the ID of a data producer or the location where the data was produced. Normally, privacy regulation also covers other aspects, such as auditing processes to ensure that data will not be used for purposes other than what they were collected for.

■ Integrity: Refers to preventing the modification of data. A system that offers data integrity provides mechanisms to detect when data has been modified by an entity or object without authorization.

■ Availability: Refers to the capability of a system to ensure service continuity to authorized objects. This usually covers a set of mechanisms to detect potential attacks (for example, a denial-of-service [DoS] attack) and the procedures and countermeasures to either eliminate or mitigate the threat. Attackers typically look for vulnerabilities from multiple angles. In IoT, the adversary forces and attack surfaces that a system needs to defend against vary considerably from one vertical to another. For instance, successfully conducting an external attack targeting the availability of data on a manufacturing plant is hard to achieve (see Figure 11-1). However, successfully conducting a DoS attack in a smart city environment could be much simpler and cheaper. Consider, for example, the case shown in Figure 11-3. Several of the IoT technologies that are currently deployed in cities have adopted the unlicensed industrial, scientific, and medical (ISM) radio bands. Existing regulation imposes clear limitations on the amount of energy radiated and the output power for devices operating in these radio bands. An adversary willing to perpetrate an attack in a city will not be restricted by regulation, so the attacker can produce a device transmitting in the same unlicensed band to intentionally jam the radio signals. By hiding hundreds of those devices in urban spaces, an attacker could potentially affect the data readings of a large number of ISM-based sensors in different areas. City administrators would need to send personnel to the areas affected to find and eliminate the source of the attack. The challenge is that, depending on the output power and the batteries used, these devices might be quite small, so they would be hard to find without appropriate instrumentation. Also note that such devices are cheap and easy to build. Security is one reason why licensed radio bands are gaining traction over unlicensed ones in the space of IoT.

■ Nonrepudiation: Refers to the capability of a system to determine the identity of the entity or object that has originated a certain message or data, along with the necessary mechanisms to ensure that the data producer cannot deny its authorship. Nonrepudiation has to do with proof of data origination. In practice, it is applied together with mechanisms that ensure message integrity. Proving the authorship of a payload is of little help if the integrity of the bearer (the message) cannot be proved as well.

Data protection is essential in IoT, but it is clearly insufficient to secure a full IoT stack. Other aspects, such as securing the platform itself (for example, using trusted computes and remote attestation mechanisms, securing APIs, hardening operating systems, and managing keys and certificates), also play an important role while protecting data. These functions are not specific to data protection, so they are not covered here; instead, Chapter 13, “Securing the Platform Itself,” analyzes them in detail.

Data Lifecycle in IoT

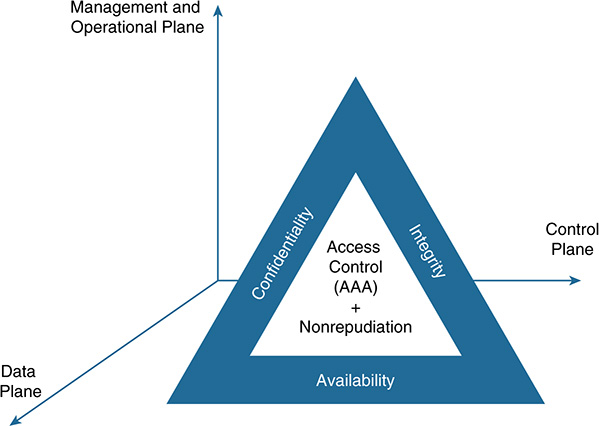

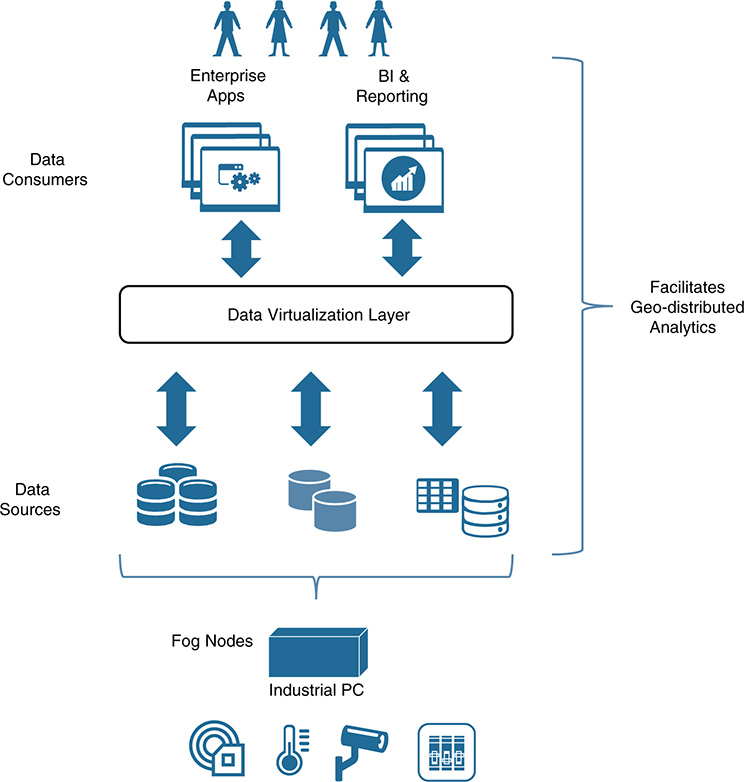

Before digging into the details on how to protect data, it is important to understand the lifecycle of data in IoT, including aspects such as the phases of data processing, which types of data are produced and used, and where the data could reside at the different stages involved in their processing (depending on the use cases). Figure 11-4 illustrates various industry verticals in IoT, including connected vehicles, smart buildings, smart surveillance systems, smart drills and tools, and manufacturing plants with industrial robots. Most of the elements involved in the different verticals in Figure 11-4 produce data. This data is produced by a wide spectrum of applications that can be hosted in vehicles, buildings, manufacturing plants, the robots themselves, NFV-PoPs, the fog, or the cloud. Indeed, these distributed applications can run as microservices, and they can be chained to build IoT services that can be deployed in the continuum between the things and the cloud. The lifecycle of data in an IoT service of this nature typically involves the four phases described in the following list (see Figure 11-5):

Collecting data: This phase corresponds to the process of acquiring and gathering the data produced by the different sources involved in a use case. The sources can be of a different nature and can vary considerably from one use case to another. They include “things” ranging from elementary sensors up to complex machines, external applications for data mashups (for example, weather reports in an agriculture use case), and data supplied by ancillary elements (such as cameras and a human operator). Data collection is not constrained to the data plane; it also covers data produced by the control plane and the management and operational layers. For instance, data can be collected during device discovery, during the registration or rejection of a new device, during configuration changes applied to the control plane, during a remote attestation process, and so on. Data buffering and data aggregation processes are often considered part of the data collection phase.

Figure 11-4 Connected Elements That Are Part of Different Industry Verticals in IoT and a Wide Spectrum of Applications Producing Data

Computing data: After the data has been collected (for example, in raw format), the next step is to process the data. The goal is to extract value of the data. In the case of IoT, this process usually starts at the edge of the network (for example, performed by fog elements deployed in the field). The rationale is simple: Instead of moving data from the sources up to where the computing resources reside (for example, to the cloud), the computing is moved closer to the data sources. This is because moving data to the cloud is not even an option in some cases, for these reasons:

■ Data ownership or privacy policies prevent this (for example, many manufacturing plants will simply not send sensitive data to the cloud).

■ Big Data is created by endpoints at the edge. For operational reasons, sending all the data to the cloud is impractical. For instance, terabytes of data need to be analyzed daily, but the connectivity between the data sources and the cloud is poor (for example, only a few 3G connections are available in the field).

■ Even if moving data to the cloud is technically feasible, the cost of sending all the data to the cloud to perform data analysis can be prohibitive (for example, for anomaly detection and preventive maintenance of machines).

■ Many IoT systems, such as ICSs, require real-time processing and closed-loop control. Cloud-only models usually cannot meet the stringent delay requirements posed by these use cases.

This phase typically covers functions such as data normalization, classification and data analysis, data filtering, protocol adaptations across different media, and the process of making the data available to be transported.

Moving data: An IoT service might consist of a chain of microservices, each with a specific role in the data pipeline (for example, gathering the data, adapting and normalizing data, performing real-time analytics for anomaly detection, persisting data, and creating data mashups for BI tools). Data processing can take place at different levels in both the fog hierarchy and the cloud. Every process or microservice involved in the pipeline receives data, transforms data, and sends the data produced to the next microservice in the data pipeline, including microservices capable of carrying out more elaborated data analysis running in the cloud. Therefore, the capability to move data from one place to another is key for data processing in IoT. This phase usually covers functions such as securing the data transfers and ensuring reliable data delivery.

Leveraging data: This refers to the last stage in a data pipeline or data workflow. It represents the collection of data visualization tools (for example, dashboards), analytics, and BI systems that turn data analysis into concrete business outcomes. To this end, the set of processes or microservices running in the compute resources offered by fog and cloud can work in concert and extract the value of the data at different levels. Whereas Phase 2 (computing the data) usually encompasses the processes for performing data analysis (for example, carrying out anomaly detection), this phase is in charge of notifying operators and decision makers of specific events, making recommendations (such as what to do when an anomaly is detected), or even making decisions in real time without human intervention.

As Figure 11-5 shows, these four phases of data processing lead to the three states data can ultimately take: at rest, in use, or on the move. Data is considered at rest when it is persisted and stored in cameras, caches, files, agent processes, or databases and repositories (for example, for further analysis or backup purposes). In IoT, data is frequently stored in databases hosted in fog nodes, in private data centers, or in the cloud. Data in use refers to data that is currently being utilized; it often entails a transformation process on the data (for example, data being normalized or data being analyzed and subsequently discarded). The utilization of in-memory databases is quite common when data processing requires a very fast response (for example, while data is being used). Last but not least, data on the move refers to data that is being transported for subsequent processing by a node at the network edge, by nodes in a fog hierarchy, or by data center nodes, or data that is simply being conveyed for final consumption using commercial dashboards or BI systems.

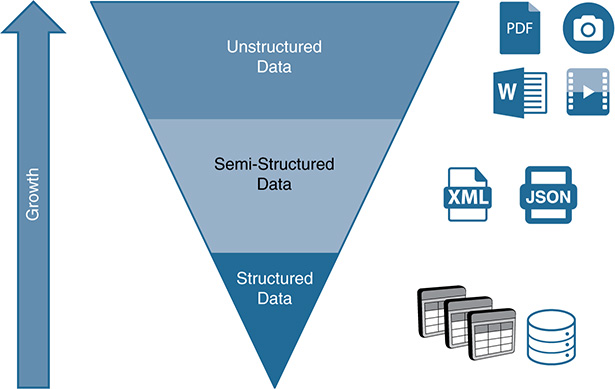

The data can be broadly classified into three main categories:

Structured data: Structured data has a high degree of organization and a strict data model in which the content is prepared to fit into a well-defined structure. The data is usually organized in specific fields delimited by labeled columns and rows within named tables, and they are stored in files or databases. Relational databases are popular examples of structured data. Metadata can be explicitly added according to a predefined structure and an agreed-upon data model. This extra dimension (the metadata) usually adds descriptions or data attributes that can help with categorizing, grouping, and reinforcing the meaning of the data. More precisely, metadata facilitates the process of extracting information from the data stored (for example, by simplifying the processes of finding relationships among data in different records). In many of the use cases in the IoT field, the raw data collected at the endpoints (the things) is processed, transformed, organized, and stored in relational databases as structured data. Although structured data has an important role during the lifecycle management of data in IoT, its relevance in terms of volume is relative, given the current dominance of semistructured and unstructured data (see Figures 11-6 and 11-7). Most of the endpoints in IoT produce either semistructured or unstructured data. Data structure is usually achieved after several stages of data analysis, filtering, and processing in a data pipeline.

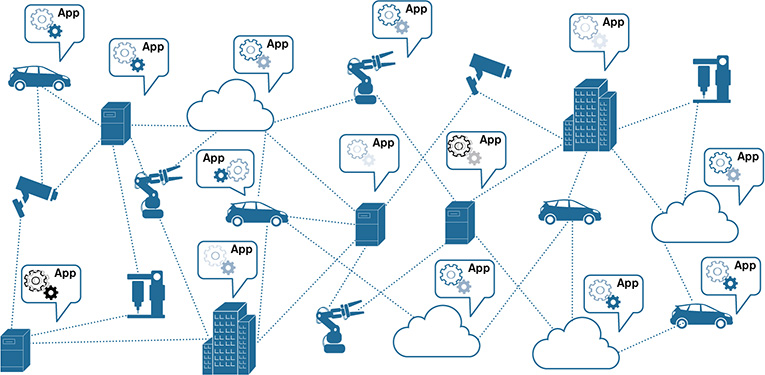

Semistructured data: Semistructured data does not have a strict data model or a structure that conforms to a high degree of organization (as with relational data-bases or other forms of data tables). However, the data does have a certain structure, usually defined in the form of schemas or self-describing patterns facilitating the ingestion, classification, and analysis of data. Popular examples of semistructured data include XML files and data encoded using XML schemas, as well as JSON, which is broadly used for encoding sensor data. Semistructured data is characterized by a flexible structure, often based on key value pairs—for example, in the form {“data”: {“temperature”: 70.2, “humidity”: 45.0}}. Figure 11-6 gives a simple example of semistructured data. The figure shows a JSON schema to process events received from a temperature sensor. The elements in the tuple [“ts”, “st”, “temp”] at the bottom of Figure 11-6 represent the time stamp, sensor type, and temperature (in degrees Celsius), respectively. This tuple carries the data produced by the event, and it will be encoded in JSON. In general terms, relational databases work with structured data, whereas nonrelational ones (such as MongoDB) work with semi-structured data, often using JSON-like data sets and schemas.

Unstructured data: This data does not have a predefined data model and is not organized in a predefined way. Some examples of unstructured data are audio, digital images, video, PDF files, and Word files. For a human being, this type of data does not have an identifiable structure internally, so the data is meaningless without the right tools for interpreting it (a media player, a PDF viewer, a Word editor, and so on). The process of automatically analyzing and extracting information from unstructured data obviously entails complexities when compared to structured or semistructured data. Although it is relatively easy to query a relational database and find anomalies, patterns, or specific similarities on the data stored, getting the same insights from video or audio analysis is way more complex. The challenge is that the vast majority of the data produced today is nonstructured data (see Figure 11-7); with the expansion of IoT, the volume of unstructured and semistructured data generated will increase dramatically. Recent studies show that unstructured data might account for more than 70 to 80 percent of all data in organizations. Estimations (such as the one in Figure 11-8) forecast that, in only 13 years, data will grow from a few hundred exabytes (EB) in 2007 to more than 50 zettabytes (ZB) by 2020. As you can see in Figure 11-8, data is growing exponentially. As the volumes of unstructured data increase, fueled by IoT, the necessity to analyze and filter this type of data directly (that is, without attempting to structure the data before the analysis) will grow as well. In light of this, automated ways of analyzing unstructured data will certainly keep evolving. Machine learning (ML) and Artificial Intelligence (AI) techniques can work in concert with data mining, natural language processing (NLP), and other tools to interpret unstructured data, filter data, find patterns, detect anomalies, and perform specific actions based on the data examined.

Figure 11-7 Growth and Variety of Data: The Large Majority of the Digital Data Produced Today Is Unstructured

Figure 11-8 Explosion of Unstructured Data (Source: EE Times, https://www.eetimes.com/author.asp?section_id=36&doc_id=1330462)

It is important to highlight that the implications of this categorization of structured, semistructured, and unstructured data are not completely evident. In terms of security, determining whether the data is at rest, in use by authorized entities, or on the move is much more relevant than the structure of the data itself. As described in Figure 11-2, when it comes to protecting data at rest, in use, or on the move, the key is providing access control and ensuring the confidentiality, integrity, and availability of data (along with using nonrepudiation mechanisms whenever needed).

Nonetheless, two aspects can make a difference when planning and budgeting for data protection: the variety and value of the data. These two terms are often identified with Big Data, which has traditionally focused on the well-known three V’s of data: managing big amounts of data (volume), usually measured in terabytes, petabytes, and exabytes; dealing with the velocity of the ingestion of data and providing the means to enable streaming, near-real-time, and batch data processing; and handling the variety of data, including structured, semistructured, and unstructured data. Recent studies on Big Data introduce a fourth V, which accounts for the value of the data. Ideally, all data in an IoT use case should be protected. Unfortunately, this might be unfeasible or prohibitive due to cost.

This is where the variety and value of the data come into play. As we show later in the chapter, the ultimate goal is to transform unstructured or semistructured data collected at the endpoints into business outcomes. In other words, the objective is to convert raw data into knowledge. Obviously, the higher the data is in the knowledge stack, the more value the data has. You might incorrectly think that most of the efforts related to data protection should be devoted to securing the data at the higher layers of the knowledge stack. In practice, if data is compromised at the lower layers, the veracity and value of the data at higher layers are compromised, too. As with any security mechanism, data protection is as weak as the weakest element in a data pipeline. In light of this, the designer in charge of building a service to fulfill the requirements of a given use case needs to take the following actions:

■ Decompose the data pipeline

■ Detect the data sources and data processing elements that are indispensable to accomplishing the desired goals

■ Identify, from a variety of data categories and topics, the ones that are mandatory (the most valuable data)

■ Protect the data at rest, in use, and on the move that is associated with each of the objects identified in the data pipeline

As mentioned previously, the development of automated ways to analyze unstructured data will substantially evolve in the coming years. Currently, however, the most common way to deal with nonstructured data in IoT is to use some form of data normalization. Unfortunately, there is no widely adopted standard for data normalization in IoT, so most of the existing products in the marketplace provide proprietary solutions.

Figure 11-9 depicts a simplified data normalization process. A number or devices supplied by different vendors (D1, D2, …, Dn) are connected by either wired or wireless means. These devices send data to a fog node located at the network edge. The data sent by the various devices carries temperatures, measured in either Celsius or Fahrenheit degrees, and the data can have different structure and formats (for example, they might follow a vendor-specific data format). The data can be transported over different protocols, such as Modbus TCP/IP, BACnet, HTTP/REST, and so on. To process the data reads from the various devices, the fog node offers a data extraction and adaptation layer, which consists of a number of drivers. Each of these drivers not only supports the communication protocol, but also performs the parsing functions of the payload and adaptations required to extract the temperature reads. These drivers can run in the fog node in multiple ways:

■ As independent processes in bare metal

■ As microservices in independent Docker containers

■ As different virtual machines (VM)

■ As different processes or containers in a VM

■ As different listeners of a multiprotocol application running in a single VM, or other variants

After the temperatures have been extracted, the next stage in the data pipeline is to bring those temperature reads to a common, vendor-agnostic format. This is precisely the role of the data normalization layer. In the example, this includes converting temperatures from Fahrenheit to Celsius degrees; all temperature reads are exposed to the next processes in the data pipeline using a uniform data format and temperature unit.

It is important to note that each driver is built for a specific protocol, API, and data format. For instance, if device Dn sends power monitoring data over an HTTP/REST API in the form of the tuple {“data”: {“timestamp”: 1515067723, “sensor type”: SCH1234, “power (KWs)”: 0.2}}, the parsing process performed by the corresponding driver must be tailored to extract the data using the specific protocol, API, and data structure utilized by the device. Frequently, the normalization process shown in Figure 11-9 transforms the input from semistructured data to either structured or semistructured data—though now in a common data format. Depending on their structure, the output data can be persisted in a relational or nonrelational database. These databases can be hosted by the fog node shown in the figure, by other fog nodes in a fog hierarchy, or by nodes in a back end running in a data center.

Currently, the development of the drivers shown in Figure 11-9 is done manually—hence, it takes time. The development effort might take from a few days up to weeks, depending on the complexity of the device and the protocols, APIs, and data models involved. Some companies in the IoT space prefer to talk about connectors instead of drivers. The terminology might differ, but the functionality is fundamentally the same. It is increasingly common to find companies that have already developed hundreds of these drivers or connectors that are integrated into their product portfolios (Cisco, Microsoft, SAP, GE, and so on). This means that sensors and device families of different natures can be connected to a gateway or a fog node; when they interface with the corresponding driver, the exchange of data is immediately available and managed by the driver.

For a technically savvy person in the IT field, this approach might sound a bit strange. In the IoT field, many of these drivers are developed by companies that have not manufactured the devices (the endpoints, or “things”). In the IT field, these are normally developed by the device manufacturer. More specifically, when we buy a printer, an IP camera, or any new peripheral that needs to be connected to a computer, the exchange of data is also handled by a driver. The difference, in this case, is that the driver is usually supplied by the company that manufactured the device. Then what is different in IoT? Why do external companies need to build drivers themselves?

Multiple factors make IoT different from traditional IT and have motivated external companies to develop the own driver catalogs:

■ Many endpoint vendors supply not only the devices, but also proprietary gateways and embedded systems, enabling a complete vertically integrated solution that they sell. They have obviously developed their own drivers, but to protect their business, they do not support portability of their drivers to third-party platforms. With the advent and penetration of fog computing, this is gradually changing. Many companies are realizing the value of open and standardized platforms for customers enabled through fog, which, among many other things, can host these drivers. Economic incentives and new business models are actively being developed to unlock this synergetic paradigm.

■ In other cases, the device manufacturers simply do not have the expertise or sufficient personnel to develop and maintain drivers for various third-party platforms. In some cases, they do have low-level programming expertise (for example, their devices might not even support the IP protocol), but they lack IT personnel who can help containerize their drivers so they can run on a Docker engine hosted by a fog node. As mentioned in the previous point, this is also gradually changing because device manufacturers are seeing the value of IoT and are transforming their business to enable the desired convergence of OT and IT.

■ Maintenance of the drivers is also an issue. In practical terms, a device manufacturer in the IT space generally needs to develop and maintain drivers for five operating systems, at most (Linux, Windows, macOS, IOS, and Android). Unfortunately, the current landscape in IoT is quite different. More than 400 IoT commercial platforms exist, each of which connects endpoints. The model for connecting these devices and then adapting and normalizing the data typically varies from one platform to another. Indeed, many of these platforms run multiprotocol applications, which actually embed the drivers and terminate the protocols at the sensor level. This means that a driver built for one platform cannot be effortlessly ported to another platform. Therefore, a manufacturer of a popular device that already comes with drivers that were externally developed by these platforms has little incentive to start developing and maintaining its own drivers. However, the situation for a new device (endpoint) in the IoT marketplace is different. Instead of waiting for several platforms to embrace its devices and build the corresponding drivers (which might take months or even years for the large majority of commercial platforms), a manufacturer might take the lead and develop its own driver. Obviously, the challenge for the device manufacturers is determining which platform(s) they should focus on in developing and maintaining drivers. Figure 11-10 shows a plausible approach to this question.

Figure 11-10 Automatic Data Normalization in Which the Drivers Are Automatically Rendered Based on a Formal Data Modeling Language

In summary, a combination of factors has prompted third-party companies to develop drivers or connectors, with the aim of accelerating the commercial evolution of IoT. However, this model is progressively becoming a problem for the companies that are developing and maintaining the drivers. Whereas peripherals in traditional IT settings use a limited number of I/O ports and communication protocols, the opposite is true in IoT: The spectrum of wired and wireless protocols, interfaces, APIs, and data structures that need to be ingested and interpreted is much broader and is expanding relentlessly.

Industry and standardization bodies such as the IETF are starting to push for a different approach. The manufacturer usage description (MUD) model described in Chapter 9, “Identity, Authentication, Authorization, and Accounting,” is a good example here. Instead of having a third party developing a new driver to confine the communications of an endpoint to their intended use, the manufacturer of the endpoint provides a MUD file in the form of a YANG model. This model provides machine-readable input based on a standardized and formal data modeling language. This YANG model is basically the “driver” that the platform needs to push the security rules and configurations to switches and fog nodes; its objective is restricting the communications of the endpoint to only its desired use.

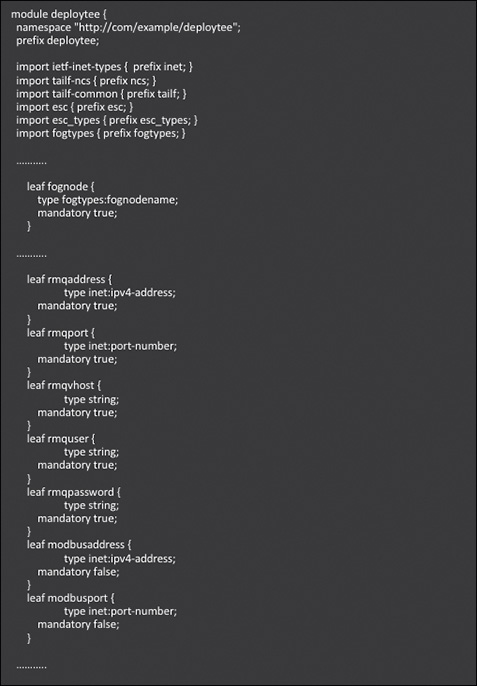

Although MUD enables the automation of certain security rules, it does not substitute the drivers shown in Figure 11-9. The next step in the evolution of data extraction, adaptation, and normalization at the edge might look like Figure 11-10. The goal is to enable the automatic parsing and entire data normalization process in such a way that the drivers can be rendered automatically based on the utilization of formal data modeling languages such as YANG. This approach is a win-win scenario for device manufacturers and third-party platform providers. On the one hand, device vendors do not need to develop specific drivers for each platform. As in the case of MUD, they just need to provide data models using a standardized and formal data modeling language that captures the following:

■ The communication protocol used

■ The constraints on the API, following a model-driven API (see Chapter 6, “Evolution and Benefits of SDN and NFV Technologies and Their Impact on IoT”)

■ The data model enabling the parsing of data plane payloads

Platform providers, on the other hand, need to do the following:

■ Create a device discovery module (refer to Figure 11-10) to process the data models supplied by device manufacturers

■ Develop the libraries to render the device drivers automatically based on the data models ingested

■ Build a vendor-agnostic data module (refer to Figure 11-10), which feeds the data normalization layer with the aim of providing a vendor-independent data model for the output data (after the data normalization processes is complete)

The role of the data normalization layer is to map the input data models to a normalized or vendor-independent data model.

Unfortunately, the industry is not there yet. Achieving something similar to Figure 11-10 will take years, especially because standardization efforts are needed. Hence, for the purpose of this book, when it comes to data normalization and its implications on data protection, the focus is on the model used today (refer to Figure 11-9). As discussed previously in this chapter, the key is to protect data at rest, in use, and on the move for the data, control, and management planes through a combination of access control, the CIA triad, and nonrepudiation mechanisms, whenever required. The process of ingesting, adapting, and normalizing data either at the edge or at higher layers in the thing-to-cloud continuum certainly involves data at rest, in use, and on the move; as a result, data protection can be analyzed following the same lines of argumentation. Before transitioning to the specifics of data protection, the next three subsections provide an overview of common scenarios showing data at rest, in use, and on the move in the context of IoT.

Data at Rest

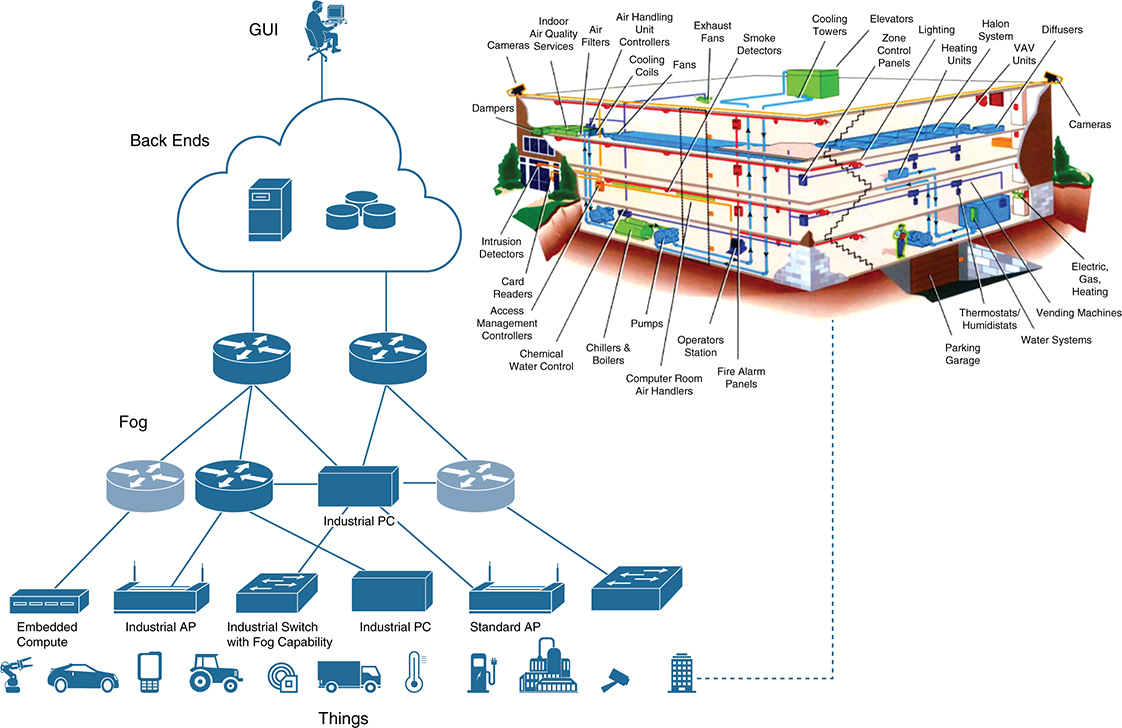

Figure 11-11 shows a simplified version of the thing-to-cloud continuum, with endpoints of different natures at the bottom connected to devices at the edge, such as gateways, access points, switches, embedded systems, and fog nodes with different form factors (for example, industrial switches or industrial PCs). These devices can then connect to other fog and network nodes and through a WAN to one or more back ends running in private data centers, public clouds, or a combination.

In this continuum, data can be found at rest in different locations, including the following:

■ The endpoints (drives, caches, memory, flash, and so on)

■ Fog nodes, which can be located anywhere between the things and the back end (for example, stored in local databases, main memory, caches, virtual storage, and so on). Note that data that temporarily resides in queues in gateways or switches is not considered data at rest; it is treated as data on the move.

■ In the back end (for example, stored in a data warehouse, in a data lake, or simply in main memory or virtual storage provided by a single blade in the back end)

The right side of Figure 11-11 zooms into the commercial building shown at the bottom right. Many of the gateways, access points, switches, and fog nodes depicted in the figure reside inside smart buildings. Building services that were traditionally supplied using legacy technologies (in most cases, using non-IP technologies) are starting to migrate to IP and are becoming part of full-fledged IoT solutions for commercial buildings. This is the case for power, lighting, HVAC systems, video surveillance and physical security, elevators, sensors for environmental control, appliances, and so on. Modern commercial buildings are smarter and represent a clear example of where non-IP-based technologies in the OT field are starting to converge with IT. These buildings might have thousands of Power over Ethernet (PoE) LED light fixtures, hundreds of PoE-enabled fog nodes and switches installed in ceilings and the building automation infrastructure, thousands of PoE HVAC controllers, and many other elements. Most of these elements will store data.

The amount of data at rest in commercial buildings will increase substantially in the coming years. One reason is to ensure that the building can operate autonomously—that is, the core functions must remain operative even if the building loses backhaul connectivity to a central data center or a control room (for example, one that manages multiple buildings). In that sense, smarter buildings will have some form of computational hierarchy, with several fog nodes per floor and cross-functional computation capacity centralized on the premises (for example, in the form of an NFV-PoP). These computational resources will clearly store data.

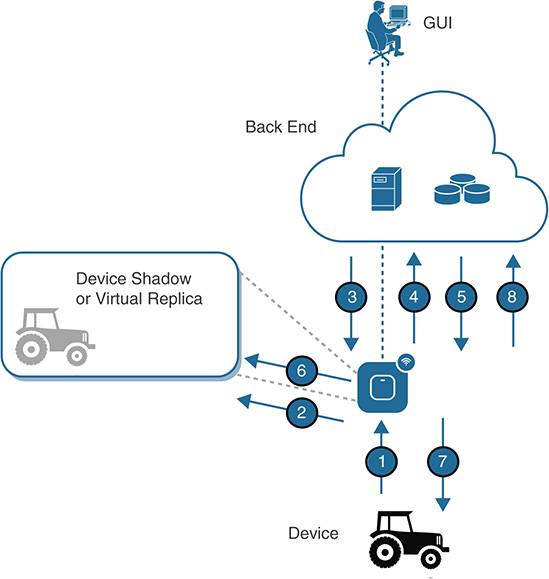

Another aspect that is highly relevant for data at rest is where to store the data the endpoints collect. A common practice is to use the fog nodes at the edge to store relevant data. In many cases, the communication between the fog nodes and the endpoints is bidirectional. For instance, the fog nodes not only receive data from the endpoints (for example, from sensors), but they also can send data to the endpoints (such as configuration commands and firmware updates). In principle, the endpoints and the fog nodes can exchange data at the data plane (for example, sensor data), the control plane (for example, data related to DHCP and L2 and L3 communication protocols), and the management plane (for example, configuration data and monitoring data). Depending on the use case and the setup, the communications between the endpoints and the fog nodes serving those endpoints might be unreliable. To solve this problem, some solutions in the marketplace use the concept of data shadows or virtual replicas (see Figure 11-12). The goal is twofold. First, it seeks to store the latest reads or status sent by the endpoint and make the data available at the fog node. This means that if the fog node loses connectivity to the sensor, it can still report its latest status (the most recent read). The buffer kept depends on the application, but it can be as simple as the last read received, up to the complete set of reads stored locally in a historian database at the fog node. Second, if the platform needs to configure the device (for example, to change the frequency of the reads), the fog node can act as a proxy, update the configuration of the virtual replica, and wait until the endpoint becomes available again to update it.

The fog nodes represent a strategic point of control in the field, so they can be used as a proxy or intermediate layer between the endpoints and back-end applications. This is particularly relevant when the communications between the back end and the fog nodes are reliable but the communications between the latter and the endpoints might not be. Clearly, the virtual replicas are persisted in the fog nodes; therefore, they represent data at rest.

Figure 11-12 depicts some interactions with a device, a fog node hosting the virtual replica, and a back end:

The endpoint sends sensor data to the fog node.

The fog node reads and persists the data in the virtual replica.

A back-end application pulls (queries for) data. An advantage is that, if the endpoint runs on batteries, the reads are performed and managed between the back-end application and the fog node without involving the endpoints. That is, the read process does not have any impact on the endpoint batteries.

The fog nodes send the last read stored. Either time stamps or metadata can be used to inform the application about how recent the data is.

The application in the back end now wants to reconfigure the endpoint (change the frequency of the data sent, upgrade the firmware to add a security patch, and so on).

The fog node first sends the new configuration to the virtual replica.

When the replica is updated, it attempts to configure the endpoint. If it fails, it keeps trying until it succeeds or reports the problem to the back end.

Upon successful reconfiguration, the fog node notifies the back-end application.

After analyzing the main aspects of data at rest in the fog domain, the next step is to examine state-of-the-art approaches for storing data in the back end (refer to Figure 11-11). Several IoT solutions use a data warehouse, a data lake, or a combination, so it is important to outline their differences and, more importantly, their synergies.

Data Warehouses

Creating a data warehouse (DW) typically involves the following sequence:

Conducting a requirements study to understand the reports and data analysis needed, as well as storage needs.

Defining the databases needed, their schemas, and their structure, and identifying the main queries that need to be processed.

Identifying the data sources needed to feed the DW (a data lake can be one of the sources).

Implementing an Extract-Transform-Load (ETL) model. This usually involves creating a pipeline to enable the extraction of the required data, as well as adapting and transforming them to fit the schemas defined in item 2. This approach is usually referred to as schema-on-write.

When the DW has been created, the ultimate goal is to enable the analysis of data to facilitate BI, create reports, and so on. In general terms, the data analysis enabled by DW architectures is most effective when it has the following characteristics:

■ Bounds the set of data types used

■ Clusters data with a common meaning to facilitate the analysis and reporting

■ Is optimized for interactive queries

DWs represent a collection of mature technologies that are widely used in the industry, but they also have a number of weaknesses. DWs tend to be quite expensive when very large data volumes need to be managed. Flexibility is also another issue. The schema-on-write approach is excessively rigid in some cases. More specifically, the philosophy DW designs follow is summarized here:

Predefine the structure of the data to be stored.

Ingest the data from the sources defined.

Analyze the data.

Most of the data sources in many relevant use cases in IoT generate nonstructured data. As discussed previously, we are witnessing a paradigm shift with this new philosophy:

Ingest data of any kind and structure.

Analyze the data in its current form (for example, semistructured or unstructured).

Structure data only when needed (that is, only relevant data gets structured, according to the needs). This new paradigm is usually referred to as schema-on-read.

These limitations gave rise to the expansion of data lakes, which are geared to collect any type of data (structured, semistructured, or unstructured) and are tailored for low-cost storage. They embrace the unpredictable and changing nature of data.

Data Lakes

Data lakes (DL) are the center of gravity of many Big Data solutions. A DL offers a very large data repository (for example, based on Hadoop) that can store a vast amount of data in its native format until it is needed.

■ DLs collect many different sources of data in a highly scalable and flexible way. Data sources can be added and removed dynamically, and a large fraction of data can be ingested and stored “just in case” (even if it is not used later).

■ Unlike DWs, DLs target an Extract-Load-Transform (ELT) model. This usually involves creating a pipeline so that the required data can be extracted. As mentioned earlier, data is kept in its native format until it is needed—that is, until it needs to be adapted and transformed. Hence, this approach is known as schema-on-read.

■ DLs manage very large data sets without needing to predefine the data schemas. This allows them to provide the right data to the right consumers with the right structure at the right time (supported by schema-on-read).

■ DLs generally offer a much cheaper approach than DWs, especially for archive purposes. DL infrastructures can be supported on commodity hardware, thereby freeing up expensive resources from DWs (for example, for performing data refinement processes). DLs complement DWs because DLs can be used as a data source for DWs. For instance, a DL can collect all sorts of data but send only specific data to a DW.

■ Whereas DWs are usually optimized for interactive queries, DLs can manage all types of workloads, including batch, streaming, and interactive queries and analysis.

■ Data governance remains key, especially to avoid turning a data lake into a data swamp over time.

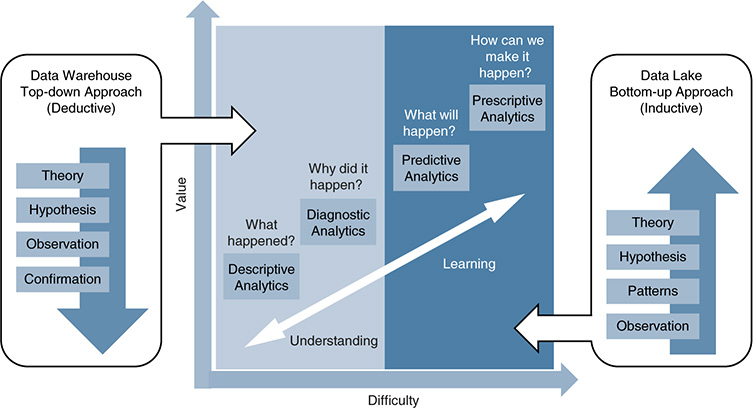

A central difference between DWs and DLs is the scheme-on-write versus scheme-on-read models. As Figure 11-13 shows, this is reflected in their corresponding designs. In his work “Big Data Architectures and the Data Lake,” Big Data evangelist James Serra shows how DWs follow a top-down approach that is tailored to facilitate a deductive process in data analysis. This often starts by developing a theory and then creating some hypotheses around it. The data is structured based on this. By performing interactive queries, a set of data consumers can observe and either confirm or revoke the hypotheses made.

Figure 11-13 Data Warehouse and Data Lake Design Philosophies (Source: “Big Data Architectures and the Data Lake”)

DLs, on the other hand, follow a bottom-up approach that is tailored to facilitate an inductive process in data analysis. They start from a set of observations on different sources of data that can have any type of structure. Based on the observations, analytics tools can search for patterns, which would then support a set of hypotheses and a theory.

As Figure 11-13 shows, deductive processes are good to understand and can answer questions such as “What happened?” or “Why did it happen?” However, they are less efficient when it comes to learning. Inductive processes are much better prepared for answering questions such as “What will happen?” or even more complex ones, such as “How can we make it happen?”

At this stage, the lines between data at rest and data in use start blurring. The left and right sides of Figure 11-13 mainly have to do with data at rest, in a DW, and in a DL, respectively. The central part of the figure represents data in use.

Data in Use

The data produced by an object or endpoint in an IoT environment might have little value individually, but the combination of fog computing, DWs, and DLs contributes to the analysis and correlation of vast amounts of data generated by a large number of entities. Together, this produces relevant information used to understand, learn, and make actionable decisions in real time. These decisions can range from activating control and actuation mechanisms in physical systems, to changing policies or making business recommendations. Those outcomes will also produce data, which again can be transformed into information and, subsequently, knowledge.

The terms data and information are often used interchangeably. In the context of this chapter, the term information represents the outcome of one or more data processing stages in which the output data is presented in a specific context (for example, in a concrete vertical and use case) and with a specific format so that a specific consumer or entity can interpret them in a useful way. Figure 11-14 shows how raw data can be processed, analyzed, filtered, and combined at different levels (for example, using data mashups) to extract information, learn, create knowledge, and, ultimately, create wisdom.

To accomplish this, we can use an architecture such as the one in Figure 11-15. This architecture centers on a DL solution supported by the following stages:

■ Data ingestion: Data comes from a large number of different types of sources, including logs, monitoring data at multiple levels in an IoT infrastructure, distributed applications, LOB data, and sets of IoT endpoints (such as sensors and control and actuation systems). The data ingested can have any format (structured, semistructured, or unstructured) and can be sourced by objects that belong to the data, control, and management and operational planes. Data ingestion can take place in real time, in small batches (or microbatches), and in batch processes. This stage classifies and separates data ingestion that requires real-time treatment (the “hot path”) from data that requires batch processing (the “cold path”). The hot path deals with the ingestion of large volumes of data in real time. The data is usually subject to high variety and is received at very high velocity.

Figure 11-14 Knowledge Stack: Transforming Raw Data into Wisdom (Information Flow and the Value of the Data at Different Layers in the Stack)

■ Data preparation: This stage provides the extract and load operations and relegates the transform operations to the next stage (because data transformation occurs when it is read in the ELT model). Data is stored in its native format (without requiring a predefined structure or schema definition). The hot path typically relies on in-memory databases for real-time processing, whereas the cold path stores raw data for historical reference and analysis.

■ Data analysis and exposure: This stage is where we really talk about data in use. At this stage, insights are extracted from the data using batch, interactive, and real-time (streaming) analytics. Different machine learning techniques can be used to create knowledge. Thanks to the schema-on-read approach, data transformation occurs at this stage, so the output data is ready to be consumed by third parties.

■ Data consumption: Different types of entities can consume the data analyzed and extract valuable information, such as vertical-specific applications, dashboards, BI tools, reporting systems, DWs, and so on.

Figure 11-15 focuses on technologies that are typically deployed in a data center; however, it is worth highlighting that, with IoT, Big Data is not confined to the data center. Big Data is considered the new currency in many fields, including IoT. But what actually is Big Data? In simple terms, Big Data means all data. How big is Big Data? The answer to that question varies significantly, depending on the industry vertical and the specifics of the use case addressed. For example, a set of oil wells can produce petabytes of data daily. A smart city can produce similar amounts of data in one day. Machines in a manufacturing plant might generate data that is orders of magnitude lower, but it can still be considered Big Data in the context of a manufacturing plant. All this data cannot be sent to the cloud, for a number of reasons. In the case of the oil wells, this is often because of limited or unreliable backhaul connectivity (in some cases, the only option is 3G connectivity, and it is available in only a few oil wells). Fog facilitates ingesting, sampling, analyzing, and filtering data locally and then making actionable decisions in real time (for safety reasons, workforce optimization, anomaly detection, preventive maintenance, and so on). Therefore, in IoT, fog computing is a key component of a Big Data infrastructure that, in practice, is built upon computing resources that are much more distributed than the ones shown in Figure 11-15.

A fog node can also offer both hot and cold paths for data analysis (see Figure 11-15) by concurrently hosting streaming and historian analytics. Although the data processing model inside a fog node can be conceptually similar to the one in Figure 11-15, the stages have certain differences. As of the time of this writing, the stages at a fog node level are often organized as follows: data sources → data ingestion → data adaptation (which might include data normalization) → data analysis → data consumption. The adaptation/normalization stage typically transforms the input data to either semistructured or structured data, so it follows more of an Extract-Transform-Load (ETL) model than the Extract-Load-Transform (ELT) model used in DLs. Some applications require persisting raw data in a historian database hosted by the fog nodes, but in practice, most of the use cases adapt the data ingested by the fog nodes before its analysis. Specific examples involving data in use and data protection mechanisms at the fog node level are covered later, in Figures 11-19, 11-30, and 11-32.

Data on the Move

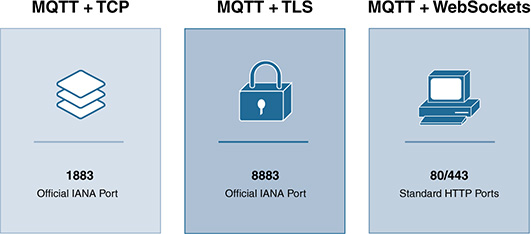

Moving data produced by endpoints at the network edge across fog and network nodes up to the data center is key in IoT. Protocols such as HTTPS, COAP, MQTT, and many others are widely used for transporting data in IoT. For detailed descriptions on protocols such as COAP or MQTT, refer to the Cisco Press book IoT Fundamentals: Networking Technologies, Protocols, and Use Cases for the Internet of Things.

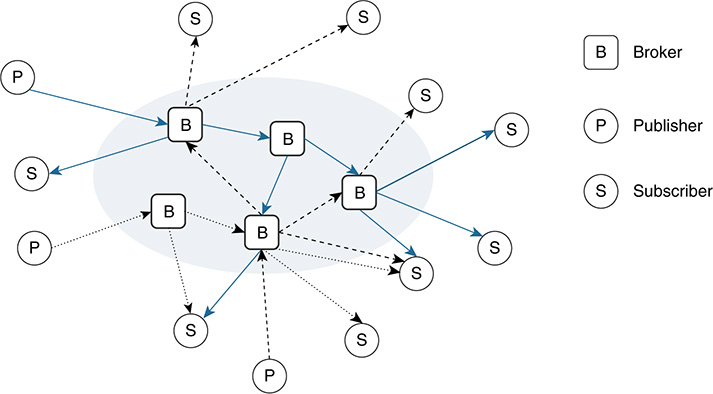

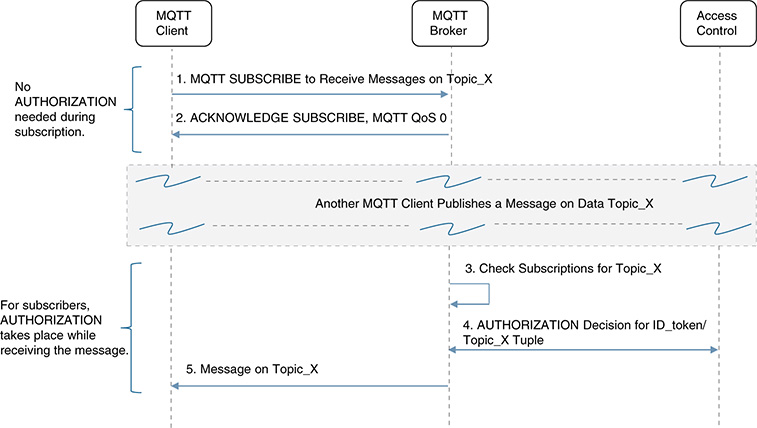

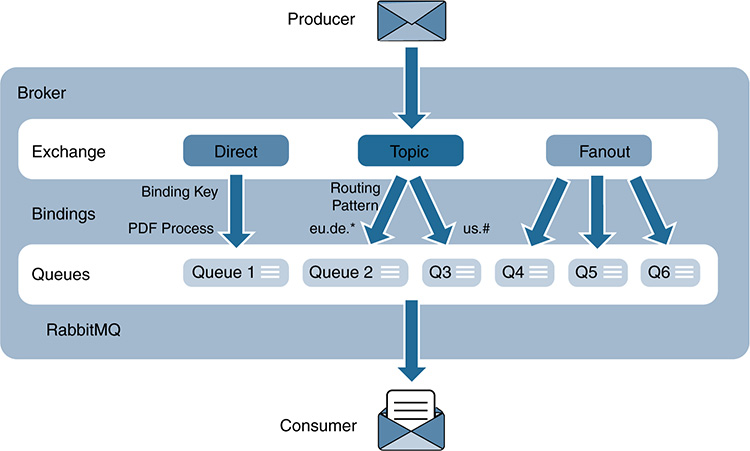

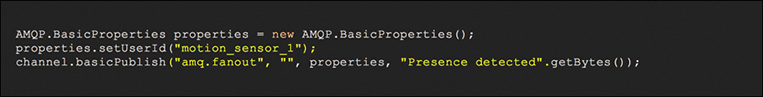

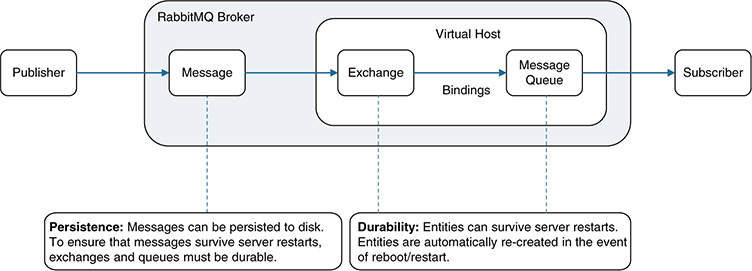

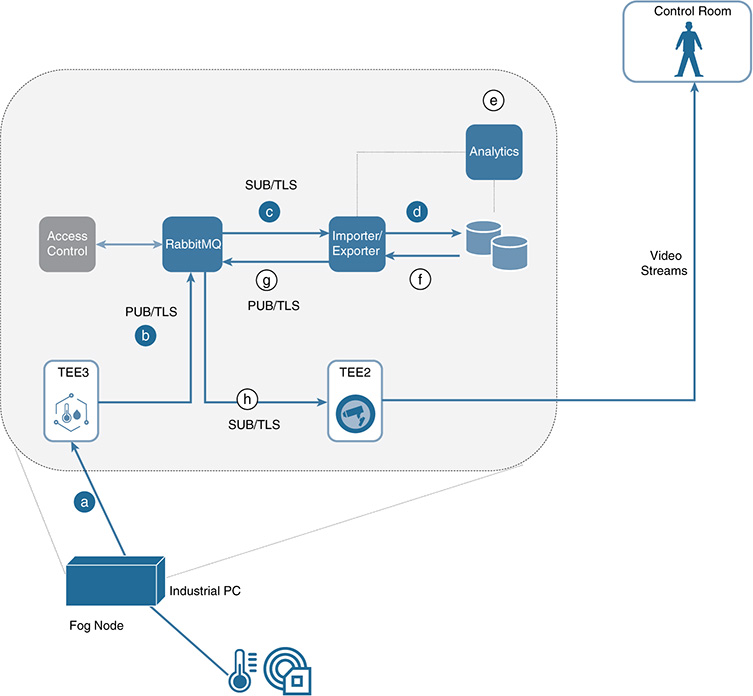

Data produced by one object or entity A often needs to be sent to a specific group of consumers or receivers (B and C). Other data sets, even if they are produced by the same entity A, might need to be distributed to a different group of receivers (C, D, and E). This is a well-known problem in the field of data distribution, where state-of-the-art technologies such as pub/sub systems support this functionality. Figure 11-16 shows several publishers (P) sending data to different brokers (B), which are in charge of distributing the data to the corresponding subscribers (S). The brokers allow for physical and temporal decoupling while distributing data between data providers and consumers. Specific analysis of the data protection mechanisms offered by key pub/sub systems (such as MQTT, RabbitMQ, or, in part, the Cisco Edge and Fog Processing Module [EFM]) is covered later in the chapter, in the sections “Message Queuing Telemetry Transport Protocol,” “RabbitMQ,” and “Cisco Edge and Fog Processing Module (EFM).”

The data distributed by the brokers is usually categorized into classes. Data consumers typically subscribe to one or more classes and receive data for only those specific classes. These classes support selecting and filtering which data needs to reach a given group of subscribers; this selection is generally based on a data topic or specific content. In topic-based systems, data is published to labeled or logical channels called topics. A subscriber receives data published for only the topics that it is subscribed to. Data publishers and brokers usually define the data topics that will be used, and all subscribers to those topics receive the same data. Content-based systems operate in a different way. In this case, data is delivered to a given subscriber if the content of the data matches a set of constraints that are usually defined by the subscriber (for example, in the form of data attributes). In other words, even for the same source data, different subscribers might receive different content. Some pub/sub systems support combinations in which data publishers post messages to topics, and data consumers define content-based subscriptions to a group of data topics.

In many IoT scenarios, the publishers, subscribers, and message brokers run as microservices both in fog nodes located in the thing-to-cloud continuum and in the back end(s) (for example, in a privately owned data center, a public cloud, or a combination). As Figure 11-16 shows, pub/sub systems can route messages across multiple brokers, not only to extend the reachability of the message distribution system, but also to scale and balance the load (including the number of publishers and subscribers each broker man-ages). The brokers normally perform tasks such as message queueing and store-and-forward functions, especially to temporally decouple the delivery of messages between a publisher and its subscribers. More specifically, a publisher might wake up, send a message to a broker, and go back to sleep mode until the transmission of the next message is needed. Similarly, subscribers might be in sleep mode, wake up, connect to their corresponding broker, get the batch of messages stored for them (for one or more data topics), and go back to sleep mode. Another important aspect of a pub/sub system is protocol adaptation. In many pub/sub systems, the publishers and subscribers might speak different languages (that is, they might use different messaging protocols), such as MQTT, AMQP, or STOMP. Protocol adaptations between data producers and consumers are essential. The way of handling these adaptations varies, depending on the implementation. For instance, RabbitMQ manages this through plug-ins to the message broker, turning RabbitMQ into a multibroker/multiprotocol pub/sub system. The Cisco EFM follows a different approach. EFM is based on the Distributed Services Architecture (DSA: http://iot-dsa.org); as discussed later in this chapter, the DSA performs protocol adaptations by means of DSlinks (http://iot-dsa.org/get-started/how-dsa-works).

The use message brokers is popular in IoT, but there are other ways of distributing data between data producers and data consumers in a pub/sub system. For instance, the Data Distribution Service (DDS) offers a pub/sub system that does not require a broker. In DDS, publishers and subscribers share metadata about each other via IP multicast; they can cache this information locally and route messages based on a set of discovery mechanisms offered by DDS.

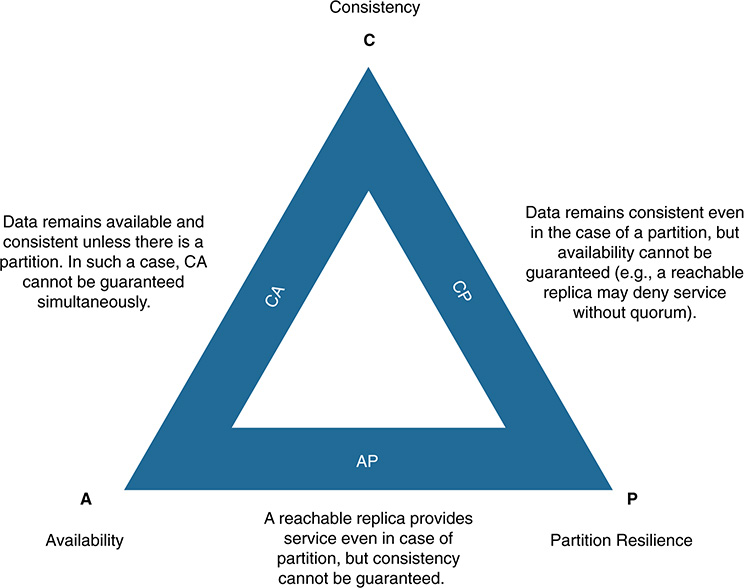

It is worth noting that the concepts of data in motion and data at rest get intertwined while examining the reliability of a pub/sub system. In a pub/sub system, reliability and availability are intimately related. Recall that availability is one of the central goals in the CIA triad (refer to Figure 11-2) because affecting the availability of resources is the main target of DoS attacks. In terms of reliability, the fundamental questions related to data in motion are what will happen to the data queued and stored if a message broker fails, and whether the data will be available once the broker restarts. To address these questions, most pub/sub systems in the marketplace support the concepts of data persistency, durability, and QoS.

■ Data persistency: In pub/sub systems, this is often considered a property associated with the messages. Some data distribution protocols mark a message as persistent by means of specific fields in the message header (for example, by setting the delivery mode). When a message broker receives a persistent message, it writes it to disk, which allows it to restore the message in case of a restart. Notice that, in most of the existing solutions in the marketplace, when a broker restarts, all persistent messages that were queued pending delivery before the restart are not automatically re-created in the queues. This requires durable queues, as explained in the following bullet. Message persistency comes at a price: Writing a significant fraction of messages to disk can considerably reduce the number of messages per second that a broker can process.

■ Durable queues: The literature is full of definitions and examples related to data durability, and it is important to notice that its meaning varies somewhat, depending on the context (databases, pub/sub system used, storage systems, and so on). Even between different pub/sub systems, the interpretation of the concept of durability might vary. For instance, in AMQP and RabbitMQ, it is usually associated with a property of certain entities, such as queues and data exchanges. Durable entities can survive a message broker restart because they can be automatically re-created when the server gets back up. To make this possible, the messages also need to be persistent because they need to be moved from disk to the corresponding queues after the restart. In the case of DDS, the interpretation is relatively different. The main objective of a pub/sub system such as DDS is to make sure that the data is delivered to the corresponding subscribers, especially all those that are available at the time the data is published. However, subscribers might join a pub/sub system dynamically (these are sometimes called late joiners); for the applications they run, it might be important to access the data that was published before they joined the pub/sub system (that is, access to historical data is key). To allow this, DDS provides QoS mechanisms in the form of a durability QoS policy. This policy specifies how to maintain the data published by DDS, and it supports four alternatives:

■ VOLATILE: When a message is published, it is not maintained for delivery to late joiners.

■ TRANSIENT_LOCAL: In this mode, the publishers store the messages locally in such a way that late joiners can get previously published messages. In this case, the data is available as long as the data writer is active—that is, as long as the publisher remains alive.

■ TRANSIENT: In this mode, the pub/sub system maintains the messages available for late joiners. While in TRANSIENT_LOCAL mode, the responsibility of storing the messages rests with the individual publisher—in this case, the responsibility is moved to the system. Data does not necessarily need to be persisted, so the data is maintained and remains available for late joiners as long as the middleware is running on at least one of the nodes.

■ PERSISTENT: In this mode, the data is persisted and remains available for late joiners even after the shutdown and restart of the whole middleware.

In pub/sub systems, the concept of QoS goes beyond the scope of data durability and involves other aspects, such as how brokers prioritize messages in a queue before routing them to their corresponding subscribers.

Durability and persistence are particularly needed when the data is indispensable. For example, a set of publishers can push data to a queue in a message broker, which a set of subscribers pulls to initiate the execution of specific tasks based on the content of the data. If the broker restarts, the messages in its queue should become available when the broker is back. Otherwise, the tasks that were queued before the restart might never be executed (although this depends on the controls performed at the application level and whether the tasks are managed by a stateless or a stateful application).

A data consumer might subscribe to specific messages at build time, initialization time, or runtime. The large majority of modern pub/sub systems allow subscribers to be added and removed at runtime. A distinctive factor in IoT is that the heterogeneity of publishers and subscribers, data objects, data models, encodings, and protocols can be quite high; some of these aspects are covered in more detail later in Figure 11-30. Data distribution can involve multiple tenants (both trusted and nontrusted); it also might require the enforcement of virtual fences for specific data (for example, certain data are not allowed to leave the factory). Additionally, it might require the use of sampling and filtering techniques before data can be sent to specific subscribers.

Figure 11-17 shows one such scenario, in which data produced by a large machine (an industrial Mazak machine) is sent for processing to a fog node (an industrial switch, in the example) that is connected to the Mazak machine. The Mazak machine can publish multiple data topics, which might have different subscribers. As shown in the example, part of the data is sent to management systems on the premises, other data is sent to HMI for operators, certain data is allowed to be sent to Mazak for statistics and preventive analysis and maintenance, and other data sets are sent to the carpeted zone (a corporate intranet). More specifically, the Mazak machine represents the publisher of multiple data topics, the fog node hosts the message broker, and the elements receiving the data represent the different subscribers. In this example, the export polices applied on different data topics permit sharing specific data with Mazak about its machine, sharing much more data with the company (see Levels 4 and 5 in Figure 11-1), and sharing no data at all with a given partner. All these data flows need to be created and protected. The roles of orchestration and automation are key to this end, not only to enable the different data pipelines required, but also to enforce the security polices authorizing the data exchanges.

Obviously, the concept of data in motion in IoT goes far beyond pub/sub systems. For instance, industrial protocols such as Modbus and its variants (for example, Modbus TCP/IP and legacy serial Modbus systems) are common in IoT, including industry verticals such as manufacturing and utilities. Many other protocols, such as serial RS-485, legacy OPC, BACnet, HTTP/REST, and SNMP, are broadly used as well. The utilization of pub/sub systems in IoT is becoming pervasive, especially to facilitate the distribution of data both within and between fog and cloud environments—see, for example, some of the most recent architectures related to OPC-UA pub/sub capabilities and Time Sensitive Networking (TSN). Indeed, a considerable number of the use cases addressed in this book require orchestration processes that involve the configuration of message brokers such as MQTT, RabbitMQ, or EFM. For this reason, when it comes to data on the move, this chapter focuses especially on data protection in the context of pub/sub systems.

Protecting Data in IoT

After this introduction to the basics of data distribution and the lifecycle of data in IoT, we now focus on data protection. Different kinds of data need to be protected at the data, management, and control plane levels. For each plane, the specific tools to protect the data depend on whether the data is at rest, in use, or on the move (for example, full disk encryption can obviously protect data at rest stored in a hard drive, but it will not help protect the data exchanged between a message broker and a subscriber in a pub/sub system). Nonetheless, the same security pillars apply, regardless of the state of the data—that is, the same needs exist for authenticating entities, authorizing entities, and ensuring the confidentiality, integrity, and availability of the data, as well as providing nonrepudiation whenever relevant.

Data Plane Protection in IoT

This topic deserves a book by itself. To provide tangible examples that can help administrators and developers understand how to protect data, we start the analysis with data on the move and delve into the details of MQTT and RabbitMQ. Then we focus on data in use and data at rest, examining the Cisco EFM as we go. These three technologies and the mechanisms they offer to protect data should provide sufficient foundational insight on the subject.

Message Queuing Telemetry Transport Protocol

MQTT is a well-known and broadly used pub/sub protocol. It allows devices or virtual entities (called MQTT clients) to connect with, publish, and get messages from an MQTT broker (see Chapter 9). As shown previously in Figure 11-16, the MQTT broker mediates the communications between MQTT clients, where a client can be a publisher, a subscriber, or both. Each client can subscribe to a particular set of topics.