Chapter 9. Identity, Authentication, Authorization, and Accounting

Topics covered in this chapter include

■ Introduction to Identity and Access Management for the IoT

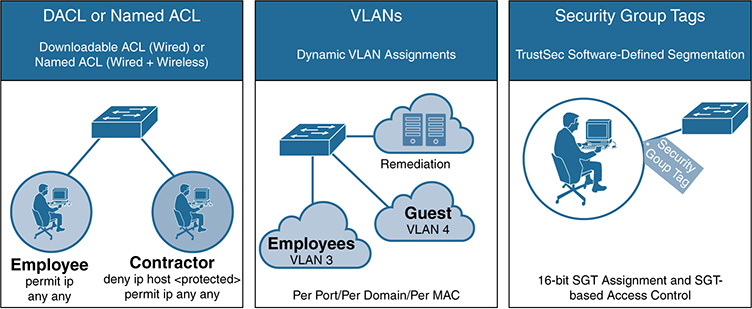

■ Dynamic Authorization Privileges

■ Manufacturer Usage Description (MUD)

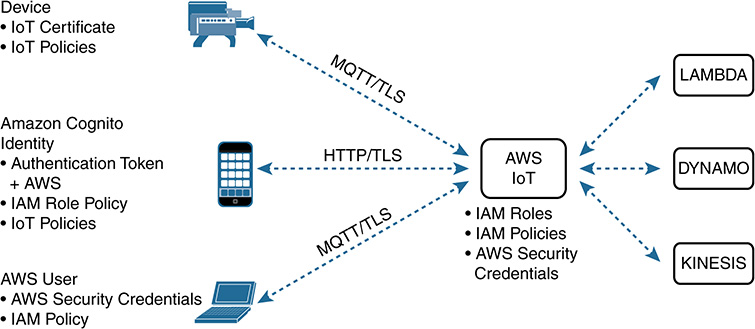

■ AWS Policy-based Authorization with IAM

■ Scaling IoT Identity and Access Management with Federation Approaches

■ Evolving Concepts: Need for Identity Relationship Management (IRM)

In Chapter 8, “The Advanced IoT Platform and MANO,” we discussed the building blocks and functionality required to bring automation and orchestration capabilities to a platform-type approach. Now we look at the identity and access management techniques that are evolving to assist with the explosion of new use cases and devices requiring connectivity.

The focus of this chapter is to explore the technology available for gaining identity when an endpoint attempts to access the network, methods to authenticate the endpoint, and, ultimately, automated solutions that can leverage that information to provide dynamic access privileges based on identity. We also explore how protocols such as OAuth 2.0 and OpenID Connect are helping to scale identity and authorization within IoT environments. Finally, we look at the evolution from IAM techniques to Identity Relationship Management (IRM) and its potential future applicability.

Before rolling through the agenda, it is important to have an elementary understanding of how the original or immutable identity is established. This identity will be leveraged for the access control technology concepts the chapter explores. We quickly step through some fundamental concepts on the provisioning process, various methods in which devices can be securely bootstrapped, device naming conventions, and the process of registering the devices for use. That information will establish a baseline understanding so that we can then proceed with the focus area.

We address these subjects in the following major areas:

■ Pre-network access (provisioning primer)

■ Establishing a unique naming convention

■ Establishing a trusted identity via bootstrap

■ Following an example registration and enrollment process for endpoints

■ Network access

■ Methods to gain device identity

■ Authentication methods: certificates and PKI, passwords, biometrics, AAA, 802.1X, RADIUS, and MAC Address Bypass (MAB)

■ Dynamic authorization solutions based on identity: Cisco Identity Services Engine (ISE), manufacturer usage description (MUD), and AWS policy-based authorization

■ Accounting

■ Scaling of IoT IAM with federation approaches

■ OAuth 2.0 and OpenID Connect 1.0

■ Evolution from IAM to IRM

Note In this chapter, the terms device and endpoint are used interchangeably, based on context.

Introduction to Identity and Access Management for the IoT

Every day, the Internet of Things (IoT) shifts further from concept stages into actual implementation and deployment stages. We have adopted connected lighting techniques that improve power efficiency, wearable fitness trackers that improve visibility into personal health, and even connected refrigerators that improve efficiency in shopping. Even the concept of autonomous cars promises to make one’s commute more secure while requiring less effort. The previous examples listed are only a small sample of the possibilities on the brink with IoT use cases.

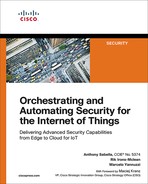

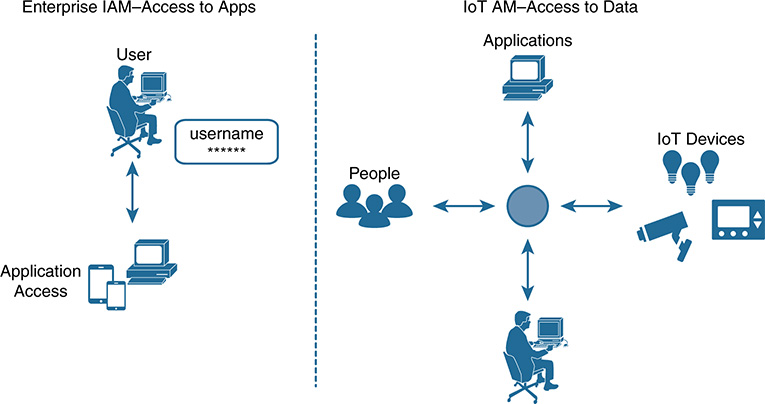

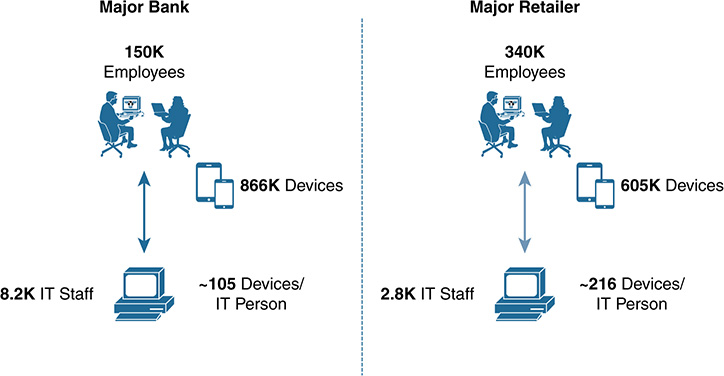

Despite how useful the listed use cases have become, we must address obstacles before we can take advantage of this technology in a secure way. One of those obstacles is scalability with identity management. The current model of using a traditional identity and access management (IAM) solution might not be applicable to IoT IAM. IoT introduces the need to manage exponentially more identities than existing IAM systems are capable of supporting. IoT identity management solutions require us not only to manage identity and authenticate human users, but also to manage billions of device identities and permissions on those users’ behalves. According to the Cisco Visual Networking Index (VNI), by 2020, approximately 25 billion devices will be connected to the Internet. Just five years later, the estimation is 74–100 billion devices by 2025. An exponential growth rate is on the horizon. Figure 9-1 illustrates the predicted device connection rate. (Note that this figure is not to scale.)

Many of these devices will need to communicate with each other and also with back-end systems. The user-to-device, device-to-cloud, user-to-cloud, and cloud-to-cloud connections that need to occur, along with the capability to grant permission on behalf of a party, is forcing a reevaluation of methodology. Our world is becoming a matrix of complex relationships.

Several solutions have been created in the last couple years to provide dynamic authorization privileges based on identity, and to do so in an automated way. This assists with scalability. Additionally, methods to extend identity and authorization capabilities by using tokens have come to fruition. These solutions are certainly useful, but they might not be enough to combat an imminent identity crisis brought on by the projected exponential IoT device connection rate.

This chapter explores the concept of Identity Relationship Management (IRM), which shows promise in dealing with the massive scalability challenges ahead. The evolution from traditional IAM to IRM will soon be at our doorstep. Because IRM concepts are still maturing, we still must leverage current identity, authentication, and dynamic authorization methods. For this reason, this chapter explores options for each category.

Device Provisioning and Access Control Building Blocks

Before we explore the methods for identifying, authenticating, and authorizing devices, we must begin by laying out a framework of building blocks to better organize these tasks.

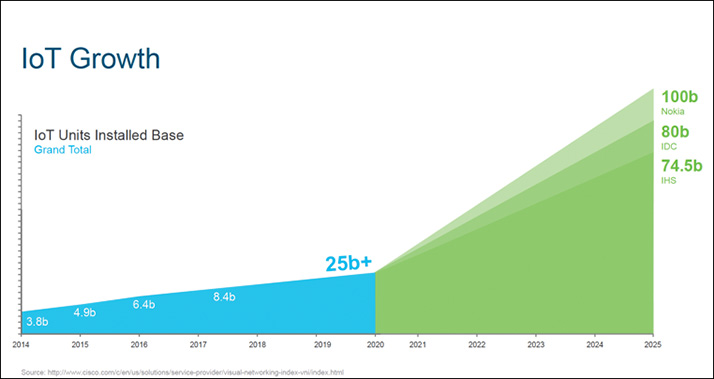

As we stated in the introduction, the focus of this chapter is to explore the access control technology required for a secure connection to the network. Specifically, this includes methods to identify the endpoint as it attempts to connect to the network, methods to authenticate its identity to ensure that the device is what it claims to be, and methods to grant the appropriate levels of authorization privileges based on the device’s identity, all in an automated way. Figure 9-3, later in the chapter, shows these categories.

Ideally, having a fundamental understanding of how a device obtains its unique identity is best before diving into access control topics. See the categories in Figure 9-2. This begins with the provisioning process and, within this context, the creation of hierarchical groupings for a device naming convention. This process then moves through creating a trusted identity for the device and, finally, enrolling the device to be used on the network. The provisioning process prepares the device to be used on the network, where the categories in Figure 9-3 can be invoked. The provisioning topic is not the focus area of this chapter, but it is important to address the beginning stages of establishing a trusted identity, which leads to more comprehensive understanding of the full lifecycle.

Figures 9-2 and 9-3 illustrate the two building block categories we explore in the next section.

This framework can be worked into a process or procedure and can be applied to all IoT devices that are procured, provisioned, registered, and ultimately used on an organization’s network.

As stated previously, for the context of this book, the provisioning process spans the naming convention, the secure bootstrap process, and device enrollment and provisioning so that it can be used securely on the network.

Naming Conventions to Establish “Uniqueness”

A common method leveraged at the beginning of the naming convention process is to create parent grouping or categories of endpoints that can be used within an organization. Creating a hierarchy of name space, similar to the Domain Name Service (DNS) or the Active Directory (AD) parent grouping structure, helps manage the volume of device types that will eventually be attached to the network. We illustrated a similar concept in Chapter 2, “Planning for IoT Security,” in the section “Segmentation, Risk, and How to Use Both in Planning the Consumer/Provider Communications Matrix.” These “unique” device names can be grouped under their parent category for optimized policy automation. Figure 2-9 in Chapter 2 shows a sample consumer/provider matrix to represent the interaction of proper groupings.

Proper groupings are an automation tool’s best friend. They allow for accuracy and repeatability, which essentially equates to improving the OPEX. Later in this chapter, we illustrate how to leverage technology to bring the matrix shown in Figure 2-9 to life.

The naming convention is an essential part of the structure and daily operation of an organization. Device naming conventions vary among companies, ranging from Extended Unique Identifiers EUI-48 and EUI-64 for MAC addresses, to IP and uniform resource indicators (URI), to Electronic Product Code for Radio Frequency Identification (RFID).

Because we are discussing a naming convention for an organization, not for the Internet, we simply must ensure that we achieve “uniqueness” for a device name; no other device can have an exact match. This can be accomplished with either determined output or randomized output. These conventions are all legitimate, and often combinations are leveraged, such as for manufacturer, application, location, and use variables.

The naming taxonomy complexity also varies between home use and company use. For home IoT use, naming conventions can be a bit more simplistic because they do not need to consider volume and geographical choices. For example, a common naming taxonomy leveraged with device counts under 50 could be location_device. For endpoint counts over 50, adding another variable would further increase scalability, as in floor3_masterbath_light1. As more devices are created with Internet connection capabilities, planning for a scalable naming scheme from the start is the best bet. An additional variable to consider for homes is the fact that many of the devices can be front-ended by natural language speech-recognition software, which allows for voice commands; thus, the names need to be distinguishable.

For company use, naming conventions might require a bit more planning, depending on the size of the company and the number of devices under management. As an example, an OUI is a 24-bit number assigned by the IEEE that uniquely identifies a vendor or manufacturer. The OUI consists of the first three octets of a MAC address. For example, an OUI for Apple is 00:CD:FE. A company may have more than one OUI, which allows it to group or categorize its parent product sets. This brings context to the naming process so that a pattern can identify a product. A MAC address, or part of a MAC address, can be used in the naming taxonomy along with the device serial number.

Regardless of the naming methodology chosen, the goal is to be able to provision the device using the unique identifier. The provisioning system thus must be able to discern one device from another.

Secure Bootstrap

IoT security has been a major topic in the industry for quite some time, particularly with the rise of smart cars, refrigerators, thermostats, and more. The growing concern of device security rests heavily on the minds of manufacturers, providers, and resellers.

A primary task within the identity framework is to establish the initial trust in the device. Ideally, if the device can be verified as a trusted unit, it has an improved chance of automated provisioning. Insecure or improper bootstrapping can be a major vulnerability because bogus data then is allowed to flow upstream and be leveraged as a DDoS mechanism; in the worst case, imposters can steal sensitive data, such as proprietary firmware. Additionally, if a device has been maliciously modified, it can be used to steal information and manipulate upstream processes.

In the context of this chapter, bootstrapping represents the provisioning of a trusted identity for a device. The bootstrapping process can begin in the manufacturing process or start when it is activated for the first time or when it is in an owner’s possession. The most secure bootstrapping methods typically are initiated during the manufacturing processes and implement discrete security associations throughout the supply chain. They use methods to uniquely identify a device in these ways:

■ Unique and unalterable identifiers stored and fused in device read-only memory (ROM)

■ Unique serial number(s) that are imprinted on the device

For example, Cisco Systems has a process that creates an immutable identity via a Secure Unique Device Identification (SUDI), as described in the following section.

Immutable Identity

The Secure Unique Device Identification (SUDI) is an X.509v3 certificate that maintains the product identifier and serial number. The identity is implemented when it is manufactured and is chained to a publicly identifiable root certificate authority (CA). SUDI can be used as an identity for configuration, security, auditing, and management; it is an identity that does not change. The SUDI credential in the Trust Anchor can be based on either RSA or Elliptic Curve Digital Signature Algorithm (ECDSA).

According to the Cisco Trust Anchor Technologies Data Sheet, the SUDI certificate, the associated key pair, and its entire certificate chain are stored in the tamper-resistant Trust Anchor chip. Additionally, the key pair is cryptographically bound to a specific Trust Anchor chip and the private key is not exported. This feature makes cloning or spoofing the identity information virtually impossible.

SUDI can be used for asymmetric key operations such as signing, verifying, encrypting, or decrypting. This capability makes remote verification of a device’s authentication possible. It enables accurate, consistent, and electronic identification of Cisco products for asset management.

Because of the lack of standards in this realm, many companies are combining their efforts and attempting to create standardization processes. Cisco has an initiative called IoT Ready that aggregates efforts among vendors, chip manufacturers, and the user community, to support standardization and certification. The standardization provides a set of guidelines that support a more comprehensive device identity process that can then be leveraged in the network access policies. Part of the IoT Ready initiative is working with device vendors to standardize the use of onboarding and access control mechanisms within devices. Personalizing the endpoint with a specific identification number and coupling that with a robust unique cryptographic identity such as PKI has significantly improved the bootstrap process.

After the unique identifiers are either imprinted on the device or stored in ROM, they are to be shipped securely from the manufacturer to a trusted facility in a tamper-resistant enclosure. These facilities must have the proper physical security measures in place, along with a thorough logging process.

The secure bootstrapping process can vary in comprehensiveness and should be tailored based on the industry in which the device will be used, the specific functions it will be performing, and the criticality of the data to be managed. Consumer-grade IoT devices might not have the stringent controls that a regulated industry requires, but the more connectivity we have, the greater the risk becomes to affect a broader spectrum. In a regulated space such as the payment card industry (PCI), the risk is high; thus, attaching more comprehensive bootstrapping procedures is critical. The most secure processes generally implement multiparty integrity procedures and documented separation of duties in the device bootstrap process.

This chapter explores the use of certificates (both X.509 and IEEE 1609.2) and PKI. For more detail, see the later sections “Certificates” and “Private Key Infrastructure.”

Bootstrapping Remote Secure Key Infrastructures

Bootstrapping can also be an automated process, using solutions such as the Bootstrapping Remote Secure Key Infrastructure (BRSKI). This automated process leverages vendor-installed X.509 certificates, in combination with a vendor-authorized service on the Internet. BRSKI works with Enrollment over Secure Transport (EST; RFC 7030) to enable zero-touch joining of a device in a network domain.

This process can be invoked using link-local connectivity or a routable address and a cloud service. Support for constrained devices (those that have constraints in the form of power draw, CPU, memory, and so on) is described for legacy reasons but is not encouraged. According to the IETF draft “Bootstrapping Remote Secure Key Infrastructures,” BRSKI provides a foundation to answer the following questions in a secure manner between a network domain element called a Registrar and an unconfigured, untouched device called a Pledge:

■ Registrar authenticating the Pledge: Who is this device? What is its identity?

■ Registrar authorizing the Pledge: Is it mine? Do I want it? What are the chances that its been compromised?

■ Pledge authenticating Registrar/Domain: What is this domain’s identity?

■ Pledge authorizing the Registrar: Should I join it?

The BRSKI process is considered complete when the cryptographic identity of the new key infrastructure is successfully deployed to the device. This approach provides a secure zero-touch method for enrolling new devices without any prestaged configuration. This process is referenced later in this chapter in the section “Manufacturer Usage Description.”

This Internet draft is in full accordance with BCP 78 and BCP 79. The BRSKI provisioning process is described in full detail in the BRSKI Internet Draft, at https://tools.ietf.org/html/draft-ietf-anima-bootstrapping-keyinfra-07#section-3.8.

Device Registration and Profile Provisioning

When the company receives the device, that device must be enrolled or registered for use on the network. The overall goal of the registration and profile provisioning process is to give an endpoint the logic it requires to make a secure connection to the network and operate within the company policy guidelines. The following steps are common:

■ Create and associate the naming convention or taxonomy with the device.

■ Prepare the device to participate in PKI (if not done already) by installing trust anchors and certificates for the certificate authority (CA) being leveraged and any intermediate registration authorities (RA).

■ Provision the private keys and device certificate signed by the CA so that the device can participate as a trusted unit on the network.

■ Download the profile (such as SSID info and encryption to be used), giving the device the capability to connect to the network.

■ Configure the device with day 0 information, such as its IP address, its local server address, and a default gateway.

Many vendors use different forms of this registration or enrollment process, each with varying steps and capabilities. Provisioning device certificates manually for each device would be a painstaking process; depending on the device type, certain methods can automate the process. We walk through two instances using provisioning examples from AWS and Cisco Systems.

Provisioning Example Using AWS IoT

Let’s walk through an elementary example of the Amazon Web Services (AWS) IoT device registration process.

Devices connected to the AWS IoT platform are represented by things in what they refer to as their “thing registry.” The thing registry maintains a record of all the devices that are connected to the AWS IoT account. The process begins by creating a device entry, which must be named. This is an example of how the unique naming convention can be applied.

Figure 9-4 illustrates adding the temperature sensor to the registry, beginning with creating the naming taxonomy process described previously.

The next step is to associate attributes to the thing that can be used in search criteria or, for a more comprehensive identity, by coupling variables. Figure 9-5 illustrates attaching attributes to the temperature sensor.

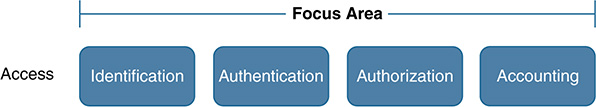

We now need to download and install the connection kit. If you choose the option for AWS to create the certificate and key pairs, it will be available to download as part of the connection kit. Figure 9-6 illustrates using the AWS-created certificate along with the public and private key pairs, which are made available for download and installation.

Figure 9-7 shows the downloaded package, which contains the certificate and associated key pair.

Several methods exist for managing certificates, and different IoT platform vendors might select different choices. You may choose to have the IoT platform vendor do the provisioning, to create both the certificate and the public/private key pairs. Another method might allow customers to bring their own certificates (BYOC); the customers then are responsible for creating the certificate for every device. A third option entails having customers generate their public/private keys and certificate signing request (CSR) and then sending the CSR to the IoT vendor’s CA to be signed.

AWS offers all three options for certificate management. See Figure 9-8 for certificate management options, along with the pros and cons for each.

Once the accounts and associated credentials have been provisioned, a process or procedure must be instituted to monitor the accounts against a defined set of criteria, to ensure policy adherence. Examples include the following:

■ Account monitoring and control: Toolsets that audit device administrative credentials should be deployed. This includes using strong password policies and rotating crypto keys.

■ Account discontinuance: A policy should address disabling credentials due to suspension status and/or deleting credentials due to an employment status change. Credentials, crypto keys, and certificates all need to be considered here. Having the keys in the wrong hands can lead to reverse-engineering attempts and unwanted compromise.

Provisioning Example Using Cisco Systems Identity Services Engine

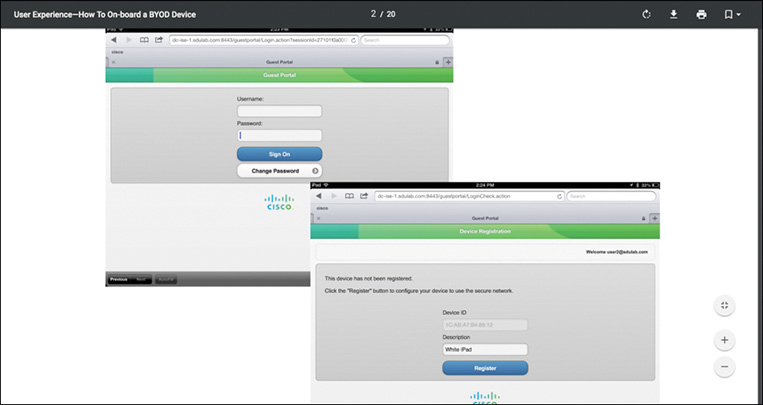

Cisco Systems also has an onboarding solution to self-register an endpoint for both wired and wireless users. This approach allows employees to manage onboarding their own devices through a self-registration workflow, simplifying the automatic provisioning of supplicants and certificate enrollment for the most common BYOD devices. The workflow supports iOS, Android, Windows, and Mac OS devices. It assists in transitioning these devices from an open environment to a secure network with the proper access, based on device and user credentials. This is particularly helpful for industrial environments that want to institute the use of BYOD in plants.

Workflows exist for both guest users and employees who want to use their own devices in a secure manner. Let’s walk through an example that allows an employee to bring in and provision a mobile device to be used on the company network, while at the same time allowing IT to enforce the appropriate access policies based on company policy.

Note ISE does not have an automated provisioning workflow for “things” to gain identity credentials. The workflow downloads a supplicant to the endpoint that walks the user through the provisioning process.

This entails downloading a supplicant that assists with the registration process, downloading a device certificate, and downloading the corporate wireless information (SSID and encryption method) to enable a connection to the secure company network. Consider the following process:

The employee connects to the provisioning SSID and is redirected to the Guest Registration portal for registration after opening a browser.

The employee enters the Active Directory credentials. If the device has not yet registered, the session is redirected to the self-registration portal, where the user is asked to enter a description for the new device.

The employee is not allowed to change the Device ID (MAC address); it is automatically discovered by the Cisco Identity Services Engine (ISE). (The next section introduces ISE.) Figure 9-9 illustrates the self-registration portal.

The supplicant profile is downloaded and installed on the endpoint.

Keys are generated and the certificate is enrolled.

The Wi-Fi profile required to connect to the BYOD_Employee is installed.

Figure 9-10 shows the supplicant profile being downloaded, along with the certificate enrollment, key generation, and download and installation of the Wi-Fi profile (which provides the SSID and encryption information required to establish a secure connection to the company SSID). After the download and installation of these profiles, the employee is notified that registration is complete and is reminded to manually connect to the BYOD_Employee SSID.

In summary, the AWS and Cisco registration and enrollment processes differ in terms of procedure and options available, but both give users the option of onboarding their own devices through a self-registration workflow. This simplifies the automatic provisioning of device enrollment and also assists with certificate deployment, which can be useful in terms of endpoint provisioning scalability.

The preceding section provided an elementary discussion of some of the prerequisites that must be met before an endpoint is allowed to gain access to network resources. These include bootstrapping an endpoint with a trusted identity, providing for a unique naming taxonomy, associating attributes with the endpoint for distinguishability, and ultimately enrolling the endpoint to be used on the network. The intent is to leverage that information to dynamically extrapolate those unique “fingerprints,” to identify the endpoint through the profiling process as it seeks access to the network, authenticate it, and grant the appropriate access privileges based on its identity. The next section explores those options in detail.

Access Control

As the cliché goes, we can’t properly secure what we can’t see. The identification process is a critical step in the access control process and works as a prerequisite to the automation logic that ensues. When a device is properly identified, it must be evaluated to determine whether it belongs on the network. After successful authentication, appropriate access privileges (authorization) can be granted, based on the device’s identity. These authentication and authorization steps require a properly documented trail that feeds into the accounting process. The accounting process also measures and keeps track of the resources a user consumes while accessing the network. The steps in this framework (identification, authentication, authorization, and accounting), shown in Figure 9-11, work in concert, as we explore in the following section.

Identifying Devices

So how is a device identified? Several methods can identify a device; this process varies significantly, depending on the device type and the connection method. Let’s walk through how these variables affect identification capabilities.

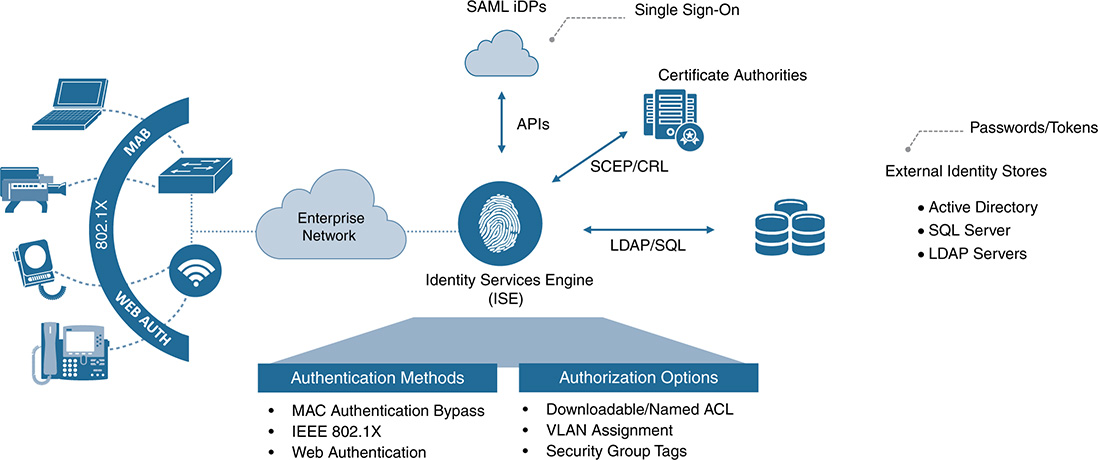

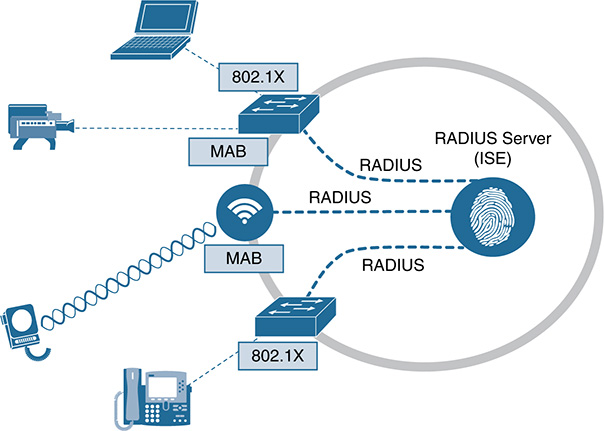

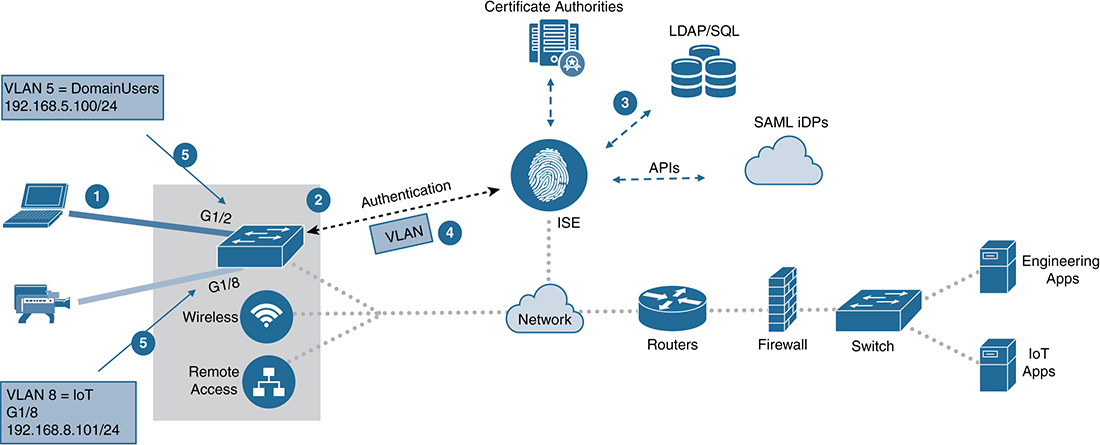

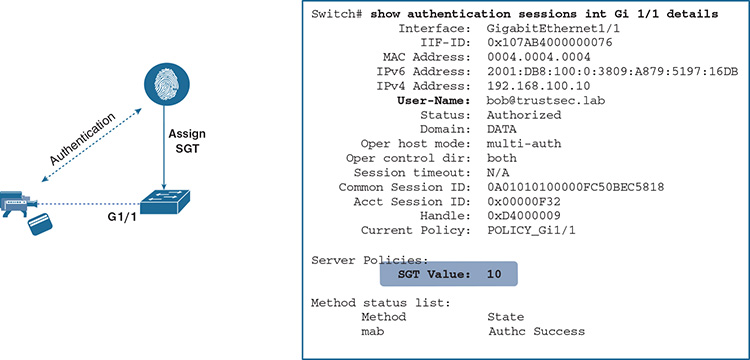

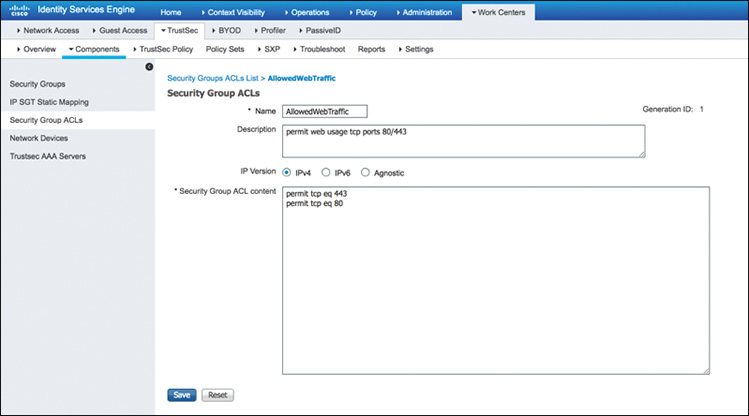

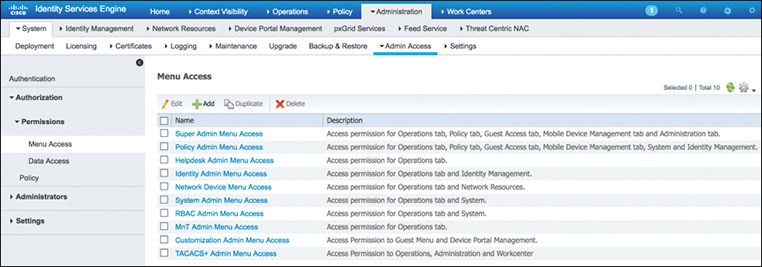

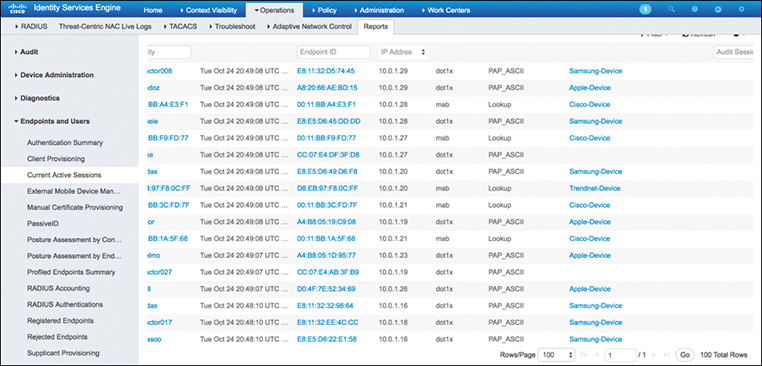

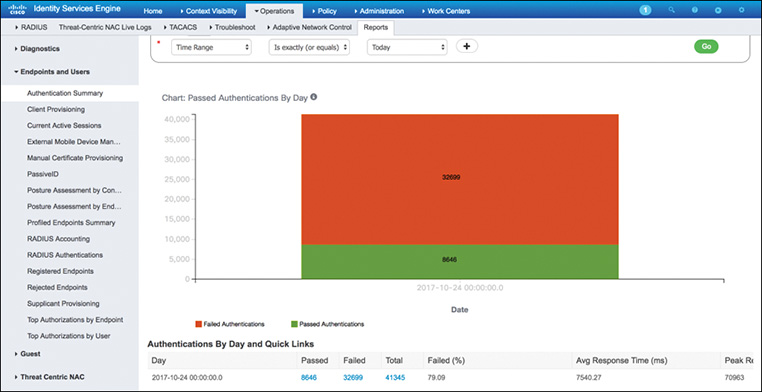

Cisco Systems has a product called the Identity Services Engine that combines endpoint identity, authentication, and authorization into a comprehensive access control solution. ISE essentially translates business policy into technical rules that are enforced electronically throughout a network. It was created to give organizations an integrated architecture approach for both network access and policy constructs. The concept is quite logical: to control policy from a single console. This encompasses the following:

■ Secure access via medium (wired, wireless, remote access)

■ Secure access via location (branch, campus, headquarters, remote user)

■ Secure access via endpoint (printer, computer, camera, medical device)

■ Secure access for any person (employee, contractor, vendor, guest)

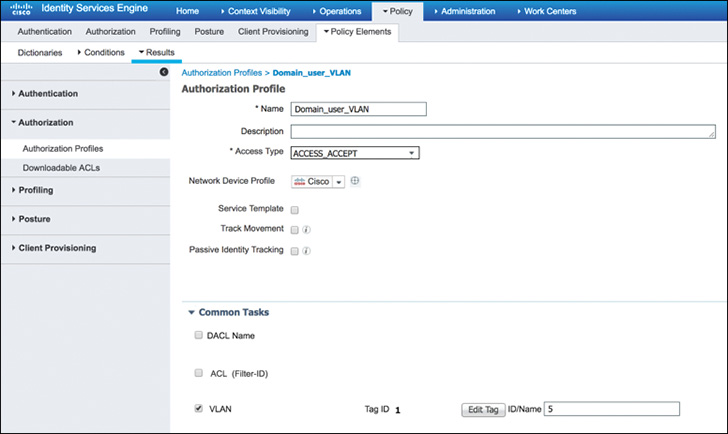

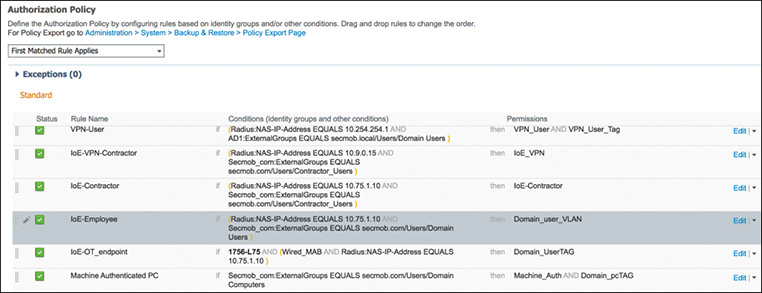

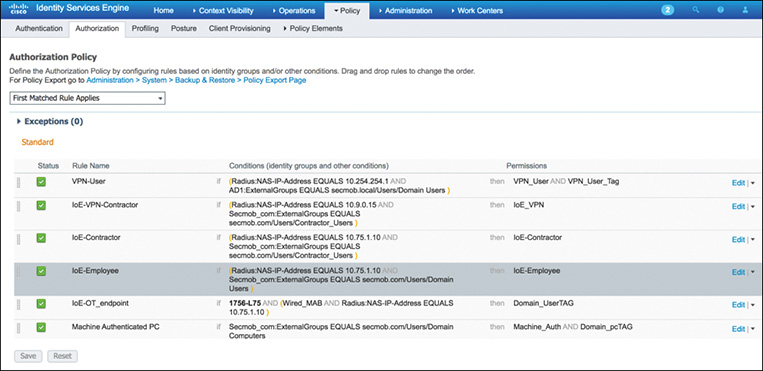

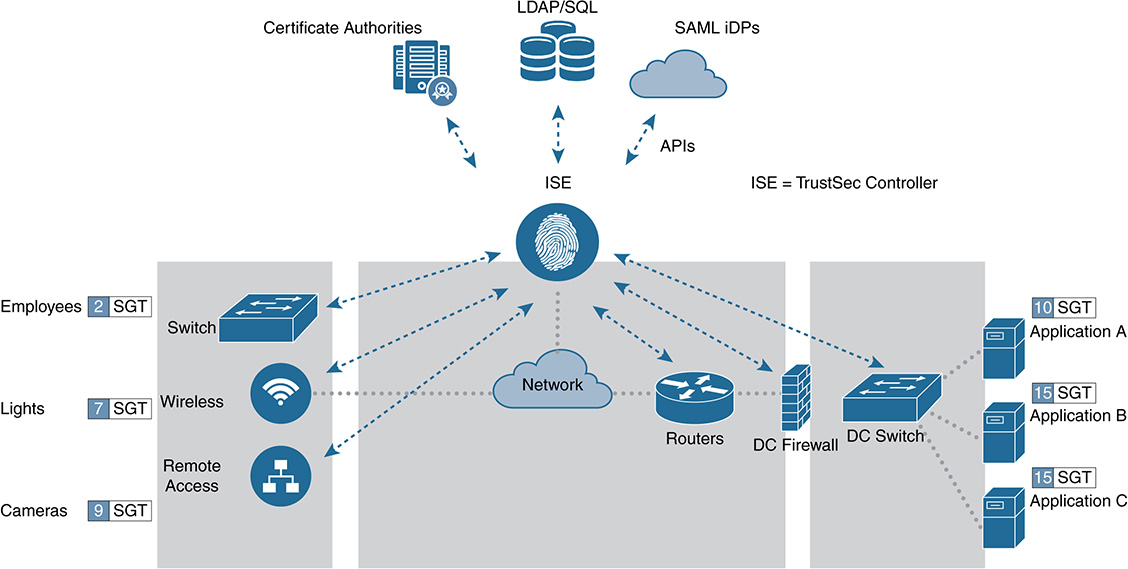

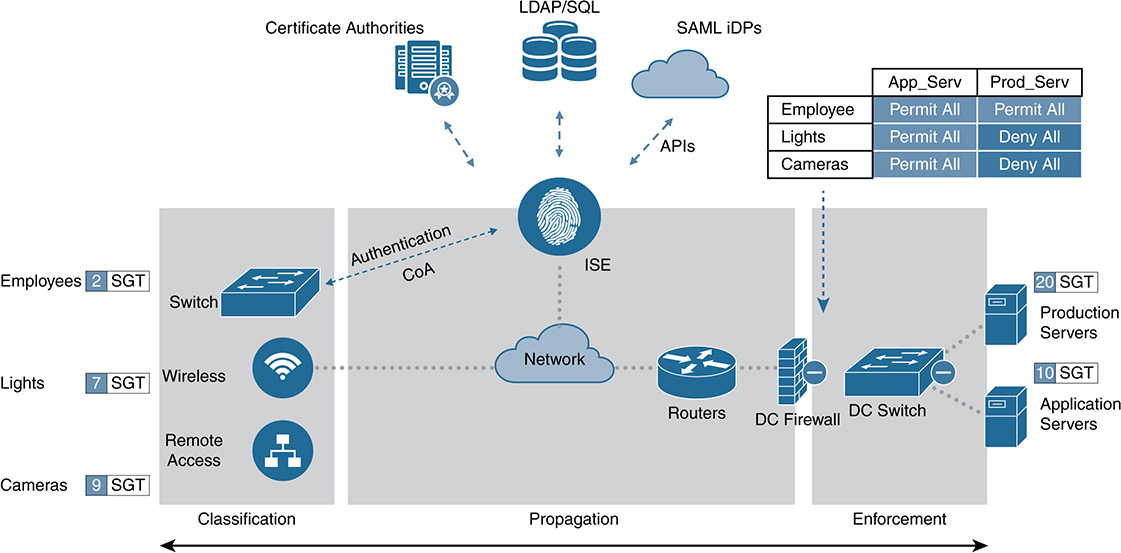

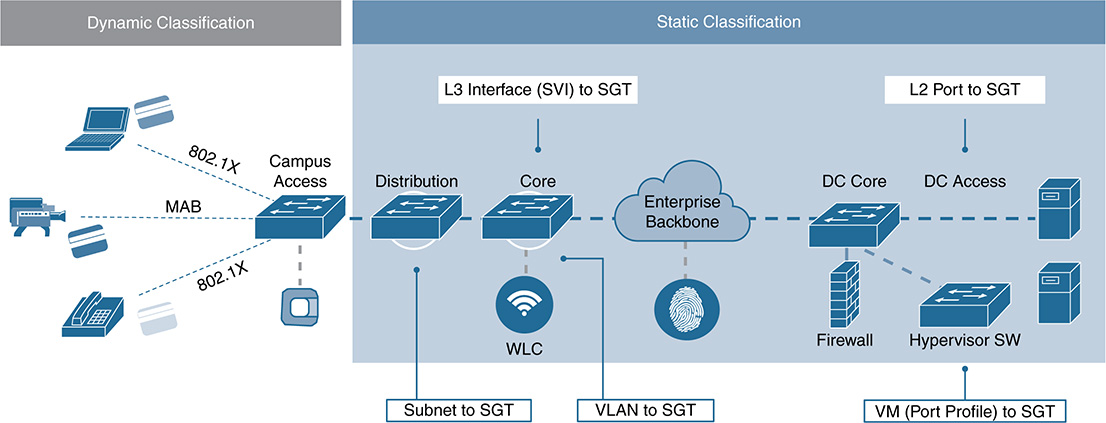

For Cisco, ISE is its authentication, authorization, and accounting (AAA) server on steroids. It also adds in the important capabilities of embedded profiling, posture, guest services, and PKI capability. The solution addresses the important questions of who, what, when, where, and how for various media options (wired, wireless, and remote access). ISE also connects to back-end authentication and authorization databases to enhance the “one-stop shop” theory of AAA, such as authenticating via Active Directory, LDAP, SQL, CAs, and external identity providers (iDP). Figure 9-12 illustrates ISE’s integrated approach to AAA.

Again, ISE is an integrated architecture that aggregates identity with AAA. Next, we explore the beginning stages, with methods to gather identity.

Endpoint Profiling

Profiling data can be gathered from endpoints in different ways, as the following sections explore.

Profiling Using ISE

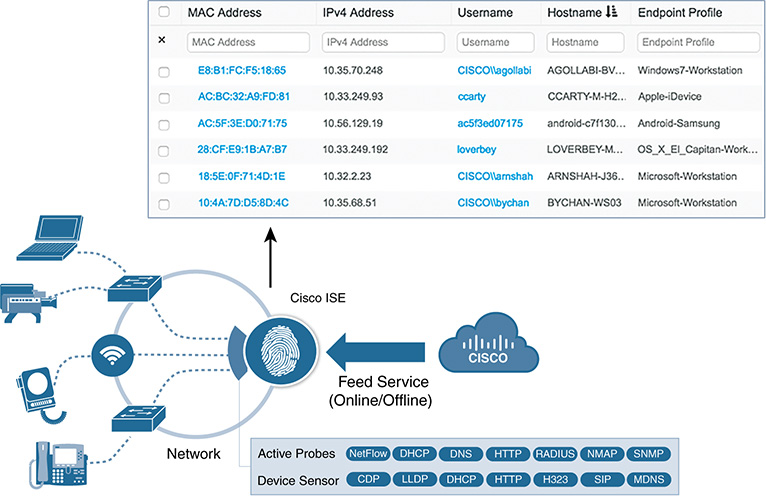

The ISE profiling process is an automated process of device discovery and classification. It is a key service responsible for identifying, locating, and determining the capabilities of endpoints that attach to the network, to deny or enforce specific authorization rules. The following are two of the main profiling capabilities:

■ Collector: Collects network packets from network devices and forwards attribute values to the analyzer.

■ Analyzer: Determines the device type by using configured policies that match attributes.

Two main methods collect endpoint information:

■ ISE acts as the collector and analyzer.

■ The infrastructure acts as the collector and sends the required attributes to ISE, which executes the analyzer portion.

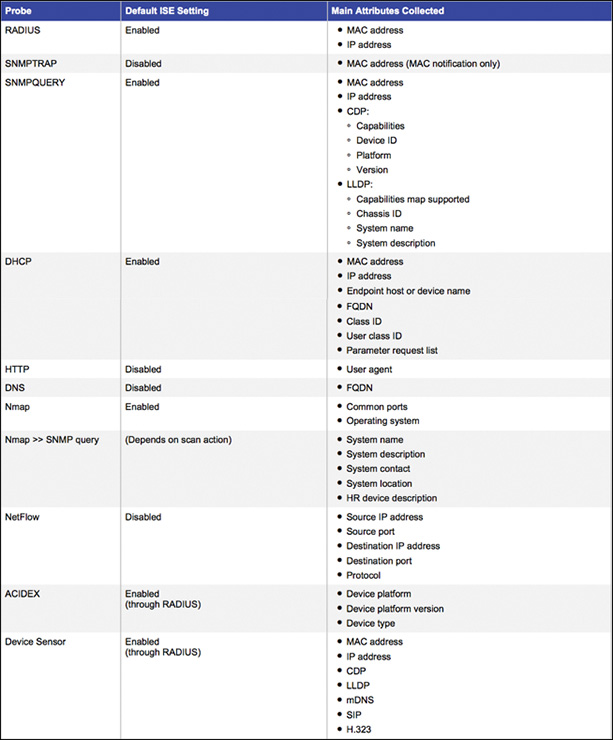

We discuss ISE as the collector and analyzer first. This approach is based on the use of collectors, referred to as probes. Probes use specific methods and protocols to collect attributes about each endpoint. The specific information a probe collects depends on the protocol and method implemented. Cisco ISE supports various probes, which we describe later in the chapter; each probe is capable of capturing different data points. Raw data for a given endpoint is parsed and stored in the ISE internal endpoint database. Relevant endpoint attributes are then analyzed against a library of fingerprinting rules known as profiler policies. Different attributes and rules have different weighting factors in the final endpoint classification, depending on the reliability of the data. Profiling is never an exact science; it is merely the process of collecting and aggregating variables to improve levels of certainty. Figure 9-13 provides an elementary view of the ISE profiling capability leveraging probes.

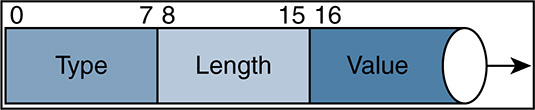

Each probe contributes different information about the endpoint. The goal is to deploy probes that optimize the collection process while adding unique value in the form of Type-Length-Value (TLV) to the classification. Additionally, the network must be designed and configured to support the collection. Figure 9-14 shows the list of embedded probes with ISE, along with the main attributes they gather.

ISE comes with definitions for thousands of endpoint detections and classifications on day one. These definitions can be updated via online or offline feed services for greater fidelity and up-to-date information. The information (or unique TLVs) about the endpoints can be gathered either using active probes or with the assistance of the device sensor feature on the network devices.

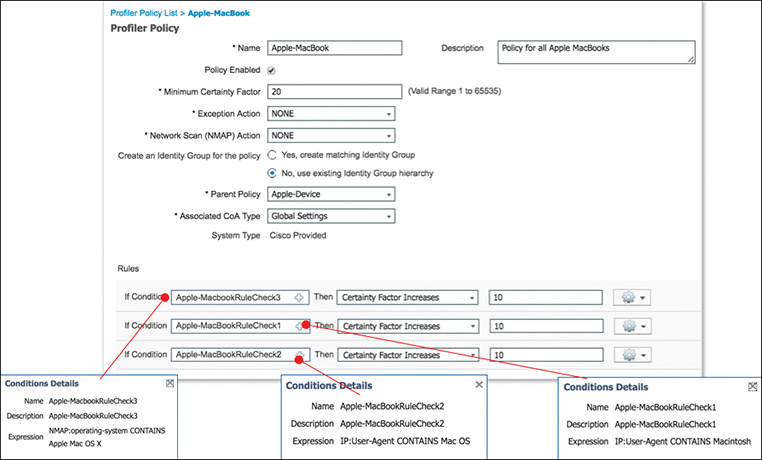

Profiling is not an exact science. Profiling is simply the process of gleaning protocols, extrapolating unique TLVs to leverage as fingerprints, and coupling as many TLVs as possible to increase the level of certainty. The more variables you can couple together, the better your chance for accuracy. Figure 9-15 illustrates the profiling policy in ISE for an Apple MacBook.

Figure 9-15 shows three conditions. With each condition that is successfully met, the certainty level increases. For our purposes, it would be enough to obtain just one of the three conditions shown because the minimum certainty factor to successfully profile an Apple MacBook is 20.

This policy is called a canned policy in ISE. Cisco has developed about 500 canned profiles that use the various probes. The profiling library is preconfigured in ISE and is continually updated using the automated Feed Service. ISE provides the capability to modify any of the canned profiles, and administrators can create customized profiles as well.

In addition to the default profiles, Cisco has created a library of specialized profiles, per industry. For example, the medical field commonly uses the Identity Services Engine. A fair number of specialized devices, such as electronic sensors, bio devices, controllers, and imaging systems, require unique privileges. Cisco has created medical device profiles that healthcare delivery organizations can download from Cisco.com. The Cisco Medical NAC Profile Library will continue to evolve: As of February 2016, it contained more than 250 medical device profiles.

Device Sensor

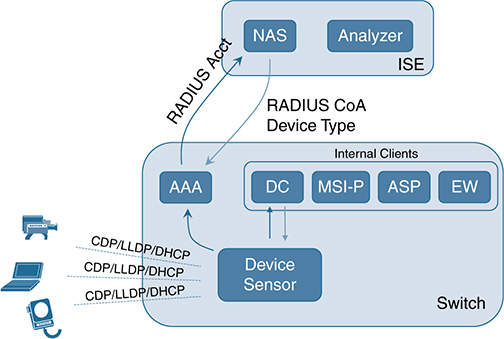

Cisco introduced a capability called Device Sensor in its access infrastructure (LAN switches and wireless LAN controllers) to bolster ISE’s endpoint profiling process. The sensor gleans information from specific protocols, such as MAC address, IP address, CDP and LLDP details, DHCP option fields, and HTTP user agents, and sends that raw data to ISE via RADIUS. Device Sensor makes that information available to its registered clients in the context of an access session. The access session represents an endpoint’s connection to the network. This is particularly useful for access control because we can leverage the TLVs acquired to learn the who, what, when, where, and how; we then can use that information to make a real-time decision on granting an endpoint access and authorization. We explore the access control piece later in this chapter.

The profiling capability of Device Sensor consists of two portions:

■ Collector: Gathers endpoint data from network devices

■ Analyzer: Processes the data for device determination

The Device Sensor represents the embedded collector functionality. Figure 9-16 shows where Device Sensor resides in the context of the profiling system.

Device Sensor has both internal and external clients. Internal clients include the embedded Device Classifier (local analyzer), ASP, MSI-Proxy, and EnergyWise (EW). The Identity Services Engine (ISE) is the external client and analyzer shown; it leverages RADIUS accounting to receive additional endpoint data.

The client notifications and accounting messages (which can contain profiling data, MAC addresses, and ingress port identifiers) are generated and sent to both internal and external clients.

Device Sensor has built-in port security that protects the switch from consuming memory and crashing, thus limiting intentional DDoS-type attacks.

Let’s look at an example of configuring the Device Sensor feature so that it can be used for profiling purposes on ISE. Device Sensor will collect information about connected endpoints by gleaning the following protocols and extrapolating the requisite TLVs for which it is configured:

■ Cisco Discovery Protocol (CDP)

■ Link Layer Discovery Protocol (LLDP)

■ Dynamic Host Configuration Protocol (DHCP)

The process begins with enabling the AAA, 802.1X, and RADIUS, and then enabling the appropriate protocols. See the standard AAA configuration example in Example 9-1.

Example 9-1 Standard AAA Configuration Example

aaa new-model

!

aaa authentication dot1x default group RADIUS1

aaa authorization network default group RADIUS1

aaa accounting update newinfo

aaa accounting dot1x default start-stop group RADIUS1

!

aaa group server radius RADIUS1

server name ISE1

radius server ISE1

address ipv4 10.1.X.X auth-port 1645 acct-port 1646

key cisco

!

dot1x system-auth-control

!

lldp run

cdp run

!

interface GigabitEthernet1/0/13

description IP_Phone_8941_connected

switchport mode access

switchport voice vlan 101

authentication event fail action next-method

authentication host-mode multi-domain

authentication order dot1x mab

authentication priority dot1x mab

authentication port-control auto

mab

dot1x pae authenticator

dot1x timeout tx-period 2

spanning-tree portfast

end

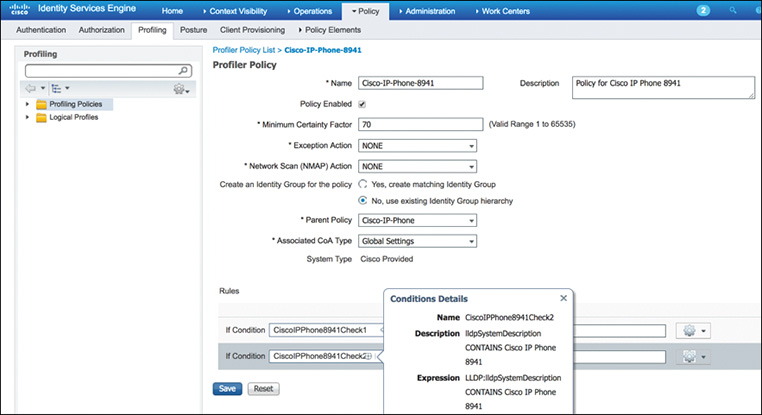

The attributes required to profile the device must then be determined. We moused over one of the checks (check 2) so that you can see how it references the lldpSystemDescription variable. Check 1 was for cdpCachePlatform (not shown) via the mouseover. Figure 9-17 illustrates the lldpSystemDescription variable.

The Device Sensor–specific portion of the configuration now begins:

■ Configure two filter lists, one for CDP and another for LLDP. These indicate which attributes should be included in RADIUS accounting messages. This step is optional; all are included by default.

■ Create two filter specs for CDP and LLDP. One filter spec indicates that list of attributes should be included or excluded from accounting messages. In the example, the following attributes are included:

■ device-name from CDP

■ system-description from LLDP

■ If needed, additional attributes can be configured and transmitted to ISE through RADIUS. This step is also optional.

■ Add the command device-sensor notify all-changes. It triggers updates whenever TLVs are added, modified, or removed for the current session.

■ To transit the information gathered via Device Sensor functionality to ISE, the switch must be configured to do so with the command device-sensor accounting.

Example 9-2 illustrates the Device Sensor probe configuration.

Example 9-2 Device Sensor Probe Configuration

device-sensor filter-list cdp list cdp-list

tlv name device-name

tlv name platform-type

!

device-sensor filter-list lldp list lldp-list

tlv name system-description

!

device-sensor filter-spec lldp include list lldp-list

device-sensor filter-spec cdp include list cdp-list

!

device-sensor accounting

device-sensor notify all-changes

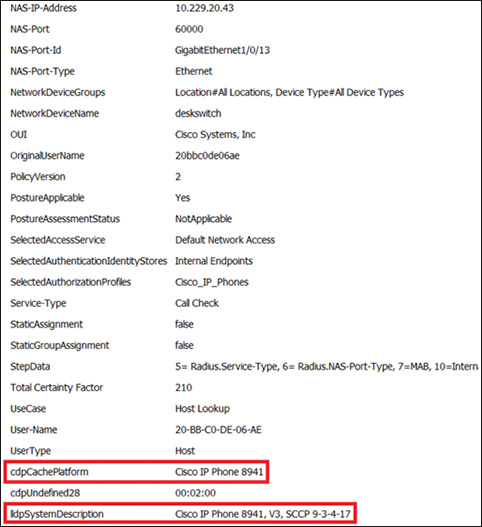

After configuring the authentication and authorization policies, the Cisco IP Phone can be successfully profiled. Figure 9-18 illustrates how ISE leveraged both the cdpCachePlatform and lldpSystemDescription variables to successfully profile the endpoint.

The important point is that differentiated authorization privileges should be provided, based on the information received. For example, you might need to differentiate between contractor devices and employee devices, between employees bringing in their own mobile devices and company-issued assets, between whether a user is on or off the network, or even between times of day. Contractors might be authorized to be onsite only Monday through Friday from 8:00 a.m. to 5:00 p.m.; vendors who need to enter on weekends might require differentiated access. The more information you can acquire, the more granular the policy can become. We explore the authentication and authorization sections later in this chapter.

Methods to Gain Identity from Constrained Devices

Devices fall into two major categories, constrained and complex. The term constrained devices was introduced to describe devices that have more stringent resource capabilities when compared to the common desktop computer (complex). This chapter does not exhaustively explore the constrained category, but it provides a baseline discussion of the category and its associated limitations so that you can employ the proper security considerations.

Before we discuss how to use standard IoT protocols to gain identity from constrained devices, we need to quickly walk through the classes of constrained devices.

Restrictions on constrained devices potentially include the following:

■ Less computational power (MegaFLOPS versus TeraFLOPS)

■ Reduced power consumption (mWatt versus Watt)

■ Less memory and/or flash, along with less buffer space (kilobytes versus gigabytes)

■ Potentially based on microcontrollers that provide a limited set of functionality and constraints on the user interface, such as the capability to set keys and update software

These types of constraints can make the device identification process challenging. Varying degrees of constraint are bolstering efforts to create categories.

Terminology was created for different classes of constrained devices in RFC 7228, “Terminology for Constrained-Node Networks.” Classes are defined for RAM/flash, energy limitation, and strategy for using power for communication.

Table 9-1 lists the RAM/flash classes of constrained devices.

Table 9-1 Classes of Constrained Devices (KIB = 1024 Bytes)

Name |

Data Size (RAM) |

Code (Flash) |

|---|---|---|

Class 0, C0 |

10 KB |

100 KB |

Class 1, C1 |

–10 KB |

–100 KB |

Class 2, C2 |

–50 KB |

–250 KB |

Class 0:

Severely constrained in memory and processing capabilities, and most likely cannot communicate directly with the Internet in a secure manner

Communicate via proxy or gateway devices

Cannot be managed or secured in a comprehensive manner

Most likely are preconfigured and might not have the capability to be reconfigured

Can respond to keepalives and send basic health status

Class 1:

Constrained in processing capabilities and flash/code space, and are unable to communicate with other devices that leverage the full protocol stack (HTTP/TLS/XML-based data representations).

Capable of using a protocol stack specifically designed for constrained nodes, such as Constrained Application Protocol (CoAP) over UDP, and can participate in conversations without the use of a gateway

Can leverage that protocol stack to provide support for security functions required on a larger network, and therefore can be integrated into an IP network

Need to be frugal with code space, memory, and power expenditure

Class 2:

Fundamentally capable of supporting most of the same protocol stacks used on desktops and powerful mobile devices

Can still benefit from lightweight and energy-efficient protocols that consume fewer resources

Energy Limitations

Certain devices are limited in available energy or power. Any device that does not have a limit is classified as E9. Energy limitation can refer to a certain time period or the device’s usable lifetime. When a device is discarded after its available energy has ceased, it is classified as E2. When the limitation refers to a period of time, such as solar energy being produced only during daylight hours, this classification is E1. Table 9-2 shows example energy limitation classifications.

Table 9-2 Classes of Energy Limitation

Name |

Type of Energy Limitation |

Example Power Source |

|---|---|---|

E0 |

Event energy limited |

Event-based harvesting |

E1 |

Period energy limited |

Battery that is periodically recharged or replaced |

E2 |

Lifetime energy limited |

Nonreplaceable primary battery |

E9 |

No direct quantitative limitations available |

Mains powered |

Strategy for Using Power for Communication

When wireless transmission is leveraged, the radio can consume a large portion of the device’s energy total. Different strategies address both power usage and network attachment, based on the energy source and the frequency the device leverages to communicate. Table 9-3 lists the general strategies.

Table 9-3 Strategy of Using Power for Communication

Name |

Strategy |

Capability to Communicate |

|---|---|---|

P0 |

Normally off |

Reattach when required |

P1 |

Low power |

Appears connected, perhaps with high latency |

P9 |

Always on |

Always connected |

■ Normally off: The device sleeps for long periods at a time. When it wakes up, it reattaches itself to the network. The goal is to minimize effort during the reattachment phase and the resulting application communication.

■ Low power: The device operates on small amounts of power but still communicates on a relatively frequent basis. This implies that extremely low-power solutions need to be used for the hardware, chosen link-layer mechanisms, and so on. Typically, with their small amount of time between transmissions, these devices retain some form of attachment to the network despite their sleep state. Techniques for minimizing power usage for network communications include minimizing any work from re-establishing communications after waking up and tuning the frequency of communications (including duty cycling, in which components are switched on and off in a regular cycle) and other parameters.

■ Always on: This strategy is applicable if extreme power-saving measures are not required. The device remains active in the usual manner. Consider leveraging power-friendly hardware or limiting the number of wireless transmissions, CPU cycles, and general power-saving tasks.

Billions of additional devices will be connected to the Internet, and a fair number of them might be using predominantly machine-to-machine (M2M) communication; they might present only external interfaces that are not primarily designed for human interaction. Couple that with the constraints listed, and constrained device identification can be challenging. Leveraging an 802.1X supplicant or PKI for identification on constrained devices might not be possible, so what about analyzing the protocol it currently uses for communication?

Leveraging Standard IoT Protocols to Identify Constrained Devices

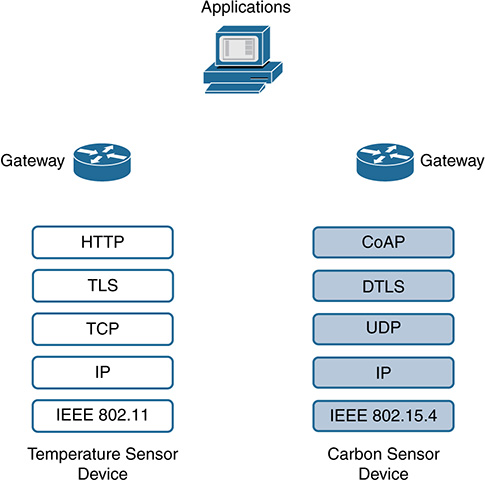

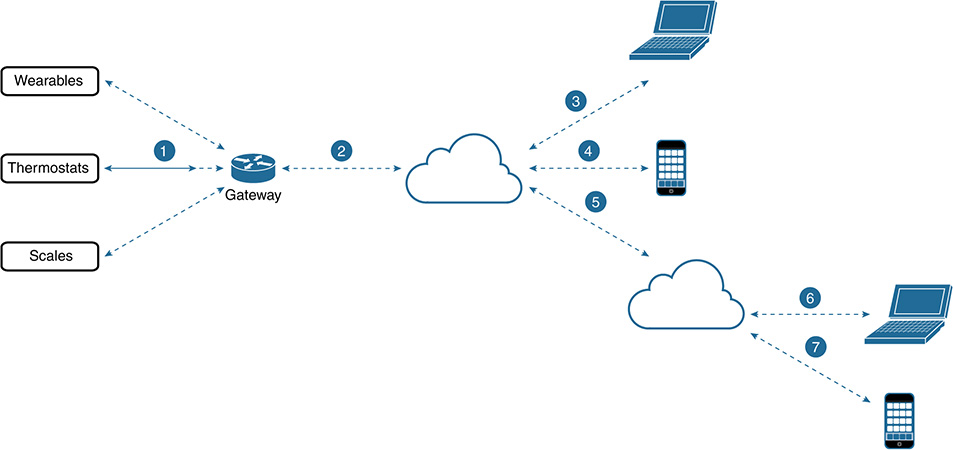

Figure 9-19 shows a communication pattern for uploading sensor data to an application service provider. If you are considering supporting constrained devices (see the right side), the protocols on the left side might not be suitable. As an example, data encoding schemes and transport protocols that are based on human-readable encoded data are verbose and thus not efficient enough when memory and energy resources are limited. Binary-based protocols, such as Constrained Application Protocol (CoAP) and Message Queue Telemetry Transport (MQTT), better fit M2M and IoT requirements on constrained devices.

Figure 9-19 Communication Stack Difference Between a Common Web Stack and a Stack for Constrained Devices

Vendors generally want to leverage popular protocols, which leads to increased adoption, but these options might not be possible, depending on the device constraints. Less widely available radio technologies (such as IEEE 802.15.4) could be needed, or special application-layer functionality (such as local authentication and authorization) might need to be provided for interoperability. Figure 9-19 illustrates the difference in protocol stacks for a common web stack versus a constrained stack.

If we consider the right side of Figure 9-19 to be a constrained device that leverages the communication stack, are there efficient methods to gain its identity? Depending on the protocol, sometimes the protocol format itself can be leveraged to gain identity variables, or the security overlay can help extrapolate additional identity information.

CoAP

The Representational State Transfer (REST) architecture has become widely leveraged in most applications and architectures on the web. One of the main goals of CoAP is to provide a REST architecture that is better suited for constrained environments. This protocol is used often, particularly in M2M scenarios, to build automation and energy. It aims to keep the message overhead smaller and limit the need for fragmentation. Some features include the following:

■ A web protocol that fulfills M2M requirements in constrained environments

■ Asynchronous message exchanges

■ UDP binding to support unicast and multicast requests

■ URI and content-type support

■ Low header overhead and parsing complexity

■ More simplistic proxy and caching capabilities

■ A stateless HTTP mapping, allowing proxies to provide access to CoAP resources via HTTP and also allowing simple interfaces to integrate with CoAP

■ Security binding to Datagram Transport Layer Security (DTLS)

The interaction model of CoAP is similar to the client/server model of HTTP. However, M2M interactions can also cause a device to take on both client and server roles. The CoAP messages are exchanged asynchronously between CoAP endpoints, and CoAP is bound to unreliable transports such as UDP.

The endpoint depends on the security model used. With no security, the endpoint is solely identified by an IP address and a UDP port number.

A common approach to providing enhanced identification and authentication for CoAP is to use Datagram Transport Layer Security (DTLS). DTLS can be leveraged in three ways:

■ PreSharedKey: DTLS is enabled and a list of preshared keys is generated. Each key includes a list of nodes that it can be used to communicate with.

■ RawPublicKey: DTLS is enabled and the device has an asymmetric key pair without a certificate (a raw public key) that is validated using an out-of-band mechanism.

■ Certificate: DTLS is enabled and the device has an asymmetric key pair with an X.509 certificate (RFC 5280) that binds it to its subject and is signed by a trust root.

PKI, certificates, and trust anchors are described in more detail in the later section “Authentication Methods.” Again, with no security, an endpoint using CoAP communicates via IP and UDP; that IP address and the UDP port number become its identifiers.

MQTT

MQTT was invented in the late 1990s to create a protocol for constrained devices that can address challenges in both battery power and bandwidth. MQTT is a client/server publish/subscribe messaging transport protocol that is lightweight, open, and simple to implement. However, it has been widely unused in M2M and IoT environments to address bandwidth and code footprint constraints. In October 2014, the protocol was approved as an OASIS standard.

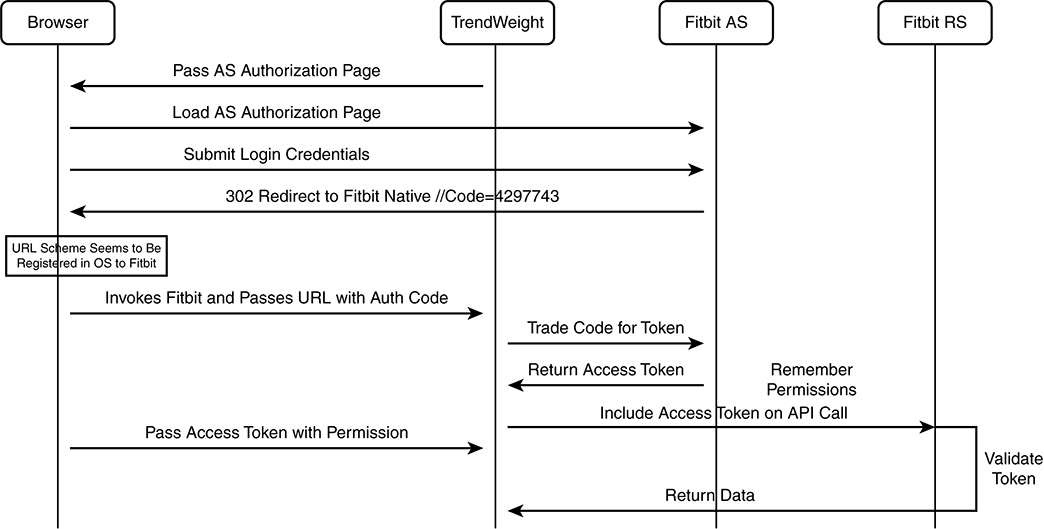

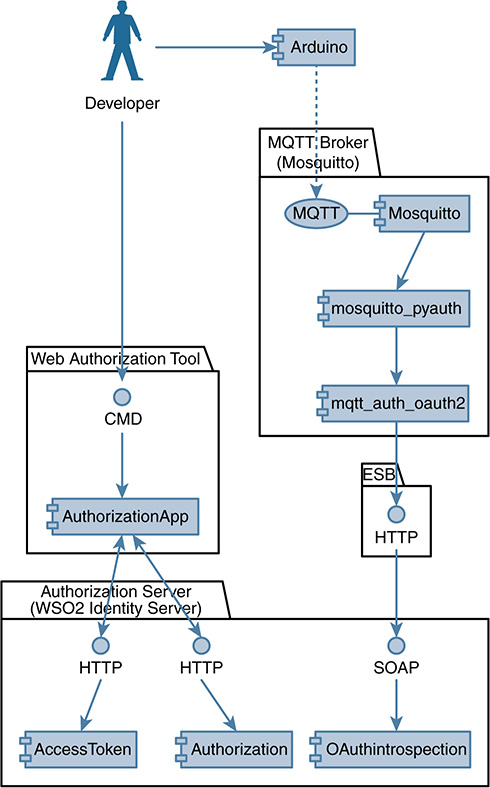

MQTT decouples the publisher and subscriber, so the client connection is always done with the broker. Figure 9-20 shows the working components of MQTT.

■ Client: An MQTT client is any device, from a micro controller up to a full-fledged server, that has an MQTT library running and is connecting to an MQTT broker over any kind of network. This can be a small device with resource constraints. The device must have a TCP/IP stack because it leverages TCP and IP, using MQTT over the top. This protocol is ideally suited for constrained IoT-type devices that can leverage the publish/subscribe model. MQTT client libraries are available for a wide variety of programming languages, including Android, Arduino, C, C++, Go, iOS, Java, JavaScript, and .NET.

■ Broker: The broker is the heart of any publish/subscribe model. Depending on the implementation, a broker handles thousands of concurrent MQTT clients. The broker receives all messages, filters them, determines who is subscribed to the topic at hand, and ultimately delivers the message to the subscribed clients. It keeps a session database of persisted clients that includes subscriptions and missed messages. The broker also handles authentication and authorization of clients. Often the broker is extensible, capable of integrating into additional back-end systems. This delivers customized authentication and authorization capabilities.

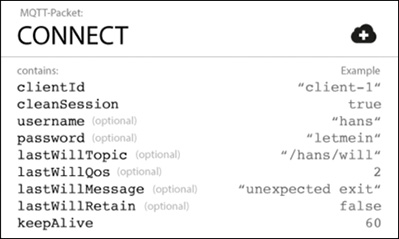

The MQTT connection is always between one client and the broker; no clients connect to each other directly. The connection is initiated when a client sends a CONNECT message to the broker. Within that CONNECT message there lies a ClientId, username, and password. Figure 9-21 illustrates these fields in the MQTT CONNECT packet.

The ClientId is the identifier of each MQTT client connecting to an MQTT broker. This ClientId must be unique per broker because the broker uses it to identify the client and its associated state. If you do not require state to be maintained, you can send an empty ClientId, resulting in a connection without state.

MQTT also enables you to send a username and password for authenticating the client and for authorization. This password is transmitted in plain text if it was not encrypted or hashed by leveraging TLS. Using a secure transport username/password field is recommended; we cover this shortly in the “Authentication Methods” section.

In summary, gaining identity from constrained devices can be challenging, but you can leverage the standard protocol it uses for communication or the security protocol overlay.

Authentication Methods

In contrast to desktops and personal mobile devices, IoT endpoints can vary from a light bulb to manufacturing equipment. Additionally, these devices grow in complexity as they progress along the IoT maturity model. At one end are simple sensors, such as smart refrigerators and wearables; at the other are more complex autonomous devices that perform action without human direction or intervention, such as a smart vehicle.

The intelligence and authentication capabilities of these endpoints also vary, thus requiring the network to support various authentication methods. This section explores the use of passwords, keys, certificates, client/server methods such as 802.1X and RADIUS, and biometrics.

Certificates

IoT has several requirements when it comes to security, but trust and control top the list. These two vary greatly depending on the device type, its nature of use, and also its given constraints. Regardless of the variants, cryptography plays a role. PKI and crypto technologies have proven both worthy and valuable in large-scale systems such as the financial and medical fields. PKI has performed well for years in trusted environments where millions of device certificates have been deployed for ATMs, cellular base stations, and smartphones. Although the “things” in IoT have much in common with these devices, they raise some new issues regarding assurance, scale, and technology.

Public key cryptography is based on the concept of a unique relationship between two distinct variables used to encrypt data. One of the variables is made public (the public key), and the other is kept private (the private key). When coupled together, the relationship seems to be valid. This is also known as asymmetric encryption because one key is used to encrypt and a related key is used to decrypt.

A digital certificate is analogous to a virtual passport. A passport contains an image, name, country of residence, place of birth, validity period, and so on to accurately validate a person’s identity. Similarly, a digital certificate contains fields that validate the identity of a device as it relates to a corresponding public key. Figure 9-22 illustrates the contents of a digital certificate.

As this book has pointed out many times, constrained devices will populate the IoT, and traditional cryptography might not be suitable (not enough RAM, flash, CPU power, and so on). Some adjustments thus might be required. Be sure to consider the following forms of digital certificates.

X.509

An X.509 certificate is a digital credential that associates an identity with a public key value. According to a Red Hat document, an X.509 certificate can include the following:

■ A subject’s distinguished name (DN) that identifies the certificate owner

■ The public key associated with the subject

■ X.509 version information

■ A serial number that uniquely identifies the certificate

■ An issuer DN that identifies the CA that issued the certificate

■ The digital signature of the issuer

■ Information about the algorithm used to sign the certificate

■ Some optional X.509 v.3 extensions (for example, an extension that distinguishes between CA certificates and end-entity certificates)

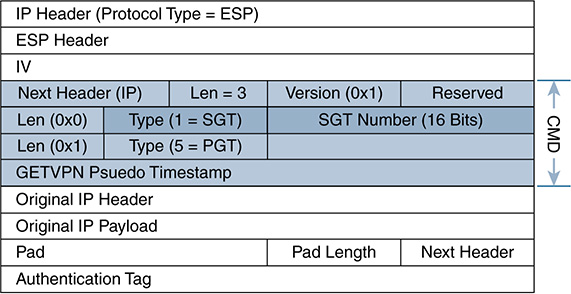

IEEE 1609.2

The IEEE 1609.2 certificate is about 50 percent of the size of the X.509 format. Despite its size, this certificate can leverage elliptic curve cryptographic algorithms (ECDH and ECDSA). The certificate is primarily used for M2M. In particular, the connected vehicle initiatives explore the use of on-board equipment (OBE) that communicates with other drivers in the vicinity using basic safety messages (BSM). Considering the number of potential vehicles communicating and the fact that the Dedicated Short-Range Communications (DSRC) wireless protocol is limited to a narrow set of channels, communications clearly needed to be secured and the security overhead of BSM transmissions needed to be minimized. This ushered in the sleeker 1609.2 certificate format. This new format has unique attributes, described as an explicit application identifier (SSID) and credential holder permission (SSP) fields. They allow IoT applications to make access control decisions without having to query for the credential holder’s permissions because they are embedded in the certificate.

Billions of certificates will be issued as IoT continues to evolve. These certificates will be used to identify devices, encrypt and decrypt communications, and sign firmware and software updates. Thankfully, trusted and proven solutions are capable of enhancing the security, efficiency, and manageability of digital certificates using PKI solutions.

Private Key Infrastructure

PKI is a key-management system that provisions asymmetric (public key) key material in the form of digital credentials. One of the most common formats is X.509, discussed earlier. PKI has been used for decades and is both a trusted and reliable form of authentication; it should be considered for confidentiality whenever appropriate. Despite its name, PKIs can operate publicly, can be Internet-based services, or can operate within a private organization.

When an identity needs to be asserted, a digital certificate is issued to a device that can perform a variety of cryptographic functions, such as signing messages and performing encryption and decryption.

Depending on implementation, different workflows are used to generate the public and private key pair. Centrally generated certificates and self-generated certificates exist. In the self-generation process, the device is commanded to generate a public/private key pair along with a certificate signing request (CSR). The CSR contains the device’s public key, and is sent to the certificate authority (CA) for signing. The CA signs with its private key and returns it to the device for use. The next section discusses cryptographical signing and the CA’s role in the PKI.

PKIs provide “verifiable” roots of trust and can adhere to a plethora of architectures. Some PKIs contain shallow trust chains with only a single parent CA; others have more comprehensive trust chains with varying levels. Figure 9-23 illustrates a PKI architecture.

The following example walks through a scenario leveraging the components shown in Figure 9-23.

When an IoT device requires a trusted identity, it leverages a trusted third party to verify or prove its identity (it enters the PKI architecture). The PKI uses a CA, which is responsible for cryptographically signing endpoint certificates. Most PKI infrastructures do not allow endpoints to interact directly with the CA and instead use an intermediate node, referred to as a registration authority (RA). The components work together in the following way:

The endpoint generates the key pair and CSR.

The endpoint sends the CSR to the RA, which contains the unsigned public key.

The RA verifies that the CSR meets the defined criteria and passes the certificate request to the CA.

The CA signs the certificate using an algorithm such as RSA, ECDSA, or DSA.

The CA sends the signed certificate request, called the certificate response, back to the RA.

The endpoint receives the certificate response, which contains the CA’s signature and explicit identity.

After the device installs the signed certificate, it can present that certificate during authentication. Other devices then can trust it as well. The trust stems from the fact that the certificate was signed by the CA and can be validated using the CA’s public key trust anchor, which is commonly stored in an internal trust store (the previous example assumes that the opposite end has the CA keys). See the upcoming section “Trust Stores” for a more thorough explanation.

What if endpoints have certificates that are signed by different PKIs? That scenario is fairly common, and it is handled with either explicit trust or cross-certification.

■ Explicit trust: This is one of the most common scenarios on the Internet. Each entity supports a policy that allows one to trust the other. Endpoints simply need to have a copy of the trust anchor from the other entity’s PKI to establish the trust relationship. This is accomplished by executing certificate path validation to preinstalled roots. You can also configure policies that dictate the acceptable quality of the trust chain during certificate path validation. As an example, web browsers explicitly trust many Internet-based web servers because the browser was preinstalled with copies of common Internet root CA trust anchors.

■ Cross-certification: If a PKI requires a more stringent interoperability policy with other PKIs, an option exists to directly cross-sign or create a new structure called a PKI bridge to implement and allocate policy interoperability. The U.S. government’s Federal PKI is an excellent example of this. In some cases, a PKI bridge can be created to provide an upgrade strategy between older certificates’ cryptographic algorithms and new ones.

Trust Stores

Considering our recent exploration of how trust is established and how keys and trust anchors are kept in a trust store, we should explore the trust store concept a bit deeper.

If you want to leverage PKI for IoT, you must determine whether the device has the capability to leverage a trust store. A trust store is a physical or logical portion of the device that stores public and private keys and PKI roots. The public keys are not a problem because they must be made freely available. The private keys, however, must be kept confidential, to avoid compromising the ability to trust an identity.

Trust stores are often sections of memory that have stringent access control prerequisites to prevent unauthorized alteration or malicious intent substitutions. Trust stores are sometimes implemented in hardware, such as a hardware security module or a Trust Platform Module (TPM). TPMs are typically dedicated chips that are integrated into the computer’s main board. Trust stores can also be implemented in software.

Circling back to IoT device use of PKI, if the device has externally provisioned an identity from a PKI, it must maintain and store the keys pertinent to that PKI (and possibly a trust chain, including any intermediate CAs) in its trust store.

The PKI provisioning process can be a challenging endeavor not only because of the potential size of the deployment, but also because of the constrained category of de-vices we discussed earlier in this chapter. An option that has been explored more in the past couple years is to leverage certificate authorities as a service. Experienced personnel working for PKI providers have surpassed many of the certificate provisioning challenges and also have developed the ability to scale to full deployment.

Revocation Support

Certificates are often issued with a specific validity period. In the process known as revocation, revoking a certificate invalidates it as a trusted security credential before its original validity period expires. This capability is useful in a variety of situations. Consider the following criteria for revocation, referenced in Microsoft TechNet:

■ Change in the name of the certificate subject

■ Discovery that a certificate was obtained fraudulently

■ Change of the status of the certificate subject as a trusted entity

■ Compromise of the certificate subject’s private key

■ Compromise of the certificate authority’s private key

Two primary revocation methods are leveraged: Certificate Revocation Lists (CRL) and Online Certificate Status Protocol (OCSP).

CRL

CRLs contain certificate serial numbers that have been revoked by the CA. The endpoint/client checks the serial number from the certificate against the serial numbers in the list (see the following sample).

Revoked Certificates:

Serial Number: 4845657EAAF2BEC5980067579A0A7702

Revocation Date: Sept 5 18:50:13 2017 GMT

Serial Number: 48456D15D25C713616E7D4A8EACFB3C2

Revocation Date: Sept 12 11:15:09 2017 GMT

To tell the client where to find the CRL, a distribution point is embedded within the certificate. CRLs were superseded by the Online Certificate Status Protocol (OCSP) for the following reasons:

■ CRLs can create a large amount of overhead because the client has to search through the revocation list.

■ CRLs are updated periodically, potentially increasing risk exposure until the ensuing CRL update takes place.

■ CRLs are not checked for OV- or DV-based certificates.

■ If the client is unable to download the CRL, then by default, the client trusts the certificate.

OCSP

Online Certificate Status Protocol (OCSP) addresses many CRL disadvantages by allowing the client to check the certificate status for a single entry. Consider the following OCSP process:

The client receives a certificate.

The client sends an OCSP request to an OCSP responder (over HTTP) with the certificate’s serial number.

The OCSP responder replies with a certificate status of Good, Revoked, or Unknown.

The following is an example of the OCSP process:

Response verify OK

0x36F5V12D5E6FD0BD4EAF2A2C966F3C21B: good

This Update: Mar 17 05:22:32 2017 GMT

Next Update: Mar 25 13:27:32 2017 GMT

The following are disadvantages of using OCSP:

■ OCSP requests are sent for each certificate, thus increasing the potential overhead on the OCSP responder (the CA) for high-traffic websites.

■ OCSP is not enforced for OV- or DV-based certificates, and is checked only for EV certificates.

SSL Pinning

SSL pinning can be seen as a tighter verified connection between the app and back-end APIs. It makes life difficult for security researchers and hackers, who abuse the absence of pinning to see how the app interacts with the back-end services. This technique generally applies more to IoT device developers, however. The intent is to pin the trusted server certificate directly to the device’s trust store. When the device connects, it then can check the respective certificate in the trust store. As long as the certificate is identical to the stored certificate (pinned) and the signature is valid, the connection is made.

Passwords

Many legacy devices rely on password use, and this is a growing concern. Complicating matters even more, default passwords for many DVRs and IP cameras usually are not changed. Someone cycling through the popular combinations of user/user, admin/admin, and root/12345 (done programmatically, of course) might be able to gain enough access over a device to use it in a botnet. This is precisely how the Mirai botnet was initiated.

Constrained devices that leverage standard IoT protocols that are better suited for constrained environments (such as MQTT and CoAP) might also use passwords. The preceding section explored how the ClientId, username, and password fields work in the MQTT CONNECT message, to deliver the capability to send a username/password to an MQTT broker for authentication. Refer to Figure 9-21 if you need to refresh your memory.

The username is a UTF-8 encoded string, and the password is binary data with 65535 bytes max. The specification dictates that a username without a password is possible, but the reverse is not an option (you cannot send a password without a username).

When using the built-in username/password authentication, the MQTT broker evaluates the credentials based on the implemented authentication mechanism and returns one of the following codes:

■ 0 = Connection accepted

■ 1 = Connection refused, unacceptable protocol version

■ 2 = Connection refused, identifier rejected

■ 3 = Connection refused, server unavailable

■ 4 = Connection refused, bad username or password

■ 5 = Connection refused, not authorized

This password is sent in plain text, so securing the transport of the username and password is highly recommended. Clients also can be authenticated with an SSL certificate, so no username and password are needed. Always use TLS with MQTT if you can afford the additional bandwidth and your clients have enough computing power and memory for TLS. As a rule of thumb, always use encrypted communication channels (for other protocols as well, such as HTTP).

Limitations for Constrained Devices

Securing MQTT over TLS sounds great, but you also must consider certain limitations. First, MQTT could be in use because of resource constraints. A drawback when using MQTT over TLS can be CPU usage and communication overhead. The additional CPU usage might be negligible on the broker, but it can be a problem for very constrained devices that are not designed for compute-intensive tasks.

The communication overhead of the TLS handshake can be significant if the MQTT client connections are expected to be short lived. Establishing a new TLS connection can take up to a few kilobytes of bandwidth (this can vary based on factors and implementation). Each packet is encrypted when using TLS, so packets on the wire also have additional overhead, compared to unencrypted packets.

If long-lived TCP connections are being used with MQTT, the TLS handshake overhead could be negligible. On the other hand, in an environment that instantiates quick reconnections and does not support session resumption, this overhead could be significant. Some environments have very little bandwidth to spare; if every byte on the wire counts for the use case, TLS might not be the best option.

Biometrics

A large portion of what we do today takes place on our personal mobile devices. All mobile devices should be protected, whether we choose our own passwords, use passcodes, or apply the newer biometric options. Biometrics developments for device authentication are increasing in the network authentication space, particularly as a potential means of secondary authentication.

According to techzone360.com, analysts estimating that the biometrics market will reach $30 billion by 2021. Passwords and PINs are often forgotten or compromised, and plastic cards can be lost or stolen. Device manufacturers can clearly see that a shift in methodology might be required. Biometric authentication offers an improved user experience because users are not required to remember their revolving list of 10 passwords or carry around physical equipment. Biometric technology uses prints, voice, and appearance for differentiation and authentication. The following sections look into recent advancements in biometric authentication.

TouchID

Fingerprint ID options (using a fingerprint instead of a password) are becoming more common on mobile devices. The sensor quickly reads a fingerprint and automatically unlocks the device. This technology can be extended in a variety of ways, from authorizing payments issued to the owner of the mobile device, to authorizing purchases from an electronic store. Developers are also allowing people to use fingerprint ID options to sign in to apps.

These sensors leverage advanced capacitive touch to take a high-resolution image from small sections of the user’s fingerprint. They analyze this information with a remarkable degree of detail and precision. Apple uses specific categories to analyze fingerprints, such as loop, arch, and whorl. It also maps out individual details in the ridges that are smaller than the human eye can see, and it even inspects minor variations in ridge direction caused by pores and edge structures.

Regarding security, the chip in an Apple device includes an advanced security architecture called the Secure Enclave that was developed to protect passcode and fingerprint data. Apple’s fingerprint ID system, Touch ID, does not store any images of fingerprints; instead, it relies only on a mathematical representation. Reverse-engineering the actual fingerprint image from stored data is not possible.

The fingerprint data is encrypted, stored on the device, and protected with a key available only to the Secure Enclave. The Secure Enclave verifies that the fingerprint matches the enrolled fingerprint data. The fingerprint cannot be accessed by the OS on the device or by any applications running on it; additionally, it is never stored on Apple servers or backed up to the cloud.

Face ID

Apple recently released another new biometric measure, called Face ID. The Face ID system was built on a new system known as TrueDepth that combines a traditional camera, an infrared camera, a depth sensor, and a dot projector. It projects 30,000 infrared dots onto the user’s face, with the intent of creating a “mathematical model of one face.”

This model is then run through the Neural Engine, a part of the new a11 bionic system on a chip that compares the new scan against previous models. Over time, the system will be able to learn and adapt as a person’s appearance changes because of new hairstyles, facial hair, glasses, and so on. All Face ID data is stored in the same Secure Enclave on the user’s device that Touch ID uses; the data is not transmitted to the cloud.

To demonstrate the solution’s effectiveness, the iPhone Face ID security system was tested against realistic masks designed by Hollywood special effects teams. It held up without error. Additionally, the iPhone Face ID unlock requires the user’s attention; it will not work if the user is looking away or has his or her eyes closed.

Tests concluded that the chance of a random person being able to unlock another device with the Touch ID fingerprint scanner was 1 in 50,000. The iPhone Face ID system has an even better rate of a 1 in 1,000,000 chance of a false positive, an exponential improvement.

Risk Factor

If someone loses an access card key or forgets a PIN, this is a security issue. Of course, each scenario has mitigation procedures. If a biometric spoof takes place, such as capturing someone’s fingerprint, well, there aren’t many ways out there to get a new fingerprint. For biometrics to be useful in IoT, it not only needs to maintain its user experience edge, but it must also deliver enterprise-level security.

Biometric data can be stored centrally. If someone attempts to authenticate to a system, that person’s unique biometric info is compared against the database. This central repository is a high-value target for malicious intent. One approach might use a decentralized system in which no two biometric data sets are stored together, negating the high-value target. This model is becoming more common, with users configuring biometric access on their personal devices. That data does not have to be transmitted across the network or stored centrally.

A method known as tokenization uses a process analogous to traditional cryptography. Biometric tokenization translates biometric data into something meaningless so that it can be stored safely on the device. When the user’s authentication is required, a crypto challenge response function would allow an action-specific verifier to be pulled from the biometric and transmitted. This transmission could be via Bluetooth or wireless. Successful authentication would result in a vehicle start, or whatever the application was designed to do.

When implemented with the proper safeguards, biometric access has applicability to a variety of environments, including connected car, connected home, smart locks, and more. During the introductory phase, biometric authentication might not immediately replace current methods, but it may either run alongside or complement traditional approaches while both solutions and processes mature. One thing is certain: When biometrics are handled properly, the user experience provides a refreshing change.

AAA and RADIUS

The authentication, authorization, and accounting (AAA) server is software that handles user requests for access to resources. Naturally, the AAA service provides authentication, authorization, and accounting (AAA) services. The AAA server typically interacts with the network access infrastructure and with databases and directories that contain user information on the back end. The current standard by which devices or applications communicate with an AAA server is the Remote Authentication Dial-In User Service (RADIUS).

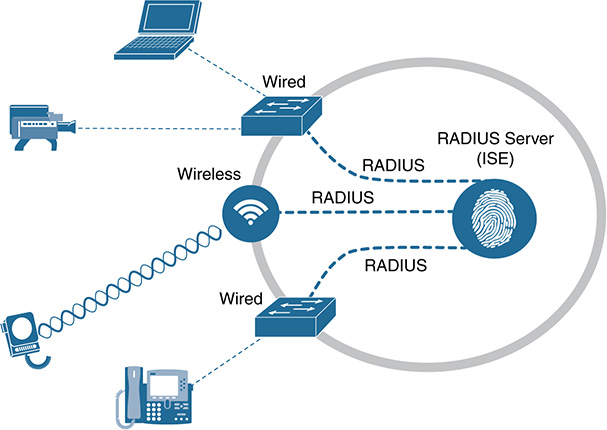

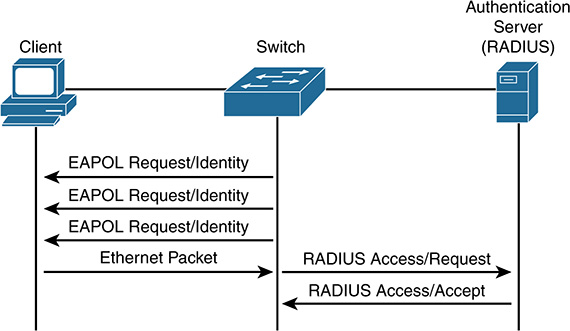

RADIUS is a distributed client/server system that secures networks against unauthorized access. RADIUS was created originally for dial-in user access (hence its name), but it has evolved greatly. RADIUS clients run on all types of infrastructure, such as routers, switches, and wireless controllers. These clients send authentication requests to a central RADIUS or AAA server that contains all user authentication and network service access information. Figure 9-24 shows how the infrastructure connects to the RADIUS server (ISE).

RADIUS is a fully open protocol, distributed in source code format, that can be modified to work with any security system currently available on the market. Cisco supports RADIUS under its AAA security paradigm, and RADIUS has been implemented in a variety of network environments that require high levels of security while maintaining network access for remote users. RADIUS, AAA, and 802.1X port-based authentication all work together harmoniously.

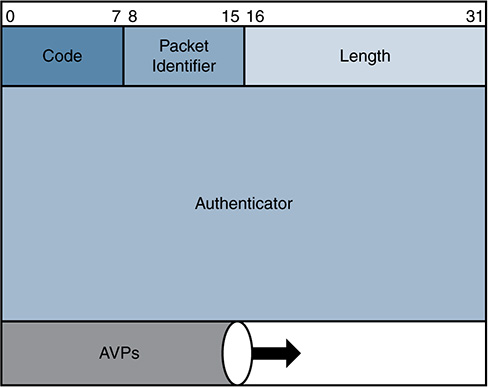

When the authentication request is transmitted to the AAA server, the AAA client expects the authorization result in the reply. RADIUS uses only four message types:

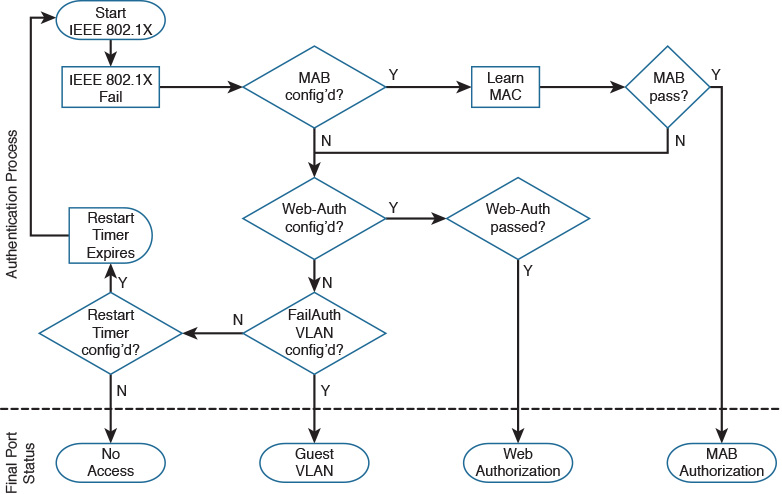

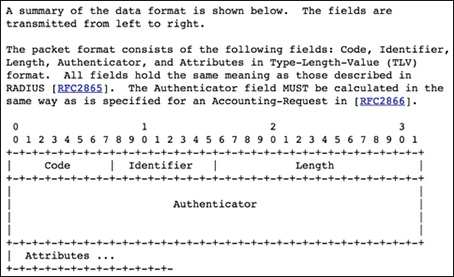

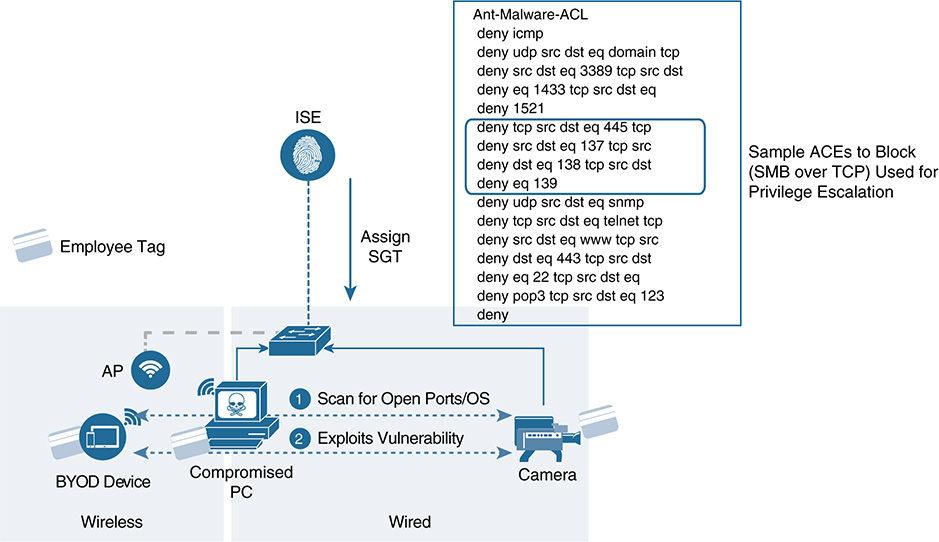

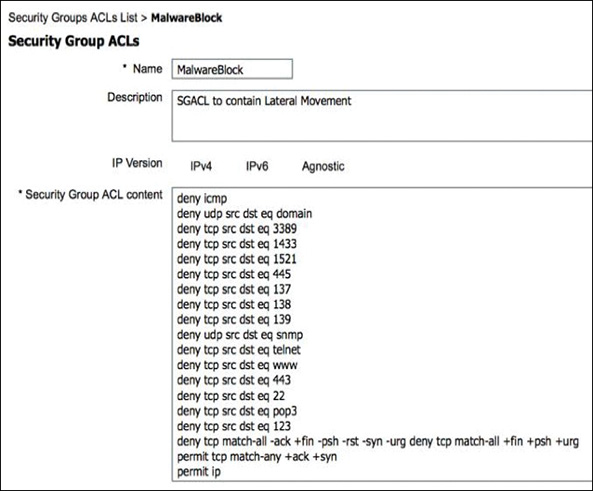

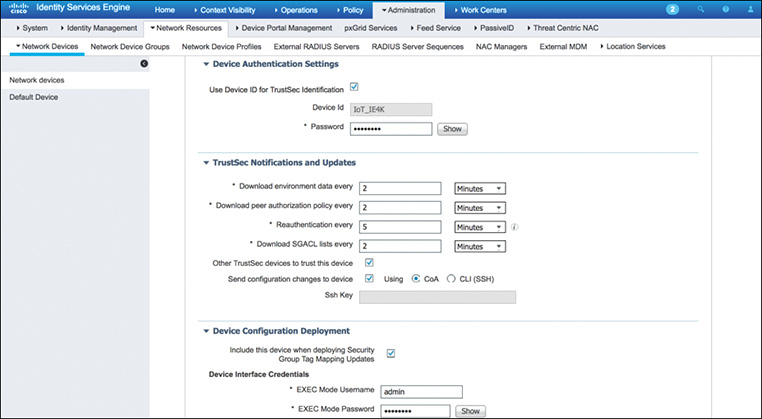

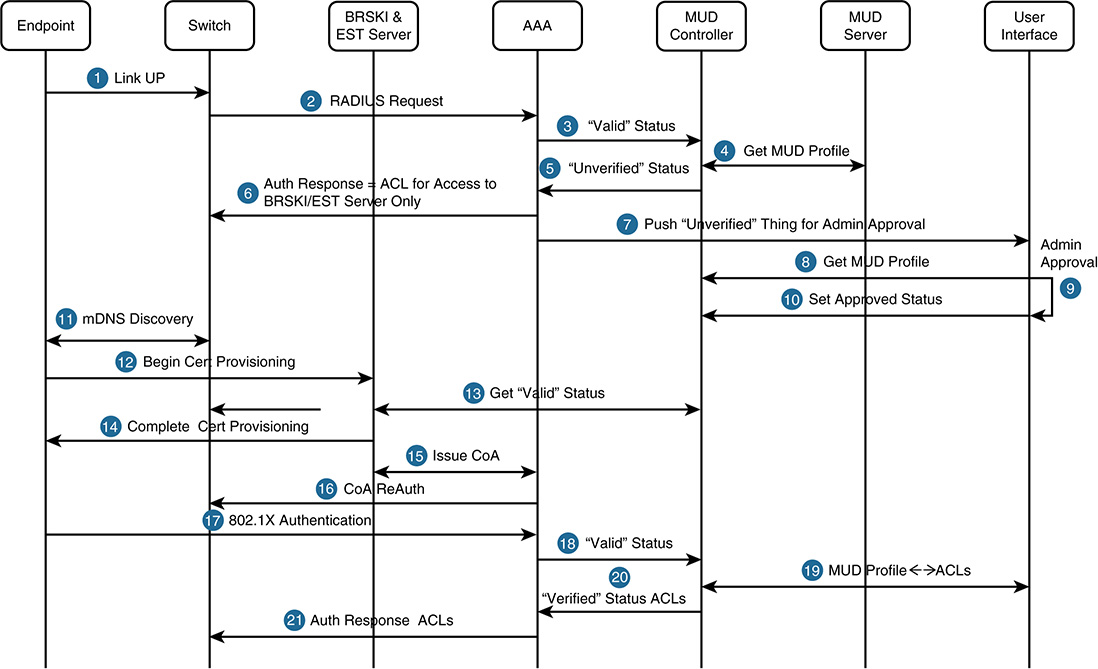

■ Access-Request: Sent from the AAA client to the AAA server, requesting an authentication and authorization.