Authentication and Remote Access

We should set a national goal of making computers and Internet access available for every American.

—WILLIAM JEFFERSON CLINTON

In this chapter, you will learn how to

Identify the differences among user, group, and role management

Implement password and domain password policies

Describe methods of account management (SSO, time of day, logical token, account expiration)

Describe methods of access management (MAC, DAC, and RBAC)

Discuss the methods and protocols for remote access to networks

Identify authentication, authorization, and accounting (AAA) protocols

Explain authentication methods and the security implications in their use

Implement virtual private networks (VPNs) and their security aspects

On single-user systems such as PCs, the individual user typically has access to most of the system’s resources, processing capability, and stored data. On multiuser systems, such as servers and mainframes, an individual user typically has very limited access to the system and the data stored on that system. An administrator responsible for managing and maintaining the multiuser system has much greater access. So how does the computer system know which users should have access to what data? How does the operating system know what applications a user is allowed to use?

On early computer systems, anyone with physical access had fairly significant rights to the system and could typically access any file or execute any application. As computers became more popular and it became obvious that some way of separating and restricting users was needed, the concepts of users, groups, and privileges came into being (privileges mean you have the ability to “do something” on a computer system, such as create a directory, delete a file, or run a program). These concepts continue to be developed and refined and are now part of what we call privilege management.

Privilege management is the process of restricting a user’s ability to interact with the computer system. Essentially, everything a user can do to or with a computer system falls into the realm of privilege management. Privilege management occurs at many different points within an operating system or even within applications running on a particular operating system.

Remote access is another key issue for multiuser systems in today’s world of connected computers. Isolated computers, not connected to networks or the Internet, are rare items these days. Except for some special-purpose machines, most computers need interconnectivity to fulfill their purpose. Remote access enables users outside a network to have network access and privileges as if they were inside the network. Being outside a network means that the user is working on a machine that is not physically connected to the network and must therefore establish a connection through a remote means, such as by dialing in, connecting via the Internet, or connecting through a wireless connection.

Authentication is the process of establishing a user’s identity to enable the granting of permissions. To establish network connections, a variety of methods are used, the choice of which depends on network type, the hardware and software employed, and any security requirements.

User, Group, and Role Management

To manage the privileges of many different people effectively on the same system, a mechanism for separating people into distinct entities (users) is required, so you can control access on an individual level. At the same time, it’s convenient and efficient to be able to lump users together when granting many different people (groups) access to a resource at the same time. At other times, it’s useful to be able to grant or restrict access based on a person’s job or function within the organization (role). While you can manage privileges on the basis of users alone, managing user, group, and role assignments together is far more convenient and efficient.

User

The term user generally applies to any person accessing a computer system. In privilege management, a user is a single individual, such as “John Forthright” or “Sally Jenkins.” This is generally the lowest level addressed by privilege management and the most common area for addressing access, rights, and capabilities. When accessing a computer system, each user is generally given a username—a unique alphanumeric identifier they will use to identify themselves when logging into or accessing the system. When developing a scheme for selecting usernames, you should keep in mind that usernames must be unique to each user, but they must also be fairly easy for the user to remember and use.

A username is a unique alphanumeric identifier used to identify a user to a computer system. Permissions control what a user is allowed to do with objects on a computer system—what files they can open, what printers they can use, and so on. In Windows security models, permissions define the actions a user can perform on an object (open a file, delete a folder, and so on). Rights define the actions a user can perform on the system itself, such as change the time, adjust auditing levels, and so on. Rights are typically applied to operating system–level tasks.

With some notable exceptions, in general a user who wants to access a computer system must first have a username created for them on the system they want to use. This is usually done by a system administrator, security administrator, or other privileged user, and this is the first step in privilege management—a user should not be allowed to create their own account.

Auditing user accounts, group membership, and password strength on a regular basis is an extremely important security control. Many compliance audits focus on the presence or lack of industry-accepted security controls.

Once the account is created and a username is selected, the administrator can assign specific permissions to that user. Permissions control what the user is allowed to do with objects on the system—which files they may access, which programs they may execute, and so on. Whereas PCs typically have only one or two user accounts, larger systems such as servers and mainframes can have hundreds of accounts on the same system. Figure 11.1 shows the Users management tab of the Computer Management utility on a Windows Server 2008 system. Note that several user accounts have been created on this system, each identified by a unique username.

• Figure 11.1 Users tab on a Windows Server 2008 system

Generic Accounts

Generic accounts are accounts without a named user behind them. These can be employed for special purposes, such as running services and batch processes, but because their activity cannot be attributed to an individual, they should not have login capability. If they have elevated privileges, it is also important to monitor their activities continually, comparing the functions they actually perform with what they are expected to be doing. General use of generic accounts should be avoided because of the increased risk that comes with having no attribution capability.

A few “special” user accounts don’t typically match up one-to-one with a real person. These accounts are reserved for special functions and typically have much more access and control over the computer system than the average user account. Two such accounts are the administrator account under Windows and the root account under UNIX. Each of these accounts is also known as the superuser—if something can be done on the system, the superuser has the power to do it. These accounts are not typically assigned to a specific individual and are restricted, accessed only when the full capabilities of the account are required.

Due to the power possessed by these accounts, and the few, if any, restrictions placed on them, they must be protected with strong passwords that are not easily guessed or obtained. These accounts are also the most common targets of attackers—if the attacker can gain root access or assume the privilege level associated with the root account, they can bypass most access controls and accomplish anything they want on that system.

Another account that falls into the “special” category is the system account used by Windows operating systems. The system account has the same file privileges as the administrator account and is used by the operating system and by services that run under Windows. By default, the system account is granted full control to all files on an NTFS volume. Services and processes that need the capability to log on internally within Windows will use the system account—for example, the DNS Server and DHCP Server services in Windows Server 2008 use the Local System account.

Shared and Generic Accounts/Credentials

Shared accounts go against the basic principle that accounts exist so that user activity can be tracked. That said, there are times when guest accounts are used, especially where guest access is limited to a defined set of functions and specific tracking is not particularly useful. Shared accounts are sometimes called generic accounts and exist only to provide a specific set of functionality, such as a PC running in kiosk mode with a browser limited to specific sites as an information display. Under these circumstances, being able to trace the activity to a user is not particularly useful.

Guest Accounts

Guest accounts are frequently used on corporate networks to provide visitors with access to the Internet and to some common corporate resources, such as projectors, printers in conference rooms, and so on. Again, these types of accounts are restricted in their network capability to a defined set of machines, with a defined set of access, much like an Internet user visiting the organization’s publicly facing web site. As such, logging and tracing activity have little to no use, so the overhead of establishing an individual account for each visitor does not make sense.

Service Accounts

Service accounts are accounts that are used to run processes that do not require human intervention to start, stop, or administer. From batch jobs that run in a data center to simple tasks run across the enterprise for compliance objectives, the reasons for running them are many, but there is no real need for an individual account holder. On Windows systems, one option is to prevent these accounts from logging into the system interactively. This limits some of the attack vectors that can be applied to these accounts. Another security provision is to apply time restrictions to accounts that run batch jobs at night and then monitor when they run. Any service account that has to run in an elevated privilege mode should receive extra monitoring and scrutiny.
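A time restriction like the one described for overnight batch accounts reduces to a window check. The sketch below is a minimal illustration, assuming a hypothetical policy that lets a batch service account run only between 22:00 and 05:00; the function name `within_window` and the window values are hypothetical. Because a night window crosses midnight, the check has to handle a start time that is later than the end time.

```python
from datetime import time


def within_window(now: time, start: time, end: time) -> bool:
    """Return True if `now` falls inside the allowed window [start, end).

    Hypothetical helper for illustration only; in practice logon-hour
    restrictions are enforced by the OS or directory service.
    """
    if start <= end:
        # Window contained in a single day, e.g. 09:00-17:00.
        return start <= now < end
    # Window wraps past midnight, e.g. 22:00-05:00.
    return now >= start or now < end


# Assumed policy: the nightly batch account may run 22:00-05:00.
BATCH_START = time(22, 0)
BATCH_END = time(5, 0)

for t in (time(23, 30), time(2, 15), time(14, 0)):
    print(t, within_window(t, BATCH_START, BATCH_END))
```

A run outside the window (the 14:00 case here) is exactly the kind of event the monitoring described above should flag.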

Onboarding/Offboarding

Onboarding and offboarding involve the bringing of personnel on and off a project or team. During onboarding, proper account relationships need to be managed. New members can be put into the correct groups; then, when offboarded, they can be removed from the groups. This is one way in which groups can be used to manage permissions, which can be very efficient when users move between units and tasks.

Privileged Accounts

Privileged accounts are any accounts with greater than normal user access. Privileged accounts are typically root or admin-level accounts and represent risk because their powers are essentially unlimited. These accounts require regular real-time monitoring, if at all possible, and should always be monitored when operating remotely. There may be legitimate occasions when system administrators act via a remote session, but when they do, the purpose should be known and approved.

Group

Under privilege management, a group is a collection of users with some common criteria, such as a need for access to a particular dataset or group of applications. A group can consist of one user or hundreds of users, and each user can belong to one or more groups. Figure 11.2 shows a common approach to grouping users—building groups based on job function.

• Figure 11.2 Logical representation of groups

By assigning membership in a specific group to a user, you make it much easier to control that user’s access and privileges. For example, if every member of the engineering department needs access to product development documents, administrators can place all the users in the engineering department in a single group and allow that group to access the necessary documents. Once a group is assigned permissions to access a particular resource, adding a new user to that group will automatically allow that user to access that resource. In effect, the user “inherits” the permissions of the group as soon as they are placed in that group. As Figure 11.3 shows, a computer system can have many different groups, each with its own rights and permissions.

• Figure 11.3 Groups tab on a Windows Server 2008 system

As you can see from the description for the Administrators group in Figure 11.3, this group has complete and unrestricted access to the system. This includes access to all files, applications, and datasets. Anyone who belongs to the Administrators group or is placed in this group will have a great deal of access and control over the system.

Some operating systems, such as Windows, have built-in groups, which are groups already defined within the operating system, such as Administrators, Power Users, and Everyone. The whole concept of groups revolves around making the tasks of assigning and managing permissions easier, and built-in groups certainly help to make these tasks easier. Individual user accounts can be added to built-in groups, allowing administrators to grant permission sets to users quickly and easily without having to specify permissions manually. For example, adding a user account named “bjones” to the Power Users group gives bjones all the permissions assigned to the built-in Power Users group, such as installing drivers, modifying settings, and installing software.
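The inheritance behavior described in this section can be sketched in a few lines. This is a simplified model, not how Windows stores permissions: the group names echo examples from the text, while the resource names and permission strings are hypothetical. A user’s effective permissions are the union of what each of their groups grants, so adding a user to a group immediately grants that group’s access, and removing them (for example, at offboarding) revokes it.

```python
from collections import defaultdict

# Hypothetical group -> resource -> permissions mapping.
group_permissions: dict[str, dict[str, set[str]]] = {
    "Engineering": {"product_docs": {"read", "write"}},
    "Everyone": {"product_docs": {"read"}, "lobby_printer": {"print"}},
}

# Hypothetical user -> groups membership table.
memberships: dict[str, set[str]] = defaultdict(set)


def add_to_group(user: str, group: str) -> None:
    memberships[user].add(group)


def remove_from_group(user: str, group: str) -> None:
    memberships[user].discard(group)


def effective_permissions(user: str, resource: str) -> set[str]:
    """Union of the permissions that every one of the user's groups grants on `resource`."""
    perms: set[str] = set()
    for group in memberships[user]:
        perms |= group_permissions.get(group, {}).get(resource, set())
    return perms


add_to_group("bjones", "Everyone")
print(effective_permissions("bjones", "product_docs"))          # {'read'}
add_to_group("bjones", "Engineering")
print(sorted(effective_permissions("bjones", "product_docs")))  # ['read', 'write']
remove_from_group("bjones", "Engineering")                      # offboarding from the team
```

No permission is ever attached to bjones directly; everything flows from group membership, which is what makes moving users between units and tasks cheap.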

Role

Another common method of managing access and privileges is by roles. A role is usually synonymous with a job or set of functions. For example, the role of security admin in Microsoft SQL Server may be applied to someone who is responsible for creating and managing logins, reading error logs, and auditing the application. Security admins need to accomplish specific functions and need access to certain resources that other users do not—for example, they need to be able to create and delete logins, open and read error logs, and so on. In general, anyone serving in the role of security admin needs the same rights and privileges as every other security admin. For simplicity and efficiency, rights and privileges can be assigned to the role security admin, and anyone assigned to fulfill that role automatically has the correct rights and privileges to perform the required tasks.
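Role-based assignment can be sketched the same way, except that permissions hang off a job function rather than off a collection of people. The role name mirrors the security admin example above, but the permission names, user names, and the `is_permitted` helper are hypothetical and do not reflect how SQL Server actually implements its roles.

```python
# Hypothetical role -> permissions table, modeled on the "security admin" example.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "security_admin": frozenset({"create_login", "delete_login", "read_error_log", "audit"}),
    "reader": frozenset({"select"}),
}

# Hypothetical user -> roles assignments.
user_roles: dict[str, set[str]] = {
    "sjenkins": {"security_admin"},
    "jforthright": {"reader"},
}


def is_permitted(user: str, action: str) -> bool:
    """A user may perform `action` if any role assigned to them includes it."""
    return any(
        action in ROLE_PERMISSIONS.get(role, frozenset())
        for role in user_roles.get(user, set())
    )


print(is_permitted("sjenkins", "delete_login"))     # True
print(is_permitted("jforthright", "delete_login"))  # False
```

Assigning a new person to `security_admin` gives them the same rights as every other security admin with no per-user configuration, which is the efficiency the paragraph describes.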

Domain Passwords

A domain password policy is a password policy for a specific domain. Because these policies are usually associated with the Windows operating system, a domain password policy is implemented and enforced on the domain controller, which is a computer that responds to security authentication requests, such as logging into a computer, for a Windows domain. The domain password policy usually falls under a group policy object (GPO) and has the following elements (see Figure 11.4):

• Figure 11.4 Password policy options in Windows Local Security Policy

Not only is it essential to ensure every account has a strong password, but it is also essential to disable or delete unnecessary accounts. If your system does not need to support guest or anonymous accounts, then disable them. When user or administrator accounts are no longer needed, remove or disable them. As a best practice, all user accounts should be audited periodically to ensure there are no unnecessary, outdated, or unneeded accounts on your systems.

Enforce password history Tells the system how many passwords to remember and does not allow a user to reuse an old password.

Maximum password age Specifies the maximum number of days a password may be used before it must be changed.

Minimum password age Specifies the minimum number of days a password must be used before it can be changed again.

Minimum password length Specifies the minimum number of characters that must be used in a password.

Password must meet complexity requirements Specifies that the password must meet the minimum length requirement and have characters from at least three of the following four groups: English uppercase characters (A through Z), English lowercase characters (a through z), numerals (0 through 9), and non-alphabetic characters (such as !, $, #, and %).

Store passwords using reversible encryption Reversible encryption is a form of encryption that can easily be decrypted and is essentially the same as storing a plaintext version of the password (because it’s so easy to reverse the encryption and get the password). This should be used only when applications use protocols that require the user’s password for authentication, such as the Challenge-Handshake Authentication Protocol (CHAP).
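The complexity rule in the list above is simple enough to express directly. This sketch checks a candidate password against a minimum length and the “three of four character groups” requirement as the text describes it. The minimum length of 8 is an assumed value, and the function name is hypothetical. The actual Windows policy applies additional checks that this sketch omits.

```python
import string

MIN_LENGTH = 8  # Assumed value; match it to your "Minimum password length" setting.


def meets_complexity(password: str, min_length: int = MIN_LENGTH) -> bool:
    """Check minimum length plus at least three of the four character groups."""
    if len(password) < min_length:
        return False
    groups = [
        any(c in string.ascii_uppercase for c in password),  # A through Z
        any(c in string.ascii_lowercase for c in password),  # a through z
        any(c in string.digits for c in password),           # 0 through 9
        any(not c.isalnum() for c in password),              # symbols such as ! $ # %
    ]
    return sum(groups) >= 3


print(meets_complexity("password"))   # False: only lowercase letters
print(meets_complexity("Passw0rd"))   # True: upper, lower, and a digit
```

Note that “Passw0rd” passes. Complexity rules raise the cost of brute-force guessing, but on their own they do not guarantee a password that is hard to guess.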

Calculating Unique Password Combinations

One of the primary reasons administrators require users to have longer passwords that use upper- and lowercase letters, numbers, and at least one “special” character is to help deter password-guessing attacks. One popular password-guessing technique, called a brute-force attack, uses software to guess every possible password until one matches a user’s password. Essentially, a brute-force attack tries a, then aa, then aaa, and so on, until it runs out of combinations or gets a password match. Increasing both the pool of possible characters that can be used in the password and the number of characters required in the password can dramatically increase the number of “guesses” a brute-force program needs to perform before it runs out of possibilities. For example, if our password policy requires a three-character password that uses only lowercase letters, there are only 17,576 possible passwords (with 26 possible characters, a password that’s three characters long equates to 26³ combinations). Requiring a six-character password increases that number to 308,915,776 possible passwords (26⁶). An eight-character password with upper- and lowercase letters, a special symbol, and a number increases the possible passwords to 70⁸, or over 576 trillion combinations.
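The arithmetic in this paragraph is the pool size raised to the password length. The short sketch below reproduces the three figures quoted in the paragraph. The pool of 70 characters for the last case is taken from the text; 26 uppercase plus 26 lowercase plus 10 digits leaves 8 symbols in that pool. Because a brute-force tool that starts at “a” also tries every shorter length, `total_up_to` (a hypothetical helper) sums over all lengths up to the maximum.

```python
def keyspace(pool_size: int, length: int) -> int:
    """Number of distinct passwords of exactly `length` characters."""
    return pool_size ** length


def total_up_to(pool_size: int, max_length: int) -> int:
    """Guesses needed to exhaust every length from 1 through `max_length`."""
    return sum(pool_size ** k for k in range(1, max_length + 1))


cases = [
    ("3 chars, lowercase only", 26, 3),
    ("6 chars, lowercase only", 26, 6),
    ("8 chars, 70-character pool", 70, 8),
]
for label, pool, length in cases:
    print(f"{label}: {keyspace(pool, length):,}")
# 3 chars, lowercase only: 17,576
# 6 chars, lowercase only: 308,915,776
# 8 chars, 70-character pool: 576,480,100,000,000
```

Adding one character multiplies the keyspace by the pool size, while enlarging the pool raises every term. Length is therefore usually the more powerful lever.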

Precomputed hashes in rainbow tables can also be used to brute-force past shorter passwords. As the length increases, so does the size of the rainbow table.

Domains are logical groups of computers that share a central directory database, known as the Active Directory database for the more recent Windows operating systems. The database contains information about the user accounts and security information for all resources identified within the domain. Each user within the domain is assigned their own unique account (that is, a domain is not a single account shared by multiple users), which is then assigned access to specific resources within the domain. In operating systems that provide domain capabilities, the password policy is set in the root container for the domain and applies to all users within that domain. Setting a password policy for a domain is similar to setting other password policies in that the same critical elements need to be considered (password length, complexity, life, and so on). If a change to one of these elements is desired for a group of users, a new domain needs to be created because the domain is considered a security boundary. In a Windows operating system that employs Active Directory, the domain password policy can be set in the Active Directory Users and Computers menu in the Administrative Tools section of the Control Panel.

Single Sign-On

To use a system, users must be able to access it, which they usually do by supplying their user IDs (or usernames) and corresponding passwords. As any security administrator knows, the more systems a particular user has access to, the more passwords that user must have and remember. The natural tendency for users is to select passwords that are easy to remember, or even the same password for use on the multiple systems they access. Wouldn’t it be easier for the user simply to log in once and have to remember only a single, good password? This is made possible with a technology called single sign-on.

Single sign-on (SSO) is a form of authentication that involves the transferring of credentials between systems. As more and more systems are combined in daily use, users are forced to have multiple sets of credentials. A user may have to log into three, four, five, or even more systems every day just to do their job. Single sign-on allows a user to transfer their credentials so that logging into one system acts to log them into all of the systems. Once the user has entered a user ID and password, the single sign-on system passes these credentials transparently to other systems so that repeated logons are not required. Put simply, you supply the right username and password once and you have access to all the applications and data you need, without having to log in multiple times and remember many different passwords. From a user standpoint, SSO means you need to remember only one username and one password. From an administration standpoint, SSO can be easier to manage and maintain. From a security standpoint, SSO can be even more secure, as users who need to remember only one password are less likely to choose something too simple or something so complex they need to write it down. The following is a logical depiction of the SSO process (see Figure 11.5):

• Figure 11.5 Single sign-on process

The CompTIA Security+ exam will very likely contain questions regarding single sign-on because it is such a prevalent topic and a very common approach to multisystem authentication.

1. The user signs in once, providing a username and password to the SSO server.

2. The SSO server provides authentication information to any resource the user accesses during that session. The server interfaces with the other applications and systems—the user does not need to log into each system individually.
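One common way to realize step 2 is for the SSO server to issue a signed, time-limited token that each resource verifies instead of asking for the password again. The sketch below illustrates that idea with an HMAC key shared between the SSO server and the resources. The token format, `SECRET_KEY`, the lifetime, and the function names are all hypothetical. Production systems use established protocols such as Kerberos tickets or SAML assertions rather than a home-grown format like this.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical key shared by the SSO server and every participating resource.
SECRET_KEY = b"demo-only-shared-secret"
TOKEN_LIFETIME = 8 * 60 * 60  # Assumed 8-hour session lifetime, in seconds.


def _sign(payload: bytes) -> str:
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def issue_token(username: str, now: Optional[float] = None) -> str:
    """Step 1: after checking the password once, the SSO server issues a token."""
    issued = int(time.time() if now is None else now)
    payload = f"{username}|{issued}".encode()
    return base64.urlsafe_b64encode(payload).decode() + "." + _sign(payload)


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Step 2: a resource checks the token instead of prompting for a password.

    Returns the username if the signature is valid and the token is unexpired,
    otherwise None.
    """
    try:
        encoded, sig = token.rsplit(".", 1)
        payload = base64.urlsafe_b64decode(encoded.encode())
        username, issued = payload.decode().rsplit("|", 1)
        issued_at = int(issued)
    except ValueError:  # malformed token: bad split, base64, UTF-8, or integer
        return None
    if not hmac.compare_digest(sig.encode(), _sign(payload).encode()):
        return None
    current = time.time() if now is None else now
    if current - issued_at > TOKEN_LIFETIME:
        return None
    return username


token = issue_token("sjenkins")
print(verify_token(token))  # sjenkins
```

The resource never sees the user’s password, only proof that the SSO server vouched for the user. This also shows why the SSO server is a high-value target: anyone who obtains `SECRET_KEY` could mint tokens for any user.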

Heartbleed

In 2014, a vulnerability that could cause user credentials to be exposed was discovered in millions of systems. Called Heartbleed, it resulted in numerous users being told to change their passwords because of potential compromise. Users were also warned of the dangers of reusing passwords across different accounts. Although reuse makes passwords easier to remember, it also means a password exposed on one system can be tried against the user’s other accounts. What made protecting passwords particularly challenging is that the flaw was widespread, because the vulnerable OpenSSL library was widely deployed, particularly on Linux-based servers. The patching rate was also uneven, so people could suffer multiple exposures over time. After one year, an estimated 40 percent of all affected systems remained unpatched. This highlights the importance of not reusing passwords across multiple accounts.

In reality, SSO is usually a little more difficult to implement than vendors would lead you to believe. To be effective and useful, all your applications need to be able to access and use the authentication provided by the SSO process. The more diverse your network, the less likely this is to be the case. If your network, like most, contains different operating systems, custom applications, and a diverse user base, SSO may not even be a viable option.

Security Controls and Permissions

If multiple users share a computer system, the system administrator likely needs to control who is allowed to do what when it comes to viewing, using, or changing system resources. Although operating systems vary in how they implement these types of controls, most operating systems use the concepts of permissions and rights to control and safeguard access to resources. As we discussed earlier, permissions control what a user is allowed to do with objects on a system, and rights define the actions a user can perform on the system itself. Let’s examine how the Windows operating systems implement this concept.

The Windows operating systems use the concepts of permissions and rights to control access to files, folders, and information resources. When using the NTFS file system, administrators can grant users and groups permission to perform certain tasks as they relate to files, folders, and Registry keys. The basic categories of NTFS permissions are as follows:

Permissions can be applied to a specific user or group to control that user or group’s ability to view, modify, access, use, or delete resources such as folders and files.

Full Control A user/group can change permissions on the folder/file, take ownership if someone else owns the folder/file, delete subfolders and files, and perform actions permitted by all other NTFS folder permissions.

Modify A user/group can view and modify files/folders and their properties, can delete and add files/folders, and can delete properties from or add properties to a file/folder.

Read & Execute A user/group can view the file/folder and can execute scripts and executables, but they cannot make any changes (files/folders are read-only).

List Folder Contents A user/group can list only what is inside the folder (applies to folders only).

Read A user/group can view the contents of the file/folder and the file/folder properties.

Write A user/group can write to the file or folder.
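One way to see how the basic NTFS permissions nest is to model them as combinations of simpler rights. The sketch below uses Python’s `enum.Flag`. The building-block flags and their bit values are a simplified, hypothetical model chosen for illustration, not the access-mask values Windows actually stores. The model also ignores the folder-versus-file inheritance difference between Read & Execute and List Folder Contents.

```python
from enum import Flag


class Perm(Flag):
    """Simplified, hypothetical model of NTFS basic permissions (not real access-mask bits)."""
    READ = 1             # view contents and properties
    LIST = 2             # list what is inside a folder
    EXECUTE = 4          # run scripts and executables
    WRITE = 8            # write to the file or folder
    DELETE = 16          # delete files and subfolders
    CHANGE_PERMS = 32    # change permissions on the object
    TAKE_OWNERSHIP = 64  # take ownership of the object

    # Composite basic permissions, each a superset of the one before it.
    READ_AND_EXECUTE = READ | LIST | EXECUTE
    MODIFY = READ_AND_EXECUTE | WRITE | DELETE
    FULL_CONTROL = MODIFY | CHANGE_PERMS | TAKE_OWNERSHIP


def allows(granted: Perm, needed: Perm) -> bool:
    """True if every right in `needed` is also present in `granted`."""
    return (granted & needed) == needed


print(allows(Perm.MODIFY, Perm.READ_AND_EXECUTE))  # True
print(allows(Perm.READ_AND_EXECUTE, Perm.WRITE))   # False
print(allows(Perm.MODIFY, Perm.CHANGE_PERMS))      # False
```

The last line reflects the list above: only Full Control adds the ability to change permissions and take ownership.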

Figure 11.6 shows the permissions on a folder called Data from a Windows Server system. In the top half of the Permissions window are the users and groups that have permissions for this folder. In the bottom half of the window are the permissions assigned to the highlighted user or group.

• Figure 11.6 Permissions for the Data folder

The Windows operating system also uses user rights or privileges to determine what actions a user or group is allowed to perform or access. These user rights are typically assigned to groups, as it is easier to deal with a few groups than to assign rights to individual users, and they are usually defined in either a group or a local security policy. The list of user rights is quite extensive, but here are a few examples of user rights:

Log on locally Users/groups can attempt to log onto the local system itself.

Access this computer from the network Users/groups can attempt to access this system through the network connection.

Manage auditing and security log Users/groups can view, modify, and delete auditing and security log information.

Rights tend to be actions that deal with accessing the system itself, process control, logging, and so on. Figure 11.7 shows the user rights contained in the local security policy on a Windows system.

• Figure 11.7 User Rights Assignment options from Windows Local Security Policy

Although it is very important to get security settings “right the first time,” it is just as important to perform routine audits of security settings such as user accounts, group memberships, file permissions, and so on.

Folders and files are not the only things that can be safeguarded or controlled using permissions. Even access and use of peripherals such as printers can be controlled using permissions. Figure 11.8 shows the Security tab from a printer attached to a Windows system. Permissions can be assigned to control who can print to the printer, who can manage documents and print jobs sent to the printer, and who can manage the printer itself. With this type of granular control, administrators have a great deal of control over how system resources are used and who uses them.

• Figure 11.8 Security tab showing printer permissions in Windows

A very important concept to consider when assigning rights and privileges to users is the concept of least privilege. Least privilege requires that users be given the absolute minimum number of rights and privileges required to perform their authorized duties. For example, if a user does not need the ability to install software on their own desktop to perform their job, then don’t give them that ability. This reduces the likelihood the user will load malware, insecure software, or unauthorized applications onto their system.
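A least-privilege review often comes down to comparing what an account has been granted with what its duties require. This minimal sketch uses hypothetical right names and flags any excess so it can be removed. Deciding what a job actually requires is the hard part, and it is assumed to be known here.

```python
def excess_privileges(granted: set[str], required: set[str]) -> set[str]:
    """Rights that were granted but are not needed for the user's duties."""
    return granted - required


# Hypothetical desktop user who does not need to install software.
granted = {"log_on_locally", "access_from_network", "install_software"}
required = {"log_on_locally", "access_from_network"}
print(excess_privileges(granted, required))  # {'install_software'}
```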

Access Control Lists

The term access control list (ACL) is used in more than one manner in the field of computer security. When discussing routers and firewalls, an ACL is a set of rules used to control traffic flow into or out of an interface or network. When discussing system resources, such as files and folders, an ACL lists permissions attached to an object—who is allowed to view, modify, move, or delete that object.

To illustrate this concept, consider an example. Figure 11.9 shows the access control list (permissions) for the Data folder. The user identified as Billy Williams has Read & Execute, List Folder Contents, and Read permissions, meaning this user can open the folder, see what’s in the folder, and so on. Figure 11.10 shows the permissions for a user identified as Leah Jones, who has only Read permissions on the same folder.

• Figure 11.9 Permissions for Billy Williams on the Data folder

• Figure 11.10 Permissions for Leah Jones on the Data folder

In computer systems and networks, access controls can be implemented in several ways. An access control matrix provides the simplest framework for illustrating the process. An example of an access control matrix is provided in Table 11.1. In this matrix, the system is keeping track of two processes, two files, and one hardware device. Process 1 can read both File 1 and File 2 but can write only to File 1. Process 1 cannot access Process 2, but Process 2 can execute Process 1. Both processes have the ability to write to the printer.

Table 11.1 An Access Control Matrix

Although simple to understand, the access control matrix is seldom used in computer systems because it is extremely costly in terms of storage space and processing. Imagine the size of an access control matrix for a large network with hundreds of users and thousands of files.
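The sketch below shows why real systems avoid storing the full matrix. It encodes only the cells that the Table 11.1 description states. The text does not say what Process 2 may do with the files, so those cells are left out. It then reads the matrix one column at a time. Each column is exactly the ACL attached to one object. Because a missing cell simply means "no access," storing only the non-empty cells is what keeps ACLs compact. The dictionary layout is an illustrative assumption, not how any particular operating system stores permissions.

```python
# Access control matrix from the Table 11.1 description.
# Rows are subjects, columns are objects; empty cells ("no access") are omitted.
MATRIX: dict[str, dict[str, set[str]]] = {
    "Process 1": {
        "File 1": {"read", "write"},
        "File 2": {"read"},
        "Printer": {"write"},
        # No "Process 2" entry: Process 1 cannot access Process 2.
    },
    "Process 2": {
        "Process 1": {"execute"},
        "Printer": {"write"},
        # Process 2's file access is not described, so it is left out.
    },
}


def can(subject: str, right: str, obj: str) -> bool:
    """Look up a single cell; a missing cell means no access."""
    return right in MATRIX.get(subject, {}).get(obj, set())


def acl_for(obj: str) -> dict[str, set[str]]:
    """Return one column of the matrix, i.e., the ACL attached to `obj`."""
    return {subj: rights[obj] for subj, rights in MATRIX.items() if obj in rights}
```

For example, `acl_for("Printer")` yields both processes with write access. Storing per-object ACLs like this costs space only for the permissions that actually exist, not for every subject/object pair.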

Mandatory Access Control (MAC)

Mandatory access control (MAC) is the process of controlling access to information based on the sensitivity of that information and whether or not the user is operating at the appropriate sensitivity level and has the authority to access that information. Under a MAC system, each piece of information and every system resource (files, devices, networks, and so on) is labeled with its sensitivity level (such as Public, Engineering Private, Jones Secret, and so on). Users are assigned a clearance level that sets the upper boundary of the information and devices that they are allowed to access.

Mandatory access control restricts access based on the sensitivity of the information and whether or not the user has the authority to access that information.

The access control and sensitivity labels are required in a MAC system. Labels are defined and then assigned to users and resources. Users must then operate within their assigned sensitivity and clearance levels; they don't have the option to modify their own sensitivity levels or the levels of the information resources they create. Due to the complexity involved, MAC is typically run only on systems where security is a top priority, using platforms such as Trusted Solaris, TrustedBSD, and SELinux.

MAC Objective

Mandatory access controls are often mentioned in discussions of multilevel security. For multilevel security to be implemented, a mechanism must be present to identify the classification of all users and files. A file identified as Top Secret (that is, it has a label indicating that it is “Top Secret”) may be viewed only by individuals with a Top Secret clearance. For this control mechanism to work reliably, all files must be marked with appropriate controls and all user access must be checked. This is the primary goal of MAC.

Figure 11.11 illustrates MAC in operation. The information resource on the left has been labeled “Engineering Secret,” meaning only users in the Engineering group operating at the Secret sensitivity level or above can access that resource. The top user is operating at the Secret level but is not a member of Engineering and is denied access to the resource. The middle user is a member of Engineering but is operating at a Public sensitivity level and is therefore denied access to the resource. The bottom user is a member of Engineering, is operating at a Secret sensitivity level, and is allowed to access the information resource.

• Figure 11.11 Logical representation of mandatory access control
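The decision shown in Figure 11.11 can be sketched as a label comparison. A subject may access an object only if two conditions hold. First, the subject's operating level is at or above the object's level. Second, the subject holds every compartment (such as Engineering) on the object's label. The numeric ordering of levels here is an assumption chosen for illustration. The sketch covers read access only; formal MAC models also constrain writes.

```python
from dataclasses import dataclass

# Hypothetical ordering of sensitivity levels; only the relative order matters.
LEVELS = {"Public": 0, "Confidential": 1, "Secret": 2, "Top Secret": 3}


@dataclass(frozen=True)
class Label:
    level: str
    compartments: frozenset[str]


def may_read(subject: Label, obj: Label) -> bool:
    """True if a subject operating at `subject` may read an object labeled `obj`."""
    level_ok = LEVELS[subject.level] >= LEVELS[obj.level]
    compartments_ok = obj.compartments <= subject.compartments  # subset check
    return level_ok and compartments_ok


# The three users from Figure 11.11 against an "Engineering Secret" resource.
resource = Label("Secret", frozenset({"Engineering"}))
top_user = Label("Secret", frozenset())                    # not in Engineering
middle_user = Label("Public", frozenset({"Engineering"}))  # level too low
bottom_user = Label("Secret", frozenset({"Engineering"}))  # allowed
```

Users cannot change these labels themselves. That is what makes the control mandatory rather than discretionary.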

Discretionary Access Control (DAC)

Discretionary access control (DAC) is the process of using file permissions and optional ACLs to restrict access to information based on a user’s identity or group membership. DAC is the most common access control system and is commonly used in both UNIX and Windows operating systems. The “discretionary” part of DAC means that a file or resource owner has the ability to change the permissions on that file or resource.

Multilevel Security

In the U.S. government, the following security labels are used to classify information and information resources for MAC systems:

Top Secret: The highest security level, defined as information whose disclosure could cause “exceptionally grave damage” to national security.

Secret: The second-highest level, defined as information whose disclosure could cause “serious damage” to national security.

Confidential: The lowest level of classified information, defined as information whose disclosure could cause “damage” to national security.

For Official Use Only: Information that is unclassified but not releasable to the public or unauthorized parties. Sometimes called Sensitive But Unclassified (SBU).

Unclassified: Not an official classification level.

The labels work in a top-down fashion so that an individual holding a Secret clearance would have access to information at the Secret, Confidential, and Unclassified levels. An individual with a Secret clearance would not have access to Top Secret resources because that label is above the highest level of the individual’s clearance.

Under UNIX operating systems, file permissions consist of three distinct parts:

Owner permissions (read, write, and execute): Apply to the owner of the file.

Group permissions (read, write, and execute): Apply to members of the group assigned to the file, which by default is typically the group of the user who created it.

World permissions (read, write, and execute): Apply to anyone who is neither the owner nor a member of the file's group.

For example, suppose a file called secretdata has been created by the owner of the file, Luke, who is part of the Engineering group. The owner permissions on the file would reflect Luke’s access to the file (as the owner). The group permissions would reflect the access granted to anyone who is part of the Engineering group. The world permissions would represent the access granted to anyone who is not Luke and is not part of the Engineering group.

In UNIX, a file’s permissions are usually displayed as a series of nine characters, with the first three characters representing the owner’s permissions, the second three characters representing the group permissions, and the last three characters representing the permissions for everyone else (or for the world). This concept is illustrated in Figure 11.12.

• Figure 11.12 Discretionary file permissions in the UNIX environment

Suppose the file secretdata is owned by Luke with group permissions for Engineering (because Luke is part of the Engineering group), and the permissions on that file are rwx, rw-, and ---, as shown in Figure 11.12. This would mean the following:

Luke can read, write, and execute the file (rwx).

Members of the Engineering group can read and write the file but not execute it (rw-).

The world has no access to the file and can’t read, write, or execute it (---).
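The following sketch decodes a nine-character permission string such as `rwxrw----` into its three classes. It also computes the familiar octal form (760 for the secretdata example). It then evaluates a request the way classic UNIX does. Only the first matching class applies: owner, then the file's group, then world. This means an owner whose triad is empty is denied even if the group triad would allow access. The user names other than Luke are hypothetical. Special bits (setuid, setgid, sticky) and root's override are not modeled.

```python
BITS = {"r": 4, "w": 2, "x": 1, "-": 0}
NAMES = {"r": "read", "w": "write", "x": "execute"}


def parse_mode(perms: str) -> dict[str, set[str]]:
    """Split e.g. 'rwxrw----' into owner, group, and world permission sets."""
    if len(perms) != 9:
        raise ValueError("expected nine permission characters")
    return {
        cls: {NAMES[c] for c in perms[3 * i:3 * i + 3] if c != "-"}
        for i, cls in enumerate(("owner", "group", "world"))
    }


def to_octal(perms: str) -> str:
    """Convert each rwx triad to one octal digit: r=4, w=2, x=1."""
    return "".join(str(sum(BITS[c] for c in perms[i:i + 3])) for i in (0, 3, 6))


def allowed(perms: str, user: str, user_groups: set[str],
            owner: str, file_group: str, action: str) -> bool:
    """Apply only the first matching class, as traditional UNIX does."""
    classes = parse_mode(perms)
    if user == owner:
        cls = "owner"
    elif file_group in user_groups:
        cls = "group"
    else:
        cls = "world"
    return action in classes[cls]
```

With `rwxrw----`, Luke can execute the file, another Engineering member can write but not execute it, and a user outside Engineering cannot even read it.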

Discretionary access control restricts access based on the user’s identity or group membership.

Remember that under the DAC model, the file’s owner, Luke, can change the file’s permissions any time he wants.
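The owner's discretion can be seen with Python's standard `os.chmod`, which the file's owner (or root) may call on a POSIX system. `stat.filemode` renders the result in the same style as `ls -l`. The sketch uses a throwaway temporary file. On Windows, `os.chmod` only toggles the read-only flag, so this example assumes a UNIX-like system.

```python
import os
import stat
import tempfile


def owner_sets_mode(path: str, mode: int) -> str:
    """Change a file's permissions (owner or root only) and return the ls-style string."""
    os.chmod(path, mode)
    return stat.filemode(os.stat(path).st_mode)


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile() as f:
        print(owner_sets_mode(f.name, 0o760))  # -rwxrw----  (the secretdata example)
        print(owner_sets_mode(f.name, 0o700))  # -rwx------  (owner revokes group access)
```

No administrator is involved in either change, which is the defining trait of DAC.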

Role-Based Access Control (RBAC)

Access control lists can be cumbersome and can take time to administer properly. Role-based access control (RBAC) is the process of managing access and privileges based on the user’s assigned roles. RBAC is the access control model that most closely resembles an organization’s structure. In this scheme, instead of each user being assigned specific access permissions for the objects associated with the computer system or network, that user is assigned a set of roles that the user may perform. The roles are in turn assigned the access permissions necessary to perform the tasks associated with the role. Users will thus be granted permissions to objects in terms of the specific duties they must perform—not just because of a security classification associated with individual objects.

As defined by the “Orange Book,” a Department of Defense document (in the “rainbow series”) that at one time was the standard for describing what constituted a trusted computing system, a discretionary access control (DAC) is “a means of restricting access to objects based on the identity of subjects and/or groups to which they belong. The controls are discretionary in the sense that a subject with a certain access permission is capable of passing that permission (perhaps indirectly) on to any other subject (unless restrained by mandatory access control).”

Under RBAC, you must first determine the activities that must be performed and the resources that must be accessed by specific roles. For example, the role of “securityadmin” in Microsoft SQL Server must be able to create and manage logins, read error logs, and audit the application. Once all the roles are created and the rights and privileges associated with those roles are determined, users can then be assigned one or more roles based on their job functions. When a role is assigned to a specific user, the user gets all the rights and privileges assigned to that role.
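A minimal sketch of the RBAC steps follows. Permissions are attached to roles, users are assigned roles, and a user's effective rights are the union of the rights of every assigned role. The role contents and permission strings are hypothetical illustrations. They are not SQL Server's actual permission names.

```python
# Hypothetical role definitions (step 1: decide what each role must be able to do).
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "securityadmin": {"create_login", "manage_login", "read_error_log", "audit"},
    "backup_operator": {"run_backup", "read_error_log"},
}

# Step 2: assign users one or more roles based on their job functions.
USER_ROLES: dict[str, set[str]] = {
    "alice": {"securityadmin"},
    "bob": {"backup_operator"},
}


def permissions_for(user: str) -> set[str]:
    """A user receives every permission of every role assigned to them."""
    perms: set[str] = set()
    for role in USER_ROLES.get(user, set()):
        perms |= ROLE_PERMISSIONS.get(role, set())
    return perms


def check(user: str, permission: str) -> bool:
    return permission in permissions_for(user)
```

When Bob moves into a security job, an administrator changes only his role assignment. No individual object permissions have to be touched.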

Role-based and rule-based access control can both be abbreviated as RBAC. Standard convention is for RBAC to be used to denote role-based access control. A seldom-seen acronym for rule-based access control is RB-RBAC. Role-based focuses on the user’s role (administrator, backup operator, and so on). Rule-based focuses on predefined criteria such as time of day (users can only log in between 8 A.M. and 6 P.M.) or type of network traffic (web traffic is allowed to leave the organization).

Unfortunately, in reality, administrators often find themselves working in organizations where users hold multiple roles or even have access to multiple accounts (a situation quite common in smaller organizations). Users with multiple accounts tend to select the same or similar passwords for those accounts, which increases the chance that one compromised account leads to the compromise of the user's other accounts. Where possible, administrators should first eliminate shared or additional accounts for users and then examine the possibility of combining roles or privileges to reduce the “account footprint” of individual users.

Rule-Based Access Control

Rule-based access control is yet another method of managing access and privileges (and unfortunately shares the same acronym as role-based access control). In this method, access is either allowed or denied based on a set of predefined rules. Each object has an associated ACL (much like DAC), and when a particular user or group attempts to access the object, the appropriate rule is applied.

The CompTIA Security+ exam will very likely expect you to be able to differentiate between the four major forms of access control discussed here: mandatory access control, discretionary access control, role-based access control, and rule-based access control.

A good example for rule-based access control is permitted logon hours. Many operating systems give administrators the ability to control the hours during which users can log in. For example, a bank might allow its employees to log in only between the hours of 8 A.M. and 6 P.M., Monday through Saturday. If a user attempts to log in outside of these hours (3 A.M. on Sunday, for example), then the rule will reject the login attempt regardless of whether the user supplies valid login credentials.
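The bank's logon-hours rule can be sketched as a check that runs alongside credential validation. The login is rejected outside the window even when the credentials are valid. The sketch treats 6 P.M. as the end of the window (exclusive). That boundary is an assumption, since the text does not say whether 6:00 P.M. itself is allowed.

```python
from datetime import datetime, time

# Rule from the bank example: 8 A.M. to 6 P.M., Monday (0) through Saturday (5).
ALLOWED_WEEKDAYS = range(0, 6)
START, END = time(8, 0), time(18, 0)  # END treated as exclusive (assumption)


def within_logon_hours(when: datetime) -> bool:
    return when.weekday() in ALLOWED_WEEKDAYS and START <= when.time() < END


def login_permitted(when: datetime, credentials_valid: bool) -> bool:
    """The time rule is applied regardless of whether the credentials are valid."""
    return credentials_valid and within_logon_hours(when)
```

For example, a login at 3 A.M. on Sunday, January 7, 2024, is rejected even with the correct password.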

Attribute-Based Access Control (ABAC)

Attribute-based access control (ABAC) is a newer access control scheme based on the use of attributes associated with an identity. ABAC can use any type of attribute (user attributes, resource attributes, environment attributes, and so on), such as location, time, the activity being requested, and user credentials. For example, a doctor could be granted access to the records of one patient while being denied access to the records of a different patient. ABAC policies can be expressed in the eXtensible Access Control Markup Language (XACML), a standard that implements attribute- and policy-based access control schemes.
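The doctor example can be sketched as a policy function. The function evaluates subject, resource, action, and environment attributes together. It denies by default when no policy permits the request. The attribute names, and the requirement that the request come from the hospital network, are hypothetical. The Permit/Deny strings only loosely echo XACML's decisions; this is plain Python, not XACML.

```python
from dataclasses import dataclass


@dataclass
class Request:
    subject: dict       # e.g., role, assigned patients
    resource: dict      # e.g., record type, patient ID
    action: str
    environment: dict   # e.g., network location, time


def doctor_reads_own_patient(req: Request) -> bool:
    """Hypothetical policy: doctors may read records of patients assigned to them."""
    return (
        req.subject.get("role") == "doctor"
        and req.action == "read"
        and req.resource.get("type") == "medical_record"
        and req.resource.get("patient_id") in req.subject.get("assigned_patients", set())
        and req.environment.get("location") == "hospital_network"
    )


def decide(req: Request, policies) -> str:
    """Deny unless at least one policy permits the request."""
    return "Permit" if any(policy(req) for policy in policies) else "Deny"
```

The same doctor gets different answers for different patients, or for the same patient from a different location. A role alone cannot express that distinction.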

Account Policies

One of the key elements that guides security professionals in their daily tasks is a good set of policies. Many issues arise in daily work, and leaving too many decisions up to individual workers will quickly produce inconsistent results. Policies are needed for a wide range of elements, from naming conventions to operating rules such as audit frequency. Resolving these issues as a matter of policy lets security professionals focus on verifying and monitoring systems, rather than adjudicating policy questions case by case as each one comes along.

Account Policy Enforcement

The primary method of account policy enforcement used in most access systems is still based on passwords. Two concepts form the foundation of a solid account policy: every user ID is traceable to a single person's activity, and passwords and credentials are never shared. Passwords need to be managed to provide appropriate levels of protection. They must be strong enough to resist attack, yet not so difficult that users cannot remember them. A password policy helps ensure that both users and the password infrastructure take the steps necessary for a secure password solution.
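The password infrastructure's side of such a policy can be sketched as a check run when a password is set. The thresholds below are hypothetical placeholders; real values come from the organization's policy. Some current guidance, such as NIST SP 800-63B, favors length and screening against known-compromised passwords over character-class composition rules, so treat the class check as one option rather than a recommendation.

```python
import string

# Hypothetical policy values; substitute the organization's actual policy.
MIN_LENGTH = 12
REQUIRED_CLASSES = 3  # out of: lowercase, uppercase, digits, symbols


def policy_violations(password: str, user_id: str) -> list[str]:
    """Return a list of policy problems; an empty list means the password passes."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    classes_used = sum([
        any(c in string.ascii_lowercase for c in password),
        any(c in string.ascii_uppercase for c in password),
        any(c in string.digits for c in password),
        any(c in string.punctuation for c in password),
    ])
    if classes_used < REQUIRED_CLASSES:
        problems.append(f"uses fewer than {REQUIRED_CLASSES} character classes")
    if user_id.lower() in password.lower():
        problems.append("contains the user ID")
    return problems
```

Returning every violation at once, rather than stopping at the first, lets users fix a rejected password in one attempt.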

Password Policies

Password policies, along with many other important security policies, are covered in detail in Chapter 3.

Credential Management

Credential management refers to the processes, services, and software used to store, manage, and log the use of user credentials. Credential management solutions are typically aimed at helping end users manage their growing set of passwords. Some credential management products provide a secure means of storing user credentials and making them available across a wide range of platforms, from local stores to cloud storage locations.

Group Policy

Microsoft Windows systems in an enterprise environment can be managed via group policy objects (GPOs). GPOs act through a set of registry settings that can be managed centrally across the enterprise. A wide range of settings can be managed via GPOs, many of them security related, including user credential settings such as password rules.

Standard Naming Convention

Agreeing on a standard naming convention is one of the topics that can spark controversy even among professionals who agree on most things. A standard naming convention has the advantage of letting users extract meaning from a name. Having servers with “dev,” “test,” and “prod” as part of their names can prevent a user from inadvertently changing an asset they have misidentified. By the same token, calling out privileges in names (say, appending “SA” to the usernames of accounts with system administrator privileges) creates two potential problems. First, it alerts adversaries to which accounts are the most valuable. Second, when the person is no longer a member of the system administrators group, the account must be renamed.

One point everyone does agree on is leaving room for the future. The simplest example is account numbering. For instance, an e-mail naming scheme might use the first initial plus the last name, plus a digit if that combination is already taken. Will you ever have more than 10 John Smiths? You might be surprised, because Joan Smiths and Jack Smiths draw from the same pool. The pool is further depleted because old accounts are inactivated, not reused. So design any naming scheme with plenty of room for growth.
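The scheme above can be sketched as a username generator. Two design choices follow directly from the text. First, the set of taken names must include inactive accounts, because old IDs are not reused. Second, the suffix is an unbounded number rather than a single digit, which leaves room for an eleventh J. Smith. The function name and the lowercase convention are assumptions for illustration.

```python
def next_username(first: str, last: str, taken: set[str]) -> str:
    """First initial + last name, plus a numeric suffix if the name is taken.

    `taken` must contain active AND inactive accounts, since IDs are never reused.
    The suffix is unbounded rather than a single digit, leaving room to grow.
    """
    base = (first[0] + last).lower()
    if base not in taken:
        return base
    n = 1
    while f"{base}{n}" in taken:
        n += 1
    return f"{base}{n}"
```

Joan Smith collides with John Smith's `jsmith` and receives `jsmith1`. Once `jsmith1` through `jsmith9` are used (even by inactive accounts), the next J. Smith receives `jsmith10` instead of breaking the scheme.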

Account Maintenance

Account maintenance is not the sexiest job in the security field. But then again, traffic cops have boring lives as well, until you realize that roughly half of all felons are arrested on simple traffic stops. The same is true with account maintenance: no, we aren't catching felons, but we do find errors that increase risk and that, by their nature, are hard to catch any other way. Account maintenance is the routine screening of all attributes of an account. Is the business purpose for the account still valid; that is, is the user still employed? Is the business process for a system account still occurring? Are the actual permissions associated with the account appropriate for the account holder? Best practice is to perform these reviews at a frequency matched to the risk associated with the account's profile. System administrators and other privileged accounts need greater scrutiny than normal users. Shared accounts, such as guest accounts, also require scrutiny to ensure they are not abused.

For some high-risk situations, such as unauthenticated guest accounts being granted administrator privilege, an automated check can be programmed and run on a regular basis. In Active Directory, it is also possible for the security group to be notified any time a user is granted domain admin privilege. And it is also important to note that the job of determining who has what access is actually one that belongs to the business, not the security group. The business side of the house is where the policy decision on who should have access is determined. The security group merely takes the steps to enforce this decision. Account maintenance is a joint responsibility.
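An automated check of the kind described can be sketched as a scan over an account export. The `Account` fields and the privileged group names are hypothetical; in practice the data would come from a directory query. The sketch flags two conditions: a guest account that holds administrator privilege, and an account whose holder is no longer employed. Deciding who *should* have access remains the business's call. The script only surfaces discrepancies.

```python
from dataclasses import dataclass, field

# Hypothetical set of groups that confer administrator privilege.
PRIVILEGED_GROUPS = {"Administrators", "Domain Admins"}


@dataclass
class Account:
    name: str
    groups: set[str] = field(default_factory=set)
    is_guest: bool = False
    employed: bool = True


def audit(accounts: list[Account]) -> list[str]:
    """Return human-readable findings for high-risk account conditions."""
    findings = []
    for a in accounts:
        if a.is_guest and a.groups & PRIVILEGED_GROUPS:
            findings.append(f"{a.name}: guest account holds admin privilege")
        if not a.is_guest and not a.employed:
            findings.append(f"{a.name}: account holder is no longer employed")
    return findings
```

Run on a schedule, a script like this turns a rare but dangerous misconfiguration into a routine alert.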

Usage Auditing and Review

As with all security controls used to mitigate risk, monitoring is an important component. Logs are the most frequently used monitoring tool, and for privileged accounts, logging can be especially important. Usage auditing and review is just that: an examination of logs to determine user activity. Reviewing access control logs for root-level accounts is an important element of securing access control methods. Because of their power and potential for misuse, administrative or root-level accounts should be closely monitored. For example, continuous monitoring of production systems should flag any use of an administrative-level account on a production system.

Logging and monitoring of failed login attempts provides valuable information during investigations of compromises.

A strong configuration management environment will include the control of access to production systems by users who can change the environment. Root-level changes in a system tend to be significant changes, and in production systems these changes would be approved in advance. A comparison of all root-level activity against approved changes will assist in the detection of activity that is unauthorized.
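The comparison of root-level activity against approved changes can be sketched as follows. The sketch assumes, hypothetically, that each root-level log entry records the host and a change-ticket ID cited by the administrator. Approved changes are modeled as ticket IDs mapped to the hosts they cover. Any event without a ticket, or on a host the ticket does not cover, is flagged for review.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RootEvent:
    host: str
    user: str
    command: str
    change_id: Optional[str]  # change ticket cited by the administrator, if any


def unapproved(events: list[RootEvent], approved: dict[str, set[str]]) -> list[RootEvent]:
    """Return root-level events not covered by an approved change on that host."""
    return [
        e for e in events
        if e.change_id is None or e.host not in approved.get(e.change_id, set())
    ]
```

Given an approval for `CHG-1001` on `db01` only, the same ticket cited on `db02`, or a root command with no ticket at all, would both be flagged.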

Account Recertification

User accounts should be recertified periodically. Account recertification can be as simple as a check against current payroll records to ensure all users are still employed, or as intrusive as having users identify themselves again. The latter is highly intrusive but may be warranted for high-risk accounts. Recertification ensures that only users who need accounts have accounts in the system.
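The simplest form of recertification, a payroll cross-check, reduces to a set comparison. The sketch assumes a hypothetical export that maps each account name to the employee ID of its holder. Any account whose employee ID no longer appears on payroll is returned for review.

```python
def accounts_to_review(accounts: dict[str, str], payroll_ids: set[str]) -> list[str]:
    """Return (sorted) account names whose employee ID is not on current payroll.

    `accounts` maps account name -> employee ID (a hypothetical export format).
    """
    return sorted(name for name, emp_id in accounts.items() if emp_id not in payroll_ids)
```

The result is a review list, not an automatic deletion list. Some accounts, such as those for contractors, may be legitimately absent from payroll.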

Time-of-Day Restrictions

Some organizations need to tightly control certain users, groups, or even roles and limit access to certain resources to specific days and times. Most server-class operating systems enable administrators to implement time-of-day restrictions that limit when a user can log in, when certain resources can be accessed, and so on. Time-of-day restrictions are usually specified for individual accounts, as shown in Figure 11.13.

• Figure 11.13 Logon hours for Guest account

Be careful implementing time-of-day restrictions. Some operating systems give you the option of disconnecting users as soon as their “allowed login time” expires, regardless of what the user is doing at the time. The more commonly used approach is to allow currently logged-in users to stay connected but reject any login attempts that occur outside of allowed hours.

From a security perspective, time-of-day restrictions can be very useful. If a user normally accesses certain resources during normal business hours, an attempt to access these resources outside this time period (either at night or on the weekend) might indicate an attacker has gained access to or is trying to gain access to that account. Specifying time-of-day restrictions can also serve as a mechanism to enforce internal controls of critical or sensitive resources. Obviously, a drawback to enforcing time-of-day restrictions is that users can't log in outside of normal hours to “catch up” on work tasks. As with all security policies, usability and security must be balanced in this policy decision.

Account Expiration

In addition to all the other methods of controlling and restricting access, most modern operating systems allow administrators to specify the length of time an account is valid and when it “expires” or is disabled. Account expiration is the setting of an ending time for an account’s validity. This is a great method for controlling temporary accounts, or accounts for contractors or contract employees. For these accounts, the administrator can specify an expiration date; when the date is reached, the account automatically becomes locked out and cannot be logged into without administrator intervention. A related action can be taken with accounts that never expire: they can automatically be marked “inactive” and locked out if they have been unused for a specified number of days. Account expiration is similar to password expiration, in that it limits the time window of potential compromise. When an account has expired, it cannot be used unless the expiration deadline is extended.
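Both mechanisms in this paragraph can be sketched as one status function. An account with an expiration date becomes unusable on that date. An account with no expiration date is marked inactive after a period without use. The 90-day inactivity threshold is hypothetical. Treating the expiration date itself as already expired is also an assumption, since operating systems differ on that boundary.

```python
from datetime import date, timedelta
from typing import Optional

MAX_INACTIVE = timedelta(days=90)  # hypothetical inactivity threshold


def account_state(today: date, expires: Optional[date], last_login: date) -> str:
    """Return 'expired', 'inactive', or 'active' for an account."""
    if expires is not None and today >= expires:
        return "expired"   # e.g., a contractor account past its end date
    if expires is None and today - last_login > MAX_INACTIVE:
        return "inactive"  # a never-expiring account left unused too long
    return "active"
```

A contractor account set to expire on June 30, 2024, is unusable on July 1 without an administrator extending it, whatever the state of its password.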

Disabling Accounts

An administrator has several options for ending a user's access (for instance, upon termination). The best option is to disable the account but leave it in the system. This preserves account permission chains and prevents the user ID from being reused, which could otherwise cause confusion later when examining logs.

Similarly, organizations must define whether accounts are deleted or disabled when no longer needed. Deleting an account removes the account from the system permanently, whereas disabling an account leaves it in place but marks it as unusable. Many organizations disable an account for a period of time after an employee departs (30 or more days) prior to deleting the account. This prevents anyone from using the account and allows administrators to reassign files, forward mail, and “clean up” before taking any permanent actions on the account.
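The disable-then-delete practice can be sketched as a function that maps the time since departure to an action. The 30-day grace period reflects the minimum the text mentions. The function name and return strings are hypothetical. An organization following the "disable and retain" option described earlier would simply never act on the final state.

```python
from datetime import date, timedelta

GRACE_PERIOD = timedelta(days=30)  # the text says "30 or more days"


def offboarding_action(departed: date, today: date) -> str:
    """Map time since an employee's departure to the account's handling."""
    if today < departed:
        return "active"
    if today - departed < GRACE_PERIOD:
        return "disabled"  # unusable, but files can be reassigned and mail forwarded
    return "eligible for deletion"
```

During the grace period, administrators can reassign files and forward mail before any permanent action is taken.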

Preventing Data Loss or Theft

Identity theft and commercial espionage have become very large and lucrative criminal enterprises over the past decade. Hackers are no longer merely content to compromise systems and deface web sites. In many attacks performed today, hackers are after intellectual property, business plans, competitive intelligence, personal information, credit card numbers, client records, or any other information that can be sold, traded, or manipulated for profit. This has created a whole industry of technical solutions labeled data loss prevention (DLP) solutions.

DLP solutions work from the assumption that an attacker may already have assumed the identity of an authorized user, so they aim to prevent the exfiltration of data regardless of access control restrictions. DLP solutions come in many forms, each with strengths and weaknesses. The best approach combines several security elements: some that secure data in storage (encryption), some that provide monitoring (proxy devices that inspect data egress for sensitive data), and even NetFlow analytics to identify new bulk data transfer routes.
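One common content-inspection technique that an egress-monitoring device might apply is sketched below. The scanner searches outbound text for digit runs shaped like payment card numbers. It then keeps only candidates that pass the Luhn checksum, which filters out most random digit strings. This is a simplified illustration, not how any particular DLP product works; production tools combine many detectors and contextual rules.

```python
import re

# 13 to 16 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,16}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, check mod 10."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return Luhn-valid card-like numbers found in outbound text."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 16 and luhn_valid(digits):
            hits.append(digits)
    return hits
```

The well-known test number 4111 1111 1111 1111 is flagged, while the same number with its last digit changed is not.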

The Remote Access Process

The Remote Access Process

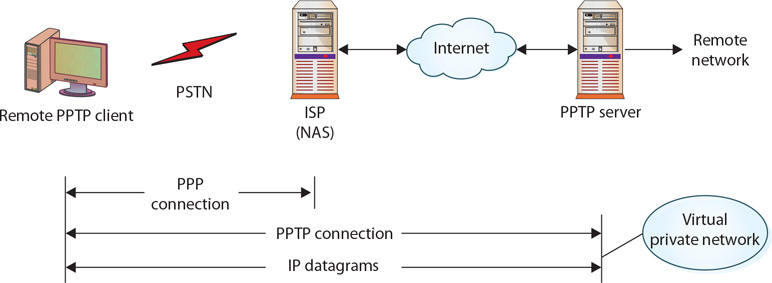

The process of connecting by remote access involves two elements: a temporary network connection and a series of protocols to negotiate privileges and commands. The temporary network connection can occur via a dial-up service, the Internet, wireless access, or any other method of connecting to a network. Once the connection is made, the primary issue is authenticating the identity of the user and establishing proper privileges for that user. This is accomplished using a combination of protocols and the operating system on the host machine.

Securing Remote Connections

By using encryption, remote access protocols can securely authenticate and authorize a user according to previously established privilege levels. The authorization phase can keep unauthorized users out, but after that, encryption of the communications channel becomes very important in preventing unauthorized users from breaking in on an authorized session and hijacking an authorized user’s credentials. As more and more networks rely on the Internet for connecting remote users, the need for and importance of secure remote access protocols and secure communication channels will continue to grow.

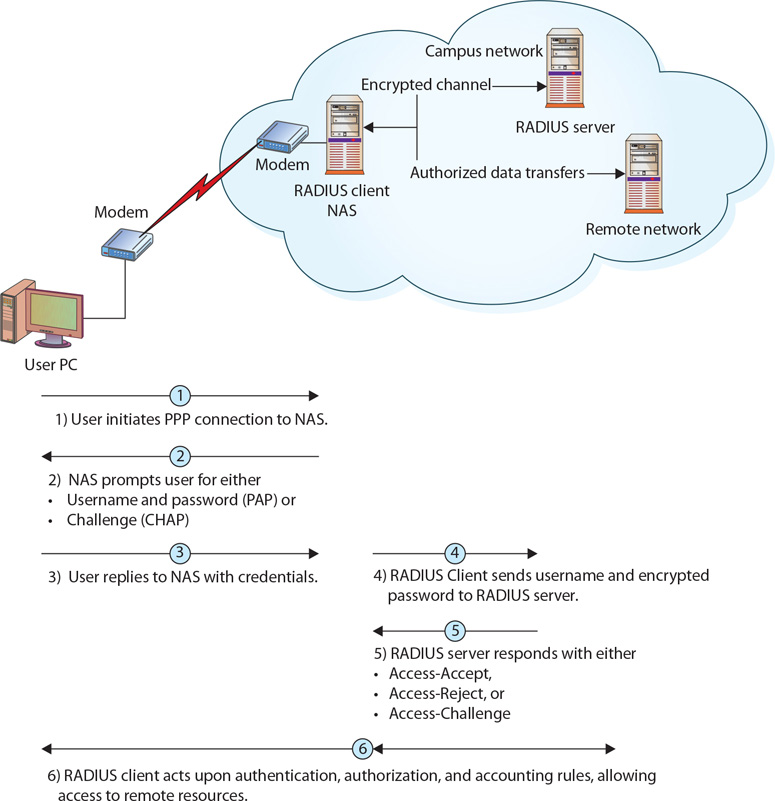

The three steps in the establishment of proper privileges are authentication, authorization, and accounting, commonly referred to simply as AAA. Authentication is the matching of user-supplied credentials to previously stored credentials on a host machine, and it usually involves an account username and password. Once the user is authenticated, the authorization step takes place. Authorization is the granting of specific permissions based on the privileges held by the account. Does the user have permission to use the network at this time, or is their use restricted? Does the user have access to specific applications, such as mail and FTP, or are some of these restricted? These checks are carried out as part of authorization, and in many cases this is a function of the operating system in conjunction with its established security policies. Accounting is the collection of billing and other detail records. Network access is often a billable function, and a log of how much time, bandwidth, file transfer space, or other resources were used needs to be maintained. Other accounting functions include keeping detailed security logs to maintain an audit trail of tasks being performed.
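The three AAA steps can be sketched end to end. Authentication matches a supplied password against a stored salted hash. This sketch uses PBKDF2 from Python's `hashlib` and a constant-time comparison; the text does not prescribe how credentials are stored. Authorization then checks the account's privileges for the requested service. Accounting appends a record of every attempt. The user table, privilege table, service names, and iteration count are hypothetical.

```python
import hashlib
import hmac
import os
import time

ITERATIONS = 100_000  # hypothetical work factor


def make_record(password: str) -> tuple[bytes, bytes]:
    """Store a random salt and a PBKDF2 hash, never the password itself."""
    salt = os.urandom(16)
    return salt, hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


def authenticate(password: str, record: tuple[bytes, bytes]) -> bool:
    salt, stored = record
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(candidate, stored)  # constant-time comparison


USERS = {"alice": make_record("correct horse battery staple")}
PRIVILEGES = {"alice": {"mail"}}  # alice may use mail but not FTP
ACCOUNTING_LOG: list[dict] = []


def request_service(user: str, password: str, service: str) -> bool:
    authenticated = user in USERS and authenticate(password, USERS[user])  # 1. authentication
    authorized = authenticated and service in PRIVILEGES.get(user, set())   # 2. authorization
    ACCOUNTING_LOG.append({                                                 # 3. accounting
        "time": time.time(), "user": user, "service": service,
        "authenticated": authenticated, "authorized": authorized,
    })
    return authorized
```

Logging failed attempts as well as successful ones is what makes the accounting record useful as an audit trail.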

When a user connects to the Internet through an ISP, this is similarly a case of remote access—the user is establishing a connection to their ISP’s network, and the same security issues apply. The issue of authentication, the matching of user-supplied credentials to previously stored credentials on a host machine, is usually done via a user account name and password. Once the user is authenticated, the authorization step takes place. Remote authentication usually takes the common form of an end user submitting their credentials via an established protocol to a remote access server (RAS), which acts upon those credentials, either granting or denying access.

Access controls define what actions a user can perform or what objects a user is allowed to access. Access controls are built on the foundation of elements designed to facilitate the matching of a user to a process. These elements are identification, authentication, and authorization. A myriad of details and choices are associated with setting up remote access to a network, and to provide for the management of these options, it is important for an organization to have a series of remote access policies and procedures spelling out the details of what is permitted and what is not for a given network.

Federation

Federated identity management is an agreement between multiple enterprises that lets parties use the same identification data to obtain access to the networks of all enterprises in the group. This federation enables access to be managed across multiple systems in common trust levels.

Identification

Identification is the process of ascribing a computer ID to a specific user, computer, network device, or computer process. The identification process is typically performed only once, when a user ID is issued to a particular user. User identification enables authentication and authorization to form the basis for accountability. For accountability purposes, user IDs should not be shared, and for security purposes, they should not be descriptive of job function. This practice enables you to trace activities to individual users or computer processes so that they can be held responsible for their actions. Identification links the logon ID or user ID to credentials that have been submitted previously to either HR or the IT staff. A required characteristic of user IDs is that they must be unique so that they map back to the credentials presented when the account was established.

Authentication

Authentication is the process of binding a specific ID to a specific computer connection. Two items need to be presented to cause this binding to occur—the user ID and some “secret” to prove that the user is the valid possessor of the credentials. Historically, three categories of secrets are used to authenticate the identity of a user: what users know, what users have, and what users are. Today, an additional category is used: what users do.

These methods can be used individually or in combination. These controls assume that the identification process has been completed and the identity of the user has been verified. It is the job of authentication mechanisms to ensure that only valid users are admitted. Described another way, authentication is using some mechanism to prove that you are who you claimed to be when the identification process was completed.

Categories of Shared Secrets for Authentication

Originally published by the U.S. government in one of the “rainbow series” of manuals on computer security, the categories of shared “secrets” are as follows:

What users know (such as a password)

What users have (such as tokens)

What users are (static biometrics such as fingerprints or iris pattern)

Today, because of technological advances, new categories have emerged, patterned after subconscious behaviors and measurable attributes:

What users do (dynamic biometrics such as typing patterns or gait)

Where a user is (actual physical location)

The most common method of authentication is the use of a password. For greater security, you can add an element from a separate group, such as a smart card token—something a user has in their possession. Passwords are common because they are one of the simplest forms of authentication, and they use user memory as a prime component. Because of their simplicity, passwords have become ubiquitous across a wide range of authentication systems.
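The point that extra security comes from an element of a *separate* group can be sketched as a category count. Two items from the same category (a password plus a PIN) still amount to one factor category. A password plus a smart card spans two. The mapping of specific items to categories follows the list above. The item names themselves are illustrative.

```python
# Map each authenticator type to its category from the list above.
FACTOR_CATEGORY = {
    "password": "know", "pin": "know",
    "smart_card": "have", "hardware_token": "have",
    "fingerprint": "are", "iris": "are",
    "typing_pattern": "do", "gait": "do",
    "physical_location": "where",
}


def is_multifactor(presented: list[str]) -> bool:
    """Multifactor requires at least two distinct categories, not just two items."""
    return len({FACTOR_CATEGORY[item] for item in presented}) >= 2
```

Counting distinct categories, not items, captures why stacking two secrets the user knows adds little against an attacker who has already captured what the user types.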

Another method to provide authentication involves the use of something that only valid users should have in their possession. A physical-world example of this would be a simple lock and key. Only those individuals with the correct key will be able to open the lock and thus gain admittance to a house, car, office, or whatever the lock was protecting. A similar method can be used to authenticate users for a computer system or network (though the key may be electronic and could reside on a smart card or similar device). The problem with this technology, however, is that people do lose their keys (or cards), which means not only that the user can’t log into the system but that somebody else who finds the key may then be able to access the system, even though they are not authorized. To address this problem, a combination of the something-you-know and something-you-have methods is often used so that the individual with the key is also required to provide a password or passcode. The key is useless unless the user knows this code.

The third general method to provide authentication involves something that is unique about you. We are accustomed to this concept in our physical world, where our fingerprints or a sample of our DNA can be used to identify us. This same concept can be used to provide authentication in the computer world. The field of authentication that uses something about you or something that you are is known as biometrics. A number of different mechanisms can be used to accomplish this type of authentication, such as a fingerprint, iris, retinal, or hand geometry scan. All of these methods obviously require some additional hardware in order to operate. The inclusion of fingerprint readers on laptop computers is becoming common as the additional hardware is becoming cost effective.

A newer method, based on how users perform an action, such as their walking gait or their typing patterns, has emerged as a source of a personal “signature.” Although such behavioral measures are not yet widely built into systems, they are expected to become an option in the future.

Although the three main approaches to authentication appear to be easy to understand and in most cases easy to implement, authentication is not to be taken lightly because it is such an important component of security. Potential attackers are constantly searching for ways to get past the system’s authentication mechanism, and they have employed some fairly ingenious methods to do so. Consequently, security professionals are constantly devising new methods, building on these three basic approaches, to provide authentication mechanisms for computer systems and networks.

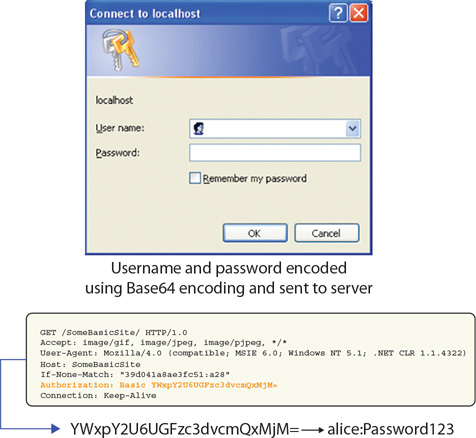

Basic Authentication

Basic authentication is the simplest technique used to manage access control across HTTP. It operates by joining the username and password with a colon, encoding the result in Base64, and passing it in a standard HTTP header (Authorization). Base64 is an encoding, not encryption, so this is effectively a plaintext method without any pretense of security. Figure 11.14 illustrates the operation of basic authentication.

• Figure 11.14 How basic authentication operates
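The following minimal sketch, assuming the header format defined for the Basic scheme (RFC 7617), shows why Base64 offers no protection: building the `Authorization` header and reversing it require no secret at all. The helper names are hypothetical; the sample credentials are the well-known example from the HTTP specifications.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    # "Basic " + Base64("username:password")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def decode_basic_auth(header: str) -> tuple:
    # Anyone who sees the header can reverse the encoding; no key is involved.
    scheme, _, token = header.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a Basic Authorization header")
    username, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return username, password


header = basic_auth_header("Aladdin", "open sesame")
print(header)                     # Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==
print(decode_basic_auth(header))  # ('Aladdin', 'open sesame')
```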

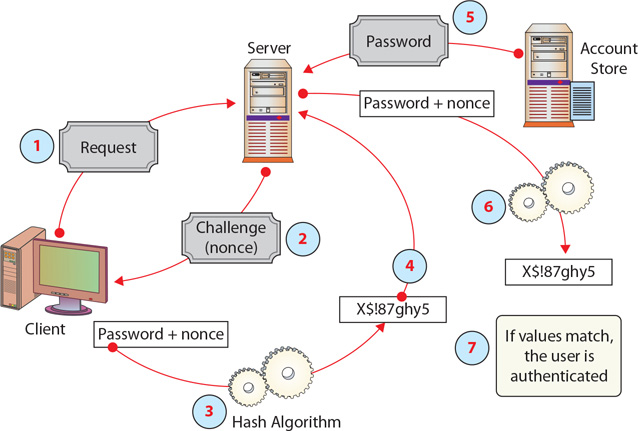

Digest Authentication

Digest authentication is a method used to negotiate credentials across the Web. Digest authentication uses hash functions and a nonce to improve security over basic authentication. Digest authentication works as follows, as illustrated in Figure 11.15:

• Figure 11.15 How digest authentication operates

1. The client requests login.

2. The server responds with a challenge and provides a nonce.

3. The client hashes the password and nonce.

4. The client returns the resulting hash (not the password itself) to the server.

5. The server requests the password from a password store.

6. The server hashes the password and nonce.

7. If both hashes match, login is granted.
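The seven steps above can be sketched as follows. This is a simplified illustration, assuming the original RFC 2617 response formula without the optional `qop` parameters; the username, realm, and password are hypothetical, and MD5 appears only because that scheme specified it. In practice, servers often store the precomputed hash of `username:realm:password` rather than the password itself.

```python
import hashlib
import hmac
import secrets


def md5_hex(text: str) -> str:
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def digest_response(username: str, realm: str, password: str,
                    method: str, uri: str, nonce: str) -> str:
    # RFC 2617 form without qop: response = MD5(HA1 ":" nonce ":" HA2)
    ha1 = md5_hex(f"{username}:{realm}:{password}")  # ties the password to the user and realm
    ha2 = md5_hex(f"{method}:{uri}")                  # ties the response to this request
    return md5_hex(f"{ha1}:{nonce}:{ha2}")


# Steps 1-2: the server answers the login request with a challenge and a fresh nonce.
nonce = secrets.token_hex(16)

# Steps 3-4: the client hashes the password together with the nonce and sends only the result.
sent = digest_response("alice", "example", "s3cret", "GET", "/index.html", nonce)

# Steps 5-7: the server fetches the stored password, repeats the computation, and compares.
expected = digest_response("alice", "example", "s3cret", "GET", "/index.html", nonce)
print(hmac.compare_digest(sent, expected))  # True: login granted
```

Anyone who observes the exchange also sees the nonce and the response, so a weak password can still be guessed offline by repeating the same computation.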

Although digest authentication improves on basic authentication because passwords are not sent in the clear, it does not provide any significant level of security. Digest authentication is subject to man-in-the-middle attacks and potentially replay attacks.

The bottom line for both basic and digest authentication is that these are insecure methods and should not be relied upon for any level of security.

Kerberos

Developed as part of MIT’s Project Athena, Kerberos is a network authentication protocol designed for a client/server environment. The current version of the protocol is Kerberos 5 (MIT’s implementation was at release 1.16 at the time of writing), and it is supported by all major operating systems. Kerberos securely passes a symmetric key over an insecure network using a protocol based on the Needham-Schroeder symmetric key protocol. Kerberos is built around the idea of a trusted third party, termed a key distribution center (KDC), which consists of two logically separate parts: an authentication server (AS) and a ticket-granting server (TGS). Kerberos communicates via “tickets” that serve to prove the identity of users.

Two tickets are used in Kerberos. The first is a ticket-granting ticket (TGT) obtained from the authentication server (AS). When the client requests access to a server, it presents the TGT to the ticket-granting server (TGS), which then issues a client-to-server ticket granting access to that server. Typically both the AS and the TGS are logically separate parts of the key distribution center (KDC).

Taking its name from the three-headed dog of Greek mythology, Kerberos is designed to work across the Internet, an inherently insecure environment. Kerberos uses strong encryption so that a client can prove its identity to a server and the server can in turn authenticate itself to the client. A complete Kerberos environment is referred to as a Kerberos realm. The Kerberos server contains user IDs and hashed passwords for all users who will have authorizations to realm services. The Kerberos server also has shared secret keys with every server to which it will grant access tickets.

The basis for authentication in a Kerberos environment is the ticket. Tickets are used in a two-step process with the client. The first ticket is a ticket-granting ticket (TGT) issued by the AS to a requesting client. The client can then present this ticket to the TGS with a request for a ticket to access a specific server. This client-to-server ticket (also called a service ticket) is used to gain access to a server’s service in the realm. Because the user’s password is never sent across the network and the exchanges are encrypted, Kerberos eliminates the inherently insecure transmission of items such as passwords that can be intercepted on the network. Tickets are time-stamped and have a limited lifetime, so a captured ticket is useless once it expires; within that lifetime, each use of a ticket must be accompanied by a fresh, time-stamped authenticator, which makes replaying a captured request difficult. Figure 11.16 details Kerberos operations.

• Figure 11.16 Kerberos operations

Kerberos Authentication

Kerberos is a third-party authentication service that uses a series of tickets as tokens for authenticating users. The six steps involved are protected using strong cryptography:

1. The user presents their credentials and requests a ticket from the key distribution center (KDC).

2. The KDC verifies credentials and issues a ticket-granting ticket (TGT).

3. The user presents the TGT and a request for service to the KDC.

4. The KDC verifies authorization and issues a client-to-server ticket (or service ticket).

5. The user presents a request and a client-to-server ticket to the desired service.

6. If the client-to-server ticket is valid, service is granted to the client.
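To make the six steps concrete, here is a deliberately simplified toy model of the ticket flow, not an implementation of Kerberos. It assumes hypothetical keys and names throughout, protects tickets with an HMAC tag instead of encrypting them, and omits session keys and per-request authenticators. It shows only how a TGT is exchanged for a service ticket and how the service checks that ticket (including its lifetime) using the key it shares with the KDC.

```python
import hashlib
import hmac
import json
import time
from typing import Dict, Optional

LIFETIME = 300  # hypothetical ticket lifetime, in seconds


def derive_key(password: str, salt: str) -> bytes:
    # Long-term user key derived from the password; the password itself is never sent.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt.encode(), 100_000)


def seal(key: bytes, claims: dict) -> dict:
    # Toy ticket: JSON claims plus an HMAC tag. Real Kerberos ENCRYPTS its tickets.
    body = json.dumps(claims, sort_keys=True)
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def unseal(key: bytes, ticket: dict, now: float) -> Optional[dict]:
    # Accept a ticket only if it was sealed with `key` and has not yet expired.
    expected = hmac.new(key, ticket["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, ticket["tag"]):
        return None
    claims = json.loads(ticket["body"])
    return claims if now < claims["expires"] else None


def preauth(user_key: bytes, now: float) -> str:
    # Client proves it holds its key by MACing the current time (loosely modeled on pre-authentication).
    return hmac.new(user_key, str(int(now)).encode(), hashlib.sha256).hexdigest()


class ToyKDC:
    def __init__(self, user_keys: Dict[str, bytes], service_keys: Dict[str, bytes]):
        self.user_keys = user_keys              # user -> long-term key
        self.service_keys = service_keys        # service -> key shared with that server
        self.tgs_key = b"hypothetical-tgs-key"  # known only to the KDC

    def as_exchange(self, user: str, proof: str, now: float) -> Optional[dict]:
        # Steps 1-2 (AS): check the user's proof, then issue a TGT sealed with the TGS key.
        key = self.user_keys.get(user)
        if key is None or not hmac.compare_digest(proof, preauth(key, now)):
            return None
        return seal(self.tgs_key, {"user": user, "expires": now + LIFETIME})

    def tgs_exchange(self, tgt: dict, service: str, now: float) -> Optional[dict]:
        # Steps 3-4 (TGS): a valid TGT is exchanged for a ticket to one specific service.
        claims = unseal(self.tgs_key, tgt, now)
        if claims is None or service not in self.service_keys:
            return None
        return seal(self.service_keys[service],
                    {"user": claims["user"], "service": service, "expires": now + LIFETIME})


def service_accepts(service: str, service_key: bytes, ticket: dict, now: float) -> bool:
    # Steps 5-6: the server checks the ticket with its own key, without contacting the KDC.
    claims = unseal(service_key, ticket, now)
    return claims is not None and claims["service"] == service


# Usage with hypothetical principals and keys.
alice_key = derive_key("correct horse", "EXAMPLE.ORGalice")
file_key = b"hypothetical-fileserver-key"
kdc = ToyKDC({"alice": alice_key}, {"fileserver": file_key})

now = time.time()
tgt = kdc.as_exchange("alice", preauth(alice_key, now), now)
ticket = kdc.tgs_exchange(tgt, "fileserver", now)
print(service_accepts("fileserver", file_key, ticket, now))                 # True
print(service_accepts("fileserver", file_key, ticket, now + LIFETIME + 1))  # False: ticket expired
```

The design point the toy preserves is that the service never talks to the KDC: it trusts the ticket because only the KDC and the service know the key that protects it.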

To illustrate how the Kerberos authentication service works, think about the common driver’s license. You have received a license that you can present to other entities to prove you are who you claim to be. Because other entities trust the state in which the license was issued, they will accept your license as proof of your identity. The state in which the license was issued is analogous to the Kerberos authentication service realm, and the license acts as a client-to-server ticket. It is the trusted entity both sides rely on to provide valid identifications. This analogy is not perfect, because we all probably have heard of individuals who obtained a phony driver’s license, but it serves to illustrate the basic idea behind Kerberos.

Mutual TLS-based authentication provides the same functions as normal TLS, with the addition of authentication and nonrepudiation of the client. This second authentication, the authentication of the client, is done in the same manner as the normal server authentication: the client presents a certificate and proves possession of the matching private key with a digital signature. Because one server typically authenticates many clients, the clients form the “many” side of a many-to-one relationship, and each of them needs its own certificate. Mutual TLS authentication is not commonly used because of the complexity, cost, and logistics associated with managing this multitude of client certificates; as a result, most web applications are not designed to require client-side certificates.
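As a sketch of how the extra client authentication is switched on in practice, the following uses Python's standard `ssl` module. The file-name parameters are hypothetical placeholders for certificates issued by your own certificate authority; the key line is `verify_mode = ssl.CERT_REQUIRED` on the server side, which makes the handshake fail unless the client presents a certificate that chains to a trusted CA.

```python
import ssl
from typing import Optional


def make_server_context(certfile: Optional[str] = None, keyfile: Optional[str] = None,
                        client_ca: Optional[str] = None) -> ssl.SSLContext:
    # Server side: trust the CA that issued client certificates (if given)...
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH, cafile=client_ca)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # ...and refuse any handshake in which the client does not present a valid certificate.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)  # the server's own certificate and private key
    return ctx


def make_client_context(certfile: Optional[str] = None, keyfile: Optional[str] = None,
                        server_ca: Optional[str] = None) -> ssl.SSLContext:
    # Client side: verify the server as in ordinary TLS (hostname and certificate chain)...
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=server_ca)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)  # ...and offer the client's own certificate
    return ctx
```

With real files, the server would wrap its listening socket using `ctx.wrap_socket(sock, server_side=True)`. Every client needs its own certificate and key, which is the management burden described above.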

Mutual Authentication

Mutual authentication describes a process in which each side of an electronic communication verifies the authenticity of the other. We are accustomed to the idea of having to authenticate ourselves to our ISP before we access the Internet, generally through the use of a user ID/password pair, but how do we actually know that we are really communicating with our ISP and not some other system that has somehow inserted itself into our communication (a man-in-the-middle attack)? Mutual authentication provides a mechanism for each side of a client/server relationship to verify the authenticity of the other to address this issue. A common method of performing mutual authentication involves using a secure connection, such as Transport Layer Security (TLS), to the server and a one-time password generator that then authenticates the client.

Certificates

Certificates are a method of establishing the authenticity of specific objects, such as an individual’s public key or downloaded software. A digital certificate is a digitally signed file, issued by a certificate authority, that binds a public key to an identity. When a certificate is attached to a digitally signed message, the recipient can use it to verify that the message did indeed come from the entity it claims to have come from.

PIV/CAC/Smart Cards

The U.S. federal government has several smart card solutions for identifying personnel. The personal identity verification (PIV) card is a U.S. government smart card that contains the cardholder’s credential data, which is used to determine access to federal facilities and information systems. The Common Access Card (CAC) is a smart card identification used by the U.S. Department of Defense (DoD) for active-duty military, selected reserve personnel, DoD civilians, and eligible contractors. Like the PIV card, it carries the cardholder’s credential data, in the form of a certificate, which is used to determine access to federal facilities and information systems.

Digital Certificates and Digital Signatures

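Kerberos uses tickets to convey messages. A Kerberos ticket is not itself a certificate, but it serves a similar purpose: it carries the requisite keys (a session key, protected with the target server’s secret key) and vouches for the client’s identity. Certificates can also be used for the initial Kerberos authentication through the PKINIT extension. Understanding how certificates and digital signatures convey this kind of vital information is therefore an important part of understanding Kerberos-based authentication. Certificates, how they are used, and the protocols associated with PKI were covered in Chapter 7. Refer back to that chapter as needed for more information.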

Tokens

While the username/password combination has been and continues to be the cheapest and most popular method of controlling access to resources, many organizations look for a more secure and tamper-resistant form of authentication. Usernames and passwords are “something you know” (which can be used by anyone else who knows or discovers the information). A more secure method of authentication is to combine the “something you know” with “something you have.” A token is an authentication factor that typically takes the form of a physical or logical entity that the user must be in possession of to access their account or certain resources.

A token is a hardware device that can be used in a challenge/response authentication process. Because the user must physically possess the device and typically unlocks it with a PIN, it functions as both a something-you-have and something-you-know authentication mechanism. Several variations on this type of device exist, but they all work on the same basic principles. Tokens are commonly employed in remote authentication schemes because they provide additional surety of the identity of the user, even users who are somewhere else and cannot be observed.
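A minimal sketch of the challenge/response idea, assuming a hypothetical token that shares a secret key with the server and answers with HMAC-SHA-256 (real tokens vary in algorithm and message format): the server sends a random challenge, the token returns a keyed hash of it, and the server recomputes the hash to check it. Because each challenge is fresh, a recorded response is useless later.

```python
import hashlib
import hmac
import secrets

TOKEN_KEY = secrets.token_bytes(32)  # hypothetical secret shared by the token and the server


def issue_challenge() -> bytes:
    # Server: a fresh random challenge, so an old response cannot simply be replayed.
    return secrets.token_bytes(16)


def token_respond(key: bytes, challenge: bytes) -> str:
    # Token: keyed hash of the challenge; the key itself never leaves the device.
    return hmac.new(key, challenge, hashlib.sha256).hexdigest()


def server_verify(key: bytes, challenge: bytes, response: str) -> bool:
    # Server: recompute the expected response and compare in constant time.
    return hmac.compare_digest(token_respond(key, challenge), response)


challenge = issue_challenge()
response = token_respond(TOKEN_KEY, challenge)
print(server_verify(TOKEN_KEY, challenge, response))          # True
print(server_verify(TOKEN_KEY, issue_challenge(), response))  # False: response was for an old challenge
```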

Most tokens are physical tokens that display a series of numbers that changes every 30 to 90 seconds, such as the token pictured in Figure 11.17 from Blizzard Entertainment. This sequence of numbers must be entered when the user is attempting to log in or access certain resources. The ever-changing sequence of numbers is synchronized to a remote server such that when the user enters the correct username, password, and matching sequence of numbers, they are allowed to log in. Even if an attacker obtains the username and password, the attacker cannot log in without the matching sequence of numbers. Other physical tokens include Common Access Cards (CACs), USB tokens, smart cards, and PC cards.

• Figure 11.17 Token authenticator from Blizzard Entertainment
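Tokens of this kind commonly implement the time-based one-time password (TOTP) algorithm standardized in RFC 6238, which builds on HOTP (RFC 4226). Individual vendors, possibly including the one pictured, may use their own variants, so treat this as a sketch of the standard algorithm rather than of any particular product. Both the token and the server derive the code from a shared secret and the current 30-second time step, which is why they stay synchronized without communicating.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    # RFC 4226: HMAC-SHA-1 over the 8-byte big-endian counter, then "dynamic truncation".
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte pick a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, now: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    # RFC 6238: the counter is the number of whole `step`-second intervals since the Unix epoch.
    if now is None:
        now = time.time()
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, code: str, now: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    # Accept codes from neighboring intervals to tolerate clock drift between token and server.
    if now is None:
        now = time.time()
    return any(hmac.compare_digest(totp(secret, now + i * step, step, len(code)), code)
               for i in range(-window, window + 1))


secret = b"12345678901234567890"  # RFC 6238 test secret; never hard-code real secrets
print(totp(secret, now=59))               # 287082
print(totp(secret, now=59, digits=8))     # 94287082
print(verify_totp(secret, totp(secret)))  # True
```

The `window` parameter is a design choice: accepting one interval on either side tolerates small clock drift but slightly widens the period during which a stolen code remains valid.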

The use of a token is a common method of using “something you have” for authentication. A token can hold a cryptographic key or act as a one-time password (OTP) generator. It can also be a smart card that holds a cryptographic key (examples include the U.S. military Common Access Card and the Federal Personal Identity Verification [PIV] card). These devices can be safeguarded using a PIN and lockout mechanism to prevent use if stolen.
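The PIN-and-lockout safeguard can be illustrated with a small, hypothetical class. On a real smart card this logic runs inside tamper-resistant hardware and the retry counter is enforced by the card itself; the sketch below only models the behavior: the stored secret is released after a correct PIN, and after a set number of consecutive failures the token refuses every attempt, including the correct PIN.

```python
import hashlib
import hmac
import os
from typing import Optional


class PinProtectedToken:
    """Hypothetical token that releases its secret only after a correct PIN."""

    def __init__(self, pin: str, secret: bytes, max_attempts: int = 3):
        self._salt = os.urandom(16)
        self._pin_hash = self._hash(pin)  # store a hash of the PIN, not the PIN itself
        self._secret = secret
        self._max_attempts = max_attempts
        self._failures = 0                # consecutive wrong PINs

    def _hash(self, pin: str) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", pin.encode(), self._salt, 100_000)

    @property
    def locked(self) -> bool:
        return self._failures >= self._max_attempts

    def unlock(self, pin: str) -> Optional[bytes]:
        if self.locked:
            return None                   # locked out: even the correct PIN is refused
        if hmac.compare_digest(self._hash(pin), self._pin_hash):
            self._failures = 0            # a correct PIN resets the counter
            return self._secret
        self._failures += 1
        return None


card = PinProtectedToken("4821", b"hypothetical-private-key")
for guess in ("0000", "1111", "2222"):
    card.unlock(guess)
print(card.locked)          # True
print(card.unlock("4821"))  # None: a stolen, locked card is useless
```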

Software Tokens