Business Continuity, Disaster Recovery, and Organizational Policies

Strategy without tactics is the slowest route to victory. Tactics without strategy is the noise before defeat.

—SUN TZU

In this chapter, you will learn how to

- Describe the various components of a business continuity plan
- Describe the elements of disaster recovery plans
- Describe the various ways backups are conducted and stored
- Explain different strategies for alternative site processing

Much of this book focuses on avoiding the loss of confidentiality or integrity due to a security breach. The issue of availability is also discussed in terms of specific events, such as denial-of-service (DoS) attacks and distributed DoS attacks. In reality, however, many things can disrupt the operations of your organization. From the standpoint of your clients and employees, whether your organization’s web site is unavailable because of a storm or because of an intruder makes little difference; the site is still unavailable. In this chapter, we’ll discuss what to do when a situation arises that results in the disruption of services. This discussion includes both disaster recovery and business continuity.

Disaster Recovery


Many types of disasters, whether natural or caused by people, can disrupt your organization’s operations for some length of time. Such disasters are unlike threats that intentionally target your computer systems and networks, such as industrial espionage, hacking, attacks from disgruntled employees, and insider threats, because the events that cause the disruption are not specifically aimed at your organization. Although both disasters and intentional threats must be considered important in planning for disaster recovery, the purpose of this section is to focus on recovering from disasters.

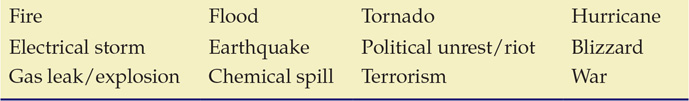

How long your organization’s operations are disrupted depends in part on how prepared it is for a disaster and what plans are in place to mitigate the effects of a disaster. Any of the events in Table 19.1 could cause a disruption in operations.

Table 19.1 Common Causes of Disasters

Fortunately, these types of events do not happen frequently in any one location. It is more likely that business operations will be interrupted because of employee error (such as accidental corruption of a database or unplugging a system to plug in a vacuum cleaner—an event that has occurred at more than one organization). A good disaster recovery plan will prepare your organization for any type of organizational disruption.

Disasters can be caused by nature (such as fires, earthquakes, and floods) or can be the result of some manmade event (such as war or a terrorist attack). The plans an organization develops to address a disaster need to recognize both of these possibilities. While many of the elements in a disaster recovery plan will be similar for both natural and manmade events, some differences might exist. For example, recovering data from backup tapes after a natural disaster can use the most recent backup available. If, on the other hand, the event was a loss of all data as a result of a computer virus that wiped your system, restoring from the most recent backup tapes might result in the reinfection of your system if the virus had been dormant for a planned period of time. In this case, recovery might entail restoring some files from earlier backups.

Disaster Recovery Plans/Process

No matter what event you are worried about—whether natural or manmade and whether targeted at your organization or more random—you can make preparations to lessen the impact on your organization and the length of time that your organization will be out of operation. A disaster recovery plan (DRP) is critical for effective disaster recovery efforts. A DRP defines the data and resources necessary and the steps required to restore critical organizational processes.

Consider what your organization needs to perform its mission. This information provides the beginning of a DRP since it tells you what needs to be quickly restored. When considering resources, don’t forget to include both the physical resources (such as computer hardware and software) and the personnel (the people who know how to run the systems that process your critical data).

To begin creating your DRP, first identify all critical functions for your organization and then answer the following questions for each of these critical functions:

- Who is responsible for the operation of this function?
- What do these individuals need to perform the function?
- When should this function be accomplished relative to other functions?
- Where will this function be performed?
- How is this function performed (what is the process)?
- Why is this function so important or critical to the organization?

By answering these questions, you can create an initial draft of your organization’s DRP. The name often used to describe the document created by addressing these questions is a business impact assessment (BIA). Both the disaster recovery plan and the business impact assessment, of course, will need to be approved by management, and it is essential that they buy into the plan—otherwise your efforts will more than likely fail. The old adage “Those who fail to plan, plan to fail” certainly applies in this situation.

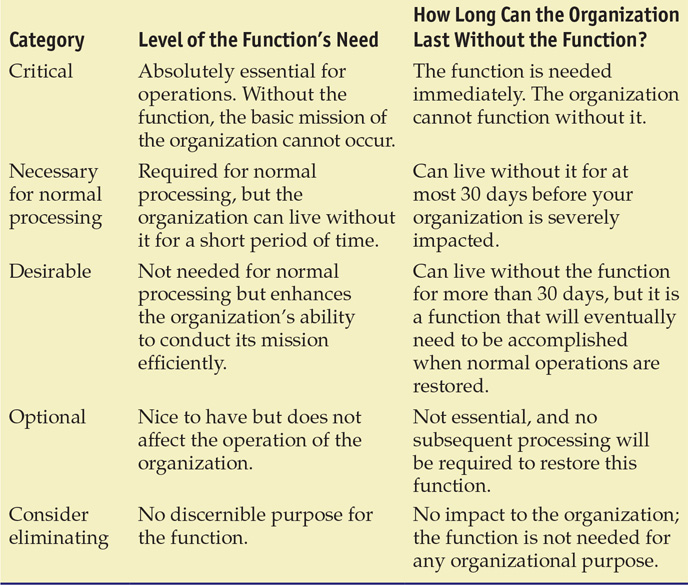

It is often informative to determine what category your various business functions fall into. You may find that certain functions currently being conducted are not essential to your operations and could be eliminated. In this way, preparing for a security event may actually help you streamline your operational processes.

A good DRP must include the processes and procedures needed to restore your organization to proper functioning and to ensure continued operation. What specific steps will be required to restore operations? These processes should be documented and, where possible and feasible, reviewed and exercised on a periodic basis. Having a plan with step-by-step procedures that nobody knows how to follow does nothing to ensure the continued operation of the organization. Exercising your DRP and processes before a disaster occurs provides you with the opportunity to discover flaws or weaknesses in the plan when there is still time to modify and correct them. It also provides an opportunity for key figures in the plan to practice what they will be expected to accomplish.

Categories of Business Functions

In developing your disaster recovery plan or the business impact assessment, you may find it useful to categorize the various functions your organization performs, such as shown in Table 19.2. This categorization is based on how critical or important the function is to your business operation and how long your organization can last without the function. Those functions that are the most critical will be restored first, and your DRP should reflect this. If the function doesn’t fall into any of the first four categories, then it is not really needed, and the organization should seriously consider whether it can be eliminated altogether.
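As a rough illustration of restoring the most critical functions first, the sketch below orders a list of business functions by category. The numeric `category` rank (1 = most critical), the `max_outage_hours` field, and the function names are hypothetical stand-ins, not the actual categories of Table 19.2.

```python
from dataclasses import dataclass


@dataclass
class BusinessFunction:
    name: str
    category: int            # hypothetical rank: 1 = most critical
    max_outage_hours: float  # hypothetical: how long the organization can last without it


def restoration_order(functions):
    """Most critical first; ties broken by the shortest tolerable outage."""
    return sorted(functions, key=lambda f: (f.category, f.max_outage_hours))


# Illustrative data only.
functions = [
    BusinessFunction("payroll", 2, 72),
    BusinessFunction("order processing", 1, 4),
    BusinessFunction("customer support", 1, 24),
    BusinessFunction("internal newsletter", 4, 720),
]
order = [f.name for f in restoration_order(functions)]
```

A real DRP would record much more for each function (the who, what, when, where, how, and why discussed earlier), but even a simple ordering like this makes the restoration priority explicit.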

Table 19.2 DRP Considerations

The difference between a disaster recovery plan and business continuity plan is that the business continuity plan will be used to ensure that your operations continue in the face of whatever event has occurred that has caused a disruption in operations. (The elements of a BCP will be fully covered later in this chapter.) If a disaster has occurred and has destroyed all or part of your facility, the DRP portion of the business continuity plan will address the building or acquisition of a new facility. The DRP can also include details related to the long-term recovery of the organization.

However you view these two plans, an organization that is not able to quickly restore business functions after an operational interruption is an organization that will most likely suffer an unrecoverable loss and may cease to exist.

IT Contingency Planning

Important parts of any organization today are the information technology (IT) processes and assets. Without computers and networks, most organizations could not operate. As a result, it is imperative that a business continuity plan includes IT contingency planning. Because of the nature of the Internet and the threats that come from it, an organization’s IT assets will likely face some level of disruption before the organization suffers from a disruption due to a natural disaster. Events such as viruses, worms, computer intruders, and denial-of-service attacks could result in an organization losing part or all of its computing resources without warning. Consequently, the IT contingency plans are more likely to be needed than the other aspects of a business continuity plan. These plans should account for disruptions caused by any of the security threats discussed throughout this book as well as disasters or simple system failures.

Test, Exercise, and Rehearse

An organization should practice its DRP periodically. The time to find out whether it has flaws is not when an actual event occurs and the recovery of data and information means the continued existence of the organization. The DRP should be tested to ensure that it is sufficient and that all key individuals know their role in the specific plan. A test of the plan determines whether the plan and the individuals involved perform as they should during a simulated security incident.

A test implies a “grade” will be applied to the outcome. Did the organization’s plan and the individuals involved perform as they should? Was the organization able to recover and continue to operate within the predefined tolerances set by management? If the answer is no, then during the follow-up evaluation of the exercise, the failures should be identified and addressed. Was it simply a matter of untrained or uninformed individuals, or was there a technological failure that necessitates a change in hardware, software, and procedures?

Whereas a test implies a “grade,” an exercise can be conducted without the stigma of a pass/fail grade being attached. Security exercises are conducted to provide the opportunity for all parties to practice the procedures that have been established to respond to a security incident. It is important to perform as many of the recovery functions as possible, without impacting ongoing operations, to ensure that the procedures and technology will work in a real incident. You may want to periodically rehearse portions of the recovery plan, particularly those aspects that either are potentially more disruptive to actual operations or require more frequent practice because of their importance or degree of difficulty.

Additionally, there are different formats for exercises with varying degrees of impact on the organization. The most basic is a checklist walk-through in which individuals go through a recovery checklist to ensure that they understand what to do should the plan be invoked and confirm that all necessary equipment (hardware and software) is available. This type of exercise normally does not reveal “holes” in a plan but will show where discrepancies exist in the preparation for the plan. To examine the completeness of a plan, a different type of exercise needs to be conducted. The simplest is a tabletop exercise in which participants sit around a table with a facilitator who supplies information related to the “incident” and the processes that are being examined. Another type of exercise is a functional test in which certain aspects of a plan are tested to see how well they work (and how well prepared personnel are). At the most extreme are full operational exercises designed to actually interrupt services in order to verify that all aspects of a plan are in place and sufficient to respond to the type of incident that is being simulated.

Exercises are an often overlooked aspect of security. Many organizations do not believe that they have the time to spend on such events, but the question to ask is whether they can afford to not conduct these exercises, as they ensure the organization has a viable plan to recover from disasters and that operations can continue. Make sure you understand what is involved in these critical tests of your organization’s plans.

Tabletop Exercises

Exercising operational plans is an effort that can take on many different forms. For senior decision-makers, the point of action is more typically a desk or a conference room, with their method being meetings and decisions. A common form of exercising operational plans for senior management is the tabletop exercise. The senior management team, or elements of it, is gathered together and presented with a scenario. The participants can walk through their decision-making steps, communicate with others, and go through the motions of the exercise in the pattern in which they would likely be involved. The scenario is presented at a level to test the responsiveness of their decisions and decision-making process. Because the event is frequently run in a conference room, around a table, the name tabletop exercise has come to define this form of exercise.

Recovery Time Objective and Recovery Point Objective

The term recovery time objective (RTO) is used to describe the target time that is set for resuming operations after an incident. This is a period of time that is defined by the business, based on the needs of the enterprise. A shorter RTO results in higher costs because it requires greater coordination and resources. This term is commonly used in business continuity and disaster recovery operations.

Recovery point objective (RPO), a totally different concept from RTO, is the maximum period of data loss that the organization considers acceptable. The RPO determines the frequency of backup operations necessary to prevent unacceptable levels of data loss. A simple way to establish an RPO is to answer the following questions: How much data can you afford to lose? How much rework is tolerable?

RTO and RPO are seemingly related but in actuality measure different things entirely. The RTO serves the purpose of defining the requirements for business continuity, while the RPO deals with backup frequency. It is possible to have an RTO of 1 day and an RPO of 1 hour or to have an RTO of 1 hour and an RPO of 1 day. The determining factors are the needs of the business.

Although recovery time objective and recovery point objective seem to be the same or similar, they are very different. The RTO serves the purpose of defining the requirements for business continuity, while the RPO deals with backup frequency.
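To make the independence of the two objectives concrete, here is a minimal sketch that checks a backup schedule against an RPO and a restore estimate against an RTO. It assumes the worst-case data loss equals the interval between backups; the function names and the sample figures are hypothetical.

```python
from datetime import timedelta


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """Assumption: in the worst case, everything written since the last
    backup is lost, so the backup interval must not exceed the RPO."""
    return backup_interval <= rpo


def meets_rto(estimated_restore_time: timedelta, rto: timedelta) -> bool:
    """The time needed to resume operations must not exceed the RTO."""
    return estimated_restore_time <= rto


# Example from the text: an RTO of 1 day combined with an RPO of 1 hour.
rto = timedelta(days=1)
rpo = timedelta(hours=1)

nightly_ok = meets_rpo(timedelta(days=1), rpo)         # nightly backups vs. a 1-hour RPO
half_hourly_ok = meets_rpo(timedelta(minutes=30), rpo)
restore_ok = meets_rto(timedelta(hours=6), rto)        # hypothetical 6-hour restore
```

In this example the 6-hour restore fits comfortably inside the RTO, yet nightly backups still fail the RPO. The two objectives have to be checked separately.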

Backups


A key element in any business continuity/disaster recovery plan is the availability of backups. This is true not only because of the possibility of a disaster but also because hardware and storage media will periodically fail, resulting in loss or corruption of critical data. An organization might also find backups critical when security measures have failed and an individual has gained access to important information that may have become corrupted or at the very least can’t be trusted. Data backup is thus a critical element in these plans, as well as in normal operation. There are several factors to consider in an organization’s data backup strategy:

- How frequently should backups be conducted?
- How extensive do the backups need to be?
- What is the process for conducting backups?
- Who is responsible for ensuring backups are created?
- Where will the backups be stored?
- How long will backups be kept?
- How many copies will be maintained?

Keep in mind that the purpose of a backup is to provide valid, uncorrupted data in the event of corruption or loss of the original file or the media where the data was stored. Depending on the type of organization, legal requirements for maintaining backups can also affect how backups are conducted.

Backups Are a Key Responsibility for Administrators

One of the most important tools a security administrator has is a backup. While backups will not prevent a security event (or natural disaster) from occurring, they often can save an organization from a catastrophe by allowing it to quickly return to full operation after an event occurs. Conducting frequent backups and having a viable backup and recovery plan are two of the most important responsibilities of a security administrator.

What Needs to Be Backed Up

Backups commonly comprise the data that an organization relies on to conduct its daily operations. While this is certainly essential, a good backup plan will consider more than just the data; it will include any application programs needed to process the data and the operating system and utilities that the hardware platform requires to run the applications. Obviously, the application programs and operating system will change much less frequently than the data itself, so the frequency with which these items need to be backed up is considerably different. This should be reflected in the organization’s backup plan and strategy.

The business continuity/disaster recovery plan should also address other items related to backups. Personnel, equipment, and electrical power must also be part of the plan. Somebody needs to understand the operation of the critical hardware and software used by the organization. If the disaster that destroyed the original copy of the data and the original systems also results in the loss of the only personnel who know how to process the data, having backup data will not be enough to restore normal operations for the organization. Similarly, if the data requires specific software to be run on a specific hardware platform, then having the data without the application program or required hardware will also not be sufficient.

Implementing the Right Type of Backups

Carefully consider the type of backup that you want to conduct. With the size of today’s PC hard drives, a complete backup of the entire hard drive can take a considerable amount of time. Implement the type of backup that you need and check for software tools that can help you in establishing a viable backup schedule.

Strategies for Backups

The process for creating a backup copy of data and software requires more thought than simply stating “copy all required files.” The size of the resulting backup must be considered, as well as the time required to conduct the backup. Both of these will affect details such as how frequently the backup will occur and the type of storage medium that will be used for the backup. Other considerations include who will be responsible for conducting the backup, where the backups will be stored, and how long they should be maintained. Short-term storage for accidentally deleted files that users need to have restored should probably be close at hand. Longer-term storage for backups that may be several months or even years old should occur in a different facility. It should be evident by now that even something that sounds as simple as maintaining backup copies of essential data requires careful consideration and planning.

Types of Backups

The amount of data that will be backed up, and the time it takes to accomplish this, has a direct bearing on the type of backup that should be performed. Table 19.3 outlines the four basic types of backups that can be conducted, the amount of space required for each, and the ease of restoration using each strategy.

Table 19.3 Backup Types and Characteristics

The values for each of the strategies in Table 19.3 are highly variable depending on your specific environment. The more frequently files are changed between backups, the more these strategies will look alike. What each strategy entails bears further explanation.

Archive Bits

The archive bit is used to indicate whether a file has (1) or has not (0) changed since the last backup. The bit is set (changed to a 1) when the file is modified; in some cases, when a file is copied, the new copy also has its archive bit set. The bit is reset (changed to a 0) when the file is backed up. The archive bit can be used to determine which files need to be backed up when using methods such as the differential backup method.

Full

The easiest type of backup to understand is the full backup. In a full backup, all files and software are copied onto the storage media. Restoration from a full backup is similarly straightforward—you must copy all the files back onto the system. This process can take a considerable amount of time. Consider the size of even the average home PC today, for which storage is measured in tens and hundreds of gigabytes. Copying this amount of data takes time. In a full backup, the archive bit is cleared.

Differential

In a differential backup, only the files that have changed since the last full backup was completed are backed up. This also implies that periodically a full backup needs to be accomplished. The frequency of the full backup versus the interim differential backups depends on your organization and needs to be part of your defined strategy. Restoration from a differential backup requires two steps: the last full backup first needs to be loaded, and then the last differential backup performed can be applied to update the files that have been changed since the full backup was conducted. Again, this is not a difficult process, but it does take some time. The amount of time to accomplish the periodic differential backup, however, is much less than that for a full backup, and this is one of the advantages of this method. Obviously, if a lot of time has passed since the last full backup or if most files in your environment change frequently, then the differential backup does not differ much from a full backup. It should also be obvious that to accomplish the differential backup, the system needs to have a method to determine which files have been changed since some given point in time. The archive bit is not cleared in a differential backup since the key for a differential is to back up all files that have changed since the last full backup.
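The interaction between the archive bit and these two backup types can be sketched in a few lines. This is a toy model, not how any particular backup product works: the file system is assumed to be a dictionary mapping hypothetical file names to their archive bit, and only the full and differential behaviors described above are modeled.

```python
def modify(files: dict, name: str) -> None:
    """Modifying a file sets its archive bit."""
    files[name] = True


def full_backup(files: dict) -> list:
    """Copy every file, then clear every archive bit."""
    copied = sorted(files)
    for name in files:
        files[name] = False
    return copied


def differential_backup(files: dict) -> list:
    """Copy only files whose archive bit is set; leave the bits untouched."""
    return sorted(name for name, bit in files.items() if bit)


files = {"a.doc": True, "b.xls": True, "c.txt": True}
sunday = full_backup(files)           # all three files; bits cleared

modify(files, "a.doc")
monday = differential_backup(files)   # only a.doc

modify(files, "b.xls")
tuesday = differential_backup(files)  # a.doc again, plus b.xls
```

Because the differential run does not clear the bit, each differential keeps growing until the next full backup, which is why restoring needs only the full backup plus the most recent differential.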

Incremental vs. Differential Backups

Both incremental and differential backups begin with a full backup. An incremental backup includes only the data that has changed since the previous backup, whether that backup was the full backup or an earlier incremental. A differential backup contains all of the data that has changed since the last full backup. The advantage that differential backups have over incremental backups is shorter restore times. The advantage of the incremental backup is shorter backup times. To restore from a differential backup, you restore the full backup and the latest differential backup: two events. To restore from incremental backups, you restore the full backup and then all the incremental backups in order.
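The difference in restore effort can be shown with a small sketch that picks which backups must be applied, and in what order. It assumes a backup history given as a chronological list of labels and a schedule that uses either incrementals or differentials after each full backup, not a mix; the function name is hypothetical.

```python
def restore_chain(history: list) -> list:
    """Return the indexes of the backups to restore, in order.

    history is chronological; each entry is "full", "incremental",
    or "differential".
    """
    # Find the most recent full backup (raises ValueError if there is none).
    last_full = len(history) - 1 - history[::-1].index("full")
    after_full = list(range(last_full + 1, len(history)))
    kinds = {history[i] for i in after_full}

    if kinds <= {"incremental"}:
        # Full backup, then every incremental in order.
        return [last_full] + after_full
    if kinds <= {"differential"}:
        # Full backup, then only the latest differential.
        return [last_full] + after_full[-1:]
    raise ValueError("mixed schedules are outside the scope of this sketch")


incremental_week = ["full", "incremental", "incremental", "incremental"]
differential_week = ["full", "differential", "differential", "differential"]

incremental_restore = restore_chain(incremental_week)
differential_restore = restore_chain(differential_week)
```

For the same week of backups, the incremental schedule needs four restore steps and the differential schedule needs two.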

Delta

Finally, the goal of the delta backup is to back up as little information as possible each time you perform a backup. As with the other strategies, an occasional full backup must be accomplished. After that, when a delta backup is conducted at specific intervals, only the portions of the files that have been changed will be stored. The advantage of this is easy to illustrate. If your organization maintains a large database with thousands of records comprising several hundred megabytes of data, the entire database would be copied in the previous backup types even if only one record has changed. For a delta backup, only the actual record that changed would be stored. The disadvantage of this method is that restoration is a complex process because it requires more than just loading a file (or several files). It requires that application software be run to update the records in the files that have been changed.
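A record-level delta can be illustrated with a short sketch. Here the "database" is assumed to be a plain dictionary keyed by a hypothetical record ID; real delta backup software may track changes at a different granularity and in a different format.

```python
def make_delta(previous: dict, current: dict) -> dict:
    """Store only the records that were added, changed, or deleted."""
    changed = {
        key: value
        for key, value in current.items()
        if key not in previous or previous[key] != value
    }
    deleted = [key for key in previous if key not in current]
    return {"changed": changed, "deleted": deleted}


def apply_delta(base: dict, delta: dict) -> dict:
    """Restoration: replay the delta on top of an earlier copy."""
    restored = dict(base)
    restored.update(delta["changed"])
    for key in delta["deleted"]:
        restored.pop(key, None)
    return restored


full_backup = {1: "alice", 2: "bob", 3: "carol"}
database_now = {1: "alice", 2: "bobby", 3: "carol"}

delta = make_delta(full_backup, database_now)  # only record 2 is stored
restored = apply_delta(full_backup, delta)
```

The delta holds a single record even though the database has three, and restoration has to run code (`apply_delta`) and cannot simply copy a file back. That is the extra complexity the text describes.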

There are some newer backup methods that are similar to delta backups in that they minimize what is backed up. There are real-time or near-real-time backup strategies, such as journaling, transactional backups, and electronic vaulting, that can provide protection against loss in real-time environments. Implementing these methods into an overall backup strategy can increase options and flexibility during times of recovery.

You need to make sure you understand the different types of backups and their advantages and disadvantages for the exam.

Snapshots

Snapshots refer to copies of virtual machines. One of the advantages of a virtual machine over a physical machine is the ease with which the virtual machine can be backed up and restored. A snapshot is a copy of a virtual machine at a specific point in time. This is done by copying the files that store the VM. The ability to revert to an earlier snapshot is as easy as pushing a button and waiting for the machine to be restored via a change of the files.

Each type of backup has advantages and disadvantages. Which type is best for your organization depends on the amount of data you routinely process and store, how frequently the data changes, how often you expect to have to restore from a backup, and a number of other factors. The type you select will shape your overall backup strategy and processes.

Determining How Long to Maintain Backups

Determining the length of time that you retain your backups should not be based on the frequency of your backups. The more often you conduct backup operations, the more data you will have. You might be tempted to trim the number of backups retained to keep storage costs down, but you need to evaluate how long you need to retain backups based on your operational environment and then keep the appropriate number of backups.

Backup Frequency and Retention

The type of backup strategy an organization employs is often affected by how frequently the organization conducts the backup activity. The usefulness of a backup is directly related to how many changes have occurred since the backup was created, and this is obviously affected by how often backups are created. The longer it has been since the backup was created, the more changes that likely will have occurred. There is no easy answer, however, to how frequently an organization should perform backups. Every organization should consider how long it can survive without current data from which to operate. It can then determine how long it will take to restore from backups, using various methods, and decide how frequently backups need to occur. This sounds simple, but it is a serious, complex decision to make.

Related to the frequency question is the issue of how long backups should be maintained. Is it sufficient to simply maintain a single backup from which to restore data? Security professionals will tell you no; multiple backups should be maintained for a variety of reasons. If the reason for restoring from the backup is the discovery of an intruder in the system, it is important to restore the system to its pre-intrusion state. If the intruder has been in the system for several months before being discovered and backups are taken weekly, it will not be possible to restore to a pre-intrusion state if only one backup is maintained. This would mean that all data and system files would be suspect and may not be reliable. If multiple backups were maintained, at various intervals, then it is easier to return to a point before the intrusion (or before the security or operational event that is necessitating the restoration) occurred.

There are several strategies or approaches to backup retention. One common and easy-to-remember strategy is the “rule of three,” in which the three most recent backups are kept. When a new backup is created, the oldest backup is overwritten. Another strategy is to keep the most recent copy of backups for various time intervals. For example, you might keep the latest daily, weekly, monthly, quarterly, and yearly backups. Note that in certain environments, regulatory issues may prescribe a specific frequency and retention period, so it is important to know your organization’s requirements when determining how often you will create a backup and how long you will keep it.
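Both retention strategies can be sketched as simple selection rules over a list of backup dates. The interpretation of "latest for each interval" used here (keep the most recent backup within each calendar month or year) is an assumption, and the helper names and dates are hypothetical.

```python
from datetime import date


def rule_of_three(backup_dates, keep=3):
    """Keep only the most recent backups; older ones are overwritten."""
    return sorted(backup_dates)[-keep:]


def latest_per_period(backup_dates, period_key):
    """Keep the most recent backup within each period (month, year, ...)."""
    latest = {}
    for d in sorted(backup_dates):
        latest[period_key(d)] = d  # later dates replace earlier ones
    return sorted(latest.values())


def by_month(d):
    return (d.year, d.month)


def by_year(d):
    return d.year


backups = [
    date(2023, 12, 18),
    date(2024, 1, 1),
    date(2024, 1, 8),
    date(2024, 1, 15),
    date(2024, 2, 5),
]

kept_rule_of_three = rule_of_three(backups)
kept_monthly = latest_per_period(backups, by_month)
kept_yearly = latest_per_period(backups, by_year)
```

The rule of three reaches back only as far as three backup cycles, while the per-interval approach keeps older restore points available, which matters in the long-dwelling intruder scenario described earlier.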

If you are not in an environment for which regulatory issues dictate the frequency and retention for backups, your goal will be to optimize the frequency. In determining the optimal backup frequency, two major costs need to be considered: the cost of the backup strategy you choose and the cost of recovery if you do not implement this backup strategy (that is, if no backups were created). You must also factor into this equation the probability that the backup will be needed on any given day. The two figures to consider then are these:

Alternative 1: (probability the backup is needed) × (cost of restoring with no backup)

Alternative 2: (probability the backup isn’t needed) × (cost of the backup strategy)

The first of these two figures, alternative 1, can be considered the probable loss you can expect if your organization has no backup. The second figure, alternative 2, can be considered the amount you are willing to spend to ensure that you can restore, should a problem occur (think of this as backup insurance—the cost of an insurance policy that may never be used but that you are willing to pay for, just in case). For example, if the probability of a backup being needed is 10 percent and the cost of restoring with no backup is $100,000, then the first equation would yield a figure of $10,000. This can be compared with the alternative, which would be a 90 percent chance the backup is not needed multiplied by the cost of implementing your backup strategy (of taking and maintaining the backups), which is, say, $10,000 annually. The second equation yields a figure of $9,000. In this example, the cost of maintaining the backup is less than the cost of not having backups, so the former would be the better choice. While conceptually this is an easy trade-off to understand, in reality it is often difficult to accurately determine the probability of a backup being needed.
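The arithmetic in this example can be reproduced directly. The sketch below implements the two figures exactly as defined above and uses the same numbers; the function names are hypothetical.

```python
def expected_loss_without_backup(p_needed: float, cost_no_backup: float) -> float:
    """Alternative 1: (probability the backup is needed) x (cost of restoring with no backup)."""
    return p_needed * cost_no_backup


def weighted_backup_cost(p_needed: float, strategy_cost: float) -> float:
    """Alternative 2: (probability the backup isn't needed) x (cost of the backup strategy)."""
    return (1 - p_needed) * strategy_cost


# Figures from the example in the text.
alt1 = expected_loss_without_backup(0.10, 100_000)  # about $10,000
alt2 = weighted_backup_cost(0.10, 10_000)           # about $9,000

backups_worthwhile = alt2 < alt1
```

Trying other probabilities shows how sensitive the comparison is to that one estimate, which is the hard part to determine in practice.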

Fortunately, the figures for the potential loss if there is no backup are generally so much greater than the cost of maintaining a backup that a mistake in judging the probability will not matter—it just makes too much sense to maintain backups. This example also uses a straight comparison based solely on the cost of the process of restoring with and without a backup strategy. What needs to be included in the cost of both of these is the loss that occurs while the asset is not available as it is being restored—in essence, a measurement of the value of the asset itself.

To optimize your backup strategy, you need to determine the correct balance between these two figures. Obviously, you do not want to spend more in your backup strategy than you face losing should you not have a backup plan at all. When working with these two calculations, you have to remember that this is a cost-avoidance exercise. The organization is not going to increase revenues with its backup strategy. The goal is to minimize the potential loss due to some catastrophic event by creating a backup strategy that will address your organization’s needs.

When you’re calculating the cost of the backup strategy, consider the following:

![]() The cost of the backup media required for a single backup

![]() The storage costs for the backup media based on the retention policy

![]() The labor costs associated with performing a single backup

![]() The frequency with which backups are created

All of these considerations can be used to arrive at an annual cost for implementing your chosen backup strategy, and this figure can then be used as previously described.
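One way to combine the four considerations into an annual figure is sketched below. The formula is an assumption made for illustration (per-backup media and labor costs scale with frequency, while storage is treated as a yearly amount), and every dollar figure is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BackupCostInputs:
    media_cost_per_backup: float  # media required for a single backup
    labor_cost_per_backup: float  # labor to perform a single backup
    backups_per_year: int         # frequency with which backups are created
    annual_storage_cost: float    # storing retained media under the retention policy


def annual_backup_cost(c: BackupCostInputs) -> float:
    """Assumed model: per-backup costs scale with frequency; storage is a yearly figure."""
    per_backup = c.media_cost_per_backup + c.labor_cost_per_backup
    return per_backup * c.backups_per_year + c.annual_storage_cost


# Hypothetical figures for a weekly backup schedule.
weekly = BackupCostInputs(
    media_cost_per_backup=40.0,
    labor_cost_per_backup=60.0,
    backups_per_year=52,
    annual_storage_cost=1_200.0,
)
print(f"Estimated annual backup cost: ${annual_backup_cost(weekly):,.2f}")
```

An organization with a different cost structure (for example, reusable media or a flat-rate service contract) would need to adjust the model accordingly.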

Onsite Backup Storage

One of the most frequent errors committed with backups is to store all backups onsite. While this greatly simplifies the process, it means that all data is stored in the same facility. Should a natural disaster occur (such as a fire or hurricane), you could lose not only your primary data storage devices but your backups as well. You need to use an offsite location to store at least some of your backups.

Long-Term Backup Storage

An easy factor to overlook when upgrading systems is whether long-term backups will still be usable. You need to ensure that the type of media utilized for your long-term storage is compatible with the hardware that you are upgrading to. Otherwise, you may find yourself in a situation in which you need to restore data, and you have the data, but you don’t have any way to restore it.

Storage of Backups

An important element to factor into the cost of the backup strategy is the expense of storing the backups. This is affected by many variables, including the number and size of the backups and the need for quick restoration. It can be further complicated by keeping hot, warm, and cold sites synchronized. Backup storage is more than just figuring out where to keep tapes; it is part of business continuity, disaster recovery, and overall risk strategies.

Issues with Long-Term Storage of Backups

Depending on the media used for an organization’s backups, degradation of the media is a distinct possibility and needs to be considered. Magnetic media degrades over time (measured in years). In addition, tapes can be used a limited number of times before the surface begins to flake off. Magnetic media should thus be rotated and tested to ensure that it is still usable.

You should also consider advances in technology. The media you used to store your data two years ago may now be considered obsolete (5.25-inch floppy disks, for example). Software applications also evolve, and the media may be present but may not be compatible with current versions of the software. This may mean that you need to maintain backup copies of both hardware and software in order to recover from older backup media.

Another issue is security related. If the file you stored was encrypted for security purposes, does anybody in the company remember the password to decrypt the file to restore the data? More than one employee in the company should know the key to decrypt the files, and this information should be passed along to another person when a critical employee with that information leaves, is terminated, or dies.

Geographic Considerations

An important element to factor into the cost of the backup strategy is the location of storing the backups. A simple strategy might be to store all your backups together for quick and easy recovery actions. This is not, however, a good idea. Suppose the catastrophic event that necessitated the restoration of backed-up data was a fire that destroyed the computer system the data was processed on. In this case, any backups that were stored in the same facility might also be lost in the same fire.

The solution is to keep copies of backups in separate locations. The most recent copy can be stored locally because it is the most likely to be needed, while other copies can be kept at other locations. Depending on the level of security your organization desires, the storage facility itself could be reinforced against possible threats in your area (such as tornados or floods). A more recent advance is online backup services. A number of third-party companies offer high-speed connections for storing data in a separate facility. Transmitting the backup data via network connections alleviates some other issues with the physical movement of more traditional storage media, such as the care during transportation (tapes do not fare well in direct sunlight, for example) or the time that it takes to transport the tapes.

Location Selection

Picking a storage location involves several key considerations. First is physical safety. Backups must be kept in proper environmental conditions and protected from outside harm, which can limit the suitable locations. Heating, ventilating, and air conditioning (HVAC) can be a consideration, as well as issues such as potential flooding and theft. The ability to move the backups in and out of storage is also a concern. Again, the cloud and modern-day networks come to the rescue; with today’s high-speed networks, reasonably priced storage, encryption technologies, and the ability to store backups in a redundant array across multiple sites, the cloud is an ideal solution.

Offsite Backups

Offsite backups are just that, backups that are stored in a separate location from the system being backed up. This can be important when an issue affects a larger area than a single room. A building fire, a hurricane, or a tornado could occur and typically affect a larger area than a single room or building. Having backups offsite alleviates the risk of losing them. In today’s high-speed network world with cloud services, storing backups in the cloud is an option that can resolve many of the risks associated with backup availability.

Distance

The distance associated with an offsite backup is a logistical problem. If you need to restore a system and the backup is stored hours away by car, this can increase the recovery time. In many systems, the need to physically move backup tapes has been alleviated by networks that move the data at the speed of the network.

Legal Implications

When planning an offsite backup, you must consider the legal implications of where the data is being stored. Different jurisdictions have different laws, rules, and regulations concerning core tools such as encryption. Understanding how these affect data backup storage plans is critical to prevent downstream problems.

Data Sovereignty

Data sovereignty is a relatively new phenomenon, but in the past couple of years several countries have enacted laws stating that certain types of data must be stored within their boundaries. In today’s multinational economy, with the Internet not knowing borders, this has become a problem. Several high-tech firms have changed their business strategies and offerings in order to comply with data sovereignty rules and regulations. For example, LinkedIn, a business social network site, recently was told by Russian authorities that all data on Russian citizens needed to be kept on servers in Russia. LinkedIn made the business decision that the cost was not worth the benefit and has since abandoned the Russian market.

Business Continuity

Keeping an organization running when an event occurs that disrupts operations is not accomplished spontaneously but requires advance planning and periodically exercising those plans to ensure they will work. A term that is often used when discussing the issue of continued organizational operations is business continuity (BC).

The acronyms DR and BC are often used synonymously and sometimes together as in BC/DR, but there are subtle differences between them. Study this section carefully to ensure that you can discriminate between the two.

There are many risk management best practices associated with business continuity. The topics of planning, business impact analysis, identification of critical systems and components, single points of failure, and more are detailed in the following sections.

Business Continuity Plans

As in most operational issues, planning is a foundational element to success. This is true in business continuity, and the business continuity plan (BCP) represents the planning and advance policy decisions to ensure the business continuity objectives are achieved during a time of obvious turmoil. You might wonder what the difference is between a disaster recovery plan and a business continuity plan. After all, isn’t the purpose of disaster recovery the continued operation of the organization or business during a period of disruption? Many times, these two terms are used synonymously, and for many organizations there may be no major difference between them. There are, however, real differences between a BCP and a DRP, one of which is the focus.

The focus of a BCP is the continued operation of the essential elements of the business or organization. Business continuity is not about operations as normal but rather about trimmed-down, essential operations only. In a BCP, you will see a more significant emphasis placed on the limited number of critical systems the organization needs to operate. The BCP will describe the functions that are most critical, based on a previously conducted business impact analysis, and will describe the order in which functions should be returned to operation. The BCP describes what is needed in order for the business to continue to operate in the short term, even if all requirements are not met and risk profiles are changed.

The focus of a DRP is on recovering and rebuilding the organization after a disaster has occurred. The recovery’s goal is the complete operation of all elements of the business. The DRP is part of the larger picture, while the BCP is a tactical necessity until operations can be restored. A major focus of the DRP is the protection of human life, meaning evacuation plans and system shutdown procedures should be addressed. In fact, the safety of employees should be a theme throughout a DRP.

Business Impact Analysis

Business impact analysis (BIA) is the term used to describe the document that details the specific impact of elements on a business operation (this may also be referred to as a business impact assessment). A BIA outlines what the loss of any of your critical functions will mean to the organization. The BIA is a foundational document used to establish a wide range of priorities, including the system backups and restoration that are needed to maintain continuity of operation. While each person may consider their individual tasks to be important, the BIA is a business-level analysis of the criticality of all elements with respect to the business as a whole. The BIA will take into account the increased risk from minimal operations and is designed to determine and justify what is essentially critical for a business to survive versus what someone may state or wish.

Conducting a BIA is a critical part of developing your BCP. This assessment will allow you to focus on the most critical elements of your organization. These critical elements are the ones that you want to ensure are recovered first, and this priority should be reflected in your BCP and subset DRP.

Identification of Critical Systems and Components

A foundational element of a security plan is an understanding of the criticality of systems, the data, and the components. Identifying the critical systems and components is one of the first steps an organization needs to undertake in designing the set of security controls. As the systems evolve and change, the continued identification of the critical systems needs to occur, keeping the information up-to-date and current.

Removing Single Points of Failure

A key security methodology is to attempt to avoid a single point of failure in critical functions within an organization. When developing your BCP, you should be on the lookout for areas in which a critical function relies on a single item (such as switches, routers, firewalls, power supplies, software, or data) that if lost would stop this critical function. When these points are identified, think about how each of these possible single points of failure can be eliminated (or mitigated).

In addition to the internal resources you need to consider when evaluating your business functions, there are many resources external to your organization that can impact the operation of your business. You must look beyond hardware, software, and data to consider how the loss of various critical infrastructures can also impact business operations.

Risk Assessment

The principles of risk assessment can be applied to business continuity planning. Determining the sources and magnitudes of risks is necessary in all business operations, including business continuity planning.

Succession Planning

Business continuity planning is more than just ensuring that hardware is available and operational. The people who operate and maintain the system are also important, and in the event of a disruptive event, the availability of key personnel is as important as hardware for successful business continuity operations. The development of a succession plan that identifies key personnel and develops qualified personnel for key functions is a critical part of a successful BCP.

Business continuity is not only about hardware; plans need to include people as well. Succession planning is a proactive plan for personnel substitutions in the event that the primary person is not available to fulfill their assigned duties.

Continuity of Operations

The continuity of operations is imperative because it has been shown that businesses that cannot quickly recover from a disruption have a real chance of never recovering, and they may go out of business. The overall goal of business continuity planning is to determine which subset of normal operations needs to be continued during periods of disruption.

Exercises/Tabletop

Once a plan is in place, a tabletop exercise should be performed to walk through all of the steps and ensure that all elements are covered and that the plan does not omit a key dataset or person. This walk-through is a critical final step because it validates that the planning covered the needed elements. Because this is being done for operations determined to be critical to the business, it hardly seems like overkill.

After-Action Reports

Just as lessons learned are key elements of incident response processes, the after-action reports associated with invoking continuity of operations report on two functions. The first is the level of operations upon transfer: Is all of the desired capability up and running? The second addresses how the actual change from normal operations to those supported by continuity systems occurred.

Failover

Failover is the process of moving from a normal operational capability to the continuity of operations version of the business. The speed and flexibility depend on the business type, ranging from seamless failover for many financial sites to a manual process in which system A is turned off and someone turns system B on, with some period of no service in between. Simple transparent failovers can be achieved through architecture and technology choices, but they must be designed into the system.

Separate from failover, which occurs whenever the problem occurs, is the switch back to the original system. Once a system is fixed, resolving whatever caused the outage, there is a need to move back to the original production system. This “failback” mechanism, by definition, is harder to perform as primary keys and indices are not easily transferred back. The return to operations is a complicated process, but the good news is that it can be performed at a time of the organization’s choosing, unlike the problem that initiated the initial shift of operations to continuity procedures.

Alternative Sites

An issue related to the location of backup storage is where the restoration services will be conducted. Determining when or if an alternative site is needed should be included in recovery and continuity plans. If the organization has suffered physical damage to a facility, having offsite storage of data is only part of the solution. This data will need to be processed somewhere, which means that computing facilities similar to those used in normal operations are required. There are a number of ways to approach this problem, including hot sites, warm sites, cold sites, and mobile backup sites.

Research Alternative Processing Sites

There is an industry built upon providing alternative processing sites in case of a disaster of some sort. Using the Internet or other resources, determine what resources are available in your area for hot, warm, and cold sites. Do you live in an area in which a lot of these services are offered? Do other areas of the country have more alternative processing sites available? What makes where you live a better or worse place for alternative sites?

Hot Site

A hot site is a fully configured environment, similar to the normal operating environment, that can be operational immediately or within a few hours, depending on its configuration and the needs of the organization.

Warm Site

A warm site is partially configured, usually having the peripherals and software but perhaps not the more expensive main processing computer. It is designed to be operational within a few days.

Cold Site

A cold site will have the basic environmental controls necessary to operate but few of the computing components necessary for processing. Getting a cold site operational may take weeks.

Table 19.4 compares hot, warm, and cold sites.

Table 19.4 Comparison of Hot, Warm, and Cold Sites

Alternate sites are highly tested on the CompTIA Security+ exam. It is also important to know whether the data is available or not at each location. For example, a hot site has duplicate data or a near-ready backup of the original site. A cold site has no current or backup copies of the original site data. A warm site has backups, but they are typically several days or weeks old.

Shared alternate sites may also be considered. These sites can be designed to handle the needs of different organizations in the event of an emergency. The hope is that the disaster will affect only one organization at a time. The benefit of this method is that the cost of the site can be shared among organizations. Two similar organizations located close to each other should not share the same alternate site because there is a greater chance that they would both need it at the same time.

All of these options can come with a considerable price tag, which makes another option, mutual aid agreements, a possible alternative. With a mutual aid agreement, similar organizations agree to assume the processing for the other party in the event a disaster occurs. This is sometimes referred to as a reciprocal site. The obvious assumptions here are that both organizations will not be hit by the same disaster and both have similar processing environments. If these two assumptions are correct, then a mutual aid agreement should be considered. Such an arrangement may not be legally enforceable, even if it is in writing, and organizations must consider this when developing their disaster plans. In addition, if the organization that the mutual aid agreement is made with is hit by the same disaster, then both organizations will be in trouble. Additional contingencies need to be planned for, even if a mutual aid agreement is made with another organization. There are also the obvious security concerns that must be considered when having another organization assume your organization’s processing.

Order of Restoration

When restoring more than a single machine, there are multiple considerations in the order of restoration. Part of the planning is to decide on the order of restoration, in other words, which systems go first, second, and ultimately last. There are a couple of distinct factors to consider. First are dependencies. Any system that is dependent upon another for proper operation might as well wait until the prerequisite services are up and running. The second factor is criticality to the enterprise. The most critical service should be brought back up first.
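The two factors can be combined mechanically: restore only systems whose prerequisites are already running, and among those, pick the most critical first. The following is a minimal sketch of that idea, assuming each system's dependencies and a numeric criticality rank (1 is most critical) are already known from the BIA; the system names and ranks are hypothetical.

```python
import heapq


def restoration_order(depends_on: dict[str, set[str]],
                      criticality: dict[str, int]) -> list[str]:
    """Return a restore order that respects dependencies, choosing the most
    critical system (lowest rank) among those whose prerequisites are up."""
    remaining = {name: set(deps) for name, deps in depends_on.items()}
    ready = [(criticality[n], n) for n, deps in remaining.items() if not deps]
    heapq.heapify(ready)
    order = []
    while ready:
        _, name = heapq.heappop(ready)
        order.append(name)
        del remaining[name]
        # Systems that were waiting only on `name` become ready to restore.
        for other, deps in remaining.items():
            if name in deps:
                deps.remove(name)
                if not deps:
                    heapq.heappush(ready, (criticality[other], other))
    if remaining:
        raise ValueError(f"circular dependency among: {sorted(remaining)}")
    return order


# Hypothetical systems: every system must appear as a key in both mappings.
systems = {
    "dns": set(),
    "database": {"dns"},
    "auth": {"dns", "database"},
    "web_store": {"database", "auth"},
    "intranet_wiki": {"auth"},
}
ranks = {"dns": 2, "database": 1, "auth": 2, "web_store": 1, "intranet_wiki": 5}

order = restoration_order(systems, ranks)
print(" -> ".join(order))
```

Note that a highly critical system such as the hypothetical `web_store` still waits for its prerequisites, which is why dependencies are considered before criticality.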

Utilities

The interruption of power is a common issue during a disaster. Computers and networks obviously require power to operate, so emergency power must be available in the event of any disruption of operations. For short-term interruptions, such as what might occur as the result of an electrical storm, uninterruptible power supplies (UPSs) may suffice. These devices contain a battery that provides steady power for short periods of time—enough to keep a system running should power be lost for only a few minutes and enough time to allow administrators to gracefully halt the system or network. For continued operations that extend beyond a few minutes, another source of power will be required. Generally this is provided by a backup emergency generator.

While backup generators are frequently used to provide power during an emergency, they are not a simple, maintenance-free solution. Generators need to be tested on a regular basis, and they can easily become strained if they are required to power too much equipment. If your organization is going to rely on an emergency generator for backup power, you must ensure that the system has reserve capacity beyond the anticipated load for the unanticipated loads that will undoubtedly be placed on it.

Generators also take time to start up, so power to your organization will most likely be lost, even if only briefly, until the generators kick in. This means you should also use a UPS to allow for a smooth transition to backup power. Generators are also expensive and require fuel. Be sure to locate the generator high if flooding is possible, and don’t forget the need to deliver fuel to it or you may find yourself hauling cans of fuel up a number of stairs.

When determining the need for backup power, don’t forget to factor in environmental conditions. Running computer systems in a room with no air conditioning in the middle of the summer can result in an extremely uncomfortable environment for all to work in. Mobile backup sites, generally using trailers, often rely on generators for their power but also factor in the requirement for environmental controls.

Power is not the only essential utility for operations. Depending on the type of disaster that has occurred, telephone and Internet communication may also be lost, and wireless services may not be available. Planning for redundant means of communication (such as using both land lines and wireless) can help with most outages, but for large disasters, your backup plans should include the option to continue operations from a completely different location while waiting for communications in your area to be restored. Telecommunication carriers have their own emergency equipment and are fairly efficient at restoring communications, but it may take a few days.

Secure Recovery

Several companies offer recovery services, including power, communications, and technical support that your organization may need if its operations are disrupted. These companies advertise secure recovery sites or offices from which your organization can again begin to operate in a secure environment. Secure recovery is also advertised by other organizations that provide services that can remotely (over the Internet, for example) provide restoration services for critical files and data.

In both cases—the actual physical suites and the remote service—security is an important element. During a disaster, your data does not become any less important, and you will want to make sure that you maintain the security (in terms of confidentiality and integrity, for example) of your data. As in other aspects of security, the decision to employ these services should be made based on a calculation of the benefits weighed against the potential loss if alternative means are used.

The Sidekick Failure of 2009

In October 2009, many T-Mobile Sidekick users discovered that their contacts, calendars, to-do lists, and photos were lost when cloud-based servers lost their data. Not all users were affected by the server failure, but for those that were, the loss was complete. T-Mobile quickly pointed the finger at Microsoft, which in February 2008 had acquired Danger, the small startup company that built the cloud-based system for T-Mobile. To end users, this transaction was completely transparent. In the end, a lot of users lost their data and were offered a $100 credit by T-Mobile against their bill. Regardless of where the blame lands, the affected end user must still face some simple questions: Did they consider the importance of backup? If the information on their phone was critical, did they perform a local backup? Or did they assume that the cloud and large corporations they contracted with did it for them?

Cloud Computing

One of the newer innovations coming to computing via the Internet is the concept of cloud computing. Instead of owning and operating a dedicated set of servers for common business functions such as database services, file storage, e-mail services, and so forth, an organization can contract with third parties to provide these services over the Internet from their server farms. This is commonly referred to as Infrastructure as a Service (IaaS). The concept is that operations and maintenance are activities that have become a commodity, and the Internet provides a reliable mechanism to access this more economical form of operational computing.

Pushing computing into the cloud may make good business sense from a cost perspective, but doing so does not change the fact that your organization is still responsible for ensuring that all the appropriate security measures are properly in place. How are backups being performed? What plan is in place for disaster recovery? How frequently are systems patched? What is the service level agreement (SLA) associated with the systems? It is easy to ignore the details when outsourcing these critical yet costly elements, but when something bad occurs, you must have confidence that the appropriate level of protections has been applied. These are the serious questions and difficult issues to resolve when moving computing into the cloud—location may change, but responsibility and technical issues are still there and form the risk of the solution.

Redundancy

Redundancy is the use of multiple, independent elements to perform a critical function so that if one fails, there is another that can take over the work. When developing plans for ensuring that an organization has what it needs to keep operating, even if hardware or software fails or if security is breached, you should consider other measures involving redundancy and spare parts. Some common applications of redundancy include the use of redundant servers, redundant connections, and redundant ISPs. The need for redundant servers and connections may be fairly obvious, but redundant ISPs may not be so, at least initially. Many ISPs already have multiple accesses to the Internet on their own, but by having additional ISP connections, an organization can reduce the chance that an interruption of one ISP will negatively impact the organization. Ensuring uninterrupted access to the Internet by employees or access to the organization’s e-commerce site for customers is becoming increasingly important.

Redundancy is an important factor in both security and reliability. Make sure you understand the many different areas that can benefit from redundant components.

Fault Tolerance

Fault tolerance basically has the same goal as high availability—the uninterrupted access to data and services. It can be accomplished by the mirroring of data and hardware systems. Should a “fault” occur, causing disruption in a device such as a disk controller, the mirrored system provides the requested data with no apparent interruption in service to the user. Certain systems, such as servers, are more critical to business operations and should therefore be the object of fault-tolerant measures.

Fault tolerance and high availability are similar in their goals, yet they are separate in application. High availability refers to maintaining both data and services in an operational state even when a disrupting event occurs. Fault tolerance is a design objective to achieve high availability should a fault occur.

High Availability

One of the objectives of security is the availability of data and processing power when an authorized user desires it. High availability refers to the ability to maintain availability of data and operational processing (services) despite a disrupting event. Generally this requires redundant systems, both in terms of power and processing, so that should one system fail, the other can take over operations without any break in service. High availability is more than data redundancy; it requires that both data and services be available.

Certain systems, such as servers, are more critical to business operations and should, therefore, be the object of fault-tolerance measures. A common technique used in fault tolerance is load balancing. Another closely related technique is clustering. Both techniques are discussed in the following sections.

Obviously, providing redundant systems and equipment comes with a price, and the need to provide this level of continuous, uninterrupted operation needs to be carefully evaluated.

Clustering

Clustering links a group of systems to have them work together, functioning as a single system. In many respects, a cluster of computers working together can be considered a single larger computer, with the advantage of costing less than a single comparably powerful computer. A cluster also has the fault-tolerant advantage of not being reliant on any single computer system for overall system performance.

Uptime Metrics

Because uptime is critical, it is common to measure uptime (or, conversely, downtime) and use this measure to demonstrate reliability. A common measure for this has become the measure of “9s,” as in 99 percent uptime, 99.99 percent uptime, and so on. When someone refers to “five nines” as a measure, this generally means 99.999 percent uptime. Expressing this in other terms, five nines of uptime correlates to less than five-and-a-half minutes of downtime per year. Six nines is about 31 seconds of downtime per year. One important note is that uptime is not the same as availability because systems can be up but not available for reasons of network outage, so be sure you understand what is being counted.
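The downtime figures quoted for each level of “nines” follow directly from the uptime percentage. A minimal sketch of the arithmetic, assuming a 365-day year (the function name is illustrative):

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds; leap years ignored


def downtime_seconds_per_year(uptime_percent: float) -> float:
    """Allowed downtime per year for a given uptime percentage."""
    return (1.0 - uptime_percent / 100.0) * SECONDS_PER_YEAR


levels = [
    ("two nines", 99.0),
    ("three nines", 99.9),
    ("four nines", 99.99),
    ("five nines", 99.999),
    ("six nines", 99.9999),
]
for label, pct in levels:
    secs = downtime_seconds_per_year(pct)
    print(f"{label:>11} ({pct}%): {secs / 60:10.2f} minutes per year")
```

Each additional nine cuts the allowed downtime by a factor of ten, which is why the cost of achieving it tends to rise sharply.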

Load Balancing

Load balancing is designed to distribute the processing load over two or more systems. It is used to help improve resource utilization and throughput but also has the added advantage of increasing the fault tolerance of the overall system because a critical process may be split across several systems. Should any one system fail, the others can pick up the processing it was handling. While there may be an impact to overall throughput, the operation does not go down entirely. Load balancing is often utilized for systems that handle web sites and high-bandwidth file transfers.
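The fault-tolerance benefit can be illustrated with a toy round-robin balancer that skips servers marked as failed. This is a simplified sketch for illustration only, not how any particular load-balancing product works; the class, method, and server names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in rotation, skipping any marked as down."""

    def __init__(self, servers):
        self.healthy = {name: True for name in servers}
        self._ring = cycle(list(servers))

    def mark_down(self, name):
        self.healthy[name] = False

    def mark_up(self, name):
        self.healthy[name] = True

    def next_server(self):
        # Try each server at most once per request before giving up.
        for _ in range(len(self.healthy)):
            candidate = next(self._ring)
            if self.healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy servers available")


lb = RoundRobinBalancer(["web1", "web2", "web3"])
before = [lb.next_server() for _ in range(3)]

lb.mark_down("web2")  # simulate a failure of one system
after = [lb.next_server() for _ in range(4)]

print("before failure:", before)
print("after failure: ", after)
```

After the simulated failure, requests continue to be served by the remaining two systems; throughput per server rises, but the service does not go down entirely.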

Single Point of Failure

Related to the topic of high availability is the concept of a single point of failure. A single point of failure is a critical operation in the organization upon which many other operations rely and which itself relies on a single item that, if lost, would halt this critical operation. A single point of failure can be a special piece of hardware, a process, a specific piece of data, or even an essential utility. Single points of failure need to be identified if high availability is required because they are potentially the “weak links” in the chain that can cause disruption of the organization’s operations. Generally, the solution to a single point of failure is to modify the critical operation so that it does not rely on this single element or to build redundant components into the critical operation to take over the process should one of these points fail.

Understand the various ways that a single point of failure can be addressed, including the various types of redundancy and high availability clusters.

In addition to the internal resources you need to consider when evaluating your business functions, there are many external resources that can impact the operation of your business. You must look beyond hardware, software, and data to consider how the loss of various critical infrastructures can also impact business operations. The types of infrastructure you should consider in your BCP are the subject of the next section.

Load Balancing, Clusters, Farms

A cluster is a group of servers deployed to achieve a common objective. Clustered servers are aware of one another and have a mechanism to exchange their states, so each server’s state is replicated to the other clustered servers. Load balancing is a mechanism where traffic is directed to identical servers based on availability. In load balancing, the servers are not aware of the state of other servers. For purposes of load, it is not uncommon to have a load balancer distribute requests to clustered servers.

Database servers are typically clustered, as the integrity of the data structure requires all copies to be identical. Web servers and other content distribution mechanisms can use load balancing alone whenever maintaining state changes is not necessary across the environment. A server farm is a group of related servers in one location serving an enterprise. It can be either clustered, load balanced, or both.

Failure and Recovery Timing

Several important concepts are involved in the issue of fault tolerance and system recovery. The first is mean time to failure (or mean time between failures). This term refers to the predicted average time that will elapse before failure (or between failures) of a system (generally referring to hardware components). Knowing what this time is for hardware components of various critical systems can help an organization plan for maintenance and equipment replacement. Mean time to failure and mean time between failures are separate items, with minor differences. MTTF is the time to fail for a device that cannot or will not be repaired. MTBF is the time between failures, indicating that the item can in fact be repaired and may suffer multiple failures in its lifetime, each solved by repairs.

MTTR

Mean time to repair (MTTR) is a common measure of how long it takes to repair a given failure. This is the average time and may or may not include the time needed to obtain parts.

MTBF

Mean time between failures (MTBF) is a common measure of reliability of a system and is an expression of the average time between system failures. The time between failures is measured from the time a system returns to service until the next failure. The MTBF is the arithmetic mean of these times over a set of system failures.

MTBF = Σ (start of downtime – start of uptime) / number of failures
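As a worked example of the formula, the following sketch computes MTBF from a list of uptime periods. The timestamps are invented sample data, and the function name is illustrative.

```python
from datetime import datetime, timedelta


def mean_time_between_failures(uptime_periods):
    """Average uptime per failure.

    `uptime_periods` is a list of (start_of_uptime, start_of_downtime)
    datetime pairs, one pair for each failure observed.
    """
    if not uptime_periods:
        raise ValueError("at least one failure is required")
    total_uptime = sum(
        (down - up for up, down in uptime_periods), timedelta()
    )
    return total_uptime / len(uptime_periods)


# Hypothetical log: the system ran 10 days, was repaired in 2 hours,
# and then ran 20 days before failing again.
periods = [
    (datetime(2024, 1, 1, 0, 0), datetime(2024, 1, 11, 0, 0)),
    (datetime(2024, 1, 11, 2, 0), datetime(2024, 1, 31, 2, 0)),
]
print(mean_time_between_failures(periods))  # 15 days, 0:00:00
```

Note that the two hours of repair time between the periods are not counted; only the time the system was in service contributes to the MTBF.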

MTTF

Mean time to failure (MTTF) is a variation of MTBF, one that is commonly used instead of MTBF when the system is replaced in lieu of being repaired. Other than the semantic difference, the calculations are the same. When one uses MTTF, they are indicating that the system is not repairable and that it in fact will require replacement.

Measurement of Availability

Availability is a measure of the amount of time a system performs its intended function. Reliability is a measure of the frequency of system failures. Availability is related to, but different from, reliability and is typically expressed as the percentage of time the system is in its operational state. To calculate availability, both the MTTF and the MTTR are needed.

Availability = MTTF / (MTTF + MTTR)

Assuming a system has an MTTF of 6 months (taken here as 180 days, or 259,200 minutes) and the repair takes 30 minutes, the availability would be as follows:

Availability = 6 months / (6 months + 30 minutes) = 99.9884%
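The same calculation can be reproduced in a few lines. This sketch treats six months as 180 days, which is the assumption that yields the figure above; the function name is illustrative.

```python
def availability(mttf_hours: float, mttr_hours: float) -> float:
    """Availability = MTTF / (MTTF + MTTR), with both values in the same unit."""
    return mttf_hours / (mttf_hours + mttr_hours)


mttf = 180 * 24  # six months, assumed to be 180 days, expressed in hours
mttr = 0.5       # 30 minutes, expressed in hours

print(f"{availability(mttf, mttr):.4%}")  # 99.9884%
```

The formula shows that availability can be improved either by lengthening the time to failure or by shortening the repair time. Halving the MTTR to 15 minutes has nearly the same effect as doubling the MTTF to a year.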

A second important concept to understand is mean time to restore (or mean time to recovery). This term refers to the average time that it will take to restore a system to operational status (to recover from any failure). Knowing what this time is for critical systems and processes is important to developing effective, and realistic, recovery plans, including DRP, BCP, and backup plans.

The last two concepts are closely tied. As previously described, the recovery time objective is the goal an organization sets for the time within which it wants to have a critical service restored after a disruption in service occurs. It is based on the calculation of the maximum amount of time that can occur before unacceptable losses take place. Also covered was the recovery point objective, which is based on a determination of how much data loss an organization can withstand. Note that both of these are measured in terms of time, but in different contexts.

Taken together, these four concepts are important considerations for an organization developing its various contingency plans. Setting an RTO or RPO that is shorter than the MTTR can result in losses, because the objective cannot be met. Conversely, attempting to raise the mean time between failures, or lower the recovery time objectives, beyond what the organization requires wastes money that could be better spent elsewhere. The key is in understanding these figures and balancing them. MTTR and related metrics are covered in more detail in the next chapter.
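One simple way to apply this balancing act is to compare each system's recovery time objective against its measured mean time to restore. The sketch below flags systems whose objective cannot currently be met; the system names and figures are invented for illustration, and the function name is hypothetical.

```python
from datetime import timedelta


def find_rto_gaps(systems):
    """Return the names of systems whose MTTR exceeds their RTO.

    `systems` maps a system name to an (rto, mttr) pair of timedeltas.
    """
    return [name for name, (rto, mttr) in systems.items() if mttr > rto]


# Hypothetical figures for two systems.
systems = {
    "order database": (timedelta(hours=4), timedelta(hours=2)),
    "public web site": (timedelta(hours=1), timedelta(hours=3)),
}
print(find_rto_gaps(systems))  # ['public web site']
```

A system that appears in this list needs either a faster recovery process or a more realistic objective.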

Backout Planning

An issue related to backups is that of returning to an earlier release of a software application in the event that a new release causes either a partial or complete failure. Planning for such an event is referred to as backout planning. These plans should address both a partial and a full return to previous releases of software. Sadly, this sort of event is more frequent than most would suspect. The reason for this is the interdependence of various aspects of a system. It is not uncommon for one piece of software to take advantage of some feature of another. Should this feature change in a new release, another critical operation may be impacted.
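The core of a backout plan can be expressed as a small procedure: install the new release, verify it, and return to the previous release if verification fails. The following is a minimal sketch covering only a full backout; `install` and `health_check` are hypothetical callables standing in for whatever deployment and verification steps an organization actually uses.

```python
def deploy_with_backout(current_release, new_release, install, health_check):
    """Install `new_release`; if it fails its health check, back out.

    Returns the release that is left running.
    """
    install(new_release)
    if health_check(new_release):
        return new_release
    install(current_release)  # back out to the known-good release
    return current_release


# Example: the new release fails verification, so the old one is restored.
history = []
running = deploy_with_backout(
    "1.4", "1.5",
    install=history.append,
    health_check=lambda release: release != "1.5",
)
print(running, history)  # 1.4 ['1.5', '1.4']
```

The important design point is that the previous release must still be available and installable at the moment the new one fails, which is why backout planning is closely tied to backups.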

RAID

One popular approach to increasing reliability in disk storage is Redundant Array of Independent Disks (RAID) (previously known as Redundant Array of Inexpensive Disks). RAID takes data that is normally stored on a single disk and spreads it out among several others. If any single disk is lost, the data can be recovered from the other disks where the data also resides. With the price of disk storage decreasing, this approach has become increasingly popular to the point that many individual users even have RAID arrays for their home systems. RAID can also increase the speed of data retrieval because multiple drives can be busy retrieving requested data at the same time instead of relying on just one disk to do the work.

An interesting historical note is that RAID originally stood for Redundant Array of Inexpensive Disks, but the name was changed to the currently accepted Redundant Array of Independent Disks as a result of industry influence.

Several different RAID approaches can be considered.

RAID 0 (striped disks) simply spreads the data that would be kept on the one disk across several disks. This decreases the time it takes to retrieve data, because the data is read from multiple drives at the same time, but it does not improve reliability, because the loss of any single drive will result in the loss of all the data (since portions of files are spread out among the different disks). With RAID 0, the data is split across all the drives with no redundancy offered.

RAID 1 (mirrored disks) is the opposite of RAID 0. RAID 1 copies the data from one disk onto two or more disks. If any single disk is lost, the data is not lost since it is also copied onto the other disk(s). This method can be used to improve reliability and retrieval speed, but it is relatively expensive when compared to other RAID techniques.

RAID 2 (bit-level error-correcting code) is not typically used, as it stripes data across the drives at the bit level as opposed to the block level. It is designed to be able to recover the loss of any single disk through the use of error-correcting techniques.

RAID 3 (byte-striped with error check) spreads the data across multiple disks at the byte level with one disk dedicated to parity bits. This technique is not commonly implemented because input/output operations can’t be overlapped due to the need for all to access the same disk (the disk with the parity bits).

RAID 4 (dedicated parity drive) stripes data across several disks but in larger stripes than in RAID 3, and it uses a single drive for parity-based error checking. RAID 4 has the disadvantage that the single parity drive becomes a bottleneck, since every write must also update it.

RAID 5 (block-striped with error check) is a commonly used method that stripes the data at the block level and spreads the parity data across the drives. This provides both reliability and increased speed performance. This form requires a minimum of three drives.

RAID 0 through 5 are the original techniques, with RAID 5 being the most common method used, because it provides both reliability and speed improvements. Additional methods have been implemented, such as storing two independent sets of parity data across the disks (RAID 6) and a stripe of mirrors (RAID 10).
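The parity used by RAID 3, 4, and 5 is typically computed with the exclusive-or (XOR) operation, which is what allows a lost disk to be rebuilt from the survivors. The sketch below shows the idea for a single stripe of three data blocks. It is a simplified illustration: it ignores how RAID 5 rotates parity across the drives, and the block contents are made-up sample data.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, one byte position at a time."""
    return bytes(
        reduce(lambda a, b: a ^ b, column) for column in zip(*blocks)
    )


# One stripe: three data blocks, each stored on a different disk.
data_blocks = [b"DISK", b"RAID", b"DATA"]
parity = xor_blocks(data_blocks)  # stored on a fourth disk

# Simulate losing the disk that held the second block, then rebuild it
# by XORing the surviving data blocks with the parity block.
survivors = [data_blocks[0], data_blocks[2], parity]
recovered = xor_blocks(survivors)
print(recovered)  # b'RAID'
```

Because XOR parity can reconstruct only one missing block per stripe, losing a second disk before the first is rebuilt results in data loss, which is the motivation for the additional parity in RAID 6.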

Knowledge of the basic RAID structures by number designation is a testable element and should be memorized for the exam.

Spare Parts and Redundancy

RAID increases reliability through the use of redundancy. When developing plans for ensuring that an organization has what it needs to keep operating, even if hardware or software fails or if security is breached, you should consider other measures involving redundancy and spare parts.

Many organizations don’t see the need for maintaining a supply of spare parts. After all, with the price of storage dropping and the speed of processors increasing, why replace a broken part with older technology? However, a ready supply of spare parts can ease the process of bringing the system back online. Replacing hardware and software with newer versions can sometimes lead to problems with compatibility. An older version of some piece of critical software may not work with newer hardware, which may be more capable in a variety of ways. Having critical hardware (or software) spares for critical functions in the organization can greatly facilitate maintaining business continuity in the event of software or hardware failures.

Chapter 19 Review

Chapter Summary

After reading this chapter and completing the exercises, you should understand the following regarding disaster recovery and business continuity.

Describe the various components of a business continuity plan

A business continuity plan should contemplate the many types of disasters that can cause a disruption to an organization.

A business impact assessment (BIA) can be conducted to identify the most critical functions for an organization.

A business continuity plan is created to outline the order in which business functions will be restored so that the most critical functions are restored first.

One of the most critical elements of any disaster recovery plan is the availability of system backups.

Describe the elements of disaster recovery plans

Critical elements of disaster recovery plans include business continuity plans and contingency planning.