System Hardening and Baselines

People can have the Model T in any color—so long as it’s black.

—HENRY FORD

In this chapter, you will learn how to

Harden operating systems and network operating systems

Implement host-level security

Harden applications

Establish group policies

Secure alternative environments (SCADA, real-time, and so on)

The many uses for systems and operating systems require flexible components that allow users to design, configure, and implement the systems they need. Yet it is this very flexibility that causes some of the biggest weaknesses in computer systems. Computer and operating system developers often build and deliver systems in “default” modes that do little to secure the systems from external attacks. From the view of the developer, this is the most efficient mode of delivery, as there is no way they can anticipate what every user in every situation will need. From the user’s view, however, this means a good deal of effort must be put into protecting and securing the system before it is ever placed into service. The process of securing and preparing a system for the production environment is called hardening. Unfortunately, many users don’t understand the steps necessary to secure their systems effectively, resulting in hundreds of compromised systems every day.

Hardening systems, servers, workstations, networks, and applications is a process of defining the required uses and needs and then aligning security controls to limit a system to its desired functionality. Once this is determined, you have a system baseline against which you can compare changes over the course of the system’s lifecycle.

Overview of Baselines

The process of establishing a system’s operational state is called baselining, and the resulting product is a system baseline that describes the capabilities of a software system. Once the process has been completed for a particular hardware and software combination, any similar systems can be configured with the same baseline to achieve the same level of security. Uniform baselines are critical in large-scale operations, because maintaining separate configurations and security levels for hundreds or thousands of systems is far too costly.
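A uniform baseline is only useful if systems can be checked against it. The following is a minimal Python sketch, assuming the baseline can be expressed as named configuration settings with expected values; the setting names and values are hypothetical and not drawn from any particular product or benchmark.

```python
# Hypothetical baseline: configuration setting name -> expected value.
BASELINE = {
    "telnet_enabled": False,
    "ssh_root_login": False,
    "password_min_length": 14,
    "firewall_enabled": True,
}


def find_drift(baseline: dict, current: dict) -> dict:
    """Return settings whose current value differs from the baseline.

    Each entry maps a setting name to (expected, actual). A setting that is
    missing from `current` is reported with actual = None.
    """
    drift = {}
    for name, expected in baseline.items():
        actual = current.get(name)
        if actual != expected:
            drift[name] = (expected, actual)
    return drift


if __name__ == "__main__":
    host = dict(BASELINE, telnet_enabled=True)  # one setting has drifted
    print(find_drift(BASELINE, host))  # {'telnet_enabled': (False, True)}
```

Because every system built from the same baseline should produce an empty result, any nonempty result marks a change worth investigating.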

Constructing a baseline or hardened system is similar for servers, workstations, and network operating systems (NOSs). The specifics may vary, but the objectives are the same.

Hardware/Firmware Security

Hardware, in the form of servers, workstations, and even mobile devices, can represent a weakness or vulnerability in the security system associated with an enterprise. While hardware can be easily replaced if lost or stolen, the information contained on the devices complicates the security picture. Backups can safeguard data from loss, but they do little to protect it from disclosure to an unauthorized party. Software measures such as encryption can help, but they also have drawbacks in the form of scalability and key distribution.

FDE/SED

Full drive encryption (FDE) and self-encrypting drives (SED) are methods of implementing cryptographic protection on hard drives and other similar storage media with the express purpose of protecting the data even if the drive is removed from the machine. Portable machines, such as laptops, have a physical security weakness in that they are relatively easy to steal and then can be attacked offline at the attacker’s leisure. The use of modern cryptography, coupled with hardware protection of the keys, makes this vector of attack much more difficult. In essence, both of these methods offer a transparent, seamless manner of encrypting the entire hard drive using keys that are only available to someone who can properly log into the machine.

TPM

The Trusted Platform Module (TPM) is a hardware solution on the motherboard, one that assists with key generation and storage as well as random number generation. When the encryption keys are stored in the TPM, they are not accessible via normal software channels and are physically separated from the hard drive or other encrypted data locations. This makes the TPM a more secure solution than storing the keys on the machine’s normal storage.

Hardware Root of Trust

A hardware root of trust is the concept that if one has trust in a source’s specific security functions, this layer can be used to promote security to higher layers of a system. Because roots of trust are inherently trusted, they must be secure by design. This is usually accomplished by keeping them small and limiting their functionality to a few specific tasks. Many roots of trust are implemented in hardware that is isolated from the OS and the rest of the system so that malware cannot tamper with the functions they provide. Examples of roots of trust include TPM chips in computers and Apple’s Secure Enclave coprocessor in its iPhones and iPads. Apple also uses a signed Boot ROM mechanism for all software loading.

HSM

A hardware security module (HSM) is a device used to manage or store encryption keys. It can also assist in cryptographic operations such as encryption, hashing, and the application of digital signatures. HSMs are typically peripheral devices, connected via USB or a network connection. HSMs have tamper-protection mechanisms to prevent physical access to the secrets they guard. Because of their dedicated design, they can offer significant performance advantages over general-purpose computers when it comes to cryptographic operations. When an enterprise has significant levels of cryptographic operations, HSMs can provide throughput efficiencies.

Storing private keys anywhere on a networked system is a recipe for loss. HSMs are designed to allow the use of a key without exposing it to the wide range of host-based threats.

UEFI/BIOS

Basic Input/Output System (BIOS) is the firmware that a computer system uses as a connection between the actual hardware and the operating system. BIOS is typically stored on nonvolatile flash memory, which allows for updates, yet persists when the machine is powered off. The purpose behind BIOS is to initialize and test the interfaces to the actual hardware in a system. Once the system is running, the BIOS translates low-level access to the CPU, memory, and hardware devices, making a common interface for the OS to connect to. This allows a single OS installation to work across hardware from multiple manufacturers and in differing configurations.

Unified Extensible Firmware Interface (UEFI) is the current replacement for BIOS. UEFI offers a significant modernization over the decades-old BIOS, including dealing with modern peripherals such as high-capacity storage and high-bandwidth communications. UEFI also has more security designed into it, including provisions for secure booting.

Secure Boot and Attestation

One of the challenges in securing an OS is the myriad of drivers and other add-ons that hook into the OS and provide specific added functionality. If these additional programs are not properly vetted before installation, this pathway can provide a means by which malicious software can attack a machine. And because these attacks can occur at boot time, at a level below security applications such as antivirus software, they can be very difficult to detect and defeat. UEFI offers a solution to this problem, called Secure Boot, which is a mode that when enabled only allows signed drivers and OS loaders to be invoked. Secure Boot requires specific setup steps, but once enabled, it blocks malware that attempts to alter the boot process. Secure Boot enables the attestation that the drivers and OS loaders being used have not changed since they were approved for use. Secure Boot is supported by Microsoft Windows and all major versions of Linux.
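The allow/deny decision Secure Boot makes before each stage runs can be sketched as follows. This is a simplified Python model, not the UEFI implementation: real firmware verifies digital signatures on each image against certificates held in the authorized signature database (db) and the forbidden signature database (dbx), although those databases can also hold raw image hashes. The sketch uses only SHA-256 hashes, treats the whole chain as one verifier, and the image contents are made up.

```python
import hashlib


def sha256_hex(image: bytes) -> str:
    return hashlib.sha256(image).hexdigest()


def verify_boot_chain(stages, db, dbx):
    """Check each boot stage, in order, before control is handed to it.

    stages: list of (name, image_bytes) in boot order.
    db:  set of allowed SHA-256 hex digests (simplified allow database).
    dbx: set of forbidden SHA-256 hex digests (simplified revocation database).
    Returns the names of the stages that would run. Verification stops at the
    first image that is revoked or not allowed; dbx wins over db.
    """
    executed = []
    for name, image in stages:
        digest = sha256_hex(image)
        if digest in dbx or digest not in db:
            break  # refuse to execute an unapproved or revoked image
        executed.append(name)
    return executed


if __name__ == "__main__":
    loader, kernel = b"os loader v1", b"os kernel v5"
    db = {sha256_hex(loader), sha256_hex(kernel)}
    print(verify_boot_chain([("loader", loader), ("kernel", kernel)], db, set()))
    # ['loader', 'kernel']
    tampered = kernel + b" + bootkit"  # any modification changes the hash
    print(verify_boot_chain([("loader", loader), ("kernel", tampered)], db, set()))
    # ['loader']
```

Checking dbx first means a once-approved image can be revoked without rebuilding db.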

Integrity Measurement

Integrity measurement is the measuring and identification of changes to a specific system away from an expected value. Whether it’s the simple changing of data as measured by a hash value or the TPM-based integrity measurement of the system boot process and attestation of trust, the concept is the same: take a known value, store a hash or other keyed value, and then, at the time of concern, recalculate and compare values.
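The known-value, store, recalculate, and compare pattern can be sketched at the file level in Python. This is an illustrative sketch, not a specific integrity-monitoring product; it assumes the stored snapshot is itself protected (for example, kept off the monitored host), since an attacker who can rewrite both the file and the snapshot defeats the check.

```python
import hashlib
from pathlib import Path


def snapshot(paths) -> dict:
    """Record a SHA-256 digest for each file: the known-good values."""
    return {str(p): hashlib.sha256(Path(p).read_bytes()).hexdigest() for p in paths}


def compare(known: dict, current: dict) -> dict:
    """Recalculate-and-compare step: report changed, added, and removed files."""
    return {
        "changed": sorted(p for p in known.keys() & current.keys() if known[p] != current[p]),
        "added": sorted(current.keys() - known.keys()),
        "removed": sorted(known.keys() - current.keys()),
    }


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as workdir:
        conf = Path(workdir, "app.conf")
        conf.write_text("debug=false\n")
        known = snapshot([conf])
        conf.write_text("debug=true\n")  # simulate an unauthorized change
        print(compare(known, snapshot([conf]))["changed"] == [str(conf)])  # True
```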

In the case of TPM-mediated systems, where the TPM chip provides a hardware-based root of trust anchor, the TPM system is specifically designed to calculate hashes of a system and store them in a Platform Configuration Register (PCR). This register can be read later and compared to a known, or expected, value, and if they differ, there is a trust violation. Certain BIOSs, UEFIs, and boot loaders can all work with the TPM chip in this manner, providing a means of establishing a trust chain during system boot.
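In normal operation a PCR is not written directly; it is extended, which folds each new measurement into the running value so that the final value depends on every component and on their order. The Python sketch below models a single SHA-256 PCR, assuming (as a simplification) that the measuring code hashes each component and the extend is then computed. Component contents are made up; a real verifier compares against reference values, typically using an event log and a signed TPM quote.

```python
import hashlib

PCR_SIZE = 32  # a SHA-256 PCR bank holds 32-byte values, all zero after reset


def extend(pcr: bytes, component: bytes) -> bytes:
    """TPM-style extend: new PCR = SHA-256(old PCR || SHA-256(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + measurement).digest()


def replay(components) -> bytes:
    """Compute the final PCR value for boot components measured in order."""
    pcr = bytes(PCR_SIZE)
    for component in components:
        pcr = extend(pcr, component)
    return pcr


if __name__ == "__main__":
    expected = replay([b"firmware", b"boot loader", b"kernel"])
    observed = replay([b"firmware", b"modified boot loader", b"kernel"])
    print(observed == expected)  # False: the trust chain no longer matches
```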

Understand how TPM, UEFI, Secure Boot, hardware root of trust, and integrity measurement work together to solve a specific security issue.

Firmware Version Control

Firmware is present in virtually every system, but in many embedded systems it plays an even more critical role because it may also contain the OS and application. Maintaining strict control measures over the changing of firmware is essential to ensuring the authenticity of the software on a system. Firmware updates require extreme quality measures to ensure that errors are not introduced as part of an update process. Updating firmware, although only occasionally necessary, is a very sensitive event, because failure can lead to system malfunction. If an unauthorized party is able to change the firmware of a system, as demonstrated in an attack against ATMs, an adversary can gain complete functional control over a system.

EMI/EMP

Electromagnetic interference (EMI) is an electrical disturbance that affects an electrical circuit. This is due to either electromagnetic induction or radiation emitted from an external source, either of which can induce currents into the small circuits that make up computer systems and cause logic upsets. An electromagnetic pulse (EMP) is a burst of current in an electronic device as a result of a current pulse from electromagnetic radiation. EMP can produce damaging current and voltage surges in today’s sensitive electronics. The main sources for EMP would be industrial equipment on the same circuit, solar flares, and nuclear bursts high in the atmosphere.

It is important to shield computer systems from circuits with large industrial loads, such as motors. These power sources can have significant noise, including EMI and EMPs that will potentially damage computer equipment. Another source of EMI is fluorescent lights. Be sure any cabling that goes near fluorescent light fixtures is well shielded and grounded.

Supply Chain

Hardware and firmware security is ultimately dependent on the manufacturer for the root of trust. In today’s world of global manufacturing with global outsourcing, fully understanding your manufacturer’s supply chain and how it changes from device to device, and even between lots, is difficult because many details can be unknown. Who manufactured all the components of the device you are ordering? If you’re buying a new PC, where did the hard drive come from? Can the new PC come preloaded with malware? Yes, it has happened.

Supply chain for assembled equipment can be very tricky, because not only do you have to worry about where you get the computer, but also where they get the parts and the software, including who wrote the software and with what libraries. These can be very difficult issues to negotiate if you have very strict rules concerning country of origin.

Operating System and Network Operating System Hardening

The operating system (OS) of a computer is the basic software that handles things such as input, output, display, memory management, and all the other highly detailed tasks required to support the user environment and associated applications. Most users are familiar with the Microsoft family of desktop operating systems: Windows Vista, Windows 7, Windows 8, and Windows 10. Indeed, the vast majority of home and business PCs run some version of a Microsoft operating system. Other users may be familiar with macOS, Solaris, or one of the many varieties of the UNIX/Linux operating system.

The Term Operating System

Operating system is the commonly accepted term for the software that provides the interface between computer hardware and the user. It is responsible for the management, coordination, and sharing of limited computer resources such as memory and disk space.

A network operating system (NOS) is an operating system that includes additional functions and capabilities to assist in connecting computers and devices, such as printers, to a local area network (LAN). Some of the more familiar network operating systems include Novell’s NetWare and PC Micro’s LANtastic. For most modern operating systems, including Windows Server, Solaris, and Linux, the terms operating system and network operating system are used interchangeably because they perform all the basic functions and provide enhanced capabilities for connecting to LANs. Network operating system can also apply to the operational software that controls managed switches and routers, such as Cisco’s IOS and Juniper’s Junos.

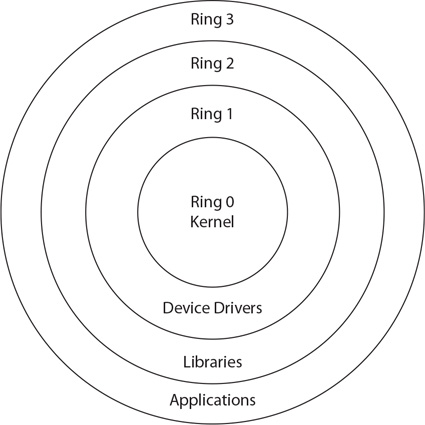

Protection Rings

Protection rings were devised in the Multics operating system in the 1960s to deal with security issues associated with time-sharing operations. Protection rings can be enforced by hardware, software, or a combination of the two, and they serve as a means of managing privilege in a hierarchical manner. Ring 0 is the level with the highest privilege and is the element that interacts directly with the physical hardware (CPU and memory). Higher levels, with less privilege, must interact with adjoining rings through specific gates in a predefined manner. The use of rings prevents elements such as applications from interfacing directly with the hardware without going through the OS and, specifically, the security kernel, as shown here.

OS Security

The operating system itself is the foundation of system security, and it provides this foundation through the use of a security kernel. The security kernel, also called a reference monitor, is the component of the operating system that enforces the operating system’s security policies. The core of the OS is constructed so that all operations must pass through and be moderated by the security kernel, placing it in complete control over the enforcement of rules. Security kernels must exhibit three properties to be relied upon: they must offer complete mediation, as just discussed, and must be tamperproof and verifiable in operation. Because security kernels are part of the OS and are in fact software, ensuring that they are tamperproof and verifiable is a legitimate concern. Achieving assurance with respect to these attributes is a technical matter rooted in the actual construction of the OS and is beyond the scope of this book.
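Complete mediation can be illustrated with a toy reference monitor in Python. This sketch only shows the shape of the idea: every request passes through one check, anything not explicitly permitted is denied, and each decision is logged. It cannot demonstrate the tamperproof and verifiable properties, which depend on how a real OS is built; the rule format and names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    subject: str
    obj: str
    operation: str


class ReferenceMonitor:
    """Toy reference monitor: every request is checked (complete mediation),
    anything not explicitly permitted is denied, and every decision is logged."""

    def __init__(self, rules):
        self._rules = frozenset(rules)
        self.audit_log = []

    def check(self, subject: str, obj: str, operation: str) -> bool:
        allowed = Rule(subject, obj, operation) in self._rules
        self.audit_log.append((subject, obj, operation, allowed))
        return allowed


def read_object(monitor: ReferenceMonitor, subject: str, name: str, store: dict) -> str:
    # The only route to the data passes through the monitor's check.
    if not monitor.check(subject, name, "read"):
        raise PermissionError(f"{subject} may not read {name}")
    return store[name]


if __name__ == "__main__":
    monitor = ReferenceMonitor([Rule("alice", "payroll.db", "read")])
    store = {"payroll.db": "salary data"}
    print(read_object(monitor, "alice", "payroll.db", store))  # salary data
    try:
        read_object(monitor, "bob", "payroll.db", store)
    except PermissionError as err:
        print(err)  # bob may not read payroll.db
```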

Data Execution Prevention

Data Execution Prevention (DEP) is a collection of hardware and software technologies to limit the ability of malware to execute in a system. Windows uses DEP to prevent code execution from data pages.

OS Types

Many different systems have the need for an operating system. Hardware in networks requires an operating system to perform the networking function. Servers and workstations require an OS to act as the interface between applications and the hardware. Specialized systems such as kiosks and appliances, both of which are forms of automated single-purpose systems, require an OS between the application software and hardware.

Network

Network components use a network operating system to provide the actual configuration and computation portion of networking. There are many vendors of networking equipment, and each has its own proprietary operating system. Cisco has the largest footprint with its IOS (for Internetworking Operating System). Juniper has Junos, which is built on a FreeBSD core (the newer Junos OS Evolved runs on Linux). As networking moves to software-defined networking (SDN), the concept of a network operating system will become more important and mainstream because it will become a major part of day-to-day operations in the IT enterprise.

Server

Servers require an operating system to bridge the gap between the server hardware and the applications that are being run. Currently, server OSs include Microsoft Windows Server, many flavors of Linux, and more and more VM/hypervisor environments. For performance reasons, Linux has a significant market share in the realm of server OSs, although Windows Server with its Active Directory technology has made significant inroads into market share.

Workstation

The OS on a workstation exists to provide a functional working space for a user to interact with the system and its various applications. Because of the high level of user interaction on workstations, it is very common to see Windows in this role. In large enterprises, the ability of Active Directory to manage users, configurations, and settings easily across the entire enterprise has given Windows client workstations an advantage over Linux.

Appliance

Appliances are standalone devices, wired into the network and designed to run an application to perform a specific function on traffic. These systems operate as headless servers, preconfigured with applications that run and perform a wide range of security services on the network traffic they see. For reasons of economics, portability, and functionality, the vast majority of appliances are built on top of a Linux-based system. As these are often customized distributions, keeping them patched becomes a vendor problem because this sort of work is outside the scope or ability of most IT people to properly manage.

Kiosk

Kiosks are standalone machines, typically running a browser instance on top of a Windows OS. These machines are usually set up to log in automatically to a browser instance that is locked to a website providing all of the desired functionality. Kiosks are commonly used for interactive customer service applications, such as interactive information sites, menus, and so on. The OS on a kiosk needs to support being locked down to minimal function, offer features such as automatic login, and provide an easy way to construct the applications.

Mobile OS

Mobile devices began as phones with limited additional capabilities. But as the Internet and functionality spread to mobile devices, the capabilities of these devices have expanded as well. From smartphones to tablets, today’s mobile system is a computer, with virtually all the compute capability one could ask for—with a phone attached. The two main mobile OSs in the market today are Apple’s iOS and Google’s Android system.

Trusted Operating System

A trusted operating system is one that is designed to allow multilevel security in its operation. This is further defined by its ability to meet a series of criteria required by the U.S. government. Trusted OSs are expensive to create and maintain because any change must typically undergo a recertification process. The most common standard used to define a trusted OS is the Common Criteria for Information Technology Security Evaluation (abbreviated as Common Criteria, or CC), a harmonized set of security criteria recognized by many nations, including the United States, Canada, Great Britain, and most of the EU countries, among others. Versions of Windows, Linux, mainframe OSs, and specialty OSs have been qualified to various Common Criteria levels.

The term trusted operating system is used to refer to a system that has met a set of criteria and demonstrated correctness to meet requirements of multilevel security. The Common Criteria is one example of a standard used by government bodies to determine compliance to a level of security need.

Patch Management

Patch management is the process used to maintain systems in an up-to-date fashion, including all required patches. Every OS, from Linux to Windows, requires software updates, and each OS has different methods of assisting users in keeping their systems up to date. Microsoft, for example, typically makes updates available for download from its web site. While most administrators or technically proficient users may prefer to identify and download updates individually, Microsoft recognizes that nontechnical users prefer a simpler approach, which Microsoft has built into its operating systems. From Windows 7 onward, Microsoft provides an automated update functionality that will, once configured, locate any required updates, download them to your system, and even install the updates, if that is your preference.

Beginning with Windows 10, Microsoft has adopted a new methodology that treats the OS as a service and has dramatically updated its servicing model. Windows 10 now has a twice-per-year feature update release schedule, aiming for March and September, with an 18-month servicing timeline for each release. This model is called the Semi-Annual Channel model and is offered as a means of having a regular update/upgrade cycle of improvements over time for the software. For systems requiring longer-term service, such as embedded systems, Microsoft will offer a Long-Term Servicing Channel model. This model has less-frequent releases, expected every two to three years (with the next one for Windows expected in 2019). Each of these releases will be serviced for 10 years from the date of release.

How you patch a Linux system depends a great deal on the specific version in use and the patch being applied. In some cases, a patch will consist of a series of manual steps requiring the administrator to replace files, change permissions, and alter directories. In other cases, the patches are executable scripts or utilities that perform the patch actions automatically. Some Linux versions, such as Red Hat, have built-in utilities that handle the patching process. In those cases, the administrator downloads a specifically formatted file that the patching utility then processes to perform any modifications or updates that need to be made.

Regardless of the method you use to update the OS, it is critically important to keep systems up to date. New security advisories come out every day, and while a buffer overflow may be a “potential” problem today, it will almost certainly become a “definite” problem in the near future. Much like the steps taken to baseline and initially secure an OS, keeping every system patched and up to date is critical to protecting the system and the information it contains.
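Keeping systems up to date usually starts with knowing which installed packages fall below the version that contains a fix. The Python sketch below assumes simple dotted numeric versions and a hypothetical list of minimum patched versions taken from advisories; real distribution version strings (epochs, release suffixes, letters) need the package manager’s own comparison rules, which this sketch does not implement.

```python
def parse_version(text: str, width: int = 4) -> tuple:
    """Turn a dotted numeric version such as '2.4.10' into (2, 4, 10, 0).

    Padding with zeros makes '2.4' and '2.4.0' compare as equal.
    """
    parts = [int(part) for part in text.split(".")]
    return tuple(parts + [0] * (width - len(parts)))


def missing_patches(installed: dict, required: dict) -> list:
    """List installed packages older than the minimum version that contains a fix.

    installed: package name -> installed version string
    required:  package name -> minimum patched version (e.g., from an advisory)
    """
    findings = []
    for package, minimum in sorted(required.items()):
        have = installed.get(package)
        if have is None:
            continue  # not installed, so the advisory does not apply
        if parse_version(have) < parse_version(minimum):
            findings.append((package, have, minimum))
    return findings


if __name__ == "__main__":
    # Hypothetical package names and version numbers.
    installed = {"libexample": "1.1.1", "exampled": "5.1.16"}
    required = {"libexample": "3.0.7", "exampled": "5.1.8", "other-tool": "1.9.12"}
    print(missing_patches(installed, required))  # [('libexample', '1.1.1', '3.0.7')]
```

Comparing numeric tuples rather than strings matters here: as plain strings, "5.1.16" would sort below "5.1.8" and be wrongly reported as unpatched.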

Vendors typically follow a hierarchy for software updates:

Hotfix This term refers to a (usually) small software update designed to address a specific problem, such as a buffer overflow in an application that exposes the system to attacks. Hotfixes are typically developed in reaction to a discovered problem and are produced and released rather quickly.

Patch This term refers to a more formal, larger software update that can address several or many software problems. Patches often contain enhancements or additional capabilities as well as fixes for known bugs. Patches are usually developed over a longer period of time.

Service pack This refers to a large collection of patches and hotfixes rolled into a single, rather large package. Service packs are designed to bring a system up to the latest known-good level all at once, rather than requiring the user or system administrator to download dozens or hundreds of updates separately.

Disabling Unnecessary Ports and Services

An important management issue for running a secure system is to identify the specific needs of a system for its proper operation and to enable only items necessary for those functions. Disabling unnecessary ports and services prevents their use by unauthorized users, increases security, and can improve system throughput. Systems have ports and connections that need to be disabled if not in use.

Disabling unnecessary ports and services is a simple way to improve system security. This minimalist setup is similar to the “implicit deny” philosophy and can significantly reduce an attack surface.

Just as we have a principle of least privilege, we should follow a similar track with least functionality on systems. A system should do what it is supposed to do, and only what it is supposed to do. Any additional functionality is an added attack surface for an adversary and offers no additional benefit to the enterprise.
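Least functionality applied to network ports amounts to a set difference between what is listening and what the system’s role requires. The Python sketch below assumes hypothetical role profiles; an unknown role gets an empty allow set, which mirrors the implicit-deny idea. The optional probe only detects TCP services reachable on the given address, so a tool such as ss or netstat gives a more complete view.

```python
import socket

# Hypothetical role profiles: the only TCP ports each role should expose.
ALLOWED_PORTS = {"web-server": {443}, "db-server": {5432}}


def ports_to_disable(role: str, listening: set) -> set:
    """Return listening ports the role does not need. An unknown role gets an
    empty allow set, so every open port is flagged (least functionality)."""
    return set(listening) - ALLOWED_PORTS.get(role, set())


def probe_local_tcp(ports, host: str = "127.0.0.1", timeout: float = 0.2) -> set:
    """Return the ports that accept a TCP connection on the given host."""
    open_ports = set()
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            try:
                if sock.connect_ex((host, port)) == 0:
                    open_ports.add(port)
            except OSError:
                pass  # treat errors such as timeouts as "not open"
    return open_ports


if __name__ == "__main__":
    observed = {22, 80, 443, 3306}  # e.g., as reported by ss or netstat
    print(sorted(ports_to_disable("web-server", observed)))  # [22, 80, 3306]
```

Calling `probe_local_tcp(range(1, 1025))` could supply the observed set on a lab machine; each flagged port then maps to a service that should be disabled or justified.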

Secure Configurations

Operating systems can be configured in a variety of manners—from completely open with lots of functionality, whether it is needed or not, to stripped to the services needed to perform a particular task. Operating system developers and manufacturers all share a common problem: they cannot possibly anticipate the many different configurations and variations that the user community will require from their products. So, rather than spending countless hours and funds attempting to meet every need, manufacturers provide a “default” installation for their products that usually contains the base OS and some more commonly desirable options, such as drivers, utilities, and enhancements. Because the OS could be used for any of a variety of purposes, and could be placed in any number of logical locations (LAN, DMZ, WAN, and so on), the manufacturer typically does little to nothing with regard to security. The manufacturer may provide some recommendations or simplified tools and settings to facilitate securing the system, but in general, end users are responsible for securing their own systems. Generally this involves removing unnecessary applications and utilities, disabling unneeded services, setting appropriate permissions on files, and updating the OS and application code to the latest version.

Weak security configurations are a result of many different items, each specific to a particular set of components and operating conditions. The path to avoid weak configurations involves a combination of information sources. One is manufacturer recommendations, another is industry best practices, and the last is testing.

This process of securing an OS is called hardening, and it is intended to make the system more resistant to attack, much like armor or steel is hardened to make it less susceptible to breakage or damage. Each OS has its own approach to security, and although the process of hardening is generally the same, different steps must be taken to secure each OS. The process of securing and preparing an OS for the production environment is not trivial; it requires preparation and planning. Unfortunately, many users don’t understand the steps necessary to secure their systems effectively, resulting in hundreds of compromised systems every day.

System hardening is the process of preparing and securing a system and involves the removal of all unnecessary software and services.

You must meet several key requirements to ensure that the system hardening processes described in this section achieve their security goals. These are OS independent and should be a normal part of all system maintenance operations:

The base installation of all OS and application software comes from a trusted source and is verified as correct by using hash values.

Machines are connected only to a completely trusted network during the installation, hardening, and update processes.

The base installation includes all current patches and updates for both the OS and applications.

Current backup images are taken after hardening and updates to facilitate system restoration to a known state.

These steps ensure that you know what is on the machine, can verify its authenticity, and have an established backup version.

Disable Default Accounts/Passwords

Because accounts are necessary for many systems to be established, default accounts with default passwords are a way of life in computing. Whether the account is for the OS or an application, this is a significant security vulnerability if not immediately addressed as part of setting up the system or installing the application. Disabling default accounts/passwords should be such a common practice that no systems have this vulnerability. This is a simple task, and one that must be done. When you cannot disable the default account (and there will be times when disabling is not a viable option), the alternative is to change the password to a very long one that offers strong resistance to brute-force attacks.
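An audit for default credentials can be sketched in Python without storing any plaintext passwords: for each enabled account, hash each known default password with that account’s salt and compare. The default list, the account records, and the PBKDF2 iteration count are hypothetical assumptions for this sketch; a real audit would draw the defaults from vendor documentation for the products actually deployed.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed PBKDF2 work factor for this sketch

# Hypothetical default username/password pairs.
KNOWN_DEFAULTS = {"admin": ["admin", "password"], "root": ["root", "toor"]}


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


def make_account(user: str, password: str, enabled: bool = True) -> dict:
    """Build an account record that stores only a salted hash, never the password."""
    salt = os.urandom(16)
    return {"user": user, "salt": salt, "hash": hash_password(password, salt), "enabled": enabled}


def accounts_with_default_passwords(accounts) -> list:
    """Return enabled accounts whose stored hash matches a known default password."""
    flagged = []
    for account in accounts:
        if not account["enabled"]:
            continue  # a disabled default account is the preferred outcome
        for guess in KNOWN_DEFAULTS.get(account["user"], []):
            if hmac.compare_digest(hash_password(guess, account["salt"]), account["hash"]):
                flagged.append(account["user"])
                break
    return flagged


if __name__ == "__main__":
    accounts = [
        make_account("admin", "admin"),                      # default left in place
        make_account("root", "toor", enabled=False),         # default but disabled
        make_account("backup", "a long unique passphrase"),  # not a default account
    ]
    print(accounts_with_default_passwords(accounts))  # ['admin']
```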

Configurations

Modern software is configuration driven. This means that setting proper configurations is essential for secure operation of the software. Using weak configurations or allowing access to configuration files so attackers can weaken or misconfigure a system is a security failure. Default configurations should be checked to ensure they employ the desired level of security.

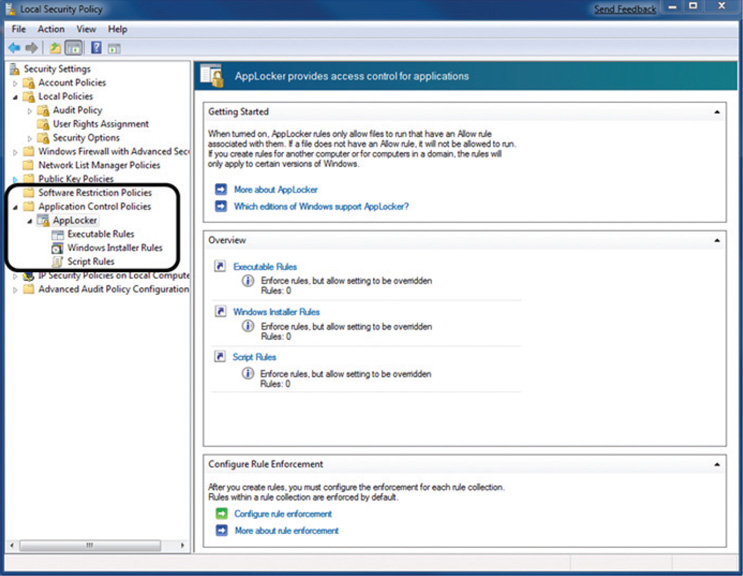

Application Whitelisting/Blacklisting

Applications can be controlled by the OS at start time via blacklisting or whitelisting. Application blacklisting is essentially noting which applications should not be allowed to run on the machine. This is basically a permanent “ignore” or “call block” type of capability. Application whitelisting is the exact opposite: it consists of a list of allowed applications. Each of these approaches has advantages and disadvantages. Blacklisting is difficult to use against dynamic threats, as the identification of a specific application can easily be avoided through minor changes. Whitelisting makes identifying the applications that are allowed to run easier, and hash values can be used to ensure the executables have not been altered. The challenge in whitelisting is the number of potential applications that are run on a typical machine. For a single-purpose machine, such as a database server, whitelisting can be relatively easy to employ. For multipurpose machines, it can be more complicated.
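The asymmetry between the two approaches shows up clearly when both are keyed on file hashes. The Python sketch below is illustrative only; products such as AppLocker also support publisher and path rules, and the “executables” here are made-up byte strings.

```python
import hashlib


def digest(executable: bytes) -> str:
    return hashlib.sha256(executable).hexdigest()


def blacklist_allows(executable: bytes, blocked: set) -> bool:
    """Blacklisting: run anything whose hash is not on the blocked list."""
    return digest(executable) not in blocked


def whitelist_allows(executable: bytes, approved: set) -> bool:
    """Whitelisting: run only executables whose hash is on the approved list."""
    return digest(executable) in approved


if __name__ == "__main__":
    known_malware = b"malicious payload v1"
    variant = known_malware + b"\x00"  # a one-byte change yields a new hash
    database_server = b"database server binary"

    blocked = {digest(known_malware)}
    approved = {digest(database_server)}

    print(blacklist_allows(variant, blocked))           # True: the variant slips past
    print(whitelist_allows(variant, approved))          # False: not on the approved list
    print(whitelist_allows(database_server, approved))  # True
```

The same one-byte change that evades the blacklist is exactly what the whitelist rejects, which is why whitelisting suits single-purpose machines whose approved set is small.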

Microsoft has two mechanisms that are part of the OS to control which users can use which applications:

Using OS-level restrictions to control what software can be used can prevent users from loading and running unauthorized software. Unauthorized software, whether because of licensing restrictions or because it has not been vetted for use, can present risk to the enterprise. Controlling this risk via an enterprise operational control such as whitelisting can simplify compliance and improve baseline security posture.

Software restriction policies Employed via group policies, these allow significant control over applications, scripts, and executable files. They are applied primarily by machine rather than by user account.

User account level control Enforced via AppLocker, a service that allows granular control over which users can execute which programs. Through the use of rules, an enterprise can exert significant control over who can access and use installed software.

On a Linux platform, similar capabilities are offered by third-party vendor applications.

Sandboxing

Sandboxing refers to the quarantine or isolation of a system from its surroundings. It has become standard practice for some programs with an increased risk surface to operate within a sandbox, limiting their interaction with the CPU and other system resources, such as memory. This works as a means of quarantine, preventing problems from getting out of the sandbox and onto the OS and other applications on a system.

Virtualization can be used as a form of sandboxing with respect to an entire system. You can build a VM, test something inside the VM, and, based on the results, make a decision with regard to stability or whatever concern was present.

Secure Baseline

The process of establishing software’s base state is called baselining, and the resulting product is a baseline that describes the capabilities of the software; that baseline, however, is not necessarily secure. To secure the software on a system effectively and consistently, you must take a structured and logical approach. This starts with an examination of the system’s intended functions and capabilities to determine what processes and applications will be housed on the system. As a best practice, anything that is not required for operations should be removed or disabled on the system; then, all the appropriate patches, hotfixes, and settings should be applied to protect and secure it. This becomes the system’s secure baseline.

Software and hardware can be tied intimately when it comes to security, so they must be considered together. Once the process has been completed for a particular hardware and software combination, any similar systems can be configured with the same baseline to achieve the same level and depth of security and protection. Uniform software baselines are critical in large-scale operations, because maintaining separate configurations and security levels for hundreds or thousands of systems is far too costly.

After administrators have finished patching, securing, and preparing a system, they often create an initial baseline configuration. This represents a secure state for the system or network device and a reference point of the software and its configuration. This information establishes a reference that can be used to help keep the system secure by establishing a known-safe configuration. If this initial baseline can be replicated, it can also be used as a template when similar systems and network devices are being deployed.
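The idea of a baseline as both a template and a reference point can be sketched in a few lines of Python. This assumes settings can be read into a simple name-to-value dictionary; the setting names are hypothetical, and real tools collect them from the Registry, configuration files, or management APIs.

```python
def detect_drift(baseline: dict, current: dict) -> dict:
    """Compare a system's current settings with its recorded secure baseline.

    Returns {setting: (baseline_value, current_value)} for every setting that
    differs. None marks a setting missing on one side, e.g. a service enabled
    after the baseline was taken or a setting that was removed.
    """
    drift = {}
    for name in baseline.keys() | current.keys():
        expected, actual = baseline.get(name), current.get(name)
        if expected != actual:
            drift[name] = (expected, actual)
    return drift


# Hypothetical secure baseline captured after patching and hardening.
secure_baseline = {"telnet": "disabled", "min_password_length": 14, "audit_logon": "enabled"}

# Deploying a similar system: start from a copy of the baseline (the template).
new_server = dict(secure_baseline)

# Later, someone re-enables Telnet and installs FTP.
new_server["telnet"] = "enabled"
new_server["ftp"] = "enabled"
```

Here `detect_drift(secure_baseline, new_server)` reports both the changed Telnet setting and the FTP service that did not exist when the baseline was recorded.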

Machine Hardening

The key management issue behind running a secure server setup is to identify the specific needs of a server for its proper operation and enable only items necessary for those functions. Keeping all other services and users off the system improves system throughput and increases security. Reducing the attack surface area associated with a server reduces the vulnerabilities now and in the future as updates are required.

Server Hardening Tips

Specific security needs can vary depending on the server’s specific use, but at a minimum, the following are beneficial:

Remove unnecessary protocols such as Telnet, NetBIOS, Internetwork Packet Exchange (IPX), and File Transfer Protocol (FTP).

Remove unnecessary programs such as Internet Information Services (IIS).

Remove all shares that are not necessary.

Rename the administrator account, securing it with a strong password.

Remove or disable the Local Admin account in Windows.

Disable unnecessary user accounts.

Disable unnecessary ports and services.

Keep the operating system (OS) patched and up to date.

Keep all applications patched and up to date.

Turn on event logging for determined security elements.

Control physical access to servers.
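Several of the tips above boil down to comparing what is running with what the server’s role requires. A minimal Python sketch, assuming you already have the set of enabled services (collecting it is platform specific) and using hypothetical lowercase service labels:

```python
# Legacy protocols named in the tips above; lowercase labels are illustrative.
LEGACY_PROTOCOLS = {"telnet", "netbios", "ipx", "ftp"}


def review_services(enabled: set[str], required: set[str]) -> tuple[set[str], set[str]]:
    """Return (services to disable, legacy protocols the role still claims to need)."""
    to_disable = enabled - required                # running but not needed for the role
    legacy_required = required & LEGACY_PROTOCOLS  # needed, but worth a second look
    return to_disable, legacy_required


# Hypothetical web server whose role needs only HTTP, HTTPS, and SSH.
enabled = {"http", "https", "ssh", "telnet", "ftp", "smb"}
required = {"http", "https", "ssh"}
to_disable, legacy_required = review_services(enabled, required)
```

Flagging legacy protocols the role still requires, rather than silently accepting them, reflects the tip that such protocols should be justified before they stay enabled.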

Once a server has been built and is ready to be placed into operation, recording hash values for all of its crucial files will provide valuable information later if questions arise about system integrity after a detected intrusion. The use of hash values to detect changes was first developed by Gene Kim and Eugene Spafford at Purdue University in 1992. The concept became the product Tripwire, which is now available in commercial and open source forms. The same basic concept is used by many security packages to detect file-level changes.
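The Tripwire concept described above can be sketched with Python’s standard `hashlib` module: record a digest for every file, then re-hash later and compare. This is a minimal illustration, not Tripwire itself; it omits the protections a real tool adds, such as keeping the baseline database somewhere an intruder cannot rewrite it.

```python
import hashlib
import tempfile
from pathlib import Path


def hash_file(path: Path) -> str:
    """SHA-256 of a file, read in chunks so large binaries don't fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(root: Path) -> dict[str, str]:
    """Record a digest for every regular file under root, keyed by relative path."""
    return {
        str(p.relative_to(root)): hash_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def compare(before: dict[str, str], after: dict[str, str]) -> dict[str, list[str]]:
    """Report files added, removed, or modified since the baseline snapshot."""
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "modified": sorted(k for k in before.keys() & after.keys() if before[k] != after[k]),
    }


def demo() -> dict[str, list[str]]:
    """Take a baseline, simulate tampering, and compare."""
    with tempfile.TemporaryDirectory() as d:
        root = Path(d)
        (root / "app.conf").write_text("port=443\n")
        (root / "server.bin").write_bytes(b"\x7fELF...")
        baseline = snapshot(root)
        (root / "app.conf").write_text("port=23\n")        # altered file
        (root / "backdoor.sh").write_text("#!/bin/sh\n")  # new file
        return compare(baseline, snapshot(root))
```

Running `demo()` reports `backdoor.sh` as added and `app.conf` as modified, while the untouched `server.bin` does not appear.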

Securing a Workstation

Workstations are attractive targets for crackers because they are numerous and can serve as entry points into the network and the data that is commonly the target of an attack. Although security is a relative term, following these basic steps will increase workstation security immensely:

Remove unnecessary protocols such as Telnet, NetBIOS, and IPX.

Remove unnecessary software.

Remove modems unless needed and authorized.

Remove all shares that are not necessary.

Rename the administrator account, securing it with a strong password.

Remove or disable the Local Admin account in Windows.

Disable unnecessary user accounts.

Disable unnecessary ports and services.

Install an antivirus program and keep abreast of updates.

If the floppy drive is not needed, remove or disconnect it.

Consider disabling USB ports via BIOS to restrict data movement to USB devices.

If no corporate firewall exists between the machine and the Internet, install a firewall.

Keep the operating system (OS) patched and up to date.

Keep all applications patched and up to date.

Turn on event logging for determined security elements.

The primary method of controlling the security impact of a system on a network is to reduce the available attack surface area. Turning off all services that are not needed or permitted by policy will reduce the number of vulnerabilities. Removing methods of connecting additional devices to a workstation to move data—such as optical drives and USB ports—assists in controlling the movement of data into and out of the device. User-level controls, such as limiting e-mail attachment options, screening all attachments at the e-mail server level, and reducing network shares to needed shares only, can be used to limit excessive connectivity that can impact security.

Early versions of home operating systems did not have separate named accounts for separate users. This was seen as a convenience mechanism; after all, who wants the hassle of signing into the machine? This led to the simple problem that all users could see, modify, and delete everyone else’s content. Content could be separated by using access control mechanisms, but that required configuration of the OS to manage every user’s identity. Early versions of many OSs came with literally every option turned on. Again, this was a convenience factor, but it led to systems running processes and services that they never used, thus increasing the attack surface of the host unnecessarily.

Determining the correct settings and implementing them correctly is an important step in securing a host system. The following sections explore the multitude of controls and options that need to be employed properly to achieve a reasonable level of security on a host system.

Hardening Microsoft Operating Systems

Microsoft has spent years working to make Windows a secure and securable OS. As a desktop OS, Windows has provided a range of security features for users to secure their systems. Most of these options can be employed via group policies in enterprise setups, making them easily deployable and maintainable across an enterprise.

Here are some of the security capabilities in the Windows environment:

User Account Control allows users to operate the system without requiring administrative privileges. If you’ve used Windows Vista or later, you’ve undoubtedly seen UAC prompts such as “Windows needs your permission to continue.”

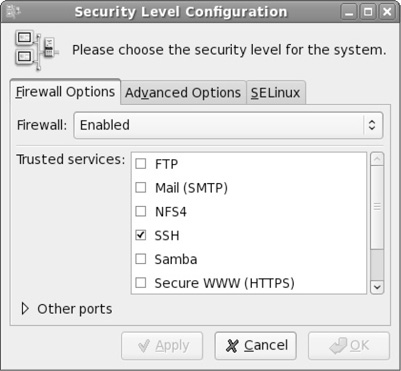

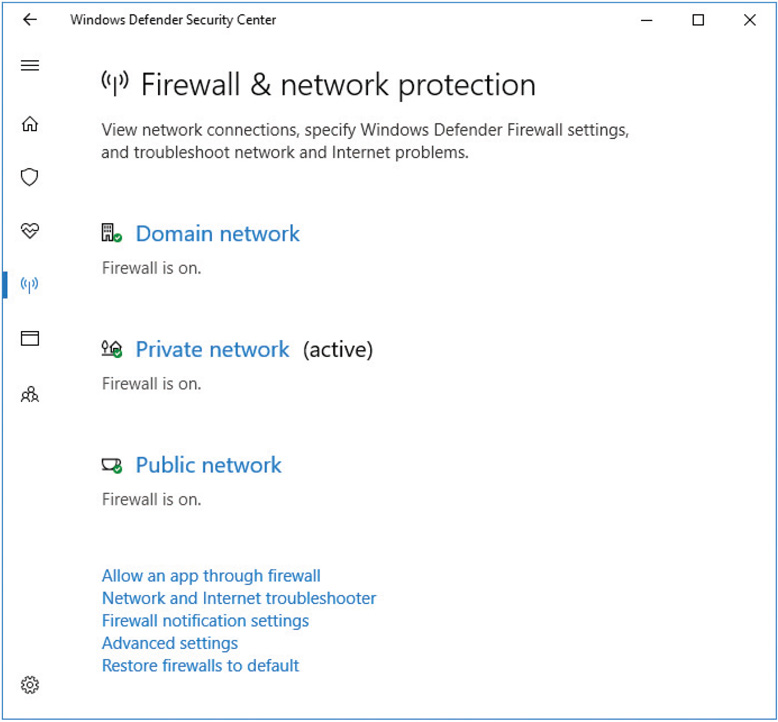

Windows Firewall includes an outbound filtering capability. Windows allows filtering of traffic coming into and leaving the system, which is useful for controlling things like peer-to-peer applications.

BitLocker allows encryption of all data on a system, including any data volumes. This capability is available only in the higher-end editions of Windows.

Windows clients work with Network Access Protection. See the discussion of NAP in the following “Hardening Windows Server” section for more details.

Windows Defender is a built-in malware detection and removal tool. Windows Defender detects many types of potentially suspicious software and can prompt the user before allowing applications to make potentially malicious changes.

Hardening Windows Server

Microsoft touted Windows Server 2008 as its “most secure server” to date upon its release. Although Microsoft has not touted security specifically since, many improvements have been continuously evolving across the Windows Server platform, including in Windows Server 2012 and 2016, making it arguably one of the most securable platforms in the enterprise.

BitLocker allows encryption of all data on a server, including any data volumes. BitLocker functionality has also been improved to allow reboots without administrator intervention.

Role-based installation of functions and capabilities minimizes the server’s footprint. For example, if a server is going to be a web server, it does not need DNS or SMTP software, and thus those features are no longer installed by default.

Network Access Protection (NAP) controls access to network resources based on a client computer’s identity and compliance with corporate governance policy. NAP allows network administrators to define granular levels of network access based on client identity, group membership, and the degree to which that client complies with corporate policies, and it can enforce that compliance. Suppose, for example, that a sales manager connects their laptop to the corporate network. NAP can be used to examine the laptop and see if it is fully patched and running a company-approved antivirus product with updated signatures. If the laptop does not meet those standards, network access for that laptop can be restricted until the laptop is brought back into compliance with corporate standards. Note that Microsoft deprecated NAP in Windows Server 2012 R2 and removed it from Windows Server 2016.

Read-only domain controllers can be created and deployed in high-risk locations, but they can’t be modified to add new users, change access levels, and so on. This new ability to create and deploy “read-only” domain controllers can be very useful in high-threat environments.

More-granular password policies allow for different password policies on a group or user basis. This allows administrators to assign different password policies and requirements for the sales group and the engineering group, for example, if that capability is needed.

Web sites or web applications can be administered within IIS 7. This allows administrators quicker and more convenient administration capabilities, such as the ability to turn on or off specific modules through the IIS management interface. For example, removing CGI support from a web application is a quick and simple operation in IIS 7.

The traditional ROM-BIOS has been replaced with Unified Extensible Firmware Interface (UEFI). Microsoft is using the security-hardened 2.3.1 version, which prevents boot code updates without appropriate digital certificates and signatures.

The trustworthy and verified boot process has been extended to the entire Windows OS boot code with a feature known as Secure Boot. UEFI and Secure Boot significantly reduce the risk of malicious code such as rootkits and boot viruses.

Early Launch Anti-Malware (ELAM) ensures that only known, digitally signed antimalware drivers can load early in the boot process, ahead of other boot-start drivers. ELAM complements Secure Boot but does not require UEFI or Secure Boot. This permits legitimate antimalware programs to get into memory and start doing their job before fake antivirus programs or other malicious code can act.

DNSSEC is fully integrated.

Data Classification with Rights Management Service is fully integrated so that you can control which users and groups can access which documents based on content or marked classification.

Managed Service Accounts, introduced in Server 2008 R2, maintain their own extremely long passwords, which are automatically reset every 30 days, all under the control of Active Directory in the enterprise.

Credential Guard uses virtualization-based security to isolate credential information, preventing password hashes and Kerberos tickets from being stolen. It runs an isolated Local Security Authority (LSA) process that is not accessible to the rest of the operating system. All binaries used by the isolated LSA are signed with certificates that are validated before they are launched in the protected environment, making pass-the-hash-type attacks far more difficult.

Windows Server 2016 includes Device Guard to ensure that only trusted software can be run on the server. Using virtualization-based security, Device Guard can limit what binaries can run on the system based on the organization’s policy. If anything other than the specified binaries tries to run, Windows Server 2016 blocks it and logs the failed attempt so that administrators can see that there has been a potential breach. Device Guard is also integrated with PowerShell so that you can authorize which scripts can run on your system.
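The sales-manager example in the NAP item above amounts to a health-policy check. The Python sketch below illustrates that logic only; the `ClientHealth` fields, the seven-day signature threshold, and the “full”/“restricted” labels are assumptions, not NAP’s actual interfaces.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ClientHealth:
    """Hypothetical health report a client presents when it connects."""
    fully_patched: bool
    approved_antivirus: bool
    signatures_updated: date


def access_level(client: ClientHealth, today: date, max_signature_age_days: int = 7) -> str:
    """Grant full access only to compliant clients; restrict everyone else."""
    signatures_current = today - client.signatures_updated <= timedelta(days=max_signature_age_days)
    if client.fully_patched and client.approved_antivirus and signatures_current:
        return "full"
    return "restricted"  # e.g., a remediation network until brought into compliance


laptop = ClientHealth(fully_patched=True, approved_antivirus=True, signatures_updated=date(2024, 3, 1))
```

The same laptop gets full access a few days after its last signature update but is restricted once the signatures age past the threshold, even though nothing else about it changed.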

The tools available in each subsequent release of the Windows Server OS are designed to increase the difficulty factor for attackers, eliminating known methods of exploitation. The challenge is in administering the security functions, although the integration of many of these via Active Directory makes this much more manageable than in the past.

Microsoft Security Compliance Manager

Microsoft provided a tool called Security Compliance Manager (SCM) to assist system and enterprise administrators with the configuration of security options across a wide range of Microsoft platforms. SCM allows administrators to use group policy objects (GPOs) to deploy security configurations across Internet Explorer, the desktop OSs, server OSs, and common applications such as Microsoft Office. Microsoft reluctantly retired SCM in the summer of 2017 in favor of a new tool set called Desired State Configuration (DSC).

Desired State Configuration (DSC)

Desired State Configuration (DSC) is a PowerShell-based approach to configuration management of a system. Rather than having documentation that describes the security settings for a system and expecting a user to set them, DSC performs the work via PowerShell functions. This makes security configuration a managed-by-code process that brings with it many advantages. Using DSC, it is easier and faster to adopt, implement, maintain, deploy, and share system configuration information. DSC brings the advantages of DevOps to system configuration in the Windows environment. While detailed PowerShell implementations are beyond the scope of this book, the concept of programmable configuration control is not. DSC is more than just PowerShell, because DSC configurations separate intent (or “what I want to do”) from execution (or “how I want to do it”). Because the specifics of each deployment are kept separate, a single DSC configuration can use configuration data to target dev, test, and production environments appropriately.
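DSC configurations are written in PowerShell, so the following Python sketch only mirrors the concept of separating intent from execution and configuration data. Every setting name and function here is hypothetical and does not correspond to a real DSC resource.

```python
# Intent ("what I want"): one configuration for every web server,
# parameterized by environment-specific data. Setting names are hypothetical.
def web_server_configuration(node: dict) -> dict:
    return {
        "web_server": "present",
        "telnet_client": "absent",
        "tls_min_version": node["tls_min_version"],
        "debug_logging": node["debug_logging"],
    }


# Configuration data: the per-environment specifics, kept out of the intent.
CONFIGURATION_DATA = {
    "dev": {"tls_min_version": "1.2", "debug_logging": True},
    "test": {"tls_min_version": "1.2", "debug_logging": True},
    "prod": {"tls_min_version": "1.3", "debug_logging": False},
}


def plan(desired: dict, current: dict) -> dict:
    """Execution ("how"): compute only the changes needed to reach the desired state."""
    return {name: value for name, value in desired.items() if current.get(name) != value}


prod_desired = web_server_configuration(CONFIGURATION_DATA["prod"])
prod_current = {"web_server": "present", "telnet_client": "present",
                "tls_min_version": "1.2", "debug_logging": False}
```

Calling `plan()` again after the changes are applied returns an empty dictionary, which loosely mirrors how DSC resources test the current state before setting it, so reapplying a configuration is safe.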

Microsoft Security Baselines

A security baseline is a group of Microsoft-recommended configuration settings with an explanation of their security impact. There are over 3000 Group Policy settings for Windows 10, which does not include over 1800 Internet Explorer 11 settings. Of these more than 4800 settings, only some are security related, and choosing which to set can be a laborious process. Security baselines bring an expert-based consensus view to this task. Microsoft provides a security compliance toolkit to facilitate the application of Microsoft-recommended baselines for a system. The Microsoft Security Compliance Toolkit (SCT) is a set of tools that allows enterprise security administrators to download, analyze, test, edit, and store Microsoft-recommended security configuration baselines for Windows.

Using the toolkit, administrators can compare their current group policy objects (GPOs) with Microsoft-recommended GPO baselines or other baselines. You can also edit them, store them in GPO backup file format, and apply them broadly through Active Directory or individually through local policy. The Security Compliance Toolkit consists of specific baselines based on OS and two tools—the Policy Analyzer tool and the Local Group Policy Object (LGPO) tool.

For further information, see Microsoft Security Compliance Toolkit 1.0 (https://docs.microsoft.com/en-us/windows/security/threat-protection/security-compliance-toolkit-10).

Microsoft Attack Surface Analyzer

One of the challenges in a modern enterprise is understanding the impact of system changes from the installation or upgrade of an application on a system. To help you overcome that challenge, Microsoft has released the Attack Surface Analyzer (ASA), a free tool that is run on a system before a change and again after it to analyze how the change affected various system properties.

Using ASA, developers can view changes in the attack surface resulting from the introduction of their code onto the Windows platform, and system administrators can assess the aggregate attack surface change resulting from the installation of an application. Security auditors can use the tool to evaluate the risk of a particular piece of software installed on the Windows platform. And if ASA is deployed in a baseline mode before an incident, security incident responders can potentially use ASA to gain a better understanding of the state of a system’s security during an investigation.
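The before-and-after approach behind ASA can be illustrated with a small Python sketch that diffs two scans by category. The categories and item labels are assumptions for illustration; ASA’s own output format and collectors are different and far more extensive.

```python
def attack_surface_delta(before: dict[str, set], after: dict[str, set]) -> dict[str, dict[str, set]]:
    """Per category, report items that appeared or disappeared between two scans."""
    delta = {}
    for category in before.keys() | after.keys():
        old, new = before.get(category, set()), after.get(category, set())
        added, removed = new - old, old - new
        if added or removed:
            delta[category] = {"added": added, "removed": removed}
    return delta


# Hypothetical scans taken before and after installing an application.
before = {"listening_ports": {443}, "services": {"web"}}
after = {
    "listening_ports": {443, 8080},
    "services": {"web", "app_updater"},
    "firewall_rules": {"allow inbound tcp/8080"},
}
```

The delta shows the new port, service, and firewall rule that the installation introduced, which is exactly the growth in attack surface an administrator would want to review.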

Group Policies

Microsoft defines a group policy as “an infrastructure used to deliver and apply one or more desired configurations or policy settings to a set of targeted users and computers within an Active Directory environment. This infrastructure consists of a Group Policy engine and multiple client-side extensions (CSEs) responsible for writing specific policy settings on target client computers.” Introduced with the Windows 2000 operating system, group policies are a great way to manage and configure systems centrally in an Active Directory environment (Windows NT had policies, but technically not “group policies”). Group policies can also be used to manage users, making these policies valuable tools in any large environment.

Within the Windows environment, group policies can be used to refine, set, or modify a system’s Registry settings, auditing and security policies, user environments, logon/logoff scripts, and so on. Policy settings are stored in a group policy object (GPO) and are referenced internally by the OS using a globally unique identifier (GUID). A single GPO can be linked to a site, a domain, or an entire organizational unit (OU) and filtered to apply only to specific users, groups, or computers, which makes updating common settings on large groups of users or systems much easier. Users and systems can have more than one GPO assigned and active, which can create conflicts between policies that must then be resolved at an attribute level. Group policies can also overwrite local policy settings, so the two should not be confused. Local policies are created and applied to a specific system (locally), are not user specific (you can’t have local policy X for user A and local policy Y for user B), and are overwritten by GPOs. Further confusing some administrators and users, policies can be applied at the local, site, domain, and OU levels. Policies are applied in hierarchical order: local, then site, then domain, and then OU. This means settings in a local policy can be overridden or reversed by settings in the domain policy if there is a conflict between the two policies. If there is no conflict, the policy settings are aggregated.

Windows Local Security Policies

Open a command prompt as either administrator or a user with administrator privileges on a Windows system. Type the command secpol.msc and press ENTER (this should bring up the Local Security Policy utility). Expand Account Policies on the left side of the Local Security Policy window (which should have a + next to it). Click Password Policy. Look in the right side of the Local Security Policy window. What is the minimum password length? What is the maximum password age in days? Now explore some of the policy settings, but be careful! Changes made to the local security policy can affect the functionality or usability of your system.
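The two settings the exercise asks about can be expressed as a simple check. Windows enforces these policies itself, so this Python sketch only illustrates what minimum length and maximum age mean; the function name and the sample values are hypothetical.

```python
from datetime import date, timedelta


def password_findings(password: str, last_changed: date, today: date,
                      min_length: int, max_age_days: int) -> list[str]:
    """Check a password against minimum-length and maximum-age settings."""
    findings = []
    if len(password) < min_length:
        findings.append(f"shorter than {min_length} characters")
    # In Windows, a maximum password age of 0 means passwords never expire.
    if max_age_days > 0 and today - last_changed > timedelta(days=max_age_days):
        findings.append(f"older than {max_age_days} days")
    return findings
```

For example, a five-character password last changed 60 days ago fails both a 14-character minimum and a 42-day maximum age.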

Creating GPOs is usually done through either the Group Policy Object Editor, shown in Figure 14.1, or the Group Policy Management Console (GPMC). The GPMC is a more powerful GUI-based tool that can summarize GPO settings; simplify security filtering settings; back up, clone, restore, and edit GPOs; and perform other tasks. After creating a GPO, administrators will associate it with the desired targets. After association, group policies operate on a pull model, meaning that at a semi-random interval, the Group Policy client will collect and apply any policies associated with the system and the currently logged-on user.

• Figure 14.1 Group Policy Object Editor

Microsoft group policies can provide many useful options, including the following:

Network location awareness Systems are now “aware” of which network they are connected to and can apply different GPOs as needed. For example, a system can have a very restrictive GPO when connected to a public network and a less restrictive GPO when connected to an internal, trusted network.

Ability to process without ICMP Older group policy processes would occasionally time out or fail completely if the targeted system did not respond to ICMP packets. Current implementations in Windows Vista and Windows 7 do not rely on ICMP during the GPO update process.

VPN compatibility As a side benefit of network location awareness, mobile users who connect through VPNs can receive a GPO update in the background after connecting to the corporate network via VPN.

Power management Starting with Windows Vista, power management settings can be configured using GPOs.

Device access blocking Under Windows Vista and Windows 7, policy settings have been added that allow administrators to restrict user access to USB drives, CD-RW drives, DVD-RW drives, and other removable media.

Location-based printing Users can be assigned to various printers based on their location. As mobile users move, their printer locations can be updated to the closest local printer.

In Windows, policies are applied in hierarchical order. Local policies get applied first, then site policies, then domain policies, and finally OU policies. If a setting from a later policy conflicts with a setting from an earlier policy, the setting from the later policy “wins” and is applied. Keep this in mind when building group policies.
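To make the "later policy wins" rule concrete, here is a minimal Python sketch that models each policy level as a dictionary and merges the levels in local, site, domain, OU order. The setting names are hypothetical placeholders rather than real GPO paths. The sketch also ignores other aspects of real GPO processing, such as enforced links, blocked inheritance, and multiple GPOs linked at the same level.

```python
# Minimal model of group policy precedence: local, then site, then domain,
# then OU. Each level is a dict of setting -> value; the setting names are
# hypothetical placeholders, not real GPO paths.
APPLY_ORDER = ["local", "site", "domain", "ou"]

def effective_policy(policies):
    """Merge policy levels in application order; later values overwrite earlier ones."""
    result = {}
    for level in APPLY_ORDER:
        result.update(policies.get(level, {}))  # missing levels contribute nothing
    return result

policies = {
    "local":  {"screen_lock_minutes": 30, "usb_storage": "allowed"},
    "domain": {"screen_lock_minutes": 15},
    "ou":     {"usb_storage": "blocked"},
}

print(effective_policy(policies))
# {'screen_lock_minutes': 15, 'usb_storage': 'blocked'}
```

The domain setting overrides the local screen-lock value, and the OU setting, applied last, overrides the local USB setting.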

Hardening UNIX- or Linux-Based Operating Systems

Although you do not have the advantage of a single manufacturer for all UNIX operating systems (as you do with Windows operating systems), the concepts behind securing different UNIX- or Linux-based operating systems are similar, regardless of whether the manufacturer is Red Hat or Sun Microsystems. Indeed, the overall tasks involved with hardening all operating systems are remarkably similar.

Establishing General UNIX Baselines

General UNIX baselining follows similar concepts as baselining for Windows OSs: disable unnecessary services, restrict permissions on files and directories, remove unnecessary software, apply patches, remove unnecessary users, and apply password guidelines. Some versions of UNIX provide GUI-based tools for these tasks, while others require administrators to edit configuration files manually. In most cases, anything that can be accomplished through a GUI can be accomplished from the command line or by manually editing configuration files.

Like Windows systems, UNIX systems are easiest to secure and baseline if they are providing a single service or performing a single function, such as acting as a Simple Mail Transfer Protocol (SMTP) server or web server. Before performing any software installations or baselining, the administrator should define the purpose of the system and identify all required capabilities and functions. One nice advantage of UNIX systems is that you typically have complete control over what does or does not get installed on the system. During the installation process, the administrator can select which services and applications are placed on the system, which provides an opportunity to leave out services and applications that will not be required. However, this assumes that the administrator knows and understands the purpose of the system, which is not always the case. In other cases, the function of the system itself may have changed.

Runlevels

Runlevels describe the state of init (the initialization process) and which system services are running on a UNIX system. For example, runlevel 0 is shutdown. Runlevel 1 is single-user mode (typically used for administrative purposes). Runlevels 2 through 5 are user defined (that is, administrators can define what services are running at each level), although many distributions assign conventional meanings to them. Runlevel 6 is reboot.

Services on a UNIX system (called daemons) can be controlled through a number of different mechanisms. As the root user, an administrator can start and stop services manually from the command line or through a GUI tool. The OS can also stop and start services automatically through configuration files (usually contained in the /etc directory). (Note that UNIX systems vary a good deal in this regard, as some use a super-server process, such as inetd, while others have individual configuration files for each network service.) Unlike Windows, UNIX systems can also have different runlevels in which the system can be configured to bring up different services, depending on the runlevel selected.
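As an illustration of per-runlevel service configuration, the sketch below assumes the traditional SysV init layout. In that layout, each runlevel has a directory (such as /etc/rc3.d) of links named S<NN><service> to start a service or K<NN><service> to stop it, with the two-digit number controlling the order. The directory listing is invented, and many current distributions use systemd, where this layout does not apply.

```python
import re

# SysV-style link names: S<NN><service> (start) or K<NN><service> (stop).
LINK = re.compile(r"^([SK])(\d{2})(.+)$")

def plan_runlevel(entries):
    """Return (to_stop, to_start) service lists for one runlevel directory.

    Sorting the names orders links by their two-digit number within each
    type; K (stop) links are run before S (start) links.
    """
    to_stop, to_start = [], []
    for name in sorted(entries):
        match = LINK.match(name)
        if not match:
            continue  # skip files that are not start/stop links, e.g. README
        action, _order, service = match.groups()
        if action == "K":
            to_stop.append(service)
        else:
            to_start.append(service)
    return to_stop, to_start

# Invented contents of a runlevel 3 directory such as /etc/rc3.d.
rc3 = ["S55sshd", "K01bluetooth", "S10network", "README", "K20nfs"]
print(plan_runlevel(rc3))  # (['bluetooth', 'nfs'], ['network', 'sshd'])
```

Under this convention, one way to keep a service from starting at a given runlevel is to remove its S link from that runlevel's directory.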

Linux Hardening

One of the “strengths” of Linux is the ability of a sysadmin to fully control all of its features, the ultimate in customizable solutions. This can lead to leaner and faster processing, but it can also lead to security problems. Securing a Linux environment involves a couple of different types of operations: how a sysadmin operates and how the system is configured. What’s more, there are the intricacies of the Linux system itself.

Linux has several separate operating spaces, each with its own characteristics. The application (user) space is where user applications exist and run. These sit above the kernel and can be changed while the system is operating simply by restarting the application. The kernel space is integral to the system and typically can be changed only by rebooting. Thus, kernel updates generally require a reboot to finish and become active.

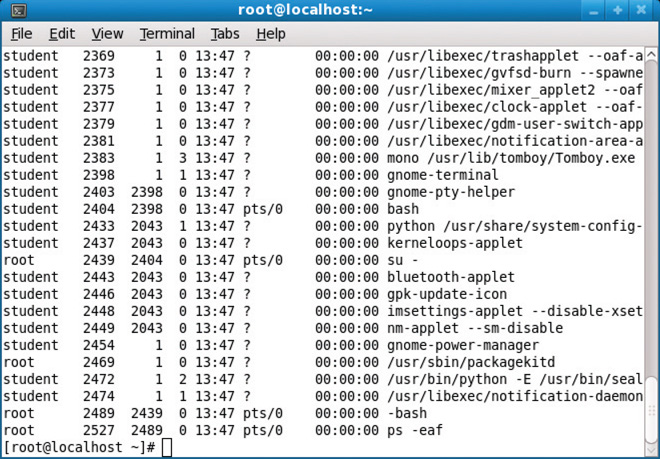

Securing Linux is in many ways like securing any other operating system. Securing services, keeping software up to date, and enforcing policies are the same objectives regardless of the type or version of OS. The differences lie in how one achieves these objectives. Using passwords as an example, there is no native centralized method like Active Directory and group policies. Instead, these functions are controlled granularly using commands on the system. It is possible to manage passwords to the same degree as through unified systems; it just takes a bit more work. The same goes for controlling access to administrative or root accounts.

On a running UNIX system, you can see which processes, applications, and services are running by using the process status, or ps, command, as shown in Figure 14.2. To stop a running service, identify the service by its unique process identifier (PID) and then use the kill command to stop it. For example, to stop the bluetooth-applet service in Figure 14.2, you would use the command kill 2443. To prevent this service from starting again when the system is rebooted, you would have to modify the appropriate runlevels to remove this service, as shown in Figure 14.2, or modify the configuration files that control this service.

• Figure 14.2 The ps command run on a Fedora system
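By default, the kill command sends the SIGTERM signal to the target PID. The following Python sketch does the same thing with os.kill. So that it can run safely anywhere, it starts its own throwaway child process instead of touching a real service like bluetooth-applet, and it assumes a POSIX system such as Linux. The helper name stop_process is hypothetical.

```python
import os
import signal
import subprocess
import sys

def stop_process(pid):
    """Ask a process to terminate, as `kill <pid>` does (SIGTERM by default)."""
    os.kill(pid, signal.SIGTERM)

# Launch a long-running stand-in "service" so no real process is affected.
child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
print("started PID", child.pid)

stop_process(child.pid)
status = child.wait()  # on POSIX, a negative code means "killed by that signal"
print("exit status", status)  # -15 (that is, -SIGTERM) on Linux
```

As with kill 2443, this stops the process only until the next reboot. The runlevel or configuration changes described above are still needed to keep the service from coming back.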

Linux is built around the concept of a file: everything is a file. Regular files are files, as are directories. Devices are files, I/O locations are files, and conduits between programs, called pipes, are files. Making everything addressable as a file makes permissions easier to manage. Users are not files; they are subjects in the subject-object model. Subjects act upon objects according to permissions. Users exist individually and in groups, and permissions are layered among the object’s owner, its group, and all other users. In Linux, a group is a name for a list of users; this allows for shorter access control entry (ACE) lists on objects, because a single group entry can stand in for many individual users. When a subject attempts to act upon an object, the security kernel examines the object’s access control entries until it finds a match. If no entry matches, the action is not allowed.
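The owner/group/others matching described above can be modeled in a few lines. This simplified Python sketch, with hypothetical function and parameter names, checks only the traditional three permission classes and uses the first class that matches. Real kernels also account for root privileges, ACLs, and capabilities, which are left out here.

```python
READ, WRITE, EXECUTE = 4, 2, 1

def allowed(uid, gids, owner_uid, group_gid, mode, requested):
    """Return True if a user (uid plus a set of group ids) may perform
    `requested` (a bitmask of READ/WRITE/EXECUTE) on an object with the
    given owner, group, and octal permission mode.

    Only the first matching class is consulted: owner, then group, then others.
    """
    if uid == owner_uid:
        granted = (mode >> 6) & 0o7   # owner bits
    elif group_gid in gids:
        granted = (mode >> 3) & 0o7   # group bits
    else:
        granted = mode & 0o7          # "other" bits
    return (granted & requested) == requested

# A file with mode 640, owned by uid 1000 and group 50.
print(allowed(1000, {1000}, 1000, 50, 0o640, READ | WRITE))  # True  (owner: rw-)
print(allowed(1001, {50}, 1000, 50, 0o640, READ))            # True  (group: r--)
print(allowed(1001, {50}, 1000, 50, 0o640, WRITE))           # False (group lacks w)
print(allowed(2000, {2000}, 1000, 50, 0o640, READ))          # False (others: ---)
```

One consequence of first-match evaluation: a file with mode 070 denies its owner access even if the owner also belongs to the file's group, because the owner class matches first and grants nothing.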

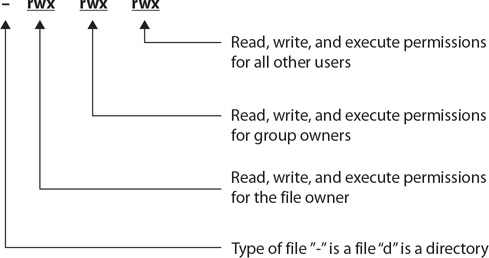

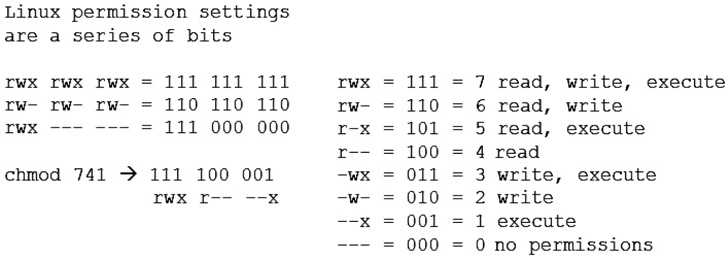

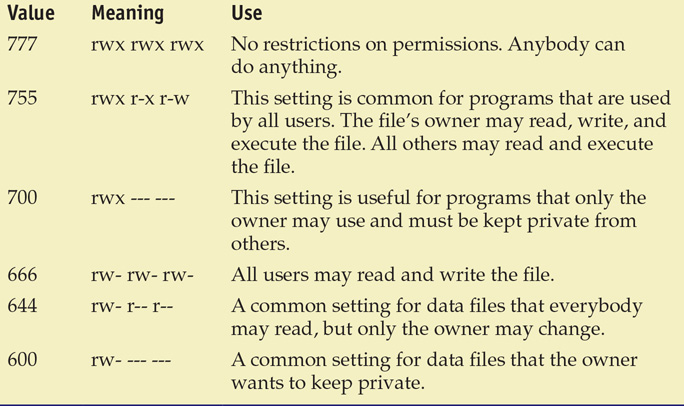

Permissions on files are expressed in bit patterns, as illustrated in Figures 14.3 and 14.4. Permissions are modified using the chmod command with a three-digit octal number that translates to the appropriate set of read, write, and execute permissions for the item. Figure 14.3 illustrates how the permissions are displayed during a file listing as well as how the relative positions relate to the owner, group, and others. Figure 14.4 illustrates the decoding pattern of the bit structure.

• Figure 14.3 Linux permissions listing

• Figure 14.4 Linux permission bit sequence
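The decoding shown in Figure 14.4 can be expressed directly in code. This Python sketch converts between a three-digit octal mode, as passed to chmod, and the nine-character rwx string seen in a file listing. The file-type character at the front of an ls -l line is left out, and the function names are hypothetical.

```python
def mode_to_string(mode):
    """Decode an octal permission value (e.g. 0o755) into 'rwxr-xr-x'."""
    chars = []
    for shift in (6, 3, 0):              # owner, group, others
        digit = (mode >> shift) & 0o7    # one octal digit = three bits
        chars.append("r" if digit & 4 else "-")
        chars.append("w" if digit & 2 else "-")
        chars.append("x" if digit & 1 else "-")
    return "".join(chars)

def string_to_mode(perms):
    """Encode a nine-character rwx string back into an octal value."""
    if len(perms) != 9:
        raise ValueError("expected 9 permission characters")
    mode = 0
    for i, ch in enumerate(perms):
        if ch != "-":
            mode |= 1 << (8 - i)         # leftmost character is the highest bit
    return mode

for m in (0o755, 0o644, 0o700, 0o600):
    print(oct(m), mode_to_string(m))
# 0o755 rwxr-xr-x
# 0o644 rw-r--r--
# 0o700 rwx------
# 0o600 rw-------
```

Because chmod's argument is octal, the string "755" should be parsed with int("755", 8), which gives the same value as the literal 0o755.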

The permission patterns most frequently used on Linux systems are listed in Table 14.1.

Table 14.1 Common Linux File Permissions

For applications in the user space on a Linux system, setting the correct permissions is extremely important. These permissions protect configuration files and other settings that enable or disable a great deal of functionality, and if set erroneously, they could allow attackers to perform a wide range of attacks, including installing malware that can watch other users. Used well, this fine-grained control makes Linux an excellent system, with great performance and capability. The downside is that doing these things securely in today’s computing environment requires significant expertise.

Directories use the same nomenclature as files for permissions, but with minor differences. An r allows the directory’s contents to be listed. A w allows entries to be created, deleted, or renamed in the directory, and an x allows the directory to be entered. Neither r nor w is of much use without x: without x, w has no effect, and r reveals only the names of the entries, not their contents or attributes. A setting of 777 indicates that anyone can list, create, and delete files in the directory. 755 gives the owner full access, while the group and others may only enter the directory and list its files. 700 restricts access to the owner only.
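To see the directory modes above on a real system, the following Python sketch applies 777, 755, and 700 to a scratch directory and reads each setting back with the standard library's stat.filemode. It assumes a POSIX system (on Windows, os.chmod only controls the read-only flag) and uses a temporary directory so nothing real is changed. The helper name apply_mode is hypothetical.

```python
import os
import stat
import tempfile

def apply_mode(path, mode):
    """chmod `path` to `mode` and return the ls -l style permission string."""
    os.chmod(path, mode)
    return stat.filemode(os.stat(path).st_mode)

with tempfile.TemporaryDirectory() as scratch:
    for mode in (0o777, 0o755, 0o700):
        print(oct(mode), apply_mode(scratch, mode))
# 0o777 drwxrwxrwx
# 0o755 drwxr-xr-x
# 0o700 drwx------
```

The leading d in each string marks the entry as a directory; the remaining nine characters follow the same owner, group, and others layout used for files.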

There are times when a user needs more permissions than their account holds, such as needing root permission to perform a task. Rather than logging in as root, and thus losing their individual identity in the logs, the user can use the superuser command, su, to assume root privilege, provided they know the root password.

Antimalware

In the early days of PC use, threats were limited: most home users were not connected to the Internet 24/7 through broadband connections, and the most common threat was a virus passed from computer to computer via an infected floppy disk (much like the medical definition, a computer virus is something that can infect the host and replicate itself). But things have changed dramatically since those early days, and current threats pose a much greater risk than ever before. According to the SANS Internet Storm Center, the average survival time of an unpatched Windows PC on the Internet is less than 60 minutes (http://isc.sans.org/survivaltime.html). This is the estimated time before an automated probe finds the system, penetrates it, and compromises it. Automated probes from botnets and worms are not the only threats roaming the Internet; there are also viruses and malware spread by e-mail, phishing, infected web sites that execute code on your system when you visit them, adware, spyware, and so on. Fortunately, as the threats increase in complexity and capability, so do the products designed to stop them.

Malware

Malware comes in many forms and is covered specifically in Chapter 15. Antivirus solutions and proper workstation configurations are part of a defensive posture against various forms of malware. Additional steps include policy and procedure actions, prohibiting file sharing via USB or external media, and prohibiting access to certain web sites.

Antivirus

Antivirus (AV) products attempt to identify, neutralize, or remove malicious programs, macros, and files. These products were initially designed to detect and remove computer viruses, though many of the antivirus products are now bundled with additional security products and features.

Although antivirus products have had over two decades to refine their capabilities, the purpose of the antivirus products remains the same: to detect and eliminate computer viruses and malware. Most antivirus products combine the following approaches when scanning for viruses:

Signature-based scanning Much like an intrusion detection system (IDS), the antivirus products scan programs, files, macros, e-mails, and other data for known worms, viruses, and malware. The antivirus product contains a virus dictionary with thousands of known virus signatures that must be frequently updated, as new viruses are discovered daily. This approach will catch known viruses but is limited by the virus dictionary—what it does not know about it cannot catch.

Heuristic scanning (or analysis) Heuristic scanning does not rely on a virus dictionary. Instead, it looks for suspicious behavior—anything that does not fit into a “normal” pattern of behavior for the OS and applications running on the system being protected.
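Signature-based scanning can be illustrated with a toy scanner that searches a buffer for known byte patterns. The signature names and byte strings below are invented for the example; production dictionaries are far larger and use more sophisticated matching than a plain substring search.

```python
# Invented "virus dictionary": signature name -> byte pattern to look for.
SIGNATURES = {
    "Example.TestWorm": bytes.fromhex("deadbeef4242"),
    "Example.MacroVirus": b"AutoOpen_Shell(",
}

def scan(data, signatures=SIGNATURES):
    """Return the sorted names of all signatures whose pattern occurs in `data`."""
    return sorted(name for name, pattern in signatures.items() if pattern in data)

sample = b"header..." + bytes.fromhex("deadbeef4242") + b"...trailer"
print(scan(sample))         # ['Example.TestWorm']
print(scan(b"clean file"))  # []

# Changing a single byte of the known pattern is enough to evade this scanner.
print(scan(sample.replace(b"\x42\x42", b"\x42\x43")))  # []
```

The last case shows the limitation described above: a scanner that relies only on its dictionary cannot catch what it does not already know about.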

Most current antivirus software packages provide protection against a wide range of threats, including viruses, worms, Trojans, and other malware. Use of an up-to-date antivirus package is essential in the current threat environment.

As signature-based scanning is a familiar concept, let’s examine heuristic scanning in more detail. Heuristic scanning typically looks for commands or instructions that are not normally found in application programs, such as attempts to access a reserved memory register. Most antivirus products use either a weight-based system or a rule-based system in their heuristic scanning (more effective products use a combination of both techniques). A weight-based system rates every suspicious behavior based on the degree of threat associated with that behavior. If the set threshold is passed based on a single behavior or a combination of behaviors, the antivirus product will treat the process, application, macro, and so on that is performing the behavior(s) as a threat to the system. A rule-based system compares activity to a set of rules meant to detect and identify malicious software. If part of the software matches a rule, or if a process, application, macro, and so on performs a behavior that matches a rule, the antivirus software will treat that as a threat to the local system.

Heuristic scanning is a method of detecting potentially malicious or “virus-like” behavior by examining what a program or section of code does. Anything that is “suspicious” or potentially “malicious” is closely examined to determine whether or not it is a threat to the system. Using heuristic scanning, an antivirus product attempts to identify new viruses or heavily modified versions of existing viruses before they can damage your system.
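The weight-based and rule-based approaches can be combined, as the more effective products do. In this toy Python sketch, the behavior names, weights, threshold, and rules are all hypothetical values chosen for illustration, not taken from any real antivirus product.

```python
# Hypothetical weights: how suspicious each observed behavior is.
WEIGHTS = {
    "copies_itself": 50,
    "writes_to_system_dir": 40,
    "modifies_startup_entries": 30,
    "opens_network_listener": 20,
    "reads_user_documents": 10,
    "disables_security_tool": 60,
}
THRESHOLD = 70  # hypothetical score at which a sample is treated as a threat

# Hypothetical rules: if every behavior in a rule is observed, flag the sample.
RULES = {
    "self-replication": {"copies_itself", "writes_to_system_dir"},
    "security tampering": {"disables_security_tool"},
}

def assess(behaviors):
    """Return (is_threat, score, matched_rules) for a collection of observed behaviors."""
    observed = set(behaviors)
    # Weight-based: sum the weights of all suspicious behaviors seen.
    score = sum(WEIGHTS.get(b, 0) for b in observed)
    # Rule-based: a rule matches when all of its behaviors were observed.
    matched = sorted(name for name, needed in RULES.items() if needed <= observed)
    return (score >= THRESHOLD or bool(matched)), score, matched

print(assess({"reads_user_documents"}))
# (False, 10, [])
print(assess({"modifies_startup_entries", "writes_to_system_dir"}))
# (True, 70, [])
print(assess({"disables_security_tool"}))
# (True, 60, ['security tampering'])
```

The third case shows why combining the techniques helps: the behavior falls below the weight threshold on its own, but a rule still catches it.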