CHAPTER 11

Low Bit-Rate Coding: Codec Design

In the view of many observers, compared to newer coding methods, linear pulse-code modulation (PCM) is a powerful but inefficient dinosaur. Because of its gargantuan appetite for bits, PCM coding is not suitable for many audio applications. There is an intense desire to achieve lower bit rates because low bit-rate coding opens so many new applications for digital audio (and video). Responding to the need, audio engineers have devised many lossy and lossless codecs. Some codecs use proprietary designs that are kept secret, some are described in standards that can be licensed, while others are open source. In any case, it would be difficult to overstate the importance of low bit-rate codecs. Codecs can be found in countless products used in everyday life, and their development is largely responsible for the rapidly expanding use of digital audio techniques in storage and transmission applications.

Early Codecs

Although the history of perceptual codecs is relatively brief, several important coding methods have been developed, which in turn inspired the development of more advanced methods. Because of the rapid development of the field, most early codecs are no longer widely used, but they established methods and benchmarks on which modern codecs are based.

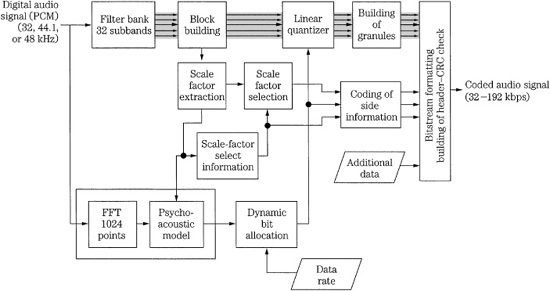

MUSICAM (Masking pattern adapted Universal Subband Integrated Coding And Multiplexing) was an early perceptual coding algorithm that achieved data reduction based on subband analysis and psychoacoustic principles. Derived from MASCAM (Masking pattern Adapted Subband Coding And Multiplexing), MUSICAM divides the input audio signal into 32 subbands with a polyphase filter bank. With a sampling frequency of 48 kHz, the subbands are each 750 Hz wide. A fast Fourier transform (FFT) analysis supplies spectral data to a perceptual coding model; it uses the absolute hearing threshold and masking to calculate the minimum signal-to-mask ratio (SMR) value in each subband. Each subband is given a 6-bit scale factor according to the peak value in the subband’s 12 samples and quantized with a variable word ranging from 0 to 15 bits. Scale factors are calculated over a 24-ms interval, corresponding to 36 samples. A subband is quantized only if it contains audible signals above the masking threshold. Subbands with signals well above the threshold are coded with more bits. In other words, within a given bit rate, bits are assigned where they are most needed. The data rate is reduced to perhaps 128 kbps per monaural channel (256 kbps for stereo). Extensive tests of 128 kbps MUSICAM showed that the codec achieves fidelity that is indistinguishable from a CD source, that it is monophonically compatible, that at least two cascaded codec stages produce no audible degradation, and that it is preferred to very high-quality FM signals. In addition, a bit-error rate of up to 10−3 was nearly imperceptible. MUSICAM was developed by CCETT, IRT, Matsushita, and Philips.

OCF (Optimal Coding in the Frequency domain) and PXFM (Perceptual Transform Coding) are similar perceptual transform codecs. A later version of OCF uses a modified discrete cosine transform (MDCT) with a block length of 512 samples and a 1024-sample window. PXFM uses an FFT with a block length of 2048 samples and an overlap of 1/16. PXFM uses critical-band analysis of the signal’s power spectrum, tonality estimation, and a spreading function to calculate the masking threshold. PXFM uses a rate loop to optimize quantization. A stereo version of PXFM further takes advantage of correlation in the frequency domain between left and right channels. OCF uses an analysis-by-synthesis method with two iteration loops. An outer (distortion) loop adjusts quantization step size to ensure that quantization noise is below the masking threshold in each critical band. An inner (rate) loop uses a nonuniform quantizer and Huffman coding to optimize the word length needed to quantize spectral values. OCF was devised by Karlheinz Brandenburg in 1987, and PXFM was devised by James Johnston in 1988.

The ASPEC (Audio Spectral Perceptual Entropy Coding) standard described a MDCT transform codec with relatively high complexity and the ability to code audio for low bit-rate applications such as ISDN. ASPEC was developed jointly using work by AT&T Bell Laboratories, Fraunhofer Institute, Thomson, and CNET.

MPEG-1 Audio Standard

The International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) formed the Moving Picture Experts Group (MPEG) in 1988 to devise data reduction techniques for audio and video. MPEG is a working group of the ISO/IEC and is formally known as ISO/IEC JTC 1/SC 29/WG 11; MPEG documents are published under this nomenclature. The MPEG group has developed several codec standards. It first devised the ISO/IEC International Standard 11172 “Coding of Moving Pictures and Associated Audio for Digital Storage Media at up to about 1.5 Mbit/s” for reduced data rate coding of digital video and audio signals; the standard was finalized in November 1992. It is commonly known as MPEG-1 (the acronym is pronounced “m-peg”) and was the first international standard for the perceptual coding of high-quality audio.

The MPEG-1 standard has three major parts: system (multiplexed video and audio), video, and audio; a fourth part defines conformance testing. The maximum audio bit rate is set at 1.856 Mbps. The audio portion of the standard (11172-3) has found many applications. It supports coding of 32-, 44.1-, and 48-kHz PCM input data and output bit rates ranging from approximately 32 kbps to 224 kbps/channel (64 kbps to 448 kbps for stereo). Because data networks use data rates of 64 kbps (8 bits sampled at 8 kHz), most codecs output a data channel rate that is a multiple of 64.

The MPEG-1 standard was originally developed to support audio and video coding for CD playback within the CD’s bandwidth of 1.41 Mbps. However, the audio standard supports a range of bit rates as well as monaural coding, dual-channel monaural coding, and stereo coding. In addition, in the joint-stereo mode, stereophonic irrelevance and redundancy can be optionally exploited to reduce the bit rate. Stereo audio bit rates below 256 kbps are useful for applications requiring more than two audio channels while maintaining full-screen motion video. Rates above 256 kbps are useful for applications requiring higher audio quality, and partial screen video images. In either case, the bit allocation is dynamically adaptable according to need. The MPEG-1 audio standard is based on data reduction algorithms such as MUSICAM and ASPEC.

Development of the audio portion of the MPEG-1 audio standard was greatly influenced by tests conducted by Swedish Radio in July 1990. MUSICAM coding was judged superior in complexity and coding delay. However, the ASPEC transform codec provided superior sound quality at low data rates. The architectures of the MUSICAM and ASPEC coding methods formed the basis for the ISO/MPEG-1 audio standard with MUSICAM describing Layers I and II and ASPEC describing Layer III. The 11172-3 standard describes three layers of audio coding, each with different applications. Specifically, Layer I describes the least sophisticated method that requires relatively high data rates (approximately 192 kbps/channel). Layer II is based on Layer I but is more complex and operates at somewhat lower data rates (approximately 96 kbps to 128 kbps/channel). Layer IIA is a joint-stereo version operating at 128 kbps and 192 kbps per stereo pair. Layer III is somewhat conceptually different from I and II, is the most sophisticated, and operates at the lowest data rate (approximately 64 kbps/channel). The increased complexity from Layer I to III is reflected in the fact that at low data rates, Layer III will perform best for audio fidelity. Generally, Layers II, IIA, and III have been judged to be acceptable for some broadcast applications; in other words, operation at 128 kbps/channel does not impair the quality of the original audio signal. The three layers (I, II, and III) all refer to audio coding and should not be confused with different MPEG standards such as MPEG-1 and MPEG-2.

In very general terms, all three layer codecs operate similarly. The audio signal passes through a filter bank and is analyzed in the frequency domain. The sub-sampled components are regarded as subband values, or spectral coefficients. The output of a side-chain transform, or the filter bank itself, is used to estimate masking thresholds. The subband values or spectral coefficients are quantized according to a psychoacoustic model. Coded mapped samples and bit allocation information are packed into frames prior to transmission. In each case, the encoders are not defined by the MPEG-1 standard, only the decoders are specified. This forward-adaptive bit allocation permits improvements in encoding methods, particularly in the psychoacoustic modeling, provided the data output from the encoder can be decoded according to the standard. In other words, existing codecs will play data from improved encoders.

The MPEG-1 layers support joint-stereo coding using intensity coding. Left/right high-frequency subband samples are summed into one channel but scale factors remain left/right independent. The decoder forms the envelopes of the original left and right channels using the scale factors. The spectral shape of the left and right channels is the same in these upper subbands, but their amplitudes differ. The bound for joint coding is selectable at four frequencies: 3, 6, 9, and 12 kHz at a 48-kHz sampling frequency; the bound can be changed from one frame to another. Care must be taken to avoid aliasing between subbands and negative correlation between channels when joint coding. Layer III also supports M/S sum and difference coding between channels, as described below. Joint stereo coding increases codec complexity only slightly.

Listening tests demonstrated that either Layer II or III at 2 × 128 kbps or 192 kbps joint stereo can convey a stereo audio program with no audible degradation compared to a 16-bit PCM coding. If a higher data rate of 384 kbps is allowed, Layer I also achieves transparency compared to 16-bit PCM. At rates as low as 128 kbps, Layers II and III can convey stereo material that is subjectively very close to 16-bit fidelity. Tests also have studied the effects of cascading MPEG codecs. For example, in one experiment, critical audio material was passed through four Layer II codec stages at 192 kbps and two stages at 128 kbps, and they were found to be transparent. On the other hand, a cascade of five codec stages at 128 kbps was not transparent for all music programs. More specifically, a source reduced to 384 kbps with MPEG-1 Layer II sustained about 15 code/decodes before noise became significant; however, at 192 kbps, only two codings were possible. These particular tests did not enjoy the benefit of joint-stereo coding, and as with other perceptual codecs, performance can be improved by substituting new psychoacoustic models in the encoder.

The similarity between the MPEG-1 layers promotes tandem operation. For example, Layer III data can be transcoded to Layer II without returning to the analog domain (other digital processing is required, however). A full MPEG-1 decoder must be able to decode its layer, and all layers below it. There are also Layer X codecs that only code one layer. Layer I preserves highest fidelity for acquisition and production work at high bit rates where six or more codings can take place. Layer II distributes programs efficiently where two codings can occur. Layer III is most efficient, with lowest rates, with somewhat lower fidelity, and a single coding.

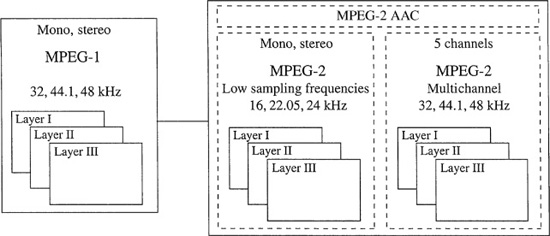

MPEG-2 incorporates the three audio layers of MPEG-1 and adds additional features, principally surround sound. However, MPEG-2 decoders can play MPEG-1 audio files, and MPEG-1 two-channel decoders can decode stereo information from surround-sound MPEG-2 files.

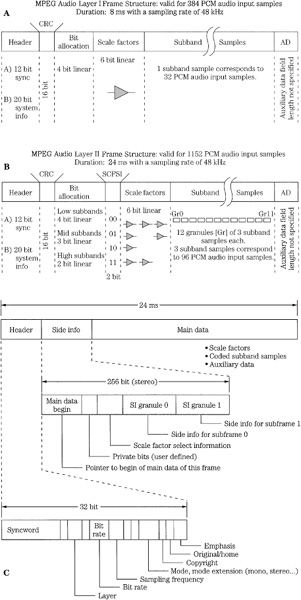

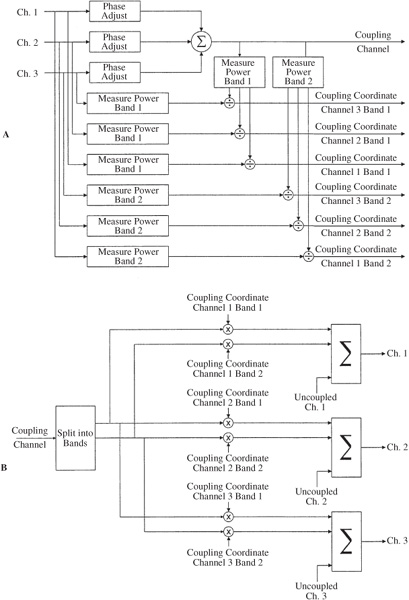

MPEG Bitstream Format

In the MPEG elementary bitstream, data is transmitted in frames, as shown in Fig. 11.1. Each frame is individually decodable. The length of a frame depends on the particular layer and MPEG algorithm used. In MPEG-1, Layers II and III have the same frame length representing 1152 audio samples. Unlike the other layers, in Layer III the number of bits per frame can vary; this allocation provides flexibility according to the coding demands of the audio signal.

A frame begins with a 32-bit ISO header with a 12-bit synchronizing pattern and 20 bits of general data on layer, bit-rate index, sampling frequency, type of emphasis, and so on. This is followed by an optional 16-bit CRCC check word with generation polynomial x16 + x15 + x2 + 1. Subsequent fields describe bit allocation data (number of bits used to code subband samples), scale factor selection data, and scale factors themselves. This varies from layer to layer. For example, Layer I sends a fixed 6-bit scale factor for each coded subband. Layer II examines scale factors and uses dynamic scale factor selection information (SCFSI) to avoid redundancy; this reduces the scale factor bit rate by a factor of two.

The largest part of the frame is occupied by subband samples. Again, this content varies among layers. In Layer II, for example, samples are grouped in granules. The length of the field is determined by a bit-rate index, but the bit allocation determines the actual number of bits used to code the signal. If the frame length exceeds the number of bits allocated, the remainder of the frame can be occupied by ancillary data (this feature is used by MPEG-2, for example). Ancillary data is coded similarly to primary frame data. Frames contain 384 samples in Layer I and 1152 samples in II and III (or 8 ms and 24 ms, respectively, at a 48-kHz sampling frequency).

FIGURE 11.1 Structure of the MPEG-1 audio Layer I, II, and III bitstreams. The header and some other fields are common, but other fields differ. Higher-level codecs can transcode lower-level bitstreams. A. Layer I bitstream format. B. Layer II bitstream format. C. Layer III bitstream format.

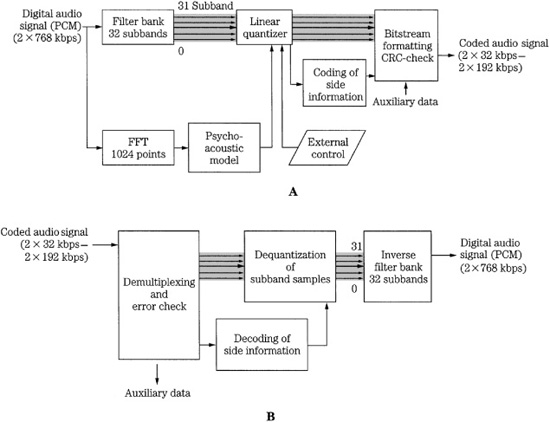

MPEG-1 Layer I

The MPEG-1 Layer I codec is a simplified version of the MUSICAM codec. It is a subband codec, designed to provide high fidelity with low complexity, but at a high bit rate. Block diagrams of a Layer I encoder and decoder (which also applies to Layer II) are shown in Fig. 11.2. A polyphase filter splits the wideband signal into 32 subbands of equal width. The filter is critically sampled; there is the same number of samples in the analyzed domain as in the time domain. Adjacent subbands overlap; a single frequency can affect two subbands. The filter and its inverse are not lossless; however, the error is small. The filter bank bands are all equal width, but the ear’s critical bands are not; this is compensated for in the bit allocation algorithm. For example, lower bands are usually assigned more bits, increasing their resolution over higher bands. This polyphase filter bank with 32 subbands is used in all three layers; Layer III adds additional hybrid processing.

The filter bank outputs 32 samples, one sample per band, for every 32 input samples. In Layer I, 12 subband samples from each of the 32 subbands are grouped to form a frame; this represents 384 wideband samples. At a 48-kHz sampling frequency, this comprises a block of 8 ms. Each subband group of 12 samples is given a bit allocation; subbands judged inaudible are given a zero allocation. Based on the calculated masking threshold (just audible noise), the bit allocation determines the number of bits used to quantize those samples. A floating-point notation is used to code samples; the mantissa determines resolution and the exponent determines dynamic range. A fixed scale factor exponent is computed for each subband with a nonzero allocation; it is based on the largest sample value in the subband. Each of the 12 subband samples in a block is normalized by dividing it by the same scale factor; this optimizes quantizer resolution.

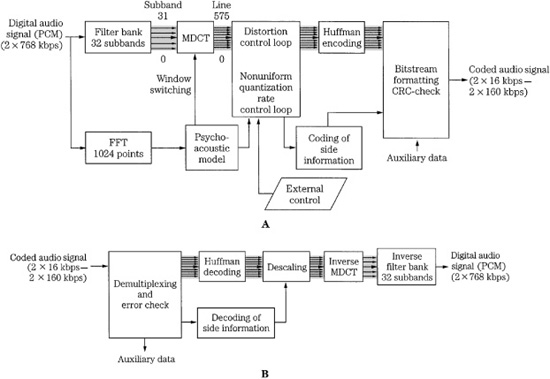

FIGURE 11.2 MPEG-1 Layer I or II audio encoder and decoder. The 32-subband filter bank is common to all three layers. A. Layer I or II encoder (single-channel mode). B. Layer I or II two-channel decoder.

A 512-sample FFT wideband transform located in a side chain performs spectral analysis on the audio signal. A psychoacoustic model, described in more detail later, uses a spreading function to emulate a basilar membrane response to establish masking contours and compute signal-to-mask ratios. Tonal and nontonal (noise-like) signals are distinguished. The psychoacoustic model compares the data to the minimum threshold curve. Using scale factor information, normalized samples are quantized by the bit allocator to achieve data reduction. The subband data is coded, not the FFT spectra.

Dynamic bit allocation assigns mantissa bits to the samples in each coded subband, or omits coding for inaudible subbands. Each sample is coded with one PCM codeword; the quantizer provides 2n−1 steps where 2 ≤ n ≤ 15. Subbands with a large signal-to-mask ratio are iteratively given more bits; subbands with a small SMR value are given fewer bits. In other words, the SMR determines the minimum signal-to-noise ratio that has to be met by the quantization of the subband samples. Quantization is performed iteratively. When available, additional bits are added to codewords to increase the signal-to-noise ratio (SNR) value above the minimum. Because the long block size might expose quantization noise in a transient signal, coarse quantization is avoided in blocks of low-level audio that are adjacent to blocks of high-level (transient) audio. The block scale factor exponent and sample mantissas are output. Error correction and other information are added to the signal at the output of the codec.

Playback is accomplished by decoding the bit allocation information, and decoding the scale factors. Samples are requantized by multiplying them with the correct scale factor. The scale factors provide all the information needed to recalculate the masking thresholds. In other words, the decoder does not need a psychoacoustic model. Samples are applied to an inverse synthesis filter such that subbands are placed at the proper frequency and added, and the resulting broadband audio waveform is output in consecutive blocks of thirty-two 16-bit PCM samples.

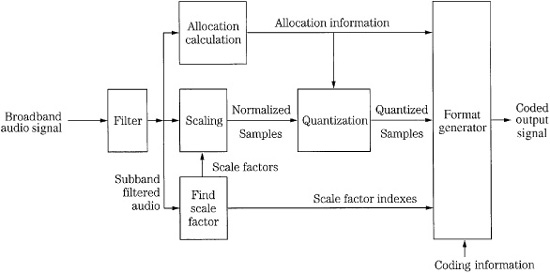

Example of MPEG-1 Layer I Implementation

As with other perceptual coding methods, MPEG-1 Layer I uses the ear’s audiology performance as its guide for audio encoding, relying on principles such as amplitude masking to encode a signal that is perceptually identical. Generally, Layer I operating at 384 kbps achieves the same quality as a Layer II codec operating at 256 kbps. Also, Layer I can be transcoded to Layer II. The following describes a simple Layer I implementation without a psychoacoustic model; its design is basic compared to other modern codecs.

PCM data with 32-, 44.1-, or 48-kHz sampling frequencies can be input to an encoder. At these three sampling frequencies, the subband width is 500, 689, and 750 Hz, and the frame period is 12, 8.7, and 8 ms, respectively. The following description assumes a 48-kHz sampling frequency. The stereo audio signal is passed to the first stage in a Layer I encoder, as shown in Fig. 11.3. A 24-bit finite impulse response (FIR) filter with the equivalent of 512 taps divides the audio band into 32 subbands of equal 750-Hz width. The filter window is shifted by 32 samples each time (12 shifts) so all the 384 samples in the 8-ms frame are analyzed. The filter bank outputs 32 subbands. With this filter, the effective sampling frequency of a subband is reduced by 32 to 1, for example, from a frequency of 48 kHz to 1.5 kHz. Although the channels are bandlimited, they are still in PCM representation at this point in the algorithm. The subbands are equal width, whereas the ear’s critical bands are not. This can be compensated for by unequally allocating bits to the subbands; more bits are typically allocated to code signals in lower-frequency subbands.

FIGURE 11.3 Example of an MPEG-1 Layer I encoder. The FFT side chain is omitted.

The encoder analyzes the energy in each subband to determine which subbands contain audible information. This example of a Layer I encoder does not use an FFT side chain or psychoacoustic model. The algorithm calculates average power levels in each subband over the 8-ms (12-sample) period. Masking levels in subbands and adjacent subbands are estimated. Minimum threshold levels are applied. Peak power levels in each subband are calculated and compared to masking levels. The SMR value (difference between the maximum signal and the masking threshold) is calculated for each sub-band and is used to determine the number of bits N assigned to a subband (i) such that Ni ≥ (SMRi−1.76)/6.02. A bit pool approach is taken to optimally code signals within the given bit rate. Quantized values form a mantissa, with a possible range of 2 to 15 bits. Thus, a maximum resolution of 92 dB is available from this part of the coding word. In practice, in addition to signal strength, mantissa values also are affected by rate of change of the waveform pattern and available data capacity. In any event, new mantissa values are calculated for every sample period.

Audio samples are normalized (scaled) to optimally use the dynamic range of the quantizer. Specifically, six exponent bits form a scale factor, which is determined by the signal’s largest absolute amplitude in a block. The scale factor acts as a multiplier to optimally adjust the gain of the samples for quantization. This scale factor covers the range from −118 dB to + 6 dB in 2-dB steps. Because the audio signal varies slowly in relation to the sampling frequency, the masking threshold and scale factors are calculated only once for every group of 12 samples, forming a frame (12 samples/subband × 32 subbands = 384 samples). For every subband, the absolute peak value of the 12 samples is compared to a table of scale factors, and the closest (next highest) constant is applied. The other sample values are normalized to that factor, and during decoding will be used as multipliers to compute the correct subband signal level.

A floating-point representation is used. One field contains a fixed-length 6-bit exponent, and another field contains a variable length 2- to 15-bit mantissa. Every block of 12 subband samples may have different mantissa lengths and values, but would share the same exponent. Allocation information detailing the length of a mantissa is placed in a 4-bit field in each frame. Because the total number of bits representing each sample within a subband is constant, this allocation information (like the exponent) needs to be transmitted only once every 12 samples. A null allocation value is conveyed when a subband is not encoded; in this case neither exponent nor mantissa values within that subband are transmitted. The 15-bit mantissa yields a maximum signal-to-noise ratio of 92 dB. The 6-bit exponent can convey 64 values. However, a pattern of all 1’s is not used, and another value is used as a reference. There are thus 62 values, each representing 2-dB steps for an ideal total of 124 dB. The reference is used to divide this into two ranges, one from 0 to −118 dB, and the other from 0 to + 6 dB. The 6 dB of headroom is needed because a component in a single subband might have a peak amplitude 6 dB higher than the broadband composite audio signal. In this example, the broadband dynamic range is thus equivalent to 19 bits of linear coding.

A complete frame contains synchronization information, sample bits, scale factors, bit allocation information, and control bits for sampling frequency information, emphasis, and so on. The total number of bits in a frame (with two channels, with 384 samples, over 8 ms, sampled at 48 kHz) is 3072. This in turn yields a 384-kbps bit rate. With the addition of error detection and correction code, and modulation, the transmission bit rate might be 768 kbps. The first set of subband samples in a frame is calculated from 512 samples by the 512-tap filter and the filter window is shifted by 32 samples each time into 11 more positions during a frame period. Thus, each frame incorporates information from 864 broadband audio samples per channel.

Sampling frequencies of 32 kHz and 44.1 kHz also are supported, and because the number of bands remains fixed at 32, the subband width becomes 689.06 Hz with a 44.1-kHz sampling frequency. In some applications, because the output bit rate is fixed at 384 kbps, and 384 samples/channel per frame is fixed, there is a reduction in frame rate at sampling frequencies of 32 kHz and 44.1 kHz, and thus an increase in the number of bits per frame. These additional bits per frame are used by the algorithm to further increase audio quality.

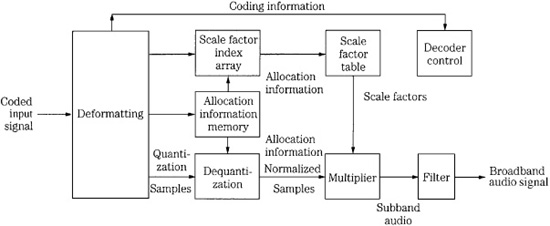

Layer I decoding proceeds frame by frame, using the processing shown in Fig. 11.4. Data is reformatted to PCM by a subband decoder, using allocation information and scale factors. Received scale factors are placed in an array with two columns of 32 rows, each six bits wide. Each column represents an output channel, and each row represents one subband. The decoded subband samples are multiplied by their scale factors to restore them to their quantized values; empty subbands are automatically assigned a zero value. A synthesis reconstruction filter recombines the 32 subbands into one broadband audio signal. This subband filter operates identically (but inversely) to the input filter. As in the encoder, 384 samples/channel represent 8 ms of audio signal (at a sampling frequency of 48 kHz). Following this subband filtering, the signal is ready for reproduction through D/A converters.

FIGURE 11.4 Example of an MPEG-1 Layer I decoder.

Because psychoacoustic processing, bit allocation, and other operations are not used in the decoder, its cost is quite low. Also, the decoder is transparent to improvements in encoder technology. If encoders are improved, the resulting fidelity would improve as well. Because the encoding algorithm is a function of digital signal processing, more sophisticated coding is possible. For example, because the number of bits per frame varies according to sampling rate, it might be expedient to create different allocation tables for different sampling frequencies.

An FFT side chain would permit analysis of the spectral content of subbands and psychoacoustic modeling. For example, knowledge of where signals are placed within bands can be useful in more precisely assigning masking curves to adjacent bands. The encoding algorithm might assume signals are at band edges, the most conservative approach. Such an encoder might claim 18-bit performance. Subjectively, at a 384-kbps bit rate, most listeners are unable to differentiate between a simple Layer I recording and an original CD recording.

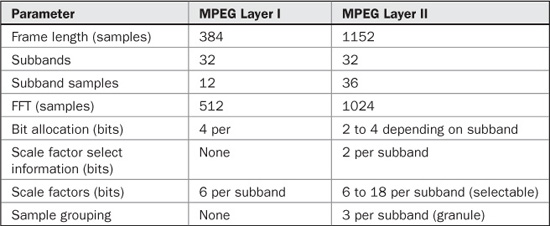

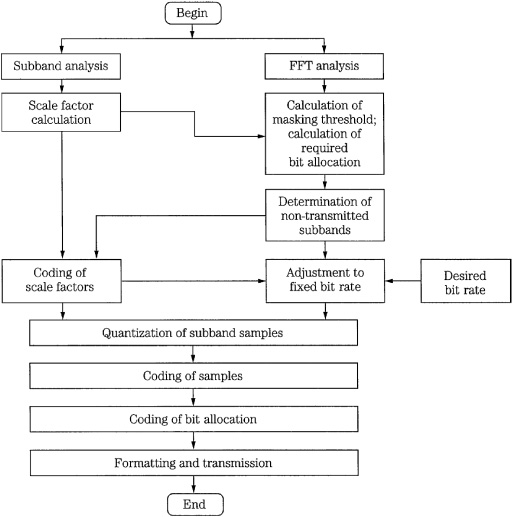

MPEG-1 Layer II

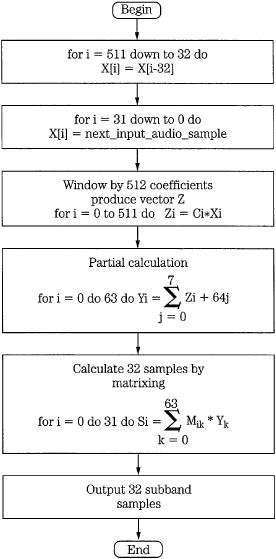

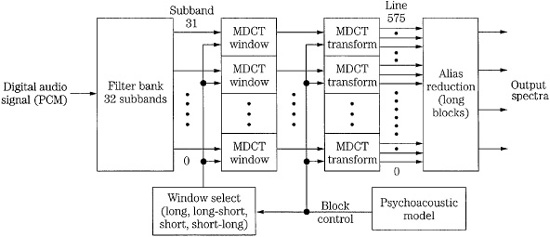

The MPEG-1 Layer II codec is essentially identical to the original MUSICAM codec (the frame headers differ). It is thus similar to Layer I, but is more sophisticated in design. It provides high fidelity with somewhat higher complexity, at moderate bit rates. It is a sub-band codec. Figure 11.5 gives a more detailed look at a Layer II encoder (which also applies to Layer I). The filter bank creates 32 equal-width subbands, but the frame size is tripled to 3 × 12 × 32, corresponding to 1152 wideband samples per channel. In other words, data is coded in three groups of 12 samples for each subband (Layer I uses one group). At a sampling frequency of 48 kHz, this comprises a 24-ms period. Figure 11.6 shows details of the subband filter bank calculation. The FFT analysis block size is increased to 1024 points. In Layer II (and Layer III) the psychoacoustic model performs two 1024-sample calculations for each 1152-sample frame, centering the first half and the second half of the frame, respectively. The results are compared and the values with the lower masking thresholds (higher SMR) in each band are used. Tonal (sinusoidal) and nontonal (noise-like) components are distinguished to determine their effect on the masking threshold.

A single bit allocation is given to each group of 12 subband samples. Up to three scale factors are calculated for each subband, each corresponding to a group of 12 sub-band samples and each representing a 2-dB step-size difference. However, to reduce the scale factor bit rate, the codec analyzes scale factors in three successive blocks in each subband. When differences are small or when temporal masking will occur, one scale factor can be shared between groups. When transient audio content is coded, two or three scale factors can be conveyed. Bit allocation is used to maximize both the subband and frame signal-to-mask ratios. Quantization covers a range from 3 to 65,535 (or none), but the number of available levels depends on the subband. Low-frequency subbands can receive as many as 15 bits, middle-frequency subbands can receive seven bits, and high-frequency subbands are limited to three bits. In each band, prominent signals are given longer codewords. It is recognized that quantization varies with subband number; higher subbands usually receive fewer bits, with larger step sizes. Thus for greater efficiency, three successive samples (for all 32 subbands) are grouped to form a granule and quantized together.

FIGURE 11.5 MPEG-1 Layer II audio encoder (single-channel mode) showing scale factor selection and coding of side information.

FIGURE 11.6 Flow chart of the analysis filter bank used in the MPEG-1 audio standard.

TABLE 11.1 Comparison of parameters in MPEG-1 Layer I and Layer II.

As in Layer I, decoding is relatively simple. The decoder unpacks the data frames and applies appropriate data to the reconstruction filter. Layer II coding can use stereo intensity coding. Layer II coding provides for a dynamic range control to adapt to different listening conditions, and uses a fixed-length data word. Minimum encoding and decoding delays are about 30 ms and 10 ms, respectively. Layer II is used in some digital audio broadcasting (DAB) and digital video broadcasting (DVB) applications. Layer I and II are compared in Table 11.1. Figure 11.7 shows a flow chart summarizing the complete MPEG-1 Layer I and II encoding algorithm.

MPEG-1 Layer III (MP3)

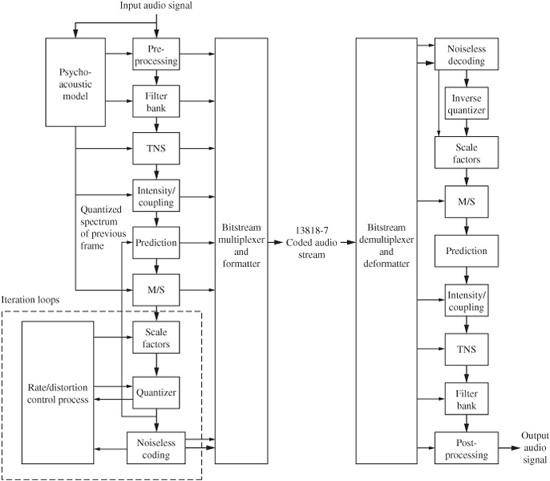

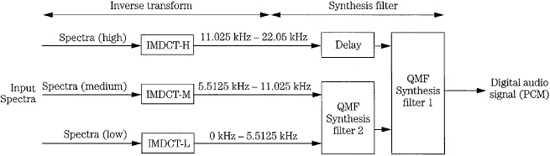

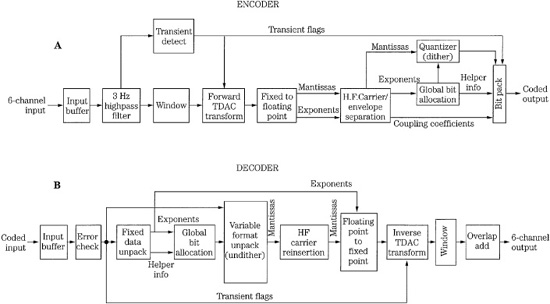

The MPEG-1 Layer III codec is based on the ASPEC codec and contains elements of MUSICAM, such as a subband filter bank, to provide compatibility with Layers I and II. Unlike the Layer I and II codecs, the Layer III codec is a transform codec. Its design is more complex than the other layer codecs. Its strength is moderate fidelity even at low data rates. Layer III files are popularly known as MP3 files. Block diagrams of a Layer III encoder and decoder are shown in Fig. 11.8.

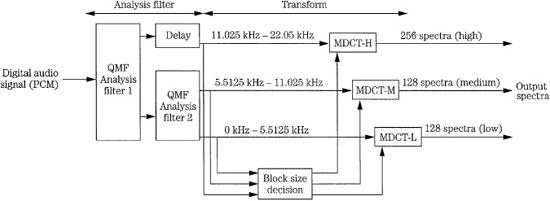

As in Layers I and II, a wideband block of 1152 samples is first split into 32 subbands with a polyphase filter; this provides backward compatibility with Layers I and II. Each subband’s contents are transformed into spectral coefficients by either a 6- or 18-point modified discrete cosine transform (MDCT) with 50% overlap (using a sine window) so that windows contain either 12 or 36 subband samples. The MDCT outputs a maximum of 32 × 18 = 576 spectral lines. The spectral lines are grouped into scale factor bands that emulate critical bands. At lower sampling frequencies optionally provided by MPEG-2, the frequency resolution is increased by a factor of two; at a 24-kHz sampling rate the resolution per spectral line is about 21 Hz. This allows better adaptation of scale factor bands to critical bands. This helps achieve good audio quality at lower bit rates.

FIGURE 11.7 Flow chart of the entire MPEG-1 Layer I and II audio encoding algorithm.

FIGURE 11.8 MPEG-1 Layer III audio encoder and decoder. A. Layer III encoder (single-channel mode). B. Layer III two-channel decoder.

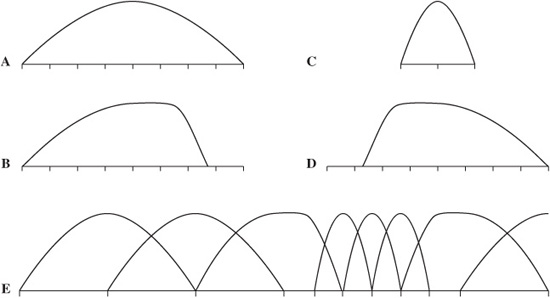

FIGURE 11.9 Long and short blocks can be selected for the MDCT transform used in the MPEG-1 Layer III encoder. Both long and short windows, and two transitional windows, are used.

Layer III has high frequency resolution, but this dictates low time resolution. Quantization error spread over a window length can produce pre-echo artifacts. Thus, under direction of the psychoacoustic model, the MDCT window sizes can be switched between frequency or time resolution, using a threshold calculation; the architecture is shown in Fig. 11.9. A long symmetrical window is used for steady-state signals; a length of 1152 samples corresponds 24 ms at a 48-kHz sampling frequency. Each transform of 36 samples yields 18 spectral coefficients for each of 32 subbands, for a total of 576 coefficients. This provides good spectral resolution of 41.66 Hz (24000/576) that is needed for steady state-signals, at the expense of temporal resolution that is needed for transient signals.

Alternatively, when transient signals occur, a short symmetrical window is used with one-third the length of the long window, followed by an MDCT that is one-third length. Time resolution is 4 ms at a 48-kHz sampling frequency. Three short windows replace one long window, maintaining the same number of samples in a frame. This mode yields six coefficients per subband, or a total of 32 × 6 = 192 coefficients. Window length can be independently switched for each subband. Because the switchover is not instantaneous, an asymmetrical start window is used to switch from long to short windows, and an asymmetrical stop window switches back. This ensures alias cancellation. The four window types, along with a typical window sequence, are shown in Fig. 11.10. There are three block modes. In two modes, the outputs of all 32 subbands are processed through the MDCT with equal block lengths. A mixed mode provides frequency resolution at lower frequencies and time resolution at higher frequencies. During transients, the two lower subbands use long blocks and the upper 30 subbands use short blocks. Huffman coding is applied at the encoder output to additionally lower the bit rate.

A Layer III decoder performs Huffman decoding, as well as decoding of bit allocation information. Coefficients are applied to an inverse transform, and 32 subbands are combined in a synthesis filter to output a broadband signal. The inverse modified discrete cosine transform (IMDCT) is executed 32 times for 18 spectral values each to transform the spectrum of 576 values into 18 consecutive spectra of length 32. These spectra are converted into the time domain by executing a polyphase synthesis filter bank 18 times. The polyphase filter bank contains a frequency mapping operation (such as matrix multiplication) and an FIR filter with 512 coefficients.

FIGURE 11.10 MPEG-1 Layer III allows adaptive window switching for the MDCT transform. Four window types are defined. A. Long (normal) window. B. Start window (long to short). C. Short window. D. Stop window (short to long). D. An example of a window sequence. (Brandenburg and Stoll, 1994)

MP3 files can be coded at a variety of bit rates. However, the format is not scalable with respect to variable decoding. In other words, the decoder cannot selectively choose subsets of the entire bitstream to reproduce different quality signals.

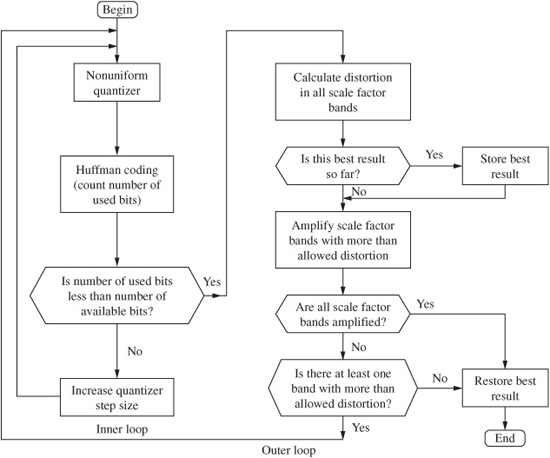

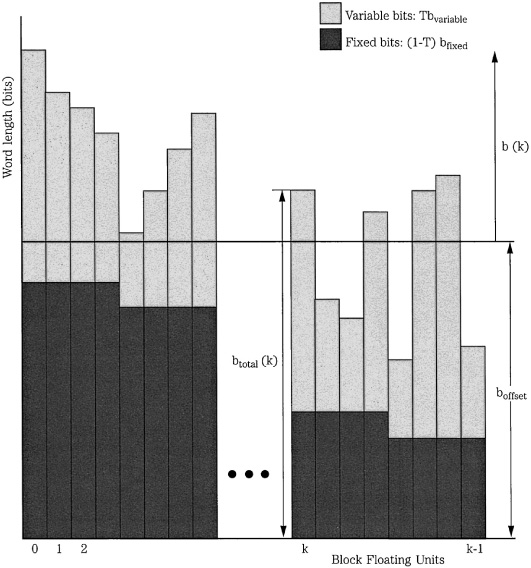

MP3 Bit Allocation and Huffman Coding

The allocation control algorithm suggested for the Layer III encoder uses dynamic quantization. A noise allocation iteration loop is used to calculate optimal quantization noise in each subband. This technique is referred to as noise allocation, as opposed to bit allocation. Rather than allocate bits directly from SNR values, in noise allocation the bit assignment is an inherent outcome of the strategy. For example, an analysis-by-synthesis method can be used to calculate a quantized spectrum that satisfies the noise requirements of the modeled masking threshold. Quantization of this spectrum is iteratively adjusted so the bit rate limits are observed. Two nested iteration loops are used to find two values that are used in the allocation: the global gain value determines quantization step size, and scale factors determine noise-shaping factors for each scale factor band. To form scale factors, most of the 576 spectral lines in long windows are grouped into 21 scale factor bands, and most of the 192 lines from short windows are grouped into 12 scale factor bands. The grouping approximates critical bands, and varies according to sampling frequency.

An inner iteration loop (called the rate loop) acts to decrease the coder rate until it is sufficiently low. The Huffman code assigns shorter codewords to smaller quantized values that occur more frequently. If the resulting bit rate is too high, the rate loop adjusts gain to yield larger quantization step sizes and hence small quantized values and smaller Huffman codewords and a lower bit rate. The process of quantizing spectral lines and determining the appropriate Huffman code can be time-consuming. The outer iteration loop (called the noise control loop) uses analysis-by-synthesis to evaluate quantization noise levels and hence the quality of the coded signal. The outer loop decreases the quantizer step size to shape the quantization noise that will appear in the reconstructed signal, aiming to maintain it below the masking threshold in each band. This is done by iteratively increasing scale factor values. The algorithm uses the iterative values to compute the resulting quantization noise. If the quantization noise level in a band exceeds the masking threshold, the scale factor is adjusted to decrease the step size and lower the noise floor. The algorithm then recalculates the quantization noise level. Ideally, the loops yield values such that the difference between the original spectral values and the quantized values results in noise below the masking threshold.

If the psychoacoustic model demands small step sizes and in contradiction the loops demand larger step sizes to meet a bit rate, the loops are terminated. To avoid this, the perceptual model can be modified and two loops tuned, to suit different bit rates; this tuning can require considerable development work. Nonuniform quantization is used such that step size varies with amplitude. Values are raised to the 3/4 power before quantizing to optimize the signal-to-noise ratio over a range of quantizer values (the decoder reciprocates by raising values to the 4/3 power).

Huffman and run-length entropy coding exploit the statistical properties of the audio signal to achieve lossless data compression. Most audio frames will yield larger spectral values at low frequencies and smaller (or zero) values at higher frequencies. To utilize this, the 576 spectral lines are considered as three groups and can be coded with different Huffman code tables. The sections from low to high frequency are BIG_ VALUE, COUNT1, and RZERO, assigned according to pairs of absolute values ranging from 0 to 8191, quadruples of 0, −1, or + 1 values, and the pairs of 0 values, respectively. The BIG_VALUE pairs can be coded using any of 32 Huffman tables, and the COUNT1 quadruples can be coded with either of two tables. The RZERO pairs are not coded with Huffman coding. A Huffman table is selected based on the dynamic range of the values. Huffman coding is used for both scale factors and coefficients.

The data rate from frame to frame can vary in Layer III; this can be used for variable bit-rate recording. The psychoacoustic model calculates how many bits are needed and sets the frame bit rate accordingly. In this way, for example, music passages that can be satisfactorily coded with fewer bits can yield frames with fewer bits. A variable bit rate is efficient for on-demand transmission. However, variable bit rate streams cannot be transmitted in real time using systems with a constant bit rate. When a constant rate is required, Layer III can use an optional bit reservoir to allow for more accurate coding of particularly difficult (large perceptual entropy) short window passages. In this way, the average transmitted data rate can be smaller than peak data rates. The number of bits per frame is variable, but has a constant long-term average. The mean bit rate is never allowed to exceed the fixed-channel capacity. In other words, there is reserve capacity in the reservoir. Unneeded bits (below the average) can be placed in the reservoir. When additional bits are needed (above the average), they are taken from the reservoir. Succeeding frames are coded with somewhat fewer bits than average to replenish the reservoir. Bits can only be borrowed from past frames; bits cannot be borrowed from future frames. The buffer memory adds throughput time to the codec. To achieve synchronization at the decoder, headers and side information are conveyed at the frame rate. Frame size is variable; boundaries of main data blocks can vary whereas the frame headers are at fixed locations. Each frame has a synchronization pattern and subsequent side information discloses where a main data block began in the frame. In this way, main data blocks can be interrupted by frame headers.

In some codecs, the output file size is different from the input file size, and the signals are not time-aligned; the time duration of the codec’s signal is usually longer. This is because of the block structure of the processing, coding delays, and the lookahead strategies employed. Moreover, for example, an encoder might either discard a final frame that is not completely filled at the end of a file, or more typically pad the last frame with zeros. To maintain the original file size and time alignment, some codecs use ancillary data in the bitstream in a technique known as original file length (OFL). By specifying the number of samples to be stripped at the start of a file, and the length of the original file, the number of samples to be stripped at the end can be calculated. The OFL feature is available in the MP3PRO codec.

MP3 Stereo Coding

To take advantage of redundancies between stereo channels, and to exploit limitations in human spatial listening, Layer III allows a choice of stereo coding methods, with four basic modes: normal stereo mode with independent left and right channels; M/S stereo mode in which the entire spectrum is coded with M/S; intensity stereo mode in which the lower spectral range is coded as left/right and the upper spectral range is coded as intensity; and the intensity and M/S mode in which the lower spectral range is coded as M/S and the upper spectral range is coded as intensity. Each frame may have a different mode. The partition between upper and lower spectral modes can be changed dynamically in units of scale factor bands.

Layer III supports both M/S (middle/side) stereo coding and intensity stereo coding. In M/S coding, certain frequency ranges of the left and right channels are mixed as sum (middle) and difference (side) signals of the left and right channels before quantization. In this way, stereo unmasking can be avoided. In addition, when there is high correlation between the left and right channels, the difference signal is further reduced to conserve bits. In intensity stereo coding, the left and right channels of upper-frequency subbands are not coded individually. Instead, one summed signal is transmitted along with individual left- and right-channel scale factors indicating position in the stereo panorama. This method retains one spectral shape for both channels in upper sub-bands, but scales the magnitudes. This is effective for stationary signals, but less effective for transient signals because they may have different envelopes in different channels. Intensity coding may lead to artifacts such as changes in stereo imaging, particularly for transient signals. It is used primarily at low bit rates.

MP3 Decoder Optimization

MP3 files can be decoded with dedicated hardware chips or software programs. To optimize operation and decrease computation, some software decoders implement special features. Calculation of the hybrid synthesis filter bank is the most computationally complex aspect of the decoder. The process can be simplified by implementing a stereo downmix to monaural in the frequency domain, before the filter bank, so that only one filter operation must be performed. Downmixing can be accomplished with a simple weighted sum of the left and right channels. However, this is not optimal because, for example, an M/S-stereo or intensity-stereo signal already contains a sum signal. More efficiently, built in downmixing routines can calculate the sum signal only for those scale factor bands that are coded in left/right stereo. For M/S- and intensity-coded scale factor bands, only scaling operations are needed.

To further reduce computational complexity, the hybrid filter bank can be optimized. The filter bank consists of IMDCT and polyphase filter bank sections. As noted, the IMDCT is executed 32 times for 18 spectral values each to transform the spectrum of 576 values into 18 consecutive spectra of length 32. These spectra are converted into the time domain by executing a polyphase synthesis filter bank 18 times. The polyphase filter bank contains a frequency mapping operation (such as matrix multiplication) and a FIR filter with 512 coefficients. The FIR filter calculation can be simplified by reducing the number of coefficients, the filter coefficients can be truncated at the ends of the impulse response, and the impulse response can be modeled with fewer coefficients. Experiments have suggested that filter length can be reduced by 25% without yielding additional audible artifacts. More directly, computation can be reduced by limiting the output audio bandwidth. The high-frequency spectral values can be set to zero; an IMDCT with all input samples set to zero does not have to be calculated. If only the lower halves of the IMDCTs are calculated, the audio bandwidth is limited. The output can be downsampled by a factor of 2, so that computation for every second output value can be skipped, thus cutting the FIR calculation in half.

There are many nonstandard codecs that produce MP3-compliant bitstreams; they vary greatly in performance quality. LAME is an example of a fast, high-quality, royalty-free codec that produces a MP3-compliant bitstream. LAME is open-source, but using LAME may require a patent license in some countries. LAME is available at http://lame.sourceforge.net. MP3 Internet applications are discussed in Chap. 15.

MPEG-1 Psychoacoustic Model 1

The MPEG-1 standard suggests two psychoacoustic models that determine the minimum masking threshold for inaudibility. The models are only informative in the standard; their use is not mandated. The models are used only in the encoder. In both cases, the difference between the maximum signal level and the masking threshold is used by the bit allocator to set the quantization levels. Generally, model 1 is applied to Layers I and II and model 2 is applied to Layer III.

Psychoacoustic model 1 proposes a low-complexity method to analyze spectral data and output signal-to-mask ratios. Model 1 performs these nine steps:

1. Perform FFT analysis: A 512- or 1024-point fast Fourier transform, with a Hann window with adjacent overlapping of 32 or 64 samples, respectively, to reduce edge effects, is used to transform time-aligned time-domain data to the frequency domain. An appropriate delay is applied to time-align the psychoacoustic model’s output. The signal is normalized to a maximum value of 96 dB SPL, calibrating the signal’s minimum value to the absolute threshold of hearing.

2. Determine the sound pressure level: The maximum SPL is calculated for each subband by choosing the greater of the maximum amplitude spectral line in the subband or the maximum scale factor that accounts for low-level spectral lines in the subband.

3. Consider the threshold in quiet: An absolute hearing threshold in the absence of any signal is given; this forms the lower masking bound. An offset is applied depending on the bit rate.

4. Finding tonal and nontonal components: Tonal (sinusoidal) and nontonal (noise-like) components in the signal are identified. First, local maxima in the spectral components are identified relative to bandwidths of varying size. Components that are locally prominent in a critical band by + 7 dB are labeled as tonal and their sound-pressure level is calculated. Intensities of the remaining components, assumed to be nontonal, within each critical band are summed and their SPL is calculated for each critical band. The nontonal maskers are centered in each critical band.

5. Decimation of tonal and nontonal masking components: The number of maskers is reduced to obtain only the relevant maskers. Relevant maskers are those with magnitude that exceeds the threshold in quiet, and those tonal components that are strongest within 1/2 Bark.

6. Calculate individual masking thresholds: The total number of masker frequency bins is reduced (for example, in Layer I at 48 kHz, 256 is reduced to 102) and maskers are relocated. Noise masking thresholds for each subband, accounting for tonal and nontonal components and their different downward shifts, are determined by applying a masking (spreading) function to the signal. Calculations use a masking index and masking function to describe masking effects on adjacent frequencies. The masking index is an attenuation factor based on critical-band rate. The piecewise masking function is an attenuation factor with different lower and upper slopes between −3 and + 8 Bark that vary with respect to the distance to the masking component and the component’s magnitude. When the subband is wide compared to the critical band, the spectral model can select a minimum threshold; when it is narrow, the model averages the thresholds covering the subband.

7. Calculate the global masking threshold: The powers corresponding to the upper and lower slopes of individual subband masking curves, as well as a given threshold of hearing (threshold in quiet), are summed to form a composite global masking contour. The final global masking threshold is thus a signal-dependent modification of the absolute threshold of hearing as affected by tonal and nontonal masking components across the basilar membrane.

8. Determine the minimum masking threshold: The minimum masking level is calculated for each subband.

9. Calculate the signal-to-mask ratio: Signal-to-mask ratios are determined for each subband, based on the global masking threshold. The difference between the maximum SPL levels and the minimum masking threshold values determines the SMR value in each subband; this value is supplied to the bit allocator.

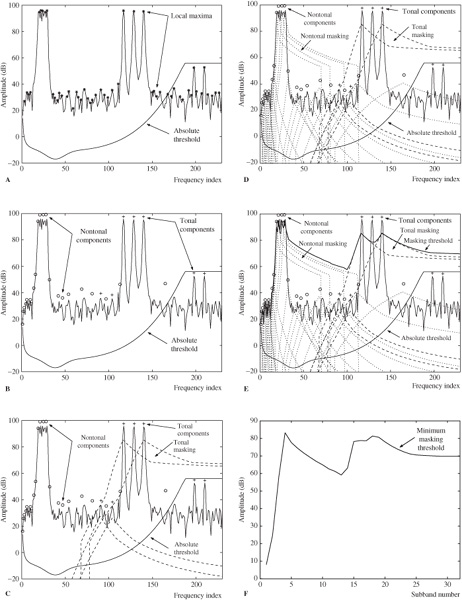

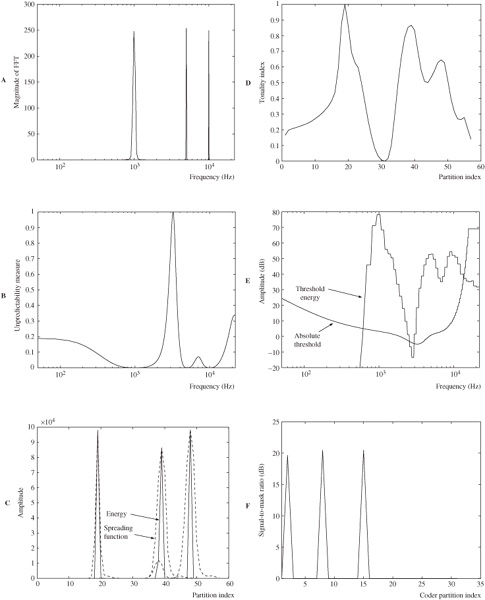

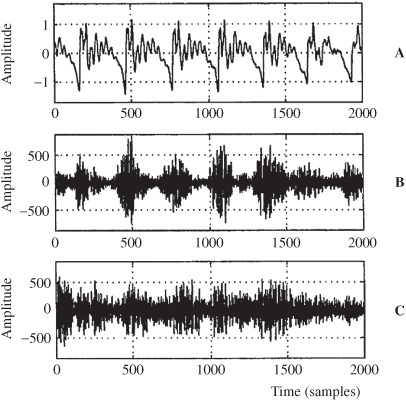

The principal steps in the operation of model 1 can be illustrated with a test signal that contains a band of noise, as well as prominent tonal components. The model analyzes one block of the 16-bit test signal sampled at 44.1 kHz. Figure 11.11A shows the audio signal as output by the FFT; the model has identified the local maxima. The figure also shows the absolute threshold of hearing used in this particular example (offset by −12 dB). Figure 11.11B shows tonal components marked with a “+” and nontonal components marked with a “o.” Figure 11.11C shows the masking functions assigned to tonal maskers after decimation. The peak SMR (about 14.5 dB) corresponds to that used for tonal maskers. Figure 11.11D shows the masking functions assigned to nontonal maskers after decimation. The peak SMR (about 5 dB) corresponds to that used for nontonal maskers. Figure 11.11E shows the final global masking curve obtained by combining the individual masking thresholds. The higher of the global masking curve and the absolute threshold of hearing is used as the final global masking curve. Figure 11.11F shows the minimum masking threshold. From this, SMR values can be calculated in each subband.

FIGURE 11.11 Operation of MPEG-1 model 1 is illustrated using a test signal. A. Local maxima and absolute threshold. B. Tonal and nontonal components. C. Tonal masking. D. Nontonal masking. E. Masking threshold. F. Minimum masking threshold.

To further explain the operation of model 1, additional comments are given here. The delay in the 512-point analysis filter bank is 256 samples and centering the data in the 512-point Hann window adds 64 samples. An offset of 320 samples (256 + (512 − 384)/2 = 320) is needed to time-align the model’s 384 samples.

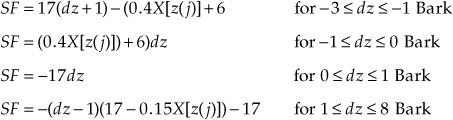

The spreading function used in model 1 is described in terms of piecewise slopes (in dB):

where dz = z(i) − z(j) is the distance in Bark between the maskee and masker frequency; i and j are index values of spectral lines of the maskee and masker, respectively. X[z(j)] is the sound pressure level of the jth masking component in dB. Values outside −3 and + 8 Bark are not considered in this model.

Model 1 uses this general approach to detect and characterize tonality in audio signals: An FFT is applied to 512 or 1024 samples, and the components of the spectrum analysis are considered. Local maxima in the spectrum are identified as having more energy than adjacent components. These components are decimated such that a tonal component closer than 1/2 Bark to a stronger tonal component is discarded. Tonal components below the threshold of hearing are discarded as well. The energies of groups of remaining components are summed to represent tonal components in the signal; other components are summed and marked as nontonal. A binary designation is given: tonal components are assigned 1, and nontonal components are assigned 0. This information is presented to the bit allocation algorithm. Specifically, in model 1, tonality is determined by detecting local maxima of 7 dB in the audio spectrum. To derive the masking threshold relative to the masker, a level shift is applied; the nature of the shift depends on whether the masker is tonal or nontonal:

ΔT(z) = −6.025 − 0.275z dB

ΔN(z) = −2.025 − 0.175z dB

where z is the frequency of the masker in Bark.

Model 1 considers all the nontonal components in a critical band and represents them with one value at one frequency. This is appropriate at low frequencies where sub-bands and critical bands have good correspondence, but can be inefficient at high frequencies where there are many critical bands in each subband. A subband that is apart from the identified nontonal component in a critical band may not receive a correct nontonal evaluation.

MPEG-1 Psychoacoustic Model 2

Psychoacoustic model 2 performs a more detailed analysis than model 1, at the expense of greater computational complexity. It is designed for lower bit rates than model 1. As in model 1, model 2 outputs a signal-to-mask ratio for each subband; however, its approach is significantly different. It contours the noise floor of the signal represented by many spectral coefficients in a way that is more accurate than that allowed by coarse subband coding. Also, the model uses an unpredictability measure to examine the side-chain data for tonal or nontonal qualities. Model 2 performs these 14 steps:

1. Reconstruct input samples: A set of 1024 input samples is assembled.

2. Calculate the complex spectrum: The time-aligned input signal is windowed with a 1024-point Hann window; alternatively, a shorter window may be used. An FFT is computed and output represented in magnitude and phase.

3. Calculate the predicted magnitude and phase: The predicted magnitude and phase are determined by extrapolation from the two preceding threshold blocks.

4. Calculate the unpredictability measure: The unpredictability measure is computed using the Euclidian distance between the predicted and actual values in the magnitude/phase domain. To reduce complexity, the measure may be computed only for lower frequencies and assumed constant for higher frequencies.

5. Calculate the energy and unpredictability in the partitions: The energy magnitude and the weighted unpredictability measure in each threshold calculation partition are calculated. A partition has a resolution of one spectral line (at low frequencies) or 1/3 critical band (at high frequencies), whichever is wider.

6. Convolve energy and unpredictability with the spreading function: The energy and the unpredictability measure in threshold calculation partitions are each convolved with a cochlea spreading function. Values are renormalized.

7. Derive tonality index: The unpredictability measures are converted to tonality indices ranging from 0 (high unpredictability) to 1 (low unpredictability). This determines the relative tonality of the maskers in each threshold calculation partition.

8. Calculate the required signal-to-noise ratio: An SNR is calculated for each threshold calculation partition using tonality to interpolate an attenuation shift factor between noise-masking-tone (NMT) and tone-masking-noise (TMN). The interpolated shift ranges from 5.5 dB for NMT and upward. The final shift value is the higher of the interpolated value or a frequency-dependent minimum value.

9. Calculate power ratio: The power ratio of the SNR is calculated for each threshold calculation partition.

10. Calculate energy threshold: The actual energy threshold is calculated for each threshold calculation partition.

11. Spread threshold energy: The masking threshold energy is spread over FFT lines corresponding to threshold calculation partitions to represent the masking in the frequency domain.

12. Calculate final energy threshold of audibility: The spread threshold energy is compared to values in absolute threshold of quiet tables, and the higher value is used (not the sum) as the energy threshold of audibility. This is because it is wasteful to specify a noise threshold lower than the level that can be heard.

13. Calculate pre-echo control: A narrow-band pre-echo control used in the Layer III encoder is calculated, to prevent audibility of the error signal spread in time by the synthesis filter. The calculation lowers the masking threshold after a quiet signal. The calculation takes the minimum of the comparison of the current threshold with the scaled thresholds of two previous blocks.

14. Calculate signal-to-mask ratios: Threshold calculation partitions are converted to codec partitions (scale factor bands). The SMR (energy in each scale factor band divided by noise level in each scale factor band) is calculated for each partition and expressed in decibels. The SMR values are forwarded to the allocation algorithm.

The principal steps in the operation of model 2 can be illustrated with a test signal that contains three prominent tonal components. The model analyzes a set of 1024 input samples of the 16-bit test signal sampled at 44.1 kHz. Figure 11.12A shows the magnitude of the audio signal as output by the FFT; the phase is also computed. Following prediction of magnitude and phase, the unpredictability measure is computed, as shown in Fig. 11.12B, using the Euclidian distance between the predicted and actual values in the magnitude/phase domain. When the measure equals 0, the current value is completely predicted. Figure 11.12C shows the energy magnitude in each partition and the spreading functions that are applied. Figure 11.12D shows the tonality index derived from the unpredictability measure; the tonality index ranges from 0 (high unpredictability and noise-like) to 1 (low unpredictability and tonal). Figure 11.12E shows the spread masking threshold energy in the frequency domain and the absolute threshold of quiet; the higher value is used to find the energy threshold of inaudibility. Figure 11.12F shows signal-to-mask ratios (energy in each scale factor band divided by noise level in each scale factor band) in codec partitions.

To further explain the operation of model 2, additional comments are given here. The spreading function used in model 2 is:

10 log10 SF(dz) = 15.8111389 + 7.5(1.05dz + 0.474) − 17.5[1.0 +(1.05dz +0.474)2]1/2+8 MIN[(1.05dz − 0.5)2 − 2(1.05dz − 0.5),0] dB

where dz is the distance in Bark between the maskee and masker frequency.

The spectral flatness measure (SFM), devised by James Johnston, measures the average or global tonality of the segment. SFM is the ratio of the geometric mean of the power spectrum to its arithmetic mean. The value is converted to decibels and referenced to −60 dB to provide a coefficient of tonality ranging continuously from 0 (nontonal) to 1 (tonal). This coefficient can be used to interpolate between TMN and NMT models. SFM leads to very conservative masking decisions for nontonal parts of a signal. More efficiently, specific tonal and nontonal regions within a segment can be identified. This local tonality can be measured as the normalized Euclidean distance between the actual and predicted values over two successive segments, for amplitude and phase. On the basis of this, tonality unpredictability can be computed for narrow frequency partitions and used to create tonality metrics that are used to interpolate between tone or noise models.

FIGURE 11.12 Operation of MPEG-1 model 2 is illustrated using a test signal. A. Magnitude of FFT. B. Unpredictability measure. C. Energy and spreading functions. D. Tonality index. E. Threshold energy and absolute threshold. F. Signal-to-mask ratios. (Boley and Rao, 2004)

Specifically, in model 2, a tonality index is created, on the basis of the predictability of the audio signal’s spectral components in a partition in two successive frames. Tonal components are more accurately predicted. Amplitude and phase are predicted to form an unpredictability measure C. When C = 0, the current value is completely predicted, and when C = 1, the predicted values differ from the actual values. This yields the tonality index T ranging from 0 (high unpredictability and noise-like) to 1 (low unpredictability and tonal). For example, the audio signal’s strongly tonal and nontonal areas are evident in Fig. 11.12D. The tonality index is used to calculate a (z) shift, for example, interpolating values from 6 dB (nontonal) to 29 dB (tonal).

When used in a Layer III encoder, model 2 is modified. The model is executed twice, once with a long block and once with a short 256-sample block. These values are used in the unpredictability measure calculation. A slightly different spreading function is used. The NMT shift is changed to 6.0 dB and a fixed TMN shift of 29.0 dB is used. As noted, a pre-echo control is calculated. Perceptual entropy is calculated as the logarithm of the geometric mean of the normalized spectral energy in a partition. This predicts the minimum number of bits needed for transparency. High values are used to identify transient attacks, and thus to determine block size in the encoder. In addition, model 2 accepts the minimum masking threshold at low frequencies where there is good correspondence between subbands and critical bands, and it uses the average of the thresholds at higher frequencies where subbands are narrow compared to critical bands.

Much research has been done since the informative model 2 was published in the MPEG-1 standard. Thus, most practical encoders use models that offer better performance, even if they are based on the informative model. An encoder that follows the informative documentation literally will not provide good results compared to more sophisticated implementations.

MPEG-2 Audio Standard

The MPEG-2 audio standard was designed for applications ranging from Internet downloading to high-definition digital television (HDTV) transmission. It provides a backward-compatible path to multichannel sound and a low sampling frequency provision, as well as a non-backward-compatible multichannel format known as Advanced Audio Coding (AAC). The MPEG-2 audio standard encompasses the MPEG-1 audio standard of Layers I, II, and III, using the same encoding and decoding principles as MPEG-1. In many cases, the same layer algorithms developed for MPEG-1 applications are used for MPEG-2 applications. Multichannel MPEG-2 audio is backward compatible with MPEG-1. An MPEG-2 decoder will accept an MPEG-1 bitstream and an MPEG-1 decoder can derive a stereo signal from an MPEG-2 bitstream. However, MPEG-2 also permits use of incompatible audio codecs.

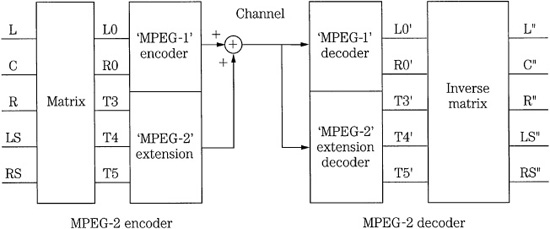

One part of the MPEG-2 standard provides multichannel sound at sampling frequencies of 32, 44.1, and 48 kHz. Because it is backward compatible to MPEG-1, it is designated as BC (backward compatible), that is, MPEG-2 BC. Clearly, because there is more redundancy between six channels than between two, greater coding efficiency is achieved. Overall, 5.1 channels can be successfully coded at rates from 384 kbps to 640 kbps. MPEG-2 also supports monaural and stereo coding at sampling frequencies of 16, 22.05, and 24 kHz, using Layers I, II, and III. The MPEG-1 and -2 audio coding family is shown in Fig. 11.13. The MPEG-2 audio standard was approved by the MPEG committee in November 1994 and is specified in ISO/IEC 13818-3.

FIGURE 11.13 The MPEG-2 audio standard adds monaural/stereo coding at low sampling frequencies, multichannel coding, and AAC. The three MPEG-1 layers are supported.

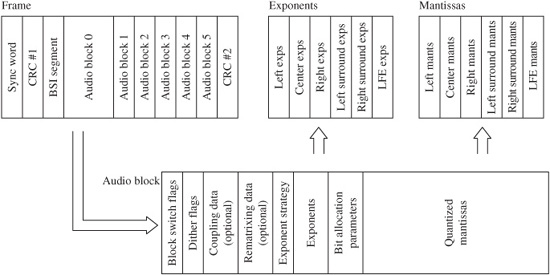

The multichannel MPEG-2 BC format uses a five-channel approach sometimes referred to as 3/2 + 1 stereo (3 front and 2 surround channels + subwoofer). The low-frequency effects (LFE) subwoofer channel is optional, providing an audio range up to 120 Hz. A hierarchy of formats is created in which 3/2 may be downmixed to 3/1, 3/0, 2/2, 2/1, 2/0, and 1/0. The multichannel MPEG-2 BC format uses an encoder matrix that allows a two-channel decoder to decode a compatible two-channel signal that is a subset of a multichannel bitstream. The multiple channels of MPEG-2 are matrixed to form compatible MPEG-1 left/right channels, as well as other MPEG-2 channels, as shown in Fig. 11.14. The MPEG-1 left and right channels are replaced by matrixed MPEG-2 left and right channels and these are encoded into backward-compatible MPEG frames with an MPEG-1 encoder. Additional multichannel data is placed in the expanded ancillary data field.

FIGURE 11.14 The MPEG-2 audio encoder and decoder showing how a 5.1-channel surround format can be achieved with backward compatibility with MPEG-1.

To efficiently code multiple channels, MPEG-2 BC uses techniques such as dynamic crosstalk reduction, adaptive interchannel prediction, and center channel phantom image coding. With dynamic crosstalk reduction, as with intensity coding, multichannel high-frequency information is combined and conveyed along with scale factors to direct levels to different playback channels. In adaptive prediction, a prediction error signal is conveyed for the center and surround channels. The high-frequency information in the center channel can be conveyed through the front left and right channels as a phantom image.

MPEG-2 BC can achieve a combined bit rate of 384 kbps, using Layer II at a 48-kHz sampling frequency. MPEG-2 allows for audio bit rates up to 1066 kbps. To accommodate this, the MPEG- 2 frame is divided into two parts. The first part is an MPEG-1-compatible stereo section with Layer I data up to 448 kbps, Layer II data up to 384 kbps, or Layer III data up to 320 kbps. The MPEG-2 extension part contains all other surround data.

A standard two-channel MPEG-1 decoder ignores the ancillary information, and reproduces the front main channels. In some cases, the dematrixing procedure in the decoder can yield an artifact in which the sound in a channel is mainly phase canceled but the quantization noise is not, and thus becomes audible. This limitation of spatial unmasking in MPEG-2 BC is a direct result of the matrixing used to achieve backward compatibility with the original two-channel MPEG standard. In part, it can be addressed by increasing the bit rate of the coded signals.

MPEG-2 also specifies Layer I, II, and III at low sampling frequencies (LSF) of 16, 22.05, and 24 kHz. This extension is not backward compatible to MPEG-1 codecs. This portion of the standard is known as MPEG-2 LSF. At these low bit rates, Layer III generally shows the best performance. Only minor changes in the MPEG-1 bit rate and bit allocation tables are necessary to adapt this LSF format. The relative improvement in quality stems from the improved frequency resolution of the polyphase filter bank in low- and mid-frequency regions; this allows more efficient application of masking. Layers I and II fare better than Layer III in these applications because Layer III already has good frequency resolution. The bitstream is unchanged in the LSF mode and the same frame format is used. For 24-kHz sampling, the frame length is 16 ms for Layer I and 48 ms for Layer II. The frame length of Layer III is decreased relative to that of MPEG-1. In addition, the “MPEG-2.5” standard supports sampling frequencies of 8, 11.025, and 12 kHz with the corresponding decrease in audio bandwidth; implementations use Layer III as the codec. Many MP3 codecs support the original MPEG-1 Layer III codec as well as the MPEG-2 and MPEG-2.5 extensions for lower sampling frequencies.

The menu of data rates, fidelity, and layer compatibility provided by MPEG are useful in a wide variety of applications such as computer multimedia, CD-ROM, DVD-Video, computer disks, local area networks, studio recording and editing, multichannel disk recording, ISDN transmission, digital audio broadcasting, and multichannel digital television. Numerous C and C++ programs performing MPEG-1 and -2 audio coding and decoding can be downloaded from a number of Internet file sites, and executed on personal computers. The backward-compatible format, using Layer II coding, is used for the soundtracks of some DVD-Video discs. However, a matrix approach to surround sound does not preserve spatial fidelity as well as discrete channel coding.

MPEG-2 AAC

The MPEG-2 Advanced Audio Coding (AAC) format codes monaural, stereo, or multi-channel playback for up to 48 channels, including 5.1-channel, at a variety of bit rates. AAC is known for its relatively high fidelity at low bit rates; for example, about 64 kbps per channel. It also provides high-quality 5.1-channel coding at an overall rate of 320 kbps or 384 kbps. AAC uses a reference model (RM) structure in which a set of tools (modules) has defined interfaces and can be combined variously in three different profiles. Individual tools can be upgraded and used to replace older tools in the reference software. In addition, this modularity makes it easy to compare revisions against older versions. AAC also comprises the kernel of audio tools used in the MPEG-4 standard for coding high-quality audio. AAC also supports lossless coding. AAC is specified in Part 7 of the MPEG-2 standard (ISO/IEC 13818-7), which was finalized in April 1997.

MPEG-2 AAC coding is not backward compatible with MPEG-1 and was originally designated as NBC (non-backward compatible) coding. An AAC bitstream cannot be decoded by an MPEG-1-only decoder. By lifting the constraint of compatibility, better performance is achieved compared to MPEG-2 BC. MPEG-2 AAC supports standard sampling frequencies of 32, 44.1, and 48 kHz, as well as other rates from 8 kHz to 96 kHz, yielding maximum bit rates of 48 kbps and 576 kbps, respectively. Its input channel configurations are: 1/0 (monaural), 2/0 (two-channel stereo), different multichannel configurations up to 3/2 + 1, and provision for up to 48 channels. Matrixing is not used. Downmixing is supported. To improve error performance, the system is designed to maintain bitstream synchronization in the presence of bit errors, and error concealment is supported as well.

To allow flexibility in audio quality versus processing requirements, AAC coding modules are used to create three profiles: main profile, scalable sampling rate (SSR) profile, and low-complexity (LC) profile. The main profile employs the most sophisticated encoder using all the coding modules except preprocessing to yield the highest audio quality at any bit rate. A main profile decoder can also decode the low-complexity bitstream. The SSR profile uses a gain control tool to perform poly-phase quadrature filtering (PQF), gain detection, and gain modification preprocessing; prediction is not used and temporal noise shaping (TNS) order is limited. SSR divides the audio signal into four equal frequency bands each with an independent bitstream and decoders can choose to decode one or more streams and thus vary the bandwidth of the output signal. SSR provides partial compatibility with the low-complexity profile; the decoded signal is bandlimited. The LC profile does not use preprocessing or prediction tools and the TNS order is limited. LC operates with low memory and processing requirements.

AAC Main Profile

A block diagram of a main profile AAC encoder and decoder is shown in Fig. 11.15. An MDCT with 50% overlap is used as the only input signal filter bank. It uses lengths of 1024 for stationary signals or 128 for transient signals, with a 2048-point window or a block of eight 256-point windows, respectively. To preserve interchannel block synchronization (phase), short block lengths are retained for eight-block durations. For multi-channel coding, different filter bank resolutions can be used for different channels. At 48 kHz, the long-window frequency resolution is 23 Hz and time resolution is 21 ms; the short window yields 187 Hz and 2.6 ms. The MDCT employs time-domain aliasing cancellation (TDAC). Two alternate window shapes are selectable on a frame basis in the 2048-point mode; either sine or Kaiser–Bessel-derived (KBD) windows can be employed. The encoder can select the optimal window shape on the basis of signal characteristics. The sine window is used when perceptually important components are spaced closer than 140 Hz and narrow-band selectivity is more important than stop-band attenuation. The KBD window is used when components are spaced more than 220 Hz apart and stopband attenuation is needed. Window switching is seamless, even with the overlap-add sequence. The shape of the left half of each window must match the shape of the right half of the preceding window; a new window shape is thus introduced as a new right half.

FIGURE 11.15 Block diagram of MPEG-2 AAC encoder and decoder. Heavy lines denote data paths, light lines denote control signals.

The suggested psychoacoustic model is based on the MPEG-1 model 2 and examines the perceptual entropy of the audio signal. It controls the quantizer step size, increasing step size to decrease buffer levels during stationary signals, and correspondingly decreasing step size to allow levels to rise during transient signals.

A second-order backward-adaptive predictor is applied to remove redundancy in stationary signals found in long windows; residues are calculated and used to replace frequency coefficients. Reconstructed coefficients in successive blocks are examined for frequencies below 16 kHz. Values from two previous blocks are used to form one predicted value for each current coefficient. The predicted value is subtracted from the actual target value to yield a prediction error (residue) which is quantized. Coefficient residues are grouped into scale factor bands that emulate critical bands. A prediction control algorithm determines if prediction should be activated in individual scale factor bands or in the frame at all, based on whether it improves coding gain.

AAC Allocation Loops

Two nested inner and outer loops iteratively perform nonuniform quantization and analysis-by-synthesis. The simplified nested algorithms are shown in Fig. 11.16. The inner loop (within the outer loop) begins with an initial quantization step size that is used to quantize the data and perform Huffman coding to determine the number of bits needed for coding. If necessary, the quantizer step size can be increased to reduce the number of bits needed. The outer loop uses scale factors to amplify scale factor bands to reduce audibility of quantization noise (inverse scale factors are applied in the decoder). Each scale factor band is assigned one multiplying scale factor. The scale factor is a gain value that changes the amplitude of the coefficients in the scale factor band; this shapes the quantization noise according to the masking threshold. The outer loop uses analysis-by-synthesis to determine the resulting distortion and this is compared to the distortion allowed by the psychoacoustic model; the best result so far is stored. If distortion is too high in a scale factor band, the band is amplified (this increases the bit rate) and the outer loop repeats. The two loops work in conjunction to optimally distribute quantization noise across the spectrum.

FIGURE 11.16 Two nested inner and outer allocation loops iteratively perform nonuniform quantization and analysis-by-synthesis.

The width of the scale factor bands is limited to 32 coefficients, except in the last scale factor band. There are 49 scale factor bands for long blocks. Scale factor bands can be individually amplified in increments of 1.5 dB. Noise shaping results because amplified coefficients have larger values and will yield a higher SNR after quantization. Because inverse amplification must be applied at the decoder, scale factors are transmitted in the bitstream. Designers should note that scale factors are defined with opposite polarity in MPEG-2 AAC and MPEG-1/2 Layer III (larger scale factor values represent larger signals in AAC, whereas it is the opposite in Layer III).

Huffman coding is applied to the quantized spectrum, scale factors, and directional information. Twelve Huffman codebooks are available to code pairs or quadruples of quantized spectral values. Two codebooks are available for each maximum value, each representing a different probability function. A bit reservoir accommodates instantaneously variable bit rates, allowing bits to be distributed across consecutive blocks for more effective coding within the average bit-rate constraint. A frame output consists of spectral coefficients and control parameters. The bitstream syntax defines a lower layer for raw audio data, and a higher layer contains audio transport data. In the decoder, current spectral components are reconstructed by adding a prediction error to the predicted value. As in the encoder, the coefficients are calculated from preceding values; no additional information is required.

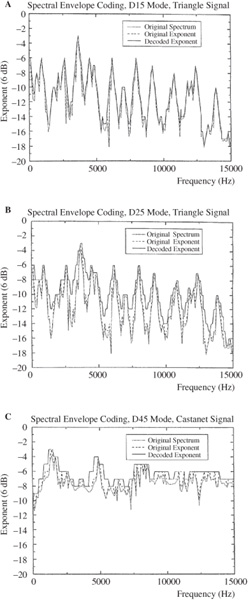

AAC Temporal Noise Shaping