![]()

Planning Your Plan

This chapter describes the dependencies you will have to address before you can begin to create a disaster recovery plan. The plan itself will consist of what to do when disaster happens, but there are a number of important steps before it can even be created. Firstly, there has to be a will to create it and the funding to do so, plus the input of stakeholders in the business itself and not just IT. There will be barriers to your desire to create a plan and you will have to approach removing them in different ways. I will describe each one and give an example of how you can remove it.

The objective at the end of this chapter is to be in a position to create a plan and put it into action. Before you have a plan, though, you have to plan what is required for that plan to exist. You will need to do the following:

- Gain approval from management.

- Build consensus among stakeholders.

- Create a business impact assessment.

- Address the physical and logistical realities of your place and people.

The focus here is “first things first.” These are the dependencies that, if not addressed, will either prevent you from creating a plan or mean it can’t or won’t work when it is really tested.

Getting the Green Light from Management

Since I am assuming your disaster recovery plan does not already exist, step one is to get approval for your plan. In order to initiate a DR planning project, top level management would normally be presented with a proposal. A project as important as this should be approved at the highest level. This is to ensure that the required level of commitment, resources, and management attention are applied to the process.

The proposal should present the reasons for undertaking the project and could include some or all of the following arguments:

- There is an increased dependency by the business over recent years on SharePoint, thereby creating increased risk of loss of normal services if SharePoint is not available.

- Among stakeholders, there is increased recognition of the impact that a serious incident could have on the business.

- Disaster recovery is not something that can be improvised. Therefore, there is a need to establish a formal process to be followed when a disaster occurs.

- There is an opportunity to lower costs or losses arising from serious incidents. This is the material benefit to the plan.

- There is a need to develop effective backup and recovery strategies to mitigate the impact of disruptive events.

The first step to understanding what the disaster recovery plan should consist of will be a business impact assessment (BIA). However, before discussing the BIA, let’s consider some of the barriers to organizational consensus and the metaphors that often underlie these barriers.

Barriers to Consensus

There can be many barriers to creating a DR plan. Some will be practical, such as the fact that every business has finite resources. These have to be addressed with facts and figures to back up your arguments.

One paradoxical objection is the existence of a plan already! This plan may be large and complex to such an extent that no one actually understands it or has ever tested it. It may not include SharePoint explicitly. There can also be political reasons to oppose the development of a plan. For example, there may be people who favor the support of a platform they perceive as in competition with SharePoint and so they oppose anything that relates to it. Counteracting political maneuvering like this is beyond the scope of this book.

Another barrier to approval of a DR plan is a lack of awareness by Upper Management of the potential impact of a failure of SharePoint. This is why, as I will outline in this chapter, having a BIA is the essential first component of the DR plan. It gives management real numbers around why the DR plan is necessary. Creating a DR plan will cost resources (time and money), so the BIA is essential to show you are trying to avoid cost and reduce losses, not increase them.

Some barriers to creating a DR plan will be more nebulous. This is because they have to do with the way people’s minds work, especially when it comes to complex systems like technology. There is a mistake we are all prone to making in relation to anything we don’t understand: we substitute a simple metaphor for the thing itself. As a result, we make naïve assumptions about what is required to tackle the inherent risks in managing them.

Disaster recovery planning is usually not done very well. This is because the business doesn’t understand the technology. At best, it still thinks it should be simple and cheap because copying a file or backing up a database to disk seems like it should be simple and cheap. But the reality is that SharePoint is a big, complex system of interdependent technologies, and maintaining a recoverable version of the system is not simple—even more so if you include third-party apps from the SharePoint Store. While this book will give you the knowledge and facts to back up your system, you will need to construct some better metaphors for your business to help you convince them to let you create a disaster recovery plan that actually fulfils its purpose. But first, you have to understand where these metaphors come from and the purpose they serve.

Metaphors have been used in computing for many years to ease adoption and help people understand the function of certain items. This is why we have “documents” in “folders” on our computer “desktop.” These metaphors were just to represent data on the hard disk. John Siracusa of Ars Technica talks about what happened next:

Back in 1984, explanations of the original Mac interface to users who had never seen a GUI before inevitably included an explanation of icons that went something like this: “This icon represents your file on disk.” But to the surprise of many, users very quickly discarded any semblance of indirection. This icon is my file. My file is this icon. One is not a “representation of” or an “interface to” the other. Such relationships were foreign to most people, and constituted unnecessary mental baggage when there was a much more simple and direct connection to what they knew of reality.

(Source: http://arstechnica.com/apple/reviews/2003/04/finder.ars/3)

Zen Buddhists have an appropriate kōan, although I don’t think they had WIMP (window, icon, menu, pointing device) in mind:

Do not confuse the pointing finger with the moon.

The problem is the convenient, simplistic metaphor used to represent the object becomes the object in itself if you don’t understand fully the thing being pointed at. When this kind of thinking gets applied to planning the recovery of business information, it creates a dangerous complacency that leaves a lot of valuable data in jeopardy.

Long, Long Ago . . .

It may seem obvious to some readers, but almost all business information is now in the form of electronic data. We don’t think of the fragility of the storage medium. We think of business information as being as solid as the servers that contain it. They say to err is human, but to really mess things up takes a computer. This is especially true when years of data from thousands of people can be lost or corrupted in less than a second and is completely unrecoverable. We find it hard to grasp that the file hasn’t just fallen behind the filing cabinet and we can just fish it out somehow.

In many ways, the store of knowledge, experience, and processes is the sum of what your business is—beyond the buildings and the people. Rebuilding a premises or rehiring staff can be done more quickly than rebuilding years of information.

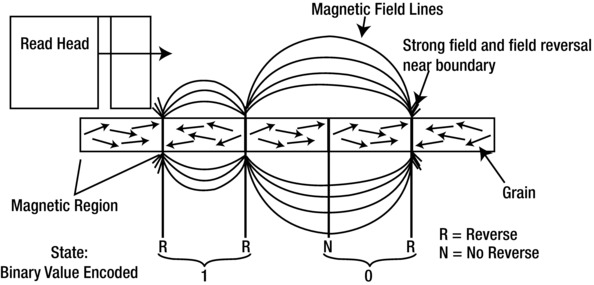

The fragility and importance of electronic information is mainly underestimated because of our outmoded metaphors. We confuse the metaphor with the thing it represents. Those are not really documents; they are just lots of magnetized materials arranged in one direction or another on aluminum or glass in a hard drive (see Figure 2-1).

Figure 2-1. Your documents are actually just little bits of iron

A relative of mine had an original way to get around this fragility. When he visited a web page he liked, he would print it out and file it alphabetically in a file cabinet right beside the computer. He complained this was a frustrating process because the web sites kept changing. Once I introduced him to the concept of a bookmark (another metaphor) in the browser, his life got a lot simpler. But I think that from a data retention point of view, perhaps he had the right idea!

Long Ago . . .

Even if some people have moved on from the perception of files in filing cabinets, they still think that data protection can be addressed exclusively through some form of tape-based backup. A few years ago, copies of backup tapes were retained locally to meet daily recovery requirements for lost files, database tables, et cetera. Copies of some of those tapes were periodically shipped to remote locations where they were often stored for years to ensure data recovery in the event of a catastrophic disaster that shut down the organization’s primary site.

Long-term, off-site storage of tapes was the conventional way to “do” disaster recovery. If operation of the business needed to be restarted in some location other than the primary one, these tapes could be shipped to the new location; application environments would be manually rebuilt; the data would be loaded onto the new servers; and business operations would be transacted from this new location until such time as the primary location could be brought back online.

This model is still seen as valid in some organizations and it is insufficient to capture the complexity and scale of a SharePoint farm. As you will see in later chapters, a SharePoint farm has many interdependent and ever changing components. Rebuilding a SharePoint farm with only tape backups and no DR plan would be a challenge for anyone no matter what their technical skill. Combine that with the cost of every second the system is down and it’s not a good scenario.

Another Weak Metaphor: Snapshots

Virtualization uses another metaphor that is taken wrongly to promise something it can’t deliver in relation to a SharePoint farm. Some people still think they can simply take a “snapshot” of a running SharePoint farm’s virtual web, application, and SQL servers. They think this magic camera captures all the information in a SharePoint farm at a moment in time and this allows them to restore it at any point in the future back to that point. This is overly simplistic.

Just one example of a part of a SharePoint farm that shows their complexity is timer jobs. SharePoint farms have over 125 default timer jobs (http://technet.microsoft.com/en-us/library/cc678870.aspx). They can run anywhere from every 15 seconds (in the case of Config Refresh, which checks the configuration database for configuration changes to the User Profile Service) to monthly runs of My Site Suggestions Email Job, which sends e-mails that contain colleague and keyword suggestions to people who don’t update their user profile often, prompting them to update their profiles.

Timer jobs look up what time it is now to know when next to run. This is because they don’t run on one universal time line but follow a simple rule like “after being run, set the timer to run again in 90 minutes.” If you take a snapshot of these services and then try to restore them to a previous point in time, they think it’s the time of the snapshot, not the current time. Obviously, this can create major problems. More detail can be found here: http://technet.microsoft.com/en-us/library/ff621103.aspx. It states a best practice of not using snapshots in Production environments with some detailed information on how they can also affect performance.

“Reality is merely an illusion, albeit a very persistent one”

—Albert Einstein (attributed)

The point I am making is that these overly simplistic views of the information stored on computers are what leads to poor disaster recovery plans because there is an assumption that it should be cheap and simple to make backups, like taking a photo or making a tape recording. But times have changed, and it is no longer that simple or cheap. Your aim at this point is to get buy-in for your project to create a DR plan. You don’t have time to make people experts on SharePoint, but you have to change their weak metaphors to stronger ones.

Weak metaphors operate like superstitions; they can’t be counteracted with knowledge and facts. Facts simply make the person’s viewpoint more entrenched because there is no 100% true view of anything. To persuade people to open their minds, the more successful indirect approach is to improve their metaphors; you’re going to have to substitute better metaphors they can use instead.

Here are some stronger metaphors that may help you convince people SharePoint is a complex system:

- SharePoint is like a public park: It needs constant pruning and planning to manage its growth. Now imagine that park has been destroyed and you have to recreate it. You would need a lot of different information to recreate it. A simple snapshot would not be enough, and you can’t simply maintain a copy of a constantly changing organic thing.

- SharePoint is like an office building: If, due to a natural disaster, you had to relocate everyone and everything in this building elsewhere, how would you do it? This metaphor is useful because no matter what business you are in, you will likely rely on some physical location. SharePoint is a virtual version of that. A home is another variation of this metaphor.

- SharePoint is like the human body: It has many interconnected and interdependent parts. Like a body, if one organ fails, it impacts the whole organism. If you had to rebuild a person from scratch using cloned organs, you would need not only the physical aspects but also the years of information stored in the brain. With SharePoint, you can clone virtual servers, but only empty ones. The data is constantly changing and complex.

If you can get across to your stakeholders some idea of the complex and evolving nature of SharePoint, you can win them over to the more concrete step of working out what the impact of a SharePoint system failure would be. This is the next step.

Business data is an asset and has tangible value. But some data has more value than others. Most organizations don’t actually calculate the value of information or the cost to the business of losing some or all of it. Before you can do a disaster recovery plan you have to plan why you need it. And what you need to have to fully appreciate why you need a disaster recovery plan is a BIA. This will result in knowing the cost of not having a disaster recovery plan in real money. The conclusion most organizations will come to is that the cost of producing and maintaining a BIA is proportionally very little compared to what it will have saved them in the event of a disaster.

A BIA should be a detailed document that has involved all the key stakeholders of the business. They are the people key to making the BIA and, by extension the DR plan, a success. It is not something that can only be drawn up by the IT department. This is another fundamentally outmoded mind set. It is the responsibility of the content owners to own the business continuity planning for their teams. While that is done in conjunction with the IT department, they are simply there to fulfill the requirements. It is the job of the business to define them.

A BIA should identify the financial and operational impacts that may result from a disruption of operations. Some negative impacts could be

- The cost of downtime

- Loss of revenue

- Inability to continue operations

- Loss of automated processes

- Brand erosion: loss of a sense of the company’s quality, like the Starbucks example in Chapter 1

- Loss of trustworthiness and reputation: the hacking of Sony demonstrated this impact

I have offered my theory as to why disaster recovery planning is so underfunded and also why traditionally IT has been the owner of it: the stakeholders don’t realize the complexity. In most cases, it is the IT department that determines the recovery time and recovery point objectives. But how are they supposed to determine them accurately without empirical input from the business?

As a consequence, the objectives are typically set based on generic Microsoft guidelines or some arbitrary decision, like which managers complain the most about how particularly important their data is. Without a BIA, IT has no empirical way to determine how to measure these objectives (RTO and RPO). The business users are owners of their content, so by extension they are responsible for business continuity/disaster recovery planning. They should dictate the RTOs and RPOs for their business processes within SharePoint.

RTOs and RPOs are either based on simple tape backups or snapshots—or due to overzealousness they go to the opposite extreme of something approaching zero downtime. SharePoint farms sometimes end up with overly aggressive RTO and RPOs because business users believe they can’t tolerate any downtime. That is certainly true in some organizations, such as Amazon.com, which relies on uptime to exist as a business. Another example would be air traffic control where every second counts if planes are circling your airport and running low on fuel. These are the exception, not the rule. The more aggressive the RTO/RPO, the more expensive the technology needed to achieve that objective. As you saw in “Applied Scenario: It’s Never Simple” in Chapter 1, an RTO/RPO in minutes or hours necessitates the use of SAN replication technology, which makes it very expensive to replicate the data at the SAN level to another data center. Log shipping is slower but also much cheaper.

The objective in disaster recovery planning is to find the perfect equilibrium between what you want to pay for your RPO/RTO and what you can afford to lose. Call it the Goldilocks principle: not too much or too little. The graph in Figure 2-2 illustrates this principle.

Figure 2-2. Time is money when it comes to RPO and RTO

In Figure 2-2, the central axis is cost. It also marks the point in time when the disaster happens. On top, you have your ship hitting the iceberg. From that point what you do is your disaster recovery. The clock is literally ticking and time is money. Beside the stopwatch and to the right is time moving forward. The arrow coming from the Titanic indicates that the cost goes down as you move away from the point of impact. In other words, the longer you can wait to restore the data, the cheaper your recovery will be. However, this descending curve is crossed by an ascending dotted curve pointing up to the Impact of Outage. That is the rising cost to your organization of SharePoint being offline. The further you go from the stopwatch, the higher it will climb.

To illustrate this dynamic, consider the Titanic. Lifeboats took up space on the deck, and space on a ship is very valuable to the passengers and by extension the company because the more comfortable the voyage, the more passengers they will have and the higher their profits. It was also the common wisdom of the time that if a ship that large did sink, there wouldn’t be enough time to get all the thousands of passengers onto the lifeboats, so they were useless anyway. Also, it was believed that in such a busy shipping lane, there would be plenty of ships to come to their aid and fish people out of the water in the event of a sinking. Thus, a focus on profits and a dependency on luck were placed over the value of human lives. It is important to point out that White Star Line (who owned Titanic) eventually ceased to exist and was taken over by its competitor Cunard. It is fair to say they did not consider seriously the Impact Of Outage or achieve a reasonable RTO equilibrium.

On the other side of the stopwatch is the time since your last backup. The cost of being able to recover to a point in time seconds or minutes before the disaster is at the highest point on the curve to the left of the cost axis. In other words, if you can only afford to lose seconds or minutes of data, it’s very expensive to implement this. But if you can afford to lose hours or days, it is much cheaper.

To give a non-SharePoint example, to keep backups of this book as I wrote it I used Jungle Disk. It is based on Amazon Web Storage. I schedule a timer job to back up the folder with all my chapters and figures to the cloud every night at 1 a.m. If it fails at that time because my Internet access is down, it tries again until it gets a connection. I am comfortable with a Recovery Point Objective of at most 24 hours because if I lost a day’s work, it would only be 1,200 or so words on average as that is what I write in a day. I could make that up in four days at a rate of 1,500 words a day. Jungle Disk is low cost because you only pay for the storage you use after your initial upload. It is not too expensive for me, so I feel I have achieved my RPO Equilibrium. My RTO is also low because all I need to do is go to another PC, install Jungle Disk, and restore my backup—in less than an hour, I’m back writing. You can read more about Jungle Disk here if you are interested: http://aws.amazon.com/solutions/case-studies/jungledisk/.

The single most important point in this book is that if you are going to implement a disaster recovery plan, you need to start by understanding your requirements and the implications of those requirements. It is a mistake to focus first on the many technologies that are often associated with SharePoint. I knew I needed to protect myself from a potential hard drive crash. I didn’t want to rewrite this book from the beginning if I lost it. I knew I wouldn’t mind spending a few dollars a month on backup, as long as I’d not lose more than a day’s work and I could be back writing within an hour or so. That was my BIA. Once I’d figured out my parameters, I searched until I found the technical solution that met my requirements.

A good BIA will result in consensus on RTO/RPOs for critical business processes within SharePoint. To achieve this you will have to involve a representative from all of your organization’s business units. Their job is to identify the critical business processes their units perform and how long those processes can be down before there is a critical impact to the organization. Notice the standard is critical impact. Critical means if they don’t function, the organization can’t function and comes to an immediate stop. It’s not just the point where SharePoint being down would cause them some inconvenience. It is the point where there is a real, measurable cost. The reality is that there is invariably a manual procedure that can be followed until SharePoint is brought back up. Admittedly, it will be a bit annoying to catch up with re-entering the data, but the cost savings could be very large.

Once you have tangible figures, these business process RTO/RPOs will translate into application and system RTO/RPOs for SharePoint, and IT can support these processes. From IT’s point of view, it will give them real requirements to meet. As a bonus, this will also likely help identify dependent systems such as Active Directory or external data sources linked to the prioritized business processes within SharePoint.

You’ll never know whether you’re really maintaining the “just right” point in your DR spending without producing a thorough BIA with all the necessary inputs. Also, if you don’t review and revisit it every 6 to 12 months it will become out of date and irrelevant. Remember the lesson of the lifeboats and the Titanic.

Like the ship, you have to make sure all the stakeholders are on board and understand the risks so they are committed fully to knowing what they will need to do in the event of a disaster. Think of this as making sure your passengers know the lifeboat drill. To understand the importance of the drill, they need to know the impact, literally, of an impact.

To prepare a disaster recovery plan, the main dependency you will need to make it happen is people. I have already discussed completing a BIA, RTO/RPO, the Goldilocks principle, and finally, consensus. In the event of a disaster, what other people will you need involved in the planning? The answer is, of course, the people to execute the plan. This is another point where planning your plan can fall down before it even begins. What if the person or people who know your SharePoint farm best are not available themselves because of the disaster? Perhaps the disaster is an epidemic and all your SharePoint administrators are ill in hospital or worse. Your plan has to take into account that the people who created it may not be the ones implementing it. The only way to prepare for this and to test your plan properly is to have an independent third party test your recovery plan.

Another issue to consider when testing your plan is that the people who created the plan have a vested interest in it being successfully run. As a result, they will make sure the test of the plan is successful. This is another reason why a third party should do a dry-run implementation of the plan. So now you have everyone in agreement about needing a plan, you have a way to measure what it needs to achieve, and you’ve avoided the pitfall of relying on one person/group of people as a single point of failure to test and execute the plan. What other dependencies are there?

The physical dependencies in the event of a disaster are the SharePoint farm itself and the data. Many enterprises implement a DR plan for just data, assuming that the servers and application environment will be manually rebuilt. Manually rebuilding SharePoint is not a simple task. Think again of the complex system metaphors. The answer is to use automated application recovery. DR plans that provide for automated SharePoint application recovery will be able to meet much shorter RTOs than those that just recover data and then depend on administrators to manually rebuild SharePoint. Those plans will also be more reliable and perform more predictably because they will not be as dependent upon the skill of the SharePoint farm administrators that are actually performing the recovery, some of whom may not be available when a real disaster hits.

Remember that the primary goal of the BIA is to find an equilibrium point for the RTO and RPO objectives where the impact to the organization can be tolerated and the organization can afford the cost of the solution. If you’ve not built it already, it may result in changing how you plan your SharePoint architecture. You may have separate farms with different RTO/RPOs because you have a prioritized recovery order of the business processes within the business units.

From IT’s point of view, it will help identify dependent systems such as Active Directory or external data sources linked to the prioritized business processes within SharePoint. So when planning your plan, realize it will have a comprehensive impact on your architecture. Don’t build until you have a BIA. It’s tempting to do things the other way around, but the result will be an architecture where, like the Titanic, you have to hope nothing will go wrong because you know it will be a disaster.

Outside of your SharePoint farm and its data is a bigger world that is beyond your control. But as part of your pre-DR plan planning you can assess what could happen that might affect your not being able to put your plan into action. This means you will have to evaluate the types of disasters that you are most likely to encounter given where your data centers are located. If you are in an area prone to natural disasters, such as tsunamis, floods, earthquakes, or widespread power outages, you may want to follow the DR best practice guideline of locating your remote recovery site at least 200 miles away from your primary site. If this is your requirement, this will affect any decision you make to implement replication technologies to help address your DR requirements. For example, Microsoft recommends a stretched farm have at worst 1 ms of latency between the data centers, and fiber optic cable contains imperfections that start to degrade the light being passed along after about 60 miles. There may be other specific risks associated with your type of organization that should be taken into account when planning what should be in your DR plan.

To assess risk, you’ll need to do some research. There will be many sources of information, including the following:

- System interfaces, hardware and software

- Data in logs

- People: Ask! There will be lots of valuable information here.

- History of hacks/attacks on the system from the following sources: internal, police, news media.

- History of natural disasters from the following sources: internal, police, news media.

- External/internal audits

- Security requirements

- Security test results

There are many types of risk that should be considered. The following list is by no means exhaustive but it at least demonstrates the range of possibilities:

- Natural Disasters

- Tornado

- Hurricane

- Flood

- Snow

- Drought

- Earthquake

- Tsunami

- Electrical storms

- Fire

- Subsidence and landslides

- Freezing conditions

- Contamination and environmental hazards

- Health epidemic

- Deliberate Disruption

- Terrorism

- Sabotage

- War

- Coups

- Theft

- Arson

- Labor disputes/industrial action

- Loss of Utilities and Services

- Electrical power failure

- Loss of gas supply

- Loss of water supply

- Petroleum and oil shortage

- Loss of telephone services

- Loss of Internet services

- Loss of drainage/waste removal

- Equipment or System Failure

- Internal power failure

- Air conditioning failure

- Cooling systems failure

- Equipment failure (excluding IT hardware)

- Serious Information Security Incidents

- Hacking

- Accidental loss of records or data

- Disclosure of sensitive information

- Other Emergency Situations

- Workplace violence

- Public transportation disruption

- Riots

- Health and safety regulations

- Employee morale

- Mergers and acquisitions

- Negative publicity

- Legal Problems

The outcome of this will be to identify preventive controls. These are measures that reduce the effects of system disruptions, increase system availability, and potentially reduce your disaster recovery costs.

Time and distance are big factors in planning your plan. Synchronous means “at the same time.” Replication means “make a copy of data.” Keeping current is something people have always done. Before we even used technology, we used verbal communication to keep in sync. If I haven’t seen a friend for a while, we will share news until we “catch up.” We exchange the new information of interest since the last time we met. So the key concepts here are time and data. When you connect your smartphone to your PC, they also catch up and exchange information that may be new on the other until they are in sync again; this includes new downloads, software updates, contacts, e-mails, calendar entries, and so forth. In both cases, it takes a bit of time to catch up. The longer since you last talked/connected to the PC, the longer it takes to get in sync.

Social networking technologies like Facebook and Twitter mean we tend to know a lot of current information about a lot of people very quickly, almost the instant it happens. Some updates are even from people we don’t know; we are just interested in a topic they are sharing their knowledge about. If we constantly check these applications, we can stay constantly in sync. The drawback is you have to spend every waking minute with your eyes glued to a screen reading to ensure you are up to date. The real world is passing you by while you stay in sync with the virtual one.

You can think of talking/smartphone sync as not happening all the time, only when we hook up. The term for this is asynchronous. The longer the gap between syncs, the longer it takes. But while we are not syncing, we are free to do other things. Facebook/Twitter is almost synchronous; it can happen all the time in real time, but at the expense of doing anything else.

Synchronous Replication: Mirroring

Replication is the process of syncing data. It comes in two flavors: synchronous and asynchronous. SharePoint data is mostly stored in SQL Server, so for synchronous replication it can use a technology called database mirroring to keep two databases looking the same, like a mirror image, on different farms in different data centers. The more the source changes, the harder the mirror has to work to keep current. Think of it has having a constant stream of updates on Facebook or Twitter. SharePoint farms will have about 20 databases of various sizes and purposes. Some change frequently, like the ones holding content; some less so, like the ones holding farm-wide configuration settings. This puts a strain on your system resources, so you will have to plan to have extra resources if you use mirroring.

To recap, SharePoint farmers (I wonder if that term will catch on?) use synchronous replication like mirroring to keep a source and target synchronized in terms of data states; this is hard work for the servers if there are a lot of changes. Latency is another factor; that’s how much time it takes the data to travel between the servers. High latency, measured in milliseconds, naturally causes a performance hit on your production SharePoint farm if the source and target are more than about 30 miles apart. This is frequently the case and is often deliberate so that the data centers will not be prone to the same potential physical disasters.

Asynchronous Replication: Log Shipping

Because fiber optic cable gets slower and less reliable with distance, asynchronous replication is much more widely used to meet long-distance DR requirements. Asynchronous replication keeps a source and target in sync over literally any distance, but the target may lag the source by up to several minutes (depending on the write volumes and network latencies). This is like our example of two friends catching up, or connecting your Smartphone to your PC. If it’s done once a day, you have the rest of the time to focus on other things, but since you do it every day, the catch-up doesn’t take too long, and you can do it in the evening when other activities are quieter.

SharePoint stores most of its data in SQL Server, and SQL Server tracks changes in a log. If you copied the logs from your production farm overnight to another farm in another data center, then applied all the changes in it the those databases, the two farms would become in sync again. There is a backlog between the applying of the changes, but this log shipping replicates asynchronously because it doesn’t happen until a while after the real changes. The advantage is it can be done when the servers are not too busy and requires less system resources as a consequence.

Asynchronous replication provides the kind of RPO performance necessary to meet SLAs of 99.9% up time per year, and it does so in such a way that doesn’t impact the performance of production applications. This translates to around 8 hours a year, since 99.9% is one day in 1,000, or one day unscheduled down time every 3 years, approximately. A year is 365 1/4 days. 8 hours is 1/3 of a 24-hour day. So, if you log shipped every 8 hours, that’s the maximum you would lose in a year. You could bring the recovery point back to at worst 8 hours since the last log ship. Keep in mind that if your primary data center is too close to your secondary data center, the same natural disaster could disable both of them, so the benefits of synchronous replication could be moot. Another reason log shipping is a good option is that it is a resilient process; you can keep the logs and even restore them to the production farm to roll it back to a point in time. Note in both cases your farms must have the same architecture, patches, et cetera so they can be kept in sync. All of these things need to be considered before you can begin your disaster recovery plan.

This is likely starting to sound complex, but a process I apply to simplify matters is to create priorities among what will need to be recovered. This helps focus resources on what is most important. Defining recovery tiers is an approach that is often used when evaluating in your BIA the recovery requirements for your various business processes within SharePoint. Instead of evaluating and setting recovery requirements individually for all major business process areas, a small number of recovery tiers can be defined. Each tier has a set of recovery performance metrics that are associated with all application environments within that tier. For example, Management may define three tiers like those in Table 2-1.

Table 2-1. Recovery Tiers, Because Not All Content is Equal

| Tier | RPO/RTO | Business Applications in SharePoint |

|---|---|---|

| 1 | RPO 5 min, RTO 1 hour | Finance Department Site Collection, Legal Department Site Collection |

| 2 | RPO 4 hours, RTO 8 hours | Project Sites |

| 3 | RPO/RTO 1 day or more | Team Sites and My Sites, all other content |

These numbers are just suggestions for your organization since your recovery tiers will vary based upon your business and regulatory requirements. But the general idea applies: there will be a small amount of critical content that requires a very low RPO and very short RTO; then there will be another set of very important sites that require stringent RPO/RTO, but not as stringent as tier 1; then there will likely be all the rest of the sites, which are not critical and may only need to be recovered within one or two days.

Additionally, you may have only two tiers, or you may have more than three tiers, depending on your requirements, but keep in mind that what you don’t want is one tier: clearly all your SharePoint sites do not merit the highest priority in terms of recovery. You don’t want to pay the price premium to meet your most stringent recovery requirements for sites that don’t need it. By the same token, you don’t want your critical application environments supported by the same multiday RPOs or the RTOs that you use for relatively unimportant sites.

Asking your end users about their recovery requirements is an important step, but not the only one. Generally, meeting more stringent recovery requirements requires more expensive solutions. When not thinking about costs, most end users will respond that they want very rapid recovery, when in fact they may be able to deal with a failure more easily than they think. The fact is you have to make them really think about it as part of the BIA.

In some situations, you may have already chosen your architecture—either on the premises or in the cloud—and now you know from your BIA that you will not be able to put in place a disaster recovery plan that will meet your RTO/RPO needs. In any case, the BIA should indicate whether the cost of changing what has been done so far will be less than the cost of a disaster. On that basis, you can win support to fix things and not be stuck with poor decisions and 20/20 hindsight.

In the case of on premises, you may argue you have already paid a third-party consultancy a lot of money to design your SharePoint farm, and they didn’t talk about any of this. How can you have different tiers of recovery in that situation? If the farm they created has RPO/RTOs higher than you need, this is an opportunity to save money by changing the rate and amount of data that is backed up/replicated. You may have to create another farm that is just for your tier 1 critical content and migrate content to there. This new farm will cost money but this will likely be cheaper than losing the data, and you can argue you saved money by reducing the RPO/RTO on the tier 2/3 data. If their architecture had too low RPO/RTOs, you should create a new tier 1 farm just to maintain the critical data. Once again you are avoiding greater losses, not spending more money.

What if you are using SharePoint Online Standard and you can’t have different RTO/RPOs there, but only what Microsoft offers, which is a 12-hour RTO and 24-hour RPO per year? In that case, you should also consider another hosting option for your critical content like an on-premises farm. This means moving content out of the cloud and building a farm for your critical tier 1 content. Again you can argue that you are avoiding greater losses, not spending more money. SharePoint Online Enterprise has a 1-hour RTO and 6-hour RPO, but this also costs more.

Neither solution is simple, but it is still better than knowing you need to do something but not doing it. In a sense, if you have made decisions about your farm’s location without considering DR, you are effectively starting again. This book will help you get to the point where you will be in a stronger position.

Your SLA may already have been negotiated and now is not sufficient for your needs. It may be too high and therefore too costly, or too low, which is potentially very costly! A Service Level Agreement is just that—an agreement between parties recording a common understanding they have reached about the required level of service. It must be a living document that keeps track of changing needs and priorities. It should not be perceived as a straitjacket that restricts either party from negotiating adjustments. In this case, the SLA should be reviewed and changed. In fact, SLAs should be reviewed at least annually. The very process of establishing or renegotiating an SLA helps to open up communications and establish both parties’ expectations of what can be realistically accomplished.

SLAs should be seen as positive things, but they can be a blunt tool to prevent communication. A good SLA ensures that both parties have the same criteria to evaluate service quality. A bad SLA is used to stifle complaints in an already troubled relationship or as a quick fix to stop discussion. To be effective, an SLA must incorporate two things: service elements and management elements.

The service elements clarify services by communicating such things as:

- What services will be provided.

- The conditions of service availability.

- The standard of service.

- Escalation procedures.

- Both parties’ responsibilities.

- Cost versus service tradeoffs.

The management elements of the SLA focus on how to put the agreement into practice and measure if it is working or not. Without these, an SLA lacks enforceability or a way to agree if it is effective or not. These considerations include the following:

- How to track service effectiveness.

- How information about how service effectiveness will be reported and addressed.

- How service-related disagreements will be resolved.

- How the parties will review and revise the agreement.

The key to establishing and maintaining a successful SLA is having one person on both sides of the agreement that is responsible for creating it and reviewing it. It can be just part of an IT manager’s job or a full-time job for one person. How long it takes to create one is only answered realistically by doing it and then looking back. It is a living document, so it can be agreed upon and evolves as time goes by.

Now you have prepared most of the groundwork for your plan: you’ve justified it, got consensus, and created your BIA and RTO/RPOs. You have done your risk assessments and assessed physical limitations such as location and latency. You have planned and decided on your recovery tiers and negotiated your SLAs. So have you finished planning your plan? Not quite, but you are at the final hurdle—the dependencies that arise once the disaster itself happens. I will go into more detail in Chapter 3, but here are some principles you can apply to coordinating your disaster. These principles come from the people whose job is arguably the most stressful and chaotic: the Army. They have developed 4Ci to help identify the essential components of a good command structure you will need during your disaster if you want to be able to put your plan into place.

4Ci

This stands for command, control, communications, computers, and intelligence. While it is a US military acronym, it applies to the civilian world in relation to how to respond to a disaster. Overall, it describes how the disaster should be coordinated by the people involved, and how essential communication, leadership, and information are. Even before you establish how you will meet the recovery needs of the business from a technical standpoint, you need the people available and communicating well as an effective chain of command. War is hell, as they say, and if a military command structure can continue to function in those circumstances, they have something to teach those of us in the Information Technology world about managing situations that are unexpected and chaotic.

This is the exercise of authority based upon certain knowledge to attain an objective. Note that the phrase “certain knowledge” can mean command experience or training, but it also implies that making command decisions has to be done with the available information. Most commanders would not care to admit that a lot of their decisions are based in guesswork and luck. In the case of a SharePoint disaster recovery, the key question is, “Who is in charge and who makes the final decisions when a disaster happens?”

This is the process of verifying and correcting activity such that the objective or goal of command is accomplished. Control means that the person in command is maintaining control of the situation as it develops and new information is gathered and analyzed. It is not enough to say what should be done and, if the disaster happened in the middle of the night, go back to bed. Control must be taken and maintained until the situation has returned to normal or until control can be delegated to someone else.

This is the ability to exercise the necessary liaisons to effective communications between tactical or strategic units. When a disaster unfolds, it happens in real time. There is the time the disaster is detected and the time it occurred. There is usually one cause for the disaster but multiple side effects that amplify it and make it grow from a minor “near miss” that didn’t miss into a cascade of problems. This is why communication is essential.

For accurate communication, your disaster recovery plan must have a call list. This list must be kept current. The list should have designated backups for each key individual and multiple contact information for them as well. Someone should be designated as the communication list manager to monitor responses and contact backup staff as necessary.

This refers to the computer systems and compatibility of these computer systems. Obviously the SharePoint farm and all its dependent systems are essential in your disaster recovery. This includes the network, Active Directory, and perhaps Exchange, plus third-party data sources and so forth. This is the largest area of your disaster recovery, so you must be focused on it.

This includes collection as well as analysis and distribution of information. When a disaster occurs, the logs become very important; they can tell you what went wrong and how to get the system back online as quickly as possible. Note that the initial priority is not to work out why this happened. The postmortem can be done after the critical tier 1 and perhaps tier 2 systems are back up and running. At this point, the purpose of analyzing the logs is to find out what when wrong so it can be fixed as soon as possible. If it the cause of the error can’t be corrected, it’s time for the last resort, which is restoring from backup.

When the stopwatch has started and you are now in recovery mode, many things are occurring at the same time and confusion is inevitable. In order to make the disaster recovery process easier, I recommend a detailed script or set of step-by-step instructions in your DR plan. Of course, the script should be formally reviewed by several different members of the DR team.

Since there is no guarantee that the script will be followed by the same person who wrote it, it’s best to use a simple bulleted list with easy-to-follow steps. When in a real recovery scenario, there will be intense pressure and many things going on at the same time. Also, a disaster can occur at any time, so if the plan is executed late at night, confusion is likely to be high.

If possible, try to anticipate errors and include remediation steps. Straightforward and simple instructions go a long way when you are under extreme stress and fatigue. Avoid using terms that may not be understood when extreme fatigue hits. If you must use technical terms, add a glossary.

Last but Not Least: Supply Stores and Restaurants

It may seem unimportant now, but if your staff are onsite for many hours or days, access to good-quality food and drinks will help keep their energy levels high and avoid the negative side effects of stress and fatigue. Adrenaline will only keep you going for so long. When it runs out, you need time to rest and recover. It is also good for morale, helps team building, and reduces stress by giving your team a break to eat and rehydrate. Sometimes the best ideas occur to people if they stop thinking about the problem or step back and chat about it more informally. Do not underestimate the power of a good pizza to solve an IT problem!

As well as planning access to food and drink, consider planned funds/permissions to purchase new equipment and cables. I have seen situations where the solution was very simple, like a damaged cable, but it took hours to find an open store to buy the replacement. If it is a more expensive piece of equipment, put in place a process to get the funds cleared in an emergency to buy the new servers. If it’s Friday evening, do you really have to wait until a store opens on Monday before you can get the necessary new hard drive?

Small things like these can make a big difference to meeting your RPO/RTOs, so plan for them now.

Summary

As this chapter shows, there are many factors that need to be considered when planning your plan. The first is getting management buy-in. The next is to get people to change from overly simplistic understandings of SharePoint to something that captures its complexity. This is where you update weak metaphors. This is very important, because stakeholders often don’t appreciate that SharePoint is a complex system.

The next step is writing something that will quantify why the DR plan is needed: the Business Impact Assessment. This helps establish a consensus as to the RTO/RPOs and the cost-to-benefit ratio of the DR options. There will be more detail on the technical options in later chapters. Another important factor to understand is physical limitations when planning DR: location, latency, and types of synchronicity.

Your most useful tool in creating a viable DR plan will be building an architecture that reflects your needs. This means creating different tiers in your SharePoint architecture that have different RTO/RPOs and different cost levels relative to the importance of the data in them.

Finally, I offered some advice on creating a good SLA and some initial thoughts on coordinating the disaster. There’s more on the latter topic in Chapter 3.