The Message Passing Interface (MPI) standard defines the primitives for managing virtual topologies, synchronizing processes, and communicating between them. Several MPI implementations exist, and they differ in which version of the standard, and which of its features, they support.

We will introduce the MPI standard through the Python mpi4py library.

Before the 1990s, writing parallel applications for different architectures was harder than it is today. Many libraries eased the process, but there was no standard way to do it. At that time, most parallel applications were intended for scientific research environments.

The model most commonly adopted by these libraries was the message-passing model, in which processes communicate by exchanging messages rather than by using shared resources. For example, a master process can assign a job to the worker processes simply by sending each of them a message that describes the work to be done. A second simple example is a parallel merge sort: each process sorts its portion of the data locally, and the results are passed to other processes that perform the merge.

Since the libraries largely used the same model, with only minor differences between them, the authors of the various libraries met in 1992 to define a standard interface for message exchange, and MPI grew out of this effort. The interface had to let programmers write portable parallel applications for most parallel architectures, using the same features and models they were already accustomed to.

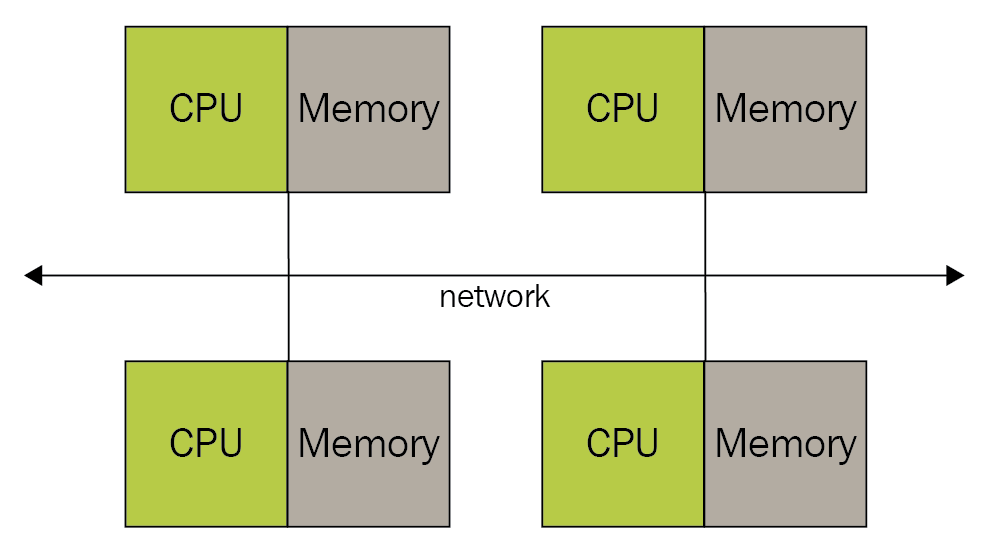

Originally, MPI was designed for distributed memory architectures, which were gaining popularity at the time:

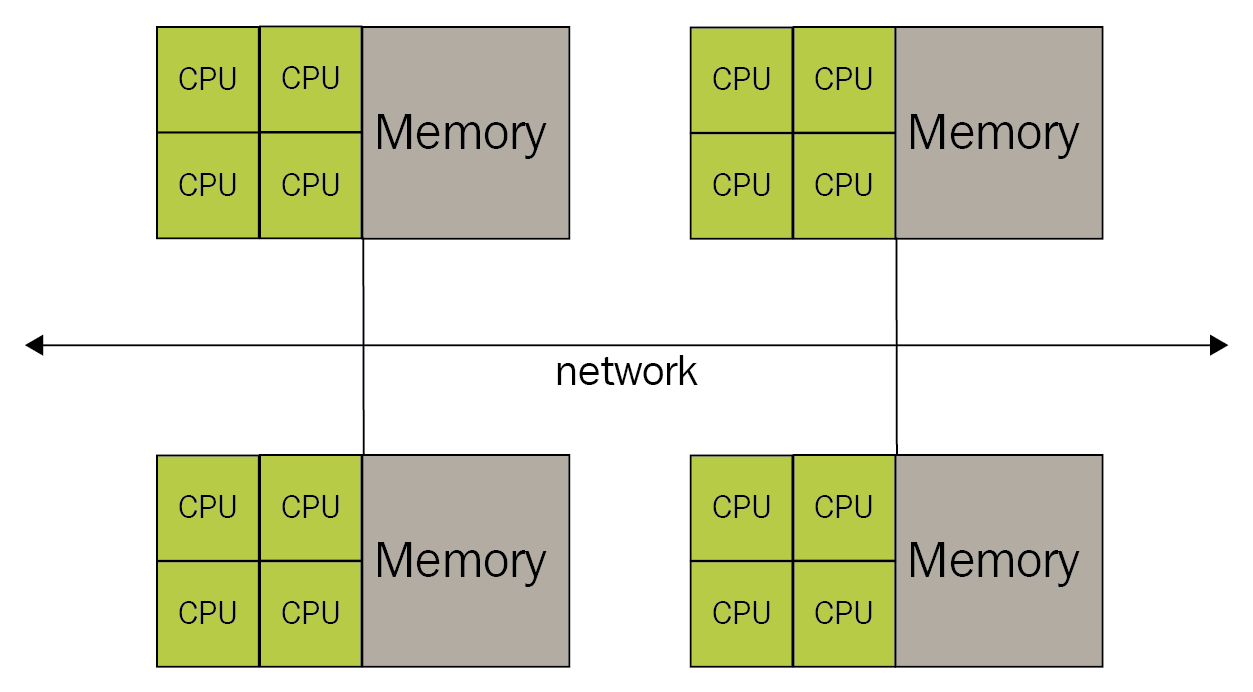

Over time, shared memory nodes began to be connected together into distributed systems, creating hybrid distributed/shared memory architectures.

Today, MPI runs on distributed memory, shared memory, and hybrid systems. However, the programming model remains that of distributed memory, regardless of the architecture on which the computation actually runs.

The strengths of MPI can be summarized as follows:

- Standardization: It is supported by virtually all High-Performance Computing (HPC) platforms.

- Portability: Moving an application to a different platform that supports the same standard requires only minimal changes to the source code.

- Performance: Vendors can provide implementations optimized for their specific hardware, achieving better performance.

- Functionality: MPI-3 defines over 440 routines, but many parallel programs can be written using fewer than 10 of them.

In the following sections, we will examine the main Python library for message passing: the mpi4py library.