A GPU is a specialized processor for vector processing of graphical data, designed to render images from polygonal primitives. A good GPU program makes the most of the high degree of parallelism and the mathematical capabilities offered by the graphics card, while minimizing its disadvantages, such as the latency of the physical connection between the host (the CPU) and the device (the GPU).

GPUs are characterized by a highly parallel structure that allows large datasets to be manipulated efficiently. This feature, combined with rapid improvements in hardware performance and programmability, has drawn the attention of the scientific world to the possibility of using GPUs for purposes other than rendering images.

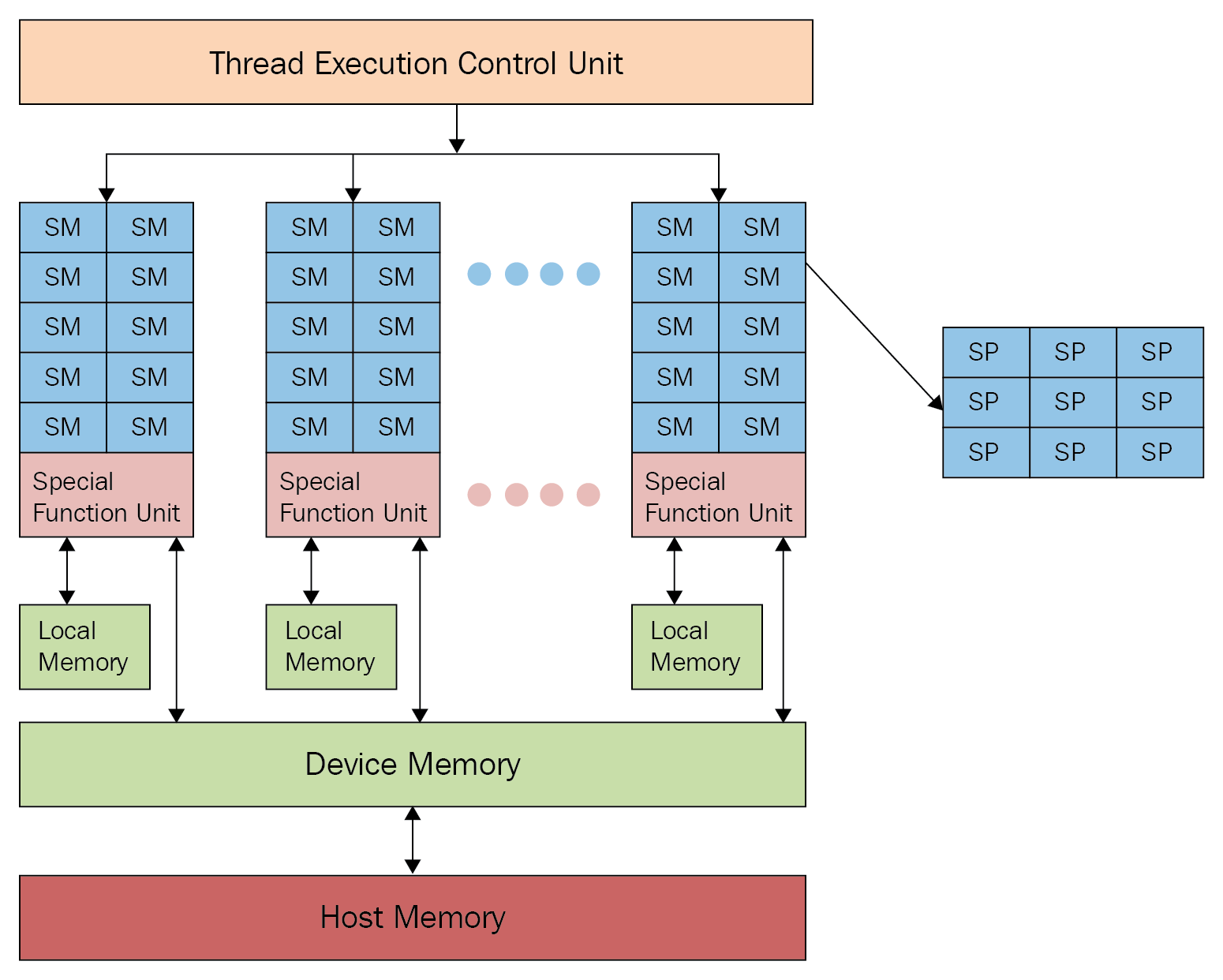

A GPU (see the preceding diagram) is composed of several processing units called Streaming Multiprocessors (SMs), which represent the first logical level of parallelism: each SM works simultaneously and independently of the others.

Each SM is in turn divided into a group of Streaming Processors (SPs), each of which has a core that runs a thread sequentially. The SP is the smallest unit of execution logic and represents the finest level of parallelism.
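The two levels of parallelism can be pictured with a toy model in plain Python. This is only a sketch of the structure described above, not real GPU code: the counts `NUM_SMS` and `SPS_PER_SM`, the function names, and the way work is dealt out (contiguous chunks per SM, round-robin across SPs) are all assumptions made for illustration, and Python threads stand in for SMs without providing true hardware parallelism.

```python
from concurrent.futures import ThreadPoolExecutor

NUM_SMS = 4      # hypothetical number of SMs, chosen for illustration
SPS_PER_SM = 8   # hypothetical number of SPs per SM


def run_sp(values):
    """One SP: processes its elements sequentially, one after another."""
    return [v * v for v in values]


def run_sm(chunk):
    """One SM: deals its chunk out to its SPs round-robin, then reassembles."""
    per_sp = [chunk[i::SPS_PER_SM] for i in range(SPS_PER_SM)]
    results = [run_sp(part) for part in per_sp]
    out = [None] * len(chunk)
    for i, part in enumerate(results):
        out[i::SPS_PER_SM] = part  # put each SP's results back in place
    return out


def run_gpu(data):
    """The device: one contiguous chunk per SM; SMs work independently."""
    size = max(1, -(-len(data) // NUM_SMS))  # ceiling division, at least 1
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=NUM_SMS) as pool:
        parts = list(pool.map(run_sm, chunks))  # map preserves chunk order
    return [x for part in parts for x in part]


if __name__ == "__main__":
    print(run_gpu(list(range(10))))  # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```

In this model no SM needs to know what the others are doing, which mirrors the independence of SMs, while each call to `run_sp` is an ordinary sequential loop, which mirrors an SP running a single thread.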

To program this type of architecture effectively, we need to introduce GPU programming, which is described in the next section.