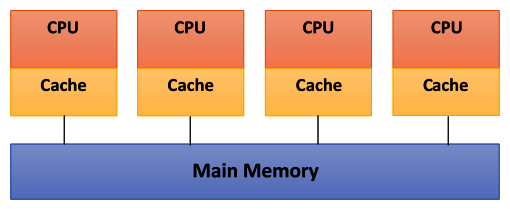

The schema of a shared memory multiprocessor system is shown in the preceding diagram. The physical connections here are quite simple.

The bus structure allows an arbitrary number of devices (CPU + Cache in the preceding diagram) to share the same channel to the main memory. Bus protocols were originally designed to allow a single processor and one or more disk or tape controllers to communicate through the shared memory.

The problem occurs when a processor modifies data stored in the memory system that is simultaneously used by other processors. The new value passes from the cache of the processor that changed it to the shared memory. Later, however, it must also be propagated to all the other processors so that they do not work with an obsolete value. This is known as the cache coherency problem, a special case of the memory consistency problem. It requires hardware implementations that can handle concurrency and synchronization issues, similar to those encountered in thread programming.
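
The stale-value scenario described above can be illustrated with a toy simulation. This is only a sketch under simplifying assumptions: the `Bus` and `Cache` classes, the `coherent` flag, and the write-through-with-invalidate policy are all hypothetical names and choices made for illustration, and they do not model any specific hardware protocol.

```python
class Bus:
    """Hypothetical shared bus: holds main memory and the attached caches."""

    def __init__(self):
        self.memory = {}   # main memory: address -> value
        self.caches = []   # every cache attached to the bus

    def attach(self, cache):
        self.caches.append(cache)

    def broadcast_invalidate(self, address, writer):
        # Tell every cache except the writer to drop its copy.
        for cache in self.caches:
            if cache is not writer:
                cache.lines.pop(address, None)


class Cache:
    """Private cache of one processor (toy model, unlimited capacity)."""

    def __init__(self, bus):
        self.bus = bus
        self.lines = {}    # locally cached address -> value
        bus.attach(self)

    def read(self, address):
        if address not in self.lines:          # miss: fetch from main memory
            self.lines[address] = self.bus.memory[address]
        return self.lines[address]

    def write(self, address, value, coherent=True):
        self.lines[address] = value
        self.bus.memory[address] = value       # write through to main memory
        if coherent:
            self.bus.broadcast_invalidate(address, self)


def demo(coherent):
    bus = Bus()
    bus.memory[0x10] = 1
    cpu0, cpu1 = Cache(bus), Cache(bus)
    cpu1.read(0x10)                    # cpu1 caches the value 1
    cpu0.write(0x10, 2, coherent)      # cpu0 updates the same location
    return cpu1.read(0x10)             # what does cpu1 see now?


print(demo(coherent=False))  # 1: cpu1 keeps reading its obsolete copy
print(demo(coherent=True))   # 2: cpu1's copy was invalidated, so it reloads
```

Without the invalidation broadcast, main memory already holds the new value, yet the second processor still works with the old one. That gap is what a coherency mechanism has to close.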

The main features of shared memory systems are as follows:

- The memory is the same for all processors. For example, all the processors associated with the same data structure will work with the same logical memory addresses, thus accessing the same memory locations.

- Synchronization is obtained by controlling the access of the various processors to the shared memory. In fact, only one processor at a time can access a given memory resource.

- A shared memory location must not be changed by a task while another task accesses it.

- Sharing data between tasks is fast. The time required to communicate is the time that one of them takes to read a single location (depending on the speed of memory access).
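
As a minimal sketch of these features, the following example uses Python threads, which share one address space, as a stand-in for tasks on a shared memory system. The `SharedCounter` class and the `run` helper are hypothetical names introduced here. The lock enforces the rule that a location must not be changed by one task while another accesses it.

```python
import threading


class SharedCounter:
    """A single shared memory location guarded by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Only one task at a time may enter this block.
        with self._lock:
            self.value += 1


def run(num_tasks=4, increments=10_000):
    shared = SharedCounter()   # every task sees this same object

    def task():
        for _ in range(increments):
            shared.increment()

    threads = [threading.Thread(target=task) for _ in range(num_tasks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared.value


print(run())  # 40000
```

Because all tasks work on the same object, no data is copied between them. Communication costs only the memory access itself, while the lock provides the synchronization.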

Memory access in shared memory systems can be classified as follows:

- Uniform Memory Access (UMA): The fundamental characteristic of this system is that the memory access time is constant for each processor and for any area of memory. For this reason, these systems are also called Symmetric Multiprocessors (SMPs). They are relatively simple to implement, but not very scalable. The programmer is responsible for managing synchronization by inserting appropriate controls, such as semaphores and locks, in the program that manages the resources.

- Non-Uniform Memory Access (NUMA): These architectures divide the memory into a high-speed access area that is assigned to each processor and a common area for data exchange, with slower access. These systems are also called Distributed Shared Memory (DSM) systems. They are very scalable, but complex to develop.

- No Remote Memory Access (NoRMA): The memory is physically distributed among the processors (local memory). All local memories are private and can only be accessed by the local processor. The processors communicate through a protocol for exchanging messages, known as the message-passing protocol.

- Cache-Only Memory Architecture (COMA): These systems are equipped with only cache memories. While analyzing NUMA architectures, it was noticed that they kept local copies of the data in the caches and that this data was also stored as duplicates in the main memory. COMA removes the duplicates and keeps only the cache memories; the memory is physically distributed among the processors (local memory). All local memories are private and can only be accessed by the local processor. The communication between the processors is also through the message-passing protocol.
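
The message-passing style mentioned for the last two categories can be sketched as follows. This is an analogy under stated assumptions, not a real distributed system: Python threads technically share memory, so the sketch mimics private local memories by convention only. The `node` and `distributed_sum` names are hypothetical, and a `queue.Queue` stands in for the communication channel.

```python
import queue
import threading


def node(local_data, outbox):
    """One processor: works only on its private data, then sends a message."""
    partial = sum(local_data)   # touches local memory only
    outbox.put(partial)         # the only way to communicate is a message


def distributed_sum(chunks):
    inbox = queue.Queue()       # channel carrying messages to the collector
    nodes = [threading.Thread(target=node, args=(chunk, inbox))
             for chunk in chunks]
    for n in nodes:
        n.start()
    # Receive exactly one message per node and combine the results.
    total = sum(inbox.get() for _ in chunks)
    for n in nodes:
        n.join()
    return total


print(distributed_sum([[1, 2, 3], [4, 5], [6]]))  # 21
```

No node reads another node's data directly; the result is assembled purely from the messages received. That is the essential difference from the lock-based shared memory style.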