In the previous chapter, the TurtleBot 2 robot was described as a two-wheeled differential drive robot developed by Willow Garage. The setup of the TurtleBot 2 hardware, netbook, network system, and remote computer was explained so that users could set up and operate their own TurtleBot. Then, the TurtleBot 2 was driven around using keyboard control, command-line control, and a Python script. TurtleBot 3 was also introduced and driven around using keyboard control.

In this chapter, we will expand TurtleBot's capabilities by giving the robot vision. The chapter begins by describing 3D vision systems and how they are used to map obstacles within the camera's field of view. The four types of 3D sensors typically used for TurtleBot are shown and described, detailing their specifications. A 2D vision system is also introduced for TurtleBot 3.

Setting up the 3D sensor for use on TurtleBot 2 is described and the configuration is tested in a standalone mode. To visualize the sensor data coming from TurtleBot 2, two ROS tools are utilized: Image Viewer and rviz. Then, an important aspect of TurtleBot is described and realized: navigation. TurtleBot will be driven around and the vision system will be used to build a map of the environment. The map is loaded into rviz and used to give the user point and click control of TurtleBot so that it can autonomously navigate to a location selected on the map. Two additional navigation methods will be shown: driving TurtleBot to a location without a map and driving with a map and a Python script. The autonomous navigation ability using rviz is also shown for TurtleBot 3.

In this chapter, you will learn the following topics:

- How 3D vision sensors work

- The difference between the four primary 3D sensors for TurtleBot

- Details on a 2D vision system for TurtleBot 3

- Information on TurtleBot environmental variables and the ROS software required for the sensors

- ROS tools for the rgb and depth camera output

- How to use TurtleBot to map a room using Simultaneous Localization and Mapping (SLAM)

- How to operate TurtleBot in autonomous navigation mode using Adaptive Monte Carlo Localization (AMCL)

- How to navigate TurtleBot to a location without a map

- How to navigate TurtleBot to waypoints with a Python script and a map

TurtleBot's capability is greatly enhanced by the addition of a 3D vision sensor. The function of 3D sensors is to map the environment around the robot by discovering nearby objects that are either stationary or moving. The mapping function must be accomplished in real time so that the robot can move around the environment, evaluate its path choices, and avoid obstacles. For autonomous vehicles, such as Waymo's self-driving cars, 3D mapping is accomplished by a high-cost LIDAR system that illuminates its environment with laser light and analyzes the reflected light. For our TurtleBot, we will present a number of low-cost but effective options. The standard 3D sensors for TurtleBot include Kinect sensors, ASUS Xtion sensors, Carmine sensors, and Intel RealSense sensors. TurtleBot 3 navigates using a low-cost 2D laser distance sensor (LDS), the Hitachi-LG LDS.

The 3D vision systems that we describe for TurtleBot share a common infrared (IR) technology for sensing depth. This technology was developed by PrimeSense, an Israeli 3D sensing company, and was originally licensed to Microsoft in 2010 for the Kinect motion sensor used in the Xbox 360 gaming system. The depth camera uses an IR projector to transmit beams that are reflected back to a monochrome Complementary Metal–Oxide–Semiconductor (CMOS) sensor that continuously captures image data. This data is converted into depth information, indicating the distance that each IR beam has traveled. The x, y, and z distances are captured for each measured point, relative to the sensor's reference frame.
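To make the conversion from depth data to x, y, and z distances more concrete, the following is a minimal sketch of back-projecting one depth pixel into the sensor frame using a simple pinhole camera model. It is not the algorithm used inside any of the sensors or their drivers. The image size (640 x 480), the field of view (57 x 43 degrees), the axis convention (x right, y down, z forward along the optical axis), and the assumption that the reported depth is the z distance are all illustrative choices, and the function names are hypothetical:

```python
import math


def focal_length_px(image_size_px, fov_deg):
    """Pinhole focal length in pixels for one image dimension and its field of view."""
    return (image_size_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)


def depth_pixel_to_point(u, v, depth_m, width=640, height=480,
                         hfov_deg=57.0, vfov_deg=43.0):
    """Back-project pixel (u, v) with depth depth_m into (x, y, z) in meters.

    Assumed convention: x points right, y points down, z points forward
    along the optical axis, and depth_m is the z distance.
    """
    fx = focal_length_px(width, hfov_deg)
    fy = focal_length_px(height, vfov_deg)
    cx, cy = width / 2.0, height / 2.0  # assume the optical axis hits the image center
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


if __name__ == "__main__":
    # A point seen at the image center lies on the optical axis.
    print(depth_pixel_to_point(320, 240, 2.0))
    # A point at the right edge of the image is offset along x.
    print(depth_pixel_to_point(640, 240, 2.0))
```

Repeating this calculation for every pixel in a depth image yields a set of 3D points in the sensor frame. A real driver would use calibrated camera parameters rather than values derived from the nominal field of view.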

Note

For a quick explanation of how 3D sensors work, the video How the Kinect Depth Sensor Works in 2 Minutes is worth watching at https://www.youtube.com/watch?v=uq9SEJxZiUg.

This 3D sensor technology is primarily intended for indoor use and does not typically work well outdoors. Infrared light from the sun degrades the quality of readings from the depth camera. Objects that are shiny or curved also present a challenge for the depth camera.

Currently, many 3D vision sensors have been integrated with ROS and TurtleBot. Microsoft Kinect, ASUS Xtion, PrimeSense Carmine, and Intel RealSense have all been integrated with camera drivers that provide a ROS interface used for TurtleBot 2. The Intel RealSense sensors are also used with the TurtleBot 3 Waffle version, but navigation is performed using the (2D) LDS on both the Burger and Waffle versions of TurtleBot 3. The ROS packages that handle the processing for these sensors will be described in an upcoming section, but first, a comparison of these products is provided.

Kinect was developed by Microsoft as a motion sensing device for video games, but it works well as a mapping tool for TurtleBot. Kinect is equipped with an rgb camera, a depth camera, an array of microphones, and a tilt motor.

The rgb camera acquires 2D color images in the same way that smartphones or webcams acquire color video images. The Kinect microphones can be used to capture sound data and a three-axis accelerometer can be used to find the orientation of the Kinect. These features hold the promise of exciting applications for TurtleBot, but this book will not delve into the use of these Kinect sensor capabilities.

Kinect is connected to the TurtleBot netbook through a USB 2.0 port (USB 3.0 for Kinect v2). Software development on Kinect can be done using the Kinect Software Development Kit (SDK), freenect, and Open Source Computer Vision (OpenCV). The Kinect SDK was created by Microsoft to develop Kinect apps, but unfortunately, it only runs on Windows. OpenCV is an open source library of hundreds of computer vision algorithms that provides support for 2D image processing. 3D depth sensors, such as the Kinect, ASUS, PrimeSense, and RealSense sensors, are supported in the VideoCapture class of OpenCV. Freenect packages and libraries are open source ROS software that provides support for Microsoft Kinect. More details on freenect will be provided in an upcoming section titled Configuring TurtleBot and installing 3D sensor software.

Microsoft has developed three versions of Kinect to date: Kinect for Xbox 360, Kinect for Xbox One, and Kinect for Windows v2. The following figure presents images of the variations and the subsequent table shows their specifications:

Microsoft Kinect versions

Microsoft Kinect version specifications:

| Spec | Kinect 360 | Kinect One | Kinect for Windows v2 |
|---|---|---|---|
| Release date | November 2010 | November 2013 | July 2014 |
| Horizontal field of view (degrees) | 57 | 57 | 70 |
| Vertical field of view (degrees) | 43 | 43 | 60 |
| Color camera data | 640 x 480 32-bit @ 30 fps | 640 x 480 @ 30 fps | 1920 x 1080 @ 30 fps |
| Depth camera data | 320 x 240 16-bit @ 30 fps | 320 x 240 @ 30 fps | 512 x 424 @ 30 fps |
| Depth range (meters) | 1.2–3.5 | 0.5–4.5 | 0.5–4.5 |
| Audio | 16-bit @ 16 kHz | 4 microphones | |
| Dimensions | 28 x 6.5 x 6.5 cm | 25 x 6.5 x 6.5 cm | 25 x 6.5 x 7.5 cm |
| Additional information | Motorized tilt base range ± 27 degrees; USB 2.0 | Manual tilt base; USB 2.0 | No tilt base; USB 3.0 only |

All versions require external power.

fps: frames per second
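The field of view figures in the table determine how wide a strip of the environment the sensor can see at a given distance. As a rough illustration, the sketch below computes that width from simple trigonometry, assuming an ideal pinhole camera with a symmetric field of view. The function name and the dictionary are hypothetical and only restate two columns of the table:

```python
import math

# Horizontal field of view in degrees, taken from the table above.
HORIZONTAL_FOV_DEG = {
    "Kinect 360": 57.0,
    "Kinect for Windows v2": 70.0,
}


def coverage_width_m(distance_m, fov_deg):
    """Width of the visible strip at distance_m for a symmetric field of view."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


if __name__ == "__main__":
    for name, fov in HORIZONTAL_FOV_DEG.items():
        width = coverage_width_m(2.0, fov)
        print(f"{name}: about {width:.2f} m wide at 2 m")
```

Under these assumptions, the wider field of view of the Kinect for Windows v2 covers a noticeably wider strip at the same distance, which means fewer obstacles are missed at the edges of the robot's path.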

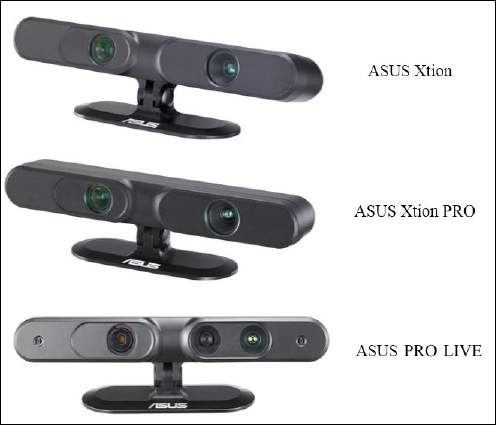

ASUS Xtion, Xtion PRO, PRO LIVE, and the recently released Xtion 2 are also 3D vision sensors designed for motion sensing applications. The technology is similar to the Kinect, using an IR projector and a monochrome CMOS receptor to capture the depth information.

The ASUS sensor is connected to the TurtleBot netbook through a USB 2.0 port (or USB 3.0 for the Xtion 2) and no other external power is required. Applications for the ASUS Xtion sensors can be developed using the ASUS development solution software, OpenNI2, and OpenCV. OpenNI2 packages and libraries are open source software that provides support for ASUS and PrimeSense 3D sensors. More details on OpenNI2 will be provided in the following section, Configuring TurtleBot and installing 3D sensor software.

The following figure presents images of the ASUS sensor variations and the subsequent table shows their specifications:

ASUS Xtion and PRO versions

ASUS Xtion and PRO version specifications:

| Spec | Xtion | Xtion PRO | Xtion PRO LIVE | Xtion 2 |
|---|---|---|---|---|
| Horizontal field of view (degrees) | 58 | 58 | 58 | 74 |
| Vertical field of view (degrees) | 45 | 45 | 45 | 52 |
| Color camera data | none | none | 1280 x 1024 | 2592 x 1944 |
| Depth camera data | unspecified | 640 x 480 @ 30 fps; 320 x 240 @ 60 fps | 640 x 480 @ 30 fps; 320 x 240 @ 60 fps | 640 x 480 @ 30 fps; 320 x 240 @ 60 fps |
| Depth range (meters) | 0.8–3.5 | 0.8–3.5 | 0.8–3.5 | 0.8–3.5 |
| Audio | none | none | 2 microphones | none |
| Dimensions (cm) | 18 x 3.5 x 5 | 18 x 3.5 x 5 | 18 x 3.5 x 5 | 11 x 3.5 x 3.5 |
| Additional information | USB 2.0 | USB 2.0 | USB 2.0/3.0 | USB 3.0 |

No additional power is required for any version; all are powered through USB.

PrimeSense was the original developer of the 3D vision sensing technology using near-infrared light. They also developed the NiTE software that allows developers to analyze people, track their motions, and develop user interfaces based on gesture control. PrimeSense offered its own sensors, Carmine 1.08 and 1.09, to the market before the company was bought by Apple in November 2013. The Carmine sensor is shown in the following image. ROS OpenNI2 packages and libraries also support the PrimeSense Carmine sensors. More details on OpenNI2 will be provided in the upcoming section titled Configuring TurtleBot and installing 3D sensor software:

PrimeSense Carmine

PrimeSense has two versions of the Carmine sensor: 1.08 and the short range 1.09. The preceding image shows how the sensors look and the subsequent table shows their specifications:

| Spec | Carmine 1.08 | Carmine 1.09 |
|---|---|---|
| Horizontal field of view (degrees) | 57.5 | 57.5 |
| Vertical field of view (degrees) | 45 | 45 |
| Color camera data | 640 x 480 @ 30 Hz | 640 x 480 @ 60 Hz |
| Depth camera data | 640 x 480 @ 60 Hz | 640 x 480 @ 60 Hz |
| Depth range (meters) | 0.8–3.5 | 0.3–1.4 |
| Audio | Two microphones | Two microphones |
| Dimensions | 18 x 2.5 x 3.5 cm | 18 x 3.5 x 5 cm |
| Additional information | USB 2.0/3.0 | USB 2.0/3.0 |

No additional power is required for either version; both are powered through USB.

Intel RealSense technology was developed to integrate gesture tracking, facial analysis, speech recognition, background segmentation, 3D scanning, augmented reality, and many more applications into an individual's personal computer experience. For TurtleBot, the RealSense series of 3D cameras can be combined with powerful and adaptable machine perception software to give TurtleBot the capability to navigate on its own. Given that these cameras are powered by a USB 3.0 interface, connecting to the TurtleBot's netbook or SBC is straightforward. Any of these cameras will provide color, depth, and IR video streams for navigation.

In the following paragraphs, we will introduce and describe the latest Intel RealSense series cameras: R200, SR300, and ZR300. Intel's Euclid Development Kit integrates the RealSense camera technology with a powerful computer to provide a compact all-in-one imaging system. The Euclid will also be introduced and described in a subsequent paragraph.

Intel RealSense Camera R200: It is a long range, stereo vision 3D imaging system. The R200 has two active IR cameras positioned on the left and right of an IR laser projector. These two cameras provide the ability to implement stereo vision algorithms to calculate depth. Images detected by these cameras are sent to the R200 application-specific integrated circuit (ASIC). The ASIC is custom designed to calculate the depth value for each pixel in the image. The IR laser projector is a class-1 laser device that emits additional illumination to texture a scene for better stereo vision performance. The R200 also has a full HD color imaging sensor for color vision data. The R200 has the added advantage of outdoor use because of the IR projection system. Adjustments for a fully sunlit environment might be necessary. The product datasheet for the R200 can be found at the website: https://software.intel.com/sites/default/files/managed/d7/a9/realsense-camera-r200-product-datasheet.pdf.
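The stereo vision principle behind the R200 can be illustrated with the standard triangulation relation for a rectified camera pair, Z = f * B / d, where f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity between the left and right images. The sketch below is only an illustration of that relation and does not describe what the R200 ASIC actually computes. The focal length (600 pixels) and baseline (0.07 m) are assumed values chosen for the example, not figures from the datasheet:

```python
def stereo_depth_m(disparity_px, focal_length_px, baseline_m):
    """Depth from disparity for an ideal rectified stereo pair.

    Returns None when the disparity is zero or negative, which means no
    valid match was found or the point is effectively at infinity.
    """
    if disparity_px <= 0:
        return None
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    FOCAL_LENGTH_PX = 600.0  # assumed value for illustration only
    BASELINE_M = 0.07        # assumed value for illustration only
    for disparity in (10.0, 21.0, 42.0, 84.0):
        depth = stereo_depth_m(disparity, FOCAL_LENGTH_PX, BASELINE_M)
        print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

Because depth is inversely proportional to disparity, nearby objects produce large disparities and are measured with finer resolution than distant ones. This is also why projecting an IR texture onto the scene helps: it gives the matching step more features from which to measure disparity.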

Intel RealSense Camera SR300: It's a short range, coded light 3D imaging system that is optimized for background segmentation and facial tracking applications. The SR300 is the second-generation improvement over the Intel RealSense F200 camera in depth range and higher quality depth data. The F200 and SR300 imaging systems use embedded coded light IR for depth measurement and full HD color for imaging. The SR300 camera contains an IR laser projector system and a fast video graphics array (VGA) IR sensor that work together using a coded light pattern projected on a 2D array of monochromatic pixel values. This coded light method provides depth imaging with reduced exposure time allowing for faster dynamic motion capture. This technology has two significant drawbacks:

- Multiple SR300 (and F200) cameras used for the same scene degrade the cameras' performance.

- The camera does not work well in outdoor environments due to ambient IR.

The camera can produce independent color, depth, and IR video streams or synchronize these video data streams for a client computer system. The SR300 product datasheet can be found at: https://software.intel.com/sites/default/files/managed/0c/ec/realsense-sr300-product-datasheet-rev-1-0.pdf.

Intel RealSense Camera ZR300: This variant is a mid-range, stereo vision 3D imaging system similar to the R200. The ZR300 stereo vision is implemented with a left IR camera, a right IR camera, and an IR laser projector. The image data is received from the cameras on the ZR300 ASIC, which calculates the depth values for each pixel in the image. The ZR300 also incorporates a six-degree of freedom IMU and a fisheye optical sensor. The ZR300 image streams have timestamp synchronization to a 50µs reference clock. The ZR300 product datasheet can be found at: https://www.intel.com/content/dam/support/us/en/documents/emerging-technologies/intel-realsense-technology/ZR300-Product-Datasheet-Public.pdf.

Intel Euclid Development Kit: The Euclid combines a ZR300 camera with a full-featured computer in a package the size of a candy bar. The computer is an Intel Atom x7-Z8700 quad-core processor with 4 GB of memory and 32 GB of storage. It comes pre-installed with Ubuntu 16.04 and ROS Kinetic Kame. The Euclid has both Wi-Fi and Bluetooth communication, along with USB 3.0, Micro HDMI, and USB OTG/charging ports. Sensors within the Euclid include an IMU, barometric pressure sensor, GPS, and proximity sensor. It can be powered by its own lithium polymer battery pack or by external power. The Euclid comes ready to use out of the box, and the developer uses a web interface from a phone or computer to run, monitor, and manage the application. Many more details on the Euclid Development Kit are available at the following websites:

- Euclid Development Kit Datasheet: https://click.intel.com/media/productid2100_10052017/335926-001_public.pdf

- Euclid Development Kit User Guide: https://click.intel.com/media/productid2100_10052017/euclid_user_guide_2-15-17_final3d.pdf

- Euclid Operating Guide: https://click.intel.com/media/productid2100_10052017/euclid-operating-guide-final.pdf

Software development tools for the RealSense cameras are available with any of the following software packages:

- The Intel RealSense SDK for Windows was created to encourage users to develop natural, immersive, and intuitive interactive applications with the 3D cameras. Each of the cameras requires Depth Camera Manager (DCM) software specific for that camera to be downloaded and installed for use with the SDK. This SDK makes it easy to incorporate human-computer interaction capabilities such as facial recognition, hand gesture recognition, user background segmentation/removal, 3D scanning, and more features into your apps. Unfortunately, Intel has decided to discontinue support of the SDK for Windows. The librealsense API (described next) is the recommended alternative software for developers. Information on the SDK for Windows is available at: https://software.intel.com/en-us/intel-realsense-sdk.

- The librealsense API was developed by Intel as an open-source, cross-platform (Linux, OS X, and Windows) driver for streaming video data from the RealSense cameras. It provides image streams for color, depth, and IR, and also produces rectified and registered image streams. Librealsense also supports multi-camera capture from single or multiple RealSense models simultaneously. This API has been initiated to support developers in the areas of robotics, virtual reality, and the internet of things. The GitHub repository is at https://github.com/IntelRealSense/librealsense.

- The Intel RealSense SDK for Linux is a collection of software libraries, tools, and samples utilizing the librealsense API. The SDK libraries provide the ability to correlate color and depth images, create point clouds, and apply other advanced image processing. Tools and samples are provided to demonstrate the usage of the SDK libraries. See https://software.intel.com/sites/products/realsense/sdk/.

- OpenCV software can also be used with the image streams produced by the RealSense cameras.

- ROS RealSense packages rely on the librealsense API described previously. The librealsense package is used by the ROS realsense_camera package, which creates the camera node for publishing streams of color, depth, and IR data. See http://wiki.ros.org/RealSense.

More details on ROS RealSense software are provided in the upcoming section titled Configuring TurtleBot and installing 3D sensor software.

The Intel RealSense cameras currently considered for integrating with TurtleBot are the R200, SR300, ZR300, and the Euclid Development Kit as shown in the following figure. Specifications for the R200, SR300, and ZR300 cameras are shown in the subsequent table:

Intel RealSense cameras and the development kit

Intel RealSense camera specifications:

| Spec | R200 | SR300 | ZR300 |
|---|---|---|---|
| Release date | December 2015 | July 2016 | January 2017 |
| Horizontal field of view (degrees) | 70 | 71.5 | 68 |
| Vertical field of view (degrees) | 43 | 55 | 41.5 |
| Color camera data | 1920 x 1080 @ 30 fps | 1920 x 1080 @ 30 fps | 1920 x 1080 |
| Depth camera data | 640 x 480 @ 60 fps | 640 x 480 @ 60 fps | 640 x 480 |
| IR camera data | 640 x 480 @ 60 fps | 640 x 480 @ 200 fps | 640 x 480 |
| Depth range (meters) | 0.4–3.5 | 0.2–1.5 | 0.55–2.8 |
| Audio | None | Dual-array microphones | None |
| Dimensions | 10.2 x 1.0 x 0.4 cm | 11 x 1.3 x 0.4 cm | 15.5 x 3.2 x 0.9 cm |
| Additional information | Outdoor depth range up to 10 meters; USB 3.0 only | USB 3.0 only | Includes fisheye camera (640 x 480) and IMU; USB 3.0 only |

No additional power is required for any of these cameras; all are powered through USB.

The TurtleBot 3 LDS rotates continuously through 360 degrees to collect 2D distance data that is transmitted to the SBC. The hardware configurations are described in the Introducing TurtleBot 3 section in Chapter 3, Driving Around with TurtleBot. The distance data is used for obstacle detection, SLAM mapping, and navigation. The LDS uses a Class 1 laser with a semiconductor laser diode light source. Basic specifications for the device can be found at http://wiki.ros.org/hls_lfcd_lds_driver?action=AttachFile&do=view&target=LDS_Basic_Specification.pdf.

The software driver is contained in the ROS package hls_lfcd_lds_driver. The LDS starts operating as part of the basic TurtleBot 3 operation described in the Using keyboard teleoperation to move TurtleBot 3 section in Chapter 3, Driving Around with TurtleBot. The following figure shows a side view and top view of the LDS and the subsequent table shows its specifications:

Hitachi-LG LDS

LDS specifications:

| Spec | LDS |
|---|---|
| Distance range (meters) | 0.120–3.5 |
| Angular range (degrees) | 360 |
| Angular resolution (degrees) | 1 |
| Distance accuracy, 0.120–0.499 m | ± 0.015 m |
| Distance accuracy, 0.500–3.500 m | ± 5.0% |
| Distance precision, 0.120–0.499 m | ± 0.010 m |
| Distance precision, 0.500–3.500 m | ± 3.5% |
| Scan rate (rpm) | 300 ± 10 |
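To show how these specifications translate into usable data, the following sketch converts one revolution of distance readings into 2D points and looks up the accuracy band for a given distance. It is a plain Python illustration and does not use the LDS driver or any ROS API. The function names are hypothetical, and the code assumes that reading 0 points straight ahead, that angles increase counterclockwise in 1 degree steps, and that readings below 0.5 meters fall into the first accuracy band:

```python
import math

# Values taken from the LDS specification table.
LDS_MIN_RANGE_M = 0.120
LDS_MAX_RANGE_M = 3.5
LDS_ANGLE_STEP_DEG = 1.0


def scan_to_points(ranges_m):
    """Convert a list of ranges (one per degree) into (x, y) points in meters.

    Readings outside the valid distance range, including inf and NaN,
    are dropped. Assumed frame: x forward, y to the left of the sensor.
    """
    points = []
    for index, distance in enumerate(ranges_m):
        if not (LDS_MIN_RANGE_M <= distance <= LDS_MAX_RANGE_M):
            continue
        theta = math.radians(index * LDS_ANGLE_STEP_DEG)
        points.append((distance * math.cos(theta), distance * math.sin(theta)))
    return points


def accuracy_tolerance_m(distance_m):
    """Accuracy tolerance in meters for a reading, or None if out of range."""
    if not (LDS_MIN_RANGE_M <= distance_m <= LDS_MAX_RANGE_M):
        return None
    if distance_m < 0.5:
        return 0.015            # fixed tolerance for the near band
    return 0.05 * distance_m    # 5.0% of the reading for the far band


if __name__ == "__main__":
    # One obstacle 1 m ahead and one 2 m to the left; everything else out of range.
    scan = [float("inf")] * 360
    scan[0] = 1.0
    scan[90] = 2.0
    for x, y in scan_to_points(scan):
        print(f"obstacle at x={x:.2f} m, y={y:.2f} m")
```

At the nominal scan rate of 300 rpm, the sensor completes five revolutions per second, so with a 1 degree angular resolution a full set of 360 readings like this one arrives about five times per second.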

TurtleBot uses 2D and 3D sensing for autonomous navigation and obstacle avoidance, as described later in this chapter. Other applications of these 3D sensors include 3D motion capture, skeleton tracking, face recognition, and voice recognition.

There are a few drawbacks that you need to know about when using the infrared 3D sensor technology for obstacle avoidance. These sensors have a narrow imaging area, typically about 58 degrees horizontal and 43 degrees vertical, although the imaging areas of the Kinect for Windows v2 and Xtion 2 are slightly larger. These sensors also cannot detect anything within the first 0.5 meters (~20 inches). Highly reflective surfaces, such as metals, glass, or mirrors, cannot be detected by the 3D vision sensors.
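These geometric limits can be expressed as a simple visibility check. The sketch below tests whether a point in the sensor frame falls inside the typical imaging area and beyond the minimum range. It is only an approximation for reasoning about blind spots. The function name, the axis convention (x right, y down, z forward), and the 3.5 meter maximum range are assumptions, and the check cannot account for reflective surfaces:

```python
import math


def is_detectable(x, y, z, hfov_deg=58.0, vfov_deg=43.0,
                  min_range_m=0.5, max_range_m=3.5):
    """Return True if point (x, y, z) in meters lies inside the sensing volume.

    Assumed convention: x right, y down, z forward along the optical axis.
    """
    if not (min_range_m <= z <= max_range_m):
        return False  # too close, too far, or behind the sensor
    horizontal_angle = math.degrees(math.atan2(x, z))
    vertical_angle = math.degrees(math.atan2(y, z))
    return (abs(horizontal_angle) <= hfov_deg / 2.0
            and abs(vertical_angle) <= vfov_deg / 2.0)


if __name__ == "__main__":
    print(is_detectable(0.0, 0.0, 1.0))   # directly ahead at 1 m
    print(is_detectable(0.0, 0.0, 0.3))   # inside the 0.5 m blind zone
    print(is_detectable(1.0, 0.0, 1.0))   # 45 degrees off axis, outside the view
```

A check like this makes it clear why an obstacle that is close to the robot, or off to one side, can go unseen even though it is well within the sensor's nominal depth range.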