3

Reliability Modeling for Systems Engineers: Nonmaintained Systems

3.1 WHAT TO EXPECT FROM THIS CHAPTER

It is not the purpose of this book to support your becoming a reliability engineering specialist. As a systems engineer, though, you will be interacting with these specialists both as a supplier and as a customer. You will be supplying reliability requirements that specialist engineers will use to guide their design for reliability work. You will be a customer for information flowing back from reliability engineering specialists regarding how well a design, in its current state, is likely to meet those reliability requirements and whether deployed systems are meeting their reliability requirements. The purpose of this chapter, then, is primarily to support your supplier and customer roles in these interactions. You will need enough facility with the language and concepts of reliability engineering that you will create sensible reliability requirements. Much of this was covered in Chapter 2, and the material covered in this chapter supports and amplifies the concepts introduced there. You will also need enough of this facility to be able to sensibly use the information provided by specialist reliability engineers so that design may be properly guided.

The material in this chapter is designed to support this latter need. What you will find here is chosen so that it reinforces correct use of the concepts and language of reliability modeling for nonmaintained systems.1 It is complete enough that it covers almost all situations you will normally encounter, and if you learn this well you will be able to adapt it to unusual situations as well. While everything here is precise and in a useful order, no attempt is made to provide mathematical rigor with theorems and proofs even though there is a flourishing mathematical theory of reliability [3, 4] that underpins these ideas. If you wish to follow these developments further, many additional references are provided.

3.2 INTRODUCTION

The industrial, medical, and military systems prevalent today are usually very complex and closely coupled, and expensive and time-consuming to develop. For transparent economic and schedule reasons, it is not even remotely realistic to test such systems for reliability. Indeed, to do so would be to fly in the face of the guiding principle of contemporary systems engineering for the sustainability disciplines: design the system from the earliest stages of its development to incorporate features that promote reliable, maintainable, and supportable operation. In short, in preference to a costly and lengthy testing program, or, worse, design scrap-and-rework, take those actions during systems engineering and design that lead to a sustainable, profitable system.

Accepting, then, that testing a complicated system for reliability is not sensible, what can systems engineers and reliability engineers do to ensure that a system meets the reliability needs of its customers? In this book, we advocate strongly for the discipline of design for reliability, the discipline that encompasses actions that are taken during systems engineering and design to anticipate and prevent failures. Design for reliability is discussed from this point of view in Chapter 6 where we introduce specific methods such as fault tree analysis (FTA), failure modes, effects, and criticality analysis (FMECA), and others that provide systematic, repeatable techniques that are widely applicable and very effective in anticipating the failures that are possible in the system and deploying suitable countermeasures that prevent those failures from occurring. An important part of the design for reliability process is the ability to project or forecast in quantitative terms the reliability one can expect of the system given the current state of the design. A discipline called reliability modeling has been developed to enable these sorts of quantitative projections to be made, even before any of the system may be built (or even prototyped).

Reliability modeling is based on the observation that while the systems we deal with are complex and closely coupled, usually they are made up of a large number of simpler components. Reliability modeling is a process of combining, in suitable mathematical fashion, quantitative information about the reliability of individual components to produce information about the reliability of the complex assembly of those components that is the system in question. It is usually possible to obtain information about the reliability of these simpler components from life testing, fundamental physical principles, and real field experience. Life testing of components is possible because it deals with only one (population of identical) component at a time; complicated interactions with other components are not present, and varying environmental conditions can be applied to characterize the component’s reliability in different environments likely to be encountered in operation [16, 62]. Estimation of component reliability from fundamental physical principles is possible in some cases because the physical, chemical, mechanical, and/or electrical mechanisms causing degradation of the component have been identified in many practical classes of components [10, 24]. Component reliability may also be estimated from real operational experience with systems that contain the component provided that the failure that caused a system to be taken out of service for repair can be traced to that specific component [7, 55] (see also Section 5.6). This chapter is devoted to helping you gain an understanding of reliability modeling for nonmaintained systems so that you are equipped to assess whether your reliability requirements are likely to be met as part of an ongoing process throughout the design and development of the system. Reliability models for nonmaintained systems introduced in this chapter form building blocks for the reliability models for maintained systems discussed in Chapter 4.

However, all the reliability modeling you can afford is of little value unless you use what you learned from it to do one (or both) of two things:

- Improve the reliability of the system if modeling shows that the system in its current configuration is unlikely to meet its reliability requirements.

- Determine that the reliability requirements originally proposed are too restrictive and may be loosened, possibly creating an opportunity for development cost savings.

Chapter 5 discusses comparison of what is learned from reliability modeling (usually called a “reliability prediction”) with the relevant reliability requirement(s). To improve the reliability of the system, additional design for reliability actions must be undertaken or the design for reliability actions already undertaken should be re-examined at greater depth (Chapter 6). The alternative is to decide that the original reliability requirements were more restrictive than they needed to be—but this decision can’t really be made without thorough re-examination of the process by which they were created (QFD, House of Quality, Kano analysis, etc., introduced in Section 1.6.1). Without this response, reliability modeling has little value.

Finally, most systems are intended to be repaired when they fail, and by the repair to be restored to service. There are obvious exceptions, of course (viz., satellites, although the example of the Hubble Space Telescope shows that when the stakes are high enough, truly heroic measures will be undertaken to repair even some systems that are traditionally designated as non-repairable). Many reliability effectiveness criteria are appropriate for describing the frequency and duration of failures of a maintainable system (see Section 4.3). The system maintenance concept (see Chapter 10) tells how the system will be restored to service when it fails, and which part(s) of the system are designated as repaired and which parts are not repaired. A reliability model for the system mirrors the system maintenance plan: the model builds up reliability descriptions of the maintained parts of the system from reliability descriptions of their constituent components and subassemblies. All systems contain some components that are not maintainable in the sense that if a system failure is traceable to one such nonmaintainable component, repair of the system is effected by discarding the failed component and replacing it with another (usually new) one. Some systems also contain more complex subassemblies that may be removed and replaced in order to bring a system back to proper operation and that are sufficiently complex and expensive that the removed units are themselves repaired and used as spare parts for later system repairs. See Chapter 11 for more details on this type of operation. Accordingly, Chapters 3 and 4 are structured so that we learn about reliability effectiveness criteria and models for nonmaintainable components first and then we learn how these are combined to form reliability effectiveness criteria and models for the higher level entries in the system maintenance concept—the subassemblies, line-replaceable units, etc., on up to the system as a whole.

3.3 RELIABILITY EFFECTIVENESS CRITERIA AND FIGURES OF MERIT FOR NONMAINTAINED UNITS

3.3.1 Introduction

This section discusses the various ways we describe quantitatively the reliability of a nonmaintained component or system. An object that is not maintained is one that ceases operation permanently when it fails. No repair is performed and a failed nonmaintained component is usually discarded. An object that is not maintained may be a simple, unitary object like a resistor or a ball bearing (these are not repaired because it is physically impossible or economically unreasonable to repair them), or it may be a complicated object like a rocket or satellite (not repaired because they are destroyed when used or are impossible to access). Simple nonmaintained components usually form the constituents of a larger system that may be maintained or not. Most complex systems are maintained to some degree. For example, while failed hardware in a consumer router (for home networking) may not be repairable, the firmware in the router can be restored to its original factory configuration by pressing the reset button. We study reliability effectiveness criteria and figures of merit for nonmaintained items because

- reliability effectiveness criteria and figures of merit are used to describe mission success probabilities for systems that may be maintainable but cannot be maintained while in use (see Section 4.3.4) and

- reliability models for a maintained system are built up from simpler reliability models for the nonmaintained components making up the system.

By contrast, of course, an object that is maintained undergoes some procedure(s) to restore it to functioning operation when it fails; in this case, repeated failures of the same object are possible. The system maintenance concept will tell which part(s) of the system are nonmaintained and which are maintained and will give instructions for restoration of the system to functioning condition when it fails because of the failure of one of the nonmaintained parts of the system (or any other type of failure, for that matter).

Language tip: The concepts presented in Section 3.2 apply to any object that is not maintained, no matter how simple or complicated. We will use the language of “unit” or “component” to describe such objects even though the words “unit” or “component” seem to imply a single, unitary object like a resistor or ball bearing and do not seem to apply to complicated objects like satellites. Nonetheless, the reliability effectiveness criteria we shall describe in Section 3.2 pertain to all such objects, simple or complicated, provided they are, or when they are considered to be, nonmaintained.

Most real engineering systems are maintained: when they experience failure, they are repaired and put back into service. There are, of course, significant exceptions (most notably, satellites) for which repair is not possible at all,2 and other systems (such as undersea cable telecommunications systems) for which repair is possible but extremely expensive. All systems contain components that are not maintained but instead are replaced when they fail. The replaced component is discarded if it is not repairable, like a surface-mount inductor. Other replaced “components” are more elaborate subassemblies that may be repaired and placed into a spares inventory if it makes economic sense to do this. Reliability models that produce reliability effectiveness criteria for maintained systems are constructed from simpler models for the reliability of their nonmaintained constituent components and subassemblies, and it is these latter models that we study in this chapter.

This is a good time to explore the relationship between failures of parts or components and system failures. A system failure is any instance of not meeting some system requirement. As discussed in Chapter 2, not meeting a system requirement does not necessarily mean that the system has totally ceased operation. Many reliability models are constructed based on the belief that system failure is equivalent to total cessation of system operation. The reality is somewhat more complicated. Some system requirements pertain to performance characteristics like throughput, delay, tolerance, etc., that may be measured on a continuum scale. Instances of system operation where some performance characteristic falls outside the range specified in the requirement constitute system failures, even though the system may still be operating, perhaps with some reduced capability. Such failures are indeed within the scope of reliability modeling, and component failures may contribute to these events. This points up the importance of an effective system functional decomposition (Section 3.4.1) as a first step in creating a reliability model and a maintenance plan for each system failure mode. Obviously, any realistic system has too many failure modes for it to be feasible to create a reliability model for every one of them. Some method is required to decide which failure modes to focus attention on; an effective system reliability analysis requires this as a first step.

The key operational characteristic of a nonmaintained item is that when it fails, no attempt is made to repair it, and it is instead discarded (possibly recycled, but whatever disposition it may receive, it is not reused in the original system). The decision about whether any particular component should be considered maintained or nonmaintained is largely an economic one, and is closely connected with the maintenance concept for the system as a whole (see Chapter 10). The always-cited classic example of a nonmaintained unit is the incandescent light bulb (and now we will refer to anything nonmaintained as a unit; this may encompass individual components such as resistors, bearings, hoses, etc., or various assemblies, composed of several components, that are part of a larger system, or in some cases an entire system that is not maintained). When a light bulb burns out and ceases to produce light, it is discarded and the socket that contained it is filled with another, usually new, light bulb.

The repair-or-replace decision is part of the system maintenance concept. In addition to other factors such as accessibility, staff training, etc., which are covered extensively in Chapter 10, this decision has a large economic component. Consider, for example, that it is technically possible to repair a light bulb. Careful removal of the glass envelope from the base, reinstallation of a good filament, and resealing and re-evacuating the bulb are all operations that are easily within contemporary technical capabilities. However, this is never done because it would be a monumentally stupid thing to do from an economic point of view (note that, however, some kinds of expensive ceramic/metal high-power vacuum tubes are sometimes repaired by a process very much like that described here [31] ). At this time, raw materials for incandescent bulbs are cheap and plentiful, and the cost of manufacturing a new bulb is measured in pennies. The cost to carry out the repair operations cited would be orders of magnitude greater than the cost of producing a new bulb, and so today this is never done (except possibly for some signally important units like Edison’s original bulb which is kept running for historical purposes). There may come a time (and this will probably be an unhappy time) when these raw materials may be scarce and/or expensive, and the consequent increased cost of manufacturing a new bulb may change the discard versus repair equation. 3 But for now, in the decision to characterize a component, unit, assembly, or system as nonmaintained, economics plays a primary role. This reasoning should be very familiar to systems engineers.

Again, the key operational characteristic of a nonmaintained unit is that when it fails, it is discarded. Thus, it can suffer at most one failure. To describe this scenario quantitatively, it is useful to consider the time from start of operation of a new unit until the time the unit fails (assuming continuous, uninterrupted operation). This interval of time is called the lifetime of the unit. It can be reasonably represented by the upper case letter L (although this is not obligatory), and is most often thought of as a random variable.

Requirements tip: We have seen that a good reliability requirement must include a specification of the length of time over which the requirement is to apply. When writing these requirements, and undertaking modeling studies to support them, it is important to remember when operational time is intended and when calendar time is intended. Calendar time refers to elapsed time measured by an ordinary clock and is always greater than or equal to operational time, the period of time during which the object in question is in use. Some systems are intended to be used continuously (most web servers and telecommunications infrastructure equipment are of this nature) while other systems are used only intermittently (an automobile, for instance). Be aware of whether the system you are developing is intended to be used continuously or intermittently, and state reliability requirements accordingly. This matters because equipment is usually considered to be not aging (i.e., accumulating time to failure) when it is not operating.4 Usually, a model is required to relate operational time to calendar time so that users may anticipate their maintenance and replacement needs based on calendar time that is normally used for operations planning purposes. Some material on relating operational time to calendar time in the context of software products is found in Refs. 33, 46, 47.

3.3.2 The Life Distribution and the Survivor Function

3.3.2.1 Definition of the life distribution

Much discussion has taken place over the choice to model lifetimes as random variables. Suffice it to say that the most satisfactory explanation is that the factors influencing the lifetime of a unit are numerous, not all fully understood, and sometimes not controllable. In a sense, the choice to describe lifetimes as random is a cover for this (inescapable) ignorance [17, 61] . In some rare cases, it might be possible in principle to identify precisely the lifetime of a particular component. This would involve a deep understanding of the physical, chemical, mechanical, and thermodynamic factors at play in the operation of the component, as well as extremely precise measurements of the geometry, morphology, electrical characteristics, etc., of the component. Even if it were possible in principle to acquire such understanding, it would be prohibitively expensive in practice, and the knowledge obtained about the lifetime of a component A would not be transferrable to any knowledge about the lifetime of a component B from the same population because components A and B are not likely to be identical to the degree necessary to justify not having to perform all the same measurements on component B also. Clearly, this is an impossible situation.

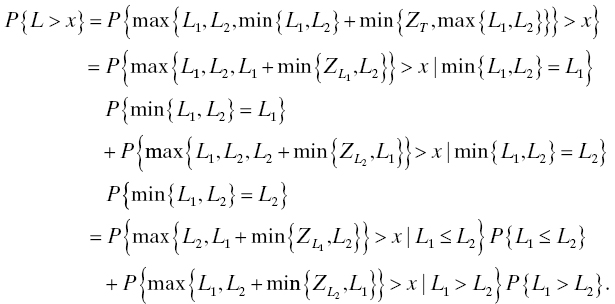

What we do instead is attempt to describe the distribution (in the probabilist’s sense) of the lifetimes of a population of “similar” components. For example, imagine a collection of 8 μF, 35-V tantalum electrolytic capacitors in an epoxy-sealed package manufactured by Company C during July 2011. Assuming the manufacturing process at Company C did not change during July 2011, we may reasonably assume that these are “similar” components for the purposes of calling them a “population” in the sense that a statistician would do. Every member of the population has a (different) lifetime that, under specified operating conditions, is fixed but unknown. The difference in lifetimes may be explainable by differences in raw materials, manufacturing process controls, varying environmental conditions in the factory, etc. Instead of trying to ascertain the lifetime of each individual in a deterministic fashion, what we do instead is consider populations of similar components and assign a distribution of the lifetimes (under specified operating conditions) in each population. A distribution of lifetimes for a population is called the life distribution for that population. The life distribution is a cumulative distribution function (“cdf”), in the sense that it is used in probability theory, and is often (though this is not obligatory) denoted by the upper case letter F (or sometimes FL if it is necessary to explicitly call out the pertinent lifetime random variable). Thus, denoting by L the lifetime of a component drawn at random from the population,

Here, x is a variable that is at your disposal (we will call this a discretionary variable). You specify a value of x and the life distribution value at that x is the probability that a unit chosen at random from that population has a lifetime no greater than x, or, in other words, fails at or before time x. For instance, suppose a population of components has a life distribution given by F(x) = 1 − exp(−x/1000) for x ≥ 0 measured in hours. Then the probability that a component chosen at random from that population fails at or before 1 year is F(8766) = 1 − exp(−8.766) = 0.999844 which is almost certainty. We will return to this example later to explore some of the other things it has to teach but before we do, here is a picture (Figure 3.1).

Figure 3.1 Generic life distribution.

The dashes at the end of the curve serve to indicate that the curve continues further to the right. A life distribution need not be continuous (as drawn), and it may have inflection points (not shown), but it is always nondecreasing and continuous from the right (see Section 3.3.2.3).

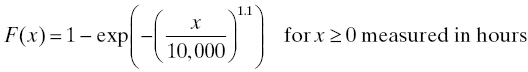

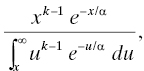

Example: Suppose the population of tantalum capacitors described earlier has a life distribution given by

when operated at 20°C. Suppose 100 capacitors from this population are placed into operation (at 20°C) at a time we will designate by 0. After 1000 hours of uninterrupted operation have passed, what is the expectation and standard deviation of the number of capacitors that will still be working?

Solution: The number of capacitors still working at time x has a binomial distribution with parameters 100 (the number of trials in the experiment) and the probability of survival of one capacitor past time x. For x = 1000, this probability is

As the expected value of a binomial random variable with parameters n and p is np, the expected number of capacitors still working after 1000 hours is 100 × 0.92364 = 92.364. The variance of a binomial distribution with parameters n and p is np(1 − p), which in this case is equal to 7.05292. Consequently, the standard deviation of the number of capacitors still working after 1000 hours is equal to 2.65573.

Requirements tip: We have carried out the computations in this example to five decimal places, which is far more than would be desirable in almost any systems engineering application, solely for the purposes of illustrating the computations. Choose the appropriate number of decimal places whenever a quantity is specified in a requirement. The choice is often dictated by economic factors, practicality of measurement factors, and/or commonsensical factors that indicate how many places is too many for the application contemplated. For instance, specifying the length of a football field, in feet, to two decimal places is too much precision, whereas specifying the dimensions (in inches) of a surface-mount component may require more than two decimal places. Note that the units chosen bear on the decision as well.

3.3.2.2 Definition of the survivor function

The example points to another useful quantity in reliability modeling of nonmaintained units, and that is the survivor function or reliability function. The survivor function is simply the probability that a unit chosen at random from the population is still working (“alive”) at time x:

and is consequently one minus the life distribution (the complement of the life distribution) at x. Again, a subscript L is sometimes used if it is necessary to avoid ambiguity.

Note that we have consistently pointed out that the discretionary variable x is nonnegative in lifetime applications. This is because, for obvious physical reasons, a life distribution can have no mass to the left of zero. That is, the probability of a negative lifetime is zero. Lifetimes are always nonnegative, so when L is a lifetime random variable, there is no point in asking for P{L ≤ x} when x < 0 because P{L ≤ x} = 0 whenever x < 0.

3.3.2.3 Properties of the life distribution and survivor function

This discussion leads naturally into a discussion of other useful properties of life distributions. We consider four of these:

- The life distribution is zero for x < 0.

- The life distribution is a nondecreasing function of x for x ≥ 0.

- F(0−) = 0 and F(+∞) = 1.

- The life distribution is continuous from the right and has a limit from the left at every point in [0, ∞).

Return to Figure 3.1 to explore how the generic (continuous) life distribution shown there has these properties. We have indicated in Section 3.3.2.1 how the first property comes about. For the second property, consider that F(x) is the probability that a unit5 fails before time x. That is, F(x) is the probability that the unit fails in the time interval [0, x]. Choose now x1 and x2 with x1 < x2 and consider F(x1) and F(x2). The interval [0, x2] is larger than (and in fact contains) the interval [0, x1], so there are more opportunities for the unit to fail in the additional time from x1 to x2.6 Thus F(x2) must be at least as large as F(x1), which is property 2. From property 3, F(0−) = 0 says that the limit as x → 0 from the left (i.e., through negative values) of the probability that a unit fails immediately upon being placed into operation is zero. F(+∞) = 1 says that every unit in the population eventually fails. There are situations in which we may wish to assume F(0) > 0 (an example is given by a switch that fails to operate when called for) or F(+∞) < 1 (an example could be some component that is certain to not fail until after the service life of the system in which it is used is expired). But in most cases, property 3 is used as stated. Finally, the continuity of the life distribution from the right is a consequence of the choice of ≤, rather than <, in the cdf definition of life distribution. An equally satisfactory probability theory can be constructed on the choice of < (and in fact many notable probability textbooks do this), but the convention we have chosen to follow is as above, and in this case the cdf is continuous from the right (in the other case, it is continuous from the left).

Language (and notation) tip: For most of the life distributions in common use in reliability engineering, it is immaterial whether the < sign or the ≤ sign is chosen, because these life distributions are continuous. However, once the choice is made, it is important to continue the current analysis with the same choice throughout for consistency. This only matters when the life distribution has discontinuities (such as the switch life distribution, used in the example in Section 3.4.5.1, which contains a non-zero turn-on failure probability). Even when all life distributions in a study are continuous and it doesn’t make any difference to the outcome, it is just sloppy practice to switch between < and ≤ arbitrarily. When working with someone else’s analysis, endeavor to determine which choice was made and whether it is consistently applied.

Because the survivor function S is the complement of the life distribution F (i.e., S = 1 − F), the corresponding four properties for the survivor function are

- The survivor function is one for x < 0.

- The survivor function is a nonincreasing function of x for x ≥ 0.

- S(0−) = 1 and S(+∞) = 0.

- The survivor function is continuous from the left and has a limit from the right at each point in [0, ∞).

Language tip: The survivor function is also sometimes called the reliability function. Recalling our discussions from the Foreword and Chapter 2, the fact that we have just encountered yet another use of the same word “reliability” should strengthen your resolve to master potential confusions inherent in this language and be prepared to clarify for your teammates, customers, and managers another of the many unfortunate language clashes that abound in reliability engineering.

3.3.2.4 Interpretation of the life distribution and survivor function

The easiest way to maintain a consistent interpretation of the life distribution and survivor function is to visualize

- the population of components to which they apply and

- the “experiment” of choosing an item from that population at random.7

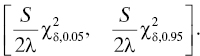

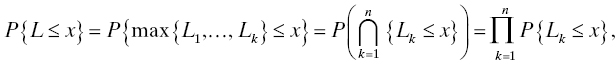

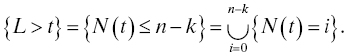

When you make this choice at a certain time (call it t, meaning that you have chosen some time to start a clock and that clock now measures t time units later), the probability that the item chosen is still alive (“working”) at that time is given by the value of the survivor function S(t) for that population. Because of the nature of selection at random without replacement, the number of items in the population still alive at time t is a random variable having a binomial distribution. If the initial size of the population is A < ∞ and N(t) denotes the (random) number of items still alive at time t, then

This is a binomial distribution with parameters A and S(t). Its mean is AS(t) and its standard deviation is ![]() . So the expected proportion of the population that is still alive at time t is AS(t)/A = S(t). As more time passes (t increases), this proportion does not increase.

. So the expected proportion of the population that is still alive at time t is AS(t)/A = S(t). As more time passes (t increases), this proportion does not increase.

Similarly, the (random) number of items that have failed by time t (or, to put it another way, the number of items that have failed in the time interval [0, t] from 0 to t) has a binomial distribution with parameters A and F(t) = 1 − S(t).

Language tip: Note that we have used t and x interchangeably in this section to denote a discretionary variable having the dimensions of time. This is not cause for alarm. It is routinely acceptable provided the definition is clear and the same letter is used consistently throughout each application.

3.3.3 Other Quantities Related to the Life Distribution and Survivor Function

As with cumulative distribution functions in probability, other related quantities enhance our ability to make reliability models. The ones we shall study in this section are the density and hazard rate.

3.3.3.1 Density

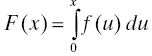

Should it happen that the life distribution is absolutely continuous (i.e., can be written as an indefinite integral of some integrable function), that integrable function is called the density of the lifetime random variable. So if we can write

for some integrable function f, then f is called the density of F. If this is the case, then F is necessarily continuous at every x for which this equation holds. More simply, if F is differentiable on an interval (a, b), then it is absolutely continuous there and f(x) = F΄(x) = dF/dx for x ∈ (a, b). Because of properties 1 and 2 of life distributions, we have f(x) = 0 for x < 0 and f(x) ≥ 0 for x ≥ 0. Most of the life distributions in common use in reliability modeling have densities (see the examples in Section 3.3.4) (Figure 3.2).

Figure 3.2 A generic density function.

Example: Suppose F(t) = t/(1 + t) for t ≥ 0 and F(t) = 0 for t < 0. Then properties 1, 3, and 4 (Section 3.3.2.3) are readily verified. Also, F is differentiable on [0, ∞) and F΄(t) = 1/(1 + t)2 > 0 there, so F is increasing (property 2) and f(t) = 1/(1 + t)2 is its density. Thus, this F is a life distribution with a density.

3.3.3.2 Interpretation of the density

When the lifetime L has a distribution F that has a density in a neighborhood of a point t, we may write

That is, for a small positive increment ε, the probability that an item chosen at random from the population fails in the (small) time interval [t, t + ε] is approximately ε times the value of the density at t. Note that this item may have already failed before time t—there is no requirement that the item be alive at the beginning of this interval. Contrast this with the hazard rate interpretation discussed in Section 3.3.3.5.

3.3.3.3 Return to the stress–strength model

The stress–strength model was introduced in Section 2.2.7 and the example of destruction of a single complementary metal-oxide semiconductor (CMOS) integrated circuit was explained as resulting from a single environmental stress, namely the application of a voltage stress exceeding the strength of the oxide in the device. Here we explore the stress–strength model in a population of devices and an environment that can offer a range of stresses.

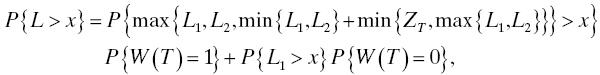

Imagine that a population of devices has a range of strengths that is described by a strength density. That is, for some device characteristic V that indicates “strength” (e.g., oxide breakdown voltage), there is a density fV characterizing that population with respect to that strength variable, or characteristic. That means that we describe the strength of an item drawn at random from the population by a random variable V that has density fV, and when that item is subjected to a stress greater than V, it fails. Further suppose that the environment offers stresses (on the same scale) described by a random variable S with density gS. Figure 3.3 shows this relationship graphically. The density of stresses offered by the environment, gS, and the density of strength in the population of devices, fV, is shown on the same axes. Figure 3.3 depicts a situation where most of the population strengths are greater than most of the environmental stresses, except for the small area where the two densities overlap. For a stress in this area (a value indicated by the × on the horizontal axis), a device whose strength is to the left of this stress (weaker than this stress) will fail. In this picture, this small area indicates that there are few devices in the population whose strength is less than (to the left of) this value. The area under the stress density to the right of the chosen stress value is also small, and this indicates that stresses so large are rarely offered (most stresses are less than this value, or almost all of the stress density lies to the left of this value).

Figure 3.3 Stress–strength relationship in a population.

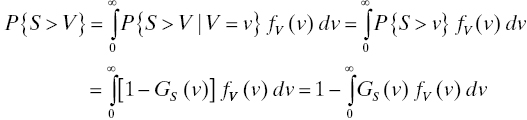

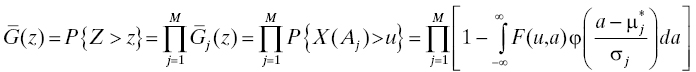

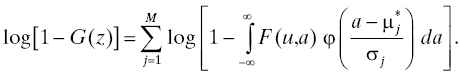

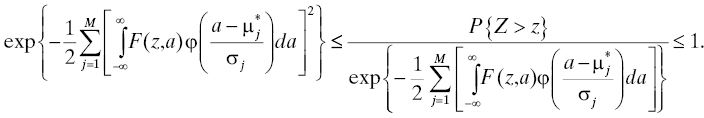

The probability of failure, P{S > V}, is the probability that a stress chosen at random from the population of stresses (described by the density gS) exceeds the strength of a device chosen at random from the population of devices whose strength density is fV. Then the probability of failure of a device drawn at random from that population, when subjected to a stress drawn at random from that environment, is

as long as we assume the environmental stresses are stochastically independent of the population strengths.

Note that neither of these relates to time to failure. The distributions (densities) here are both on a scale of some physical property (e.g., volts). To develop this model further to the point where a lifetime distribution could be obtained, it would be necessary to describe the times at which the environment offers stresses of a given size. This could be done with, for example, a compound Poisson process in which at each (random) time an event occurs, a stress of a random magnitude is applied. Some details of this model may be worked out in Exercise . A deeper discussion of stress–strength models is found in Ref. 38.

3.3.3.4 Hazard rate or force of mortality

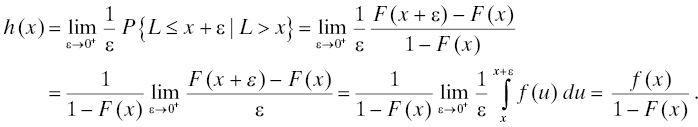

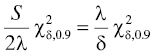

The second related quantity, one that is widely used in modeling the reliability of nonmaintained units, is the hazard rate. The hazard rate is customarily denoted by h, and the definition of hazard rate is

when the limit exists. This is the hazard rate of the lifetime random variable L. It is also sometimes spoken of as the hazard rate of the life distribution. Note this definition contains a conditional probability, and, unlike the quantities we have studied so far which are dimensionless, the hazard rate has the dimensions of 1/time (probability is dimensionless and ε has the dimensions of time).

In case F is absolutely continuous at x, the hazard rate may be computed as follows:

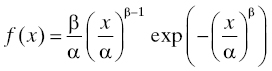

If we further assume F is differentiable, the differential equation

with initial condition F(0) = α may be solved to yield

Thus when the life distribution is differentiable, there is a one-to-one correspondence between the life distribution and its hazard rate. Knowing either one enables you to obtain the other. Most often α will be zero, but it is useful to know the expression for life distribution in terms of hazard rate even when α > 0. An example of a component whose life distribution is a switch for which the probability of failure when it is called upon to operate is α > 0.

3.3.3.5 Interpretation of the hazard rate

Return to the definition above to see that

as ε → 0+. Imagine for the moment that time is measured in seconds and consider this equation for ε = 1 (second). Then the hazard rate at x is approximately equal to the conditional probability of failure in the next second (i.e., before time x + 1) given that the unit is currently alive (using time x to represent the current time). So the hazard rate is something like the propensity to fail soon given that you are currently alive. In fact, the concept of hazard rate is lifted directly from demography, the study of lifetimes of human populations, where it is called the force of mortality. This description is very apt: the hazard rate, or force of mortality, describes how hard nature is pushing you to die (very) soon when you are alive now.

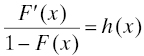

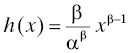

Example: Let F(x) = 1 − exp((−x/α) β) for x ≥ 0 and F(x) = 0 for x < 0, where α and β are positive constants. This is readily verified to be a life distribution (Exercise 2). Its particular properties depend on the choice of the constants α and β which are called parameters. This life distribution is called the Weibull distribution in honor of the Swedish engineer, scientist, and mathematician Ernst Hjalmar Wallodi Weibull (1887–1979). See also Section 3.3.4.3. This distribution has a density

for x ≥ 0. Consequently, the hazard rate of the Weibull distribution is given by

again for x ≥ 0.8 It follows from this expression that the hazard rate of the Weibull distribution may be increasing, decreasing, or constant, depending on the choice of β: if β < 1, the hazard rate is decreasing, if β > 1, the hazard rate is increasing, and if β = 1, the hazard rate is constant. The special case β = 1 has a long and extensive usage in reliability modeling: it is the exponential life distribution F(x) = 1 − exp(−(x/α)) (Section 3.3.4.1). We have seen that the hazard rate of the exponential distribution is constant; it has been shown that this is the only life distribution in continuous time whose hazard rate is constant [34] (the geometric probability mass function p(x) = (1 − α)α x−1 for x = 0, 1, 2, . . . and 0 < α < 1 is a life distribution on a discrete time scale that has a constant hazard rate, and it is the only life distribution in discrete time that is so blessed [35] ). We will explore additional properties of the exponential distribution when we discuss more examples in Section 3.3.4.

Finally, contrast the interpretation of hazard rate with the interpretation of density given in Section 3.3.3.2. Owing to the equation

ε times the density at t is approximately equal to the probability that a lifetime falls between t and t + ε, that is, the probability that L > t and L ≤ t + ε. Here, we are selecting a unit at random from the population and asking if its lifetime is between t and t + ε. The hazard rate, instead, satisfies

which indicates that ε times the hazard rate at time t is approximately equal to the conditional probability that a lifetime expires (at or) before t + ε, given that it is greater than t. Here, we are selecting from a restricted portion of the population, namely that set of units whose lifetimes are greater than t (those that are still alive at time t). Selecting a unit at random from those, we ask what is the probability that the lifetime of that unit does not exceed t + ε. In more mathematical terms, this is the difference between P(A ∩ B) and P(A | B).

Language tip: The hazard rate or force of mortality is almost always called the failure rate of the relevant life distribution. This is unfortunate, the more so because it is almost universal, because the word “rate” makes engineers think of “number per unit time,” and there is nothing like that going on here (even though the dimensions of the hazard rate are 1 over time). The closest one can come to interpreting “hazard rate” as a rate is as in the following example. Suppose the population of units we are considering initially contains N members and we start all of these operating at an arbitrary time we shall label “zero.” At a later time x, the expected number of failed units is NF(x) (where F is the life distribution for this population) and the expected number of units still working is NS(x) = N(1 − F(x)). One of these still-alive units fails before time x + 1 with probability approximately equal to h(x).9 So the hazard rate is like the proportion of the remaining (still-alive) population that is going to fail very soon. This looks like a “rate” when referred to the number of remaining (still-alive or “at-risk”) members of the population. Extended discussion of this deplorable situation is available in Ref. 2. See also the “Language Tips” in Section 4.4.2.

Requirements tip: Be very careful when contemplating writing a requirement for “failure rate.” Because the phrase can be interpreted in (at least three) different ways in reliability engineering, it is vital that you specify which meaning is intended in the requirement. For this reason, it is probably best to avoid “failure rate” altogether in requirements. Instead, spell out the specific reliability effectiveness criterion intended. For example, “The number of system failures shall not exceed 3 in 25 years of operation under the specified conditions” is preferable to “The system failure rate shall not exceed 1.37 × 10−5 failures per hour during the service life of the system when operated under specified conditions.” Indeed, the latter formulation tends to induce one to think that system failures accrue uniformly over time, while the former formulation allows for arbitrary patterns of failure appearance in time, as long as the total number does not exceed 3 in 25 years.

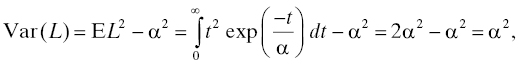

The concept of cumulative hazard function will be useful later in the study of certain maintained system models (Section 4.4.2). The cumulative hazard function H is simply the integral of the hazard rate over the time scale:

It is easy to see that H(t) can also be written as H(t) = −log S(t) = −log [1 − F(t)].

3.3.4 Some Commonly Used Life Distributions

3.3.4.1 The exponential distribution

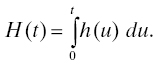

The lifetime L has an exponential distribution if P{L ≤ x} = 1 − exp(−x/α) for x ≥ 0 and α > 0. α is called the parameter of the distribution. As x has the dimensions of time, so does α because the exponent must be dimensionless. In fact, α is the mean life:

The exponential distribution has a density, namely (1/α) exp(−x/α). Consequently, the hazard rate of the exponential distribution is constant and is equal to 1/α. Note this has the units of 1 per time as it should. The variance of the exponential distribution is

so its standard deviation is α. The median of the exponential distribution is the value m for which P{L ≤ m} = 0.5; solving exp(−m/α) = 0.5 for m yields m = α log 2.

Frequently, the exponential distribution is seen with the parameterization 1 − exp(−λx) for λ > 0. This is perfectly acceptable; simply replace α by 1/λ in all the earlier statements.

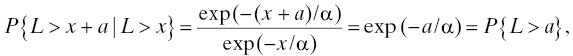

The exponential distribution is also blessed with a peculiar property called the memoryless property. As a consequence of the following computation

we see that if an item’s lifetime L has an exponential distribution, then the probability that the item will fail after the passage of a (additional) units of time is the same no matter how old the item is. That is, if the item is currently x time-units old, then the probability of the item’s surviving to time x + a is the same as the probability that a new item survives to time a, regardless what x may be. To get some sense of how peculiar a property this is, consider the purchase of a used flat-screen television. If reliability were your only concern, and the life distribution of the (population of) flat-screen TV(s) were exponential, then you would be willing to pay the same price for a used flat-screen TV of any age as you would for a new one. Of course, there are other factors at play here, and reliability is not your only concern, but the example serves as a caution you should remember when you contemplate using the exponential distribution for the lifetime or a nonrepairable item. The exponential distribution is the only life distribution (in continuous time) that has this property [34].

One reason for the popularity of the exponential distribution in reliability modeling is that it is the limiting life distribution of a series system (Section 3.4.4) of “substantially similar” components [15] . In this context, “substantially similar” has a precise technical meaning which we will defer discussing until Sections 3.4.4.3 and 4.4.5 when a similar result (Grigelionis’s theorem [53] ) will be seen as relevant to both maintained and nonmaintained systems. Implications for field reliability data collection and analysis are discussed in Chapter 5.

3.3.4.2 The uniform distribution

A random variable L is said to have a uniform distribution on [a, b], a < b, if

If a ≥ 0, the uniform distribution can be used as a life distribution. In this model, the lifetimes are between a and b with probability 1, and the distribution has the name “uniform” because the probability that a lifetime lies within any subset of [a, b] of total measure τ, say, is τ/(b − a) regardless where within [a, b] this subset may lie (as long as it lies wholly within [a, b]). The density of the uniform distribution is 1/(b − a) on [a, b] and zero elsewhere. The expected value of a uniformly distributed lifetime is (a + b)/2 and the variance is (b − a)2/12. In other uses of the uniform distribution, a may be negative, but for use as a life distribution a must be nonnegative. See Exercise 6 for the hazard rate of the uniform distribution.

3.3.4.3 The Weibull distribution

The lifetime L has a Weibull distribution if P{L ≤ x} = 1 − exp(−(x/α) β) for x ≥ 0 and α > 0, β > 0. α and β are the parameters of the distribution. As we saw in the example in Section 3.3.3.4, the Weibull distribution has a density

and its hazard rate is

all for x ≥ 0.

As noted previously, the hazard rate for the Weibull distribution can be increasing, decreasing, or constant, depending on the value of β (Table 3.1).

Table 3.1 Weibull Distribution Hazard Rate

| If β is | Then the Weibull hazard rate is |

| >1 | Increasing |

| =1 | Constant |

| <1 | Decreasing |

When β = 1, the Weibull distribution reduces to the exponential distribution (Section 3.3.4.1). The Weibull distribution with β > 1 is frequently used to describe the lifetimes in a population of items that may suffer mechanical wear. For example, ball bearings normally exhibit wear (decrease of diameter) as they continue to operate.10 A population of identically sized ball bearings made of the same material, when operated continuously, will accumulate more and more failures due to wear as time increases. That is, failures will begin to accumulate more rapidly the longer the population continues in operation. This phenomenon is labeled “wearout” in reliability engineering, the term being inspired by the concept of mechanical wear such as illustrated in this example. Note that this example treats a nonrepairable item. Any individual ball bearing may suffer at most one failure; the “accumulation of failures” pertains to multiple failures in a population of many bearings, each of which may fail at most once. See Section 3.3.4.8 for additional development of this idea.

Finally, the Weibull distribution is the limiting distribution of the smallest extreme value (i.e., the minimum) of a set of independent, identically distributed random variables [27] . The lifetime of a component under the competing risk model (Section 2.2.8) is a smallest extreme value. This may account for the frequent appearance of the Weibull distribution as a reasonable description of the lifetime of individual components.

3.3.4.4 a life distribution with a “bathtub-shaped” hazard rate

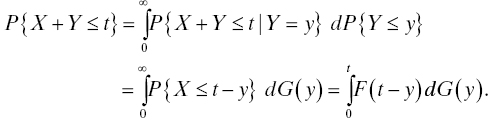

Demographers have determined that the force of mortality in human populations follows a broad U-shaped, or “bathtub-shaped,” curve (see Figure 3.4).

Figure 3.4 Force of mortality for human populations.

The commonly accepted explanation for this shape posits that the decreasing force of mortality in early life comes from infant mortality and the diseases that afflict the young, which, after some period of time, are outgrown and subsequently exert little influence on the population. The increasing force of mortality in late life is due in large part to the finite lifetime of human beings (see Exercise 6), but is also due to what are termed “wearout mechanisms” such as atherosclerosis, loss of telomeres, and others, that promote earlier death. The (approximately) constant force of mortality in mid-life is primarily due to deaths caused by accidents that occur at random times and the rarer occurrence of diseases that strike prematurely in middle age. A similar interpretation obtains in reliability engineering: decreasing force of mortality in the early part of the lifetime in a population of components is explained by the early failure of some components in the population that have manufacturing defects (see Section 3.3.6) that cause them to fail prematurely (such failures are often referred to as “infant mortality failures”). Increasing force of mortality in the later part of the lifetimes is explained by physical and chemical wearout mechanisms such as mechanical wear, depletion of reactants, increase of nonradiative recombinations, increase in the number and/or size of oxide pinholes, etc. Indeed, the presence of an increasing hazard rate is often taken as a symptom of the presence of an active wearout failure mode, even if no physical, chemical, or mechanical wearout explanation can be discerned. The constant force of mortality during “useful life” is due primarily to the occurrence at random times of shocks whose stresses exceed the strengths of the components (see Section 3.3.3.3 and Exercise 1).

None of the life distributions discussed elsewhere in this section has a force of mortality with this shape. To develop such a life distribution, we need to employ the method shown in Section 3.3.3.4 in which a life distribution is developed from a hazard rate by the integral formula shown there.

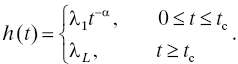

At least one attempt at implementing a practical version of such a life distribution has been made. Holcomb and North [30] introduced a life distribution of this type for electronic components. Their model is a Weibull distribution describing the component’s reliability until a time called the crossover time, at which time it changes to an exponential distribution that applies thereafter. That is, the population life distribution is described by a Weibull distribution up until the crossover time, and the (conditional) life distribution of the subset of the population that survives beyond the crossover time is an exponential distribution. This distribution is continuous everywhere and has a density everywhere except at tc. The hazard rate model is as follows:

This model contains four parameters, λ1, λL, tc, and α. λ1 > 0 is the early life hazard rate coefficient and represents the hazard rate of the life distribution at t = 1 (conventionally, the time unit in this model is hours). α > 0 is the early life hazard rate shape parameter; it represents the rate at which the hazard rate decreases until time tc. At time tc, the hazard rate becomes equal to a constant λL > 0. The model further imposes the condition that the hazard rate be continuous, so the four parameters are not independent. They are linked by the relation

Note that while this hazard rate model allows for a decreasing hazard rate in early life and a constant hazard rate in “mid-life,” the increasing hazard rate characteristic of wearout is not present. This is because it was reasoned that wearout mechanisms in electronic components take so long to appear that the service life of the equipment or system is over before this occurs.11 Finally, note that in this model the conditional life distribution of components, given that they survive beyond tc, is exponential with parameter 1/λL.

For the life distribution and density corresponding to this hazard rate model, see Exercise 7.

3.3.4.5 the normal (Gaussian) distribution: a special case

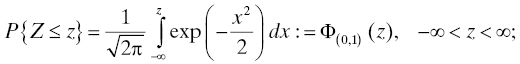

A random variable Z has a standard normal (or standard Gaussian) distribution if

the mean of this distribution is 0 and its variance is 1 (this is the definition of “standard” for the normal distribution and the explanation of the subscript on the Φ). The density of this distribution is given by

Clearly, evaluating the normal distribution is not a paper-and-pencil exercise. The old-school method is to use the table of standard normal percentiles, which appears in all elementary statistics textbooks; the tables are usually constructed by numerical integration or polynomial approximation [1] . Now, all statistical software and many scientific calculators include a routine for evaluating the standard normal distribution, and many office software programs, such as Microsoft Excel®, also include this capability.

If Z is a standard normal random variable, the random variable σZ + μ has mean μ and variance σ2 where −∞ < μ < ∞ (could be negative!) and σ > 0; the distribution of σZ + μ is conventionally denoted by Φ(μ,σ) or simply Φ if μ and σ are clear from the context. Correspondingly, if Z is a normally distributed random variable having mean μ and standard deviation σ, then (Z − μ)/σ has a standard normal distribution. The normal distribution is also called the Gaussian distribution in honor of the great mathematician Carl Friedrich Gauss (1777–1855) who first used it to describe the distribution of errors in statistical observations.

The normal distribution is not a life distribution because it has mass to the left of 0, i.e., it gives positive probability to negative lifetimes. Nonetheless, some studies use a normal distribution with large positive μ and small σ as an approximate life distribution because when μ is large positive and σ is small, the probability that the lifetime is negative is quite small and may for some purposes (e.g., computing moments) be neglected. However, the normal distribution is not appropriate for use with many of the important models for the reliability of a maintainable system. For example, the equations of renewal theory (Section 4.4.1) fail for the normal distribution (even if μ is large positive and σ is small).

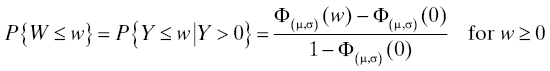

Some studies make use of a truncated normal distribution to avoid the difficulty with negative lifetimes. A truncation of a normal distribution with parameters μ and σ is the conditional distribution of a random variable Y that is normally distributed with mean μ and variance σ2, conditional on Y belonging to some interval. To use the truncated normal distribution as a life distribution, this interval would be [0, ∞], or the conditioning is on Y ≥ 0. If we denote by W the lifetime random variable described by this truncated distribution, then

and P{W ≤ w} = 0 for w ≤ 0 so that the truncated normal distribution is a bona fide life distribution. Note that the mean and variance of the truncated normal distribution are no longer μ and σ2. For more details on the truncated normal distribution, see Ref. 28.

3.3.4.6 the lognormal distribution

A lifetime L is said to have a lognormal distribution if the logarithm of the lifetime has a normal distribution. That is,

Note that while L ≥ 0, log L may have any sign because the logarithms of numbers between 0 and 1 are negative. If Y has a normal distribution, then L = eY has a lognormal distribution. If μ and σ are the parameters of the underlying normal distribution, then the mean of the lognormal distribution is ![]() and its variance is

and its variance is ![]() .

.

The lognormal distribution has been successfully used for modeling repair times of complex equipment [37, 51]. Its hazard rate is decreasing as t → ∞, leading to the interpretation that when equipment is complex, repairs are often complicated, and the longer a repair lasts, the less likely that it is that it will be completed soon. For example, times to complete repairs for undersea telecommunications cables that require a repair ship to visit the site of the failure have been postulated to follow a lognormal distribution, but citations in the literature are hard to find.12

3.3.4.7 The gamma distribution

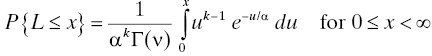

The lifetime L has a gamma distribution if

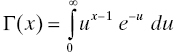

where α > 0 and k > 0 are the location and shape parameters, respectively, of the distribution, and Γ is the famous gamma function of Euler (Leonhard Euler, 1707–1783), defined by

for x > 0. The gamma function is perhaps most well-known for being an analytic function that interpolates the factorial function: Γ(n + 1) = n! whenever n is a positive integer. α is a location parameter and k is a shape parameter (α has the units of time and k is dimensionless); when k = 1 the gamma distribution reduces to the exponential distribution (Section 3.3.4.1) with parameter α. The importance of the gamma distribution in reliability modeling lies largely in its property that the gamma distribution with parameters α and n (n an integer) is the distribution13 of the sum of n independent exponential random variables, each of which has mean α. Actually, more is true: the sum of two independent gamma-distributed random variables with parameters (α1, ν) and (α2, ν) again has a gamma distribution with parameters (α1 + α2, ν), and of course this extends to any finite number of summands as long as the shape parameter ν is the same in each. There is a natural connection with the life distribution of a cold-standby redundant system (see Section 3.4.5.2 for further details).

The density of the gamma distribution is given by

Consequently, the hazard rate of the gamma distribution is given by

again for x > 0. When k = 1, this reduces to 1/α, a constant, as it should because for k = 1 the gamma distribution is the exponential distribution. The hazard rate is clearly increasing for k > 1; it is the content of Exercise 3 that the hazard rate is decreasing when 0 < k < 1. So the behavior of the gamma distribution is similar to that of the Weibull distribution according to the shape parameters (Table 3.2).

Table 3.2 Gamma Distribution Hazard Rate

| If k is | Then the gamma hazard rate is |

| >1 | Increasing |

| =1 | Constant |

| <1 | Decreasing |

The mean of the gamma distribution is kα and its variance is kα2.

The main importance of the gamma distribution elsewhere comes from its relation to commonly used quantities in statistics that we use in Chapters 2, 5, 10, and 12. The sample variance from a population having a normal distribution has a gamma distribution. Formally, if X1, X2, . . ., Xn are normally distributed random variables with mean 0 and variance σ2, then X1 2 + X2 2 + ⋅ ⋅ ⋅ + Xn 2 has a gamma distribution with parameters 1/2σ2 and n/2. For historical reasons, this distribution when σ = 1 is also called the chi-squared distribution with n degrees of freedom (Karl Pearson, 1857–1936). Other important quantities in statistics have distributions related to the gamma distribution, including student’s T-statistic (student was a pseudonym adopted by William Sealy Gosset (1876–1937) to enable him to publish his works over the objections of his employer, the Guinness brewing company), Snedecor’s F-statistic (George W. Snedecor, 1871–1974), and Fisher’s Z-statistic (Sir Ronald A. Fisher, 1890–1962) all have distributions than can be expressed in terms of the gamma function and distribution. For details, see Ref. 21.

3.3.4.8 mechanical wearout and statistical wearout

“Wearout” is used in two senses in reliability engineering. Mechanical wearout is the physical phenomenon of loss of material during sliding, rolling, or other motion of materials against one another. Statistical wearout is the mathematical property of increasing hazard rate of a life distribution when the hazard rate does not decrease after the period of increase being described. The second interpretation arose because of the first: a population of devices subject to (physical) wearout will exhibit a life distribution with an increasing hazard rate in later life. The following example may help illustrate this phenomenon.

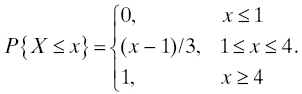

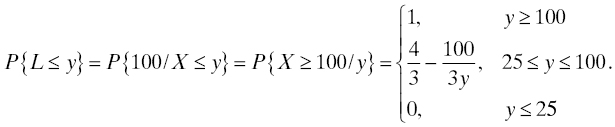

Example: A population of 5/8″ ball bearings is operated under nominal conditions under which their diameter decreases by X ten-thousandths of an inch per hour, where X is a random variable having a uniform distribution on [1, 4] (see Section 3.3.4.2). A ball bearing is declared failed when its diameter has decreased by 0.010″. What is the distribution of lifetimes L in this population of ball bearings? For a ball bearing that we label ω, the rate of decrease of its diameter is X(ω), and the amount of time (in hours) it takes for that ball bearing to decrease by 0.010″, which is 100 ten-thousandths, is 100/X(ω) hours. Our task, then, is to find the distribution of 100/X when X has the stated uniform distribution. We know that

Then

The density of this distribution is 100/3y 2 for 25 ≤ y ≤ 100, and zero elsewhere. So the hazard rate of this distribution is 100/y(100 − y) for 25 ≤ y ≤ 100, and zero elsewhere. This is clearly seen to be an increasing function of y as y → 100− (i.e., as y approaches 100 from the left, or through smaller values, which is what the superscripted minus sign is supposed to convey). This example, while not generic, does illustrate the connection between physical wearout and the mathematical interpretation of wearout as an increasing hazard rate with increasing time. See also Exercises 6, 20, and . Further discussion may be found in Ref. 24.

Another way to understand this phenomenon is to imagine that all the ball bearings wear at exactly the same (constant) rate, say 2.5 ten-thousandths of an inch per hour. Then every ball bearing fails at 40 hours exactly. Then a small variation in the rate of wear (i.e., 0.00025″/hour ± a little bit) will translate into some variation in the failure times (40 hours ± a little bit14). The failure time density will be zero until shortly before 40 hours (i.e., up until 40 – the little bit) and then it will increase rapidly to a maximum near 40 hours and then decrease again rapidly to zero (at 40 + the little bit). The survivor function of the lifetimes will be zero until shortly before 40 hours, and then will decrease rapidly to zero shortly after 40 hours. Think about the quotient of these two quantities (the hazard rate): from shortly before 40 hours until at least 40 hours, the numerator is increasing rapidly while the denominator is decreasing. The quotient is therefore increasing, at least until the density peaks. Deeper analysis would reveal that the hazard rate continues to increase until “shortly after 40 hours,” but that is not the point of this illustration. The point is that under very general conditions, physical wearout, even at random rates, leads to an increasing hazard rate life distribution, which is the characteristic of wearout in the statistical (or mathematical) sense.

3.3.5 Quantitative Incorporation of Environmental Stresses

In Chapter 2, we emphasized that three things must be present in a proper reliability requirement: a specification of a limit on some reliability effectiveness criterion, a time during which the requirement is to apply, and conditions (environmental or other) under which the requirement is to apply. In the discussion of earlier life distributions, no mention is made of conditions. In this section, we will discuss some modifications that enable us to incorporate the role of prevailing conditions into a life distribution model.

3.3.5.1 accelerated life models

Accelerated life models are among the simplest models for relating the life distribution of a population of objects operated under a given set of environmental conditions to the life distribution of that population operated under a different set of environmental conditions. We describe two accelerated life models in this book, the strong accelerated life model and the weak accelerated life model, and the proportional hazards model which in analogous terminology might be called the accelerated hazard model.

The strong accelerated life model postulates that there is a linear relationship between the individual lifetimes at the different conditions. If L1 and L2 are the lifetimes of an object when the conditions under which it is operated are C1 and C2, respectively, then the strong accelerated life model asserts that L2 = A(C1, C2)L1, where A is a constant depending on the two conditions C1 and C2.15 If many conditions change from one application to another, it is possible that C1 and C2 may be vectors. If the conditions are dynamic (may change with time), then C1 and C2 may be functions of time.

We begin our study with the simplest case in which the two conditions are constant. For example, condition C1 may be a constant temperature of 10°C, while condition C2 may be a constant temperature of 40°C. Typically, one of these conditions, say C1, represents a “nominal” operating condition, that is, a condition under which life distribution estimates for the population are known (or the conditions prevailing when the data for these estimates were collected), and the other condition C2 represents a condition under which operation of the system is anticipated in service with the customer. The types of environmental conditions that are typically encountered in engineering systems include

- temperature,

- humidity,

- vibration,

- shock, and

- mechanical load.

This list is far from all-inclusive. It includes only those conditions that are commonly encountered. Other more specialized conditions may include salt spray and immersion for marine environments, dust and oil spray for automotive environments, etc.

If a population follows the strong accelerated life model, the life distributions at the different environmental conditions differ only by a scale factor. In fact we have, for F1 the life distribution of L1 and F2 that of L2,

showing that the scale factor is 1/A(C1, C2). For the densities, we have

and for the hazard rates, we have

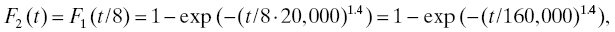

Example: Suppose that, under nominal conditions, a population of devices has a Weibull life distribution with parameters α = 20,000 and β = 1.4 (see Section 3.3.4.3). Under the strong accelerated life model, what are the new parameters of the life distribution when the population is operated at conditions for which the acceleration factor is 8? Denote by the subscript 1 the nominal conditions and by the subscript 2 the operating conditions (for which the acceleration factor is 8). Then

so the life distribution parameters under the operating conditions are α = 160,000 and β = 1.4.

From the example, we may gather that if the life distribution at nominal conditions has a certain parametric form, then the life distribution at any altered conditions continues to have the same parametric form when the strong accelerated life model applies (see Exercise 8).

We summarize the strong accelerated life model in Table 3.3.

Table 3.3 Strong Accelerated Life Model

| Description | Formula |

| Lifetime (or failure time) | L2 = A(C1, C2)L1 |

| Life distribution | F2(t) = F1(t/A(C1, C2)) |

| Density | f2(t) = f1(t/A(C1, C2))/A(C1, C2) |

| Hazard rate | h2(t) = h1(t/A(C1, C2))/A(C1, C2) |

In the strong accelerated life model, the defining equation L2 = A(C1, C2)L1 shows that the individual lifetimes under the two conditions are proportional. In fact, the probabilist would write L2(ω) = A(C1, C2)L1(ω) to emphasize that the proportional relationship holds for each individual lifetime (sample point ω in the sample space, or individual member of the population). This is a very strong assumption, but one that is in very common use. Weaker versions of the accelerated life model are available. One such is the weak accelerated life model that postulates the life distribution relationship F2(t) = F1(t/A(C1, C2)) without the assumption that the lifetimes are proportional as individuals. For this weaker model, all the relationships in Table 3.3 apply except for that in the first row. In practice, usually the weak accelerated life model is all that is needed to make sensible use of the accelerated life model ideas.

Requirements tip: In a reliability requirement, while you do specify the environmental conditions that will prevail during operation with the customer and under which the specified reliability is to be achieved, the model to be used when projecting reliability under the operating conditions when the base reliability estimates pertain under some other, “nominal,” conditions is not normally part of the requirement. The choice of model to use when projecting potential system life distributions or when analyzing field reliability data would normally be made by a reliability engineer who is familiar with the system, its components, and the operating environment(s). Systems engineers, while not necessarily themselves carrying out the computations involved, need to be aware of the options available and be able to ascertain whether the reliability engineer’s choice is suitable given all the conditions prevailing.

How do you tell whether an accelerated life model is appropriate? If you have lifetime data collected under two different operating conditions, then the strong accelerated life assumption is easily tested. From the defining equation of the strong accelerated life model, we have

Therefore, if the strong accelerated life model applies, a quantile–quantile plot (Q–Q plot) [45] of the logarithms of the lifetimes should have slope 1 and vertical intercept log A. The Q–Q plot is a graphical aid for determining when the strong accelerated life model might be appropriate and provides a method for an initial guess at the value of A.

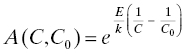

The foregoing development leaves open the structure of the function A. In practice, different functions A are associated with different types of stresses (temperature, voltage, vibration, etc.). One of the most commonly used in reliability modeling is the Arrhenius relationship (Svante August Arrhenius, 1859–1927) for temperature:

where C and C0 represent the two temperatures in °K (Kelvins), E is an activation energy in electron-volts (eV) particular to the material, and k is Boltzmann’s constant 8.62 × 10–5 eV/°K. This equation was first used to describe the speeding up of chemical reactions when heat is added and has been widely used in reliability engineering as an empirical acceleration factor, even for phenomena that do not involve heat.

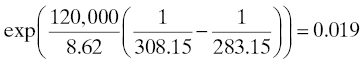

Example: Suppose that, when operated at 10°C, a population of devices has a Weibull life distribution with parameters α = 20,000 and β = 1.4 (see Section 3.3.4.3). Under the strong accelerated life model, what are the new parameters of the life distribution when the population is operated at 35°C? Assume the weak accelerated life model and that the Arrhenius relation holds for these devices with an activation energy of 1.2 eV.

Solution: The Kelvin temperatures corresponding to 10 and 35°C are 283.15 and 308.15, respectively. Then the acceleration factor is

so the life distribution of the population at 35°C is

Many other parametric acceleration functions are used for stress modeling. These include

- the Eyring equation

, with the single parameter β, is used for temperature, humidity, and other stresses [16] ;

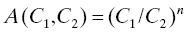

, with the single parameter β, is used for temperature, humidity, and other stresses [16] ; - the inverse power law model

, with the single parameter n, is usually used for voltage [16] ; and

, with the single parameter n, is usually used for voltage [16] ; and - the Coffin–Manson equation [18], similar to the inverse power law model, used for modeling fatigue under thermal cycling and modeling solder joint reliability.

Environmental conditions in operation may also vary with time. In this case, C1 and C2 may be functions of time. Generalizations of the accelerated life model can be devised to cover this case. One such generalization is a differential accelerated life model. This model postulates that the differential change in the lifetime of a unit is proportional to the current value of stress on the unit. Begin with the equation for the strong accelerated life model:

and include the time dependence of C:

If the life distribution in the population at condition C0 is F0, then the population life distribution after t time units has passed is

(see Section 6.4.3 of [41]). This model is used in analysis of data from accelerated life tests that use time-varying stresses such as ramp stress in which the stress takes the form C(t) = a + bt.

Many other models exist for relating the life distribution of a population operated at some set of environmental conditions to the life distribution of the same population operated at a different set of environmental conditions. Perhaps the most flexible of these is the acceleration transform developed by LuValle et al. [42] . See also Refs. 20, 43.

3.3.5.2 the proportional hazards model

The proportional hazards model is similar to the accelerated life model in that it postulates that certain quantities are proportional: in this case, it is the hazard rates, not the lifetimes, that are proportional. That is, the model postulates that

where h1(t) (resp., h2(t)) denotes the hazard rate of the population when the conditions under which the population is operated are described by C1 (resp., C2). Note the difference with the accelerated life model (Table 4.1). The proportional hazards model was first described by Cox [13] and is widely used in biomedical studies. h1(t) is referred to as the “baseline hazard rate” and is usually associated with a nominal set of conditions such as the conditions under which the data characterizing the population were collected (i.e., the same idea as seen in the accelerated life model, Section 3.3.5.1). See Exercise 22.

3.3.6 Quantitative Incorporation of Manufacturing Process Quality

A commonly accepted explanation for so-called early life failures is that the population contains items that have manufacturing defects (see Section 3.3.4.4). In other words, components or subassemblies used in the system are received from their manufacturer(s) with defects that are undetected and not remedied by the manufacturers’ process controls. The model is that such a defect will activate, or “fire,” at some later time and cause a failure at that time. Using this reasoning, one may seek to construct a model for the early-life reliability of a component or subsystem that contains some factor related to the manufacturer’s process quality. This model may also be used for the whole system to describe the influence of manufacturing processes on its reliability although the generally larger number of manufacturing processes involved may make the model more complicated. One attempt at creating such a model is described in Ref. 56 . A brief summary is given in this section.

We may think of manufacturing as an opportunity to add defects to a product in the sense that when a product (here interpreted broadly to include components, subassemblies, and entire systems) is designed, it has a certain reliability that is a consequence of the degree to which design for reliability (Chapter 6) for the product is successful. The reliability of any realization of this design in physical space can never be better than this because additional failure modes are introduced by this realization, some failure modes were not anticipated in the design for reliability process, etc. The approach taken in Ref. 56 to model this situation is to allow the life distribution for a product to depend also on a parameter that represents the quality of the manufacturing process for that product.

Suppose the lower and upper specification limits for the product’s manufacturing process16 are aL and aU, respectively, and aL < aU. The center of the process’s specification window is a 0 = (aL + aU)/2, which we also assume to be the target of the process output. Finally, we postulate that the true process output is a random variable A that is normally distributed with mean μ and variance σ2 (see Figure 3.5). The process meets “six sigma” goals [29] if there is an m, 4.5 ≤ m ≤ 7.5, for which

Figure 3.5 Specification limits and process output.