Security in embedded systems*

J. Rosenberg Draper Laboratory, Cambridge, MA, United States

Abstract

This chapter is about security of embedded devices. Throughout this book, the effects of harsh environments in which we require many of our embedded devices to operate have been discussed in some detail. But even an embedded processor in a clean, warm, dry, stationary, and even physically safe situation is actually in an extremely harsh environment. That is because every processor everywhere can be subject to the harshness of cyber attacks. We know that even “air-gapped” systems can be attacked by the determined attacker as we saw happen with the famous Stuxnet attack. This chapter will discuss the nature of this type of harsh environment, what enables the cyber attacks we hear about every day, what are the principles we need to understand to work toward a much higher level of security, and we will present new developments that may change the game in our favor.

Keywords

Embedded systems; Security; Cyber attacks; Harsh environment; Internet of things

1 Not Covered in This Chapter

This chapter is about logical and network security (or lack thereof) of embedded devices. The physical security of the environs of the embedded device is not touched on here. In many situations, such as when the embedded devices are part of an enterprise (used in manufacturing, as part of some sort of infrastructure, or as part of financial operations such as point of sale (POS) systems) or when used in a contained military vehicle like a submarine or bomber, the physical security of the environment takes care of the embedded device. In situations where the embedded device is just one of many in the Internet of things (IoT), physical security of one device may not be vital, but security of the whole array of devices is tied to the network that connects them, so we will be discussing network security for embedded devices in this chapter. While the concept of denial of service (DoS) will be covered here, it is difficult if not impossible for the embedded device itself to defend locally against DoS attacks. Finally, where physical security of the embedded device is of critical importance, but the device cannot be part of any large organizational security infrastructure, an antitamper approach must be taken. Antitamper technology equips the device with special hardware and software that ensures, first, that the device is nonoperational when not in the hands of those authorized to possess it and, second, that it refuses to give up any secrets it contains if unauthorized parties in possession of it try to tamper with it in an attempt to steal those secrets. Perhaps even more important, if a device is considered inherently secure, antitamper is necessary to maintain that designation. We will only briefly cover antitamper, as it is a large topic unto itself, but we will discuss how an embedded device can become inherently secure in this chapter.

2 Motivation

2.1 What Is Security?

In the English language, security is defined as the state of being free from danger or threat.

Consequently we lock our car. We like to walk along well-lit streets. Many of us have alarm systems on our houses. Thanks to important banking laws enacted after the depression, we trust the government to back up losses at our bank. We use credit cards knowing that if they are stolen we are not responsible for expenditures on them. We are (mostly) free from danger or threat in our physical being and in our financial dealings.

But what happens when your house or car is broken into and your things are stolen, or you hear footsteps behind you on a dark street, or your bank is hacked and your identity is stolen? Understandably you have a visceral reaction. It feels like you have been personally violated.

The early days of computer hacking were simply vandalism, usually designed to disable a computer. The first significant computer worm was the Morris worm of Nov. 2, 1988. It was the first to be distributed via the Internet, and it was the first to gain significant mainstream media attention. It also resulted in the first conviction in the United States under the 1986 Computer Fraud and Abuse Act. It was written by a graduate student at Cornell University, Robert Morris, and launched from MIT (where he is now a full professor).

But as the stakes got higher and higher and people who wanted things that were not theirs got more sophisticated in their programming skills, much bigger targets were taken on including big corporations, public infrastructure, and the military.

We are talking about security of embedded systems in this chapter so let’s begin with the fundamental principles on which computer security is based.

2.2 Fundamental Principles

Computer security is defined in terms of what is called the CIA triad: Confidentiality, Integrity, and Availability [1]. Let’s take each concept in turn and list out the traits of that principle.

2.2.1 Confidentiality

• Assurance that information is not disclosed to unauthorized individuals, programs, or processes.

• Ensures that the necessary level of secrecy is enforced at each junction of data processing and prevents unauthorized disclosure.

• Some information is more sensitive than other information and requires a higher level of confidentiality.

• The military and intelligence organizations classify information according to multilevel security designations such as Secret, Top Secret, and so forth that require protection from disclosure.

• Individuals have personally identifiable information such as social security numbers that also require protection from disclosure to unauthorized individuals.

• Attacks against confidentiality perpetrated by individuals tend to be about personal gain, so they go after credit card numbers that they use themselves or sell on the black market.

• On the other hand, Nation States may be after corporate confidential information like plans for a new fighter jet or national or corporate secrets.

• Information belonging to individuals, corporations, or governments may also be the target of insider attacks, whose perpetrators have a variety of motives for accessing information they are not authorized to access.

2.2.2 Integrity

• Assures that the accuracy and reliability of information and systems are maintained and any unauthorized modification is prevented.

• Must make sure data and resources are not altered in an unauthorized fashion.

• Modification of data on disk or in transit would violate integrity.

• Modification of any programs, computer systems, and network connections would also violate integrity.

• Vandals may try to change or destroy data just to create confusion or fear.

• Nation states may try to change data to produce outcomes beneficial to them. The military would fear an attack that changes battlefield reconnaissance information or command directives. These would be integrity attacks.
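One common way to detect unauthorized modification of data in transit is a keyed message authentication code. The following is a minimal sketch using Python's standard `hmac` module; the key, the function names, and the `valve=closed` directive are hypothetical and chosen only for illustration, and a real device would keep its key in protected storage.

```python
import hashlib
import hmac

# Hypothetical shared secret, for illustration only.
SECRET_KEY = b"example-device-key"


def make_tag(message: bytes, key: bytes = SECRET_KEY) -> bytes:
    """Return an HMAC-SHA256 tag computed over the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def is_unmodified(message: bytes, received_tag: bytes,
                  key: bytes = SECRET_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(make_tag(message, key), received_tag)


# The sender tags a command directive before transmitting it.
directive = b"valve=closed"
directive_tag = make_tag(directive)

# An attacker who alters the directive in transit cannot produce a
# matching tag without knowing the key.
tampered = b"valve=open"

accepted_original = is_unmodified(directive, directive_tag)
accepted_tampered = is_unmodified(tampered, directive_tag)
```

Note that this addresses integrity only; the directive itself is still sent in the clear, so confidentiality would need encryption in addition.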

2.2.3 Availability

• Ensures reliability and timely access to data and resources for authorized individuals.

• To be useful at all, information systems must be available for use in a timely manner so productivity is not affected.

• A system that loses connectivity to its database would become useless to most users.

• A system that runs so slowly that users cannot get their work done becomes useless as well.

• Denial of service attacks have a wide variety of forms but all tend to keep some aspect of a system so busy responding to the DoS traffic that it no longer functions for its legitimate users.

Computer security is necessary because there are threats. The best way to think about what types of security is needed is to establish a model for those threats. This will get us into some basic vocabulary that is used to describe threats.

2.3 Threat Model

Key vocabulary used in security discussions includes “vulnerability,” “threat,” “risk,” and “exposure.” These terms are often used as though they mean the same thing, which creates a lot of confusion; they do not. We will define them here and, more importantly, we will define a threat model in terms of how these concepts interact with each other.

2.3.1 Vulnerability

A vulnerability is a software, hardware, procedural, or human weakness that provides a hacker an open door to a network or computer system to gain unauthorized access to resources in the environment. Weak passwords, lax physical security, unpatched applications, an open port on a firewall, or bugs in software are all flaws an attacker can leverage to gain access to the affected computer. The Heartbleed bug was a vulnerability deployed on 66% of servers on the Internet [2] (not counting email, chat or VPN servers, or any embedded devices). It was created by a programmer’s failure to verify the bounds of a buffer, which allowed unlimited external access to a server’s internal memory. For years, the vast majority of home wireless access points were shipped with the administration password of “password,” and most users never changed it, creating a huge vulnerability.
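The kind of missing bounds check behind Heartbleed can be illustrated with a simplified simulation. The sketch below is not the actual OpenSSL code; the `MEMORY` buffer, its contents, and both function names are hypothetical. It models a service that echoes back a payload using a length supplied by the requester, first without and then with a check against the real payload size.

```python
# Simulated process memory: a 4-byte heartbeat payload followed by
# unrelated secret data that happens to sit next to it.
MEMORY = bytearray(b"PING" + b"admin-password=hunter2")
PAYLOAD_OFFSET = 0
PAYLOAD_ACTUAL_LEN = 4


def heartbeat_unchecked(claimed_len: int) -> bytes:
    """Echo claimed_len bytes, trusting the requester's length field."""
    return bytes(MEMORY[PAYLOAD_OFFSET:PAYLOAD_OFFSET + claimed_len])


def heartbeat_checked(claimed_len: int) -> bytes:
    """Echo the payload only if the claimed length is within bounds."""
    if claimed_len < 0 or claimed_len > PAYLOAD_ACTUAL_LEN:
        raise ValueError("claimed length exceeds actual payload")
    return bytes(MEMORY[PAYLOAD_OFFSET:PAYLOAD_OFFSET + claimed_len])


# An honest request asks for exactly the bytes it sent.
honest_reply = heartbeat_checked(4)

# A malicious request claims a larger length and reads adjacent memory.
leaked = heartbeat_unchecked(len(MEMORY))
```

The only difference between the two functions is the two-line validation step, which is the point: a single omitted comparison turns an echo service into a memory disclosure.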

2.3.2 Threat

A threat is a potential danger to information or systems. The danger is that someone or something will identify a specific vulnerability and use it against the company or individual. The entity that takes advantage of a vulnerability is a threat agent. A hacker or attacker coming into a network from across the Internet is a threat agent. A threat agent might be an intruder accessing the system through an open port on a firewall, or it could be a process accessing data in a way that violates security policy. An employee making a mistake that exposes confidential information is an (inadvertent) threat agent. An insider who, for their own reasons, wants to cause harm or steal information by taking advantage of their insider access privileges is also a threat agent.

2.3.3 Risk

Risk is the likelihood of a threat agent being able to take advantage of a vulnerability and the operational impact if they do. Risk ties together the vulnerability, threat, and likelihood of exploitation to the resulting operational loss. If firewall ports are open, if users are not educated on proper procedures, or if an intrusion detection system is not installed or configured correctly, risk goes up. If a known vulnerability is not addressed, risk goes up because attackers pay attention to published vulnerabilities, including those they did not discover themselves.

2.3.4 Asset

Assets can be physical, informational, monetary, or reputational. Physical assets include computers, network equipment, and attached peripherals. Information assets are things such as customer data, proprietary information, and secret information. Monetary assets are put at risk through fines, direct expenses from a breach, or loss of stock value in the stock market. A reputation asset is what is lost when a company that has suffered a high-visibility breach is perceived poorly.

2.3.5 Exposure

Exposure is an instance of being subjected to losses or asset damage from a threat agent. A vulnerability exposes an organization to possible damages. Just as failure to have working fire sprinklers exposes an organization not only to fire danger but also losses of physical assets and potential liabilities, poor password management exposes an organization to password capture by threat agents who would then gain unauthorized access to systems and information.

2.3.6 Safeguard

A safeguard (or countermeasure) mitigates potential risk. It could be software, hardware, configurations, or procedures that eliminate a vulnerability or reduce the likelihood that a threat agent will be able to exploit a vulnerability.

The relationships between all of these concepts form a threat model: A threat agent creates a threat, which exploits a vulnerability that leads to a risk that can damage an asset and causes an exposure, which can be remedied through a safeguard or countermeasure.
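The relationships in this threat model can be made concrete with a small data-structure sketch. It assumes one common convention that the text does not prescribe: risk is scored as the likelihood of exploitation multiplied by the value of the asset at stake. The class names, the numbers, and the `apply_safeguard` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Vulnerability:
    name: str
    likelihood: float  # assumed chance of exploitation per year, 0.0 to 1.0


@dataclass
class Asset:
    name: str
    value_usd: float  # assumed loss if the asset is damaged


def risk(vulnerability: Vulnerability, asset: Asset) -> float:
    """Score risk as likelihood of exploitation times impact."""
    return vulnerability.likelihood * asset.value_usd


def apply_safeguard(vulnerability: Vulnerability,
                    reduction: float) -> Vulnerability:
    """Return the vulnerability with its likelihood cut by a fraction."""
    reduced = vulnerability.likelihood * (1.0 - reduction)
    return Vulnerability(vulnerability.name, reduced)


default_password = Vulnerability("default admin password", 0.5)
customer_data = Asset("customer data", 1_000_000.0)

risk_before = risk(default_password, customer_data)

# A safeguard (say, forcing a password change at first login) is assumed
# here to halve the likelihood of exploitation.
mitigated = apply_safeguard(default_password, 0.5)
risk_after = risk(mitigated, customer_data)
```

The sketch shows why a safeguard is described as mitigating risk rather than removing the threat agent: the agent and the asset are unchanged, and only the likelihood term moves.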

If no one and nothing had access to our computers and networks we would be done with this discussion and this would be a very short chapter. Threats exist because there is access (usually through a network a system is connected to, but also even when a system is air-gapped), so the next thing we need to include, when thinking about what security is, has to be access control. Readers who believe that embedded systems not connected to any network, or connected only to a network that is air-gapped from any network where threat agents are active, are safe from threat need only remember how Stuxnet was able to destroy 2000 uranium processing centrifuges that were air-gapped from the Internet.

2.4 Access Control

Fundamental to security is controlling how resources are accessed. A subject is an active entity that requests interaction with an object. An object is a passive entity that contains information. Access is the flow of information between a subject and an object. Access controls are security features that control how subjects and objects communicate and interact with each other.

2.4.1 Identification

All access control, and all of security for that matter, hinges on making sure with high confidence we know who is requesting access to a resource. If everyone can claim to be Barack Obama and gain access as such, there is little hope we will have much security in our systems. Identification is the method for ensuring that a subject is the entity it claims to be. Once an authoritative body has accepted the entity is who they say they are, that information is mapped to something that can be used to claim that identity such as a login user name or account number.

2.4.2 Authentication

A weakness in most systems is that identification is quite easy to spoof. Stronger defense against what are called social engineering attacks requires a second piece of the credential beyond identification such as password, passphrase, cryptographic key, personal identification number, anatomical attribute (biometric), token, or answers to a set of shared secrets.

2.4.3 Authorization

Authorization asks the question: Does the subject have the necessary rights and privileges to carry out the requested actions? If so, the subject is authorized to proceed.

2.4.4 Accountability

Keeping track by identity of what each subject did in the system is called accountability. It is used for forensics, to detect attacks, and to support auditing.
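The four access control steps above can be sketched together in a few lines. This is an illustrative toy, not a production design: the `AccessController` class, its fields, and the `operator` account are hypothetical, passwords are the only credential type shown, and the iteration count for `hashlib.pbkdf2_hmac` is an arbitrary example value.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass, field

PBKDF2_ROUNDS = 100_000  # example value only


@dataclass
class AccessController:
    # Identification: user name -> (salt, password hash)
    credentials: dict = field(default_factory=dict)
    # Authorization: user name -> set of (object, action) pairs
    permissions: dict = field(default_factory=dict)
    # Accountability: record of every access decision
    audit_log: list = field(default_factory=list)

    def enroll(self, user: str, password: str, rights: set) -> None:
        salt = os.urandom(16)
        digest = hashlib.pbkdf2_hmac(
            "sha256", password.encode(), salt, PBKDF2_ROUNDS)
        self.credentials[user] = (salt, digest)
        self.permissions[user] = set(rights)

    def authenticate(self, user: str, password: str) -> bool:
        """Check the claimed identity against its stored credential."""
        if user not in self.credentials:
            return False
        salt, expected = self.credentials[user]
        digest = hashlib.pbkdf2_hmac(
            "sha256", password.encode(), salt, PBKDF2_ROUNDS)
        return hmac.compare_digest(digest, expected)

    def request(self, user: str, password: str,
                obj: str, action: str) -> bool:
        """Authenticate, authorize, and log one access request."""
        allowed = (self.authenticate(user, password)
                   and (obj, action) in self.permissions.get(user, set()))
        self.audit_log.append((user, obj, action, allowed))
        return allowed


controller = AccessController()
controller.enroll("operator", "s3cret", {("pump", "read")})

read_ok = controller.request("operator", "s3cret", "pump", "read")
bad_password = controller.request("operator", "wrong", "pump", "read")
not_authorized = controller.request("operator", "s3cret", "pump", "write")
```

Every request is logged whether or not it succeeds, since denied attempts are often the most useful entries for forensics and attack detection.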

Clearly, different organizations have different needs when it comes to security. The national intelligence and defense organizations need to protect confidential, secret, and top secret information in separate buckets and people without sufficient clearance must never be allowed to see higher level information. A bank must not allow customer social security numbers to get to unauthorized individuals. These are examples of security policies.

2.5 Security Policy

A security policy is an overall statement of intent that dictates what role security plays within the organization. Security policies can be organizational policies, issue-specific policies, or system-specific policies, or a combination of all of these.

Security policies might:

• identify assets the organization considers valuable;

• state company goals and objectives pertaining to security;

• outline personal responsibility of individuals within the organization;

• define the scope and function of a security team;

• outline the organization’s planned response to an incident including public relations, customer relations, or government relations; and

• outline the company’s response to legal, regulatory, and standards of due care.

2.6 Why Cyber?

Cyber is short for cybernetics. It is used as an adjective defined as: of, relating to, or characteristic of the culture of computers, information technology, and virtual reality. That doesn’t really explain why it started to be used so heavily as a simple substitution for Information Technology or Computers or even Internet. The best answer might just be that it sounds cool and the media tend to drive the popular terminology. Whatever the reason, our subject is now described by the terms cyber-security, cyber-attacks, and cyber-threats.

2.7 Why is Security Important?

Security has become vitally important because of the critical nature of what our computers and networks do now compared to two or even one generation ago. For instance, they run the electric grid. On Aug. 14, 2003, shortly after 2 p.m. Eastern Daylight Time, a high-voltage power line in northern Ohio brushed against some overgrown trees and shut down—a fault, as it’s known in the power industry. The line had softened under the heat of the high current coursing through it. Normally, the problem would have tripped an alarm in the control room of FirstEnergy Corporation, an Ohio-based utility company, but the alarm system failed due to a software bug—a vulnerability. (Here the threat agent was Mother Nature not a hacker.) All told, 50 million people lost power for up to 2 days in the biggest blackout in North American history. The event contributed to at least 11 deaths and cost an estimated $6 billion. The entire power grid is vulnerable to determined hackers and a nationwide blackout—according to the Wall Street Journal quoting US intelligence sources, nation-states have penetrated the US power grid [3].

Similarly, computers control our water supply. EPA advisories have outlined the risk of cyber attack and its consequences. Our drinking water and wastewater utilities depend heavily on computer networks and automated control systems to operate and monitor processes such as treatment, testing, and movement of water. These industrial control systems (ICSs) have improved drinking water and wastewater services and increased their reliability. However, this reliance on ICSs, such as supervisory control and data acquisition (SCADA) embedded devices, has left the Water Sector like other interdependent critical infrastructures, including energy, transportation, and food and agriculture, vulnerable to targeted cyber attacks or accidental cyber events. A cyber attack causing an interruption to drinking water and wastewater services could erode public confidence, or worse, produce significant panic or public health and economic consequences. In factories producing chemicals, processing food, and manufacturing products, every valve, pump, and motor has the same sort of ICS processor control and monitoring embedded system each one of which is highly susceptible to cyber attack.

Transportation systems, air traffic control (ATC), airplanes themselves, trains, ships, and even our cars have dozens of processors in them, and many of those are in embedded systems with little or no physical protection from cyber attack. Havoc or disruption in our air, train, ship, or road-based transportation would have a significant impact on our economy and society. Transportation represents 12% of the GDP, is highly visible, and most citizens are dependent on it. If GPS alone went down, it would shut down most of the above systems. You just have to see what happens when one stoplight is out and multiply that by tens of thousands, just for automobile transportation.

The stock market and commodity trading systems use thousands of embedded systems to function. A major disruption to the stock market stops the flow of investment capital and thus could shut down the economy.

Besides these major systems, other segments of the economy that are heavily dependent on highly vulnerable embedded systems include financial systems including POS, e-commerce, and inventory. Twenty percent of the economy is health-related including hospitals, medical devices, and even insurance companies. While not life-critical, the entertainment segment is big business and an important aspect of life including movies, TV, cable systems, satellite TV, video games, music, audio, and e-books.

Increasingly, our homes and all the devices in them such as thermostats, washers, dryers, stoves, microwaves, and even newer toasters are all controlled by embedded systems; they are all part of the trend toward an IoT.

Perhaps the heaviest user of embedded systems to date is our military. Military systems from satellites, to weapons systems, to command & control (C&C) are heavily dependent on embedded processors. Recent hacks against Central Command, the Office of Personnel Management (not an embedded device attack), and hundreds not reported, point to the danger.

And of course, the Internet and World Wide Web themselves are controlled by millions of embedded devices. Designed to withstand a direct nuclear hit, the Internet is indeed very resilient, but cyber attacks were never envisioned or planned for, and no mechanisms to help defend against them were ever designed in. In fact, the entire Web is designed in ways that help attackers hide their tracks, dynamically change IP addresses, and anonymize their location and attack paths.

Let’s talk some more about the IoT because this is the future of embedded devices and represents a huge potential source of vulnerabilities. What is the IoT and what is the vision? Currently there are 9 billion interconnected devices, which is expected to reach 24 billion devices by 2020. This next major expansion of our computing base will be outside the realm of the traditional desktop and will sit squarely in the embedded device space. In the IoT paradigm, many of the objects that surround us will be on the network in one form or another. Radio frequency identification (RFID) and sensor network technologies will represent a major portion of the sensors that will drive the IoT expansion. Many new types of sensors will be part of the IoT including smart healthcare sensors, smart antennas, enhanced RFID sensors, RFID applied to retail, smart grid, and household metering sensors, smart traffic sensors, and many others. IoT will have major implications for privacy concerns and impressive new levels of big data storage, management, analytics and, yes, security. IoT will be such a large market opportunity that it is the latest “gold rush” and companies are rushing new products to market as fast as possible with very little attention being paid to innate security. The hackers must be rubbing their hands in anticipation of their coming opportunities.

2.8 Why Are Cyber Attacks so Prevalent and Growing?

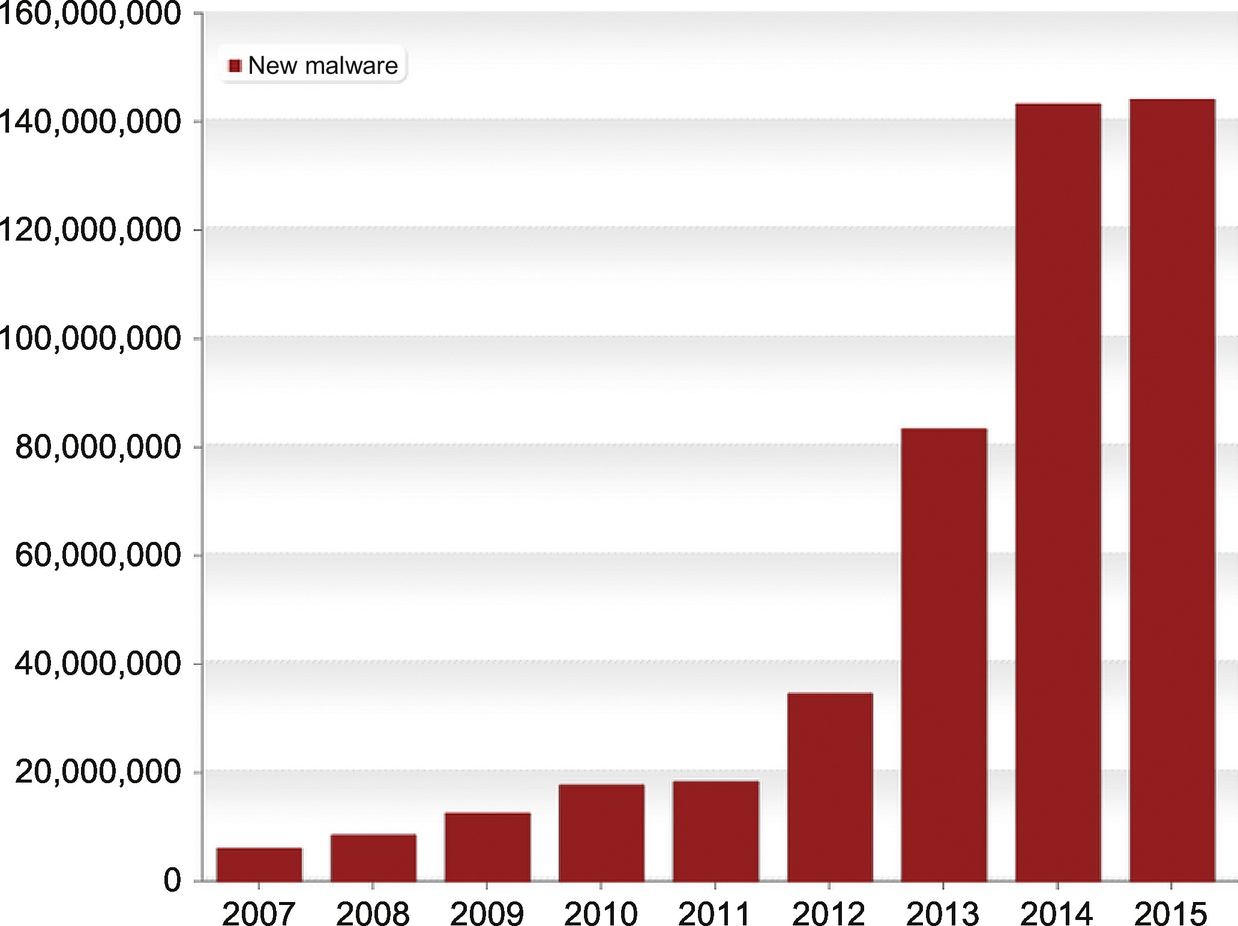

In 2014, companies reported 42.8 million detected attacks (the number unreported is probably at least as much) worldwide, a 48% year-over-year increase [4]. Some nation-states who are sometimes considered “adversaries” of the US have acknowledged they have large teams of cyber warriors [5]. But there are a lot of factors that have contributed to the exponential growth of cyber attacks. We identify a few of those here. The rapid growth and severity of incidents is stunning. Over 220,000 new malware variants appear every day according to AV-TEST. The number of new malware programs has rapidly grown over the last 10 years to exceed 140 million per year.

Source: AV-TEST, https://www.av-test.org.

2.8.1 Mistakes in software

Mistakes are a big part of how cyber attackers ply their trade. A programmer makes a mistake, that mistake becomes a bug in an application, and cyber attackers exploit the bug as a cyber vulnerability. Many mistakes in the software in the SCADA controllers, and the Windows-based systems commonly used to program them, were leveraged to destroy 2000 centrifuges in the 2008 Stuxnet attack. Similarly, it was mistakes in various aspects of the systems at Sony, Target, Home Depot, and hundreds of others that allowed those famous cyber attacks to proceed. Stuxnet, however, is in a new class of dangerous attacks designed to destroy physical assets from across the Internet. This category also includes the Shamoon attack on Saudi Aramco, which destroyed 30,000 desktop computers, and a late 2014 attack in which hackers struck an unnamed steel mill in Europe. They did so by manipulating and disrupting control systems to such a degree that a blast furnace could not be properly shut down, resulting in “massive” (though unspecified) damage.

According to Lloyd’s of London, businesses lose $400B per year due to cyber attacks, but because of potential lawsuits and liability issues much cybercrime goes unreported, so the true figure is presumably much higher than $400B. According to the US Department of Homeland Security, about 90% of security breaches originate from defects in software. The embedded processors in mobile devices and the applications built for them are becoming the most common vector of attack on companies. These mobile apps typically have poor security because developers rush to bring them to market before properly implementing security protocols. More on software bugs and vulnerabilities to cyber attack is discussed later in this chapter.

2.8.2 Opportunity scale created by the Internet

The sheer size of the Internet, the existence of malcontents, determined nation-state adversaries, and the financial gains now possible from cyber attacks, are all reasons for growth of cyber crime.

The size of the Internet as measured by domains is 271 M [6], and as measured by users is 3.17B.¹ There has been an exponential explosion of smart phones that are Internet connected. In 2015, there were 2B smart phone users worldwide and that is expected to grow to over 6B by 2020.¹ GE estimates the “Industrial Internet” has the potential to add $10 to $15 trillion² to global GDP over the next 20 years. Cisco states that its forecast for the economic value of the IoT is $19 trillion³ in the year 2020.

2.8.3 Changing nature of the adversaries

The first cyber-criminals were purely vandals. The modern equivalent of the previous generation’s graffiti is hacking into a site and scribbling on its home page. There is an aspect of Haves vs. Have-nots on the Internet where the have-nots can be across borders and oceans and still attack the rich people in the rich countries. Thus phase two of cyber-crime became identity theft and wholesale thefts of credit cards which are re-sold, as in the Target POS credit card theft. This phase also included a rash of cyber-ransom attacks against individuals and small businesses. Meanwhile, the actions of nation-states are increasing steadily, where the aim is to steal secrets and to obtain an advantageous posture for a future action that will include cyber-war as part of an overall strategy. These nation-states steal identities of government employees and contractors in order to blackmail individuals into turning over secrets. They are behind Flame, an attack against the embedded devices in personal computers (e.g., keyboard, microphone, camera) whose mission is espionage and which is considered by many to be the most complex malware ever found. The US’s main adversaries have penetrated critical infrastructure and have, it is believed, left behind mechanisms allowing them to take action against that infrastructure when it suits their overall aims.

2.8.4 Financial gain opportunities

The Target attack, enabled by the HVAC-contractor vulnerability, ultimately compromised the POS systems where consumers’ credit cards are swiped. The foreign organized crime hackers who perpetrated the attack were highly enriched by their ability to resell those credit cards on the black market [7]. Those stolen cards were being offered at “card shops” starting at $20 each, up to more than $100. Between 1 and 3 million of those credit cards were ultimately sold on the black market, raising an estimated $53.7 million for the hackers.⁴ The attack cost Target $148 million, and cost credit card issuing institutions $200 million. The CEO lost his job and company profits fell 46% the quarter after the breach.

2.8.5 Ransomware

Ransomware is malware that locks your keyboard or computer to prevent you from accessing your data until you pay a ransom, usually demanded in Bitcoin. The digital extortion racket is not new (it has been around since about 2005), but attackers have greatly improved on the scheme with the development of ransom cryptoware, which, instead of simply locking your keyboard or computer, encrypts your files so that they can be recovered only with a private key that only the attacker possesses.

It is not the case that ransomware just affects desktop machines or laptops; it also targets mobile phones and if it became more lucrative, the IoT would be next (think: control of your Nest thermostat during very cold weather).

Symantec gained access to a C&C server used by the CryptoDefense malware and got a glimpse of the hackers’ haul based on transactions for two Bitcoin addresses the attackers used to receive ransoms. Out of 5700 computers infected with the malware in a single day, about 3% of victims appeared to shell out for the ransom. At an average of $200 per victim, Symantec estimated that the attackers hauled in at least $34,000 that day. Extrapolating from this, they would have earned more than $394,000 in a month. This was based on data from just one command server and two Bitcoin addresses; the attackers were likely using multiple servers and Bitcoin addresses for their operation.
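The single-day estimate follows directly from the three figures quoted above, as the short calculation below shows. Variable names are ours; the monthly figure is taken as reported and is not recomputed here, since the text does not give the assumptions behind that extrapolation.

```python
# Figures quoted in the text for one command server on a single day.
infected_per_day = 5700
paying_percent = 3          # about 3% of victims paid
average_ransom_usd = 200

# Integer arithmetic avoids floating-point rounding in the percentage.
paying_victims = infected_per_day * paying_percent // 100
daily_haul_usd = paying_victims * average_ransom_usd

print(f"{paying_victims} paying victims, ${daily_haul_usd:,} in one day")
```

This gives 171 paying victims and $34,200, consistent with the "at least $34,000" figure.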

Conservatively, at least $5 million is extorted from ransomware victims each year. But forking over funds to pay the ransom doesn’t guarantee attackers will be true to their word and victims will be able to access their data again. In many cases, Symantec reports, this doesn’t occur.

2.8.6 Industrial espionage

Worldwide, around 50,000 companies a day are thought to come under cyberattack, with the rate estimated as doubling each year. One of the means perpetrators use to conduct industrial espionage is exploiting vulnerabilities in computer software. Malware and spyware, as tools for industrial espionage, are designed to transmit digital copies of trade secrets, customer plans, future plans, and contacts. Newer forms of malware surreptitiously switch on mobile phone cameras and recording devices and, in some cases, monitor every keystroke at the keyboard.

Operation Aurora was a series of cyber attacks conducted by advanced persistent threats such as the Elderwood Group based in Asia [8]. The attack was aimed at dozens of organizations, including Adobe Systems, Juniper Networks, Rackspace, Yahoo, Symantec, Northrop Grumman, Morgan Stanley, and Dow Chemical. The primary goal of the attack⁵ was to gain access to and potentially modify source code repositories at these high tech, security, and defense contractor companies. The Source Code Management systems were found to be wide open even though these were the crown jewels of these companies, in many ways more valuable than any financial or personally identifiable data that they may have and spend so much time and effort protecting.

2.8.7 Transformation into cyber warfare

Since 2010, when the cyberweapon Stuxnet was finally understood, and the damage a cyber attack could inflict was fully absorbed, it has become clear that cyberwarfare is possible. At the very least, if not used as a weapon directly, cyber attacks were now part of any adversarial nation’s foreign policy. The target most frequently cited is our critical infrastructure which in general is heavily dependent on embedded systems such as programmable logic controllers (PLCs) and SCADA controllers. This is exactly what Stuxnet targeted. And this is why this chapter is so important.

2.9 Why Isn’t Our Security Approach Working?

Why hasn’t what we’ve been doing been working to stop or even slow the relentless cyber attacking? We have really smart people; the inventiveness of our cyber security people is legendary. We have created firewalls, intrusion detection systems, anomaly sleuthing, virus scanners, schemes to make an application morph itself into a different application to fool attackers, and every possible piece of software protection these brilliant people can invent, and still the attack frequency and seriousness go up year after year.

We are living in a time of inherent insecurity and there is nothing that seems to be working to slow things down, much less fix the problem. One reason even experts seem to be throwing up their hands is the assumption that our computer systems are so complex that it is impossible to design them without vulnerabilities to cyber attacks.

Therefore, the best we can do, it seems, is to: build virtual walls around our networks using firewalls and intrusion detection systems, then constantly run virus scanning tools on every computer on those networks to look for known attack signatures, patch our operating systems, applications, firmware, and even those security systems (they have bugs too) once a week or so, and then what, pray?

Perimeters are known to be very porous. In fact, hackers like to joke that enterprise networks look like some kind of candy: crunchy on the outside but soft and chewy on the inside. That’s because if they do penetrate the perimeter they are in and once in, they can pretty much move through the network and operate at will. In fact, one of the problems has become those perimeter systems themselves. They tend to run at a very high privilege level and because they are very large they have a corresponding number of bugs and those bugs are the entry point for the hackers. Patching is a particularly ineffective strategy for addressing cyber physical and other embedded systems because they can be much harder to reach, they tend to be long-lived and specialized, some are not on a network, and they substantially increase the attack surface of the environment with more patches to deliver and more opportunities for failure to protect.

It is important to remember that signature scanning and patching are only done after an attack has been identified and isolated. This is like waiting to lock your doors until after your neighbors have had a theft. What is most damning about patching’s effectiveness is that we have found that common vulnerabilities and exposures (CVEs) [9] that were found between 1999 and 2011 represented 75% of the exploits reported in 2014. That’s as much as 15 years since a vulnerability was reported without being patched [10].

2.9.1 Asymmetrical

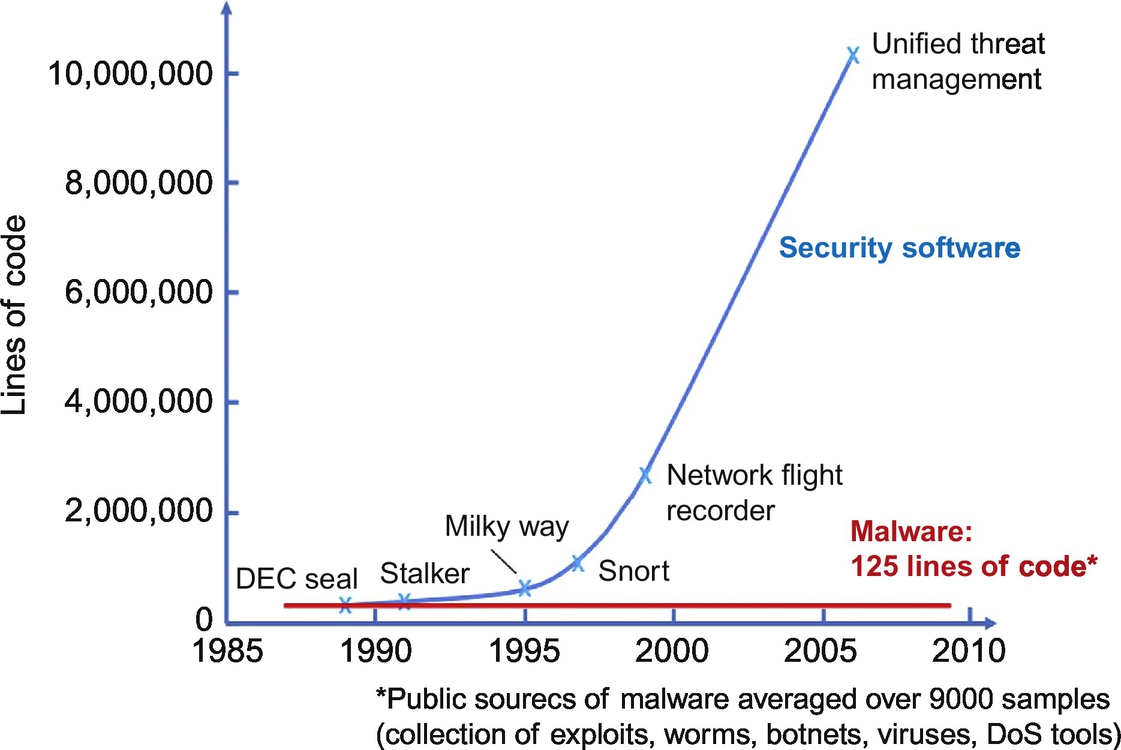

A DARPA review of 9000 distinct pieces of malware in 2010 found that the average size of each independent attack component was only 125 lines of code. Meanwhile, the defensive systems enterprises use to protect their systems, including intrusion detection, continue to grow, and some of these systems have reached 10 million lines of code. That is so complex (and these systems by nature have to have privileged access to the systems they are protecting) that they have become a desirable target for attack. Like all software, the larger it is the more bugs it has. Steve Maguire in his book Writing Solid Code found that across all deployed software, regardless of application domain or programming language, one finds between 15 and 50 bugs per thousand lines of code (abbreviated KLOC). Rough estimates suggest that 10% of all bugs are potential cyber vulnerabilities. Given this, a defensive system with 10 million lines of code has 150,000 bugs in the best case and potentially 15,000 security vulnerabilities that could give an attacker entry into the network and all the systems the defensive system was designed to protect.
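The arithmetic behind this estimate can be reproduced with a minimal sketch. The function name `estimate_defects` and its parameters are illustrative only; the inputs (15 to 50 bugs per KLOC, 10% of bugs exploitable, 10 million versus 125 lines of code) are the figures quoted above.

```python
def estimate_defects(lines_of_code, bugs_per_kloc, vulnerable_fraction=0.10):
    """Return (estimated bugs, estimated vulnerabilities) for a code base.

    Hypothetical helper: it only multiplies out the rates quoted in the text.
    """
    kloc = lines_of_code / 1000
    bugs = kloc * bugs_per_kloc
    return round(bugs), round(bugs * vulnerable_fraction)


# Defensive system of 10 million lines, at both ends of the quoted bug range.
defensive_low = estimate_defects(10_000_000, 15)
defensive_high = estimate_defects(10_000_000, 50)

# Average attack component of 125 lines, at the low end of the range.
malware = estimate_defects(125, 15)

print("defensive system:", defensive_low, "to", defensive_high)
print("attack component:", malware)
```

Even at the low end of the range, the defender carries tens of thousands of potential entry points, while the attacker's component is small enough to be nearly bug-free.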

Size of malware compared to the increasing complexity of defensive software.

Malware over almost a 25-year span has remained at about 125 lines of code, measured over 9000 samples. But in that same period, defensive systems have become increasingly complex, to the point where they exceed 10 million lines of code. Since studies have shown that there are consistently at least 15 bugs per KLOC, the attackers’ simplicity and the defenders’ complexity are diverging. Figure credit DARPA.

2.9.2 Architectural flaws

The crux of the matter is that we are losing the cyber war mostly because the architecture of our computer processors has no support for cybersecurity, so it is practically child’s play to attack them and have them do the bad guys’ bidding. No matter what virus protection, firewalls, or number of patches we apply, software is written by people and people cannot write perfect software, so vulnerabilities will always exist in any reasonably complex software. Bad guys are smart, they are patient, and they can win with very simple attack software.

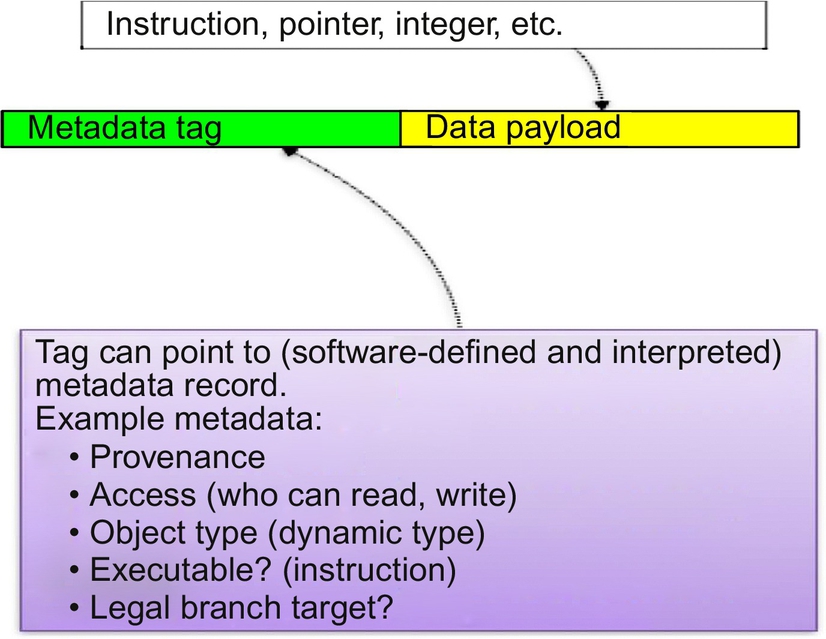

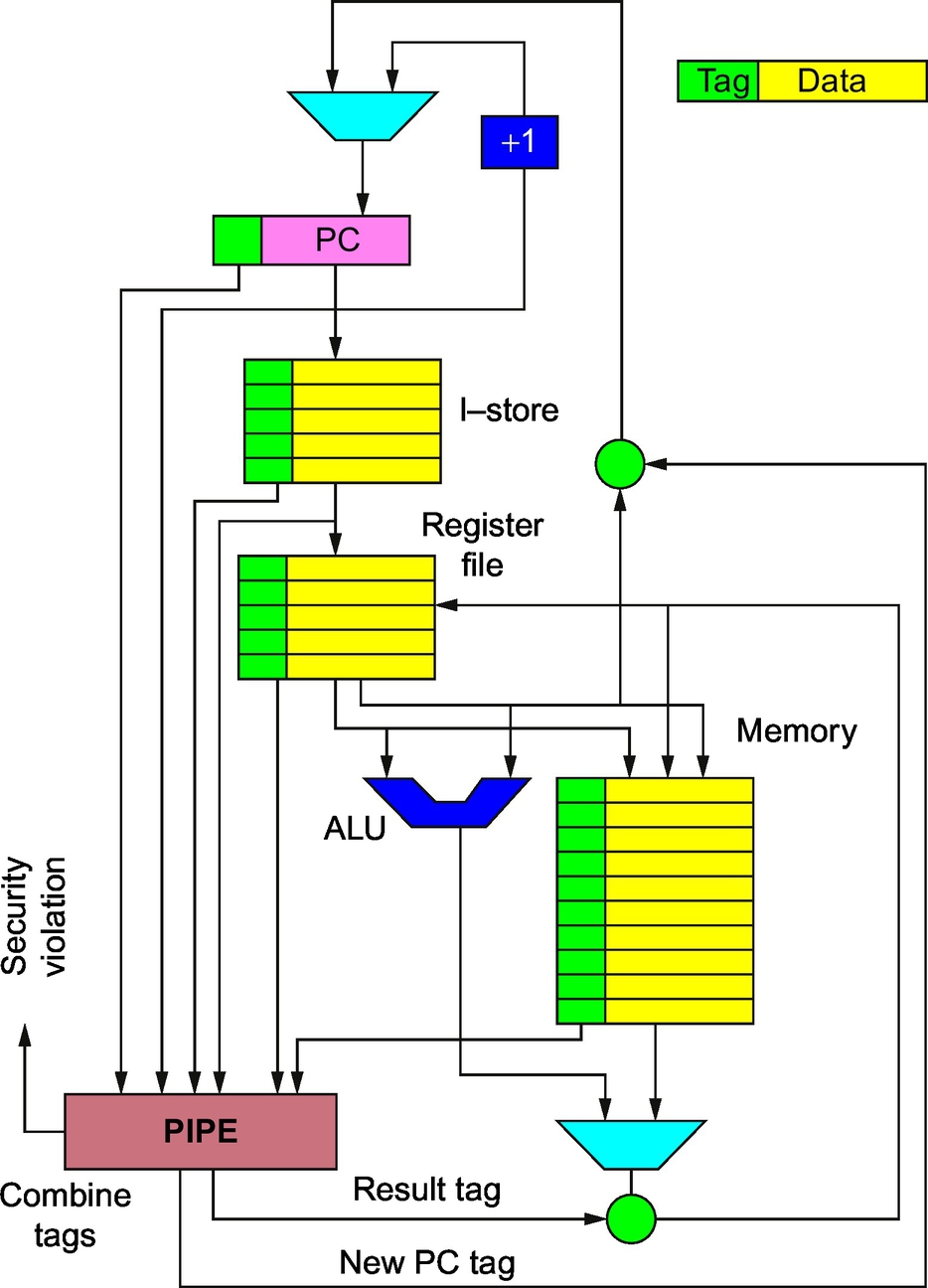

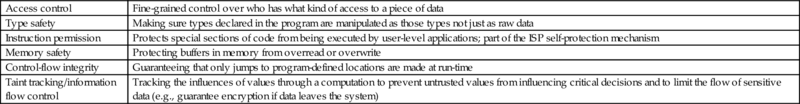

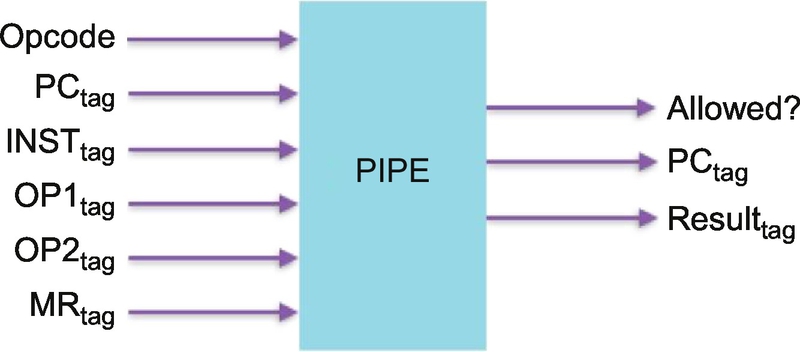

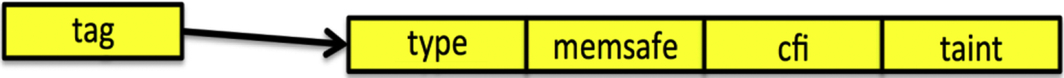

Our legacy processor architectures are built around what many in the cyber security community call “Raw Seething Bits.” That is their description of what it means to have a single undifferentiated memory, where there is no indication what each word in that memory is. There is no way to tell (and, most importantly, no way for the processor to tell) whether a word is an instruction, an integer, or a pointer to a block of memory storing data. There is an important reason processors started out having a single undifferentiated memory: it was simple to build. Simplicity mattered when logic was performed by vacuum tubes or, a bit later, by just a few really expensive transistors.
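A small illustration of what undifferentiated memory means in practice: the same four bytes can be read as an integer (or an address), as a floating-point number, or as text, and nothing in the bytes says which reading is intended. This is a sketch using Python's `struct` module to mimic a 32-bit little-endian word; the byte values are arbitrary and chosen only for illustration.

```python
import struct

# One 32-bit word of "memory". Nothing in these bytes records their type.
word = bytes([0x48, 0x69, 0x21, 0x00])

# Interpretation 1: an unsigned integer (or equally, a pointer value).
as_unsigned = struct.unpack("<I", word)[0]

# Interpretation 2: an IEEE 754 single-precision float.
as_float = struct.unpack("<f", word)[0]

# Interpretation 3: a NUL-terminated ASCII string.
as_text = word.rstrip(b"\x00").decode("ascii")

print(as_unsigned)  # 2189640
print(as_float)     # a tiny denormal number
print(as_text)      # Hi!
```

The processor will happily apply any of these interpretations, including "instruction to execute", to whatever word the program counter or a pointer happens to reach.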

That architecture, still in use in all our computing devices whether servers, laptops, or embedded devices, is Von Neumann’s 1945 stored-program computer (see Fig. 1). Having worked out the simplest architecture that worked, the industry started to perfect it, made transistors smaller and smaller, and ultimately started to see a trend that became known as Moore’s Law take hold. Moore’s Law stated that every 18 months the number of transistors occupying the same area would double. In fact the reason for the explosion of the Internet, mobile devices, the coming IoT, and information processing in general, is Moore’s Law leveraging this simple architecture and driven by a mantra of smaller, cheaper, faster. Moore’s Law has given us an iPhone more powerful than all computers NASA owned when it landed men on the moon. Transistors are now incredibly cheap, and while Moore’s Law may have reached its limit, the 2000 transistors of the first microprocessor in 1971 have become 5.5 billion transistors in the current model. Ironically, it’s the connectedness of everything enabled by Moore’s Law that has made cyber threats so prevalent and so serious.

In 1945, Von Neumann and others described a simple but powerful processor architecture with a single internal memory. This architecture continues to dominate the architecture of processors in billions of devices today. This single memory stores instructions, data, pointers, and all the data structures needed by an application, with no way to tell what is what. It is this memory, sometimes called “raw, seething bits,” that prevents the processor from cooperating with the program to enforce security. Figure under the Creative Commons Attribution-Share Alike 3.0 by Kapooht.

As our processors became much more powerful and sophisticated, people began to trust them to protect increasingly valuable things. As processor power made more things possible, people’s expectations also grew and so software size has grown exponentially to keep up. All the while programming languages like C and C++ continued to provide direct access to the “raw, seething bits” of memory without manifest identity, types, boundaries, or permissions to help explain what each word in memory was for. Even Java (12 years newer than C++ and 23 years newer than C), which was designed to be safer and more helpful to the programmer, retains the C/C++ risks because it builds on libraries written in C and C++, and that unsound foundation leaves it vulnerable to bugs and attacks. This has left everything up to the programmer. When programming in C/C++/Java, the default is unsafe. As we have seen, a buffer when allocated does not protect itself from being overwritten; the programmer always has to do extra work (write more code) to make it safe. This is not so much the fault of the programming language as it is of things below the level of the language the programmer is writing in. It is in this undefined behavior of the language that most of the problems (which become cyber vulnerabilities) arise.
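The point about buffers not protecting themselves can be illustrated with a toy model. The sketch below simulates a flat memory in which an 8-byte input buffer sits directly in front of a one-byte privilege flag. The layout and the names `unchecked_copy` and `checked_copy` are assumptions made for illustration; Python itself is memory-safe, so the unchecked copy only mimics what a C-style copy without a length check would do.

```python
BUF_START, BUF_SIZE, FLAG_ADDR = 0, 8, 8  # hypothetical memory layout


def make_memory():
    """Flat memory: an 8-byte input buffer followed directly by a 1-byte flag."""
    return bytearray(BUF_SIZE + 1)  # all zeros, so the flag starts as "not admin"


def unchecked_copy(memory, data):
    """Mimics a C-style copy with no bounds check: every byte of data is written."""
    for offset, byte in enumerate(data):
        memory[BUF_START + offset] = byte


def checked_copy(memory, data):
    """The extra work the programmer must remember to do: validate the length."""
    if len(data) > BUF_SIZE:
        raise ValueError("input exceeds buffer size")
    unchecked_copy(memory, data)


memory = make_memory()
unchecked_copy(memory, b"AAAAAAAA\x01")  # 9 bytes into an 8-byte buffer
print("flag after unchecked copy:", memory[FLAG_ADDR])  # the flag is now 1
```

Nothing in the memory itself distinguishes the buffer from the flag, so the only defense is the length check that `checked_copy` adds by hand.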

As cybersecurity started to become a concern, there were important constraints that had to be enforced in every program by every programmer to avoid cyber attack. But those vital constraints would only be enforced when every programmer got everything right on every single line of code. Any single mistake could become a vulnerability and sink the ship.

2.9.3 Software complexity—many vulnerabilities

NIST maintains a list of the unique software vulnerabilities (see https://nvd.nist.gov). Across all the world’s software, whenever a vulnerability is found that has not been identified anywhere before, it is added to this list. As of this writing, that list was approaching 76,000 unique vulnerabilities. This means attackers have 76,000 things they can leverage to compromise the systems they attack. Vulnerabilities are how attackers get in. Bugs (or weaknesses) are the flaws in how software is written that create those vulnerabilities.

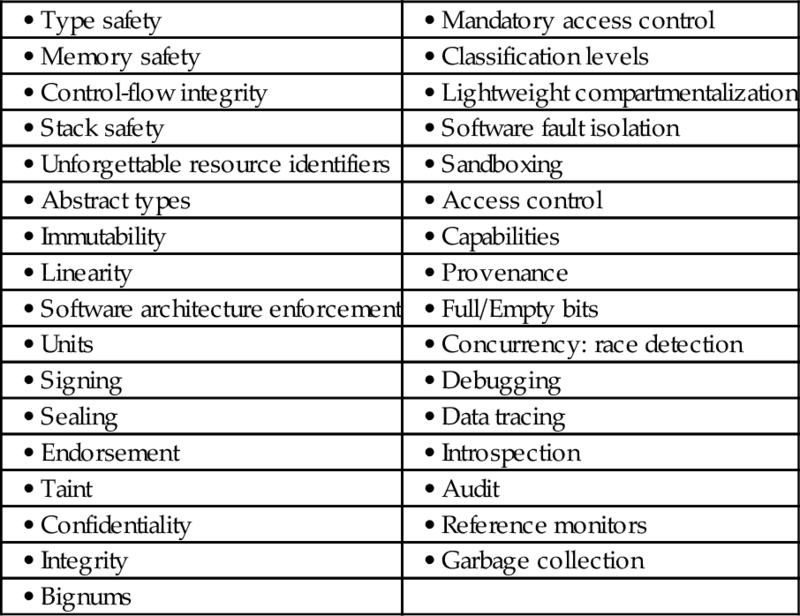

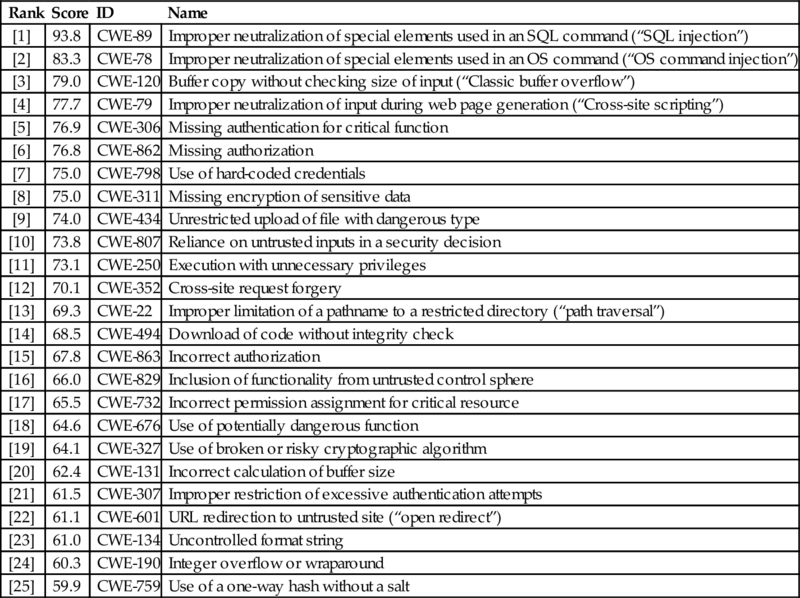

The common weakness enumeration list contains a rank ordering of software errors (bugs) that can lead to a cyber vulnerability. Top 25 most dangerous software errors is a list of the most widespread and critical errors that can lead to serious vulnerabilities in software. They are often easy to find, and easy to exploit. They are dangerous because they will frequently allow attackers to completely take over the software, steal data, or prevent the software from working at all.

| Rank | Score | ID | Name |
| --- | --- | --- | --- |
| [1] | 93.8 | CWE-89 | Improper neutralization of special elements used in an SQL command (“SQL injection”) |
| [2] | 83.3 | CWE-78 | Improper neutralization of special elements used in an OS command (“OS command injection”) |
| [3] | 79.0 | CWE-120 | Buffer copy without checking size of input (“Classic buffer overflow”) |
| [4] | 77.7 | CWE-79 | Improper neutralization of input during web page generation (“Cross-site scripting”) |
| [5] | 76.9 | CWE-306 | Missing authentication for critical function |
| [6] | 76.8 | CWE-862 | Missing authorization |
| [7] | 75.0 | CWE-798 | Use of hard-coded credentials |
| [8] | 75.0 | CWE-311 | Missing encryption of sensitive data |
| [9] | 74.0 | CWE-434 | Unrestricted upload of file with dangerous type |
| [10] | 73.8 | CWE-807 | Reliance on untrusted inputs in a security decision |
| [11] | 73.1 | CWE-250 | Execution with unnecessary privileges |
| [12] | 70.1 | CWE-352 | Cross-site request forgery |
| [13] | 69.3 | CWE-22 | Improper limitation of a pathname to a restricted directory (“path traversal”) |
| [14] | 68.5 | CWE-494 | Download of code without integrity check |
| [15] | 67.8 | CWE-863 | Incorrect authorization |
| [16] | 66.0 | CWE-829 | Inclusion of functionality from untrusted control sphere |
| [17] | 65.5 | CWE-732 | Incorrect permission assignment for critical resource |
| [18] | 64.6 | CWE-676 | Use of potentially dangerous function |
| [19] | 64.1 | CWE-327 | Use of broken or risky cryptographic algorithm |
| [20] | 62.4 | CWE-131 | Incorrect calculation of buffer size |
| [21] | 61.5 | CWE-307 | Improper restriction of excessive authentication attempts |
| [22] | 61.1 | CWE-601 | URL redirection to untrusted site (“open redirect”) |
| [23] | 61.0 | CWE-134 | Uncontrolled format string |
| [24] | 60.3 | CWE-190 | Integer overflow or wraparound |
| [25] | 59.9 | CWE-759 | Use of a one-way hash without a salt |

Rank ordered by a score based on factors including technical impact, attack surface, and environmental factors as determined by NIST and MITRE who maintain the list. Each item in the list has its ID listed with it for cross-reference to the list maintained at http://cwe.mitre.org.
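To make the top-ranked entry concrete, the following sketch contrasts a query built by string concatenation (CWE-89) with a parameterized query, using Python's built-in `sqlite3` module and an in-memory database. The table, column names, and sample data are hypothetical and exist only for this illustration.

```python
import sqlite3


def make_db():
    """Create a throwaway in-memory database with two sample users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def lookup_unsafe(conn, name):
    """Vulnerable: user input is pasted directly into the SQL text."""
    query = "SELECT secret FROM users WHERE name = '" + name + "'"
    return [row[0] for row in conn.execute(query)]


def lookup_safe(conn, name):
    """Safer: the input is passed as a bound parameter, never parsed as SQL."""
    query = "SELECT secret FROM users WHERE name = ?"
    return [row[0] for row in conn.execute(query, (name,))]


conn = make_db()
payload = "x' OR '1'='1"  # classic injection string

print("unsafe:", lookup_unsafe(conn, payload))  # leaks every user's secret
print("safe:  ", lookup_safe(conn, payload))    # matches no user
```

The bug is a single line of string handling, which is why weaknesses like this are described as easy to find and easy to exploit.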

Layer upon layer of software increases the “attack surfaces” that attackers can probe for weaknesses. Every layer is subject to the same 15–50 bugs per KLOC and the corresponding exploitable vulnerabilities. This has led many to conclude that while perimeters are absolutely necessary to keep the vandals and script-kiddies out, they are far from sufficient to keep out the determined and sophisticated hackers that nation-states now deploy.

2.9.4 Complacence, fear, no regulatory pressure to act

Another factor making today’s security strategies not work is the simple fact that it is too easy to do nothing. There is the erroneous thinking that systems that are air-gapped are safe (the Stuxnet SCADA controllers were air-gapped). There is fear, and the hope that someone else gets hacked instead of you. In almost all situations for embedded systems there are few if any regulations that force one to act, combined with a competitive environment that makes one not want to be first because acting cuts into profit margins. The regulations we do have are mostly advisory in nature and have no teeth to require industry to act. The situation is heading for a major catastrophe before serious action is taken.

2.9.5 Lack of expertise

Finally, security is not working for a huge number of organizations—especially smaller ones—simply because these organizations are not experts at IT, and expecting them to be on the cutting edge of IT security is totally unreasonable. Even if they outsource their IT function to a supposed expert, the IT outsourcing companies that service small organizations are themselves small businesses that cannot keep pace with the rapid change in the cybersecurity domain.

2.10 What Does This Mean for IoT Security?

The IoT is an extremely fast growing area with many established companies building products. But many of the companies are small startups trying to leverage this brand new market into a business. Projections by Cisco and others predict 50 billion connected IoT devices by 2020. There are lots of old products that are getting this “new” name IoT such as routers and home devices. New or old, companies participating in IoT have very little time to get a product to market so they do whatever they have to, to get their first products to market in the least amount of time. Products in the IoT space have to be inexpensive, so margins are thin and all participants—large and small companies—cut corners and build products that are powered by specialized computer chips made by companies such as Broadcom, Qualcomm, and Marvell. These chips are cheap, and the profit margins slim. Aside from price, the way processor manufacturers differentiate themselves from each other is by features and bandwidth. They typically put a version of the Linux operating system onto the chips, as well as a bunch of other open-source and proprietary components and drivers. They do as little engineering as possible before shipping, and there’s little incentive to update their systems until absolutely necessary.

The system manufacturers, usually original design manufacturers (ODMs) who often don’t get their brand name on the finished product, choose a chip based on price and features, and then build their IoT device, such as a router or server. They tend not to do a lot of engineering either. The brand-name company on the box adds a user interface and perhaps a few new features, runs batteries of tests to make sure everything works, and then it is done, too.

The problem with this process is that no one entity has any time, margin room (or maybe any cash at all), expertise, or even ability to patch the software once it is shipped. The chip manufacturer is busy shipping the next version of the chip. The ODM is busy upgrading its product to work with this next chip. Maintaining the older chips and products is just not a priority. But the situation with the software is worse.

Much of the software is old, even when the device is new. It is common in home routers that the software components are 4–5 years older than the device. The minimum age of the Linux operating system is around 4 years. The minimum age of the Samba file system software is 6 years. They may have had all the security patches applied, but most likely not. No one has that job or has made it a priority. Some IoT components are so old that they’re no longer being patched. This patching is especially important because security vulnerabilities are found more easily as systems age.

To make matters worse, it’s often impossible to patch the software or upgrade the components to the latest version. Often, the complete source code isn’t available. They’ll have the source code to Linux and any other open-source components but many of the device drivers and other components are just “binary blobs” with no source code at all. That’s the most pernicious part of the problem: one can’t patch code that is just binary.

Even when a patch is possible, it’s rarely applied. Users usually have to manually download and install relevant patches. But since users never get alerted about security updates, and don’t have the expertise to manually administer these devices, it doesn’t happen. Sometimes the ISPs have the ability to remotely patch routers and modems, but this is also rare.

The result is hundreds of millions of devices that have been sitting on the Internet, unpatched and insecure, for the last 5–10 years. Devices that have not been on the Internet are being put on it, and hackers are taking notice. When TrackingPoint enabled their self-aiming rifles for Wi-Fi, hackers wasted no time in hacking in and re-aiming the rifle and firing it remotely [11]. Similarly, when the skateboard company Boosted brought a remote-controlled powered skateboard to market and failed to encrypt the Bluetooth communications, hackers simply took control and could make a board going 20 miles an hour suddenly stop, ejecting its rider [12]. The DNS Changer malware attacks home routers as well as computers. In a South American country, 4.5 million DSL routers were compromised for purposes of financial fraud. Last month, Symantec reported on a Linux worm that targets routers, cameras, and other embedded devices.

This is only the beginning. What we will see soon are some easy-to-use hacker tools, and once we do, the script kiddies and vandals will get into the game.

All the new IoT devices will only make this problem worse, as the Internet—as well as our homes and bodies—becomes flooded with new embedded devices that will be equally poorly maintained and unpatchable. Still, routers and modems pose the biggest problem because they are: between users and the Internet so turning them off is usually not an option; more powerful and more general in function than other embedded devices; the one 24/7 computing device in the house, and therefore a natural place for lots of new features.

We were here before with personal computers, and we fixed the problem by disclosing vulnerabilities which forced vendors to fix the problem. But that approach won’t work the same way with embedded systems. The scale is different today: more devices, more vulnerability, viruses spreading faster on the Internet, and less technical expertise on both the vendor and the user sides. Plus, as we have shown, we now have vulnerabilities that are impossible to patch. We have a formula for disaster: huge numbers of devices all connected to the Internet, more functionality in devices with a lack of updates, a pernicious market dynamic that has inhibited updates and prevented anyone else from updating, and a sophisticated hacker community chomping at the bit for this green field opportunity.

Fixing this has to become a priority before a disaster. We need better designs that start with security rather than adding it on the third or fourth revision, or never. Automatic and very secure update mechanisms are essential as well, now that all these devices are going to be connected to the Internet.

2.11 Attacks Against Embedded Systems

Embedded systems face distinct challenges separate from networked IT systems. Conventional protective strategies are insufficient to mitigate current cyber vulnerabilities. Most organizations do not currently have sufficient embedded system expertise to provide long-term vulnerability mitigation against the adaptive threat we are seeing. While there is no silver-bullet solution, there is a broad-based set of immediate actions that can significantly mitigate embedded system cyber risk above and beyond basic hygiene:

1. Employ digital signatures and code signing to ensure software integrity of new applications and all updates. Require future systems to cryptographically verify all software and firmware as it is loaded onto embedded devices.

2. Mandate inclusion of software assurance tools/processes and independent verification and validation using appropriate standards as part of future system development. Use best commercial code tools and languages available.

3. Employ hardware/software isolation and randomization to reduce embedded cyber risk and improve software agility even for highly integrated systems.

4. Improve and build organizational cyber skills and capabilities for embedded systems.

5. Protect design/development information (e.g., source code repositories and revision control systems). Implement security procedures sufficiently early that protection against exfiltration and exploitation is consistent with the eventual criticality of the fielded system.

6. Develop situational awareness hardware and analysis tools to establish a baseline for your embedded operational patterns such that this will inform the best mitigation strategies.

7. Develop and deploy continuously verifiable software techniques.

8. Develop and deploy formal-method software assurance tools and processes.
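The first recommendation, verifying software before it is loaded, can be sketched as follows. Real code signing uses asymmetric signatures, so that the device holds only a public key; because Python's standard library has no asymmetric signature verification, this sketch substitutes a keyed HMAC-SHA256 tag as a stand-in. The function names, the key, and the firmware bytes are all hypothetical.

```python
import hashlib
import hmac


def sign_image(key: bytes, image: bytes) -> bytes:
    """Vendor side: compute an authentication tag over the firmware image.

    Assumption: HMAC stands in for a real digital signature in this sketch.
    """
    return hmac.new(key, image, hashlib.sha256).digest()


def verify_and_load(key: bytes, image: bytes, tag: bytes) -> bool:
    """Device side: refuse to load any image whose tag does not verify."""
    expected = sign_image(key, image)
    if not hmac.compare_digest(expected, tag):  # constant-time comparison
        return False  # reject: image was modified or tagged with another key
    # A real loader would hand the verified image to the boot or update path.
    return True


key = b"example-device-key"           # hypothetical provisioning secret
firmware = b"\x7fFIRMWARE v1.2 payload"
tag = sign_image(key, firmware)

print(verify_and_load(key, firmware, tag))            # True: untouched image
print(verify_and_load(key, firmware + b"\x90", tag))  # False: tampered image
```

The design point is that the check happens on the device at load time, so an image altered anywhere along the supply or update path is rejected regardless of how it arrived.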

In the following sections, we will examine 11 specific types of attacks against embedded systems which could be mitigated using the above list of recommendations:

1. Stuxnet, the first true cyber-weapon, created by nation-states to attack and destroy physical equipment half a world away. This was a sophisticated cyber attack against SCADA controllers driving centrifuges used for uranium enrichment. Examining how this attack worked is extremely helpful in thinking about how other embedded devices might be attacked and how to prevent it. Stuxnet destroyed the misconception of the myriad people who had been saying “we are air-gapped and so not susceptible like everyone else is.”

2. Flame, Gauss, and Duqu are all derivatives of Stuxnet possibly made by different nation-states, and while not as focused on embedded devices per se, they are worth understanding in terms of their scale and sophistication. Again, knowing how this malware works is helpful in preventing new embedded devices from being susceptible to the next attack.

3. Routers are the backbone of any network including the Internet itself. A router is a fairly simple embedded device, yet the entire network is lost if a router is compromised. It was thought that routers from major market-leading vendors were safe but once again, this overconfidence has proven to be unwarranted.

4. Aviation has embedded devices both on-board the plane and as part of the ground-based control infrastructure, any part of which if compromised could represent catastrophe. Some very simple hacks have been discovered in spite of high degrees of concern and diligence.

5. Automotive not only has a multitude of embedded devices, but it also represents one of the most complex systems of systems, with some vehicles reaching 100 million lines of source code. Given that Steve Maguire reports [13] that there are at least 15 bugs per KLOC in deployed systems and approximately 10% of those can be turned into cyber vulnerabilities, these hugely complex vehicles could have 150,000 potential vulnerabilities.

6. Medical devices are embedded systems frequently with a life-critical mission. Some are embedded themselves into the human body. Security of these devices is critical for life safety of course but also to prevent the panic that would inevitably ensue should cyber hacks occur. Well, they have occurred and quite publicly.

7. ATM jackpotting is a hack demonstrated at a Black Hat conference. ATMs, like a lot of today’s devices and machines, run fairly standard computers and operating systems internally and, like these other devices, allow updates over a network. Software unfortunately has flaws, and as security vulnerabilities are found in the ATM software, the machines must be updated or they risk being hacked in increasingly creative ways, such as making an ATM spew out money like a slot machine that hit the jackpot.

8. Military is full of embedded systems, from weapons systems to major platforms such as planes, tanks, ships, and submarines. An attack on these systems has not only loss-of-life implications but national security ones as well.

9. Infrastructure includes the electric grid, water supply, communications, and transportation, to name just a few. All of these types of infrastructure depend heavily on embedded systems to operate. Yet many of these infrastructures are quite old and made up of legacy (and highly insecure) embedded systems. An attack on any of these infrastructures could cripple the country and is thus a national security issue in itself.

10. Point-of-sale (POS) systems are included because when it comes to purely making money off of hacking, they are a favorite target of cyber attackers, as shown in the Target, Home Depot, TJX, BJs, and many other breaches. These are among the most prevalent consumer-facing embedded devices, trusted to handle highly sensitive personally identifiable data beginning with credit card numbers, and they are highly vulnerable to attack.

11. Social engineering & password guessing. Password guessing must be included because it remains a common way attackers gain control. It is easy to prevent, yet it remains a prevalent weakness of embedded devices just as it is of large computer systems.
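As an illustration of how simple one defense against password guessing can be, the sketch below locks an account after a fixed number of consecutive failures (the weakness listed as CWE-307 in the table earlier) and stores only a salted hash of the password. The class name `LoginGuard`, the failure limit of 3, and the iteration count are assumptions chosen for illustration, not recommended production values.

```python
import hashlib
import hmac
import os


class LoginGuard:
    """Locks out further attempts after too many consecutive wrong guesses."""

    def __init__(self, password: str, max_failures: int = 3):
        self._salt = os.urandom(16)           # per-account random salt
        self._hash = self._derive(password)   # only the salted hash is kept
        self._max_failures = max_failures
        self._failures = 0

    def _derive(self, password: str) -> bytes:
        # Iteration count is an illustrative assumption, kept low for speed.
        return hashlib.pbkdf2_hmac(
            "sha256", password.encode("utf-8"), self._salt, 10_000
        )

    @property
    def locked(self) -> bool:
        return self._failures >= self._max_failures

    def attempt(self, guess: str) -> bool:
        """Return True only for a correct guess on an unlocked account."""
        if self.locked:
            return False
        if hmac.compare_digest(self._derive(guess), self._hash):
            self._failures = 0
            return True
        self._failures += 1
        return False


guard = LoginGuard("correct horse")
for guess in ["admin", "1234", "password"]:
    guard.attempt(guess)
print(guard.locked)                    # True: three failures reached the limit
print(guard.attempt("correct horse"))  # False: locked even for the right password
```

A fielded device would also need a recovery path (a timed unlock or an administrator reset), which this sketch omits.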

2.11.1 Stuxnet

Stuxnet was a 500-kilobyte computer worm that infected the software of at least 14 industrial sites in the country it was targeted at, including a uranium-enrichment plant. While a computer virus relies on an unwitting victim to install it, a worm spreads on its own, often over a computer network [14].

This worm was an unprecedentedly masterful and malicious piece of code that attacked in three phases. First, it targeted Microsoft Windows machines and networks, repeatedly replicating itself as it infected system after system. From there it sought out Siemens Step7 software, which is also a Windows-based system, used to program the industrial control systems (ICSs) that operate equipment such as centrifuges. Finally, it compromised the PLCs that are directly connected to and in control of the centrifuge motors. The worm’s authors could thus spy on the industrial systems and even cause the fast-spinning centrifuges to tear themselves apart, unbeknownst to the human operators at the plant. The Stuxnet victim never confirmed that the attack destroyed some of its centrifuges, but satellite images show 2000 centrifuges being rolled out of the facility into the trash heap.

Stuxnet could spread stealthily between computers running Windows, even those not connected to the Internet. If a worker stuck a USB thumb drive into an infected machine, Stuxnet could jump onto it, then spread onto the next machine that read that USB drive. Because someone could unsuspectingly infect a machine this way, letting the worm proliferate over local area networks, experts feared that the malware had perhaps gone wild across the world. Basically it did, but Stuxnet was highly selective in terms of the location, host, and SCADA controller it had its sights set on, so its spread was harmless except to the one facility it was designed to target.

For several years the authors of Stuxnet were not officially acknowledged, but the size and sophistication of the worm led experts to believe that it could have been created only with the sponsorship of a nation-state, leading candidates being the United States and one of its allies [15]. Then in Jun. 2012, Obama announced that the United States had been involved. Since the discovery of Stuxnet many computer-security engineers have been fighting off other weaponized viruses based on Stuxnet design principles, such as Duqu, Flame, and Gauss, an onslaught that shows no signs of abating. The callout outlines the 12 steps that the Stuxnet malware went through. The most detailed analysis of how Stuxnet worked was done by Ralph Langner [16].


1. Stuxnet was initially launched into the wild as early as Jun. 2009, and its creator updated and refined it over time, releasing three different versions. An indication of how determined they were to remain anonymous was that one of the virus’s driver files used a valid signed certificate stolen from RealTek Semiconductor, a hardware maker in Taiwan, in order to fool systems into thinking the malware was a trusted program from RealTek.

2. Several layers of masking obscured the zero-day exploit inside, requiring work to reach it, and the malware was huge: 500 KB, as opposed to the usual 10–15 KB. Generally malware this large contained a space-hogging image file, such as a fake online banking page that popped up on infected computers to trick users into revealing their banking login credentials. But there was no image in Stuxnet, and no extraneous fat either. The code appeared to be a dense and efficient orchestra of data and commands.

3. Normally, Windows functions are loaded as needed from a DLL file stored on the hard drive. Doing the same with malicious files, however, would be a giveaway to antivirus software. Instead, Stuxnet stored its decrypted malicious DLL file only in memory as a kind of virtual file with a specially crafted name. It then reprogrammed the Windows API—the interface between the operating system and the programs that run on top of it—so that every time a program tried to load a function from a library with that specially crafted name, it would pull it from memory instead of the hard drive. Stuxnet was essentially creating an entirely new breed of ghost file that would not be stored on the hard drive at all, and hence would be almost impossible to find.

4. Each time Stuxnet infected a system, it “phoned home” to one of two domains—http://www.mypremierfutbol.com and http://www.todaysfutbol.com hosted on servers in Malaysia and Denmark—to report information about the infected machines. This included the machine’s internal and external IP addresses, the computer name, its operating system and version, and whether Siemens Simatic WinCC Step7 software, also known simply as Step7, was installed on the machine. This approach let the attackers update Stuxnet on infected machines with new functionality or even install more malicious files on systems.

5. Out of the initial 38,000 infections, about 22,000 were in the targeted country. The next most infected country was a distant second, with about 6700 infections, and the third-most had about 3700 infections. The United States had fewer than 400.

6. All told, Stuxnet was found to have at least four zero-day attacks in it. That had never been seen before. One was a Windows print spooler vulnerability that allowed the worm to spread across networks using a shared printer. Another attacked vulnerabilities in a Windows keyboard driver and a Task Scheduler process to perform a privilege escalation to root. Finally, it was exploiting a static password Siemens had hard-coded into its Step7 software, which it used to gain access to and infect a server hosting a database used with the Step7 programming system.

7. Stuxnet was not spreading via the Internet like every other worm before it had. It was targeting systems that were not normally connected to the Internet at all (air-gapped) and instead was spreading via USB thumb drives physically inserted into new machines.

8. The payload Stuxnet carried consisted of three main parts and 15 components, all wrapped together in layers of encryption that would only be decrypted and extracted when the conditions on the newly infected machine were just right. When it found its target (a Windows machine running Siemens Step7) it would install a new DLL that impersonated a legitimate one Step7 used.

9. Step7 was a Windows-based programming environment for the Siemens PLC used in many industrial control automation applications. The malicious DLL would intercept commands going from Step7 to the PLC and replace them with its own commands. Another portion of Stuxnet disabled the alarms that the malicious commands would otherwise have triggered. Finally, it intercepted status messages sent from the PLC to the Step7 machine, stripping out any signs of the malicious commands. This meant workers monitoring the PLC from the Step7 machine would see only legitimate commands and have no clue the PLC was being told to do very different things from what they thought Step7 had told it to do. With this, the first worm ever designed to do physical sabotage was born.

10. Stuxnet, it turns out, was a precision weapon sabotaging a specific facility. It carried a very specific dossier with details of the target configuration at the facility it sought. Any system not matching would be unharmed. This made it clear the attackers were a government with detailed inside knowledge of its target.

11. In the final stage of Stuxnet’s attack, it searched for the unique part number for a Profibus and then for one of two frequency converters that control motors. The malware would sit quietly on the system doing reconnaissance for about 2 weeks, then launch its attack swiftly and quietly, increasing the frequency of the converters to 1410 Hz for 15 min, before restoring them to a normal frequency of 1064 Hz. The frequency would remain at this level for 27 days, before Stuxnet would kick in again and drop the frequency down to 2 Hz for 50 min. The drives would remain untouched for another 27 days, before Stuxnet would attack again with the same sequence. The extreme range of frequencies suggested Stuxnet was trying to destroy whatever was on the other end of the converters. Satellite images show that approximately 2000 centrifuges—one-fifth of their total—were removed from the targeted country’s nuclear facility suggesting they were indeed destroyed.

12. Recently it was discovered there were even older versions of Stuxnet that went undiscovered for many years. The first targeted gas valves in nuclear reactors and the second targeted the reactors’ cores.
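The frequency swings described in item 11 suggest one simple defensive check: compare each commanded drive frequency against an expected operating band. The following is a minimal Python sketch, not a description of any real PLC or Siemens interface. The 1064 Hz nominal value comes from the text; the tolerance and the function name are hypothetical values chosen for illustration.

```python
NOMINAL_HZ = 1064.0    # normal operating frequency quoted in the text
TOLERANCE_HZ = 100.0   # assumed allowable deviation (hypothetical value)

def out_of_band(setpoints_hz, nominal=NOMINAL_HZ, tol=TOLERANCE_HZ):
    """Return (index, value) pairs that fall outside nominal +/- tol."""
    return [(i, f) for i, f in enumerate(setpoints_hz)
            if abs(f - nominal) > tol]

# Sequence described in the text: normal, 1410 Hz, normal, 2 Hz, normal.
observed = [1064.0, 1410.0, 1064.0, 2.0, 1064.0]
alerts = out_of_band(observed)
print(alerts)
```

As item 9 makes clear, such a check is only useful if the readings come from a path the malware does not control, since Stuxnet falsified the status messages the operators saw.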

The 2010 Stuxnet worm used at least three separate zero-day exploits—an unprecedented feat—to damage industrial controllers and disrupt the uranium enrichment facility.

The zero-day vulnerabilities included the following from the list of CVEs [9]:

• CVE-2010-2568: Executes arbitrary code when a user opens a folder containing a maliciously crafted .LNK or .PIF file.

• CVE-2010-2729: Executes arbitrary code when an attacker sends a specially crafted remote procedure call message.

• CVE-2010-2772: Allows local users to access a back-end database and gain privileges in Siemens Simatic WinCC and PCS 7 SCADA systems.

The attack also exploited already patched vulnerabilities, suggesting that the attackers knew the systems likely would not have been updated.

2.11.2 Flame, Gauss, and Duqu

Flame, Gauss, and Duqu are cousins of Stuxnet but did not focus on embedded systems. Still, they are worth studying, both to understand what serious attackers are interested in doing and because the distinction between embedded and nonembedded systems will lessen over time.

Flame

Flame, also known as Flamer and Skywiper, is computer malware discovered in 2012 that attacks computers running the Windows operating system. The program is being used for targeted cyber espionage in Middle Eastern countries. Its internal code has few similarities with other malware, but it exploits two of the same security vulnerabilities used previously by Stuxnet to infect systems.

Its discovery was announced by Kaspersky Lab and CrySyS Lab of the Budapest University of Technology and Economics, which stated that Flame “is certainly the most sophisticated malware we encountered during our practice; arguably, it is the most complex malware ever found” [17].

Flame can spread to other systems over a local network or via USB stick. It can record audio, screenshots, keyboard activity, and network traffic. The program also records Skype conversations and can turn infected computers into Bluetooth beacons, which attempt to download contact information from nearby Bluetooth-enabled devices. This data, along with locally stored documents, is sent on to one of several C&C servers that are scattered around the world. The program then awaits further instructions from these servers.

According to estimates by Kaspersky in May 2012, Flame had initially infected approximately 1000 machines, with victims including governmental organizations, educational institutions, and private individuals [18]. At that time, 65% of the infections happened in the Middle East and North Africa. Flame has also been reported in Europe and North America. Flame supports a “kill” command, which wipes all traces of the malware from the computer. After Flame’s public exposure, the “kill” command was sent and the initial infections stopped operating [19].

Gauss

Kaspersky Lab researchers also discovered a complex cyber-espionage toolkit called Gauss, a nation-state sponsored malware attack that is closely related to Flame and Stuxnet but blends nation-state cyber-surveillance with an online banking Trojan [20]. It can steal access credentials for various online banking systems and payment methods, as well as various kinds of data from infected Windows machines, such as specifics of network interfaces, the computer’s drives, and even information about the BIOS. It can steal browser history, social network and instant messaging information, and passwords, and it searches for and intercepts cookies from PayPal, Citibank, MasterCard, American Express, Visa, eBay, Gmail, Hotmail, Yahoo, Facebook, Amazon, and several Middle Eastern banks. Additionally, Gauss includes an unknown, encrypted payload, which is activated on certain specific system configurations.

According to Kaspersky, Gauss is a nation-state sponsored banking Trojan that carries a warhead of unknown designation. The payload is run from infected USB sticks and is designed to surgically target a certain system (or systems) that has a specific program installed. One can only speculate on the purpose of this mysterious payload. The malware copies itself onto any clean USB stick inserted into an infected personal computer, then collects data if inserted into another machine, before uploading the stolen data when reinserted into a Gauss-infected machine.

The main Gauss module is only about 200 KB, which is one-third the size of the main Flame module, but it has the ability to load other plugins, which altogether account for about 2 MB of code. Like Flame and Duqu, Gauss is programmed with a built-in time-to-live (TTL). When Gauss infects a USB memory stick, it sets a certain flag to “30.” This TTL flag is decremented every time the payload is executed from the stick. Once it reaches 0, the data-stealing payload cleans itself from the USB stick. This probably means it was built with an air-gapped network in mind.

There were seven domains being used to gather data, but the five C&C servers went offline before Kaspersky could investigate them. Kaspersky says they do not know if the people behind Duqu switched to Gauss at that time but they are quite sure they are related: Gauss is related to Flame, Flame is related to Stuxnet, and Stuxnet is related to Duqu. Hence, Gauss is related to Duqu. On the other hand, it is hard to believe that a nation state would rely on these banking Trojan techniques to finance a cyber-war or cyber-espionage operation.

So far, Gauss has infected more than 2500 systems in 25 countries, with the majority (1660 infected machines) located in a Middle Eastern country. Gauss started operating around Aug.–Sep. 2011 and it is almost certainly still in operation. Deep analysis of Stuxnet, Duqu, and Flame leads these experts to believe with a high degree of certainty that Gauss comes from the same “factory” or “factories.” All these attack toolkits represent the high end of nation-state sponsored cyber-espionage and cyberwar operations, pretty much defining the meaning of “sophisticated malware” [21].

Duqu

Duqu looks for information that could be useful in attacking ICSs [22]. Its purpose seems not to be destructive but rather to gather information. However, given the modular structure of Duqu, a special payload could be used to attack any type of computer system by any means, and thus cyber-physical attacks based on Duqu might be possible. In addition, its use on personal computer systems has been found to delete all recent information entered on that system, and, in some cases, total deletion of the computer’s hard drive has occurred. One of Duqu’s actions is to steal digital certificates (and corresponding private keys, as used in public-key cryptography) from attacked computers, apparently to help future viruses appear as secure software. Duqu uses a 54 × 54 pixel JPEG file and encrypted dummy files as containers to smuggle data to its C&C center. Security experts are still analyzing the code to determine what information the communications contain. Initial research indicates that the original malware sample automatically removes itself after 36 days, which limits its detection.

Duqu has been called “nearly identical to Stuxnet, but with a completely different purpose.” Thus, Symantec believes that Duqu was created by the same authors as Stuxnet, or that the authors had access to the source code of Stuxnet [23]. The worm, like Stuxnet, has a valid but abused digital signature, and collects information to prepare for future attacks. Duqu’s kernel driver, JMINET7.SYS, is so similar to Stuxnet’s MRXCLS.SYS that some systems mistook it for Stuxnet.

2.11.3 Routers

Routers are attractive to hackers because they operate outside the perimeter of firewalls, antivirus, behavioral detection software, and other security tools that organizations use to safeguard data traffic. Until now, they were considered vulnerable only to sustained denial-of-service attacks using barrages of millions of packets of data. It has been “common knowledge” that they were not very vulnerable to outright takeover. However, a recent very dangerous attack against routers called SYNful has proven this commonly held belief is wrong. SYNful is a reference to SYN, the signal a router sends when it starts to communicate with another router, a process which the implant exploited.

If one seizes control of the router, that attacker owns the data of all the companies and government organizations that sit behind that router. Takeover of the router is considered the ultimate spying tool, the ultimate corporate espionage tool, the ultimate cybercrime tool. Router attacks have hit multiple industries and government agencies.

Cisco has confirmed the SYNful attacks but said they were not due to any vulnerability in its own software. Instead, the attackers stole valid network administration credentials from targeted organizations or managed to gain physical access to the routers. Mandiant has found at least 14 instances of the router implants [24]. Because the attacks actually replace the basic software controlling the routers, infections persist when devices are shut off and restarted. If a router is found to be infected, its basic software has to be re-imaged, a time-consuming task for IT engineers.

SYNful Knock is a stealthy modification of the router’s firmware image that can be used to maintain persistence within a victim’s network. It is customizable and modular in nature and thus can be updated once implanted. Even the presence of the backdoor can be difficult to detect as it uses nonstandard packets as a form of pseudo-authentication.
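Because the implant is a modified firmware image, one general defensive measure is to compare a cryptographic hash of the image against a known-good value from a trusted source. The following Python sketch illustrates the idea using only the standard library. The function names, the file path, and the expected digest are placeholders for illustration; the choice of SHA-512 is an assumption, not something the text specifies.

```python
import hashlib
import hmac

def sha512_of_file(path, chunk_size=65536):
    """Stream a file through SHA-512 and return the hex digest."""
    digest = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def image_matches(path, expected_hex):
    """Return True if the file's SHA-512 equals the expected hex digest."""
    return hmac.compare_digest(sha512_of_file(path), expected_hex.lower())
```

A check like this is most meaningful when run on a copy of the image on a separate, trusted host, since a compromised device could in principle misreport its own state.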

The initial infection vector does not appear to leverage a zero-day vulnerability. It is believed that the credentials are either default or discovered by the attacker in order to install the backdoor. However, the router’s position in the network makes it an ideal target for re-entry or further infection. It is a significant challenge to find backdoors within one’s network but finding a router implant is even more so. The impact of finding this implant on one’s network is severe and most likely indicates the presence of other footholds or compromised systems. This backdoor provides ample capability for the attacker to propagate and compromise other hosts and critical data using this as a very stealthy beachhead.

The implant consists of a modified Cisco IOS image that allows the attacker to load different functional modules from the anonymity of the internet [25]. The implant also provides unrestricted access using a secret backdoor password. Each of the modules is enabled via HTTP (not HTTPS), using specially crafted Transmission Control Protocol (TCP) packets sent to the router’s interface. The packets have a nonstandard sequence and corresponding acknowledgment numbers. The modules can manifest themselves as independent executable code or hooks within the router’s IOS that provide functionality similar to the backdoor password. The backdoor password provides access to the router through the console and Telnet.
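The nonstandard sequence and acknowledgment numbers suggest a simple detection heuristic. In ordinary TCP, the first SYN of a connection does not set the ACK flag and its acknowledgment field is normally zero, so a SYN-only segment with a nonzero acknowledgment number is unusual. The Python sketch below parses the fixed 20-byte TCP header and flags such segments. It illustrates the general idea only and is not the actual SYNful Knock signature, which the text does not give; the function names are hypothetical.

```python
import struct

SYN = 0x02  # TCP SYN flag bit
ACK = 0x10  # TCP ACK flag bit

def parse_tcp_header(header):
    """Unpack the fixed 20-byte TCP header into selected fields."""
    src, dst, seq, ack, off_flags, window, checksum, urgent = struct.unpack(
        "!HHIIHHHH", header[:20]
    )
    return {"src": src, "dst": dst, "seq": seq, "ack": ack,
            "flags": off_flags & 0x01FF}

def suspicious_syn(header):
    """Flag a SYN without ACK whose acknowledgment field is nonzero."""
    h = parse_tcp_header(header)
    syn_only = (h["flags"] & SYN) and not (h["flags"] & ACK)
    return bool(syn_only and h["ack"] != 0)
```

A heuristic like this will produce false positives from unusual but benign TCP stacks, so it is better suited to triage than to definitive detection.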

This very sophisticated, powerful, and dangerous attack on such an important embedded device is emblematic of what any embedded device developer has to be prepared for.

Aviation

There have been a number of incidents in recent years that demonstrate that worrisome cyber security vulnerabilities exist in the civil aviation system. Some of these vulnerabilities are in embedded devices within the airplane, while in other cases attacks on ground systems affected the embedded devices on board. Some incidents of note are:

• an attack on the Internet in 2006 that forced the US Federal Aviation Administration (FAA) to shut down some of its ATC systems in Alaska;

• a cyber-attack that led to the shutdown of the passport control systems at the departure terminals at Istanbul Atatürk and Sabiha Gökçen airports in Jul. 2013, causing many flights to be delayed; and

• an apparent cyber-attack that possibly involved malicious hacking and phishing targeted at 75 airports in the United States in 2013.