Embedded security

J. Rosenberg Draper Laboratory, Cambridge, MA, United States

Abstract

This chapter is about security of embedded devices. Throughout this book, the effects of harsh environments in which we require many of our embedded devices to operate have been discussed in some detail. But even an embedded processor in a clean, warm, dry, stationary, and even physically safe situation is actually in an extremely harsh environment. That is because every processor everywhere can be subject to the harshness of cyber attacks. We know that even “air-gapped” systems can be attacked by the determined attacker as we saw happen with the famous Stuxnet attack. This chapter will discuss the nature of this type of harsh environment, what enables the cyber attacks we hear about every day, what are the principles we need to understand to work toward a much higher level of security, and we will present new developments that may change the game in our favor.

Keywords

Embedded systems; Security; Cyber attacks; Harsh environment; Internet of things

1 Important Security Concepts

We will now shift from these “motivating” discussions about how susceptible our embedded computing infrastructure is and the types of attacks that have been or could be perpetrated against them, and cover important security concepts that we will need for a common vocabulary and to have as a baseline when we delve into what might be done to improve our cybersecurity defensive posture.

1.1 Identification and Registration

Cybersecurity must begin with sufficiently strong identification and registration of any identities who will access the devices being secured or the networks those devices communicate with. If we get this wrong the security we build around all our embedded devices might as well be a house of cards.

National Institute of Standards and Technology (NIST) has established an Electronic Authentication Guide designated 800-63-2 [1] that defines technical requirements for each of four levels of assurance in the areas of identity proofing, registration, tokens, management processes, authentication protocols and related assertions. These four levels of authentication—simply designated Levels 1–4—are differentiated in terms of the consequences of the authentication errors and misuse of credentials at each successive level. Level 1 is the lowest assurance and Level 4 is the highest. The NIST guidance defines the required level of authentication assurance in terms of the likely consequences of an authentication error. As the consequences of an authentication error become more serious, the required level of assurance increases.

Electronic authentication, or E-authentication, begins with registration. The usual sequence for registration proceeds as follows. An Applicant applies to a Registration Authority (RA) to become a Subscriber of a Credential Service Provider (CSP). If approved, the Subscriber is issued a credential by the CSP which binds a token to an identifier (and possibly one or more attributes that the RA has verified). The token may be issued by the CSP, generated directly by the Subscriber, or provided by a third party. The CSP registers the token by creating a credential that binds the token to an identifier and possibly other attributes that the RA has verified. The token and credential may be used in subsequent authentication events.

The name specified in a credential may either be a verified name or an unverified name. If the RA has determined that the name is officially associated with a real person and the Subscriber is the person who is entitled to use that identity, the name is considered a verified name. If the RA has not verified the Subscriber's name, or the name is known to differ from the official name, the name is considered a pseudonym. The process used to verify a Subscriber's association with a name is called identity proofing, and is performed by an RA that registers Subscribers with the CSP.

At Level 1, identity proofing is not required so names in credentials and assertions are assumed to be pseudonyms. The name associated with the Subscriber is provided by the Applicant and accepted without verification. This is why there are thousands of Bill Gates in email systems because anyone can create any email name they want. Level 1 is never going to be acceptable for protecting embedded systems.

At Level 2, identity proofing is required, but the credential may assert the verified name or a pseudonym. In the case of a pseudonym, the CSP retains the name verified during registration. In addition, pseudonymous Level 2 credentials are distinguishable from Level 2 credentials that contain verified names. Level 2 credentials and assertions specify whether the name is a verified name or a pseudonym. This information assists relying parties (RPs) in making access control or authorization decisions.

In most cases, only verified names are specified in credentials and assertions at Levels 3 and 4. At Level 3 and above, the name associated with the Subscriber is always verified. At all levels, personally identifiable information collected as part of the registration process is also protected, and all privacy requirements are satisfied.

Fig. 1 shows the registration, credential issuance, maintenance activities, and the interactions between the Subscriber/Claimant, the RA and the CSP. The usual sequence of interactions is as follows:

Fig. 1

1. An individual Applicant applies to an RA through a registration process.

2. The RA identity proofs that Applicant.

3. On successful identity proofing, the RA sends the CSP a registration confirmation message.

4. A secret token and a corresponding credential are established between the CSP and the new Subscriber.

5. The CSP maintains the credential, its status, and the registration data collected for the lifetime of the credential (at a minimum).

6. The Subscriber maintains his or her token.

1.2 Authentication

In this chapter, the party to be authenticated is called a Claimant and the party verifying that identity is called a Verifier. When a Claimant successfully demonstrates possession and control of a token to a Verifier through an authentication protocol, the Verifier can verify that the Claimant is the Subscriber named in the corresponding credential. The Verifier passes on an assertion about the identity of the Subscriber to the relying party (RP). That assertion includes identity information about a Subscriber, such as the Subscriber name, an identifier assigned at registration, or other Subscriber attributes that were verified in the registration process (subject to the policies of the CSP and the needs of the application). Where the Verifier is also the RP, the assertion may be implicit. The RP can use the authenticated information provided by the Verifier to make access control or authorization decisions.

Authentication establishes confidence in the Claimant's identity, and in some cases in the Claimant's personal attributes (e.g., the Subscriber is a US citizen, is a student at a particular university, or is assigned a particular number or code by an agency or organization). Authentication does not determine the Claimant's authorizations or access privileges; this is a separate decision. RPs (e.g., government agencies) will use a Subscriber's authenticated identity and attributes with other factors to make access control or authorization decisions. The operational semantics of authentication varies according to the NIST levels as described next.

1.2.1 Level 1

Although there is no identity proofing requirement at this level, the authentication mechanism provides some assurance that the same Claimant who participated in previous transactions is accessing the protected transaction or data. It allows a wide range of available authentication technologies to be employed and permits the use of any of the token methods of Levels 2, 3, or 4. Successful authentication requires that the Claimant prove through a secure authentication protocol that he or she possesses and controls the token.

Plaintext passwords or secrets are not transmitted across a network at Level 1. However this level does not require cryptographic methods that block offline attacks by eavesdroppers. For example, simple password challenge-response protocols are allowed. In many cases an eavesdropper, having intercepted such a protocol exchange, will be able to find the password with a straightforward dictionary attack.

At Level 1, long-term shared authentication secrets may be revealed to Verifiers. At Level 1, assertions and assertion references require protection from manufacture/modification and reuse attacks.

1.2.2 Level 2

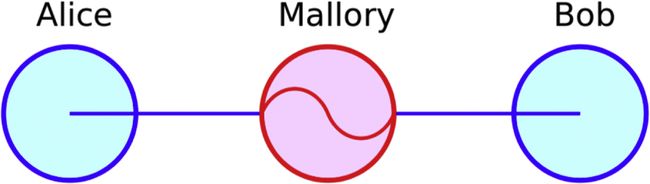

Level 2 provides single factor remote network authentication. At Level 2, identity proofing requirements are introduced, requiring presentation of identifying materials or information. A wide range of available authentication technologies can be employed at Level 2. For single factor authentication, Memorized Secret Tokens, Pre-Registered Knowledge Tokens, Look-up Secret Tokens, Out of Band Tokens, and Single Factor One-Time Password Devices are allowed at Level 2. Level 2 also permits any of the token methods of Levels 3 or 4. Successful authentication requires that the Claimant prove through a secure authentication protocol that he or she controls the token. Online guessing, replay, session hijacking, and eavesdropping attacks are resisted. Protocols are also required to be at least weakly resistant to man-in-the-middle (MITM) attacks. Long-term shared authentication secrets, if used, are never revealed to any other party except Verifiers operated by the CSP; however, session (temporary) shared secrets may be provided to independent Verifiers by the CSP. In addition to Level 1 requirements, assertions are resistant to disclosure, redirection, and capture and substitution attacks. Approved cryptographic techniques are required for all assertion protocols used at Level 2 and above.

1.2.3 Level 3

Level 3 provides multifactor remote network authentication. At least two authentication factors are required. At this level, identity proofing procedures require verification of identifying materials and information. Level 3 authentication is based on proof of possession of the allowed types of tokens through a cryptographic protocol. Multifactor Software Cryptographic Tokens are allowed at Level 3. Level 3 also permits any of the token methods of Level 4. Level 3 authentication requires cryptographic strength mechanisms that protect the primary authentication token against compromise by the protocol threats for all threats at Level 2 as well as verifier impersonation attacks. Various types of tokens may be used. Authentication requires that the Claimant prove, through a secure authentication protocol, that he or she controls the token. The Claimant unlocks the token with a password or biometric, or uses a secure multitoken authentication protocol to establish two-factor authentication (through proof of possession of a physical or software token in combination with some memorized secret knowledge). Long-term shared authentication secrets, if used, are never revealed to any party except the Claimant and Verifiers operated directly by the CSP; however, session (temporary) shared secrets may be provided to independent Verifiers by the CSP. In addition to Level 2 requirements, assertions are protected against repudiation by the Verifier.

1.2.4 Level 4

Level 4 is intended to provide the highest practical remote network authentication assurance. Level 4 authentication is based on proof of possession of a key through a cryptographic protocol. At this level, in-person identity proofing is required. Level 4 is similar to Level 3 except that only “hard” cryptographic tokens are allowed. The token is required to be a hardware cryptographic module validated at Federal Information Processing Standard (FIPS) 140–2 Level 2 or higher overall with at least FIPS 140–2 Level 3 physical security. Level 4 token requirements can be met by using the personal identity verification (PIV) authentication key of a FIPS 201 compliant PIV Card. Level 4 requires strong cryptographic authentication of all communicating parties and all sensitive data transfers between the parties. Either public key or symmetric key technology may be used. Authentication requires that the Claimant prove through a secure authentication protocol that he or she controls the token. All protocol threats at Level 3 are required to be prevented at Level 4. Protocols must also be strongly resistant to MITM attacks. Long-term shared authentication secrets, if used, are never revealed to any party except the Claimant and Verifiers operated directly by the CSP; however, session (temporary) shared secrets may be provided to independent Verifiers by the CSP. Approved cryptographic techniques are used for all operations. All sensitive data transfers are cryptographically authenticated using keys bound to the authentication process.

1.2.5 Multifactor authentication

The classic paradigm for authentication systems identifies three factors as the cornerstone of authentication:

• Something you know (e.g., a password)

• Something you have (e.g., an ID badge or a cryptographic key)

• Something you are (e.g., a fingerprint or other biometric data)

Multifactor authentication—as required in Levels 3 and 4 described earlier—refers to the use of more than one of the factors listed above. The strength of authentication systems is largely determined by the number of factors incorporated by the system. Single factor authentication (the vast majority of systems today) is almost always too weak to protect any type of important information or assets. Implementations that use two factors are considered to be stronger than those that use only one factor; systems that incorporate all three factors are stronger still (but become unwieldy quickly). The authors strongly suggest that developers of systems incorporate two-factor authentication from inception as people are beginning to accept the slight extra burden of it in order to have higher security.

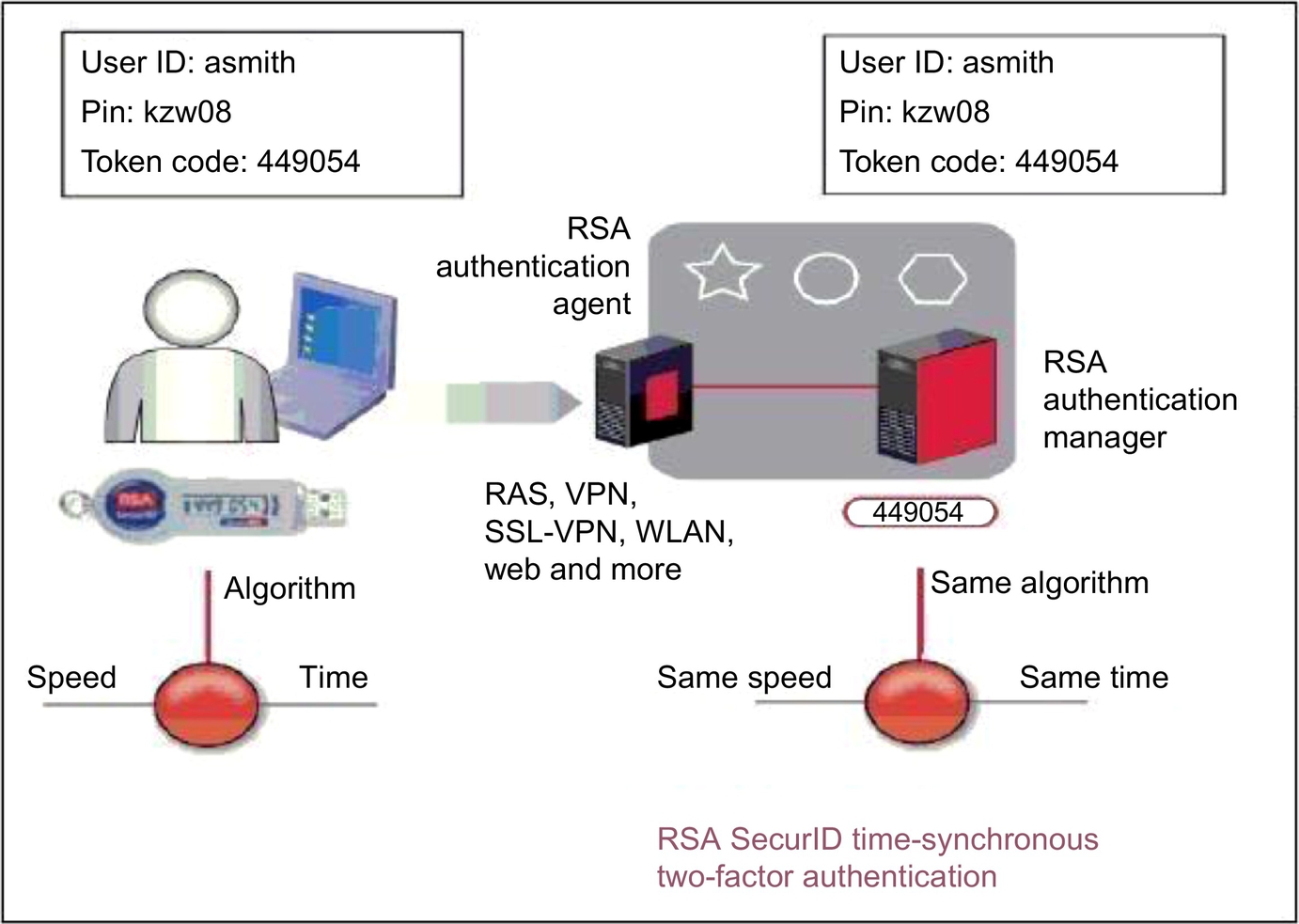

The secrets contained in tokens commonly used in two-factor authentication are based on either public key pairs (asymmetric keys) or shared secrets. A public key and a related private key comprise a public key pair. The private key is stored on the token and is used by the Claimant to prove possession and control of the token. A Verifier, knowing the Claimant's public key through some credential (typically a public key certificate), can use an authentication protocol to verify the Claimant's identity, by proving that the Claimant has possession and control of the associated private key token. A common, easy to use, and very strong cryptographic key system for two-factor authentication (where a password is provided in addition to the code displayed on the token) made by RSA and others is the time-synchronized token. The working of this token is diagrammed in Fig. 2.

Fig. 2

EMC Corporation.

1.3 Authorization

Ultimately we are after access control which is control over exactly who can access the protected resources under specified circumstances. Access control depends on:

Identification (discussed in a previous section)

Subjects supplying identification information

Username, user ID, account number

Authentication (discussed in a previous section)

Verifying this identification information

Passphrase, PIN value, biometric, secure token, one-time password, password

Authorization

Using criteria to make a determination of operations that subjects can carry out on objects

Now that I know who you are, what am I going to allow you to do?

Accountability

Audit logs and monitoring to track subject activities with objects

Authentication and authorization are very different but together they represent a two-step process that determines whether an individual (or an application acting on behalf of an individual) is allowed to access a resource. Once authentication has sufficiently proven that the individual is who they claim to be, the system must establish whether this user is authorized to access the resource they are requesting and further, what actions this individual is allowed to perform on that resource.

Access control in computer systems and networks rely on access policies. The access control process can be divided into the following two phases: (1) policy definition phase where access is authorized, and (2) policy enforcement phase where access requests are approved or disapproved. Authorization is thus the function of the policy definition phase which precedes the policy enforcement phase where access requests are approved or disapproved based on the previously defined authorizations.

Most modern, multiuser operating systems include access control and thereby rely on authorization. Frequently it is such a multiuser system that acts as the gateway to embedded devices that depend on the general-purpose computer to protect them. Authorization is the responsibility of an authority, such as a department manager, within the application domain, but is often delegated to a custodian such as a system administrator. Authorizations are expressed as access policies in some types of “policy definition application,” e.g., in the form of an access control list (ACL) or a capability, on the basis of the “principle of least privilege”: consumers of services should only be authorized to access whatever they need to do their jobs. Older and single user operating systems often had weak or nonexistent authentication and access control systems.

An ACL with respect to a computer file system, is a list of permissions attached to an object. An ACL specifies which users or system processes are granted access to objects, as well as what operations are allowed on given objects. Each entry in a typical ACL specifies a subject and an operation. For instance, if a file object has an ACL that contains (Alice: read, write; Bob: read), this would give Alice permission to read and write the file and Bob to only read it.

Even when access is controlled through a combination of authentication and ACLs, the problems of maintaining the authorization data is not trivial, and often represents as much administrative burden as managing authentication credentials. It is often necessary to change or remove a user's authorization: this is done by changing or deleting the corresponding access rules on the system. Using atomic authorization is an alternative to per-system authorization management, where a trusted third party securely distributes authorization information.

SAML is an XML-based framework for creating and exchanging authentication and attribute information between trusted entities over the Internet. At the time of this writing, the latest specification is SAML v2.0, issued Mar. 15, 2005. SAML is used in large general-purpose computer systems and in embedded systems and the emerging Internet of things.

The building blocks of SAML include the Assertions XML schema which define the structure of the assertion; the SAML protocols which are used to request assertions and artifacts; and the Bindings that define the underlying communication protocols (such as HTTP or SOAP) and that can be used to transport the SAML assertions. The three components above define a SAML profile that corresponds to a particular use case.

SAML Assertions are encoded in a XML schema and can carry up to three types of statements:

• Authentication statements—Include information about the assertion issuer, the authenticated subject, validity period, and other authentication information. For example, an Authentication Assertion would state the subject “John” was authenticated using a password at 10:32 p.m. on Jun. 6, 2004.

• Attribute statements—Contain specific additional characteristics related to the Subscriber. For example, subject “John” is associated with attribute “Role” with value “Manager.”

• Authorization statements—Identify the resources the Subscriber has permission to access. These resources may include specific devices, files, and information on specific web servers. For example, subject “John” for action “Read” on “SCADA device 1002” given evidence “Role.”

Further exploration of SAML is best done in one of the many excellent detailed books on the subject.

1.4 Cryptography

[Some material here is derived from Securing Web Services with WS_Security written by this chapter's author and is used with permission.]

Once users are identified and registered and after they have authenticated themselves to a system they wish to access to which they have been provided access, the next important thing to consider is how they communicate with that system if their messages are confidential. Shared key technologies including shared key encryption—also called symmetric encryption—will be our critical tool for keeping messages confidential. In this section we will learn about the cryptography behind the algorithms, mechanisms for managing keys, and the relationship between shared key technologies and public key technologies, which are presented after shared key.

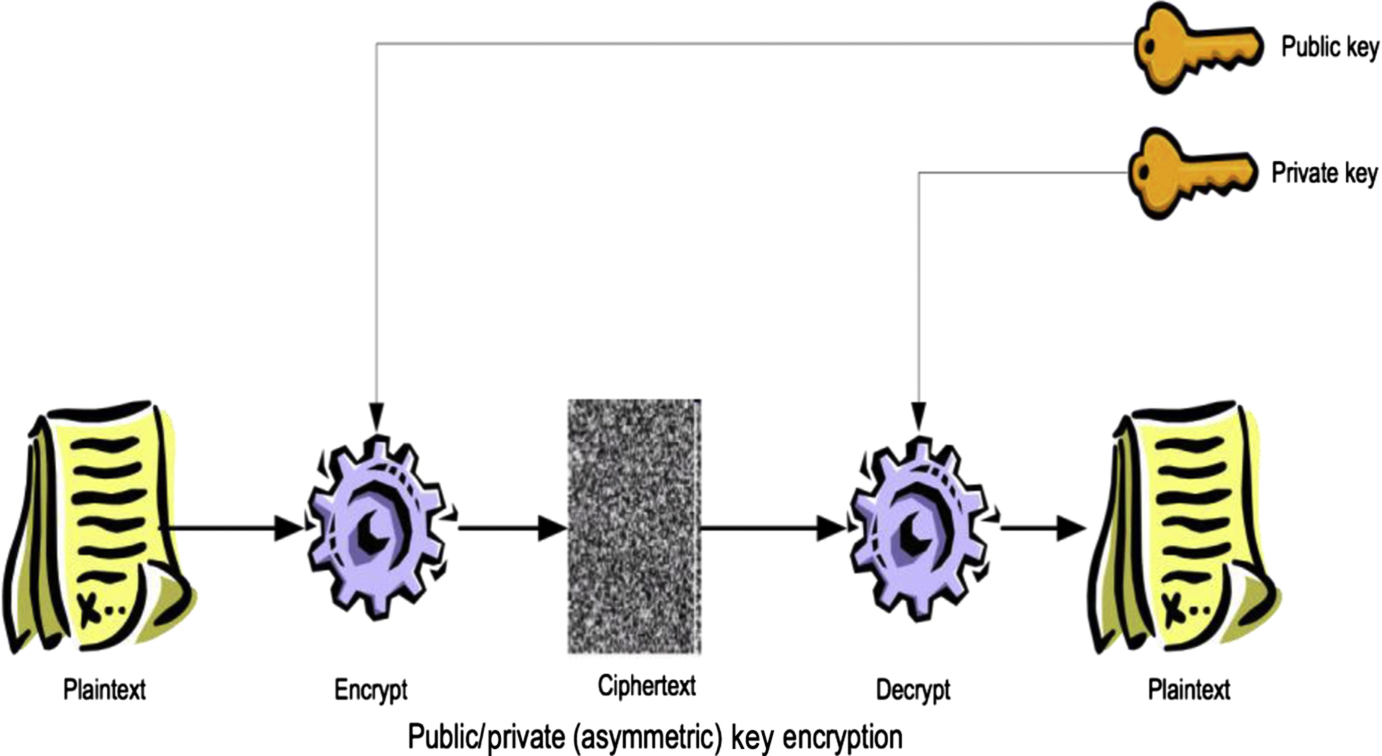

1.4.1 Shared key encryption

Several synonyms for shared key encryption are used. It is sometimes referred to as symmetric encryption or secret key encryption because the same key is used to both encrypt and decrypt a message and this key must be kept secret from all nonintended parties to keep the encrypted message secret. For clarity and simplicity, in this chapter we will use the term shared key encryption because sender and receiver share the same key, which is distinct from public key encryption where one key is made open and public to the entire world. The security principle we are driving for with shared key encryption is message confidentiality. By that we mean that no one other than the intended recipient will be able to read an encrypted message. Shared key encryption is explained pictorially in Fig. 3.

Fig. 3

In encryption, the key is the key so to speak. Since both sender and receiver must utilize the exact same key in shared key encryption, this solitary key must somehow be separately and confidentially exchanged so both parties have it prior to message exchange. Under other circumstances one might imagine you could send the key via US Mail or read it over the phone but neither of these would be consistent with the goals of computer-to-computer integration we are after. Typically key exchange must be done over the same channel that the encrypted messages will flow. Experience shows us that the best way to accomplish this is to encrypt the shared key using public key encryption before exchange. Public key encryption will be described after this discussion of shared key.

Only the key is secret. It has become clear from many years of experience that the algorithms themselves must be public and well scrutinized. The field of cryptography has unequivocal proof that this openness enhances confidence in the algorithm's security due to the extensive study and analysis by all the world's cryptographers of these public algorithms that ensues.

Without going into extensive mathematical derivations to prove it, we need you to take on faith the two most important things you need to know about shared key encryption:

(a) Shared key encryption is much faster than public key encryption, and

(b) Shared key encryption can operate on arbitrarily large plaintext messages which public key encryption cannot.

You cannot use public key encryption to encrypt large messages. You must use something comparable to public key encryption to get a shared key to the other endpoint from where the key was crafted. This is why you need to understand both technologies.

Shared key encryption uses cryptographic algorithms known as block ciphers. This means that the algorithm works by taking the starting plaintext message and first breaks it into fixed size blocks before encrypting each block. Two long-standing algorithms used throughout the software industry are Triple-Data Encryption Standard (3DES), and Advanced Encryption Standard (AES).

DES involves a lot of computationally fast and simple substitutions, permutations, XORs and shifting on a data block at a time to produce ciphertext from an input plaintext message. The design of DES is so clever that decryption uses exactly the same algorithm as encryption. The only difference is that the order in which the key parts are used in the algorithm is exactly reversed. DES was designed to be simple enough to build into high speed hardware. Many appliances used to accelerate Secure Sockets Layer (SSL), provide high speed virtual private networks (VPNs) and for other uses have been built with DES built in. However, in the past decade, weaknesses have been found in DES which has led to the creation of Triple-DES or 3DES.

3DES is a variant of DES that uses DES thrice in succession performing encrypt-decrypt-encrypt of the incoming message to compensate for DES' weakness. 3DES uses a key length of 192-bits. Like DES, 3DES is also frequently found in hardware devices for very high speed encryption.

AES is the most recently adopted shared key encryption standard. AES came out of a government-sponsored contest won by a cryptographer named Rijndael. It also works well in hardware and can have keys up to 256-bits long taking it out of the realm of being susceptible to a brute force attack with the current generation of computers.

A critical concept for shared key encryption is padding. The blocks that are input to the cipher must be of a fixed size so if the plaintext input is not already of the correct size it must be padded. But you can't just add random data or we would need to communicate to the receiver the correct real size of the data and that would be a dangerous clue to an attacker. You also can't just add zeros because the message itself might have leading or trailing zeros. So padding must include sentinel information that identifies the padding and must not give an attacker any critical information that might compromise the message. The name of the standard padding schemes accepted for use with these symmetric ciphers is PKCS#5 [2], and PKCS#7 [3].

As mentioned earlier, shared key technologies fail to solve the problem of scalable key distribution. While these algorithms are fast and can handle infinitely large messages, both ends of the communication need access to the same key and we need to get it to them securely which shared key cannot help with. Shared key cryptography also fails to solve the issue of repudiation. At times we are going to need to be able to prove that a certain identity created and attests to sending a message (or document) and no one else could have. They must not be able to deny having sent this exact document at this moment in time. Shared key cryptography provides no help here. Finally, shared key cryptography fails to solve the issue of data integrity. We know no one could have successfully intercepted our message and we have some assurance that no blocks of data in the message were substituted thanks to a process called cyclic block chaining that links each separately encrypted data block to the next, but we do not have assurance that the message sent and the one received are identical. To solve these issues, we need public key technologies.

1.4.2 Public key technologies

Public key technologies, including public key encryption, will be our tool for delivering integrity, nonrepudiation and authentication of messages. We will begin with an explanation of the concepts behind public key encryption. We will expand and apply this knowledge to build the basis for digital signatures. A discussion of public key technologies is not complete without discussion of public key infrastructure (PKI) and the issues of establishing trust.

Public key encryption

Public key encryption is also referred to as asymmetric encryption because there is not just one key used in both directions as with the symmetric encryption. In public key encryption there are two keys; whichever one is used to encrypt requires the other be used to decrypt. In this chapter we will stick with the term public key encryption to help establish context and contrast it to shared key encryption.

The keys in public key encryption are nonmatching but they are mathematically related. One key (it does not matter which) is used for encryption. That key is useless for decryption. Only the matching key can be used for decryption. This concept provides us with the critical facility we need for secure key exchange to establish and transport a shared key.

A diagram showing how basic public key encryption works is shown in Fig. 4.

Fig. 4

A note about Kerberos before we continue with discussions of public key encryption. While it is true that Kerberos is an alternative for distributing shared keys, Kerberos only applies to a closed environment where all principals requiring keys share direct access to trusted key distribution centers (KDCs) and all principals share a key with that KDC. Microsoft Windows natively support Kerberos so within a closed Windows-only environment Kerberos is an option. No further discussion of Kerberos is contained in this chapter. We recommend public key systems for this function. Public key systems work with paired keys one of which (the private key) is kept strictly private and the other (the public key) is freely distributed; in particular the public key is made broadly accessible to the other party in secure communications.

Communicating parties each must generate a pair of keys. One of the keys, the private key, will never leave the possession of its creator. Each party to the communication passes their public key to the other party. The associated public key encryption algorithms are pure mathematical magic because whatever is encrypted with one half of the key pair can only be decrypted with its mate. Combining this simple fact with the strict rule that private keys remain private and only public keys can be distributed leads to a very interesting and powerful matrix of how public key encryption interrelates to confidentiality and identity. This matrix is shown in Table 1.

Table 1

How Public Key Encryption Interrelates to Confidentiality and Identity

| Public Key | Private Key | What This Means |

| Encrypt (w/ recipient's) | Decrypt (w/ recipient's) | Confidentiality (no one but intended recipient can read) |

| Decrypt (w/ sender's) | Encrypt (w/ sender's) | Signature (identity) (it could only have come from sender) |

For Alice to send a confidential message to Bob, Alice must obtain Bob's public key. That's easy since anyone can have Bob's public key at no risk to Bob; it is just for encrypting data. Alice takes Bob's public key and provides it to the standard encryption algorithm and encrypts her message to Bob. Because of the nature of the public-private key pair and the fact that Alice and Bob agree on a public, standard encryption algorithm (like RSA), Bob can use his private key to decrypt Alice's message. Most importantly, only Bob—because no one will ever get their hands on Bob's private key—can decrypt Alice's message. Alice just sent Bob a confidential message. Anyone intercepting it will get just scrambled data because they don't have Bob's private key.

Digital signatures will be described in just a moment but notice something interesting about doing things just the reverse of Alice's confidential message. If Alice encrypts a message with her private key, which only Alice could possess, and if Alice makes sure Bob has her public key, Bob can see that Alice and only Alice could have encrypted that message. In fact, since Alice's public key is in theory accessible to the entire world, anyone can tell that Alice and only Alice encrypted that message. The identity of the sender is established. That is the basic principle of digital signature.

Remember: encrypt with your private key and the whole world using your public key can tell it could be from you and only you (digital signature) or encrypt with a specific person's public key and they and only they, using their private key, can read your message (secret or confidential messages).

Public key encryption is based on the mathematics of factoring large numbers into their prime factors. This problem is thought to be computationally intractable if the numbers are large enough. But a limitation of public key encryption is that it can only be applied to small messages. To achieve our goal of distributing shared keys this is no problem—shared keys are not larger than the message size limitation of public key algorithms. To achieve our goal of digital signatures we will apply a neat trick and remain within this size limitation as we will discuss momentarily.

Digital signature basics

Digital signature is the tool we will use to achieve the message-level security principle of integrity. Digital signature involves a one-way mathematical function called hashing and using public key (asymmetric) encryption. The basic idea is to create a message digest and then to encrypt that. A message digest is a short representation for the full message. We need that because as we have just seen, asymmetric encryption is slow and is limited in the size message it can encrypt. A hash is a one-way mathematical function that creates a unique fixed size message digest from an arbitrary size text message. One-way means that you can never take the hash value and recreate the original message. Hash functions are designed to be very fast and are good at never creating the same result value from two different messages (they avoid collisions). Uniqueness is critical to make sure an attacker can never just replace one message with another and have the message digest come out the same anyway—that would pretty much ruin our goal of providing message integrity through digital signatures.

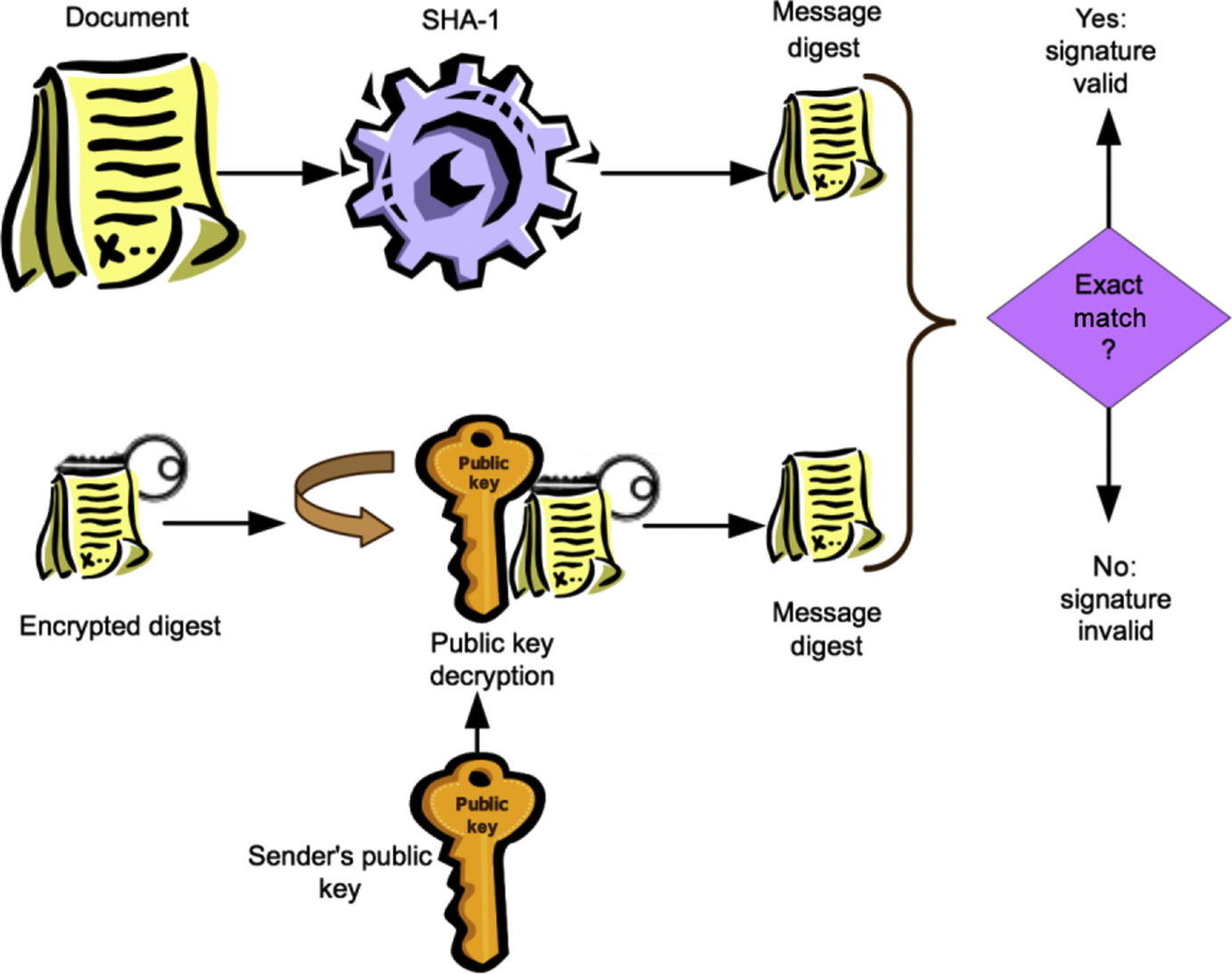

Here is the basic outline of digital signature:

1. Hash the entire plaintext message creating a fixed size (usually 20 bytes) message digest.

2. Encrypt this message digest using the signer's private key.

3. Send the original message and the encrypted message digest along with the signer's public key to any desired recipients.

4. Recipients use the signer's public key to decrypt the message digest. If the decryption is successful and the recipient trusts the signer's public key to be valid and the recipient trusts that the sender has protected their private key, then the recipient knows that it was the signer that sent this message.

5. Recipient uses the exact same hash function as the signer to create a message digest from the full, just-received plaintext message.

6. Recipient compares the just-created message digest to the one just decrypted and if they match message integrity has been proven.

These steps are shown in Figs. 5 and 6 where the hash algorithm being used is called Secure Hash Algorithm 1 (SHA-1).

We know that public key encryption only works on small size messages. And we also know that if Alice encrypts a small message with her private key and sends the message to Bob, Bob can use Alice's public key to prove that the message could only have come from Alice. This identification of the sender is one half of what digital signature is all about. The other half relates to obtaining our goal of verifying the integrity of the message. By integrity we mean that we can tell if the message has changed by even one bit since it was sent. The key to integrity in our digital signature design was our use of the hash function to create a message digest.

Hashing the message to a message digest

A hash function is a one-way (nonreversable) function that takes an arbitrary plaintext message and creates a fixed size numerical output called a message digest that can be used as a proxy for our original message. We want this function to be very fast because we are going to need to run this function on both the sending and verifying ends of a communication. Most importantly we must be certain that it is virtually impossible for two messages to create the same output hash value or we will lose our integrity goal.

A large number of one-way hash functions that have excellent collision avoidance properties have been designed and deployed including MD4, MD5, and SHA-1. Weaknesses have been found in the first two and currently most security systems use SHA-1.

If we hash the entire plaintext message and then protect that hash value from being modified in any way and if the sender and the receiver use the exact same input message and hash algorithm, the integrity of the message can be checked and verified by the recipient without the huge expense of trying to encrypt the entire message. So let's discuss next how to protect the message digest.

Public key encryption of the message digest

Protecting the message digest simply involves encrypting the message digest with the private key of the sender, and to send the original message along with the encrypted message digest to the recipient. This public key encryption of the message digest (which is small so asymmetric encryption will work) gives us nonrepudiation because only the identity with the private key that did the encryption could have initiated the message. Protecting the message digest by encrypting it so no middleman attacker could have modified it gives us message integrity.

Signature verification

Signature verification is the process the message recipient must go through to achieve the message integrity and nonrepudiation goals. The recipient receives the original plaintext message and the encrypted message digest from the sender. Separately, or at this same time, the recipient will receive the public key of the sender. The original plaintext document is run through the same SHA-1 hash algorithm originally performed by the signer. This algorithm is identical on all platforms so the recipient has confidence that the exact same result will occur if the document has not been altered in any way. Separately, the encrypted digest is put through the public key decryption algorithm using the provided public key of the sender. The result of this operation is the decrypted message digest that was originally encrypted by the sender. The final step of the verification process is to do a bit-for-bit comparison of the message digest computed locally from the original document to the one just decrypted. If they are an exact match the signature is valid. We now know for sure that the private key that matches this public key is the one that encrypted the message digest which gives us nonrepudiation and we know that the message was sent unaltered so we have integrity. What we still need and we will discuss in a few moments is assurance that we know for sure the identity of the owner of the public key we just used.

Integrity without nonrepudiation

A very different approach to verifying message integrity when nonrepudiation is not a goal is via a message authentication code (MAC). This approach is like creating a cryptographic checksum. Combined with a hash, the acronym becomes HMAC.

Think of an HMAC as a key-dependent one-way hash function. Only someone with the identical key can verify the hash. We know that hashing is a very fast operation so these types of functions are very useful for guaranteeing authenticity when secrecy and nonrepudiation are not important but speed is. They are different from a straight hash because the hash value is encrypted and protected with a key. The algorithm is symmetric: the sender and the recipient possess a shared key.

1.4.3 Certificates, CAs, and CA hierarchies—public key infrastructure

In all of our discussions of public key encryption and its application to digital signatures, we oversimplified to the extreme when we said the public key is just sent to a recipient. We need more than just the public key itself if the public key is from someone we don't know well. We need identity information associated with the public key. We also need a way to know if someone we trust has verified this identity so that we can trust this entire transaction. Trust is what PKI is all about.

Digital certificates are containers for public keys

A digital certificate is a data structure that contains identity information along with an individual's public key and is signed by a certificate authority (CA). By signing the certificate the CA is vouching for the identity of the individual described in the certificate. Therefore RPs can trust the public key also contained in the same certificate.

Bob, the RP, must be certain that this is really Alice's key. He does this by checking the identity of the CA that signed this certificate (how does he trust them in the first place?) and by verifying both the identity and integrity of the certificate through the CA's attached signature. A validity date included in X.509 certificates helps insure against compromised (or out of date and invalid) keys.

The X.509 digital certificate trust model is a very general one. Each identity has a distinct name. The identity must be certified by the CA using some well-defined certification process they must describe and publish in a certification practice statement (CPS). The CA assigns a unique name to each user and issues a signed certificate containing the name and the user's public key.

Certificate authorities issue (and sign) digital certificates

The CA signs the certificate using a standard digital signature using the private key of the CA. Like any digital signature, this allows anyone with the CA's matching public key to verify that this certificate was indeed signed by the CA and is not fraudulent. The signature is an encrypted hash (called the thumbprint) of the contents of the certificate so standard signature verification guarantees integrity of the certificate data. That in turn allows you to believe the information contained in the certificate. Of course what we are really after is trust in the validity of the subject's (i.e., the sender/signer) public key that is contained in the certificate.

So far so good—if you trust the CA who signed this certificate, that is. The entire world may rely on such a signature so you can be sure the CA goes to extraordinary lengths to protect their private key including armed guards, copper clad enclosures and hardware protecting the private key that is heat and vibration sensitive. The matching public key of the CA is typically very widely distributed. In fact, the public keys for SSL certificates are found in all Web servers, in all Web browsers, and in the various trust store mechanisms many operating systems maintain to make sure RPs can always verify certificates signed by those CAs.

They key to trusting the signed certificate is what process the CA used to verify the identity of the subject prior to the issuance of the certificate. It might be based on my being an employee. It might require I produce my drivers license. Or it might be that I satisfy a set of shared secret questions drawn automatically from data bases that know about me such as telephone company, drivers license bureau, and credit bureau. In extreme cases where no doubt is tolerable (national security), a blood or DNA sample might have to be produced.

The CA can be thought of as a digital notary. One's identity is based on the assurance (honesty) of the notary. A certificate policy specifies the levels of assurance the CA has to provide and the CPS specifies the mechanisms and procedures to be used to achieve a level of assurance. Development of the CPS is the most time-consuming and essential component of establishing a CA. The planning and development of the certificate policies and procedures require the definition of requirements, such as key escrow, and processes such as certificate revocation.

A CA may be the guy down the hall, the HR department of your company, a local external company, a public CA, or the government. The CA must be trusted; or vouched for by someone who is.

Certificate revocation for dealing with public keys gone bad

Key escrow is an optional feature, but certificate revocation is an essential part of the certificate process to establish and maintain trust. Authentication of clients and servers requires a way to verify each certificate within the chain, as well as a way to determine if a certificate is valid or revoked. A certificate could be revoked if a key is compromised or lost due to modification of privileges, misuse, or termination. This is why it is essential that near real-time revocation of certificates is achieved. Currently two technologies are used for revocation: certificate revocation lists (CRLs) and online certificate status protocol (OCSP).

A CA must keep an up-to-date list of all certificates revoked in the CRL. It goes without saying that the CA must make it easy for registration authorities to revoke any given certificate (but prove that they have the right to). With CRLs, RPs have the burden of checking this list each time a certificate is presented. Best practices call for a certificate deployment point (CDP) URL to be embedded in the certificate. A CDP is a pointer to the location of the CRL on the Internet accessible programmatically by any RP's applications. CRLs are usually updated once per day because the process of generating them is nontrivial and time-consuming. When dealing with a compromised key or a rogue employee, once per day updates can potentially mean a huge loss could occur during that day especially when interactions are automated. Currently, almost no one checks revocation lists. While CRLs are created obediently by the sponsoring CAs and numerous tools can and do process them, there are so many unsolved problems with them that in this author's view, CRLs on the Internet are a technological failure.

OCSP was an attempt to create a much finer granularity protocol for essentially real-time revocation checking. But like CRLs, the information provided must be signed by the originating CA, which is a very expensive operation to perform in real-time. The best case on an unloaded system of moderate speed is 26 ms response time for a single OCSP request in our tests. In our view, this makes OCSP so limited in scope that it will continue to be only a bit player in revocation solutions.

1.4.4 SSL Transport Layer Security

The last topic under cryptography is about SSL. SSL is arguably the most widely used implementation of PKI ever deployed. It is important and relevant to a discussion of distributed networked embedded device security because it is so easy to use and so effective for some types of these deployments.

SSL security is most commonly used for browser-to-server security for e-commerce uses. SSL is effective at maintaining confidentiality of arbitrary transactions and will prove to be broadly useful for distributed networked embedded device security especially in early implementations.

There are four options when using http transport security:

1. SSL/TLS (one-way)

Secure Socket Layer (also known as Transport Layer Security). This is the same SSL that you use online when entering your credit card on a Web site. Using one-way SSL you get two benefits:

a. You are verifying the identity of the server, and

b. You are getting an encrypted session between the client and the server.

2. Basic Authentication (Basic Auth)

With Basic Authentication, a username and password is sent by the client for authentication. These credentials are sent in the clear so it is common practice to combine Basic Auth with one-way SSL.

3. Digest Authentication

Digest Authentication addresses the issue of the password being in the clear by using hashing technology (e.g., MD5 or SHA-1). Basically it involves the server passing a nonce (just a number or string chosen by the server) down to the client, which the client then combines with the password and hashes using the algorithm specified by the server. The server then receives the hash, runs the same hash algorithm on the password and nonce that it has. There are a couple of problems with Digest Authentication that make it seldom used. The main issue is that it is not supported in a standard way across Web servers and clients. The other issue is, for the server to participate, it must have access to a clear password, meaning the password must be stored in the clear. Many implementations will only store a hashed password making it impossible to participate in a digest protocol defined this way.

4. Client certificates (two-way SSL or mutually authenticated SSL)

This is one-way SSL as discussed earlier with the addition that the client must also provide an X.509 certificate. The protocol involves challenge and response by the server and the client in which information is digitally signed to prove the possession of the private key and therefore, identity based on the public key inside the certificate can be trusted. This is a powerful option but it adds a lot of complexity especially for Web applications with large numbers of clients because each client needs to be issued certificates in order to gain access. In some Web services scenarios—such as business-to-business—this may not be quite so onerous since the number of clients is typically small. However, one of the major complexity implications of using client certificates is that either.

a. X.509 certificates need to be issued by your company to each of the clients, meaning you need to get certificate management software and become a certificate authority, or

b. You need to work with a certificate authority's managed service. Also, unfortunately, configuring your Web server to accept client certificates is often not for the faint of heart, so you will need an experienced systems administrator to be successful.

When people talk about SSL transport layer security they will sometimes use the term “secure pipe” as a metaphor. What this means is that after the SSL endpoints have gone through their protocol (either one-way or two-way SSL) a cryptographic pathway has been created between the two endpoints (see Figs. 3–11). Web services that are based on HTTP as the transport will flow through this secure pipe making all messages sent back and forth confidential.

1.5 Other Ways to Keep Secrets

This section briefly describes four other ways to keep secrets. A one-time pad (OTP) is an encryption technique that cannot be cracked if used correctly. Steganography is the practice of concealing a file, message, image, or video within another file, message, image, or video. A one-way function is a function that is easy to compute on every input, but hard to invert given the image of a random input. Elliptic curve cryptography (ECC) depends on the ability to compute a point multiplication and the inability to compute the multiplicand given the original and product points.

1.5.1 One-time pad

In cryptography, the OTP is an encryption technique that cannot be cracked if used correctly. In this technique, a plaintext is paired with a random secret key (also referred to as a OTP). Then, each bit or character of the plaintext is encrypted by combining it with the corresponding bit or character from the pad using modular addition. If the key is truly random, is at least as long as the plaintext, is never reused in whole or in part, and is kept completely secret, then the resulting ciphertext will be impossible to decrypt or break. It has also been proven that any cipher with the perfect secrecy property must use keys with effectively the same requirements as OTP keys. However, practical problems have prevented OTPs from being widely used.

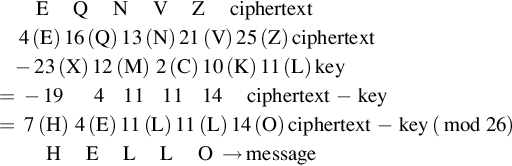

The “pad” part of the name comes from early implementations where the key material was distributed as a pad of paper, so that the top sheet could be easily torn off and destroyed after use. Here is how it works. Suppose Alice wishes to send the message “HELLO” to Bob. Assume two pads of paper containing identical random sequences of letters were somehow previously produced and securely issued to both. Alice chooses the appropriate unused page from the pad. The way to do this is normally arranged for in advance, as for instance “use the 12th sheet on 1 May,” or “use the next available sheet for the next message.” The material on the selected sheet is the key for this message. Each letter from the pad will be combined in a predetermined way with one letter of the message. (It is common, but not required, to assign each letter a numerical value, e.g., “A” is 0, “B” is 1, and so on.)

In this example, the technique is to combine the key and the message using modular addition. The numerical values of corresponding message and key letters are added together, modulo 26. So, if key material begins with “XMCKL” and the message is “HELLO,” then the coding would be done as follows:

If a number is larger than 26, then the remainder after subtraction of 26 is taken in modular arithmetic fashion. This simply means that if the computations “go past” Z, the sequence starts again at A.

The ciphertext to be sent to Bob is thus “EQNVZ.” Bob uses the matching key page and the same process, but in reverse, to obtain the plaintext. Here the key is subtracted from the ciphertext, again using modular arithmetic:

Similar to the above, if a number is negative then 26 is added to make the number zero or higher.

Thus Bob recovers Alice's plaintext, the message “HELLO.” Both Alice and Bob destroy the key sheet immediately after use, thus preventing reuse and an attack against the cipher. OTP has many challenges including being difficult to ensure that the key material is actually random, is used only once, never becomes known to the opposition, and is completely destroyed after use. The auxiliary parts of a software OTP implementation present real challenges: secure handling/transmission of plaintext, truly random keys, and one-time-only use of the key.

1.5.2 Steganography

Steganography is the practice of concealing a file, message, image, or video within another file, message, image, or video. Generally, the hidden messages appear to be (or be part of) something else: images, articles, shopping lists, or some other cover text. For example, the hidden message may be in invisible ink between the visible lines of a private letter. Some implementations of steganography that lack a shared secret are forms of security through obscurity, whereas key-dependent steganographic schemes adhere to Kerckhoffs's principle.1

The advantage of steganography over cryptography alone is that the intended secret message does not attract attention to itself as an object of scrutiny. Plainly visible encrypted messages—no matter how unbreakable—arouse interest, and may in themselves be incriminating in countries where encryption is illegal. Thus, whereas cryptography is the practice of protecting the contents of a message alone, steganography is concerned with concealing the fact that a secret message is being sent, as well as concealing the contents of the message.

Steganography includes the concealment of information within computer files. In digital steganography, electronic communications may include steganographic coding inside of a transport layer, such as a document file, image file, program or protocol. Media files are ideal for steganographic transmission because of their large size. For example, a sender might start with an innocuous image file and adjust the color of every 100th pixel to correspond to a letter in the alphabet, a change so subtle that someone not specifically looking for it is unlikely to notice it.

1.5.3 One-way functions

In computer science, a one-way function is a function that is easy to compute on every input, but hard to invert given the image of a random input. In applied contexts, the terms “easy” and “hard” are usually interpreted relative to some specific computing entity; typically “cheap enough for the legitimate users” and “prohibitively expensive for any malicious agents.” One-way functions, in this sense, are fundamental tools for cryptography, personal identification, authentication, and other data security applications. While the existence of one-way functions in this sense is also an open conjecture, there are several candidates that have withstood decades of intense scrutiny. Some of them are essential ingredients of most telecommunications, e-commerce, and e-banking systems around the world.

We have already discussed one type of one-way function called hash functions in the section on digital signatures. Other forms of one-way function include multiplication and factoring, the Rabin function, discrete exponential and logarithm, as well as others. Hash is easy, fast and very commonly used and we recommend staying with that unless a specific reason for a different form exists.

A cryptographic hash function is a hash function which is considered practically impossible to invert, that is, to recreate the input data from its hash value alone. These one-way hash functions have been called “the workhorses of modern cryptography.” The input data is often called the message, and the hash value is often called the message digest or simply the digest.

The ideal cryptographic hash function has four main properties:

1. It is easy to compute the hash value for any given message.

2. It is infeasible to generate a message from its hash.

3. It is infeasible to modify a message without changing the hash.

4. It is infeasible to find two different messages with the same hash.

Cryptographic hash functions have many information security applications, such as in digital signatures, and message authentication codes (MACs), as we have discussed. They can also be used as ordinary hash functions, to index data in hash tables, for fingerprinting, to detect duplicate data or uniquely identify files, and as checksums to detect accidental data corruption. Indeed, in information security contexts, cryptographic hash values are sometimes called (digital) fingerprints, checksums, or just hash values, even though all these terms stand for more general functions with rather different properties and purposes.

1.5.4 Elliptic curve cryptography

Public key cryptography is based on the intractability of certain mathematical problems. Early public key systems are secure assuming that it is difficult to factor a large integer composed of two or more large prime factors. For elliptic-curve-based protocols, it is assumed that finding the discrete logarithm of a random elliptic curve element with respect to a publicly known base point is infeasible: this is the “elliptic curve discrete logarithm problem” or ECDLP. The security of ECC depends on the ability to compute a point multiplication and the inability to compute the multiplicand given the original and product points. The size of the elliptic curve determines the difficulty of the problem.

The primary benefit promised by ECC is a smaller key size, reducing storage and transmission requirements, i.e., that an elliptic curve group could provide the same level of security afforded by an RSA-based system with a large modulus and correspondingly larger key: for example, a 256-bit ECC public key should provide comparable security to a 3072-bit RSA public key.

The US NIST has endorsed ECC in its Suite B set of recommended algorithms, specifically Elliptic Curve Diffie–Hellman (ECDH) for key exchange and Elliptic Curve Digital Signature Algorithm (ECDSA) for digital signature. The US National Security Agency (NSA) allows their use for protecting information classified up to top secret with 384-bit keys.

1.6 Discovering Root Cause

Root cause analysis (RCA) is a method of problem solving used for identifying the root causes of faults or problems. It is a standard part of thorough engineering in the face of issues much broader than cybersecurity but when a hack is successful against an embedded device, RCA is an important tool to developing a robust system that withstands attempts to cyber attack it. In RCA a factor is considered a root cause if removal thereof from the problem-fault-sequence prevents the final undesirable event from recurring; whereas a causal factor is one that affects an event's outcome, but is not a root cause. Though removing a causal factor can benefit an outcome, it does not prevent its recurrence within certainty.

Think of getting at the root cause of an exploited cybersecurity vulnerability like the difference between treating a symptom and curing a chronic medical condition. The truth is security professionals spend far too much time treating recurring symptoms without penetrating to the deeper roots of software and information technology (IT) issues so that problems can be solved at their source. Without utilizing the principles of RCA, sysadmins and operations managers may be kept too busy treating symptoms to ever bother digging down to find the roots of chronic conditions.

RCA has been used in many famous engineering disasters: the Tay Bridge collapse of 1879, the New London school explosion of 1937, and the Challenger space shuttle disaster of 1986 are a few examples. Builders, users, the public demanded that these types of incidents must never happen again. For that guarantee, it required RCA to be certain.

Now it is routinely used after major cyber attacks to determine what is needed to prevent a recurrence. One of the simplest and most common approaches to RCA—as it's practiced in every field and industry—is the “5-Why” approach developed by Sakichi Toyoda, the founder of Toyota Motor Corporation.

The 5-Why formula is incredibly simple: just keep asking why deeper and deeper like a 5-year-old child.

Formally or informally, this was the process used to ultimately understand how Stuxnet did the damage it did to just the centrifuges and just in one plant in the target country. It went like this:

Q Why did 2000 plutonium processing centrifuges at the nuclear processing site just get wheeled out to the dump?

A They self-destructed and were rendered useless and unfixable.

A Because their program said they should spin up very fast then slow down over and over during many months.

Q Why did they not follow their programming and boost speed over safe limits and slow down again over and over?

A Their program got corrupted before it was uploaded to the controller.

Q Why did the programmers who set up their programming not know the program being uploaded was corrupt?

A The design software itself had been corrupted and hid from the programmer what was actually in the uploaded code.

Q Why did the design software get corrupted?

A Because the entire Windows 7 machine was taken over by the worm.

Q Why was a worm able to take over the Windows 7 machine?

A Because an infected thumb drive was inserted into the machine.

1.6.1 Automatically determining root cause

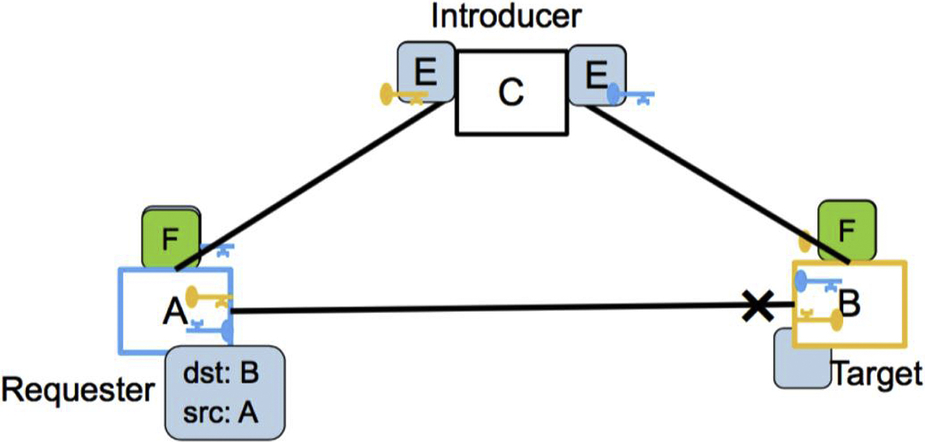

Many attacks are multistage like Stuxnet. Each individual stage might not look like a major attack or even like a big problem. For example, there are legitimate reasons for a port scan so by itself just a port scan is not a reason for alarm. In fact, when monitoring software throws up an alarm at every port scan, a network operations center can be flooded with false negatives. But a port scan followed by an access to a behind-the-wall web page with no protecting password followed by a probe for the heart bleed bug is actually a multistage attack in the works.

A networking environment with a strong identity system and the ability to maintain reputation of an identity is able to keep track of the steps in what might become a multistage attack and is able to not sound an alarm too early but still to track the stages up to the point where it is clear that the individual (or application) is being nefarious and needs to be removed from the system before the last stage of their attack. This is especially important in a distributed environment with a large number of embedded devices because such an environment allows the perpetrator to move around from device to device thinking they are thwarting attempts to detect them.

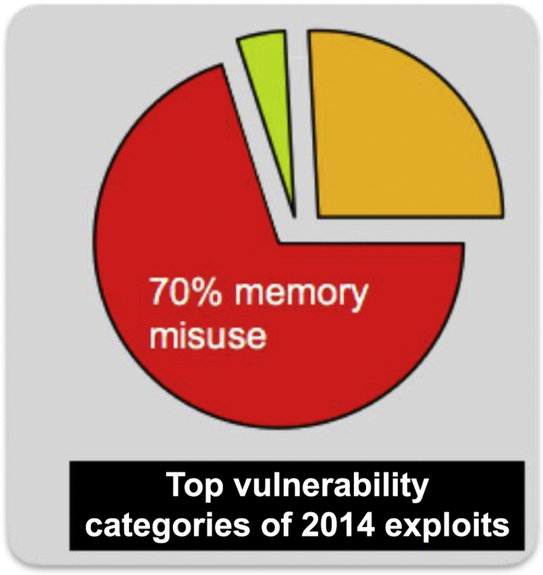

1.7 Using Diversity for Security [4]

First, it is important that we acknowledge that software vulnerabilities exist. Second, we must also recognize that monocultures (where many devices and/or users are all running the same software, e.g., VxWorks or Linux) are highly vulnerable en masse. Diversity is a good way to acknowledge the first point and avoid the dangers of the second.

The principle that a moving target is harder to hit applies not only in conventional warfare but also in cybersecurity. Moving-target defenses change a system's attack surface with respect to time, space, or both. For instance, software diversity makes the software running on each individual system unique—and different from that of the attacker. Diversity can have a potentially large impact on security with little impact on runtime performance. That is not to say that software diversity is free or trivially easy to deploy, but it can be engineered to minimize impact on both developers and users. In addition, diversification costs can be placed up front (prior to execution) so there's no ongoing drag on performance.

The most prevalent form of software design diversity is N-version programming [5]. In an N-version system, developers write redundant software components to the same specification in the conventional manner. The premise behind design diversity is that separating methodology and programming teams will result in different faults in the different versions—although software will still fail, the hope is that different versions will fail at different times and in different ways.

Data diversity is the concept that diversity in the data space (as opposed to the design space) can potentially avoid event sequences that lead to failure. Applying data diversity changes the data that a program reads, causing the program to execute a different path and thereby possibly avoid a fault. Data diversity's advantage over design diversity is that it lends itself to automation and is thus scalable. Data diversity doesn't remove vulnerabilities, it only makes them harder to exploit.

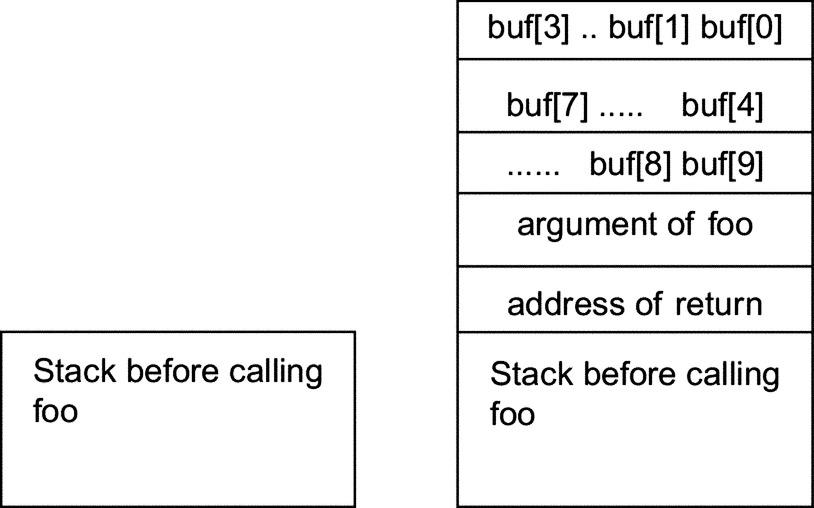

A poor man's diversity of sorts is address space layout randomization (ASLR) [6]. Address space randomization hinders some types of security attacks by making it more difficult for an attacker to predict target addresses. For example, attackers trying to execute return-to-libc attacks must locate the code to be executed, while other attackers trying to execute shellcode injected on the stack have to find the stack first. In both cases, the system obscures related memory-addresses from the attackers. These values have to be guessed, and a mistaken guess is not usually recoverable due to the application crashing.

On the downside, for ASLR to be employed, the code must be compiled such that it is position-independent. Unfortunately, position-independent code increases register pressure on some processors and thus degrades performance. Furthermore, ASLR is highly susceptible to information leakage attacks; because all addresses in a segment are shifted by a constant amount, a single leaked code pointer lets attackers sidestep this defense.

The point in the development pipeline at which diversity is introduced matters for several reasons. Because software is predominantly distributed in binary form, diversification during compilation means that it occurs before software distribution and installation on end-user systems. So, software developers or distributors must pay for the computational resources necessary for diversification. Postponing diversification until the time at which the binaries are installed or updated on the end-user system distributes the diversification cost among users instead. However, post facto diversification via binary rewriting interferes with code signing because it changes the cryptographic hash. Signed code is used pervasively on mobile devices and increasingly on other embedded systems as a way to eliminate one source of cyber vulnerability. Finally, not all applications of diversity are possible with host-based solutions. Diversification makes tampering and piracy significantly harder and protects software updates against reverse engineering; these protections are ineffective if diversification is host based—users can simply disable the diversification engine running on their systems.

1.8 Defense in Depth

The idea behind defense in depth is to defend a system against any particular attack using several independent methods. It is a layering tactic, conceived by the NSA as a comprehensive approach to information and electronic security [7].

Defense in depth means there are overlapping systems designed to provide security even if one of them fails. An example from the enterprise systems domain is a firewall coupled with an intrusion-detection system (IDS). It can equally as effectively be applied in the embedded systems domain. Defense in depth bolsters security because there's no single point of failure and no assumed single vector for attacks.

Defense in depth was originally a military strategy that delays, rather than prevents, the advance of an attacker by yielding space in order to buy time. The placement of security protection mechanisms, procedures, and policies is intended to increase the dependability of an IT system where multiple layers of defense prevent espionage and direct attacks against critical systems. In terms of computer network defense, defense in depth measures should not only prevent security breaches, but also buy an organization time to detect and respond to an attack, thereby reducing and mitigating the consequences of a breach. Of course, key to this strategy working with an array of embedded systems “at the edge” of the network is that they can act as sensors and report back that they have detected an attack in process.

In the industrial control systems domain an increasing number of organizations are using modern networking to enhance productivity and reduce costs by increasing the integration of external, business, and control system networks; the ICA devices are being networked to each other and to the main systems of the organization. However, these integration strategies often lead to vulnerabilities that greatly reduce the cybersecurity posture of an organization and can expose mission-critical industrial control systems to cyber threats. Cyber-related vulnerabilities and risks are being created that did not exist when industrial control systems were isolated (but remember, the Stuxnet SCADA controllers were not connected to any network). A number of instances have illustrated the interdependence of industrial control systems, such as those in the power sector, including the 2003 North American blackout.

Simply deploying IT security technologies into a control system may not be a viable solution. Although modern industrial control systems often use the same underlying protocols that are used in IT and business networks, the very nature of control systems functionality (combined with operational and availability requirements) may make even proven security technologies inappropriate. Some sectors, such as energy, transportation, and chemical, have time sensitive requirements, so the latency and throughput issues associated with security software and hardware systems may introduce unacceptable delays and degrade or prevent acceptable system performance.

What this highlights is the need for security in depth within embedded systems and their frameworks. The same is true for IoT devices (to date more commonly thought of as consumer devices) and while an enterprise may have the ability to lock down, partition, and track the security of its network, it will be impossible for the average consumer or user to even come close. Many new companies are rapidly getting into the IoT market rushing products to market focused on functionality to grab a piece of market share and not giving any consideration to security. This is dangerous and it may mean a looming IoT cybersecurity disaster is on our horizon.

Industrial IoT—or IIoT—such as smart energy, intelligent transportation, factory automation, and industrial process control—like any other network, will require strong protection against malicious intrusions, including pervasive monitoring, wiretapping, MAC address spoofing, MITM attacks, and denial-of-service (DoS) attacks. Of the tens of billions of IoT-connected devices already in use today, few find themselves in physically secure locations. For better or worse, this is a natural consequence of mobility. Yet these same devices are often used to transmit confidential data.

As a specific minimal recommendation relative to defense in depth, it should be standard practice to have different security checks for actions like over-the-wire updates, Web-based management, and command authentication. Passwords or keys should never be stored as cleartext, they should follow the strongest standards for strong passwords and two-factor authentication should strongly be considered. Certainly security should not be an add-on or a simple firewall.

1.9 Least Privilege

The principle of least privilege (also known as the principle of minimal privilege or the principle of least authority) requires that in a particular abstraction layer of a computing environment, every module (such as a process, a user, or a program, depending on the subject) must be able to access only the information and resources that are necessary for its legitimate purpose [8].

The principle means giving a user account only those privileges which are essential to that user's work. For example, a backup user does not need to install software: hence, the backup user has rights only to run backup and backup-related applications. Any other privileges, such as installing new software, are blocked. The principle applies also to a user who usually does work in a normal user account, and opens a privileged, password protected account (i.e., a superuser) only when the situation absolutely demands it.

When applied to users, the terms least user access or least-privileged user account (LUA) are also used, referring to the concept that all user accounts at all times should run with as few privileges as possible, and also launch applications with as few privileges as possible. Software bugs may be exposed when applications do not work correctly without elevated privileges.

The principle of least privilege is widely recognized as an important design consideration in enhancing the protection of data and functionality from faults (fault tolerance) and malicious behavior (computer security).

From a security perspective the principle of least privilege means each part of a system has only the privileges that are needed for its function. This way even if an attacker gains access to one part, they have only limited access to the whole system.

No current OS fully abides by this approach although simpler OSs used in embedded systems have a much better chance of adhering to LP than full-fledged large system OSs like Unix derivatives.

Privilege escalation is a major component of many of the most damaging cyber attacks. Systems that have very little least privilege in their design and run several processes at highest privilege take on huge risk because if an attacker can successfully penetrate and take over such a process (or thread) it can now do anything. The attacker's malware can then read and write memory, can create or modify any existing file, and it can create and run any process. Through these and other means, an attacker can hide latent processes on the current system that may rise again later (called an advanced persistent threat (APT)) and it can spread its malice to machines to which the victim machine is connected. This was one of the strategies Stuxnet used to remain undetected.

1.10 Antitampering

Tampering involves the deliberate altering or adulteration of a product, package, or system. Why do adversaries do it? Three main goals of tampering are:

• To develop countermeasures. Those countermeasures might be to understand how to attack the device or if it is a weapon to develop a counter weapon that defeats or circumvents the capabilities of the device;

• To gain access to technology sooner than the adversary would have naturally developed it. Basically to steal the intellectual property employed in the device to advance the adversaries technological prowess; or

• To reverse engineer the device and in the process modify it to add features or capabilities to it.

Antitamper refers to technologies aimed at deterring and/or delaying unauthorized exploitation of critical information and technologies. Antitamper schemes range from simple “lock-it-up” to “deter-detect-react.”

Military weapons systems are frequent targets of tampering. Tampering may occur to take over a device in order to accomplish some malicious goals. This might be to take over a device so that its behavior or that of a device connected to the tampered one can be affected according to the purposes of the attacker. Tampering may occur by the end user of the product to change its behavior. Tampering with iPhones is common to accomplish what is called jail breaking the phone for the purpose of removing Apple's restrictions.

Tamper-resistant microprocessors are used to store and process private or sensitive information, such as private keys or electronic money credit. To prevent an attacker from retrieving or modifying the information, the chips are designed so that the information is not accessible through external means and can be accessed only by the embedded software. Examples of tamper-resistant chips include all secure cryptoprocessors, such as the IBM 4758 and chips used in smartcards, as well as the Clipper chip.

It is very difficult to make simple electronic devices secure against tampering, because numerous attacks are possible, including:

• physical attack of various forms (microprobing, drills, files, solvents, etc.)

• freezing the device

• applying out-of-spec voltages or power surges

• applying unusual clock signals

• inducing software errors using radiation (e.g., microwaves or ionizing radiation)

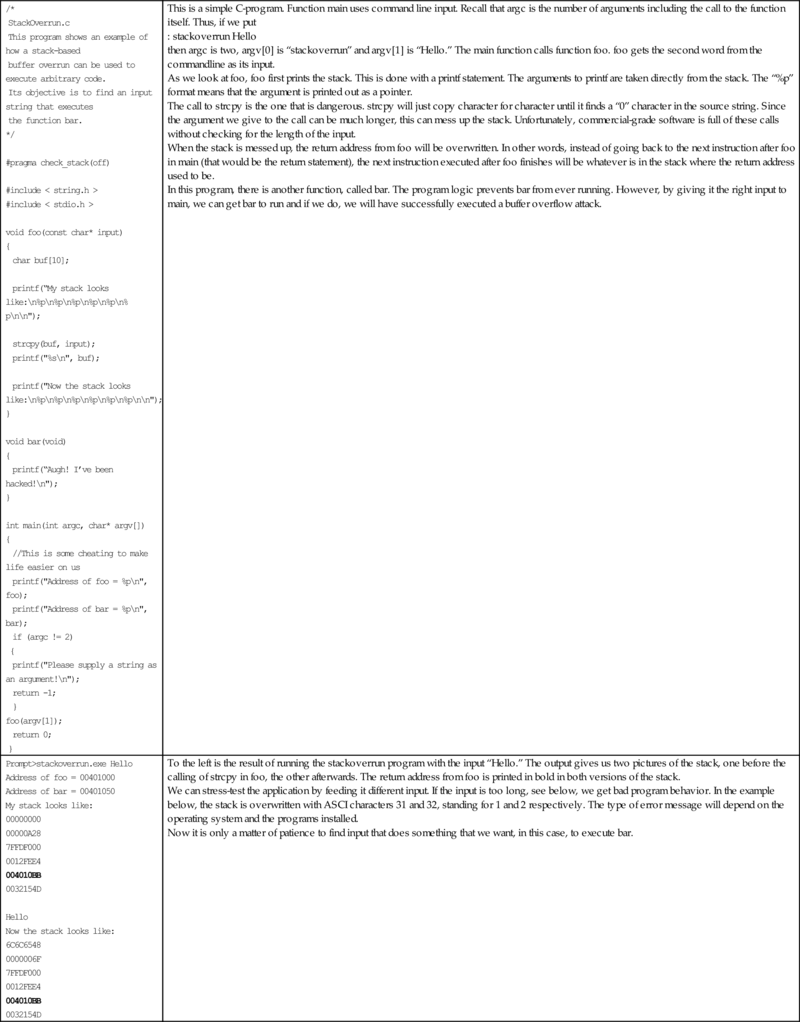

• measuring the precise time and power requirements of certain operations (see power analysis)