5 The Test Plan

This chapter describes the relationship between the strategic concepts of the quality and test policies and their concrete implementation in test planning.

A core element of test planning is creating a set of test plan¹ documents. This chapter provides a detailed account of the typical contents of these planning documents and the activities that lead to them.

5.1 General Test Plan Structure

5.1.1 From Strategy to Implementation

Chapter 4 described the relationship between a quality policy, test policy, and test handbook. Whereas quality and test policy can be considered strategic corporate guidelines, a test handbook ideally represents a collection of “best practices,” supporting and facilitating the implementation of strategic guidelines in concrete applications.

In most cases, these applications—that is, the planning and execution of concrete test activities—take place within a software or system development project. Test activities performed in the development project are subsumed under the term test project.

According to [PMBOK 04], “a project is a temporary endeavor undertaken to create a unique product, service, or result.”

Central features of a project are the plannability and controllability of its activities.

Test plan: planning basis for the test project

In the test project, planning of activities that are geared toward achieving the test objectives is done in the test plan, whose function, among others, is as follows:

• To describe, in brief, all activities of the test project, determining project costs and test execution times and putting them in relation to the project results to be tested, thus forming the basis upon which budget and resources can be approved

• To identify project results (e.g., documents) to be created

• To identify conditions and services to be provided by persons or departments external to the test team

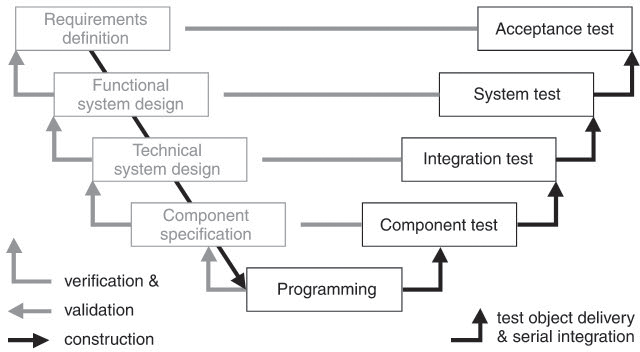

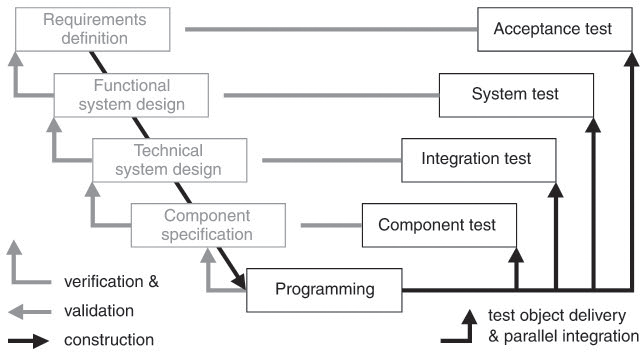

In chapter 2, we explained that the test project is composed of several phases; in a project, these phases are usually repeated in test cycles performed at the various development levels of the test object.

• The test plan must also identify test cycles and major test cycle content, assign activities to the cycles, and align them with the software release plan.

The test plan is the central planning basis of the test project, translating corporate quality goals, test policy principles, and generic test handbook measures into project reality.

The project plan of a development project usually refers to the test plan in its section on test activity planning.

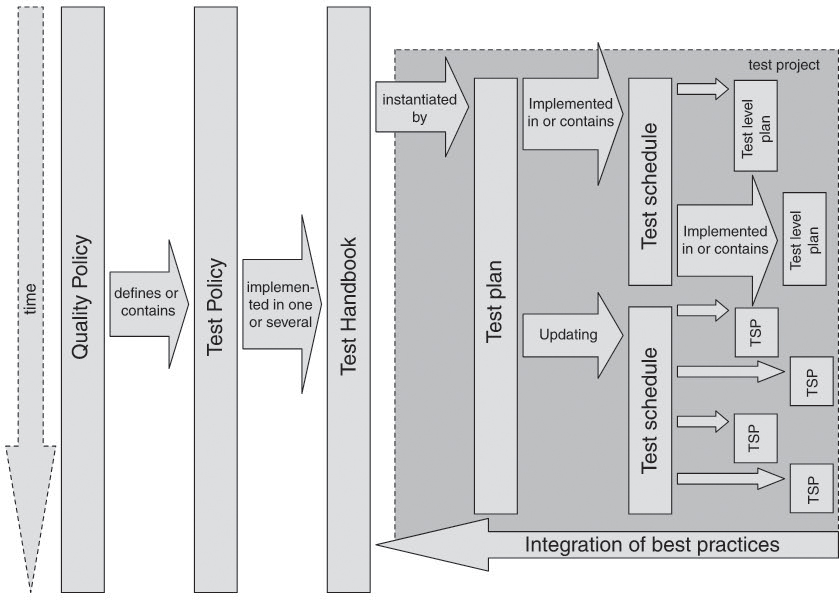

Figure 5-1 illustrates the relationship between the basic components from which test project activities are derived and shows their different life cycles. The figure lists the individual items according to their strategic importance and in the sequence of their “average life expectancy”:

![]() Usually, quality and test policy are changed rarely and are only adapted to incorporate changing corporate goals and long-term project experience.

Usually, quality and test policy are changed rarely and are only adapted to incorporate changing corporate goals and long-term project experience.

![]() Test manuals, whose validity generally encompasses all projects, are also revised at regular intervals, incorporating experiences gained in test projects.

Test manuals, whose validity generally encompasses all projects, are also revised at regular intervals, incorporating experiences gained in test projects.

![]() The test plan is created at the beginning of the test project as the central planning document and is valid throughout the entire project life cycle. However, some of its planning directives are of a dynamic nature and must be revised and adjusted to reality several times during the test project’s lifetime (details are discussed in chapter 6). These are as follows:

The test plan is created at the beginning of the test project as the central planning document and is valid throughout the entire project life cycle. However, some of its planning directives are of a dynamic nature and must be revised and adjusted to reality several times during the test project’s lifetime (details are discussed in chapter 6). These are as follows:

Figure 5–1 From quality policy to test level plan

• The test schedule, linking all executable test activities in the project to concrete resources and dates. Changes in the availability of resources, but also changes in planning requirements (e.g., delivery dates of test objects), regularly lead to changes in the test schedule. In larger test projects with several comprehensive test levels, the test schedule will often be divided further into test level plans.

• Test level plans2 break down the test schedule to the test procedures for each of the planned test levels such as component or system test. They are revised at each test cycle or even more frequently and may even be created anew or derived from the overall test schedule.

As you saw in chapter 4, the quality policy, test policy, and test handbook need not necessarily be separated into three documents.

Monolithic document or granular document structure

The hierarchical contents of the (project-wide) test plan, the test schedule, and the test level plans can be contained in one document or split into several. In the latter case, the recommended contents of a test plan should be covered by the whole set of documents.

There are good reasons to split the contents into several documents:

• If divided as proposed, strategic, or rather static, content is separated from parts that become more and more dynamic and that may need to be revised several times during the test project, making repeated changes and releases a lot easier.

• The single documents can be distributed separately to stakeholders of different parts of the contents.

• Whereas strategic components are usually written down in a text document, a test management tool is best used to manage the dynamic content.

5.1.2 Strategic Parts of the Test Plan

A test handbook simplifies the creation of the test plan.

The test plan describes how testing is organized and performed in a project. Ideally, a mature test handbook with easily applied specifications exists, in which case the test plan can in many parts simply refer to the test handbook and only document and explain necessary deviations. Here are some examples of test plan components that can be covered this way (see also section 4.3):

• Test entry/exit and completion criteria for the respective test levels

• Applicable test specification techniques

• Standards and document templates to be complied with

• Test automation approach

• Measurements and metrics

• Incident management

The test plan adds project specifics to the test handbook.

In addition, the test plan describes the project-specific organizational constraints within which testing may operate.

Although general specifications provided in the test handbook may be used to describe these constraints, every project needs to document facts above and beyond those stated in the test handbook, such as the following:

• Project-related test objectives

• Planned resources (time, staffing, test environment, tester work places, test tools, and so on)

• Estimated test costs

• “Deliverables” to be created, such as test case specification, test reports, and so on

• Requirements for test execution, such as necessary provisions

• Contact persons internal and external to the test team with required communication interfaces

• Identification of components making up the test basis, test objects, and interfaces for testing

The test plan documents the test objectives and appropriate test strategy. Test objectives and test strategy are used to derive the mandatory and the optional test topics, building an initial test design specification. They are, initially, listed by key words and roughly prioritized, resulting in a first draft that will be further elaborated into test case specifications during the course of the project.

The test plan provides the skeleton for the test specification.

All test plan components listed here are of a “static” nature in the sense that they are defined at the beginning of the test project and usually change little or not at all later on. This does not apply to the components covered in the following sections.

5.1.3 The Test Schedule

Keeping the test strategy in mind, concrete technical resources, responsible staff, and target dates must be assigned to the test cases listed in the test case catalog. The test manager determines who is to specify, implement, and, if necessary, automate test cases and at what times. The definition and execution of test cases are thus brought into chronological order, and the initial test specification and prioritization, which was created under the ideal assumption of unlimited resources, is brought into line with the resources actually available. With these time and resource specifications added, the initial test design specification eventually grows into the test schedule, which needs to be maintained at regular intervals.

The test schedule maps the general planning to concrete dates and resources.

A test schedule may, for example, be structured as follows:

Time and resource planning in the test schedule

1. Organizational Planning

• Scheduling of start and end dates of test cycles depending on delivery dates for the builds of the test objects

• Resource planning consisting of

– task assignment to the test team

– layout for the test infrastructure (hard- and software, testware)

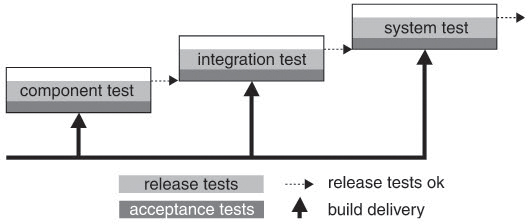

• Scheduling for acceptance testing³ and release testing during transition to subsequent test levels, for the execution of all high-priority tests and any anticipated retests, and so on.

Planning test schedule content

2. Content Planning

• Scoping of test cycle content depending on the anticipated functionality of each of the test objects. Generally, the aim is a mixture of initial, new functionality testing, retesting of areas containing defects from previous cycles, and regression testing of all other areas.

• Step-by-step refinement of the assigned scope per test cycle to build up a list of all planned tests, starting with the test objectives specified in the test plan. In longer, cyclic test projects, this refinement process will not be restricted to test execution but extend to simultaneous specification and, where applicable, test automation for test cases to be executed in subsequent test cycles.

• Effort estimation for each planned cycle as a basis for scheduling. Distribution of effort to specification, automation, and execution depends on the functional scope of the test objects, on test strategy and test objectives, and on resources available for each respective test cycle.

• Planning of an efficient test sequence to minimize redundant actions; for instance, through executing test cases that create data objects before executing those that manipulate or delete them. This way some test cases are a precondition for subsequent ones.

• Additional test cases, defined ad hoc or by explorative testing if resources are available.

• Resource assignment to specific test topics:

– Test personnel with application-specific or test-task-specific know-how, for instance for explorative or ad hoc tests, load tests, and so on.

– Definition of the specific test infrastructure for the selected test tasks (e.g., load generators, usability lab infrastructure).

– Provision of test data and test databases

Chapter 6 describes all the tasks involved in test scheduling, maintenance, and control.
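The idea of planning an efficient test sequence, in which test cases that create data objects run before those that manipulate or delete them, can be sketched as a dependency ordering. The following is a minimal illustration in Python, not a prescribed method; the test case names and the helper `plan_sequence` are hypothetical, and the ordering relies on the standard library module `graphlib` (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Hypothetical test cases. Each entry maps a test case to the set of
# test cases that must have been executed before it (its preconditions).
preconditions = {
    "TC-01 create customer": set(),
    "TC-02 create vehicle order": {"TC-01 create customer"},
    "TC-03 change vehicle order": {"TC-02 create vehicle order"},
    "TC-04 delete vehicle order": {"TC-03 change vehicle order"},
}


def plan_sequence(deps):
    """Return an execution order in which every precondition runs first."""
    # TopologicalSorter expects a mapping: node -> its predecessors.
    return list(TopologicalSorter(deps).static_order())


sequence = plan_sequence(preconditions)
for position, test_case in enumerate(sequence, start=1):
    print(position, test_case)
```

If the dependencies contain a cycle, `static_order()` raises `graphlib.CycleError`, which in planning terms would point to test cases that mutually require each other and need to be redesigned.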

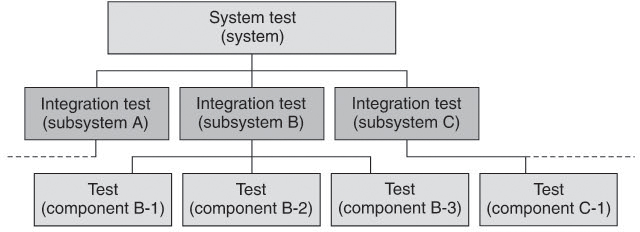

5.1.4 The Level Test Plan

The test plan defines the test procedure for an entire test project, passing through several test levels such as component testing, integration testing, and system test. In large or complex test projects in which different teams may be in charge of different test levels, it may make sense to set up different test plans for different test levels. One test level plan details the planning for a particular test level and represents the application and substantiation of the test plan specifications for that level. It covers the same topics, but it’s constrained to a particular test level. When using a test management tool, such test level plans may not exist at all in document form but only in the form of planning data.

From test plan to level test plan

5.1.5 IEEE 829 – Standard for Test Documentation

The [IEEE 829] standard defines the structure and content of some of the standard documents required in testing, among them the test plan. Using the standard as the basis for creating a test plan helps to ensure that all relevant aspects of test planning for the project are covered.

Standard for test plan structure and content

Besides the test plan definition, IEEE 829 also provides templates for three levels of test specifications, test item transmittal reports, test logs, test incident reports, and test summary reports. A project’s test plan defines which of those documents are to be created by whom and whether or not to use the IEEE 829 or other templates.

The new IEEE 829 (2005) draft currently out for ballot provides appreciably improved specifications for the planning of complex test projects in the form of “master” and “level” documents outlining, for instance, the structures of a “master test plan” and a “test level plan.”⁴

According to the standard, the structure of the test plan comprises the items listed in the following table (the table also states where the required items are discussed in more detail in this chapter). If you compare this list with what was said about static and dynamic test plan components, you’ll see that IEEE 829 covers both. The relevant sections for dynamic content are sections 10 (testing tasks) and 14 (schedule).

Test plan structure according to IEEE 829

Chapter according to IEEE 829 | Refer to section

5.2 Test Plan Contents

The following section addresses the content of the test plan in more detail; sequence and subchapter names correspond to the structure of the IEEE 829 standard. In this book, we concentrate on those aspects that, according to the ISTQB Advanced Level Syllabus, need to be described in detail and that were not exhaustively treated in [Spillner 07].

5.2.1 Test Plan Identifier

The name, or identifier, of the test plan ensures that the document can be clearly and uniquely referenced in all other project documents. Depending on the organization’s general document naming conventions, a number of different name components can be defined by means of which storage and retrieval of the test plan is managed. Minimum requirements are the name of the test plan, its version, and its status.

The identifier ensures that the test plan can be uniquely referenced.
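As a small illustration of the minimum requirements named above (name, version, and status), the following Python sketch builds a reference string from these three components. The class name `PlanIdentifier`, the format of the reference string, and the example values are assumptions made for this sketch; an organization's own document naming conventions take precedence.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanIdentifier:
    """Minimum identifying information of a test plan (illustrative only)."""
    name: str
    version: str
    status: str  # e.g. "draft" or "released"; status values are hypothetical

    def as_reference(self) -> str:
        # The format below is an assumption, not a standardized notation.
        return f"{self.name} v{self.version} ({self.status})"


plan_id = PlanIdentifier(name="VSR-TestPlan", version="1.2", status="draft")
print(plan_id.as_reference())
```

Making the identifier immutable (`frozen=True`) reflects the idea that a new version or status yields a new, separately referenceable identifier.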

5.2.2 Introduction

The purpose of the introduction is to familiarize potential test plan readers with the project and to provide guidance as to where information relevant to their particular role can be found.

The purpose of the introduction is to get the reader acquainted with his role in the test plan.

Typical readers of the test plan (besides the test team itself) are the project manager, developers, and even the end customer.

All external information sources necessary for the understanding of the test plan must also be listed, such as, for instance, the following:

• Project documents (project plan, quality management plan, configuration management plan, etc.)

• Standards (see chapter 13)

• Underlying quality policy, test policy, and test handbook

• Customer documents

Ensure that relevant versions of these sources are referenced. In addition, the introduction is intended to provide an overview of the test objects and test objectives.

5.2.3 Test Items

This section provides a detailed description of the test basis and of the objects under test:

Identifying the test objects

• Documents that form the basis for testing and serve as input information for test analysis and design. Among them are requirements and design specifications for the test objects but also all system manuals.

• Components that constitute the test object itself; that is, all components that need to be present for the associated tests to be executed. This includes providing reference to the versions of the test objects.

• The way in which test objects are passed from development to the test department, for instance via download, CD/DVD, or any other medium.

Particular system components that are not test objects but are required for testing are also mentioned here. Some system components, for example, are supplied by subcontractors and are already tested, so they do not need to be tested again by the project.

5.2.4 Features to Be Tested

During the development of a system, project objectives contain objectives for the implementation of the project, such as the intended release date and overall budget, but also, and in particular, the targets for the quality level; i.e., the nature and implementation of the system’s quality attributes.

Identifying the test objectives

With respect to the quality attributes, the test plan constitutes a kind of contract between project management and test management. The test plan determines which of the quality attributes are to be verified in which way during testing; in other words, it defines what the test objectives of the project are.

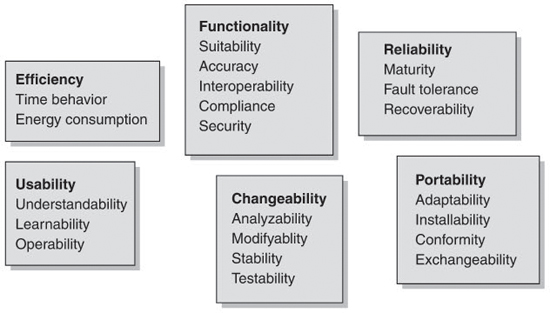

In order to achieve a traceable and consistent attribution of quality attributes to test objectives and test techniques required for their verification across all test levels, it is best to use a well-defined quality model, such as [ISO 9126], shown in figure 5-2.

This standard divides the features of software systems into 6 main quality attributes and 21 subattributes (see also [Spillner 07, section 2.1.3]).

This model allows you to describe the (functional as well as nonfunctional) software features well and particularly supports the description of suitable verification techniques and the quantification of acceptance criteria (see also section 5.3).

Figure 5–2 ISO 9126 software quality attributes

Example: Test goals of the VSR test plan

For instance, the VSR test plan contains the following statements relating to the DreamCar subsystem:

• The quality attribute “functionality” will be tested extensively at the different test levels using all the available test methods. It represents the focus of test activities since accuracy of the calculated vehicle price is the most important feature. The test objective is to test as many functional use cases as possible with all the available data on vehicles, special editions, and car accessories.

• Since DreamCar is supposed to be used by car dealers and prospective buyers alike without any previous experience, special attention is given to the quality attribute “usability.” This quality attribute will be addressed later in the context of system testing,⁵ where a usability test is to be carried out by at least five car dealer representatives and five arbitrarily chosen people.

5.2.5 Features Not to Be Tested

This section describes which project objectives are not a constituent part of the test plan, i.e., which quality attributes are not subject to testing. In particular, stating explicitly which objectives will not be pursued and providing reasons why particular quality attributes and/or system components will not be tested are important parts because they provide for a clear division of responsibilities between test and other forms of quality assurance, such as, for example, verification through reviews.

Identification of the non-objectives of test projects

Example: Non-objectives for VSR DreamCar testing

The VSR test plan, for example, states the following for the DreamCar subsystem:

• Changeability and portability are non-testable quality attributes but must be verified through reviews or other static quality assurance measures.

• Implementation efficiency does not play an essential role in DreamCar and therefore does not need to be tested.

• In addition, the test plan states that the database containing the vehicle data is provided by a subcontractor that sells it as a standard product. Because of mutual trust and the distribution of the database in the market, we may assume that it is sufficiently tested and the defects are minimized.

It helps readability if besides non-tested quality attributes, “not-to-be-tested” system components are listed or referenced too (see section 5.2.3). This way, everything not covered by the test plan is listed in one section.

5.2.6 Approach

The test strategy is the “heart” of the test plan, and its elaboration is closely connected with topics such as the estimation of the test effort attributable to the strategy, organization, and coordination of the different test levels. This is why a major section in this chapter is devoted to this topic (see section 5.3).

5.2.7 Item Pass/Fail Criteria

There must be clear rules for each test level and for the entire test project as to when a test object will be tested and when respective test activities may be stopped.

Test exit criteria and metrics belong together.

This question is, in principle, closely connected with the definition and application of suitable metrics. Chapter 11 lists corresponding metrics and chapter 6 explains their application in test control and reporting. On the other hand, the definition of entry and exit criteria also depends on the organization of the test levels. Section 5.5 provides further information on this issue and lists examples for entry and exit criteria.

Like the test strategy, test completion and acceptance criteria should be oriented toward the project and product risk of the system under test, not only with regard to the stringency of the criteria to be applied (i.e., the necessary accuracy of the metrics) but also with regard to the definition of release criteria based on these metrics (that is, the measurement values that need to be reached for test completion or acceptance).

Often it is useful to distinguish between test exit criteria and acceptance criteria. Test exit criteria are applied in each test cycle to determine the end of a test cycle. Generally speaking, they solely relate to test progress and not to test results. In contrast, acceptance criteria are used to determine the ability to deliver the test objects to the next test level or the customer.

There may be a considerably wider spectrum of acceptance criteria—for instance, test results, the availability of release documents, official release signatures, etc.
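The distinction between test exit criteria (progress only) and acceptance criteria (results and further release conditions) can be made concrete with a small sketch. The fields of `CycleStatus`, the function names, and the threshold values below are hypothetical and serve only as an illustration; actual criteria and limits would be defined in the test plan according to project and product risk.

```python
from dataclasses import dataclass


@dataclass
class CycleStatus:
    planned_tests: int
    executed_tests: int
    open_critical_defects: int
    release_documents_available: bool


def exit_criteria_met(status, min_execution_ratio=0.95):
    """Test exit: relates solely to test progress, not to test results."""
    if status.planned_tests == 0:
        return False
    return status.executed_tests / status.planned_tests >= min_execution_ratio


def acceptance_criteria_met(status, max_open_critical_defects=0):
    """Acceptance: considers test results and release documents."""
    return (
        status.open_critical_defects <= max_open_critical_defects
        and status.release_documents_available
    )


cycle = CycleStatus(
    planned_tests=200,
    executed_tests=196,
    open_critical_defects=2,
    release_documents_available=True,
)
print("test cycle may end:", exit_criteria_met(cycle))
print("test object may be delivered:", acceptance_criteria_met(cycle))
```

In this example the test cycle may be ended because 98 percent of the planned tests were executed, but the test object is not yet ready for delivery because critical defects remain open.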

5.2.8 Suspension Criteria and Resumption Requirements

If a test object is not ready to complete a test level (i.e., to meet the test exit criteria), there is the risk of wasteful testing on that test level while executing the test level plan. In that situation, it makes much more sense to return the test object for repair to development and earlier test levels.

One partial solution for this problem is the definition and application of suspension criteria; another lies in defining entry criteria used for each test level (their application will prevent such a situation or at least reduce its probability).

Test suspension criteria and test entry criteria complement each other.

Such entry criteria can also be used as resumption criteria for resuming an interrupted test level. Again, section 5.5 will provide more detailed information.
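The interplay of suspension criteria and entry criteria reused as resumption criteria might look as follows. This is a minimal sketch under stated assumptions: the class `LevelStatus`, the chosen indicators (failure ratio, share of blocked tests, smoke test, blocking defects), and the thresholds are all hypothetical examples, not criteria prescribed by the text.

```python
from dataclasses import dataclass


@dataclass
class LevelStatus:
    planned: int
    executed: int
    failed: int
    blocked: int                # tests that cannot be run because of defects
    smoke_test_passed: bool
    open_blocking_defects: int


def should_suspend(s, max_failed_ratio=0.3, max_blocked_ratio=0.2):
    """Suspension: further testing at this level would be wasteful."""
    failed_ratio = s.failed / s.executed if s.executed else 0.0
    blocked_ratio = s.blocked / s.planned if s.planned else 0.0
    return failed_ratio > max_failed_ratio or blocked_ratio > max_blocked_ratio


def entry_criteria_met(s):
    """Entry: the test object is fit to be tested at this level."""
    return s.smoke_test_passed and s.open_blocking_defects == 0


def may_resume(s):
    # The entry criteria are reused as resumption criteria.
    return entry_criteria_met(s)


first_delivery = LevelStatus(100, 20, 9, 30, False, 3)
repaired_delivery = LevelStatus(100, 0, 0, 0, True, 0)
print(should_suspend(first_delivery), may_resume(first_delivery))
print(should_suspend(repaired_delivery), may_resume(repaired_delivery))
```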

5.2.9 Test Deliverables

The test plan must state the following very clearly:

• Which documents are to be created in the project?

• What are the formal standards and content-related specifications (release criteria) for these documents?

As mentioned in section 5.1.5, IEEE 829 provides a good baseline for necessary or urgently required documents in the test project; others, such as test automations, may be project-specific additions. Auxiliary testing tools and test automation must be treated as documents, too; if applicable, this also applies to test reference databases.

Like any other software development document, test documents must be subject to project-wide or locally maintained configuration and version management.

Test documents under configuration management

In smaller test projects, the authors themselves will mostly be responsible for this (and likewise for distributing the documents to the recipients defined in the test plan). Larger projects sometimes have their own testware configuration manager, who is usually also responsible for versioning the deliveries of test objects within the test environment and for assigning them to testware versions (concerning responsibilities, please refer to section 5.2.12).

5.2.10 Testing Tasks

In this part of the test plan, test strategy guidelines are broken down into individual activities necessary for test preparation and execution. Responsibilities for these activities, together with their interdependencies and external influences and resources, must also be considered.

Describing test tasks is a dynamic component of the test plan.

It is important not only to list these activities but also to track their individual processing status as well as actual and planned dates. For this reason, we strongly recommend keeping this component of the test plan separate, either in the form of a separate test schedule or as several test level plans (see sections 5.1.3 and 5.1.4).

Ideally, a dedicated test management database is used for this purpose. Maintenance, monitoring, and reporting will be described in chapter 6 within the context of the test schedule.

5.2.11 Environmental Needs

We must describe the environmental needs of the project:

Test platform = basic software, hardware, and other prerequisites

• The requirements with regard to the test platform (for instance, hardware, operating system, and other basic software)

• Other prerequisites necessary for testing (for instance, reference databases or configuration data)

In addition, the following topics need to be addressed:

Test interfaces and tools

• What are the points of control (i.e., which interfaces are used to actuate the test objects), and what are the points of observation (i.e., through which interfaces are the actual responses of the test objects checked)?

• What accompanying software and hardware is necessary? Examples are monitoring and protocol environments, simulators, debuggers, and signal generators.

• Which approaches to test automation are possible and, if required, should be used in this environment?

Depending on the test level, answers to these questions will turn out quite differently for the same test object. For instance, the software of an embedded system may be tested in a host development environment during component testing, using a typical component testing environment like CPPUnit [URL: CPPUnit] or Tessy [URL: Tessy]. During system testing, however, hardware in the loop (HiL) environments may be used. On the one hand, the definition of the test environments is influenced by what is technically feasible; on the other hand, it is influenced by the test tasks and the resulting test techniques. In the end, it is also affected by the hardware and software that is available and within budget and by the proficiency of the testers.

In addition to the test team, further resources are necessary for test development and execution. Typical resources are test computers and test networks and components as well as special hardware such as hardware debuggers and protocol monitors, but you may also need licenses for test software such as test management or test automation tools.

Resource management of test environment components

Depending on the number of (parallel) users and use scenarios, their management requires different degrees of coordination effort.

Resource management is advisable—be it by means of a simple spreadsheet, resource usage database, or project management tool. Strategies on how to deal with lacking resources must then also be part of risk management (section 5.2.15 and chapter 9).

In addition to resource coordination, the management of software components of the test environment requires integration into test version and test configuration management. Only too often the operability of test environments depends on a precise combination of specific versions of the operating system, test automation tool, analysis tools, and test object versions. For example, testing of software involving medical technology, the aerospace industry, and railroad engineering requires strict accountability concerning the storage of all test environment components for 10 or more years (see section 13.4). This, too, needs to be stated in the test plan.

Part of the test environment must be put under configuration management.
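One way to make such a precise combination of versions checkable is to keep it as a manifest under configuration management and compare it with what is actually installed on a test machine. The following is only a minimal sketch of that idea: the component names, the version strings, and the helper `find_mismatches` are hypothetical and not taken from any particular tool.

```python
from typing import Dict, Optional, Tuple

# Hypothetical baseline: the component versions a test environment is
# expected to contain (all names and version strings are made up).
BASELINE = {
    "operating_system": "10.0.19045",
    "test_automation_tool": "4.2.1",
    "analysis_tool": "2.7.0",
    "test_object": "1.3.0-rc2",
}


def find_mismatches(
    baseline: Dict[str, str], installed: Dict[str, str]
) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return every component whose installed version differs from the baseline.

    The result maps component name -> (expected version, installed version);
    a missing component is reported with None as its installed version.
    """
    mismatches = {}
    for component, expected in baseline.items():
        actual = installed.get(component)
        if actual != expected:
            mismatches[component] = (expected, actual)
    return mismatches


# Simulated inventory of one test machine: one tool drifted, one item missing.
installed = dict(BASELINE, analysis_tool="2.8.0")
del installed["test_object"]

print(find_mismatches(BASELINE, installed))
```

An empty result would indicate that the machine matches the archived baseline; anything else is a candidate entry for the incident or risk list.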

One of the factors in gaining efficiency in testing is efficient test platform management. Using imaging software and an image management environment [URL: Imaging] can help to significantly reduce setup times for test runs in that it allows fast installation of “images” for test hardware across projects with common and often required combinations of operating system, test software, and other platform components (e.g., Office package). In combination with virtualization solutions such as VMware and Plex86 [URL: Virtualization], these standard images can be run in virtual hardware and are thus largely independent of short-lived hardware development in the PC sector. In addition, the technology can also be used to make snapshots of the computer states prior to longer test runs, providing points for fallback in case of failing test runs.

5.2.12 Responsibilities

The general responsibilities are test management, test specification, test preparation, test execution, and follow-up.

Further responsibilities concern internal test project quality assurance, configuration management, and the provision and maintenance of the test environment.

The organizational integration of test personnel into the overall project, the distribution of authority within the test team, and, where required, the division/organization of the test teams into different test groups and/or test levels must be defined here.

Most of these responsibilities are related to the creation of documents (see section 5.2.9). Defining responsibilities only at the level of “persons X, Y, and Z are assigned the role of R” is not sufficient to ensure on-time creation and adequate document quality. Additionally, for each of the documents we must explicitly answer the following questions:

Responsibilities also comprise document management.

![]() Who is the responsible author and who participates in which role in the creation of the document?

![]() How, when, and by whom will the document be released?

![]() How, when, and by whom will it be distributed after release?

This document plan may be created in either section 5.2.9 or section 5.2.12 of the test plan.

5.2.13 Staffing and Training Needs

For each role or responsibility, we must state the staffing requirements needed to implement the test plan; furthermore, we must specify how role-specific and project-specific training and instruction are to be carried out.

In mature test processes, roles and necessary qualifications are already defined in the test handbook.6 Based on the list of necessary resources, the test plan simply refers to the predefined role descriptions. The use of a standardized training scheme like that of the “ISTQB Certified Tester” facilitates the creation of this chapter by offering a reference ensuring the qualifications necessary for a particular activity in the test process through standardized training content. At the same time, responsibilities for the standard documents mentioned above can be assigned to these roles. Section 10.2 provides more details on role qualifications.

Using standardized training schemes facilitates specification of the necessary qualifications.

In addition to these test-specific qualifications, involved staff frequently need additional, comprehensive, domain-specific qualifications to create good test specifications, to be able to judge system behavior during test execution, and to look beyond their own test specification to recognize, describe, and adequately prioritize anomalies. Other desirable “soft skills” are listed and explained in chapter 10.

5.2.14 Schedule

To begin with, a draft schedule is drawn up of test activities at the milestone level. Because of the inevitable dynamics of scheduling, however, the draft schedule only serves as a starting point for subsequent detailed planning of all activities, which again needs to be updated regularly.

The milestones comprise planned document release dates, due dates for (partial) releases of the test objects, and their delivery dates from development to test.

Based on these milestones, the detailed test schedule is derived; in large projects, this may involve creating several test level plans. This plan or these plans must find their way into the project manager’s project plan. Regular coordination between project and test manager must be ensured.

The test schedule (goal) is derived from the milestone plan (implementation).

The test manager must be informed about development release delays and react to them in his detailed test planning. The project manager must react to test results and may even have to postpone milestones because additional correction cycles need to be planned. Chapter 6 describes the interaction between development and test and also explains the test manager’s task when controlling and adapting the plan.

5.2.15 Risks and Contingencies

One of the test objectives is to minimize product risk. This is done by selecting a suitable test strategy. A test plan must also address project risks; i.e., all factors that might jeopardize the planned achievement of the test objectives in the test project must be identified, analyzed, and if possible, avoided. The test manager must—not only in this respect—think and act like a project manager. Chapter 9 provides more details on this subject.

Product risk is a matter for test strategy. Test project risk management is the subject of this chapter in the test plan.

The hurdle is high for the introduction of full, formal risk management. One easy way into this topic is to simply list known risks in the test status report, thus making involved staff aware of risks without necessarily having to introduce methods for risk evaluation and risk treatment at that point.

5.2.16 Approvals

List the names and roles of everyone who needs to sign the test plan as proof of notice. Depending on the signer’s role, this has the following consequences:

![]() Usually the project manager’s signature releases the budget necessary for the implementation of the concept. Unfortunately, in practice, it too often only shows that notice has been taken of the test manager’s statement that the available budget is not sufficient to carry out the measures necessary for achieving the quality goals, with all the ensuing risks.

![]() The development leader’s signature confirms that the described tests are adequate and, if applicable, that he agrees to the involvement of the development team and the provision of necessary resources.

![]() The end customer’s signature confirms acceptance of the proposed quality assurance measures for his product; it may also mean that the planned acceptance tests are cleared for release.

![]() Last but not least, the test team needs to sign the test plan, thus communicating its “commitment” to joint activities.

5.3 Defining a Test Strategy

The test strategy defines the techniques to be employed for testing test objects, the method of distribution of available resources to components and quality attributes under test, and the sequence of activities that need to be performed.

Depending on the feature, testing of the quality attributes required by the system asks for completely different procedures and test techniques to be applied at the different test levels. Within the context of ISO 9126, testing is a process by which the degree of fulfillment of the quality attributes is to be measured.

Strategy for the selection of suitable test techniques

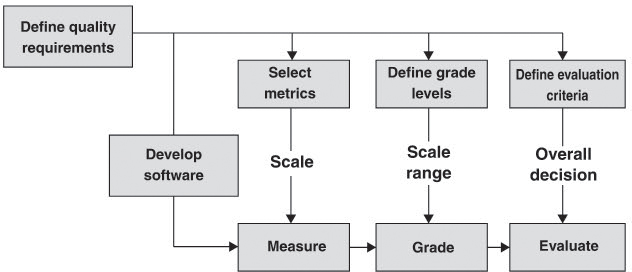

According to ISO 9126, it is generally not possible to measure quality attributes directly. The standard, however, provides an abstract definition for this evaluation process (see figure 5-3):

Figure 5–3 ISO 9126 software evaluation process

In this process, testing can be considered a sequence of the following activities:

![]() Select metrics (i.e., define suitable test techniques)

![]() Define classification levels (i.e., define acceptance criteria)

![]() Measure (i.e., execute test cases)

![]() Categorize (i.e., compare actual behavior with the acceptance criteria)

The test strategy correlates each feature to be tested with methods for adequate feature testing and the classification level required for feature acceptance.

In a mature test organization, this task can be done at test handbook level, which then contains information about the general test design strategy and correlates the general quality attributes and test techniques available in the organization with each other.

Examples for test techniques

The following two examples illustrate how these attributes, methods, and classification levels correlate.

1 “Accurateness”

The functional quality attribute “accurateness” is defined as “attributes of software that bear on the provision of right or agreed results or effects” (e.g., required accurateness of calculated values).

A suitable test technique at the level of system testing is use case testing [Spillner 07, section 5.1.5] with the additional application of equivalence class partitioning and boundary value analysis [Spillner 07, sections 5.1.1 and 5.1.2].

One example of a suitable metric for determining the classification levels is the deviation between calculated and expected value of an output parameter.

Possible classification levels for acceptance are as follows:

![]() 0 .. 0.0001: acceptable

![]() > 0.0001: not acceptable
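The “measure” and “categorize” steps for this example can be sketched in a few lines. The threshold of 0.0001 comes from the classification levels above; interpreting the deviation as an absolute difference and the function name `classify_accuracy` are assumptions made for illustration.

```python
ACCEPTABLE_DEVIATION = 0.0001  # upper bound of the "acceptable" level in the example


def classify_accuracy(calculated: float, expected: float) -> str:
    """Categorize one measurement by its absolute deviation from the expected value."""
    deviation = abs(calculated - expected)  # assumption: absolute, not relative, deviation
    return "acceptable" if deviation <= ACCEPTABLE_DEVIATION else "not acceptable"


# Two hypothetical measurements of the same output parameter.
print(classify_accuracy(2.50004, 2.5))  # deviation of about 0.00004
print(classify_accuracy(2.5002, 2.5))   # deviation of about 0.0002
```

If a relative deviation suits the output parameter better, only the line computing `deviation` would have to change; the classification levels stay the same.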

2 “Operability”

The nonfunctional quality attribute “operability” is defined as “attributes of software that bear on the user’s effort for operation and flow control”.

A suitable test technique for this attribute is an operability test covering the major use cases of the system. A useful metric is the measurement of the average time spent on each of these use cases for different classes of users (e.g., beginners, advanced users).

It makes sense to define specific classification levels for each of the use cases. For the use case “create new model in the DreamCar configuration”, we could, for instance, define these classification levels:

![]() <1 minute: excellently suitable

![]() 1–2 minutes: acceptable

![]() 2–3 minutes: conditionally acceptable

![]() >3 minutes: not acceptable
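As a sketch of how these levels could be applied, the following code averages the measured times per user class and categorizes the result. The measurements are invented, and because the levels above do not say where exactly 2 or 3 minutes belong, the code assumes that each boundary value falls into the better of the two adjacent levels.

```python
from statistics import mean


def classify_operability(avg_minutes: float) -> str:
    """Map an average task time (in minutes) to the example's classification levels."""
    if avg_minutes < 1:
        return "excellently suitable"
    if avg_minutes <= 2:  # assumption: exactly 2 minutes still counts as acceptable
        return "acceptable"
    if avg_minutes <= 3:  # assumption: exactly 3 minutes is conditionally acceptable
        return "conditionally acceptable"
    return "not acceptable"


# Hypothetical measurements (in minutes) for the use case, grouped by user class.
measurements = {
    "beginners": [2.5, 3.0, 2.0],
    "advanced users": [0.5, 1.0, 0.75],
}

for user_class, times in measurements.items():
    print(user_class, "->", classify_operability(mean(times)))
```

Reporting the result per user class rather than as one overall average keeps a poor result for beginners from being hidden by fast advanced users.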

Strategy for the distribution of test effort and intensity

Many test techniques are scalable in principle; i.e., with more effort one can achieve a higher test coverage. Normally, this scalability ought to be used when spreading available resources across the test activities, as not all possible test tasks can be processed with the same intensity.

The test manager bases his considerations on the following aspects:

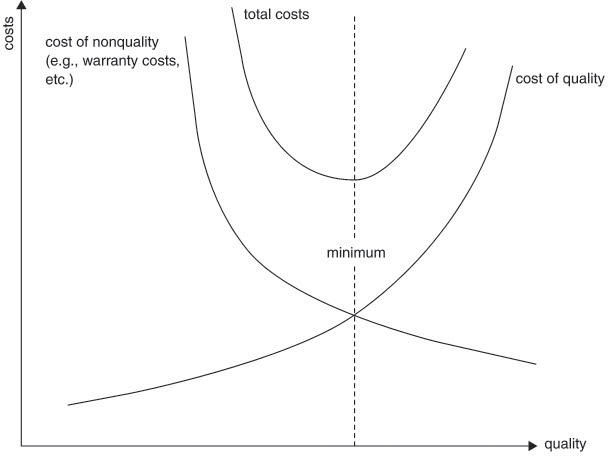

![]() Information on product risk. Chapter 9 exemplifies the necessary basic mechanisms of risk identification, analysis, evaluation, and treatment. Most important in this context is the requirement that the test should minimize product risk7 as quickly as possible and with the least possible effort, as illustrated in figure 5-4.

Figure 5–4 Quality costs and risk, according to [Juran 88]

Testing improves the overall quality of products. With increasing quality, however, cost increases disproportionately. On the other hand, the very high product risk before the start of testing is successively reduced as the expected costs of nonquality decrease. Economically speaking, the best possible time to stop testing is the point where both curves intersect; here the minimum of the sum of the costs of quality and nonquality is reached. In order to make risk reduction effective as early as possible, planned quality assurance measures must be performed in the sequence of the product risks they address.

Find the balance between test and failure cost.

To get to this minimum quantitatively and methodically is a very complex task; the section on “precision testing” in chapter 9 provides some approaches.

![]() Consideration of project risks. Injection of defects in a system is influenced by a number of risk factors inherent in the development project. Among such influencing factors are communication problems in distributed teams, different process maturities of involved development departments, high time pressure, and the use of new but not yet properly mastered development techniques. As with product risk, the test manager must try to quantify his knowledge about these factors to be able to use them as a basis for determining the test intensity or test effort for the different test tasks.

The test manager must now take all the information on product and project risk into account for his effort distribution. This is best done by using unique algorithmic mapping of all known influencing factors on a particular system feature’s product and project risk and calculating one single measurable value for the test effort allocated for testing this feature. Such calculation techniques are, for instance, described in [Gutjahr 95] and [Schaefer 96] (for both, see also chapter 9). The second technique, in particular, is very practical and easy to use. The test manager may, of course, define calculation methods that are perhaps simpler and better suited to his particular project situation.

Effort distribution, taking risk into account

A general formula for such a figure is expressed as

$$TE(F_x) = f\left(R_{\mathrm{Product}}^{F_x},\, R_{1,\mathrm{Project}}^{F_x},\, \ldots,\, R_{n,\mathrm{Project}}^{F_x}\right)$$

whereby:

| Symbol | Meaning |
| --- | --- |
| $F_x$ | feature x of the system under test |
| $TE$ | measured value for the test effort to be allocated |
| $f$ | mapping between risks and test effort |
| $R_{\mathrm{Product}}^{F_x}$ | product risk of feature x |
| $R_{y,\mathrm{Project}}^{F_x}$ | different project risks during implementation of feature x |

Following are some basic rules for such a mapping:

![]() In most cases, there are too many influencing factors to consider them all with justifiable effort; some of these factors can easily be quantified, whereas with others, it may not be possible at all with the available knowledge. The basic rule is as follows: It is better to begin with a few, easily assessable factors. In our experience, they cover approximately 80% of all the influencing factors (the Pareto principle8 applies here, too).

Basic rules for a pragmatic consideration of risks

![]() In most cases, neither product nor project risk is completely quantifiable. Instead, it is sufficient to work with classifications (e.g., 1 = “little risk”, 2 = “medium risk”, 3 = “high risk”). The classification should not be too fine-grained (better to work with three instead of eight risk classes).

![]() Avoid using overcomplex algorithms for calculation of risks; in most cases, simple multiplication and addition are sufficient. To improve algorithms, nonlinear weighting may be applied for classification (e.g., 1 = “little risk”, 3 = “medium risk”, and 10 = “high risk”). Such emphasis on high risks leads to a good differentiation between measured effort values.

![]() For practical use of the calculated effort values, a simple mapping between measured value and scaling of test methods must be defined. Assuming the measured effort values to be between 0 and 1,000 (depending on function f), we can give the following example:

• $0 < TE(F_x) < 100$: no test

• $100 \le TE(F_x) < 300$: “smoke test”

• $300 \le TE(F_x) < 700$: normal test, e.g., application of equivalence class partitioning

• $700 \le TE(F_x)$: intensive testing, application of several test techniques in parallel
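To illustrate the rules above, the following sketch combines a nonlinear weighting of three risk classes with a simple multiplication and maps the result to a test intensity. The concrete function f used here (ten times the product risk weight times the mean of the project risk weights), the feature names, and their risk ratings are assumptions chosen only so that the values fall between 10 and 1,000; they are not the calculation described in [Gutjahr 95] or [Schaefer 96].

```python
from statistics import mean

# Nonlinear weights for the three risk classes (1, 3, 10), as in the rules above.
RISK_WEIGHT = {"little": 1, "medium": 3, "high": 10}


def effort_value(product_risk, project_risks):
    """Hypothetical mapping f: 10 * product weight * mean of the project weights.

    With the weights above, the result lies between 10 and 1,000.
    """
    project_weight = mean(RISK_WEIGHT[risk] for risk in project_risks)
    return 10 * RISK_WEIGHT[product_risk] * project_weight


def intensity_for(te):
    """Map a measured effort value to the test intensity of the example thresholds."""
    if te < 100:
        return "no test"
    if te < 300:
        return "smoke test"
    if te < 700:
        return "normal test"
    return "intensive testing"


# Hypothetical features with their product risk and two project risk factors each.
features = {
    "price calculation": ("high", ["high", "high"]),
    "model configuration": ("high", ["medium", "high"]),
    "dealer search": ("medium", ["high", "little"]),
    "brochure printing": ("little", ["little", "medium"]),
}

for name, (product_risk, project_risks) in features.items():
    te = effort_value(product_risk, project_risks)
    print(f"{name}: TE = {te:g} -> {intensity_for(te)}")
```

Because of the 1, 3, 10 weighting, a single high-risk rating moves a feature up by a whole intensity level far more readily than it would with linear classes 1, 2, 3.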

Besides determining the test effort, the test strategy must also define the sequence of the test activities and the most suitable dates to start them. From a technical perspective, it makes sense, in principle, to start those test activities first that bring about the highest risk reduction. In most cases, however, additional technical dependencies exist that also impact the planning of the sequence. Last but not least, the sequence of the feature releases by development also forms an important basis for planning. This basis, however, is dynamic in nature, so sequence planning is best kept as a separate document or, ideally, integrated into a test management tool (see sections 5.1.3 and 5.1.4).

Strategy for determining sequence and time of the activities

A test strategy that is entirely structured according to formal criteria reduces the probability of finding defects hidden in the “blind spot” of requirement and risk analysis, i.e., in the area of implicit requirements. At the latest, when the system is put into operation, it is left to the unlimited “creativity” of the end user. Often a massive increase of the failure rate can then be observed. Intuitive and stochastic test case specification should therefore be given enough room within the context of the overall test planning. Well-defined methods exist, which is to say that creative or random-based testing need not mean that the approach is unstructured or chaotic! [Spillner 07] describes these techniques in section 5.3; they are well suited to anticipating end user profiles in a systematic test environment.

Including heuristics, creativity, and chance

This increases the defect detection percentage of those defects that are most visible in the end user environment—a rather effective measure to enhance customer satisfaction. Besides involving creativity and chance, heuristics also play an important role in a good test strategy.

[Spillner 07] mentions and explains several heuristic planning approaches in section 6.4.2; they are therefore not repeated here.

The development of a suitable test strategy is an elaborate and complex process that, as already mentioned, requires iterative alignment of test objectives, effort, and organizational structures.

Reusability of test strategies

It is all the more important that a strategy thus developed is well documented and that it is, stripped of all project-specific data, integrated for reuse into the test handbook of the organization(-al unit). Candidates for such a reusability strategy are, at least, these:

![]() The definition of test techniques and their assignment to quality attributes

![]() The methods for the calculation of the test intensity based on risk factors

5.4 Test Effort Estimation

In most cases, estimating the expected test effort is an approximate evaluation based on experience values, either one’s own or those of other people or institutions. Depending on the underlying information base and the required precision and granularity of the estimation, different estimation techniques are used. This section considers the following:

![]() Flat models or analogy estimations

![]() Detailed models based on test activities

![]() Formula-based models based on the functional volume to be tested

5.4.1 Flat Models

The simplest method used for test effort estimation is to add a percentage flat rate to the development effort—for instance, 40% for test effort. A simple model such as this is better than nothing and may be the only way—for instance, in case of a new development in a business segment hitherto not covered by the organization—to get to an estimation.

Percentage flat rate as a starting point

What is more, this simple estimation of the test effort causes considerably less estimation effort than more complex methods and may therefore be quite adequate for small projects.

Of course, the precondition is that (apart from a consistent add-on factor) the development effort is known or has been estimated accurately.

Consistent tracking of actual effort and iterative improvement of the estimation model, by comparing actuals against plan after project end, are more important here than in any other method used for estimating test effort. The next test project will then at least be based on a more suitable percentage value for the estimation.
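The arithmetic of the flat model and of its recalibration after project end is simple enough to sketch directly. The 40% add-on is the example value used above; the effort figures in person-days and both function names are hypothetical.

```python
def flat_test_effort(dev_effort_pd: float, add_on: float = 0.40) -> float:
    """Estimate test effort as a flat percentage of the development effort (person-days)."""
    return dev_effort_pd * add_on


def recalibrated_add_on(actual_dev_pd: float, actual_test_pd: float) -> float:
    """Add-on factor actually observed at project end, for use in the next estimate."""
    return actual_test_pd / actual_dev_pd


# Hypothetical project: 500 person-days of development were planned.
planned = flat_test_effort(500)

# Hypothetical actuals logged at project end: 520 development, 260 test person-days.
observed = recalibrated_add_on(520, 260)

print(f"planned test effort: {planned:.0f} person-days")
print(f"observed add-on factor: {observed:.0%}")
```

In this invented case the observed factor is clearly higher than the 40% used for planning, which is exactly the kind of deviation the next project's flat rate should take into account.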

To start with such a flat model, one may take into account experience values from similar projects, technologies, corporate or sector comparisons, or of course general literature sources. Sources with suitable add-on factors are, among others, [Jones 98], [URL: Rothman], and [Rashka 01]. Looking for comparable sources, several factors need to be considered:

Use comparison data

![]() The source used for comparison must suit the task; i.e., it must take into account the organization’s own implemented software technology. For example, sources used for estimating the effort to test an embedded system developed in C are probably inadequate to estimate the effort to test a web portal developed in .NET.

![]() The authenticity of the source needs to be checked, especially with regard to the fidelity of the data collection.

![]() It makes sense to use several sources. If the data is contradictory, adjustments can be made using mean value and standard deviation; if the contradictions cannot be resolved this way, it is better to remain on the safe side and discard the sources altogether.
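As a sketch of such an adjustment, the following code combines add-on factors from several sources into a mean value and uses the standard deviation as a rough range around it. The three factors and the development effort are invented for illustration and are not taken from the literature sources cited above.

```python
from statistics import mean, stdev

# Hypothetical add-on factors (test effort / development effort) from three sources.
source_factors = {"source A": 0.30, "source B": 0.40, "source C": 0.50}

values = list(source_factors.values())
avg = mean(values)
spread = stdev(values)  # sample standard deviation of the factors

dev_effort_pd = 500  # hypothetical development effort in person-days
low = dev_effort_pd * (avg - spread)
high = dev_effort_pd * (avg + spread)

print(f"add-on factor: {avg:.2f} +/- {spread:.2f}")
print(f"test effort: {low:.0f} to {high:.0f} person-days")
```

A spread that is large compared with the mean is a signal that the sources are not comparable and that discarding them may be the safer choice.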

5.4.2 Detailed Models Based on Test Activities

If the simple estimation method cannot be used satisfactorily, the test project must be considered a concatenation of individual activities and different estimation methods must be used for each individual activity. This approach follows classical methods of project management. There are many different approaches; for orientation, some criteria given below consider the different types of resources required for the execution of the activities:

Classic project management approach

![]() Time

![]() Hardware and software for the test environment

![]() Testware such as test automation tools

To estimate this effort usually requires a combination of intuition, experience, and comparison values as well as formal calculation methods. The following factors, among others, may enter into the calculation, depending on the desired accuracy of the effort estimation:

Diverse influencing factors

![]() Number of tests (conditional on the type, number, and complexity of the requirements on the test object but also on the planned test intensity, resulting from risk estimation)

![]() Complexity and controllability of the test environment and of the test object (in particular during its installation and configuration)

![]() Estimated number of test repetitions per test level based on assumptions about the progress of defect detection

![]() Maturity of the test processes and of the applied methods and tools

![]() Quality of available documentation for the system and test environment

![]() Required quality and accuracy of documents to be created

![]() Similarity of the project to be estimated with previous projects

![]() Reusability of solutions from previous projects

![]() (Test) maturity of the system under test (If the quality of the tested systems is bad, the effort required to meet the test exit criteria may be difficult to define.)

![]() Adherence of test object deliveries to schedule

![]() Complexity of scheduling possible part or incremental deliveries, especially during integration testing

![]() Results of possible previous test levels and test phases

A typical basic mistake during effort estimation is to take all scaling factors into account but not all effort types that scale accordingly. Essentially, such effort types follow the phases of the test processes. Effort needs to be considered for the following:

Different effort types

![]() Test planning (among other things, effort required for developing the effort estimation itself).

Test planning (among other things, effort required for developing the effort estimation itself).

![]() Test preparation (reception, configuration management and installation of the test objects, provision of necessary documentation and templates and possibly test databases, setting up the test environment, etc.).

Test preparation (reception, configuration management and installation of the test objects, provision of necessary documentation and templates and possibly test databases, setting up the test environment, etc.).

![]() Staffing (e.g., coaching and/or training of testers).

Staffing (e.g., coaching and/or training of testers).

![]() Test specification at all test levels. Estimation techniques may differ considerably depending on the quality attributes to be tested and the applied test techniques and test tools.

Test specification at all test levels. Estimation techniques may differ considerably depending on the quality attributes to be tested and the applied test techniques and test tools.

![]() If necessary, test automation for selected tests—basically, the same applies here as for the test specification; there are, however, some additional variations regarding the costs for test automation tools.

![]() Test execution at all test levels, again considering different estimation techniques for different types of tests.

![]() Documentation of test execution, i.e., reports and incident reports.

![]() Defect analysis and retests—the latter naturally scaling with the number of detected anomalies, thus requiring a separate estimation of the number of defects: the defect detection percentage and the defect correction percentage.

![]() Collection of test metrics.

![]() Communication within the test team and between test and development team (meetings, e-mails, management of incident reports, Change Control Board, etc.).

Within these effort types, individual tasks must then be identified and estimated individually. Such tasks should be kept rather small (a few person days) and should always be tied to a concrete event at the start and at the end, so that tracking the actual effort between these two points is as simple as possible.

Keep estimated tasks small.

The same applies as mentioned earlier: some estimations are extremely difficult. To improve subsequent estimations, the techniques applied and the assumptions made must be documented, the actual effort must be logged accurately, and deviations must be analyzed.
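
Logging and deviation analysis can be kept very lightweight. The following is a minimal sketch, assuming each task is recorded with its estimated and actual effort in person days; the names `TaskRecord` and `tasks_to_review` and the 20% threshold are hypothetical and not taken from the text.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    """One estimated task with its logged actual effort (hypothetical structure)."""
    name: str
    estimated_days: float
    actual_days: float

    @property
    def deviation(self) -> float:
        """Relative deviation of actual from estimated effort (0.25 means 25% over)."""
        return (self.actual_days - self.estimated_days) / self.estimated_days


def tasks_to_review(tasks, threshold=0.2):
    """Return the tasks whose relative deviation exceeds the (assumed) threshold."""
    return [t for t in tasks if abs(t.deviation) > threshold]
```

Tasks returned by `tasks_to_review` are the candidates for which the documented assumptions should be revisited.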

All these estimation techniques should not lead one to forget that testing is a team process:

Estimations are part of the teamwork.

![]() A test manager must not perform effort estimation in an ivory tower. Assumptions and estimations should be discussed in the team, with team members being involved in the estimation of activities assigned to them. Different estimations from different people can be averaged, or alternatively the minimum and maximum values can be used as good-case and bad-case estimations.

![]() It must be considered that later execution of the work is also subject to variation in the same way the estimations are. In our experience, tasks performed by someone not involved in estimating their effort always take longer than expected.

![]() Effort does not only depend on the qualification and knowledge of those involved but also on their motivation!

![]() The quality and test policies (i.e., the importance accorded to testing in the enterprise and by management) affect estimation, or at least the risk of misestimation.

![]() The maturity of the software under test has a strong influence, too; immature, defective software can multiply the effort required for one single test by a high factor.
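
The idea of averaging several people's estimations, or of using the minimum and maximum as good-case and bad-case values, can be sketched as follows. This is only an illustration; the function name `combine_estimates` and the input layout (task name mapped to a list of individual estimates in person days) are assumptions.

```python
from statistics import mean


def combine_estimates(estimates_by_task):
    """Combine individual estimates per task.

    estimates_by_task maps a task name to the estimates (person days)
    given by different team members. Returns a per-task summary of
    (good case, average, bad case) and the totals over all tasks.
    """
    summary = {
        task: (min(values), mean(values), max(values))
        for task, values in estimates_by_task.items()
    }
    totals = tuple(sum(row[i] for row in summary.values()) for i in range(3))
    return summary, totals
```

The spread between the good-case and bad-case totals gives a rough impression of how uncertain the overall estimation is.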

In this step, too, past experience can help; the large number of individual estimations, however, also requires a large amount of historical data for the individual activities, which presupposes that a practicable set of test metrics has been established beforehand (see chapter 11).

Use experience values.

It may be of help to carry out a study prior to a complex project, selecting some random sample test cases from the test schedule and logging the effort needed for implementing and executing them. The results can be used for validating the estimation techniques or for extrapolation of the total effort.
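
Such a pilot study amounts to a simple extrapolation. The sketch below assumes that the sampled test cases are representative and that effort is logged in hours per test case; the function names and the fixed random seed are illustrative choices, not part of the method described here.

```python
import random
from statistics import mean


def pick_sample(test_case_ids, k, seed=0):
    """Draw k test cases at random from the test schedule (seed fixed for repeatability)."""
    return random.Random(seed).sample(list(test_case_ids), k)


def extrapolate_total_effort(sample_efforts_hours, total_test_cases):
    """Scale the mean logged effort per sampled test case up to all test cases."""
    return mean(sample_efforts_hours) * total_test_cases
```

Comparing the extrapolated value with the result of the estimation technique in use shows whether the two agree or whether the technique's assumptions need to be checked.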

5.4.3 Models Based on Functional Volume

This model class is based on an estimation of the scope or functionality of the objects under test and tries to establish a mathematical model by reducing scope or functionality to a few significant parameters.

De facto, such models work with experience values, too, mostly in the form of fixed coefficients or factors that are used in the formulas. This way, they represent a practicable compromise between the accurate but elaborate models based on single task estimation and the cost-effective but inaccurate and risky flat models.

Compromise between general and individual estimations

[ISO 14143] provides a detailed framework, based on which a concrete metric can be evaluated to determine the functional volume of the system to be tested.

As an example of such a method, we shall first look at function point analysis.

Function Point Analysis (FPA)

This method (see, for example, [Garmus 00]) is generally used in effort estimations and widely applied in system development.

Since function point analysis starts with the system requirements, it can be applied very early in a software development project. The method itself, however, defines only the number of function points of the product; to derive the expected development effort for this product, company-specific experience values for the effort per function point are required.

The function point method works in three steps:

Stepwise analysis and estimation

![]() In a first step, defined rules are used to count the functions to be realized and all data to be processed; these are divided into the categories input, output, query, interface file, and internal file and then weighted as simple, medium, or complex. The result (i.e., the weighted sum) of this count is called the “unadjusted function point value”. It is a measure of the functional scope of the system from the user’s point of view.

![]() Independent of the first step, the system to be realized is evaluated in a second step on the basis of 14 nonfunctional attributes, such as performance or the efficiency of the user interface. Each of these influencing factors is rated from 0 (unimportant) to 5 (very important), and the ratings are added up. The sum is taken as a percentage value, added to 0.65, and multiplied by the result of the first step. This yields the “adjusted function point value”, which represents a basic factor for the expected development effort.

![]() In the last step, the function points must be converted into effort (person months, costs, etc.). At precisely this point, the method must be adapted to corporate needs because the conversion requires comparisons with figures from past projects.
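
The three steps can be sketched in a few lines of code. Note the assumptions: the weights per category and complexity are the commonly published IFPUG values, which are not listed in this text, and the conversion parameters `hours_per_fp` and `hours_per_month` are hypothetical placeholders for company-specific experience values.

```python
# Weights for (simple, medium, complex); commonly published IFPUG values,
# assumed here because the text does not list them.
WEIGHTS = {
    "input": (3, 4, 6),
    "output": (4, 5, 7),
    "query": (3, 4, 6),
    "interface_file": (5, 7, 10),
    "internal_file": (7, 10, 15),
}
COMPLEXITY_INDEX = {"simple": 0, "medium": 1, "complex": 2}


def unadjusted_fp(counts):
    """Step 1: weighted sum; counts maps (category, complexity) to a number of elements."""
    return sum(
        n * WEIGHTS[category][COMPLEXITY_INDEX[complexity]]
        for (category, complexity), n in counts.items()
    )


def adjusted_fp(ufp, ratings):
    """Step 2: apply the 14 ratings (each 0..5) as a percentage added to 0.65."""
    if len(ratings) != 14 or any(not 0 <= r <= 5 for r in ratings):
        raise ValueError("expected 14 ratings between 0 and 5")
    return ufp * (0.65 + sum(ratings) / 100)


def effort_person_months(afp, hours_per_fp, hours_per_month=160):
    """Step 3: convert function points into effort using experience values (assumed inputs)."""
    return afp * hours_per_fp / hours_per_month
```

With all 14 ratings between 0 and 5, the adjustment factor ranges from 0.65 to 1.35, so the second step changes the unadjusted value by at most 35% in either direction.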

The test effort can, for example, be derived by means of the following conversion rule [URL: Longstreet]. Let FP be the number of function points; we then get:

Test effort is a result of estimates from function points.

![]() the number of system test cases = FP^1.2 (FP raised to the power of 1.2)

![]() the number of acceptance test cases = FP × 1.2

Hence, the estimation result is simply the number of test cases; in practice, however, this number alone is not sufficient for a complete effort estimation. To use it, we need at least an additional estimate of the mean effort per test case across all test activities, or we use the number of test cases as a parameter in an activity-based estimation model.

Test Point Analysis (TPA)

Test point analysis was developed by Sogeti B.V. [URL: Sogeti] on the basis of function point analysis. In principle, this method uses all the essential factors already listed in the section on activity-based models as factors by which the number of the system’s defined function points is multiplied. The algorithms used are rather complicated and contain several experience-based parameters. To set these parameters, we use experience values for the weighting of quality attributes and certain features of the functions, of the environment, experience, and equipment of the test teams, and of the organizational structure.

One advantage of this method is that the classification of the test points is based on ISO 9126 and that it is therefore well suited to the methods for test strategy development described in sections 5.2.4 and 5.3.

TPA harmonizes with ISO 9126.

The experience factors cover a large number of possible influencing factors without making the estimation confusing; TPA can, for example, very easily be implemented in the form of a spreadsheet. TPA provides a complete effort estimation in test hours; unlike with FPA, supplementing the result with additional values is not necessary.

Similar to FPA, TPA thrives on the quality of the experience values; it is therefore hardly suitable for initial effort estimation in a new enterprise or business segment. However, if data collection and adjustment between estimation and actual effort is regularly done and if the experience factor is calibrated accordingly, the method will become more accurate with time.

TPA, though, makes using FPA mandatory. In each individual case, we must weigh whether to base estimation on the rather complex TPA or on the simple algorithms for direct derivation of the test efforts from the function point analysis that we just mentioned.

TPA based on FPA.

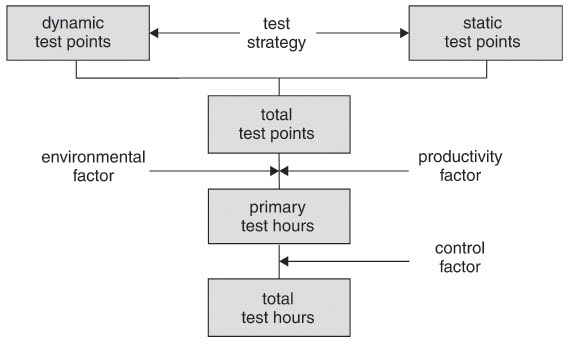

Figure 5-5 shows the test point analysis workflow.

Figure 5–5 Test point analysis workflow

![]() Dynamic test points: for this value we define the following for each individual function:

Test point estimation using quality attributes

• The value of the function points for this function

• A factor for the quality attributes required for this function (functionality, safety, integration ability, and performance)

• A factor that comprises the priority of the function, its frequency of application, and its complexity and influence on the rest of the system.

These three values are multiplied together for each function, and the results are added up. This sum, the dynamic test points, reflects the effort for the dynamic test.

![]() Static test points: For each of the required quality attributes that cannot be tested dynamically (flexibility, testability, traceability), the total number of function points is multiplied by a factor and the intermediate results are added up. The result is a measure of the effort for the static tests.
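
The calculation of dynamic and static test points described above can be sketched as follows. This is a simplified illustration only: the class and function names are hypothetical, and the factor values passed in are assumed to come from an organization's own experience values rather than from the actual TPA tables.

```python
from dataclasses import dataclass


@dataclass
class Function:
    """One function of the system with its TPA inputs (hypothetical structure)."""
    name: str
    function_points: float
    quality_factor: float   # factor for the quality attributes required for this function
    function_factor: float  # priority, frequency of use, complexity, influence on the system


def dynamic_test_points(functions):
    """Multiply the three values per function and add up the results."""
    return sum(
        f.function_points * f.quality_factor * f.function_factor for f in functions
    )


def static_test_points(total_function_points, static_factors):
    """Multiply the total function points by one factor per static quality attribute and add up."""
    return sum(total_function_points * factor for factor in static_factors.values())


def total_test_points(functions, static_factors):
    """Sum of dynamic and static test points, before any environmental weighting."""
    total_fp = sum(f.function_points for f in functions)
    return dynamic_test_points(functions) + static_test_points(total_fp, static_factors)
```

A layout like this maps directly onto a spreadsheet with one row per function and one column per factor.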

Dynamic and static test points are summed up and subsequently weighted with environmental factors:

Multiplication with different influencing factors