3

The Definitions of Systemics: Integration and Interoperability of Systems

3.1. A few common definitions

The most commonly used definitions, found in virtually all books that deal, closely or more loosely, with systemics or systems theory, are as follows.

DEFINITION 3.1.– J. Forrester, in Principles of Systems and Industrial Dynamics: “A system means a grouping of parts that operate together for a common purpose […] a system may include people as well as physical parts.”1

DEFINITION 3.2.– P. Delattre, in Système, structure, fonction, évolution – Essai d’analyse épistémologique: “The notion of a system can be defined, in a completely general way, as corresponding to a set of interacting elements…”2

DEFINITION 3.3.– J.-L. Le Moigne, in La théorie du système général – Théorie de la modélisation3, is more eloquent. Simplifying his words, he tells us that the general system is the description of an artificial object “which, in an environment, given end purposes, exerts an activity and sees its internal structure evolve as time passes, without however losing its unique identity”. He insists on the constructive aspect of the object and on its modeling, articulated around three poles that he calls a triangulation: operational (what the object does), ontological (what the object is) and genetic (what the object becomes).

Along the same lines, we can also mention Le macroscope by J. de Rosnay, with its “10 commandments”, which are in fact obvious truths, as we will see later on. Saying in commandment no. 1 that it is necessary to “conserve variety” amounts to saying that redundancies are necessary for adaptation: this is Shannon’s second theorem. Saying in commandment no. 2, “do not open the centrifugal governors”, is pure tautology, because an open “governor” can obviously no longer control anything; the rest are in the same vein. The book is qualitative and therefore cannot be used by engineers, or by anyone who wants to model in order to understand, measure, etc. Explaining systemics with metaphors from biology (de Rosnay trained at the Institut Pasteur, then at MIT) is risky, because we do not really know how the biological machinery works, still less anything to do with embryogenesis and the engineering of living things4. It is better to proceed the other way round, as von Neumann recommended, and Descartes before him: start with simple, perfectly understood mechanisms5 and increase the complexity step by step, through progressive integration.

R. Thom, the famous mathematician, 1958 Fields Medal laureate, well known for his catastrophe theory and for his iconoclastic turn of mind, provides us with a “spatialized” morphological definition in his pamphlet La boîte de Pandore des concepts flous6:

DEFINITION 3.4.– “A system is the portion of space contained inside a box (B). The walls of the box can be material or purely fictional, insofar as a precise assessment of the energy and material flows that pass through its walls can be drawn up.” He found this more interesting than the usual combinatorial definition, which he considered suspect because it refers to a theory that does not produce any mathematical theorem (a wish of N. Wiener, as we recalled in the historical introduction) and that is at best only a set of non-constructive definitions; a theory to be watched “with suspicion…”, he said. Hence the metaphor of the “black box”, which has led to numerous misinterpretations (see Figure 3.1). The walls of the black box create the inside/outside border, which plays an essential role: whether or not something appears in the black box is a voluntary act, a decision by the modeling architect, not the result of chance. This is a predicative act, in Poincaré’s meaning of the term.

Figure 3.1. The metaphor of the black box

Taking the point of view of an engineer, which is by definition constructive, we can understand a reluctance to follow procedures that do not really allow action to be taken, because in engineering we must never rely on chance. At this level of generality, we are often led to say only banal things. The use of models is advocated everywhere, except that when these books were published, computer science, whose importance was already sensed, was not developed enough. It was still centralized at that time, but this changed radically in the 1990s with distributed computing.

The “black box” was simply a metaphor to convey that a careful distinction must be made between what is “outside” the system and interacts with it (in other words, the external flows) and the “inside” of the box, that is, “how” it is made. As R. Thom makes sure to say, the walls of the box can be purely conventional, but in all cases they must be well defined. From the point of view of an engineer, the transformation F: Input → Output needs to be specified and constructed, taking care not to use any information from contexts other than those carried by the flows; otherwise the transformation function F has no meaning. For N. Wiener, this was absolutely obvious, and he said7: “Suppose that we have a machine in the form of a ‘black box’, that is a machine performing a definite stable operation (one that does not go into spontaneous oscillation) but with an internal structure that is inaccessible to us and that we do not have knowledge of”.
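The requirement that F use only what the flows carry can be shown with a minimal Python sketch. It assumes that flows are modeled as plain dictionaries. The names `thermostat`, `leaky_thermostat`, `ambient_context` and `is_reproducible` are hypothetical illustrations.

A well-specified box reads only its input flow. The "leaky" variant also consults a piece of context outside the walls of the box, so the same input flow no longer guarantees the same output.

```python
from typing import Callable, Dict

Flow = Dict[str, float]

# Hypothetical ambient context, lying outside the walls of the box.
ambient_context: Flow = {"offset": 0.0}


def thermostat(inputs: Flow) -> Flow:
    """Well-specified black box: the output depends only on the input flow."""
    error = inputs["setpoint"] - inputs["temperature"]
    return {"heating": 0.1 * error}


def leaky_thermostat(inputs: Flow) -> Flow:
    """Ill-specified variant: it also reads the ambient context."""
    error = inputs["setpoint"] - inputs["temperature"] + ambient_context["offset"]
    return {"heating": 0.1 * error}


def is_reproducible(box: Callable[[Flow], Flow], inputs: Flow) -> bool:
    """Feed the same input flow twice, changing only the outside context in between."""
    first = box(inputs)
    ambient_context["offset"] += 1.0  # something changes outside the box
    second = box(inputs)
    return first == second
```

Only `thermostat` passes this check: its transformation F is fully described by its input and output flows.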

In a future companion volume, we return to these definitions, based on the work of D. Krob for the Complex Systems Engineering Chair at the École Polytechnique8. There, to go back to the words of R. Thom, we will give them real mathematical content that allows the construction of computerized models.

Traditionally, in the systems engineering literature9, we distinguish the problem space, including the facts that it relates to, from the solution space, which always contains multiple solutions. In effect, there are always several functions F that can satisfy the constraints, whose nature we characterize using the acronym PESTEL (political, economic, social, technical, ecological and legal, see Chapter 8). In more mathematical language, the transformation made by the black box must be described/specified in a “context-free” language, a notion introduced by N. Chomsky in his founding article “On Certain Formal Properties of Grammars”10. These notions provided the starting point for theoretical computer science in France, with professors M.-P. Schützenberger and M. Nivat, in the 1960s. According to R. Thom, everything that is contextual is subject to interpretation and must therefore be “considered with mistrust”, for the simple reason that it damages human communication. What has cemented the success and effectiveness of European mathematics11 is precisely that it is context-free. It consists of logical forms, a system of disembodied relationships, and is thus transposable into any culture. This was the case with Euclid’s Elements, which the Jesuit fathers translated into Chinese in the 1600s12.
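Here is a minimal illustration of what "context-free" means: a recursive-descent recognizer for the textbook context-free language of balanced brackets. That language is generated by the single rule S → ( S ) S | ε. The grammar and the function names `parse_s` and `is_well_formed` are our own illustration, not taken from Chomsky's article.

What matters is that S is always rewritten in the same way, whatever symbols surround it. Whether a string is well formed therefore never depends on anything outside the string.

```python
def parse_s(text: str, pos: int = 0) -> int:
    """Consume one S starting at pos and return the position just after it.

    Grammar: S -> '(' S ')' S | empty. S is rewritten the same way wherever it
    occurs; no rule inspects the surrounding symbols.
    """
    while pos < len(text) and text[pos] == "(":
        pos = parse_s(text, pos + 1)  # the inner S
        if pos >= len(text) or text[pos] != ")":
            raise ValueError(f"expected ')' at position {pos}")
        pos += 1  # consume ')', then loop to parse the trailing S
    return pos


def is_well_formed(text: str) -> bool:
    """A string belongs to the language if one S consumes all of it."""
    try:
        return parse_s(text) == len(text)
    except ValueError:
        return False
```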

In the history of science, it is worth remembering that numerology and astrology, for example, were originally intimately associated with mathematics and with the birth of astronomy. Kepler, who formulated the famous laws of planetary motion, also cast horoscopes, and his Mysterium Cosmographicum rests on a mystical geometry of nested regular solids. Newton was an alchemist. But it was the separation that made mathematics so effective. Newton’s Principia Mathematica remains a model of its kind and is in fact built on the pattern of Euclid’s Elements, a work that Newton knew perfectly.

Even with such general definitions (definitions 3.1, 3.2 and 3.3, cited previously), we can nevertheless establish a certain number of properties that all systems, whatever their nature, will share. We will now establish this for three of them:

- constitutive element (building block or simply a module);

- interaction/communication;

- invariant/control regulation.

In some way, we need to look for the equivalent of the “points”, “straight lines”, “planes” which constitute the physical and societal spatio-temporal object that we will call a system, and the instruments that allow them to be organized and manipulated, the equivalent of the ruler and compass used by surveyors.

3.2. Elements of the system

For the definition to work, we need to identify “elements” that are not systems themselves, in other words to name them; otherwise the definition becomes circular and is of no further use. The definition presumes a goal-directed integration process that must be controlled. Since the 18th Century, a distinction has been made between the elements of a clockwork mechanism and the final integrated object; at that time, moreover, a clock was not called a system. But an astronomical clock, or a watch with complications, is a good metaphor for talking about systems and complexity. H. Simon used it in his book The Sciences of the Artificial13, whose Chapter 8 is entitled “Architecture of Complexity”.

To simplify, a clock is made of gears, cams, axles and pivots, an escapement to beat out the seconds (meaning a well-determined and stable time step), and an energy storage mechanism. This can be, for example, a spiral spring, which stores potential energy that is gradually released to maintain the movement. The movement is displayed on various dials and hands: hours of the day, a stopwatch, the phases of the moon, the tides, the planets, etc., depending on the complications of the timepiece, the basic features being hours, minutes and seconds. All of this is mounted on a frame, known in horology as a caliber, which defines the structure of the space (this is a topology) in which the various constituent parts are integrated. In systems engineering, we talk about a host structure or an architecture framework.14

Each of these elements has a very precise function. Their layout must be correctly organized (and controlled/regulated) according to an explicit construction plan, that is, an assembly program. The result is an extremely precise reproduction of the movement of the stars, depending on the size and number of teeth on the gears. The “result” that emerges from this layout, visible to the owner of the clock, will be for example the time and date. This constitutes the interface between the system and its owner, meaning the man/machine interface.

“More is different”, said the physicist P. Anderson, to which we could add “and open”, because it generates new freedoms. This is a more subtle statement than the classic and well-worn “the whole is more than the sum of its parts”, because the “more” is not at all guaranteed, whereas the difference is certain. P. Anderson was directly targeting scale-related phenomena, well known in solid-state physics, that were not yet called “emergence”; this is exactly what we are talking about here.
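How the displayed result depends on "the size and number of teeth on the gears" is plain arithmetic. In a simple gear train, each meshing pair divides the rotation speed by the ratio of driven teeth to driving teeth, and the overall reduction is the product of these ratios.

The sketch below uses the classic 12:1 reduction between the minute hand and the hour hand. The tooth counts (12/36 and 10/40) and the function name `train_ratio` are illustrative assumptions, not taken from any particular movement.

```python
from fractions import Fraction
from math import prod
from typing import List, Tuple

# Each stage: (teeth on the driving wheel, teeth on the driven wheel).
Stage = Tuple[int, int]


def train_ratio(stages: List[Stage]) -> Fraction:
    """Overall reduction of a gear train: product of driven/driving tooth counts."""
    return prod((Fraction(driven, driving) for driving, driven in stages), start=Fraction(1))


# Hypothetical motion works: cannon pinion (12) -> minute wheel (36),
# then minute pinion (10) -> hour wheel (40).
motion_works = [(12, 36), (10, 40)]
ratio = train_ratio(motion_works)
print(ratio)  # 12: the hour hand turns once for every 12 turns of the minute hand
```

Exact fractions are used instead of floats so that the ratio stays exact. Precision of this kind is what lets a gear train reproduce astronomical periods.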

Using the escapement and gears, we can create an initial rotation mechanism that reproduces the movement of the circles rolling over each other in Ptolemy’s model, as in the theory of epicycles. The clock is never more than an easily transportable mechanical model of the apparent movement of the stars around the Earth.

The precision of the size of the gears and of the rotation axes, and the quality of the pivots (sometimes made of diamond), determine how quickly the clock consumes the potential energy stored in its springs to compensate for the inevitable friction. And all clockmakers know that once a certain number of moving gears and parts is exceeded, a clock seizes up irrevocably15. This limits the capacities of the system and its lifetime, because the state of the gears changes over time through oxidation and wear of the teeth, which increases friction, etc.

Saying that a given object is an element of the system is the same as saying that there is a “collectivizing”16 relationship that rigorously defines belonging or non-belonging to the system that we are attempting to characterize (see section 3.1, definition 3.4 of the black box). Without this criterion, we risk incorporating “foreign” elements into the system, which would invalidate all reasoning about the system as a whole. In the case of a clock, as with all mechanical objects, a parts list, the assembly drawings and the tools required for assembly are all needed. In biology, the role of the immune system is to ruthlessly destroy any element present in the organism that does not bear the signature identifying it as a member of the community17.
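An explicit membership criterion can be sketched as a closed parts list plus a check applied to every element presented to the system, in the spirit of the immune-system analogy. The part identifiers and the names `Part`, `PARTS_LIST` and `admit` are hypothetical.

The sketch only shows that belonging is decided by a rule written down in advance, not by mere physical presence.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Tuple


@dataclass(frozen=True)
class Part:
    part_id: str
    function: str


# Hypothetical parts list (bill of materials) of a simple clock.
PARTS_LIST: FrozenSet[str] = frozenset({"escapement", "mainspring", "gear-1", "gear-2", "dial"})


def admit(candidates: Iterable[Part]) -> Tuple[List[Part], List[Part]]:
    """Split candidates into members and foreign elements, using only the parts list."""
    members: List[Part] = []
    foreign: List[Part] = []
    for part in candidates:
        (members if part.part_id in PARTS_LIST else foreign).append(part)
    return members, foreign
```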

The elements are subject to wear or degeneration according to laws specific to them. Some of these laws are poorly known or completely unknown and, for this reason, can generate black swans18 if we are not careful. Over time, the elements are altered and no longer honor their service contract; it then becomes necessary to replace them, which can cause maintenance downtime. Certain systems, such as electrical and telephone systems, can no longer be shut down globally, as this would cause a major economic disaster. Thanks to conveniently arranged redundancies, we can stop certain parts without significantly affecting the overall service contract, and then carry out maintenance work.
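The benefit of "conveniently arranged redundancies" can be estimated with a standard k-out-of-n model. This assumes identical units that fail independently, each available with probability a. The service contract is met as long as at least k of the n units are up.

The function name and the figures below are purely illustrative.

```python
from math import comb


def k_out_of_n_availability(k: int, n: int, a: float) -> float:
    """Probability that at least k of n independent units, each up with probability a, are up."""
    return sum(comb(n, i) * a**i * (1 - a) ** (n - i) for i in range(k, n + 1))


# Illustrative case: the service needs 2 units, each available 99% of the time.
no_spare = k_out_of_n_availability(2, 2, 0.99)    # exactly 2 units installed
with_spare = k_out_of_n_availability(2, 3, 0.99)  # one spare unit added
```

With these figures, the spare unit raises availability from about 0.9801 to about 0.9997. During maintenance of one unit, the system temporarily falls back to the 2-of-2 case, which is why maintenance windows are still planned carefully.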

In the case of a company “extended” to its clients and/or suppliers, information systems interpenetrate. Belonging to one system or another then becomes problematic, with serious consequences in the event of incidents/accidents: whose fault is it then? This raises the whole problem of systems of systems, and of the conditions for “healthy” interoperability that respects the rights and obligations of the systems that come together in this way. Cooperation is worth its price as long as it gives a competitive advantage, but it always has a cost.

A second important characteristic involving the entire system corresponds to the concept of a predicative set. This notion comes from H. Poincaré, following his discussions with B. Russell at the beginning of the 20th Century about the paradoxes of set theory, which was then developing rapidly19.

A set is called predicative if the list of its constituent elements is fixed. In sets of this kind, we can reason with complete confidence about what does or does not belong to the set, and negation has a meaning. But in real-life situations that are open to the future, such sets are rare. In a non-predicative set, the list of elements is open and its composition can vary over time. The complement of a subset of such a list is therefore open and we do not really know what is in it; consequently, we cannot apply operations planned in advance to its elements. Reasoning that relies on the law of the excluded middle no longer works, because the inside/outside border is blurred. We note that the concept of a context-free language is a predicative notion, because the language is self-sufficient and does not require external input to interpret its semantics.
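The practical consequence can be sketched in Python with a three-valued answer to the question "does x belong?".

- For a predicative (closed) set, "not a member" is a definite answer.
- For a non-predicative (open) set, only positive knowledge is reliable, and an unlisted element gets the answer "unknown". This is why reasoning by the excluded middle breaks down.

The `Membership` type and the function names are hypothetical.

```python
from enum import Enum
from typing import AbstractSet


class Membership(Enum):
    YES = "yes"
    NO = "no"
    UNKNOWN = "unknown"


def closed_world(members: AbstractSet[str], x: str) -> Membership:
    """Predicative set: the list is fixed, so absence means non-membership."""
    return Membership.YES if x in members else Membership.NO


def open_world(known_members: AbstractSet[str], x: str) -> Membership:
    """Non-predicative set: new members may appear, so absence proves nothing."""
    return Membership.YES if x in known_members else Membership.UNKNOWN
```

Note that `open_world` never answers `NO`. Any operation planned in advance for the "non-members" therefore has no well-defined set to apply to.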

In a company, there may be people on the premises who are not employees. They must be identified as such and monitored by all appropriate means, because their behavior and security clearance are not the same as those of employees, who are supposed to know the rules of the company they work for.

In the case of an electric system, the set of elements/equipment that ensures production is predicative; we can therefore plan the production. In the case of renewable energies, or of the installation of photovoltaic panels that turns each one of us into a potential producer, the set of means of production loses the property of predicativity. This is a significant difficulty for the network regulator and for EDF (Électricité de France), hence the need to collect information via “intelligent” meters such as Linky.

REMARK.– The incorporation of “foreign” elements makes the system non-predicative, which is an annoyance but is not prohibitive. It can even be a strategic advantage, but it needs to be taken into account in engineering and architecture to control its potentially damaging effects.

In companies that apply security rules, each person from outside the department must be accompanied by a qualified person who is responsible for their actions. The pair thus created remains predicative: the accompanied person temporarily inherits certain properties that characterize the employees.

What we need to remember is that the elements of the system are identified as such in two ways. The first is an explicit membership criterion, which must be checked at every moment in the “life” of the system. The second is the function that they carry out within the system “community”. The “whole” obtained in this way, according to the assembly plan of the system (this is the integration operator), is different from the sum of its parts; this is an area of new freedoms.

The overall function of the system is an emergent property that is not present as such in any of its constituent elements. All of these elements must nevertheless comply with the rules of the assembly plan (in logical terms, in theoretical computer science, this is an assembly operation) and with the authorized interactions (a grammar of interactions). Together, these define an integration operator, in the mathematical sense of the term, by which a set of elements creates/manufactures a new entity. This entity is called “integrated”, and its properties cannot be deduced from any of its constituent elements.

3.3. Interactions between the elements of the system

When we talk about interactions, we are ipso facto talking about two things:

(1) a physical means of exchange and communication between the interacting elements. This can be a mechanical connection, as in the case of centrifugal governors, an electrical connection with conducting wires/cables, or a connection by electromagnetic waves or, as is now common, by optoelectronic laser beams;

(2) conventions so that the interactions are operational and contribute to the system’s mission, each element exchanging information, or meaning, by linguistic means with those it interacts with.

In passing, we note that there are two kinds of interactions. Intended interactions are in some way operational. Unintended interactions are generally damaging, such as those that arise simply from physical proximity (heating of a given zone causes a breakdown of certain unprotected and/or exposed equipment, etc.). These need to be monitored, for example by the centralized management system (CMS) used for the automated management of buildings.

This means that in the description of the system interactions20, we will, of course, necessarily find:

- the interconnection matrix of the elements, in the form of directed relationships that specify the physical nature of each interconnection and the capacity required for the link. This is the notion of “communication channel”, which plays a fundamental role in Shannon–Weaver’s theory of information. The elements that interact with the exterior (the outside) must be listed;

- the classification of the messages that convey the exchanged information. In the world of telecommunications, which is above all a world of messages and signals, a conventional notation, the ASN.1 language, is laid down as a basis. The physical representation of the message is a separate problem, that of the format. When the first computers appeared, engineers used mercury delay lines, which propagated conventional signals at a certain speed. In the world of the Internet, there is the XML language, known to a good number of Internet users;

- the procedures and rules of exchange that allow elements to synchronize themselves and to act in a coherent and goal-directed manner, thanks to protocols and conventions. From the Roman armies until the Napoleonic period, fighting units synchronized themselves with drums, bugles and flags. The word standard initially meant a coat of arms, a sign by which to recognize friends/enemies. Such a device is still in use in modern ground–air combat via transponders and IFF identification21, so that anti-aircraft defenses do not shoot down friendly aircraft. Procedures and rules of exchange operate like the grammar of a language, which ensures the coherence of all the behaviors of the elements in the system.
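The first two items of this list can be sketched as data:

- a directed interconnection matrix whose entries carry channel capacities;
- a closed classification of message types, with each link declaring the kinds of message it may carry.

Everything here is a hypothetical illustration: the element names, the capacities in messages per second, the message kinds, and the names `links`, `interconnection_matrix`, `accepts` and `boundary_elements`. A real description would also record the physical nature of each link. In telecommunications, messages would be defined in a notation such as ASN.1 rather than a Python enum.

```python
from enum import Enum
from typing import Dict, FrozenSet, List, Tuple


class MessageKind(Enum):
    COMMAND = "command"
    STATUS = "status"
    ALARM = "alarm"


EXTERIOR = "exterior"  # pseudo-element standing for the system's environment

# Directed links: (source, destination) -> (capacity in messages/s, message kinds carried).
Links = Dict[Tuple[str, str], Tuple[int, FrozenSet[MessageKind]]]

links: Links = {
    (EXTERIOR, "sensor"): (100, frozenset({MessageKind.STATUS})),
    ("sensor", "controller"): (50, frozenset({MessageKind.STATUS, MessageKind.ALARM})),
    ("controller", "actuator"): (20, frozenset({MessageKind.COMMAND})),
    ("actuator", EXTERIOR): (20, frozenset({MessageKind.STATUS})),
}


def interconnection_matrix(links: Links, elements: List[str]) -> List[List[int]]:
    """Entry [i][j] is the capacity of the link elements[i] -> elements[j] (0 = no link)."""
    return [[links[(s, d)][0] if (s, d) in links else 0 for d in elements] for s in elements]


def accepts(links: Links, src: str, dst: str, kind: MessageKind) -> bool:
    """A message is legal only on an existing link that is declared to carry its kind."""
    return (src, dst) in links and kind in links[(src, dst)][1]


def boundary_elements(links: Links) -> List[str]:
    """Elements that exchange flows with the exterior; they must be listed explicitly."""
    return sorted({d if s == EXTERIOR else s for (s, d) in links if EXTERIOR in (s, d)})
```

Keeping the exterior as an explicit pseudo-element makes the inside/outside border part of the data. The list of boundary elements then follows mechanically from the matrix.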

The mechanics of interactions can be centralized or decentralized, but their dynamics will always be transactional, that is, quantified, both in energy and in time/duration. This is the case in the electric system: what is consumed by one element can no longer be consumed by another, and the global energy resources are diminished by the same amount. Ideally, decentralization may appear to be the better solution, because there is no center; yet each element is then a potential center, which means that each one is linked to all the others. Intuitively, we sense that there are limits to decentralization, for example when we need to react rapidly to dangerous situations or to sudden changes in the environment.

Conversely, excessive centralization will flood the messaging center if the system is large. This can lead to significant latencies of varying duration, depending on communication channel congestion, and to response times that are incompatible with the survival of the elements awaiting answers. The result is a random malfunction of the feedback loop (Figure 2.1), hence the phenomena related to what the physicist P. Bak calls Self-Organized Criticality22. We will come back to this in Chapters 8 and 9.
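Both sides of this argument can be put into rough numbers.

- A fully decentralized organization of n elements, where every element is linked to every other, needs n(n-1)/2 links.
- A centralized star needs only n-1 links, but funnels all traffic through its hub.

Purely as a modeling assumption, the hub can be treated as an M/M/1 queue. Suppose it receives λ messages per second from each of its senders and serves μ messages per second. Its mean response time is then 1/(μ - senders × λ), which grows without bound as the load approaches μ. The function names and figures are hypothetical.

```python
def mesh_links(n: int) -> int:
    """Links needed when every one of n elements is connected to every other one."""
    return n * (n - 1) // 2


def star_links(n: int) -> int:
    """Links needed when n elements, one of which is the hub, communicate only via the hub."""
    return n - 1


def center_response_time(senders: int, lam: float, mu: float) -> float:
    """Mean time a message spends at the hub (M/M/1 model); infinite if the hub is saturated."""
    load = senders * lam
    return float("inf") if load >= mu else 1.0 / (mu - load)
```

For example, with a hub serving 100 messages/s and senders each emitting 1 message/s, the mean response time is 0.1 s for 90 senders. It rises to 1 s for 99 senders and becomes unbounded at 100 senders. This sharp transition is what "inundating the messaging center" looks like in this simplified model.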

Living organisms propose a wide range of centralized/decentralized mechanisms. The nervous system is relatively centralized. The neurovegetative and endocrine systems can be considered as mixed. The immune system is extremely decentralized because the immune response must be immediate in the case of an attack on the organism, an injury, etc.

In human societies, language is a decentralized mechanism23 and each time we have ventured to unilaterally legislate language, this has generally not ended well. The military and the police, who in some way play the role of an immune system, are highly centralized systems both in principle and in their organization, able to act locally on request, or be “projected” outside national territory, as in the case of the armed forces.

In the defense and security systems24 that all modern states have, we are accustomed to distinguishing what is conventionally known as: (1) thinking time, aptly named, but in order to think and make decisions, the capacity to process large amounts of information is necessary and therefore large memory storage capacities are required; and (2) real time which implies an instantaneous answer, or at least the fastest possible; as in the problem of the “anti-missile shields” that were so dear to President Reagan at the time of the Strategic Defense Initiative, whose most immediate effect was to bring down what was left of the Soviet economy.

Using the metaphor of the clock, we can say that a system architect is not someone who knows how to cut gears, although they do need to be able to judge their fitness for purpose. The architect is someone who provides the assembly plan of the elements, possibly organized into sub-assemblies, until the final technical object (in G. Simondon’s sense of the term) is obtained. Throughout, the architect preserves the ability to check the consistency of the {U, S, E} triplet, hence the existence of thresholds that must not be exceeded.

What we need to remember (second section C.2.2) is that the interactions between elements in the system naturally translate into a grammar of these interactions, which acts as the custodian of the system’s coherence. This amounts to specifying the coherent “sentences” that constitute all the valid behaviors of the system with respect to its environment, that is, its semantics. These semantics are themselves characterized by the sequences of actions that the system effectively carries out.
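One minimal way to write down such a grammar is a finite-state automaton whose accepted "sentences" are exactly the valid behaviors. The request/acknowledge protocol below is a hypothetical example rather than anything specified in the text: its states, event names and transition table are all invented for illustration. Real systems generally need richer grammars than a regular one.

```python
from typing import Dict, Iterable, Tuple

# Transition table: (state, event) -> next state. Pairs not listed are ungrammatical.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("idle", "request"): "waiting",
    ("waiting", "ack"): "idle",
    ("waiting", "nack"): "retry",
    ("retry", "request"): "waiting",
    ("retry", "abort"): "idle",
}
ACCEPTING = {"idle"}  # a valid "sentence" must leave every exchange closed


def is_valid_behavior(events: Iterable[str], start: str = "idle") -> bool:
    """Return True if the sequence of interactions is a sentence of the protocol grammar."""
    state = start
    for event in events:
        key = (state, event)
        if key not in TRANSITIONS:
            return False  # this interaction is not allowed in the current state
        state = TRANSITIONS[key]
    return state in ACCEPTING
```

For instance, `["request", "nack", "request", "ack"]` is a valid sentence. A lone `["ack"]` is rejected, and so is an unanswered `["request"]`.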

3.4. Organization of the system: layered architectures

As soon as they cross a certain threshold of complexity, even if only in terms of size (measured by the number of basic mechanisms/equipment integrated), artificial systems are organized hierarchically. This matches the capacity of the engineering teams to understand what is required as they ensure the development and maintenance of the system. This point was theorized by H. Simon in Chapter 8 of his work The Sciences of the Artificial: “Architecture of complexity”. From a strictly epistemological point of view, we wish in this chapter to discuss a few fundamental aspects of the hierarchical organization of systems, illustrating them with everyday examples of human-created systems.

3.4.1. Classification trees

First, we note that the notion of hierarchy, meaning classification, is deeply rooted in the cognitive capacities that appear to be specific to the human species25. The same is true of language, which to a certain extent is also a classification26 and allows individuals who have mastered it to interact. Every human has the capacity to learn their mother tongue. But changing this language requires collective work among its speakers on the grammar of the language, its metamodel, that is, the set of rules applied to guarantee the coherence of the model.

All classification stems from the principle of economy of thought. At level 0, everything perceived in lived situations is listed as a primitive element. This is a factual description of what exists, together with the essential memorization mechanisms that allow us to survive and adapt. Then, very quickly, as the stock of memorized situations grows, classification levels appear that are based on abstractions shared by certain elements. Hierarchy is a means of organizing knowledge. A few general criteria, derived from the observation of a specific individual object and allowing it to be identified as such27, are enough to find that object in the classification, and therefore to use all its known characteristics.

It is a system for addressing and/or locating knowledge. Such systems underlie the mechanisms used to organize memory content in all computers since von Neumann’s model and, much earlier, the organization of any library once the number of works exceeds a few hundred. When we ascend the classification tree to the root, we proceed by generalization; when we descend to the leaves, we specialize more and more until we reach the individuals themselves. Once the key elements of the classification have been integrated, which was the objective of the learning, it is easy to find one’s way.
For these same reasons, classification of the entities is at the heart of engineering of artificial systems, under a more down-to-earth name: system classification, as H. Simon has shown us with the clock metaphor (see Figures 3.2, 3.3 and 3.5).
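The addressing role of a classification tree can be sketched as follows. Descending from the root by answering one criterion per level specializes until an individual class is reached; walking back up the parent links generalizes. The tiny taxonomy, the criteria and the names `Node`, `descend` and `ascend` are hypothetical illustrations.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(eq=False)
class Node:
    """A class in the classification tree; leaves are the most specialized classes."""
    name: str
    parent: Optional["Node"] = field(default=None, repr=False)
    children: Dict[str, "Node"] = field(default_factory=dict)  # criterion answer -> sub-class

    def add(self, answer: str, name: str) -> "Node":
        """Create a more specialized class, reached by the given criterion answer."""
        child = Node(name, parent=self)
        self.children[answer] = child
        return child


def descend(root: Node, answers: List[str]) -> Node:
    """Specialize: follow one criterion answer per level, from the root toward a leaf."""
    node = root
    for answer in answers:
        node = node.children[answer]
    return node


def ascend(node: Node) -> List[str]:
    """Generalize: list the successive abstractions from a node up to the root."""
    path: List[str] = []
    current: Optional[Node] = node
    while current is not None:
        path.append(current.name)
        current = current.parent
    return path


# Hypothetical taxonomy.
root = Node("animal")
mammal = root.add("has fur", "mammal")
mammal.add("eats meat", "lion")
mammal.add("eats grass", "cow")
root.add("has feathers", "bird")
```

Each lookup costs one question per level rather than one comparison per known individual. This is the economy of thought that the paragraph describes.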

The essential point to understand perfectly is that in all classifications, a principle of exclusion applies: an individual cannot feature in two leaves of the tree, because it would then have two names. Without this principle, the classification would be inconsistent, which is obviously a disqualifying fault, because for our hunter-gatherer ancestors classification was a tool for survival. Mistaking a cow for a lion meant running the risk of death, except when seen from very far away, as in J.L. Borges’s classification28. The leaves of the tree have mutually exclusive properties; only the properties they have in common, meaning the abstract properties, feature in the hierarchy. Classification is a strong semantic act.

In terms of a system, this principle of exclusion, which avoids ambiguities, must be part of the construction procedure. Once the system is constructed, it is necessary to ensure that this is indeed the case, in other words that the elements designed as isolated are effectively isolated in the physical reality of the system. We will illustrate this with two examples of systems that have had a profound influence on the history of technology:

(1) the internal combustion engine, a 19th-Century invention that equips automobiles and/or airplanes;

(2) the computer, without doubt the greatest machine invented by man in the second half of the 20th Century.
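The principle of exclusion can be checked mechanically. Index every individual by the leaves that name it, then flag any individual filed under more than one leaf. The function name `exclusion_violations` and the sample leaves are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set


def exclusion_violations(leaves: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Return each individual that appears in more than one leaf, with the leaves involved."""
    seen: Dict[str, Set[str]] = defaultdict(set)
    for leaf, individuals in leaves.items():
        for individual in individuals:
            seen[individual].add(leaf)
    return {ind: sorted(names) for ind, names in seen.items() if len(names) > 1}
```

An empty result means every individual has exactly one name in the classification. Any entry points to an ambiguity that must be resolved before the classification can be trusted.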

3.4.1.1. The internal combustion engine

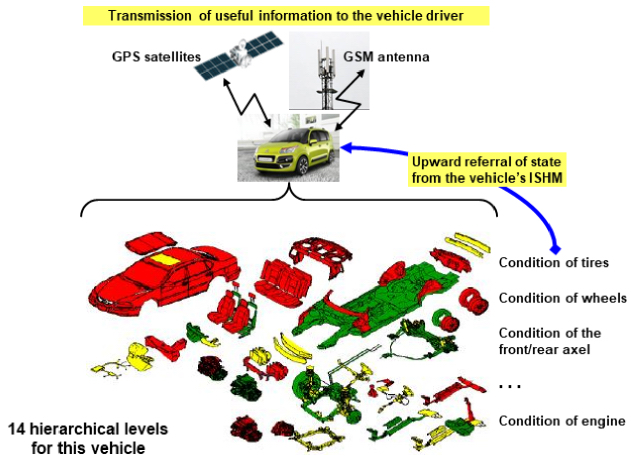

As its name suggests, the internal combustion engine29 is a system that regulates the energy produced by explosions of an air/petrol (or diesel) mixture, in other words a discontinuous phenomenon. The engine turns this energy into a torque distributed over the driving wheels, which allows the vehicle to advance continuously rather than in stops and starts. The engine is integrated into an equipment hierarchy, as shown in Figure 3.2.

Figure 3.2. Hierarchical organization of an automobile. For a color version of this figure, see www.iste.co.uk/printz/system.zip

In a four-stroke, four-cylinder engine turning at 3000 revolutions/min, each cylinder fires once every two revolutions. This gives 6000 explosions per minute (in the firing order 1, 3, 4, 2, 1, etc.), or 100 per second, knowing that an explosion is a rapid chemical phenomenon (less than a millisecond). The energy produced by the engine thus results from a rapid succession of shocks in the back-and-forth motion of the pistons. This motion has to be transformed into continuous rotational energy so that the car does not lurch forward!
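The firing arithmetic is easy to check. In a four-stroke engine, each cylinder fires once every two crankshaft revolutions, so the firing rate is rpm × cylinders / 2 per minute. The small sketch below assumes evenly spaced firings; the function names are our own.

```python
def firings_per_second(rpm: float, cylinders: int, strokes: int = 4) -> float:
    """Combustion events per second; a four-stroke cylinder fires once every 2 revolutions."""
    revolutions_per_cycle = strokes / 2
    return rpm / 60 * cylinders / revolutions_per_cycle


def interval_between_firings_ms(rpm: float, cylinders: int, strokes: int = 4) -> float:
    """Time between two successive explosions, in milliseconds, assuming even spacing."""
    return 1000 / firings_per_second(rpm, cylinders, strokes)
```

For four cylinders at 3000 rpm, this gives 100 explosions per second, one every 10 ms. The crankshaft, flywheel and transmission must smooth this discrete train of impulses into a continuous torque.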

From a purely operational point of view, after energy is produced in the engine cylinders and before it is made available on the driving wheels, it is necessary for the discrete energy signal to have been transformed into a continuous signal.

That means an operational chain:

The resultant of the forces created by the movement of the various parts of the engine must be zero. Otherwise, the operation of the engine will cause an awkward drift of the vehicle in the form of a parasitic force that is, moreover, variable and made up of vibrations. Mechanical energy that is dissipated as impacts and/or vibrations, and not recovered on the driving wheels, must be damped. This avoids destructive and/or unpleasant parasitic phenomena: displacement of certain parts, unbearable noise, resonance, etc. Energy that is not used on the driving wheels is transmitted throughout the structure of the vehicle and is released however and wherever it can be; if it accumulates, it is even worse!

This example highlights the general problem of mechanical coupling. In addition to the functional coupling, non-functional couplings systematically appear; these are generally damaging and are related to the production of energy itself and to the geometry of the constituent parts of the vehicle. These non-functional couplings must also be monitored, via the information that they generate, because, whether we like it or not, they are part of the system. It is up to the entire vehicle, as a system, to ensure that the global energy balance is effectively compensated, in other words, a fundamental invariant:

The hierarchical structure of the vehicle obviously plays an important role in maintaining this invariant, since waste energy must be compensated as close as possible to its source. This must take into account the known modes of energy transmission through mechanical assemblies. The geometry of a part like the crankshaft is determined by the requirement to cancel out the resultant forces caused by the movements of the pistons, the connecting rods and the crankshaft itself. Hence its very specific form, obtained empirically through trial and error.

REMARK.– We note the similarity with the supply/demand equilibrium of the electrical system. This is formally the same problem; the only difference is the scale of the energies involved.
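To make the crankshaft requirement concrete, here is a minimal sketch under a deliberately simplified model that is our own assumption, not the author's: each piston's reciprocating inertial force is reduced to its first two harmonic terms, cos(θ + φ) (primary) and cos 2(θ + φ) (secondary), with unit amplitudes and no connecting-rod constant. For a flat-plane inline four-cylinder engine with crank throws at 0°, 180°, 180° and 0°, the primary resultant cancels at every crank angle θ, while the secondary terms add up instead of cancelling, which shows why the geometry of the crank has to be chosen with care.

```python
import math

def resultant_forces(crank_phases_deg, theta_deg):
    """Sum the primary and secondary reciprocating force terms over all cylinders.

    Simplified, hypothetical model: unit amplitudes, first two harmonics only.
    """
    theta = math.radians(theta_deg)
    primary = sum(math.cos(theta + math.radians(p)) for p in crank_phases_deg)
    secondary = sum(math.cos(2 * (theta + math.radians(p))) for p in crank_phases_deg)
    return primary, secondary

# Flat-plane inline four: crank throws at 0, 180, 180 and 0 degrees.
INLINE_FOUR = [0, 180, 180, 0]

for angle in (0, 30, 60, 90):
    p, s = resultant_forces(INLINE_FOUR, angle)
    print(f"theta={angle:3d}  primary={p:+.3f}  secondary={s:+.3f}")
```

The primary column stays at zero (up to rounding), while the secondary column follows 4 cos 2θ, because shifting a throw by 180° shifts its second harmonic by a full 360°.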

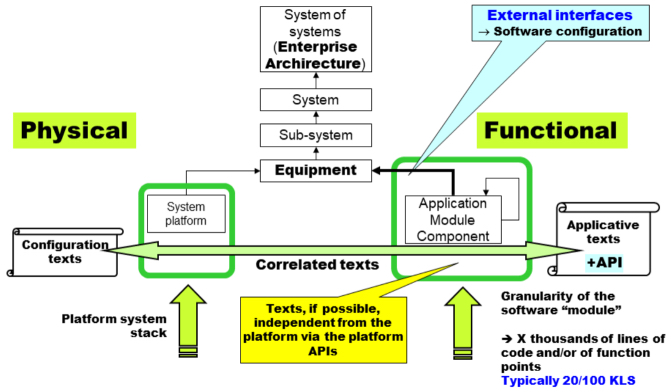

3.4.1.2. The computer and its interface stack

The computer is probably the greatest hierarchical machine ever invented by mankind. Figure 3.3 gives some idea of this.

Figure 3.3. Hierarchical organization of a computer in layers. For a color version of this figure, see www.iste.co.uk/printz/system.zip

Within the computer, which is first of all an immense electrical circuit including hundreds of kilometers of invisible cables buried in a mass of silicon30, two kinds of structures coexist: physicochemical structures that are entirely dependent on the laws of nature, with their hazards, and purely logical structures, completely abstract and immaterial, namely the programs written by the various categories of programmers. These programs make it possible, from the user’s point of view, to provide a service, meaning that a single cause (in this case, the activation of a command of the system) will always produce the same effect in reality.

In the software alone, there are more than 20 levels, depending on the organization used (indeed, the term “layered architecture” comes from the world of computers, and for good reason!), and at least as many must be added on the hardware side.

The computer’s lesson in systemics teaches us how to go from a world that depends on the laws of nature, in particular those of quantum physics, to an idealized world of algorithms and cooperating processes, whose control methods are entirely subject to the directives given by the machine programmer. The computer does absolutely nothing unless a programmer has decided it; it has strictly no initiative, nor any autonomy that was not foreseen in its design. This is the very condition of its programmability, that is, its capacity to be programmed, and of its usefulness, as we briefly mentioned in Chapter 2 with the Church–Turing thesis.

From the point of view of its circuitry, a machine instruction is never more than an interconnection diagram linking a functional element, such as an adder circuit, to other elements in which the “numerical” information to be taken into account is registered. This information is incorrectly called “data” because, at this level of the machine, there are only configurations of energy states; the resulting energy state will be interpreted as a number only at the next level up. In von Neumann’s architecture, the interconnection diagrams, meaning the commands that the computer must execute, and the “data” are registered in the same organ: the memory. The memory is a neutral organ whose function is to maintain the energy state of its constituents for as long as it is electrically powered. In the upper stages of the hierarchy, its content will be interpreted either as interconnection diagrams or as data, and these two roles may be swapped. This is what allows us to introduce, virtually, purely logical “abstract machines” into the only real physical machine; for example, the bootstrap stage in Figure 3.3, where a protosystem, invisible to the user, constructs in memory the structures of the final system that will be visible to them (for those who use Microsoft Windows, this is the role performed by the BIOS), hence the name bootstrap.

As we all know, the quantum world, at the atomic level, is a random world. Totally strange phenomena take place there, such as a material letting an electric current pass or not depending on certain conditions: in one case it behaves as a conductor, and in the other as an insulator; this is what defines a semiconductor. It is thanks to semiconductor physics, and to the extreme purification of materials31, that the computer has really been able to develop. Naturally semiconducting bodies, like galena (a lead sulphide mineral), are rare in nature. Moreover, the ultra-pure silicon crystals from which all integrated circuits are made conduct very poorly and behave almost like insulators. It is by disturbing the crystal very slightly, through the introduction of certain impurities, that the semiconductor phenomena emerge.

Transistors are today technical objects of the nano world. With etching distances of 10–20 nanometers, a transistor spans rows of a few hundred silicon atoms, that is, a small block of matter containing a few million atoms (a block of 100 × 100 × 100 = $10^6$, i.e. 1 million). As a result, a complete circuit can contain, or will contain during the 2010–2020 decade, 10 billion transistors ($10^{10}$) on a few cm² of silicon, which may seem enormous but remains small on the scale of Avogadro’s number, $6.02 \times 10^{23}$ atoms per mole. But these nanometric transistors are small enough to be affected by alpha particles (2 protons + 2 neutrons, that is, a helium nucleus) produced by natural radioactivity, which are energetic enough to change the state of a transistor. Then there are other random phenomena, such as diffusion (at the atomic scale and at ambient temperature, everything is moving! It is only at absolute zero, –273°C, that matter is at rest), and the electric and magnetic fields induced by moving electrical charges, which can lead to parasitic currents, etc. When we say that a circuit switches at a frequency of 1 gigahertz, it is as if we were turning a light on and off a billion times per second, and this can create overvoltages at the scale of the circuit. Since a current is circulating, heat is produced, and it must be dissipated; otherwise, there will be a breakdown. From a physics point of view, the circuit is a machine that makes information circulate, information being represented by a certain energy state that is temporarily or permanently memorized in its circuits. When we talk about the circulation of information, we ipso facto refer to communication phenomena, as on telephone lines, which are sensitive to all kinds of “noise” whose effects must be cancelled by suitable compensations. The same holds for circuits that contain tens of kilometers of nanometric wires, where “noise” must be controlled and inhibited.

In short, if nothing is done to organize the interactions, “it is not going to work!”

All the reliability of a circuit, its physical capacity to do exactly what the programmer has logically decided, is based on collective phenomena involving not one atom but a few million atoms. No one today can say how far down we can go32. It is necessary to test and observe what happens, without forgetting slow phenomena such as aging. This is all the more true since the circuit must remain operational for at least the lifetime of the system that hosts it, whereas the diffusion of microscopic conductors a few nanometers wide, made of aluminum or copper, is driven by thermal agitation. Only the large atoms of gold, used for a long time in these technologies, move very little. Since there are many transistors, some can be used to correct the others, with error-correcting codes whose relevance rests on a statistical property related to the intrinsically random nature of the quantum world itself. For the circuit to break down, two alpha particles, synchronized to within a billionth of a second (that is, less than one clock cycle), would have to disturb both the energy profile of the information and that of its correcting code, which occupy two topologically distinct zones of the machine. This is a probability that we know how to calculate. All the reliability of the structure is organized in this way, with compensation mechanisms placed in a suitable manner, since the structure is itself constantly under surveillance, at least as far as its electrical state is concerned. If this state is declared fit for service, then we can verify that the operational logic of the circuits, meaning the finite-state automata (an invariant that is independent of the materials that constitute it, materialized by suitably calculated test sets), remains nominal and in compliance with the expected service.
If this is not the case, the circuit is nothing more than a simple resistor that has lost the meaning its designers had attributed to it.
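The principle of using some transistors to correct the others can be sketched with the classical Hamming(7,4) code, chosen here only because it is the simplest textbook single-error-correcting code; the text does not say which code real circuits use, and actual memories rely on wider codes. Three parity bits protect four data bits, so any single flipped bit (one alpha-particle hit) is located and corrected, whereas two simultaneous flips defeat it, which is the situation the text describes as the failure case.

```python
def hamming74_encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into 7 bits at positions 1..7."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4          # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4          # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4          # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]

def hamming74_decode(c):
    """Correct at most one flipped bit and return the 4 data bits."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3     # 1-based position of the error, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]

data = [1, 0, 1, 1]
word = hamming74_encode(data)

one_hit = word.copy()
one_hit[4] ^= 1                          # one "alpha particle" flips one stored bit
print(hamming74_decode(one_hit) == data)     # True: the error is corrected

two_hits = word.copy()
two_hits[0] ^= 1
two_hits[1] ^= 1                         # two simultaneous hits
print(hamming74_decode(two_hits) == data)    # False: the code is overwhelmed
```

The syndrome directly names the faulty position, which is why the correcting bits can sit in a different zone of the circuit from the data they protect.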

It remains the case that, at a certain level of the hardware stack, random phenomena, whether from the quantum world or from the classical world, must be compensated for not to 99.999% but to exactly 100%, because at the switching speed of the machine, breakdown would otherwise be certain: at a billion commutations per second, even a residual failure rate of 1 in 100,000 would mean some 10,000 failures every second. This means that the layer above can become completely abstract with respect to the layer below, which then operates like a perfect black box providing the expected service. If the electrical system constituted by the circuit is not guaranteed at 100%, it is strictly impossible to validate the operational logic of the circuit, because we no longer know whether the cause of a breakdown is “physical” or “logical”; and since we are in the nano world, we cannot even observe what happens from the outside using intrusive devices. The observer must therefore be integrated into the layer that they observe: this is the layer’s Maxwell’s demon, which filters what is good (see Figure 2.3), except that here the demon is the architecture!

Herein lies the magic of layers. A “layer” that does not have this property is not a layer; it is just a thing, an unformed magma! Only the interface that separates the sender from the receiver counts, as shown in the diagram in Figure 3.4. The sender only sees the part of the receiver that is strictly necessary for its use, meaning its external language; all the rest is, and must remain, hidden. The authors’ website, concerning the organization of a computer stack, provides an excellent illustration of this fundamental principle.

Figure 3.4. Hierarchical functional logic of layers. For a color version of this figure, see www.iste.co.uk/printz/system.zip
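The rule that the sender sees only the external language of the receiver can be sketched with an abstract interface. Everything here is hypothetical and chosen for illustration: a `BlockDevice` interface playing the role of layer N’s external language, one implementation whose internals stay hidden, and an upper `FileLayer` that depends only on the interface and keeps its own naming, so the lower implementation could be replaced without touching the upper layer.

```python
from abc import ABC, abstractmethod

class BlockDevice(ABC):
    """External language of layer N: the only thing layer N+1 may use."""

    @abstractmethod
    def write_block(self, index: int, payload: bytes) -> None: ...

    @abstractmethod
    def read_block(self, index: int) -> bytes: ...

class RamDisk(BlockDevice):
    """Layer N: its internals (a dict and its keys) stay hidden behind the interface."""

    def __init__(self) -> None:
        self._cells: dict[int, bytes] = {}

    def write_block(self, index: int, payload: bytes) -> None:
        self._cells[index] = bytes(payload)

    def read_block(self, index: int) -> bytes:
        return self._cells.get(index, b"")

class FileLayer:
    """Layer N+1: builds named files using only the BlockDevice interface."""

    def __init__(self, device: BlockDevice) -> None:
        self._device = device
        self._table: dict[str, int] = {}    # this layer's own names: file -> block

    def save(self, name: str, text: str) -> None:
        index = self._table.setdefault(name, len(self._table))
        self._device.write_block(index, text.encode("utf-8"))

    def load(self, name: str) -> str:
        return self._device.read_block(self._table[name]).decode("utf-8")

files = FileLayer(RamDisk())
files.save("readme", "layers talk through interfaces")
print(files.load("readme"))
```

The abstract base class refuses to be instantiated on its own, so the interface is a contract rather than an implementation; only the two declared operations cross the boundary between the layers.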

Without perfect mastery of layer engineering, which is the very essence of the constructive, modular approach of systems engineering, there would be neither computers, nor communication systems, nor systems at all! This is because the logic of layers makes it possible to control the propagation of abnormal, non-functional situations whose accumulation would be fatal to the system. The decoupling, materialized by the interface, radically separates two worlds, each of which respects a logic of its own. Each level has its Simondon triplet {U, S, E}. This is controlled emergence, which nature also achieves very well with its conservation laws, creating more and more diversified structures33 at all scales. By analogy with mathematics34, we can say that a layer is a theorem or a set of theorems that are treated as axioms and can be used, within their limits of validity, to construct other theorems. If necessary, new axioms specific to a given level are added, in order to semantically enrich the foundations of the system and satisfy the functional requirements of the application layers at the top of the stack in Figure 3.2. This is what J. von Neumann, right from the start, called the logical model of the machine, which he described as a computing instrument.

Engineering of layer N+1 is independent from engineering of layer N, subject to compliance with the rules of the interface, meaning the external language associated with the layer. This independence is implemented through naming mechanisms, meaning the act of giving names to the entities specific to the layer, and through cross-referencing mechanisms that keep track of who has done what in the system, from one layer to another. If the layers are not decoupled, or are badly decoupled, the hazards specific to each layer will combine and, as a result, become unintelligible to their immediate environment. The corresponding engineering teams can work independently from each other, as long as the interface between them ensures the transduction from one domain to the other, which requires cooperation between the teams. Hence, traceability matrices that memorize past states are essential engineering tools; without them, reconstructing the situation that has led to an error is impossible.

The lesson of these two examples is that all “living” systems, meaning those that provide a service to their users, possess a logical model that keeps them functionally “alive”, meaning coherent from the point of view of their mission, that is, their maintenance in operational condition.

Understanding a system means making its logical model explicit and, above all, guaranteeing that model as an invariant of fundamental comprehension, in other words, deterministic, with mastery of the construction process, level by level. The logical model is a text associated with a certain abstract machine (in fact, a stack of machines organized hierarchically), which operationally defines its semantics. Whatever happens, we are always able to extract the history of the successive transformations that have been carried out and to compensate for the hazards found, which is a prerequisite for the sound engineering of errors, thanks to traceability matrices.
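A traceability matrix in the sense used here can be sketched as a registry of uniquely named entities, each cross-referencing the upper-layer entity that caused it, from which the chain of transformations behind an observed effect can be reconstructed. The layer names, the record format and the class below are hypothetical illustrations, not a prescribed engineering tool.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class TraceEntry:
    layer: str                  # layer in which the entity was created
    name: str                   # unique name given to the entity in that layer
    action: str                 # what was done
    caused_by: Optional[str]    # name of the upper-layer entity that requested it

class TraceabilityMatrix:
    """Hypothetical cross-layer trace: every named entity points to its cause."""

    def __init__(self) -> None:
        self._entries: dict[str, TraceEntry] = {}

    def record(self, layer: str, name: str, action: str,
               caused_by: Optional[str] = None) -> str:
        if name in self._entries:
            raise ValueError(f"name {name!r} already used: names must be unique")
        if caused_by is not None and caused_by not in self._entries:
            raise KeyError(f"unknown cause {caused_by!r}")
        self._entries[name] = TraceEntry(layer, name, action, caused_by)
        return name

    def history(self, name: str) -> list[TraceEntry]:
        """Walk the cross-references upward, from an observed effect to its first cause."""
        chain = []
        current: Optional[str] = name
        while current is not None:
            entry = self._entries[current]
            chain.append(entry)
            current = entry.caused_by
        return chain

trace = TraceabilityMatrix()
trace.record("application", "cmd-1", "user saves a document")
trace.record("file system", "io-17", "write one block", caused_by="cmd-1")
trace.record("driver", "dma-903", "DMA transfer", caused_by="io-17")

for entry in trace.history("dma-903"):
    print(f"{entry.layer:12} {entry.name:8} {entry.action}")
```

Refusing duplicate names is what makes the reconstruction unambiguous: if two entities shared a name, the walk back from an effect could no longer tell which cause it came from.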

3.4.2. Meaning and denotation: properties of classification trees

These two examples show, each in its own way, the importance of the act of “naming”. Naming things, choosing a name, is never a trivial act, as we have known for a long time; in the Bible, it is even an attribute that God confers on humankind (Genesis 2:19–20). If the elements of reality do not have the same name for everyone, communication becomes impossible, confusion takes over, and that is Babel, another biblical metaphor (Genesis 11:1).

Logicians have taught us to be careful with names. Two different names do not necessarily mean two different things; G. Frege distinguished what he called the meaning, carried by the name, from what the name designates; hence, in his terminology, meaning and denotation. “The evening star” and “the morning star” are two names with different meanings for the same object, in this case the planet Venus.

The number π has multiple meanings. Other than the usual one, the ratio of the circumference of a circle to its diameter, the expression

$$\pi = \int_{-1}^{1} 2\sqrt{1 - x^2}\,dx$$

is also found, which is the calculation of the surface area of a circle of radius 1, or again the formula used by J. Machin, with the series expansion of the arctangent, to calculate the first 100 decimals:

$$\frac{\pi}{4} = 4\arctan\frac{1}{5} - \arctan\frac{1}{239}$$

and other, more exotic ones. More fundamentally, the objective of algebraic geometry, refounded in the 1960s–1970s by A. Grothendieck, was to replace the geometrical language based on figures by algebraic expressions, which is a way of studying the correspondence between the continuous universe of geometrical beings and the countable universe, with which we can approximate the continuous. As we know, the rational numbers have the same cardinality as the whole numbers, but the continuum contains an infinity of numbers that are not rational. A computer, by definition, only allows manipulation of what is countable, which does not prevent us from making it perform magnificent approximations of the continuous, for example caustic surfaces in optics or fractal curves, and from making it control fundamentally continuous physicochemical processes, or at least processes modeled by differential equations; all this thanks to the inertia of the real world where, beyond a certain threshold, the two universes touch. This last point is fundamental because it amounts to defining a quantum of action thanks to which the constructed system remains intelligible. This quantum depends on the expertise of the architect who designed the system, because it is a decision made by the architect (refer to the numerous additions on the authors’ website).
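Frege’s point, several expressions with different meanings for one and the same denotation, can be checked numerically. The sketch below evaluates Machin’s arctangent formula with Python integers scaled by a power of ten, using the series arctan x = x − x³/3 + x⁵/5 − …, and compares the result with the floating-point constant `math.pi`; the function names and the number of guard digits are our own choices.

```python
import math

def arctan_inv(x: int, unity: int) -> int:
    """arctan(1/x) * unity, from the alternating series 1/x - 1/(3x^3) + 1/(5x^5) - ..."""
    total = term = unity // x
    x_squared = x * x
    n, sign = 1, 1
    while term:
        term //= x_squared
        n += 2
        sign = -sign
        total += sign * (term // n)
    return total

def machin_pi(decimals: int) -> str:
    """Return pi with the requested number of decimals via Machin's formula."""
    guard = 10                                  # extra digits absorb truncation errors
    unity = 10 ** (decimals + guard)
    pi_scaled = 4 * (4 * arctan_inv(5, unity) - arctan_inv(239, unity))
    digits = str(pi_scaled // 10 ** guard)
    return digits[0] + "." + digits[1:]

print(machin_pi(100))
print(machin_pi(15), repr(math.pi))   # two "names", one and the same number
```

Only integers are manipulated, which also illustrates the remark above: the computer works in the countable universe and reaches the continuum only through approximations fine enough for the purpose.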

In the closed world of the constituent parts of an automobile (although it could just as easily be a large airliner like the A380), or of the billions of components of a computer today, etc., the description of the system is a finite set of references in which everything is listed; this is what mechanics have called, since the beginning of the automobile industry, a classification: the bill of materials35. This classification exactly reflects the hierarchical structure as visualized in Figure 3.2.
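A bill of materials is exactly such a finite, hierarchical classification. The sketch below, with invented part names and quantities, stores each assembly with its direct components and “explodes” it recursively to count how many of each leaf part the complete product requires.

```python
from collections import Counter

# Hypothetical bill of materials: assembly -> list of (component, quantity per assembly).
BOM = {
    "vehicle": [("engine", 1), ("wheel", 4), ("body", 1)],
    "engine": [("piston", 4), ("crankshaft", 1), ("bolt", 24)],
    "wheel": [("tyre", 1), ("rim", 1), ("bolt", 5)],
}

def explode(part: str, quantity: int = 1) -> Counter:
    """Return the total quantity of each leaf part needed to build `quantity` units of `part`."""
    if part not in BOM:                         # a leaf: an elementary or purchased part
        return Counter({part: quantity})
    total = Counter()
    for component, per_unit in BOM[part]:
        total += explode(component, quantity * per_unit)
    return total

for part, qty in sorted(explode("vehicle").items()):
    print(f"{part:10} {qty}")
```

Because the set of references is finite and every reference has a single place in the tree, the explosion always terminates and yields a complete inventory, which is precisely what the dynamic part of computerized systems will no longer guarantee.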

The situation is quite different once computerized communication mechanisms are integrated within the systems themselves, allowing the user, whether human or artificial, to interact with the system wherever it is. To understand the consequences of this, we need to return to Simondon’s triplet {U, S, E}. Any interaction by the user will give rise to structures stored in the memories of the computers/processors (calculation organs in J. von Neumann’s sense of the term) that are now part of all systems. We recall once again that, in computerized systems built on silicon, all of this is transformed at one point or another into energy profiles; this point will be taken up in Chapter 5. Each time the user interacts with the system, an active memorization structure, referred to as a process, must be created and therefore named. The user can interrupt their interaction, temporarily or definitively, which means that what has been created must be retrievable.

When an interaction is declared finished from the point of view of the user, it does however leave an imprint that is required for traceability of the operations carried out, a trace that must be conserved in a long-term archive, in compliance with the legislation in effect; this is known as forensic data36. In the event of a breakdown, thanks to these traces, the history of the interactions that have led to an abnormal situation can be reconstructed; this is therefore an essential device for system safety.

In terms of naming, the consequence is that new names will need to be created throughout the life of the system. In a computerized system (and today they all are), the set of constituent elements is no longer a finite set of known extent; it is, in the literal sense, infinite, in other words not finite. The set is no longer predicative; as a consequence, reasoning based on the excluded middle no longer works when we reason about a “living” system that interacts with its environment. The “parts” of the system are, on the one hand, physical parts, visible in the usual meaning of the term, and, on the other hand, invisible “parts”, which cannot be touched but only “seen”. All computerized systems therefore contain real parts, finite and known in number, as well as, and this is new, virtual parts, potentially infinite in number. This is shown in the diagram in Figure 3.5, onto which we could project the description of the vehicle in Figure 3.2.

Figure 3.5. Static and dynamic classification. For a color version of this figure, see www.iste.co.uk/printz/system.zip

The figure shows the static part and the dynamic part of the system, some of whose constituent equipment integrates software to a greater or lesser extent. When this software “works” on behalf of users, human or non-human (e.g. “connected objects” in interaction), workspaces are created, known as “sessions” in systems engineering jargon, which must be given a name so that they can be managed (see section 5.1, the language of interaction and raw orders), because they are likely to interact with the equipment, and also from user to user.
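The need to name sessions created on the fly, alongside a finite and known set of static equipment, can be sketched as follows. The naming scheme (equipment name plus a sequence number) and the shared list standing in for the long-term archive are hypothetical illustrations, not a prescribed design.

```python
import itertools

class Equipment:
    """Static part: one of a finite, known set of named pieces of equipment."""

    def __init__(self, name: str, archive: list) -> None:
        self.name = name
        self._counter = itertools.count(1)
        self._archive = archive               # long-term trace shared by the system
        self.open_sessions: dict[str, str] = {}

    def open_session(self, user: str) -> str:
        """Dynamic part: every interaction creates a new entity that must be named."""
        session = f"{self.name}/session-{next(self._counter)}"
        self.open_sessions[session] = user
        return session

    def close_session(self, session: str) -> None:
        """Closing removes the live entity but leaves an imprint in the archive."""
        user = self.open_sessions.pop(session)
        self._archive.append((session, user))

archive: list = []
pump = Equipment("pump-3", archive)
s1 = pump.open_session("alice")
s2 = pump.open_session("sensor-17")         # a non-human user
pump.close_session(s1)
print(s2, pump.open_sessions, archive)
```

The equipment list is fixed, but the set of session names keeps growing for as long as users interact, and closed sessions survive only as archived traces.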

In terms of complexity, this “opening” will have serious consequences. The opening creates new opportunities, but it also creates architectural obligations, because it will have to be organized rigorously in engineering terms. It is not enough to “open”; it is also necessary to control, and for that, to trace, to conserve histories in order to validate the transformations that have been carried out. These dynamic entities, related to the evolution of the system over time, of course have a relationship with the static element that created them; but they can also have relationships among themselves, 2 by 2, 3 by 3, etc., which means that the potential combinatorial is of the same order as the set of all subsets, that is, if N entities are created, $2^N$. But there is worse, because the context thus created can itself enter into a relationship with the set that created it, and this time we are in $2^{2^N}$, and so on and so forth as the operations carried out by the users continue; in other words, a tower of exponentials $2^{2^{\cdot^{\cdot^{2^N}}}}$. We are heading straight into what physicists interested in information call the third infinite, that of the combinatorials conveyed by information: the infinity of complexity, or the infinitely complex37. Besides the errors that we have already mentioned, the architect designer must take care to control this combinatorial aspect so that the system simply remains testable: this is the role of the integration process, one of the fundamental processes of systems engineering, which is nothing more than a concatenation operator in the language of theoretical computer science.
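The orders of magnitude in this escalation are easy to check. The sketch computes, for a few small values of N, the number of subsets $2^N$ and then the number of decimal digits of $2^{2^N}$; the third level of the tower is already too large to write out for these values of N.

```python
def levels(n: int) -> tuple[int, int, int]:
    """Return (N, 2**N, number of decimal digits of 2**(2**N))."""
    subsets = 2 ** n                     # all possible groupings of N entities
    digits = len(str(2 ** subsets))      # groupings of those groupings
    return n, subsets, digits

for n in (3, 5, 8, 10):
    print("N=%2d  2^N=%5d  2^(2^N) has %d digits" % levels(n))
```

With only 10 entities, the second level already has 309 digits, which is why integration has to control combinatorics step by step rather than hope to test every configuration.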

With the telephone numbers or IP addresses required for the proper operation of the Internet, all users are familiar with naming problems. A telephone number identifies a specific telephone, thanks to a code of 10 digits known to everyone, accompanied if necessary by the international dialing code. Ten digits make 10 billion potential telephone numbers, for a population of 65 million, plus companies, plus users who sometimes have several telephone numbers. Any computer connected to the Internet and, more generally, all “connected objects”, which make up what we call the Internet of Things (IoT), are provided with an IP address that identifies them as communicating entities. The IP address was first coded on 32 bits, which gives an identification potential of 4,294,967,296 objects! This is largely insufficient with the development of the IoT. With version 6 (IPv6), the IP address has gone to 128 bits, meaning an identification potential of $2^{128}$, which in engineering notation is approximately $3.4 \times 10^{38}$.

The number may seem immense but, when we think about it carefully, it is not all that big, for at least two reasons:

- 1) it is necessary to recall that what makes the combinatorial of things infinitely complex are the relationships or interactions, whatever they are. Keeping a trace of the incoming and outgoing calls of a telephone over the legal period of two or three years (refer to the L of PESTEL) will quickly generate millions of combinations that will need to be archived, but above all found again, just in case! The identifier of each interaction must be unique because, if it is not, we can no longer be sure of the incoming/outgoing relationship over time: there is a risk of confusion about identity;

- 2) moreover, it is humanly impossible to memorize numbers of 39–40 digits, unless they are written in places that are highly visible to the user, which is contrary to all security regulations. The solution consists of associating a familiar name “in plain English” with the code, which the user can then easily memorize.

Taking the Latin alphabet, lower case and upper case, plus punctuation and some common symbols, we rapidly reach approximately 70–80 characters. If the designer authorizes names in plain English of 30 characters, the combinatorial this time lies between $70^{30}$ and $80^{30}$, roughly $10^{55}$ to $10^{57}$, to be compared with the $10^{38}$ of IPv6 addresses! The name space therefore overflows the address space.

Thirty characters is very short and not very user-friendly; we recall that SMS messages and Twitter messages are limited to 160 and 140 characters respectively. If we redo the calculation with, for example, 100 characters (a single line of text in a 10–11 point font), the name space thus created will count between $70^{100}$ and $80^{100}$ names, roughly $10^{184}$ to $10^{190}$. Moral: the clarity required for user-friendliness in human/machine interfaces, which is the user’s external language, will create a difficult problem in the management of names, given the coding possibilities offered by the internal names that the technology authorizes. Through these naming problems, we touch on one of the difficulties of correctly managing the inside/outside interaction, meaning the interaction of the user U with the two other elements of the triplet {U, S, E}. We will return to this in Chapters 8 and 9.
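The comparison between the internal address space and the external name space can be reproduced with exact integer arithmetic. The alphabet size of 75 symbols below is our assumption, picked inside the 70–80 range given in the text.

```python
IPV4 = 2 ** 32
IPV6 = 2 ** 128
ALPHABET = 75          # assumption: within the 70-80 symbols estimated in the text

def order_of_magnitude(n: int) -> int:
    """Power of ten of a positive integer n, i.e. floor(log10 n), computed exactly."""
    return len(str(n)) - 1

print(f"IPv4 addresses:       {IPV4:,}")
print(f"IPv6 addresses:       ~10^{order_of_magnitude(IPV6)}")
for length in (30, 100):
    names = ALPHABET ** length
    print(f"{length:3d}-character names: ~10^{order_of_magnitude(names)}"
          f"  (exceeds IPv6: {names > IPV6})")
```

Working with Python’s arbitrary-precision integers avoids any floating-point rounding in the comparison; the order of magnitude is simply the digit count minus one.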

Here we give a few technical elements that allow us to understand the possibilities, but also the new problems, caused by classification trees.

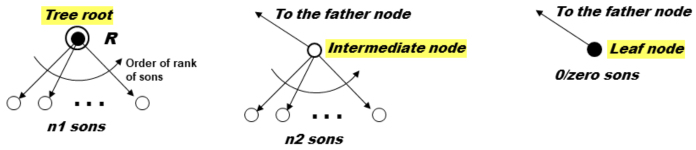

Every tree in the classification has a unique root, intermediate nodes and terminal leaves. The root is a node that only has sons, an intermediate node has sons and a single father, and a terminal leaf has a single father and no sons (see Figure 3.6).

Figure 3.6. Construction of classification trees

Each element of the tree, whether it is a leaf or an intermediate node, can be located by a series of numbers starting at the root, $\{R, i_1, i_2, \dots, i_k\}$, which constitutes its name; the order or rank of the sons is conventional. The length of the series depends on the depth (or height) of the element in the tree.

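If all the non-terminal nodes have the same number n of sons, we say that the tree is balanced; it is n-ary. Counting the root node (at depth 0), a tree of this kind of depth/height h will have a total number of nodes:

$$N = 1 + n + n^2 + \dots + n^h = \frac{n^{h+1} - 1}{n - 1}$$

of which $n^h$ are terminal leaves.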

The number of terminal leaves is sometimes known as the “width” of the tree; we see that the depth/height of the tree varies as the base-n logarithm of the number of leaves (refer to an application of these properties in Chapter 8).
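The node-count formula and the logarithmic relation between depth and width can be checked by brute force. This sketch lists every node name of a complete n-ary tree, each name being the path {R, i₁, …, i_k} from the root written as a tuple (the empty tuple standing for R), and compares the count with the closed form.

```python
import math

def node_names(n: int, h: int) -> list[tuple[int, ...]]:
    """All node names of a complete n-ary tree of depth h; () stands for the root R."""
    names, level = [()], [()]
    for _ in range(h):
        level = [path + (i,) for path in level for i in range(1, n + 1)]
        names.extend(level)
    return names

def node_count(n: int, h: int) -> int:
    """Closed form 1 + n + ... + n^h = (n^(h+1) - 1) / (n - 1), for n >= 2."""
    return (n ** (h + 1) - 1) // (n - 1)

n, h = 3, 4
names = node_names(n, h)
leaves = [p for p in names if len(p) == h]
print(len(names), node_count(n, h))                    # both 121
print(len(leaves), round(math.log(len(leaves), n)))    # width 81, depth 4
```

The length of each name tuple is the depth of the node, so the names themselves carry the hierarchy.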

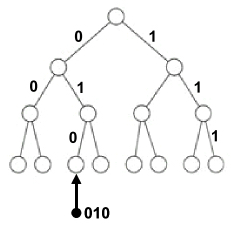

In ICT, binary trees, with two branches per node, play an important role for technological reasons: many physical devices that are easy to manufacture present two stable energy states that we then know how to compose (meaning concatenate). Any binary number can be interpreted as a path in a binary tree: it is simply a case of interpreting 0 as going to the left and 1 as going to the right. In Figure 3.7, the number 010 therefore denotes one of the eight leaves of a binary tree of depth 3 (i.e. $2^3$). A 32-bit binary number has a designation capacity equal to $2^{32}$, that is 4,294,967,296, a little more than 4 billion leaves. This interpretation of numbers, binary or not, since it works with any numbering base, will be used to organize storage.

Figure 3.7. Binary tree to code the names of leaves
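Reading a binary number as a path, 0 for left and 1 for right, can be sketched as follows; the helper name is ours. Following the path from the root while halving the range of reachable leaves lands exactly on the leaf whose left-to-right index is the number’s value, which is why a binary number can name a leaf directly.

```python
def leaf_from_path(bits: str) -> int:
    """Follow bits from the root (0 = left, 1 = right); return the leaf index, left to right."""
    low, high = 0, 2 ** len(bits)        # range of leaves still reachable
    for b in bits:
        middle = (low + high) // 2
        if b == "0":
            high = middle                # go left: keep the lower half
        elif b == "1":
            low = middle                 # go right: keep the upper half
        else:
            raise ValueError("a path may only contain 0 and 1")
    return low

print(leaf_from_path("010"))   # 2: the third of the 8 leaves of a depth-3 tree
print(2 ** 32)                 # leaves addressable with 32 bits
```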

In a computer, a binary number can therefore designate a memory compartment: this is the addressing mechanism, meaning a memory reference, which designates a compartment that can contain a value, itself also represented by a binary number. This choice of “all binary” was made from the very first machines by von Neumann himself.

The reference/value distinction is absolutely fundamental, because we do not “calculate” the same thing when operating on references as when operating on values; in G. Frege’s language, the meaning (names) and the denotation must not be confused if we want to avoid serious logical errors! In linguistics, this is the signifier/signified distinction made by F. de Saussure to organize the signs of language.
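The reference/value distinction can be made tangible with a tiny simulated memory in which an address is itself just a number. In this sketch (the memory contents are arbitrary), adding 1 to a reference designates the next compartment, while adding 1 to a value produces a new content: the same operation, two different calculations.

```python
memory = [0] * 8          # 8 compartments, addresses 0..7
memory[3] = 41            # the value stored at address 3
memory[4] = 7

ref = 3                   # a reference: it names compartment 3
val = memory[ref]         # a value: what compartment 3 contains (41)

next_ref = ref + 1        # arithmetic on the reference: designates compartment 4
bumped_val = val + 1      # arithmetic on the value: 42, nothing in memory changes

print(memory[next_ref], bumped_val)   # 7 42
memory[ref] = bumped_val              # only an explicit store changes the compartment
print(memory[3])                      # 42
```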

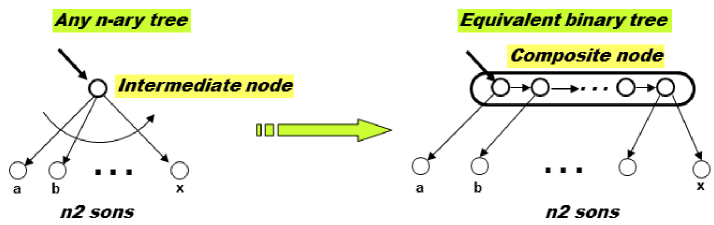

Another fundamental property of binary trees is that they allow any type of tree to be represented, as shown in Figure 3.8. We note that the intermediate node with its $n_2$ sons has given rise to a composite node formed of $n_2 - 1$ binary nodes, which inherit the properties of the initial node.

Figure 3.8. Binary trees representing any tree. For a color version of this figure, see www.iste.co.uk/printz/system.zip
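The construction of Figure 3.8 can be sketched as a recursive transformation: a node with k ≥ 2 sons is replaced by a chain of k − 1 binary nodes that all carry its label, so they inherit its properties while the order of the leaves is preserved. The class and function names are our own; other binary encodings exist (for instance the left-child/right-sibling representation), and this is only one way to realize the idea.

```python
from dataclasses import dataclass, field

@dataclass
class Node:                          # a node of an arbitrary (n-ary) tree
    label: str
    children: "list[Node]" = field(default_factory=list)

@dataclass
class BNode:                         # a node of a binary tree
    label: str
    left: "BNode | None" = None
    right: "BNode | None" = None

def binarize(node: Node) -> BNode:
    """Replace a node with k >= 2 sons by a chain of k - 1 binary nodes sharing its label."""
    kids = [binarize(c) for c in node.children]
    if not kids:
        return BNode(node.label)
    if len(kids) == 1:
        return BNode(node.label, kids[0])
    chain = kids[-1]
    for kid in reversed(kids[:-1]):
        chain = BNode(node.label, kid, chain)    # each link inherits the original label
    return chain

def count_label(b: "BNode | None", label: str) -> int:
    if b is None:
        return 0
    return (b.label == label) + count_label(b.left, label) + count_label(b.right, label)

def leaves(b: "BNode | None") -> list:
    if b is None:
        return []
    if b.left is None and b.right is None:
        return [b.label]
    return leaves(b.left) + leaves(b.right)

tree = Node("R", [Node("a"), Node("b"), Node("c"), Node("d")])   # n2 = 4 sons
binary = binarize(tree)
print(count_label(binary, "R"), leaves(binary))   # 3 ['a', 'b', 'c', 'd']
```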

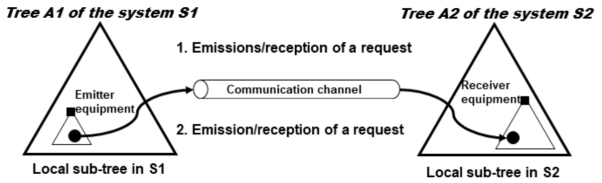

To add to what has already been said about communication between equipment, it is fundamental to understand that every act of communication between an emitter and a receiver presupposes that both are located in two classification trees, specific to the systems that host them. They are therefore perfectly identified in their respective hierarchies (a little like the URLs that identify resources on the Internet), which allows us to associate specific processing with them in order to control exchanges. For example, a recipient can refuse to receive messages if the emitter is not registered as having the right to send them to that recipient. This exchange can be represented as in Figure 3.9.

Figure 3.9. Communication in a space of names
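The access control described for Figure 3.9 can be sketched as follows, assuming that complete names are written as slash-separated paths from the root of the system tree, in the manner of URLs, and that a receiver authorizes whole subtrees of emitters (the `Receiver` class and the names such as `/ship/sensors/radar-1` are hypothetical).

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

def segments(name: str) -> List[str]:
    """Split a complete name such as '/ship/sensors/radar-1' into tree levels."""
    return [s for s in name.strip("/").split("/") if s]

def is_within(name: str, subtree: str) -> bool:
    """True if `name` designates the node `subtree` or one of its descendants."""
    n, s = segments(name), segments(subtree)
    return n[:len(s)] == s

@dataclass
class Receiver:
    complete_name: str
    # Subtrees of the classification tree whose members may send to this receiver.
    authorized: Set[str] = field(default_factory=set)
    inbox: List[Tuple[str, str]] = field(default_factory=list)

    def accept(self, emitter: str, payload: str) -> bool:
        """Deliver the message only if the emitter's complete name lies
        in an authorized subtree; otherwise refuse it."""
        if not any(is_within(emitter, sub) for sub in self.authorized):
            return False
        self.inbox.append((emitter, payload))
        return True

console = Receiver("/ship/ops/console-2", authorized={"/ship/sensors"})
print(console.accept("/ship/sensors/radar-1", "track 42"))  # True
print(console.accept("/ship/galley/oven", "track 42"))      # False
```

Comparing whole path segments rather than raw string prefixes is a deliberate choice: `/ship/sensorsX` is not inside `/ship/sensors`, because the hierarchy, not the spelling, defines membership.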

Each piece of emitter/receiver equipment has a local tree, which is itself positioned in the general tree of its system; this means that every element has a local name, and a complete name given by the path from the root of the tree of the system it belongs to. As long as the exchange takes place inside the system, local names are sufficient, but as soon as the exchange takes place between systems, complete names must be used. We again encounter the fundamental inside/outside dichotomy, which structures the identification of the constituent elements and the organization of exchanges within and between systems. Every local name must have a corresponding complete name, a unique identifier that can exist in two forms: an internal form, generally binary, and an external form, in clear text in the user’s language, whether the user is a human or a machine. This is the price of coherence and universal exchange, a fundamental aspect of any communication architecture.
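A minimal sketch of this naming discipline, assuming complete names are formed by prefixing a local name with the name of its system, and that the internal form is simply a sequential integer (the `NameRegistry` class and the `complete` helper are hypothetical): two elements with the same local name in two different systems receive distinct complete names and distinct unique identifiers, and each identifier can be converted back to its readable external form.

```python
from typing import Dict

class NameRegistry:
    """Hypothetical registry: pairs each complete name (external, readable
    form) with a unique integer identifier (internal, binary form)."""

    def __init__(self) -> None:
        self._ids: Dict[str, int] = {}
        self._names: Dict[int, str] = {}

    def register(self, complete_name: str) -> int:
        """Return the identifier of a complete name, creating it if needed."""
        if complete_name not in self._ids:
            uid = len(self._ids)              # next free identifier
            self._ids[complete_name] = uid
            self._names[uid] = complete_name
        return self._ids[complete_name]

    def internal(self, complete_name: str) -> int:
        return self._ids[complete_name]

    def external(self, uid: int) -> str:
        return self._names[uid]

def complete(local_name: str, system: str) -> str:
    """A local name is only meaningful inside its system; prefixing it with
    the root of the system's tree yields the complete name."""
    return f"/{system}/{local_name}"

reg = NameRegistry()
a = reg.register(complete("antenna/servo", "radar"))
b = reg.register(complete("antenna/servo", "sonar"))
print(a, b)                               # 0 1: same local name, distinct complete names
print(format(a, "08b"), reg.external(b))  # 00000000 /sonar/antenna/servo
```

Registering the same complete name twice returns the same identifier, which is what keeps the internal and external forms in one-to-one correspondence.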

- 1 Pegasus Communications Inc., 1990 and 1999 (originals in 1961–1968).

- 2 Maloine, 1971.

- 3 PUF, 1977.

- 4 To convince ourselves of this, refer to the book by J.-C. Ameisen, La sculpture du vivant, Le Seuil, or P. Kourilsky, Le jeu du hasard et de la complexité, Odile Jacob.

- 5 In his biography of Turing, A. Hodges tells us that Turing said: “Keep the hardware as simple as possible”; a rule of wisdom that machine architects have somewhat neglected, given the number of transistors integrated into a chip, several billion in 2010, but the engineering process remains perfectly controlled.

- 6 Refer to the collection of his articles, Apologie du logos, Hachette, 1990.

- 7 Refer to God & Golem Inc., p. 42; cited previously.

- 8 Refer to Éléments de systémique – Architecture des systèmes available at: http://cesam.community/ and on the author’s website.

- 9 Refer to the books by J.-P. Meinadier, cited previously, published by Hermès.

- 10 Refer to the journal Information and Control, vol. 2, no. 2, 1959; its editorial board brought together all the actors of this new approach, including M.-P. Schützenberger.

- 11 Refer to the famous text by E. Wigner, The Unreasonable Effectiveness of Mathematics in the Natural Sciences.

- 12 Refer to P.M. Engelfriet, Euclid in China – The Genesis of the First Chinese Translation of Euclid’s Elements in 1607 & its Reception Up to 1723, Brill, 1998.

- 13 MIT Press, numerous editions, the first in 1969; translated into French by J.-L. Le Moigne.

- 14 In the jargon used in the 1990s–2000s: workbench, integration framework and architecture framework, in reference to the frame theory of R. Schank. Refer, for example, to the DoD Architecture Framework (DoDAF), TOGAF by The Open Group, or the NAF by NATO.

- 15 Refer to the Calibre 89 designed by Patek Philippe in 1989, which has 1728 parts, a record! We recall that the combinatorics of the assembly give the number 1728!, that is, $\approx 1.08 \times 10^{4846}$ obtained via Stirling’s formula; in other words, a number vastly larger than the number of hydrogen atoms contained in the visible universe, about $10^{80}$ (according to Christian Magnan; refer to https://en.wikipedia.org/wiki/Observable_universe).

- 16 Refer to the definition of sets in N. Bourbaki, Théorie des ensembles, Hermann, 1970.

- 17 Refer to the work by P. Kourilsky, Le jeu du hasard et de la complexité, Odile Jacob, 2014.

- 18 Refer to the best-seller by N. Taleb, The Black Swan – The Impact of the Highly Improbable, Penguin, 2007. In Poincaré’s language, this is a property known as “predicative”; there is therefore nothing new about it.

- 19 Refer to H. Poincaré, Dernières pensées, Chapter IV, “La logique de l’infini”; and Science et méthode, Chapter III and following, “Les mathématiques et la logique”. For more depth, refer to the Archives Henri Poincaré, published by A. Blanchard.

- 20 All this is described in the reference literature of systems engineering: the NASA Systems Engineering Handbook and the INCOSE Systems Engineering Handbook.

- 21 Refer to https://en.wikipedia.org/wiki/Identification_friend_or_foe.

- 22 Refer to his book How Nature Works – The Science of Self-Organized Criticality, Copernicus Springer.

- 23 In his work Aux sources de la parole – Auto-organisation et évolution, Odile Jacob, 2013, P.-Y. Oudeyer uses models to explain how a clear consensus can emerge from the interactions within a human group, modeled by robots; the emergence lies in the interaction.

- 24 These are C4ISTAR systems, already mentioned.

- 25 Refer to C. Lévi-Strauss, La pensée sauvage, Plon, 1962.

- 26 Refer to the founding text by J. Piaget, Équilibration des structures cognitives, PUF, 1975. The organization of a database is entirely founded on this principle. For a more philosophical approach, refer to the famous text by J.L. Borges, La bibliothèque de Babel, vol. I, Pléiade, p. 491.

- 27 In card files, long before the invention of computers, and later in databases, indexes or “unique” identifiers distinguish individuals from one another. In cladistics, the counterpart is the phylogenetic classification of living things.

- 28 Refer to the essay “La langue analytique de John Wilkins”, in the collection Autres inquisitions, Œuvres complètes, Pléiade, vol. I, p. 747.

- 29 Refer to the well-written Wikipedia entry: https://en.wikipedia.org/wiki/Reciprocating_engine, or alternatively: http://jl.cervella.free.fr/ressources/moteur.html.

- 30 Although silicon is highly abundant in nature, the silicon in our computers does not exist in a natural state; it must be transformed by a complex physicochemical process to give it its semiconductor properties.

- 31 This is the zone-melting (zone-refining) mechanism, on which all semiconductor physics is based.

- 32 In quantum information, we hope to be able to control the states of a single atom by “cooling” it to the extreme, hence new possibilities for coding by playing with two states: (1) the ground state, of minimal energy; and (2) an excited state following an energy input; however, as stated by S. Haroche, Nobel Prize winner, who works on the subject, “this is not for tomorrow”.

- 33 On this subject, refer to the work by the Nobel Prize winner R. Laughlin, A Different Universe – Reinventing Physics from the Bottom Down, Basic Books, 2005; he is a specialist in semiconductors and a professor at Stanford.

- 34 In his famous work, The Foundations of Geometry, D. Hilbert places the axioms of geometry into a hierarchy, which leads to the various geometries we know; geometry, as we know, has historically been one of the pillars of engineering science.

- 35 In systems engineering, these are known as $N^2$ matrices; refer to the NASA Systems Engineering Handbook, section 4.3 “Logical Decomposition”, in the NASA/SP-2007-6105 version.

- 36 Refer to https://en.wikipedia.org/wiki/Forensic_data_analysis and https://en.wikipedia.org/wiki/Computer_forensics.

- 37 Refer to J.-P. Baton, G. Cohen-Tannoudji, L’horizon des particules – Complexité et élémentarité dans l’univers quantique, NRF Essais, Gallimard, 1989.