6

The Problem of Control

6.1. An open world: the transition from analog to all-digital

As briefly mentioned in the recap of historical developments, the 1990s were marked by a profound disruption in systems engineering; from then onwards, systems have been described as “complex” without reference to what “complex” truly means. At the time, the word “complex” was even more polysemous and vague than the word “system” had been in the 1940s–1950s. In fact, as soon as there is feedback, meaning a non-linearity, there is complexity, so we can say that all systems are complex; consequently, the expression “complex system” is almost a pleonasm.

The control mechanisms whose importance we have stressed, in other words, the feedback loops, were initially purely electromechanical, hydraulic and/or pneumatic, with motors and pumps. The analog devices that provide the command (we call them analog “calculators”, but this is almost an incorrect use of language!) have a latency specific to electronic circuits that is generally very small (at our scale, signals propagate almost instantaneously), which means that, by construction, the synchronization between the magnitude to be controlled and the controller is excellent.

These devices, however, have several disadvantages. They cost a lot to develop, and the transfer function they carry out is “hard-wired”, as we say in common language, in the circuit itself. In short, they are not programmable, they cannot be maintained without a total overhaul and, most significantly, they are not very reliable, so they must be made redundant, which multiplies the cost proportionately and also increases the complexity.

The appearance of the first computers in the 1960s, true calculators this time, transformed the economic situation, because it became possible to program the transfer function, in the modern sense of the term, and to modify it depending on the requirements of the context of use; the function became independent of the tangible substrate, which could therefore be reconfigured more easily. The control requirements (strict compliance with the latency of the feedback loop) and the processing capabilities of the calculators available at the time were such that the control mechanism had to be designed around these constraints. The priority requirements were performance and availability. Programming was certainly simpler than with analog “calculators”, but it remained difficult and required engineers qualified in automation and electronics, which meant that the systems in question were limited in both size and number. The “intelligence” of a system designed in this way is reduced to a strict minimum, in the sense that the action carried out is a kind of reflex, with no room for adaptation to the context of use.

For the record, these systems are known as C2, command–control. They were progressively installed and/or substituted for the analog devices already in place. They are described as “real time”, which is another incorrect use of language for “rapid”, because stricto sensu “real time” simply means compliance with a schedule, of whatever kind. One example would be the Normandy landings, D-Day on June 6, 1944, a massive operation on the human time scale with a schedule that absolutely had to be complied with, on pain of creating total chaos. It is true, however, that the schedules of C2 systems are tight: a motor that runs at 3,000 rpm turns at 50 revolutions per second (the frequency of the alternating current in our houses), which leaves 20 ms of calculation time per revolution.
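The arithmetic of the motor example can be checked in a few lines of Python. This is only a sketch; the function name `cycle_budget_ms` is ours, chosen for illustration.

```python
def cycle_budget_ms(rpm: float) -> float:
    """Milliseconds available per revolution for a shaft turning at `rpm`."""
    revolutions_per_second = rpm / 60.0
    return 1000.0 / revolutions_per_second


# 3,000 rpm is 50 revolutions per second, the same 50 Hz as household mains.
print(cycle_budget_ms(3000))  # 20.0
```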

Nevertheless, many systems in which this control requirement is not fulfilled remain in use, in particular systems that include human actors in the control loop, or systems where the quantity to be controlled is not so much the response time as a fair distribution of the available resources over more or less variable time periods. The aim is then to optimize the progress of the flows of requests entering the system, flows that are measured in transactions per second (this is part of the AC (algorithmic complexity) measure), with priorities that depend on the contracts established between the users and the system owner.
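The text does not prescribe a sharing algorithm. One common way to share a capacity fairly while honoring contractual priorities is weighted max-min fairness (progressive filling), sketched below purely as an illustration. The weights stand for hypothetical contract priorities and the capacity for transactions per second; neither comes from the text.

```python
def weighted_fair_share(capacity: float, demands: dict, weights: dict) -> dict:
    """Weighted max-min fair allocation of `capacity` among users.

    Users whose demand is below their weighted share get exactly their demand;
    the surplus is redistributed to the others in proportion to their weights.
    """
    allocation = {user: 0.0 for user in demands}
    active = {user for user, d in demands.items() if d > 0}
    remaining = capacity
    while active and remaining > 1e-12:
        total_weight = sum(weights[u] for u in active)
        shares = {u: remaining * weights[u] / total_weight for u in active}
        satisfied = {u for u in active if shares[u] >= demands[u]}
        if not satisfied:
            # Nobody can be fully served: split what is left by weight and stop.
            for u in active:
                allocation[u] = shares[u]
            break
        for u in satisfied:
            allocation[u] = demands[u]
            remaining -= demands[u]
        active -= satisfied
    return allocation


print(weighted_fair_share(100, {"a": 10, "b": 100, "c": 100}, {"a": 1, "b": 1, "c": 2}))
# {'a': 10, 'b': 30.0, 'c': 60.0}
```

User `a` asks for less than its share and is fully served; the 90 remaining units are then split 1:2 between `b` and `c` according to their weights.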

In the 1980s, progress in microelectronics was so significant, with the arrival of the first VLSI circuits (very large-scale integration, with 100,000–200,000 components per chip1), that the performance requirements of C2 systems were fulfilled ipso facto, with hardware that was both faster and more reliable. Engineering constraints, such as the detailed breakdown of the cycle times required for control and in-depth knowledge of the automaton, became less stringent; synchronization with a clock is all that is necessary. The calculation capabilities are overdimensioned, at least when everything is going well, but beware of avalanche effects.

REMARK.– With an automaton capable of carrying out 1–10 million operations per second, we can execute from 1,000 to 10,000 operations in 1 ms, which is more than enough in many cases, and we will even be able to integrate some “intelligence”. Note that in devices with high dynamics, such as anti-missile missiles flying at, let’s say, Mach 10, 1 ms corresponds to a distance of about 3 meters, which is precise enough to ensure the destruction of the target missile. For precision to the nearest centimeter, atomic clocks are required, such as those in the GPS and GALILEO systems.
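The orders of magnitude in this remark can be recomputed as follows. The speed of sound is taken as 340 m/s, which is an assumption (it varies with altitude and temperature), so Mach 10 is treated as 3,400 m/s.

```python
SPEED_OF_SOUND_M_S = 340.0  # assumption: approximate sea-level value


def operations_in_window(ops_per_second: float, window_s: float) -> float:
    """Number of operations an automaton can execute within a time window."""
    return ops_per_second * window_s


def distance_in_window(mach: float, window_s: float) -> float:
    """Distance covered by a vehicle flying at `mach` during a time window."""
    return mach * SPEED_OF_SOUND_M_S * window_s


window = 1e-3  # 1 ms
for rate in (1e6, 1e7):
    print(f"{rate:.0e} ops/s: {operations_in_window(rate, window):,.0f} operations in 1 ms")
print(f"Mach 10: {distance_in_window(10, window):.1f} m in 1 ms")
```

With these values, 1 ms corresponds to 3.4 m, consistent with the “about 3 meters” of the remark.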

Due to this, the technology of what have become known as C3I or C4I systems (command, control, communications, computers, intelligence/information) is becoming more widespread. It is becoming accessible to less well-trained programmers, available in far greater numbers, who no longer have to master in detail the system requirements that nevertheless still exist today. This is a risk that needs to be managed by weighing the advantages against the disadvantages. However, in a situation of potential or declared breakdown – non-compliance with the service contract, a system safety problem – strict control of the system becomes necessary again. In fact, the two modes exist in parallel: in a C3I/C4I system, an element of C2 will always remain.

The final outcome of this evolution that began in the 1990s is a new family of systems, named systems of systems for want of a better term (refer to the definition given by M. Maier2, on the author’s website), whose dominant characteristic is interoperability. We refer to the ISO definition, which in summary reads: “Interoperability is a characteristic of a product or system, whose interfaces are completely understood, to work with other products or systems, present or future, in either implementation or access, without any restrictions”, insofar as human language can be that precise. In terms of the classic acronyms, these are C4ISR or C4ISTAR systems (Command, Control, Communications, Computers, Intelligence, Surveillance, Target Acquisition and Reconnaissance). We observe in passing that interoperability between systems is a means of growth for organisms/organizations, which, through integration, enter into a symbiotic relationship to increase their operational scope and their survival ability (resilience), up to a certain size threshold; if integration does not produce this gain, the system of systems has no competitive advantage.

There are numerous examples of systems of this kind: air traffic control in airport zones, with thousands of daily flights and millions of users; rail services across the English Channel and the thousands of ships and boats that pass through it; urban transport networks and cities in general; and, in the field of state security, crisis management and defense/security systems, etc.

In this chapter, we will describe a few fundamental systemic characteristics of these types of systems, because they are obviously systems in their own right, which also fulfill the “definitions” recalled in Chapters 2 and 8; only the scale has changed. It is not a question of going into technical details here, nor even into the more or less generic architectures of these systems, except when the “detail” is significant from the systemic point of view. On all these systems there is abundant, good quality research literature (be wary all the same, because a selection needs to be made), some of which is even excellent, like the versions/editions of the book by E. Rechtin, Systems Architecting (the 2nd edition in collaboration with M. Maier), concerning C2, C3, C4ISR, etc. And, of course, there are also excellent references for information systems; see, for example, the various books by Y. Caseau, all published by Dunod. Regarding software alone, but in a system context, refer to the book Architecture logicielle3.

The fundamental question raised by this development is whether the systems constructed in this way remain controllable, in the cybernetic sense (see Chapters 1 and 2). We can, and must, even go a step further in our questioning and ask whether we know how to relax the heavy constraint of controllability, provided that we know how to guarantee an acceptable service contract for the user, taking into account a context of use which can itself vary. This opens up the “system safety” dimension, in the widest sense of the term, which leads ipso facto to the questions: (1) of acceptable risk; (2) of the ethical problems that become insurmountable given the more or less foreseeable, or even damaging, behaviors of human actors, users and/or engineers. These questions will not be considered in this book, but the problems still need to be laid out correctly for there to be any hope of finding at least one acceptable answer.

We give just one example to provide a basis for a detailed explanation.

The user of a mobile telephone network expects, in “normal” times, an SMS sent at instant T to reach its recipient within a few minutes, at T + Δt. If the message is sent on December 31 at midnight, the user will not be shocked that, in these exceptional circumstances, the same SMS takes 1 or 2 hours. In this situation, the user’s only requirement will be a guarantee that the message will be correctly conveyed to its destination and, if this is not possible, to be warned in advance so that a new attempt can be made.

However, there is a limit to this type of logic. Information given here and there advises users to turn on their household appliances at night, when electricity is cheaper. Taken to the limit, this amounts to making each of us a controller of our own behavior and of hazards that we do not control, which contradicts the very idea of control, because it will be very difficult to set out the rules of a game that 30 million users are all playing at once. Even worse, at the end of the day the system could end up imposing its decisions on users. If the rules are not complied with, what will the users and/or the central controller do? We can indeed sense that the situation can rapidly degrade towards the unacceptable from an ethical point of view. It would end up resembling the highway code, with insurance, fines, privileges, legislation and standards, a court… in short, cheating! In the event of a disaster, where does responsibility lie? This is the same question as for self-driving cars. In short, hell just around the corner!

If we relax the rules a little, we can distinguish two levels of control:

- 1) Hard/strong control, which requires actions of great precision, and therefore a quantitative measure of the parameters to be controlled (including the behaviors of actors, users and/or equipment, which demands a higher level of skill) and of the evolving dynamics of these parameters. The system is deterministic, and it is always possible to go back in time for post mortem analyses that allow the system to be improved if a malfunction is found. The system is constructed in a modular fashion, using autonomous blocks whose outline block diagram has already been provided. It is also hierarchized into layers, in the strict sense of the term.

- 2) Low/weak control, more qualitative whenever possible, with variables grouped together and with varying tolerance margins for the controlled parameters. The system is no longer necessarily hierarchical. The requirement of reversibility and determinism at all scales is abandoned; in the event of a breakdown, it is now sufficient to restore a coherent state of the system, provided that the users who will be subject to an interruption of service can be warned. An approach of this kind amounts, on the one hand, to overdimensioning the system’s capabilities so as to keep it away from risky situations/zones, without really knowing their geometry, insofar as this is acceptable from the point of view of PESTEL; and, on the other hand, to continuously monitoring the saturation level and the operational state of the critical resources that determine and condition the capacity. If the saturation threshold is reached, the system must be reconfigured, and to do so it must have a central core of functions, still to be defined, from which it will be possible to reconstruct the system. These are known as bootstrap mechanisms, many of which exist in operating systems and communications systems (refer to network protocol stacks such as TCP/IP; also refer to the IT stack, Chapter 3 and Figure 3.3).
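Weak control, as described in item 2, can be sketched as a monitoring cycle over the critical resources. Everything here is hypothetical and for illustration only: the `Resource` type, the 80% saturation threshold and the composition of the core are assumptions, and a real system would have to define its own core functions and restart procedures.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    capacity: float  # e.g. requests per second the resource can absorb
    load: float      # current measured load, in the same unit

    def saturation(self) -> float:
        return self.load / self.capacity


def saturated(resources: list, threshold: float) -> list:
    """Names of the critical resources at or above the saturation threshold."""
    return [r.name for r in resources if r.saturation() >= threshold]


def restart_plan(resources: list, core: set) -> list:
    """Bootstrap order: the central core first, then the rest of the system."""
    names = [r.name for r in resources]
    return [n for n in names if n in core] + [n for n in names if n not in core]


def weak_control_step(resources: list, core: set, threshold: float = 0.8):
    """One monitoring cycle: do nothing, or warn users and plan a reconfiguration."""
    hot = saturated(resources, threshold)
    if not hot:
        return "ok", [], []
    warnings = [f"degraded service expected on {name}" for name in hot]
    return "reconfigure", warnings, restart_plan(resources, core)


system = [Resource("storage", 100, 50), Resource("network", 100, 95), Resource("auth", 50, 10)]
print(weak_control_step(system, core={"auth", "network"}))
# ('reconfigure', ['degraded service expected on network'], ['network', 'auth', 'storage'])
```

The aim is a coherent restart from the core, not a replay of the exact state before saturation, which matches the abandonment of reversibility described above.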

We can of course imagine situations that are even less controlled4, but in that case we would be leaving the engineering domain to enter the realm of games of chance. Experience teaches us that situations considered improbable often turn out to be certain, as was demonstrated by the tragedy of the Challenger5 space shuttle, or by the 2008 financial and economic crisis (which we are still stuck in), arising from a one-sided, systematic deregulation with no safeguard other than the supposed “wisdom” of the market “laws” and the honesty of the users, all presumed to be saints.

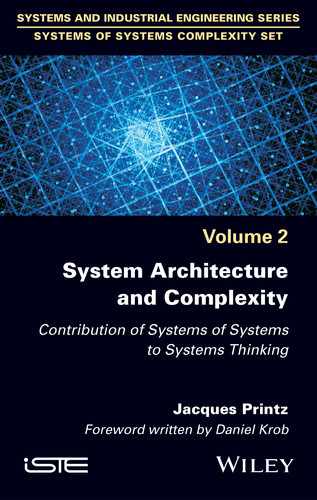

In principle, the problem can be presented as follows (Figure 6.1):

- a) The inside/outside frontier is removed. The criterion of belonging depends on the levels of interoperability and coupling that are sought, depending on user requirements. The set that is then created from the union of several systems defines a certain processing capacity – this is the AC measure of complexity – in such a way as to satisfy the service contracts of possibly large numbers of users (30 million in the case of the French electricity system; 15–20 million for a mobile telephone operator, etc.).

- b) Flows are eminently variable and are defined by statistical laws that are more or less well known, or by the situation at instant T (unforeseen events, crises, etc.). For example, EDF has consumption statistics per day, week, month and year, which allow demand to be anticipated and the electrical system to be configured accordingly, and which allow consumption to be correlated with certain events in the environment (the weather, or the football match that must not be missed and that will cause an increase in energy consumption).

REMARK.– If we return to the case of sending an SMS on December 31 at midnight and reason like an engineer, we see that with 10 million users who each want to send their good wishes to 10 friends and receive an answer, there will be something of the order of 200 million exchanges. Simply in terms of memory capacity, counting 1,000 bytes per exchange and without considering the back-ups required by the service contract, the system must mobilize 200 gigabytes of memory in a few minutes just to store the load. Since an exchange can often generate a dozen internal requests (delivery receipts, various notifications, back-ups, etc.), the system will have to process approximately 2 billion requests without incident, whereas the peak flow is at most 50,000 requests per second. Even in the best case, of the order of 40,000 seconds, a little over 11 hours, are therefore required for the load to finish flowing. We understand why data center operators and directors have an obsessive fear of breakdowns.

- c) The access points, that is, the system inputs and outputs, are numerous, but not ordinary. The system interacts with the environment thanks to a set of sensors and effectors that are all transducers, in other words, energy converters. This equipment has a hybrid status because it is both inside and outside: inside, because it provides the system with the information it needs on the various flows that pass through it; outside, because it can be dispersed over the entire spatio-temporal region where the system is present and, due to this, can be exposed to the hazards of this environment. With SCADA, we will see a concrete example of this type of equipment, which plays the role, all other things being equal, of “sense organs”. This is particularly noticeable in the evolution of the latest generation of radars and in robotics.

- d) Concerning the technical part of the system, system safety engineering needs to be taken into account in order to maintain the integrity of the constituent equipment and its interactions, consistently with the overall service contract built from the service contracts negotiated with users (including rates), and in order to find the best compromise, which is never simple when there are millions of users and thousands of heavy pieces of equipment like those in large data centers.

Figure 6.1. System of capability logic. SLA stands for service-level agreement
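The back-of-the-envelope calculation in the REMARK on New Year SMS traffic can be reproduced directly. The inputs are those of the text, with “a dozen” internal requests rounded to 10 as the text does; the variable names are ours.

```python
users = 10_000_000
friends = 10
exchanges = users * friends * 2          # each greeting plus its answer
bytes_per_exchange = 1_000
internal_requests_per_exchange = 10      # "a dozen", rounded as in the text
peak_requests_per_second = 50_000

memory_gb = exchanges * bytes_per_exchange / 1e9
requests = exchanges * internal_requests_per_exchange
drain_seconds = requests / peak_requests_per_second

print(f"{exchanges:,} exchanges")                                 # 200,000,000 exchanges
print(f"{memory_gb:,.0f} GB to store the load")                   # 200 GB to store the load
print(f"{requests:,} internal requests")                          # 2,000,000,000 internal requests
print(f"{drain_seconds:,.0f} s, i.e. {drain_seconds / 3600:.1f} h")  # 40,000 s, i.e. 11.1 h
```

The drain time, 40,000 seconds, is a little over 11 hours, and that is with the peak flow sustained throughout.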

These systems never operate on an all-or-nothing basis; it is therefore necessary to manage degraded modes, here again optimizing the service contract and survivability (system safety). Lastly, certain legislative stipulations must be taken into account to comply with the law, corresponding to the L in PESTEL, which is not necessarily in phase with the specific engineering constraints.

We can take the example of the public switched telephone network (PSTN), which is a good illustration of this problem, quite different from that of a system like the energy transport network, where we are truly in a pure control mode. In the PSTN, every user can potentially be connected to any other user. Since there are 30–40 million users with a landline (many more if we include mobile telephones), a “colossal” resource would in theory be required, of the order of 30 × 30 × 10¹² physical lines. Given that not everyone telephones everyone else all the time, we can establish a hierarchy of connections to the automatic switches and telephone exchanges, massively digitalized since the 1970s, and ensure that the stock of resources is compatible with an economic equilibrium between the cost of communication and the cost of infrastructure. Let us recall that until the 1920s, all this was done manually, by human operators6. The role of the PSTN system is to manage this resource as well as possible, ensuring that a line that, at instant T, connects two users is never cut. If there are not enough available resources, the user requesting a service is placed in a queue, or asked to call back later, possibly with automatic recall functions.
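Two standard telephony calculations, not taken from the text, make this resource problem concrete. A full mesh of two-way lines between N subscribers needs N(N − 1)/2 lines, of the same order of magnitude as the N² quoted above. For the shared stock of circuits, the classic dimensioning tool for the “call back later” case is the Erlang B formula, which gives the probability that a call finds all circuits busy (a system that queues callers instead is usually modeled with Erlang C). The sketch uses the standard Erlang B recursion; the traffic figures are illustrative assumptions.

```python
def full_mesh_lines(n: int) -> int:
    """Two-way lines needed for a direct line between every pair of subscribers."""
    return n * (n - 1) // 2


def erlang_b(traffic: float, circuits: int) -> float:
    """Blocking probability for `circuits` lines offered `traffic` erlangs.

    Recursion: B(E, 0) = 1 and B(E, m) = E*B(E, m-1) / (m + E*B(E, m-1)).
    """
    blocking = 1.0
    for m in range(1, circuits + 1):
        blocking = traffic * blocking / (m + traffic * blocking)
    return blocking


def circuits_needed(traffic: float, max_blocking: float) -> int:
    """Smallest number of circuits whose blocking probability is within the target."""
    circuits = 0
    while erlang_b(traffic, circuits) > max_blocking:
        circuits += 1
    return circuits


print(f"{full_mesh_lines(30_000_000):.2e} lines for a full mesh of 30 million subscribers")
print(circuits_needed(traffic=100.0, max_blocking=0.01), "circuits for 100 erlangs at 1% blocking")
```

Because the blocking probability falls quickly as circuits are added, a shared stock sized on actual traffic is vastly smaller than one line per pair of subscribers.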

Systems of this kind follow a construction/operational logic that is sometimes referred to as “capability”, which means that the system has, at instant T, a certain capacity for action (refer to the notion of an active unit, fundamental in this context) that must be managed as well as possible, taking into account the mission attributed to the system. To do this, the system must first be created in the form of an initial germ (an embryo), then grow until it reaches the capacity required to carry out its mission. Lastly, when everything is finished, it must withdraw in the right order. In the world of IT systems, this is what is known as “continuous” integration, but it is an entirely general notion that conditions the controlled growth of the system and the means of this growth.
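In IT terms, growing from a germ and withdrawing “in the right order” can be read as starting functions in dependency order and stopping them in reverse order. The sketch below uses the standard library’s `graphlib.TopologicalSorter` (Python 3.9+); the dependency graph is entirely hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical system: each function maps to the functions it depends on.
DEPENDENCIES = {
    "core": set(),
    "network": {"core"},
    "storage": {"core"},
    "billing": {"network", "storage"},
    "user_services": {"network", "storage", "billing"},
}


def growth_order(deps: dict) -> list:
    """Order in which the system grows from its germ: dependencies come first."""
    return list(TopologicalSorter(deps).static_order())


def withdrawal_order(deps: dict) -> list:
    """Retreat in the right order: dependents are stopped before their dependencies."""
    return list(reversed(growth_order(deps)))


print(growth_order(DEPENDENCIES))
print(withdrawal_order(DEPENDENCIES))
```

`TopologicalSorter` also raises `graphlib.CycleError` if the dependencies are circular, in which case no coherent growth order exists.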

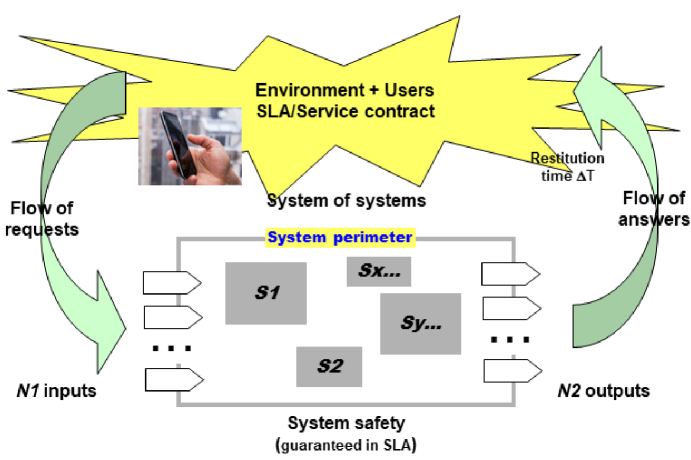

To correctly understand the nature of this logic, let us start with the notion of a technical object/system as presented in Chapters 2–4. In this approach (see Figures 2.4, 4.2 and 4.3), we are dealing with three categories of entities, Simondon’s triplet {U, E, S}, or three evolving trajectories: the system and its equipment (TS), and the two communities, users and engineering (TU and TE), each with its specific complexity, as we have seen. Each entity has its own growth dynamic, its own constraints and its interactions with the two others, that is, seven fundamental interactions in total (see Figure 6.2). At any given moment, the coherence of these various trajectories and of their interactions is only more or less guaranteed, which poses the problem of the coherent evolution of the whole, and therefore of its control. Note that what is denoted as “present” in the figure is not an “instant” in the mechanical or Newtonian sense of the term, but a quantized spatio-temporal “zone” in which an action/interaction is possible/feasible, taking into account the timing characteristics of presences, in the active unit sense. This is a quantum, or an elementary semantic transformation “step”, that can require several atomic transformations in the transactional sense.

Figure 6.2. Trajectories of {U,S,E} capability logic

For example, it is pointless to increase the number of users and/or their level of interaction with the system if the system does not have the resources to carry the load. If these two constraints are complied with, the support and maintenance functions will then need to be correctly provided from an engineering point of view, etc. We see that the “truth” of one or the other depends on the overall context (holistic, as some say) and on the interactions that organize the whole in the long term, so as to optimize the flow of flows according to the available capacities, which can vary over time. In the event of saturation, it is best to limit the input flow, creating zones or waiting areas, and to minimize interactions. In the system context, we therefore encounter a notion analogous to the principle of least action, stated in the 18th century by Maupertuis and developed by Lagrange, then by Hamilton in the 19th century, and also analogous to the more recent MEP (maximum entropy production) principle in energetics. In systemics, it is always necessary to “conserve” the interaction capacity of the system; a system that no longer interacts is a “dead” system.
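Limiting the input flow with a waiting area can be sketched with a token bucket, a standard rate-limiting technique substituted here for illustration; the text does not name a mechanism. The rate, burst size and waiting capacity are assumptions, and requests that find the waiting area full are turned away with a “call back later” answer.

```python
from collections import deque


class AdmissionControl:
    """Token bucket in front of the system, with a bounded waiting area."""

    def __init__(self, rate: float, burst: int, waiting_capacity: int):
        self.rate = rate                  # requests admitted per second in steady state
        self.burst = burst                # maximum number of tokens the bucket holds
        self.tokens = float(burst)
        self.waiting = deque()
        self.waiting_capacity = waiting_capacity

    def submit(self, request) -> str:
        """Admit immediately if capacity allows, otherwise queue or reject."""
        if not self.waiting and self.tokens >= 1:
            self.tokens -= 1
            return "admitted"
        if len(self.waiting) < self.waiting_capacity:
            self.waiting.append(request)
            return "waiting"
        return "rejected: call back later"

    def tick(self, dt: float) -> list:
        """Refill tokens for `dt` seconds and admit waiting requests in arrival order."""
        self.tokens = min(self.burst, self.tokens + self.rate * dt)
        admitted = []
        while self.waiting and self.tokens >= 1:
            self.tokens -= 1
            admitted.append(self.waiting.popleft())
        return admitted


gate = AdmissionControl(rate=2.0, burst=2, waiting_capacity=1)
print([gate.submit(i) for i in range(4)])
print(gate.tick(0.5))
```

The first two requests use the burst, the third waits, the fourth is rejected; half a second later one token has been refilled and the waiting request is admitted.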

6.2. The world of real time systems

We will not explain the history and/or the theory of real time C2 systems (command and control); there are already excellent books and reports for that7. However, from our systemic viewpoint, one fundamental aspect of these systems allows the problem of the control loop to be examined and one of its essential features to be understood. To act, or react, it is first necessary to be informed: (a) about the evolution of the environment; (b) about the internal state of the system, because, as we have said, everything changes. In the very first systems, as N. Wiener, with the help of C. Shannon and their colleagues at MIT, made perfectly obvious, the communication dimension is essential. On the one hand, information must be acquired where it is and transmitted to the command “center” so that orders can be established; on the other hand, the orders must be transmitted to the relevant actors. Communication is therefore needed at all levels.

The element of the system in charge of this function, at least in so-called “real time” systems, has long been known, and is still known, as SCADA (supervisory control and data acquisition). But the underlying concept of data/information acquisition is entirely general: any system that interacts with its environment necessarily has a function of this kind. Whatever the nature of the supervised processes, relevant, high-quality information must be collected at a pace compatible with the dynamics of these processes.

Rapid acquisition in “real time” concerns highly dynamic processes, such as those of an engine or a chemical reactor; acquisition in “reflection time” concerns organizational and/or human processes, which take place at the pace of human actions, with a variety of MMIs (man–machine interfaces) and almost no technical limitations. See, for example, BYOD (bring your own device) approaches, a growing phenomenon in the world of information systems, and not only in civil ISs.

In all configurations, the task is to build information, meaning something that makes sense for the decision/command center, from signals observed either by human means or by measurement instruments, and generally by a combination of the two.

This acquisition obviously has a close relationship, on the one hand, with the end purpose of the system, each being the reciprocal of the other, even if the acquisition field is sometimes larger than strictly necessary, and, on the other hand, with the nature of the internal parameters of the system, which are conditioned by external data. In informational “noise”, which will in fact probably have a meaning, it is necessary to know what to look for in order to have any hope of taking action.

In the fire control systems studied by N. Wiener, the role of radars is not simply to collect the echoes of electromagnetic waves from airplanes; it is, in particular, to construct the most probable trajectories of the target airplanes. In a way, the radar “knows” that it is looking for airplanes and not for a bird in flight, which would have an entirely different dynamic.
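Wiener’s own solution was a statistical prediction filter; as a much simpler stand-in, the sketch below uses an alpha-beta tracker, a classic radar technique that estimates position and velocity from range measurements. The gate expresses, crudely, that the radar “knows” what dynamics to expect: a measurement too far from the predicted trajectory is ignored. Gains, gate width and the target data are illustrative assumptions.

```python
def alpha_beta_track(measurements, dt, alpha=0.5, beta=0.3, gate=None):
    """Estimate (position, velocity) at each step from 1-D range measurements.

    Measurements whose residual exceeds `gate` are treated as implausible for
    the expected target dynamics and ignored: the tracker coasts on its prediction.
    """
    x, v = measurements[0], 0.0
    estimates = [(x, v)]
    for z in measurements[1:]:
        x_pred = x + v * dt                   # constant-velocity prediction
        residual = z - x_pred
        if gate is not None and abs(residual) > gate:
            x = x_pred                        # reject the echo, keep the prediction
        else:
            x = x_pred + alpha * residual     # correct position
            v = v + (beta / dt) * residual    # correct velocity
        estimates.append((x, v))
    return estimates


true_positions = [100.0 + 10.0 * k for k in range(60)]  # target moving at 10 m/s
measurements = list(true_positions)
measurements[40] += 500.0                                # one spurious echo
track = alpha_beta_track(measurements, dt=1.0, gate=50.0)
print(track[40], track[-1])
```

Without the gate, the spurious echo at step 40 would pull the position estimate hundreds of meters off the trajectory; with it, the echo is ignored and the track stays on the target.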

In an “intelligent” building, a data acquisition system monitors a number of parameters that characterize the real or supposed “intelligence” of the building, for what is known as the building management system (BMS). This is how smart and/or positive-energy buildings are designed, in compliance with the latest environmental standards (HQE standards).

In an online sales system, typical client profiles can be determined and behaviors targeted thanks to the automated tracking of the purchases made by clients. Systems can thus be proactive with respect to clients and suggest this or that purchase, which can quickly become very unpleasant.

All these examples show that, in terms of form, we are dealing with the same problem: giving form to the raw data collected by various sensors and correlating it with respect to the end purpose of the system, an end purpose that predetermines the form to be identified, given the mission and the service contract.

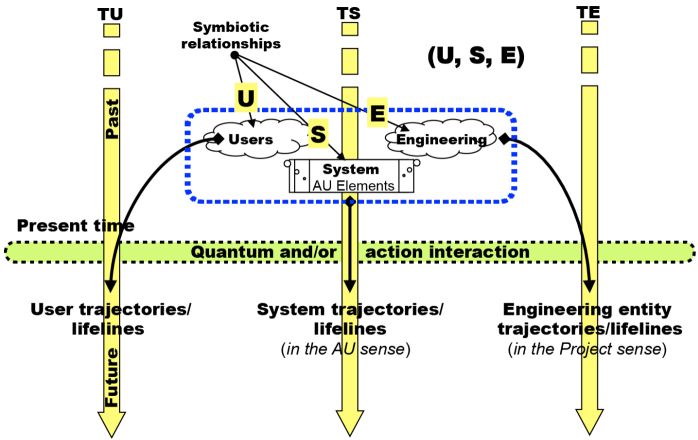

The block diagram of an acquisition system is given in Figure 6.3. The data acquired by the sensors can be processed in “push” mode, in which case they are immediately transmitted to the pilot, which does the same towards the acquisition and/or concentration stage (data “fusion”). They can also be processed in “pull” mode, which means that the data remain with the sensor/pilot until an explicit read action is carried out.

Figure 6.3. Block diagram of data acquisition. For a color version of this figure, see www.iste.co.uk/printz/system.zip

In the push configuration, the communication network needs enough capacity to carry all the data, even data that may be incorrect. In the pull configuration, a minimum of memory is required in the sensor/pilot to avoid losing information.
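The difference between the two modes can be sketched with two toy sensor classes; the class names and the fixed-size memory are our assumptions. In push mode every sample goes onto the network; in pull mode samples wait in a bounded local memory, and if the pilot does not read often enough, the oldest samples are overwritten and lost.

```python
from collections import deque


class PushSensor:
    """Push mode: each sample is sent at once; the network must carry everything."""

    def __init__(self, network: list):
        self.network = network

    def acquire(self, value: float) -> None:
        self.network.append(value)  # even a faulty sample consumes network capacity


class PullSensor:
    """Pull mode: samples wait in local memory until an explicit read."""

    def __init__(self, memory_slots: int):
        self.memory = deque(maxlen=memory_slots)
        self.lost = 0

    def acquire(self, value: float) -> None:
        if len(self.memory) == self.memory.maxlen:
            self.lost += 1          # the oldest sample is about to be overwritten
        self.memory.append(value)

    def read(self) -> list:
        data = list(self.memory)
        self.memory.clear()
        return data


network = []
pusher, puller = PushSensor(network), PullSensor(memory_slots=3)
for sample in range(5):
    pusher.acquire(sample)
    puller.acquire(sample)
print(network, puller.read(), puller.lost)  # [0, 1, 2, 3, 4] [2, 3, 4] 2
```

The trade-off in the text appears directly: push costs network capacity, while pull costs local memory and loses data when reads are too infrequent.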

The essential parameter of a data acquisition element/system is the data path, generally a communication network, which has its own logic given the nature of the data transmitted and of the spatio-temporal environment in which the material elements are deployed. A clock is generally essential, like the high precision atomic clocks of the GPS system, to synchronize the signals so that they have a meaning, for example to calculate an actual position.
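To see why synchronization matters, the sketch below computes a 2-D position from the travel times of signals emitted by three beacons at known positions, assuming perfectly synchronized clocks. It is a simplification: actual GPS receivers work in three dimensions and, having no atomic clock of their own, solve for their clock bias as a fourth unknown using at least four satellites. The beacon layout and target are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def trilaterate(beacons, times_of_flight):
    """2-D position from three beacons and signal travel times (synchronized clocks)."""
    (x1, y1), (x2, y2), (x3, y3) = beacons
    r1, r2, r3 = (C * t for t in times_of_flight)
    # Subtracting the circle equations pairwise leaves a linear system a*x + b*y = c.
    a1, b1 = 2 * (x2 - x1), 2 * (y2 - y1)
    c1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    a2, b2 = 2 * (x3 - x1), 2 * (y3 - y1)
    c2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a1 * b2 - a2 * b1  # zero if the three beacons are aligned
    return (c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det


beacons = [(0.0, 0.0), (1000.0, 0.0), (0.0, 1000.0)]
target = (300.0, 400.0)
times = [math.dist(b, target) / C for b in beacons]

print(trilaterate(beacons, times))                      # close to (300, 400)
biased = [t + 10e-9 for t in times]                      # receiver clock 10 ns off
print(math.dist(trilaterate(beacons, biased), target))  # error of about 1 m
```

In this layout, a clock error of only 10 ns already shifts the computed position by about a meter.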

In current systems, there can be thousands of sensors that may need to be remotely operated, for example in remote surveillance networks on public roads or in the urban transport of large agglomerations. In systems that operate 24/7, maintenance operations must be carried out without interrupting the service, which requires suitable architectures that we will not detail here. These architectures are nevertheless essential for building the autonomous or semi-autonomous systems that now accompany users in their daily lives.

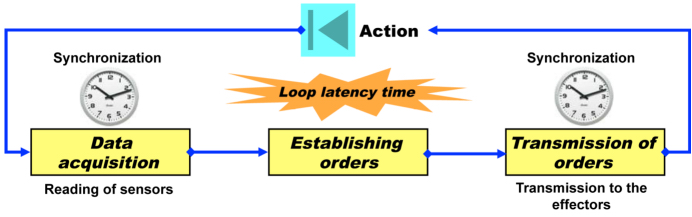

C2 systems are purely reflex systems, with no memory of past actions except, in certain cases, of the last action carried out, and therefore without “intelligence”. They follow as closely as possible the logic of the fundamental feedback loop described in Chapter 2, as shown in Figure 6.4.

Figure 6.4. Block diagram of a C2 system

For systems of this kind, the loop latency must be compatible with the dynamics of the processes being acted upon. If the lag between the decided action and the evolution of the processes is too large, the corrections made to compensate for the drift will be too energetic, which will cause vibrations and/or oscillations.

This was a serious concern for N. Wiener, who related it to diseases of the nervous system such as Parkinson’s disease. But his words must not be inverted: the symptom is intrinsic to the control mechanism, independently of any reference to biological systems, some of whose complexities are still a long way out of our reach8.
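The effect of excessive loop latency can be reproduced with a toy discrete-time model, which is ours and not the author’s: at each step a proportional correction with a gain of 0.5 is applied to a measurement that is `delay` steps old. Without delay the error shrinks smoothly; with a delay of three steps, the same gain overshoots and the oscillation grows.

```python
def simulate(gain: float, delay: int, steps: int = 20, x0: float = 1.0) -> list:
    """Error trajectory when each correction is based on a measurement `delay` steps old.

    The controller applies u = -gain * measured_error at every step; the error is
    assumed to have been x0 before the simulation starts.
    """
    history = [x0] * (delay + 1)
    trajectory = [x0]
    x = x0
    for _ in range(steps):
        measured = history[-(delay + 1)]  # stale reading from the sensor
        x = x - gain * measured           # correction applied to the present state
        history.append(x)
        trajectory.append(x)
    return trajectory


print(simulate(0.5, delay=0)[:4])  # [1.0, 0.5, 0.25, 0.125]
print(simulate(0.5, delay=3)[:6])  # [1.0, 0.5, 0.0, -0.5, -1.0, -1.25]
```

With the delayed loop, the error crosses zero and keeps growing in amplitude: the corrections are still being applied after they have stopped being appropriate.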

In a “real time” system, synchronization is generally done using a clock, but in a large number of situations it can be done on a system status. For example, in air traffic control, an aircraft can only take off or land if the runway is free. In systems that manage the sharing of resources between users, synchronization is based on the sharing rules, hence the importance of signaling equipment, such as the traffic lights that we see almost everywhere: in towns, in ports, in airports, etc.
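Synchronization on a system status rather than on a clock can be sketched with a non-blocking lock: clearance is granted only if the runway is free at the moment of the request. The `Runway` class and the flight identifiers are hypothetical; `threading.Lock.acquire(blocking=False)` is the standard library call.

```python
import threading


class Runway:
    """A shared resource whose status, not a clock, synchronizes the actors."""

    def __init__(self):
        self._free = threading.Lock()
        self.occupant = None

    def request(self, flight: str) -> bool:
        """Grant clearance only if the runway is free right now (never waits)."""
        if self._free.acquire(blocking=False):
            self.occupant = flight
            return True
        return False

    def release(self, flight: str) -> None:
        """Free the runway; only the flight holding it may do so."""
        if self.occupant != flight:
            raise RuntimeError(f"{flight} does not hold the runway")
        self.occupant = None
        self._free.release()


runway = Runway()
print(runway.request("AF123"), runway.request("BA456"))  # True False
runway.release("AF123")
print(runway.request("BA456"))                           # True
```

A refused flight is left to retry, much like an aircraft holding until the runway is reported free.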

6.3. Enterprise architectures: the digital firm

This section further develops the subject that was briefly touched on in Part 1 of this book.

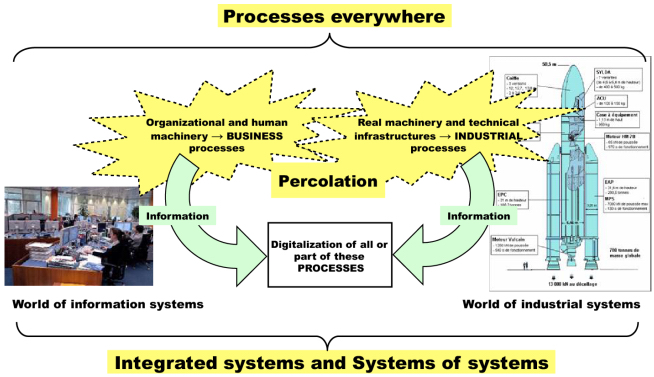

Since the 1990s, we have, in fact, been observing a fusion/integration of fields that were until then considered to be different (see Figure 6.5).

Figure 6.5. Digital information and company

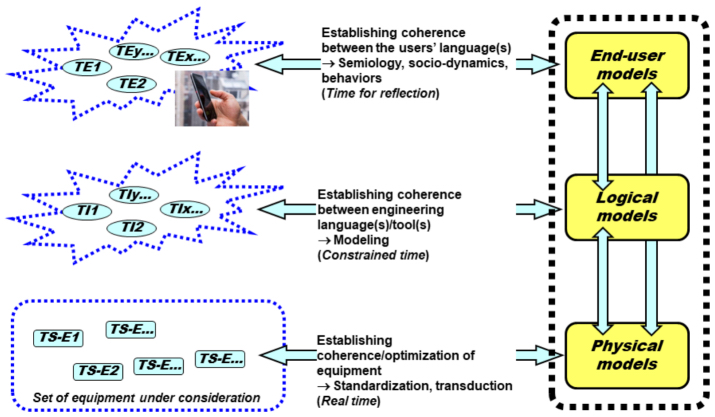

This fusion is accompanied by an integration of various time-related logics which must be harmonized to allow the invariants of the systems integrated in this way to be maintained (see Figure 6.6).

Figure 6.6. Time-related logics and the digital company. For a color version of this figure, see www.iste.co.uk/printz/system.zip

We thus see three logics come to light: (1) a logic of human time, given our ergonomic and physiological constraints and sociodynamics, a “human” time; (2) a logic relating to the equipment integrated and supervised by the overall system to maintain its integrity, a “real” time; (3) an intermediate logic, denoted “constraint time” in Figure 6.6, which incorporates the two previous logics so as to ensure the overall coherence of the processes implemented in the actions carried out by the system in its environment.

There is abundant literature on all these subjects and for information systems, in particular, we recommend the works by Y. Caseau, published by Dunod.

What we are interested in here are the lessons to be learned in systemic terms from all these evolutions, for example, the temporality, the various representations of information, the general organization of exchanges (interoperability), controllability, and errors.

6.4. Systems of systems

As we have seen, the 1990s were an era of disruption in artificial systems engineering. From the point of view of systemics, these new developments were an opportunity to better understand the mechanics of systems, in terms of their architectures as much as their dynamic behavior and evolution. Deep structures, typically “language-focused”, analogous to grammars, and of primary importance, would also progressively emerge. In the field of defense and security systems, such as those described in the various white papers that define general requirements and missions, the aim was to integrate the systems used by the various professions so as to improve overall efficiency and make the resulting whole more coherent, without, however, compromising the necessary autonomy of action. It was in this context that notions now regarded as foundational surfaced: the notion of systems of systems from the French MINDEF, and that of “architecture framework”, such as the US-DoD DoDAF, the UK-MoD MoDAF and the NAF for NATO, all of which led to a civilian version promoted by The Open Group: TOGAF (The Open Group Architecture Framework). Beyond the opaque acronyms, the objective of all these efforts is to master C4ISTAR systems, more specifically the STAR part: (Intelligence,) Surveillance, Target Acquisition and Reconnaissance.

The system monitors its spatio-temporal environment, and therefore itself, to ensure that it is not threatened in the broad sense of the term, including from a security point of view. This is a BMS pushed to the limits of what technology has to offer.

This autonomic management implies acquiring all types of information likely to be of interest to the system (via SCADAs, as mentioned above) in order to establish correlations between events: hence the intelligence-gathering dimension, which targets actions in progress, and the recognition dimension, which amounts to giving a form, a meaning (via correlations), to what is monitored. Since the system’s resources are by definition limited, it must conserve its capacities, target the actions to be implemented, and evaluate them before deciding to act. Targeting is therefore the implementation of what is known as capability logic.
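The two steps described above, recognition by correlating events and targeting under limited capacity, can be sketched as follows. This is a minimal illustration under stated assumptions, not the method of any particular C4ISTAR system: events are correlated simply by zone and time proximity (the `window` parameter is hypothetical), and targeting uses a greedy value-per-cost heuristic, which is a swapped-in technique that does not guarantee an optimal allocation. The types `Event` and `Action`, the zone names and the cost/value figures are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class Event:
    t: float    # timestamp in seconds
    zone: str   # where the reporting sensor (e.g. a SCADA point) is located
    kind: str   # what was observed


@dataclass(frozen=True)
class Action:
    name: str
    cost: int      # capacity consumed, in hypothetical units
    value: float   # estimated benefit, produced by the evaluation step


def correlate(events: Sequence[Event], window: float) -> List[List[Event]]:
    """Recognition sketch: within each zone, chain events that follow the
    previous one by at most `window` seconds into a single 'situation'."""
    by_zone: Dict[str, List[Event]] = defaultdict(list)
    for e in sorted(events, key=lambda e: e.t):
        by_zone[e.zone].append(e)
    situations: List[List[Event]] = []
    for evs in by_zone.values():
        current = [evs[0]]
        for e in evs[1:]:
            if e.t - current[-1].t <= window:
                current.append(e)
            else:
                situations.append(current)
                current = [e]
        situations.append(current)
    return situations


def target(actions: Sequence[Action], capacity: int) -> Tuple[List[Action], int]:
    """Targeting sketch: evaluate candidate actions and commit only those that
    fit in the remaining capacity, best value per unit of cost first."""
    if any(a.cost <= 0 for a in actions):
        raise ValueError("every action must consume a positive capacity")
    chosen: List[Action] = []
    remaining = capacity
    for a in sorted(actions, key=lambda a: a.value / a.cost, reverse=True):
        if a.cost <= remaining:
            chosen.append(a)
            remaining -= a.cost
    return chosen, remaining
```

For example, with a capacity of 5 and candidate actions costing 3, 1 and 4, the sketch commits the first two and keeps one unit in reserve rather than exhausting its capacity on a lower-value action.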

Using the block diagram (see Figure 6.1), we now envisage systems whose objective is to optimize and control, as well as possible, what happens in a given spatio-temporal zone, so as to avoid certain emerging phenomena that are undesirable for the users of the zone. Here, we are very close to the geometrical definition of systems given by R. Thom and to that of D. Krob (see Chapter 3 and the author’s website).

The problem to be solved is not so much control, which nevertheless remains a fundamental characteristic, as ensuring that what happens in the zone in question, whose perimeter may vary, maintains a certain number of properties that constitute invariants of the system (in systems engineering, we speak of essential requirements), in other words, what the system must preserve in all circumstances. This implies managing degraded modes, with priority rules that can themselves vary according to the situations encountered, which are largely unpredictable.

Take, for example, an airport zone such as Roissy or Heathrow: the requirement is that, at all times:

- airplanes can take off and land safely;

- passengers can access the sectors where they board and/or disembark, drop off/pick up their bags, etc.;

- the zone has sufficient resources (energy, trained personnel, equipment, etc.) to function smoothly and to ensure the security of the people and goods located in it, etc.
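The combination of invariants and situation-dependent priority rules can be sketched as predicates over a system state. In this minimal sketch, each requirement listed above becomes a hypothetical invariant with invented thresholds; when some invariants are violated, the degraded-mode plan orders them according to a priority table that depends on the situation. The state variables, thresholds and the two tables `NORMAL` and `EVACUATION` are assumptions for illustration only.

```python
from typing import Callable, Dict, List

State = Dict[str, float]

# Hypothetical invariants and thresholds, one per requirement listed above.
INVARIANTS: Dict[str, Callable[[State], bool]] = {
    "aircraft_movements": lambda s: s["runways_available"] >= 1,
    "passenger_access": lambda s: s["open_security_lanes"] >= 2,
    "zone_resources": lambda s: s["power_margin"] > 0.0 and s["staff_ratio"] >= 0.8,
}


def violated(state: State) -> List[str]:
    """Names of the invariants that do not hold in `state`."""
    return [name for name, holds in INVARIANTS.items() if not holds(state)]


def degraded_plan(state: State, priorities: Dict[str, int]) -> List[str]:
    """Order the violated invariants by a situation-dependent priority table
    (lower number = restore first). Swapping the table changes the plan
    without changing the invariants themselves."""
    return sorted(violated(state), key=lambda name: priorities[name])


# Two hypothetical priority tables for two different situations.
NORMAL = {"aircraft_movements": 0, "passenger_access": 1, "zone_resources": 2}
EVACUATION = {"passenger_access": 0, "zone_resources": 1, "aircraft_movements": 2}

if __name__ == "__main__":
    state = {"runways_available": 0, "open_security_lanes": 1,
             "power_margin": 0.2, "staff_ratio": 0.9}
    print(degraded_plan(state, NORMAL))      # runway first
    print(degraded_plan(state, EVACUATION))  # passengers first
```

Separating the invariants (what must be preserved) from the priority tables (how to recover) reflects the point made above: the essential requirements stay fixed, while the rules for managing degraded modes vary with the situation.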

In this type of very broad-spectrum system, the actions to be carried out by active units (AUs) and the processes to be implemented will require the cooperation of a certain number of systems, each of which is already a complex system in its own right, but of lower rank in the hierarchy of the system of systems. Each of them serves a particular community, one or several professions, and has its own engineering teams.

Taking Simondon’s technical object/system approach as a governing principle, we must envisage a triplet of trajectories T corresponding to Simondon’s triplet, namely {TU, TS, TE} (see Figure 6.2). Operationally, this translates into:

- cooperation between the user communities TU1, TU2, etc.;

- cooperation between the engineering teams TE1, TE2, etc.;

- technical coherence of the hardware/software components of the equipment that constitute the system.

All of this will change over time, hence the diagram in Figure 6.7, which summarizes the fundamental problem of systems of systems.

Figure 6.7. Establishing coherence between models

As can be seen in Figure 6.7, the fundamental recurring theme in the coherence of a system of systems is the structure formed by the three families of models. If these models have been correctly formalized in terms of language and grammar, they can be analyzed and studied with suitable tools and methods, in particular all those relating to the theory of languages, including those that incorporate the various types of control (synchronous, asynchronous, shared resources, capacities, events, priorities, etc.) and the temporalities specific to each.

These three models refer to the same thing: they share the same information about the situation that justifies the system’s existence, with the same meaning, but each with its own abstractions and/or mechanisms.
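The requirement that the three models share the same information with the same meaning can be illustrated by a simple coherence check. In this minimal sketch, each model is assumed to be a mapping from entity names to attributes, some of which are model-specific (a label for users, a sensor identifier for the system, a controller reference for engineering) while others are declared shared; the check reports entities missing from a model and shared attributes whose values disagree. The model names `uses`, `system` and `engineering`, the entities and the attributes are hypothetical, loosely echoing the triplet {TU, TS, TE}; a real check would operate on formalized models and grammars rather than dictionaries.

```python
from typing import Any, Dict, List

# A model maps entity names to that model's own attributes.
Model = Dict[str, Dict[str, Any]]


def coherence_issues(models: Dict[str, Model], shared: List[str]) -> List[str]:
    """Report entities absent from some model, and shared attributes on which
    the models disagree. Model-specific attributes are ignored."""
    issues: List[str] = []
    all_entities = set().union(*(m.keys() for m in models.values()))
    for entity in sorted(all_entities):
        holders = [name for name, m in models.items() if entity in m]
        if len(holders) != len(models):
            missing = sorted(set(models) - set(holders))
            issues.append(f"{entity}: missing in {', '.join(missing)}")
            continue
        for attr in shared:
            values = {name: models[name][entity].get(attr) for name in models}
            reference = next(iter(values.values()))
            if any(v != reference for v in values.values()):
                issues.append(f"{entity}.{attr}: {values}")
    return issues


if __name__ == "__main__":
    models = {  # hypothetical excerpts of the three families of models
        "uses": {"runway_27L": {"status": "closed", "label": "RWY 27L"},
                 "gate_A12": {"status": "open"}},
        "system": {"runway_27L": {"status": "closed", "sensor_id": 42},
                   "gate_A12": {"status": "open"}},
        "engineering": {"runway_27L": {"status": "open", "plc": "PLC-7"}},
    }
    for issue in coherence_issues(models, shared=["status"]):
        print(issue)
```

Each model keeps its own specific attributes; only the information declared as shared is required to carry the same value, which is one concrete reading of "the same information, with the same meaning".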

A magnificent example of a model of uses, for C4ISTAR crisis management systems, is the DoD document Common Warfighting Symbology, which serves as a basis for the human/machine interfaces of these systems; it is even used by certain strategy video games. This document defines a graphics language, an ideography, containing several thousand symbols, which allows all those who apply it to interact coherently in a crisis zone, together with extension facilities that allow new symbols to be created (see Figure 8.9). In use, this type of MMI, based on 2D/3D vision, turned out to be much more effective than the automatic translation of natural languages that was initially envisaged. The same type of configuration exists in information systems, for the same reasons, although in a completely different context: architectural mechanisms based on three structures, namely external (the outside of the system), logical (or conceptual), and physical (or organic); the same could be said of models, and even of metamodels. The latter two structures characterize the inside of the system, the logical structure being an abstraction of the physical structure.

- 1 One of the major chips of this era, the Motorola 68000, contained some 68,000 transistors.

- 2 An aerospace fellow, and an author and practitioner of systems architecture.

- 3 J. Printz, Architecture logicielle, 3rd edition, Dunod.

- 4 In their key article, already cited, Behavior, Purpose and Teleology, A. Rosenblueth et al. give a certain number of them.

- 5 Refer to the comprehensive work by D. Vaughan, The Challenger Launch Decision, 1996.

- 6 For more information on the first automatic telephone exchange in Paris, in 1928, refer to the Wikipedia entry “Histoire du téléphone en France”: https://fr.wikipedia.org/wiki/Historie_du_téléphone_en_France.

- 7 For example, refer to Understanding Command and Control by D. Alberts and R. Hayes, published by the DoD Command and Control Research Program (CCRP); easily downloadable.

- 8 To be further convinced, refer to the book by J.-C. Ameisen, La sculpture du vivant, Le Seuil, and, concerning the immune system, to the book by P. Kourilsky, Le jeu du hasard et de la complexité, Odile Jacob, 2014.