4

The System and its Invariants

4.1. Models

The “system” object itself can undergo evolutions that may be large or small, under pressure originating from three sources: (a) users who require new functions and/or other modes of operation, for new uses; (b) technological innovations that will allow the system to increase its capacities and provide the users with competitive advantages; and (c) the environment with its uncontrollable hazards. The objective of these evolutions is to improve the system’s service contract with regard to its users, or simply to survive.

For developments of this kind to be possible without redoing everything, the system must have certain abilities that are independent of the desired evolutions, so that these evolutions can be easily integrated and constitute an operational evolution of the system.

A little like the axioms of a mathematical theory, these abilities constitute the invariants of the system: they must not change under any circumstances, and if they do, the general equilibrium is compromised. These invariance properties can be immaterial and pervade the entire system, such as compliance with a procedure, or they can be carried by a particular piece of equipment.

For example, the equilibrium between supply and demand in the electrical system is an invariant property. Each piece of production equipment is supposed to guarantee its output by contract. Compliance with a 220/380 volt potential, or with a frequency and synchronization at 50 hertz, can be guaranteed by certain pieces of equipment such as UPSs, which thus become the carriers of the invariance property. The information relating to the state of the system, which flows upwards in the form of messages to the operations center, must be standardized on a national or European scale. The reliability of the communication system, meaning that nothing must be lost or added, must be guaranteed by an invariance property implemented by error correction codes (ECCs). These codes are simultaneously procedures that come directly from coding theory (Shannon, Hamming, Huffman, etc.) and tangible material entities, widely distributed at the very core of the system, which they protect from hazards such as alpha particles or the particles produced by cosmic radiation. Without these redundancies, no network would work for more than a few minutes. The integrity of the transmitted information is the invariant of all communication systems, hence the fundamental importance of Shannon’s second theorem1.
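Hamming’s single-error-correcting code is perhaps the simplest concrete instance of such an ECC. The sketch below is a minimal Python illustration of the classic Hamming(7,4) code, in which three parity bits protect four data bits so that any single flipped bit can be located and corrected. The function names are ours, and production memories and links generally rely on stronger codes.

```python
def hamming74_encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword.

    Positions 1..7 are laid out as p1 p2 d1 p3 d2 d3 d4, so that each
    parity bit sits at a power-of-two position.
    """
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4        # checks positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4        # checks positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4        # checks positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c):
    """Correct at most one flipped bit, then return the 4 data bits."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3    # 1-based position of the error, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
word = hamming74_encode(data)
word[4] ^= 1                             # simulate a single-bit upset in transit
print(hamming74_decode(word) == data)    # → True
```

The redundancy costs three extra bits for every four transmitted: this is the price paid for the integrity invariant.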

In an automobile, the chassis is a geometrical invariant common to a whole range of vehicles. It is now designed to be impact resistant.

In a computer, the “logical design”, as von Neumann named it, is an architectural invariant shared by all computers, whatever the manufacturer: all of them have a shared memory that can contain both data and instructions, one or several processors2, and asynchronous input–output ports. J. von Neumann’s architecture model materializes the fundamental property of the universality of modern computers, not in a theoretical sense like Turing’s “paper” machine, but in very real technical objects that arise from human intelligence, built initially with vacuum tubes (triodes and pentodes) and today entirely transistorized in silico. Programming languages, when they are well designed, are invariants that allow programs written in a language L1 to be executed, and to provide the same results, on any executing machine. The compiler guarantees the semantic invariance of what the programmer wrote when it transforms/translates the external language L1 into the internal language LMx of a particular machine.
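The compiler’s obligation can be made concrete with a toy example. In the purely illustrative sketch below, a hypothetical language L1 of arithmetic expressions (nested tuples) is given a reference meaning by `evaluate`, while `compile_expr` translates it into instructions for an equally hypothetical stack machine standing in for LMx. Semantic invariance means that `run(compile_expr(e))` and `evaluate(e)` agree for every expression `e`.

```python
import operator

# Hypothetical source language "L1": integers and binary operations as nested tuples.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def evaluate(expr):
    """Reference semantics of L1: walk the expression tree directly."""
    if isinstance(expr, int):
        return expr
    op, left, right = expr
    return OPS[op](evaluate(left), evaluate(right))


def compile_expr(expr):
    """Translate an L1 expression into code for a toy stack machine (stand-in for LMx)."""
    if isinstance(expr, int):
        return [("PUSH", expr)]
    op, left, right = expr
    return compile_expr(left) + compile_expr(right) + [(op, None)]


def run(code):
    """Execute stack-machine code and return the value left on top of the stack."""
    stack = []
    for instr, arg in code:
        if instr == "PUSH":
            stack.append(arg)
        else:
            right = stack.pop()          # the right operand was pushed last
            left = stack.pop()
            stack.append(OPS[instr](left, right))
    return stack.pop()


expr = ("-", ("*", 3, 4), ("+", 2, 5))   # (3 * 4) - (2 + 5)
print(evaluate(expr), run(compile_expr(expr)))   # → 5 5
```

The two paths are deliberately independent: the interpreter defines what the program means, and the compiled code must reproduce that meaning on a machine with a completely different internal language.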

Compliance with the Internet protocols (IP and, above it, application protocols such as HTTP) allows any client, each of which has a unique identifier, to connect to the Internet and to interact with other clients and/or to access the resources on the network unambiguously; name engineering is therefore a fundamental problem. With the rise of “connected objects”3, billions of elements will now find themselves interacting with each other.

In the GPS constellation, or in the future GALILEO constellation, each satellite must be perfectly synchronized with all the others thanks to high-precision atomic clocks, to which a relativistic correction must, however, be applied to guarantee the precision of the emitted signal.
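The size of that relativistic correction can be estimated with a back-of-the-envelope calculation. The sketch below uses weak-field approximations, a circular orbit of radius about 26,560 km and a ground clock at the mean Earth radius, ignoring Earth’s rotation; these simplifications are our assumptions. Gravity makes the satellite clock run fast, its orbital speed makes it run slow, and the net drift of roughly 38 microseconds per day would, if left uncorrected, translate into ranging errors of the order of ten kilometers per day.

```python
# Physical constants and assumed geometry (SI units)
GM_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
R_EARTH = 6.371e6           # mean Earth radius, m (where the ground clock is assumed to sit)
R_GPS = 2.656e7             # approximate GPS orbital radius, m
SECONDS_PER_DAY = 86_400


def gps_clock_drift_us_per_day():
    """Return (gravitational, velocity, net) clock rate offsets in microseconds per day."""
    # General relativity: higher in the potential well, the satellite clock runs fast.
    grav = GM_EARTH * (1 / R_EARTH - 1 / R_GPS) / C**2
    # Special relativity: orbital speed (v^2 = GM/r) makes the satellite clock run slow.
    vel = (GM_EARTH / R_GPS) / (2 * C**2)
    to_us = SECONDS_PER_DAY * 1e6
    return grav * to_us, vel * to_us, (grav - vel) * to_us


print([round(x, 1) for x in gps_clock_drift_us_per_day()])   # → [45.7, 7.2, 38.5]
```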

The list could easily be extended.

The stability of certain aspects of the system is a necessary condition for its engineering. If everything moves, nothing can be stabilized, and the engineering teams are immediately affected and permanently destabilized. In ICT, the stabilization of the hardware/software interface, achieved in the 1960s4, has made it possible to integrate two opposing universes: on the one hand, a material system subject to hazards and to the uncertainties of physics, including at the quantum level; on the other hand, a logical, deterministic universe, protected from the hazards of the environment, which is what makes the programmer’s construction work possible at all (see Figures 3.3–3.5).

What is important, from the point of view of the system’s life and of its potential for evolution, is to be able to distinguish between what is essential, which we cannot touch recklessly for fear of damaging it, and what is circumstantial, likely to be modified as a function of the events that will occur throughout the life of the system. In living organisms, the constitutive matter, atoms and molecules, is constantly being renewed, but the structure (the form, in Aristotle’s interpretation) remains stable, or even grows back after amputation in certain amphibians, for example. In the systemic approach, there must therefore be, at the heart of the approach, an identification and a clear distinction between the essential aspects and the circumstantial or non-essential aspects. Since the engineering of these two kinds of aspects differs, their organization and relations will necessarily be different: the disappearance of an essential element is an irremediable loss, whereas it is always possible to reconstruct an inessential element.

REMARK.– In biology, the genotype is distinguished from the phenotype, even though there are, obviously, interactions between the two; the phenotype results from the interactions between the organism’s genotype and its environment.

In a company, what we call the “company model” must at the very least structure the interactions with the environment (in PESTEL terms) in which the company evolves. The point of connection between what is essential and what is inessential is made of physicochemical mechanisms known as transducers (e.g. the conversion of a pressure or of a concentration into an electrical current; refer to the authors’ website for more information about transducers). They provide the conversion from one world to the other. Thanks to these transducers, well situated in the “machine” space, we can replace the electromechanical and/or hydraulic devices of the first servo-controls by devices composed purely of information, initially analog, then digital. These principles were also the basis for analog machines, because certain electronic devices are the physical equivalent of operations (such as differentiation or integration) and perform them much more easily than digital machines. These transducers are critical devices for everything that falls under the category of a sensor or an effector.
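As an illustration of the conversion a transducer performs, consider the 4 to 20 mA current loop, a widespread industrial convention that we use here as an example. A pressure sensor encodes its measurement as a current, with 4 mA for the bottom of the range and 20 mA for the top; the “live zero” at 4 mA lets the receiver tell a zero reading from a broken wire. The 0 to 10 bar range, the function name and the fault thresholds below are hypothetical.

```python
def current_to_pressure(current_ma, p_min=0.0, p_max=10.0):
    """Convert a 4-20 mA loop current into a pressure in bar.

    4 mA maps to p_min and 20 mA to p_max, linearly. The fault thresholds
    (below 3.6 mA or above 21 mA) are assumptions for this sketch: a broken
    wire reads 0 mA, which the live zero makes detectable.
    """
    if current_ma < 3.6 or current_ma > 21.0:
        raise ValueError(f"{current_ma} mA is outside the loop range: sensor or wiring fault")
    clamped = min(max(current_ma, 4.0), 20.0)   # tolerate small under/over-range
    return p_min + (clamped - 4.0) / 16.0 * (p_max - p_min)


print(current_to_pressure(12.0))   # → 5.0
```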

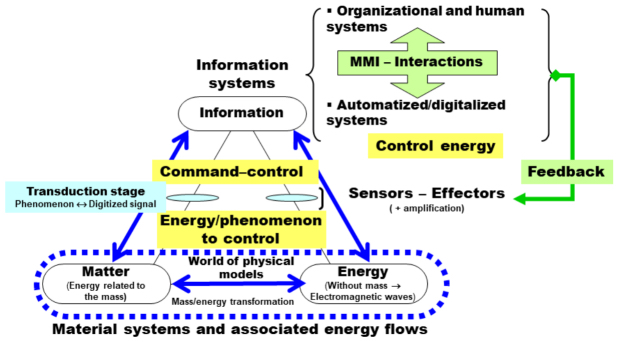

In section 3.3, we saw how the mechanism that provides the correct coupling between the interacting elements is necessarily a fundamental invariant of the system. It must be defined a priori so that everyone can comply with its rules. As we have seen, these elements are more or less independent processes, each with its own dynamic given the phenomena that take place in them. The methods of interaction between the processes, Inter Process Communication (IPC) to use the correct term, must comply with the time schedules of the various processes. In the first steam engines, a worker (generally a child) opened the valves that let steam into the cylinder (a part called the “slide valve”) at the right moment, until one of them realized that this opening simply needed to be servo-controlled by the movement of the connecting rod to make this manual operation automatic. For around a century, all this machinery was mechanical, hydraulic and then electromechanical, before being progressively replaced, from the 1950s to the 1960s, by a wide variety of devices known as command–control (CC, or C2), which are at the basis of all modern systems. These devices make both intensive (a large quantity of calculations) and extensive (ubiquitous, wherever needed) use of computers, insofar as the command–control information was completely digitized, with a suitable configuration. We can represent a modern system in a diagram, as in Figure 4.1.

Figure 4.1. Diagram showing the principle of modern systems. For a color version of this figure, see www.iste.co.uk/printz/system.zip

In line with the definition, the system is immersed in an environment characterized by a certain PESTEL profile, which can vary over the time and space in which the system is deployed. Each item of equipment is identified as an element of the system, with its own temporality (symbolized by a clock on the diagram), which is essential for successful control. Each element is linked to the C2 center by an information link of some kind (mechanical, hydraulic, electronic), whose service contract must comply with the equipment constraints, in particular those concerning possible saturations. In the case of military drones, this link can be a satellite link with a center located thousands of kilometers from the targets. The equipment can have a certain autonomy authorized by the center. The center must comply with the time schedules of the various pieces of equipment; this is usually described as “real time”, which simply means “absolute compliance with the schedule”, whatever that schedule is: a millisecond or less (the cadence of integrated circuits is currently of the order of a nanosecond, 10⁻⁹ s), several hours, and so on. The diagram highlights the critical role played by the C2 center, which is the true director of the coherence of the whole. The center is the carrier of the system’s mission and the heart of the active unit (AU), in François Perroux’s sense, which integrates all the energy, human and information resources that the system requires to accomplish its task and avoid saturation in all circumstances. The pieces of equipment are resources attributed to the AU, depending on the capacity desired to obtain a given effect. In the sciences of action and decision, this is called “capability logic”, in other words a logic based on the optimal use of the resources that define the capacity, for maximum effect, which is left to the judgment of the human operator who interacts with the system via the interaction language CRUDE, to which we will return in section 5.2.1.
This is obviously a time-related, non-reversible logic (in mathematics, we would say that it is neither commutative nor associative), because once a given resource has been consumed, it is no longer available, and the order in which resources are consumed is significant.

REMARK.– If a vehicle comes back to its starting point, for any reason, the fuel is consumed irreversibly, and the stock is reduced by the same amount.
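The non-commutativity of resource consumption can be shown with a toy model. In the sketch below, `FuelStock` and `run_plan` are hypothetical names: the same two steps, applied in a different order, lead to different outcomes, and a round trip leaves the stock lower than before.

```python
class FuelStock:
    """A consumable resource: once spent, it is gone (there is no 'undo')."""

    def __init__(self, litres):
        self.litres = litres

    def consume(self, amount):
        if amount > self.litres:
            raise RuntimeError(f"need {amount} L, only {self.litres} L left")
        self.litres -= amount


def run_plan(initial, plan):
    """Apply (action, amount) steps in order; return (success, litres remaining)."""
    stock = FuelStock(initial)
    try:
        for action, amount in plan:
            if action == "refuel":
                stock.litres += amount
            else:                      # "drive": burns fuel irreversibly
                stock.consume(amount)
        return True, stock.litres
    except RuntimeError:
        return False, stock.litres


print(run_plan(30, [("refuel", 40), ("drive", 60)]))    # → (True, 10)
print(run_plan(30, [("drive", 60), ("refuel", 40)]))    # → (False, 30)
print(run_plan(100, [("drive", 20), ("drive", 20)]))    # out and back → (True, 60)
```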

In the most advanced systems, the C2 center has real “intelligence”, reflected in the acronym C4ISTAR (Command, Control, Communications, Computers, Intelligence, Surveillance, Target Acquisition and Reconnaissance) which characterizes it5; this point will be examined in more detail in section 8.1. It is itself structured like a system, in fact a system of systems, because it is a coherent federation that must respect the specific rules of the various pieces of equipment that it coordinates. The center must manage only the information that is essential for the coherence of the whole and for the maintenance of this coherence over time, and nothing else. What is circumstantial to a piece of equipment must be considered inessential for the center, and above all, this information must not be duplicated towards the center, even though it is essential from the point of view of the equipment. This is how the whole problem of interoperability arises. We see the language aspect of the system taking shape, because each piece of equipment is characterized, from the point of view of the center, by states associated with commands and messages, such as equipment shutdown, ready, active, suspended (waiting for an event x, y or z), interrupted (waiting for repairs), etc. The adjustment parameters, now numerous, allow adaptation to local conditions but add complexity, in other words a large combinatorics that must itself be organized in order to be controlled.
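The center’s view of a piece of equipment can be sketched as a small state machine. The states below are those listed in the text; the command names and the transition table are our own hypothetical choices, intended only to show how the center can hold a single state per piece of equipment and reject commands that the protocol does not allow, without duplicating the equipment’s internal details.

```python
from enum import Enum


class State(Enum):
    SHUTDOWN = "shutdown"
    READY = "ready"
    ACTIVE = "active"
    SUSPENDED = "suspended"      # waiting for an event
    INTERRUPTED = "interrupted"  # waiting for repairs


# Hypothetical protocol: (current state, command) -> next state.
TRANSITIONS = {
    (State.SHUTDOWN, "start"): State.READY,
    (State.READY, "activate"): State.ACTIVE,
    (State.ACTIVE, "wait"): State.SUSPENDED,
    (State.SUSPENDED, "event"): State.ACTIVE,
    (State.ACTIVE, "fault"): State.INTERRUPTED,
    (State.SUSPENDED, "fault"): State.INTERRUPTED,
    (State.INTERRUPTED, "repaired"): State.READY,
    (State.READY, "stop"): State.SHUTDOWN,
}


def send_command(state, command):
    """Return the next state, rejecting commands the protocol does not allow."""
    try:
        return TRANSITIONS[(state, command)]
    except KeyError:
        raise ValueError(f"command {command!r} not allowed in state {state.value}") from None


state = State.SHUTDOWN
for command in ["start", "activate", "wait", "event", "fault", "repaired"]:
    state = send_command(state, command)
print(state)   # → State.READY
```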

In defense and security systems, the “language” of the system is presented to human operators as a dictionary of graphic symbols, a language also found in certain video games: an ideography6 that allows operators to understand each other independently of their native languages, which serve only for commentary, and to command–control the situation in which the system is operating.

These aspects are detailed in Part 2 of this book, “A World of Systems of Systems”.

To conclude with a metaphor: biology certainly provides us with the most spectacular example of invariance with the structure of the genome, written in a chemical language using four nucleotide bases symbolized by four letters {A, T, C, G}, common to all living beings on the planet since the origin of life, more than three billion years ago. This being the case, the structure of the genome remains an immense question mark when we know that the largest known genome belongs to a small Japanese flower, Paris japonica, which contains 150 billion bases, in other words 50 times the human genome. As for understanding its operational architecture and its genesis, we will have to wait a little longer, given the immensity and complexity of the challenge. Talking about a program (in the computer science sense) with regard to DNA is extremely daring insofar as we do not know its operational semantics, and biologists do not really know what it codes for, hence expressions that are strange for a computer scientist, like “non-coding DNA”, which cause real unease7.

But practices in the engineering of very large systems can certainly enlighten us, and perhaps even provide some ideas8. There is a strong analogy between biological engineering, which is still poorly understood, and the engineering of complex systems: to organize complexity, we must start from simple objects that are elementary, but not simplistic, with properties that are perfectly known and mastered by the engineering actors, and then integrate them in a certain manner as a function of the end purpose of the system. The computing stack mentioned previously is today the best example of the way in which this fundamental composition operator/operation must be organized in artificial systems.

What is truly extraordinary from an engineering standpoint is the reliability of the construction process of living beings, which, from a single cell, produces an organized set of 75–100 thousand billion cells that renew themselves at a rate of a thousand billion cells per day: an absolutely unreasonable efficiency in the eyes of the physicists who have spent serious time considering the question, such as E. Schrödinger in his small book What is Life?, M.-P. Schützenberger, a computer scientist who was also a medical doctor, or more recently R. Laughlin in A Different Universe, previously cited in Chapter 3. It is a highly organized process, perfectly Newtonian, in which chance, in the mathematical sense, no longer has a place.

4.2. Laws of conservation

To continue the analysis of the invariant properties of systems, it is a good idea to look at the laws that lie at the very foundation of the immense success of European physics, from Copernicus, Galileo, Newton, etc., compared with what other advanced civilizations, such as classical Islam or China, achieved.

The most fundamental laws of physics, sometimes called super laws or phenomenological laws, are conservation laws that emerged progressively throughout the 18th Century. They are something radically new, whose observation was made possible by the precision of measurement instruments, such as Roberval’s balance, the vernier scale to measure distances or angles, or high-precision clocks to measure time and, above all, longitude for transoceanic navigation. Without the precision of the measurements carried out by Tycho Brahe, Kepler would never have discovered the elliptical motion of the planets, in this case Mars; a fortiori, nobody would have detected the anomalous advance of Mercury’s perihelion, and still less posed the invariance of the speed of light as an axiom of the theory of relativity.

One of the first of these laws is the conservation of momentum (the “quantity of movement”), observed in the laws of impact, which strongly encouraged very clear ideas to be established, from the point of view of the description of phenomena, about the notion of a vector, with the parallelogram of forces, etc.9
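Conservation of momentum in impacts can be checked numerically. The sketch below uses the standard textbook formulas for a head-on, perfectly elastic collision of two bodies in one dimension, an idealization we assume for simplicity: momentum is conserved, as is kinetic energy in this elastic case. The function name is ours.

```python
def elastic_collision_1d(m1, v1, m2, v2):
    """Velocities after a head-on, perfectly elastic collision of two bodies."""
    u1 = ((m1 - m2) * v1 + 2 * m2 * v2) / (m1 + m2)
    u2 = ((m2 - m1) * v2 + 2 * m1 * v1) / (m1 + m2)
    return u1, u2


m1, v1, m2, v2 = 2.0, 3.0, 1.0, 0.0
u1, u2 = elastic_collision_1d(m1, v1, m2, v2)
print(u1, u2)                                        # → 1.0 4.0
print(m1 * v1 + m2 * v2, m1 * u1 + m2 * u2)          # momentum before/after → 6.0 6.0
print(0.5 * m1 * v1**2 + 0.5 * m2 * v2**2,
      0.5 * m1 * u1**2 + 0.5 * m2 * u2**2)           # kinetic energy before/after → 9.0 9.0
```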

Others followed, like the principle of least action of Pierre de Fermat, Christiaan Huygens and Pierre Maupertuis; the conservation of mass of Antoine de Lavoisier, with his famous “nothing is lost, nothing is created, everything is transformed”; then that of energy with Carnot, Clausius and Gibbs, and especially its formulation as an equation, in differential form, by Lagrange and Hamilton (the sum “kinetic energy + potential energy” is constant). The discovery of atoms and nuclear reactions clearly brings out fundamental principles such as Einstein’s famous $E = mc^2$, which relates the rest mass (meaning the mass of the atoms themselves, and not the “inertial” mass which depends on the speed of the body in movement) to energy, followed by the no less famous $E = h\nu$ of Planck, which this time relates energy, and therefore mass, to the frequency of a wave, hence the wave mechanics of Louis de Broglie based on the equivalence $mc^2 = h\nu$. These are discoveries that totally overthrew our view of the world, with the literally “incomprehensible” wave/particle duality, to paraphrase R. Feynman.
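The two relations can be combined in a short numerical sketch. Using the SI values of $c$ and $h$ (both exact by definition in the current SI) and the CODATA 2018 value of the electron mass, it computes the rest energy of one gram of matter and the frequency $\nu = mc^2/h$ associated with the rest energy of an electron. The function names are ours.

```python
C = 299_792_458.0                # speed of light, m/s (exact in the SI)
H = 6.626_070_15e-34             # Planck constant, J*s (exact in the SI)
M_ELECTRON = 9.109_383_7015e-31  # electron rest mass, kg (CODATA 2018)


def rest_energy(mass_kg):
    """Einstein: E = m * c**2, in joules."""
    return mass_kg * C**2


def frequency(energy_j):
    """Planck: nu = E / h, in hertz."""
    return energy_j / H


print(f"{rest_energy(1e-3):.3e} J")                    # one gram → 8.988e+13 J
print(f"{frequency(rest_energy(M_ELECTRON)):.3e} Hz")  # electron → 1.236e+20 Hz
```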

Indeed, there are not many of these laws, but without them, nature is literally incomprehensible10. The recent discovery of the Higgs boson results from the application of these conservation laws at the subatomic scale, which requires means of observation that are both enormous and perfectly controlled, such as the LHC at CERN, and absolutely massive data processing, because an experiment at the LHC creates hundreds of billions of events to analyze, far beyond what unaided human means could handle. The mathematical analysis of these conservation laws by the German mathematician Emmy Noether11 has shown the importance of the symmetries associated with them, a symmetry that cannot be “broken” without an energy counterpart. By reasoning in this way, Paul Dirac predicted the existence of antimatter even before it was actually discovered, by applying these principles so as to maintain a coherent mathematical formulation of the laws of physics. These principles played a fundamental role in the discovery of the famous boson12, which “explains” the cohesion of matter, why matter “holds together” and forms stable structures over time.

What can we draw from all this that will be useful for a deeper understanding of the notions of system and information? To answer this, we need to mention an important school of thought, initiated by physicists, biologists and computer scientists associated with the work of the Santa Fe Institute, founded in 1984. The theme linking these various domains is the “physics of information”, meaning the study of information, in the broad sense, from a physical point of view, such as that of conservation laws. See, for example, the proceedings of the “Complexity, entropy, and the physics of information” workshop of June 1989, published by Wojciech Zurek in 1990, or those of the symposium held on October 27–31, 1992, by the Pontifical Academy of Sciences, “The emergence of complexity in mathematics, physics, chemistry and biology”, published in 1996. Above the physical part of our material or immaterial objects (such as fields), there is the information, in the broad sense, that is necessary for them to work correctly, and which determines the action of the various communities. Hence the slightly provocative formulation given by the physicist John Archibald Wheeler13 (famous for popularizing the term “black hole”), “It from bit”, which should be understood as:

“[…] Otherwise put, every it – every particle, every field of force, even the spacetime continuum itself – derives its function, its meaning, its very existence entirely – even if in some contexts indirectly – from the apparatus-elicited answers to yes-or-no questions14, binary choices, bits. It from bit symbolizes the idea that every item of the physical world has at bottom – a very deep bottom, in most instances – an immaterial source and explanation; that which we call reality arises in the last analysis from the posing of yes-no questions and the registering of equipment-evoked responses; in short, that all things physical are information-theoretic in origin and that this is a participatory universe.”

We have known, since the work of C. Shannon and N. Wiener, that there is a deep analogy between information and organization, and between entropy and disorder, highlighted by the similarity of L. Boltzmann’s and C. Shannon’s formulae, an analogy reinforced by the 3rd MEP principle (Maximum Entropy Production), which is a principle of organization; this being the case, analogy does not mean causality, so it is wise to remain prudent. The physicist P. Bak has provided quite a captivating vision of the relations that appear to exist between these various notions, through what he calls self-organized criticality (SOC), in his book How Nature Works: The Science of Self-Organized Criticality. Without information and control of the level of saturation of the feedback loop, the behavior of systems is incomprehensible; and, as we will see in section 4.2.2, without a repair procedure and without information about the state of its constitutive elements, the lifetime of the system, measured by its availability, is limited.

We will return later to this aspect of complexity. But we can already say that a semantic invariant is necessarily preserved, which formalizes the intentional actions of the user of a system (by linguistic analogy, we could call this its “performative” aspect15) until they are implemented in transducers that ultimately “execute” the user’s intention in the real world; this invariant is implemented through a succession of languages, integrated with each other, of which the stack of interfaces present in all computing equipment provides an excellent illustration.

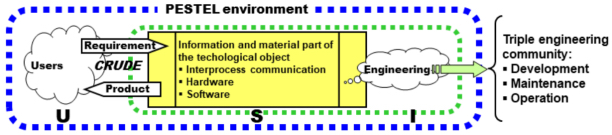

To progress, we need to return to the definition of the system as a technical object, as G. Simondon explained it, with the triplet {U, S, E}, Figure 4.2.

Figure 4.2. The system and its invariants. For a color version of this figure, see www.iste.co.uk/printz/system.zip

The technical object can be of any size: like a mobile phone, which manages a power of a few watts, or like the electrical system, which manages a power of the order of 100–110 gigawatts (in other words, the energy equivalent of roughly six Hiroshima atomic bombs per hour, about one bomb every ten minutes, or, more prosaically, the simultaneous supply of 100 million irons).
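Such energy equivalences are easy to check. The sketch below assumes a Hiroshima yield of about 15 kilotons of TNT (published estimates range roughly from 13 to 18 kt), the conventional 4.184 × 10¹² J per kiloton, and a household iron drawing 1 kW; these figures and the function name are our assumptions. For 100 GW it gives a little under six bombs per hour and 100 million irons.

```python
TNT_KILOTON_J = 4.184e12   # energy of one kiloton of TNT, J (conventional value)
HIROSHIMA_KT = 15.0        # assumed yield; published estimates range roughly 13-18 kt
IRON_W = 1_000.0           # assumed power drawn by one household iron, W


def grid_equivalents(power_w):
    """Express a continuous power as Hiroshima bombs per hour and as a number of irons."""
    bomb_j = HIROSHIMA_KT * TNT_KILOTON_J
    bombs_per_hour = power_w * 3600 / bomb_j
    return bombs_per_hour, power_w / IRON_W


bombs, irons = grid_equivalents(100e9)
print(round(bombs, 1), f"{irons:.0e}")   # → 5.7 1e+08
```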

In order to guarantee the coherence and use of the technical object, it is necessary simultaneously (a) for the system to remain usable and attractive for its users, to whom it provides a competitive advantage; and (b) for it to remain understandable for the engineering teams who ensure its development, maintenance and operation, in other words, three activities with very different skill and experience profiles, executed in parallel. Here, we see N. Wiener’s problem in its entirety, at the very beginning of his reflection on what would become cybernetics, because it is necessary to control the coherence of the various underlying flows. The quality system is implemented precisely so that this coherence and this utility are guaranteed as far as possible throughout the system’s life. The quality system is in some way the immune system of artificial systems; its challenge is to make something reliable from unreliable elements, by playing on well-positioned redundancies thanks to review mechanisms said to be “independent” (see our previous works on the engineering and integration of projects).
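The principle of building reliability from unreliable elements through redundancy can be quantified with a classic example that we use here as an illustration, triple modular redundancy (TMR): three identical, independent modules feed a majority voter, assumed perfect. A second function gives the probability that a defect escapes several reviews, under the assumption that the reviews are truly independent. Both functions are hypothetical sketches.

```python
import math


def tmr_reliability(r):
    """Reliability of 2-out-of-3 majority voting over independent modules of reliability r.

    The output is correct if all three modules work (r**3) or exactly two do
    (3 * r**2 * (1 - r)); the voter itself is assumed perfect.
    """
    return r**3 + 3 * r**2 * (1 - r)


def escape_probability(detection_rates):
    """Probability that a defect slips through every review, assuming independent reviews."""
    return math.prod(1 - d for d in detection_rates)


print(round(tmr_reliability(0.9), 3))             # → 0.972, better than one module
print(round(tmr_reliability(0.4), 3))             # → 0.352, worse than one module
print(round(escape_probability([0.7, 0.7]), 2))   # → 0.09
```

Redundancy pays off only when each element is already more reliable than not (r > 0.5); below that threshold, voting amplifies failures instead of masking them.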

The set {Technical Object U, S, E} is immersed in a socioeconomic environment that we have characterized by the acronym PESTEL, an environment of which it is itself a part. To use the system correctly, the users must have a sufficient level of knowledge, known as the “grade” in English-speaking countries. In France, approximately 80% of a given age group are educated to Baccalaureate level and therefore, at least in theory, have a certain level and range of skills. This is, quite simply, a license to perform actions x, y or z, which require a certain level of knowledge to avoid damaging one’s environment or oneself.

To carry out engineering assignments correctly, the corresponding community, which is threefold (see Figure 4.2), must have sufficient maturity, in skills and in experience. In the energy sector, this means Baccalaureate + 5 years. Engineering makes available to the community of users a product/system that satisfies a service contract, which we have characterized by the acronym FURPSE, under economic conditions (the system must be affordable) characterized by the acronym CQFD, the basic quantum of project energy, and by TCO (total cost of ownership), the integral of these project quanta over the entire life cycle.

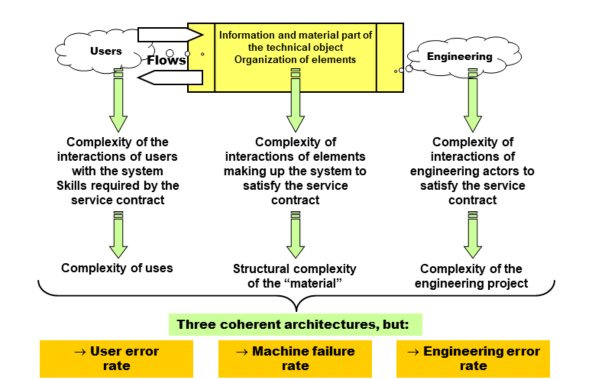

Reasoning in the same way as H. Simon in The Sciences of the Artificial, Chapter 8, “The architecture of complexity”, we can refine Figure 4.2 as follows (see Figure 4.3). In the diagram, the users are asking “what is this used for in the environment in which I am acting?”, whereas engineers must ask “how is it made, how can it be repaired in the event of a breakdown, etc.?”, in their own environment of constraints and hazards, in order to respond to the requirements of users; that is, they must work out how to control all this while preserving the semantic invariant.

Figure 4.3. Architecture of complexities

In “energy” terms, three types of energy must be considered, with which three types of complexity are associated, and three architectures will organize these complexities:

- that required for learning by the actors, so that they become able to use the system without putting their life and/or their environment in danger. The corresponding effort is correlated with the quantity of information associated with the flow of messages that the user must manage: this is the Shannon measure QI associated with these messages. REMARK.– With this measure, a message that is infrequent, with a low probability of occurrence, carries a large quantity of information; it will require a long learning time, because its rarity means that it will quickly be forgotten;

- that required for the correct operation of the material part of the system, and more particularly for the management of interactions at the very heart of the system. We will correlate it with the processing power required to ensure that all the transformations carried out by the “machine” are correct, in other words, the calculation capacity that the system must have, in the broad sense, via its transducers, to accomplish its mission. This is the measure AC, for algorithmic complexity, used for the first time by J. von Neumann to count the number of operations required to solve a problem;

- that required for the production of the text that defines the set of procedures to be carried out, in other words, programming in the broad sense, either automatic or manual, by engineering actors. We will correlate it with Kolmogorov–Chaitin’s measure of textual complexity, TC.
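The first and third measures can be illustrated in a few lines. In the sketch below, the Shannon measure is the self-information $-\log_2 p$ of a message of probability $p$, which shows why rare messages weigh so much. For textual complexity, since Kolmogorov–Chaitin complexity is not computable, we use the length of a zlib-compressed text as a crude upper-bound proxy; this is our substitution, not a measure proposed in the text, and the sample texts are hypothetical.

```python
import math
import random
import zlib


def self_information_bits(p):
    """Shannon information carried by a message of probability p: -log2 p."""
    return -math.log2(p)


def compressed_size(data: bytes) -> int:
    """Length after zlib compression: a crude upper-bound proxy for textual complexity."""
    return len(zlib.compress(data, 9))


# A routine message (p = 1/2) versus a rare alarm (p = 1/1024).
print(self_information_bits(1 / 2), self_information_bits(1 / 1024))   # → 1.0 10.0

# A highly regular "procedure text" versus an irregular one of the same length.
regular = b"open valve; close valve; " * 40
irregular = random.Random(0).randbytes(len(regular))   # requires Python 3.9+
print(compressed_size(regular) < compressed_size(irregular))          # → True
```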

Regarding more particularly the interactions that will be organized and managed by the information system, we can complete the general situation presented in Figure 4.1 by Figure 4.4.

Figure 4.4. Control of flows and command–control. MMI stands for man-machine interface. For a color version of this figure, see www.iste.co.uk/printz/system.zip

In this diagram, the information system only sees what the system architect has decided to let it see, so that control and command–control are carried out optimally, taking into account the service contract. Energy comes from diverse origins: waves, heat, fluid flow, etc. To be perfectly rigorous, and consistent with the energy logic of the Standard Model of particle physics, the material “matter” part itself would consist of fermions, meaning the protons, neutrons and electrons that are the constituents of matter at our scale, and the “energy” part would consist of bosons, such as the massless photons of electromagnetic energy, which ensure their cohesion. In a chemical reactor, like a combustion engine, matter is recombined at constant mass, releasing heat energy; in a nuclear reactor, matter is transformed by the fission of uranium/plutonium atoms, which, as we all know, releases a great deal of energy per unit of mass, recovered essentially in the form of heat.

The error rate and/or the failure rate must logically be added to these three complexities. A man-machine interface will be all the more difficult for users to master the more complex it is, in the usual sense of the term, that is, with many messages and relationships between the messages. The same can be said for the machine and for engineering. Users will make more errors the less effectively they master the “grammar” of the correct use of these messages16, particularly rare messages, which are by definition difficult to memorize.

However, to understand the magnitude of the phenomena related to the scale of the processes at work that we want to servo-control, and to measure the potential consequences of errors, let us look at the case of the electric system (ES), which produces a peak power of approximately 100–110 gigawatts. If the material part of the transport system has an efficiency of 99%, the ES must be capable of compensating, through suitable mechanisms, for a power of approximately 1 gigawatt (one reactor unit of a nuclear power station!) which is wandering around in the network, in thermal and/or mechanical form, and which may accumulate! If, for any reason, control is not implemented, or is implemented badly, we very quickly face a potential Chernobyl-type incident, given the energies at stake. Hence the critical importance of the partitioning implemented by the architecture of the transport network. At the slightest incident, at the slightest imbalance, the operator in charge of control may no longer be in a position to act on the physical reality, even if the information system continues to function correctly from its own point of view. In the diagram in Figure 4.4, the correct interpretation is that the energies used by the information system, the command–control (ICS) in the wider sense of C4ISTAR systems, have no common measure with those released in the controlled material and/or human system, which can give the false impression that there is no risk, if the operators of the ICS have unfortunately lost sight of reality.

There is a real lesson to be learnt from this for the daredevils of the financial system who move flows of hundreds of billions of euros per millisecond (see the excesses of “high frequency trading”, HFT17), a timescale that totally escapes any regulatory control by human actors, not to mention the rigging of the rules of the game played on the markets, symbolized by Adam Smith’s famous “invisible hand”! Unfortunately, we know what the result was.

This is what we will specify in sections 4.2.1 and 4.2.2.

4.2.1. Invariance

As we have already said, without going into details, control of any kind implies an expenditure of energy that reduces the efficiency of the system. James Watt’s centrifugal governor for the very first steam engines consumed energy which was therefore no longer available to make the machine move, but which prevented it from exploding due to overheating.

The centrifugal governor therefore plays the role of an insurance that guarantees that, in the event of an imbalance, we will have sufficient resources to return to the nominal situation, that is, to remain in a state of balance. The relevant question in systemics is to understand in depth the relationship between the energy that the governor is able to mobilize to correct an imbalance and the imbalances that may arise, which could damage or destroy all or part of the system, or pose a risk to its users and/or to the engineering teams, so as to apply the counter-measures best suited to the situation.

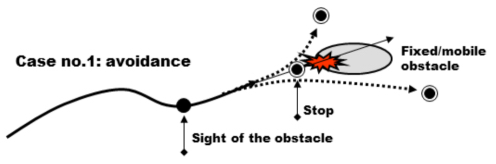

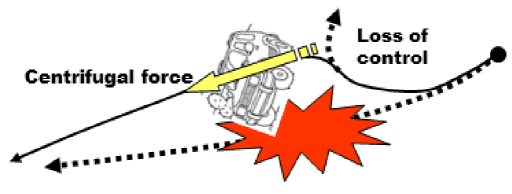

We all experience this type of situation when driving. When drivers are faced with an unforeseen obstacle, they have a choice of two strategies: (1) stopping before the obstacle, if possible; or (2) steering around it, as indicated in Figure 4.5. In case (1), the vehicle’s brakes must be able to absorb its kinetic energy over a distance no greater than the distance to the obstacle. The energy that can be mobilized comes from the brakes, which are only effective if the wheels do not lock. In case (2), the vehicle deviates from its initial trajectory by changing direction, relying on the grip of its tires, possibly while slowing down and using its brakes in a way that keeps the car from skidding or swerving; if it does skid or swerve, the driver loses control of the vehicle.

Figure 4.5. Control of a trajectory with deviation around an obstacle. For a color version of this figure, see www.iste.co.uk/printz/system.zip

Figure 4.6. Control of a trajectory which avoids the obstacle. For a color version of this figure, see www.iste.co.uk/printz/system.zip
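Case (1) can be quantified with elementary mechanics. The sketch below assumes braking limited by tire friction (deceleration μg, with an illustrative friction coefficient μ); the function names and numbers are ours, not the author’s:

```python
G = 9.81  # gravitational acceleration, m/s^2

def kinetic_energy_j(mass_kg: float, speed_ms: float) -> float:
    """Energy the brakes must absorb to stop the vehicle: E = 1/2 m v^2."""
    return 0.5 * mass_kg * speed_ms ** 2

def braking_distance_m(speed_ms: float, mu: float) -> float:
    """Friction-limited deceleration a = mu * g gives d = v^2 / (2 * mu * g)."""
    return speed_ms ** 2 / (2 * mu * G)

def can_stop(speed_ms: float, mu: float, obstacle_m: float) -> bool:
    """Strategy (1) is possible only if the braking distance fits before the obstacle."""
    return braking_distance_m(speed_ms, mu) <= obstacle_m

# Illustrative values: a 1,500 kg car at 90 km/h (25 m/s) on a dry road (mu ~ 0.8).
energy = kinetic_energy_j(1500, 25)       # 468,750 J to dissipate
distance = braking_distance_m(25, 0.8)    # roughly 40 m
```

Note that the mass cancels out of the distance (the friction force grows with mass), but not out of the energy: a heavier vehicle demands more of its brakes.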

Several general lessons can be drawn from the situation:

- From the moment the obstacle is seen to the moment the effect of the decisions is felt, there is always a latency period during which the system (here, the vehicle) continues along its nominal trajectory. If this latency, which depends both on the governor and on the inertia of the moving system, is not carefully controlled, the collision can become unavoidable.

- The energy injected into the system to carry out the correction must be compatible with the structural constraints of the system. In the case of the vehicle, if braking is too sudden, the wheels lock and lose their grip, which is almost the same as cancelling the braking; hence the advantage of ABS devices. If the change of trajectory is too sudden, the centrifugal force, which depends on the radius of the new trajectory, can be enough to destabilize the vehicle, which also results in a loss of grip, with potentially fatal consequences. If this control energy is not suited to the dynamic context of the system at instant T, loss of control is inevitable. The system must always have reserve resources that can be mobilized without delay, to guarantee a latency ΔT that is compatible with the phenomenon to be controlled. For this, the capacities must be measured and quantified18.

- The situation of the system, that is, its state, is highly variable. In the case of a vehicle, the dynamics depend on the mass, and therefore on the number of passengers and/or the weight of the luggage on board. This means that no fixed plan can be made in advance; the system must adapt to its spatio-temporal situation in real time, taking the latency into account. The system’s capacity for adaptation depends not only on knowledge of its internal state at instant T but also on knowledge of the environment in which it evolves, in order to prepare alternative plans that will always need to be adapted to the context.

- In the event of an incident/accident, a new obstacle appears, which can set off a cascade that amplifies the initial problem (for example, pileups on motorways) and potentially leads to a general blockage of the system; this is what economists call a systemic breakdown, highly inappropriately since, with the controls removed, the system is bound to become uncontrollable. The spatial organization of the system must be such that the zone contaminated by the accident is confined and temporarily isolated from the rest of the system. In the case of the electric system, organization in autonomous regions provides this guarantee. We therefore see that there is a direct relationship between the latency of the control loop and the “size” of the “energetic” region whose hazards must be controlled. Such a region determines an autonomous or autonomic loop (a term introduced in autonomic computing approaches), whose size is determined by the latency (or inertia) of the loop.

Figure 4.7. Loss of control of the trajectory. For a color version of this figure, see www.iste.co.uk/printz/system.zip
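The first two lessons can be made quantitative for the vehicle example. During the latency ΔT the vehicle keeps its nominal speed, so the distance covered, v·ΔT, is added to the braking distance; conversely, a given distance to the obstacle defines the largest latency that can be tolerated. A minimal sketch, assuming friction-limited braking as before (names and values are illustrative):

```python
G = 9.81  # m/s^2

def stopping_distance_m(speed_ms: float, mu: float, latency_s: float) -> float:
    """Distance travelled on the nominal trajectory during the latency, plus braking."""
    return speed_ms * latency_s + speed_ms ** 2 / (2 * mu * G)

def max_latency_s(speed_ms: float, mu: float, obstacle_m: float) -> float:
    """Largest latency that still allows a stop; a negative value means it is already too late."""
    return (obstacle_m - speed_ms ** 2 / (2 * mu * G)) / speed_ms

# At 25 m/s with mu = 0.8, one second of latency adds 25 m to the ~40 m of braking,
# and an obstacle 70 m away tolerates only about 1.2 s of latency.
total = stopping_distance_m(25, 0.8, 1.0)
budget = max_latency_s(25, 0.8, 70)
```

The latency budget shrinks as speed (the “energy” of the system) grows, which is the vehicle-scale counterpart of the relationship between loop latency and the size of the region to be controlled.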

The metaphor of the trajectory can greatly help system architects to plan for the types of situations they will have to face, without, of course, knowing the place or the date of the expected events.

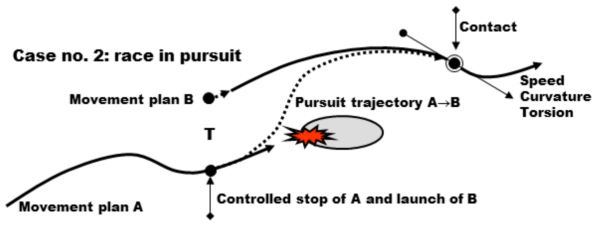

Two types of trajectory can be envisaged, in increasing order of difficulty of adaptation: (1) avoidance and/or deviation trajectories, possibly involving stopping; and (2) pursuit trajectories, when the difficulty encountered forces a change of course from plan A to plan B, whose dynamics will, by definition, be different. This is shown schematically in Figure 4.8.

Figure 4.8. Control with the change of trajectory and avoidance of an obstacle. For a color version of this figure, see www.iste.co.uk/printz/system.zip

When an obstacle must be avoided, the state of the fixed or moving obstacle must be evaluated before selecting the most appropriate strategy, either stopping or deviation, while ensuring that we have the resources required to carry out the selected action. For stopping, we must evaluate the distance to the obstacle and the energy that can be mobilized for braking. For deviation, we must choose where the deviation will actually pass, depending on the dynamic parameters of the deviation trajectory, that is, the speed, the radius of curvature and possibly the torsion (in 3D), taking into account the constraints specific to the system as well as the possibilities offered by the environment. Once the obstacle has been bypassed, all the trajectory parameters must be recalculated to see whether we can return to a nominal situation, as if there had been no obstacle, or whether we must announce the consequences of this deviation for the lifeline of the system and for the service contract.
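The selection logic described above can be sketched as a small decision function. This is a simplified illustration under stated assumptions: friction-limited braking, a deviation judged only on whether the lateral acceleration v²/r stays within the available grip μg, and no check that the deviation itself fits in the available space. All names are hypothetical:

```python
from enum import Enum

G = 9.81  # m/s^2

class Strategy(Enum):
    STOP = "stop before the obstacle"
    DEVIATE = "deviate around the obstacle"
    NONE = "no safe strategy: loss of control"

def choose_strategy(speed_ms: float, mu: float, latency_s: float,
                    obstacle_m: float, turn_radius_m: float) -> Strategy:
    # (1) Stopping: distance covered during the latency plus braking distance.
    stop_m = speed_ms * latency_s + speed_ms ** 2 / (2 * mu * G)
    if stop_m <= obstacle_m:
        return Strategy.STOP
    # (2) Deviation: the lateral (centrifugal) acceleration must not exceed grip.
    if speed_ms ** 2 / turn_radius_m <= mu * G:
        return Strategy.DEVIATE
    # Neither resource is sufficient: the situation is no longer controllable.
    return Strategy.NONE
```

For example, at 25 m/s with μ = 0.8 and one second of latency, an obstacle 80 m away allows a stop; at 40 m, a deviation of radius 100 m is still possible, whereas a radius of 50 m exceeds the grip.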

In the case of pursuit, the situation is more difficult to analyze, although it is intuitively quite obvious, because this time we must control two movements, that of stopping on trajectory A and that of starting off on trajectory B, in such a way that the connection is made smoothly. For this, a transitional trajectory must be calculated that organizes the move from plan A to plan B progressively. “Plan B” cannot start instantly. It is therefore necessary to plan for a ramp-up of power whose “energy” will come, at least in theory, from the deceleration of A. At the moment of junction, the parameters of the trajectories A, the transition A→B, and B must be correctly aligned to avoid impacts. One of the most famous examples of this type of realignment is the Apollo 13 mission, following the incident that caused the cancellation of the initial mission19.
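One classical way to build such a transitional trajectory, shown here as an illustration rather than as the author’s method, is to blend A into B with a weight s(u) whose first and second derivatives vanish at both ends (the quintic “smootherstep” polynomial). If A and B are themselves smooth, position, speed and acceleration then remain continuous at the junctions. The trajectories below are hypothetical one-dimensional examples:

```python
def smootherstep(tau: float) -> float:
    """Quintic blend 6u^5 - 15u^4 + 10u^3 with u clamped to [0, 1].
    Its first and second derivatives are zero at u = 0 and u = 1."""
    u = min(max(tau, 0.0), 1.0)
    return u ** 3 * (u * (6 * u - 15) + 10)

def transition(traj_a, traj_b, t0: float, t1: float):
    """Position function that follows A before t0, B after t1, and blends A into B
    in between, so that position, speed and acceleration stay continuous."""
    def x(t: float) -> float:
        s = smootherstep((t - t0) / (t1 - t0))
        return (1 - s) * traj_a(t) + s * traj_b(t)
    return x

def speed(x, t: float, h: float = 1e-4) -> float:
    """Central finite-difference estimate of dx/dt."""
    return (x(t + h) - x(t - h)) / (2 * h)

# Hypothetical plans: A cruises at 20 m/s, B at 30 m/s; switch between t = 5 s and t = 15 s.
plan_a = lambda t: 20 * t
plan_b = lambda t: 30 * t - 50
x = transition(plan_a, plan_b, 5.0, 15.0)
```

The speed of `x` is about 20 m/s at t = 5 s and about 30 m/s at t = 15 s, with no jump at either junction; a plain linear blend would instead introduce a jump in speed at both ends, exactly the kind of “jolt” the text warns about.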

This type of trajectography is exactly what is encountered in very general and relatively frequent situations, which makes the metaphor useful because it allows us to “see” the complexity of the situations that need to be managed. For example, when the manager of the RTE transport network must shut down a power station for some reason, or when one stops by itself (e.g. control rods dropping following an incident), while maintaining the equilibrium between supply and demand, it is in exactly this situation. Another example: when a company must deeply rethink its offer of products and services, again for some reason (e.g. globalization, mergers and acquisitions, digital impact (“transform or perish”)), we are again in a configuration of trajectories, except that in this case the general management of the company must manage an organizational and human problem that we do not know how to describe with clear formulae, as we did for the ES.

The trajectography metaphor can provide one last service: an understanding of the profound nature of control phenomena. There are two types of control energy. The first corresponds to the control of a nominal trajectory, with no specific obstacle. Even so, operations must be carried out depending on the position of the system on its lifeline: the road can rise or fall, turn right or left, and there can be signs giving instructions to the driver, who must consequently be trained. In a modern car, there are classic devices such as an automatic clutch, a speed regulator/limiter, an ABS braking system, etc. This control energy is used to keep the engine at its optimal efficiency, in compliance with the highway code.

A second energy allows us to react to risky situations in which the nominal trajectory must be abandoned in favor of another trajectory that allows the risk (which is, by definition, unforeseeable) to be compensated for. For example, a complete stop of the vehicle requires a device that can absorb all of the vehicle’s kinetic energy without killing the occupants (from 10 g and above, the lungs and internal organs explode).

In case (1), we can always remain in control with suitable servo-control; in case (2), this is not certain. In any system, we will find energy bands of different levels:

- Nominal/controllable: the system lives its life in its environment, and all is for the best in the best of all possible worlds.

- Non-nominal/controllable (NNC): we have enough resources to execute the appropriate action via suitable procedures planned in advance, and to maintain the system in its current working state with no catastrophic consequences for the service contract. The NNC case corresponds to a situation for which we have a theory, a certain model, and we can act accordingly.

- Non-nominal/non-controllable (NNNC): we do not have enough resources to execute the appropriate action, which, moreover, does not feature in the risk management plan. The system will be damaged, or destroyed in extreme cases (Air France flight 447 Rio–Paris in June 2009, Chernobyl, Fukushima, etc.). The NNNC case corresponds to a situation for which we have no theory, but we can at least hope to learn lessons so as not to be surprised a second time, provided we have deployed the correct means of autonomic management.
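The three energy bands can be read as a classification rule. The sketch below is one possible formalization, assuming that a situation is controllable only if the required control energy fits within the nominal budget plus an immediately mobilizable reserve and if a procedure for it exists in the risk management plan. The parameter names are hypothetical:

```python
from enum import Enum

class Band(Enum):
    NOMINAL = "nominal/controllable"
    NNC = "non-nominal/controllable"
    NNNC = "non-nominal/non-controllable"

def classify(required: float, nominal_budget: float, reserve: float, planned: bool) -> Band:
    """
    required       -- control energy demanded by the current situation
    nominal_budget -- energy available for routine control of the nominal trajectory
    reserve        -- extra energy that can be mobilized without delay
    planned        -- True if the risk management plan has a procedure for this situation
    """
    if required <= nominal_budget:
        return Band.NOMINAL
    if planned and required <= nominal_budget + reserve:
        return Band.NNC
    # Either the resources or the theory (the planned procedure) is missing.
    return Band.NNNC
```

The same demand of 25 units is NNC with a planned procedure and a reserve of 20 above a budget of 10, but NNNC if the situation is absent from the plan.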

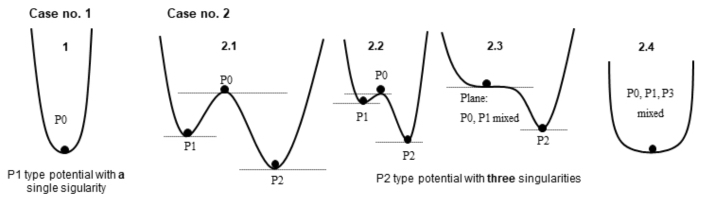

According to the theory of dynamic systems, this type of situation can be represented using “potential” functions. Here, we will only give an intuitive representation because, in the world of real systems, very few are sufficiently well defined to be modeled with this theory, in particular as soon as there are human operators in the loop and, more generally, a degree of hazard, for example the intensity of an earthquake, the resonance modes of a machine, etc. However, we can carry out some very interesting qualitative reasoning.

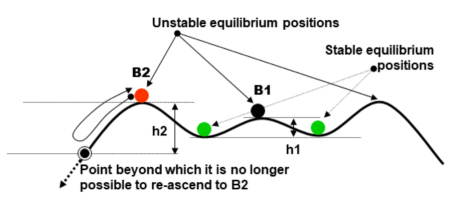

The idea is to represent the “potential” of the system by a landscape of valleys and hills, as shown in Figure 4.10. The valley bottoms and the summits are the singularities of the potential. The points of equilibrium and instability are represented by balls. When the ball is at the bottom of a valley, it stays there. When it is on a summit or on a plateau, the slightest disturbance is likely to make it fall into a neighboring valley. In this representation, what matters is the number of singularities and their forms, which can be expressed using polynomials. In case no. 2 of Figure 4.9, we have a polynomial of degree 4, and therefore at most three singularities, corresponding to the zeros (extrema) of its derivative, a polynomial of degree 3.

Figure 4.9. Different types of potential energies
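Counting and classifying singularities is mechanical once the potential is a polynomial: find the zeros of V′ and look at the sign of V″ (positive for a valley bottom, negative for a summit). A minimal sketch using only the standard library (sign-change scanning plus bisection), applied to the hypothetical degree-4 potential V(x) = x⁴ − 2x², which has two valleys separated by a summit:

```python
def poly(coeffs, x):
    """Evaluate sum(coeffs[i] * x**i)."""
    return sum(c * x ** i for i, c in enumerate(coeffs))

def derivative(coeffs):
    return [i * c for i, c in enumerate(coeffs)][1:]

def critical_points(coeffs, lo=-5.0, hi=5.0, steps=10_001):
    """Zeros of V' in [lo, hi), located by scanning for sign changes then bisecting,
    and classified by the sign of V''."""
    d1, d2 = derivative(coeffs), derivative(derivative(coeffs))
    xs = [lo + (hi - lo) * k / steps for k in range(steps + 1)]
    found = []
    for a, b in zip(xs, xs[1:]):
        fa, fb = poly(d1, a), poly(d1, b)
        if fa == 0.0:
            root = a
        elif fa * fb < 0:
            for _ in range(60):  # bisection on [a, b]
                m = 0.5 * (a + b)
                if poly(d1, a) * poly(d1, m) <= 0:
                    b = m
                else:
                    a = m
            root = 0.5 * (a + b)
        else:
            continue
        curvature = poly(d2, root)
        if curvature > 1e-9:
            kind = "minimum (stable)"
        elif curvature < -1e-9:
            kind = "maximum (unstable)"
        else:
            kind = "degenerate"
        found.append((round(root, 6), kind))
    return found

# V(x) = x^4 - 2x^2, coefficients from x^0 upwards.
singularities = critical_points([0, 0, -2, 0, 1])
```

The result is three singularities, valleys at x = −1 and x = +1 and a summit at x = 0, the maximum allowed for degree 4. Scanning may miss roots of even multiplicity (where V′ touches zero without changing sign), which is acceptable for a qualitative sketch.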

The most interesting case for our subject here is the potential known as the “Mexican hat”, because it allows us to reason qualitatively about the above situations.

The ball in B1 (Figure 4.10) corresponds to a situation of optimal efficiency that we know how to control. If the ball falls to the right or to the left, we always have enough energy to bring it back to position B1, compensating for the fall h1.

Figure 4.10. Potential known as “Mexican hat”. For a color version of this figure, see www.iste.co.uk/printz/system.zip

A position such as B2 is unstable: if the ball, moving due to an imbalance, is not caught in time (the latency problem), it will reach a threshold beyond which the system can no longer mobilize sufficient energy to compensate for the fall h2. The situation then becomes catastrophic.

In the case of the electric system, there are optimal equilibrium positions in which all the energy produced is consumed as close as possible to the production points, and the surplus (e.g. nuclear electricity) is sold to our neighbors through the RTE trading system. If supply exceeds demand, the system is no longer at its optimum, but the service contract is satisfied. If demand exceeds supply, the service must be degraded to restore the equilibrium between supply and demand, or external energy must be bought through the trading system.

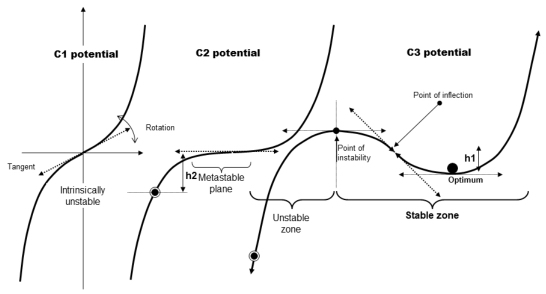

For situations such as the equilibrium between supply and demand in the electric system, there is an asymmetry between supply and demand. If supply > demand, there is always a position where the service contract is respected, even without resorting to trading. If, conversely, supply < demand, the service contract is necessarily degraded. The equilibrium can only be re-established by putting users “in the dark” and/or by buying energy from outside the system. In this case, the potential must reflect this asymmetry, with a potential function of odd degree. Figure 4.11 shows different aspects of a potential of degree 3, including case C3, which corresponds to the situation of the electric system with its intrinsic asymmetry.

Figure 4.11. Evolution of a C3 stable potential towards an intrinsically unstable C1
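The asymmetry between surplus and deficit can be illustrated by a simple balancing rule for one instant. The sketch assumes, as the text describes, that any surplus is exported, while a deficit is covered first by imports, up to a hypothetical import capacity, and then by load shedding:

```python
def balance(supply_gw: float, demand_gw: float, import_capacity_gw: float):
    """Return (exported, imported, shed) in GW for a single instant."""
    if supply_gw >= demand_gw:
        # Surplus: not optimal, but the service contract is kept.
        return supply_gw - demand_gw, 0.0, 0.0
    deficit = demand_gw - supply_gw
    imported = min(deficit, import_capacity_gw)
    # Whatever cannot be imported must be shed: users are put "in the dark".
    return 0.0, imported, deficit - imported
```

With supply at 105 GW and demand at 100 GW, 5 GW are exported and nobody is cut off; with supply at 95 GW, demand at 100 GW and 3 GW of import capacity, 2 GW of load must be shed. Only the deficit side ever degrades the service contract.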

In reality, the form of the potential can change over time and as the situation progresses, taking the hazards into account. This amounts to making the coefficients of the polynomial functions of time or, more generally, of a parameter t that describes the evolution of the internal state of the system along its lifeline. In the case of a potential of degree 3, we would have a function of the following type (the x² term can always be eliminated, which does not change the morphology):

$$V(x, t) = x^3 + a(t)\,x$$

We can also change the degree of the polynomial that describes the morphology, which amounts to analyzing the dynamic parameters of the singularity at the moment the morphology changes. A potential that is intrinsically stable (of even degree) can evolve towards an unstable potential (of odd degree), or towards an intrinsically unstable potential (case C1), as shown in Figure 4.11. If the dynamic parameters of the trajectory (speed, curvature, torsion, in other words, the first- and second-order derivatives) are not continuous, there will be potentially destructive “jolts”, impacts, clicks and/or vibrations.
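The change of morphology of a degree-3 potential can be followed analytically. Taking the normalized cubic V(x) = x³ + a·x (an assumption consistent with the remark that the x² term can always be eliminated), V′(x) = 3x² + a has two zeros when a < 0, a valley and a summit, which merge when a reaches 0 and disappear when a > 0: the system then has no equilibrium left at all. A minimal sketch:

```python
import math

def cubic_singularities(a: float):
    """Critical points of V(x) = x**3 + a*x, i.e. zeros of V'(x) = 3*x**2 + a."""
    if a > 0:
        return []                                  # no valley left: intrinsically unstable
    if a == 0:
        return [(0.0, "degenerate (fold point)")]  # valley and summit merge
    r = math.sqrt(-a / 3)
    # V''(x) = 6x: negative at -r (summit), positive at +r (valley).
    return [(-r, "maximum (unstable)"), (r, "minimum (stable)")]

# As a(t) drifts upwards, the valley becomes shallower and then vanishes.
for a in (-3.0, -0.75, 0.0, 1.0):
    print(a, cubic_singularities(a))
```

The valley at +√(−a/3) and the summit at −√(−a/3) move towards each other as a increases, so the margin that protects the stable state shrinks continuously before disappearing abruptly.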

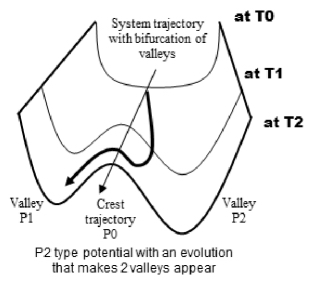

In the most common case, sought after by architects of complex systems, the potential evolves as indicated in Figure 4.12: from a single, intrinsically stable valley, we find ourselves in a system with two valleys (if the potential is of degree 4), each of which represents a different stable optimum, depending on the selected strategy. In systems engineering, preference can be given to the duration of construction, which we wish to be as short as possible, or, on the contrary, to optimal expenditure, which amounts to giving preference to the TCO (Total Cost of Ownership). Here again, qualitative reasoning can reveal thresholds at which the strategy can be changed, given the observed situation. Currently, release onto the market as early as possible is considered the best strategy, despite being the riskiest; this means that, starting from a prudent strategy where expenditure is strictly controlled, we can allow ourselves to shift to a more offensive strategy in terms of time to market, once we are sure that expenditure will not spiral out of control.

Figure 4.12. Doubling of a stable potential valley into two stable valleys

What we have referred to as plan A and plan B in fact correspond to morphological situations prepared in advance. In the real world, this corresponds to situations where the management of a company permanently maintains various strategic plans in order to react in the best possible way to the hazards of the economic “war” arising from the globalization of the economy, where predatory behavior is seen in its purest form. In this case, when a decision is made to go from one plan to another, the work of operational directors consists of managing pursuit trajectories, known in the jargon as “strategic alignment”, while avoiding jolts due to discontinuities in the dynamic parameters of speed, curvature and torsion. Incidentally, this shows why weak signals are of critical importance for managing the competitive advantages of any system in the best possible way (these are second-order magnitudes, often hidden in the background noise, and therefore by definition difficult to perceive without suitable measurement and/or observation instruments, including human ones). It also shows why, in the case of an electric system, making everyone an energy producer is not necessarily a good strategy, given the current dynamics of our system. Similarly, making a latest-generation giant oil tanker or container ship (400 meters long, 40–50 meters wide, with thousands of containers stacked 8–10 levels high) change course too abruptly can completely disrupt its structure and cause it to sink.

The trajectory metaphor allows us to take stock of the control energies applied and to better represent the dynamic phenomena and hazards of which systems are the site.

REMARK.– The theory of dynamic systems in its current state20 is not as easily applicable to the systemics envisaged in this book as it is to modeling the numerical computation codes used to simulate physical phenomena that can be approximated by continuous functions.

All the systems envisaged here, owing to the importance of ICT, are discrete systems whose state changes occur through discrete transitions, modeled by atomic transactions (in other words, a quantum of action) with the theory of automata. A non-trivial adaptation is necessary, and it requires profound knowledge of the domain of systems engineering before any attempt at mathematization that is not excessive; the fundamental question is the approximation of intrinsically discrete phenomena by continuous functions. However, qualitative analysis by simulation, despite its limitations, remains possible, provided the required skills and knowledge are present.

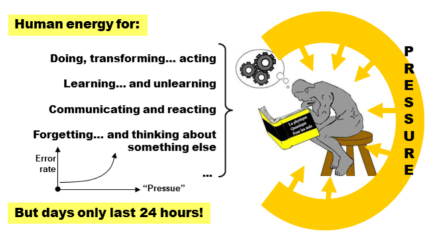

4.2.2. System safety: risks

To end our search for the fundamental invariants of systems, it becomes obvious that this invariance must be sought among the human actors themselves, the users and the engineering teams that give “life” to the system. In fact, human actors divide their time between, on the one hand, (a) learning and maintaining the knowledge required for their specific action, or correcting errors and/or misinterpretations, and, on the other hand, (b) interacting with the members of their community to ensure that their individual action remains coherent from the global point of view of the system and complies with the service contract. Quite obviously, they must also rest and relax, to avoid stress, whose devastating effects on the rate of human errors are well known (see Figure 4.13). If this balance is not respected, sooner or later there will be a disaster, as at Chernobyl.

Figure 4.13. Rodin’s thinker studying Quantum Physics for Dummies

Knowing that human actors make mistakes, individually as well as collectively21, and that the “machine” itself will encounter hazards related to the physics of its constituents (wear of parts, fatigue, etc.) and to the physics of the environment, we see clearly that the fundamental invariant will be the ability to compensate for errors of all kinds that will unavoidably appear during the life of the system.

Here, we come back to the point of view of R. Hamming, who stresses the importance that architects must attach to their own mistakes. A little stress over a limited duration reinforces vigilance and lowers the error rate (this is a pure system safety concept, well known in fault-tolerant architectures, which tolerate errors up to a certain threshold beyond which the system saturates, which is equivalent to a “symmetry breaking”).

Errors are by definition unknown, and the consequences of their occurrence are of a magnitude that we cannot measure, although we are certain that they exist. At best, we can compile statistics about the risks we run, but only after the event.

As for risk itself, it is defined as a mathematical expectation, in other words:

$$R = p \times C$$

in which p is the probability of the feared event and C the cost (severity) of its consequences. In industries that involve risks, such as EDF’s nuclear installations, the scale in Table 4.1 is used (INES scale22).

Table 4.1. The international classification scale for nuclear events, known as the INES scale (International Nuclear Event Scale)23

| Category | Level | Description | Examples/consequences |
|---|---|---|---|
| Deviation | 0 | No safety significance | Not classified because no consequence |
| Incident | 1 | Anomaly | Outside authorized limits |
| Incident | 2 | Simple | Low level of irradiation |
| Incident | 3 | Serious | Irradiation of a few people |
| Accident | 4 | Confined within the site | A fire |
| Accident | 5 | Off-site | Three Mile Island |
| Accident | 6 | Serious | High level of irradiation + death |
| Accident | 7 | Major | Chernobyl; Fukushima-Daiichi |

This classification is only an example. But it is clear that the notion of risk must now be considered more broadly, as we can see with social networks, which can destroy a reputation and/or push people to the brink of suicide, because private life no longer exists. In fact, every system, depending on its operating methods, the maturity of its engineering teams, etc., must have its own risk scale, correlated with its internal organization/architecture and with its environment.
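A system-specific risk scale can be sketched by ranking feared events by their expected cost, taking risk as the product of the probability p of the event and the cost C of its consequences. The events, probabilities, costs and thresholds below are invented for illustration; each system would have to calibrate its own:

```python
def risk(probability: float, cost: float) -> float:
    """Risk as a mathematical expectation: probability of the feared event times its cost."""
    return probability * cost

def level(r: float, thresholds=(1, 5, 10)) -> int:
    """Hypothetical in-house scale: 0 below the first threshold, up to len(thresholds)."""
    return sum(r >= t for t in thresholds)

# Hypothetical events: (name, probability per year, cost in thousands of euros).
events = [
    ("disk failure", 0.05, 20),
    ("datacenter fire", 0.001, 5_000),
    ("operator error on release", 0.2, 50),
]
ranked = sorted(events, key=lambda e: risk(e[1], e[2]), reverse=True)
for name, p, c in ranked:
    print(f"{name}: risk {risk(p, c):.1f}, level {level(risk(p, c))}")
```

The frequent, moderately costly operator error outranks the rare but spectacular fire, which is precisely what an expectation-based scale is meant to reveal.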

With this notion of risks associated with errors, or with malice, we are at the heart of the problem of complexity. One of the actors in the system makes an error (or breaks a law), but depending on the context in which the error is revealed, the consequences can be either catastrophic or non-existent. A good example of this kind of situation is the failure of Ariane 501, the first Ariane 5 flight24. A software fault already present in the Ariane 4 launchers, but masked by their flight dynamics, was revealed in the context of Ariane 5, whose dynamics were different owing to the power of the new launcher’s engines.

REMARK.– The error rate of human actors involved in risk situations has been the subject of a great number of ergonomic studies. It can reach 5–10 errors per hour, depending on the context and the degree of fatigue/stress of the actor. What must be integrated, although it is not easy to admit, is that this rate is never zero; there are always residual faults!
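To see why the rate is “never zero” in practice, one can model errors as a Poisson process with rate λ (a modeling assumption, not a result of the studies cited). The probability of an error-free period of length t is then e^(−λt), which collapses very quickly at the rates quoted above:

```python
import math

def expected_errors(rate_per_hour: float, hours: float) -> float:
    """Mean number of errors over the period."""
    return rate_per_hour * hours

def p_no_error(rate_per_hour: float, hours: float) -> float:
    """Probability of zero errors over the period, assuming Poisson arrivals."""
    return math.exp(-rate_per_hour * hours)

# At 5 errors/hour, a single error-free hour has a probability of under 1%,
# and an 8-hour shift produces 40 errors on average.
print(p_no_error(5, 1), expected_errors(5, 8))
```

Under this assumption, error-free operation cannot be the design target; the target must be the detection and compensation of errors.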

In the language of the physics of unstable phenomena, this is known as a “critical” situation, hence the term criticality used by P. Bak25 and many others. Critical situations are often associated with a phase change. For example, water that normally boils at 100°C can remain liquid at 105°C or 110°C, but at the slightest disturbance, such as an impurity or a weak impact, it vaporizes immediately, which can lead to a destructive explosion. An equilibrium of this kind is called metastable, meaning beyond the normal point of stability. Correctly controlled, as in explosives or combustion engines with a low activation energy, these phenomena are nevertheless very useful to humanity.

In a system, when an error manifests itself and the system detects it, it changes operating mode ipso facto, because the error must be corrected. This is the equivalent of a phase change. The aptitude of the system to maintain its availability, in other words, its aptitude to provide the service we expect from it, is known as system safety, which is measured by availability, a statistical magnitude. This is represented in Figure 4.14.

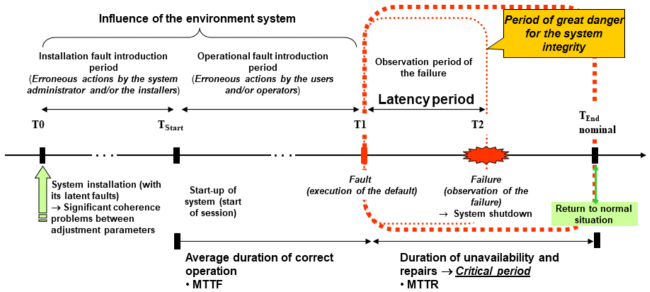

Figure 4.14. The chain of errors. For a color version of this figure, see www.iste.co.uk/printz/system.zip

The critical period runs from instant T1, when the fault is executed and the state of the system begins to degrade, to TFin, when the system recovers its nominal operating mode after repair and users can again use it without risk. The latency is the time that elapses between the execution of the fault and the detection of the failure. MTTF (Mean Time To Failure) and MTTR (Mean Time To Repair) are statistical magnitudes: they measure the mean time of correct operation and the mean repair time, from which the availability D of the system can be calculated:

$$D = \frac{\mathrm{MTTF}}{\mathrm{MTTF} + \mathrm{MTTR}}$$

With this definition, we see that if we had an infinitely effective repair mechanism, that is, MTTR = 0, or an MTTR below the latency threshold of the feedback loop, the availability would always be equal (or perceived as equal) to 100%. Hence the importance of detecting saturation thresholds, whose effect on availability can be devastating, because a system that was controllable becomes randomly uncontrollable.
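Taking availability as D = MTTF/(MTTF + MTTR), the effect of the repair time is easy to tabulate. The MTTF and MTTR values below are illustrative, not taken from the text:

```python
HOURS_PER_YEAR = 365 * 24

def availability(mttf_h: float, mttr_h: float) -> float:
    """D = MTTF / (MTTF + MTTR)."""
    return mttf_h / (mttf_h + mttr_h)

def downtime_per_year_h(d: float) -> float:
    """Expected hours of unavailability per year for availability d."""
    return (1 - d) * HOURS_PER_YEAR

# Same MTTF (1,000 h), faster and faster repair.
for mttr in (10.0, 1.0, 0.0):
    d = availability(1000.0, mttr)
    print(f"MTTR {mttr:>4} h -> D = {d:.6f}, about {downtime_per_year_h(d):.1f} h down per year")
```

Dividing the MTTR by 10 divides the yearly downtime by roughly 10 (from about 87 h to about 9 h), and MTTR = 0 gives D = 1, the limit case discussed above.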

One of the most fundamental invariants of systems, since, as Hamming states, “… in real life noise is everywhere”, and since systems will have to put up with errors, is the presence of a sub-system in charge of general autonomic management and repair, so that the availability of the system is as close as possible to 1, although it will never be strictly equal to 1 over a given period. This had already been identified by N. Wiener and, in particular, by J. von Neumann, who made the first theoretical studies of it. This sub-system must monitor not only the internal state of the equipment and the interactions between the various pieces of equipment, and manage redundancies, but also the environment, to ensure that the conditions for correct operation are fulfilled; for this, the system may cooperate with other systems immersed in the same environment, via interoperability procedures that will be specified in Part 2 of this book. The mission of this sub-system is to avoid disasters and to extend the system’s lifetime as far as possible.

One of the most counter-intuitive aspects of error engineering is the ability of errors to metastasize during the latency period. An error that manifests itself in one element of the system will propagate, generally via the data it manipulates, towards the elements coupled with it, which therefore inherit an incoherent state. The more active the system and the greater the sharing of resources, the faster the propagation. The potentially faulty element must be architected to detect its own errors as quickly as possible, a property known as Fail-Fast, and to confine them, informing its environment of its failure in a language the environment understands, not in that of the faulty element: the information must be reformulated in the “language” of the receiver, which is a transduction (see Chapter 6). Without going into unnecessary detail at this stage, we will add that this latency is closely related to the structure of the confinement spaces (see the regional systems in the case of the electric system); the “size” of a confinement space must be such that this part of the system can isolate itself in the event of a catastrophe, for the survival of the whole. This size is therefore an increasing function of the latency of the feedback loop, hence the importance of the Fail-Fast property and of autonomic management. Here, we again see the fundamental dichotomy, pointed out by J. von Neumann, between internal language and external language. There are dozens of ways in which an element of even modest complexity can break down, but from the point of view of the element’s environment, these translate into only two or three states, for example: done; not done but recoverable; not done and irrecoverable, depending on the means agreed by the architect, it being understood that the details should be recorded in an onboard journal specific to the element.

We will note in passing that the decision logic cannot be a simple yes/no logic, and that the law of the excluded middle will obviously not “work”. A modal logic is required, and a temporal one too, because an alert must generally be processed within a given time, a resource must be available at date T1, not before, and not after, when it is too late, etc.
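The separation between internal and external language can be sketched as follows: a hypothetical Fail-Fast element records the detailed cause of each failure in its own onboard journal, but reports only one of the three coarse states to its environment, and treats a late completion as a failure (the temporal condition). Which exceptions count as recoverable is an architectural choice; the one below is purely illustrative:

```python
import time
from dataclasses import dataclass, field
from enum import Enum

class Outcome(Enum):
    DONE = "done"
    RECOVERABLE = "not done, recoverable"
    IRRECOVERABLE = "not done, irrecoverable"

@dataclass
class Element:
    """Hypothetical Fail-Fast element: rich internal diagnosis, coarse external report."""
    name: str
    journal: list = field(default_factory=list)  # onboard journal, in the internal language

    def run(self, action, deadline_s: float) -> Outcome:
        start = time.monotonic()
        try:
            action()
            outcome = Outcome.DONE
        except (TimeoutError, ConnectionError) as exc:  # assumed transient: a retry may succeed
            self.journal.append(f"{self.name}: transient {exc!r}")
            outcome = Outcome.RECOVERABLE
        except Exception as exc:                        # anything else: stop at once
            self.journal.append(f"{self.name}: fatal {exc!r}")
            outcome = Outcome.IRRECOVERABLE
        # Temporal condition: a result delivered after the deadline is not "done".
        if outcome is Outcome.DONE and time.monotonic() - start > deadline_s:
            self.journal.append(f"{self.name}: deadline of {deadline_s} s missed")
            outcome = Outcome.RECOVERABLE
        return outcome
```

The environment only ever sees an `Outcome`; the reasons stay in `journal`, available for later diagnosis by the element’s supervisor.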

Failing this, the system cannot adapt. Here, we have a precise illustration of the so-called “law” of requisite variety (also called the law of essential variety), formulated by W.R. Ashby26 in a trivial manner (it is a false law), because we have known, at least since Shannon’s coding theory, that a saturated code has, by definition, no resilience, since every possible state of the code has a meaning.

REMARK.– The “law” of requisite variety states that only the variety of the control system can reduce the variety resulting from the process to be controlled; in other words, the control system must never be saturated.
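The statement that a saturated code has no resilience can be checked on the smallest possible example. With 2 bits carrying 4 meanings, every word is valid, so a flipped bit simply yields another valid word; adding one parity bit (the simplest redundancy, used here as an illustration) makes every single-bit error detectable:

```python
from itertools import product

def valid_words(n_bits: int, parity: bool):
    """All n-bit words if the code is saturated, otherwise only even-parity words."""
    words = list(product((0, 1), repeat=n_bits))
    return words if not parity else [w for w in words if sum(w) % 2 == 0]

def detects_all_single_errors(code) -> bool:
    """True if flipping any single bit of any codeword always yields a non-codeword."""
    codeset = set(code)
    for w in code:
        for i in range(len(w)):
            flipped = w[:i] + (1 - w[i],) + w[i + 1:]
            if flipped in codeset:
                return False
    return True

saturated = valid_words(2, parity=False)   # 4 words, all meaningful
redundant = valid_words(3, parity=True)    # same 4 meanings, 3 bits each
print(detects_all_single_errors(saturated), detects_all_single_errors(redundant))
```

Both codes carry the same four meanings; only the one with unused states can tell an error from a message.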

This is the profound reason why, in nature and a fortiori in artificial systems, a correct dose of redundancy is required in suitable places of the system, a redundancy calculated by the architect that depends on the environmental “noise” to which each element is exposed.
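That redundancy must be calculated, and not simply added, can be seen with triple modular redundancy, a classic scheme used here as an illustration (not necessarily the one the author has in mind). With three independent modules of reliability r and a perfect majority voter, the system works if at least two modules work:

```python
def tmr_reliability(r: float) -> float:
    """Probability that at least 2 of 3 independent modules (each of reliability r) work,
    assuming a perfect majority voter: 3r^2(1 - r) + r^3 = 3r^2 - 2r^3."""
    return 3 * r ** 2 - 2 * r ** 3

for r in (0.99, 0.9, 0.5, 0.4):
    print(r, round(tmr_reliability(r), 6))
```

For r = 0.9 the triplicated system reaches 0.972, but for r = 0.4 it drops to 0.352, worse than a single module: below r = 0.5, that is, when the “noise” is too strong, this redundancy is counterproductive.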

Returning to the diagram showing the principle of systems (Figure 4.1), each of the elements constituting the equipment needs to be monitored appropriately, taking into account (a) its function in the system and (b) its spatio-temporal and human environment. The functional structure is, in a sense, duplicated so as to make the autonomic management system explicit, with its own dedicated elements and resources, as shown in Figure 4.15.

Figure 4.15. Elements of the autonomic system. For a color version of this figure, see www.iste.co.uk/printz/system.zip

The functional blocks that make up the system, known as building blocks27 in systems engineering, all have a dual image in the autonomic management system. Each ITG-F gives rise to an ITG-FS to which it is inseparably bound, like Socrates and his daemon. The ITG-FS monitors whether the ITG-F is operating correctly by collecting information through sensors, and may act back on it through effectors, for example by executing certain tests to confirm or reject a probable breakdown hypothesis. The objective of the ITG-FS is to maximize the availability D of the system. Here again, a balance must be found: the autonomic management system must not itself become so complex that it requires its own autonomic management, which would contradict the target objective of simplicity (or of “simplexity”, the neologism introduced by A. Berthoz in his book Simplexity; refer to the authors’ website).
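
The sensor and effector loop described above can be sketched as follows. This is a minimal Python illustration, not the book’s design: the classes SupervisedFunction and Supervisor, the threshold table and the mapping from a suspicious sensor to a confirming test are all hypothetical stand-ins for an ITG-F and its ITG-FS.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SupervisedFunction:
    """Hypothetical stand-in for an ITG-F: exposes sensors and effectors to its supervisor."""
    name: str
    sensors: Dict[str, Callable[[], float]]
    effectors: Dict[str, Callable[[], bool]]  # diagnostic tests; True means the test passed


@dataclass
class Supervisor:
    """Hypothetical stand-in for the paired ITG-FS."""
    target: SupervisedFunction
    limits: Dict[str, float]      # alarm threshold per sensor (assumed values)
    hypotheses: Dict[str, str]    # sensor name -> test that confirms or rejects a breakdown
    log: List[str] = field(default_factory=list)

    def cycle(self) -> str:
        """One supervision cycle: read every sensor, test any suspicious reading."""
        for sensor, read in self.target.sensors.items():
            value = read()
            if value <= self.limits[sensor]:
                continue
            # Suspected breakdown: act through an effector to confirm or reject it.
            test = self.hypotheses[sensor]
            if self.target.effectors[test]():
                self.log.append(f"{sensor}={value}: hypothesis rejected by {test}")
            else:
                self.log.append(f"{sensor}={value}: breakdown confirmed by {test}")
                return "breakdown"
        return "ok"
```

Running the test before declaring a breakdown is what keeps a transient sensor excursion from being reported as a failure; the log is the raw material for the learning loop discussed below.
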

Autonomic management is therefore a full participant in the information system, as Figures 4.4 and 4.15 show.

In the simplest case, this ITG-FS is an MMI (man–machine interface), and it is up to the human operator to make the required decisions, given their knowledge of the context of the monitored function, via the control post (see Figure 5.1). If the human operator is not properly trained and not themselves monitored by their peers, disaster is certain in high-risk systems.

REMARK.– The pair ITG-F ⇔ ITG-FS forms a learning loop that gives operators a better understanding of the system’s behavior.

From this new knowledge, new autonomic management models can be derived, or existing models adapted, possibly by also adapting the functional part, so as to optimize this fundamental capacity28, which contributes to the robustness of the whole. This is a good example of PAC (probably approximately correct) logic in application.
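
The book gives no formula for this learning loop, but the “probably approximately correct” idea can be made concrete with the classic bound for a learner choosing among a finite set H of candidate models: m ≥ (ln|H| + ln(1/δ))/ε observations suffice for a model consistent with all of them to have error at most ε with probability at least 1 − δ. Reading H as a set of candidate autonomic management models is our own hypothetical mapping; the Python sketch below simply evaluates the bound.

```python
from math import ceil, log


def pac_sample_size(hypotheses: int, epsilon: float, delta: float) -> int:
    """Observations sufficient for a consistent learner over a finite hypothesis set.

    Realizable case: m >= (ln|H| + ln(1/delta)) / epsilon. With probability at
    least 1 - delta, any hypothesis consistent with m observations has error
    at most epsilon.
    """
    if hypotheses < 1 or not (0 < epsilon < 1 and 0 < delta < 1):
        raise ValueError("need at least one hypothesis and 0 < epsilon, delta < 1")
    return ceil((log(hypotheses) + log(1 / delta)) / epsilon)
```

For instance, choosing among 1,000 candidate models with ε = 0.05 and δ = 0.01 requires 231 observed incidents; the dependence on |H| is only logarithmic, while halving ε doubles the number of observations.
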

- 1 Much more so than the so-called “law” of essential variety coined by W.R. Ashby, because the theory of the noisy channel allows a constructive approach to the coding problem, while guaranteeing that suitable codes exist.

- 2 The Tera-100 machine delivered by Bull to the CEA for digital simulations has approximately 240,000 Intel processors. Latest-generation video game consoles have several dozen processors.

- 3 Commonly called the Internet of Things (IoT).

- 4 The interface of the IBM 360 systems, and of their associated machine code, marks an important date in the history of ICT; it served as a model for many others, for example the byte code of the Java virtual machine in the 1990s.

- 5 Refer to our two latest works: Architecture logicielle, 3rd edition, Dunod, 2011 and Estimation des projets de l’entreprise numérique, Hermes-Lavoisier, 2012.

- 6 Refer to the Military Standard, Common Warfighting Symbology; also used by the NATO community; refer to http://en.wikipedia.org/wiki/NATO_Military_Symbols_for_Land_Based_Systems.

- 7 What biologists have called the “all-genetic” view since the publication of Jacques Monod’s work is in the process of being revised; refer, among others, to the works of H. Atlan, M. Morange and J.-C. Ameisen.

- 8 H. Atlan expresses himself in a somewhat similar way in his latest work, Le vivant postgénomique, Odile Jacob, 2011; refer to Chapter 4, p. 134. Refer especially to P. Kourilsky, Le jeu du hasard et de la complexité, Odile Jacob, 2015.

- 9 For more information, refer to E. Mach, La mécanique, and R. Dugas, Histoire de la mécanique, both reprinted by J. Gabay.

- 10 For a non-technical presentation of these laws, refer to the work by C. Gruber and P.-A. Martin, De l’atome antique à l’atome quantique, Presses polytechniques et universitaires romandes, 2013, in particular, Chapter 5, “Les grands principes de conservation, symétrie et invariance”.

- 11 Refer to the work by Y. Kosmann-Schwarzbach, Les théorèmes de Noether : invariance et lois de conservation au XXe siècle, Éditions de l’École polytechnique, 2011.

- 12 Refer to J. Baggott, Higgs: The Invention and Discovery of the God Particle, Oxford University Press, 2012; translated into French, La particule de Dieu, published by Dunod; with homage to Emmy Noether.

- 13 See his text, Information, Physics, Quantum: The Search for Links; refer also, for those who are not afraid of quantum physics, to: Quantum Theory and Measurement, Princeton University Press, 1983, and his article “Law without law”.

- 14 A formulation with which John von Neumann would probably not have agreed, because in a complex universe uncertainty or ignorance (refer to the Black Swans) is everywhere; logic is, at the very least, modal. From the 1980s onwards, computer scientists developed so-called “temporal” logics to take this more complex and more stable reality into account.

- 15 Refer to J.L. Austin’s work How To Do Things with Words, 1962; French translation, Quand dire, c’est faire, Le Seuil, 1970.

- 16 By way of example, refer to NATO message systems; many references can be found by searching for “ADatP-3 military messaging”.

- 17 Refer to http://en.wikipedia.org/wiki/High-frequency_trading, for an introduction to this new means of speculation; also refer to J.-F. Gayraud, Le nouveau capitalisme criminel, Odile Jacob, 2014.

- 18 Hence the somewhat strange term “capability logic”, used in the communities working on systems where this problem arises; the invariant to be guaranteed is the capacity of the system, in terms of resources, to interact in order to carry out its assignment.

- 19 Refer to https://en.wikipedia.org/wiki/Apollo_13.

- 20 For an initial introduction, refer to R. Thom, Stabilité structurelle et morphogenèse (difficult); V.I. Arnold, Catastrophe Theory; a classic: J.-M. Souriau, Structure des systèmes dynamiques (and his website); P. Bak, How Nature Works. Refer especially to the work of J.-L. Lions and his numerous students. Also refer to the work of J.-J. Slotine at MIT, Applied Nonlinear Control.

- 21 Refer, in particular, to the impressive analysis of the Challenger space shuttle disaster, D. Vaughan, The Challenger Launch Decision – Risky Technology, Culture, and Deviance at NASA, The University of Chicago Press, 1996.

- 22 Refer to https://en.wikipedia.org/wiki/International_Nuclear_Event_Scale.

- 23 Refer to https://en.wikipedia.org/wiki/International_Nuclear_Event_Scale.

- 24 There has been abundant documentation on this. Refer, for example, to http://sunnyday.mit.edu/nasa-class/Ariane5-report.html.

- 25 Refer to his work, cited previously, How Nature Works.

- 26 Refer to his work An Introduction to Cybernetics, 1st edition, 1956. Ashby was a biologist and a psychiatrist.

- 27 In his work The Logic of Life, the biologist and Nobel prize-winner François Jacob used the term “integron” to explain the hierarchical organization of living things; it has the same meaning as building blocks, but applied to living things.

- 28 Concerning this aspect, refer to the remarkable work by L. Valiant, Probably Approximately Correct – Nature’s Algorithms for Learning and Prospering in a Complex World, Basic Books, 2013.