8

Interoperability

The concept of interoperability is at the very center of the justification for writing this work. The emergence of this new concept dates back some time: in a timeframe of scarcely 10 years in the 1980s we witnessed a progressive, though rapid, replacement of “large” mainframes and their environment of thousands of “dumb” terminals, in the sense that the user cannot program them – they can only be configured – by groups of machines of varying power that are organized to cooperate in architectures described as clients/servers. Communications networks are at the center of these new groups, an architecture that for a while was described as Network-centric to really emphasize its importance.

The processing power that until then was concentrated in the central system, with up to 8 or 16 processors, but not many more for performance reasons, was from then on able to distribute itself across all equipment containing a programmable processor, thanks to the microelectronics and VLSI (very large-scale integration [of components]) revolution. Terminals were replaced by workstations and/or PCs, which came into use at that time; these are individual workstations that are all programmable. The central system was split up over potentially tens or hundreds of servers that can specialize as data servers, application servers, safety and autonomic manager/back-up servers, personnel directory and authentication servers. The power provided, as long as the software architectures allow it, is virtually unlimited, or “on demand” as they say, as a function of the requirements of users, who could also choose to obtain their equipment from a different supplier. The computer science platform becomes heterogeneous; a heterogeneity that it will be necessary to manage in order to guarantee coherence and reliability from start to finish, and to facilitate migrations.

For both system users and designers, this is a conceptual revolution. Applications that were initially designed for a given type of hardware must increase in abstraction to become more general and install themselves on the resources available on the platform. In applications, a separation is required between what is specific to the platform and what can be separated from it because it is specific to the application, which has its own separate operation. Interconnection capacities become generalized or more specialized, as a function of the requested information flows and safety problems. Transactional systems initially designed to interconnect the terminals and the databases in the central systems have undergone a major change and have become generalized exchange systems, with suitable protocols constructed above the ISO and/or TCP/IP layers, which will allow a massive interconnection, in asynchronous real time: these are middleware, or even ESBs (enterprise service buses), and include numerous services such as those that guarantee system safety and autonomy.

This is, as they say, the right moment for architects, who will have two related roles: organizing this new set by means of abstractions that are independent of the platform’s physical structures, in other words the semantic, the what of the functions that fulfill the users’ requirements; and organizing the platform so that it can develop and adapt to the offers on the market while complying with the service contract that was negotiated with the users, in other words the how. In order to satisfy these requirements, the architects will have to invent new tools and new methods to organize the complexity caused by these evolutions, including those of the exchange model and the associated languages, which become the central structuring element of this new state of affairs with virtually limitless possibilities, restricted only by our own capacity to organize ourselves to ensure that correct use is made of it.

We will outline details of this in the two fundamental chapters, 8 and 9.

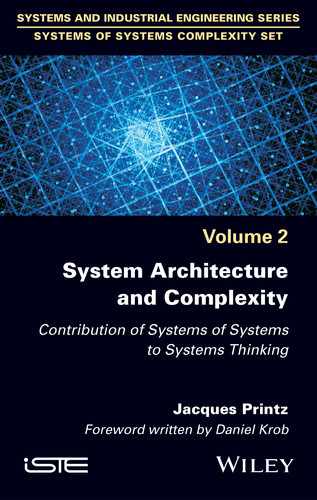

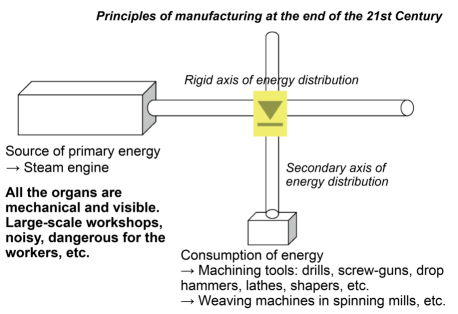

The revolution of the interoperability of systems: to begin with, a diagram (Figure 8.1) and the image of a control which implements J. Watt’s governor (Figure 8.2).

Figure 8.1. Mechanical and human interoperability1

Figure 8.2. Interoperability with an end purpose

The interoperability of systems is a new term, forged in the 1980s and 1990s in systems engineering environments. It refers to things that are old and already familiar, although we did not necessarily know in detail how they worked or how to master their engineering. The problem of interoperability is the organization of the cooperation between organisms and/or equipment of all kinds with an objective in sight, focused on action, that none of them could reach if they remained isolated. This is fundamentally a semantics problem.

Living organisms cooperate and provide each other with mutual services which improve their survival capacity. In biology, these are all symbiosis mechanisms which play an essential role – for example, the symbiotic relationship between pollinating insects and flowering plants. We ourselves are dependent on this because there is a symbiotic relationship between our digestive metabolism and the billions of bacteria that we house in our intestines and without which full digestion would be impossible.

In human systems, extended by tools of all kinds invented over the course of the ages by our ancestors, there are also all kinds of symbiotic relationships and cooperations that we are all familiar with. J. von Neumann, as we recalled in Chapter 1, invented game theory specifically in order to model certain fundamental behaviors that structure the interactions between economic agents. This theory, despite its limitations, has spectacularly advanced our understanding of the basic mechanisms that structure the exchanges between organizational actors and/or humans, as demonstrated by the number of Nobel Prizes for Economics that have been attributed to economists that have used it, such as the two Nobel Prizes awarded to French economists, M. Allais, more than 30 years ago, and in 2014, J. Tirole, both of whom were experts of this theory. For the US, this concerns at least 10 to 15 economists, such as Thomas Schelling from RAND, and many others.

In the artificial systems that are at the center of this work, because we have total control over the engineering, the cooperation between the constitutive equipment and/or the human operators that command and control them is relatively old, as shown in Figures 8.1 and 8.2, but with very serious limitations due to the technology that was available.

Figure 8.1 represents the principle of manufacturing at the end of the 19th Century.

The true rupture, after the generalization of electricity in the 1920s–1930s, came in the 1990s, when each piece of equipment was fitted with one or several computers; these items of equipment were then able to interact with each other and exchange information via wired and/or wireless (radio) connections, thus turning into reality one of the dreams maintained by N. Wiener and his colleagues in early cybernetics. The transfer function is completely dematerialized and becomes a pure abstraction carried out, or reified, by a “program” in the computing sense of the term.

Awareness is wide-reaching but we can say without insult to the past that it is focused on defense and security systems, like a reminiscence of the firing systems that had been the source of the reflections of N. Wiener and the continuation of the SAGE and NTDS2 systems. All these systems operate in coordination with each other and constitute what has been called a system of systems3 since the 1990s–2000s, which are interlinked in such a way as to constitute a new organized collective entity which is capable of fulfilling assignments that none of the systems could carry out separately when taken individually, where the interaction could, as in the case of the centrifugal governor, also be operational but this time totally dematerialized. We are in a case of emergence, in the strictest sense of the term, emergence organized by the architect of the overall system which must control all its aspects, including and very obviously the undesirable aspects, human errors or not, breakdowns, environmental hazards, malice, which will most certainly occur.

With these systems as a common theme, we are going to present the problem of interoperability, a problem that is today widespread, which has become significant given the massive computerization of equipment of all kinds, of companies and of society, that we have been experiencing since the 2000s.

8.1. Means of systemic growth

A good way to present the problem of the interoperability of systems is to start with the notions introduced in Chapters 5 and 9, in the logic that belonged to G. Simondon (in particular, see Figure 2.4). The technical system/object is made up of its communities of users/end-users and its technical/physical component, itself comprising a “hard” material part, also known as brick and mortar, and a “soft” programming part, in other words the software, which makes its first appearance in systems from the 1950s onwards, with suitable human/machine interfaces. It forms a coherent, or supposedly coherent, whole at a given instant in its history. As we have briefly discussed in Chapters 6 and 7, all this moves and is transformed at a slower or faster speed, given the evolution of the spatio-temporal environment which surrounds all systems. We have characterized this environment using the acronym PESTEL (see section 8.3.3), which allows us to grasp the main constraints of evolution to which the systems that we are interested in here are subject, independent of the “fight for life” aspects of technical systems between themselves, rendered remarkably well by M. Porter’s well-known diagram describing the forces4 that structure this evolving dynamic and the competitive advantages that result from it when everything is neatly organized. In many ways, this evolving dynamic presents numerous analogies with what we can observe in the living world, but for the sake of methodological rigor, we will not use metaphors from something more complex, namely the living world, to “explain” something that is less complex5, the world of our artificial systems, even though these are now indissociable from their human components, as pointed out by G. Simondon.

What is remarkable in the current evolution of these systems is the “real time” component, which becomes important and is present everywhere thanks to the technical capacities of the means of communication and to the intermediary software known as “bus” software or middleware. Compared with the systems known to the “founding fathers” and their successors, including J. Forrester with his model describing the means of industrial growth6 in the 1960s–1970s, the adaptive cycle has become sufficiently short/flexible for us to talk properly about real time (in marketing jargon the word “agility” is used, which from the point of view of engineering does not mean anything; what is important is the how). Real time must be taken here in its true sense, meaning compliance with a time schedule, which is determined by the adaptation capacities of the entities present and their situation in the system’s environment. A community of users will react as a function of the interaction capacities that are specific to this community and the motivations of its members, quality requirements included, which thus defines a kind of quantum, in length and/or in transformation capacity, that characterizes it (a human “energy” expressed in hours worked, unless there is a better measure of human action in the projects7).

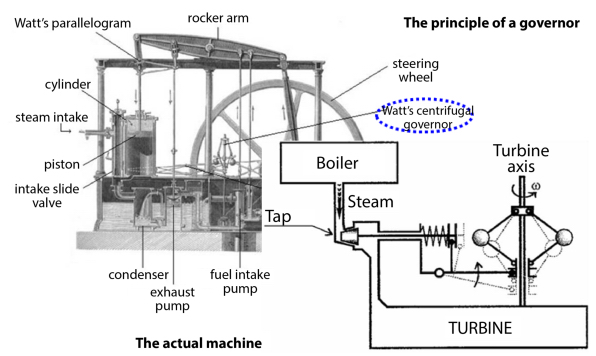

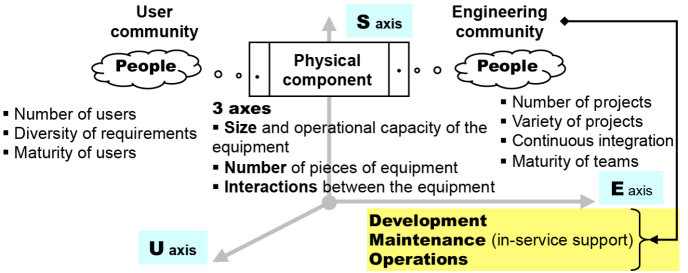

Figure 8.3 demonstrates the symbiotic aspects that are specific to the analysis made by G. Simondon. If for whatever reason the connections that maintain this symbiosis, in other words the system reference framework, break apart, then the system disappears progressively from the landscape. The system and its constitutive communities are plunged into an environment where, on the one hand, the uses evolve and, on the other hand, technological innovation is going to create new opportunities, therefore leading to a competition between existing systems and/or systems to be created, according to the classical diagrams set out by M. Porter in his works8.

Figure 8.3. Dynamics of a technical system. For a color version of this figure, see www.iste.co.uk/printz/system.zip

In a certain way, we can say that the system is “fed” by its environment, either by new users who see an advantage for themselves in using the system, or by new functions made available to the users by the community that provides the engineering, which must therefore be equipped to ensure that what it does complies with the expressed, or latent, requirements of their communities of users. Regardless of the service contract, the ternary relationship {users, hard/soft physical system/component, engineering/end-users}, Simondon’s triplet {U, S, E}, is indeed symbiotic.

Understanding of the dynamics of the set, quality included, and of its organization is essential to guarantee stability and longevity of the system considered as a whole, including its sociodynamics. We will now analyze this in detail.

8.2. Dynamics of the growth of systems

If we take the example of an electrical system, we see that the growth of the physical component of the system is firstly induced by the concomitant increase in the number of users and the requirements created by this new technology, which was revolutionary for its time, it being understood that the engineering and technology that are put in place are going to be able to, and have to, accompany or even anticipate this growth. In the case of an electrical system there will simultaneously be, regarding the physical component: (a) an increase in the intrinsic capacity of production plants, up to the latest 1,630-megawatt EPR nuclear reactors (for Flamanville); (b) an increase in the number of production plants; (c) complete progressive interconnection of the transport network. To adapt supply to demand, a whole range of equipment with a variety of powers and capacities must be available, in such a way as to adjust them as finely as possible, as was briefly explained in the case study of the electrical system. The variety of the equipment also induces a form of redundancy which will play a fundamental role in guaranteeing the system’s service contract, via the quality system, considered here to be indissociable from the system itself, and the associated engineering, with among others the aspect of system safety that is essential for the survival of the overall system and its parts.

From this example, we can infer a general law that we will come across again in the physical component of all systems:

Physical growth takes place in three ways: (1) growth in size/power of the items of equipment that constitute the system; (2) growth in the number of items of equipment; (3) growth in the capacity of the items of equipment to interact, in order to optimize the available resources.

The no. 1 factor of growth comes firstly from the users’ demand rather than the supply. A new offer resulting from a variety of innovations, but which does not at one time or another correspond to a requirement expressed by the users, cannot in itself cause dynamic growth. Once this initial dynamic has set in, the engineering and technology will trigger their own related dynamic of development, exploitation/operation and maintenance to support both the supply and the demand, notwithstanding their intrinsic limitations that we will analyze in section 8.4.

We can therefore represent the phenomenology of the growth of systems in Figure 8.4.

Figure 8.4. Phenomenology of the growth of systems

Studying the “growth” of a system therefore comes down to studying the interactions and reciprocal influences between the three components of the symbiotic relationship that defines the system. Each of the components, according to its own specific logic, is subject to ageing/wear phenomena which generally follow the Lotka–Volterra S dynamic which we mentioned in Chapter 7. In each of these components there is an aspect that, for lack of a better term, can be described as “mass”, with which a characteristic “inertia” will be associated. A community with many members, a weak structure and little coherence will, all other things being equal, be difficult to put into motion in a given direction; the forces and the energies needed to correct the trajectory will be more intense and, due to this, more difficult to control.
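The S-shaped dynamic referred to above can be illustrated with a logistic curve. This is a minimal sketch that assumes the “S dynamic” behaves like a standard logistic function; the parameter names `capacity`, `rate` and `midpoint` are illustrative and do not come from the text.

```python
import math

def logistic(t, capacity=1.0, rate=1.0, midpoint=0.0):
    """Value at time t of an S-shaped (logistic) curve that saturates at `capacity`."""
    return capacity / (1.0 + math.exp(-rate * (t - midpoint)))

# Sample the curve: slow start, fast middle phase, saturation near the capacity.
for t in (-6, -3, 0, 3, 6):
    print(f"t={t:+d}  level={logistic(t):.3f}")
```

The larger the `capacity` (the “mass” of the component), the longer the curve takes, at a given rate, to come close to saturation, which is one simple way to picture the inertia mentioned in the paragraph.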

Intuitively, it appears to be obvious that any system must comply with a structural invariant which defines an equilibrium between the three components, thus allowing it to “hold firm” when faced with PESTEL environmental hazards, in particular everything related to system safety. This invariant defines in a certain way a conservation law that maintains the system in an operational condition as long as there is compliance with this fundamental invariant, in other words as long as the constraints which define it are complied with.

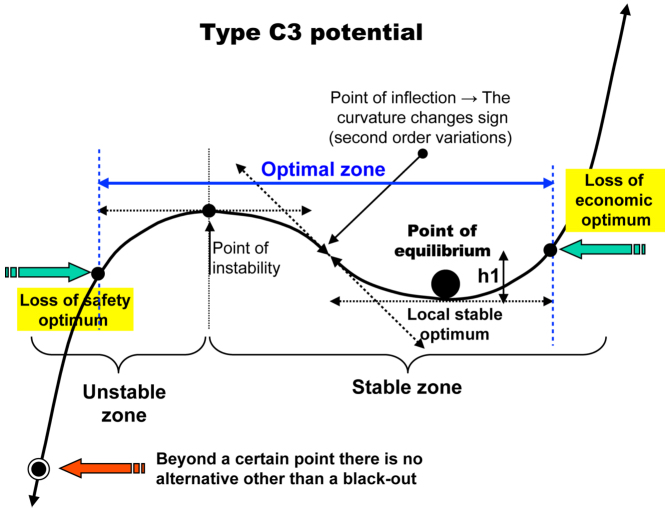

To complete what has already been written in Chapter 4, in the case of an electrical system we have a C3 type potential that must be interpreted as indicated in Figure 8.5.

Figure 8.5. Interpretation of the lines of potential. For a color version of this figure, see www.iste.co.uk/printz/system.zip

If the energy requirements of users cannot or can no longer be fulfilled, for any reason whatsoever (loss of equipment, fluctuation in climate which affects the “green” component of the system), the supply/demand point of equilibrium will move to the left of the risk diagram and move out of the optimal zone. If nothing is done by the operators running the system, who are part of the engineering teams, the autonomous security component will abruptly and without warning shed load, disconnecting users to preserve the equipment that is at risk of destruction due to the ensuing disequilibrium.
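The automatic protection described above can be sketched as a load-shedding rule. This is only an illustration under stated assumptions: the `Load` type, the priority convention (a higher number is disconnected later) and the figures are hypothetical, and real grid protection is far more involved.

```python
from dataclasses import dataclass

@dataclass
class Load:
    name: str
    demand_mw: float
    priority: int  # hypothetical convention: higher number = disconnected later

def shed_loads(loads, supply_mw):
    """Return (kept, shed) so that the total demand kept does not exceed supply."""
    kept = sorted(loads, key=lambda load: load.priority, reverse=True)
    shed = []
    while kept and sum(load.demand_mw for load in kept) > supply_mw:
        shed.append(kept.pop())  # drop the lowest-priority load still connected
    return kept, shed

loads = [Load("hospital", 40, 3), Load("factory", 120, 2), Load("district", 80, 1)]
kept, shed = shed_loads(loads, supply_mw=170)
print([load.name for load in kept], [load.name for load in shed])
```

With 240 MW requested and 170 MW available, the sketch disconnects the lowest-priority load first and stops as soon as demand fits within supply.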

If the physical component and the engineering teams are overdimensioned, there is no more security risk (even though the complexity related to the number is in itself a security risk if it is not properly organized), but an economic risk can appear because the cost of production becomes too heavy. If the users are in an “open” energy market, they can then go and look elsewhere, which further increases the disequilibrium.

Taking into account the inertia of these various components (10 years of construction works for a nuclear production plant such as Flamanville, or for an EHV line, up to 700 kilovolts), we understand why we must not attempt to get ahead of ourselves by making inopportune decisions which, given the inertia involved, will turn out to be fatal 10 or 15 years later, therefore too late, when equilibrium can only be re-established with difficulty (see section 7.2.3).

In large-scale systems, it is generally very difficult to obtain an analytical expression of the optimum of a system and its invariant, but well-constructed systemic modeling can provide a qualitative expression, which is already better than nothing. Failing this, it will be intuition or worse – the political-economic constraints, indecision and/or wishful thinking of the “communicants” focusing on short term constraints – that will prevail, with the risks that are known (refer, among many other possible examples, to the subprime mortgage crisis and the economic and social consequences that followed; or even Chernobyl, the power station managed by a geologist who was academically gifted but incompetent on the subject of nuclear risk).

8.2.1. The nature of interactions between systems

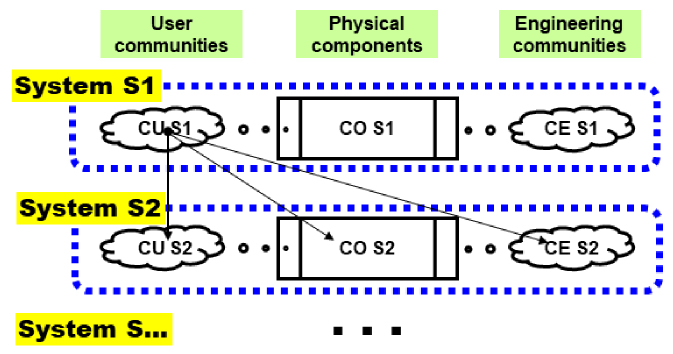

When two systems get close to each other, for any reason (mutualization and specialization of systems; pooling of resources that can be shared; fusion of companies; integration of subcontractors into the “extended” company; multinational combined operations in the case of C4ISTAR Defense and Security systems; etc.), the systemic situation of their relationships must be represented as it is in Figure 8.6.

Situations of this kind occurred towards the end of the 1980s, then became generalized in the 1990s when they changed scale with the large-scale spread of processing of all kinds arising from the development of information and communication technologies (ICTs, and from now on, in the near future, NBICs with “intelligent connected objects” and 5G).

Figure 8.6. Interoperability of systems

Making systems interact with each other obviously requires organization of the interactions of the physical component with the networks, as some had already understood correctly in the 1970s, but it especially involves making the communities of users and the engineering teams interact, in other words the behaviors, uses, methods, expertise, etc. which had brought the whole semantic problem to the forefront of concerns and which had not been distinguished as such until that time. This was a true shock for many, and a true rupture for all.

If we only consider the combinatorial aspect, each of the three components of a system S1 will move towards the three components of S2, and inversely, in other words a total of 18 arrows (2 × 3 × 3) when two systems approach each other, with each arrow materializing a type of flow, which can be expressed in several physically different flows. In the case of three systems or more, all the parts (subsets of systems) must be considered, which means that the combinatorial “explodes” exponentially and becomes totally unmanageable if the architect of the whole set doesn’t manage to organize the interactions by means of a suitable global architecture. However, the architect must agree to take on this role.

REMARK.– If a set includes N elements, the set of its parts grows as $2^N$; if this new set is reinserted as a part, the combinatorial becomes $2^{2^N}$, and so on, with a further exponentiation at each iteration: $2^{2^{2^N}}$, etc.
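The counting argument above can be checked numerically. The sketch below assumes three components per system, as in the {U, S, E} triplet, and counts one directed arrow per ordered pair of components belonging to different systems; the function names are illustrative.

```python
def pairwise_arrows(n_systems, components=3):
    """Directed arrows between components of distinct systems."""
    return n_systems * (n_systems - 1) * components * components

def groupings(n_systems):
    """Number of subsets that contain at least two systems."""
    return 2 ** n_systems - n_systems - 1

for n in (2, 3, 5, 10):
    print(n, pairwise_arrows(n), groupings(n))
```

For two systems this gives the 18 arrows of the text. The pairwise count grows only quadratically; it is the number of possible groupings of systems, taken from the set of the parts, that grows exponentially.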

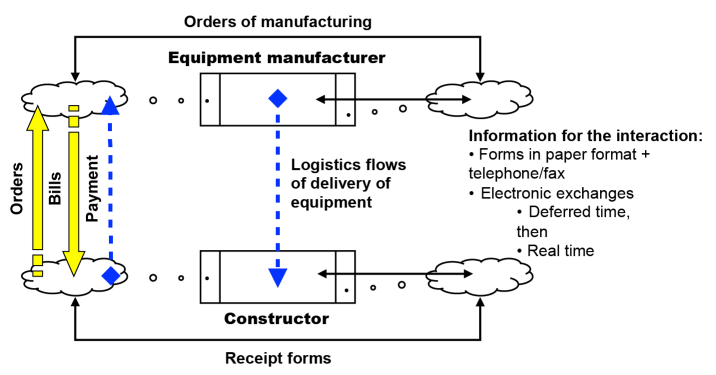

In the example of the extended company, automobile constructors have developed an interaction with their equipment manufacturers in such a way as to operate “just-in-time”, which allows them to eliminate costly equipment storage on each side, but ties the company, in all senses of the word, to their equipment manufacturers and makes it dependent on correct operation of the transport system for logistics flows. Quality of service becomes essential. The block diagram of this interaction is represented in Figure 8.7.

Figure 8.7. Interactions in the extended company. For a color version of this figure, see www.iste.co.uk/printz/system.zip

Logistics flows and financial flows for payments are added to traditional information flows of paper order/billing forms, all of which progressively become totally electronic, or are doubled by electronic flows that allow the status of the logistics flow to be visualized in real time. This is what integrated industrial management software (ERP, MRP (manufacturing resource planning)) does with high precision: the progression of orders/deliveries is monitored in real time by the transporter thanks to geo-localization systems. The logistics flows associated with payment flows constitute transactional flows which guarantee that for all equipment deliveries, there is a corresponding payment that balances out the transaction. The coherence of these flows is fundamental because this guarantees that the company balances its books and makes a profit.
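The coherence requirement between delivery flows and payment flows can be sketched as a reconciliation check. This is a simplified illustration: the order identifiers and amounts are invented, and amounts are held as integers (for example cents) to avoid rounding issues.

```python
from collections import Counter

def unbalanced(deliveries, payments):
    """Return the order ids whose delivered amount differs from the paid amount."""
    delivered = Counter()
    paid = Counter()
    for order_id, amount in deliveries:
        delivered[order_id] += amount
    for order_id, amount in payments:
        paid[order_id] += amount
    # A Counter returns 0 for a missing key, so an unpaid delivery shows up too.
    return sorted(o for o in set(delivered) | set(paid) if delivered[o] != paid[o])

deliveries = [("PO-1", 500), ("PO-2", 300), ("PO-3", 200)]
payments = [("PO-1", 500), ("PO-2", 250)]
print(unbalanced(deliveries, payments))
```

An empty result means that every delivery is balanced by a payment, which is the transactional property the paragraph describes.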

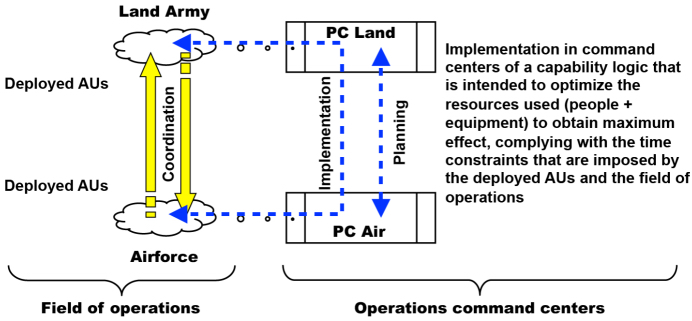

In the case of C4ISTAR defense and security systems, the highly simplified block diagram of an air–land operation is constructed as in Figure 8.8, where each of the represented French land army and air force communities is itself composed of sub-communities that represent the whole range of professions that are required for highly complex operations. One can refer to the various scenarios of projections for the armed forces that were described in the last two white papers, available on the government websites of the French Ministry of Defense.

Figure 8.8. Interactions in C4ISTAR systems. For a color version of this figure, see www.iste.co.uk/printz/system.zip

In the real case of the French land army and air force as physical components (PC), the systems that are specific to each of the components can be counted in dozens and total millions of lines of code. These are systems that equip all active units (AUs), ranging from an individual infantryman with the FELIN system, land vehicles, Rafale airplanes and others still, to the systems that equip the decision-making centers and which provide the link with political power. Since France is a senior nation in NATO, the system must be able to talk to the specific systems of the various nations that are participating in the operation. The capability logic guarantees coherence of management of resources, in the sense that a resource that has been attributed to an AU, or consumed by an AU, is no longer available for other AUs, until it is returned in the case of a temporary loan, or replaced by an equivalent resource. Capability logic therefore necessarily integrates a transactional logic which takes into account the problem of re-supply9.
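The capability logic described above can be sketched as a small resource pool. This is a minimal illustration, not a description of any fielded system: the class name, method names and resource identifiers are all hypothetical.

```python
class ResourcePool:
    """Tracks which active unit (AU) currently holds each resource."""

    def __init__(self, resources):
        self.available = set(resources)
        self.held = {}  # resource -> AU currently holding it

    def allocate(self, resource, unit):
        """Attribute a resource to an AU; it is then unavailable to the others."""
        if resource not in self.available:
            raise ValueError(f"{resource} is not available")
        self.available.remove(resource)
        self.held[resource] = unit

    def give_back(self, resource):
        """End of a temporary loan: the resource becomes available again."""
        del self.held[resource]
        self.available.add(resource)

    def consume(self, resource, replacement=None):
        """The resource is used up; re-supply may provide an equivalent one."""
        del self.held[resource]
        if replacement is not None:
            self.available.add(replacement)

pool = ResourcePool({"truck-1"})
pool.allocate("truck-1", "AU-A")
print(pool.available, pool.held)
```

A second `allocate("truck-1", ...)` raises an error until the resource is returned or replaced, which is the done/not done behavior that a transactional logic has to guarantee.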

8.2.2. Pre-eminence of the interaction

The remarkable thing about the recent evolution of systems due to the importance of ICT is that interactions that implement flows of materials and/or energy (see Figure 4.4) are duplicated in a set of information flows that need to be managed as such and which will constitute a “virtual image” of this physical reality.

This virtual image only has meaning if all the elements that make it up are “signs” of something in reality; speaking like a linguist, these are signifiers whose signified is a very specific physical reality. Operating on one (refer to linguists’ “performative”) is equivalent to operating on the other, and reciprocally, on the condition that the signs of the virtual image remain perfectly coherent with physical reality. The interaction, in other words the way in which the actions carried out connect and balance each other out, takes precedence over the action undertaken (a change of state, an adaptation/transformation of reality), in the same way as in linguistics it is the relationships between the terms of the language that provide a framework for the meaning of the phrase, at least in Indo-European languages.

As explained in Chapter 5, this grammar of actions, here in the context of a system of systems, is essential for the stability of the whole set that is constituted in this way. This is exactly what Wittgenstein said in aphorism 3.328 of the Tractatus Logico-Philosophicus, “If a sign is useless [in other words, if it represents nothing], it is meaningless”; we note that the aphorism is an excellent definition of the semantic.

The systemic analysis of a situation must therefore indicate as clearly as possible the rules of correspondence between signs and reality, because the rules guarantee that reasoning on the basis of a virtual image is the equivalent of reasoning on the basis of a real situation. This is the sine qua non of the effectiveness of action. If the coherence is broken for any reason, we no longer know on what basis we are reasoning. We then risk reaching an absurd conclusion, to use the phrase of medieval philosophers, “ex falso sequitur quodlibet” (from falsehood, anything follows); hence the importance of security/safety for all these systems, because what is real is only understood via its virtual image, which can be manipulated/altered either voluntarily or involuntarily.
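The coherence condition between the virtual image and reality can be pictured as a simple comparison of recorded and observed states. The sketch below is purely illustrative: the dictionaries, keys and state labels are invented, and a real system would obtain the observed states from sensors or reports.

```python
def incoherences(virtual_image, reality):
    """Return the signs whose recorded state differs from the observed state."""
    keys = set(virtual_image) | set(reality)
    return {
        key: (virtual_image.get(key), reality.get(key))
        for key in keys
        if virtual_image.get(key) != reality.get(key)
    }

virtual_image = {"bridge-12": "open", "unit-7": "at depot", "line-3": "in service"}
reality = {"bridge-12": "closed", "unit-7": "at depot"}
print(incoherences(virtual_image, reality))
```

Any non-empty result marks a place where reasoning on the image no longer amounts to reasoning on the real situation, whether the cause is an error, a breakdown or a deliberate alteration.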

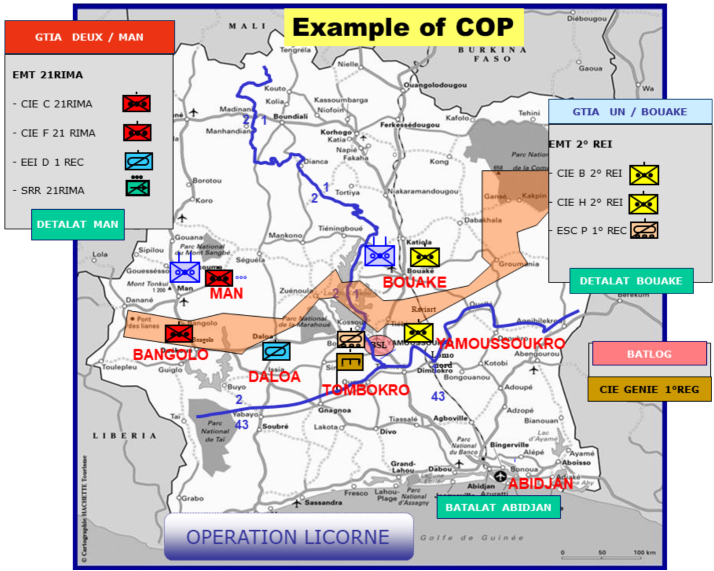

In the case of the C4ISTAR, this virtual image has even received a name: the COP, in other words the common operational picture, in NATO jargon. Figure 8.9 gives an example of symbolic representation, in other words a virtual image, using signs taken from the situational representation standards10 that are typical of Defense and Security systems and are also found in certain strategy video games. The classifications used to code the situations comprise thousands of symbols which constitute a graphical language (literally, this is an ideography) used in all crisis management groups. We can visualize the image at different scales, and with different levels of synthesis, up to the ultimate level which corresponds to individual “atomic” actions (in the non-divisible sense, or even in the done/not done sense used in transaction processing; note that here it is a case of pure convention between individuals). Without the development of ICT, all this would obviously be quite impossible.

Figure 8.9. Pictograms of a situation in C4ISTAR systems. For a color version of this figure, see www.iste.co.uk/printz/system.zip

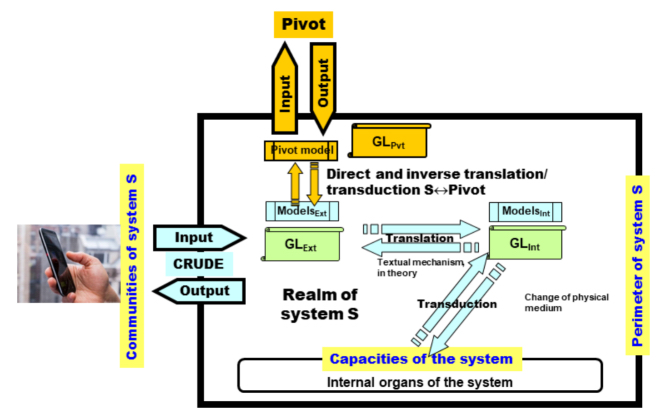

A very general principle of the construction of abstractions can be drawn from these considerations, which will allow the architect of the system of systems to know exactly what they are doing and what they are not doing. This is shown in Figure 8.10. This diagram shows a construction structure with three aspects:

- The aspect of the real world and the phenomenology that is of particular interest to us; a sub-set of phenomena that need to be characterized in order to separate out the elements to abstract; the rest, which has been eliminated, constitutes the “environment” or the outside, reusing our own terminology.

- The abstract aspect of the phenomenology which is a world of signs11 independent of all material media and all representations, although it is necessary to select one if only to communicate. Signs constructed in this way correspond to those of the theory of abstract types which can be composed and hierarchized in the same way as in the theory of languages, which we have known how to do for a long time (in computing, this is known as an “object”). These are disembodied, logical entities, “pure” in the sense used by mathematicians to refer to pure mathematics. The system of signs thus formed functions like a theory of the phenomenology that it denotes; we also say “symbolic system”. At this level, we can represent the time of phenomenology, the transformations, consumption of resources, and more generally the finite nature of the physical world, using the logics known as temporal12.

- The virtual aspect, which is a projection of the abstract level into a logically mastered computing structure, either a Turing machine if we wish to remain independent from real machines, or an abstract logical machine in J. von Neumann’s sense of the term, that can be compiled and/or interpreted with real machines. For this aspect, the abstractions are executable, which will authorize simulations even before the system truly exists.

Figure 8.10. Equilibration of cognitive structures

The rules of correspondence between these various constructions must be explicit and reversible, which is a fundamental demand of the engineering of real systems and which must be validated before implementation. The notion of process seen in Chapter 7 is going to allow a link to be made between these various aspects because it is common to all three, thereby allowing translations/transductions between them.

The systems of systems constructed according to these rules are themselves systems that are ready to enter a new construction cycle with mastered engineering. Step by step, we can therefore organize what J. von Neumann designated as ultrahigh complexity in his Hixon Lectures. We will return to this subject in Chapter 9.

However, we must not believe that this type of organization emerges from the architect’s brain at the wave of a magic wand. Take the example of the genesis of the “computing stack” (see Figure 3.3 and Chapter 7 for more detail): almost 20–30 years of R&D, which began at MIT with the MULTICS system towards the end of the 1960s and finished in the 1990s–2000s with transactional middleware in a distributed environment, as seen in the classic works by J. Gray and A. Reuter (Transaction Processing: Concepts and Techniques) or P. Bernstein and E. Newcomer (Principles of Transaction Processing) concerning transactional aspects. The procedure to be undertaken complies exactly with what was said by A. Grothendieck (see section 1.3): a correct abstraction requires a high level of familiarity with the underlying phenomenologies, which takes a lot of time and a lot of effort. Without this, modeling takes place in a void, and this time Wittgenstein administers the correction (refer to aphorism 3.328 of the Tractatus, already cited).

8.3. Limits of the growth of systems

In the real world, limits are everywhere. For decades, the major science of engineering was, and still is, resistance of materials13. In-depth knowledge of the “matter” that they manipulate is a requirement for the engineers, designers and architects of a system, including when the latter relates to information. To avoid breaking a part, there must be a deep understanding of how and why a part breaks. If a part heats up due to bad dissipation of the heat produced by friction, its mechanical characteristics will change, possibly causing the part to break. For all these reasons, the size of the machines cannot exceed certain dimensions. In the case of turbines used by the nuclear industry, the rotation speed of the turbine (1500 rotations/minute for the most powerful), which can weigh several dozen metric tons, means that the extremity of the blades of the water wheels (around 3 meters in diameter) will approach the speed of sound (3 × 3.14 × 1500 × 60 ≈ 848,000 m/h, i.e. 848 km/h, in other words about 235 m/s). This is a threshold speed that must not be surpassed due to the vibrations induced when nearing this speed (weak warning signs).
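The order of magnitude quoted for the blade tip speed can be checked with a few lines of Python. This is only a sketch of the arithmetic: the diameter and rotation speed come from the text, whereas the function name and the reference value of 340 m/s for the speed of sound (in air at ambient temperature, not in steam) are assumptions made for the illustration.

```python
import math

def blade_tip_speed_ms(diameter_m: float, rpm: float) -> float:
    """Linear speed (m/s) of a point on the rim of a wheel of the given diameter."""
    circumference_m = math.pi * diameter_m   # distance covered in one rotation
    return circumference_m * rpm / 60.0      # rotations per minute -> per second

# Figures taken from the text: 3 m diameter, 1500 rotations/minute.
tip_ms = blade_tip_speed_ms(diameter_m=3.0, rpm=1500.0)
tip_kmh = tip_ms * 3.6

# Assumption: about 340 m/s, the speed of sound in air at ambient temperature.
SPEED_OF_SOUND_MS = 340.0
fraction_of_sound_speed = tip_ms / SPEED_OF_SOUND_MS

print(f"{tip_ms:.1f} m/s, {tip_kmh:.1f} km/h, "
      f"{fraction_of_sound_speed:.0%} of the assumed speed of sound")
```

Under these assumptions the blade tip already moves at roughly two thirds of the speed of sound, which illustrates why the diameter and the rotation speed cannot both be increased freely.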

The moral of the story: the size of the machine, and therefore its power, is intrinsically limited by the internal constraints that it must fulfill.

An integrated circuit, which can have billions of transistors and hundreds of km of nanometric wires buried in its mass, is primarily an electric circuit subject to Ohm’s law and Kirchhoff’s laws, therefore “it heats up”! If the heat is not dissipated by a suitable device, in general a fan mounted on the chip, the thermal noise will end up disturbing the state of the circuit, making it unusable because it generates random errors that the error correction codes will not be able to compensate for. We know that fine engraving will allow chips to be made which can integrate 10 or 20 billion transistors, on condition of slowing down the clock, and therefore of changing the sequential programming style in which programmers have always been trained for a parallel style, which involves a complete overhaul of all the engineering, at least if we want to benefit from the integration of components to increase the processing capacity of the chip.

We remark in passing that the “size” of the equipment refers not only to its geometric dimension or its mass, such as in the case of the turbine, but to the number of elementary constituents and/or links/couples between the constituents. Therefore, software such as an operating system is more complex (in terms of “size”) than an Airbus A380, although it can be saved on a USB key. With the development of nanotechnologies, these constituents can be on a nanometric scale, therefore very small, but all the same it must be possible to observe their behaviors. Instrumentation, required to effectively engineer them, becomes in itself problematic because it must be possible to test the device that has been created. This is a major engineering problem for high-level integration; a problem made more serious by the interactions that are possible between the components of the same chip. The nanoworld is a complex world where we can no longer see what we are doing! Correct operation becomes a problem which can only be tackled by probabilistic considerations related to the occurrence of inevitable random breakdowns, as we have managed to do with error correction codes, except that this time engineers are also faced with a problem of scale. This type of approach can make the technology impossible to use in very high reliability devices if we do not know how to/cannot implement design to test. The construction methodology, in other words the integration, prevails.

For all these reasons, and for many others, there is always an intrinsic limit to all technology which means that the physical capacity (denoted here by the “size”) of the equipment is necessarily restricted and limited.

There are more subtle limitations induced by human components. Equipment that is operationally very rich such as the latest generation of smartphones requires learning and an MMI that is suitable for the user. A large dose of naivety would be required if we were to believe that all this will take place without difficulty, a little like the air that we breathe. Even M. Serres’ character, Thumbelina, eventually learned how to use the technology put in her hands with confidence. A smartphone equipped with payment methods, if it is pirated, is a godsend for the crooks that roam the Internet, without mentioning the adverse effects of geolocation. An unmastered technology is a factor of societal instability. And it is also necessary to take into account the ageing of the population and the reduction of cognitive capacities with age.

The more complex society is, the more users will have to be on their guard and comply with protocols or rules that guarantee their security in the broad sense of the term. Even better, they must be trained. A human–machine interface (MMI) must absolutely comply with the ergonomic constraints of the user which can vary from one individual to another, with age, or from one culture to another.

The limits which affect the engineering teams have been the subject of many organizational evaluations, including and above all in the field of ICT. Today, there is no longer any equipment used in everyday life which does not integrate ICT to a significant degree. Automobile constructors estimate that the added value resulting from the use of ICT in a vehicle accounts for around 30% of the cost of production of a vehicle. The smart grid that is the dream of users of the energy transition will require en masse use of ICT and more particularly software, because each time the word smart is used, the word “software” must be read instead. We are going to live in a world where the software “material” is going to dominate, in particular in everything that affects relationship and intermediary aspects, whether this is: (a) relationships of individuals between themselves (refer to GAFA – Google, Apple, Facebook, Amazon), within organizations and/or communities (the inside); or (b) their relationships with their daily environment (the outside).

In our two most recent works, Écosystèmes des projets informatiques and Estimation des projets de l’entreprise numérique, we looked in detail at the maximum production capacities of the software engineering teams, who are the most at-risk teams in the world of ICT and of equipment using ICT. These evaluations show that there are two thresholds, a first threshold of around 80 people ± 20%, and a second threshold of around 700 ± 20%, which appears unsurpassable for socio-dynamic reasons as we explain in these two books. The basic active unit in the management of computing projects, whose main characteristic is that they are the most interactive of projects, is a team of 7 ± 2 people, the size required to implement the so-called “agile” methods. Over and above this, communication problems within the team are such that it is preferable for it to be divided in half. For projects that require more members in the teams, teams must be set up in line with the architecture of the software that needs to be created, itself dependent on the system context as we have demonstrated in our book Architecture logicielle. To create a market standard video game (2015), a team from the first category must be deployed, in other words a maximum of 80 to 100 people for a duration of two to three years. For software such as is required for an electrical system or a C4ISTAR-type system, one or several teams from the second category should be deployed, for durations of three to five years. These numbers are obviously no more than statistical averages, but they have been corroborated so many times that they can be considered to be truths14, and the fact that the teams in charge of the maintenance often do not have the same skill level as the initial development teams must be taken into account.
Knowing that the average productivity of a programmer within a team of this type is around 4000 lines of source code actually written and validated, with residual error rates of the order of 1 to 2 per thousand lines of source code, it is easy to calculate the average production.
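The calculation alluded to above can be sketched as follows. The team sizes, durations, productivity figure and residual error rate are those quoted in the text. Two points are assumptions made for the illustration and are not stated in the source: that the 4000 lines are produced per programmer per year, and that every member of the team writes code. The class and attribute names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class TeamEstimate:
    headcount: int
    duration_years: float
    # Figure quoted in the text; the "per person per year" unit is an assumption.
    loc_per_person_year: int = 4000
    # Mid-point of the "1 to 2 per thousand lines" residual error rate.
    residual_errors_per_kloc: float = 1.5

    def produced_loc(self) -> float:
        """Average number of validated source lines produced by the team."""
        return self.headcount * self.duration_years * self.loc_per_person_year

    def residual_errors(self) -> float:
        """Average number of errors still present in the delivered code."""
        return self.produced_loc() / 1000 * self.residual_errors_per_kloc

# First threshold: a video game team of about 80 people for two to three years.
game = TeamEstimate(headcount=80, duration_years=2.5)
# Second threshold: about 700 people for three to five years.
large_system = TeamEstimate(headcount=700, duration_years=4)

for name, team in (("game", game), ("large system", large_system)):
    print(f"{name}: {team.produced_loc():,.0f} lines, "
          f"about {team.residual_errors():,.0f} residual errors")
```

With these assumptions, the two thresholds correspond to programs of roughly one million and ten million source lines respectively, each delivered with residual errors numbering in the thousands.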

The size of the “program” component, in the broad sense, required for all systems is an intrinsic limit on the size of the system in addition to the others. But the real limiting factor is socio-dynamic in nature because beyond a certain size, we no longer know how to organize the project team that is required for deployment of the system and its software in such a way that it remains controllable. In particular, we no longer know how to compensate for the human errors made naturally by the users/end-users (engineering) through suitable quality procedures (rereading, peer reviews, more or less formal reviews, etc.) (refer to the project problem, already mentioned).

In conclusion, we can say – and this is no surprise for engineers and scientists who understand what they are doing – that there is always a limit to the size of the parts that can be constructed making intelligent use of the laws of nature, for internal reasons related to physics of materials, or for external reasons related to the socio-dynamics of human interactions. The silicon in our computers does not exist in nature, although its primary material, sand, is very abundant on Earth. In order for it to be of interest to us it must be purified using a technique discovered at the end of the 19th Century, zone melting15, which only allows a few “useful” faults to remain, because if the silicon crystal was perfect, faultless, it would be of no interest, simply an insulator like glass.

The artificial “matter” (informational in nature) that constitutes our programs does not exist in nature. Only our brains, individually and/or in a team, are capable of producing it, but with faults which this time are entirely harmful and that we do not know how to eliminate completely. The information “parts” that are thus produced, like their material counterparts, have a limited size that must not be exceeded, and they still contain faults. But by the “magic” of architecture and human intelligence, we are able to arrange and organize them in such a way as to produce constructions that would be inconceivable if it were necessary to “machine” them as a single indivisible block16. This is what we are going to examine in Chapter 9, implementing cooperation.

8.3.1. Limits and limitations regarding energy

Interoperability leads us back to the relationship/dependence between all systems and the sources of energy which allow them, in fine, to operate. Without energy, a system cannot function, including systems that produce energy such as an electrical system which, in order to remain autonomous, must have a source that is independent of their production. Conservation of the energy source which supplies the system is very obviously part of the system invariant which must be conserved at all costs17! Consequently, the system has the top priority of completely controlling the energy that makes it “live”. With interoperability, and the capability logic that characterizes systems of systems, this property fundamentally disappears.

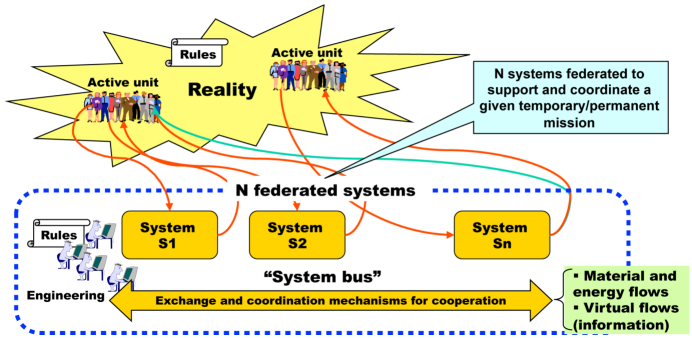

The situation can be drawn up as shown in Figure 8.11.

Figure 8.11. Energy autonomy of systems of systems. For a color version of this figure, see www.iste.co.uk/printz/system.zip

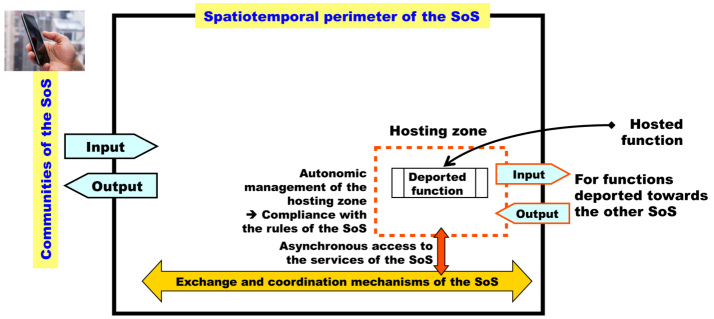

This figure shows that the AU are now dependent on correct operation of the exchange and coordination mechanisms, which by definition are not controlled by any of them. If this system of exchanges is repositioned in a diagram in Simondon’s terms, we see: (a) that the community of users is the sum of the communities of each of the systems taken individually; (b) that it has its specific community in terms of engineering, but that this must absolutely not act independently of the engineering communities of each of the systems; and lastly (c) that it has its material and virtual resources but that the service contract for the whole (it is a system in itself whose invariant is the rules given in the model of exchanges) must not under any circumstances contradict the specific service contracts for each of the systems. This creates a set of constraints/requirements that make engineering of these devices particularly problematic because they are necessarily abstract with respect to the various businesses of which the common elements must be identified. The corresponding semantic coherence of the models is a logic problem known to be difficult and which requires deployment of temporal logic.

As for all systems that are based on cooperation, everyone, in other words the AU, must play the game; and, we could say, the same game. If one of the systems does not comply with or no longer complies with the rules, in other words if it has behaviors that are incompatible with survival of the whole for any reason, it must immediately and without any means of avoidance be removed from the federation or at least strictly confined. The operations carried out by the deviant system must, if necessary, be taken over by other systems in the federation. If this is not possible, the capacity of the SoS (system of systems) is necessarily reduced and the users must accept a deterioration in services.

In terms of energy, it becomes almost impossible to estimate what is necessary for correct operation of the “bus” without overdimensioning its capacities, which is generally contradictory to the very objective of the federation. In the same way, for each of the systems that are responsible for their autonomy it is possible to adopt and to ensure application of a strategy thanks to the unique C2 command–control center (see Figures 4.1–4.4); this is no longer possible for the bus, because this must manage a complexity which is the “sum”, or a combinatorial of the complexities of each of the systems participating in the federation. The only way in which to prevent the “bus” from transforming into a sort of crystal ball with unpredictable operation, contrary to all the rules of engineering when the latter is mastered and not endured, is to have a prediction/measure of the load in real time at the bus for each of the flows and unloading rules if the predicted and/or authorized load is exceeded. This is part of the exchange models which set up the rules of use. These rules “of the game” necessarily constitute the language of authorized interactions, and reciprocally those that are prohibited, which can be analyzed with all the methods available in the theory of languages and abstract machines (see Chapter 5). With interoperability and SoS, we have crossed a level of abstraction within the systemic stack, but by using the same methods to organize the complexity.

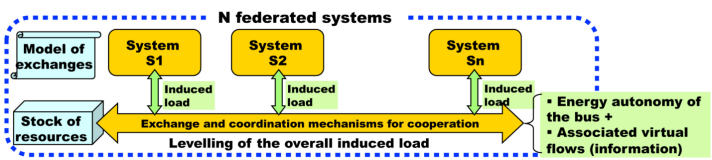

The situation is then as follows (Figure 8.12): each of the systems in the federation induces a flow that varies with time; it is up to the bus to ensure that their sum does not exceed its capacity and/or to ensure in real time that there are sufficient resources to guarantee its service contract.

Figure 8.12. Control of energy load of systems of systems. For a color version of this figure, see www.iste.co.uk/printz/system.zip
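The principle of a load prediction per flow, combined with unloading rules when the authorized load is exceeded, can be illustrated by a minimal sketch. Everything in it is hypothetical: the text does not specify the unloading rules, so we assume here that each flow carries a priority defined in the exchange model and that the least critical flows are shed first.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Flow:
    system: str          # federated system that induces the flow
    predicted_load: int  # predicted load on the bus, in arbitrary units
    priority: int        # hypothetical rule: lower number = more critical

def admit_flows(flows, bus_capacity):
    """Admit flows in priority order while the bus capacity allows it.

    Returns (admitted, shed): the flows that the bus accepts and those
    that must be unloaded because the capacity would be exceeded.
    """
    admitted, shed = [], []
    remaining = bus_capacity
    for flow in sorted(flows, key=lambda f: f.priority):
        if flow.predicted_load <= remaining:
            admitted.append(flow)
            remaining -= flow.predicted_load
        else:
            shed.append(flow)
    return admitted, shed

flows = [
    Flow("system C", predicted_load=30, priority=2),
    Flow("system A", predicted_load=40, priority=0),
    Flow("system B", predicted_load=35, priority=1),
]
admitted, shed = admit_flows(flows, bus_capacity=100)
print("admitted:", [f.system for f in admitted])
print("shed:", [f.system for f in shed])
```

This greedy rule is only one possible choice. A real exchange model would also have to say what happens to the operations of the shed systems, which, as indicated above, must be taken over elsewhere or result in an accepted deterioration in services.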

In many ways, this situation is entirely similar to the situation faced by the clockmakers of the 18th and 19th Centuries who manufactured the first watches and chronometers with complications18, which were renowned as the most complex systems produced by the human brain until the beginning of the 20th Century, with the beginning of aviation, the first telephone switchboards and electromechanical weaving looms. In a timepiece with complications, as in all mechanical astronomical watches and/or clocks, there is only one source of energy: either a spring or a weight. Each of the complication mechanisms extracts its energy from the equivalent of a mechanical “bus”, a set of gears and cams, which thanks to the escapement is regularly released (a time base ensures the precision of the chronometer) until the resource is exhausted, at which point it is necessary to “wind up” the chronometer without stopping it. If the energy drawn exceeds the capacities of the mechanism that transports the mechanical energy (significant problems with friction, hence the diamond or ruby pivots, which are part of the rules of the model of exchanges), the watch stops and/or the escapement is irremediably slowed, which means that the watch will never be able to show the correct time.

In systems of systems such as an electrical system (see Figure 4.4), the control function is now totally virtualized thanks to ICT capabilities, but it is still necessary to have sufficient energy to supply the various organs of the corresponding information system, an energy known as control energy which, for obvious reasons of autonomy, must absolutely have its own energy source. This regulation energy is critical for the system of systems, as it also is for each of the constitutive systems. If the organ carrying this energy becomes saturated, meaning that the system no longer has enough control energy, the controlled system and its environment can be put at significant risk. This is because, for example, in the case of an electrical system, a quantity of energy (note: a power) of the order of 100 gigawatts (equivalent to an atomic bomb of average power, in one minute) is no longer controlled. By comparison, an Ariane 5 launcher develops a power of around 17 gigawatts on take-off to escape the Earth’s gravity!

8.3.2. Information energy

With the rise of ICT, which today is indissociable from any system that uses it and which has therefore become its most critical resource, a new form of energy has appeared. Independently of the fact that any electronic system, whatever its function, is primarily an electrical system which as such must have a suitable source of electrical energy, an ICT system must have sufficient information processing capacity to carry out what is expected of it at the right speed.

For lack of a better term, we can describe this new form of energy as information energy. Since the 1980s–1990s it has held the attention of physicists, as demonstrated in the works and symposiums published under the label “information physics” as we briefly mentioned in Chapters 2 and 4. This physics was already in development in A. Turing’s works on logic, and the work of C. Shannon on communications as we have also mentioned.

The corresponding information energy capacity has three indissociable aspects: (1) a calculation capacity expressed in millions/billions of operations carried out per second, that the wider public has encountered in the imprecise form of “Moore’s Law”19; (2) a capacity for interaction of the calculation organ with the “exterior” to supply its working memory with useful information, via, for example, SCADA systems that we will mention in Chapter 9, a capacity determined by the bandwidth of the information transport network (today in gigabytes per second); and (3) a capacity to store information to memorize everything that needs to be memorized given the system’s mission. For example, for an instrument like the LHC at CERN20, this capacity can be measured in hundreds of terabytes of data coming from measurement appliances that detect trajectories when an experiment is carried out, in other words of the order of 10^12 to 10^15 bytes (terabytes to petabytes). The same is so for the storage required for the calculation of rates for telephone operators, or for calculation of the state of the energy transport network for the smart grids that are required for “intelligent” management of renewable energies.

To conduct an automatic control of any physical device, it is therefore necessary to ensure that the corresponding information processing system has enough “information energy”, whatever the circumstances, to accomplish its mission, complying with the temporal constraints of the phenomena to be regulated.
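The three aspects of the information energy capacity can be brought together in a simple feasibility check. The sketch below is an illustration under stated assumptions, not a sizing method: the class names, the figures in the example and the pessimistic hypothesis that data transfer and calculation take place one after the other within a control cycle are all ours.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class InformationCapacity:
    ops_per_second: float              # (1) calculation capacity
    bandwidth_bytes_per_second: float  # (2) interaction capacity
    storage_bytes: float               # (3) storage capacity

@dataclass(frozen=True)
class ControlCycle:
    operations: float        # operations needed to make one decision
    bytes_exchanged: float   # data acquired from the "exterior" per cycle
    bytes_retained: float    # data that must be memorized per cycle
    deadline_seconds: float  # temporal constraint of the regulated phenomenon

def cycle_time(capacity: InformationCapacity, cycle: ControlCycle) -> float:
    """Time for one cycle, assuming transfer then calculation (no overlap)."""
    transfer = cycle.bytes_exchanged / capacity.bandwidth_bytes_per_second
    compute = cycle.operations / capacity.ops_per_second
    return transfer + compute

def has_enough_information_energy(capacity, cycle, cycles_to_store: int) -> bool:
    """True if the deadline is met and the mission's data fits in storage."""
    fits_in_time = cycle_time(capacity, cycle) <= cycle.deadline_seconds
    fits_in_storage = cycle.bytes_retained * cycles_to_store <= capacity.storage_bytes
    return fits_in_time and fits_in_storage

# Hypothetical figures, chosen only to exercise the check.
capacity = InformationCapacity(ops_per_second=1e9,
                               bandwidth_bytes_per_second=1e8,
                               storage_bytes=1e12)
nominal = ControlCycle(operations=2e8, bytes_exchanged=1e7,
                       bytes_retained=1e6, deadline_seconds=0.5)
degraded = ControlCycle(operations=2e8, bytes_exchanged=1e7,
                        bytes_retained=1e6, deadline_seconds=0.25)

print(has_enough_information_energy(capacity, nominal, cycles_to_store=100_000))
print(has_enough_information_energy(capacity, degraded, cycles_to_store=100_000))
```

The second case shows that the same equipment can become insufficient as soon as the temporal constraint tightens, which is why the check has to hold "whatever the circumstances" and not only in the nominal situation.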

In a high-speed train system such as the French TGV, the signaling system on the tracks and in the carriages in circulation must be able to react and make necessary decisions given the speeds of the carriages and the braking distances. With trains that circulate at more than 300 km/h, every 15–20 minutes during rush hour, any break in service can lead to a catastrophic collision.

Hence the need to back up control of the induced energy load with control of the induced information load (see Figure 8.13). We note that this back-up obliges us to look in detail at the coexistence of fundamentally continuous phenomena with discrete, and therefore asynchronous, phenomena handled via the transactional mechanisms that are specific to computing.

Figure 8.13. Control of the information load of systems of systems. For a color version of this figure, see www.iste.co.uk/printz/system.zip

The two mechanisms, which can appear to be separate or independent, form a single one that must be analyzed and integrated as a single block. They are correlated because one cannot be designed/organized without reference to the other, including in non-nominal situations, which are always at the origin of service breakdowns or disasters. This mechanism is transactional, in the strongest sense of the term, because coherence requires that what happens in reality is the exact reflection of what happens in the virtual information world, and vice versa. The fact that the physical–organic mechanisms are backed up by an information mechanism allows a trace to be generated which is a true image of all the interactions between federated systems, and which will allow post mortem analyses in the event of failure. We note in passing that the spatio-temporal synchronization of these traces requires availability of a unique universal time for all federated systems, analogous to what exists for the GPS and/or Galileo satellite constellations, which are fitted with atomic clocks that are themselves synchronized.
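The role of the unique universal time can be illustrated by the reconstruction of a global trace from the local traces of each federated system. In the sketch below, the event structure and the example events are hypothetical. The only hypothesis that matters is that every system timestamps its events on the same time base, so that the local traces, each already in chronological order, can be merged into a single ordered sequence for a post mortem analysis.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple

class TraceEvent(NamedTuple):
    utc_ns: int   # timestamp in the shared universal time, in nanoseconds
    system: str   # federated system that recorded the event
    event: str    # description of the interaction

def merge_traces(*traces: Iterable[TraceEvent]) -> Iterator[TraceEvent]:
    """Merge local traces, each sorted by time, into one global trace."""
    return heapq.merge(*traces, key=lambda e: e.utc_ns)

# Hypothetical local traces of two federated systems.
grid_trace = [
    TraceEvent(100, "grid", "line lost"),
    TraceEvent(400, "grid", "load shed"),
]
control_trace = [
    TraceEvent(250, "control", "overload alarm"),
]

global_trace = list(merge_traces(grid_trace, control_trace))
for e in global_trace:
    print(e.utc_ns, e.system, e.event)
```

Without a common time base the merge would still produce a sequence, but its order would no longer reflect the order of events in the real world, and the trace would cease to be a "true image" of the interactions.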

In the case of large breakdowns suffered by the electrical system, for example the breakage of a high voltage transmission line (an event that affects physical resources, in this case the loss of an EHV line), the energy transported is instantaneously transferred to the other available physical connections, in application of the laws of electromagnetism: the laws of Ohm and Kirchhoff.

The information system which controls everything must analyze the capacities of the available physical resources that remain and decide in a few seconds whether the transport system will “retain” the load or whether unloading must be organized. The information system must therefore be perfectly informed of the situation, in real time, and itself have the processing capacity for all the information that comes from the organic/physical resources that constitute the transport network, to make the right decision with the help of the human operators that use the network. This feedback loop that manages considerable amounts of energy is the critical element of the system which in a certain manner determines its size.
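The decision described here can be sketched on a deliberately simplified case. We assume, for the illustration only, a set of parallel lines between the same two nodes, each characterized by a reactance and a thermal limit, so that the flow divides among the lines in proportion to their admittances (the inverse of the reactance). A real network is meshed and requires a complete load flow calculation; the function names and the figures are hypothetical.

```python
def parallel_shares(reactances: dict[str, float]) -> dict[str, float]:
    """Fraction of the total flow carried by each parallel line.

    For parallel branches the flow divides in proportion to 1/reactance.
    """
    admittances = {name: 1.0 / x for name, x in reactances.items()}
    total = sum(admittances.values())
    return {name: y / total for name, y in admittances.items()}

def max_transferable_mw(lines: dict[str, tuple[float, float]]) -> float:
    """Largest total flow that overloads no line.

    lines maps a line name to (reactance, thermal limit in MW).
    """
    shares = parallel_shares({name: x for name, (x, _) in lines.items()})
    return min(limit / shares[name] for name, (_, limit) in lines.items())

def decide_after_outage(total_mw: float,
                        lines: dict[str, tuple[float, float]],
                        lost_line: str) -> tuple[str, float]:
    """Return ("retain", 0.0) or ("unload", MW to shed) after losing a line."""
    remaining = {name: spec for name, spec in lines.items() if name != lost_line}
    ceiling = max_transferable_mw(remaining)
    if total_mw <= ceiling:
        return "retain", 0.0
    return "unload", total_mw - ceiling

# Hypothetical corridor of three lines: (reactance in ohms, limit in MW).
lines = {"A": (10.0, 1500.0), "B": (10.0, 1500.0), "C": (20.0, 800.0)}

decision, to_shed_mw = decide_after_outage(2500.0, lines, lost_line="A")
print(decision, round(to_shed_mw, 1))
```

In this example the two remaining lines cannot carry the 2500 MW that the three lines carried together, and the sketch reports how much load would have to be shed. The point of the text is that the information system must reach this kind of conclusion within a few seconds, from real-time data, for the whole network.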

8.3.3. Limitations of external origin: PESTEL factors

Many external factors, the “outside”, are going to constrain the system, and therefore limit its “normal” growth, up to certain intrinsic limits that are presented above. For convenience we use the PESTEL acronym (in other words political, economic, social, technical, ecological, legal), which has already been mentioned21, to describe the environment. An entire book would be required in order to present these factors in detail. Here, we are going to provide a few analysis clues to show how to go about figuring out this type of external limitation, because for SoS, these factors become fundamental.

Politics, in the Greek sense of the term, meaning the organization of the City, and more generally of the State and the Nation, intervenes directly in the development and the “life” of a great number of systems, in particular those that provide a service to the public (police, army, energy, post and telecommunications, health, education, etc.). For example, the electrical system in the case study is completely constrained by the energy policy decided on by successive governments, and by European directives, which have deregulated the energy market. The energy transition that we find ourselves at the center of is political in nature, rather than technical, at least for the moment. EDF and RTE are not allowed to make their own decisions about what prices they are going to apply for distribution of the energy resource to their clients, in other words all of us. The decision made in 1998 to stop experiments at the power plant prototype at Creys-Malville22 in France was not EDF’s decision, and the dismantling of the facility will be finished in 2027. As a regulator, the State decided that this type of reactor, cooled with molten sodium as a heat transfer fluid, was dangerous and that the engineering was not mastered to a sufficient degree, in particular regarding the impossibility of putting out a sodium fire.

REMARK.– Water cannot be used because it forms an explosive mixture with sodium, and sodium burns spontaneously in air.

In the United States, the government has decided to dismantle certain monopolistic companies that are considered to be dangerous because they violate the American constitution and the liberty of American citizens, hence the anti-trust laws. The latest case was AT&T, parts of which are to be found in Alcatel-Lucent, whose Alcatel part is a distant heir of CGE Energy, which itself is a result of the various restructurings of French industry, through both nationalization and privatization.

A company such as France Télécom was for a long time a national company under the guardianship of the ministry for Post and Telecommunications. To guarantee its mission of service to the public it had a national-level laboratory, the CNET, whose research fed into the entire telecommunications industry. Privatization of the company led ipso facto to the disappearance of the CNET, replaced by a classic R&D structure typical of large companies but which, due to the privatization, no longer needed to play a national role. This raised a systemic question for the State as regulator: who was then going to take on the mission at a national scale? The CNRS? The INRIA? Or a new structure that needed to be created from different entities? How can the transition be guaranteed without a loss of skill, when we know that the direction of a research team of 40–50 people often relies on the leadership of two or three people?

Economic policies described as liberal have the role, at least in theory, of organizing economic competition between the companies in compliance with the rules, which is another way of limiting growth of certain systems. Here, we refer back to the previously cited works of M. Porter.

The economic factor characterizes the capacity of a country, of its companies and its banks, and of its State structure, to mobilize resources to create the infrastructures, goods and services which are required for the well-being of its citizens. In the United States, since World War II, the Department of Defense (the DoD) has fulfilled the mission of providing American technological leadership. As everyone knows, the United States does not have a research ministry, because it is primarily the DoD that takes care of it, in close collaboration with the large American universities, and with a few large agencies such as NASA and research organizations such as RAND or MITRE which are FFRDCs23. President Reagan’s Strategic Defense Initiative (SDI), better known as “Star Wars”, funded American universities and created many research projects which were fully beneficial to American industry, which now enjoys an obvious competitive advantage.

In France, a country with a tradition of centralizing and a strong State, large projects that have given rise to large systems such as the electronuclear program and the “Force de Frappe” or strike force, the plan for modernization of the telecommunications, motorways, TGV high speed train, space program, etc. all arose from the voluntarist action of public powers supported by large organizations such as the CEA, or by national companies like the SNCF or France Télécom, which themselves played the role of project managers or project owners of an entire network of industry in charge of “the action”.

An English colleague pointed out that a system like the TGV high speed train is almost impossible to set up in Great Britain because the financing capacity that must be obtained is virtually inaccessible to private companies, even large ones, which in addition do not have the same degree of guarantee as the State.

Dissolution of the CNET due to privatization was a tragic loss for the telecommunications industry in France and for industrialists who did not see the situation looming on the horizon, in particular Alcatel. A research center is not simply about patents, it is also a way of training high level professors, engineers, and scientists who are required for the development of sectors of industry. It is a center of excellence which reaches out to its environment, hence the importance of the social factor about which we are now going to say a few words.

The social factor, in the widest sense of the term, is everything that relates to human development of the citizens of a country and, in the first instance, education. The analysis of technical objects/systems using Simondon’s method has shown the importance of the communities of users and of engineering for the “life” of systems. The human factor, namely the average level of education of these communities (the “grade” in the graduate sense), obviously plays a major role. A well-educated, responsible, motivated and entrepreneurial population is an essential competitive advantage; this is the condition required for the appearance of socio-economic phenomena such as the development of Silicon Valley in California since the 1980s, and prior to that around “Route 128”, which surrounds Boston and its large universities (MIT, Harvard, etc.), the birthplace of the computing industry. Implementation of sensitive technologies such as nuclear power demands a high level of maturity, both at the user level (so, in the case of the nuclear sector, this relates to the service industries involved) and at the level of the engineering teams who develop the technology (in France, the CEA, the constructor AREVA, also in charge of fuels, and the operator EDF, in addition to their direct sub-contractors), in other words the entire nuclear sector and all the workers, technicians, engineers and directors who are involved as actors, without forgetting the parties concerned.

In the case of the systems that we focus on in this book, training in systems sciences at the level of an engineer (Baccalaureate + 5 years, or more) is essential, on the one hand to avoid leaving each of the engineering teams to reinvent the wheel several times, each in their own way, and on the other hand to create transversality, a common language from which all the sectors that implement systems will benefit (here we refer back to section 1.3); the “Babel” effect, as we have seen, is fatal to interoperability. In all artificial systems created by humankind, there is always knowledge and expertise that is specific to the phenomenology of the system such as electricity or nuclear, but also knowledge and general expertise which characterize a class of systems. This category of knowledge must come from teaching at a higher level, but the difficulty is of presenting it simultaneously in a specific and abstract manner.

For the technical factor, we have highlighted throughout the book the importance and all-pervasive nature of ICT in the design, development and operation of systems, which are integrated into the rather more vast context of NBICs. The “digital” sector has been recognized as a great national cause in many countries, including France, slightly late if we compare it to the United States or Israel, taking two extremes on the spectrum of States. The information component which is now present in all systems needs to be assessed properly, and prepared for. It is essential to understand that the “physics of information”, to use the terminology introduced by physicists themselves, will play the same role for ICT/NBIC that electronics played in its era, or that physics of condensed matter and particle physics played for the entire energy sector.

If the engineering teams are “not up to standard”, this does not mean that they cannot be effective. It just means that productivity will be lower, that the rate of errors will be higher, that breakdowns will be more frequent and carry a higher level of risk (e.g. nuclear power plants such as Chernobyl, without a safety enclosure!), and that the quality in general will not be what users should expect.

Quality is a fundamental component of the technical factor and, in France at least, it is not taken as seriously as it should be, in particular by the education system and by many industrialists, including among the greats of the CAC 40. Financialization of industrial activities has been accompanied by uncontrolled (and uncontrollable?) risk-taking whose deleterious effects are beginning to appear.

Is the technical capacity to be effective dependent on the capacity to carry out system development projects of all kinds? Here again, we should avoid delighting in hollow words, which are never a substitute for competence. Since the 2000s we have talked a lot about “agility” in systems engineering environments, a hollow word selected from a good collection of them, because what should be done is not only to name and/or define, but to explain specifically what it is for and how to go about it, in other words the what and the what for, or the objective, taking into account the phenomenology of the project. This was indicated to us a very long time ago by Wittgenstein in his Tractatus Logico-Philosophicus (Routledge & Kegan Paul Editions, with an introduction by B. Russell), when he said: “If a sign is useless, it is meaningless” (aphorism 3.328).

Here again, we see that the technical factor can only develop if individual and collective actors (that we have called “active units”) are humanly up to standard, in terms of the knowledge required and/or to be acquired and of collective expertise within projects, but also in terms of interpersonal skills, in other words the ethics that in fine determine the cooperation capacities within the teams themselves24. This truly is a symbiosis, and it cannot be bought like a pocket watch or a hamburger.

Concerning the ecological factor, we will only say a few words, remaining at a very generalized level, because for any system that is constructed, its dismantling must also be included. The dismantling is the normal terminal stage of the life of a system (it is the equivalent of the programmed “death” or biological apoptosis), but in order for it to be carried out in good conditions, it must be integrated as a demand right from the start in the design phase of the system. In the case of nuclear energy, reactors under construction will have an estimated lifetime of one hundred years, in other words the teams in charge of the dismantling will know those that designed it only through the documents that they have been left.

All systems are traversed by flows of energy and materials that will be transformed by the system and delivered to the users. As for the system itself, it must be possible for objects manufactured in this way to be recycled once they are no longer being used. These flows are moreover never totally transformed; there is always waste of all kinds: unused materials, thermal, sound and electromagnetic pollution, etc. Ideally, it must be possible to recycle everything in order to prevent the hereditary term of the growth model of the Lotka–Volterra equation from becoming dominant. This is the necessary condition for general equilibrium of the terrestrial system in the long term.
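As an illustration, and assuming that the “hereditary term” refers to the integral term of Volterra’s population model (the equation is not written out in this section, so the symbols below are our own), the growth of a population $N(t)$ whose waste accumulates over time can be sketched as:

```latex
\frac{dN}{dt} \;=\; a\,N(t) \;-\; b\,N(t)^{2} \;-\; c\,N(t)\int_{0}^{t} N(s)\,ds ,
\qquad a,\, b,\, c > 0 .
```

The first term is free growth, the second is competition for finite resources, and the third is the hereditary term: it grows with the whole past history of the population, in other words with everything that has been left behind. Under this reading, recycling amounts to keeping the accumulated integral (or the coefficient $c$) small enough that the third term never overtakes the other two.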

The ecological component is a vital requirement for future generations and a true technological challenge for the engineers of the 21st Century, who must now reason in a world of finite resources. All waste that is not compensated for and is left to the goodwill of natural processes is a mortal risk whose importance we are beginning to measure. The additional devices that deal with this waste need to be taken as seriously as the operational devices and, to be honest, one never goes without the other.

Concerning the legal factor, all advanced countries have a diversified arsenal of legislation and standards to be complied with, agencies to enforce them, and finally tribunals to judge those who violate the law. This is not without its difficulties, as we all know, with multinational companies that can offshore certain production activities to countries which pay less attention to this aspect.

In conclusion, all these external constraints are going to play a full role when it is necessary to cooperate in order to grow, as has become the rule in globalized economies. The PESTEL factors then become deciding factors in the choice of a country in which to organize growth and invest, whether for the quality of its workforce, for its infrastructure, for its taxation, or for its lack of legislation, etc.

8.4. Growth by cooperation

Whether we take the example of the electrical system, that of a computing stack, or that of the hierarchical composition of an automobile (we could take many others), we see that through a progressive, methodical approach, we arrive at very large constructions that were initially inconceivable, but whose complexity is organized by architecture and mastered by the project director. For this, we must not rush into the complexity of a problem that is initially too vast to be understood, but follow the good advice given by J. von Neumann, or Descartes: construct step by step from what is correctly understood and mastered.

The LHC25 at CERN, which allowed the Higgs boson to be discovered and which shed light on the mystery of mass, is a fantastic machine of colossal size. It is the result of a history which began in the 1940s in the United States, and at CERN in the 1950s.

Without cooperation at all levels and organization of complexity, systems of this kind would be entirely unrealistic. The problem therefore relates to understanding how to organize the cooperation so that the efforts of some are not ruined by the failure of others when the entire set is integrated, which implies a good understanding of the sociodynamics of projects. The effort made must be cumulative at the level of the system of systems.

For this, progression in stages is essential. Prior to reactors of 1.3 and 1.6 gigawatts like the EPR, there were intermediate stages which began with machines at 200–300 megawatts, stages that were essential to the development of learning and technological maturity. Before the computing stack of abstractions that we are now familiar with via the MMI in the form of our smartphones, tablets, etc., there were also numerous intermediary stages and thousands of man-years of effort in R&D which have been built up.

Cooperation is an emergence, and for the phenomenon to be cumulative, it is imperative that what emerges can rely on stable foundations, as shown in Figure 8.14. This figure simply systematizes what was presented in Chapter 3. The fundamental point is that the stacking of layers is not expressed as a combination of the underlying complexities. At whatever level of the hierarchy we place ourselves, the next level down must appear as a perfect “black box”, in the sense that it lets nothing of its internal complexity show through other than via its access interfaces, in other words the dashboard of the black box. However, it must be possible for the whole to be observed, hence the importance of the components/equipment that we have described as “autonomic” in Chapters 4 and 5, in particular Figure 4.15, and of the generalized trace mechanisms that are associated with these modular components.

Figure 8.14. Cooperation and growth of systems of systems. For a color version of this figure, see www.iste.co.uk/printz/system.zip

The diagram of the autonomic component/equipment is an abstract model which applies to all levels. The interface, for its part, materializes the distinction between the internal language that is specific to the component/equipment and the external language, which only allows what is functionally required to be seen by the user of the component. The cost of “infinite” growth, in the sense that it has no limit except that of our own turpitude, is a logical cost, a pure product of human intelligence which does not exist in the natural state. It is a cost that we know how to master thanks to the progress of constructive logics and their associated languages, as much from the most abstract point of view as from the point of view of specific realizations, with transducers that allow a change from one world to the other using the perfectly mastered technologies of compilation and/or interpretation. We note the deep analogy that exists between this potential capacity and C. Shannon’s second theorem, concerning the “noisy” channel26.
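The black-box principle and the internal/external language distinction described above can be sketched in a few lines of Python. This is only an illustrative sketch under our own assumptions: the names `BlackBox`, `Layer`, `serve`, `traces` and `build_stack`, as well as the integer "capacity" model, are hypothetical and do not come from the book.

```python
from typing import List, Optional, Protocol


class BlackBox(Protocol):
    """External language: the only operations a higher layer may use."""

    def serve(self, demand: int) -> int: ...

    def traces(self) -> List[str]: ...


class Layer:
    """One level of the stack (hypothetical model).

    Internal state lives in underscore-prefixed attributes which, by
    Python convention, are not part of the interface.
    """

    def __init__(self, name: str, capacity: int,
                 below: Optional[BlackBox] = None) -> None:
        self._name = name
        self._capacity = capacity
        self._below = below
        self._log: List[str] = []

    def serve(self, demand: int) -> int:
        # Internal language: how the demand is met is decided here only.
        granted = min(demand, self._capacity)
        if self._below is not None:
            # The level below is used strictly through its interface.
            granted = self._below.serve(granted)
        # Trace mechanism: every interaction is recorded.
        self._log.append(f"{self._name}: demand={demand} granted={granted}")
        return granted

    def traces(self) -> List[str]:
        # Observability of the whole: lower levels first, then this one.
        lower = self._below.traces() if self._below is not None else []
        return lower + list(self._log)


def build_stack() -> Layer:
    """Assemble three levels; each one sees only the interface below it."""
    equipment = Layer("equipment", capacity=50)
    system = Layer("system", capacity=80, below=equipment)
    return Layer("system-of-systems", capacity=100, below=system)
```

The caller of the top layer never learns how many levels exist underneath or how each one decides; it sees a result through `serve` and, when observation is needed, the accumulated `traces`. Adding a level therefore adds one interface to understand, not the combined complexity of everything below.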

The “raw” information that comes from the human brain passes and is refined from brain to brain, thanks to the implementation of quality systems (refer to the references by the author for project management, given in the bibliography), until it becomes, via organized interactions, a common good which is then “ripe” for transformation into programs and rules understood by all communities and interested parties, and which can therefore be reused.

The example of an electrical system provides us with a completely general model of this evolution. We find this again in the evolution of company systems or in C4ISTAR strategic systems for defense and security. It can be explicit or implicit, but it is always there. We are now going to specify this general model.

8.4.1. The individuation stage

Initially, systems are born from the conjunctural requirements expressed by the users, both individually and collectively, or by anticipation from the R&D teams that are internal and/or external to the company or to the administration. At this stage, it is often technology, a source of new opportunities, that takes control. Defense and security, and strategic leadership, have been at the origin of a great number of systems in the United States and in Europe. Development of the electronuclear sector in France in the immediate post-war period is typical of this situation. Progressively, the company is going to host a whole array of systems which each have their own individual dynamic and which are going to organize their growth as a function of the specific requirements and demands of the community that has led to their creation.

In Figure 8.15, we see systems that are born and develop at dates T1, T2, … and mono-directional connections that are established case by case, at dates that are compatible with the dates of birth of the systems and/or with their stages of development. All this is initially perfectly contingent and almost impossible to plan globally. Individual dynamics mean that at an instant Tx, a function will be added to the system Si even though this is not its logical place, either because the system which should host it has simply not been created yet or because, as is frequently the case, the director of system Si wishes to keep their technical and decisional autonomy. If, on the contrary, the function to be developed exists elsewhere and the two directors agree to cooperate because they see a reciprocal, win-win interest, then an explicit connection is born which will allow an interaction.

Figure 8.15. Birth of systems and their connections. For a color version of this figure, see www.iste.co.uk/printz/system.zip

The logical functions which answer a user’s semantic will take root wherever this is possible, with possible redundancies in different systems in which they will exist in various ways that are more or less compatible. At a certain stage of this anarchical development without a principle of direction, but anarchical by necessity because it is unplannable, the situation will become progressively unmanageable, which was the case of the electrical system in the 1940s–1950s.
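The dynamics described above (systems born at successive dates, one-way connections that can only appear once both ends exist, and functions that take root wherever they can) can be sketched as a small data model. This is a hypothetical illustration under our own assumptions: the names `System`, `Landscape`, `add_function` and `redundancies` are ours, and dates are represented as plain integers.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class System:
    name: str
    born: int                                   # date of birth: T1, T2, ...
    functions: Set[str] = field(default_factory=set)


class Landscape:
    """The set of systems hosted by a company (hypothetical model)."""

    def __init__(self) -> None:
        self.systems: Dict[str, System] = {}
        self.links: List[Tuple[str, str, int]] = []   # (source, target, date)

    def create(self, name: str, born: int) -> None:
        self.systems[name] = System(name, born)

    def connect(self, source: str, target: str, date: int) -> None:
        """One-way connection, valid only once both systems exist."""
        latest_birth = max(self.systems[source].born,
                           self.systems[target].born)
        if date < latest_birth:
            raise ValueError("connection predates one of the systems")
        self.links.append((source, target, date))

    def add_function(self, function: str, logical_home: str,
                     fallback: str, date: int) -> str:
        """Host the function in its logical home if that system already
        exists at this date, otherwise wherever it is possible."""
        host = self.systems.get(logical_home)
        if host is None or host.born > date:
            host = self.systems[fallback]
        host.functions.add(function)
        return host.name

    def redundancies(self) -> Dict[str, List[str]]:
        """Functions that ended up hosted by more than one system."""
        hosts: Dict[str, List[str]] = {}
        for system in self.systems.values():
            for function in system.functions:
                hosts.setdefault(function, []).append(system.name)
        return {f: sorted(names) for f, names in hosts.items()
                if len(names) > 1}
```

For example, if a "billing" function is needed at date 2 but its logical home S3 is only created at date 5, it is first hosted by S1 and later duplicated in S3; `redundancies()` then reports it under both systems, which is the kind of drift that eventually makes the whole unmanageable.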

After the time taken for action and disordered development, with neither cooperation nor coordination, comes the time to return to an energy-economical optimum where the interactions will be considered as a priority, as the primary data of a global system that will have to optimize itself on the network of interactions (see Figure 8.5 and the comments). This is an illustration of the implementation of the MEP principle.

REMARK.– In the world of strategic systems, this approach was known in the 1990s–2000s as network-centric warfare, and was accompanied by many publications by the institutes and think tanks working in the world of defense and security. The SDI project, “Star Wars”, is an emblematic example of this.