Chapter 6. Security and Privacy

You might wonder why a book on UX has a chapter about security. Isn’t security the domain of the geekiest geeks? Mathematical wizards like Alan Turing, considered weird even by programmer standards? Isn’t it all about cryptography?

Nothing could be further from the truth. As security guru Bruce Schneier wrote in his classic book Secrets and Lies (Wiley, 2000), “If you think technology can solve your security problems, then you don’t understand the problems and you don’t understand the technology.” User interaction and behavior dictate the success or failure of all security. Come, hear my words, and understand.

Everything’s a Trade-off

The only computer that’s completely safe is one that’s turned off, unplugged, sealed in concrete, and buried ten feet in the ground. But you can’t get much work done on one of those. So any sort of computer usage involves some form of compromise between usability and security. Choosing the right compromises for your application is the difference between success and failure of your project. And avoiding complete boneheaded idiocy doesn’t hurt either.

Security has not historically been viewed as the realm of UX. But by understanding who our users are, what they’re trying to do, and what benefit they expect from doing it, we can generate some notion of the amount of security-related effort they’ll tolerate and channel that effort into the areas that will provide the most safety. That’s our job—to understand users and shape the application for their maximum benefit.

Not that we should demand the keys to the security kingdom. The uber-geeks won’t hand them over, and we don’t really want them anyway. And there are levels of security that we have no business touching. But you and I, the advocates for our user population, need to take our place at the table to guide the trade-offs. Because if our application gets the UX wrong, all security is out the window, and our products are, too.

Users Are Human

I recently attended a talk on computer security at which the speaker described a particular bad-guy exploit and asked, “Why does this happen?” A guy in the audience behind me yelled out, “Because users are stupid.”

The speaker (whom I greatly respect) let the comment pass. But at the end of the talk, I stood up and announced in my auditorium-filling voice, “To the gentleman who said that users are stupid: Users are human. And they will remain human for the foreseeable future. If you expect them to change into something else, then you’re the one that’s stupid.”

What are humans like? Figure 6.1 shows some ideas. They are innumerate (the mathematical analog of “illiterate”—that’s why the gambling industry can exist). They are lazy. They are distractible. They are uncooperative. They are, in a word, human.

Figure 6.1 Humans are (a) innumerate, (b) lazy, (c) distractible, and (d) uncooperative—in a word: human. (Photo by Antoine Taveneaux/Photos © iStock.com/Spauln tap10 and Michael Krinke)

Years ago, we required human users to adapt to the requirements of their software, to become “computer literate” in the idiom of the day. By now you’ve absorbed the message of this book: that day has long since passed, and it is now our job to adapt our software to our users.

In those early days, we didn’t have the Internet continuously connecting every intelligent device on the planet to every other intelligent device on the planet. If typing keyboard commands into a Star Trek game, as we had to do then, was more difficult than clicking a mouse as we do now, at least we didn’t have to worry about bad guys stealing the passwords to our bank accounts while we played. We didn’t have our retail purchases online, or our financial affairs, or our medical data. We didn’t have security threats evolving from script kiddies to the Russian mafia to foreign sovereign nations. The world was simpler and safer then.

While threats to our security keep escalating, users keep demanding apps that are easier to use. For example, many banks would like to demand two-factor authentication, with a password and a phone message, but users resist this extra effort even when it’s done for their safety. Usability guys like us are caught in a nutcracker. But hey, if it was easy, anyone could do it.

What Users Really Care About

If you ask users if security is important to them, they will always answer yes. Very important? Yes, of course, very, very important. But at the same time, security is never a user’s prime goal. When the user’s wife calls down to the basement, “Bob, what are you doing now?” does the guy ever yell back, “I’m being secure!”? No. He’s balancing his checkbook or playing Zork or whatever. Any distraction from that, for security or anything else, is unwelcome.

Users say they want security but are unwilling to divert any effort to it. As Alma Whitten and J. D. Tygar wrote in the book Security and Usability (ed. Cranor and Garfinkel, O’Reilly, 2005), “[Users] do not generally sit down at their computers wanting to manage their security; rather, they want to send email, browse web pages, or download software, and they want security in place to protect them while they do those things. It is easy for [users] to put off learning about security, or to optimistically assume that their security is working, while they focus on their primary goals.”

Users treat security the way they treat defending our country: they feel it’s very, very important, but they want someone else to do it. In addition to all the qualities mentioned before, humans are two-faced. They’ll say one thing and do exactly the opposite and see nothing contradictory about their actions. The statue in Figure 6.2 is 2,000 years old. This is not a new problem.

Figure 6.2 Humans are two-faced. This statue is 2,000 years old. This is not a new problem. (Photo by Loudon Dodd)

As Jesper Johansson, today a Senior Principal Security Engineer at Amazon, has said to me many times, “Given a choice between dancing pigs and security, users will pick dancing pigs every time.” This is the bargain that we accept when we go into this end of the business.

The Hassle Budget

Because users are human, they view any effort required for security as a distraction, an unwelcome intrusion, a tax. Therefore, they will tolerate only a limited amount of it. I have coined the term hassle budget to describe this amount of effort. If your application exceeds your users’ hassle budget, they will either figure out a workaround or toss your app.

Consider the following example. You probably have a lock on the front door of your house. The lock requires a certain amount of effort to install initially, then some amount of effort to carry a key and use it every time you enter your house. You accept this overhead because you consider it small compared to its benefit: keeping random strangers out of your home. This effort is within your hassle budget.

Suppose now that your landlord installed a combination lock on the door of your bathroom inside the house, with spring hinges to automatically close and latch the door. You would probably consider the effort to work this lock every time you need to go to be much larger than the benefit the lock provides. It now exceeds your hassle budget.

How long would you tolerate this? Once, certainly, to get the door open initially. Perhaps twice. No way, nohow, would you do it a third time. Uninstalling it isn’t an option because the landlord holds the power and decrees that you shall have the lock. So you would perform some sort of workaround—tape down the latch, prop the door open with a chair, or say, “To hell with this,” and go piss in the kitchen sink.

Because the landlord’s imposed security requirements exceed your hassle budget, the bathroom actually becomes less secure than it was before. The usual bathroom privacy lock, easily defeated with a screwdriver, provided a polite reminder: “Bathroom in use. Please follow the rules.” With the tape or the chair workaround, you’ve lost even that rudimentary level. And with the kitchen sink workaround, you’ve lost that customer.

And take this thought one step further. The landlord thinks that because he’s installed the combination lock, the bathroom’s security is higher than before. But instead it is actually lower, because the more stringent requirement exceeded the tenant’s hassle budget and triggered the workaround. The landlord now has the worst possible sense of security: a false one.

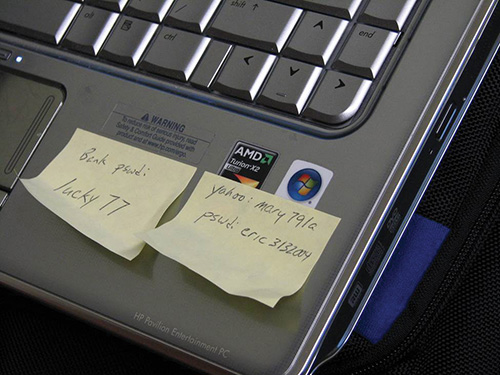

That’s exactly what users do with computer security requirements. If you exceed their hassle budget, they’ll either figure out a workaround or say to hell with it and get rid of your app. Figure 6.3 shows one sort of workaround. And you then have either a system that’s as secure as the workaround, or no customers. Like an ancient Greek tragedy, the more security engineers struggle against insecure users, the less secure they all become.

Figure 6.3 Users will employ a workaround if their hassle budget has been exceeded. (Photo by Jake Ludington)

Hassle budgets are highly subjective. They vary by user, by the benefit the users perceive they will gain from your app, and by the ease of getting that same benefit somewhere else. Aunt Millie’s hassle budget is very low for the genealogy program, slightly higher for seeing pictures of her grandchildren’s high school graduation, but never all that large for anything. She won’t spend time struggling with a computer, for security or any other reason. What do you think she’ll do if her family photo Web site demands that she change her password every three months and insists on a strong password that contains upper- and lowercase letters, a number, and a symbol? She’ll say, “Heck with it,” and go off to knit or play bridge or watch Turner Classic Movies on cable. She knows that her grandson will be delighted to show off those pictures on his new iPhone the next time he comes to visit. On the other hand, 15-year-old techno-nerd Elmer (whiz-bang programmer, terrible at sports, bedeviled by acne, shunned by girls) will do almost anything to obtain undocumented cheat codes to his favorite games. His hassle budget in this case is much higher. (By the way, do you see the power of personas for illustrating this example?)

Respect Your Users’ Hassle Budget

You may think that the hassle budget and its workarounds are wrong and bad and stupid. You may think that further education will cause users to accede to your demands for greater security effort. You’d be wrong. I’ve seen user education touted as the solution to security concerns for at least 20 years. If it were going to work, it would have by now. Why hasn’t it, and what should we do instead?

Cormac Herley, a scientist at Microsoft Research, has published some good papers on the interaction of security with users. I especially admire a brilliant one of his entitled “So Long, and No Thanks for the Externalities: The Rational Rejection of Security Advice by Users.” I strongly urge you to find this paper and read it completely.

Herley has a new take on users’ hassle budgets: they are not wrong or stupid. He argues that the professionals who prescribe security advice to users rarely weigh the cost of following that advice against the harm that advice would prevent if users indeed followed it. He says that “users’ rejection of the security advice they receive is entirely rational from an economic perspective. The advice offers to shield them from the direct costs of attacks, but burdens them with far greater indirect costs in the form of effort.”

Consider an attack that hits one user out of 100 every year and takes the targeted user ten hours to clean up. Ten hours is 36,000 seconds, so the total cost of this exploit is 36,000 seconds per year per 100 users, or 360 seconds per user per year. If the user action required to prevent this exploit consumes more than about one second per day, the treatment is, overall, worse than the disease. An ounce of cure is not worth five pounds of prevention.
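The break-even arithmetic can be checked with a few lines of Python. This is only a sketch of the calculation in the paragraph above; the function name and parameter names are invented for illustration, and the 365-day year is an assumption.

```python
SECONDS_PER_HOUR = 3600
DAYS_PER_YEAR = 365  # assumption: ignore leap years


def breakeven_seconds_per_day(victims_per_year, population, cleanup_hours):
    """Daily prevention effort per user at which prevention costs as much as cleanup.

    Hypothetical helper: spreads the yearly cleanup cost of an attack over
    every user in the population, then over every day of the year.
    """
    total_cleanup_seconds = victims_per_year * cleanup_hours * SECONDS_PER_HOUR
    per_user_per_year = total_cleanup_seconds / population
    return per_user_per_year / DAYS_PER_YEAR


# One victim per 100 users per year, ten hours of cleanup each.
budget = breakeven_seconds_per_day(victims_per_year=1, population=100, cleanup_hours=10)
print(f"Break-even prevention effort: {budget:.2f} seconds per user per day")
```

Any preventive chore that costs each user more than this figure every day consumes more time, in aggregate, than the attack it prevents.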

Herley continues (my emphasis added):

There are about 180 million online adults in the US. At twice the US minimum wage one hour of user time is then worth $7.25 * 2 * 180 million = $2.6 billion. . . . This places things in an entirely new light. We suggest that the main reason security advice is ignored is that it makes an enormous miscalculation: it treats as free a resource that is actually worth $2.6 billion an hour. It’s not uncommon to regard users as lazy or reluctant. A better understanding of the situation might ensue if we viewed the user as a professional who bills at $2.6 billion per hour, and whose time is far too valuable to be wasted on unnecessary detail.

I just saw an article on the Web entitled “Thirty-Seven Ways to Prevent Identity Theft.” If I have to do 37 different things to keep my identity safe, forget it. The bad guys can have the damn thing.

The key, as I’ve said throughout this book, is to understand the user. It is to figure out what the user really wants and needs, to weigh the costs of prevention versus cleanup, and ruthlessly trim, automate, and optimize the efforts we require of our users.

A Widespread, Real-Life, Hassle Budget Workaround

Here’s an example of the users’ hassle budget and the security mafia’s cluelessness as to how to manage it.

Highly paid security gurus pontificate that your password should be random so as to be unguessable (not your wife’s name, or children’s, or dog’s, etc.). OK, that makes sense; I’ve cracked a few by guessing the obvious choices. That’s why I always use a random generator when I need to choose a new password. (See Figure 6.4 for the logical conclusion of this idea.) Then they’ll say that you shouldn’t write it down. Again, I can see the logic of this. That’s one of the ways that Richard Feynman cracked classified safes on the atomic bomb project during the Second World War and left goofy notes, which drove the security people batty. (See his autobiography, Surely You’re Joking, Mr. Feynman!, for the other two ways.) Then they’ll tell you to use a different password for every account you have. Like the other two, it makes sense on its own. Then, finally, they’ll tell you to change them all periodically.

Human beings cannot do all four of these things together. Our brains aren’t built that way; that’s why we invented computers. You can get any two of these things at once, if you’re lucky. Telling users to do all of them while knowing, knowing that they can’t, instead of figuring out how to be as secure as you can with what users actually can (and sometimes even will) do, constitutes malpractice.

Which of these items offers the least bang for the buck? Which spends the users’ hassle budget least wisely? Probably the last one: changing your passwords every so often. Here’s why:

In mathematical theory, periodic changes make a small amount of sense. Changing a password provides some protection against a bad guy’s brute-force attack on a stolen password file. It does not protect at all against a password stolen by a keystroke logger, or by shoulder surfing, or by phishing. Nor does it solve the problem of a user handing over credentials to a bad guy in return for money or sex or revenge. Are you sure there’s no one like that in your network?

If an even-halfway-competent bad guy steals a password, he knows that it will probably have a very short lifetime, like a stolen credit card number. He will therefore use it immediately, trying to steal the maximum amount before the exploit gets noticed and the account locked. The three-month or six-month password reset interval won’t help us here, because the bad guy will almost certainly not encounter it before other security mechanisms kick in.

So this security fix, if it works, only solves the problem of someone stealing a password, and then remaining undetected while observing on the network, like (say) Yahoo spying on (say) Microsoft’s internal telephone directory to woo key employees away. (Of course, if Yahoo really wanted Microsoft employees, a full-page ad in the Seattle Times offering large cash bonuses would probably work better.)

A case might still exist for changing passwords to prevent this last exploit, if such changes did indeed work. But periodic password changes backfire on administrators because their user population is human—in this case, the uncooperative and lazy part of being human. Think about the trodden path in Figure 6.1d, which is called a “desire line.” Were the walkers being asked for too much, to deviate from their path by a few feet to stay on the pavement and keep the grass nice? The askers didn’t think so, but the walkers, with their behavior, said yes. It exceeded their hassle budget. They wouldn’t cooperate.

And neither will users when they are required to change their passwords. I have never, and I mean not once ever in my entire life, seen or even heard of users doing anything other than changing the last character of their passwords, almost always a number in ascending sequence, so they can remember it from one mandated change to the next. They might start with a random password (if they’re forced to), such as w5NCzr#@h. But when they have to change it, they’ll add a suffix to make w5NCzr#@h1. The next one will be w5NCzr#@h2 and so on. The real irony here is that the more random you make the rest of the password, the more the repetitive pattern sticks out. Do you think even a script-kiddie-level bad guy won’t figure it out? My daughter did, at age ten. Because users feel that this requirement exceeds their hassle budget, they perform a minimum-effort workaround that gets the security nazis off their back.
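To see how little effort it takes to spot this pattern, here is a minimal Python sketch. The function names are hypothetical, and it assumes the checker can see both the old and the new password as text, which in practice is only true at the moment of the change. It is an illustration of how obvious the suffix trick is, not a recommended policy check.

```python
import re


def split_trailing_number(password):
    """Split a password into (stem, trailing number); no trailing digits counts as 0."""
    match = re.search(r"\d+$", password)
    if match is None:
        return password, 0
    return password[:match.start()], int(match.group())


def is_trivial_increment(old, new):
    """True if `new` is just `old` with its numeric suffix bumped by one."""
    old_stem, old_number = split_trailing_number(old)
    new_stem, new_number = split_trailing_number(new)
    return old_stem == new_stem and new_number == old_number + 1


print(is_trivial_increment("w5NCzr#@h", "w5NCzr#@h1"))   # first mandated change
print(is_trivial_increment("w5NCzr#@h1", "w5NCzr#@h2"))  # second mandated change
print(is_trivial_increment("w5NCzr#@h2", "Qx8!mLp3&v"))  # a genuinely new password
```

If a dozen lines can recognize the sequence, so can anyone who has seen a single old password.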

Microsoft has 128,000 employees. Changing passwords quarterly, figuring ten minutes per change, costs Microsoft over 85,000 employee hours per year, roughly the equivalent of 42 full-time employees. That doesn’t count the time consumed by resets of forgotten passwords, increased tech support line costs, or the opportunity cost of locked-out employees. Is Microsoft getting value for its money? I think not.
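The figures above can be reproduced with a short Python sketch. The function name is invented for illustration, and the 2,000 working hours per full-time employee per year is an assumption (roughly 50 weeks of 40 hours) that the text does not state.

```python
def password_change_cost(employees, changes_per_year, minutes_per_change,
                         hours_per_fte_year=2000):
    """Return (employee hours per year, full-time-equivalent employees).

    hours_per_fte_year is an assumed value, not a figure from the text.
    """
    hours = employees * changes_per_year * minutes_per_change / 60
    return hours, hours / hours_per_fte_year


hours, ftes = password_change_cost(employees=128_000,
                                   changes_per_year=4,
                                   minutes_per_change=10)
print(f"{hours:,.0f} employee hours per year, about {ftes:.1f} full-time employees")
```

Plugging in your own head count and an honest estimate of minutes per change gives a quick first-order price tag for a password rotation policy.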

The only way to solve this password-changing problem would be to assign each user a new random password every quarter. Of course, users can’t (won’t) remember those, so they will (as always) perform a workaround by writing them down. The new passwords would then be as secure as the sticky notes that they’re written on. Again: Greek tragedy.

So why do companies force users to change their passwords? When I ask the people in charge of such matters, I get two different answers. The first is that many security administrators actually believe that it works. This is scary, because it would mean that the people who are in charge of watching our backs are incompetent. I hope it really means that they aren’t willing to admit that they know it doesn’t, or at least not admit it to me. Sometimes they’ll say, “We have a user education program that takes care of that.” They may well have a user education program, but I’d bet my house it doesn’t take care of that.

The second, smaller, group admits that, no, they probably don’t derive any benefit from periodic password changes, certainly nothing proportionate to the user time it consumes. But they say that, since it shows up on some best practice lists, they don’t have the authority to change it. It’s security theater, a placebo to show the masses, or their technologically illiterate bosses, that something is being done. They sometimes quote the serenity prayer (“God grant me the serenity to accept the things I cannot change, the courage to change the things I can, and the wisdom to know the difference”). And they say that they want to save their political capital for things that really do matter.

And finally, the security administrators are not paying the bill for the user time that these policies waste. In fact, another excellent paper by Cormac Herley (“Where Do Security Policies Come From?,” coauthored with Dinei Florêncio) finds the most stringent policies not where the need is the greatest, measured by either assets guarded or number of attacks. Rather, they find that the strongest security policies are at .edu and .gov domains, which are “better insulated from the consequences of imposing poor usability decisions on their users.” To those decisions and their consequences we now turn our attention.

Case Study: Amazon.com

Amazon is one of the most successful companies ever. It therefore stands to reason that the trade-offs Amazon makes between security and usability are effective ones for its user population. They are tight enough to hold losses to an acceptable level and to keep users comfortable with shopping there. And they are lenient enough that they don’t obstruct users from giving Amazon their money, lots and lots of their money. Let’s examine these trade-offs.

Originally founded to sell paper books (remember them?), and then CDs (them too?), Amazon now sells anything and everything, physical or digital. Its Kindle reader has redefined what it means to read a book or to write one. Its patented 1-Click order system is so effective at facilitating impulse purchases that I had to turn the damn thing off because I was buying too much stuff.

When you first bring up Amazon’s Web page, it is completely generic (Figure 6.5). It shows what other people are buying, but nothing tailored for you. You can search for products and add them to the cart.

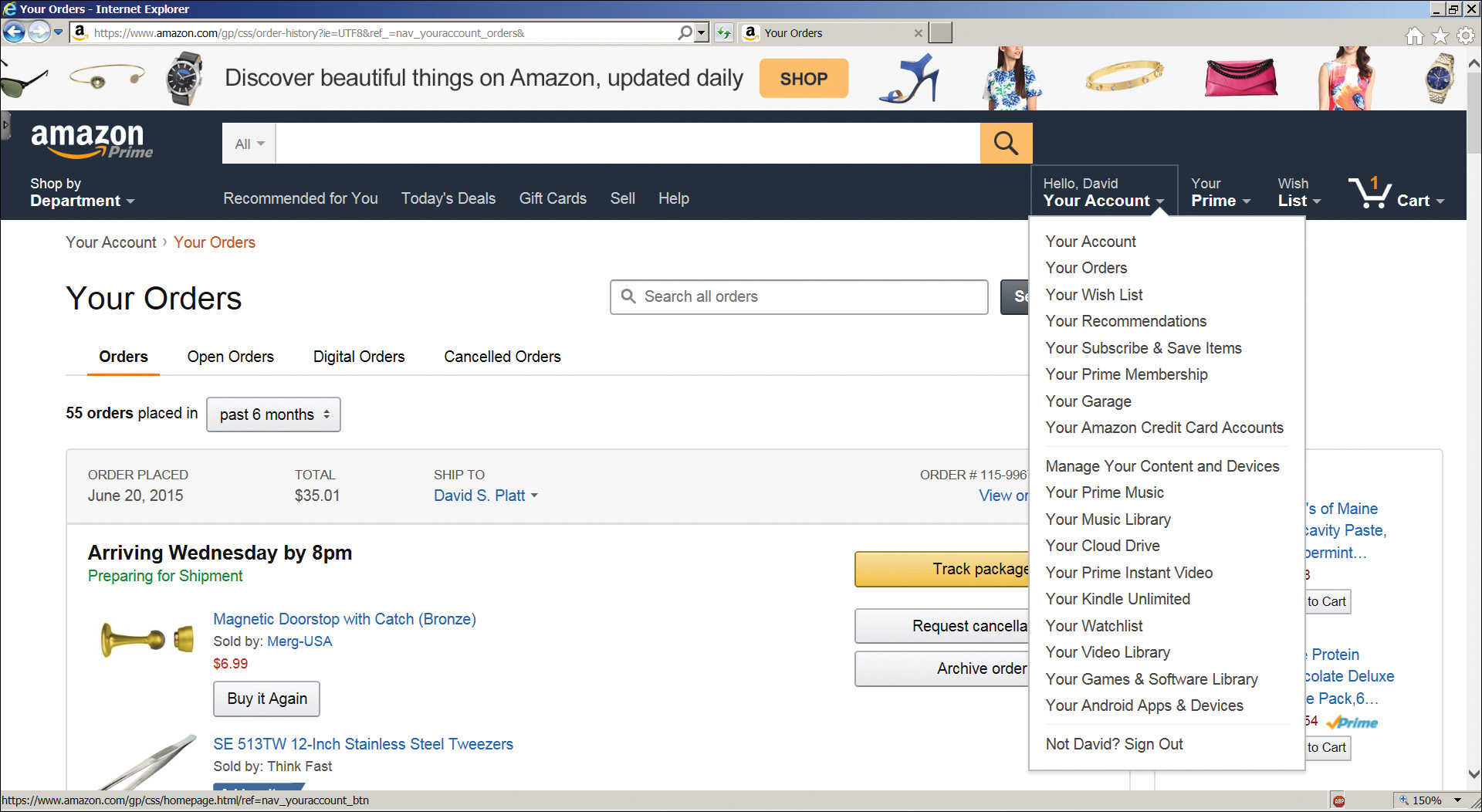

If you want to buy something or check your orders, you have to log in. Note that Amazon at this point (Figure 6.6) does not offer the check box that says “Keep me signed in.”

Now that it knows who you are, Amazon’s home page shows items that Amazon thinks you are likely to want, based on previous purchases (Figure 6.7). You can check out, view your wish list, view or modify previous orders, and so on, as a logged-in user would expect. So far, so standard.

Now, close your browser, reopen it, and go back to Amazon. It still (or again) presents your personalized view as shown in Figure 6.7. It does this by default, even though you didn’t ask Amazon to keep you logged in, and even though Amazon didn’t offer you the option to do that. What’s going on here?

Like most businesses, Amazon makes most of its money from repeat purchasers, and the UX is optimized for this. Amazon figures that since it’s identified you once, and no one else has logged in to Amazon on this computer since, the user is most likely you coming back again, and that both Amazon and you would be happier to pick up where you left off last time.

You aren’t fully logged in at this point. You are in a sort of twilight, semi-authenticated state. “We think you are probably [this person], but we are not sure. Therefore, we will go so far with you and no farther,” Amazon thinks. From this screen you can view your recommendations, which are Amazon’s primary mechanism for encouraging you to buy more stuff. You can see or modify your wish list. You can order items via 1-Click, if you’ve set that up previously. Because you have already successfully shipped items to your 1-Click address, Amazon figures that it’s OK to do that again without further authentication.

If you want to use the standard checkout or view your orders, you need to log in again. Amazon will automatically take you to the login page when you click on any link that requires authentication. Note that when you log in from this intermediate state, the login screen prefills your email address and also contains a check box that allows you to stay logged in (Figure 6.8). If you check this box, Amazon will keep you logged in for two weeks. You can check your orders or buy things from your cart without further authentication. But if you want to change your account settings, such as adding addresses, you have to log in again at that time. And if you want to ship something to an address that you have not previously used, you need to enter your credit card number again at that time.

Figure 6.8 Amazon login screen on a PC where Amazon thinks it knows the user. Note the prefilled email address field, and the presence of a “Keep me signed in” check box.

You see that Amazon provides a hierarchy of escalating privileges, based on how recently you have authenticated. These levels are shown in Table 6.1.
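One way to picture such a hierarchy in code is as an ordered set of trust tiers, with each action mapped to the lowest tier that may perform it. The Python sketch below is based only on the behavior described in this section; it is not Amazon’s actual implementation, and every tier name and action name in it is invented for illustration. Treating card re-entry as the top tier is a simplifying assumption.

```python
from enum import IntEnum


class Trust(IntEnum):
    """Hypothetical trust tiers, ordered from least to most recently proven."""
    ANONYMOUS = 0           # first visit, nothing known about the user
    RECOGNIZED = 1          # browser remembered from an earlier login
    SIGNED_IN = 2           # password entered, possibly with "Keep me signed in"
    JUST_AUTHENTICATED = 3  # password entered again for this specific action
    CARD_REENTERED = 4      # credit card number entered again


# Lowest tier allowed to perform each (invented) action name.
REQUIRED_TRUST = {
    "search": Trust.ANONYMOUS,
    "add_to_cart": Trust.ANONYMOUS,
    "view_recommendations": Trust.RECOGNIZED,
    "edit_wish_list": Trust.RECOGNIZED,
    "one_click_order": Trust.RECOGNIZED,
    "standard_checkout": Trust.SIGNED_IN,
    "view_orders": Trust.SIGNED_IN,
    "change_account_settings": Trust.JUST_AUTHENTICATED,
    "ship_to_new_address": Trust.CARD_REENTERED,
}


def is_allowed(action, trust):
    """True if a session at this trust tier may perform the action."""
    return trust >= REQUIRED_TRUST[action]


def escalation_needed(action, trust):
    """Return the tier the user must reach first, or None if already allowed."""
    required = REQUIRED_TRUST[action]
    return None if trust >= required else required


session = Trust.RECOGNIZED
print(is_allowed("one_click_order", session))
print(escalation_needed("view_orders", session))
```

The point of the design is that the user is asked to prove identity only at the moment an action calls for it, so routine actions spend nothing from the hassle budget.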

Obviously, you might be viewing Amazon on someone else’s computer. It might be someone you trust, say, a family member. Or it might be in an untrusted environment, such as the public library. Amazon provides you the capability to explicitly log out and wipe your history. But that takes work to find and to use. The Sign Out menu option is buried at the bottom of the Your Account dropdown (Figure 6.9). Even the label suggests that it’s not for ordinary usage—“Not David? Sign Out.” How about “Paranoid David in Public Library? Sign Out.” I would be curious as to what Amazon’s telemetry says is the percentage of users who ever do this.

Amazon has calculated that its biggest profits come from ongoing relationships, from being the users’ default option when they need to buy something. Just finished the last of the dental floss? No problemo, order more from your bedside Kindle. “People who bought Tom’s of Maine brand floss also bought Tom’s toothpaste.” Want some? Sure, as long as I’m here, why not? We’re probably getting low.

That’s why Amazon sets the default option to remember the user’s identity from one browser session to another: Amazon makes more money that way. Can it backfire? Of course. Suppose you order something from a public library computer and forget to log out from Amazon. The next library patron can see what you were looking at, or what you’ve recently ordered. It’s not all that much work to connect this knowledge to you personally, especially if you live in a small town as I do. A library patron with a nasty sense of humor could order an embarrassing item sent to you via 1-Click.

But Amazon calculates: Our users probably won’t order from a public PC anywhere near as much as from a home PC. Not never, but a whole lot less. And if they do order from a public PC, they’ll probably be paranoid enough to log out after their session. And if they’re not, public PCs often restart their desktop sessions after each patron. And if not, maybe the next person won’t do anything bad. She could wipe out your wish list or add bad things to it, not a whole lot of harm done, but we bet she won’t. And if she does order something, we’ve limited her to your 1-Click addresses. You’ll probably see the order and cancel it. And if not, we’ll offer you free return shipping and maybe a $20 credit and you’ll probably sing our praises.

Amazon calculates that fixing the errors that do occur with this level of trade-off is more profitable than preventing the possibility by dumping your browser session. Its ubiquitous connection to your life makes the company more money, a lot more money, than the bad guys steal from it. It’s that ounce of cure again.

Amazon does not insist on strong passwords, nor does it ever make you change a password. As I explained, the latter provides very little additional security. Unlike corporate or government or educational establishments, Amazon does, or would, bear the financial burden of bad usability. If a customer comes to Amazon and is told to change her password, she could easily get angry and buy that thing somewhere else on the Web. Amazon usually has good prices, but they’re not always the very best anymore. Rather, Amazon offers a trusted name, excellent customer service, and absolutely killer ease of use.

Another reason that Amazon gets away with relatively weak passwords is that passwords are not the only line of defense against bad guys. Amazon ships goods to specific addresses. Once it’s successfully shipped to your address, it knows that address is good. A random bad guy somehow ordering something on your account would want it shipped to his own address. But if you want Amazon to ship to a new address, you have to enter the credit card number again. And if the bad guy has stolen your wallet and credit cards, you have more problems than just Amazon.

Amazon also has more security going on behind the scenes. Amazon is constantly checking usage patterns—does this purchase fit your pattern? And the credit card companies backstop Amazon. Expensive, highly resalable items will be shipped only to the billing address of the credit card. I had a client who tried to send me a computer for a project he wanted me to work on, and Amazon wouldn’t ship it directly to me for that reason. Once in a while I get a call from Amazon, or my credit card company: “Did you really order [whatever]?” Almost always, I have. “Great! Thank you for using [whatever].” And if not, they’ve found the fraud before I ever would and can stop it right away. This is good defense in depth.

I am not saying that Amazon has the optimum balance between security and usability for all apps at all times. It’s probably too lenient for your bank, which logs you out automatically after ten or so minutes of inaction. It’s probably too stringent for your magazine subscription, which never logs you out no matter what. What you need to learn from this analysis is threefold: first, Amazon has very carefully and thoughtfully adjusted its level of security to balance safety with ease of use; second, Amazon has done that through constantly, repeatedly putting itself in the shoes (Amazon now owns Zappos) of its users; and third, Amazon is making a boatload of money because it is getting the trade-off right. So, too, do you need to carefully adjust your level of security, by putting yourself in your users’ shoes.

Securing Our Applications

Now that we’ve seen what users want from their products (complete security, complete usability) and what they’re willing to do to get it (as little as possible, down to and including nothing at all), what the heck do we do?

Understand Our Users’ Hassle Budget

As with most things in UX, there are very few things in security that are absolutely good or absolutely bad under all circumstances. The level of security that is reasonable and appropriate, and that users are willing to tolerate, for the mutual fund company that holds your million-dollar retirement portfolio is way overkill for Candy Crush. Figure out the user’s hassle budget at the beginning, and work with it. Who is the user? Get out your persona. What problem is Aunt Millie or Reverend Foster trying to solve, and what would they consider to be the characteristics of a good solution? Get out your stories. Where else could they get the same thing done today, if they don’t use your products? Try asking the question “What type of hoops are they willing to jump through to get it?” Phrasing it in this deliberately provocative way will concentrate your mind on minimizing the amount of hassle your apps require from your users.

When you’ve figured out that hassle budget, then try to figure out what sort of workarounds your users will do if they perceive that your security requirements exceed their hassle budget. In a consumer-type situation, they will often simply walk away from your app, and then you’ve spent all your money building an expensive paperweight. Users of enterprise systems, who often do not have a choice of which software to use, tend to be more ingenious with workarounds. For example, I’ve seen situations where users are automatically logged off the network when their PCs have been quiescent for some amount of time. Technical users often resent this, especially if the interval is short, and will write or download applications that stimulate their PC frequently enough to defeat the automatic logout. What workarounds will your users do? Try to make sure that they aren’t ones that will kill your app. And if they are, go back and rethink the security situation. Remember the bathroom lock example.

Start with Good Defaults

Again, the default settings of an app are absolutely critical. And nowhere are the default settings more important than in the realm of security.

Few users ever change their default settings. Many don’t even know that they can, and others don’t know where to start. The rest consider it more trouble than it’s worth, or fear damaging a working installation. UI guru Alan Cooper considers changing default settings to be the defining characteristic of an advanced user. Thinking of all the applications I use regularly, there isn’t one on which I’d consider myself an advanced user in this sense.

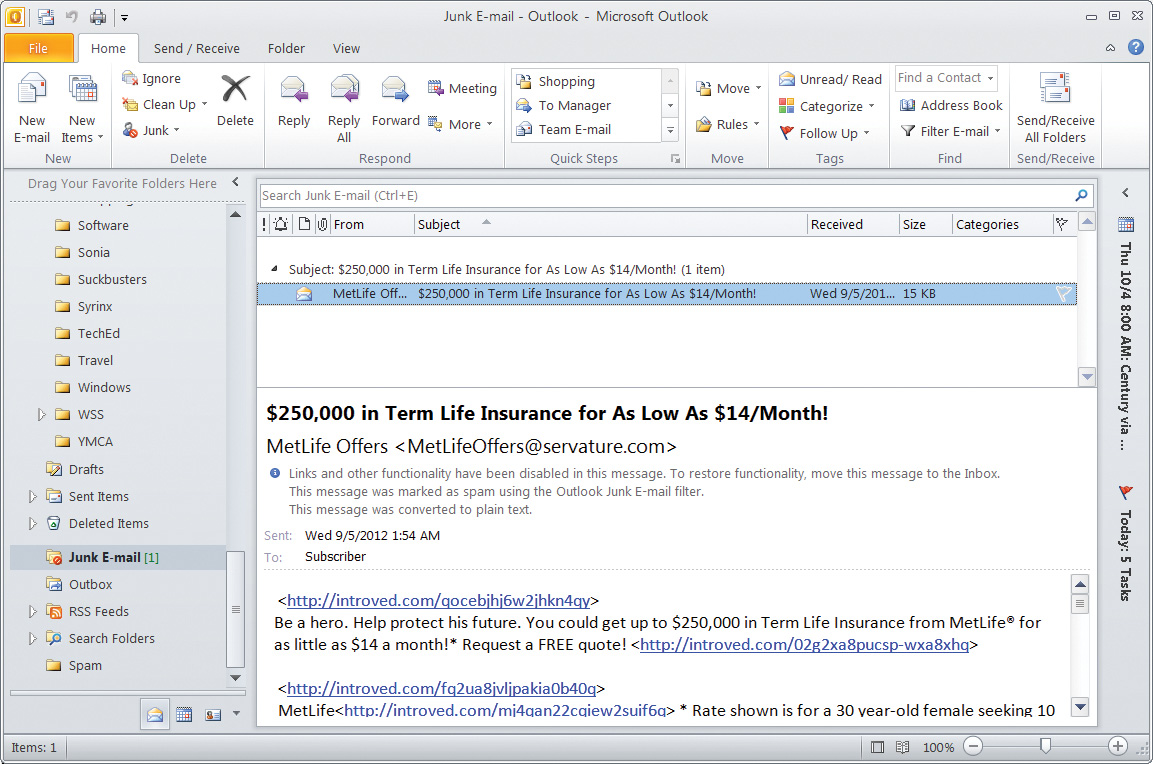

Consider Microsoft Outlook. Like most users, I get a lot of junk mail, usually spam or phishing. Back in the 1990s, I remember right-clicking on an email in my inbox, seeing the “Junk” entry on the context menu, and saying, “Ah! Outlook knows about junk mail. Good, I’ll use it and try to get rid of some of this garbage.” But when I selected that option, Outlook brought up a configuration wizard, asking me questions that I had no idea how to answer. Outlook’s junk mail filtering didn’t do anything at all right out of the box. It insisted that I think. As any human (lazy, forgetful, uncooperative, etc.) user would, I said to hell with it, closed the wizard, and never touched it again.

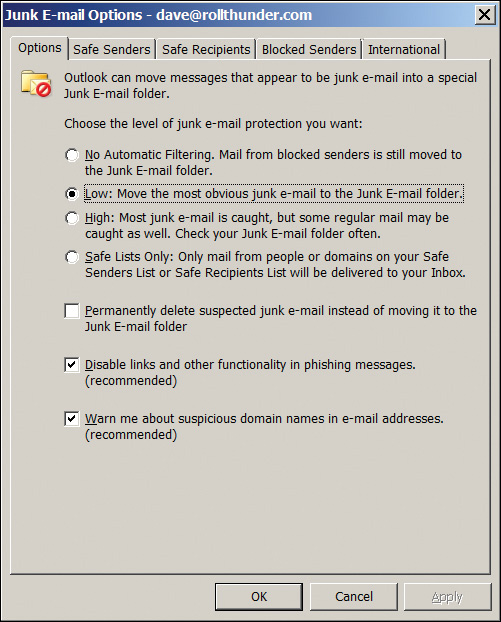

The current version of Outlook, however, installs with decent defaults. When a message comes in that is obviously junk (most users don’t know how Outlook figures it and don’t really care, as long as Outlook gets it right most of the time), it automatically goes to the junk mail folder (Figure 6.10). It’s almost always right, and we can easily see and correct when it isn’t.

Figure 6.10 Microsoft Outlook default junk mail settings automatically send this spam to the Junk E-mail folder.

You can configure this behavior if you want to. But this dialog box is difficult to find. You have to right-click on an email message to get the context menu, then open the Junk submenu, then select Junk E-mail Options . . . at the bottom of that one. Telemetry would provide an exact number, but not very many users will ever see this box, let alone change anything in it.

Figure 6.11 shows these default settings. The filter level is Low, catching only the most obvious junk. Messages that are thought to be phishing have their links disabled. If there’s a suspicious domain in the email address, you get a warning.

These settings aren’t perfect. Any set of defaults will always be a compromise. Not all of these complex setting capabilities are useful (you can, for example, block any messages coming from Andorra should you wish to), but that’s Microsoft Office’s feature bloat for you. The company has done a decent job of putting default settings to work. And you need to do that as well.
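For the developers on your team, the idea can be sketched in a few lines of Python. This is only an illustration, not Outlook’s actual implementation: the class, field, and function names are all made up for the example, and the default values simply mirror the ones described above (filter level Low, links disabled in suspected phishing, a warning on suspicious domains).

```python
from dataclasses import dataclass
from enum import Enum


class FilterLevel(Enum):
    OFF = "off"
    LOW = "low"    # catches only the most obvious junk
    HIGH = "high"


@dataclass
class JunkMailSettings:
    # Hypothetical settings object. Every field has a working default,
    # so filtering does something useful before the user touches anything.
    filter_level: FilterLevel = FilterLevel.LOW
    disable_links_in_suspected_phishing: bool = True
    warn_on_suspicious_domain: bool = True


def folder_for(looks_like_obvious_junk: bool, settings: JunkMailSettings) -> str:
    """Return the folder a message lands in under the given settings."""
    if settings.filter_level is not FilterLevel.OFF and looks_like_obvious_junk:
        return "Junk E-mail"
    return "Inbox"


# No wizard, no questions: the defaults are enough to start filtering.
settings = JunkMailSettings()
print(folder_for(True, settings))
```

The point of the sketch is that `JunkMailSettings()` takes no arguments. A user who never opens the options dialog still gets the protection.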

Decide, Don’t Ask

“Dave, come here! My computer is acting weird again!” I hate when my wife calls me from the other end of the house like that. I just know something bad has come up. Something beyond the capability of my daughters—now 13 and 15—and if a teenager can’t fix it, you know that it’s serious.

She was reacting to a dialog box displayed by Norton Internet Security. Shown in Figure 6.12, the dialog box read, in part: “carboniteservice.exe is attempting to access the Internet. This program has been modified since it was last used.” It then went on to ask if the program should be allowed to access the Internet.

What kind of silliness is this? If all the brainpower at Norton can’t figure out whether this application should be allowed to access the Internet, how the hell is my wife ever going to?

For that matter, how would you or I, computer professionals that we claim to be, go about figuring it out? We know that the name of the process means nothing at all. Even if we stipulate that Norton is correctly indicating the Carbonite process that we installed, how do we know that Carbonite has been properly updated rather than hijacked by a bad guy, a common attack mode?

We don’t, we can’t, and we shouldn’t be asked to. That’s why we buy Norton, to access the top brains in the computer security business. Accepting money for a product called “Internet Security” means knowing how to handle these situations. If the risk is low, Norton shouldn’t be bugging me. And if it’s not low, Norton shouldn’t be saying it is.

What does Norton think it’s doing? I spoke at a conference some time ago, next door to an unrelated computer security conference. When I slid over during a break to scarf their free beer (we only had juice), I saw a guy wearing a Norton badge and jumped on him about this dialog box. He said it makes perfect sense to Norton: “We’re getting the user’s informed consent.”

Sorry, that doesn’t cut it. Informed consent is consent given when you understand the facts, implications, and future consequences of an action. Ordinary users can’t do this, and neither can computer professionals who are not security specialists. Informed consent is impossible in this type of situation.

I opened myself another beer and handed one to the Norton guy, as his meeting was paying for them. He wasn’t giving up. “It’s like the doctor who tells you the risks and lets you decide,” he said.

No, it isn’t. Norton throwing this box in a user’s face is like an airline asking a passenger if he thinks the weather is safe for flying. The passenger is not competent to make such a judgment. That decision requires professional-grade technical knowledge and rests entirely on trained and licensed professionals who hold responsibility for transporting passengers safely. That model works well for air travel, and we should be working the same way.

The main reason we’re seeing this box is likely lawyers. Norton’s lawyers told the developers, in effect, “If you’re not sure, then just ask the user, and you’re off the hook. Then if it breaks, it’s the user’s own fault.” I disagree. It’s our job to get it right.

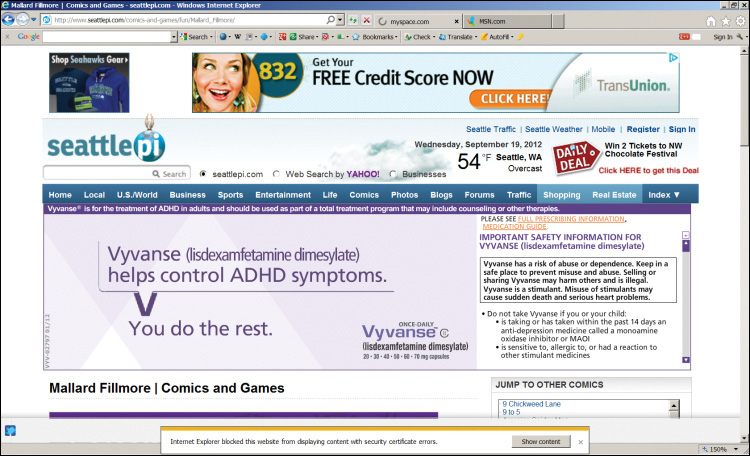

For another example, consider the dialog box in Figure 6.13. It indicates that there is some sort of problem with the security certificate of the target server.

What the heck is going on here? Do you seriously think that Aunt Millie is going to read this box, understand what it means, and make an informed decision about whether to proceed or not? Maybe her sewing circle Web site has been hacked, maybe it hasn’t, but this box doesn’t help. Not at all.

That’s especially true because many legitimate Web sites give you software installation directions telling you to ignore this box. In researching this book, my first page of Google search results showed such instructions coming from UNC Chapel Hill, the government of Oklahoma, and the Consulate General of India, among many others.

Not only does this box not help anything, but it decreases the respect and attention paid to any and all security communications. The “boy crying wolf problem” has been around since at least the time of Aesop (circa 620 BCE), and it probably wasn’t new then. It shouldn’t surprise anyone when the same actions produce the same results today.

It’s especially bad as you will almost never see this message coming from a bad guy’s site. As Herley writes, “Attackers wisely calculate that it is far better to go without a certificate than risk the warning. In fact, as far as we can determine, there is no evidence of a single user being saved from harm by a certificate error, anywhere, ever.”

Figure 6.14 shows a better example. The page contained some content, probably ads, from servers that had certificate errors. Rather than asking the user, IE simply doesn’t display them. It communicates that fact with a bottom bar, far better than a modal dialog box. IE made the decision to err on the side of safety and didn’t ask for permission. Someone who depended on the blocked content could probe and try to figure it out, but it doesn’t bother most users most of the time. It’s a whole lot better than throwing a modal dialog box in the face of someone who cannot possibly know the right answer.

We developers are the experts, and users depend on us. We cannot abdicate our responsibility by asking for guidance from someone who cannot possibly know. Informed consent in computing is a myth, and companies that claim it as an excuse for their malpractice are weasels. Decide, don’t ask. If you can’t decide, decide anyway. Because your users sure as heck can’t decide.
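If it helps to show your developers what “decide, don’t ask” looks like in code, here is a minimal Python sketch. It is not how Norton or IE actually works. The `Program` fields, the assumption that some publisher-signature check is available, and the wording of the notice are all hypothetical. What matters is the shape: the function always returns a verdict, and there is no code path that prompts the user.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Verdict(Enum):
    ALLOW = "allow"
    BLOCK = "block"


@dataclass(frozen=True)
class Program:
    name: str
    modified_since_last_run: bool
    signature_valid: bool  # assumption: some publisher-signature check exists


@dataclass(frozen=True)
class Decision:
    verdict: Verdict
    notice: Optional[str]  # text for a non-modal status bar, or None to stay silent


def decide(program: Program) -> Decision:
    """Pick an outcome without ever prompting the user."""
    if not program.modified_since_last_run or program.signature_valid:
        # Unchanged, or changed by a properly signed update: stay out of the way.
        return Decision(Verdict.ALLOW, None)
    # We can't vouch for it, so err on the side of safety and say so quietly.
    return Decision(
        Verdict.BLOCK,
        f"{program.name} was blocked from accessing the Internet.",
    )


print(decide(Program("carboniteservice.exe", True, False)).verdict)
```

Someone who depends on the blocked program can follow up from the notice, but nobody is asked a question they cannot answer.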

Use Your Persona and Story Skills to Communicate

The geeks who run the security department probably have the least contact with their users of the entire development team. By using your persona and story skills, you can make the users and their hassle budget real to your security developers, so they can truly make informed judgments.

Because we are responsible for the users, it is our job to communicate their needs and concerns to the rest of the design team. This is another reason why the persona and story work needs to be done early, because it will dictate what the security design decisions are, before they are implemented.

Suppose the genealogy program was a Web site, and suppose we were discussing the login requirements. You could say, “Look, here’s our user. Her name is Aunt Millie. She’s 68 years old, doesn’t see too well anymore. Her grandkids are going to set this up for her, but she’s the one who’s going to be using it day to day. Do we have to make her enter a password every day? Couldn’t we let her stay logged in, once we set it up? I don’t think we have to worry too much about the cleaning lady turning it on and destroying her family tree.”

Try using a story to communicate with the developers, and don’t be afraid to play on their heartstrings: “Poor Aunt Millie! She visited the nursing home today, and her mother didn’t even recognize her. She drove home in tears. She so desperately fears winding up like that, and she really wants to get these stories out of her brain before that happens to her. She turns on her PC, clicks the desktop icon that her grandson set up for her, and wants our site to load. Damn! The silly computer wants a password. What happened to the password? She looks around, but the sticky note fell off her monitor and she can’t find it. Can’t we let her stay logged in, like the AARP Web site does?” And so on. Be shameless. I am.

Strengthen Your Stories with Data

One of the main problems with any security decision, as with any other UX decision, is knowing what users actually do, as opposed to what they’ll admit to doing or can remember doing. Today we have telemetry to tell us these kinds of things. Since we, as UX, are in charge of much of this telemetry, we can obtain hard data on which to base our hard decisions.

Microsoft SQL Server, for example, used to ship with a built-in administrator account named “sa,” with a password that was blank. I made use of this in an earlier book for geeks, to make sample programs that would run right out of the box with no user setup. It sure was convenient. But that’s not what passwords are supposed to be for.

SQL Server came with a written checklist of installation instructions, which included changing that password. It was supposed to be installed by a qualified professional, but, being human (lazy, distractible, uncooperative), many of them skipped this step. Bad guys later used this lapse to attack SQL Server over the Internet, causing much sturm und drang.

Had we had telemetry then, we would have the data to say, “You know what? Only 65% of SQL administrators actually change their administrator account password from blank. We really ought to think about requiring them to enter one as we run the installer program. We do that for Windows NT. That should be well within the hassle budget of a professional administrator, no? And if they write it down on a sticky note next to their server box, that’s still safer than an Internet attack.”

You will also have the data to say, “HIPAA [Health Insurance Portability and Accountability Act] regulations require automatic logoff after a system has been inactive for ‘a preset amount of time.’ When we set it to ten minutes, we find that our nurses have to log on an average of 22 times per day, taking about 30 seconds each. Can we try something else? Maybe a longer timeout interval? How about a fingerprint reader? My bank does that for its tellers, and my pharmacy uses them too. Or maybe an RFID reader that senses the badges we already wear to get into the building? That automatic facial recognition getting built into Windows 10 looks cool. Do you suppose it really works? What happens when a guy shaves off his beard?” And so on.
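The arithmetic behind that nurse example is worth spelling out, because the total is what gets a room’s attention. Here is a small Python sketch. The 22 logons and 30 seconds come from the example above; the staff size of 40 is a made-up number for illustration, so substitute whatever your own telemetry reports.

```python
def daily_logon_minutes(logons_per_day: float, seconds_per_logon: float) -> float:
    """Minutes one user spends logging on per day."""
    return logons_per_day * seconds_per_logon / 60


def staff_hours_per_day(logons_per_day: float, seconds_per_logon: float,
                        staff_count: int) -> float:
    """Hours the whole staff spends logging on per day."""
    return daily_logon_minutes(logons_per_day, seconds_per_logon) * staff_count / 60


print(daily_logon_minutes(22, 30))       # 11.0 minutes per nurse per day
print(staff_hours_per_day(22, 30, 40))   # hypothetical staff of 40 nurses
```

Eleven minutes a day per nurse sounds tolerable until you multiply it by everyone on the floor. That multiplied number is the one to bring to the meeting.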

If you have good usability testing videos, they can also make a big difference in communicating users’ feelings. Using the techniques from Chapter 4, you can try various options on the users and record their reactions. Sometimes the videos can speak volumes. It’s one thing to say, “Our users got mad and stormed out.” It’s another thing to actually show the video of them cursing the facilitator up one side, down the other, and throwing the free donuts on the floor.

Cooperate with Other Security Layers

Security is a multilayered heuristic process. Neither user-based security, nor any other layer, can work in the absence of others. The other layers are part of the process and will (at least sometimes) backstop you when your layer fails or gets bypassed.

I once bought a MetroCard (a stored-value fare card) in the New York City subway, paying for it with my credit card. Later that day I decided I needed another MetroCard and tried to buy one. The ticket vending machine accepted my business credit card the first time but rejected it the second time. I called the credit card company, rather annoyed, to see why my good credit wasn’t being accepted. The customer service lady told me that that’s their loss prevention algorithm. When a card gets stolen, bad guys quickly try to use it before it gets reported and stopped, so they buy things that are easy to resell. MetroCards are about the easiest thing to resell in all of NYC. Everyone in the city needs them, and it’s easy to read the remaining value on them. They sell readily for about 50 cents cash on the dollar. So the credit card company will decline a second MetroCard purchase on the same day, figuring it’s probably a bad guy. If I wanted, she’d authorize one more, or I could just wait until tomorrow.

This is the sort of thing with which you are cooperating. You are not standing alone. Don’t try to solve it all in the user layer, because you can’t.
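To make that backstop concrete, here is a rough Python sketch of the kind of rule the customer service lady described. It is my guess at the logic from her explanation, not the card company’s real algorithm, and the category label, the `Purchase` fields, and the `override` flag are all invented for the example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Sequence

# Hypothetical label for goods that are easy to resell for cash.
EASILY_RESOLD = {"transit_fare_card"}


@dataclass(frozen=True)
class Purchase:
    card_id: str
    category: str
    day: date


def should_decline(new: Purchase, history: Sequence[Purchase],
                   override: bool = False) -> bool:
    """Decline a repeat same-day purchase of an easily resold item."""
    if override or new.category not in EASILY_RESOLD:
        # A customer service agent can authorize one more, as in the story.
        return False
    return any(
        p.card_id == new.card_id and p.category == new.category and p.day == new.day
        for p in history
    )


today = date(2016, 5, 2)  # arbitrary example date
first = Purchase("card-1", "transit_fare_card", today)
print(should_decline(first, []))       # first MetroCard of the day
print(should_decline(first, [first]))  # second one, same day
```

Notice that none of this lives in your user interface. It runs in another layer entirely, which is exactly why you don’t have to catch everything yourself.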

Read a Good Book

You should read the book Beyond Fear: Thinking Sensibly about Security in an Uncertain World, by Bruce Schneier (Copernicus, 2006). He is one of the world’s top authorities on computer security, and he fully understands the human element; see the quote that opens this chapter. He definitely groks the concept that security measures have a cost as well as (you hope) a benefit. The book is fascinating, and he writes very well. You need to read it for your own education.

Bury the Hatchet

Security and usability guys often see themselves as adversaries. Scott Adams’s famous comic strip Dilbert fans these flames with a character named Mordac the Preventer. Mordac values security far more than usability, believing that in a perfect world, no user could ever make use of anything. While Dilbert struggles with password requirements, Mordac taunts him with lines from Deliverance that I’m stunned he ever got into a family newspaper.

Have you ever tried sitting down with security people and just chatting? Not even necessarily about imminent business, just “Hey, how are you? Don’t the Red Sox stink this year?” It’s surprising how often no one ever has. They might be a little suspicious at first, but if you don’t immediately try telling them how to run their practices, they might open up to you. Invest in a box of donuts once in a while. Pick a specific example from Beyond Fear and ask them what they think. Eat lunch with them if you can, maybe offer to start a security-usability discussion group. They’d probably like a little love, and they might settle for just a little less hatred.

The Last Word on Security

I’ll give the last word to Cormac Herley, from another excellent paper entitled “More Is Not the Answer” (my emphasis added again):

It is easy to fall into the trap of thinking that if we find the right words or slogan we can convince people to spend more time on security. Or that usable security offers a bag of tricks to cajole users into increasing effort. We argue that this view is profoundly in error. It presupposes that users are wrong about the cost-benefit tradeoff of security measures, when the bulk of the evidence suggests the opposite. The problem with the product we offer is not simply that it lacks attractive packaging, but that it offers poor return on investment. There are many ways to reduce potential harm with more user effort. Yet, when the answer is always “do more,” they don’t sound like the response to any question that the user population asks. There is a pressing need however for better protection at the same or lower levels of effort. Rather than techniques to convince users to treat low-value assets as high, we need advice and tools that are appropriate to value.

Amen.