Chapter 11

Future of the SOC

The best way to predict the future is to create it.

—Abraham Lincoln

Welcome to the last chapter of this journey into the SOC. This book has covered topics ranging from how to build a SOC to what types of practices are common in mature SOCs around the world. You have explored what works today in a mature SOC, but this leaves one final topic open, which is what you should expect to see in future SOCs. Technology is rapidly changing and so are the tactics used by cybercriminals. Responding to these changes requires adapting your SOC practice or eventually you will encounter a compromise. Security is a journey, not a destination. You don’t become secure and move on to another project. Instead, you continuously observe and adapt as described in the OODA loop (covered in Chapter 10, “Data Orchestration”).

This final chapter provides an overview of security industry trends and what I believe will be key elements for the success of the future SOC. Topics are based on a combination of what industry analysts have predicted for the security industry, their expectations for how technology and compute power will improve, and what I’m hearing at industry conferences and in meetings with customers. Think about these concepts and push yourself and your SOC to be forward thinking as you plan your next SOC project and mature your SOC services.

All Eyes on SD-WAN and SASE

The first concept is one that every organization’s C levels have been talking about since early 2020, which is how to address the need for a new network architecture approach designated by Gartner as secure access service edge, also known as SASE (pronounced “sassy”). SASE represents merging WAN and security technologies into a single cloud-delivered service. The crazy thing is there wasn’t a single vendor that offered SASE as it was first defined by Gartner in 2019, but since late 2019 just about every security vendor has claimed that it can provide SASE. The reality is that many vendors are offering only some of the capabilities that are part of what SASE promises, causing an overload of inaccurate marketing with no specific reference to how Gartner explains SASE should be provided by vendors and service providers. This has caused a lot of confusion since Gartner introduced SASE, which is why it is the first future-looking topic that needs to be clearly explained in this chapter, as it will impact your SOC today and in the future.

If you want to be forward thinking, you need to develop a SASE strategy. Next, I’ll explain why doing so is critical for the future of your SOC.

VoIP Adoption As Prologue to SD-WAN Adoption

To understand SASE, let’s first step back and review a technology trend that was similar in its transformational impact on the IT industry but focused on how organizations run their phone systems. Prior to the invention of Voice over IP (VoIP), organizations would have telephone service provided by a telephone company and Internet service provided by an ISP. Phone system technology was very basic, providing dial tone and limited calling features. Sometime in the 1990s, VoIP started to be introduced as a way to combine phone service and Internet service into a single plan, saving organizations tons of money. On top of the savings, organizations also got to use bleeding-edge VoIP phones, which included centralized management systems with simplified user interfaces and other cool features that dramatically improved end-user satisfaction.

All the benefits of VoIP caused a major transformation in how organizations viewed what they needed for voice communication. By 2000, VoIP was considered a common technology in organizations. Retail stores to large enterprises all had computer-based phone systems. VoIP was an industry-transforming technology, causing many engineers skilled in deploying and managing analog phone systems to have to switch to digital phone systems or face job obsolescence. Today, it is rare to find an enterprise using analog phone systems.

VoIP Benefits Summarized

Some of the reasons why VoIP changed the IT marketspace can be summarized as follows:

Combined Internet bill and phone bill, leading to savings

Offered bleeding-edge technology:

New IP phones

Centralized management

Bleeding-edge features such as customized ring tones and Music on Hold

Better performance monitoring to understand return on investment

More options for security

Became a trend everybody else was investing in

Perceived as providing a competitive advantage

Introduction of SD-WAN

Around 2018, a new transformational technology was introduced that had characteristics similar to VoIP in regard to the value being provided and the reasons why organizations became interested in adopting the technology: software-defined wide-area networks, more commonly referred to as SD-WANs. The traditional way to host multiple offices is to connect each branch office over a WAN link using a network protocol. Early deployments used point-to-point or Frame Relay lines; however, those technologies were replaced with Multiprotocol Label Switching (MPLS).

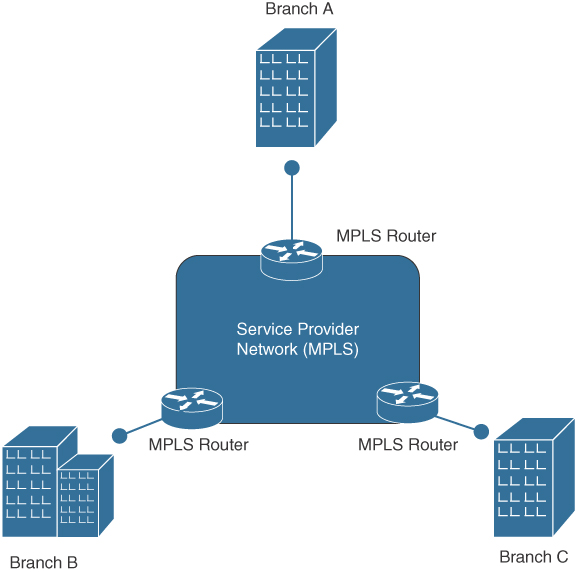

With MPLS, the organization is required to pay one service provider for each branch location, and traffic must be managed from a router connected to the provider’s MPLS connection in order for communication to take place between offices. The service provider controls throughput and is essentially a blind spot between branch offices: the organization can send and receive traffic only through the service provider connection, and its network administrators don’t see what occurs while the traffic crosses the service provider’s network. Managing multiple branch offices means the organization must manage multiple routers that provide the MPLS connections to the service provider’s network. If the router at either side of the MPLS connection experiences problems, the entire site will lose connectivity unless a high-availability link is in place, costing the organization even more for the additional MPLS service. Figure 11-1 represents a traditional MPLS network design.

FIGURE 11-1 Traditional WAN Connection Between Different Branch Locations

Challenges with the Traditional WAN

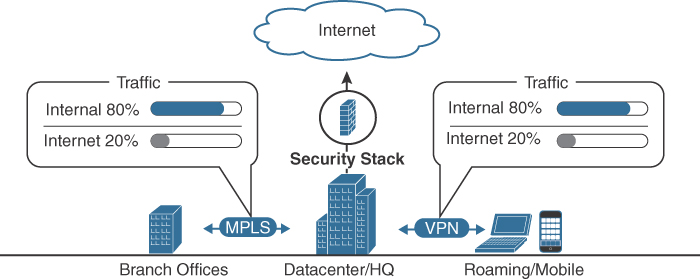

In the past, traditional network traffic was predictable and security needs were simple to understand: secure access to critical resources in the datacenter. The majority of datacenter-based traffic would be internal, and the remaining traffic that had to be sent outside the organization would be forced through a centralized point known as the network gateway, which included a stack of security capabilities to protect the traffic from cyberattack. The gateway security stack would typically have a firewall, IPS, and possibly additional file analysis technologies that could run files in a sandbox to better understand their intent or send them to a cloud team to analyze. Any remote users would be required to use a VPN to gain access to the datacenter, and all of their traffic sent through the VPN tunnel back to the internal network, whether inbound or outbound, would be forced through the security stack. Figure 11-2 represents a high-level view of traditional network and security for the datacenter.

FIGURE 11-2 Traditional Network and Datacenter Architecture

Over time, new challenges required multiple exceptions to be made in order to maintain operations. First, the datacenter’s traffic needed more external access to the Internet, swinging from an 80/20 ratio of internal versus external daily traffic to a 20/80 ratio. The increase in external-bound traffic put stress on the gateway, causing a performance bottleneck to occur. The bottleneck problem increased as remote-user traffic was forced through the gateway, leading to the entire organization experiencing performance issues. The VPN overload created the need for split tunneling, which separates normal user traffic from traffic that needs to go over the VPN. Split tunneling reduced the stress on the gateway; however, it also exposed user traffic to the risk of attack because it was no longer protected by the security tools in the gateway. On top of all of these challenges, cloud technologies such as Software as a Service (SaaS) and Infrastructure as a Service (IaaS) started to be used without controls. This created an unknown data-sharing and operations space that the organization’s security team could not see (this space is sometimes referred to as dark operations for this reason). Figure 11-3 represents a high-level view of these challenges applied against the traditional datacenter and network architecture.

FIGURE 11-3 Challenges with Traditional Networking, Datacenters, and Roaming Users
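The split-tunneling decision described above can be illustrated with a short Python sketch. This is a simplified model under stated assumptions, not how any particular VPN client works: the prefix list and the `route_for` function are hypothetical names chosen for illustration.

```python
import ipaddress

# Hypothetical corporate prefixes (an assumption for this sketch).
# A real deployment would receive these from its VPN policy.
CORPORATE_PREFIXES = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.50.0/24"),
]


def route_for(destination: str) -> str:
    """Return 'vpn' for corporate destinations and 'direct' for everything else."""
    address = ipaddress.ip_address(destination)
    if any(address in prefix for prefix in CORPORATE_PREFIXES):
        return "vpn"      # tunneled back through the gateway security stack
    return "direct"       # sent straight to the Internet, bypassing the stack
```

Any destination that comes back as `"direct"` never touches the gateway security stack, which is the exposure that split tunneling introduces.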

SD-WAN to the Rescue

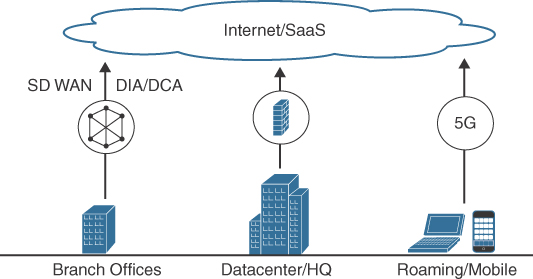

SD-WAN changes the old model of connecting branch offices and managing north–south traffic from the datacenter by allowing an organization to use other protocols such as Long-Term Evolution (LTE) and Digital Subscriber Line (DSL) along with MPLS when a more reliable connection is needed between branch offices. Allowing the use of other protocols translates to saving organizations a ton of money as well as allowing for the most optimal path based on the application being accessed. The key to the value of SD-WAN is that the SD-WAN service, managed by the organization (not the service provider), controls the allocation of bandwidth. In addition, SD-WAN supports much improved high availability because it allows multiple services to run in an active/active type of architecture: if one line goes down, another line can take on the workload. Looking back at my real-world story of what can go wrong with a traditional MPLS connection, if SD-WAN had been in use, the branch office that went down when the cleaning person bumped the connection cable between offices could have automatically failed over to another line such as a much less expensive DSL line, keeping the network up until operations returned to work at a more reasonable hour. SD-WAN removes the need for an on-call technician to arrive onsite late at night! Figure 11-4 represents the transformative change SD-WAN makes in how the branch, datacenter, and roaming user access cloud resources. This approach removes the dreadful bottleneck by not forcing traffic through a centralized gateway.

FIGURE 11-4 SD-WAN Approach to Connectivity
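To make the path-selection and failover idea concrete, here is a minimal Python sketch. It assumes a simple policy (cheapest healthy link that meets an application's latency target, otherwise the lowest-latency healthy link); the `Link` fields, the `pick_link` function, and the cost figures are hypothetical and do not reflect any vendor's actual algorithm.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Link:
    name: str             # e.g. "mpls", "dsl", "lte"
    up: bool              # current health of the link
    latency_ms: float     # measured latency
    cost_per_mbps: float  # illustrative relative cost, not real pricing


def pick_link(links: List[Link], max_latency_ms: float) -> Optional[Link]:
    """Choose the cheapest healthy link that meets the latency target.

    If no healthy link meets the target, fall back to the lowest-latency
    healthy link. Return None only when every link is down.
    """
    healthy = [link for link in links if link.up]
    if not healthy:
        return None
    good_enough = [link for link in healthy if link.latency_ms <= max_latency_ms]
    if good_enough:
        return min(good_enough, key=lambda link: link.cost_per_mbps)
    return min(healthy, key=lambda link: link.latency_ms)
```

With an MPLS, a DSL, and an LTE link all up, a latency-tolerant application lands on the cheaper DSL line, while a latency-sensitive one stays on MPLS. If the MPLS link is marked down, the same call simply returns one of the remaining links, which is the automatic failover behavior described above.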

SD-WAN Benefits

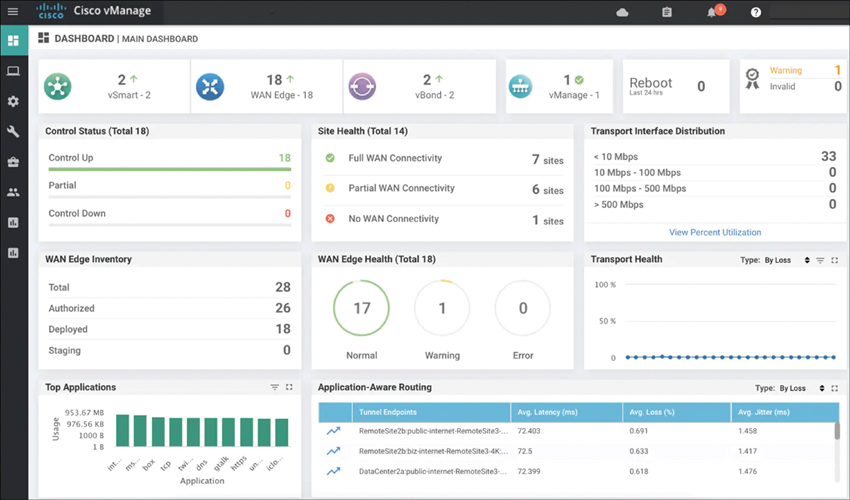

SD-WAN offers much better management technology than MPLS, including options to measure performance and improve response time when there are potential issues with connectivity between sites. Having more visibility means IT has a much better understanding of the problem. Looking back at my example, if a connection issue occurs, IT can identify whether the issue is a problem at the application layer, a problem on the branch side of the connection, a problem with the traffic traveling over a WAN connection, or a user problem, whereas in the real-world MPLS scenario I described I had to be physically onsite to figure out the problem. As stated earlier, SD-WAN reduces the likelihood that an on-call engineer will be engaged because the technology is able to better understand the problem and offer automated corrections when a problem occurs. Figure 11-5 shows an example of the Cisco vManage SD-WAN management dashboard summarizing various WAN performance statistics. SD-WAN dashboards such as this provide far more information than traditional WAN management platforms.

FIGURE 11-5 Cisco SD-WAN Dashboard Example

To summarize the value of SD-WAN, the following are five core values gained by investing in SD-WAN:

Software intelligence exists within a network virtual environment providing agility and flexibility using a web portal configuration, versus managing routers and traffic monitoring taps.

SD-WAN leverages an Internet connection, which is much cheaper than an MPLS service line; however, MPLS can be used when dedicated lines are needed.

SD-WAN is an agnostic technology, allowing you to select the right connection for your business, whether it is using the Internet, MPLS, or other service.

SD-WAN is able to terminate any connection from any source and apply security, load balancing, and local traffic shaping depending on network conditions.

SD-WAN builds on the traditional WAN router and is revolutionizing the telecoms market.

If you compare why organizations have invested or will invest in VoIP technology and SD-WAN technology, you will find that both technologies offer similar value points and both are considered major disruptions to IT services. Both technologies provide huge cost savings by reducing what organizations must pay to an external service provider, and both offer modern technology as part of the migration plan, which includes better management, troubleshooting, and an overall better experience for end users and IT managers. Unlike VoIP, however, SD-WAN comes with a major catch that is causing many organizations to hold back from making a full SD-WAN investment. That major roadblock to the success of SD-WAN is security!

SASE Solves SD-WAN Problems

Figure 11-4 showed a general concept of the SD-WAN architecture, which essentially involves connecting branch networks over the Internet without using a service provider’s network. Any security professional will point out that a major concern with this approach to connecting your offices is that SD-WAN exposes to the public Internet what in the past was somewhat hidden through a private service provider network, putting SD-WAN traffic at risk of attack from anywhere in the world. Imagine an organization that traditionally sent all traffic from multiple locations around the world through a service provider network and only had to focus on traffic hitting the Internet at a few gateway points. With SD-WAN, every branch office becomes a network gateway that must be secured. When I meet with organizations excited about SD-WAN and I mention the concept of securing every network point as part of the deployment, common responses are “Wait, now we need a firewall and IPS at every branch office?”; “Wait, now every branch office can be attacked by malicious parties somewhere on the Internet?”; and “Wait, now we’re exposed to exploitation in a way we can’t monitor and are not ready to protect against?” These concerns are real, which is why every SD-WAN conversation must also be a security conversation. Security must be included in order for SD-WAN to be operationally feasible, which is why Gartner coined the term secure access service edge.

SASE combines network security features with WAN capabilities to support the dynamic secure access needs of organizations exposing every branch and its users to the risks associated with the Internet. Security for organizations also needs to extend beyond the branch, and so does Gartner’s vision of SASE, meaning all aspects of cloud security must be included. SASE includes connectivity between clouds, the use of Infrastructure as a Service (IaaS), Platform as a Service (PaaS), Software as a Service (SaaS), and even the remote worker accessing the Internet from a mobile device over a cellular network. For WAN capabilities, Gartner’s SASE model leverages the value provided by SD-WAN, including performance, troubleshooting, and managing networks, combined with security best practices recommended in popular industry guidelines such as those published by ISO and NIST. SASE considers how the future employee will access applications from their office or from the cloud, meaning traffic might never cross an employer-owned connection yet still needs to be secure. A true end-to-end SASE offering as defined by Gartner represents all of this as a unified service.

Note

I have been asked if secure SD-WAN and SASE are the same thing. My answer is no. Securing SD-WAN is only part of the SASE story. Securing SaaS usage (such as Dropbox or Box) is an example of a cloud problem that has nothing to do with SD-WAN, yet is part of the Gartner SASE vision. Another example is that Gartner’s SASE model includes data loss prevention concepts. I consider securing SD-WAN to be a subset of SASE, but more is required than just securing SD-WAN to qualify as a SASE offering.

Gartner Shoots the SASE Flare Gun!

Gartner’s SASE predictions represent a warning to the IT industry of a major disruption that will occur. Cloud adoption is going to happen at a pace that hasn’t previously happened. SD-WAN will become as common as VoIP is today in most organizations. Solving the security challenges that SASE solves will be a normal expectation from IT providers. Those that don’t see the warning flare from Gartner will miss a huge market shift and likely not survive. Gartner is throwing huge numbers behind its SASE prediction with the following statements:

By 2023, 20% of enterprises will have adopted secure web gateway (SWG), cloud access security broker (CASB), zero trust network access (ZTNA), and branch Firewall as a Service (FWaaS) capabilities from the same vendor, up from less than 5% in 2019.

By 2024, at least 40% of enterprises will have explicit strategies to adopt SASE, up from less than 1% at year-end 2018.

By 2025, at least one of the leading IaaS providers will offer a competitive suite of SASE capabilities.

To be clear on the numbers, 40% of enterprises represents billions of dollars of potential business for IT vendors. On top of that, these predictions show customers investing more with a single vendor, meaning the vendor with the best SASE offering has the potential to claim many areas of security and network business from firewall and branch service providers. This is why many experts are warning that if an IT company does not adapt to the SASE model, it will become obsolete and locked out of future business. As a result, multiple SASE-focused acquisitions occurred in 2020, including the following:

Palo Alto Networks acquired CloudGenix

Fortinet acquired OPAQ

VMware acquired Nyansa

McAfee acquired Light Point Security

Zscaler acquired Edgewise Networks

Cloudflare acquired S2 Systems

What are all of these organizations trying to achieve through their acquisitions? Read on to find out.

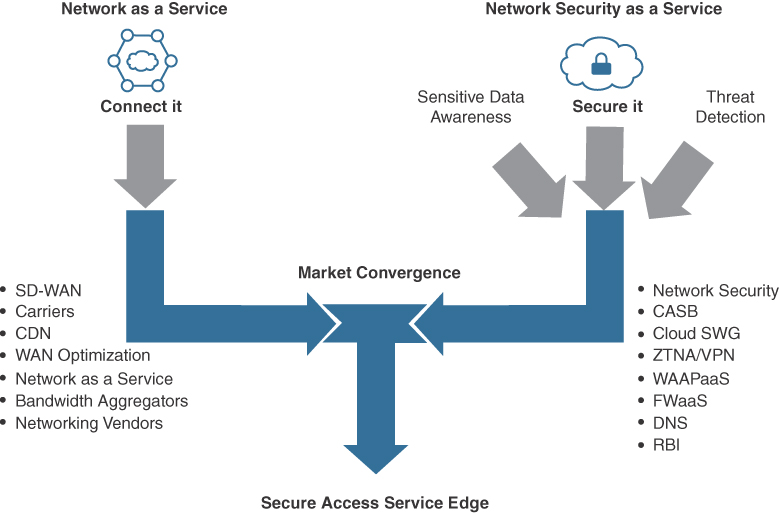

SASE Defined

Figure 11-6 represents a summary of different capabilities that are converging into the SASE concept from both the “connect it” side, meaning SD-WAN, and the “secure it” side via security capabilities. The challenge for this to occur, as pointed out by Gartner, is that many security solution providers do not offer SD-WAN or have experience with providing networking services, while many SD-WAN service providers have not built security into their offering as described by the Gartner SASE model and instead must partner with a third-party bolt-on security approach. This is also why Gartner stated in 2019 that no vendor was currently offering SASE as a single service. Some organizations were closer than others in 2020, and all security vendors who plan to stay in business while offering the capabilities listed in Figure 11-6 continue to adjust their strategies to accommodate the expected SASE disruption to the IT market. Gartner sees all of the components in Figure 11-6 as being a single service for a single cost, which Gartner believes is a true SASE service.

FIGURE 11-6 Services Combined to Form SASE

Future of SASE

Based on conversations I’ve had with executives of enterprises around the world, I’ve identified core fundamental SASE capabilities and methodologies that will exist in the SOCs of the future, some of which may already be present in your SOC. The data points in the sections that follow are lessons learned from the disruption of SASE and my predictions for how SASE will impact the future SOC. It is important to be forward thinking in your SOC planning by considering how cloud topics such as SASE will shape technology and how organizations will leverage IT services in the future.

Prediction: Integrated Network and Security

Organizations will look to acquire SD-WAN with security included versus keeping security as a dedicated expense and technology to manage. Organizations are already recognizing SD-WAN as a way to reduce the cost of services, but they will not want to spend more to secure it than they can save by switching to an SD-WAN architecture. Organizations are more likely to invest in SD-WAN if it is secure (as described by SASE) and still provides cost savings after everything is acquired and deployed. Referring again to the example of VoIP adoption, migration to VoIP accelerated rapidly after the industry realized switching to VoIP had an extremely short return on investment, meaning the entire system would pay for itself within three to five years. If a SASE offering can demonstrate a similar quick return on investment with security included, organizations will rapidly jump on the offering.
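The return-on-investment reasoning above comes down to simple arithmetic, sketched here in Python. The function name and every dollar figure are made-up placeholders for illustration; they are not data from any real deployment.

```python
from typing import Optional


def payback_years(upfront_cost: float,
                  annual_wan_savings: float,
                  annual_security_cost: float) -> Optional[float]:
    """Years until the migration pays for itself, or None if it never does.

    Net annual savings are what remains of the WAN savings after paying
    for the security needed to make the new architecture safe to run.
    """
    net_annual_savings = annual_wan_savings - annual_security_cost
    if net_annual_savings <= 0:
        return None  # securing the WAN costs as much as (or more than) it saves
    return upfront_cost / net_annual_savings
```

For example, a hypothetical 300,000 upfront cost with 150,000 in yearly WAN savings and 50,000 in yearly security spend pays back in three years. If the security spend ever meets or exceeds the savings, the function returns `None`, which is the scenario organizations want to avoid.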

Note

In my role as a trusted advisor for organizations ranging from Fortune 500 to federal government, I meet with customers interested in buying SD-WAN. I find much lower success rates when SD-WAN is covered by a trusted advisor without security being addressed since it leaves a huge gap in what needs to be addressed for the deployment to be successful. I tell my sales teams the likelihood of a customer adopting SD-WAN when security is addressed upfront increases by as much as 80%, according to previous sales history that is tracked based on win rate percentages. This is based on my personal experience with various types of organizations.

Prediction: SaaS Is the Future of Security

Software as a Service (SaaS) will continue to grow and it needs to be secured with technologies like cloud access security broker (CASB) and access control. Although SaaS means you are outsourcing most of the responsibilities to a service provider, you are still responsible for your data. I point out the concept of securing data in a SaaS environment because you must secure your data with the limited technologies that are available, while other aspects of security are covered by the SaaS provider. The future of SaaS will include security such as CASB capabilities as part of the offering, representing a flavor of SASE by combining a service with security for a single cost or as an option in a SaaS service-level agreement. This makes sense as CASB is a security tool that acts as an intermediary between the users and cloud service provider, allowing the users’ organization to enforce desired security policies and monitor how SaaS is used. I predict CASB offerings will become part of other services and will no longer be a dedicated solution, as it just makes sense to combine this with many SaaS services.

Prediction: Dynamic User/Device Fingerprint

Identifying a user and/or device is and will continue to be based on multiple factors, which include where the user/device is located, what type of system the user is on, and what applications the user/device is permitted to access. Results of how a user/device is fingerprinted can dynamically determine what access rights they will be provisioned, regardless of where they are located, meaning the place of work can be anywhere in the world at any time. This type of technology exists today; however, it will continue to get better. Areas of improvement include the accuracy and details associated with a user/device, the potential risk, relating the user/device to historical data, comparing the user/device against behavior and attributes found with similar users/devices around the world, and continuously adapting to changes. I have seen movies depicting these types of futuristic features, such as a device scanning a user’s eye from across the room to provision various services at an office or an automated voice greeting by name a customer walking into a retail store and then suggesting what the customer should purchase based on previous buying habits. I have seen aspects of these features already used in the real world, with collaboration technologies leveraging big data trends blended with scraping social media accounts and facial recognition technology.
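As a rough illustration of how a fingerprint can drive access rights dynamically, the following Python sketch scores a few signals and maps the score to an access level. The signal names, weights, and thresholds are all assumptions invented for this example; real products use far richer data and their own scoring models.

```python
def risk_score(signals: dict) -> int:
    """Add up illustrative risk weights for a user/device fingerprint (0-100)."""
    score = 0
    if not signals.get("managed_device", False):
        score += 40  # unknown or personally owned device
    if not signals.get("known_location", False):
        score += 30  # location not previously seen for this user
    if signals.get("os_outdated", False):
        score += 20  # system is missing current patches
    if signals.get("behavior_anomaly", False):
        score += 30  # activity deviates from historical behavior
    return min(score, 100)


def access_level(score: int) -> str:
    """Map a risk score to the access rights that get provisioned."""
    if score < 30:
        return "full"
    if score < 60:
        return "limited"
    return "deny"
```

Because the score is recomputed from current signals, the same user can receive full access from a managed laptop at a known location and only limited access a few hours later from an unmanaged device.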

Prediction: OPEX Replaces CAPEX

Organizations will increase investment in subscription-based technology with the goal of converting CAPEX (capital expenses, meaning to buy something) to OPEX (operational expenses, meaning the cost to do something), reducing the need to acquire and manage technology. Many IT hardware providers have already made this shift, and the future of successful technology providers is dependent on recurring revenue business models. For example, most vulnerability scanning providers in the past sold their scanners only as software you downloaded, installed, and managed (CAPEX). Today, it is more common for vulnerability scanning providers to offer the same technology from the cloud and as a subscription service (OPEX). From a customer viewpoint, the customer doesn’t have to deal with setting up or updating the product, and management of their scanning is accessible from anywhere that has Internet access. From a vulnerability service provider viewpoint, the vendor can quickly add new features, onboard customers, and troubleshoot tickets, enabling them to offer a better experience to customers. SaaS also curtails pirating of software and allows for more control over how customers can test out their solution using a cloud-based proof of concept approach.

Prediction: The Office Is Everywhere!

User performance needs and requirements for flexibility will continue to increase, forcing organizations to move security to the application and host rather than requiring traffic to tunnel through a security stack. In older network designs, organizations would build a defense-in-depth design at the network gateway and put a VPN concentrator at the front end. Any VPN user requiring access to internal resources would have to send traffic through a VPN back to their organization’s VPN concentrator, forcing traffic through the security stack within the gateway. Throughput requirements have already forced many organizations to leverage split tunneling, which sends only those requests that need to go to the organization through the VPN while separating and sending all other traffic over the Internet. I previously explained how battling the bottleneck problem, meaning having too much traffic forced through a stack of security solutions, became a major driver for SD-WAN.

User traffic going through the gateway security stack is becoming more difficult to inspect, both because of SSL/TLS encryption and because data privacy rights require certain traffic to remain encrypted even if decryption options are available. Secure Internet gateway (SIG) is a SASE concept that addresses this design problem by forcing user traffic through a cloud-based security stack, relieving organizations of the responsibility to force traffic through their corporate-owned security stack. SIG is growing in popularity and is a concept that will become the standard way to secure traffic from employees who are not physically at a corporate office. Essentially, security will follow the users, devices, and data rather than everybody having to accommodate restrictions based on how the network is designed. The need for encryption will not go away, but VPN as a service will become a more common way to encrypt traffic than a traditional VPN hosted from a VPN concentrator owned by an organization. The COVID-19 pandemic has prompted many organizations to adopt a work-from-anywhere mentality to accommodate their workforce having to work from home. Many organizations I have spoken with since the pandemic began are developing a work-from-anywhere architecture not only to improve work efficiency but to prepare for any future event that would force their employees to work from home. Some organizations have even announced permanently using a work-from-home model as it has been shown to work during the pandemic.

Prediction: Increase Data Loss Prevention Needs

Demand for data loss prevention and data-at-rest encryption will continue to increase as both technology options become easier to deploy and manage. Frameworks such as Zero Trust are extremely popular in concept but difficult to operationalize. In theory, the concept of encrypting important data is easy to state as a requirement; however, the biggest hurdle is determining what data is considered important and requires protection.

Predefined data such as credit card numbers and Social Security numbers are easy to set up for monitoring; however, automatically labeling an organization’s data as important enough to be encrypted is much harder based on all the dynamics of what can be considered important and how fast data changes. Advances in technology based on machine learning and artificial intelligence are allowing for quicker and better decisions to be made regarding the importance of data, which can translate to more accurate and automated data loss prevention and data-at-rest encryption technology. The future of the modern SOC will include automated data loss prevention and data-at-rest encryption technology as a backbone of its security practice, similar to how antivirus is used today.
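To show why predefined data is the easy case, here is a minimal Python sketch that flags candidate credit card and Social Security numbers with regular expressions and confirms card candidates with the Luhn checksum. The patterns and the `find_sensitive` helper are simplified assumptions for illustration; commercial DLP tools use considerably more context than this.

```python
import re

# Simplified patterns (assumptions for this sketch, not production rules).
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")  # 13 to 19 digits
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")      # e.g. 123-45-6789


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:      # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def find_sensitive(text: str) -> list:
    """Return (label, matched_text) pairs for predefined sensitive data."""
    findings = []
    for match in CARD_PATTERN.finditer(text):
        if luhn_valid(match.group()):   # drop digit runs that are not cards
            findings.append(("credit_card", match.group()))
    for match in SSN_PATTERN.finditer(text):
        findings.append(("ssn", match.group()))
    return findings
```

Nothing comparable exists for "this spreadsheet is strategically important," which is why the text above expects machine learning to carry the harder classification problem.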

Prediction: Goodbye Tier One Support

Tier one support in most organizations represents the group responsible for basic customer issues. They are seen as the first responder when an employee has technical needs. I predict tier one support for services will be fully automated in the future SOC. As SD-WAN adoption increases, organizations will have access to more details on how their services are functioning. These details will open the door to automated response when issues are found in a service and automated response when a potential security event is occurring.

By automating responses to these situations, tier one support will not be needed because the network will know when an issue or attack is occurring and either resolve it on its own or send event data directly to higher-tier support when its automated response fails to remediate the situation. Because SASE is a cloud-based service, support for tier one services will move to the service provider, saving the SOC the need to offer any low-level support services. Automated tier one support is in common use by businesses via automated assistance that attempts to solve your problem rather than sending you to a human.
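The resolve-or-escalate flow described above can be sketched in a few lines of Python. The event format, the playbook registry, and the `handle_event` function are hypothetical; they only illustrate the control flow, not any specific orchestration product.

```python
from typing import Callable, Dict

# A playbook takes an event and returns True if it remediated the issue.
Playbook = Callable[[dict], bool]


def handle_event(event: dict, playbooks: Dict[str, Playbook]) -> str:
    """Try automated remediation first; escalate to a higher tier on failure."""
    playbook = playbooks.get(event["type"])
    if playbook is None:
        return "escalated"          # no automation exists for this event type
    try:
        resolved = playbook(event)
    except Exception:
        resolved = False            # a crashing playbook counts as a failure
    return "resolved" if resolved else "escalated"
```

In this model, a human analyst only ever sees events that come back as `"escalated"`, along with whatever event data the automation collected.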

Prediction: Automated Upgrades

With SASE, software upgrades will just happen rather than be a responsibility of the SOC. SASE means a service is provided by a cloud service provider, translating to the service provider always having access to the management tools. If new features are needed, the entire configuration and upgrade process can occur without the end user being notified because backup systems can be used while primary systems are being upgraded, leading to zero downtime during the upgrade process. This can and will all occur in the cloud without customers’ knowledge, removing technology maintenance requirements from the SOC’s list of responsibilities. Tesla provides a great example of this concept. Tesla owners agree to receiving updates, and their parked vehicles will inform drivers that an update is available and will be installed when they are not using the car. The Tesla owner doesn’t have to do anything but wait for updates to be pushed from the cloud to their vehicle. SASE allows for the same update strategy to occur. I point out this example because technology continues to be part of all industries, leading to SASE impacting more than just our computers.

Another software development concept that will impact automated upgrades is agile software development methodologies. Agile software development means smaller chunks of a program are developed and released more often. Using this approach also allows for pushing upgrades and patches along with software releases, providing a much smoother upgrade experience than waiting and combining multiple changes into one larger release. Cloud complements agile software development because the cloud service provider can continuously push smaller updates with little risk of impacting its customers.

SASE Predictions Summarized

The potential value of SASE to the SOC includes shifting the responsibilities of certain services to the cloud, which frees up time for SOC analysts to focus on different tasks. SASE technology also solves some challenges that SOCs deal with, reducing or even outright eliminating some risk that today’s SOCs must account for.

Pay attention to the SASE trend as it impacts all of IT. There are many resources you can follow as SASE matures. I recommend using a blend of reading publications from industry firms such as Gartner, monitoring IT vendor acquisition trends, and speaking with other organizations regarding their cloud adoption roadmap. If you do not already have a SASE strategy today, now is a good time to start looking at how you plan to adopt cloud technology into your SOC’s practice.

The next topic regarding the future of the SOC is how the services that it provides today will change in the future. Any forward-thinking SOC must consider not only what services the organization needs from the SOC today, but also how those and other services will change over time.

IT Services Provided by the SOC

Another concept that continues to change is how services are provisioned to customers. The term “customers” can mean the people buying something from an organization, the employees needing services from their organization to do their jobs, or the entire organization needing security services from the SOC. Regarding servicing employees, an organization is expected to deliver a range of general services that enable employees to be productive. For many organizations, common services they provide to their employees include the following:

Safe Internet connection

Desktop and phone

Collaboration tools for teams

Access to corporate data

Access to personal data

Computer power to host applications

Secure way to use third-party services

Workflow and sales tracking technology

Note

This list is a general look at IT services employees expect from their employers. Many of these services are not related to what the SOC is responsible for, which is the point of my predictions to come!

IT Operations Defined

Organizations of the past designated all of the preceding services as the responsibility of “IT operations,” a group within the organization operating out of its physical headquarters. As an organization of the past would grow, it would need more physical office space, it would need to provision more devices, and consequently it would require more staff responsible for supporting IT operations. If remote workers required external access to the corporate network to do their jobs, IT operations would configure tunneling technologies such as VPN to enable them to gain secure access to the services they needed. End-user equipment would be configured by IT operations at a corporate office and shipped to employees or made available in a corporate office to pick up. In the past, the SOC was not part of the team performing IT operations’ responsibilities, but sometimes the SOC would advise on security policies for assets that IT operations managed. Those policies included host security standards, requirements for VPN, password policies, and web policies.

If the SOC doesn’t handle IT operations, how does the concept of IT services relate to a SOC? With the growing trend toward SASE, the focus is a convergence of “connect it” and “secure it” (as depicted earlier in Figure 11-6), meaning blending together IT operations and security. As this occurs, the SOC will need to be involved with IT operations because network connectivity and other IT services will have security as part of the package, meaning it will be one single service. If the SOC isn’t involved with a SASE-based IT service, blind spots will emerge leading to dysfunctional SOC services.

The following are some examples of aligning IT operations to potential future security capabilities that the SOC would need to be responsible for:

Safe Internet connection: Security visibility and controls will be included with many network services based on the SASE unified service concept. The SOC will need access to all security stats and will manage security policies such as what can be accessed and what to block as potential threats. Event data will be exported to the SOC’s log management tools. All of these controls lead to providing end users a more secure Internet connection.

Desktop and phone: Collaboration technology continues to shift from hardware to cloud-provided services. A Chromebook is an example of hardware that is dependent on the cloud for many of its services. Most Chromebook capabilities are not available without Internet access. Another example is Cisco Meraki, which uses the cloud to manage the physical hardware. If the hardware is disconnected from the Internet, it can’t be configured or updated, and sometimes might not even be functional. If desktops and phones become service-based technologies, the SOC will have to be involved to ensure those services are secured. The only way the SOC can accomplish applying security is to have involvement in the control of policies over such devices.

Collaboration tools for teams: As a work-from-anywhere approach grows in popularity (or from necessity), collaboration tools will increase in importance. This approach can also lead to serious data loss concerns based on the sensitivity of data that can be shared. Due to data loss risks, the SOC of the future will need to police the content being shared. Trends show collaboration tools being consumed in a SaaS format; therefore, the SOC of the future might own the responsibility of securing the data within collaboration tools while an external service provider handles all other management tasks.

Access to corporate and personal data: Data privacy has become and will continue to be a huge concern for employees and customers. The challenge is that there is a constant battle for enforcing security (allowing security tools to evaluate data) versus maintaining privacy (not giving access to data), because maintaining privacy can lead to not having traffic evaluated by security, resulting in security gaps. This challenge justifies the continued involvement of the SOC with data loss and privacy policies. I predict data loss prevention will continue to grow in complexity and importance, eventually leading to a dedicated service offered by the SOC of the future.

Computer power to host applications and a secure way to use third-party services: I pointed out in Chapter 10 that DevOps is a rapidly growing field of expertise based on the need to configure technology to work with other technology. Part of this demand is the need to host technology that can help accomplish an organization’s mission. As organizations rely more on applications being stood up by employees, the risk of cybercriminals targeting those applications will increase. The SOC needs to include this risk as part of its risk management service. The same concept applies to leveraging third-party applications and services, which is when an organization outsources required applications.

Workflow and sales tracking technology: The crown jewels of most organizations are their data. For organizations involved with sales and developing technology, tracking is critical to understanding how the business functions; therefore, the tracking system must be protected from cyberattack. The SOC needs to responsibly protect such tracking systems.

As you can see based on technology trends, the SOC of the future will have more IT operations responsibilities than the average SOC has today. Many organizations have kept IT operations and SOC teams siloed, but technology trends such as cloud and SASE adoption are forcing a merger between compute, network, and security. I predict every organization will be forced to readjust how they view IT operations and the responsibility of the SOC as these technology trends continue. To be a forward-thinking SOC, you need to consider how to incorporate IT services, as that will eventually be part of the SOC responsibilities, if it isn’t already assigned to the SOC.

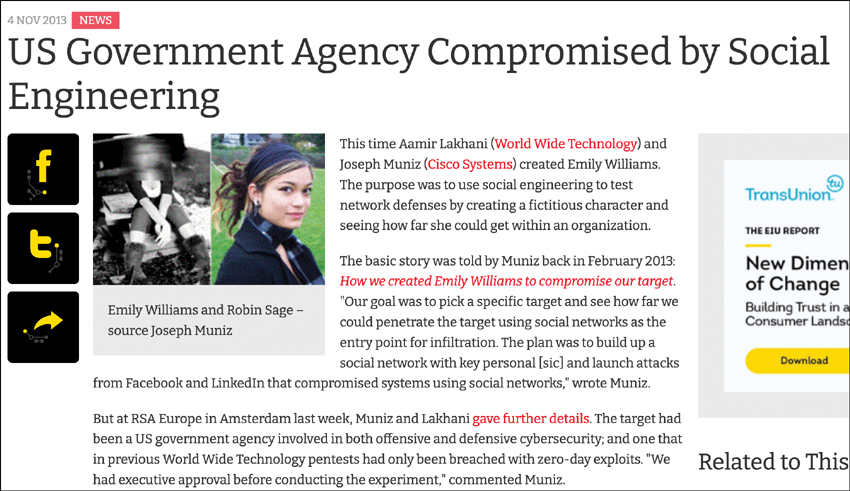

The merging of IT operations and security will lead to new attack vectors. A few years ago, my good buddy Aamir Lakhani and I performed a penetration test targeting IT services. IT services in our targeted organization were not part of the SOC and were extremely vulnerable to social engineering. This use case demonstrates why it is critical to secure IT operations. The section that follows provides an overview of that research, which demonstrates the danger of keeping IT operations separate from security.

Hacking IT Services

Back in 2013, Aamir Lakhani and I spoke at the RSA Europe Conference about our research that showed flaws in the traditional model of provisioning IT services to employees. We created a fictitious person named Emily Williams using social media resources. She is not real; however, we used photos of a real person who allowed us to use the photos to create a completely new identity within social media circles. We created a Facebook account and a LinkedIn account for “Emily Williams” showing she was a new hire for an organization whose leadership authorized us to perform a penetration test of the organization’s security capabilities and IT operations without the knowledge of the IT staff.

Hello World, Meet Emily Williams

Playing the role of Emily, we became connected via social media to key members of the organization we were penetration testing. Within a few days, we had over 170 employees of that organization associated as digital friends to our fake account. On LinkedIn, Emily Williams was receiving endorsements for her fake certifications. On Facebook, people were congratulating her on her new job and offering help as she started her fake role in the organization. In a short amount of time, Emily Williams was welcomed in social media circles as a new hire looking to connect with fellow employees. Figure 11-7 shows LinkedIn endorsements for Emily’s certifications. Some of these endorsements came within two hours after we created her account.

FIGURE 11-7 LinkedIn Endorsements for Emily Williams

During my initial outreach to targets using Facebook, one IT administrator at the organization being penetration tested received the friend request I sent and asked “Emily” if he knew her, as shown in Figure 11-8. I quickly looked at his Facebook profile and saw that he had worked at Hungry Howie’s Pizza 10 years prior to the conversation, so I immediately jumped on that topic and responded that I (“Emily”) knew him from back then. My strategy was based on the assumption that it would be hard for this person to remember the girlfriends of people he knew 10 years ago. That strategy required finding another person he knew 10 years ago to be Emily’s former boyfriend. I clicked the target’s Facebook connections and saw he had a friend named Derrick who worked at Hungry Howie’s during the same time period. Derrick’s Facebook page showed that he currently was living in New York, allowing me to quickly make up a story about being Derrick’s girlfriend 10 years ago and recently running into Derrick while in New York. I explained that Derrick told me the IT employee I was speaking with worked at the organization Emily was recently hired at; hence, the reason for the Facebook friend request. The person not only accepted my friend request but also provided me valuable information about the inner workings of the organization’s IT operations.

FIGURE 11-8 Facebook Social Engineering Attack Example

Impact of Emily Williams

The results of the penetration test enabled my team to conduct further social engineering that resulted in receiving a phone, a computer, and access to the target’s network without stepping foot into the organization (all equipment was shipped to us and preconfigured). With leadership’s explicit authorization, we were able to abuse vulnerabilities in how services were distributed, which led to a complete compromise of the organization.

Compromise not only occurred through receiving equipment from the organization, but also occurred through social engineering attacks launched from Facebook that allowed my team to collect login credentials to remote worker VPN accounts using a social engineering penetration testing tool known as the Browser Exploitation Framework (BeEF). The goal of our presentation at RSA Europe 2013 was to show the vulnerabilities of social media as well as weakness in human trust, including those people responsible for delivering services to employees: IT operations. Figure 11-9 is an example of one of the many articles covering our research results.

FIGURE 11-9 Article About the “Emily Williams” Penetration Test

The key takeaway from this research is the potential danger of not securing IT operations. I predict IT operations and security will merge and be part of the SOC due to the types of risk exposed in our research project. Every forward-thinking SOC must consider securing IT services, which implies the SOC being involved with IT service security.

IT operations has changed since we conducted our Emily Williams research, and events such as COVID-19 have escalated certain trends such as the work-from-anywhere concept. Next, let’s look at recent IT services trends.

IT Services Evolving

Over recent years, the traditional model for providing IT services to employees has been falling apart. The most drastic change is how organizations and SOCs are removing physical boundary requirements. In 2020 I spoke with dozens of CEOs during the COVID-19 pandemic and heard the same phrase over and over again: “We thought if everybody had to work from home, our business would shut down. We were surprised to be wrong, and even more surprised to find that many parts of our business were actually more productive!” Some organizations such as Google announced short sprints of required remote work programs for their employees, expiring when COVID-19 concerns were no longer relevant. Other organizations such as Twitter and Facebook announced a commitment that their employees could work remotely for as long as they choose. The removal of physical boundaries makes the old way to deliver services operationally challenging and dramatically increases the risk of exploitation, such as what my team showed back in 2013 with our Emily Williams penetration test.

SASE and IT Services

As I stated earlier in this chapter, the future of SOC services will include SASE components. A major driver for this shift is the need to adapt to a work-from-anywhere architecture. Using cloud services means that technology doesn’t have to be shipped to an employee and that the risk of compromise can be shared or altogether handed off from the SOC to a service provider—other than securing the data being used. Data security will continue to be a fundamental responsibility of the SOC even after SASE becomes the norm.

Cloud technology allows for more flexibility, enabling employees to obtain the services they require rather than receiving tools they might or might not use. I personally have worked remotely for the past 15 years and rarely use an employer-provided VoIP phone. In order to use the physical VoIP phone, I have to plug it into my home network and use it as a desk phone; however, I don’t require that type of service for the work I do. Instead, I prefer to use a softphone or my mobile phone, making the company’s investment in my physical VoIP phone a waste of IT resources. In a large company, if you multiply the number of remote employees that don’t use their company-provisioned VoIP phone by the expense of provisioning and managing the unused VoIP phones, the result will be a large amount of wasted money. One huge benefit of cloud computing is the ability to convert CAPEX to OPEX, which would be ideal versus always automatically provisioning a physical phone when a softphone is all that is needed.
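
To make the multiplication concrete, here is a minimal Python sketch. The function name wasted_phone_spend and both input figures are hypothetical values chosen only for illustration; they are not data from any real organization.

```python
def wasted_phone_spend(unused_phones: int, cost_per_phone: float) -> float:
    """Money spent on provisioned VoIP phones that nobody uses."""
    return unused_phones * cost_per_phone


# Hypothetical inputs for illustration only.
unused_phones = 1_200    # remote employees who never use their desk phone
cost_per_phone = 250.0   # assumed provisioning plus management cost per phone

print(wasted_phone_spend(unused_phones, cost_per_phone))
```

Even with modest per-device costs, the total grows linearly with the number of unused phones, which is why provisioning on request matters more as the remote workforce grows.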

Future of IT Services

Once again, I have some predictions that impact the future SOC. This time my predictions are for how IT services will be offered in the future. Many of these predictions are related to services that are already being offered, but for different industries and for specific use cases rather than for the average SOC. Because the SOC will have IT operations responsibilities in the future, you should be aware of these trends as they become part of normal IT operations within the SOC. Every forward-thinking SOC needs to have a plan for incorporating responsibilities for securing IT services.

Prediction: 3D Printing Resources

The IT operations function within the SOC of the future will offer a catalog of services that employees can request as needed rather than being provisioned as a set of services. As an example, 3D printing technology introduces the interesting concept of enabling employees to print something that was created by an outside party. I predict that in the future, if an employee needs a VoIP phone, they will be emailed a 3D image and be authorized to print their phone, removing the need to ship equipment. The services for the phone will automatically be set up, creating an extremely efficient process to provision such services.

When the asset is no longer needed, the service to the device will be automatically terminated and the user will be instructed to destroy the asset or mail it to a third party responsible for asset destruction. In the example of a VoIP phone, the future 3D printer could also offer the option to recycle the material, meaning melt the phone back into material that can be used to print future assets. Some manufacturers are already offering 3D sketches of replacement parts for their products. Rather than having to purchase a replacement part, a customer can simply download the 3D diagram and print what is needed.

Prediction: Virtualized Computers

Another service model that is shifting to the cloud is how compute power is provided to employees. Organizations today provide employees with computers for daily work. If higher compute power is needed, organizations either upgrade an employee’s computer or provision access to high-end servers in a corporate-owned datacenter. This approach to provisioning resources requires interaction with multiple departments, including the datacenter administrators to create the account and service, the network administrator to connect the system to the network, and the security administrator to open required services in existing security tools. Because multiple parties are involved, adjustments to services are tedious to enforce. As a result, security exceptions persist beyond the life of the service, and resources are wasted because users request more than they need to avoid going through the request process again.

The future of provisioning compute is to offer it from the cloud based on business need. When a user needs compute resources of a certain type or size, that user will be able to access a system that can quickly make the desired resources available. When the resource is no longer needed or is not actively being used for a certain time period, it will dynamically be reduced or be deleted. I predict even personal computers will function in this manner. Everybody will have template hardware similar to the Chromebook model; however, the organization will determine what resources that hardware will have access to. The future might even allow for template hardware to be vendor agnostic, meaning an employee could be using Windows while employed with a company, but later have the software switch to Linux on their personally owned hardware template based on a new company sending it different compute services. The employee would simply provide their MAC address and their employer would be able to send everything from the operating system to what is installed.
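
The idea of reducing or deleting a resource once it sits idle can be sketched in a few lines of Python. This is only an illustration under assumed rules: the Resource type, the reclaim function, and the 24-hour and 168-hour thresholds are all hypothetical and are not taken from any cloud provider’s API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Resource:
    """A hypothetical cloud compute allocation assigned to a user."""
    name: str
    cpus: int
    idle_hours: float


def reclaim(resources: List[Resource],
            shrink_after: float = 24.0,
            delete_after: float = 168.0) -> List[Resource]:
    """Return what remains after shrinking or deleting idle resources."""
    kept: List[Resource] = []
    for res in resources:
        if res.idle_hours >= delete_after:
            continue  # idle for too long: delete the resource
        if res.idle_hours >= shrink_after and res.cpus > 1:
            # idle for a while: halve the allocation, keeping at least 1 CPU
            res = Resource(res.name, max(1, res.cpus // 2), res.idle_hours)
        kept.append(res)
    return kept


fleet = [
    Resource("build-server", 8, 2.0),    # in active use: untouched
    Resource("analysis-lab", 8, 48.0),   # idle two days: reduced
    Resource("old-sandbox", 4, 200.0),   # idle over a week: deleted
]
remaining = reclaim(fleet)
print([(res.name, res.cpus) for res in remaining])
```

A real service would base these decisions on business policy and usage telemetry rather than fixed thresholds, but the shape of the logic is the same.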

Another huge value of using cloud computing is the flexibility to adjust services when demand increases. Microsoft defines the ability to dynamically deal with peaks in IT demand as cloud bursting. According to Microsoft, Azure always has additional resources available to the customer via cloud bursting, and the customer is charged only for additional services used. This approach to provisioning services allows organizations to offer an almost limitless amount of services (setting aside concerns about associated costs to the organization). The concept of cloud bursting is the future of provisioning services, eliminating the traditional approaches of buying more services than needed just to accommodate future requirements or limited high-usage scheduling. I can imagine a future SOC analyst needing to run a task requiring heavy compute power and their laptop being able to pull down the needed resources dynamically. An example could be needing to decrypt packets using a brute-force technique, which would be much quicker if a supercomputer could be accessed for this purpose for a short period of time.
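
The “charged only for additional services used” idea can be shown with a small Python sketch. The function burst_charges, the capacity units, and the rate are assumptions made up for this example; this is not Azure’s actual pricing model or API.

```python
from typing import List, Tuple


def burst_charges(hourly_demand: List[int],
                  baseline_units: int,
                  rate_per_unit_hour: float) -> Tuple[List[int], float]:
    """Compute burst usage above a baseline and the resulting extra charge.

    Demand at or below the baseline costs nothing extra; only the units
    above the baseline in each hour are billed (assumed billing rule).
    """
    burst_units = [max(0, demand - baseline_units) for demand in hourly_demand]
    return burst_units, sum(burst_units) * rate_per_unit_hour


# Hypothetical demand over five hours, in arbitrary compute units.
demand = [40, 55, 120, 90, 45]
burst, cost = burst_charges(demand, baseline_units=60, rate_per_unit_hour=0.5)
print(burst, cost)
```

The organization pays for the two peak hours only, rather than owning enough capacity to cover the 120-unit peak all the time.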

Prediction: Cloud Management Platforms

Management platforms for IT operations and security products are shifting to the cloud. This makes sense because having a hardware platform for management of technology affords no benefit, outside of supporting network environments that do not permit Internet access. The benefits of moving management platforms to the cloud include the following:

Easy access from anywhere

No hardware maintenance, power cost, or labor for updates

Easy vendor access for support and troubleshooting

Simplified integration with other tools because management is already in the cloud

CAPEX converted to OPEX

Much more robust high availability

Many vendors are already moving to this model. Certain customers, such as those that do not permit Internet access to their management tools, will need hardware options; however, I predict the majority of all IT and security tools used by the future SOC will be managed from the cloud. This includes firewalls, IPS, routers, switches, honeypots, and sandboxes.

IT Services Predictions Summarized

Of all my predictions, the biggest prediction I’m making regarding IT operations is that it will become part of security and therefore be a responsibility of future SOCs. Other predictions for IT operations fall in line with the predictions I made regarding the impact of SASE to the SOC. Tier one support will go away. Updates will be automatic. The office will be anywhere the employee can work. Cloud services will be the standard for delivering IT operations. Many of these predictions are starting to happen today and others might have already happened within your organization. Make sure your SOC has a plan for rolling in IT services, as all signs point to this becoming a normal practice within the SOC.

The third topic to dive into regarding future SOCs is how employees will be trained. Remember that security involves people, process, and technology. I predict there will be major changes in how people are trained as well as what training will be needed within the future SOC. Every organization leader I meet with has training as part of their agenda for maturing their SOC.

Future of Training

People are an organization’s greatest asset. The most skilled and best performers need to be provided a competitive work environment, including the opportunity for career advancement, or they will go somewhere else that offers their desired future role. Unskilled and newer employees need to be trained to support the skilled and best performers so that they eventually can take over the tasks of other skilled employees. Doing so opens up the possibility for veteran employees to move to a higher rank within the organization with new and more challenging responsibilities. This entire lifecycle of employee development requires offering quality training to employees so that they can learn new tasks and eventually increase their responsibilities. This section focuses on trends in training today as well as what the future of training might look like for your SOC. Since training is such a critical element of improving the SOC, any forward-thinking SOC will be looking at training trends to take advantage of the latest training offerings.

Training Challenges

A common goal of leadership teams across organizations is to improve how training is delivered in their organization. I continually hear from leaders I meet with from various organizations that a top concern for their organization is finding and retaining the right people.

According to Karen Greenbaum, president and CEO of the Association of Executive Search and Leadership Consultants (AESC), in her article “The Five Top Talent Challenges of Today’s C-Level Executives” (Forbes, 2017), a survey of senior executives concluded the top talent challenges are as follows:

Lack of diversity

Lack of key leadership successors

Competition for talent

Mismatch of current talent and future strategies

Need for digital experience

Training is a critical component to address all five of these challenges. Lack of diversity means organizations are not supporting certain groups of people to grow their careers into leadership roles. There are many reasons why this can occur in an organization. One cause is not providing the right training for people at lower levels to be able to perform at a higher level of leadership. The right training not only includes how to be a leader but also provides the opportunity to interact with leadership, as business connections are part of what leads to people being promoted to executive roles.

The same concept regarding lack of training applies to the challenge of not having leadership successors. If you don’t train people to be able to perform at a leadership level, then you won’t have successors at hand when a leader takes on another role or leaves the organization. Top-performing employees want to improve their abilities and advance to higher ranking roles in the organization, which requires a history of strong performance along with training. Not providing training will allow another organization to poach your employees by offering higher ranking roles with better pay. People leave an organization for a reason, and it is not always purely for an increase in pay. People want career growth opportunities, and if they don’t find them in your organization, they will find them somewhere else.

Technology continues to change, and training is required to keep up with it. Without training, your SOC won’t have the people to run the latest technologies, leading to a SOC with limited capabilities. An example of a skill needed for running security orchestration, automation, and response is DevOps, and I find some SOCs lack this skill within their staff. Knowing DevOps is just one of the skills that represents the digital experience needed to manage current SOC technologies.

Note

Some organizations are making huge strides to address the challenge of a lack of diversity in leadership roles. While working at Cisco, I was identified as being a top performer and having Latino heritage. I was invited to join a group within Cisco that met with the goal of gaining exposure to senior leadership and being provided special coaching/leadership training to help top employees with a Latino heritage obtain leadership roles. Other similar groups within Cisco have been formed, such as women in IT. I believe these types of investments not only show that an organization’s leadership is invested in addressing diversity problems, but also build employee loyalty to the organization.

Training Today

There are various ways organizations offer training to their employees. One option is to contract with an external training service to run a class, which can be at a discount per employee if enough employees sign up. Another option is to have the organization cover the expense of external training for employees who request it. This often entails attending certification bootcamp training, vendor product training, or specific skill training such as a programming language. Another training option is online on-demand courses, which tend to be less expensive than live classes because the class size is not limited.

Note

Some organizations offer to pay for employees’ external training with the precondition that the employees sign a contract specifying that if they do not continue to work for the organization for a specific period of time, they must reimburse the organization for the training. Organizations do this to retain talent and avoid the situation of investing in an expensive training for an employee that will leave before the organization benefits from providing the training.

SOCs can also develop their own “over-the-shoulder” training options, which allow employees to share skills. The SOC’s situational and security awareness responsibilities can include running security training as well as offering options for non-SOC employees to shadow different SOC team members. This enables the SOC to not only identify employees within the organization who are capable of moving into SOC roles but also identify SOC members who show leadership skills. Sometimes organizations ask employees who have taken external training to build a course based on what they learned and train fellow employees. Many of these internal training options are advantageous because they do not have a cost outside of employee time to allow shadowing or develop training material.

Training Today Summarized

To summarize training today, I refer to the article “Top 10 Types of Employee Training Methods” by Corey Bleich (CEO of EdgePoint Learning, an employee training service provider), which lists the following top 10 ways organizations provide training:

Instructor-led training

eLearning

Simulation employee training

Hands-on training

Coaching or mentoring

Lectures

Group discussion and activities

Role-playing

Management-specific activities

Case studies or other required reading

When I ask an organization’s leadership about how they deliver training to their employees, the typical answer is that they use a blend of these options. My experience is that the most common options used by organizations are instructor-led training, eLearning, and hands-on training, all three of which tend to be outsourced to either the vendor of a certification program or a third party that is aligned with a certification program.

Next, I’ll briefly describe which of the preceding list of methods I use when I require training.

Case Study: Training I Use Today

In my personal experience, I tend to use a blend of training resources. My training includes eLearning from free resources, lectures from industry conferences, case study research, conversations with experts on a specific topic, and lots of hands-on training using labs provided by my employer as well as labs I build on my own. When I need to aggressively shorten the time to learn required material for an industry certification exam, I take an instructor-led, bootcamp-style course after reading a book on the topic; I also run through tons of practice exam questions to ensure I mastered all required concepts. If I need to figure out how to make something work, I always start by searching the Internet to see if there is a short video or article that gives me enough details to accomplish my goal. If I need to learn about industry concepts or recent threats, I start with specific blogs and bookmarked resources rather than searching the Internet, because I trust the opinions of the experts I follow over anonymous Internet blog posts. I also have friends whose technical knowledge I trust and I sometimes call them to ask questions about concepts I need to better understand. In short, I leverage a lot of different resources for my training.

The following is a summary of the resources I personally use for training:

Instructor-led training: Bootcamps I take when preparing for a specific certification

eLearning: I use Google and YouTube to research concepts

Simulation employee training: When my employer asks me to do mandated training such as ethics training

Hands-on training: Labs I build or that my employer provides

Lectures: YouTube or bootcamps

Group discussion and activities: Industry friends

Case studies or other required reading: Internet research

Everybody has their own way to learn, and my personal case study on resources I use shows a blend of different training options. I can claim seven out of ten from Corey Bleich’s top ten training methods. Some of these resources cost as much as a few thousand dollars, while others are free online options (or freely provided advice by people I trust to contact with questions, which I reciprocate). Sometimes I lean on a free resource, while other times investing in a professional resource makes sense regarding time saved and quality of content. You should expect a similar blend of training needs from all of your SOC employees.

Free Training

One life hack that can help save your organization’s training budget is to ask product vendors for free training. Vendors want customers to try out their products with the intent of eventually purchasing from them. Free training helps overcome customer concerns that a vendor’s product has a steep learning curve. I find many vendors offer really good free training to help encourage a sale, on par with third-party training that has a cost. Consider including training with a proof of concept before the sale or bundling training with a purchase to avoid having to invest in future training.

Free online resources can be very solid as well. Many universities are posting free versions of an entire education program, such as a master’s degree in computer science or cryptology, for anybody to access and learn. Resources such as YouTube continue to grow their libraries of on-demand content, allowing anyone who is interested in learning a topic to instantly pull up material. I can’t count the number of times I needed to figure something out and immediately searched YouTube and followed an expert’s instructions. Consider all of these options as you decide what your own training plan, or the training plan for your SOC staff, will include.

Note

I have an important tip regarding sharing your knowledge about your job role. Early in my career, the CEO of my employer gave me valuable advice that I live by: share your knowledge, and become so good at your job that your employer could replace you because you are no longer needed. Doing so allows you to be promoted as well as name your successors during the interview for a better role within the organization. People who hoard knowledge and are required for a position will be stuck in that position until they can be replaced by technology or new processes and will never be promoted because their employer needs them to continue in their current position. Try this tactic the next time you ask for a promotion. As you request new responsibilities, not only explain that the people you have trained can do your job, but specifically name a few employees who are ready for your role. Doing so will improve your chances of the promotion since your manager doesn’t have to worry about backfilling your role. You also demonstrate leadership by bringing a solution to backfilling your current role.

Gamifying Learning

One interesting learning style that is growing in popularity is gamifying content. Learning is not always fun and can be overwhelming when combined with daily work and personal responsibilities. There is a growing field in learning management applications, known as learning management systems (LMSs), that aims to drive interest in learning through interaction by turning learning into a game. A paper by Juan Burguillo titled “Using Game Theory and Competition-based Learning to Stimulate Student Motivation and Performance” described a study that compared using a gamified approach to teaching topics versus using traditional methods. Results showed students using the gamified approach were much more motivated, were satisfied with the experience, and had a better understanding of the topic.

Successful video games tap into satisfying basic human needs by providing small accomplishments as the player works through the game. The concept of “leveling up” is addictive and encourages the player to proceed through the game even if leveling up requires very tedious and repetitive tasks. By tapping into the desire to level up, training becomes a challenge that doesn’t seem hard to the student if they can see success as a result of their time spent.

Learning Management Systems

Learning management systems apply gaming concepts to learning complex content with the goal of motivating students to complete tasks. An example of a popular LMS is Moodle (https://moodle.org/). I run a lab at Cisco that has a lab guide containing more than 300 pages of steps to perform all tasks. The older versions of my lab required a student to download the lab guide and perform all of the tasks as listed in a huge PDF file. I had no way to know how far students got in the lab, where they encountered problems (other than when they reached out to me), what areas they found the most and least interesting (unless I asked using a survey), and where they had to spend the most time. Therefore, I had trouble determining how and where to improve the lab to encourage students to complete it. I also had no way to motivate students to overcome challenging tasks. Essentially, I was blind to how my lab was being used.

LMS technology (Moodle in my case) changed the way my team delivered the lab by allowing us to associate points with completing steps. I could see how much time was spent on each step, what topics were considered interesting, and how far the average person would get in the lab. I was able to adjust the lab instructions based on real feedback as displayed in Moodle as it tracked people’s progress. I found that when students ran into problems, they were more likely to push through when they were close to scoring enough points for a digital badge. Student feedback from the new approach to delivering the lab was very positive. Figure 11-10 is a screenshot from my lab guide converted into the Moodle platform. The LMS didn’t replace anything that had to do with the actual lab configuration; it replaced the lab guide.

FIGURE 11-10 Lab Guide Converted to Moodle

The first key point to note in Figure 11-10 is the Completion Progress bar at the top right. LMSs include this type of progress indicator to show students how far they are into the learning objectives. It also lets a student who needs to come back to a task later pick up where they left off by clicking the corresponding step in the progress bar. Notice on the right in Figure 11-10 the Your Score area showing a ranking system, leaderboard, and other gaming tactics included to encourage a student to continue learning. The lab guides are posted on an Internet-based platform that offers language translation plugins, opening up the content to more people without the effort of manually translating the lab guide.
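To make the mechanics concrete, the following is a minimal Python sketch of the kind of bookkeeping described above: points per completed step, a completion percentage, a badge threshold, a leaderboard, and average time spent on a step. It is not Moodle's API or data model. Every name here (Step, StudentProgress, LAB_STEPS, the step titles, and the point values) is a hypothetical chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    name: str
    points: int  # points awarded for completing this step (illustrative values)


@dataclass
class StudentProgress:
    student: str
    # Maps a completed step's name to the minutes the student spent on it.
    completed: dict = field(default_factory=dict)

    def complete(self, step: Step, minutes: float) -> None:
        self.completed[step.name] = minutes


def score(progress, steps):
    """Total points for the steps this student has completed."""
    return sum(s.points for s in steps if s.name in progress.completed)


def percent_complete(progress, steps):
    """Share of steps completed, rounded to a whole percent."""
    done = sum(1 for s in steps if s.name in progress.completed)
    return round(100 * done / len(steps))


def has_badge(progress, steps, threshold):
    """A badge is earned once the score reaches the threshold."""
    return score(progress, steps) >= threshold


def leaderboard(all_progress, steps):
    """(student, score) pairs, highest score first."""
    rows = [(p.student, score(p, steps)) for p in all_progress]
    return sorted(rows, key=lambda row: row[1], reverse=True)


def average_minutes(all_progress, step_name):
    """Average time on a step across students who finished it, or None."""
    times = [p.completed[step_name] for p in all_progress
             if step_name in p.completed]
    return sum(times) / len(times) if times else None


# Hypothetical lab with three steps and two students.
LAB_STEPS = [Step("Install sensor", 10),
             Step("Tune alerts", 20),
             Step("Write playbook", 30)]

alice = StudentProgress("alice")
alice.complete(LAB_STEPS[0], 12)
alice.complete(LAB_STEPS[1], 40)

bob = StudentProgress("bob")
bob.complete(LAB_STEPS[0], 8)
```

With data like this, a lab author could compare average_minutes across steps to spot where students slow down, and use leaderboard and has_badge to drive the ranking and reward features shown in the figure.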

I predict LMSs will be the future for learning technical content. The LMS approach connects the developers with the students in a way that allows for more interactive collaboration between what is delivered and how it is perceived by the end user, leading to better results. The LMS approach also drives students to complete content and encourages better performance through various reward options and ranking systems. Don’t be surprised if you start to find more learning platforms moving away from static PDFs and becoming more interactive.

On-Demand and Personalized Learning

Another trend I predict will become the industry standard is on-demand learning. Just like the “instant expert” concept using YouTube that I previously mentioned, on-demand learning is a way to get quick access to education. YouTube is great for small knowledge chunks; however, there are far more structured curricula that allow students to proceed at their own pace. I used one version of this learning style to obtain my master’s degree in cybersecurity and information assurance while being employed and having a family. The on-demand approach contrasts with the traditional education model, which is based on a calendar of specific times for classes and testing. The challenges of the traditional approach include having the time to be available when teaching occurs, the costs associated with providing live teaching, and limited capabilities to adjust to an individual student’s personal learning style. The traditional approach to learning is an adapt-or-die model: students must fit its schedule and teaching style.

According to https://news.fit.edu/archive/the-7-benefits-of-delivering-on-demand-training/, the following are key benefits from switching to an on-demand teaching approach:

Convenience leads to compliance

Consistency of quality

Easy updating

Lower costs

Fosters independence

Allows partnerships with experts

Improved data collection

Looking closer at this list, the concept of convenience is critical for many people who have other obligations. Also, the flexibility enables individual students to take a class at the time of day that is their peak learning point mentally. Consistency of quality is another interesting point. The quality of a teacher will make or break a class. My 11-year-old daughter recently told me her favorite class is chemistry. When I questioned her further about why, her answer was that she enjoys the teacher, which has led to her performing very well in that class. Easy updating is yet another huge benefit of on-demand learning. Looking back at the last section’s example of the lab I deliver using Moodle, I found that every time I released new content, I would have several bugs in the instructions that students would point out. On-demand systems allow the teacher to quickly make adjustments to the content stored in the cloud so that students not only benefit from the changes but also see their feedback quickly converted into actions. I also pointed out in the Moodle example the value of collecting statistics regarding how students use the learning platform.

The final point from the news.fit.edu article I want to focus on is cost savings from on-demand learning. On demand means top teachers can record their content and share it with hundreds of thousands of students. The teacher can earn more by reaching a much larger audience, and students can learn from the highest-rated teachers rather than being limited to whoever is available to teach locally or during a specific time period. Questions and labs can also be injected at any point, allowing for a change of teaching style to keep content interesting. Education that would cost thousands of dollars can now be obtained from anywhere at a far lower cost using this on-demand approach.

On-Demand Learning Example: Khan Academy

One fantastic example of on-demand learning is Khan Academy, which offers free education from kindergarten through college level. One specific feature of on-demand learning that Khan Academy uses is personalized learning. Personalized learning means the content adjusts to the student; as the student masters topics, the platform chooses what is covered next based on the concepts the student still needs to learn. Also, if the evaluation of a subject shows that more improvement is needed, the content will keep focusing on that topic until the student shows a firm understanding of it. Personalized learning mixed with 24/7 access means students can learn when they want to learn and maximize their time, making this approach extremely effective. Figure 11-11 shows an example of a Khan Academy dashboard offering computer programming, early math, and grammar classes.

FIGURE 11-11 Khan Academy Dashboard
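The personalized learning behavior described above can be illustrated with a small Python sketch. This is not Khan Academy's actual algorithm, which is not described here. It is a simplified mastery rule under stated assumptions: topics are studied in a fixed order, each quiz produces a score between 0 and 1, and a topic counts as mastered at or above a threshold. The names CURRICULUM, MASTERY_THRESHOLD, next_topic, and record_quiz, along with the 0.8 value, are hypothetical.

```python
# Assumed mastery cutoff; a real platform would choose and tune its own rule.
MASTERY_THRESHOLD = 0.8

# Hypothetical ordered curriculum for a new SOC analyst learning to program.
CURRICULUM = ["variables", "loops", "functions"]


def record_quiz(scores, topic, correct, total):
    """Store the student's latest quiz result for a topic as a fraction."""
    scores[topic] = correct / total


def next_topic(curriculum, scores, threshold=MASTERY_THRESHOLD):
    """Return the first topic not yet mastered, or None when all are mastered.

    A topic with no recorded score is treated as not mastered, so the
    student stays on a topic until a quiz score reaches the threshold.
    """
    for topic in curriculum:
        if scores.get(topic, 0.0) < threshold:
            return topic
    return None
```

In use, the platform would call record_quiz after each assessment and next_topic to decide what to present. A student who scores 5 out of 10 on loops keeps seeing loops, while a later 9 out of 10 moves them on to functions.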

Future of Training

I predict the future of training will move to an on-demand platform. When a SOC analyst needs to master a skill such as programming, they will simply register for a course and complete it when time permits. I encourage any forward-thinking SOC to change its training strategy from classic in-person training to on-demand options that allow for a “learn from anywhere” delivery, incorporation of external industry experts from anywhere in the world, and more interaction with LMS-type platforms. The sections that follow offer a few predictions, based on technology trends and expected future training requirements, that further support my case for this change.

Future of Training: On-Demand Experts

I am a landlord, but I have never gone through formal training to learn how to repair things in my rental units. Instead, YouTube provides me access to very experienced advisors. If I need to install a new sink, I can buy the materials as listed in a YouTube video, follow the video’s steps to install it, and complete the project without any prior experience. I predict on-demand training will continue to grow and become the standard for learning how to complete technical projects just like it has helped me become my own handyman. This trend will lead to engineers not needing training to accomplish certain tasks, such as setting something up or performing a specific task, since they can learn in real time.

The SOC of the future will rely on a blend of wizards and on-demand learning to accomplish tasks, with expectations that the tools will teach the people rather than the people being expected to know how to use the tools. For example, a new analyst will be able to verbally ask a tool how to do something and the tool’s wizard will explain and do the work along with the analyst. This shift will also change the skillsets a SOC requires, favoring DevOps and programming (that is, understanding how to create or build things) over skillsets for managing existing tools, because those management tasks can be done by following a wizard.