Wikipedia defines “optimization” as the process of modifying a system to make some aspect of it work more efficiently or use fewer resources. But why optimize your code?

Each game has a certain fixed amount of computing resources available to it. Within that world of possibility, the developer must create a compelling experience. Market forces dictate that it must be as good an experience as possible.

When you optimize your code, you are increasing the amount of work you are able to do per unit of computational power and maximizing your efficiency. By optimizing your CPU and GPU usage, you can add more polygons, more pixels, more physics, more AI, more responsiveness, more immersion—leading to more fun and better visuals, the elements that help make and sell revolutionary video games.

Optimization should always be done holistically. Look at the big picture first and then drill down until you find the specific problem that is slowing your application. When you don’t optimize holistically, you risk fruitless optimizations. Many beginner optimizers start by choosing the exact lines they want to speed up, without considering the big picture. Deadlines are tight, and you can’t afford to waste time optimizing every line.

The optimization process is simple. Start by looking at the whole system. Find a situation that consistently exhibits bad performance. That done, measure where resources (such as computing power or memory) are being used. Take steps to reduce them. Finally, measure again to see if you have improved the situation sufficiently. If it is good enough, you are done. Otherwise, find the next performance problem and start all over again.

A good optimizer uses a wide variety of techniques to perform this process. Don’t worry—the next few hundred pages are all about how to get performance wins quickly, reliably, and sanely by focusing on a repeatable process.

Let’s start by discussing, at a high level, the contents of this book and the why, where, and when of optimization.

Anyone can follow the basic optimization process and eventually get a game to run faster. You could even write a program to randomly change one line of your source code and only keep the changes that increase your frame rate. A beginner optimizer who chooses lines to optimize without regard for the big picture works this way. However, this type of optimization will fall short of the efforts of a good optimizer.

The goal is to help you, a software developer, become a great optimizer. You’ll find that experience, skill, and knowledge are necessities. You will also need to use your intuition and your patience. Optimization is a crucial step to building compelling games.

Unlike a random code-rewriting program, any developer will approach a performance problem with a few ideas about what might solve it. For instance, if you see a loop is running a million times and causing the game to run slowly, you might try to reduce the number of iterations. If your knowledge of the algorithm prevents it from entering the loop at all, surely the application will go faster.

Using your intuition involves assumptions. The downfall of novice optimizers is that they do not know the assumptions under which they are operating. Their intuition misleads them. They may assume, for example, that inline code always makes it run faster, or that garbage collection is always slower than manual memory allocation. The expert optimizer understands his assumptions, and he knows when to question them—and better still, realizes that he is fallible and must build assumptions based on measurements and analysis

The difference between a novice and master chess player isn’t how quickly they move their pieces. The difference is that the master chess player immediately identifies the key details of the situation. Both players try to look several moves ahead, but the master only thinks about the moves that will likely lead to victory.

In optimization, there are a million different things you can do to speed up your code. A beginner will struggle because he has to try a lot of approaches, and may not hit on the things most likely to give big performance wins.

On the other hand, good optimizers are able to quickly identify the three or four things that will make the biggest difference, measure their effects, and choose the best from among them.

The major goal of this book is to get you into the position of being an experienced optimizer as quickly as possible. We will do this in a few ways:

Performance tests: Every optimization book and article includes a lot of little cryptic code snippets with timing information, usually recorded on the authors’ systems. In our experience, the authors are generally developing on a cutting-edge 486DX and the numbers are completely useless for your Core 2 Quad development system and Centrino target system. We’ll include a performance test harness with this book, and every performance claim we make is backed by hard data. In five years when everyone has 128-core terahertz systems, all you have to do is rerun the tests to see what the performance picture is for your hardware right then.

Theoretical understanding: We will discuss the fundamentals of performance. How are things perceived as fast or slow, responsive or lagging? How does measurement work? What are the properties of code in the context of performance?

Practical knowledge: Being able to write 3D graphics code is a specialized skill. Making it fast is another issue entirely. We’ll cover the background you need in order to ask such questions as “What is fast on this hardware?” and we’ll give you the answers for a typical PC circa 2010.

We should also discuss some of the things that this book will not cover. There are an infinite number of things to know, and the best resources are focused. Therefore, this book will not teach you about the following areas:

You will not learn how to write assembly, but we will discuss when to appropriately use or understand assembly to optimize your game.

This book is not a game design algorithm book. Although we will certainly be discussing game algorithms, we will not be giving you an exhaustive review on which game algorithm is suitable from a game design perspective. We will, however, use game algorithms as examples to illustrate optimization processes or end goals. There are already plenty of books on game design algorithms, and we don’t feel the need to be overly redundant.

This book will not teach you about the best practices for writing and maintaining your code. Design patterns, refactoring, and dealing with legacy code are subjects beyond this book’s scope.

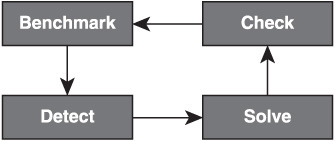

Optimization is a cycle that you repeat until your game is fast enough. Let’s go into some detail about how the optimization process works. There are many different ways to optimize, but the basic concepts we cover here will serve you well until you develop your own style. The steps are benchmark (see Figure 1.1), detect, solve, check, and repeat.

Figure 1.1. All optimization starts with a benchmark. Once a benchmark is found, it is possible to profile, identify the performance problem, and solve it. Finally, we return to our benchmark to see if we have improved performance.

Let’s talk about benchmarks. When you are optimizing, it is essential that you be able to measure your gains. Having a good benchmark makes optimization easier, because you will get immediate feedback on the effect of your changes.

For a game, a benchmark is a point of reference in your game that serves as a standard for comparison against future implementations.

A good benchmark will have a few important qualities. First, it will be consistent across runs. Running the benchmark twice without changing your code should give you the same results. Second, it will be quick. It shouldn’t take more than a minute or so to run the benchmark; otherwise, you’ll spend more time waiting on the test than working on the fix! Third, it should be representative of an actual game situation, so that you don’t waste time optimizing for scenarios that will never arise. Finally, it will be responsive to changes. It should be obvious when you’ve fixed the performance problem.

For instance, you might decide your frame rate is too low in certain situations. Before you start the process of optimization, you would identify a specific scene in your game that exhibited the performance problems and modify the game so you could easily get to that scene and measure the frame rate. Ideally, the whole process would be automated so you could run the benchmark and get an identical run with consistent behavior. This would make it easy to get accurate measurements.

The important thing about benchmarks is that they let you make relative comparisons—before and after you make a change. System performance benchmarks like 3D Mark are excellent for analyzing the performance of a computer system, but won’t tell you anything about your code.

Anytime you start to optimize something, make sure that you can measure your success.

The next step is to gather and analyze data to help identify where you need to optimize. With every change you make, you are hoping for the biggest possible performance increase with the least amount of effort. In other words, you are looking for the biggest return on your time investments. Proper detection doesn’t absolutely guarantee the highest ROI, but it does ensure that what you are attempting to optimize is actually a problem. Information, like a flashlight, lets you avoid stumbling around in the dark.

Consider a mechanic tuning a car. Something is wrong with it. The mechanic is trying to fix it as best she can. Sometimes, she can just reach into the engine, make an adjustment, and everything runs better.

More often, fixing the problem is a complex process. She has different tools—a stethoscope, a dynamometer, the on-board computer, her own senses—that can help her. Often, she has to make a guess about where the problem might be and do further work to narrow things down. Eventually, she’ll gather enough clues to identify the problem.

In optimization, you always start at the big picture and work your way down to the fine grain problem. The first big division is typically finding out whether the CPU or the GPU is slowing you down more; sometimes the CPU and GPU are equally balanced. There are a wide variety of tools that can help, such as profilers and system monitors. We’ll go into the details of detection in Chapter 5, “Holistic Video Game Optimization.”

For our frame rate optimization example, we’ll start by using a system monitor to see if we are maxing out the CPU or the GPU. If the GPU were underutilized, we might use a profiler to measure the CPU time spent updating each object in the scene. Since the game normally runs fine, it’s likely that a single problem is eating most of the time, so that is where we know to optimize.

Don’t be confused by the word “single.” Since the atomic level of software is the micro-operation, software performance problems are almost always a conglomerate of many subparts. The distinction of “singular” depends on your perspective. For example, a fine grain problem is a CPU stalling CPUID assembly call. A coarse grain problem is an unbalanced graphics pipeline. We use different perspectives to find the biggest “single” problem.

Start at the big picture and work your way down toward a detailed understanding of where specific performance problems are located.

After you’ve gathered enough information to identify the factor limiting your performance, then you can change something to try to improve the performance. You might toggle a flag, rewrite an algorithm, or change the data you are loading.

Coming back to our example, we notice that there’s a loop that’s not updating correctly, and as a result, it is running far too often. We expect that fixing it will win us back a lot of wasted CPU cycles, so we go ahead and make the change.

Even the most experienced optimizer will often have to guess at the best solution. The best practice is always to try several options before settling on a solution.

Once you have made a change, always measure to see if it really did give a performance win. Typically, you will run your benchmark again and observe the change. A common trap for novice optimizers is changing code blindly based on an assumption (for instance, “inlined functions are faster”) without evaluating the effectiveness of the tactic.

In our example, we might find that the loop was indeed not executing right, but the actual slowdown was a bad memory access pattern. If we hadn’t measured, we would not have found the deeper issue.

Measuring has another benefit, too. It develops your intuition, giving you data that will help you optimize more effectively next time you encounter a problem. This corrective action will help you optimize even in the strangest situations—on weird hardware, sharing time with odd processes, or even on future architectures.

In a river, when you remove a rock, the water flows in a new way, down the new path of least resistance. Computer programs are the same.

Removing a bottleneck or a hotspot causes cascades and ripples across the entire performance picture. In other words, every change affects the flow of the program. Your old measurements, and therefore your analysis, may no longer be valid.

After a successful frame rate increase, it is time to begin the process again. You may need to create a new benchmark, but you can always repeat the detection process and begin your search for the new, slowest part of your game.

Like the back of the shampoo bottle says: lather, rinse, and repeat.

When you optimize, you are looking for hotspots and bottlenecks.

Lean manufacturing, a manufacturing ideology developed primarily by Toyota (described by Jeffrey Liker in “The Toyota Way”) focuses on eliminating waste in assembly lines. There are three types of waste the system focuses on: muda (non–value-added work), mura (unevenness), and muri (overburdening). By removing waste, the system creates efficiency, increases savings, and improves quality. Auto manufactures use these waste descriptions to evaluate their assembly lines.

When you optimize systems made of multiple concurrent elements, you are dealing with a digital assembly line. Data flows from CPU to GPU, and memory accesses are fulfilled while you process. When everything flows smoothly, the system is harmonious. But if one piece must work too hard and others have to wait, there is discord.

When you look at an object like a car motor with an IR camera, you’ll see that most of the engine is relatively cool. But a few areas—like the pistons—are hot. Really hot. So hot that they affect the functioning of the rest of the car. So hot that auto manufacturers have to install fans and cooling fins and coolant systems to keep the car functioning properly.

Hotspots in the context of optimization are places in the code that consume more than their fair share of execution time, memory bandwidth, or function calls. Typically, a hotspot is small in code footprint, but big in performance impact. The Pareto Principle states that, generally, a small fraction of code (in terms of lines) is responsible for the majority of resource usage. You may also know it as the 80–20 rule.

When optimizing, you want to find the “hottest” parts of your program and change them so they run cooler. Other parts of the code then become the new “hottest” part, and you can optimize them in turn.

Imagine two machines running in parallel in an assembly line. Each spits out a different kind of widget. Combining the two widgets makes the final product.

Suppose that one machine creates 100 widgets an hour and the other creates only 50. You will only be able to produce 50 products an hour. In other words, the slow machine is the limiting factor or bottleneck in the system. If you wanted to speed up total production rate, the only way to do it would be to get the 50-widget machine to make more widgets per hour.

Any system with multiple elements, such as a computer with multiple cores and a GPU, along with deep pipelines with multiple subunits in those processors, has a bottleneck somewhere, and as optimizers the goal is to minimize its impact on performance.

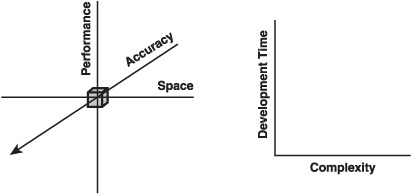

There are three important trade-offs to consider when you are optimizing: performance versus space, accuracy versus speed, and maintainability versus complexity (see Figure 1.2). Let’s review all of them.

The first and most important is performance versus storage. It’s often possible to trade calculation time against memory usage. One example is memoization, a term coined by Donald Michie in 1968 to describe a technique where you cache recently calculated results to avoid recomputing values. Memoization replaces expensive calculations with a quick memory read. You use more memory, but save CPU. Another example of trading memory for performance is using a kD-tree to accelerate collision queries, instead of looping over every triangle in a mesh. The tree adds to the memory footprint, but saves you so much extra computation that it is well worth it in most cases.

The second trade-off is accuracy versus speed. Sometimes, you can reduce precision in order to increase performance. For an example of this trade-off, you can look to the vertex shader 1.1 instructions log and logp. Both perform a log2 operation. The difference is that log performs the operation using 10 instruction slots and logp performs the operation using one slot. The performance cost represents a trade-off in accuracy; the logp function is less precise, but in most situations it doesn’t matter for visualization.

Accuracy versus speed plays a role in the data you use to render, too. You can also use lower polygon meshes, as they consume less screen space. A lower detail model isn’t as accurate as the highest resolution LOD, but it consumes fewer resources. You can also reduce texture resolution.

Finally, you can apply the accuracy/speed trade-off to gameplay. Distant enemies might require less frequent AI updates. Effects that are purely visual, such as debris from an explosion, might require lower precision physics simulation than gameplay-critical physics driving a player-controlled vehicle.

The final trade-off is development time versus complexity. Frequently, there are changes that will yield a speedup but impact the clarity or maintainability of code. Adding a complex image-based caching system to your renderer might speed things up, but getting it to work properly in all cases might be a nightmare—never mind the added complexity in the code base. As you optimize, these trade-offs give you several important angles from which to attack the problem. The most elegant solutions will give you exactly what you want without having to make any trade-offs. But when performance is your goal, understanding your arsenal is the key to winning the war.

There are many approaches to cleaning a hard drive. Some people choose to search for the biggest files and delete the unneeded ones. This is smart because it’s likely that most of the capacity of a full drive is spent on a small number of large files. Does that mean that you shouldn’t look at the folder that holds a million 1-kilobyte files?

Of course not, but there’s another consideration. Maybe you partitioned your hard drive, and you weren’t utilizing as much of it as you thought.

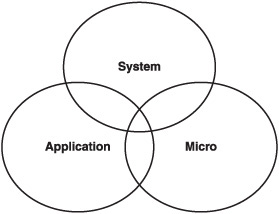

When searching for opportunities to improve performance, the answer to what you optimize may lie in the “level” of optimization. We’ve found it useful to categorize optimizations into three levels: system, application, and micro (see Figure 1.3).

Figure 1.3. These three levels describe the process of optimization. Each level has definable characteristics; however, solutions may involve changes to one or more levels.

The system level of optimization focuses on the resources of your machine and their use in your game—the global, holistic performance picture. At the system level, you are looking at broad elements of the computer, and you are concerned with three things: utilization, balancing, and efficiency.

Utilization. describes how much of a resource’s time is spent doing productive work. Your word processor, for instance, might use 50 percent of your CPU time, but it’s unlikely to use any GPU time at all. When a resource is not utilized, it is idle.

Balancing. is when you move work from a resource that is overutilized to one that is idle. For instance, as GPUs grew in power, games had to switch from carefully considering whether each triangle would be visible to sending large batches of geometry. GPUs became so fast that now it’s often cheaper to let the GPU determine visibility by drawing geometry than it is to cull individual triangles.

Efficiency. is another consideration. Each part of the computer excels at a specific task. Part of system level optimization is making sure that each task is done with the right resources. Runtime data structures should be kept in RAM, not on mass storage. Massively parallel operations like rendering should be done on the GPU, not the CPU. Something that takes the CPU 50 percent of frame time might only take the GPU 1 percent.

It is easy to write a program that will use 100 percent of a system’s resources, but it’s unlikely that this will translate into a compelling game. You have to put your money on the screen. If resources aren’t being spent on making a better game, then they are wasted. The purpose of a game is to be fun and sell lots of units, not maximize CPU usage.

The ideal scenario is a system that is completely balanced, fully utilized, and highly efficient.

Algorithmic level optimizations are the choices you make in the algorithms and data structures that make up your application. The Zen of optimization is in making use of the best algorithms in an elegant manner, maximizing performance and maintainability.

Algorithmic level optimizations focus on removing work using better and typically more complex algorithms.

One of the best ways to analyze a program at the algorithmic level is to use a tool that will show you the call hierarchy of your code. A call hierarchy will trace what lines of code call others, building a tree rooted at the main loop of your program. Additionally, it will tell you how much time was spent in each part of the code, as well as how many times it was run. By looking at this information, you can quickly identify subroutines that are taking the most time and target them for optimization.

For example, after analyzing your game, you may find that one function is being called 500,000 times, amounting to a total of 15 percent of execution time. You realize that the time per call is quite low. Instead of trying to optimize the function (which would probably be hard), you look at the code that calls the offending function, and realize that with a few small changes, it would only need to call it 500 times. After making the change, the offending function is now way down the list at less than one percentage point.

Remember, algorithmic level optimizations are crucial. Good algorithmic level optimizations can trump the best micro-level or be more robust than system level changes. Removing work is an optimization that will benefit all hardware.

The stereotypical optimization work happens at the micro-level. Pouring over assembly listings, checking instruction timings and pipeline stages, using obscure hardware features to gain small wins—they’re all right here. Micro-optimizations are line-by-line optimizations, and they are the ones that most often cause long arguments on mailing lists. Concepts such as branch prediction, loop unrolling, instruction throughput, and latency are all considerations when optimizing code line by line.

Compilers are considerably better at performing micro-optimizations today than they were 15 years ago. The term optimizing compiler is rarely used now because it is standard for compilers to perform many advanced optimizations automatically.

Micro-optimizations can give big wins for inner loops, which are small sections of code that are run many, many times. Suppose that you ran a routine every frame that sets each pixel on the screen to a color. The line of code inside the loop accounts for 99 percent of execution time, and as a result, finding a way to speed that one line up could yield a big boost in performance.

There are still some areas where micro-optimizations are the default choice. If you find that a shader is a bottleneck (dealt with in more detail in Chapter 10, “Shaders”), you will almost immediately start with micro-optimization. Because shader programs will be run many times per frame, shaving just a few operations from your shaders can yield significant performance gains.

These performance gains come at a price, though. Micro-optimizations can be hard to maintain, because they often rely on obscure knowledge and specific compiler or hardware behavior. It isn’t uncommon to have a game still under development that will run flawlessly on one machine and crash on another. The critical difference may be drivers, hardware, or both.

Micro-optimizations are more reliable on fixed platform such as consoles. Be careful if you are working on the micro-level for a PC game because taking advantage of the latest greatest feature might result in a game that only runs on the latest greatest hardware.

You can spend an infinite amount of time tuning, tweaking, and profiling your application. There is never a true ending, a point at which code can’t be improved any further. But there are points of diminishing returns, and there are also paths that will take you in unproductive directions. In the following sections, we will identify several pitfalls that lead optimizers astray.

Although it’s redundant for us to state this pitfall, it occurs so often, and is so fundamental that it is worth it for us to single out this topic here. You should not make assumptions about what is slow. Instead, you should measure and analyze. Doing so will promote your assumptions to educated decisions. You should, however, understand the underlying assumptions of your hardware and APIs. For example, a GPU and its drivers assume that you will send data with large submissions. The DirectX API assumes that you will not lock a static vertex buffer more than once.

Don’t sweat the majority of details until you have to.

You’ve probably heard the saying “Premature optimization is the root of all evil.” It’s frequently attributed to Professor Donald Knuth, from his 1974 paper “Structured Programming Using Goto Statements.”

When an esteemed professor from Stanford declares the root of all evil, people listen. The problem is, however, that they failed to hear and repeat the entire message. The full quote reads, “We should forget about small efficiencies, say 97% of the time: premature optimization is the root of all evil.”

In other words, don’t worry about optimizing anything until you know it is a big performance sink. Of course, there are those three percent cases where you should not forget small efficiencies. The difficulty is in knowing which three percent, and that’s why detection is so essential.

Many people believe you cannot optimize code until you have written it. This is not the case. You can solve 90 percent of your optimization issues by designing flexible, maintainable code. A well-designed object hierarchy, even with the overhead of a language like C# or Java, can be much faster than pure assembly code that is poorly written. If your code is flexible and maintainable, you will be able to manipulate it after detecting the problem.

When you optimize, you should focus on the worst-case scenario—both in terms of benchmarks and in terms of target hardware. Most developers do their work on only one or two machines. This can lead to a false performance picture. The bottlenecks on a system with a high-end GPU, lots of RAM, and a generous CPU are going to be different than a system with no GPU, minimal RAM, and a basic CPU.

In interactive applications like games, the worst-case performance is generally a bigger issue than the average. Users notice and are distracted by intermittent dramatic slowdowns, while consistent performance, even if it is on the mediocre side, is quickly accepted. We go into more detail on this in Chapter 8, “From CPU to GPU.”

Therefore, you should make sure to identify and test on your worst-case system. This will give you a true skewed-to-pessimistic performance picture, keeping you honest when the final deadline looms, and you have to deliver acceptable performance on old, misconfigured hardware.

Test on as much hardware as you can, and especially on the worst you can find.

This mistake is simple to avoid, but easy to fall into. If you aren’t going to ship a debug build, don’t do your performance profiling on one. Debug builds often run much more slowly, and slower in different ways, than your release build. The compiler, once optimizations are turned on, can change the performance picture significantly.

Make sure that you are testing your release builds. Any good optimization tool will work with them. You can turn on debug symbols and vary your optimization level to trade speed for ease in debugging.

Debug builds do have one benefit: They typically expose performance problems sooner. If you want a pessimistic view of your application’s CPU performance, then run in debug mode.

Sometimes, a bad benchmark will lead you to believe that you have made your game faster, even if you haven’t. This often occurs when you aren’t disciplined and inadvertently change a variable between runs of a benchmark.

Suppose that a developer identifies a scene that exhibits an abnormally low frame rate. They do their first benchmark in full-screen mode. They analyze the data and make some changes. When they run the benchmark again, though, they run in windowed mode at a different resolution, resulting in a gain in a frame rate, independent of their code changes. Because they changed multiple variables, they can’t make a valid comparison between the two runs, and they can’t tell if their optimization brought any benefit.

It is very important to control the environment during your benchmark. In order to avoid this pitfall, the only thing that should change from benchmark to benchmark is the code you are optimizing. The best practice here is to fully automate the benchmark so that it runs without user input—that way you can get maximum consistency.

Game development has involved concurrency almost since its inception. Consoles like the NES and Atari 2600 featured multiple chips that cooperated in order to run the game. The arcade space frequently had similar setups. Almost all arcade systems since 1980 featured more than one active CPU, frequently one for gameplay, another for graphics, and a third for sound. Today’s consoles (other than handhelds) all feature at least two cores, and frequently many more, in addition to the GPU and other specialized resources.

However, in the PC, concurrency has been absent or hidden until more recently. Graphics drivers deal with the details of talking asynchronously to the video hardware. Most computers had only a single CPU core. Now, multiple cores are mainstream, and CPU design is complex enough that even within a single core, parallelism is present.

Let’s talk about instruction level parallelism. In order to delay the need for coarser grained parallelism, chipmakers introduced longer pipelines and out-of-order execution that enabled the chips to perform many instructions in parallel. Without realizing it, your code is performing some level of parallelism, even when you are writing single-threaded code. Instruction level parallelism usually occurs without our knowledge; a compiler will seek to take advantage of this parallelism whenever possible.

Another option for concurrency is the SIMD instruction sets available on many CPUs—MMX, SSE, AltiVec. SIMD—single instruction, multiple data—instructions can operate on more than one piece of data at once. A typical example is an instruction that can add one vector of four floats to another vector of four floats. Older GPUs used SIMD instruction sets almost exclusively, as they were optimized for working on batches of homogenous data. Today, GPUs handle scalar data quite efficiently using a SIMT, or single-instruction multiple-thread approach.

These kinds of parallelism, for all their wins, are invisible from an architectural level.

Multithreading, however, can have major effects on architecture. Driven by physical barriers inherent in building ever smaller, faster cores, chipmakers now add more cores to their chips to increase speed instead of increasing clock frequency. Now, it’s difficult to buy a CPU with only one core. By changing an application to run in multiple threads, big increases in speed can be had. But this requires a major change in the application’s architecture, to separate it into multiple, independent units.

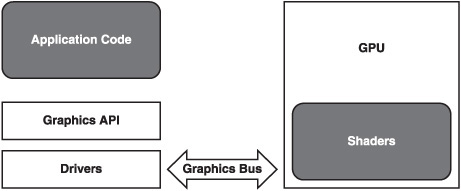

Many of our bottlenecks and hotspots reside in code that we didn’t write (see Figure 1.4). Years ago, it wasn’t uncommon to write your game with little help from outside APIs. In fact, for a long time, there were no other options and no novel hardware to access requiring an API. Now, however, it is common to use many different APIs and many different pieces of hardware. With many APIs, there is no publicly available source code. This is especially true of graphics APIs. The innards of DirectX, OpenGL, and graphics drivers are not available for review in most situations. In the past, you could optimize all of your game using assembly because you had access to the entire pipeline. Now, you benefit and depend on the APIs and hardware that keep you from optimizing with assembly. The software and hardware you use, not the software and hardware you write, controls a large percentage of your game’s execution time. If you didn’t write the code, or don’t have the source, then optimizing using hand-coded assembly is not possible.

From theoretical and practical perspectives, big O notation is a key concept. By knowing how quickly the execution time for an algorithm grows as you increase workload, you can determine quickly if an algorithm is feasible, given your dataset and performance requirements.

However, big O ignores the constant factor. You know that an O(n2) algorithm will generally decrease in speed geometrically as you increase its workload. But if the constant factor is microseconds, even a workload of thousands of items might give acceptable performance. An O(n) algorithm with a high constant factor of a millisecond per item might never be acceptable.

Additionally, because of the context, the increase may not be consistent with the theoretical model. For instance, an O(n2) sort algorithm might be much faster than the O(n log n) sort if it can take better advantage of the cache or pre-fetcher. Big O is a good guide, but you have to make real-world measurements to get the full picture.

In writing this book, we have tried to be mindful of the fact that optimization involves balancing details and trade-offs from a dozen different, daily-changing sources. Just in the past 20 years, we have seen parallelism go through several incarnations, seen CPU calculation speed go from slower than memory access speed to faster, and the speed of all these pieces grow by several orders of magnitude. The landscape for optimization is always evolving, yet the basic principles tend to stay the same.

We hope to pass on those principles to you. Carefully measure, wisely prioritize, cleverly optimize, and you will find success.