Chapter 1. The History of Software Security

Before delving into actual offensive and defensive security techniques, it is important to have at least some understanding of software security’s long and interesting history.

A brief overview of major security events in the last hundred years should be enough to give you an understanding of the foundational technology that today’s web applications are built on.

Furthermore, it will illustrate the ongoing relationship between the development of security mechanisms and the improvisation of forward-thinking hackers looking for opportunities to break or bypass those mechanisms.

The Origins of Hacking

In the past two decades hackers have gained more publicity and notoriety than ever before.

As a result, it’s easy for anyone without the appropriate background to assume that hacking is a concept closely tied to the internet and that most hackers emerged in the last twenty years.

But that’s only a partial truth. While the number of hackers worldwide has definitely exploded with the rise of the world wide web, hackers have been around since the middle of the 20th century—potentially even earlier depending on what you define as “hacking”.

Many experts debate which decade marks the true origin of modern hackers, because a few significant events in the early 1900’s closely resembled the hacking you see in today’s world.

For example, there were isolated incidents in the 1910’s and 1920’s that would likely qualify as hacking, most of which involved tampering with Morse code senders and receivers or interfering with the transmission of radio waves.

However, while these events did occur, they were not especially common, and it is difficult to pinpoint large-scale operations that were disrupted as a result of these technologies being abused.

It is also important to note that I am no historian. I am a security professional with a background in finding solutions to deep architectural and code level security issues in enterprise software.

Prior to this I spent many years as a software engineer writing web applications in various languages and frameworks. I continue writing software today in the form of security automation, in addition to contributing to various projects on my own time as a hobby.

This means that I am not here to argue specifics or debate alternative origin stories. Instead, this section is based on many years of independent research, with the emphasis on the lessons we can extract from these events and apply today.

Because this chapter is not intended to be a comprehensive overview but instead a reference for critical historical events, we will begin our timeline in the early 1930’s.

Now without further interruption, let us examine a number of historical events that helped shape the relationship between hackers and engineers today.

The Enigma Machine, Circa 1930

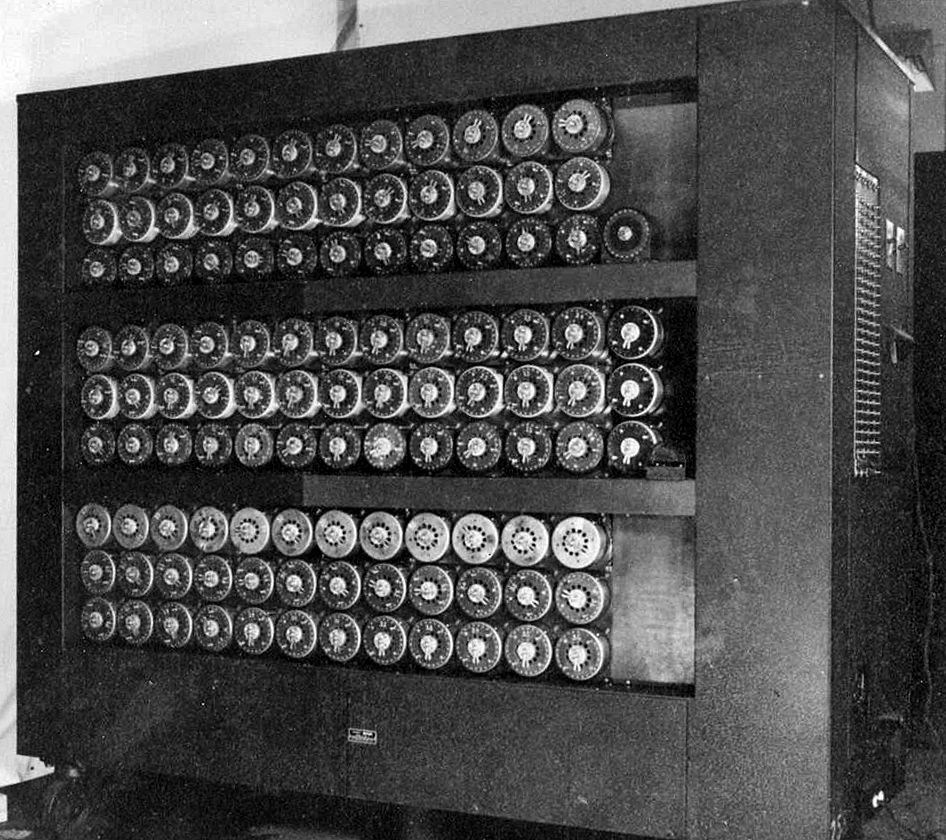

Figure 1-1. An Enigma Machine—This was an electro-mechanical device that was used for transmitting encrypted messages across radio waves in World War II.

The term “Enigma Machine” refers to a machine that used electricity-powered mechanical rotors to both encrypt and decrypt text-based messages sent over radio waves. The device had German origins and would become an important technological development during the second world war.

The device itself looked akin to a large square or rectangular mechanical typewriter. On each keypress, the rotors would move and record a seemingly random character, which would then be transmitted to all nearby Enigma Machines.

These seemingly random characters were, however, not random. Instead, they were defined by the rotation of the rotors and a number of configuration options that could be modified at any time on the device.

Any enigma machine with a specific configuration could read, or “decrypt” messages sent from another machine with an identical configuration. This made the enigma machine extremely valuable for sending crucial messages while avoiding interception.

While a sole inventor of the rotary encryption mechanism used by the machine is hard to pinpoint, the technology was popularized by a two-man company called Chiffriermaschinen AG based out of Germany. Chiffriermaschinen AG traveled throughout Germany demonstrating the technology between 1920 and 1930, which led to the German military adopting it in 1928 in order to secure top-secret military messages in transit.

The ability to avoid the interception of long-distance messages was a radical development that had never before been possible. In the software world of today the interception of messages is still a popular technique that hackers try to employ, often called a “man in the middle” attack. Today’s software uses similar (but much more powerful) techniques to those that the enigma machine used 100 years ago in order to protect against such attacks.

While the enigma machine was incredibly impressive technology for its time, it was not without flaws. Because the only requirement for interception and decryption was an enigma machine with a configuration identical to the sender’s, a single compromised configuration log (or “private key”, in today’s terms) could render an entire network of enigma machines useless.

In order to combat this, any groups sending messages via enigma machine would change their configuration settings on a regular basis.

Reconfiguring enigma machines was a time-consuming process. First, the configuration logs had to be exchanged in person, as secure ways of sharing them remotely did not yet exist. Sharing configuration logs between a network of two machines and two operators might not be painful. But a larger network, say 20 machines, could require multiple messengers to deliver the configuration logs, each one increasing the probability of a configuration log being intercepted and stolen, or potentially even leaked or sold.

The second problem with sharing configuration logs was that manual adjustments to the machine itself were required before it could read, encrypt and decrypt new messages sent from other enigma machines. This meant that a specialized and trained staff member had to be present in case a configuration update was needed.

This all occurred in an era prior to software, so these configuration adjustments required tampering with the hardware and adjusting the physical layout and wiring of the plugboard. This meant that the adjuster needed a background in electronics, which was very rare in the early 1900’s.

As a result of how difficult and time-consuming it was to update these machines, updates would typically occur on a monthly basis—daily for mission critical communication lines. This means that if a key was intercepted or leaked, all transmissions for the remainder of the month could be intercepted by a malicious actor—the equivalent of a hacker today.

The type of encryption these enigma machines used is now known as a symmetric key algorithm, which is a special type of cipher that allows for the encryption and decryption of a message using a single cryptographic key.

This family of encryption is still used today in software in order to secure data in transit (between sender and receiver), but with many improvements on the classic model that gained popularity with the enigma machine.
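To make the idea of a single shared key concrete, here is a minimal sketch in Python. It is a toy construction for illustration only (a SHA-256-derived keystream XORed with the message), not the Enigma’s mechanism and not something to use in production. The function names and the sample message are assumptions made for this example.

```python
import hashlib
import secrets


def keystream(key: bytes, length: int) -> bytes:
    """Stretch the key into `length` pseudo-random bytes (toy construction)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(out[:length])


def xor_cipher(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XOR with the same keystream undoes itself."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


# Both parties must hold this same secret, much like a shared configuration log.
shared_key = secrets.token_bytes(32)
message = b"ATTACK AT DAWN"

ciphertext = xor_cipher(shared_key, message)    # sender encrypts
recovered = xor_cipher(shared_key, ciphertext)  # receiver decrypts with the same key
```

Because the same function and the same key are used in both directions, anyone who obtains `shared_key` can read every message protected by it, which is exactly the weakness described for a leaked configuration log.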

In software, keys can be made much more complex. Modern key generation algorithms produce keys so complex that attempting every possible combination (aka, “Brute Forcing”) with the fastest possible modern hardware could easily take more than a million years.
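As a rough back-of-the-envelope check on the brute-force claim, the sketch below estimates how long it would take to try every possible key. The 128-bit key length and the rate of one trillion guesses per second are assumed figures chosen for illustration, not measurements of any real hardware.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def years_to_exhaust(key_bits: int, guesses_per_second: float) -> float:
    """Years needed to try every key of the given length at a fixed rate."""
    total_keys = 2 ** key_bits
    return total_keys / guesses_per_second / SECONDS_PER_YEAR


# Assumed figures: a 128-bit key and one trillion guesses per second.
years = years_to_exhaust(128, 1e12)
print(f"{years:.2e} years")
```

Under these assumptions the result is on the order of 10^19 years, far beyond the million-year figure mentioned above. Each additional key bit doubles the search time.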

Additionally, unlike the enigma machines of the past—software keys can change rapidly.

Depending on the use case, keys can be regenerated on every user session (per-login), on every network request, or on a scheduled interval. When this type of encryption is used in software, a leaked key might expose you for a single network request in the case of per-request regeneration or worst-case-scenario a few hours in the case of per-login (per-session) regeneration.

If you trace the lineage of modern cryptography back far enough, you will eventually reach the 1930’s and World War II. It’s safe to say that the enigma machine was a major milestone in securing remote communications.

From this, we can conclude that the enigma machine was an essential development in what would later become the field of software security.

But the Enigma machine was also an important technological development with regard to those who would eventually be known as “hackers”.

The adoption of enigma machines by the Axis powers during World War II resulted in extreme pressure on the Allies to develop encryption-breaking techniques. Dwight D. Eisenhower himself claimed that doing so would be essential for victory against the Nazis.

In September of 1932, a Polish mathematician named Marian Rejewski was provided a stolen Enigma Machine. At the same time, valid configurations for September and October of 1932 reached him by way of Hans-Thilo Schmidt, a German who was spying for the French.

This allowed Marian to intercept messages from which he could begin to analyze the mystery of enigma machine encryption.

Marian was attempting to determine how the machine worked both mechanically and mathematically. He wanted to understand how a specific configuration of the machine’s hardware could result in an entirely different encrypted message being output.

Marian attempted decryption based on a number of theories as to what machine configuration would lead to a particular output. By analyzing patterns in the encrypted messages and coming up with theories based on the mechanics of the machine, Marian and two co-workers, Jerzy Różycki and Henryk Zygalski, eventually reverse engineered the system.

With the deep understanding of enigma rotor mechanics and board configuration that the team developed, they were able to make educated guesses as to which configurations would result in which encryption patterns.

In doing so, they could reconfigure a board with reasonable accuracy and after several attempts begin reading encrypted radio traffic.

By 1933 the team was intercepting and decrypting enigma machine traffic on a daily basis.

Much like the hackers of today, Marian and his team intercepted and reverse engineered encryption schemes in order to get access to valuable data generated by a source other than themselves. For these reasons, I would consider Marian Rejewski and the team assisting him as some of the world’s earliest hackers.

In the following years Germany would continually increase the complexity of their Enigma Machine encryption. This was done by gradually increasing the number of rotors required to encrypt a character.

Eventually the complexity of reverse engineering a configuration would become too difficult for Marian’s team to break in a reasonable timeframe.

This development was also important, because it provided a look into the ever-evolving relationship between hackers and those who try to prevent hacking.

This relationship continues today, as creative hackers continually iterate and improve their techniques for breaking into software systems. And on the other side of the coin, smart engineers are continually developing new techniques for defending against the most innovative hackers.

Automated Enigma Code Cracking, Circa 1940

Figure 1-2. Cryptii.com—This website allows you to emulate Enigma code encryption, including over a dozen Enigma models and multiple configurable virtual rotors.

Alan Turing was an English mathematician who is best known for his development of a test known today as “The Turing Test”.

The Turing Test was developed in order to rate conversations generated by machines based on how difficult it is to differentiate those conversations from the conversations of real human beings.

This test is often considered to be one of the most foundational philosophies in the field of artificial intelligence (AI).

While Alan Turing is best known for his work in artificial intelligence, he also was a pioneer in cryptography and automation.

In fact, prior to and during World War II Alan’s research focus was primarily on cryptography rather than AI.

Starting in September 1938, Alan worked part time at the Government Code and Cypher School (GC&CS). GC&CS was a research and intelligence agency funded by the English army which was located in Bletchley Park, England.

Alan’s research primarily focused on analysis of enigma machines. At Bletchley Park Alan would research enigma machine cryptography alongside his then-mentor Dilly Knox, who at the time was an experienced cryptographer.

Much like the Polish mathematicians before them, Alan and Dilly wanted to find a way to break the (now significantly more powerful) encryption residing in German enigma machines.

Due to their partnership with the Polish Cipher Bureau, the two gained access to all of the research Marian’s team had produced nearly a decade earlier.

This meant they already had a deep understanding of the machine mechanically. They understood the relationship between the rotors and wiring, and knew about the relationship between the device configuration and the encryption that would be output.

Figure 1-3. A Pair of Enigma Rotors. These were used for calibrating the Enigma machine’s transmission configuration. This was an analog equivalent of changing a digital cipher’s primary key.

Marian’s team had been able to find patterns in the encryption that would allow them to make educated guesses regarding a machine’s configuration. But this was not scalable now that the number of rotors in the machine had been increased as much as ten-fold.

In the amount of time required to try all of the potential combinations in such a system, a new configuration would have already been issued.

Because of this, Alan and Dilly were looking for a different type of solution. They wanted a solution that would scale, and could be used to break new types of encryption. They wanted a general purpose solution, rather than a highly specialized solution.

Introducing the “Bombe”.

Figure 1-4. A Bletchley Park Bombe—This was an early bombe used during World War II. Note the many rows of rotors used for rapidly performing Enigma configuration decryption.

A bombe was an electricity powered mechanical device that would attempt to automatically reverse engineer the position of mechanical rotors in an enigma machine based on mechanical analysis of messages sent from such machines.

The first bombes were actually built by the Polish, in an attempt to automate Marian’s work. Unfortunately, these devices were designed to find the configuration of enigma machines with very specific hardware. In particular, they were ineffective against machines with more than three rotors.

Because the Polish bombe could not scale against the development of more complex enigma machines, the Polish cryptographers eventually went back to using manual methods for attempting to decipher German wartime messages.

Alan Turing believed that the reason the original machines failed was that they were not designed in a general purpose manner.

In order to develop a machine which could decipher any enigma configuration (regardless of the number of rotors), he began with a simple assumption: in order to properly design an algorithm to decrypt an encrypted message you must first know a word or phrase that exists within that message and its position.

Fortunately for Alan, the German military had very strict communication standards. Each day a message containing a detailed regional weather report was sent over encrypted enigma radio transmissions.

This is how the German military would ensure all units had sufficient knowledge of weather conditions without sharing them publicly with anyone listening on the radio. The Germans did not know that Alan’s team would be able to reverse engineer the purpose and position of these reports.

As a result of knowing the inputs (weather data) being sent through a properly configured enigma machine, algorithmically determining the outputs would become much easier.

Alan would use this newfound knowledge to determine a bombe configuration that could work independently of the number of rotors the enigma machine it was attempting to crack relied on.

Alan requested a budget to build a bombe that would accurately detect the configuration requirements needed to intercept and read encrypted messages from German enigma machines.

Once the budget was approved, Alan constructed a bombe comprised of 108 drums that could rotate as fast as 120 RPM. This machine would run through nearly 20,000 possible enigma machine configurations in just 20 minutes.

This meant any new configuration could be rapidly compromised. Enigma encryption was no longer a secure means of communication.

Today we know Alan’s reverse engineering strategy as a “Known-plaintext Attack” or KPA. It’s an attack that becomes much more efficient when the attacker is provided with known input/output data in advance.
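The principle behind a known-plaintext attack can be sketched with a toy example. The code below is not a model of the bombe or of Enigma: it uses a simple 26-key shift cipher so the whole search fits in a few lines. The function names, the secret key, and the sample weather message are all hypothetical. The attacker knows only that the word “WEATHER” appears at position 0 and uses that knowledge to discard every key that cannot produce it.

```python
import string

ALPHABET = string.ascii_uppercase


def shift(text: str, key: int) -> str:
    """Shift every A-Z letter forward by `key` positions; leave other characters alone."""
    return "".join(
        ALPHABET[(ALPHABET.index(ch) + key) % 26] if ch in ALPHABET else ch
        for ch in text
    )


def recover_key(ciphertext: str, crib: str, position: int) -> list[int]:
    """Return every key whose decryption places `crib` at `position`."""
    return [
        key
        for key in range(26)
        if shift(ciphertext, -key)[position:position + len(crib)] == crib
    ]


# The defender encrypts a predictable daily report with a secret key.
secret_key = 11
intercepted = shift("WEATHER REPORT CLEAR SKIES", secret_key)

# The attacker knows only the ciphertext and the expected opening word.
candidates = recover_key(intercepted, "WEATHER", 0)
plaintext = shift(intercepted, -candidates[0])
```

The known word acts as a filter: instead of reading 26 candidate decryptions and judging which looks like language, the attacker checks each one mechanically against the expected text. The same idea is what makes a much larger key space searchable by machine.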

Similar techniques are used by modern hackers in order to break encryption on data stored or used in software.

The machine Alan built would mark an important point in history as it was one of the first automated hacking tools ever built.

Telephone “Phreaking”, Circa 1950

After the rise of the Enigma Machine in the 1930’s and the cryptographic battle that occurred between major world powers—the introduction of the telephone would become the next major event in our timeline.

The telephone allowed normal everyday people to communicate with each other over large distances, and at rapid speed. As telephone networks grew, they would require automation in order to function at scale.

In the late 1950’s telecoms like AT&T began implementing new phones that could be automatically routed to a destination number based on audio signals emitted from the phone unit itself. Pressing a key on the phone pad would emit a specific audio frequency which would be transmitted over the line and interpreted by a machine in a switching center. A switching machine would translate these sounds into numbers and route the call forward to the appropriate receiver.

This system was known as “tone dialing”, and would be an essential development that telephone networks at scale could not function without. Tone dialing dramatically reduced the overhead of running a telephone network, since the network no longer needed an operator to manually connect every call. Instead, one operator overseeing a network for issues could now manage hundreds of calls in the same time that one call would have taken previously.

Within a short period of time, small groups of people began to realize that any system built on top of the interpretation of audio tones could be easily manipulated. Simply learning how to reproduce identical audio frequencies near the telephone receiver could interfere with the intended functionality of the device.

Hobbyists who experimented with manipulating this technology would eventually go on to be known as “phreakers”, an early type of hacker specializing in breaking or manipulating telephone networks.

Although the true origin of the term is not known, the term “phreaking” has several generally accepted possible origins. The term is most often thought to be derived from two words, “freaking” and “phone”.

There is an alternatively suggested derivation that I believe makes more sense. I believe that the term phreaking originated from “audio frequency” in response to the audio signaling languages phones of the time used.

I believe this explanation makes more sense since we can see that the origin of the term is very close chronologically to the release of AT&T’s original tone dialing system. Prior to tone dialing, telephone calls would have been much more difficult to tamper with because each call required an operator to connect the two lines.

We can trace phreaking back to several events, but the most notorious case of early phreaking was the discovery and utilization of the 2600hz tone. A 2600hz audio frequency was used internally by AT&T to signal that a call had ended. It was essentially an “admin command” that was built into the original tone dialing systems.

Emitting a 2600hz tone would stop a telecom’s switching system from realizing a call was still open (the system would log the call as ended, although it was still ongoing). This allowed expensive international calls to be placed without a bill being recorded or sent to the caller.

The origin of the discovery of the 2600hz tone is often attributed to two events. First off, a young boy named Joe Engressia was known to have a whistling pitch of 2600hz and would reportedly show off to his friends by whistling a tone that could prevent phones from dialing. Some consider Joe to be one of the original phone phreakers, although his discovery came by accident.

Later on a friend of Joe Engressia’s named John Draper discovered that toy whistles included in Cap’n Crunch cereal boxes mimicked a 2600hz tone. Because these whistles could generate a single frequency 2600hz tone, careful usage of the whistle could also generate free long distance phone calls through the same technique.

Knowledge of these techniques spread throughout the western world, eventually leading to the creation of hardware that could match specific audio frequencies with the press of a button.

The first of these hardware devices was known as a “blue box”. Blue boxes would play a nearly perfect 2600hz signal in order to allow anyone who owned one to take advantage of the free calling bug inherent in telecom switching systems.

Blue boxes were only the beginning of automated phreaking hardware, as later generation phreakers would go on to tamper with pay phones, prevent billing cycles from starting without utilizing a 2600hz signal, emulate military communication signals, and even fake caller ID.

From this we can see that architects of early telephone networks only considered normal people and their communication goals. In the software world of today, this is known as designing for the “best-case scenario”. Designing based on this was a fatal flaw, but it would become an important lesson that is still relevant today. That lesson is to always consider the “worst-case scenario” first when designing complex systems.

Eventually, knowledge of the weaknesses inherent in tone dialing systems became more widely known, which would lead to budgets being allocated in order to develop countermeasures to protect telecom profits and call integrity against phreakers.

Anti-Phreaking Technology, Circa 1960

In the 1960’s, phones became equipped with a new technology known as dual-tone multifrequency signaling (DTMF).

DTMF was an audio-based signaling language developed by Bell Systems and marketed under the more commonly known trademark “Touch-Tone”.

DTMF was intrinsically tied to the phone dial layout we know today that consists of three columns and four rows of numbers. Each of the keys on a DTMF phone emitted two very specific audio frequencies, versus a single frequency like the original tone dialing systems.

The development of DTMF was due largely to the fact that phreakers had been taking advantage of tone dialing systems due to how easy those systems were to reverse engineer. Bell Systems believed that because DTMF systems used two very different tones at the same time, it would be much more difficult for a malicious actor to take advantage of the system.

DTMF tones could not be easily replicated by a human voice or a whistle, which meant the technology was significantly more secure than its predecessor. DTMF was a prime example of a successful security development that was introduced in order to combat phreakers, the hackers of that era.

The mechanics behind how DTMF tones were generated are pretty simple. Behind each key is a switch which signals to an internal speaker to emit two frequencies—one frequency based on the row of the key and one frequency based on the column. Hence the use of the term “dual-tone”.

| Row frequency | 1209 Hz | 1336 Hz | 1477 Hz |
| --- | --- | --- | --- |
| 697 Hz | 1 | 2 | 3 |
| 770 Hz | 4 | 5 | 6 |
| 852 Hz | 7 | 8 | 9 |
| 941 Hz | * | 0 | # |
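The row and column scheme described above can be sketched in a few lines of Python. The frequencies come from the keypad layout listed above. The function names, the 8000 Hz sample rate, and the 0.1 second duration are assumptions made for this illustration, not part of any standard quoted here.

```python
import math

ROW_HZ = (697, 770, 852, 941)
COLUMN_HZ = (1209, 1336, 1477)
KEYPAD = ("123", "456", "789", "*0#")


def dtmf_pair(key: str) -> tuple[int, int]:
    """Return the (row, column) frequencies in Hz for one keypad key."""
    if len(key) == 1:
        for row, keys in enumerate(KEYPAD):
            col = keys.find(key)
            if col != -1:
                return ROW_HZ[row], COLUMN_HZ[col]
    raise ValueError(f"not a keypad key: {key!r}")


def dtmf_samples(key: str, duration_s: float = 0.1, sample_rate: int = 8000) -> list[float]:
    """Sum the two sine waves for `key`, scaled to stay within [-1.0, 1.0]."""
    low, high = dtmf_pair(key)
    count = round(duration_s * sample_rate)
    return [
        0.5 * math.sin(2 * math.pi * low * n / sample_rate)
        + 0.5 * math.sin(2 * math.pi * high * n / sample_rate)
        for n in range(count)
    ]


print(dtmf_pair("5"))  # row and column frequencies for the 5 key
```

Because every key is the sum of two tones that must sound at the same time, a single whistle or a single-frequency toy cannot reproduce it, which is the property that made the scheme harder to abuse.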

DTMF was adopted as a standard by the International Telecommunication Union and would later go on to be used in cable TV (to specify commercial break times) in addition to phones.

DTMF is an important technological development because it shows that systems can be engineered to be more difficult to abuse if proper planning is taken. Do note these DTMF tones would eventually be duplicated as well—but the effort required would be significantly greater.

Eventually switching centers would move to digital (versus analog) inputs which would eliminate nearly all phreaking.

The Origins of Computer Hacking, Circa 1980

In 1976 Apple released the Apple 1 personal computer. This computer was not configured out of the box and required the buyer to provide a number of components and connect them to the motherboard. Only a few hundred of these devices were built and sold.

In 1982, Commodore International would release their own competitor device. This device was the Commodore 64, a personal computer that was completely configured right out of the box. It came with its own keyboard, supported audio, and could even be used with multicolor displays.

The Commodore 64 would go on to sell nearly 500,000 units per month until the early 1990’s. From this point forward the sales trend for personal computers would continually increase year over year for several decades to come.

Computers would soon become a common tool in households as well as businesses, and take over common repetitive tasks such as managing finances, human resources, accounting and sales.

In 1983 Fred Cohen, an American computer scientist, would go on to demonstrate the very first computer virus. The virus he wrote was capable of making copies of itself and of being easily spread from one personal computer to another via floppy disk. He was able to store the virus inside of a legitimate program, masking it from anyone who did not have source code access.

Fred Cohen would later become known as a pioneer in software security, and go on to demonstrate that algorithmically distinguishing viruses from valid software was almost impossible.

A few years later in 1988 another American computer scientist named Robert Morris would be the first person to ever deploy a virus that would infect computers outside of a research lab. The virus would go on to be known as the “Morris Worm”, with “worm” being a new phrase used to describe a self-replicating computer virus.

The Morris Worm would spread to about 15,000 network attached computers within the first day of its release.

For the first time in history, the U.S. Government would step in to consider official regulations against hacking. The U.S. Government Accountability Office estimated the damage caused by this virus at as much as $10,000,000.

Robert would receive three years of probation, 400 hours of community service and a fine of $10,050. This would make him the first convicted hacker in the USA.

These days most hackers do not build viruses that infect operating systems, but instead target web browsers. Modern browsers provide extremely robust sandboxing which makes it difficult for a website to run executable code outside of the browser (aka, against the host operating system) without explicit user permission.

Although hackers today are primarily targeting users and data that can be accessed via web browser, there are many similarities to those that targeted the OS. Scalability (jumping from one user to another) and camouflaging (hiding malicious code inside of a legitimate program) are both techniques employed by attacks against web browsers.

Today attacks often scale through distribution via email, social media or instant messengers. Some hackers even build up legitimate networks of real websites in order to promote a single malicious website.

Oftentimes malicious code is hidden behind a legitimate looking interface. Phishing (credential stealing) attacks occur on websites that look and feel identical to social media or banking sites. Browser plugins are frequently caught stealing data, and sometimes hackers even find ways to run their own code on websites they do not own.

The Rise of the World Wide Web, Circa 2000

The World Wide Web (WWW) would spring up in the 1990’s, but its popularity would begin to explode at the end of the 1990’s and the early 2000’s.

In the 1990’s, the web was almost exclusively used as a way of sharing documents written in HTML. Websites did not pay attention to user experience, and very few allowed the user to send any inputs back to the server in order to modify the flow of the website.

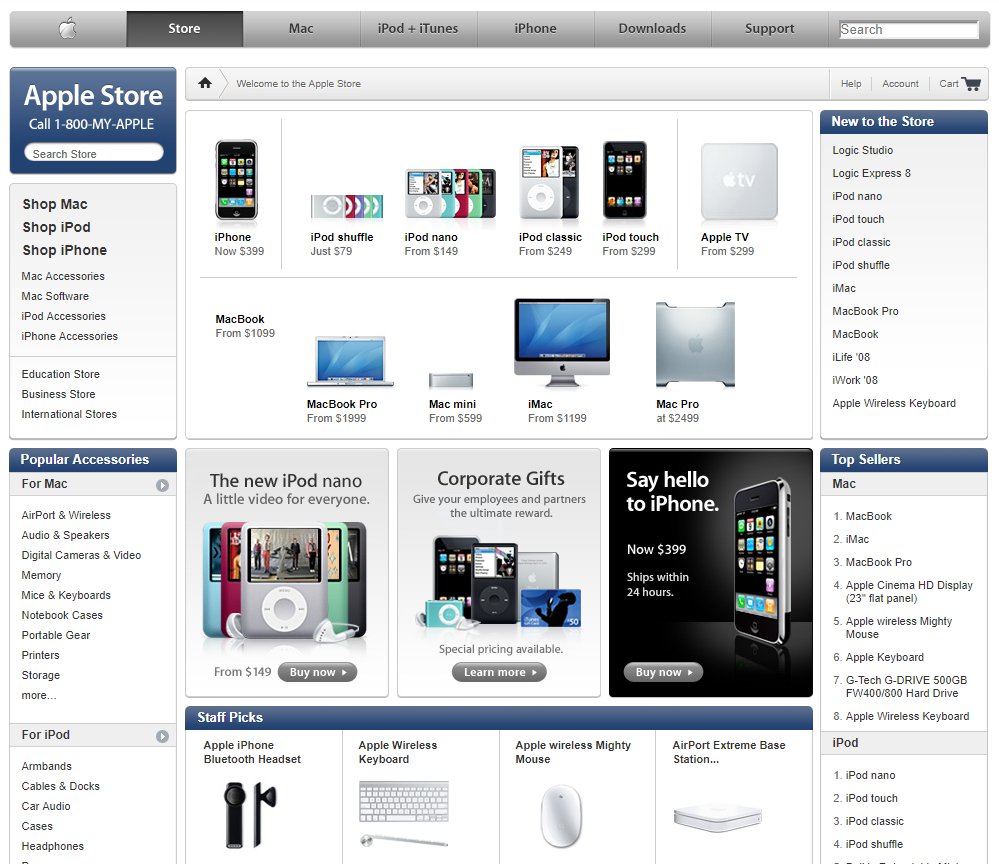

Figure 1-5. Apple.com—July 1997: note the data presented is purely informational and there is no way for a user to sign-up, sign-in, comment or persist any data from one session to another.

The early 2000’s would mark a new era for the internet because websites would begin to store user submitted data and modify the functionality of the site based on the user’s input. This would be a key development later known as “Web 2.0”.

Web 2.0 websites allowed users to collaborate with each other by submitting their inputs over Hypertext Transfer Protocol (HTTP) to a webserver which would then store the inputs and share them with fellow users upon request.

This new ideology in building websites would give birth to social media as we know it today. Web 2.0 enabled blogs, wikis, media sharing sites and more.

This radical change in web ideology would cause the web to change from a document sharing platform to an application distribution platform.

Figure 1-6. Apple.com, October 2007: here a store front is present, and items can be purchased online. Furthermore, you will note in the upper right-hand corner there is an “account” link. This suggests the website had begun support for user accounts and data persistence. The account link existed in previous iterations of the Apple website in the 2000’s, but 2007 was when it was promoted to the top right of the UX instead of a link at the bottom. This suggests it may have been experimental or underutilized beforehand.

This huge shift in architecture design direction for websites would also change the way that hackers target web applications. By now, serious efforts had been taken to secure servers and networks—the two leading attack vectors for hackers of the last decade.

With the rise of application-like websites, the user would become a perfect target for hackers.

It was a perfect setup. Users would soon have access to mission-critical functionality over the web. Military communications, bank transfers, and more would all eventually be done through web applications (a website that operates like a desktop application).

Unfortunately, there were very few security controls in place at the time to protect users against attacks that targeted them. Furthermore, education regarding hacking or the mechanisms that the internet ran on was very scarce. Few early internet users in the 2000’s could even begin to grasp the underlying technology that acted as an enabler for them.

In the early 2000’s, the first widely publicized distributed denial of service (DDoS) attacks would shut down Yahoo, Amazon, eBay and other popular sites.

In 2002, Microsoft’s ActiveX plugin for browsers would end up with a vulnerability that would allow remote file uploads and downloads to be invoked by a website with malicious intentions.

By the mid 2000’s hackers would regularly utilize “phishing” websites to steal credentials. No controls were in place at the time to protect users against these websites.

Cross Site Scripting vulnerabilities that allowed a hacker’s code to run in a user’s browser session inside of a legitimate website would run rampant throughout the web during this time, as browser vendors had not yet been able to build defenses for such attacks.
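A minimal sketch of why this class of bug arises is shown below. It assumes a hypothetical site that builds a comment page by pasting user input into HTML. The function names and the payload are illustrative and do not come from any particular framework. The escaped variant uses `html.escape` from Python’s standard library.

```python
import html


def render_comment_unsafe(comment: str) -> str:
    """Interpolate user input directly into markup (vulnerable)."""
    return f"<p>{comment}</p>"


def render_comment_escaped(comment: str) -> str:
    """Escape HTML metacharacters so the input is displayed as text."""
    return f"<p>{html.escape(comment)}</p>"


# A comment submitted by a malicious user.
payload = "<script>alert('xss')</script>"

unsafe_page = render_comment_unsafe(payload)    # browser would execute the script
escaped_page = render_comment_escaped(payload)  # browser would show it as plain text
```

In the unsafe version, every visitor who loads the page runs the attacker’s script inside the legitimate site’s origin. Escaping on output is one common defense, though it is only a starting point.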

Many of the hacking attempts of the 2000’s came as a result of the technology driving the web being designed for a single user (the website owner). These technologies would topple when used to build a system that allowed the sharing of data between many users.

Hackers in the Modern Era, Circa 2015+

The point of discussing hacking in previous eras was to build a foundation from which we can begin our journey in this book.

From analyzing the development and cryptanalysis of Enigma Machines in the 1930’s we gained insight into the importance of security, and the lengths that others will go to in order to break said security.

In the 1940’s we saw an early use case for security automation. This particular case was driven by the ongoing battle between attackers and defenders. Enigma Machine technology had improved so much that it could no longer be reliably broken by manual cryptanalysis techniques. As a result, Alan Turing turned to automation in order to beat the aforementioned security improvements.

The 1950’s and 1960’s showed us that hackers and tinkerers have a lot in common. We also learned that technology designed without considering users with malicious intent will lead to said technology eventually being broken. We must always consider the worst-case scenario when designing technology to be deployed at scale and across a wide userbase.

In the 1980’s the personal computer started to become popular. Around this time period we would begin to see the hackers we recognize today emerge. These hackers would take advantage of the powers that software enables, camouflaging viruses inside of legitimate applications and using networks to spread their viruses rapidly.

Finally, the introduction and rapid adoption of the World Wide Web would lead to the development of Web 2.0. Web 2.0 would change the way we think about the internet. Instead of the internet being a medium for sharing documents, it would become a medium for sharing applications. As a result of this, new types of exploits would emerge that take advantage of the user rather than the network or server. This is a fundamental change that is still true today, as most of today’s hackers have moved to targeting web applications via browsers instead of desktop software and operating systems.

Let’s jump ahead to 2019, the year when I started writing this book. As of the time of writing, there are thousands of websites on the web that are backed by million- and billion-dollar companies. In fact, many companies make all of their revenue from their websites. Some examples you are probably familiar with are Google, Facebook, Yahoo, Reddit, and Twitter.

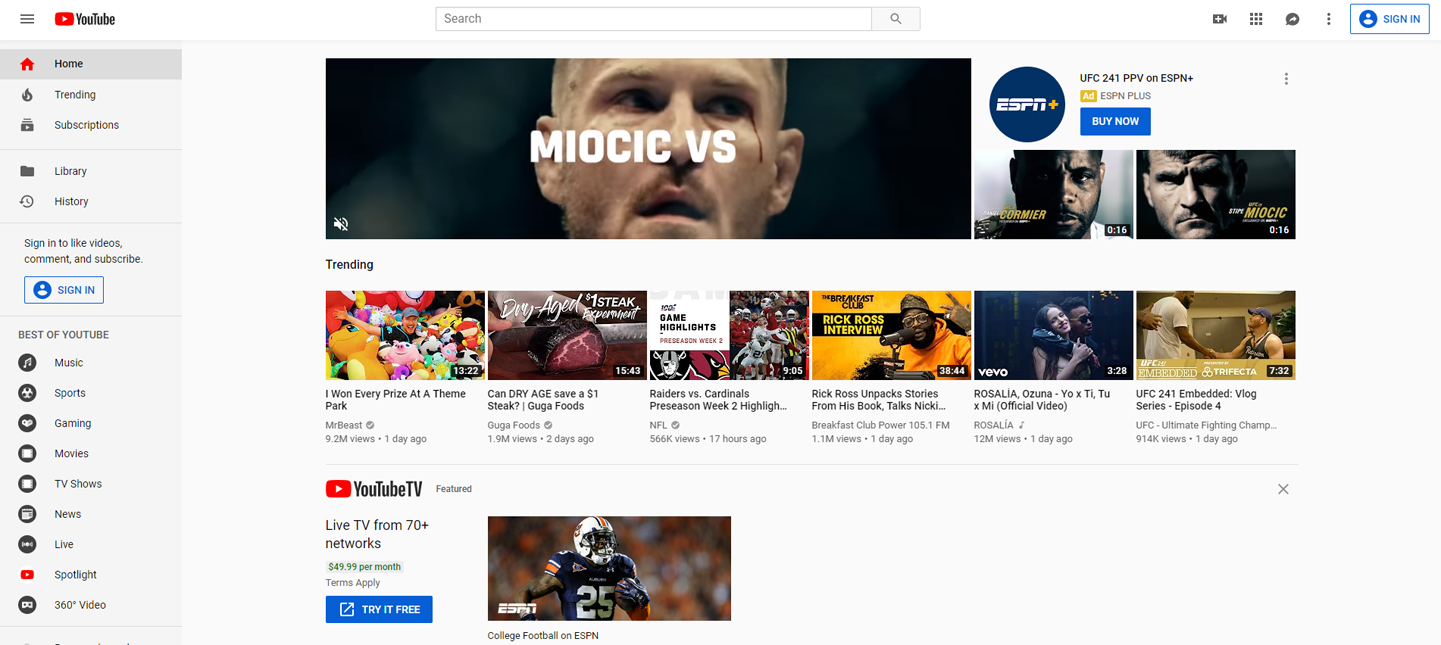

Figure 1-7. YouTube.com: now owned by Google, YouTube is a fantastic example of a Web 2.0 website. YouTube allows users to interact with each other, and with the application itself. Comments, video uploads and image uploads are all supported. All of these uploads have variable permissions which allow the uploader to determine who the content should be visible to. Much of the hosted data persists permanently and across sessions, and several features, such as notifications, have changes reflected between users in near real time. Also, a significant number of critical features are offloaded to the client (browser) rather than residing on the server.

Some traditional desktop software companies are now trying to move their product lineup to the web, to what is known today as “the cloud” but is simply a complex network of servers. Examples of this include Adobe with “Creative Cloud,” a subscription offering that provides Photoshop and other Adobe tools via the web, and Microsoft Office, which provides Word and Excel, but now as web applications.

Because of how much money is parked in web applications, the stakes are the highest they have ever been. This means applications today on the web are ripe for exploitation, and the rewards for exploiting them are sky high.

This is truly one of the best eras to be in for both hackers and engineers who emphasize security. Work for both is in high demand, and on both sides of the law.

Browsers have become significantly more advanced than they were 10 years ago. Alongside this advancement has come a host of new security features. The networking protocols we use to access the internet have advanced as well.

Today’s browsers offer very robust isolation between websites with different origins, following a security specification known as the Same Origin Policy (SOP). This basically means that scripts running on website A cannot read the content or data of website B, even if both are open at once or one is embedded as an iframe inside of the other.
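To make the idea of an “origin” concrete: browsers generally treat two URLs as sharing an origin only when their scheme, host, and port all match. The following is a minimal sketch of that comparison, not a browser’s actual implementation. The function names `origin` and `same_origin` and the example URLs are assumptions chosen for illustration.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit

# Default ports implied by each scheme when a URL does not state one.
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> Tuple[str, str, Optional[int]]:
    """Return the (scheme, host, port) triple that identifies an origin.

    Hypothetical helper for illustration only.
    """
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    # Fall back to the scheme's default port when none is given.
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(scheme)
    return (scheme, host, port)


def same_origin(url_a: str, url_b: str) -> bool:
    """Two URLs share an origin only if scheme, host, and port all match."""
    return origin(url_a) == origin(url_b)
```

Under this sketch, `https://example.com/a` and `https://example.com:443/b` share an origin, while `https://example.com` and `https://api.example.com` do not, because the hosts differ.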

Browsers also accept a new security configuration known as Content Security Policy (CSP). CSP allows the developer of a website to specify various levels of security, for example whether scripts should be able to execute inline (in the HTML). This allows web developers to further protect their applications against common threats.
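A CSP is typically delivered to the browser as a `Content-Security-Policy` HTTP response header made up of directives separated by semicolons. The sketch below assembles such a header value from a dictionary. The helper name `build_csp` and the specific policy are assumptions chosen for illustration, not a recommended production policy.

```python
from typing import Dict, List


def build_csp(directives: Dict[str, List[str]]) -> str:
    """Join CSP directives into a single header value.

    Hypothetical helper for illustration only.
    """
    rendered = []
    for name, sources in directives.items():
        # Each directive is its name followed by a space-separated source list.
        rendered.append(name + " " + " ".join(sources))
    return "; ".join(rendered)


# Only allow resources from the site's own origin. Because script-src
# does not include 'unsafe-inline', inline scripts are not allowed to run.
policy = build_csp({
    "default-src": ["'self'"],
    "script-src": ["'self'"],
})

# The pair a server would attach to its HTTP response.
header = ("Content-Security-Policy", policy)
```

A server that sends this header asks the browser to refuse inline scripts, which limits the damage an injected script tag can do.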

HTTP, the main protocol for sending web traffic, has also improved from a security perspective. HTTP can now be layered on top of SSL and its successor TLS (a combination known as HTTPS), which encrypt data traveling over the network. This makes man-in-the-middle attacks very difficult to pull off successfully.

As a result of these advancements in browser security, many of the most successful hackers today are actually targeting the logic written by developers that runs in their web applications.

Instead of targeting the browser itself, it is much easier to successfully breach a website by taking advantage of bugs in the application’s code. Fortunately for hackers, web applications today are many times larger and more complex than web applications of the past.

Today, a well-known web application can often have hundreds of open source dependencies, integrations with other websites, and multiple databases of various types, and can be served from more than one web server in more than one location.

These are the types of web applications you will find the most success in exploiting, and the types of web applications we will be focusing on throughout this book.

To summarize, today’s web applications are much larger and more complex than their predecessors. As a hacker, you can now focus on breaking into web applications by exploiting logic bugs in the application code. Often these bugs result as a side effect of advanced user-interaction featured within the web application.

The hackers of the last decade focused much of their time breaking into servers, networks and browsers. The modern hacker spends most of their time breaking into web applications by exploiting vulnerabilities present in code.

Summary

The origins of software security, and of hackers attempting to bypass said security, go back at least 100 years.

Today’s software builds on top of lessons learned from the technology of the past, as does the security of that software.

Hackers of the past targeted applications much differently than they do today. As one part of the application stack becomes increasingly secure, hackers often move on to targeting new emerging technologies instead.

These new technologies often do not have the same level of security controls built in, and only through trial and error will engineers be able to design and implement the proper security controls in said emerging technology.

Similar to how simple websites of the past were riddled with security holes (in particular on the server and network levels), modern web applications bring new surface area for attackers, which is being actively exploited.

This brief historical context is important because it highlights that today’s security concerns regarding web applications are just one stage in a cyclical process.

Web applications of the future will be more secure, and hackers will likely move on to new attack surfaces (maybe RTC or WebSockets, for example).

Tip

Each new technology comes with its own unique attack surface and vulnerabilities.

One way to become an excellent hacker is to always stay up to date on the latest new technologies—these will often have security holes not yet published or found on the web.

In the meantime, this book will show you how to break into and secure modern web applications. But learning modern offensive and defensive security techniques is just one facet of what you should take away from this book.

Ultimately, being able to find your own solutions to security problems is the most valuable skill you can have as a security professional.

If you can derive security-related critical thinking and problem-solving skills from the coming chapters, then you will be able to stand above your peers when new or unusual exploits are found, or when previously unseen security mechanisms stand in your way.