CHAPTER 8

MExE-Based QoS

We have identified delay and delay variability as two components that add to or subtract from user value. Some of our rich media mix is delay-tolerant but not all of it, and the part of the media mix that is the most sensitive to delay and delay variability tends to represent the highest value. Application streaming is part of our rich media mix; it implies that we have one or more applications running in parallel to our audio and video exchange.

In this chapter, we consider how handset software performance influences the quality of the end-to-end user experience.

An Overview of Software Component Value

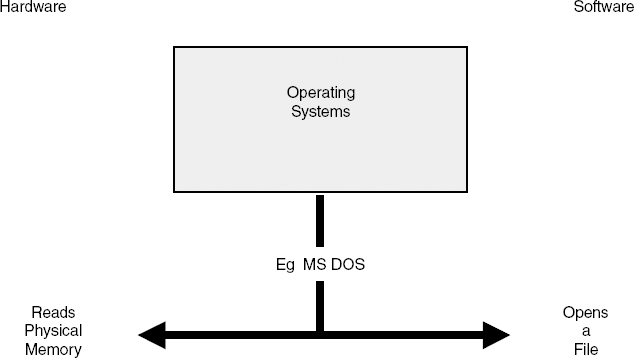

Delay and delay variability and the ability to multitask are key elements of software component value. The job of an operating system is to sit between the software resident in the device and the device hardware (see Figure 8.1). Going back in history, an operating system such as Microsoft DOS (Disk Operating System) reads physical memory (hardware) in order to open a file (to be processed by software). In a PDA or 3G wireless handset, the operating systems to date have typically been ROM-based products. This is changing, however, because of the need to support remote reconfigurability and dynamic application downloads.

Figure 8.1 Software partitioning.

Applications are sometimes described as embedded or even deeply embedded. The more deeply embedded the application, the more remote it is from the outside world. In other words, the more deeply embedded the application, the more deterministic it becomes—that is, it performs predictably. As the application is moved closer to the real world, it has to become more flexible, and as a result, it becomes less predictable in terms of its overall behavior.

An example of embedded software is the driver software for multiple hardware elements—memory, printers, and LCDs. There are a number of well-defined repetitive tasks to be performed that can be performed within very closely defined time scales. Moving closer to the user exposes the software to unpredictable events such as keystroke interrupts, sudden unpredictable changes in the traffic mix, and sudden changes in prioritization.

Defining Some Terms

Before we move on, let's define some terms:

Protocols. Protocols are the rules used by different layers in a protocol stack to talk to and negotiate with one another.

Protocol stack. The protocol stack is the list of protocols used in the system. The higher up you are in the protocol stack, the more likely you are to be using software—partly because of the need for flexibility, partly because speed of execution is less critical. Things tend to need to move faster as you move down the protocol stack.

Peers. The machines in each layer are described as peers.

Entities. Peers are entities, self-contained objects that can talk to each other. Entities are active elements that can be hardware or software.

Network architecture. A set of layers and protocols make up a network architecture.

Real-time operating system. We have already, rather loosely, used the term real-time operating system (RTOS). What do we mean by real time? The IEEE definition of a real-time operating system is a system that responds to external asynchronous events in a predictable amount of time. Real time, therefore, does not mean instantaneous real time but predictable real time.

Operating System Performance Metrics

In software processing, systems are subdivided into processes, tasks, or threads. An example of a well-established operating system used to control the protocol stack in a cellular phone is the OS9 operating system from Microware (now RadiSys). However, as we discussed in an earlier chapter, we might also have an RTOS for the DSP, a separate RTOS for the microcontroller, an RTOS for memory management, as well as an RTOS for the protocol stack and man-machine interface (MMI) (which may or may not be the same as the microcontroller RTOS). These various standalone processors influence each other and need to talk to each other. They communicate via mailboxes, semaphores, event flags, pipes, and alarms.
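As a minimal sketch of the mailbox and event-flag mechanisms just listed, the following Python fragment passes frames from one task to another. The task names (`dsp_task`, `stack_task`) and the mailbox depth of four are illustrative assumptions; a real RTOS would provide its own primitives rather than Python threads.

```python
import queue
import threading


def run_mailbox_demo(frames):
    """Pass frames from a hypothetical DSP task to a protocol stack task."""
    mailbox = queue.Queue(maxsize=4)   # bounded mailbox between the two tasks
    done = threading.Event()           # event flag: consumer has finished
    received = []

    def dsp_task():
        for frame in frames:
            mailbox.put(frame)         # blocks while the mailbox is full
        mailbox.put(None)              # sentinel: no more frames to send

    def stack_task():
        while True:
            frame = mailbox.get()      # blocks until a message arrives
            if frame is None:
                break
            received.append(frame)
        done.set()                     # raise the event flag

    consumer = threading.Thread(target=stack_task)
    producer = threading.Thread(target=dsp_task)
    consumer.start()
    producer.start()
    producer.join()
    consumer.join()
    return received, done.is_set()
```

The bounded queue makes the sender wait when the receiver falls behind, which is one simple way in which one processor's behavior influences another's.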

Performance metrics in an operating system include the following:

Context switching. The time taken to save the context of one task (registers and stack pointers) and load the context of another task

Interrupt latency. The amount of time between an interrupt being flagged and the first line of the code being executed (in response to the interrupt), including completion of the initial instruction

As a rule of thumb, if an OS is ROM-based, the OS is generally more compact, less vulnerable to virus infection, and more efficient (and probably more predictable). Psion EPOC, the basis for Symbian products, is an example. The cost is flexibility: the better the real-time performance, the less flexible the OS will be. Response times of an RTOS should generally be better than (that is, less than) 50 μs.
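Because real time here means predictable rather than instantaneous, the worst case and the spread of response times matter more than the average. The sketch below summarizes a set of measured response times against the 50 μs rule of thumb. The function name, the sample values, and the choice of max-minus-min as the measure of variability are our assumptions.

```python
def summarize_latency(samples_us, budget_us=50.0):
    """Summarize measured response times (in microseconds) against a budget."""
    if not samples_us:
        raise ValueError("need at least one sample")
    worst = max(samples_us)
    best = min(samples_us)
    return {
        "worst_us": worst,                  # worst-case response time
        "jitter_us": worst - best,          # spread between best and worst case
        "within_budget": worst < budget_us  # the worst case must beat the budget
    }
```

For example, `summarize_latency([12.0, 18.5, 47.0])` reports a worst case of 47.0 μs, inside the budget, whereas a single 60 μs outlier would fail the check however good the average.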

The OSI Layer Model

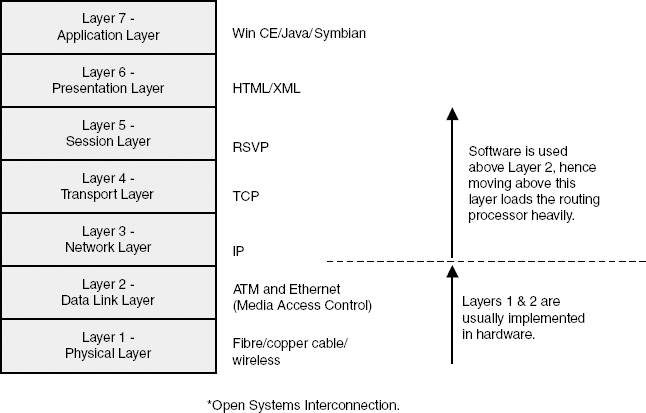

We can qualify response times and flexibility using the OSI layer model, shown in Figure 8.2. The OSI model (Open Systems Interconnection) was developed by ISO (the International Organization for Standardization) in 1984 as a standard for data networking. The growth of distributed computing (that is, computers networked together) has, however, made the OSI model increasingly relevant as a means for assessing software performance.

Figure 8.2 Software performance–the OSI reference model.

At the Application layer, the software has to be very flexible. This means it must be able to respond to a wide range of unpredictable user requests. Although the execution of particular tasks may be real time (as defined earlier in the chapter), the tasks vary in complexity and may require a number of interactive processes (semaphores and alarms, for example) before the task can be considered as completed (a high degree of interrupt latency).

As we move down the protocol stack, task execution becomes more deterministic. For example, at the Physical layer, things are happening within very precise and predictable time scales. Returning to our reference model, following are descriptions of each protocol layer and the types of tasks performed:

Application layer (7). Windows CE, Symbian, or Java, for example, will look after the user (the MMI and the housekeeping tasks associated with meeting the user's requirement), organizing display drivers, cursors, file transfer, naming conventions, e-mail, diary, and directory management.

Presentation layer (6). This layer does work that is sufficiently repetitive to justify a general solution—page layout, syntax and semantics, and data management. HTML and XML are examples of Presentation layer protocols (for Web page management).

Session layer (5). This layer organizes session conversations: The way our dialogue is set up, maintained, and closed down. Session maintenance includes recovery after a system crash or session failure—for example, determining how much data needs resending. RSVP and SIP (covered later) are examples of Session layer protocols.

Transport layer (4). This layer organizes end-to-end streaming and manages data from the Session layer, including segmentation of packets for the Network layer. The Transport layer helps to set up end-to-end paths through the network. Transmission Control Protocol (TCP) is usually regarded as a Transport layer protocol. (SIP is also sometimes regarded as a Transport layer protocol.)

Network layer (3). This layer looks after the end-to-end paths requested by the Transport layer, manages congestion control, produces billing data, and resolves addressing conflicts. Internet Protocol (IP) is usually considered as being a Network layer protocol.

Data Link layer (2). This layer takes data (the packet stream) and organizes it into data frames and acknowledgment frames, checks how much buffer space a receiver has, and integrates flow control and error management. ATM, Ethernet, and the GSM MAC (Media Access Control) protocols are all working at the Data Link layer. GSM MAC, for example, looks after resource management—that is, the radio bandwidth requirements needed from the Physical layer.

Physical layer (1). This layer can be wireless, infrared, twisted copper pair, coaxial, or fiber. The Physical layer is the fulfillment layer—transmitting raw bits over a wireless or wireline communication channel.
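The way data moves down the stack in the sending device and back up the stack in the receiving device can be sketched as follows. The bracketed text headers are purely illustrative; real protocols use binary headers (and sometimes trailers) with their own formats.

```python
from enum import IntEnum


class Layer(IntEnum):
    PHYSICAL = 1
    DATA_LINK = 2
    NETWORK = 3
    TRANSPORT = 4
    SESSION = 5
    PRESENTATION = 6
    APPLICATION = 7


def encapsulate(payload, top_layer=Layer.APPLICATION):
    """Moving down the stack, each layer wraps what it receives from above."""
    frame = payload
    for layer in sorted(Layer, reverse=True):
        if layer <= top_layer:
            frame = f"[L{layer.value}]{frame}"   # illustrative header only
    return frame


def decapsulate(frame):
    """Moving up the stack, each peer layer strips its own header."""
    for layer in sorted(Layer):
        header = f"[L{layer.value}]"
        if frame.startswith(header):
            frame = frame[len(header):]
    return frame
```

Here `encapsulate("hi", Layer.NETWORK)` gives `"[L1][L2][L3]hi"`, the output of a device that implements only the bottom three layers, and `decapsulate` recovers the original payload at the far end.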

Any two devices can communicate, provided they have at least the bottom three layers (see Figure 8.3). The more layers a device has, the more sophisticated—and potentially the more valuable—it becomes.

As an example, Cisco started as a hardware company; most of its products (for example, routers and switches) were in Layer 3 or 4. It acquired ArrowPoint, IPmobile, Netiverse, SightPath, and PixStream—moving into higher layers to offer end-to-end solutions (top three layers software, bottom four layers hardware and software). Cisco has now started adding hardware accelerators into routers, however, to achieve acceptable performance.

Figure 8.3 Data flow can be vertical and horizontal.

There is, therefore, no hard-and-fast rule as to whether software or hardware is used in individual layers; it's just a general rule of thumb that software is used higher up in the stack and hardware further down. As we will see in our later chapters on network hardware, the performance of Internet protocols, when presented with highly asynchronous time-sensitive multiple traffic streams, is a key issue in network performance optimization. We have to ensure the protocols do not destroy the value (including time-dependent value) generated by the Application layer; the protocols must preserve the properties of the offered traffic.

MExE Quality of Service Standards

So the software at the Application layer in the sender's device has to talk to the software at the Application layer in the receiver's device. To do this, it must use protocols to move through the intermediate layers and must interact with hardware—certainly at Layer 1, very likely at Layer 2, probably at Layer 3, and possibly at Layer 4.

MExE (the Mobile Execution Environment group) is a standards group within 3GPP1 working on software/hardware standardization. Its purpose is to ensure that the end-to-end communication, described here, actually works, with a reasonable amount of predictability. MExE is supposed to provide a standardized way of describing software and hardware form factor and functionality, how bandwidth on demand is allocated, and how multiple users (each possibly with multiple channel streams) are multiplexed onto either individual traffic channels or shared packet channels.

MExE also sets out to address algorithms for contention resolution (for example, two people each wanting the same bandwidth at the same time with equal priority access rights) and the scheduling and prioritizing of traffic based on negotiated quality of service rights (defined in a service level agreement). The quality of service profile subscriber parameters are held in the network operator's home location register (the register used to support subscribers logged on to the network) with a copy also held in the USIM in the subscriber's handset. The profiles include hardware and software form factor and functionality, as shown in the following list:

Class mark hardware description. Covers the vendor and model of handset and the hardware form factor—screen size and screen resolution, display driver capability, color depth, audio inputs, and keyboard inputs.

Class mark software description. Covers the operating system or systems, whether or not the handset supports Java-based Web browsers (the ability to upload and download Java applets), and whether the handset has a Java Virtual Machine (to make Java byte code instructions run faster).
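The scheduling and contention resolution described before this list can be illustrated with a priority queue. This is a sketch under stated assumptions, not the MExE algorithm: the class name `QosScheduler`, the convention that a lower number means a higher priority, and the first-come-first-served tie-break for equal priorities are all ours.

```python
import heapq
import itertools


class QosScheduler:
    """Grant bandwidth requests in priority order, breaking ties by arrival."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()   # monotonically increasing sequence

    def submit(self, user, priority):
        # Assumption: a lower number means a higher negotiated priority.
        heapq.heappush(self._heap, (priority, next(self._arrival), user))

    def next_grant(self):
        """Return the next user to be served, or None if nothing is waiting."""
        if not self._heap:
            return None
        _, _, user = heapq.heappop(self._heap)
        return user
```

Two users with equal priority access rights who want the same bandwidth at the same time are served in order of arrival here; other tie-break policies (round robin, for example) are equally plausible.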

Predictably, the result will be thousands of different hardware and software form factors. This is reflected in the address bandwidth needed. The original class mark (Class Mark 1) used in early GSM handsets was a 2-octet (16-bit) descriptor. The class mark presently used in GSM can be anything between 2 and 5 octets (16 to 40 bits). Class Mark 3 as standardized in 3GPP1 is a 14-octet descriptor (112 bits). Class Mark 3 is also known as the Radio Access Network (RAN) class mark. The RAN class mark includes FDD/TDD capability, encryption and authentication, intersystem measurement capability, positioning capability, and whether the device supports UCS2 and UCS4. This means that it covers both the source coding, including MPEG4 encoding/decoding, and channel coding capability of the handset. Capability includes such factors as how many simultaneous downlink channel streams are supportable, how many uplink channel streams are supportable, maximum uplink and downlink bit rate, and the dynamic range of the handset—minimum and maximum bit rates supportable on a frame-by-frame basis.
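The octet and bit figures quoted above follow from simple arithmetic, sketched below. The upper bound on distinct descriptors assumes every bit is free to vary, which overstates the real number because many fields are constrained.

```python
BITS_PER_OCTET = 8


def descriptor_bits(octets):
    """Size of a class mark descriptor in bits."""
    return octets * BITS_PER_OCTET


def max_distinct_descriptors(octets):
    """Upper bound on distinct descriptors, assuming every bit is free."""
    return 2 ** descriptor_bits(octets)
```

A 2-octet class mark can in principle distinguish 65,536 combinations; a 14-octet (112-bit) descriptor has room for vastly more, which is one way of seeing how device diversity drives descriptor size.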

MExE is effectively an evolution from existing work done by the Wireless Application Protocol (WAP) standard groups within 3GPP1.

Maintaining Content Value

Our whole premise in this book so far has been to see how we can capture rich media and then preserve the properties of the rich media as the product is moved into and through a network for delivery to another subscriber's device. There should be no need to change the content. If the content is changed, it will be devalued. If we take a 30 frame per second video stream and reduce it to 15 frames a second, it will be devalued. If we take a 24-bit color depth image and reduce it to a 16-bit color depth image, it will be devalued. If we take a CIF image and reduce it to a QCIF image, it will be devalued. If we take wideband audio and reduce it to narrowband audio, it will be devalued.
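To put rough numbers on these reductions, the sketch below computes uncompressed bit rates, taking CIF as 352 × 288 pixels and QCIF as 176 × 144 pixels. It deliberately ignores compression and chroma subsampling, so the figures indicate relative scale only.

```python
CIF = (352, 288)    # width, height in pixels
QCIF = (176, 144)


def raw_bit_rate(size, color_depth_bits, frames_per_second):
    """Uncompressed video bit rate in bits per second."""
    width, height = size
    return width * height * color_depth_bits * frames_per_second


full = raw_bit_rate(CIF, 24, 30)      # 72,990,720 bit/s
reduced = raw_bit_rate(QCIF, 16, 15)  # 6,082,560 bit/s
ratio = full // reduced               # 12
```

Applying all three reductions together (a quarter of the pixels, two-thirds of the color depth, half the frame rate) leaves one-twelfth of the original information rate.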

There are two choices:

- You take content and adapt it (castrate it) so that it can be delivered and displayed on a display-constrained device.

- You take content, leave it completely intact (preserve its value), and adapt the radio and network bandwidth and handset hardware and software to ensure the properties of the content are preserved.

WAP is all about delivering the first choice: putting a large filter between the content and the consumer to try and hide the inadequacies of the radio layer, network, or subscriber product platform. Unsurprisingly, the result is a deeply disappointing experience for the user. Additionally, the idea of having thousands of device hardware and software form factors is really completely unworkable. The only way two dissimilar devices can communicate is by going through an insupportably complex process of device discovery.

Suppose, for example, that a user walks into a room with a Bluetooth-enabled 3G cellular handset, and the handset decides to use Bluetooth to discover what other compatible devices there are in the room. This involves a lengthy process of interrogation. The Bluetooth-enabled photocopier in the corner is particularly anxious to tell the 3G handset all about its latest hardware and software capability. The other devices in the room don't want to talk at all and refuse to be authenticated. The result is an inconsistent user experience and, as we said earlier, an inconsistent user experience is invariably perceived as a poor-quality user experience.

Pragmatically, the exchange of complex content and the preservation of rich media product properties delivered consistently across a broad range of applications can and will only be achieved when and if there is one completely dominant de facto standard handset with a de facto standard hardware and software footprint. Whichever vendor or vendor group achieves this will dominate next-generation network-added value.

Do not destroy content value. If you are having to resize or reduce content and as a result are reducing the value of the content, then you are destroying network value.

Avoid device diversification. Thousands of different device hardware and software form factors just aren't going to work: either the hardware will fail to communicate (different flavors of 3G phones failing to talk to each other) or the software will fail to communicate (a Java/ActiveX conflict, for example).

Experience to date reinforces the “don't meddle with content” message.

Network Factors

Let's look at WAP as an example of the demerits of unnecessary and unneeded mediation. Figure 8.4 describes some of the network components in a present GSM network with a wireless LAN access point supporting Dynamic Host Configuration Protocol, or DHCP (the ability to configure and reconfigure IPv4 addresses), a Web server, a router, and a firewall. The radio bearers shown are either existing GSM, high-speed circuit-switched data (HSCSD)—circuit-switched GSM but using multiple time slots per user on the radio physical layer—or GPRS/EDGE.

The WAP gateway is then added. This takes all the rich content from the Web server and strips out all the good bits—color graphics, video clips, or anything remotely difficult to deal with. The castrated content is then sent on for forward delivery via a billing system that makes sure users are billed for having their content destroyed. The content is then moved out to the base station for delivery to the handset.

Figure 8.4 GSM Network Components.

Figure 8.4 illustrates what is essentially a downlink flow diagram. It assumes that future network value is downlink-biased (a notion with which we disagree). However, the WAP gateway could also compromise subscriber-generated content traveling in the uplink direction.

Figure 8.5 shows the WAP-based client/server relationship and the transcoding gateway, which is a content-stripping gateway, not a content compression gateway (which would be quite justifiable).

Figure 8.6 shows the WAP structure within the OSI seven-layer model—with the addition of a Transaction and Security layer. One of the objectives of integrating end-to-end authentication and security is to provide support for micro-payments (the ability to pay for relatively low value items via the cellular handset).

Figure 8.6 WAP layer structure.

The problem with this is verification delay. There is not much point in standing in front of a vending machine and having to wait for 2 minutes while your right to buy is verified and sent to the machine. It is much easier and faster to put in some cash and collect the can.

There is also a specification for a wireless datagram protocol (WDP). Because the radio layer is isochronous (packets arrive in the same order they were sent), you do not need individual packet headers (whose role in life is to manage out-of-order packet delivery). This reduces some of the Physical layer overhead, though whether this gain, which is most likely marginal, is worth the additional processing involved is open to debate.

Work items listed for WAP include integration with MExE, including a standardized approach to Java applet management, end-to-end compression encryption and authentication standards, multicasting, and quality of service for multiple parallel bearers. Some of the work items assume that existing IETF protocols are nonoptimum for wireless network deployment and must be modified.

As with content, we would argue it is better to leave well enough alone. Don't change the protocols; sort out the network instead. Sorting out the network means finding an effective way of matching the QoS requirements of the application to network quality of service. This is made more complex because of the need to support multiple per-user QoS streams and security contexts. QoS requirements may also change as a session progresses, and network limitations may change as a session progresses.
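Matching application QoS requirements to network QoS for several parallel streams can be sketched as a simple admission check. The field names, units, and the rule that stream bit rates add while delay and jitter bounds apply per stream are illustrative assumptions rather than anything taken from a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QosSpec:
    bit_rate_kbps: float
    max_delay_ms: float
    max_jitter_ms: float


def admit_session(streams, bearer):
    """Check a user's parallel streams against what the network can offer.

    streams: dict mapping a stream name to the QosSpec it requires.
    bearer:  QosSpec describing what the network currently offers.
    """
    total_kbps = sum(s.bit_rate_kbps for s in streams.values())
    if total_kbps > bearer.bit_rate_kbps:
        return False                     # not enough aggregate bandwidth
    return all(
        bearer.max_delay_ms <= s.max_delay_ms
        and bearer.max_jitter_ms <= s.max_jitter_ms
        for s in streams.values()        # every stream's bounds must hold
    )
```

Because both the requirements and the network limitations may change as a session progresses, a check like this would need to be repeated whenever either side changes, not only at session setup.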

As content and applications change, then, it can be assumed new software will need to be downloaded into base stations, handsets, and other parts of the network. Some hardware reconfiguration may also be possible. Changing the network in response to changes in the content form factor is infinitely preferable to changing the content in response to network constraints. Reconfiguration does, however, imply the need to do device verification and authentication of bit streams used to download change instructions.
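One common way to authenticate a downloaded bit stream is to attach a keyed message authentication code and verify it before the change instructions are applied. The sketch below uses HMAC-SHA256 from the Python standard library with a pre-shared key. The choice of mechanism is our assumption; the text does not specify one, and a deployed system might well use public-key signatures instead.

```python
import hashlib
import hmac


def sign_image(image: bytes, key: bytes) -> bytes:
    """Compute an authentication tag over a download image."""
    return hmac.new(key, image, hashlib.sha256).digest()


def verify_image(image: bytes, tag: bytes, key: bytes) -> bool:
    """Accept the image only if its tag matches (constant-time comparison)."""
    return hmac.compare_digest(sign_image(image, key), tag)
```

A handset or base station would call `verify_image` on the received image and refuse to reconfigure itself if the check fails, so that a corrupted or forged download is never applied.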

The Software Defined Radio (SDR) Forum (www.sdrforum.org) is one body addressing the security and authentication issues of remote reconfiguration.

Summary

In earlier chapters, we described how the radio physical layer was becoming more flexible—able to adapt to rapid and relatively large changes in data rate. We described also how multiple parallel channel streams can be supported, each with its own quality of service properties. The idea is that the Physical layer can be responsive to the Application layer. One of the jobs of the Application layer is to manage complex content—the simultaneous delivery of wideband audio, image, and video products.

Traditionally, the wireless industry has striven to simplify complex content so it is easier to send, both across a radio air interface and through a radio network. Simplifying complex content reduces content value. It is better, therefore, to provide sufficient adaptability over the radio and network interface to allow the network to adapt to the content, rather than adapt the content to the network.

This means that handset hardware and software also need to be adaptable and have sufficient dynamic range (for example, display and display driver bandwidth and audio bandwidth) to process wideband content (the rich media mix). In turn this implies that a user or device has a certain right of access to a certain bandwidth quantity and bandwidth quality, which then forms the basis of a quality of service profile that includes access and policy rights.

Given that thousands of subscribers are simultaneously sending and receiving complex content, it becomes necessary to police and regulate access rights to network resources. As we will see in later chapters, network resources are a product of the bandwidth available and the impact of traffic-shaping protocols on traffic flow and traffic prioritization.

Radio resources can be regarded as part of the network resource. Radio resources are allocated by the MAC layer (part of the Data Link layer, or Layer 2). The radio resources are provided by Layer 1, the Physical layer. MExE sets out to standardize how the Application layer talks to the Physical layer via the intermediate layers. This includes how hardware talks to hardware and how software talks to software up and down the protocol stack.

The increasing diversity of device (handset) hardware and software form factor and functionality creates a problem of device/application compatibility. Life would be much easier (and more efficient) if a de facto dominant handset hardware and software standard could emerge. This implies a common denominator handset hardware and software platform that can talk via a common denominator network hardware and software platform to other common denominator handset hardware/software platforms.

It is worthwhile to differentiate application compatibility and content compatibility. Applications include content that might consist of audio, image, video, or data. Either the application can state its bandwidth (quantity or quality) requirements or the content can state its requirements (via the Application layer software). This is sometimes described as declarative content—content that can declare its QoS needs. When this is tied into an IP-routed network, the network is sometimes described as a content-driven switched network.

An example of a content-driven switching standard is MEGACO, the media gateway control standard (produced by the IETF), which addresses the remote control of session-aware or connection-aware devices (for instance, an ATM device). MEGACO identifies the properties of streams entering or leaving the media gateway and the properties of the termination device: buffer size, display, and any ephemeral, short-lived attributes of the content that need to be accommodated, including particular session-based security contexts. MEGACO shares many of the same objectives as MExE and, as we will see in later chapters, points the way to future content-driven admission control topologies.

Many useful lessons have been learned from deploying protocols developed to accommodate the radio physical layer. If these protocols take away rather than add to content value, they fail in terms of user acceptance. At time of writing, the WAP Forum is being disbanded and subsumed into the Open Mobile Alliance (OMA), which aims to build on work done to date on protocol optimization.