CHAPTER 19

3G Cellular/3G TV Software Integration

In this chapter we review the potential convergence between 3G TV networks and 3G cellular networks. We compare U.S. digital TV standards with the European and Asian DAB (Digital Audio Broadcasting) and DVB (Digital Video Broadcasting) standards. The DAB and DVB standards provide an air interface capable of supporting mobility users, which creates obvious opportunities for commonality between cellular and TV applications. We point out that TV has plenty of downlink power but lacks uplink bandwidth (which cellular is able to provide). There are also commonalities at the network level, given that both 3G cellular and 3G TV networks use ATM to move traffic: to the broadcast transmitters in 3G TV, and to and from the Node Bs, RNCs, and the core network in 3G cellular.

The DAB/DVB radio physical layer is based on a relatively complex frequency transform, which is hard to realize at present in a cellular handset transmitter (from a power efficiency and processor overhead perspective). We are therefore unlikely to see 3G cellular phones supporting a DVB radio physical layer on the uplink, but the phones could use the existing (W-CDMA or CDMA2000) air interface to deliver uplink bandwidth (subscriber-generated content). We will also look at parallel technologies (Web TV).

The Evolution of TV Technology

To date there have been three generations of TV technology (see Table 19.1):

Table 19.1 The Three Generations of TV

- The start of black-and-white TV in the 1930s (fading out or being switched off in the 1980s)

- Second-generation color introduced in the 1950s in the United States (NTSC), and 1960s in Europe and Asia (PAL)

- Third-generation digital TV, presently being deployed at varying rates and with various standards in Europe, Asia, and the United States

Technology maturation in television works in 50-year cycles. Black-and-white 405-line TV survived from the 1930s to the 1980s. Analog color (introduced in the 1950s and 1960s) is still very much with us today, though it is mandated to be switched off in Europe by 2010.

Getting 2 billion people to change their TV sets takes a while. In the meantime, other new TV technologies have evolved, including Web TV and IPTV.

The Evolution of Web-Based Media

In 3G cellular networks and wireline telecom networks, we have described the changes taking place in the traffic mix, from predominantly voice-based traffic to a complex mix of voice, text, image, video, and file exchange.

A similar shift is occurring on the World Wide Web, initially a medium dominated by text and graphics but now increasingly a rich media mix of text/graphics, audio, and video streaming.

As the percentage of audio, image, and video streaming increases on the Web, user quality expectations increase. These expectations include the following:

- Higher resolution (text and graphics)

- Higher fidelity (audio)

- Resolution and color depth (imaging)

- Resolution, color depth, and frame rate (video)

These expectations increase over time (months and years). They may also increase as a session progresses. Remember: It is the job of our application software to increase session complexity—and by implication, session value. We want a user downloading from a Web site to choose:

- High-quality audio

- High-value, high-resolution, high color depth imaging

- High-value, high-resolution, high color depth, high frame rate video

Maximizing session complexity maximizes session value. As we make a session more complex, it should also become longer (more persistent). Also, as we increase session persistency, we should be able to increase session complexity, as shown in Figure 19.1.

We are trying to ensure that session delivery value increases faster than session delivery cost in order to deliver session delivery margin. Over the longer term (months/years), we can track how user quality expectations increase. As with cellular handsets, this is primarily driven by hardware evolution. As computer monitor displays improve, as refresh rates on LCDs get faster, as resolution and contrast ratio improve, and as display drivers become capable of handling 24-bit and 32-bit color depth, we demand more from our applications.

We don't always realize the quality that may be available to us. For example, we may have a browser with default settings, and we may never discover that these settings are changeable (arguably a failure of the browser server software to realize user value).

The browser reports the display characteristics, whether preset, set by the user, or set by the user's device. This information is recorded in log files available to the Internet service provider or other interested parties, and it can be analyzed to show how, for example, resolution settings are changing over time.

A present example would be the shift of users from 800 × 600 pixel resolution to 1280 × 1024 pixel resolution and 1152 × 864 pixel resolution.
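Analyzing such logs comes down to tallying display settings by period. The sketch below assumes each log entry has already been reduced to a (year, resolution) pair; that record format and the `resolution_shares` helper are hypothetical, and the sample records are illustrative only, not measured data.

```python
from collections import Counter, defaultdict

def resolution_shares(records):
    """Return {year: {resolution: share}} from (year, resolution) pairs.

    `records` is a hypothetical, pre-parsed view of log entries; how the
    display setting is captured in the first place is not modelled here.
    """
    counts = defaultdict(Counter)
    for year, resolution in records:
        counts[year][resolution] += 1
    shares = {}
    for year, counter in counts.items():
        total = sum(counter.values())
        shares[year] = {res: n / total for res, n in counter.items()}
    return shares

# Illustrative records only; not measured data.
sample = [
    (2001, "800x600"), (2001, "800x600"), (2001, "1024x768"),
    (2003, "800x600"), (2003, "1152x864"), (2003, "1280x1024"), (2003, "1280x1024"),
]
for year, dist in sorted(resolution_shares(sample).items()):
    print(year, {res: round(s, 2) for res, s in sorted(dist.items())})
```

Tracking these shares period by period is what reveals a migration such as the one from 800 × 600 toward 1152 × 864 and 1280 × 1024.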

Figure 19.1 Session persistency and complexity.

Resolving Multiple Standards

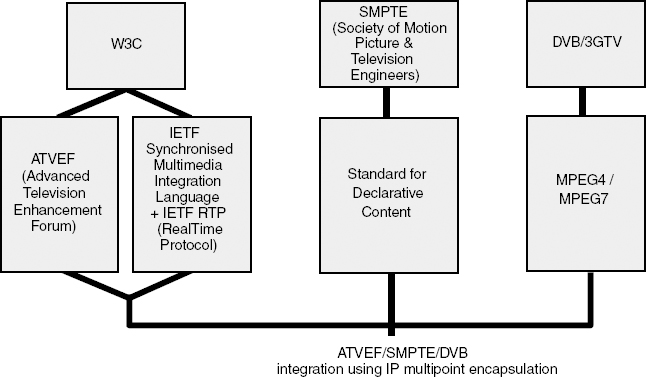

As the Web moves toward being a rich media experience, it moves closer to television as an entertainment and information medium. This poses some challenges as to who should make the standards needed to provide interoperability and content transparency (the ability to deliver content to different devices without destroying content value). Figure 19.2 shows the different standards-making groups presently involved. They are as follows:

- The W3C (World Wide Web Consortium) has workgroups focusing on presentation layer standards (HTML).

- The IETF has workgroups presently trying to standardize the delivery protocols needed at the session layer, transport layer, and network layer to preserve the value of Web-based complex content as the content is moved into and through a complex network, including control of the real-time components of the rich media mix.

- The ATVEF (Advanced Television Enhancement Forum) is working on a standard for HTML-based enhanced television (XHTML).

- The SMPTE (Society of Motion Picture and Television Engineers) is working on a standard for declarative content.

- The digital TV industry, predominantly in Europe and Asia, is working on future digital TV standards including MPEG-4/MPEG-7/MPEG-21 evolution.

Ideally, the best work of each of these standards groups would be combined in a common standard. This may not happen, but it is well worth reviewing some of the common trends emerging.

Figure 19.2 Multiple standards.

Working in an Interactive Medium

In common with TV, the Web has been to date largely a one-way delivery medium.

The Web, however, provides interactivity and an almost infinite choice of download material. Increasingly, the Web contains subscriber-generated content (an almost infinite choice of content of almost infinitely variable quality). This is due to the availability of low-cost hardware—Web cams and low-cost recording devices and the software associated with that hardware.

As mentioned, TV has traditionally been a one-way medium. Journalists produce content, which is then delivered to us. Increasingly, though, television is becoming a two-way medium based on subscriber-generated content or subscriber-generated entertainment. For example:

- Talk shows

- Phone-ins

- Fly-on-the-wall documentaries (or “reality shows”)

- Game shows, where we vote for the winners and losers

- National IQ competitions

Both the Web and traditional TV can provide real-time or virtual real-time coverage of events—elections, sports fixtures, game shows, or competitions. These events have trigger moments when the subscribers are asked to vote. Trigger moments can generate a huge demand for instantaneous uplink bandwidth.

We may often charge subscribers a premium rate for the right to vote, contribute, or comment. Uplink bandwidth is becoming a principal source of income. Unfortunately, we have designed our networks (Web delivery and TV networks) to be asymmetric in favor of the downlink, whereas our value is increasingly generated in the uplink direction.

Delivering Quality of Service on the Uplink

This presents us with a major uplink Quality of Service issue. Broadcast triggers generate large numbers of uplink requests. We all want to be first to vote or bid or buy (in an online auction, for example). We all feel we have the right to be first to vote or bid or buy, but it may take several hours to deliver our vote (or our bid or buy request) because of the lack of instantaneous uplink bandwidth. The only satisfactory resolution to this problem is to dimension our uplink bandwidth to accommodate these peak loading points. This will, however, result in gross overprovisioning for the vast majority of the time (and add to uplink bandwidth cost).
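The trade-off can be made concrete with some simple arithmetic. The sketch below assumes a burst of identical uplink requests arriving at one trigger moment and a fixed uplink capacity; the function names and all figures (1 million votes of 1 kbit, a 100 kbps uplink, a one-minute target) are hypothetical, chosen only to show the shape of the problem.

```python
def drain_time_s(requests, bits_per_request, uplink_bps):
    """Seconds needed to deliver a burst of requests over a fixed uplink."""
    return requests * bits_per_request / uplink_bps

def capacity_for_deadline_bps(requests, bits_per_request, deadline_s):
    """Uplink capacity needed to deliver the whole burst within deadline_s."""
    return requests * bits_per_request / deadline_s

def mean_utilization(avg_load_bps, provisioned_bps):
    """Fraction of provisioned capacity used under average (non-trigger) load."""
    return avg_load_bps / provisioned_bps

# Hypothetical trigger moment: 1 million votes of 1 kbit each.
votes, vote_bits = 1_000_000, 1_000
print(drain_time_s(votes, vote_bits, uplink_bps=100_000))  # 10000.0 s, about 2.8 hours
peak = capacity_for_deadline_bps(votes, vote_bits, deadline_s=60)
print(peak)                              # capacity needed to clear the burst in one minute
print(mean_utilization(100_000, peak))   # utilization if the average load is 100 kbps
```

With these assumed numbers, a link sized for the average load takes hours to clear the burst, while a link sized to clear it in a minute runs at well under 1 percent utilization the rest of the time: the overprovisioning problem in miniature.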

We can ease the uplink problem a little by providing the illusion of interactivity. In Europe and Asia, Teletext is an integral part of the TV experience. Teletext involves sending additional program information (information about programs and additional information for programs) in the vertical blanking interval of the broadcast signal, simultaneously with the mainstream programming. An example would be local weather. When watching the weather broadcast, viewers are told they can go to Teletext to look up local weather. This information has been sent in advance and is stored in the receiver; it is then updated on a rolling basis.

Viewers can also look at information such as flight availability or flight status (incoming flight arrival times). This experience provides the illusion of interaction without the need for any uplink bandwidth. It is only when they choose to act on the information (book a flight, for example), that they need an uplink—typically a telephone link to the service provider.
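The illusion of interaction rests on a broadcast carousel: pages are transmitted in a repeating cycle and the receiver keeps the ones it has seen, so a page request is answered locally without any uplink. The sketch below models this with a hypothetical `Receiver` class and `run_carousel` helper; the page numbers and contents are illustrative, and real Teletext timing (pages per field, page memory size) is not modelled.

```python
class Receiver:
    """Hypothetical receiver that caches pages from a broadcast carousel."""

    def __init__(self):
        self.cache = {}

    def on_broadcast(self, page_number, content):
        # Each transmission overwrites the stored copy, so the cache
        # is refreshed on a rolling basis as the carousel cycles.
        self.cache[page_number] = content

    def request(self, page_number):
        # Served from local memory: no uplink (return channel) is used.
        return self.cache.get(page_number)


def run_carousel(receiver, pages, cycles):
    """Broadcast every page in `pages` (a dict) `cycles` times, in order."""
    for _ in range(cycles):
        for number, content in pages.items():
            receiver.on_broadcast(number, content)


rx = Receiver()
run_carousel(rx, {101: "News index", 401: "Local weather: showers",
                  430: "Flight arrivals"}, cycles=1)
print(rx.request(401))   # answered from the local cache
print(rx.request(999))   # None: page not (yet) broadcast
# An updated weather page arrives on a later cycle and replaces the old one.
run_carousel(rx, {401: "Local weather: sunny"}, cycles=1)
print(rx.request(401))
```

Only acting on the information (booking the flight, say) needs a return channel; everything above happens on the downlink.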

The ATVEF Web TV Standard

The ATVEF is working on a standard very similar to Teletext intended for Web TV. Web TV receivers are required to have a kilobyte of memory available for session cookies (storage of short-lived, session-long attributes needed to support an interactive session). Receivers also need to be able to support 1 Mbyte of cached simultaneous content. The ATVEF is also trying to address the problem of large numbers of uplink requests responding to broadcast triggers. The only solution (assuming the service provider does not want to overprovision uplink access capacity) is to provide local buffering and a fair conditional access policy (priority rights).
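One way to combine local buffering with a fair access policy, sketched here as an assumption rather than as the ATVEF mechanism, is for each receiver to hold its response and release it after a random delay drawn uniformly from a spreading window. Every receiver then has the same chance of being early, and the network sees a smoothed arrival rate. The helper names, the 100,000 receivers, and the 5-minute window are hypothetical.

```python
import random
from collections import Counter

def peak_rate(send_times_s):
    """Largest number of requests falling in any one-second bin."""
    bins = Counter(int(t) for t in send_times_s)
    return max(bins.values())

def spread_responses(n_receivers, window_s, rng):
    """Each receiver buffers its response, then sends after a uniform random delay."""
    return [rng.uniform(0, window_s) for _ in range(n_receivers)]

rng = random.Random(42)            # fixed seed so the run is repeatable
n = 100_000                        # hypothetical number of responding receivers
unspread = [0.0] * n               # everyone responds the instant the trigger fires
spread = spread_responses(n, window_s=300, rng=rng)  # hypothetical 5-minute window

print(peak_rate(unspread))  # all n requests land in the first second
print(peak_rate(spread))    # roughly n / 300 per second, plus random fluctuation
```

The cost of this approach is latency for the individual viewer, which is why a priority (conditional access) policy may still be needed on top of the random spreading.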

Topics addressed by the ATVEF include how to place a Web page on TV, how to place TV on a Web page, and how to realize value from a complex resource stream. A complex resource stream is a stream of content that can capture subscriber spending power, which means the content has spending triggers—the ability to respond to an advertisement by making an instant purchase, respond to a charity appeal by making an instant donation, or respond to a request to vote by making a (premium charge) telephone call. The ATVEF has a broadcast content structure that consists of services, events, components, and fragments:

Service. A concatenation of programs from a service provider (analogous to a TV channel)

Event. A single TV program

Component. Represents the constituent parts of an event—an embedded Web page, the subtitles, the voice over, the video stream

Fragment. A subpart of a component—a video clip, for example
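The four-level broadcast content structure maps naturally onto nested data types. The sketch below uses Python dataclasses; the field names (`provider`, `title`, `kind`, `duration_s`) and the `components_of_kind` helper are hypothetical illustrations, not ATVEF-defined attributes.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Fragment:
    """A subpart of a component, such as a single video clip."""
    name: str
    duration_s: float

@dataclass
class Component:
    """A constituent part of an event: web page, subtitles, voice-over, video."""
    kind: str
    fragments: List[Fragment] = field(default_factory=list)

@dataclass
class Event:
    """A single TV program."""
    title: str
    components: List[Component] = field(default_factory=list)

@dataclass
class Service:
    """A concatenation of programs from one provider (like a TV channel)."""
    provider: str
    events: List[Event] = field(default_factory=list)

    def components_of_kind(self, kind):
        """Yield (event title, component) pairs of the given kind."""
        for event in self.events:
            for component in event.components:
                if component.kind == kind:
                    yield event.title, component

news = Event("Evening News", [
    Component("video", [Fragment("headlines", 120.0), Fragment("weather", 90.0)]),
    Component("web_page"),
    Component("subtitles"),
])
channel = Service("Example Broadcaster", [news])
for title, comp in channel.components_of_kind("video"):
    print(title, [f.name for f in comp.fragments])
```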

Integrating SMIL and RTP

In parallel, the W3C has developed the Synchronized Multimedia Integration Language (SMIL). SMIL describes the temporal behavior of a multimedia presentation, associates hyperlinks with media objects, describes the layout of the screen presentation, and expresses the timing relationships among media elements such as audio and video (animation management). Note how important end-to-end delay and delay variability become when managing the delivery of this complex content, particularly when the content is the basis for a complex resource stream whose sole purpose is to trigger a real-time uplink response. In effect, we are describing a conversational rich media exchange.
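SMIL expresses timing mainly through two containers: `<seq>`, whose children play one after another, and `<par>`, whose children start together. The sketch below computes begin and end times for a nested presentation under a deliberately simplified model: each media element has a fixed duration, and a `par` ends when its longest child ends (the effect of SMIL's default `endsync` of "last" when durations are fixed). The `Media`, `Seq`, and `Par` classes are hypothetical and are not a SMIL library.

```python
from dataclasses import dataclass
from typing import List, Union

@dataclass
class Media:
    name: str
    dur: float  # seconds

@dataclass
class Seq:
    children: List["Node"]

@dataclass
class Par:
    children: List["Node"]

Node = Union[Media, Seq, Par]

def schedule(node, begin=0.0, out=None):
    """Return (end_time, [(name, begin, end), ...]) for a timing tree."""
    if out is None:
        out = []
    if isinstance(node, Media):
        end = begin + node.dur
        out.append((node.name, begin, end))
        return end, out
    if isinstance(node, Seq):
        t = begin
        for child in node.children:
            t, _ = schedule(child, t, out)   # next child starts when this one ends
        return t, out
    # Par: every child starts at `begin`; the container ends with its longest child.
    ends = [schedule(child, begin, out)[0] for child in node.children]
    return max(ends, default=begin), out

presentation = Seq([
    Media("title_card", 5.0),
    Par([Media("video", 30.0), Media("audio", 30.0), Media("captions", 28.0)]),
    Media("web_link_prompt", 10.0),
])
end, timeline = schedule(presentation)
for name, b, e in timeline:
    print(f"{name:16s} {b:6.1f} {e:6.1f}")
print("total", end)
```

If the audio and video in the `par` arrive with different delays, this schedule can only be honored by buffering, which is why end-to-end delay variability matters so much for this kind of content.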

The intention is to integrate SMIL with the IETF Real-time Transport Protocol (RTP). RTP does not choose routes through the network; instead it carries sequence numbers and timestamps with each media packet so that receivers can detect loss, restore timing, and size their playout buffers, while its companion control protocol (RTCP) reports loss and delay variability back to the sender. Together these mechanisms help preserve end-to-end performance (minimizing delay and delay variability) and hence real-time content value, potentially for a number of per-user streams delivered to multiple users. This IETF work underpins what is described as IP TV.
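The delay-variability side of this can be illustrated with the interarrival jitter estimate that RTP receivers report back through RTCP (RFC 3550, section 6.4.1). For consecutive packets, D = (R_j - R_i) - (S_j - S_i), where S is the RTP timestamp and R the arrival time in the same clock units, and the running estimate is updated as J = J + (|D| - J)/16. The arrival times below are invented for illustration.

```python
def interarrival_jitter(packets):
    """Running jitter estimate in the style of RFC 3550, section 6.4.1.

    `packets` is a list of (rtp_timestamp, arrival_time) pairs, both
    expressed in the same clock units (e.g. 90 kHz ticks for video).
    """
    jitter = 0.0
    for (s_prev, r_prev), (s_cur, r_cur) in zip(packets, packets[1:]):
        # Difference in relative transit time between consecutive packets.
        d = (r_cur - r_prev) - (s_cur - s_prev)
        jitter += (abs(d) - jitter) / 16.0
    return jitter

# Packets sent every 3000 ticks (33.3 ms at 90 kHz); arrival times are illustrative.
steady = [(i * 3000, 10_000 + i * 3000) for i in range(50)]
wobbly = [(i * 3000, 10_000 + i * 3000 + (450 if i % 2 else 0)) for i in range(50)]
print(interarrival_jitter(steady))   # 0.0: constant transit time
print(interarrival_jitter(wobbly))   # climbs toward 450 ticks (5 ms)
```

The 1/16 gain smooths the estimate so that a single late packet does not swing the reported value, which is what a sender needs when deciding whether the path can sustain a real-time stream.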

The Implications for Cellular Network Service

So perhaps we should now consider what this means for cellular network service provision. The W3C is working on standards for Web TV. The IETF is working on standards for IP TV. Hopefully, these standards will work together over time. Web TV and IP TV are both based on the idea of multiple per-user channel streams, including simultaneous wideband and narrowband channel streams. This is analogous to SMS-coded data channels in IMT2000. We know that the IMT2000 radio physical layer is capable of supporting up to six simultaneous channel streams per user. We also know that the hardware (handset hardware) is, or at least will be, capable of supporting potentially up to 30 frames per second high color depth high-resolution video (for the moment we are rather limited by our portable processor power budget).

Media delivery networks depend on server architectures that are capable of undertaking massive parallel processing—the ability to support lots of users simultaneously. Note that each user could be downloading multiple channel streams, which means each user could be downloading different content in different ways. This is reasonably complex. It is even more complex when you consider that each user may then want to send back information to the server. This information will be equally complex and equally diverse.

To put this into a broadcasting context, in the United Kingdom, 8 million Londoners are served by one TV broadcast transmitter (Crystal Palace). This one transmitter produces hundreds of kilowatts of downlink power to provide coverage. In the new media delivery network model, 8 million subscribers now want to send information back to that transmitter. The transmitter has no control over what or when those subscribers want to send.

The network behind the transmitter has to be capable of capturing that complex content (produced by 8 million subscribers), archiving that content, and realizing revenue from that content by redelivering it back to the same subscribers and their friends. This is now an uplink-biased asymmetric relationship based on uplink-generated value, realized by archiving and redelivering that content on the downlink.

Alternatively, it may be that subscribers might not want to use the network to store content and might prefer to share content directly with other subscribers (the peer-to-peer network model).

Either way, the model only works if the content is reasonably standardized, and this only happens if the hardware (and the software needed to manage the hardware) is reasonably standardized. Note this is harder to achieve if the hardware is flexible (reconfigurable), as this makes the software more complex, which makes consistent interoperability harder to achieve.

Television hardware is reasonably consistent, though it is less consistent than it used to be. For instance, we can now have 16:9 wide-screen TVs or 4:3 aspect ratio TVs, which complicates the content delivery process. But even taking this into account, cellular phones are far more diverse in their hardware footprint: display size, aspect ratio, color resolution, processor bandwidth, and so on.

Device-Aware Content

This has resulted in the need to make content device-aware, using transcoders and filters to strip down the content. As we have argued, it is actually better to make the device content-aware and to make sure that user devices are reasonably consistent in the way in which they deliver content to the network. In other words, devices need to be able to reconfigure themselves to receive content from the network and need to be adaptive in the way in which they deliver content to the network.

This is made much easier if the devices share a common hardware and software platform. Such a platform emerges either through the standards process (unlikely) or through de facto vendor dominance of the market. Note this might not be a handset vendor but will more likely be a component vendor. Intel's dominance of the computer hardware space is an example of de facto vendor-driven device commonality. Similarly, it is this hardware commonality that has helped Microsoft establish a de facto vendor-driven software/operating system standard.

Given the dominance of the DSP in present cellular phones and the likely dominance of the DSP in future cellular phones, it is most likely to be a DSP vendor who imposes this necessary hardware commonality.

The Future of Digital Audio and Video Broadcasting

In the meantime, we need to consider the future of digital TV (3G TV). Specifically, we need to consider the future of Digital Audio Broadcasting and Digital Video Broadcasting. Digital Audio Broadcasting is based on a 1.536 MHz frequency allocation at 221.296 to 222.832 MHz. This is shown in Table 19.2.

The physical layer uses differential QPSK modulation followed by Orthogonal Frequency-Division Multiplexing (OFDM), in which a frequency-to-time-domain transform (an inverse FFT) spreads the bit rate over 1536, 768, 384, or 192 frequency subcarriers. The more subcarriers used, the longer the modulation interval (symbol duration) and therefore the more robust the channel will be to intersymbol interference.
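Because the occupied bandwidth is fixed at about 1.536 MHz, the number of subcarriers sets the carrier spacing, and the useful symbol duration is the reciprocal of that spacing. A minimal sketch of that arithmetic follows; the mapping of carrier counts to DAB transmission modes I to IV is taken from the DAB specification (ETSI EN 300 401), and guard intervals are left out for simplicity.

```python
DAB_BANDWIDTH_HZ = 1.536e6

# Carriers per transmission mode (ETSI EN 300 401).
DAB_MODES = {"I": 1536, "IV": 768, "II": 384, "III": 192}

def ofdm_params(bandwidth_hz, carriers):
    """Carrier spacing (Hz) and useful symbol duration (s) for a fixed bandwidth."""
    spacing = bandwidth_hz / carriers
    return spacing, 1.0 / spacing

for mode, n in DAB_MODES.items():
    spacing, t_useful = ofdm_params(DAB_BANDWIDTH_HZ, n)
    print(f"Mode {mode:>3}: {n:5d} carriers, {spacing / 1e3:4.0f} kHz spacing, "
          f"{t_useful * 1e6:6.0f} us useful symbol")
```

Mode I (1536 carriers) gives 1 kHz spacing and a 1 ms useful symbol, eight times longer than mode III, which is why the many-carrier modes tolerate the longest echoes.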

However, as we increase the number of frequency subcarriers, we increase the complexity of the transform and therefore the processor overhead. This does not matter when a small number of mains-powered transmitters provide only a downlink. It would, however, be computationally expensive and therefore power hungry to turn today's receive-only devices into transceivers. Apart from the processor overhead, the high peak-to-average power ratios implicit in OFDM would make power-efficient small handsets difficult to implement. This is why OFDM is not used in any 3G cellular air interface.
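The peak-to-average problem can be seen numerically. The sketch below builds one OFDM symbol from random QPSK subcarriers with a plain inverse DFT (pure Python, so slow but dependency-free) and compares its peak-to-average power ratio (PAPR) with that of single-carrier QPSK, whose constant envelope gives 0 dB. The carrier count of 64 and the random seed are arbitrary choices, and the exact OFDM figure varies from symbol to symbol.

```python
import cmath
import math
import random

def idft(symbols):
    """Inverse DFT: map one complex value per subcarrier to time-domain samples."""
    n = len(symbols)
    return [
        sum(x * cmath.exp(2j * math.pi * k * t / n) for k, x in enumerate(symbols)) / n
        for t in range(n)
    ]

def papr_db(samples):
    """Peak-to-average power ratio of a block of complex samples, in dB."""
    powers = [abs(s) ** 2 for s in samples]
    return 10 * math.log10(max(powers) / (sum(powers) / len(powers)))

rng = random.Random(1)
QPSK = [complex(i, q) / math.sqrt(2) for i in (-1, 1) for q in (-1, 1)]

n_carriers = 64
ofdm_symbol = idft([rng.choice(QPSK) for _ in range(n_carriers)])
single_carrier = [rng.choice(QPSK) for _ in range(n_carriers)]

print(f"single-carrier QPSK PAPR: {papr_db(single_carrier):.2f} dB")
print(f"OFDM ({n_carriers} carriers) PAPR: {papr_db(ofdm_symbol):.2f} dB")
print(f"upper bound 10*log10(N): {10 * math.log10(n_carriers):.2f} dB")
```

A transmitter must stay linear up to those occasional peaks, so its power amplifier spends most of its time backed off from its efficient operating point, which is the handset power-efficiency penalty described above.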

Table 19.2 European Digital Audio Broadcasting Modulation Options

Table 19.3 TV Bands Across Europe

OFDM does deliver a very robust physical channel. It trades processor overhead against channel quality and is therefore a strong contender for 4G cellular air interfaces (by which time processor overhead and linearity will be less of an issue). The DAB channel delivers a gross transmission rate of 2.304 Mbps and a net transmission rate of 1.2 Mbps that supports up to 64 audio programs and data services or up to 6 × 192 kbps stereo programs with a 24 kbps data service delivered to mobile users traveling at up to 130 kph.
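The quoted figures hang together, as a little arithmetic shows: six 192 kbps stereo programs plus a 24 kbps data service need 1176 kbps, just inside the roughly 1.2 Mbps net rate, and the ratio of net to gross rate is consistent with an average code rate of about one half. The `fits_in_ensemble` helper is hypothetical; real DAB multiplexes allocate capacity in capacity units at several protection levels, which this sketch ignores.

```python
GROSS_BPS = 2_304_000   # DAB gross transmission rate (from the text)
NET_BPS = 1_200_000     # approximate net rate quoted in the text

def fits_in_ensemble(service_rates_bps, net_capacity_bps=NET_BPS):
    """Return (fits, spare_bps) for a proposed set of service bit rates."""
    used = sum(service_rates_bps)
    return used <= net_capacity_bps, net_capacity_bps - used

services = [192_000] * 6 + [24_000]   # six stereo programs plus one data service
ok, spare = fits_in_ensemble(services)
print(ok, spare)                      # True 24000
print(round(NET_BPS / GROSS_BPS, 2))  # 0.52: roughly half the gross rate is protection
```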

Digital TV uses a similar multiplex to deliver good bandwidth utilization and good radio performance, including the ability to support mobility users. Four TV bands were allocated across Europe by CEPT in 1961 for terrestrial TV, as shown in Table 19.3.

In the 1990s proposals were made to refarm bands 4 and 5, dividing 392 MHz of channel bandwidth into 8 MHz or 2 MHz channels. This was to be implemented in parallel with a mandated shutdown of all analog transmissions by 2006 in some countries (for example, Finland and Sweden) and by 2010 in other countries (for example, the United Kingdom). In addition, some hardware commonality was proposed between digital terrestrial TV (DVB-T), satellite (DVB-S), and cable (DVB-C), including shared clock and carrier recovery techniques.

Partly because of its addiction to football and other related sports (Sky TV, the dominant DVB-S provider, bought out most of the live television rights to most of the big sporting fixtures), the United Kingdom now has one of the highest levels of digital TV adoption in the world (over 40 percent of all households by 2002).

DVB-S, DVB-C, and DVB-T use different modulation techniques. DVB-S uses single-carrier QPSK, DVB-C uses single-carrier 64-level QAM, and DVB-T uses QPSK or QAM combined with OFDM. This reflects the differences in the propagation channel. The satellite downlink is line of sight and does not suffer from multipath, and the cable link by definition does not suffer from multipath. Terrestrial transmission suffers from multipath and also needs to support mobile users, which would be difficult with satellite (budgeted on the basis of a line-of-sight link) and impossible for cable.

In DVB-T, transmitter output power assumes that many paths are not line of sight. For DVB-S, downlink power is limited by size and weight constraints on the satellite. Terrestrial transmitters do not have this limitation (though they are ultimately limited by health and safety considerations). As in DAB, the carrier spacing of the OFDM system in DVB-T is inversely proportional to the symbol duration. The requirement for a long guard interval determines the number of carriers: a guard interval of ≈250 μs can be achieved with an OFDM system with a symbol time of ≈1 ms and hence a carrier spacing of ≈1 kHz, resulting in ≈8000 carriers in an 8-MHz-wide channel. The OFDM signal is generated using an inverse Fast Fourier Transform (FFT), and the receiver uses an FFT in the demodulation process. The FFT size is 2^N, where N = 11 or N = 13 are the values used for DVB-T, giving an FFT size of 2048 or 8192, which determines the maximum number of carriers. In practice, a number of carriers at the bottom and top ends of the OFDM spectrum are not used, in order to allow for separation between channels (guard band).
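The 2k and 8k figures can be derived from the DVB-T specification (ETSI EN 300 744). In an 8 MHz channel the elementary sampling period is 7/64 μs, so the useful symbol duration is the FFT size times that period, the carrier spacing is its reciprocal, and the guard interval is a fraction (1/4, 1/8, 1/16, or 1/32) of the useful duration. Of the 2048 or 8192 FFT bins, only 1705 or 6817 carriers are active; the unused edge bins form the guard band. The `dvbt_mode` helper is a hypothetical sketch of this arithmetic.

```python
from fractions import Fraction

T_ELEMENTARY_US = Fraction(7, 64)                   # 8 MHz channel (EN 300 744)
MODES = {"2k": (2048, 1705), "8k": (8192, 6817)}   # FFT size, active carriers
GUARD_FRACTIONS = [Fraction(1, 4), Fraction(1, 8), Fraction(1, 16), Fraction(1, 32)]

def dvbt_mode(fft_size, active_carriers):
    t_useful_us = fft_size * T_ELEMENTARY_US          # useful symbol duration
    spacing_hz = 1e6 / float(t_useful_us)             # carrier spacing = 1 / Tu
    occupied_mhz = active_carriers * spacing_hz / 1e6 # approximate occupied bandwidth
    guards_us = [float(g * t_useful_us) for g in GUARD_FRACTIONS]
    return float(t_useful_us), spacing_hz, occupied_mhz, guards_us

for name, (fft, active) in MODES.items():
    tu, df, bw, guards = dvbt_mode(fft, active)
    print(f"{name}: Tu={tu:.0f} us, spacing={df:.1f} Hz, ~{bw:.2f} MHz occupied, "
          f"guard options (us): {guards}")
```

The 8k mode works out to an 896 μs useful symbol, about 1.1 kHz spacing, and a longest guard interval of 224 μs, matching the approximate ≈1 ms, ≈1 kHz, and ≈250 μs figures in the text.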

Systems using an FFT size of 2048 are referred to as 2k OFDM, while systems using an FFT size of 8192 are referred to as 8k OFDM. The more subcarriers used, the longer the symbol and hence the longer the guard interval that can be afforded; the longer the guard interval, the more resistant the link will be to multipath. Forward error correction coding and interleaving are applied across the subcarriers so that data carried on subcarriers in deep multipath fades can be recovered; hence the term COFDM: coded orthogonal frequency-division multiplexing.

Planning the Network

Although this may seem rather complicated, it makes network planning quite easy. In many cases, you can implement a single-frequency network using existing transmitter sites (assuming the 8k mode is used). More frequency planning is needed for a 2k implementation. The guard interval can be used to compensate both for multipath between a transmitter and a receiver and for the multipath created by multiple transmitters transmitting on the same frequency. Presently, Europe is a mixture of 8k and 2k networks, the choice depending on the coverage area needed, existing site locations, and channel allocation policy.
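The link between guard interval and single-frequency network planning is a propagation-delay calculation: a signal from a second transmitter on the same frequency behaves as a harmless echo only if its extra delay at the receiver stays within the guard interval, so the guard interval times the speed of light bounds the usable path-length difference. The sketch below uses the 1/4 guard intervals of the 8k mode (224 μs) and 2k mode (56 μs); treating the result as a transmitter-spacing limit is a simplification that ignores antenna patterns, terrain, and receiver channel estimation.

```python
C_M_PER_S = 299_792_458.0

def max_path_difference_km(guard_interval_s):
    """Largest echo path-length difference that still falls inside the guard interval."""
    return C_M_PER_S * guard_interval_s / 1e3

for label, tg in [("8k, 1/4 guard", 224e-6), ("2k, 1/4 guard", 56e-6)]:
    print(f"{label}: {max_path_difference_km(tg):.1f} km")
```

Roughly 67 km for 8k against roughly 17 km for 2k is why 8k suits single-frequency networks built on existing, widely spaced transmitter sites, while 2k networks need more frequency planning.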

We said that the modulation used in DVB-T is either QPSK or QAM. Actually, there are a number of modulation, code rate, and guard interval options. These are shown in Table 19.4.

Going from QPSK to 16-level QAM to 64-level QAM increases the bit rate but reduces the robustness of the channel. Remember, the link budget also has to assume that only some users have a line-of-sight path (satellite tends to win out in the bit rate stakes). This relationship is shown in Table 19.5. The link budget needs to be increased in a 2k network when higher-level modulation is used, by an amount that depends on the coding scheme. In (1) we have a heavily coded (rate 1/2: 2 bits out for every 1 bit in) 16 QAM channel. Reducing the coding overhead, in (2), but keeping the 16 QAM channel requires an extra 4 dB of link budget, though our user data throughput rate will have increased. (3) shows the effect of moving to 64-level QAM and using a 2/3 code rate (3 bits out for every 2 bits in). The user data rate increases, but an extra 4 dB of link budget is needed.
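The rate side of the trade-off follows from the DVB-T parameters: net bit rate = data carriers × bits per carrier × inner code rate × 188/204 (the Reed-Solomon outer code) ÷ total symbol duration. The 8k mode has 6048 data carriers per symbol and a 896 μs useful symbol; the 2k mode has 1512 and 224 μs, so both give the same rate for the same settings. The 1/4 guard interval below is an assumption, since the text does not state which guard interval the compared configurations used, and configuration (2) is omitted because its code rate is not given. `dvbt_net_bitrate` is a hypothetical helper.

```python
from fractions import Fraction

BITS_PER_CARRIER = {"QPSK": 2, "16QAM": 4, "64QAM": 6}
DATA_CARRIERS_8K = 6048
T_USEFUL_8K_S = 896e-6

def dvbt_net_bitrate(modulation, code_rate, guard_fraction,
                     data_carriers=DATA_CARRIERS_8K, t_useful_s=T_USEFUL_8K_S):
    """Net (transport stream) bit rate in bit/s for an 8 MHz DVB-T channel."""
    bits_per_symbol = data_carriers * BITS_PER_CARRIER[modulation]
    payload_bits = bits_per_symbol * code_rate * Fraction(188, 204)
    symbol_s = t_useful_s * (1 + guard_fraction)
    return float(payload_bits) / symbol_s

# Configuration (1): 16-QAM, rate 1/2; configuration (3): 64-QAM, rate 2/3.
# The guard fraction (1/4 here) is an assumption for illustration.
for label, mod, rate in [("(1)", "16QAM", Fraction(1, 2)), ("(3)", "64QAM", Fraction(2, 3))]:
    mbps = dvbt_net_bitrate(mod, rate, Fraction(1, 4)) / 1e6
    print(f"{label} {mod} rate {rate}: {mbps:.2f} Mbps")
```

Under these assumptions, the move from (1) to (3) roughly doubles the net rate (about 9.95 to 19.91 Mbps), which is what the extra link budget buys.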

Table 19.4 DVB-Modulation Options

Table 19.5 2K Carrier Comparison (NTL)

Because DVB-T needs to cope with moving objects in the signal path and with user mobility, additional coding and interleaving are needed, together with dynamic channel sounding and phase error estimation. This means the use of pilot carriers: scattered pilots, spread evenly in time and frequency across OFDM symbols for channel sounding, and continual pilots, placed at fixed carrier positions in every OFDM symbol for synchronization and phase error estimation.

The idea of the adaptive modulation and adaptive coding schemes is to provide differentiated Quality of Service with five service classes:

LDTV. Low definition (video quality)—multiple channel streams

SDTV. Standard definition—multiple channel streams

EDTV. Enhanced definition—multiple channel streams

HDTV. High definition—single channel stream 1080 lines; 1920 pixels per line

MMDB. Multimedia data broadcasting (including software distribution)

Conditional access billing will then be based on the service class requested or the service class delivered (or both). We use the future tense because, presently, the link budget (determined by the existing transmitter density and present maximum power limits) prevents the use of the higher-level modulation and coding schemes. This has, to date, prevented the deployment of EDTV or HDTV: the cost of quality bandwidth. However, the building blocks exist for quality-based billing based on objective quality metrics (pricing by the pixel).

In the transport layer, digital TV is delivered in MPEG-2 data containers—fixed-length 188-byte containers. ATM is then used to carry the MPEG-2 channel streams between transmitters. The MPEG-2 standard allows for individual packet streams to be captured and decoded in order to capture program specific information. This forms the basis for an enhanced program guide, which can include network information, context and service description, event information, and an associated table showing links with other content streams—a content identification procedure. We can therefore see a measure of commonality developing both at the physical layer and at the application layer between 3G cellular and 3G TV. Physical layer convergence is unlikely to happen until next-generation (4G) cellular, since the COFDM multiplex is presently too processor-intensive and requires too much linearity to be economically implemented in portable handheld devices.
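Capturing individual packet streams works because every 188-byte transport stream packet carries a 4-byte header with a sync byte (0x47) and a 13-bit packet identifier (PID); PID 0 carries the Program Association Table, the entry point to the program-specific information. A minimal parser for the header fields defined in ISO/IEC 13818-1 might look like this (adaptation fields and payload parsing are not handled, and the helper names are hypothetical):

```python
from typing import NamedTuple

TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47

class TSHeader(NamedTuple):
    transport_error: bool
    payload_unit_start: bool
    pid: int
    scrambling: int
    adaptation_field_control: int
    continuity_counter: int

def parse_ts_header(packet: bytes) -> TSHeader:
    """Decode the 4-byte header of one MPEG-2 transport stream packet."""
    if len(packet) != TS_PACKET_SIZE or packet[0] != SYNC_BYTE:
        raise ValueError("not an aligned 188-byte TS packet")
    b1, b2, b3 = packet[1], packet[2], packet[3]
    return TSHeader(
        transport_error=bool(b1 & 0x80),
        payload_unit_start=bool(b1 & 0x40),
        pid=((b1 & 0x1F) << 8) | b2,          # 13-bit packet identifier
        scrambling=(b3 >> 6) & 0x03,
        adaptation_field_control=(b3 >> 4) & 0x03,
        continuity_counter=b3 & 0x0F,
    )

def packets_for_pid(stream: bytes, pid: int):
    """Yield the packets belonging to one PID from a byte-aligned transport stream."""
    for offset in range(0, len(stream) - TS_PACKET_SIZE + 1, TS_PACKET_SIZE):
        packet = stream[offset:offset + TS_PACKET_SIZE]
        if parse_ts_header(packet).pid == pid:
            yield packet

# A synthetic PAT packet (PID 0) followed by a packet on PID 0x100.
pat = bytes([0x47, 0x40, 0x00, 0x10]) + bytes(184)
video = bytes([0x47, 0x01, 0x00, 0x13]) + bytes(184)
print(parse_ts_header(pat))
print(len(list(packets_for_pid(pat + video, 0))))  # 1
```

Filtering on PID 0, and then on the PIDs the PAT points to, is how a receiver assembles the program guide tables without decoding every stream in the multiplex.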

There is, however, convergence taking place, or likely to take place, at the application layer and the transport layer. At the application layer, digital TV is establishing the foundations for quality-based billing, which will be potentially extremely useful for cellular service providers. At the transport layer, both 3G cellular and 3G TV use ATM to multiplex multiple channel streams for delivery to multiple users. In 3G cellular, the Iu interface uses 155 Mbps ATM to move complex content streams into and through the network, between the RNC and the core network; in 3G TV, 155 Mbps ATM links carry content between transmitters.
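One practical detail of carrying 188-byte MPEG-2 packets over ATM is how they fit into 53-byte cells with 48-byte payloads. The sketch below assumes AAL5 adaptation with its 8-byte trailer, a common choice but an assumption here, since the text only says ATM. With two transport packets per AAL5 frame, the payload (2 × 188 + 8 = 384 bytes) fills exactly eight cells with no padding.

```python
import math

TS_BYTES = 188
CELL_BYTES, CELL_PAYLOAD = 53, 48
AAL5_TRAILER = 8

def aal5_cells(ts_packets_per_pdu):
    """ATM cells needed for one AAL5 frame carrying N transport stream packets."""
    pdu = ts_packets_per_pdu * TS_BYTES + AAL5_TRAILER
    return math.ceil(pdu / CELL_PAYLOAD)   # padding fills the last cell

def efficiency(ts_packets_per_pdu):
    """Fraction of line bytes that are MPEG-2 transport stream bytes."""
    return ts_packets_per_pdu * TS_BYTES / (aal5_cells(ts_packets_per_pdu) * CELL_BYTES)

for n in (1, 2, 3):
    print(n, aal5_cells(n), round(efficiency(n), 3))
```

Packing one transport packet per frame wastes most of a fifth cell on padding (about 71 percent efficiency), whereas two per frame reaches about 89 percent, the ceiling set by the 5-byte cell header.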

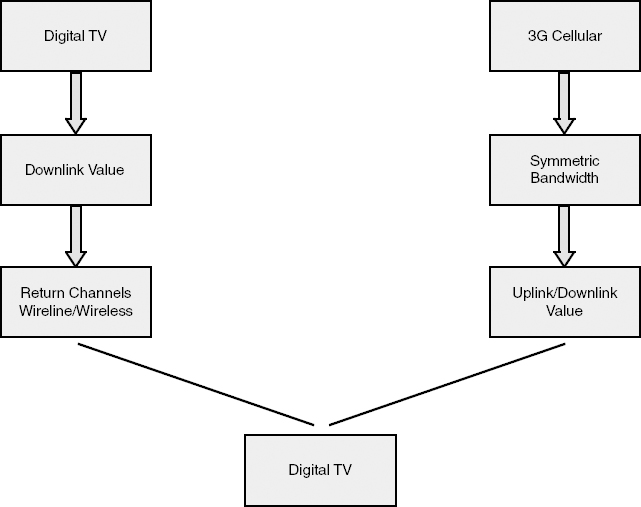

3G cellular and 3G TV both use MPEG standards to manage the capture and processing of complex content (the rich media mix). 3G TV has lots of downlink bandwidth (392 MHz) and lots of downlink RF power (hundreds of kilowatts). 3G cellular has plenty of bandwidth but not a lot of power (10 or 20 W on the downlink and 250 mW on the uplink). 3G cellular does, however, have a lot of uplink bandwidth (60 MHz), which digital TV (3G TV) needs. This relationship is shown in Figure 19.3.

Digital TV needs uplink bandwidth because uplink bandwidth is the mechanism needed to capture future subscriber value. Digital TV needs a return channel, preferably a return channel that it owns or has control of (that does not need to share uplink revenue with other transport owners).

Many cellular operators already share tower space with TV transmission providers. Most of the dominant tower sites were set up by the TV broadcasting companies many years ago. Some companies, the BBC, for example, have sold off these towers for use by TV and cellular providers. There are already well-established common interests between the two industries. Content convergence will help to consolidate this common interest.

Figure 19.3 Downlink/uplink value.

In the United States, digital TV is experiencing slower adoption. U.S. digital TV standards are the responsibility of the Advanced Television Systems Committee (ATSC). The standard is very different from, and in competition with, the European and Asian DVB standard. The physical layer uses a modulation known as 8-VSB (vestigial sideband), rather like single-sideband AM, in a 6 MHz channel to deliver a gross channel rate of 19 Mbps. It is not designed to support mobile reception. The typical decoder power budget is 18 watts, so, as with DVB, including a digital TV receiver in a digital cellular handset is presently overambitious. It is difficult to see how the economics of digital TV using the ATSC standard will be sustained in the United States without access to the rest of the world's markets, which are presently deploying DVB-T, DVB-S, or DVB-C technologies.

From 2010 onward there would appear to be very clear technology advantages in deploying integrated 3G TV and 3G cellular networks and moving toward a common receiver hardware and software platform. European and Asian standards-making bodies (including the MPEG standards community) are beginning to work toward this goal. The proximity of the spectral allocations in the 700- and 800-MHz bands will provide a future rationale for 3G TV/3G cellular technology integration.

The Difference Between Web TV, IPTV, and Digital TV

Traditionally the Web has been described as a lean-forward experience, which means you are close to a monitor and keyboard, searching for a particular topic to research, using a search engine or going from Web site to Web site using hyperlinks. The time spent on any one Web site is typically quite short. TV is often described as a lean-back experience, which is more passive. You sit back and watch a program at a defined time, for a defined time. The program is broadcast on a predefined RF channel (DVB-T or DVB-S broadcast). Session length can typically be several hours.

IP TV gives you access to either Web-based media or traditional TV content. Expatriates often use IP TV to access favorite radio and TV programs from their home country that are not available locally. It provides access via the Internet to a variety of media from a variety of sources. However, these distinctions are becoming blurred over time.

The job of a Web designer is to increase the amount of time spent by visitors on a site. Web casting particular events at a particular time (a Madonna concert, for example) is becoming more popular. The mechanics of multicasting—delivering multiple packet streams to multiple users—are being refined.

In parallel, digital TV is becoming more interactive. Access to the Internet is available through cable TV or via a standard telephone connection, and TV programs have parallel Web sites providing background information on actors, how the program was made, and past and future plotlines.

Probably the most visible change will be in display technology. High-resolution widescreen LCD or plasma screens for home cinema require EDTV or HDTV resolution (and high-fidelity surround sound). Equally significant will be the evolution of storage technology (the availability of low-cost recordable/rewritable DVDs) and the further development of immersive experience products (interactive games). Note the intention is to use Multimedia Data Broadcasting (MMDB) for software distribution, which will include distribution of game infotainment products.

So it all depends on how attached we become to our set-top box and whether we want to take it with us when we go out of the house. We can already buy portable analog TVs (and some cell phones—for example, from Samsung—integrate an analog TV receiver chip). These products do not presently work very well. As digital TV receiver chip set costs reduce and as TV receiver chip set power budgets reduce, it will become increasingly logical to integrate digital TV into the handset—and it will work reasonably well because DVB-T has been designed to support mobility users.

Additionally, digital TV and Web TV can benefit from capturing content from digital cameras embedded into subscriber handsets—two-way TV (uplink added value).

We are unlikely to see any short-term commonality of the radio physical layer because of the processor overheads presently implicit in OFDM, including the requirements for transmit linearity. Use of OFDM in fourth-generation cellular would, however, seem to be sensible.

Co-operative Networks

A number of standards groups are presently involved in the definition of co-operative networks. Co-operative networks are networks that combine broadcast networks with 3G cellular or wireless LAN network technologies.

There are a number of European research projects also presently under way, including DRIVE (Dynamic Radio for IP Services in Vehicular Environments) and CIS-MUNDUS (Convergence of IP-Based Services for Mobile Users and Networks in DVB-T and UMTS Systems). The research work focuses on the use of a 3G cellular channel as the return channel with the downlink being provided by a mixture of DAB and DVB transmission, collectively known as DXB transmission.

Figure 19.5 Content re-purposing.

A European forum known as the IP Data Casting Forum has proposed that DVB and DAB be used to deliver IP data using data cast lobes—reengineering the broadcast transmitters to provide some directivity to support localization of deliverable content. Data-cast lobes are shown in Figure 19.4. As with cellular networks, this would also allow for a more intensive use of the UHF digital TV spectrum.

The wide range of display technologies involved (high-quality CRT, high-quality LCD, low-quality LCD), including a range of aspect ratios (16:9, 4:3, 1:1), requires a measure of re-purposing of content, as shown in Figure 19.5.
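The simplest form of re-purposing for aspect ratio is to scale the picture to fit the display and fill the remainder with bars (letterbox or pillarbox); cropping and more content-aware re-framing are alternatives. The `fit_and_pad` helper below is a hypothetical sketch of the fit-and-pad approach, and the display sizes are examples chosen for illustration.

```python
from fractions import Fraction

def fit_and_pad(src_w, src_h, dst_w, dst_h):
    """Scale a source picture to fit a display without cropping.

    Returns (scaled_w, scaled_h, pad_x, pad_y), where pad_x/pad_y are the
    total widths of the side bars (pillarbox) or top/bottom bars (letterbox).
    """
    scale = min(Fraction(dst_w, src_w), Fraction(dst_h, src_h))
    w, h = int(src_w * scale), int(src_h * scale)
    return w, h, dst_w - w, dst_h - h

# 16:9 source (1920 x 1080) on a 4:3 display and on a square (1:1) display.
print(fit_and_pad(1920, 1080, 640, 480))   # letterboxed: bars top and bottom
print(fit_and_pad(1920, 1080, 176, 176))   # small square handset screen
# 4:3 source on a 16:9 display.
print(fit_and_pad(640, 480, 1280, 720))    # pillarboxed: bars left and right
```

On the small square screen, nearly half of the display ends up as bars, one illustration of why naive re-purposing can erode delivered content quality.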

This is similar in concept to the transcoding used in the Wireless Application Protocol. Care will need to be taken to maintain consistency of delivered content quality. Co-operative networks will need to be consistently co-operative.

Summary

Over the next 10 years there are increasing opportunities to develop physical layer commonality between 3G TV and 3G or (more likely) 4G cellular networks. There are also commonalities in the way that traffic is handled and transported across digital TV and 3G cellular networks using ATM cell switching. In addition, digital TV is increasingly dependent on uplink-generated value, which is available, as one option, on the cellular uplink. Trigger moments in TV content can, however, create a need for large amounts of instantaneous uplink bandwidth, and care needs to be taken to ensure that adequate uplink bandwidth and uplink power are available to accommodate this.

Highly asynchronous and at times highly asymmetric loading on the uplink places particular demands on the design of network software and its future evolution—the subject of our next and final chapter.