In the previous chapter, we saw how Solr uses a schema to define how data is indexed. This chapter covers techniques for indexing data in Solr. There are many ways of sending data to Solr, for example, through a client API or by making a POST call to an update handler. We'll cover the following topics in this chapter:

- Inserting data into Solr using basic POST tools

- Using XML and JSON handlers

Solr provides an easy-to-use command-line tool for sending data in various formats to the Solr server. We can use either post.jar or post.sh to send data to the Solr server for indexing. In the default installation, both tools are located in %SOLR_HOME%/example/exampledocs.

Note

To see the commands for Solr 5.x, visit the Solr Wiki (https://wiki.apache.org/solr/).

We'll copy the two files (post.sh and post.jar) to the %SOLR_HOME%/bin folder. post.sh is a Unix shell script that wraps the cURL command to send data to the Solr server. For Windows users, Solr provides a standalone Java application packaged as a JAR file, which can be used in much the same way as post.sh.
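To make the cURL wrapping concrete, here is a minimal Python sketch of the kind of work post.sh hands off to cURL: one POST per file to the update URL, followed by a final commit. It assumes XML input and the default http://localhost:8983/solr/update URL, and it only builds the requests with urllib rather than sending them. The function name build_post_requests is hypothetical; this is not the script's actual code.

```python
from urllib.request import Request

# Assumed default update URL and content type; post.sh may be configured differently.
UPDATE_URL = "http://localhost:8983/solr/update"
XML_HEADERS = {"Content-Type": "application/xml"}


def build_post_requests(file_bodies, url=UPDATE_URL):
    """Build (but do not send) one POST per file plus a final commit.

    file_bodies maps a file name to the raw bytes of an XML update file.
    """
    reqs = [
        Request(url, data=body, headers=XML_HEADERS, method="POST")
        for body in file_bodies.values()
    ]
    # A final <commit/> makes the newly posted documents visible to searches.
    reqs.append(Request(url, data=b"<commit/>", headers=XML_HEADERS, method="POST"))
    return reqs


if __name__ == "__main__":
    files = {"song.xml": b"<add><doc><field name='id'>1</field></doc></add>"}
    for req in build_post_requests(files):
        # Passing req to urllib.request.urlopen would actually send it.
        print(req.get_method(), req.full_url, req.data)
```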

To run post.jar, open Command Prompt in Windows and enter the following:

%SOLR_HOME%/bin> java -jar post.jar -h

Running this command prints the following usage information:

SimplePostTool version 1.5
Usage: java [SystemProperties] -jar post.jar [-h|-] [<file|folder|url|arg> [<file|folder|url|arg>...]]

Supported System Properties and their defaults:
  -Ddata=files|web|args|stdin (default=files)
  -Dtype=<content-type> (default=application/xml)
  -Durl=<solr-update-url> (default=http://localhost:8983/solr/update)
  -Dauto=yes|no (default=no)
  -Drecursive=yes|no|<depth> (default=0)
  -Ddelay=<seconds> (default=0 for files, 10 for web)
  -Dfiletypes=<type>[,<type>,...] (default=xml,json,csv,pdf,doc,docx,ppt,pptx,xls,xlsx,odt,odp,ods,ott,otp,ots,rtf,htm,html,txt,log)
  -Dparams="<key>=<value>[&<key>=<value>...]" (values must be URL-encoded)
  -Dcommit=yes|no (default=yes)
  -Doptimize=yes|no (default=no)
  -Dout=yes|no (default=no)
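Each system property simply overrides one of the defaults listed in this help text. The following Python sketch is a toy model of that behavior, not SimplePostTool's implementation: it copies a subset of the documented defaults and overlays any -Dkey=value arguments. The names DEFAULTS and effective_settings are hypothetical.

```python
# A subset of the defaults copied from the post.jar -h output.
DEFAULTS = {
    "data": "files",
    "type": "application/xml",
    "url": "http://localhost:8983/solr/update",
    "auto": "no",
    "recursive": "0",
    "commit": "yes",
    "optimize": "no",
    "out": "no",
}


def effective_settings(args):
    """Overlay -Dkey=value arguments on the documented defaults."""
    settings = dict(DEFAULTS)
    for arg in args:
        if arg.startswith("-D"):
            key, _, value = arg[2:].partition("=")
            settings[key] = value
    return settings


if __name__ == "__main__":
    cli = ["-Durl=http://localhost:8983/solr/musicCatalog/update",
           "-Dtype=application/json"]
    print(effective_settings(cli))
```

Anything not overridden, such as commit=yes, keeps its default, which is why the tool commits after posting unless told otherwise.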

Let's test the post.jar utility by sending a JSON document to our Solr server. We'll feed some data into the musicCatalog core, which we created in Chapter 3, Indexing Data. The sample files used to feed this data are available in this chapter's code bundle.

To send this JSON data, we'll execute the following command from the examples directory that comes with this book. The -Durl system property points the tool at our musicCatalog core:

$ java -Durl="http://localhost:8983/solr/musicCatalog/update" -Dtype=application/json -jar %SOLR_HOME%/bin/post.jar %SOLR_INDEXING_EXAMPLE%/Chapter-4/sampleMusic.json
SimplePostTool version 1.5
Posting files to base url http://localhost:8983/solr/musicCatalog/update using content-type application/json..
POSTing file sampleMusic.json
1 files indexed.
COMMITting Solr index changes to http://localhost:8983/solr/musicCatalog/update..
Time spent: 0:00:00.150

Note that we assume the SOLR_HOME environment variable points to the Solr installation directory.
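For comparison, the same upload can be expressed directly over HTTP. This Python sketch builds (but does not send) a POST to the musicCatalog core's /update path with a JSON body. The id field and the document values are hypothetical stand-ins for the contents of sampleMusic.json. Here commit=true is passed on the URL so the change is committed in the same request, whereas the post.jar output above shows the commit as a separate step.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

CORE_UPDATE_URL = "http://localhost:8983/solr/musicCatalog/update"

# Hypothetical document; the real sampleMusic.json may use other fields.
songs = [
    {"id": "1", "songName": "Yo (Excuse Me Miss)", "artistName": "Chris Brown"},
]


def json_update_request(docs, url=CORE_UPDATE_URL):
    """Build a POST that indexes docs as a JSON array and commits in the same call."""
    body = json.dumps(docs).encode("utf-8")
    full_url = url + "?" + urlencode({"commit": "true"})
    return Request(full_url, data=body,
                   headers={"Content-Type": "application/json"}, method="POST")


req = json_update_request(songs)
# urllib.request.urlopen(req) would send this to a running Solr server.
print(req.full_url)
```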

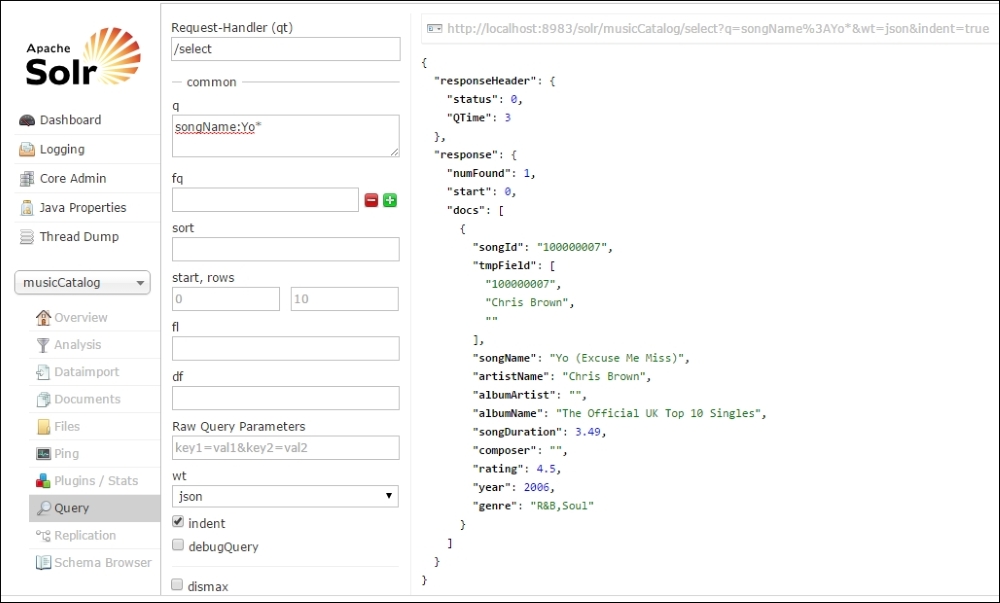

After executing this command, we can open the query browser in the Solr Admin UI and confirm that the data has been indexed in the musicCatalog core. We've added a song with the songName field set to "Yo (Excuse Me Miss)" and the artistName field set to "Chris Brown". We can run the following query to see the newly inserted data:

q=songName:Yo*

The following screenshot shows the data that is returned after we perform the query:

As the preceding screenshot shows, the Solr query browser returns the document that we indexed with the post.jar utility.
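The query browser is a front end for an HTTP request to the core's /select handler. The following minimal Python sketch builds the equivalent URL, assuming the default localhost:8983 address and requesting JSON output with wt=json; select_url is a hypothetical helper name.

```python
from urllib.parse import urlencode

SOLR_BASE = "http://localhost:8983/solr"


def select_url(core, query, **params):
    """Build a /select URL for a core; extra keyword arguments become parameters."""
    # urlencode percent-encodes ':' and '*' so the query reaches Solr intact.
    qs = urlencode({"q": query, **params})
    return f"{SOLR_BASE}/{core}/select?{qs}"


url = select_url("musicCatalog", "songName:Yo*", wt="json")
print(url)
```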

The next important topic in indexing data is the request handlers that ship with Solr. Request handlers let us add, delete, update, and search for documents in the Solr index.
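On the update side, Solr's JSON update format expresses each operation as a command object sent to the /update handler. The helpers below are a hypothetical Python sketch that builds add, delete-by-id, delete-by-query, and commit commands. An update is simply an add of a document whose unique key already exists, which replaces the old version; searching goes through a separate handler such as /select.

```python
import json


def add_command(doc):
    """Index doc; an existing document with the same unique key is replaced."""
    return json.dumps({"add": {"doc": doc}})


def delete_by_id(doc_id):
    """Delete a single document by its unique key."""
    return json.dumps({"delete": {"id": doc_id}})


def delete_by_query(query):
    """Delete every document matching a Solr query."""
    return json.dumps({"delete": {"query": query}})


def commit_command():
    """Make pending changes visible to searchers."""
    return json.dumps({"commit": {}})


# Hypothetical document; each string would be POSTed to /update
# with Content-Type: application/json.
print(add_command({"id": "1", "songName": "Yo (Excuse Me Miss)"}))
print(delete_by_id("1"))
```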

Solr comes with many plugins that can import documents from a wide range of sources. Using Apache Tika, Solr can index rich documents such as MS Word documents, Excel spreadsheets, PDF files, and many other file formats.
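As a sketch of what a Tika-based upload looks like, the following Python code builds (but does not send) a POST to an /update/extract endpoint. It assumes the core has Solr Cell's ExtractingRequestHandler registered at that path, which our musicCatalog configuration does not necessarily include. The literal.id parameter supplies the unique key for the extracted document, and the PDF bytes are a placeholder.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: Solr Cell's ExtractingRequestHandler is registered at this path.
EXTRACT_URL = "http://localhost:8983/solr/musicCatalog/update/extract"


def extract_request(doc_id, file_bytes, mime_type):
    """Build (but do not send) a POST asking Solr Cell to extract and index a file."""
    # literal.id sets the unique key field of the resulting document.
    params = urlencode({"literal.id": doc_id, "commit": "true"})
    return Request(f"{EXTRACT_URL}?{params}", data=file_bytes,
                   headers={"Content-Type": mime_type}, method="POST")


# Placeholder bytes stand in for a real PDF file.
req = extract_request("lyrics-1", b"%PDF-1.4 placeholder", "application/pdf")
print(req.full_url)
```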

Solr can also import data from relational databases and other structured data sources using the data import handler (DIH). We'll cover the data import handler in detail in Chapter 5, Index Data Using Structured Datasources Using DIH.

By default, Solr can index structured documents in XML, CSV, and JSON formats. In the following section, we'll see how to use the request handlers provided by Solr to import these documents.
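To see how the three formats differ, this Python sketch renders one hypothetical song document as an XML add command, a JSON array, and CSV with a header row. Each body would be posted to the update handler with a matching content type such as application/xml, application/json, or text/csv. The helper names are illustrative only.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical document used for all three formats.
doc = {"id": "1", "songName": "Yo (Excuse Me Miss)", "artistName": "Chris Brown"}


def to_xml(d):
    """Render <add><doc><field name="...">value</field>...</doc></add>."""
    add = ET.Element("add")
    doc_el = ET.SubElement(add, "doc")
    for name, value in d.items():
        field = ET.SubElement(doc_el, "field", name=name)
        field.text = value
    return ET.tostring(add, encoding="unicode")


def to_json(d):
    """Render a JSON array containing the document."""
    return json.dumps([d])


def to_csv(d):
    """Render a header row of field names, then one row for the document."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(d), lineterminator="\n")
    writer.writeheader()
    writer.writerow(d)
    return buf.getvalue()


for render in (to_xml, to_json, to_csv):
    print(render(doc))
```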

Request handlers can be mapped in the following two ways:

- Path-based names, which can be specified in the URL

- Using the qt (query type) parameter
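The two mapping styles produce different URLs. In this Python sketch, the path-based form puts the handler name in the path, while the qt form passes it as a parameter to /select. The /songSearch handler is hypothetical, and whether /select honors qt depends on the requestDispatcher configuration (the handleSelect setting) in solrconfig.xml.

```python
from urllib.parse import urlencode

SOLR_BASE = "http://localhost:8983/solr"


def path_based_url(core, handler, **params):
    """Select the handler through the URL path, e.g. /solr/musicCatalog/update."""
    url = f"{SOLR_BASE}/{core}{handler}"
    return f"{url}?{urlencode(params)}" if params else url


def qt_based_url(core, handler, **params):
    """Select the handler with the qt parameter on the generic /select path."""
    query = urlencode({"qt": handler, **params})
    return f"{SOLR_BASE}/{core}/select?{query}"


print(path_based_url("musicCatalog", "/update"))
# /songSearch is a hypothetical handler name.
print(qt_based_url("musicCatalog", "/songSearch", q="*:*"))
```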

A single request handler can support different data formats, choosing how to parse each request based on its content type.
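Because the handler decides how to parse a request from its content type, a client has to label each upload correctly. This Python sketch picks a Content-Type from the file extension, loosely mirroring the purpose of post.jar's -Dauto=yes option. The extension-to-type table is an assumption covering only the three formats discussed here.

```python
import os

# Assumed mapping for the three structured formats discussed in this chapter.
CONTENT_TYPES = {
    ".xml": "application/xml",
    ".json": "application/json",
    ".csv": "text/csv",
}


def content_type_for(path):
    """Pick a Content-Type so the update handler uses the matching parser."""
    ext = os.path.splitext(path)[1].lower()
    try:
        return CONTENT_TYPES[ext]
    except KeyError:
        raise ValueError(f"no update content type for {path!r}") from None


print(content_type_for("sampleMusic.json"))
```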

In Chapter 3, Indexing Data, we created a Solr configuration file; we'll reuse it here to add a request handler.

In solrconfig.xml, we add the following line to register a request handler of the solr.UpdateRequestHandler class, mapped to the /update URL path. This tells Solr that commands and documents sent to /update should be processed by UpdateRequestHandler:

<requestHandler name="/update" class="solr.UpdateRequestHandler" />
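Conceptually, registering a handler builds a lookup from path to handler class. The following Python sketch is a toy model of that idea, not Solr's dispatch code: it parses a configuration fragment containing the entry above, plus a hypothetical /select entry, and resolves a request path to its class.

```python
import xml.etree.ElementTree as ET

# Fragment modeled on solrconfig.xml; the /select entry is a hypothetical addition.
CONFIG = """
<config>
  <requestHandler name="/update" class="solr.UpdateRequestHandler" />
  <requestHandler name="/select" class="solr.SearchHandler" />
</config>
"""


def handler_table(config_xml):
    """Map each requestHandler name attribute to its class attribute."""
    root = ET.fromstring(config_xml.strip())
    return {h.get("name"): h.get("class") for h in root.iter("requestHandler")}


def resolve(table, path):
    """Toy dispatch: find the handler class registered for a request path."""
    if path not in table:
        raise LookupError(f"no handler registered for {path}")
    return table[path]


table = handler_table(CONFIG)
print(resolve(table, "/update"))
```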