Thus far this book’s main focus has been on formally specifying a database design. Of course, you want to do more than just specify a database design; you usually would like to implement it using some DBMS. In this chapter we turn our attention towards implementing a database design.

Given the background of both authors, this chapter focuses specifically on implementing a database design in Oracle’s SQL DBMS. We’ll assume you’re familiar with PL/SQL, Oracle’s procedural programming language, and with concepts such as database triggers, packages, procedures, and functions.

Note We refer you to the standard Oracle documentation available on the Internet (http://tahiti.oracle.com) if you are unfamiliar with any of the PL/SQL concepts that you’ll encounter in this chapter.

This will be a less formal chapter. We’ll use SQL terminology (such as row and column) when referring to SQL constructs. We’ll still use formal terminology when referring to the formal concepts introduced in this book.

The first few sections (through the section “Implementing Data Integrity Code”) establish some concepts with regard to implementing business applications on top of a database. You’ll also see that three distinctly different strategies exist to implement data integrity constraints.

Sections then follow that deal with implementing table structures, attribute constraints, and tuple constraints (through the section “Implementing Tuple Constraints”).

The section “Table Constraint Implementation Issues” is a rather large section. It introduces the challenges that you’re faced with when implementing multi-tuple constraints (that is, table or database constraints). A big chunk of this section will explore various constraint validation execution models. These execution models range from rather inefficient ones to more sophisticated (efficient) ones.

The sections “Implementing Table Constraints” and “Implementing Database Constraints” cover the implementation of table and database constraints, respectively, using one of the execution models introduced in the section “Table Constraint Implementation Issues.” This is followed by an exploration of the implementation of transition constraints (the section “Implementing Transition Constraints”).

The section “Bringing Deferred Checking into the Picture” deals with deferring the validation of constraints—a nasty phenomenon that cannot be avoided given that you implement a database design in an SQL DBMS.

At the end of this chapter we introduce you to the RuleGen framework: a framework that can help you implement data integrity constraints in Oracle’s SQL DBMS, and that applies many of the concepts explained throughout this chapter.

This chapter does not have an “Exercises” section.

If you’re familiar with a different SQL DBMS, most of the concepts discussed in this chapter will probably also apply, although, given our background, we can’t be sure. The discussion that involves serializing transactions is likely Oracle specific. And, of course, the actual code examples will have to be written differently for another SQL DBMS.

Introduction

Thus far this book’s main focus has been to show you how a database design can be formally specified. To that end, we’ve introduced you to a bit of set theory and a bit of logic. Set theory and logic are the mathematical tools required to deal with, talk about, manage, and document a database design professionally. Also, as you have seen, a database design is much more than just table structures; the data integrity constraints are by far the most important part of a database design.

Of course, you want to do more than just specify a database design; you usually would like to implement it using some DBMS, and build business applications on top of it.

Unfortunately there are no true relational DBMSes available to us; we are forced to use an SQL DBMS to implement a database design. Implementing a database design in an SQL DBMS, as you’ll see in the course of this chapter, poses quite a few challenges—not so much in the area of implementing the table structures, but mainly in the area of implementing the involved data integrity constraints.

As you’ll see in the course of this chapter, SQL provides us with constructs to state data integrity constraints declaratively; however, not all of these are supported by SQL DBMSes available today. Most notably, the CREATE ASSERTION command—which has been in the official standard of SQL since 1992—is not supported in most SQL DBMSes (including Oracle’s).

Before we start investigating these constraint implementation challenges, we’ll first broaden our scope by investigating the general software architecture of a business application, or, as we prefer to call such an application from here on, a window-on-data application. Once we’ve established this architecture, we’ll then, within the context of that architecture, discuss the challenges of implementing a database design.

Note This chapter will be quite different from the previous ones. It contains far less mathematics, although, as you will see, mathematics can still be applied when implementing a database design. We think that this chapter adds value to this book, because in our experience, the challenges investigated in this chapter are often overlooked.

Window-on-Data Applications

A window-on-data (WoD) application is—well, just as it says—an application that provides the user with windows on data. In a WoD application, users navigate through various windows of the application (browser pages, nowadays). Every page in the application either

- Enables the user to compose a data retrieval request, execute it, and then have the retrieved data displayed in the window, or

- Offers the user the possibility of composing a transaction using already retrieved (or newly entered) data, and then executing it.

Because these applications are all about querying and manipulating data, you should spend a fair amount of effort on designing and implementing the underlying table structures and involved integrity constraints necessary to support the application. The database design constitutes the underlying foundation of a WoD application; the quality of a WoD application can only be as good as the quality of the underlying database design.

Note Here lies one of our main motivations for writing this book. The current state of our industry is pretty bad when it comes to focusing on database design capabilities within an IT project. Few IT professionals nowadays are educated in the foundations of the relational model of data. Database designs are often created that inevitably cause the business application to perform poorly, to be difficult to maintain, or both. As mentioned earlier, discussing the quality of database designs is not within the scope of this book (it justifies at least another book in itself). We chose to offer you first the necessary tools to enable you to start dealing with database designs in a clear and professional way.

So, in short, a WoD application is all about managing business data. Needless to say, by far the majority of all business applications built on top of a database are WoD applications. This justifies taking a closer look at the general software architecture of this type of application.

In the next section we’ll introduce you to a classification scheme for the application code of a WoD application. Later on, when we discuss three implementation strategies for the data integrity constraints of a database design, you’ll see that we refer to this code classification scheme.

Classifying Window-on-Data Application Code

In this section, we’ll introduce you to a high-level code classification scheme that is at the core of every WoD application. Before we do this, we need to introduce two terms we’ll use in defining this classification scheme. These are data retrieval code and data manipulation code.

Data retrieval code is all code that queries data in the database. In our day-to-day practice these are typically the SQL queries (SELECT expressions) embedded within application code and interacting with the SQL DBMS.

Data manipulation code is all code that changes data in the database. These are the SQL data manipulation language (DML) statements (INSERT, UPDATE, or DELETE) embedded within application code that maintain data in the database.

Note We’ll refer to these three types of DML statements (INSERT, UPDATE, or DELETE) jointly as update statements.

Having introduced these two terms, we can now introduce the code classification scheme. All code of a WoD application can be classified into one of the following three code classes:

- Business logic code

- Data integrity code

- User interface code

The following three sections will discuss what we mean by the preceding three code classes.

Business Logic Code (BL Code)

Business logic code can be subdivided into two subclasses: first, code that composes and executes queries, and second, code that composes and executes transactions.

- Query composing and executing code: This is procedural code holding only embedded data retrieval code (that is, query expressions). This code is responsible for composing the actual SQL queries, or conditionally determining which SQL queries should be executed. This code also initiates the execution of these queries and processes the rows returned by the SQL DBMS. We’ll refer to this subclass as read BL code (rBL code for short).

- Transaction composing and executing code: This is procedural code holding embedded data manipulation code (that is, SQL DML statements). This code is responsible for composing update statements, or conditionally determining which update statements should be executed. This code also initiates the execution of these statements. Depending upon the return code(s) given by the SQL DBMS, this code might also execute the commit or rollback processing for the transaction. Note that data retrieval code will often be part of (the procedural code of) transaction composition. We’ll refer to this subclass as write BL code (wBL code for short).

Write BL code attempts to change the current database state. When it executes, the SQL DBMS should ensure that none of the data integrity constraints gets violated; the resulting database state should satisfy all data integrity constraints.

Often, you’ll find code embedded within wBL code whose specific purpose is to verify that the transaction that gets executed won’t result in a state that violates any of the involved data integrity constraints. We consider this constraint-verifying code not part of the wBL code class, but rather part of the data integrity code class discussed in the next section.

Data Integrity Code (DI Code)

Data integrity code is all declarative or procedural code that deals with verifying the continued validity of data integrity constraints. Whenever wBL code executes transactions, the data manipulation statements that get executed as part of such transactions can potentially violate data integrity constraints. If this is indeed the case, data integrity code will ensure that such a statement fails and that the changes it made are rolled back.

Note We deliberately consider DI code to be in a distinct class by itself and not part of the business logic code class. Most other books and articles dealing with business logic often remain fuzzy about whether DI code is considered part of business logic or not.

For the majority of the data integrity constraints—in fact, all but attribute and tuple constraints—DI code will have to execute data retrieval code (queries) to determine if a given data manipulation statement is allowed or not. For multi-tuple constraints, DI code will always need to execute queries (inspecting other involved rows) to verify that the resulting database state still satisfies all data integrity constraints.

For example, consider the “at most one president allowed” table constraint defined in Listing 7-26 (table universe tab_EMP). When a transaction attempts to insert a president, you should run a constraint validation query either before execution of the insert or after execution of the insert, to verify that the insert is allowed given this constraint.

If you decide to validate whether the constraint remains satisfied before the actual execution of the insert, you could execute the following constraint validation query:

```sql
select 'there is already a president'
from emp
where job='PRESIDENT'
and rownum=1
```

If this query returns a row, then obviously the insert must not be allowed to proceed; inserting another president will clearly create a database state that violates this constraint.

In the other case—executing a query after the insert—you could run the following query:

```sql
select count(*)
from emp
where job='PRESIDENT'
```

If this query returns a count greater than 1, then the constraint is violated and the insert must be rolled back.
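Both validation styles can be tried out in a small sketch. It uses Python’s standard sqlite3 module with an in-memory SQLite database as a stand-in for Oracle, and a hypothetical, stripped-down EMP table holding only EMPNO, ENAME, and JOB; the function names are illustrative only. SQLite spells Oracle’s rownum=1 as LIMIT 1.

```python
import sqlite3


def make_emp_db():
    """Hypothetical, stripped-down EMP table that already holds one president."""
    con = sqlite3.connect(":memory:")
    con.execute("create table emp (empno integer primary key, ename text, job text)")
    con.executemany("insert into emp values (?, ?, ?)",
                    [(1, "KING", "PRESIDENT"), (2, "SMITH", "CLERK")])
    con.commit()
    return con


def insert_with_pre_check(con, empno, ename, job):
    """Validate BEFORE the insert: never execute it if it would add a second president."""
    if job == "PRESIDENT":
        row = con.execute("select 'there is already a president' from emp "
                          "where job = 'PRESIDENT' limit 1").fetchone()
        if row is not None:
            return False  # the insert is simply not executed
    con.execute("insert into emp values (?, ?, ?)", (empno, ename, job))
    con.commit()
    return True


def insert_with_post_check(con, empno, ename, job):
    """Validate AFTER the insert: undo it if the new state holds more than one president."""
    con.execute("insert into emp values (?, ?, ?)", (empno, ename, job))
    (num_presidents,) = con.execute(
        "select count(*) from emp where job = 'PRESIDENT'").fetchone()
    if num_presidents > 1:
        con.rollback()  # undoes the transaction, which holds only this insert
        return False
    con.commit()
    return True


con = make_emp_db()
print(insert_with_pre_check(con, 3, "JONES", "PRESIDENT"))   # False
print(insert_with_post_check(con, 4, "BLAKE", "PRESIDENT"))  # False
print(insert_with_post_check(con, 5, "ALLEN", "CLERK"))      # True
```

In the post-check variant, rollback() undoes the whole transaction; that equals a statement rollback here only because each call runs in a transaction of its own.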

Ideally, given the declarations of all constraints, the DBMS would automatically deduce and execute these constraint validation queries (along with the conditional code needed either to prevent execution of a DML statement or to force a DML statement rollback). A DBMS that provides this service makes the task of the developer who codes business logic a lot easier.

Note If you’re familiar with Oracle, you’ll know that Oracle’s SQL DBMS can perform this service only for a limited number of types of constraints.

You can subdivide the DI code class into five subclasses, one per type of constraint:

- Code that verifies attribute constraints

- Code that verifies tuple constraints

- Code that verifies table constraints

- Code that verifies database constraints

- Code that verifies transition constraints

As you’ll see shortly, you can state the required DI code for some constraints declaratively in an SQL DBMS (which is then responsible for maintaining the constraint). However, you need to develop the code for most of them manually; that is, you are responsible for the implementation. This means that you need to design and write procedural code to maintain the constraint yourself.

Note There are two high-level implementation strategies for creating DI code procedurally: either you make use of database triggers, or you embed DI code within wBL code. We’ll discuss the former in more detail in the remainder of this chapter.

User Interface Code (UI Code)

UI code is code that determines the look and feel of a business application. It is responsible for the front end of the business application that the user deals with when using the application. UI code either

- Creates the user interface for the user (the look of the business application) and typically displays retrieved data within that user interface, or

- Responds to user interface events initiated by the user and then modifies the user interface—the feel of the business application.

Creating and/or modifying the user interface always requires embedded calls to business logic code. This BL code will return the data to the UI code for display, and/or will change data as part of the transaction requested by the user. Depending upon the results of these embedded BL code calls, UI code will modify the user interface accordingly.

As a summary, Figure 11-1 illustrates the correlation between the different WoD application code classes that we’ve introduced in this section.

Note In Figure 11-1, the DI code box is deliberately drawn outside the DBMS box, because application developers who develop wBL code need to implement the majority of DI code procedurally (as will become obvious in the course of this chapter).

Figure 11-1. WoD application code classes

This figure illustrates the following relations between the code classes (note that the numbers in this list refer to the numbers inside Figure 11-1):

1. UI code holds calls to rBL code (queries) or wBL code (transactions).

2. rBL code holds embedded data retrieval code that reads data from the database.

3. wBL code holds embedded data manipulation code that writes to the database. wBL code often also holds embedded queries reading the database.

4. DI code requires the execution of data retrieval code.

5. DI code is typically called from wBL code.

6. DI code can also be called by the DBMS via database triggers (see the next section), which fire as a result of the execution of DML statements originating from wBL code.

7. Sometimes you’ll see that UI code calls DI code directly; this is often done to create a responsive, more user-friendly user interface.
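To make these relations concrete, here is a minimal sketch of the code classes as plain Python functions over an in-memory SQLite database (Python’s sqlite3 module). All function and table names are hypothetical. It shows DI code running its own query, wBL code calling DI code before executing its transaction, and UI code calling rBL code and, for early feedback, DI code directly. The trigger relation is not shown.

```python
import sqlite3


def make_db():
    con = sqlite3.connect(":memory:")
    con.execute("create table emp (ename text primary key, job text)")
    return con


# DI code: verifies one constraint and needs its own data retrieval code.
def di_violates_one_president(con, job):
    if job != "PRESIDENT":
        return False  # only a new president can violate this constraint
    row = con.execute("select 1 from emp where job = 'PRESIDENT' limit 1").fetchone()
    return row is not None


# rBL code: composes and executes a query, then processes the returned rows.
def rbl_enames_with_job(con, job):
    return [ename for (ename,) in con.execute(
        "select ename from emp where job = ? order by ename", (job,))]


# wBL code: composes and executes a transaction; it calls DI code first.
def wbl_hire(con, ename, job):
    if di_violates_one_president(con, job):
        return "rejected"
    con.execute("insert into emp (ename, job) values (?, ?)", (ename, job))
    con.commit()
    return "hired"


# UI code: event handlers that call BL code, or DI code for early feedback.
def ui_on_job_field_changed(con, job):
    if di_violates_one_president(con, job):
        return "Warning: there already is a president."
    return ""


def ui_show_staff(con, job):
    return ", ".join(rbl_enames_with_job(con, job))


con = make_db()
print(wbl_hire(con, "KING", "PRESIDENT"))         # hired
print(ui_on_job_field_changed(con, "PRESIDENT"))  # Warning: there already is a president.
print(wbl_hire(con, "JONES", "PRESIDENT"))        # rejected
```

The UI handler only gives the user early feedback; the same DI check still has to run when the wBL code executes the transaction.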

Implementing Data Integrity Code

In this section you’ll find a brief exploration of the aforementioned strategies for implementing DI code in Oracle’s SQL DBMS. We’ll also discuss a few related topics.

Alternative Implementation Strategies

As mentioned in the previous section, when transactions execute, you should run DI code to verify that all data integrity constraints remain satisfied. There are three different strategies to ensure execution of DI code when a WoD application attempts to execute a transaction:

- Declarative

- Triggered procedural

- Embedded procedural

Declarative

In the declarative strategy, you simply declare the constraints that are involved in your database design to the DBMS. The DBMS then automatically ensures—hopefully in an efficient manner—that offending DML statements will be rolled back when they try to create a database state that violates any of the declared constraints.

Note that to be able to declare constraints to the DBMS, you first require (some form of) a formal specification of these constraints that states exactly what the constraint is. The strength of the declarative strategy is that you just tell the DBMS what the constraint is; that is, there is no need for you to write any DI code. In essence, the declaration of the constraint to the DBMS constitutes the DI code.

Once you’ve declared a constraint, the DBMS then has the challenge of computing how best to check the constraint in an efficient manner; it is tasked to generate (hopefully) efficient DI code and ensure this code is automatically executed at the appropriate times when transactions are processed.

Note You’ll see in the course of this chapter that the word “efficient” in the preceding sentence is crucial.

Oracle’s SQL DBMS allows you to specify certain constraints declaratively either as part of the create table command, or separately (after the table has already been created) through the alter table add constraint command.

Starting from the section “Implementing Attribute Constraints” onwards, you’ll find a full treatment of constraints that you can specify declaratively. As a preview, Listing 11-1 contains an example of adding a constraint to an already created table. It shows the SQL syntax for declaring the second table constraint in Listing 7-26 (the table universe for EMP) stating that {USERNAME} is a key.

Listing 11-1. Declaring a Key Using SQL

```sql
alter table EMP add constraint EMP_K2 unique (USERNAME);
```

Through the preceding alter table SQL statement, you declare {USERNAME} as a key for the EMP table structure. This command allows you to give the constraint a name (in the preceding case it is named EMP_K2). You’ll need to use this name if you want to drop the constraint.
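As an illustration of the declarative strategy outside Oracle, the following sketch uses SQLite through Python’s sqlite3 module. SQLite does not support ALTER TABLE ... ADD CONSTRAINT, so a named unique index (EMP_K2) serves here as the closest after-the-fact equivalent of Listing 11-1; the two-column EMP table is hypothetical. No DI code is written: the DBMS itself rejects the duplicate username, and the name is the handle for dropping the key again.

```python
import sqlite3


def make_emp_with_key():
    con = sqlite3.connect(":memory:")
    con.execute("create table emp (empno integer primary key, username text)")
    # SQLite has no ALTER TABLE ... ADD CONSTRAINT, so a named unique index
    # stands in for: alter table EMP add constraint EMP_K2 unique (USERNAME);
    con.execute("create unique index EMP_K2 on emp (username)")
    return con


def try_insert(con, empno, username):
    """Return True if the DBMS accepted the row, False if the key rejected it."""
    try:
        with con:  # commits on success, rolls back (and re-raises) on error
            con.execute("insert into emp values (?, ?)", (empno, username))
        return True
    except sqlite3.IntegrityError:
        return False


con = make_emp_with_key()
print(try_insert(con, 1, "SMITH"))  # True
print(try_insert(con, 2, "SMITH"))  # False: {USERNAME} is a key
con.execute("drop index EMP_K2")    # the name is what you use to drop the key
print(try_insert(con, 3, "SMITH"))  # True: the key is gone
```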

We’ve chosen to use only the alter table add constraint statement in our examples throughout this chapter, so that we can gradually build the implementation of the database design. You could have declared every declaratively added constraint instead as part of the create table statement. You can find the SQL expressions that declare constraints of the UEX database design as part of the create table statement online at http://www.rulegen.com/am4dp.

Triggered Procedural

In the triggered procedural strategy, you don’t declare the constraint (the what) to the DBMS. Instead, you are tasked to write procedural DI code (the how) yourself. And, in conjunction, you also tell the DBMS when to execute your DI code by creating triggers that call your DI code. You can view a trigger as a piece of procedural code that the DBMS will automatically execute when certain events occur. Let’s explain this a bit more.

Note Triggers can serve many purposes. Executing DI code to enforce data integrity is only one such purpose (and the one we’ll be focusing on in this chapter).

Every trigger is associated with a table structure and will automatically be executed (fired) by the DBMS whenever a transaction executes a DML statement against that table structure. The trigger code—which executes as part of the same transaction—can then check whether the new database state still satisfies all constraints.

For each table structure, Oracle’s SQL DBMS offers the 12 types of triggers that are defined in the SQL standard:

- Four insert triggers: One that fires before an INSERT statement starts executing (referred to as the before statement insert trigger), one that fires right before an actual row is inserted (before row insert trigger), one that fires after a row has been inserted (after row insert trigger), and finally one that fires after completion of an INSERT statement (after statement insert trigger).

- Four update triggers: Similar to the insert triggers, there are four types of update triggers: before statement, before row, after row, and after statement.

- Four delete triggers: The same four types.

The statement triggers (before and after) will only fire once per (triggering) DML statement. The row triggers will fire as many times as there are rows affected by the DML statement. For instance, an UPDATE statement that increases the monthly salary of all employees in the employee table (say there are currently ten) will cause the before and after row update triggers each to fire ten times. The before and after statement update triggers will only fire once each in this case.

Row-level triggers are able to refer to the column values of the rows that are affected by the triggering DML statement. Before and after row insert triggers can inspect all column values of the new rows (the ones that are inserted). Before and after row delete triggers can inspect all columns of the old rows (the ones that are deleted). Before and after row update triggers can inspect both all column values of the old rows and all column values of the new rows.
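The firing count of row triggers and their access to old and new values can be observed with a small experiment. This sketch uses SQLite via Python’s sqlite3 module; SQLite supports only FOR EACH ROW triggers, so it can show the ten row-level firings of the salary example above but not the single firing of a statement trigger. The table, its columns, and the MSAL_LOG audit table are hypothetical.

```python
import sqlite3


def demo_row_trigger():
    con = sqlite3.connect(":memory:")
    con.executescript("""
        create table emp (empno integer primary key, msal integer);
        create table msal_log (empno integer, old_msal integer, new_msal integer);
        -- Row-level trigger: fires once per updated row and can read both
        -- the old and the new column values of that row.
        create trigger emp_aur after update of msal on emp
        for each row
        begin
            insert into msal_log values (new.empno, old.msal, new.msal);
        end;
    """)
    # Ten employees with monthly salaries 1001 .. 1010.
    con.executemany("insert into emp values (?, ?)",
                    [(empno, 1000 + empno) for empno in range(1, 11)])
    con.execute("update emp set msal = msal + 100")  # one statement, ten rows
    con.commit()
    return con


con = demo_row_trigger()
print(con.execute("select count(*) from msal_log").fetchone()[0])  # 10
print(con.execute("select old_msal, new_msal from msal_log where empno = 3").fetchone())  # (1003, 1103)
```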

Note An UPDATE statement replaces one set of rows (the “old” ones) by another (the “new” ones).

If you aren’t already familiar with the various types of table triggers available in Oracle, we refer you to the Oracle manuals for more information on this. In the rest of this chapter, we assume you are familiar with them.

There isn’t an easy way for statement-level triggers to reference the rows that are affected by the triggering DML statement; they either see the (old) table as it existed before the start of the DML statement (before trigger), or the resulting (new) table as it exists after completion of the DML statement (after trigger). You can enable statement-level triggers to see the affected rows, but it requires some sophisticated coding on your part. Later on in this chapter, you’ll discover that this is actually a requirement in order to be able to develop efficient DI code in the triggered procedural strategy. We’ll then demonstrate how this can be done.

As an example of using a trigger to implement DI code, let’s implement the “at most one president allowed” table constraint of the EMP table structure. Listing 11-2 shows the SQL syntax for creating such a trigger and attaching it to the EMP table structure.

Listing 11-2. Attaching a Trigger to a Table Using SQL

```sql
create trigger EMP_AIUS
after insert or update on EMP
declare pl_num_presidents number;
begin
  --
  select count(*) into pl_num_presidents
  from EMP
  where job='PRESIDENT';
  --
  if pl_num_presidents > 1
  then
    raise_application_error(-20999,'Not allowed to have more than one president.');
  end if;
  --
end;
/
```

The way this trigger is created (in the second line: after insert or update) ensures that the DBMS executes the procedural code of the trigger whenever BL code inserts or updates an employee (the when of this constraint). The trigger will fire immediately after execution of such an insert or update. Therefore, the embedded code will see a database state that reflects the changes made by the insert or update.

The body of the trigger (that is, the procedural code between declare and end), shown in Listing 11-2, represents the how of this constraint. First a query is executed to retrieve the number of presidents in the EMP table. Then, if this number is more than one, the trigger generates an error by calling raise_application_error. This call raises an SQL exception, which in turn forces the triggering DML statement to fail and the changes it made to be undone. In this case, the DBMS will perform this DML statement rollback for you, contrary to the embedded procedural strategy that’s described hereafter.
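For comparison, here is a sketch of the same check as a trigger in SQLite, driven from Python’s sqlite3 module. It is an analogue, not a translation: SQLite has only row-level triggers and allows one triggering event per trigger, so the insert-or-update trigger becomes two triggers, and RAISE(ABORT, ...) plays the role of raise_application_error. The two-column EMP table is hypothetical. As in Oracle, the DBMS undoes the failing statement itself, while earlier statements in the same transaction survive.

```python
import sqlite3

# Shared check; RAISE(ABORT, ...) makes the DBMS undo the triggering statement.
CHECK = """
    select case
             when (select count(*) from emp where job = 'PRESIDENT') > 1
             then raise(abort, 'Not allowed to have more than one president.')
           end;
"""


def make_db():
    con = sqlite3.connect(":memory:")
    con.executescript(f"""
        create table emp (empno integer primary key, job text);
        create trigger emp_ais after insert on emp for each row begin {CHECK} end;
        create trigger emp_aus after update on emp for each row begin {CHECK} end;
    """)
    return con


def try_dml(con, sql, params=()):
    """Run one DML statement; report whether the DBMS accepted it."""
    try:
        con.execute(sql, params)
        return True
    except sqlite3.DatabaseError:  # the trigger's abort is reported as a database error
        return False


con = make_db()
try_dml(con, "insert into emp values (1, 'PRESIDENT')")
try_dml(con, "insert into emp values (2, 'CLERK')")
print(try_dml(con, "update emp set job = 'PRESIDENT' where empno = 2"))  # False
con.commit()  # both inserts survive; only the failed update was undone
print(con.execute("select empno, job from emp order by empno").fetchall())
# [(1, 'PRESIDENT'), (2, 'CLERK')]
```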

Note that it would be inefficient to have this trigger also fire for DELETE statements. This particular constraint cannot be violated when a transaction deletes rows from the EMP table structure; if there is at most one president before deleting a row from EMP, then there will still be at most one president after deletion of the row.

In the triggered procedural strategy, you—the DI code developer—need to think about not only when DI code should be run, but also when it would be unnecessary to run DI code. This is part of ensuring that DI code is efficiently implemented. Of course, in the declarative strategy you needn’t think about either of these things.

Note The trigger in Listing 11-2 now fires for every type of INSERT and every type of UPDATE statement executed against the EMP table structure. This is still rather inefficient. For instance, if you insert a new clerk or update the monthly salary of some employee, the trigger really needn’t be run. In both of these cases (similar to a DELETE statement, discussed earlier) the triggering DML statement can never violate the constraint.
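Triggers can be narrowed so that they fire only when the constraint is actually at risk. The sketch below does this in SQLite (via Python’s sqlite3 module), which lets a row trigger name the updated column (UPDATE OF job) and add a WHEN condition. The FIRE_LOG table exists only to count how often the check runs; all names are hypothetical. Inserting a clerk, changing a salary, or changing a job to anything other than PRESIDENT no longer runs the check.

```python
import sqlite3

CHECK = """
    insert into fire_log values (1);  -- record that the check ran
    select case
             when (select count(*) from emp where job = 'PRESIDENT') > 1
             then raise(abort, 'Not allowed to have more than one president.')
           end;
"""


def make_db():
    con = sqlite3.connect(":memory:")
    con.executescript(f"""
        create table emp (empno integer primary key, job text, msal integer);
        create table fire_log (fired integer);
        -- Only inserted presidents can violate the constraint.
        create trigger emp_ais after insert on emp for each row
        when new.job = 'PRESIDENT'
        begin {CHECK} end;
        -- Only updates of JOB to PRESIDENT can; MSAL updates never fire this.
        create trigger emp_aus after update of job on emp for each row
        when new.job = 'PRESIDENT'
        begin {CHECK} end;
    """)
    return con


con = make_db()
con.execute("insert into emp values (1, 'PRESIDENT', 5000)")   # check runs
con.execute("insert into emp values (2, 'CLERK', 1000)")       # no check
con.execute("update emp set msal = msal + 100")                # no check
con.execute("update emp set job = 'TRAINER' where empno = 2")  # no check
con.commit()
print(con.execute("select count(*) from fire_log").fetchone()[0])  # 1
```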

Apart from being suboptimal in terms of efficiency, the trigger in Listing 11-2 has a more serious issue that we need to address: it does not completely implement the given constraint. There are still issues in the area of concurrently executing transactions, which we’ll address in more detail in the section “Table Constraint Implementation Issues.”

Embedded Procedural

Finally, in the embedded procedural strategy to implement DI code, you again need to write the DI code (the how) yourself. Instead of creating triggers that call your DI code, you now embed the DI code into the wBL code modules that hold the DML statements that might cause constraints to be violated. DI code then executes as part of the execution of wBL code.

This is probably by far the most used procedural strategy for implementing DI code in WoD applications.

Note In this case, “most used” strategy certainly doesn’t imply “best” strategy.

Let’s take a look at an example. Assume the application supplies a page where the user can enter data for a new employee to be inserted. The user supplies attributes ENAME, BORN, JOB, and DEPTNO. The BL code determines the other employee attributes (EMPNO, SGRADE, MSAL, HIRED, and USERNAME) according to rules that were agreed upon with the users. Listing 11-3 lists the procedure the UI code calls to insert a new employee. It holds BL code first to determine the other employee attributes, and then executes an INSERT statement. Immediately following this INSERT statement, the BL code runs embedded DI code to ensure the “at most one president” constraint remains satisfied.

Listing 11-3. Embedding DI Code in wBL Code

```sql
create procedure p_new_employee
(p_ename  in varchar
,p_born   in date
,p_job    in varchar
,p_deptno in number) as
  --
  pl_sgrade   number;
  pl_msal     number;
  pl_hired    date;
  pl_username varchar(15);
  --
begin
  -- Determine monthly start salary.
  select grade, llimit into pl_sgrade, pl_msal
  from grd
  where grade = case p_job
                  when 'ADMIN'     then 1
                  when 'TRAINER'   then 3
                  when 'SALESREP'  then 5
                  when 'MANAGER'   then 7
                  when 'PRESIDENT' then 9
                end;
  -- Determine date hired.
  pl_hired := trunc(sysdate);
  -- Determine username.
  pl_username := upper(substr(p_ename,1,8));
  -- Now insert the new employee row. Set a transaction savepoint first.
  savepoint sp_pre_insert;
  -- Use EMP_SEQ sequence to generate a key.
  insert into emp(empno,ename,job,born,hired,sgrade,msal,username,deptno)
  values(emp_seq.nextval,p_ename,p_job,p_born,pl_hired,pl_sgrade
        ,pl_msal,pl_username,p_deptno);
  -- Verify 'at most one president' constraint.
  if p_job = 'PRESIDENT'
  then
    declare pl_num_presidents number;
    begin
      --
      select count(*) into pl_num_presidents
      from EMP
      where job='PRESIDENT';
      --
      if pl_num_presidents > 1
      then
        -- Constraint is violated, need to rollback the insert.
        rollback to sp_pre_insert;
        raise_application_error(-20999,
          'Not allowed to have more than one president.');
      end if;
    end;
  end if;
end;
/
```

The embedded DI code following the INSERT statement very much resembles the code in the body of the trigger in Listing 11-2. It is, however, a more efficient implementation than that trigger: the DI code is executed only when a president is inserted.

Also note that in this procedural strategy, in contrast to the triggered procedural strategy, it is up to the developer to force a statement rollback explicitly. In the preceding code, this is done by setting a transaction savepoint just before executing the INSERT statement, and rolling back to that savepoint whenever the constraint is found to be violated.
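To make the savepoint pattern of Listing 11-3 concrete, here is a minimal Python sketch using the standard sqlite3 module, with SQLite standing in for Oracle's SQL DBMS. The function p_new_employee, the reduced three-column EMP table, and the caller-supplied EMPNO values are simplifications for illustration only; the BL code that derives the other attributes is left out.

```python
import sqlite3

# isolation_level=None: no implicit BEGIN; SAVEPOINT/RELEASE control the transaction.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute(
    "CREATE TABLE emp (empno INTEGER PRIMARY KEY,"
    " ename TEXT NOT NULL, job TEXT NOT NULL)"
)


def p_new_employee(empno, ename, job):
    """Hypothetical analogue of Listing 11-3: wBL code with embedded DI code."""
    conn.execute("SAVEPOINT sp_pre_insert")
    conn.execute(
        "INSERT INTO emp (empno, ename, job) VALUES (?, ?, ?)", (empno, ename, job)
    )
    # Embedded DI code: only a new president can violate 'at most one president'.
    if job == "PRESIDENT":
        num_presidents = conn.execute(
            "SELECT count(*) FROM emp WHERE job = 'PRESIDENT'"
        ).fetchall()[0][0]
        if num_presidents > 1:
            # The developer must undo the INSERT explicitly.
            conn.execute("ROLLBACK TO sp_pre_insert")
            conn.execute("RELEASE sp_pre_insert")
            raise ValueError("Not allowed to have more than one president.")
    # In SQLite, releasing the outermost savepoint also commits.
    conn.execute("RELEASE sp_pre_insert")


p_new_employee(1000, "SMITH", "PRESIDENT")
p_new_employee(1001, "JONES", "ADMIN")

error_message = None
try:
    p_new_employee(1002, "KING", "PRESIDENT")
except ValueError as exc:
    error_message = str(exc)

print(error_message)
print(conn.execute("SELECT empno, job FROM emp ORDER BY empno").fetchall())
```

Unlike in Oracle, releasing the outermost SQLite savepoint commits the transaction; in Oracle the transaction would stay open until the caller issues a COMMIT.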

There is another, more serious drawback of the embedded procedural strategy. You now have to replicate the DI code for a given constraint into every wBL code module that holds DML statements that might violate the constraint. In this strategy, the DI code for a given constraint is typically scattered across various wBL code modules. When the wBL code of a WoD application needs to be changed, it is easy to forget to change (add or remove) the necessary DI code. Worse, when a constraint of the database design itself needs to be changed, it is often difficult to locate all wBL code modules that hold embedded DI code for that constraint.

Note This drawback also applies to some extent to the triggered procedural strategy. The example trigger given in Listing 11-2 is actually shorthand for two triggers, an update trigger and an insert trigger, so the DI code for the constraint is scattered across two triggers. For more complex constraints, you’ll need more triggers to implement the DI code; you could need as many as three times the number of table structures involved in the constraint (an insert, an update, and a delete trigger per involved table structure). Still, with the triggered procedural strategy this drawback is far less severe than it can become in a WoD application built using the embedded procedural strategy.

The embedded procedural strategy also suffers from the same issues as the triggered procedural strategy when transactions execute concurrently (we’ll explain these in the section “Table Constraint Implementation Issues”).

Order of Preference

It should not be a surprise that the declarative strategy is highly preferable to the two procedural strategies. Not only will a declaratively stated constraint free you of the burden of developing DI code, it will likely outperform any self-developed procedural implementation. Don’t forget that it also frees you of the burden of maintaining this code during the WoD application’s life cycle!

Note This last remark assumes that the DBMS vendor has done its research with respect to designing and implementing an efficient execution model for DI code. In the section “Table Constraint Implementation Issues,” we’ll introduce you to various execution models, ranging from inefficient ones to more efficient ones.

However, as you’re probably aware, you can state only a limited set of constraint types declaratively in Oracle’s SQL DBMS. Note that this is not due to an inherent deficiency in the SQL standard, but rather to the poor implementation of this language in SQL DBMSes. For implementing constraints that cannot be declared to the DBMS, we prefer to follow the triggered procedural strategy. Following are the two main reasons why we prefer the triggered procedural strategy to the embedded one:

- Like declared constraints, the triggered procedural strategy cannot be subverted.

- The triggered procedural strategy is likely to create a more manageable code architecture; DI code isn’t replicated across lots of BL code. DI code is fully detached from all BL code and is therefore easier to manage (and so, by the way, is the BL code itself).

Note The second reason assumes that triggers will only hold DI code. Embedding BL code in triggers is generally considered a bad practice.

The embedded procedural strategy is the worst strategy of all; it can obviously be subverted, simply by using some other application (perhaps a general-purpose one supplied by the DBMS vendor) to update the database.

As you’ll see starting from the section “Table Constraint Implementation Issues” and onwards, when we start dealing with table constraints and database constraints (that is, multi-tuple constraints), implementing efficient DI code for these constraints through triggers is far from being a trivial task.

Obviously, the type of effort involved in implementing different constraints within one class (attribute, tuple, table, database, transition) is the same. Also, the complexity involved in implementing DI code for constraints probably increases as the scope of data increases. The remainder of this chapter will offer guidelines for implementing the table structures of a database design and, in increasing scope order, for implementing the DI code for the involved constraints. Here is an overview of the remaining sections of this chapter:

- The section “Implementing Table Structures” deals with implementing the table structures in Oracle’s SQL DBMS.

- The section “Implementing Attribute Constraints” discusses how to implement attribute constraints.

- The section “Implementing Tuple Constraints” discusses how to implement tuple constraints.

- The section “Table Constraint Implementation Issues” acts as a preface for the sections that follow it. In this section, we’ll introduce you to various issues that come into play when developing triggers to implement DI code for multi-tuple constraints. This section will also elaborate on different execution models.

- The section “Implementing Table Constraints” discusses how you can state some table constraints declaratively, and how to design triggers for checking all other table constraints.

- The section “Implementing Database Constraints” discusses how you can state some database constraints declaratively, and how to design triggers for checking all other database constraints.

- The section “Implementing Transition Constraints” discusses an approach for implementing DI code for transition constraints.

- The section “Bringing Deferred Checking into the Picture” explains the need for deferring the execution of DI code for certain types of constraints.

- The section “The RuleGen Framework” introduces you to RuleGen, a framework that supports implementing DI code using the triggered procedural strategy, and that takes care of many of the issues discussed in this chapter.

Implementing Table Structures

Whenever you implement a database design in an SQL DBMS, you usually start by creating the table structures. Through the create table command, you implement the table structures of your database design within the SQL DBMS. In this chapter, we’ll implement the UEX database design one on one; that is, we’ll create one SQL table per table structure.

Note It is not the goal of this book to investigate denormalization (for improving performance) or other reasons that could give rise to deviation from the formally specified database design. Moreover, it’s generally our personal experience that such one-on-one implementation usually provides excellent performance given the optimizer technology available in Oracle’s current SQL DBMS.

Creating a table in an SQL DBMS involves the following:

- Choosing a name for the table structure (we’ve done this formally in the database characterization)

- Declaring names for the columns (we’ve done this formally in the characterizations)

- Declaring data types for the columns (also done in the characterizations)

As for the last bullet, unfortunately the support is rather immature for creating user-defined types—representing the attribute-value sets—that can be used in a create table statement. For instance, we cannot do the following:

-- Create a type to represent the value set for the EMPNO attribute.

-- Note this is not valid Oracle syntax.

create type empno_type under number(4,0) check(member > 999);

/

-- Create a type to represent the enumeration value set for the JOB attribute.

-- Invalid syntax.

create type job_type under varchar(9)

check(member in ('ADMIN','TRAINER','SALESREP','MANAGER','PRESIDENT'));

/

-- Now use the empno_type and job_type types in the create table statement.

create table EMP

(empno empno_type not null

,job job_type not null

,...);

In all practicality, we’re forced to use built-in data types provided by the SQL DBMS. In the case of Oracle, the most commonly used built-in data types are varchar, number, and date. These will suffice for our example database design.

In the next section (“Implementing Attribute Constraints”), you’ll see that with the use of the SQL alter table add constraint statement, you can still in effect implement the attribute-value sets. The column data types declared in the create table statements act as supersets of the attribute-value sets, and by adding constraints you can narrow down those supersets to exactly the attribute-value sets that were specified in the characterizations for the table structures.

Listings 11-4 through 11-13 show the create table statements for the UEX example database design.

Listing 11-4. Create Table for GRD Table Structure

create table grd

( grade number(2,0) not null

, llimit number(7,2) not null

, ulimit number(7,2) not null

, bonus number(7,2) not null);

Note The not null alongside every column indicates that no NULLs are allowed in that column. In such cases, we say that the column is mandatory.

Listing 11-5. Create Table for EMP Table Structure

create table emp

( empno number(4,0) not null

, ename varchar(8) not null

, job varchar(9) not null

, born date not null

, hired date not null

, sgrade number(2,0) not null

, msal number(7,2) not null

, username varchar(15) not null

, deptno number(2,0) not null);

Listing 11-6. Create Table for SREP Table Structure

create table srep

( empno number(4,0) not null

, target number(6,0) not null

, comm number(7,2) not null);

Listing 11-7. Create Table for MEMP Table Structure

create table memp

( empno number(4,0) not null

, mgr number(4,0) not null);

Listing 11-8. Create Table for TERM Table Structure

create table term

( empno number(4,0) not null

, left date not null

, comments varchar(60));

Note In our example, the user doesn’t always want to supply a value for COMMENTS when an employee has been terminated. Because Oracle treats an empty string as NULL, it doesn’t allow an empty string in a mandatory column. To prevent the users from entering some arbitrary varchar value in these cases (for instance, a space), this column has been made optional (sometimes called nullable), meaning that NULLs are allowed to appear in this column.

Listing 11-9. Create Table for HIST Table Structure

create table hist

( empno number(4,0) not null

, until date not null

, deptno number(2,0) not null

, msal number(7,2) not null);

Listing 11-10. Create Table for DEPT Table Structure

create table dept

( deptno number(2,0) not null

, dname varchar(12) not null

, loc varchar(14) not null

, mgr number(4,0) not null);

Listing 11-11. Create Table for CRS Table Structure

create table crs

( code varchar(6) not null

, descr varchar(40) not null

, cat varchar(3) not null

, dur number(2,0) not null);

Listing 11-12. Create Table for OFFR Table Structure

create table offr

( course varchar(6) not null

, starts date not null

, status varchar(4) not null

, maxcap number(2,0) not null

, trainer number(4,0)

, loc varchar(14) not null);

Note For reasons we’ll discuss in the section “Implementing Database Constraints,” the TRAINER attribute has been defined as nullable; we’ll use NULL instead of the special value -1 that was introduced in this attribute’s attribute-value set.

Listing 11-13. Create Table for REG Table Structure

create table reg

( stud number(4,0) not null

, course varchar(6) not null

, starts date not null

, eval number(1,0) not null);

In the relational model of data, all attributes in a database design are mandatory. Therefore, you can consider it a disappointment that in the SQL standard by default a column is nullable; SQL requires us to add not null explicitly alongside every column to make it mandatory.

The next section deals with the aforementioned “narrowing down” of the built-in data types that were used in the create table statements.

Implementing Attribute Constraints

We now revisit the term attribute constraints (mentioned in the section “Classification Schema for Constraints” in Chapter 7).

Formally, a characterization just attaches attribute-value sets to attributes. Attaching an attribute-value set to an attribute can be considered an attribute constraint. However, in practice you implement database designs in SQL DBMSes that are notorious for their poor support of user-defined types. User-defined types would have been ideal for implementing attribute-value sets. However, as discussed in the section “Implementing Table Structures,” you can’t use them to do so. Instead, you must use an appropriate superset (some built-in data type, as shown in the previous section) as the attribute-value set of a given attribute. Luckily you can use declarative SQL check constraints to narrow down these supersets to exactly the attribute-value set that was specified in the characterizations. During implementation, we refer to these declarative check constraints as the attribute constraints of an attribute.

All attribute constraints can—and, given our preference in strategies, should—be stated as declarative check constraints. You can declare these constraints using the alter table add constraint statement.

Listing 11-14 shows the declaration of six check constraints that are required to declaratively implement the attribute-value sets for the EMP table structure as defined in the definition of chr_EMP in Listing 7-2. We’ll discuss each of these after the listing.

Listing 11-14. Attribute Constraints for EMP Table Structure

alter table EMP add constraint emp_chk_empno check (empno > 999);

alter table EMP add constraint emp_chk_job

check (job in ('PRESIDENT','MANAGER','SALESREP'

,'TRAINER','ADMIN' ));

alter table EMP add constraint emp_chk_brn check (trunc(born) = born);

alter table EMP add constraint emp_chk_hrd check (trunc(hired) = hired);

alter table EMP add constraint emp_chk_msal check (msal > 0);

alter table EMP add constraint emp_chk_usrnm check (upper(username) = username);

As you can see from this listing, all check constraints are given a name. The name for the first one is emp_chk_empno. It narrows down the declared data type for the empno column, number(4,0), to just numbers consisting of four digits (greater than 999).

Once this constraint is declared and stored in the data dictionary of Oracle’s SQL DBMS, the DBMS will run the necessary DI code whenever a new EMPNO value appears in EMP (through an INSERT statement), or an existing EMPNO value in EMP is changed (through an UPDATE statement). The DBMS will use the constraint name in the error message that you receive, informing you whenever an attempt is made to store an EMPNO value in EMP that does not satisfy this constraint.
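As a rough illustration of how a named check constraint narrows a built-in data type and surfaces in error messages, the following Python sketch uses SQLite (through the standard sqlite3 module) in place of Oracle. SQLite does not support alter table add constraint, so the named constraints are declared inline; the exact wording of the error text is SQLite’s, not Oracle’s (Oracle reports ORA-02290 together with the constraint name). The helper run_dml is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Built-in types act as supersets; the named CHECKs narrow them to the
# attribute-value sets (inline, because SQLite has no ADD CONSTRAINT).
conn.execute(
    """
    CREATE TABLE emp (
      empno INTEGER NOT NULL CONSTRAINT emp_chk_empno CHECK (empno > 999),
      job   TEXT    NOT NULL CONSTRAINT emp_chk_job
            CHECK (job IN ('PRESIDENT','MANAGER','SALESREP','TRAINER','ADMIN'))
    )
    """
)


def run_dml(sql, params):
    """Execute one DML statement; return None if accepted, else the error text."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)


msg_ok = run_dml("INSERT INTO emp (empno, job) VALUES (?, ?)", (1000, "ADMIN"))
msg_empno = run_dml("INSERT INTO emp (empno, job) VALUES (?, ?)", (999, "ADMIN"))
msg_job = run_dml("INSERT INTO emp (empno, job) VALUES (?, ?)", (1001, "CLERK"))
# An UPDATE that changes an existing EMPNO value is checked as well.
msg_update = run_dml("UPDATE emp SET empno = ? WHERE empno = ?", (5, 1000))

for msg in (msg_ok, msg_empno, msg_job, msg_update):
    print(msg)
```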

Constraint emp_chk_job (the second one in the preceding listing) ensures that only the five listed values are allowed as a value for the JOB column.

Constraints emp_chk_brn and emp_chk_hrd ensure that a date value (which in Oracle’s SQL DBMS always holds a time component too) is allowed in the BORN or HIRED column only if its time component is truncated (that is, set to 0:00, midnight).

Constraint emp_chk_msal ensures that only positive numbers—within the number(7,2) superset—are allowed as values for the MSAL column.

Finally, constraint emp_chk_usrnm ensures that values for the USERNAME column are always in uppercase.

Listings 11-15 through 11-23 supply the attribute constraints for the other table structures of the UEX database design.

Listing 11-15. Attribute Constraints for GRD Table Structure

alter table GRD add constraint grd_chk_grad check (grade > 0);

alter table GRD add constraint grd_chk_llim check (llimit > 0);

alter table GRD add constraint grd_chk_ulim check (ulimit > 0);

alter table GRD add constraint grd_chk_bon1 check (bonus > 0);

Listing 11-16. Attribute Constraints for SREP Table Structure

alter table SREP add constraint srp_chk_empno check (empno > 999);

alter table SREP add constraint srp_chk_targ check (target > 9999);

alter table SREP add constraint srp_chk_comm check (comm > 0);

Listing 11-17. Attribute Constraints for MEMP Table Structure

alter table MEMP add constraint mmp_chk_empno check (empno > 999);

alter table MEMP add constraint mmp_chk_mgr check (mgr > 999);

Listing 11-18. Attribute Constraints for TERM Table Structure

alter table TERM add constraint trm_chk_empno check (empno > 999);

alter table TERM add constraint trm_chk_lft check (trunc(left) = left);

Listing 11-19. Attribute Constraints for HIST Table Structure

alter table HIST add constraint hst_chk_eno check (empno > 999);

alter table HIST add constraint hst_chk_unt check (trunc(until) = until);

alter table HIST add constraint hst_chk_dno check (deptno > 0);

alter table HIST add constraint hst_chk_msal check (msal > 0);

Listing 11-20. Attribute Constraints for DEPT Table Structure

alter table DEPT add constraint dep_chk_dno check (deptno > 0);

alter table DEPT add constraint dep_chk_dnm check (upper(dname) = dname);

alter table DEPT add constraint dep_chk_loc check (upper(loc) = loc);

alter table DEPT add constraint dep_chk_mgr check (mgr > 999);

Listing 11-21. Attribute Constraints for CRS Table Structure

alter table CRS add constraint reg_chk_code check (code = upper(code));

alter table CRS add constraint reg_chk_cat check (cat in ('GEN','BLD','DSG'));

alter table CRS add constraint reg_chk_dur1 check (dur between 1 and 15);

Listing 11-22. Attribute Constraints for OFFR Table Structure

alter table OFFR add constraint ofr_chk_crse check (course = upper(course));

alter table OFFR add constraint ofr_chk_strs check (trunc(starts) = starts);

alter table OFFR add constraint ofr_chk_stat

check (status in ('SCHD','CONF','CANC'));

alter table OFFR add constraint ofr_chk_trnr check (trainer > 999);

alter table OFFR add constraint ofr_chk_mxcp check (maxcap between 6 and 99);

Note You might be wondering how an SQL DBMS deals with constraint ofr_chk_trnr whenever it encounters NULLs in the TRAINER column. We’ll discuss this at the end of this section.

Listing 11-23. Attribute Constraints for REG Table Structure

alter table REG add constraint reg_chk_stud check (stud > 999);

alter table REG add constraint reg_chk_crse check (course = upper(course));

alter table REG add constraint reg_chk_strs check (trunc(starts) = starts);

alter table REG add constraint reg_chk_eval check (eval between -1 and 5);

If a declarative check constraint evaluates to UNKNOWN, which usually arises from the use of NULLs, the SQL standard treats the constraint as satisfied, exactly as if it had evaluated to TRUE. Beware: you’ll observe the opposite behavior in the PL/SQL programming language. There, an IF condition that evaluates to UNKNOWN (NULL) is handled as FALSE. To illustrate this, take a look at the following trigger definition; it is not equivalent to check constraint ofr_chk_trnr:

create trigger ofr_chk_trnr

after insert or update on OFFR

for each row

begin

if :new.trainer > 999

then

null; -- Value is acceptable.

else

raise_application_error(-20999,'Value for trainer must be greater than 999.');

end if;

end;

The declarative check constraint will allow a NULL in the TRAINER column, whereas the preceding trigger won’t: for a NULL, the condition :new.trainer > 999 evaluates to UNKNOWN, so PL/SQL executes the ELSE branch and raises the error. (Writing the test negatively, as in if not (:new.trainer > 999) then raise_application_error(...), would happen to let NULLs through, because NOT applied to UNKNOWN yields UNKNOWN again.) You can fix this discrepancy by changing the IF condition in the preceding trigger into the following:

if :new.trainer > 999 or :new.trainer is null

The trigger is now equivalent to the declarative check constraint.
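The NULL behavior described above can be reproduced, as a sketch, with SQLite through Python’s sqlite3 module. SQLite follows the standard here: a CHECK that yields UNKNOWN accepts the row. A SQL CASE expression, like a PL/SQL IF, treats an UNKNOWN condition as FALSE, so it serves here as a stand-in for the trigger’s IF statement; the helper branch_taken is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE offr (trainer INTEGER CONSTRAINT ofr_chk_trnr CHECK (trainer > 999))"
)

# 1. Declarative CHECK: UNKNOWN counts as satisfied, so the NULL row is accepted.
conn.execute("INSERT INTO offr (trainer) VALUES (NULL)")
null_rows = conn.execute("SELECT count(*) FROM offr WHERE trainer IS NULL").fetchone()[0]

# 2. The comparison and its negation both yield NULL (UNKNOWN).
cmp_value, negated_value = conn.execute(
    "SELECT ? > 999, NOT (? > 999)", (None, None)
).fetchone()


def branch_taken(condition_sql, trainer):
    """Which branch a CASE (standing in for IF-THEN-ELSE) takes for `trainer`."""
    sql = f"SELECT CASE WHEN {condition_sql} THEN 'then' ELSE 'else' END"
    return conn.execute(sql, {"t": trainer}).fetchone()[0]


# 3. Conditional branching treats UNKNOWN like FALSE.
original = branch_taken(":t > 999", None)             # 'else': the trigger would raise
fixed = branch_taken(":t > 999 OR :t IS NULL", None)  # 'then': NULL is accepted
print(null_rows, cmp_value, negated_value, original, fixed)
```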

We continue by investigating how you can implement tuple constraints (the next level after attribute constraints) in Oracle’s SQL DBMS.

Implementing Tuple Constraints

Before we deal with the implementation of tuple constraints, we need to confess something up front. The formal methodology that has been developed in this book is based on 2-valued logic (2VL). The science of 2VL is sound; we’ve explored propositions and predicates in Chapters 1 and 3 and developed some rewrite rules with it. However, in this chapter we’ll make various statements about predicates that are expressed in SQL. As demonstrated by the preceding trigger and attribute constraint ofr_chk_trnr in Listing 11-22, due to the possible presence of NULLs SQL doesn’t apply 2VL; instead it applies 3-valued logic (3VL). The most crucial assumption in 3VL is that, besides the two truth values TRUE and FALSE, a third value represents “possible” or UNKNOWN.

Note 3VL is counterintuitive, as opposed to the classical 2VL. We won’t provide an in-depth discussion of 3VL here; you can find a brief exploration of 3VL in Appendix D.
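For readers who want to experiment, the three-valued connectives can be modeled in a few lines of Python. This sketch assumes the common (Kleene) truth tables that SQL uses for AND, OR, and NOT, and encodes UNKNOWN as None; the function names are illustrative only. It exposes one of the counterintuitive effects: P OR NOT P is no longer always TRUE.

```python
from itertools import product
from typing import Optional

# Assumption: UNKNOWN is encoded as None; True/False are the usual values.
TruthValue = Optional[bool]
VALUES = (True, False, None)


def not3(p: TruthValue) -> TruthValue:
    """NOT: UNKNOWN stays UNKNOWN."""
    return None if p is None else not p


def and3(p: TruthValue, q: TruthValue) -> TruthValue:
    """AND: FALSE dominates, then UNKNOWN."""
    if p is False or q is False:
        return False
    if p is None or q is None:
        return None
    return True


def or3(p: TruthValue, q: TruthValue) -> TruthValue:
    """OR: TRUE dominates, then UNKNOWN."""
    if p is True or q is True:
        return True
    if p is None or q is None:
        return None
    return False


# P OR NOT P is a tautology in 2VL, but yields UNKNOWN when P is UNKNOWN.
excluded_middle = {p: or3(p, not3(p)) for p in VALUES}

for p, q in product(VALUES, repeat=2):
    print(f"{p!s:5} AND {q!s:5} = {and3(p, q)!s:5} | OR = {or3(p, q)}")
```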

We admit up front that we’re taking certain liberties in this chapter. By using NOT NULL on almost all columns in the SQL implementation of the example database design, we’re in effect avoiding 3VL issues. Without the use of NOT NULL, various statements we’re making about logical expressions in this chapter would be open to question.

As you saw in Chapter 1, conjunction, disjunction, and negation are truth-functionally complete. Therefore, you can rewrite every formally specified tuple constraint into an equivalent specification that uses just the three connectives available in SQL.

Once transformed in such a way, all tuple constraints can—and therefore should—be stated declaratively as check constraints. You can use the alter table add constraint statement to declare them to the DBMS. Let’s demonstrate this using the tuple constraints of the EMP table structure. For your convenience, we repeat the tuple universe definition tup_EMP here:

tup_EMP :=

{ e | e∈Π(chr_EMP) ⋀

/* We hire adult employees only */

e(BORN) + 18 ≤ e(HIRED) ⋀

/* Presidents earn more than 120K */

e(JOB) = 'PRESIDENT' ⇒ 12*e(MSAL) > 120000 ⋀

/* Administrators earn less than 5K */

e(JOB) = 'ADMIN' ⇒ e(MSAL) < 5000 }

The preceding three tuple constraints can be stated as follows (see Listing 11-24).

Note The preceding three constraints are formally expressed in 2VL, but the three constraints expressed in SQL in Listing 11-24 are in 3VL. In this case, the constraints expressed in 3VL are equivalent to the formally expressed constraints only because we have carefully declared all involved columns to be mandatory (NOT NULL).

Listing 11-24. Tuple Constraints for EMP Table Structure

alter table EMP add constraint emp_chk_adlt

check ((born + interval '18' year) <= hired);

alter table EMP add constraint emp_chk_dsal

check ((job <> 'PRESIDENT') or (msal > 10000));

alter table EMP add constraint emp_chk_asal

check ((job <> 'ADMIN') or (msal < 5000));

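The implementation of the first constraint, named emp_chk_adlt, uses date arithmetic (the + interval operator) to add 18 years to a given BORN value. Be aware that in Oracle, adding interval '18' year to a 29 February birth date always raises error ORA-01839 (date not valid for month specified), because the target year is never a leap year; the add_months function, which maps such dates to the last day of the month, is a common alternative.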

Because SQL only offers three logical connectives (and, or, not), you are forced to transform the second and third tuple constraints—both involving the implication connective—into a disjunction. In case you’ve forgotten the important rewrite rule that enables you to do so, here it is once more:

( P ⇒ Q ) ⇔ ( ( ¬P ) ⋁ Q )
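The rewrite rule can be checked mechanically by enumerating all 2VL valuations. The following Python sketch does that and then compares the formal specification of the president-salary constraint with the disjunctive form used in emp_chk_dsal, on a few made-up sample rows.

```python
from itertools import product

# Implication given by its defining truth table (2VL): only TRUE => FALSE is FALSE.
IMPLIES = {
    (True, True): True,
    (True, False): False,
    (False, True): True,
    (False, False): True,
}

# Check (P => Q) <=> (NOT P OR Q) for every 2VL valuation of P and Q.
rewrite_holds = all(
    IMPLIES[(p, q)] == ((not p) or q) for p, q in product((True, False), repeat=2)
)


def formal_dsal(job, msal):
    """Formal tuple predicate: JOB = 'PRESIDENT' => 12*MSAL > 120000."""
    return IMPLIES[(job == "PRESIDENT", 12 * msal > 120000)]


def sql_dsal(job, msal):
    """Disjunctive form used by emp_chk_dsal in Listing 11-24."""
    return (job != "PRESIDENT") or (msal > 10000)


samples = [("PRESIDENT", 15000), ("PRESIDENT", 10000), ("PRESIDENT", 9000),
           ("ADMIN", 3000), ("MANAGER", 10000)]
forms_agree = all(formal_dsal(j, m) == sql_dsal(j, m) for j, m in samples)
print(rewrite_holds, forms_agree)
```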

Once again, you should be aware that this transformation might not be safe in general, because when you’re using SQL you’re in the world of 3VL, not the 2VL world from which the rewrite rule is taken. If NULL is permitted in any of the columns involved, you’ll need to think about how these constraints work in SQL’s 3VL logic.
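To see this 3VL hazard in action, the following sketch (Python, with SQLite standing in for Oracle) declares emp_chk_dsal on a hypothetical EMP_NULLABLE table whose columns, unlike those in Listing 11-5, allow NULL. A president with a NULL salary slips through, because FALSE OR UNKNOWN yields UNKNOWN, which a check constraint treats as satisfied.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Deliberately nullable columns (unlike Listing 11-5) to expose 3VL behavior.
conn.execute(
    """
    CREATE TABLE emp_nullable (
      job  TEXT,
      msal REAL,
      CONSTRAINT emp_chk_dsal CHECK ((job <> 'PRESIDENT') OR (msal > 10000))
    )
    """
)


def accepted(job, msal):
    """Try to insert one row; True if the check constraint let it through."""
    try:
        with conn:
            conn.execute("INSERT INTO emp_nullable VALUES (?, ?)", (job, msal))
        return True
    except sqlite3.IntegrityError:
        return False


low_salary = accepted("PRESIDENT", 5000)     # FALSE OR FALSE: rejected
null_salary = accepted("PRESIDENT", None)    # FALSE OR UNKNOWN: accepted
null_job = accepted(None, 5000)              # UNKNOWN OR FALSE: accepted
print(low_salary, null_salary, null_job)
```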

Given that the tuple constraints are declared in the way shown in Listing 11-24, the DBMS will ensure that rows that violate any of them are rejected.

In Listings 11-25 through 11-28, you can find the implementation of the tuple constraints for table structures GRD, MEMP, CRS, and OFFR. The other remaining table structures in the example database design don’t have tuple constraints.

Listing 11-25. Tuple Constraints for GRD Table Structure

alter table GRD add constraint grd_chk_bndw check (llimit <= (ulimit - 500));

alter table GRD add constraint grd_chk_bon2 check (bonus < llimit);

Listing 11-26. Tuple Constraints for MEMP Table Structure

alter table MEMP add constraint mmp_chk_cycl check (empno <> mgr);

Listing 11-27. Tuple Constraints for CRS Table Structure

alter table CRS add constraint reg_chk_dur2 check ((cat <> 'BLD') or (dur <= 5));

Listing 11-28. Tuple Constraints for OFFR Table Structure

alter table OFFR add constraint ofr_chk_trst

check (trainer is not null or status in ('CANC','SCHD'));

The accompanying formal specification for the tuple constraint stated in Listing 11-28 was the following:

tup_OFFR :=

{ o | o∈Π(chr_OFFR) ⋀

/* Unassigned TRAINER allowed only for certain STATUS values */

o(TRAINER) = -1 ⇒ o(STATUS)∈{'CANC','SCHD'}

}

After a rewrite of the implication into a disjunction, this changes into the following:

tup_OFFR :=

{ o | o∈Π(chr_OFFR) ⋀

/* Unassigned TRAINER allowed only for certain STATUS values */

o(TRAINER) ≠ -1 ⋁ o(STATUS)∈{'CANC','SCHD'}

}

Because we have decided to represent the -1 with a NULL in the implementation of the OFFR table structure (again for reasons that will be explained later on), the first disjunct changes to trainer is not null in the preceding check constraint.

We’ll end this section on implementing tuple constraints with an observation that is also valid for the constraint classes that follow hereafter.

It is good practice to write all tuple constraints in conjunctive normal form (CNF; see the section “Normal Forms” in Chapter 3). This might require you to apply various rewrite rules first. By rewriting a constraint into CNF, you’ll end up with as many conjuncts as possible, where each conjunct represents a separately implementable constraint. For tuple constraints, you would create one declarative check constraint per conjunct. This in turn has the advantage that the DBMS reports violations of tuple constraints in as detailed a way as possible.

Let’s explain this.

Note We again assume that SQL’s 3VL behaves in a 2VL fashion because all columns that are involved in constraints are mandatory.

Suppose you create one check constraint for a tuple constraint that, when rewritten in CNF, is of the form A ⋀ B. When that check constraint is violated, all you know (in 2VL) is that A ⋀ B is not TRUE. By De Morgan’s laws, this means that A is not TRUE or B is not TRUE (or both). Wouldn’t it be nicer to know exactly which of the two was FALSE? Had you created two separate check constraints (one for A and one for B), the DBMS could report which of the two caused the violation (or whether both did). In other words, by rewriting a constraint specification into CNF and implementing each conjunct separately, you’ll get more detailed error messages.
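The benefit of one check constraint per conjunct is easy to observe. The sketch below (Python, with SQLite standing in for Oracle) creates the GRD table twice: once with a single check holding both conjuncts of Listing 11-25 under the hypothetical name grd_chk_tuple, and once with grd_chk_bndw and grd_chk_bon2 declared separately. The same offending row is then inserted into each.

```python
import sqlite3

COLUMNS = "llimit REAL NOT NULL, ulimit REAL NOT NULL, bonus REAL NOT NULL"

# One CHECK holding the whole conjunction (hypothetical name grd_chk_tuple).
COMBINED = f"""CREATE TABLE grd ({COLUMNS},
  CONSTRAINT grd_chk_tuple CHECK (llimit <= ulimit - 500 AND bonus < llimit))"""

# One CHECK per conjunct, named as in Listing 11-25.
SPLIT = f"""CREATE TABLE grd ({COLUMNS},
  CONSTRAINT grd_chk_bndw CHECK (llimit <= ulimit - 500),
  CONSTRAINT grd_chk_bon2 CHECK (bonus < llimit))"""


def violation_message(ddl, row):
    """Insert `row` into a fresh GRD table; return the error text or None."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(ddl)
        conn.execute("INSERT INTO grd VALUES (?, ?, ?)", row)
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)
    finally:
        conn.close()


bad_row = (1000, 1200, 100)  # salary band narrower than 500; bonus is fine
msg_combined = violation_message(COMBINED, bad_row)  # can only name grd_chk_tuple
msg_split = violation_message(SPLIT, bad_row)        # pinpoints grd_chk_bndw
print(msg_combined)
print(msg_split)
```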

As mentioned earlier, this observation also applies to the constraint classes that follow (table, database, and transition).

Table Constraint Implementation Issues

Up until now, everything has been straightforward concerning the implementation of data integrity constraints. However, when you increase the scope from tuple constraints to table constraints, and thus start dealing with constraints that span multiple tuples, implementing efficient DI code rapidly becomes much more complex.

The main reason for this complexity is the poor support for declaring these constraints to the DBMS. You can state only two types of table constraints declaratively: uniquely identifying attributes (keys), and subset requirements that reference the same table (that is, foreign keys to the same table; such a subset requirement is a table constraint rather than a database constraint).

Implementing all other types of table constraints requires you to develop procedural DI code. In practice, this means that you’ll often have to resort to the triggered procedural strategy.
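As a rough sketch of the triggered procedural strategy, the following Python code uses SQLite triggers to implement DI code for “you cannot manage more than two departments.” The trigger names are hypothetical, and the example is not a drop-in Oracle implementation: Oracle restricts row-level triggers from querying the table being modified (the mutating-table error, ORA-04091), and the sketch ignores the concurrency issues discussed later in this section. It does show that only an INSERT or an UPDATE of MGR needs DI code for this constraint; a DELETE can never violate it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE dept (deptno INTEGER PRIMARY KEY, mgr INTEGER NOT NULL);

    -- Hypothetical DI triggers for 'you cannot manage more than two departments'.
    CREATE TRIGGER dept_mgr_max2_ins AFTER INSERT ON dept
    BEGIN
      SELECT RAISE(ABORT, 'You cannot manage more than two departments.')
      WHERE (SELECT count(*) FROM dept WHERE mgr = NEW.mgr) > 2;
    END;

    CREATE TRIGGER dept_mgr_max2_upd AFTER UPDATE OF mgr ON dept
    BEGIN
      SELECT RAISE(ABORT, 'You cannot manage more than two departments.')
      WHERE (SELECT count(*) FROM dept WHERE mgr = NEW.mgr) > 2;
    END;
    """
)


def accepted(sql):
    """Run one DML statement; True if the DBMS accepted it."""
    try:
        with conn:
            conn.execute(sql)
        return True
    except sqlite3.DatabaseError:  # RAISE(ABORT, ...) undoes the statement
        return False


results = [
    accepted("INSERT INTO dept VALUES (10, 1000)"),
    accepted("INSERT INTO dept VALUES (20, 1000)"),
    accepted("INSERT INTO dept VALUES (30, 1000)"),  # third department for 1000
    accepted("INSERT INTO dept VALUES (40, 1001)"),
    accepted("UPDATE dept SET mgr = 1000 WHERE deptno = 40"),  # same violation
    accepted("DELETE FROM dept WHERE deptno = 20"),  # deletes cannot violate it
]
print(results)
```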

Note We think there’s a reason why DBMS vendors offer us such poor declarative support. We’ll reveal this reason in the course of this section.

We’ll introduce you to the complexity involved in implementing table constraints by illustrating different DI code execution models. In the first (rather large) subsection that follows, we’ll illustrate six different execution models, ranging from very inefficient to more efficient. As you’ll see, implementing more efficient execution models for DI code is also more complex.

To explain every execution model clearly, we’ll be using two example table constraints and show how these constraints are implemented in every execution model. The constraints we’ll use are the last one specified in table universe tab_EMP in Listing 7-26 and the last one specified in table universe tab_DEPT in Listing 7-30. For your convenience, we repeat the formal specifications of these two constraints here (note that in these specifications E represents an employee table and D represents a department table).

/* A department that employs the president or a manager */

/* should also employ at least one administrator */

(∀d∈E⇓{DEPTNO}:

( ∃e2∈E: e2(DEPTNO) = d(DEPTNO) ⋀ e2(JOB) ∈ {'PRESIDENT','MANAGER'} )

⇒

( ∃e3∈E: e3(DEPTNO) = d(DEPTNO) ⋀ e3(JOB) = 'ADMIN' )

)

/* You cannot manage more than two departments */

( ∀m∈D⇓{MGR}: #{ d | d∈D ⋀ d(MGR) = m(MGR) } ≤ 2 )

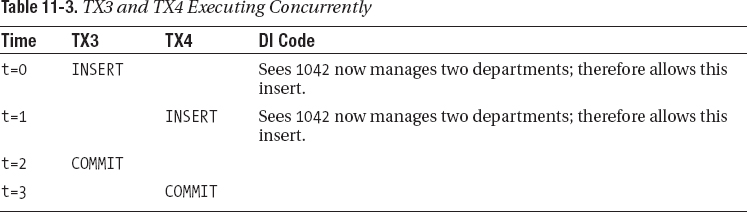
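One way to read the two formal specifications is as queries that must return no rows. The following sketch, in Python with SQLite standing in for Oracle and with made-up sample data, expresses each constraint as a violation query: the first mirrors the universal quantification over E⇓{DEPTNO} and its two existential quantifiers, and the second counts departments per manager.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE emp  (empno INTEGER PRIMARY KEY, job TEXT NOT NULL,
                       deptno INTEGER NOT NULL);
    CREATE TABLE dept (deptno INTEGER PRIMARY KEY, mgr INTEGER NOT NULL);
    INSERT INTO emp VALUES (1000, 'MANAGER', 10), (1001, 'ADMIN', 10),
                           (1002, 'PRESIDENT', 20), (1003, 'TRAINER', 30);
    INSERT INTO dept VALUES (10, 1000), (20, 1000), (30, 1000), (40, 1002);
    """
)

# Departments (from E projected on DEPTNO) that employ the president or a
# manager, but no administrator.
ADMIN_RULE_VIOLATIONS = """
    SELECT DISTINCT d.deptno
    FROM emp d
    WHERE EXISTS (SELECT 1 FROM emp e2
                  WHERE e2.deptno = d.deptno
                    AND e2.job IN ('PRESIDENT', 'MANAGER'))
      AND NOT EXISTS (SELECT 1 FROM emp e3
                      WHERE e3.deptno = d.deptno AND e3.job = 'ADMIN')
    ORDER BY d.deptno
"""

# Managers (from D projected on MGR) who manage more than two departments.
MGR_RULE_VIOLATIONS = """
    SELECT mgr FROM dept GROUP BY mgr HAVING count(*) > 2 ORDER BY mgr
"""

violations_1 = [row[0] for row in conn.execute(ADMIN_RULE_VIOLATIONS)]
violations_2 = [row[0] for row in conn.execute(MGR_RULE_VIOLATIONS)]
print(violations_1)  # [20]
print(violations_2)  # [1000]
```

Both constraints hold exactly when both queries return no rows; the execution models discussed next are about avoiding having to run such full-table queries after every DML statement.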

Besides implementing an efficient execution model, you face another, rather serious, issue when implementing DI code for table constraints: transaction serialization. Oracle’s SQL DBMS executes transactions concurrently and does not guarantee serializability. You must therefore ensure that the queries inside the DI code for a given constraint are executed in a serializable way. We’ll explain this issue in detail in the section “DI Code Serialization.”

DI Code Execution Models

This section discusses various execution models for implementing DI code for table constraints following the triggered procedural strategy. Before doing so, however, we’ll provide a few preliminary observations with regard to the timing of DI code execution in relation to DML statement execution.

Some Observations

With an SQL DBMS, you update the database by executing INSERT, UPDATE, or DELETE statements. Each of these statements operates on just one target table structure in just one manner—it’s either an INSERT, or an UPDATE, or a DELETE. Typically, transactions need to change more than one table, or possibly just one table in more than one manner. Therefore, your transactions in general consist of multiple DML statements that are serially executed one after the other.

You implicitly start a transaction when the first DML statement is executed. You explicitly end a transaction by executing either a COMMIT statement or a ROLLBACK statement. COMMIT requests the DBMS to persistently store the changes made by this transaction. ROLLBACK requests the DBMS to abort and undo the changes made by the current transaction. After ending a transaction, you can start a new one by executing another (first) DML statement. Changes made by a transaction that has not yet committed are visible only to that transaction; other transactions cannot see them. Once a transaction commits, the changes it made become visible to other transactions.

Note Here we’re assuming that the DBMS is running in the read-committed isolation mode—the mode most often used within Oracle’s installed base.

Of course, all constraints must be satisfied at the end of a transaction (when it commits). That is to say, you don’t want a transaction to commit successfully while the database state, produced by the serial execution of its DML statements so far, violates one of the constraints. But what about the database states that exist in between the execution of two DML statements inside the same transaction? Should these database states always satisfy all constraints too, or might they be in violation of some constraints, as long as the last database state (the one that the transaction commits) satisfies all constraints?

For the time being, we require these intermediate database states to satisfy all constraints as well.

Note However, we’ll revisit this question in the section “Bringing Deferred Checking into the Picture,” where you’ll see that to implement certain transactions using Oracle’s SQL DBMS, we must allow certain constraints to be temporarily violated in one of the intermediate database states.

A DML statement that attempts to create a database state that violates a constraint will fail; in the following execution models we’ll ensure that the changes of such a DML statement will be rolled back immediately, while preserving the database state changes made by prior DML statements that executed successfully inside the transaction.

In Table 11-1 you can find an example transaction that executes four DML statements; the table shows the database state transitions that occur within this transaction.

Our execution model will be based on triggers. As mentioned before, triggers are associated with a table structure and will automatically be executed (“fired”) by the DBMS if a DML statement changes the content of that table. The code inside the trigger body can then check whether the new database state satisfies all constraints. If the state does not satisfy all constraints, then this code will force the triggering DML statement to fail; the DBMS then ensures that its changes are rolled back.
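Oracle performs this statement-level rollback itself when a trigger raises an error. To show the mechanism outside Oracle, here is a minimal Python sketch using the standard `sqlite3` module:

- Each DML statement runs inside a savepoint.
- A validation query runs afterward, standing in for an after statement trigger.
- If the check fails, only that statement is undone.

SQLite has no statement-level triggers, so the check is called explicitly. The names `execute_checked` and `ConstraintViolation`, the table `T`, and its "at most two rows" rule are all hypothetical.

```python
import sqlite3


class ConstraintViolation(Exception):
    """Raised when a validation query reports a violated constraint."""


def execute_checked(conn, dml, params, checks):
    """Execute one DML statement, then validate; on failure undo only it.

    checks maps a constraint name to a query that returns a row exactly
    when the constraint is satisfied (the DUAL pattern used in this chapter).
    """
    conn.execute("SAVEPOINT stmt")                 # mark the statement start
    try:
        conn.execute(dml, params)
        for name, query in checks.items():         # stand-in for after statement triggers
            if conn.execute(query).fetchone() is None:
                raise ConstraintViolation(f"Constraint {name} is violated.")
    except Exception:
        conn.execute("ROLLBACK TO stmt")           # undo this statement only
        raise
    finally:
        conn.execute("RELEASE stmt")               # drop the marker; prior work stays


conn = sqlite3.connect(":memory:", isolation_level=None)  # we issue BEGIN/COMMIT ourselves
conn.execute("create table DUAL (DUMMY varchar(1))")
conn.execute("insert into DUAL values ('X')")
conn.execute("create table T (X integer)")
# Hypothetical constraint for illustration only: T holds at most two rows.
checks = {"T_MAX2": "select 'ok' from DUAL where (select count(*) from T) <= 2"}

conn.execute("BEGIN")
execute_checked(conn, "insert into T values (?)", (1,), checks)
execute_checked(conn, "insert into T values (?)", (2,), checks)
try:
    execute_checked(conn, "insert into T values (?)", (3,), checks)
except ConstraintViolation as exc:
    print(exc)                                     # Constraint T_MAX2 is violated.
print(conn.execute("select count(*) from T").fetchone()[0])  # 2
conn.execute("COMMIT")
```

The third insert fails and is undone, while the two earlier inserts in the same transaction survive.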

You should be aware of a limitation that row triggers have (the ones that fire for each affected row). These triggers are only allowed to query the state of other table structures; that is, they are not allowed to query the table structure on which the triggering DML statement is currently operating. If you try this, you’ll hit the infamous mutating table error (ORA-04091: table ... is mutating, trigger/function may not see it).

Oracle’s SQL DBMS disallows this for a very valid reason: to prevent nondeterministic behavior. Suppose your row triggers were allowed to query a table structure that a DML statement is currently modifying. These queries would then perform a dirty read within the transaction: they would see intermediate table states that exist only while the triggering DML statement is being executed row by row. Depending on the order in which the SQL optimizer happens to process the rows, the outcome of these queries could differ. That is why Oracle’s DBMS won’t allow you to query the “mutating” table.
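The following minimal Python simulation (not Oracle code) shows this order dependence for constraint EMP_TAB03. It assumes a hypothetical department that employs a MANAGER (employee 1) and a TRAINER (employee 2). A single UPDATE turns employee 1 into an ADMIN and employee 2 into a MANAGER. Checking the constraint after every row change, as a row trigger would, gives a verdict that depends on the processing order. The end state of the statement satisfies the constraint either way.

```python
from itertools import permutations

# Hypothetical department before the statement: one MANAGER, one TRAINER.
before = {1: "MANAGER", 2: "TRAINER"}
# The single UPDATE changes both rows: 1 becomes ADMIN, 2 becomes MANAGER.
new_job = {1: "ADMIN", 2: "MANAGER"}


def satisfied(jobs):
    """EMP_TAB03 restricted to this one department."""
    has_boss = any(j in ("PRESIDENT", "MANAGER") for j in jobs.values())
    return not has_boss or "ADMIN" in jobs.values()


def row_level_outcome(order):
    """Check after each individual row change, as a row trigger would."""
    state = dict(before)
    for empno in order:
        state[empno] = new_job[empno]
        if not satisfied(state):
            return "fails"
    return "succeeds"


for order in permutations(new_job):
    print(order, row_level_outcome(order))
# (1, 2) succeeds   intermediate state: ADMIN + TRAINER
# (2, 1) fails      intermediate state: MANAGER + MANAGER, no ADMIN
print("end state satisfied:", satisfied(new_job))  # True
```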

Given the essence of a table constraint—that is, it involves multiple rows in a table—the DI code for a table constraint will always require you to execute queries against the table that has been modified; however, the mutating table error prevents you from doing so. Therefore, row triggers are not suitable to be used as containers of DI code for table constraints.

Before statement triggers see the start database state in which a DML statement starts execution. After statement triggers see the end database state created by the execution of a DML statement. Because DI code needs to validate the end state of a DML statement, you are left with no more than three after statement triggers per table structure (insert, update, and delete) on which to base an execution model.

Given these observations, we can now go ahead and illustrate six different execution models for DI code. In discussing the execution models, we’ll sometimes broaden the scope to also include database constraints.

Execution Model 1: Always

In the first execution model (EM1), whenever a DML statement is executed, the corresponding after statement trigger sequentially executes the DI code for every constraint. In this model, every intermediate database state (including the last one) is validated to satisfy all constraints.

This execution model serves only as a starting point; you would never want to use it, because it’s highly inefficient. For instance, if a DML statement changes the EMP table structure, this execution model would also run the DI code for constraints that do not involve the EMP table structure. That is completely unnecessary because the table structures those constraints involve remain unchanged. Constraints that don’t involve the table structure upon which the triggering DML statement operates need not be validated.

Let’s quickly forget this model, and move on to a more efficient one.

Execution Model 2: On-Involved-Table

This execution model (EM2) closely resembles EM1. The only difference is that you now use your knowledge of which table structures each constraint involves. You run the DI code for a given constraint only if the table structure being changed by the DML statement is involved in that constraint (hence “On-Involved-Table” in the section title).
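The difference between EM1 and EM2 comes down to which constraints get checked after a DML statement on a given table. The following minimal Python sketch shows this dispatch decision. The registry of involved tables is an assumption: EMP_TAB03 and DEPT_TAB01 come from the text, while EMP_DEPT_DB01 is a hypothetical database constraint spanning both tables.

```python
# Hypothetical registry: constraint name -> involved table structures.
INVOLVED = {
    "EMP_TAB03": {"EMP"},
    "DEPT_TAB01": {"DEPT"},
    "EMP_DEPT_DB01": {"EMP", "DEPT"},   # hypothetical database constraint
}


def em1_checks(changed_table):
    """EM1 (Always): validate every constraint after every DML statement."""
    # changed_table is deliberately ignored; that is EM1's inefficiency.
    return sorted(INVOLVED)


def em2_checks(changed_table):
    """EM2 (On-Involved-Table): validate only constraints involving the table."""
    return sorted(name for name, tables in INVOLVED.items()
                  if changed_table in tables)


print(em1_checks("EMP"))  # ['DEPT_TAB01', 'EMP_DEPT_DB01', 'EMP_TAB03']
print(em2_checks("EMP"))  # ['EMP_DEPT_DB01', 'EMP_TAB03']
```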

Let’s take a closer look at how the example table constraint of the EMP table structure is implemented in this execution model. Remember, this was the constraint: “A department that employs the president or a manager should also employ at least one administrator.” You can formally derive the constraint validation query that you need to execute for verifying whether a new database state still satisfies this constraint. The way to do this is by translating the formal specification into an SQL WHERE-clause expression and then executing a query that evaluates the truth value of this expression. You can use the DUAL table to evaluate the expression. Let’s demonstrate this. Here is the formal specification of this table constraint:

( ∀d∈E⇓{DEPTNO}:
    ( ∃e2∈E: e2(DEPTNO) = d(DEPTNO) ⋀ e2(JOB) ∈ {'PRESIDENT','MANAGER'} )
    ⇒
    ( ∃e3∈E: e3(DEPTNO) = d(DEPTNO) ⋀ e3(JOB) = 'ADMIN' )
)

Before you can translate the formal specification into an SQL expression, you’ll need to get rid of the universal quantifier and implication. Following is the rewritten version of the specification.

Tip Try to rewrite this specification yourself; start by adding a double negation in front of the preceding specification.

¬ ( ∃d∈E⇓{DEPTNO}:
      ( ∃e2∈E: e2(DEPTNO) = d(DEPTNO) ⋀ e2(JOB) ∈ {'PRESIDENT','MANAGER'} )
      ⋀
      ¬ ( ∃e3∈E: e3(DEPTNO) = d(DEPTNO) ⋀ e3(JOB) = 'ADMIN' )
  )
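For readers who want to check the rewrite, here it is in three steps. P(d) abbreviates the first existential subformula (department d employs the president or a manager), and Q(d) abbreviates the second (department d employs an administrator). These abbreviations are introduced only to keep the steps short. Each step applies one standard equivalence, named on the right.

```latex
\begin{aligned}
P(d) &:= \exists e2 \in E:\ e2(DEPTNO) = d(DEPTNO) \wedge e2(JOB) \in \{\text{'PRESIDENT'},\text{'MANAGER'}\} \\
Q(d) &:= \exists e3 \in E:\ e3(DEPTNO) = d(DEPTNO) \wedge e3(JOB) = \text{'ADMIN'} \\[4pt]
& \forall d \in E{\Downarrow}\{DEPTNO\}:\ P(d) \Rightarrow Q(d) \\
\Leftrightarrow\ & \neg\neg\,(\forall d \in E{\Downarrow}\{DEPTNO\}:\ P(d) \Rightarrow Q(d)) && \text{(double negation)} \\
\Leftrightarrow\ & \neg\,(\exists d \in E{\Downarrow}\{DEPTNO\}:\ \neg(P(d) \Rightarrow Q(d))) && (\neg\forall x{:}\,A \;\Leftrightarrow\; \exists x{:}\,\neg A) \\
\Leftrightarrow\ & \neg\,(\exists d \in E{\Downarrow}\{DEPTNO\}:\ P(d) \wedge \neg Q(d)) && (\neg(A \Rightarrow B) \;\Leftrightarrow\; A \wedge \neg B)
\end{aligned}
```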

This now easily translates to SQL (we’re naming this constraint EMP_TAB03).

Note The DUAL table in Oracle is a single-column, single-row system table. It is most often used to have the SQL engine evaluate either a SELECT-clause expression or a WHERE-clause expression. The following code displays the latter usage.

select 'Constraint EMP_TAB03 is satisfied'
from DUAL
where not exists(select d.DEPTNO
                 from EMP d
                 where exists(select e2.*
                              from EMP e2
                              where e2.DEPTNO = d.DEPTNO
                                and e2.JOB in ('PRESIDENT','MANAGER'))
                   and not exists(select e3.*
                                  from EMP e3
                                  where e3.DEPTNO = d.DEPTNO
                                    and e3.JOB = 'ADMIN'))
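You can try this validation query outside Oracle. The following minimal Python sketch uses the standard `sqlite3` module and creates a one-row DUAL table to mimic Oracle's. It then runs the query text unchanged against hypothetical EMP states. Only the columns the constraint needs are created, and the sample rows are invented. A returned row means the constraint is satisfied; no row means it is violated.

```python
import sqlite3

EMP_TAB03 = """
select 'Constraint EMP_TAB03 is satisfied'
from DUAL
where not exists(select d.DEPTNO
                 from EMP d
                 where exists(select e2.*
                              from EMP e2
                              where e2.DEPTNO = d.DEPTNO
                                and e2.JOB in ('PRESIDENT','MANAGER'))
                   and not exists(select e3.*
                                  from EMP e3
                                  where e3.DEPTNO = d.DEPTNO
                                    and e3.JOB = 'ADMIN'))
"""


def emp_tab03_satisfied(rows):
    """Load hypothetical (EMPNO, DEPTNO, JOB) rows and run the validation query."""
    conn = sqlite3.connect(":memory:")
    conn.execute("create table DUAL (DUMMY varchar(1))")
    conn.execute("insert into DUAL values ('X')")        # one row, like Oracle's DUAL
    conn.execute("create table EMP (EMPNO integer, DEPTNO integer, JOB varchar(9))")
    conn.executemany("insert into EMP values (?, ?, ?)", rows)
    found = conn.execute(EMP_TAB03).fetchone() is not None
    conn.close()
    return found


print(emp_tab03_satisfied([(1, 10, "MANAGER"), (2, 10, "ADMIN")]))    # True
print(emp_tab03_satisfied([(1, 10, "MANAGER"), (2, 10, "TRAINER")]))  # False
```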

In EM2, you would create three after statement triggers for this constraint on only the EMP table structure (the one involved in this constraint). These triggers hold the preceding query to verify that the new database state still satisfies this constraint. Listing 11-29 shows these three triggers combined into one create trigger statement.

Listing 11-29. EM2 DI Code for Constraint EMP_TAB03

create trigger EMP_AIUDS_TAB03
after insert or update or delete on EMP
declare pl_dummy varchar(40);
begin
  -- Returns exactly one row when the constraint is satisfied.
  select 'Constraint EMP_TAB03 is satisfied' into pl_dummy
  from DUAL
  where not exists(select d.DEPTNO
                   from EMP d
                   where exists(select e2.*
                                from EMP e2
                                where e2.DEPTNO = d.DEPTNO
                                  and e2.JOB in ('PRESIDENT','MANAGER'))
                     and not exists(select e3.*
                                    from EMP e3
                                    where e3.DEPTNO = d.DEPTNO
                                      and e3.JOB = 'ADMIN'));
exception when no_data_found then
  -- No row: the constraint is violated. Raising an error makes the
  -- triggering DML statement fail, and its changes are rolled back.
  raise_application_error(-20999,'Constraint EMP_TAB03 is violated.');
end;
/

Note A DBMS could, by parsing the declared formal specification of a constraint, compute what the involved tables are. Also, the DBMS could compute the validation query that needs to be run to validate whether a constraint is still satisfied (all this requires is the application of rewrite rules to end up with a specification that can be translated into an SQL expression). Therefore, the DBMS could generate the preceding trigger. In other words, this execution model could be fully supported by a DBMS vendor in a declarative way!
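To make the note concrete, here is a hypothetical Python generator that emits the text of an EM2 trigger in the shape of Listing 11-29. It assumes the WHERE-clause expression has already been derived from the formal specification, which is the rewrite-rule step the note describes. The function name `em2_trigger_ddl` and the way the trigger name is built from the constraint name are our own conventions. The output is plain text only and is never executed.

```python
def em2_trigger_ddl(constraint, table, where_expr):
    """Return PL/SQL text for one EM2 after statement trigger (hypothetical generator).

    where_expr is the WHERE-clause expression derived from the formal
    specification; it is assumed to be correct and is not parsed here.
    """
    suffix = constraint.split("_", 1)[1]                    # EMP_TAB03 -> TAB03
    satisfied = f"Constraint {constraint} is satisfied"
    width = max(40, len(satisfied))                         # room for the dummy string
    return "\n".join([
        f"create trigger {table}_AIUDS_{suffix}",
        f"after insert or update or delete on {table}",
        f"declare pl_dummy varchar({width});",
        "begin",
        f"  select '{satisfied}' into pl_dummy",
        "  from DUAL",
        f"  where {where_expr};",
        "exception when no_data_found then",
        f"  raise_application_error(-20999,'Constraint {constraint} is violated.');",
        "end;",
        "/",
    ])


print(em2_trigger_ddl(
    "DEPT_TAB01", "DEPT",
    "not exists(select m.DEPTNO from DEPT m"
    " where 2 < (select count(*) from DEPT d where d.MGR = m.MGR))"))
```

For a database constraint, such a generator would be called once for each involved table.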

Listing 11-30 shows the three triggers, again combined into one create trigger statement, that represent the DI code for constraint DEPT_TAB01 (“You cannot manage more than two departments”) in this execution model.

Listing 11-30. EM2 DI Code for Constraint DEPT_TAB01

create trigger DEPT_AIUDS_TAB01
after insert or update or delete on DEPT
declare pl_dummy varchar(40);
begin
  -- Returns exactly one row when no manager manages more than two departments.
  select 'Constraint DEPT_TAB01 is satisfied' into pl_dummy
  from DUAL
  where not exists(select m.DEPTNO
                   from DEPT m
                   where 2 < (select count(*)
                              from DEPT d
                              where d.MGR = m.MGR));
exception when no_data_found then
  -- No row: the constraint is violated, so make the triggering statement fail.
  raise_application_error(-20999,'Constraint DEPT_TAB01 is violated.');
end;
/
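The DEPT_TAB01 validation query can be exercised the same way. This minimal Python sketch uses the standard `sqlite3` module with a one-row DUAL table and a DEPT table reduced to the DEPTNO and MGR columns. The manager number and department numbers are hypothetical. The sample states probe the boundary of the rule: two departments per manager is allowed, three is not.

```python
import sqlite3

DEPT_TAB01 = """
select 'Constraint DEPT_TAB01 is satisfied'
from DUAL
where not exists(select m.DEPTNO
                 from DEPT m
                 where 2 < (select count(*)
                            from DEPT d
                            where d.MGR = m.MGR))
"""


def dept_tab01_satisfied(rows):
    """Run the DEPT_TAB01 validation query against hypothetical (DEPTNO, MGR) rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("create table DUAL (DUMMY varchar(1))")
    conn.execute("insert into DUAL values ('X')")
    conn.execute("create table DEPT (DEPTNO integer, MGR integer)")
    conn.executemany("insert into DEPT values (?, ?)", rows)
    ok = conn.execute(DEPT_TAB01).fetchone() is not None
    conn.close()
    return ok


print(dept_tab01_satisfied([(10, 7839), (20, 7839)]))              # True: two is allowed
print(dept_tab01_satisfied([(10, 7839), (20, 7839), (30, 7839)]))  # False: three is not
```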