5

Non-Functional Design for Operability

In the previous chapters, we touched upon two crucial elements of architecture design: security and connectivity. However, there are several more aspects you need to consider in your designs. In the first part of this chapter, we will discuss these design elements and how you can address them when designing with SAP Business Technology Platform (SAP BTP).

In practice, your design is not merely for presentation purposes. Eventually, it needs to be realized as an implemented solution. As you know, the story doesn’t end there: the solution needs to be maintained until you no longer need it. As an architect, although your mindset is geared toward design, it always helps to know how SAP BTP operates.

You can design more robust architectures by also considering the second half of the story. So, the latter part of this chapter will cover topics around support elements.

Therefore, in this chapter, we are going to cover the following main topics:

- Non-functional design elements

- Understanding service-level agreements (SLAs)

- Getting support

Technical requirements

This chapter does not have specific technical requirements. However, if you have already gone through the previous chapters, you should have a trial account that you can use to check some of the elements we will be discussing in this chapter.

Non-functional design elements

Delivering a technology project is never about merely introducing technology. There must be a business case behind your design. The drivers of the business case may require an intensive design effort, especially when executing a strategic transformation. Sometimes, however, the design objective is geared more toward ensuring stability. In such cases, as an architect, your role may be limited to ensuring that no adverse deviations from the existing design occur, for example, during system upgrades aimed at keeping the lights on.

For business-driven demands, there are business requirements, while for technology-driven initiatives, there are technical requirements that eventually serve business purposes. For both types, you also need to design for requirements that are not necessarily part of the core motivation but are essential if you want to implement the solution successfully and ensure it operates properly. Fulfilling these requirements also contributes to the quality of the solution. They are categorized as non-functional requirements (NFRs), and as an architect, you produce non-functional design elements to address the related constraints. The naming is somewhat controversial; however, these terms are widely used.

Depending on the nature of the problem you are solving, some of these design elements may become more important than others, and some may even be irrelevant to your design. Some NFRs can be quantified because they are based on measurable qualities. For others, you need to specify the required quality as a feature; for example, you must specify which accessibility standards your solution design supports.

Now, let’s discuss different types of NFRs.

Important Note

There are so many aspects that can be considered NFRs; some of the terms are used interchangeably, while some have overlapping scopes. Yet, each has its importance. We will discuss the NFRs that we believe need to be focused on more while briefly touching on others. So, let’s get started.

Security

Security is so vital that we dedicated almost a whole chapter to it. Having read the previous chapter, you should already be familiar with it and be able to design for NFRs such as the following:

- Security: In general, this involves encrypting the data that is in transit and the data that is at rest, which SAP BTP ensures at the infrastructure level.

- Auditability: For example, using the SAP BTP Audit Recording service, which records important security events and changes.

- Access and authorizations: For example, allowing only certain users to perform specific actions via SAP BTP’s role-based access control entities.

- Regulatory: For example, measures taken to comply with data protection and privacy legislation or with more specific requirements, such as company policies and standards.

We have extensively discussed concrete examples of these in the previous chapter, so we’ll leave it here for security. If you need to refresh your memory on these, just scroll back.

Business continuity

This section will discuss several qualities that serve the eventual goal of ensuring steadiness for business operations. You may have already classified your solutions based on how crucial they are for your business; that is, their business criticality. Based on this classification, you need to think about the measures you want to take to keep your solutions running stably.

Availability and resilience

System components can always fail. Therefore, you should take precautions so that you’re ready to remediate such incidents effectively and promptly. For cloud providers, failures can stem from the data center’s infrastructure elements, such as the power supply or cooling system, as well as from computer hardware problems, such as a hard disk or network device failure.

The first dimension of ensuring business continuity is maintaining the right level of availability for your systems and applications. Availability is typically ensured by introducing redundancy.

Cloud infrastructure providers arrange availability by introducing different isolation levels between fault domains and virtual separation for update domains. This gives application owners the choice of deploying their applications in multiple separate infrastructure arrangements, as well as the option of automatic failover in case of failures.

General resilience concepts

In this book, we assume you have basic knowledge of these arrangements, such as regions, availability zones, and availability sets. Now, let’s refresh our memory with a brief overview:

Figure 5.1: Availability level arrangements of a cloud infrastructure provider

Here, you can see different levels of arrangement that are typical to cloud infrastructure providers. By leveraging these, you can introduce availability at the following levels:

- Local: By deploying your application in multiple availability sets within the same zone, you can introduce a basic level of high availability, which protects your application against failures within a single location (for example, a hardware malfunction). With this level of redundancy, software updates for your application require less downtime, or even near-zero downtime, because while you are updating one instance, another instance continues to serve the users.

- Zonal: By deploying your application in multiple availability zones, you extend the protection to cover the failures that affect an entire data center (for example, power outages, network infrastructure problems, or cooling system breakdowns).

- Regional: If your application is mission-critical and you cannot risk a prolonged downtime in the rare larger-scale cases of failures (such as disasters), then you can deploy your application in multiple regions.

The availability of a system is specified as a percentage of uptime during a unit of time; for example, 99.9% availability means approximately 44 minutes of downtime per month.
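To make the percentage-to-downtime conversion concrete, here is a minimal Python sketch. It assumes an average month of 365.25 / 12 days; with a 30-day month, 99.9% gives about 43.2 minutes instead, so the exact figure depends on the period you measure against.

```python
# Average month length in minutes, assuming 365.25 days per year.
MINUTES_PER_AVERAGE_MONTH = 365.25 * 24 * 60 / 12


def allowed_downtime_minutes(availability_pct: float,
                             period_minutes: float = MINUTES_PER_AVERAGE_MONTH) -> float:
    """Return the downtime budget implied by an availability percentage."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be between 0 and 100")
    return period_minutes * (1 - availability_pct / 100)


for pct in (99.0, 99.5, 99.9, 99.95, 99.99):
    print(f"{pct}% availability -> {allowed_downtime_minutes(pct):.1f} min of downtime per month")
```

Each additional "nine" cuts the downtime budget by a factor of ten, which is one reason the cost of a setup rises steeply with the availability target.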

By introducing higher availability, you increase the reliability of your application. In addition, with regional redundancy, you enable maximum protection and have the option of disaster recovery.

Furthermore, for the highest level of availability, you need to ensure failures are detected early and fixed quickly. This requires proper monitoring, automated mechanisms, and a robust process for handling disruptions. The cost of your setup increases as you step up the protection and efficiency levels. Therefore, it’s important to determine the level of availability for an application properly.

For solutions that include a persistence layer, redundancy needs to be accompanied by a replication mechanism that synchronizes data between the storage instances (for example, databases). This mechanism determines how quickly your application can recover, known as the recovery time objective (RTO), and the closest restore point for the data, known as the recovery point objective (RPO). Besides replication, data backups also contribute to recoverability.

In most cases, your solutions will contain several elements. As a first approximation, your end-to-end solution is only as resilient as its weakest element. To quantify this with a probabilistic approach, you can work out composite availability figures while considering the dependencies, fallback arrangements, individual component availabilities, and the technical flow of the critical scenarios.
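The following Python sketch shows the usual first-order arithmetic for composite availability. It assumes component failures are independent, which real systems rarely guarantee, so treat the results as optimistic estimates. The availability figures in the example are illustrative, not SAP SLA values.

```python
from math import prod


def serial_availability(*components: float) -> float:
    """All components must be up (a chain of hard dependencies)."""
    return prod(components)


def parallel_availability(*replicas: float) -> float:
    """At least one replica must be up (redundant instances with failover)."""
    return 1 - prod(1 - a for a in replicas)


# Illustrative chain: an app with two redundant instances that calls a database.
app_tier = parallel_availability(0.999, 0.999)
end_to_end = serial_availability(app_tier, 0.9995)
print(f"app tier: {app_tier:.6f}, end to end: {end_to_end:.6f}")
```

The serial result is always lower than the weakest component, which is the quantitative form of the "weakest element" rule, while the parallel result shows why redundancy raises availability.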

Finally, like any technical deliverable, you should test your availability setup at project delivery and, later, continuously monitor it to check that it works as expected. It is also a good idea to test your disaster recovery arrangements regularly.
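When you test your disaster recovery arrangements, it helps to evaluate each drill against the RTO and RPO you defined. The sketch below is a hypothetical helper, not an SAP tool; the timestamps you feed it would come from your own drill records.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DrillResult:
    data_loss: timedelta      # time between the last recoverable data and the failure
    recovery_time: timedelta  # time between the failure and service restoration
    rpo_met: bool
    rto_met: bool


def evaluate_drill(failure_at: datetime, last_recoverable_point: datetime,
                   restored_at: datetime, rto: timedelta, rpo: timedelta) -> DrillResult:
    """Compare one disaster recovery drill with the agreed objectives."""
    data_loss = failure_at - last_recoverable_point
    recovery_time = restored_at - failure_at
    return DrillResult(data_loss, recovery_time,
                       rpo_met=data_loss <= rpo, rto_met=recovery_time <= rto)


result = evaluate_drill(
    failure_at=datetime(2024, 3, 1, 12, 0),
    last_recoverable_point=datetime(2024, 3, 1, 11, 50),
    restored_at=datetime(2024, 3, 1, 13, 30),
    rto=timedelta(hours=2),
    rpo=timedelta(minutes=15),
)
print(result)
```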

By incorporating all these aspects, your design will give your solution a high level of resilience. In short, to make your solution resilient, you need to introduce redundancy, eliminate single points of failure, and minimize the mean time to recovery.

Now, let’s discuss how you can achieve this in SAP BTP.

Achieving resilient solutions with SAP BTP

As you may recall from our previous discussions on cloud service models, the responsibility for ensuring resilience may lie with the provider or with you, as the consumer. Because SAP BTP is a Platform as a Service (PaaS), the responsibility for your applications and your data lies with you, while for the other layers, it lies mainly with SAP. Three types of arrangements underpin your solution’s resilience level:

- You can rely on the availability provided by SAP. We will discuss the level of availability that SAP promises in the Understanding SLAs section.

- For some services, SAP provides you with configuration options so that you can define the resilience level. In that case, it becomes a risk management question because the stricter measures you choose, the more you will pay for them. An example here is SAP HANA Cloud, HANA database. You have the option of creating HANA database replicas to increase availability and enable disaster recovery. We will discuss this later in this book.

- Another example of such configuration options concerns the custom applications you deploy in SAP BTP. The SAP BTP Cloud Foundry runtime lets you use the availability zones of the region your subaccount runs in, provided that the region has availability zones. When you create multiple instances of your application, the platform automatically distributes them across different availability zones, which means you achieve zonal redundancy, as shown in the following diagram:

Figure 5.2: Built-in high availability setup for custom applications in the SAP BTP Cloud Foundry runtime using availability zones

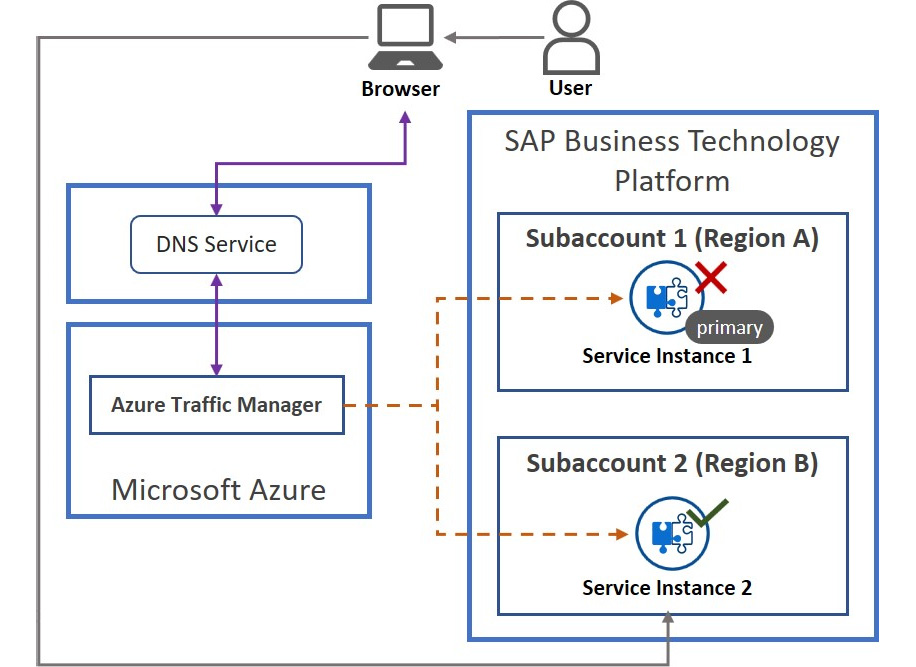

- You can design your solution with additional elements to increase the level of resilience. For example, you can create two instances of the same service in two different subaccounts that are located in different regions. Then, you can add a component such as Azure Traffic Manager, Azure Front Door, Amazon Route 53, or Akamai Ion. With these, you can monitor the primary service instance and automatically route the traffic to the secondary instance in case of failure:

Figure 5.3: High availability setup with two service instances in different subaccounts

The preceding diagram illustrates a design that uses Azure Traffic Manager, which can route traffic to one of the multiple target endpoints. In this example, Azure Traffic Manager detects that the primary service instance is unavailable and makes sure that the request is routed to the secondary service instance. If applicable, you can also configure this routing so that it functions as a load balancer.
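The routing decision in Figure 5.3 can be sketched as priority-based failover: send traffic to the most preferred endpoint that passes its health check. The Python below only illustrates that decision; it is not how Azure Traffic Manager or the other products are implemented, and the endpoint names and URLs are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Endpoint:
    name: str
    url: str
    priority: int  # lower value means more preferred


def pick_endpoint(endpoints: Sequence[Endpoint],
                  is_healthy: Callable[[Endpoint], bool]) -> Endpoint:
    """Return the highest-priority endpoint whose health check passes."""
    for endpoint in sorted(endpoints, key=lambda e: e.priority):
        if is_healthy(endpoint):
            return endpoint
    raise RuntimeError("no healthy endpoint available")


endpoints = [
    Endpoint("primary", "https://primary.example.com", priority=1),
    Endpoint("secondary", "https://secondary.example.com", priority=2),
]
# Simulate the primary service instance being down.
down = {"primary"}
chosen = pick_endpoint(endpoints, lambda e: e.name not in down)
print(chosen.name)
```

In a real setup, the health check would be a probe against a monitoring endpoint of each instance, and with DNS-based routing, cached DNS answers (TTL) can delay how quickly clients follow a failover.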

With this arrangement, you need to make sure the two instances have been configured in the same way unless it is explicitly required otherwise. Although you can do this manually, it’s best to establish an automated change delivery pipeline that applies the same configuration to all instances.
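An automated pipeline can also verify that the two instances stay aligned. The sketch below compares two flattened configuration snapshots and reports unexpected differences, ignoring keys you deliberately keep different. How you export such snapshots depends on the service, so the dictionaries and key names here are assumptions.

```python
from typing import Dict, Iterable, Tuple

MISSING = "<missing>"


def config_drift(primary: Dict[str, object], secondary: Dict[str, object],
                 allowed_differences: Iterable[str] = ()) -> Dict[str, Tuple[object, object]]:
    """Return {key: (primary_value, secondary_value)} for every unexpected difference."""
    ignored = set(allowed_differences)
    keys = (set(primary) | set(secondary)) - ignored
    return {
        key: (primary.get(key, MISSING), secondary.get(key, MISSING))
        for key in sorted(keys)
        if primary.get(key, MISSING) != secondary.get(key, MISSING)
    }


# Hypothetical snapshots: the region differs by design, the rest should match.
primary = {"region": "eu10", "timeout_ms": 30000, "retries": 3}
secondary = {"region": "us10", "timeout_ms": 60000}
print(config_drift(primary, secondary, allowed_differences=["region"]))
```

A pipeline could fail the deployment whenever the returned dictionary is not empty.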

Backup and recovery

Traditional backup and recovery mechanisms can be a primary or secondary measure for the recoverability of your applications. Here, we can consider SAP BTP services under three groups:

- As the most basic option, some SAP BTP services let you export and import the data they use. This data is generally the configuration data of a service, such as the Destination service, or design-time artifacts created in a service, for example, SAP Integration Suite. You can leverage the export/import functionality in different ways, provided that it is available for the service:

- The UI application for the service may contain UI controls that can be used for backup and restore purposes (for example, buttons for export/import).

- You can use APIs to manage content for some services (for example, SAP Cloud Integration, the Destination service, and more).

- You can use the Content Agent service, which assembles content into archive files. You can then use these files in the Cloud Transport Management service to import content.

- SAP BTP automatically backs up the data stored by some services, such as SAP HANA Cloud and PostgreSQL (hyperscaler option). For the SAP HANA database, besides the full daily backups, frequent log backups are taken so that the backup-based RPO is no longer than 15 minutes. As an additional measure, the backups are replicated in other availability zones. The backups not only safeguard against failures on SAP’s side but can also be used by customers to initiate ad hoc recovery if needed.

- For some services, none of the options we’ve discussed exists. For these, you need to establish your own backup mechanism. For example, if you need a backup of the objects stored with the Object Store service, you can implement your application to maintain objects in two different locations. For this specific service, bear in mind that the service plan you choose may already provide a level of redundancy at the infrastructure level.
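For the Object Store case, maintaining objects in two locations can be sketched as a wrapper that writes to both stores and reads from the primary, falling back to the secondary. The `ObjectStore` interface and the in-memory stores below are hypothetical stand-ins for whatever client library your storage provider offers.

```python
from typing import Dict, Protocol


class ObjectStore(Protocol):
    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in for a real object storage client (hypothetical)."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]  # raises KeyError if the object is absent


class MirroredStore:
    """Writes every object to two stores; reads fall back to the secondary."""

    def __init__(self, primary: ObjectStore, secondary: ObjectStore) -> None:
        self.primary = primary
        self.secondary = secondary

    def put(self, key: str, data: bytes) -> None:
        self.primary.put(key, data)
        self.secondary.put(key, data)

    def get(self, key: str) -> bytes:
        try:
            return self.primary.get(key)
        except KeyError:
            return self.secondary.get(key)


primary_store, secondary_store = InMemoryStore(), InMemoryStore()
mirror = MirroredStore(primary_store, secondary_store)
mirror.put("invoices/2024-001.pdf", b"...")
print(mirror.get("invoices/2024-001.pdf"))
```

Because the two writes happen one after the other, a failure between them leaves the copies out of sync; a production version would need retries or a periodic reconciliation job.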

All the resilience aspects we’ve discussed so far must be a part of your overall business continuity plan, which takes care of the process and event management aspects as well. By designing resilient solutions, you ensure operational stability. With this, you also ensure you don’t lose data valuable to your business. Now, let’s discuss some other non-functional requirements related to data management.

Data management

Do you wish to read about how important data is again? We guess not since we have done that before. But remember, one essential motivation for securing your systems is protecting your data, and business continuity is meaningful if it helps you keep your data safe. So, let’s continue learning about non-functional design elements in data management.

Data lifecycle and retention

Data is valuable; however, it has a lifecycle and its operational value may depreciate. Besides, storing data is not free of cost, and considering data protection and privacy aspects, it may also become a liability. Therefore, if your design introduces new data entities, you need to define how long to retain this data based on legal or regulatory obligations and operational considerations.

Depending on how frequently a piece of data is accessed and how crucial it is for business operations, data can be classified into different data temperature categories such as hot, warm, and cold. In line with this categorization, you can store data in different technologies based on its temperature to optimize the storage cost.

For example, as we will discuss later in this book, SAP HANA Cloud supports data tiering, with which you can store data in different integrated tiers. Here, only one copy of the data is stored, and you can optimize cost and performance by moving the data between these tiers. Typically, data moves from hotter to colder storage as it ages. For example, financial data for older fiscal years can be moved to warm storage, whereas the data for recent fiscal years stays in the hot tier.
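The age-based rule in the fiscal-year example can be expressed as a simple classification function. The thresholds are hypothetical; in SAP HANA Cloud, the actual data movement between tiers is done with the database’s own tiering features, which this sketch does not touch.

```python
def tier_for_fiscal_year(fiscal_year: int, current_fiscal_year: int,
                         hot_years: int = 2, warm_years: int = 5) -> str:
    """Classify data by age: recent years stay hot, older years cool down."""
    if fiscal_year > current_fiscal_year:
        raise ValueError("fiscal year lies in the future")
    age = current_fiscal_year - fiscal_year
    if age < hot_years:
        return "hot"
    if age < warm_years:
        return "warm"
    return "cold"


for year in range(2018, 2025):
    print(year, tier_for_fiscal_year(year, current_fiscal_year=2024))
```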

The final step in the data lifecycle is archiving. Here, the data is moved to much cheaper storage technology because it is rarely accessed. Once the data has been archived, it can be deleted from the operational storage. For some data types, you can also delete data without archiving it. By archiving or deleting data, you slow down database growth and improve query performance.

Your design also needs to take care of data protection and privacy. Thus, you should define rules for deleting privacy-related data, as keeping it may be an unnecessary liability.

Furthermore, bear in mind that overarching considerations may override your data retention rules. For instance, when an inactive customer exercises their right to be forgotten, you may need to erase their data even though your retention rules prescribe a longer retention period. You may even need to erase their data from data archives. Conversely, if there is a legal hold for a customer, you may need to keep their data longer than the retention rules specify.

Let’s consider this with an example: you are designing an application on SAP BTP that stores customer data in SAP HANA Cloud, HANA Database. For the application, you persist customers’ names, email addresses, payment details, and orders. In your design, you can specify some data retention rules, as follows:

- If a customer has no active orders and their last order was fulfilled at least:

- 1 year ago → delete their payment details

- 2 years ago → delete their names and email addresses, and anonymize the data for their orders (for example, change the customer IDs in the order records so that they cannot be linked to the particular customer)

- If an order belongs to a customer whose data has been deleted or anonymized, and the order fulfillment date is older than 5 years, archive the order data and delete it from the system.
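Rules like these are easier to review and test when they are written down as code. The following Python sketch encodes the example rules under some simplifying assumptions: a year is approximated as 365 days, the action names are hypothetical, and a legal hold suspends all deletion, reflecting the override discussed earlier. Executing the actions (deleting, anonymizing, archiving) is left to your application.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

YEAR = timedelta(days=365)  # simplification: ignores leap days


@dataclass
class Customer:
    has_active_orders: bool
    last_order_fulfilled_on: Optional[date]
    legal_hold: bool = False


def customer_actions(customer: Customer, today: date) -> List[str]:
    """Return the retention actions due for a customer's personal data."""
    if (customer.legal_hold or customer.has_active_orders
            or customer.last_order_fulfilled_on is None):
        return []
    age = today - customer.last_order_fulfilled_on
    actions = []
    if age >= YEAR:
        actions.append("delete_payment_details")
    if age >= 2 * YEAR:
        actions += ["delete_name_and_email", "anonymize_orders"]
    return actions


def order_action(customer_data_removed: bool, fulfilled_on: date, today: date,
                 legal_hold: bool = False) -> str:
    """Archive and delete orders older than 5 years once the customer is anonymized."""
    if not legal_hold and customer_data_removed and today - fulfilled_on > 5 * YEAR:
        return "archive_and_delete"
    return "keep"


today = date(2024, 6, 1)
print(customer_actions(Customer(False, date(2022, 1, 1)), today))
print(order_action(True, date(2018, 1, 1), today))
```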

Important Note

Don’t confuse data archives with data backups. They are both copies of data; however, they serve different purposes. Archives are created for data retention, whereas backups are taken for recoverability.

Next, let’s look at why data accuracy matters.

Data quality

Data is valuable only if it serves a purpose. For this, you must capture data accurately so that your business operations are handled appropriately. For example, think of a customer service agent on the phone who cannot locate a customer’s record because of a data problem. Or perhaps you’re preparing an analytical report to present to senior stakeholders, and at the last minute, you realize that it doesn’t consider a significant portion of the operational data because one of the data fields hasn’t been populated correctly.

Poor data quality is a chronic issue for many businesses because, once it becomes widespread, it is very challenging to fix. For some companies, the problem even slows down the transformation they are pursuing.

Data quality typically becomes a problem for master data that users enter manually. Therefore, you should make data quality a mandatory part of operational practice by incorporating the necessary checks in the applications users work with and by training the users. For this, you can use data management solutions such as SAP Master Data Governance (MDG), which can help improve data quality.

When designing solutions with custom applications in SAP BTP, you need to specify data rules to make sure users enter data accurately and without breaking integrity. These rules can specify mandatory fields, data validations, and dependencies. In addition, your design should indicate where the application can provide the user with input value suggestions. For some data types, it may be a good idea to leverage external services to validate data or obtain a list of accurate input value suggestions. A typical example of this is when your application needs to capture location data. SAP BTP provides the Data Quality Management (DQM) service, which offers cloud-based microservices for address cleansing, geocoding, and reverse geocoding. This service can be integrated with other SAP solutions such as SAP Data Intelligence. Furthermore, some solutions, such as SAP Cloud for Customer, come with prebuilt integration with the SAP DQM service.
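The data rules your design specifies (mandatory fields, validations, and dependencies between fields) can be sketched as a backend validation function. The field names, the simplified email pattern, and the dependency rule are hypothetical examples, not rules from any SAP service.

```python
import re
from typing import List

# Deliberately simple pattern; real email validation is more involved.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
MANDATORY_FIELDS = ("name", "email", "country")


def validate_customer(record: dict) -> List[str]:
    """Return a list of rule violations; an empty list means the record is valid."""
    errors = [f"{field} is mandatory" for field in MANDATORY_FIELDS
              if not record.get(field)]
    email = record.get("email")
    if email and not EMAIL_PATTERN.match(email):
        errors.append("email has an invalid format")
    # Dependency rule (hypothetical): a region is required for US addresses.
    if record.get("country") == "US" and not record.get("region"):
        errors.append("region is mandatory when country is US")
    return errors


print(validate_customer({"name": "Ada", "email": "not-an-email", "country": "US"}))
```

Returning all violations at once, rather than stopping at the first, lets the frontend highlight every affected input in a single round trip.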

Your application can call the SAP DQM location microservices with an address as input and receive cleansed address information enriched with geolocation data. If the response indicates an error, your application can prevent users from saving the record until they fix the problem. Remember that it should ultimately be the backend application that checks the data’s quality. Upon receiving the backend response, your frontend application can display the error message and highlight the relevant input fields as erroneous. Alternatively, your application can offer to fill in the cleansed address data for the user automatically:
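The backend check described above might look like the following sketch. The `cleanse` callable stands in for the call to the SAP DQM location microservice; its response shape here (`CleansingResult` with `ok`, `cleansed`, and `error`) is a hypothetical simplification, so you would map it to the actual service response in a real implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

Address = Dict[str, str]


@dataclass
class CleansingResult:
    ok: bool
    cleansed: Optional[Address] = None
    error: Optional[str] = None


def save_address(address: Address,
                 cleanse: Callable[[Address], CleansingResult],
                 persist: Callable[[Address], None],
                 accept_suggestion: bool = False) -> dict:
    """Validate an address on the backend before persisting it."""
    result = cleanse(address)
    if not result.ok:
        # Reject the save; the frontend shows the error and highlights the inputs.
        return {"saved": False, "error": result.error}
    cleansed = result.cleansed or address
    if cleansed != address and not accept_suggestion:
        # Offer the cleansed address to the user instead of saving silently.
        return {"saved": False, "suggestion": cleansed}
    persist(cleansed)
    return {"saved": True, "address": cleansed}
```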

Figure 5.4: SAP BTP application using a SAP Data Quality Management location microservice

Here, you can see how SAP DQM microservices for location data can be used to cleanse address data.

Performance, capacity, and scalability

Do you remember that website that took so many seconds to load that you didn’t even wait to see what exactly it offered and navigated away to another website? This example shows how important performance is for a software application. End users want responsive, performant applications. If your solution includes components that interact with users, their responsiveness is a crucial element of the user experience, promoting or hindering the adoption of your solution. Therefore, your solution design should also focus on the performance of each component and make sure the overall performance is acceptable.

Let’s look at another example. Let’s say you’ve designed a solution with an application that makes an expensive query at the database layer. Unfortunately, the query chokes the database processes and eventually brings the application to a halt. Are you looking for a quick resolution to the problem? How about doubling the sizes of the database server memory and CPU? You do that, and everything is back to normal. Or is it?

Performance

There is often a correlation between performance and capacity: increasing capacity can improve performance, although the gains eventually saturate. But we have bad news: capacity always comes at a cost. Do you still think the resolution in the preceding example was the best one? It may be. However, you can only decide this after making sure the technical resources are used efficiently and after balancing performance and cost, that is, after tuning performance.

Let’s take SAP HANA Cloud, HANA Database and list examples of the performance tuning options we have:

- Work on the data model to check whether remodeling helps

- Use data partitioning to break large tables down into smaller parts

- Use hints to query data snapshots

- Proactively manage memory

- Manage workload with workload classes

- Tune settings for parallel execution of SQL statements

When it comes to the artifacts you create, you should take care of the performance aspect – for example, data model design with performance in mind, query optimization, avoiding unnecessary data retrieval, avoiding over-engineered modularization, and more. This is valid for the custom applications, integration flows, API management policies, SAP Data Intelligence graphs, and smaller code scripts you write inside these artifacts.

Sizing/capacity

Tuning performance requires a baseline. So, where does it start? As previously mentioned, performance and capacity are correlated, and the baseline begins with the capacity you allocate to a service instance. For this, you need to run a sizing exercise. In practice, we have seen some architects rely on their intuition and others treat sizing as an exact science. In both cases, you need to consider the factors that will impact performance, quantify them, and allocate resources for the service instance accordingly. The sizing factors can start with requirements such as how many concurrent users need to use the application or how many transactions the application needs to process per minute.
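As a worked example of quantifying sizing factors, the sketch below estimates how many application instances a peak load requires. Every input (peak transactions per minute, CPU seconds per transaction, CPU cores per instance, and target utilization) is an assumption you would replace with measured or benchmarked values; real sizing must also consider memory, storage, and network.

```python
import math


def required_instances(peak_tx_per_minute: float, cpu_seconds_per_tx: float,
                       cores_per_instance: float, target_utilization: float = 0.6) -> int:
    """Estimate the instance count that keeps CPU below the target utilization at peak."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target utilization must be in (0, 1]")
    busy_cores = peak_tx_per_minute / 60 * cpu_seconds_per_tx  # average cores in use
    usable_cores = cores_per_instance * target_utilization
    return max(1, math.ceil(busy_cores / usable_cores))


# 1,200 transactions per minute at 50 ms of CPU each keeps about one core busy.
print(required_instances(1200, 0.05, cores_per_instance=0.25))
```

The target utilization below 100% is the design choice that leaves headroom for bursts above the average peak.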

Where sizing is important, you will see that SAP BTP gives you options so that you can optimize according to your requirements. While working on sizing, you can use the Estimator Tool to check how much your configuration will cost. You should remember this tool from Chapter 3. In the following screenshot, you can see the capacity unit estimator for SAP HANA Cloud, HANA Database, which can be accessed from the Estimator Tool:

Figure 5.5: Capacity unit estimator for SAP HANA Cloud, HANA Database

As we can see, SAP BTP allocates technical resource capacity for your SAP HANA database mainly in terms of memory, compute, storage, and network traffic. Similar estimators exist for other services, such as SAP Data Intelligence and the Kyma environment. The estimator details explain how these elements impact pricing; however, when you configure the service, one configuration element may be linked to another for the sake of simplicity. For example, when creating an SAP HANA Cloud, HANA Database instance, you select the memory size, which automatically determines the number of vCPUs.

Did you think sizing was a thing of the past, required only for installing on-premises systems? Well, the services you consume still run on some infrastructure. Therefore, your input will still be required from time to time wherever your consumption correlates with reserved infrastructure capacity, and the providers will reflect the cost to you accordingly.

The capacity you get will be linked to the pricing metric, and your entitlement for that metric may also impose constraints on performance elements, such as the bandwidth allocated for your consumption. This becomes more implicit as you move to service models that abstract more layers above the infrastructure. For example, although you may have some flexibility to control performance in platform-as-a-service (PaaS), it is almost entirely out of your control when it comes to software-as-a-service (SaaS) solutions.

Once you’ve established the initial capacity for a service, continuously monitor whether your requirements change so that you can take timely action for capacity adjustments when needed.

Scalability

It is high time we talked about another cloud quality: scalability. Scalability is one of the primary reasons companies move to the cloud, because they want to use resources efficiently. Companies do not want to pay for infrastructure they don’t use, and they want the flexibility to use more resources when needed. With scalability, you can flexibly ramp the resources you use up or down, depending on your requirements. Are you asking why we need sizing when creating a service instance if we could simply scale? If so, you are missing the point: to scale, you need a baseline as a reference, and sizing is what establishes that baseline.

After onboarding to a service with the initial capacity configuration, you can leverage scaling options when your requirements change. Human intervention is more appropriate for services in which scaling has a significant impact and may not be that straightforward. For example, you can use SAP HANA Cloud Central to scale up or scale down your SAP HANA Cloud, HANA Database instance memory. At the time of writing this book, scale-out support for the SAP HANA database was still on the roadmap to be delivered later.

For your custom applications in the Cloud Foundry environment, SAP BTP provides both horizontal and vertical scalability options. You can define memory and disk quotas for your application when deploying it, and you can change these quotas to scale up or down without requiring a new deployment. The memory quota implicitly defines the CPU share of the application (for example, the Cloud Foundry environment guarantees a CPU share of a quarter core per GB of instance memory). Bear in mind that there are quota limits for the maximum instance memory per application and the maximum disk quota.
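Using the quarter-core-per-GB guarantee mentioned above, the CPU share implied by a memory quota is simple arithmetic. This sketch assumes 1 GB = 1,024 MB, since Cloud Foundry memory quotas are expressed in megabytes; check the current SAP BTP documentation for the exact guarantee that applies to your landscape.

```python
CORES_PER_GB = 0.25  # guaranteed CPU share per GB of instance memory


def guaranteed_cores(memory_mb: int, instances: int = 1) -> float:
    """Guaranteed CPU share for an app, given its per-instance memory quota."""
    return memory_mb / 1024 * CORES_PER_GB * instances


print(guaranteed_cores(1024))               # one 1 GB instance
print(guaranteed_cores(2048, instances=3))  # three 2 GB instances
```

This is why scaling up the memory quota also scales up CPU, and why horizontal scaling multiplies the total guaranteed share.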

For horizontal scaling, you can add and remove application instances. As you may recall from the previous section on business continuity, SAP BTP automatically creates instances across multiple availability zones. That’s why you can also achieve higher availability with horizontal scaling.

As horizontal scaling for applications can be achieved without human intervention, SAP BTP provides Application Autoscaler, which you can use to scale applications by adding or removing instances automatically. With Application Autoscaler, you can specify the following policies:

- Dynamic policies: Autoscaler acts on changes in CPU utilization, memory utilization, throughput, and response time metrics. Alternatively, you can define custom metrics, whose values you need to feed to the Autoscaler instance via its API.

- Schedule-based policies: Autoscaler scales the application based on a schedule. Therefore, you can use schedule-based policies when you know the periods during which your application will be accessed intensively.
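To illustrate how a dynamic policy turns metric values into scaling decisions, here is a simplified Python sketch. It is not Application Autoscaler’s implementation or policy format: the rule fields are hypothetical, and it ignores the breach durations and cool-down periods that autoscalers typically use to avoid flapping.

```python
import operator
from dataclasses import dataclass
from typing import Dict, Sequence

OPERATORS = {">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le}


@dataclass(frozen=True)
class ScalingRule:
    metric: str       # for example "cpu" or "memory", in percent
    op: str           # one of the keys in OPERATORS
    threshold: float
    adjustment: int   # +1 to scale out, -1 to scale in


def desired_instances(current: int, metrics: Dict[str, float], rules: Sequence[ScalingRule],
                      min_instances: int, max_instances: int) -> int:
    """Apply the first matching rule and clamp the result to the allowed range."""
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is not None and OPERATORS[rule.op](value, rule.threshold):
            return max(min_instances, min(max_instances, current + rule.adjustment))
    return current


rules = [ScalingRule("cpu", ">=", 80, +1), ScalingRule("cpu", "<", 20, -1)]
print(desired_instances(2, {"cpu": 91.0}, rules, min_instances=2, max_instances=5))
```

The scale-out and scale-in thresholds are kept far apart so that one scaling step does not immediately trigger the opposite rule.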

So, you did the hard work and estimated the size for the service instance you use or the application you deploy. The natural next step is to verify your estimation based on actual data and calibrate it if needed. To do this, you need to monitor your service or application metrics, which is part of what we’ll discuss in the next section.
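One way to calibrate an estimate is to compare it with observed usage. The sketch below takes memory samples from monitoring, computes a nearest-rank 95th percentile, and proposes a quota with headroom, rounded up to a step size. The headroom, step size, and percentile are hypothetical choices, not SAP recommendations.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of the samples (pct between 0 and 100)."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_memory_mb(observed_mb: Sequence[float], headroom: float = 0.3,
                        step_mb: int = 256) -> int:
    """Propose a memory quota: p95 usage plus headroom, rounded up to step_mb."""
    needed = percentile(observed_mb, 95) * (1 + headroom)
    return math.ceil(needed / step_mb) * step_mb


samples = [400] * 19 + [600]  # one short spike in twenty samples
print(recommend_memory_mb(samples))
```

Using a high percentile rather than the maximum keeps a single short spike from inflating the quota; whether that is acceptable depends on how the application behaves when it hits its memory limit.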

Observability

You must have seen movies where there is a group of people in a room with several huge monitors on the walls, and most of the people have computers in front of them as well. In the Bourne series, it’s the CIA agents monitoring Jason Bourne’s movements. In Apollo 13, it’s the NASA workers in the Mission Control Center. And in many movies, it may be as simple as a building’s concierge with a couple of screens.

All the people in these rooms are there for some common reasons:

- They want to respond to events swiftly when they happen.

- They want to proactively prevent events that may adversely impact their mission.

- They want to gather data that will help them retrospectively analyze past events so that they become lessons learned.

Besides, all the setups that are used in the rooms serve a common purpose. They support decision-making. For example, some monitors show real-time status data, while others display trend graphics. In some movie scenes, you may also hear an alarm going off to signal something significant has happened. With all these, people in the room can make decisions to direct the operation.

You need a similar setup to run your applications and systems smoothly. This setup should facilitate two things. First, observability lets you gain insights from the operational data produced as metrics, logs, and traces. Second, monitoring tells you about adverse events and why they happened. Here, the events can be performance- or security-related, and monitoring depends on observability elements.

Where observability is crucial, SAP BTP provides the necessary functionality so that you can observe and monitor the operations of its services. Let’s look at some examples:

| SAP BTP Service | Monitoring Features |
|---|---|
| SAP HANA Cloud, SAP HANA Database | The Monitoring page of the SAP HANA Cockpit (Figure 5.6) |
| SAP Data Intelligence | The SAP Data Intelligence Monitoring application |
| SAP Integration Suite | The Cloud Integration Monitoring dashboard and the Analytics view of the API Portal. SAP Integration Suite also supports out-of-the-box integration with SAP Solution Manager and SAP Analytics Cloud. |

Table 5.1: Examples of SAP BTP service-specific monitoring features

The following screenshot shows the monitoring page of the SAP HANA Cockpit, which gives you an overview of the main monitoring categories. From this screen, you can click on the links to drill down to more detailed views for further analysis:

Figure 5.6: SAP HANA Cockpit – the Monitoring page

Application logging for custom applications

As discussed previously, certain services provide the necessary information to monitor their operations. But how about custom applications?

This is where the Application Logging service enters the picture. By default, this service records logs from the Cloud Foundry router. In addition, if you want to explicitly log other information from your custom Cloud Foundry application, you can do so by creating an instance of this service and binding your application to it.

The SAP BTP Cloud Foundry environment uses the Elastic Stack, a group of open source products, to record and visualize application logs. You can view the logs for your application by navigating to the application’s page in the Cloud Foundry space and then selecting Logs from the menu. If you want more fun, you can click the Open Kibana Dashboard button to view dashboards with a wealth of information logged for your applications. An example dashboard can be seen in the following screenshot:

Figure 5.7 – The Kibana dashboard showing statistics for custom applications

In the Kyma environment, you have options in the console for viewing logs and metrics. These take you to Grafana, an open source observability platform, where you can query and visualize the logs and metrics collected from Kyma components.

Important Note

You should never let sensitive or confidential information, such as personal data, passwords, access tokens, and more, be collected in the logs.
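Because Cloud Foundry collects whatever an application writes to standard output, one practical way to honor this rule is to redact sensitive fields before a log line is ever emitted. The following is a minimal sketch in Python, assuming your application writes JSON lines to stdout. The field names, the `SENSITIVE_KEYS` list, and the `build_logger` helper are illustrative assumptions, not part of the Application Logging service:

```python
import json
import logging
import re
import sys

# Illustrative assumption: keys whose values must never reach the logs.
SENSITIVE_KEYS = {"password", "access_token", "authorization", "email"}
# Rough pattern for bearer tokens embedded in free-text messages.
BEARER_PATTERN = re.compile(r"(?i)bearer\s+[A-Za-z0-9\-._~+/]+=*")


def redact(value):
    """Recursively mask sensitive keys and bearer tokens."""
    if isinstance(value, dict):
        return {k: "***" if k.lower() in SENSITIVE_KEYS else redact(v)
                for k, v in value.items()}
    if isinstance(value, list):
        return [redact(v) for v in value]
    if isinstance(value, str):
        return BEARER_PATTERN.sub("Bearer ***", value)
    return value


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per line; the field names are hypothetical."""

    def format(self, record):
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "msg": redact(record.getMessage()),
            "context": redact(getattr(record, "context", {})),
        }
        return json.dumps(entry)


def build_logger(name="my-app"):
    """Hypothetical helper: a logger that writes redacted JSON to stdout."""
    handler = logging.StreamHandler(sys.stdout)  # the platform picks up stdout
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    return logger


if __name__ == "__main__":
    log = build_logger()
    # The e-mail address is masked before the line is written.
    log.info("Order %s created", "4711", extra={"context": {"email": "jane@example.com"}})
```

Redacting at the source, rather than filtering later in the log pipeline, means the sensitive value never leaves the application process.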

Alert notification

At the beginning of this section, the last element in our analogy was an alarm going off for significant events. It is analogous to alert notification in IT operations, which is an essential element of monitoring because you may need to race against time when remediating problems. Thus, you must be notified about the issues as soon as they happen.

The SAP Alert Notification service for SAP BTP comes to the rescue here. This service receives information about events in a predefined format and lets you configure three entities that help construct a notification pipeline, as follows:

- Conditions: Logical expressions that are based on event properties – for example, “[If] the severity of the event is equal to Error.”

- Actions: A definition of how the service performs the notification. With an action, you configure technical details around predefined action types. With the full specification, actions correspond to delivery channels.

- Subscriptions: With subscriptions, you bring together conditions and actions to define the end-to-end notification process. You can combine multiple conditions to form a conjunctive rule set and add multiple actions to push the events to multiple delivery channels.

Figure 5.8: SAP Alert Notification service, sources, and delivery channels

The preceding diagram illustrates how you can use the SAP Alert Notification service. Let’s discuss some of the details:

- Many SAP BTP services, such as SAP HANA Cloud, SAP Cloud Integration, SAP Cloud Transport Management, and more, have built-in events that become available as soon as you subscribe to the SAP Alert Notification service.

- An SAP BTP application or an external application can send event information to the SAP Alert Notification Service by using its Producer API.

- Applications can use the SAP Alert Notification Service Consumer API to pull events that are stored in the service’s temporary storage, or events that could not be delivered for some reason.

- You can configure SAP Alert Notification Service actions to define delivery channels for many targets, such as email, ServiceNow, Microsoft Teams, and more.
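To make the relationship between conditions, actions, and subscriptions more tangible, here is a simplified conceptual model in Python. It is not the SAP Alert Notification service API: the class names and the event property names (`severity`, `resourceType`) are assumptions for illustration. As described earlier, all conditions in a subscription must hold, and every action receives the matching event:

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Conceptual model only: event properties are illustrative, not the real schema.
Event = Dict[str, str]


@dataclass
class Condition:
    """A predicate on one event property, e.g. severity equals ERROR."""
    prop: str
    expected: str

    def matches(self, event: Event) -> bool:
        return event.get(self.prop) == self.expected


@dataclass
class Action:
    """A delivery channel; here it only records what it would send."""
    name: str
    sent: List[Event] = field(default_factory=list)

    def deliver(self, event: Event) -> None:
        self.sent.append(event)


@dataclass
class Subscription:
    """Conditions are combined with AND; every action gets the event."""
    name: str
    conditions: List[Condition]
    actions: List[Action]

    def process(self, event: Event) -> bool:
        if all(c.matches(event) for c in self.conditions):
            for action in self.actions:
                action.deliver(event)
            return True
        return False


email, teams = Action("email"), Action("teams")
hana_errors = Subscription(
    "hana-errors",
    conditions=[Condition("severity", "ERROR"), Condition("resourceType", "hana-cloud")],
    actions=[email, teams],
)
hana_errors.process({"severity": "ERROR", "resourceType": "hana-cloud", "subject": "Disk almost full"})
hana_errors.process({"severity": "INFO", "resourceType": "hana-cloud", "subject": "Backup done"})
print(len(email.sent), len(teams.sent))  # 1 1
```

Keeping conditions and actions as separate, reusable entities is what lets one subscription fan out to several channels, and one channel serve several subscriptions.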

An interesting action target here is the SAP Automation Pilot, which is another SAP BTP service. This service lets you create automation procedures using predefined platform commands or flexible commands to write scripts or make API calls. You can execute the commands, which can also be a set of other commands, in a scheduled manner, by using a URL trigger, via integration with the SAP Alert Notification Service, or through an API call.

Although not directly linked to observability, let’s think of an interesting example for this service. Being a premium product, SAP HANA Cloud, HANA Database is not the cheapest on the market. As a result of having many environments for change delivery, sandboxes, and training, you probably have several SAP HANA Cloud, HANA Database instances. But do you need all of them running all the time? Maybe one of your database administrators should stop the non-production instances every night and start them again in the morning to save costs. You can probably smell the opportunity for automation here, and SAP Automation Pilot can help. For this example, let’s also consider our hard-working developers, who may need to work during the night from time to time. Let’s build a solution step by step:

- Build an application that exposes a REST/OData API that, when queried, tells you whether a database can be stopped that night or not. The developers can use the UI of this application to mark a database as not to be stopped that night.

- Schedule an SAP Automation Pilot execution to run every night, which makes a call to the API and, according to the response, stops all applicable non-production databases.

- Schedule another SAP Automation Pilot execution to run every morning to start the databases.
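The decision logic behind these steps can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: `KeepAliveRegistry` is a hypothetical stand-in for the application that backs the REST/OData API, and the `stop` callback stands in for the database stop command that the scheduled SAP Automation Pilot execution would actually run. No real SAP Automation Pilot or SAP HANA Cloud API is called here:

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Dict, List, Set


@dataclass
class DatabaseInstance:
    name: str
    environment: str  # e.g. "production", "development", "sandbox"


class KeepAliveRegistry:
    """Hypothetical backing logic of the API: developers mark a database as
    'keep running tonight'; each mark applies to a single night only."""

    def __init__(self) -> None:
        self._keep: Dict[date, Set[str]] = {}

    def mark_keep_running(self, db_name: str, night: date) -> None:
        self._keep.setdefault(night, set()).add(db_name)

    def can_stop(self, db: DatabaseInstance, night: date) -> bool:
        if db.environment == "production":
            return False  # never stop production
        return db.name not in self._keep.get(night, set())


def nightly_stop(dbs: List[DatabaseInstance], registry: KeepAliveRegistry,
                 night: date, stop: Callable[[str], None]) -> List[str]:
    """What the nightly execution does: ask the API, then stop what it allows.
    `stop` is a placeholder for the real stop command."""
    stopped = []
    for db in dbs:
        if registry.can_stop(db, night):
            stop(db.name)
            stopped.append(db.name)
    return stopped


dbs = [
    DatabaseInstance("hana-prd", "production"),
    DatabaseInstance("hana-dev", "development"),
    DatabaseInstance("hana-sbx", "sandbox"),
]
registry = KeepAliveRegistry()
registry.mark_keep_running("hana-dev", date(2024, 5, 6))  # a developer works late
print(nightly_stop(dbs, registry, date(2024, 5, 6), stop=lambda name: None))
# ['hana-sbx']
```

Scoping each "keep running" mark to a single night means a forgotten flag cannot keep a database running indefinitely.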

Traceability

Let’s assume you are in a war room, troubleshooting a recent high-priority incident, or you are about to deliver a solution and must find the performance bottleneck. At that moment, if you had three wishes, one would be to have an end-to-end trace of how a request flows across multiple components. This trace is even more valuable when it correlates the requests of the same flow hitting each component. At the time of writing this book, unfortunately, no tools provide out-of-the-box traceability for SAP BTP. However, there are roadmap items that could deliver this.
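Until such tooling arrives, a common workaround is to propagate a correlation ID yourself: the first component that receives a request creates an ID, every component logs it and passes it on, and you later filter the collected logs by that ID. The following is a minimal Python sketch of the pattern. The `X-CorrelationID` header name and the component names are assumptions for illustration; what matters is that all your components agree on the same header:

```python
import uuid
from typing import Dict, List, Tuple

CORRELATION_HEADER = "X-CorrelationID"  # assumed name; agree on one across components
LogLine = Tuple[str, str, str]  # (component, correlation ID, message)


def ensure_correlation_id(headers: Dict[str, str]) -> Dict[str, str]:
    """Reuse the caller's correlation ID, or start a new flow with a fresh one."""
    if CORRELATION_HEADER not in headers:
        headers = {**headers, CORRELATION_HEADER: str(uuid.uuid4())}
    return headers


def handle(component: str, headers: Dict[str, str], log: List[LogLine],
           downstream: List[str]) -> None:
    """Simulated component: log with the flow's ID, then call the next one
    with the same headers."""
    headers = ensure_correlation_id(headers)
    log.append((component, headers[CORRELATION_HEADER], "request received"))
    if downstream:
        handle(downstream[0], headers, log, downstream[1:])


def trace(log: List[LogLine], correlation_id: str) -> List[str]:
    """Reconstruct one request flow from the collected log lines."""
    return [component for component, cid, _ in log if cid == correlation_id]


log: List[LogLine] = []
handle("approuter", {}, log, ["cap-service", "db-adapter"])
print(trace(log, log[0][1]))  # ['approuter', 'cap-service', 'db-adapter']
```

This gives you correlation, not timing; for bottleneck analysis, you would also log timestamps or durations with each line.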

Tools

Let’s briefly talk about the tools you can use for observability. If you have been using SAP on-premise solutions, you most probably already have SAP Solution Manager, which provides monitoring capabilities. For customers who want a similar tool for cloud solutions, SAP provides SAP Cloud Application Lifecycle Management (Cloud ALM), which comes included with SAP BTP and other cloud subscriptions. SAP Cloud ALM is still evolving, and capabilities for SAP BTP are on its roadmap. Finally, for system monitoring that extends from on-premise to the cloud with extra capabilities, SAP Focused Run can serve as an observability solution. Unlike the other two, SAP Focused Run is a paid product.

Apart from SAP solutions, you can leverage generic monitoring tools from other vendors, such as Dynatrace and AppDynamics. However, unlike on-premise monitoring, where you generally install agents on your systems, you may have restricted options for integrating your SAP cloud solutions with these tools. In most cases, this integration will be through APIs that stream events, metrics, and statistics to the central monitoring tool.

For on-premise, cloud, or hybrid landscapes, our suggestion would be to use SAP-specific monitoring tools as your primary tools because they are SAP-aware and have specifically crafted capabilities. After that, you can extend your monitoring to generic tools for centralized operations.

With this, we conclude our discussion of observability and, in general, non-functional design elements. Surely, there are many other aspects that we could discuss under non-functional design. However, we will stop here since we’ve covered the major elements. Next, let’s talk about how these become part of an agreement between a supplier and a consumer.

Understanding SLAs

As an architect, once you’ve gathered and understood the non-functional requirements for a solution, you must design an architecture that balances the quality of service against the cost to implement and operate it. Once your solution becomes a service or a product, you need to tell your stakeholders, customers, and users at what levels you will fulfill the non-functional requirements. When quantified, these become service-level objectives (SLOs), that is, quality-of-service targets. And by committing to these SLOs with your stakeholders, customers, and users, you enter into service-level agreements (SLAs).

If you are delivering an in-house solution, the SLAs become the service quality that needs to satisfy your stakeholders. On the other hand, if you supply your solution to customers, the SLAs become part of the official and legal engagement between you and your customers. In the cloud, as everything is a service, the SLAs critically influence technology choices.

Depending on how much control you have over the operational elements, you may rely on service levels provided to you by other suppliers. If you have full control over all the components of a solution, you solely define its SLOs. For example, this is the case for hyperscalers. Their design choices primarily define the SLAs they can offer.

On the other hand, if you are building a solution on top of an as-a-service offering, you need to cascade the supplier’s SLAs into your design’s SLAs. Here, you can adjust an SLA if the supplier provides options; alternatively, you can add other components to your design to bring your SLAs to the required level.

An SLA can be defined for any quality that can be quantified as an objective, such as web page load time, API response time, or database RTO/RPO. However, in the cloud, engagements are predominantly based on an availability SLA, expressed as a percentage of uptime over a certain period. For example, a supplier can offer a database-as-a-service with a 99.95% availability (uptime) SLA, which corresponds to a maximum of about 22 minutes of downtime a month.
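The conversion from an availability percentage to allowed downtime is simple arithmetic. The following Python sketch assumes a 30-day month; the actual measurement period and exclusions are defined in each supplier’s SLA document:

```python
def max_downtime_minutes(availability_pct: float, days_in_month: int = 30) -> float:
    """Maximum monthly downtime permitted by an availability SLA."""
    total_minutes = days_in_month * 24 * 60  # 43,200 minutes for 30 days
    return total_minutes * (1 - availability_pct / 100)


for sla in (99.7, 99.9, 99.95):
    print(f"{sla}% -> {max_downtime_minutes(sla):.1f} min/month")
# 99.7% -> 129.6 min/month
# 99.9% -> 43.2 min/month
# 99.95% -> 21.6 min/month
```

The 21.6 minutes for 99.95% is where the figure of about 22 minutes comes from.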

Generally, the availability SLA excludes the downtime that’s required for planned maintenance activities that the customers are notified of in advance. This suggests that the suppliers may be correlating their SLAs to the risk probabilities they envisage.

At the end of the day, an SLA is a promise and can be unintentionally breached. In such cases, most suppliers offer a service credit. This can be considered a form of apology since it may not always compensate for the business impact of the disruption. In the end, the supplier’s performance in consistently delivering the service within the SLAs determines its reliability. Customers want resilience, and suppliers strive to provide the maximum they can.

Finally, let’s highlight something we have hinted at previously. Surely, you would want to provide the highest SLA possible. But, as with many things, this comes with additional costs. As an architect, you need to balance the SLA against the cost. So, anything you do to improve the SLA of your solution should justify its cost impact. For example, if the solution you are designing for is not mission-critical, 99.9% availability may be satisfactory. Yes, designing for 99.95% availability may introduce an extra uptime of 22 minutes per month, but you should think about whether this improvement is worth the additional implementation and operational costs.
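One way to frame this trade-off is a break-even calculation: divide the extra monthly cost of the higher SLA by the downtime minutes it can save, and compare the result with what a minute of downtime costs your business. The following Python sketch is deliberately simplified. It assumes the worst-case downtime of each SLA actually occurs every month, and the cost figure in the example is hypothetical:

```python
def worst_case_minutes_saved(current_pct: float, target_pct: float,
                             days_in_month: int = 30) -> float:
    """Reduction in maximum allowed monthly downtime when raising the SLA."""
    total_minutes = days_in_month * 24 * 60
    return total_minutes * (target_pct - current_pct) / 100


def break_even_cost_per_minute(extra_monthly_cost: float, current_pct: float,
                               target_pct: float) -> float:
    """Downtime cost per minute above which the higher SLA pays for itself,
    under the worst-case assumption stated above."""
    return extra_monthly_cost / worst_case_minutes_saved(current_pct, target_pct)


# Hypothetical: moving from 99.9% to 99.95% adds 2,000 (any currency) per month.
print(round(break_even_cost_per_minute(2000, 99.9, 99.95), 2))  # 92.59
```

If a minute of downtime costs your business less than this break-even figure, the higher SLA is hard to justify on downtime cost alone.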

SAP BTP SLAs

Let’s make what we have discussed so far concrete by looking at the SLA considerations for SAP BTP. As an architect designing solutions with SAP BTP, you rely on the SLAs that SAP BTP offers. These SLAs are part of the terms and conditions when you enter into a formal engagement with SAP by purchasing SAP BTP. As part of the procurement, SAP provides the necessary documentation. In their generic forms, these documents are also available in SAP Trust Center at https://www.sap.com/uk/about/trust-center.html. Here, you can navigate to the Agreements section and then to the Cloud Services Agreements section.

To understand the SAP BTP-related terms, you will need to check out several documents. Because these documents and their URLs are updated from time to time, we won’t provide URLs here. Instead, you need to search for the latest version in SAP Trust Center. In addition, SAP provides documents that are specific to countries, so they will be in different languages. You can pick the one that suits your situation.

As mentioned previously, as with many software vendors, SAP refers to system availability as the primary SLA. So, let’s go through the documents we can use to trace the SLA for SAP HANA Cloud as an example:

- General Terms and Conditions for SAP Cloud Services: As the name suggests, this document puts forward general terms and conditions with headings such as definitions, usage rights, responsibilities, warranties, and more. This document, then, refers to an applicable SLA or Supplement document.

- Service Level Agreement for SAP Cloud Services: This document considers SLAs that are generic for all SAP cloud solutions. Besides, it provides important definitions. With this document, we learn that system availability calculation excludes downtime due to planned maintenance. According to this document’s version at the time of writing this book, SAP promises a system availability of 99.7% for its cloud solutions in general.

- SAP Business Technology Platform Supplemental Terms and Conditions: With this document, SAP provides terms and conditions specific to SAP BTP. Here, we learn that SAP increases its promise for the availability of SAP BTP to an SLA of 99.9%. However, the document says there may be deviations from this SLA and refers to another document – that is, the Service Description Guide.

- SAP Business Technology Platform Service Description Guide: This document contains details for all SAP BTP services in terms of their metric definitions, sizing considerations, and SLAs. For some sophisticated services, this document may refer to other supplement documents, and SAP HANA Cloud is one of them.

- SAP HANA Cloud Supplement: Finally, here, we find specific information for SAP HANA Cloud. At the time of writing this book, the minimum SLA that SAP offers for SAP HANA Cloud is 99.9%. As discussed earlier, the document says it is up to the customer to improve the SLA to 99.95% by creating replicas. SAP does not specify any SLAs for RTO and RPO.

It may not end there, and you may need to check your contract with SAP to see whether any specific agreement terms override these options.

Working with SLAs

Now that you know how to check the SLAs for SAP BTP services, let’s discuss how you can use this information. You may have noticed that, although we use the term SLA generically, we have been referring to the availability SLA. When discussing non-functional design for business continuity, we discussed how you could enhance resilience in your designs; however, we did so without specifying SLAs. The baseline SLA is what you get from SAP BTP and, depending on your design, you may need to consider composite SLAs. As an example, let’s take a custom application in the Cloud Foundry runtime:

- Suppose you deploy only one instance of the application. In that case, you should expect an SLA of 99.9% because the documentation provides a specific SLA only if you deploy multiple instances. Thus, with one instance, you are at the minimum SLA specified for SAP BTP.

- If you create multiple instances for your application, the SLA becomes 99.95% because SAP BTP, under the hood, deploys the application in different availability zones.

- If you need an even higher SLA and can justify the cost of implementing and maintaining it, you can design a multi-region arrangement, as illustrated in Figure 5.3.

Checking the Service Description Guide at the time of writing this book, we can see that most technical SAP BTP services are offered with a 99.95% availability SLA, whereas business services generally have 99.7%. Although still on par with industry standards, the business services’ SLA is lower mainly because these services consist of several components, which brings the composite SLA down.
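The effect of composition can be illustrated with the textbook formulas for composite availability: components that must all be up multiply their availabilities, while redundant copies (such as instances in different zones or regions) fail only if all of them fail. The following Python sketch assumes independent failures, which real deployments rarely guarantee, and the component figures are hypothetical. SAP’s published SLAs are contractual commitments and are not necessarily derived this way:

```python
from functools import reduce


def serial(*availabilities: float) -> float:
    """All components are required: availabilities multiply."""
    return reduce(lambda acc, a: acc * a, availabilities, 1.0)


def parallel(*availabilities: float) -> float:
    """Redundant copies: the system is down only if every copy is down.
    Assumes independent failures."""
    return 1 - reduce(lambda acc, a: acc * (1 - a), availabilities, 1.0)


# Hypothetical business service made of three components at 99.95% each.
print(f"{serial(0.9995, 0.9995, 0.9995):.4%}")  # 99.8501%
# Hypothetical multi-region setup: two independent regions at 99.95% each.
print(f"{parallel(0.9995, 0.9995):.6%}")  # 99.999975%
```

The serial case shows why a service built from several components ends up with a lower composite figure, and the parallel case shows why redundancy raises it.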

Now, let’s see what you can do on the operations side. To begin with, you need to be aligned with SAP’s maintenance and upgrade strategy for planned downtime. You are in the cloud now, and as you may already know, you cannot opt out of receiving a product update in most cases. To ensure this alignment, subscribe to cloud system notifications by going to https://launchpad.support.sap.com/#/csns.

To monitor the availability of SAP BTP services, you can use SAP’s Cloud Availability Center (CAC) at https://launchpad.support.sap.com/#/cacv2. This tool provides detailed information on cloud service availability, such as planned events, disruptions, timelines, disruption causes, and more. These two URLs are for SAP customers, so you will need an S-user ID to access these tools. For general information, you can visit https://support.sap.com/en/my-support/systems-installations/cac.html.

Finally, if, in a given month, the availability you experience falls below the level set by the SLA, you can open an incident to request service credits, subject to the terms and conditions.
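Before opening such an incident, it helps to check your own numbers. The following Python sketch computes monthly availability with planned maintenance excluded from the measured time, as described in the SLA document discussed earlier. The exact formula and measurement rules are defined by SAP’s SLA terms, so treat this as a simplified approximation; the downtime figures in the example are hypothetical:

```python
def monthly_availability_pct(total_minutes: float, planned_maintenance_minutes: float,
                             unplanned_downtime_minutes: float) -> float:
    """Availability over the month, excluding announced planned maintenance.
    Simplified assumption: the authoritative formula is in the SLA document."""
    measured = total_minutes - planned_maintenance_minutes
    return 100 * (measured - unplanned_downtime_minutes) / measured


def below_sla(availability_pct: float, sla_pct: float) -> bool:
    return availability_pct < sla_pct


# Hypothetical month: 30 days, 4 hours of announced maintenance, 90 minutes of outages.
avail = monthly_availability_pct(30 * 24 * 60, 240, 90)
print(f"{avail:.3f}% below 99.95%: {below_sla(avail, 99.95)}")
# 99.791% below 99.95%: True
```

Note that the 4 hours of announced maintenance do not count against the SLA; only the 90 minutes of unplanned outages do.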

This concludes what we wanted to say about SLAs. Next, let’s discuss how SAP supports SAP BTP and some peripheral considerations for you to make the most of SAP BTP.

Getting support

With a fast-evolving cloud platform in hand, having vendor support becomes much more important to keep pace with it. On the other hand, the cloud vendors are keener to interact more intensely with customers for strategic alignment. Their success is measured not only by how much they sell but also by how much their solutions are adopted and consumed. The competition in the cloud market makes this mandatory. Therefore, it’s advantageous for both the supplier and the customer to continuously monitor and maximize the consumption of cloud services. For the customer, this means they get what they pay for, while for the supplier, it increases the chance of the customer staying with them. Here, support quality is a key factor that accelerates adoption, eliminates roadblocks, and increases consumption.

Support services

SAP has a support framework that provides various channels for a diverse set of requirements. For SAP cloud, which also includes SAP BTP, there are two primary support services:

- Enterprise Support, cloud edition: This is the standard support service level with no additional cost. Through this support channel, you can interact with the experts, get involved in Enterprise Support Academy training opportunities, and get access to tools such as SAP Solution Manager, SAP Cloud Application Lifecycle Management, and SAP Innovation and Optimization Pathfinder.

- Preferred Success: This is the improved support service that comes with a price. With this plan, you can get more proactive and tailored guidance that aims to increase the adoption and efficiency of SAP cloud technologies and solutions. In addition, you get faster issue resolution as the plan includes quicker target response times.

Apart from the support plans that apply to the SAP cloud, you can also benefit from premium engagement plans such as SAP ActiveAttention and SAP MaxAttention. SAP MaxAttention is the most exclusive level of premium engagement, and with it, you can enable the orchestration of all service and support engagements under one roof.

To reach SAP support, you can report incidents in the SAP Support Portal at https://support.sap.com. When reporting incidents, it is important to provide the correct product function or component information so that your incident can be forwarded to the right team quickly. You can use the search functionality in the portal to find the right component. Alternatively, you can get this information from SAP Discovery Center. On a service’s information page, the support section generally refers to the support component for the service:

Figure 5.9 – SAP Discovery Center – support information for the SAP Launchpad Service

The preceding screenshot shows the support section for the SAP Launchpad Service at the SAP Discovery Center. As you can see, it specifies the support component – that is, EP-CPP-CF – which you can use to raise an incident about the SAP Launchpad Service.

People

When getting support for your SAP solutions, you may interact with people with different roles. Let’s talk about some of them:

- Account Executive: This is the main point of contact for the business relationship between SAP and your company. In general, account executives deal with commercial matters, such as licenses and contracts. However, they can also step in for serious escalations. Apart from the industry account executive, who generally leads the customer account, you may also interact with other line-of-business account executives specialized in specific solutions.

- Cloud Success Partner (CSP): This role’s main aim is to help customers successfully adopt, consume, and renew their investment in a specific SAP cloud solution. This role was previously known as Cloud Engagement Executive (CEE). For example, with your SAP BTP CSP, you can discuss any matter related to your consumption of SAP BTP, such as strategic alignment, technical issues, product information, and more.

- Cloud Success Executive: This role’s aim is similar to the CSP’s; however, the responsibility is at a more generic level. The Cloud Success Executive supports the account executive in coordinating cloud adoption across solutions and routes cloud-related matters to specific CSPs where needed.

- Technical Quality Manager (TQM): This role manages the customer relationship for premium engagements and coordinates operational support activities. Their scope is not restricted to on-premise or cloud and can support customers with incidents, as well as general support matters, within the premium engagement terms.

Surely, there are people in other roles who support your relationship with SAP as a vendor. For example, when meeting with the aforementioned people, they can bring in experts from the product, solution advisory, RISE with SAP, or consulting teams where needed.

Resources

There is a plethora of resources, including learning resources, that you can leverage to maximize your use of SAP BTP and find answers to your questions. Cloud technology is continuously changing, and you need to be proactive in upskilling your teams so that they adopt new changes quickly. The following are the main resources you can go to:

- Read this book (it goes without saying, right?).

- Follow SAP Community at https://community.sap.com, where you can ask questions or answer others’ questions to sharpen your skills. Besides, you can find thousands of blog posts that provide valuable information. SAP uses this channel to publicize information on new solutions or changes to existing solutions.

- Use SAP Discovery Center at https://discovery-center.cloud.sap.

- Explore SAP Learning Journeys for free at https://learning.sap.com.

- Watch courses and microlearning content in openSAP at https://open.sap.com. In ongoing courses, you can interact with the instructors, who are SAP experts. Besides, you can watch previously conducted courses at your own pace.

- Follow SAP Developer Center, where you can find hundreds of tutorials, trials, and other learning materials. Also, follow people such as SAP Developer Advocates, who create valuable content on various online channels.

- Use SAP Help at https://help.sap.com/viewer/product/BTP.

- Join SAP’s Customer Influence programs such as Customer Engagement Initiatives (CEI) and Early Adopter Care (EAC). With these, you can get extra support for SAP solutions, get to know newly released features, and influence product development with your ideas.

- Attend significant events such as SAP TechEd and SAPPHIRE NOW, where SAP releases lots of new information and training content.

- Check out standard SAP training offerings, including SAP Learning Hub and in-class training.

- Leverage SAP initiatives such as Intelligent Enterprise Institute, Co-Innovation Lab (COIL), Enterprise Support Advisory Council, Technical Academy, and SAP BTP Customer Value Network.

- Finally, keep an eye on other books, virtual events, webinars, blogs, and articles – for example, webinars from SAP user groups such as ASUG and UKISUG.

Summary

How did it go? We have gone through so many topics. First, we discussed non-functional design while concentrating on business continuity, data management, performance, capacity, scalability, and observability. For these, we set the stage first and then explained what SAP BTP provides for non-functional requirements and how you, as the consumer, can design solutions around the options you have.

In the second section, we touched upon SLAs, which are primarily linked to the non-functional requirements around availability. After that, we showed you how to find out the SLAs SAP promises for SAP BTP services so that you can cascade them into your design’s resilience arrangements.

Finally, we talked about SAP support services, people you can interact with while getting support, and the primary resources you can use to get help.

This was the last chapter of the first part of this book. In the next chapter, we will start a new part, where we will delve into the topic of integration. Excited? Continue reading!