You worked hard to digitize your ideas and send them our way in the form of light and sound. But they must be encoded into neural impulses for your app to work and your business model to succeed. From a business perspective, a meme that never enters a brain is the tree that falls in the proverbial empty forest—it doesn’t exist.

To cross the organic boundary into our nervous systems, your meme must first fall in our line of sight. That statement may be painfully obvious to you, but it is an even bigger pain point for us. Your meme will fail if the light from it only reaches our peripheral vision, where we can neither read nor see color.

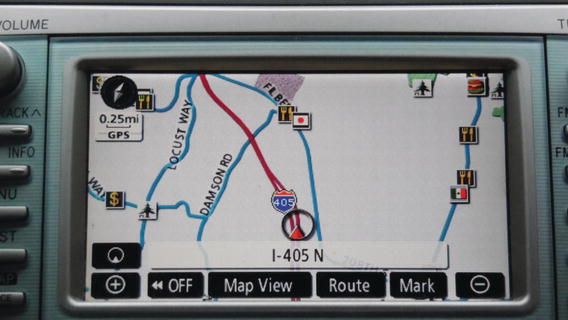

Several billion-dollar examples instantly leap to mind: navigating while driving, video calling, and seeing display ads on web sites. To illustrate, most if not all of the point-of-interest icons designed for this dashboard navigational display are difficult or impossible to see or appreciate while driving (Figure 1-1). It was someone’s job (maybe yours) to make these memes, like the hamburger icon, or Korean, Italian, and American flags for different ethnic restaurants, but they may never actually enter a driver’s brain in the moment when they might be of use.

Figure 1-1. Dashboard display.

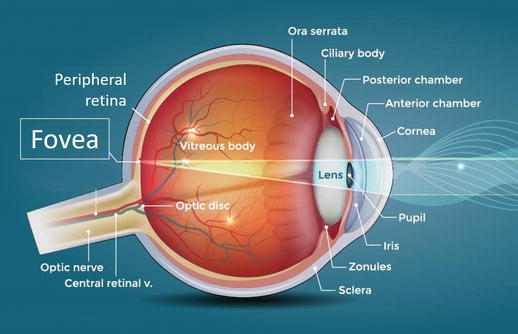

Consider closely the back wall of our eyeballs and you’ll understand what you’re up against. Our retinae have a lot of neurons to catch the light, but the cone-shaped neurons that let us see color and the detailed edges of characters are concentrated in one tiny area, called the fovea, which is opposite our pupils (Figure 1-2). i Our foveae are amazingly sensitive when we point them your way: we can detect whether or not you’re holding a quarter from 90 yards off. But if we’re looking just to the right or left of you, our acuity plummets to only 30% of what it is when we look straight at you. A little further off, our acuity drops to 10%. ii

Figure 1-2. Diagram of the fovea. iii

What that means for your meme is that we cannot read it if we’re looking a mere six degrees to the left or right. At the typical distance to a screen, we’re blind to symbolic information a mere five characters away from where we are focused. Stare at the period at the end of this sentence and count the number of words you can make out past it. Not too many. Perhaps we could read your meme in our peripheral vision if you increased your font size. But you’d have to increase it 400% if we’re looking six degrees off, 3000% if we’re looking 20 degrees off, and 9000% if we’re looking 30 degrees off. Good luck doing that on a smartphone screen or a dashboard display.
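To make that arithmetic concrete, here is a minimal sketch in Python that applies the three scaling figures quoted above to a base font size. It assumes that "increase it 400%" means adding 400% of the base size, so the result is five times the original; if the figures are meant as plain multipliers, each result shrinks by one base size. The function name and the 12-point base are illustrative assumptions, not values from the cited research.

```python
# Percentage increases quoted in the text, keyed by degrees away from the fovea.
FONT_INCREASE_PCT = {6: 400, 20: 3000, 30: 9000}


def required_font_size(base_pt: float, degrees_off: int) -> float:
    """Return the font size needed to stay legible at the given eccentricity.

    Assumption: "increase by N%" is read as base + N% of base.
    Only the three eccentricities quoted in the text are supported.
    """
    if degrees_off not in FONT_INCREASE_PCT:
        raise ValueError(f"no figure quoted for {degrees_off} degrees")
    return base_pt * (1 + FONT_INCREASE_PCT[degrees_off] / 100)


if __name__ == "__main__":
    for degrees in sorted(FONT_INCREASE_PCT):
        size = required_font_size(12, degrees)
        print(f"{degrees:>2} degrees off: {size:.0f} pt")
```

With a 12-point base, this reading gives 60 pt at six degrees, 372 pt at 20 degrees, and 1,092 pt at 30 degrees. At 72 points to the inch, that last letter would stand roughly 15 inches tall, far taller than a smartphone screen.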

Key Point

Your meme will fail if the light from it only reaches our peripheral vision, where we can neither read nor see color.

But even useful memes are impeded by our anatomy if they are not designed in harmony with it. For example, many human-factors experts consider video calling to be among the slowest-spreading memes in the history of tech inventions (Figure 1-3). We’ve had video calling technology longer than we’ve had microwave ovens or camcorders. And yet, while the penetration of those other inventions is all but complete in developed markets, as is that of other forms of communication like texting, still only a fraction of us use video calling daily or monthly, if at all.

Figure 1-3. Technology adoption rates. iv

Why would this be? Many factors could be to blame, but one might be the offset of 20 degrees or more between the webcam and the eyes of the person we are talking with. Because no one has yet invented a webcam, native or peripheral, that sits directly behind the monitor rather than on top of it or to its side, we never get to look directly into the gaze of our friends and family members while we talk (Figure 1-4). Nor do they look into ours, because to do so, we’d both have to look directly into the cameras, at which point we could no longer make out each other’s faces. The problem persists even on smaller devices because our foveal acuity is so narrow (Figure 1-5).

Figure 1-4. Sensitivity to gaze direction from Chen (2002). Original caption: “The contour curves mark how far away in degrees of visual angle the looker could look above, below, to the left, and to the right of the camera without losing eye contact. The three curves indicate where eye contact was maintained more than 10%, 50%, and 90% of the time. The percentiles are the average of sixteen observers. The camera is at the graph origin.” v

Figure 1-5. (a) The typical experience with video calls in which, when we look at others’ faces, we see them looking away. (b) Looking into the camera directs our gaze appropriately, but now we can no longer make out each other’s faces. This artificial view is shown in most advertising for video-calling services.
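The size of that camera offset follows from simple geometry: the angle between the camera and the on-screen eyes, as seen by the caller, is the arctangent of their separation divided by the viewing distance. The Python sketch below works through that calculation. The separations and viewing distances are hypothetical values chosen for illustration, not measurements from Chen (2002) or from any particular device.

```python
import math


def gaze_offset_degrees(separation_cm: float, viewing_distance_cm: float) -> float:
    """Angle, in degrees, between the camera and the on-screen eyes.

    separation_cm: distance on the device between the camera lens and the
        spot where the other person's eyes are drawn (hypothetical input).
    viewing_distance_cm: distance from the viewer's eyes to the screen.
    """
    if viewing_distance_cm <= 0:
        raise ValueError("viewing distance must be positive")
    return math.degrees(math.atan2(separation_cm, viewing_distance_cm))


if __name__ == "__main__":
    # Hypothetical setups: (label, separation in cm, viewing distance in cm).
    setups = [
        ("desktop monitor", 18.0, 50.0),
        ("laptop", 10.0, 45.0),
        ("smartphone", 6.0, 30.0),
    ]
    for label, separation, distance in setups:
        angle = gaze_offset_degrees(separation, distance)
        print(f"{label}: {angle:.1f} degrees")
```

Under these assumed numbers, the desktop setup comes out near 20 degrees and the smartphone near 11 degrees. A smaller device shortens the separation, but we also hold it closer, so the angle shrinks less than the screen does.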

Thus we’ve had face-to-face calling for over 85 years, but never quite eye-to-eye calling. The best that the top video-calling applications have ever given us is a view of our friends’ eyes looking away from us as we look at them (although interestingly, their ads never show it this way). A technology so mismatched with human nature has proved very slow to be adopted, far slower than voice-to-voice calling was before it.

Or consider ads on web sites. By 2016, U.S. companies alone were spending over $30 billion on internet display ads, vi over half of which didn’t display on a screen long enough to be viewable (that is, they failed to render at least half of their pixels for at least a second). vii And of those that did, the vast majority hit our peripheral retina, where we can’t make them out as we read the content elsewhere on the page. We need only point our foveae five characters to the left or right, doing whatever it is we came to do, and your ads are lost on us. Let the sheer waste and lost ROI caused by this incredibly powerful psychological bottleneck sink in. Not to mention the inaccuracy of reach statistics, which only measure whether the ad was queried from a server, ignoring whether it landed on a fovea, or even a peripheral retina. This is not the path to memetic fitness, let alone marketing success and profitability.
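The viewability test mentioned above can be sketched in a few lines of Python. The sketch assumes the criterion implied by the text: an ad counts as viewable only if at least half of its pixels stay on screen for at least one continuous second. The sample format and the function name are hypothetical.

```python
from typing import Iterable, Tuple

MIN_VISIBLE_FRACTION = 0.5   # at least half of the ad's pixels
MIN_DURATION_SECONDS = 1.0   # for at least one continuous second


def is_viewable(samples: Iterable[Tuple[float, float]]) -> bool:
    """Decide whether an ad impression met the viewability criterion.

    samples: (timestamp_seconds, visible_fraction) pairs in time order,
        a hypothetical log of how much of the ad was on screen.
    """
    run_start = None  # time at which the current qualifying run began
    for timestamp, fraction in samples:
        if fraction >= MIN_VISIBLE_FRACTION:
            if run_start is None:
                run_start = timestamp
            if timestamp - run_start >= MIN_DURATION_SECONDS:
                return True
        else:
            run_start = None  # the run was broken; start over
    return False
```

Note what this check cannot tell you. An ad can pass it while sitting entirely in our peripheral vision, because nothing in the log records where our foveae were pointed.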

And then there are our cars, the next big battleground for tech dominance. Whoever prevails in this context must find design solutions to accommodate the fact that we must point our foveae forward out of the windshield while we drive. This is because our foveae are also required for depth perception, something our peripheral vision is incapable of, and thus many states mandate we keep them on the stop-and-go traffic ahead. The problem is that you need to rethink the traditional monitor. Positioned currently where the radio traditionally sits, or on a smartphone held in a driver’s hand, it is so far away from our foveal vision that we expose ourselves to real danger in trying to view any of your memes shown there (Figure 1-6). In a 2014 report, the U.S. National Transportation Safety Board listed “visual” and “manual” distractions on its “most wanted list” of critical changes to reduce accidents and save lives (in addition to “cognitive” distractions, which we’ll return to later). The report specifically referenced “the growing development of integrated technologies in vehicles” and its potential to contribute to “a disturbing growth in the number of accidents due to distracted operators.” viii

Figure 1-6. Dashboard display challenge. Most design elements on dashboard displays will be unreadable by drivers focusing on the traffic ahead unless they are projected onto the windshield. ix

Certainly, if self-driving cars proliferate, then the entire interior of cars can be redesigned and turned into a media room or a productivity center (which will spark its own platform for competing memes). The speed with which this technology proliferates will depend on the incidence of fatal crashes, like the 2016 accident involving a self-driving Tesla, and on whether drivers will legally be allowed to let their attention wander. x

But for those of us who continue to drive, whether out of economics or the pace of change, our retinal anatomy would predict that our windshields will become our monitors, where your digital memes will be displayed. Clearly, they must not compete with the things we need to see outside the car, but instead augment them. The first memes to warrant display on windshields will make road hazards like falling rocks and crossing deer more visible, forewarn us of tight curves, and signal slowdowns in traffic. After the first wave of safety memes is established, next will come convenience memes: those that enhance street signs and outline upcoming freeway exits. Finally, after considerable testing, which will provide jobs for memetic engineers, the third wave of commercial memes will arise on our windshields: digital billboards pointing the way to gas stations and restaurants, specially adapted for the windshield. Commercial logos have been displayed on GPS units and “heads-up” displays already for some time; maybe on windshields they will finally hit our foveae and enter our brains.

Is there a limit to the content that can be projected on a windshield? Of course there is. But scarcity is the foundation of value, so this only drives up the price for a placement. How can legislators help? Not by banning windshield displays altogether, but by establishing a data-driven regulatory agency, in the United States perhaps under the National Transportation Safety Board (NTSB) or the National Highway Traffic Safety Administration (NHTSA), that approves memes the way the FDA approves drugs. Broadly, windshield memes must be shown empirically to…

Increase drivers’ safety, not imperil it

Improve our driving, not impair it

Augment reality, not distract from it

As you see, our psychological constrictions matter, starting with the nerves in our eyeballs. But this is only the beginning, since our attentional capacity is just as laser-thin.

Notes

i. Jonas, J. B., Schneider, U., & Naumann, G. O. H. (1992). Count and density of human retinal photoreceptors. Graefe’s Archive for Clinical and Experimental Ophthalmology, 230(6), 505–510.

ii. Anstis, S. M. (1974). A chart demonstrating variations in acuity with retinal position. Vision Research, 14, 589–592. Retrieved from http://anstislab.ucsd.edu/2012/11/20/peripheral-acuity/.

iii. Used with permission from Cellfield Canada Inc.

iv. Adapted from Felton, N. (2008, February 10). Consumption spreads faster today (graphic). The New York Times. Retrieved from http://www.nytimes.com/imagepages/2008/02/10/opinion/10op.graphic.ready.html.

See also Rainie, L., & Zickuhr, K. (2010). Video calling and video chat. Pew Research Center’s Internet & American Life Project. Retrieved from http://www.pewinternet.org/2010/10/13/video-calling-and-video-chat/.

See also Poltrock, S. (2012, October 19). Why has workplace video conferencing been so slow to catch on? Presentation for Psychster Labs.

v. Chen, M. (2002, April 20). Leveraging the asymmetric sensitivity of eye contact for videoconferencing. Presentation given at CHI, Minneapolis, MN. Retrieved from http://dl.acm.org/citation.cfm?id=503386&CFID=864400319&CFTOKEN=50601798. Copyright ACM Inc. Used with permission.

vi. eMarketer. (2016, January 11). US digital display ad spending to surpass search ad spending in 2016. Retrieved from http://www.emarketer.com/Article/US-Digital-Display-Ad-Spending-Surpass-Search-Ad-Spending-2016/1013442.

vii. Loechner, T. (2013, October 30). 54% of digital ads aren’t viewable, and even ‘viewability’ is in a black box. MediaPost. Retrieved from http://www.mediapost.com/publications/article/212460/54-of-digital-ads-arent-viewable-and-even-view.html.

viii. National Transportation Safety Board. (2014). NTSB most wanted list: Critical changes needed to reduce transportation accidents and save lives. Retrieved from http://www.ntsb.gov/safety/mwl/Documents/2014/03_MWL_EliminateDistraction.pdf.

ix. Evans, G. A. (Photographer). (2016, November).

x. Stoll, J. D. (2016, July 22). Tesla autopilot crash shouldn’t slow self-driving development, regulator says. The Wall Street Journal. Retrieved from http://www.wsj.com/articles/tesla-autopilot-crash-shouldnt-slow-self-driving-development-regulator-says-1469200956.