Although this chapter may seem to conflict with the advice not to focus purely on performance, there’s a fine line to walk. On the one hand, you don’t need to get bogged down shaving microseconds off a script’s runtime. On the other hand, you shouldn’t completely disregard script performance.

There is a gray area that you need to stay within to ensure a well-built PowerShell script.

Don’t Use Write-Host in Bulk

Although some would tell you never to use the Write-Host cmdlet, it still has its place. Along with that functionality, though, comes a small performance hit. Write-Host does nothing “functional”; the cmdlet simply writes text to the PowerShell console.

Don’t sprinkle Write-Host calls through your scripts without thought. For example, don’t put a Write-Host call inside a loop that processes a million items. You’ll never read all of that output, and you’re slowing down the script unnecessarily.

If you must write information to the PowerShell console, use [Console]::WriteLine() instead.

Tip Source: https://twitter.com/brentblawat
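To see the cost for yourself, a rough benchmark like the one below times the same loop with Write-Host and with [Console]::WriteLine() using Measure-Command. The loop size is an arbitrary assumption, and actual timings depend on your PowerShell version, host, and machine, so treat the numbers as relative rather than definitive.

```powershell
# Rough, illustrative comparison of Write-Host vs. [Console]::WriteLine() in a loop.
# The iteration count is arbitrary; results vary by host, version, and hardware.
$iterations = 5000

# Time a loop that writes each line with Write-Host.
$writeHostTime = Measure-Command {
    foreach ($i in 1..$iterations) {
        Write-Host "Processing item $i"
    }
}

# Time the same loop writing directly to the console via .NET.
$consoleTime = Measure-Command {
    foreach ($i in 1..$iterations) {
        [Console]::WriteLine("Processing item $i")
    }
}

# Report both timings in milliseconds.
"Write-Host:             {0:N0} ms" -f $writeHostTime.TotalMilliseconds
"[Console]::WriteLine(): {0:N0} ms" -f $consoleTime.TotalMilliseconds
```

One design trade-off to keep in mind: [Console]::WriteLine() writes straight to the process’s standard output and bypasses PowerShell’s streams, so its output can’t be captured or redirected the way Write-Host’s information stream can, and it may not show up at all in non-console hosts such as the PowerShell ISE.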

Further Learning

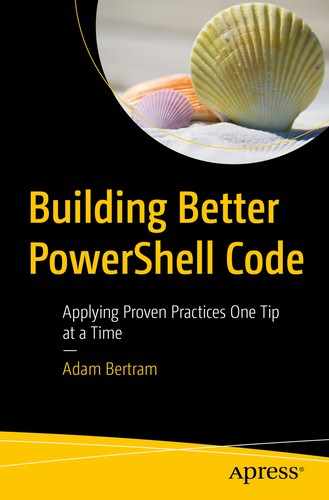

Don’t Use the Pipeline

The PowerShell pipeline, although a wonderful feature, is slow. Behind the scenes, PowerShell must bind the output of one command to the input of the next, and all of that work is overhead that takes time to process.

When you don’t need the pipeline, don’t use it.
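As an illustration, the sketch below doubles the same set of numbers twice: once by piping them to ForEach-Object and once with a plain foreach statement, timing each with a .NET Stopwatch. The data set size is an assumption chosen only to make the difference visible; both approaches produce identical results.

```powershell
# Illustrative comparison: pipeline (ForEach-Object) vs. a foreach statement.
$numbers = 1..100000

# Pipeline version: each number is bound to ForEach-Object one at a time.
$stopwatch = [System.Diagnostics.Stopwatch]::StartNew()
$pipelineResult = $numbers | ForEach-Object { $_ * 2 }
$pipelineMs = $stopwatch.ElapsedMilliseconds

# foreach statement version: no pipeline binding involved.
$stopwatch.Restart()
$foreachResult = foreach ($n in $numbers) { $n * 2 }
$foreachMs = $stopwatch.ElapsedMilliseconds

"Pipeline (ForEach-Object): $pipelineMs ms"
"foreach statement:         $foreachMs ms"
```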

Further Learning

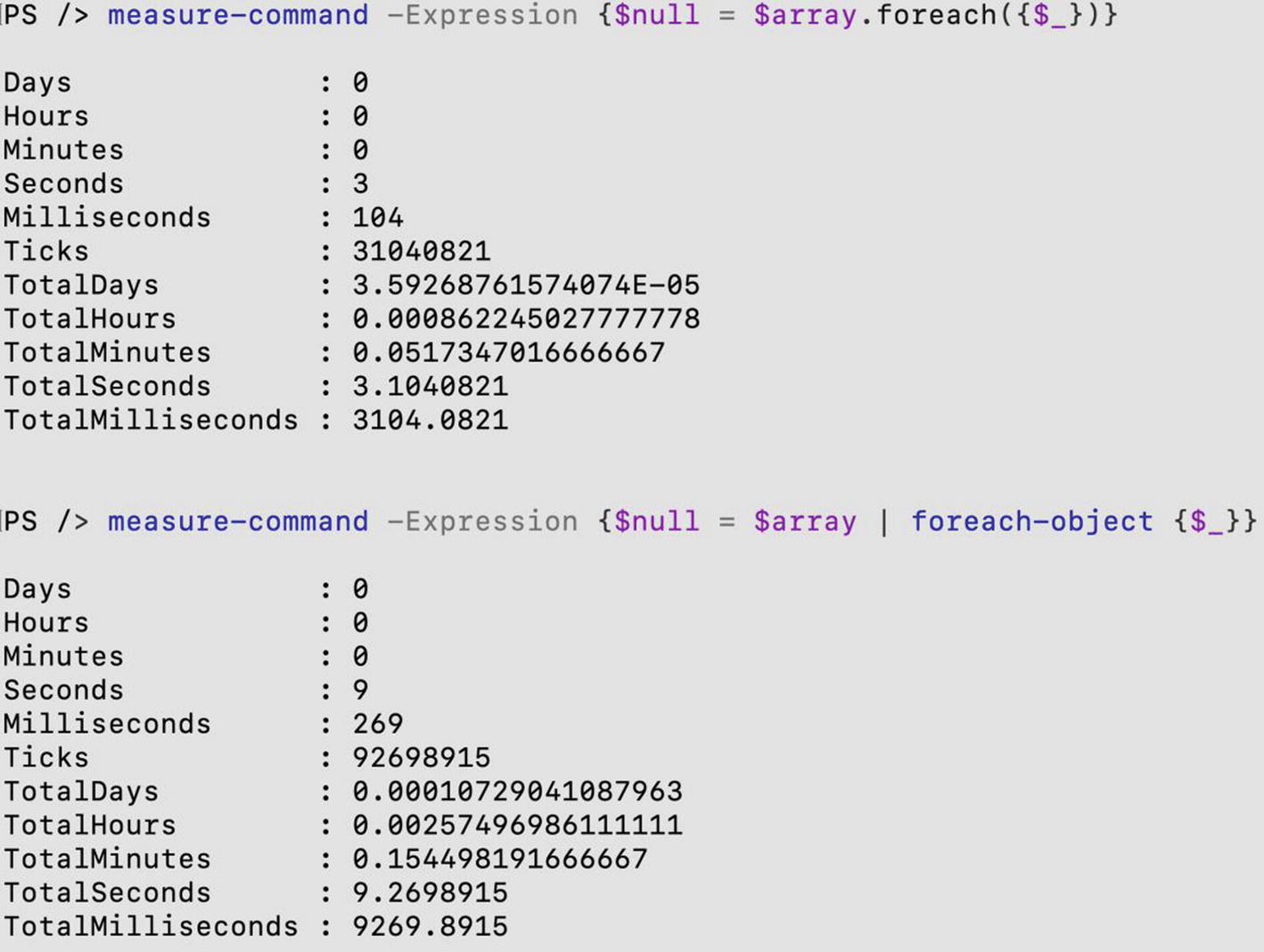

Use the foreach Statement in PowerShell Core

PowerShell has a few different ways to iterate through collections, including the foreach statement, the ForEach-Object cmdlet, and the foreach() method. The fastest is the foreach statement in PowerShell Core. Its speed varies across versions, but in PowerShell Core, the PowerShell team has really made it fly.

In a side-by-side comparison, the foreach() method took four times as long as the foreach statement!
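A minimal sketch for running a comparison like that yourself is shown below. It times the foreach statement against the ForEach() collection method on the same array; the collection size is an arbitrary assumption, and the ratio you observe will depend on your PowerShell version and machine.

```powershell
# Illustrative comparison: foreach statement vs. the ForEach() collection method.
$items = 1..500000

# foreach statement.
$stopwatch = [System.Diagnostics.Stopwatch]::StartNew()
$statementResult = foreach ($item in $items) { $item + 1 }
$statementMs = $stopwatch.ElapsedMilliseconds

# ForEach() method (available on collections in PowerShell 4.0 and later).
$stopwatch.Restart()
$methodResult = $items.ForEach({ $_ + 1 })
$methodMs = $stopwatch.ElapsedMilliseconds

"foreach statement: $statementMs ms"
"ForEach() method:  $methodMs ms"
```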

Further Learning

Use Parallel Processing

Leveraging PowerShell background jobs and .NET runspaces, you can significantly speed up processing through parallelization. Background jobs are a native PowerShell feature that lets you run code in the background as a job. A runspace is a similar .NET concept, but working with runspaces directly requires a deeper understanding of .NET. Luckily, the PoshRSJob PowerShell module makes runspaces easier to use.

Let’s say you have a script that attempts to connect to hundreds of servers. These servers can be in many different states: completely offline, misconfigured so that no connection is possible, or connectable. If you process each connection serially, you’ll have to wait for every offline or misconfigured server to time out before attempting to connect to the next one.

Instead, you could put each connection attempt in a background job. That starts all of the attempts at nearly the same time, and then you simply wait for all of them to finish.

In this example, the server names come from a text file that you read with Get-Content -Path C:\servers.txt.
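A minimal sketch of the background-job approach follows, using only the built-in Start-Job, Wait-Job, Receive-Job, and Remove-Job cmdlets (not PoshRSJob). Test-ServerConnection is a hypothetical helper, and a single ping via Test-Connection stands in for whatever connection attempt your script actually makes; swap in your own check.

```powershell
function Test-ServerConnection {
    # Hypothetical helper: runs one connection check per server in its own
    # background job so slow or offline servers time out concurrently.
    param (
        [Parameter(Mandatory)]
        [string[]]$ComputerName
    )

    # Start one job per server; each job emits a small result object.
    $jobs = foreach ($server in $ComputerName) {
        Start-Job -ArgumentList $server -ScriptBlock {
            param ($Name)
            # A single ping is a placeholder for your real connection attempt.
            [pscustomobject]@{
                ComputerName = $Name
                Online       = [bool](Test-Connection $Name -Count 1 -Quiet -ErrorAction SilentlyContinue)
            }
        }
    }

    # Block until every job has finished, collect its output, then clean up.
    $results = $jobs | Wait-Job | Receive-Job
    $jobs | Remove-Job
    $results
}
```

Usage, reading the server names from the file mentioned above: `Test-ServerConnection -ComputerName (Get-Content -Path C:\servers.txt)`. Keep in mind that each Start-Job call launches a separate PowerShell process, so this pays off when individual checks are slow (such as waiting on timeouts), not for trivial per-item work.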

Further Learning

Use the .NET StreamReader Class When Reading Large Text Files

The Get-Content PowerShell cmdlet works well for most files, but if you’ve got a large file, hundreds of megabytes or several gigabytes in size, drop down to .NET and use the System.IO.StreamReader class.

The Get-Content cmdlet is much simpler to use, but it’s also much slower. If you need speed, the StreamReader approach is the way to go.
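A minimal sketch of the StreamReader pattern is below. Measure-LineCount is a hypothetical helper that simply counts lines; replace the counter with whatever per-line processing your script needs. It assumes PowerShell 5.0 or later for the `::new()` constructor syntax.

```powershell
function Measure-LineCount {
    # Hypothetical helper: streams a (potentially huge) text file one line at a
    # time with System.IO.StreamReader instead of loading it via Get-Content.
    param (
        [Parameter(Mandatory)]
        [string]$Path
    )

    # .NET resolves relative paths against the process working directory, not
    # PowerShell's current location, so convert to a full filesystem path first.
    $fullPath = Convert-Path -Path $Path
    $reader = [System.IO.StreamReader]::new($fullPath)
    try {
        $count = 0
        # ReadLine() returns $null once the end of the file is reached.
        while ($null -ne ($line = $reader.ReadLine())) {
            # Per-line processing goes here; this sketch only counts lines.
            $count++
        }
        $count
    }
    finally {
        # Always release the file handle, even if an error occurs mid-read.
        $reader.Dispose()
    }
}
```

The try/finally block matters with large files: if processing fails partway through, the file handle is still released instead of staying locked until the session ends.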

Further Learning

PERF-02 Consider Trade-offs Between Performance and Readability