Chapter 2. User-Item Ratings and Framing the Problem

If you were asked to curate the selection for a cheese plate at a local cafe, you may start with your favorites. You may also spend a bit of time asking for your friends’ favorites. Before you order a large stock in these cheeses for the cafe, you would probably want to run a small experiment. Maybe asking a group of friends to taste your selections and tell you their preferences.

In addition to learning their feedback, you’d also learn about your friends and the cheeses. You’d learn which kinds of cheeses your friends like and which friends have similar taste. You can also learn which cheeses are the most popular, and which cheeses are liked by the same people.

These data would start to give you hints about your first cheese recommender. In this chapter, we’ll talk about how to turn this idea into the right stuff for a recommender system. By way of this example, we’ll discuss one of the underlying notions of a recommender: how to predict a user’s affinity for things they’ve never seen.

The user-item matrix

It’s extremely common to hear those who work on recommendation systems talk about matrices, and in particular the user-item matrix. While linear algebra is deep, both mathematically and as it applies to RecSys, we will begin with very simplistic relationships.

Before we get to the matrix forms, let’s write down some binary relationships between a set of users and a set of items. For the sake of this example, think of a group of 5 friends (mysteriously named

-

-

-

-

-

The first thing you may notice is that such expository writing is a bit tedious to read and parse. Let’s summarize these results in a convenient table:

| Cheese Taster | Gouda | Chevre | Emmentaler | Brie |

|---|---|---|---|---|

A | 5 | 4 | 4 | 1 |

B | 2 | 3 | 3 | 4.5 |

C | 3 | 2 | 3 | 4 |

D | 4 | 4 | 5 | - |

E | 3 | - | - | - |

Your first instinct may be to write this in a form more appropriate for computers. Like a collection of lists:

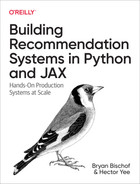

This may work in some scenarios, but you might want to more clearly indicate the positional meaning in each list. We could simply vizualize this data with a heatmap:

importseabornassns_=np.nanscores=np.array([[5,4,4,1],[2,3,3,4.5],[3,2,3,4],[4,4,5,_],[3,_,_,_]])sns.heatmap(scores,annot=True,fmt=".1f",xticklabels=['Gouda','Chevre','Emmentaler','Brie',],yticklabels=['A','B','C','D','E',])

Figure 2-1. Cheese ratings matrix

As we observe datasets with huge numbers of users or items, and with more and more sparsity we will need to employ a data structure more well suited to only representing the data necessary. There are a variety of so-called dense representations, but for now we will use the simplest form: tuples of user_id, item_id, and the rating. In practice, it’s often a dictionary with indices provided by the ids.

Note

Two types of data structures for these kinds of data are dense and sparse representations. Loosely, a sparse representation is one such that a datum exists for each non-trivial observation. Whereas a dense representation always contains a datum for each possibility even when trivial (null or zero).

'indices':[(0,0),(0,1),(0,2),(0,3),(1,0),(1,1),(1,2),(1,3),(2,0),(2,1),(2,2),(2,3),(3,0),(3,1),(3,2),(4,0)]'values':[5,4,4,1,2,3,3,4.5,3,2,3,4,4,4,5,3]

A few natural questions emerge:

-

What’s the most popular cheese? From the observations so far, it’s looking like

-

Would

-

If we were asked to buy two cheeses only, which should we buy to satisfy everyone the most?

This example and associated questions are intentionally simplistic, but the point is clear that this matrix representation above is at least convenient for capturing these ratings.

What may not come obviously is that beyond the convenience of this data visualization, is the mathematical utility of this representation. Question 2 above posits a question that is inherent in the RecSys problem space: “predict how much a user will like an item they’ve not seen”. This question also may be recognizable as a problem from a linear algebra class: “how can we fill in unknown elements of a matrix from the ones we know”. This is what is called matrix completion.

The back-and-forth between formulating user-experiences that capture their needs, and the mathematical formulations to model these data and needs will be at the heart of recommendation systems.

User-user vs item-item Collaborative Filtering

Before we dive into the linear algebra, let’s consider the purely data science perspective called collaborative filtering (CF) (Goldeberg et al, ’92).

The underlying idea of collaborative filtering is that those with similar taste, help others to know what they like without having to try it themselves. The collaboration terminology was originally intended to mean among similar-taste users, and the filtering aspect was originally intended to mean filtering out things people will not like.

There are two ways to think of this collaborative filtering strategy:

-

Two users with similar taste, will continue to have similar taste

-

Two items with similar user fans, will continue to be popular with other similar users to those fans

It may sound like these are identical, but they appear differently in the mathematical interpretations. At a high level, the difference is deciding which kind of similarity do you wish your recommender to make priority of–user similarity or item similarity.

If you prioritize user similarity, then to provide a recommendation for a user

If you prioritize item similarity, then to provide a recommendation for a user

Later we will dive deeper into similarity, but let’s quickly link these ideas to our brief discussion above. Similar users are rows of the user-item matrix which are similar as vectors; similar items are columns of the user-item matrix which are similar as vectors.

The Netflix challenge

In 2006, an online competition was kicked off on the website the Netflix prize. This competition was to see if teams could improve on the performance of the Netflix team’s collaborative filtering algorithms on an open sourced dataset from the company. While this is more common today via websites like Kaggle or via conference competitions at that time, such a competition was very exciting and novel for those interested in RecSys.

The competition consisted of several intermediate rounds of what were called Progress Prize, and the final Netflix Prize was awarded in 2009. The data provided was a collection of 2,817,131 triples consisting of (user, movie, date_rated). And half of these additionally include the rating itself. Notice that like our above example, the user-item information is nearly enough to specify the problem. In this particular dataset, the date was provided. Later on, we will dig into how time might be a factor, and in particular, for sequential recommendation systems.

The stakes were quite high in this competition, requirements for beating the internal performance were a 10% increase in Root Mean Square Error, RMSE–we will discuss this loss function later–but the spoils added up to over $1.1M. The final winners were BellKor’s Pragmatic Chaos(who incidentally won the two previous Progress prizes) with a test RMSE of 0.8567. In the end, only a 20-min earlier submission time kept BellKor ahead of the competitors The Ensemble.

To read in detail about the winning submissions check out Big Chaos’ Netflix Grand Prize and Big Chaos’ Progress Prize. In case you choose not to read those, we will a few important lessons from this game.

First, we see that the user-item matrix from before appears in these solutions as the critical data structure and mathematical. The model selection and training is important, but parameter tuning provided a huge improvement in several algorithms. We will return to parameter tuning in later chapters to make this more precise. The authors state that several model innovations came from reflecting on the business use-case and human behavior and trying to capture those patterns in the model architectures. Next, linear algebraic approaches served not only as the first reasonably performant solutions, but building on top of them led to the ultimately winning approach. Finally, eking out the performance that Netflix originally demanded to win the competition took so long, that business circumstances changed, and the solution was no longer useful.

That last one might be the most important thing a machine learning developer needs to learn about recommendations systems:

Tip

Build a working usable model quickly and iterate while the model is still relevant to the needs of the business.

Soft ratings

In our cheese tasting example above, each cheese received either a numerical rating, or was not tried by a guest. These are what are called hard ratings–regardless if the cheese is a brie or a chevre; they are explicit, and their absence indicates a lack of interaction between the user and item. In some contexts, we wish to accommodate occurrences wherein a user does interact with an item, and yet no rating is provided.

A common example is a movies app; a user may have watched a movie with the app, but not provided a star rating. This indicates that the item–in this case a movie–has been observed, but we don’t have the rating for our algorithms to learn from. However, we can still make use of this implicit data:

-

we can exclude this item from future recommendations

-

we could separately use this data as a separate term in our learner

-

we could assign a default rating value to indicate “interesting enough to watch, not significant enough to rate”

It turns out that implicit ratings are very important for training effective recommendation systems, not only because it’s common that users don’t give hard ratings, but because they provide a different level of signal. Later, when we wish to train multi-level models to predict both click-likelihood and buy-likelihood, these two levels will prove extremely important.

To sum up:

-

a hard rating is when the user is directly prompted for feedback on an item

-

a soft rating is when the user’s behavior implicitly communicates feedback on an item without direct prompt.

Data collection and user-logging

We established above that we learn both from explicit ratings and implicit ratings, so how and where do we get this data? To dive into this, we’ll need to start worrying about application code. In many businesses, the data scientists and ML engineers are separate from the engineers, but for recommendation systems there’s a strong need for alignment between the two functions.

What to log

The simplest and most obvious data collection is user-ratings. If users are given the option to provide ratings, or even thumbs-up and thumbs-down, that component will need to be built and that data will be stored. These ratings must be stored not only for the opportunity to build recommendations, it’s also a bad user experience to rate something and then shortly thereafter the rating doesn’t appear if you revisit the page.

Similarly, it’s useful to understand a few other key interactions that we will see can improve and expand your RecSys: page loads, page views, clicks, and add-to-bag.

For these data, let’s use a slightly more complicated example, an e-commerce website. Let’s take for this example bookshop.org. There are many different applications of RecSys on this one page, almost all of which we will return to in time, but for now let’s focus on some interactions.

Figure 2-2. Bookshop.org Landing Page

Page Loads

When you first load up Bookshop, it starts with items on the page. In xref:bookshop-landing, the Best Sellers of the Week are all clickable images to those book listings. Despite the user having no choice in this initial page, it’s actually quite important to log what is contained in this initial page load.

These options provide the population of books that the user has seen. If a user has seen an option, then they have the opportunity to click on it, which will ultimately be an important implicit signal.

Note

The consideration of the population of all items a user has seen is deeply tied to propensity score matching; in mathematics, propensity scores are the probability that an observational unit will be assigned to the treatment vs. the control.

Compare this to the simple 50-50 A/B test: every unit has a 50% chance of being exposed to your treatment. In a feature-stratified A/B test, you purposely change the probability of exposure dependent on some feature or collection of features (often called covariates in this context). Those probability of exposures are the propensity scores.

Why bring up A/B testing here? Later, we’ll be interested in mining our soft ratings for signal on user preference, but we must consider the possibility that the lack of a soft rating, is not an implicit bad rating. Thinking back to the cheeses: taster

Now thinking back to Bookshop: the page above does not show The Hitchhiker’s Guide to the Galaxy, so I have no way to click on it and implicitly communicate that I’m interested in that book. I could use the search, but that’s a different kind of signal–which we’ll talk about later and, in fact, is a much stronger signal.

When understanding implicit ratings like “did the user look at something” we need to properly account for the entire population of things they were exposed to, and use the inverse of that population size to weight the importance of clicking. For this reason, understanding all page loads is important.

Page views and hover

Websites have gotten much more complicated, and now there are a variety of interactions one must contend with. xref:bookshop-top-sellers demonstrates what happens if I click the right arrow in the Best Sellers of the Week carousel and then move my mouse over the Cooking at Home option:

Figure 2-3. Bookshop.org Top Sellers

I’ve unveiled a new option, and by mousing over it, made it larger and have a visual effect. These are ways to communicate to the user more information, and remind the user that these are clickable. To the recommender, these can be used as more implicit feedback.

First, the user clicked the carousel scroll–so some of what they saw in the carousel was interesting enough to dig further. Second, they moused over Cooking at Home they might click, or they might just want to see if there’s additional information when hovering. Many websites use a hover interaction to provide a pop-up detail. While bookshop doesn’t implement something like this; internet users have been trained to expect this behavior by all the websites that do, and so the signal is still meaningful. Third, they’ve now uncovered a new potential item in their carousel scroll–something we should add to our page loads, but really should come with a higher rating because it required interaction to uncover.

All this and more can be encoded into the website’s logging. Rich and verbose logging is one of the most important things to improve a recommendation system, and it’s almost always better to have more than you need rather than the opposite.

Clicks

If you thought hovering meant interest, wait until you consider clicking! Not in all cases, but in the large majority, clicking is a very strong indicator of product interest. For e-commerce, clicking often is computed as part of the recommendation team’s core KPIs (key performance indicators).

This is for two reasons:

-

clicking is almost always required to purchase; so it’s an upstream filter for most business transactions

-

clicking requires explicit user action; so it’s a good measure of intent

There will always be noise of course, but clicks are the go-to indicator of what a client is interested in. Many production recommendation systems are trained on click data–not ratings data–because of the much higher data volume and because of strong correlation between click behavior and purchase behavior.

Note

Sometimes in Recommendation Systems you hear people talk about click-stream data. It is an important view into clicks data, that also considers the order of a users click in a single session. Modern recommendation systems put a lot of effort into utilizing the order of items a user clicks on, calling this sequential recommendations, and have show dramatic improvements via this additional dimension. We will discuss sequence-based recs later in the book. xref:sequence-based-recs

Add to bag

We’ve finally arrived; the user has added some item to their bag or cart or queue. This is an extremely strong indicator of interest, and is often very strongly correlated with purchasing. There are even reasons to argue that add-to-bag is a better signal than purchase/order/watch. Add-to-bag is essentially the end of the line for soft ratings, and usually beyond this you’d want to start collecting ratings and reviews.

Impressions

One might also wish to log impressions of an item that wasn’t clicked on. This supplies the RecSys with negative feedback on items that the user isn’t interested in. For example if cheeses

Collection and instrumentation

Web applications very frequently instrument all of the above via events. If you don’t yet know what events are, maybe ask a buddy in your engineering org–but we’ll give you the skinny. Like logging, events are specially formatted messages that the application sends out when a certain block of code is executed. As in the example of a click, the application needs to make a call to get the next content to show the user, tt’s common to also “fire an event” at this moment indicating information about the user, what they clicked on, the session-id for later reference, the time, and various other useful things. This event can be handled downstream in any number of ways, but there’s a increasingly prevalent pattern of path bifurcation to:

-

a log database, like a mysql application database tied to the service

-

an event stream for real-time handling.

The latter will be very interesting: event streams are often connected to listeners via technologies like Kafka. This kind of infrastructure can get complicated fast–consult your local data engineer or MLOps person–, but a simple model for what happens is that all of a particular kind of log are sent to several different destinations that you think can make use of these events.

In the recommender case, an event stream can be connected up to a sequence transformations to process the data for downstream learning tasks. This will be enormously useful if you want to build a recommendation system that uses those logs. Other important uses are real-time metrics logging for what is going on at any given time on the website.

Funnels

We’ve just worked through our first example of a funnel, and no good Data Scientist can avoid thinking about funnels. Like them or hate them, funnel analyses are crucial for critical analyses of your website, and by extension your recommendation system.

Note

A funnel is a collection of steps a user must take to get from one state to another; it’s called a funnel because at each of the discrete steps, a user may stop proceeding through or drop off, thus reducing the population size at each step.

In our discussion of events and user logging; each step was relevant for a subset of the previous. This means that the process was a funnel, and understanding the drop-off rate at each step, reveals important characteristics of your website, and your recommendations.

Figure 2-4. An onboarding funnel

There are actually three important funnel analyses to be considered in the xref:funnel figure:

-

page view to add-to-bag user flow

-

page view to add-to-bag per recommendation

-

add-to-bag to complete purchase

The first funnel is merely understanding at a high level, what percentage of users take each step in the above flow. This is a high level measure of your website optimization, the general interestingness of your product offering, and the quality of your user leads.

The second funnel is more fine-grained to take into consideration the recommendations themselves. As mentioned above in the propensity scoring, users can only proceed through the funnel for a particular item if they’re shown the item. This intersects with these ideas of funnels because you want to understand at a high level how certain recommendations correlate with funnel drop-off, but also when using a recommender system, the confidence in your recommendations should correlate well with the funnel metrics. We will return to this more in detail later when we discuss loss functions, but for now you should remember to think of different categories of recommendation-user pairs and how their funnels may look compared to average.

Finally, add-to-bag to completion. This actually isn’t part of the RecSys problem, but should be on your mind as a Data Scientist or Machine Learning engineer trying to improve the product. No matter how good your recommendations are, this funnel may destroy any of your hard work. Before working on a recommender problem, you should almost always investigate the funnel performance in getting a user from add-to-bag to check-out-completed. If there’s something cumbersome or difficult about this flow, it will almost certainly provide a bigger bang-for-your-buck to fix this than to improve recommendations. Investigate the drop-offs, do user-studies to understand what might be confusing and work with product and engineering to ensure everyone is aligned on this flow before you start building a recommender for e-commerce.

Business insight and what people like

In the earlier example from Bookshop.org, we noticed that Top Sellers of the Week is the primary carousel on the page. Recall our earlier work on get_most_popular_recs, this is simply that recommender but applied to a specific Collector–one that only looks in the last week.

This carousel is an example of a recommender providing business-insight, in addition to driving recommendations. A common mission of a growth team is to understand weekly trends and KPIs, often things like weekly-active-users, and new signups. For many digital-first companies, growth teams are additionally interested in understanding the primary drivers of engagement.

Let’s take an example: as of the writing of this, the Netflix show Squid Game became Netflix’ most popular series of all time, breaking a huge number of records in the process. Squid Game achieved 111 Million viewers in the first month. Most obviously, Squid Game needs to be featured in Top Shows of the Week or Hottest Titles carousels, but where else should a breakout hit like this matter?

The first important insight companies almost always ask for is attribution–if the numbers go up in a week, what led to that? Is there something important or special about launches that drove additional growth? How can we learn from those signals to do better in the future? In the case of Squid Game–a foreign-language show that saw massive interest with an English-speaking audience–executives might take away the inclination to invest more in shows from South Korea, or subtitled shows with high-drama. The flip side of this coin is also important, when growth metrics lag executives nearly always ask why–being able to point to what was the most popular, and how it may have deviated from expectation, helps a lot.

The other important insight can feed back into recommendations; during exciting debuts like Squid Game, it’s easy to get caught up in the excitement as you see all your metrics go up and to the right, but might this negatively effect things also? If you have a debuting show the same week or two as Squid Game’s you’ll be less enthusiastic about all this success. Overall, successes like this usually drive incremental growth which is great for business, and in total, metrics will all probably look up. Other items, however, may have less successful launches due to a zero-sum game amongst the core user base. This can have a negative effect on longer term metrics, and even can make later recommendations less effective.

Later, we will learn about diversity of recommendations; there are a large number of reasons to care about diversifying your recommendations, but here we observe one which is to increase the overall matching valence of your users with items. As you keep a broad base of users highly engaged, you increase your future opportunity for growth.

Finally, beyond surfacing the trending hits, another benefit of knowing what’s really hot on your platform or service, is advertising. When a phenomenon starts, there can be a huge advantage in priming the pump, i.e. making noise and driving publicity of the success. This sometimes leads to a network effect, and in the days with viral content and easy distribution, this can have multiplier effects on your platform’s growth.