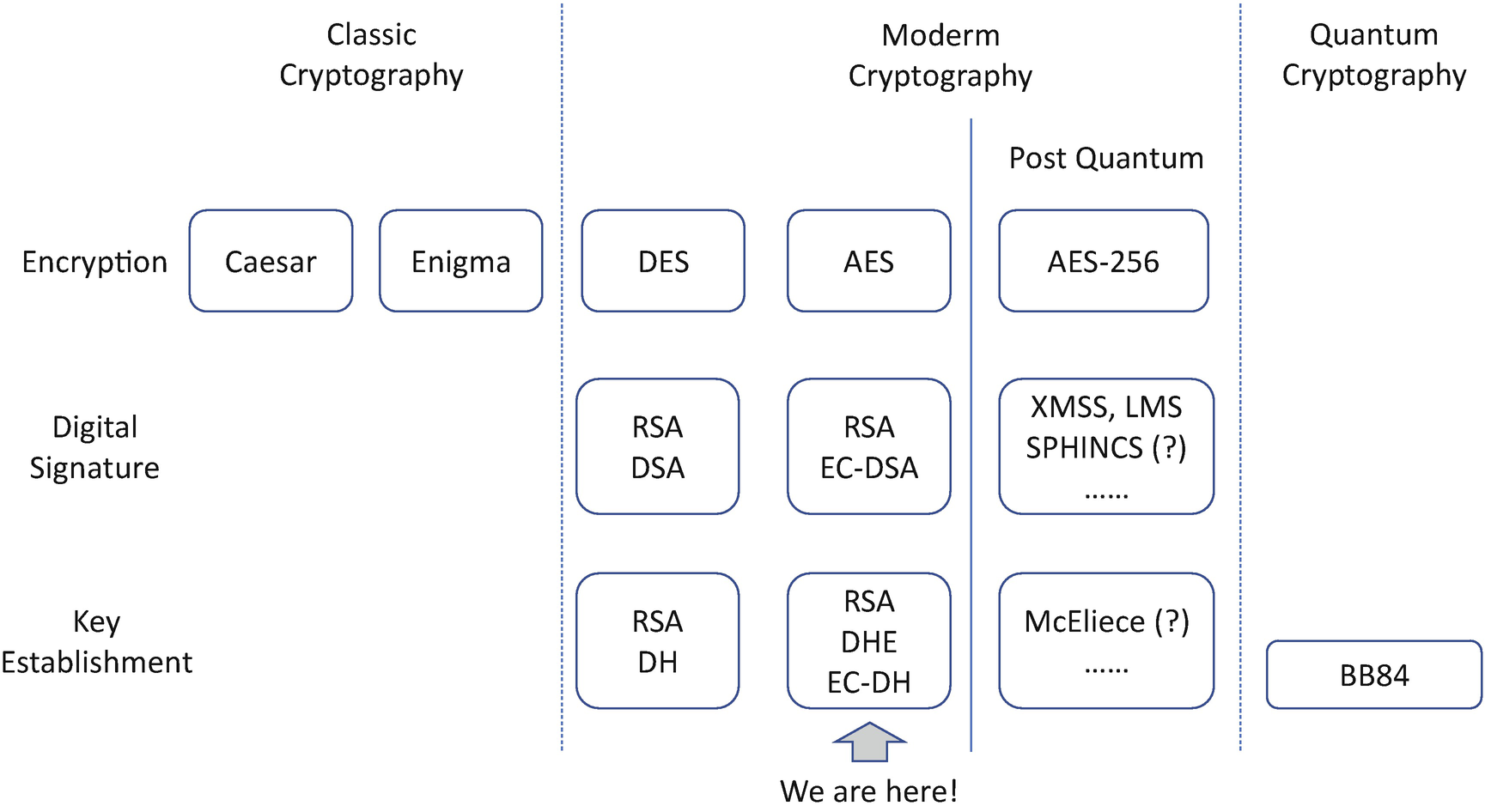

When we implement the firmware and add security features, such as firmware image verification and firmware attestation, we need to use cryptography to protect our system. Classic cryptography can be traced back to the Roman empire when people used the Caesar cipher. It ended at World War II when the Germans used the Enigma machine to encrypt the message, and the Enigma was cracked finally by Bombe – an electro-mechanical device used by the British cryptologists. The two types of classic ciphers are substitution and transposition. However, most classic ciphers can be computed by hand and broken with the modern computer and modem technologies. We moved into the modern cryptography age in the 1970s.

In 1972, the US National Bureau of Standards (NBS) called for the proposal of a standardized cipher which could be used for message encryption. Finally, the NBS released the Data Encryption Standard (DES) in 1977. This was a big revolution because it was the first time that a cryptographic algorithm was standardized. Today, NBS has become the National Institute of Standards and Technology (NIST). DES is no longer secure. It is replaced by the Advanced Encryption Standard (AES). There are lots of cryptography books introducing these standardized cryptographic algorithms and the mathematical principles behind. In this chapter, we will not discuss the details of cryptographic algorithms. Instead, we will focus on how to use these standardized cryptographic algorithms to harden our system.

Modern Cryptography

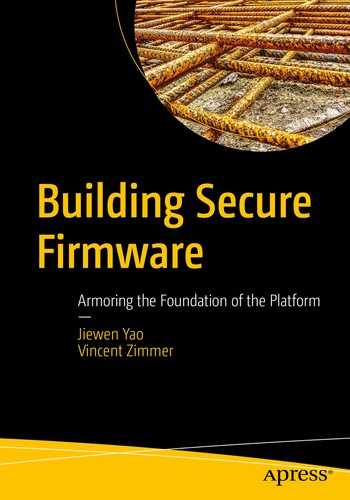

Symmetric Cryptography

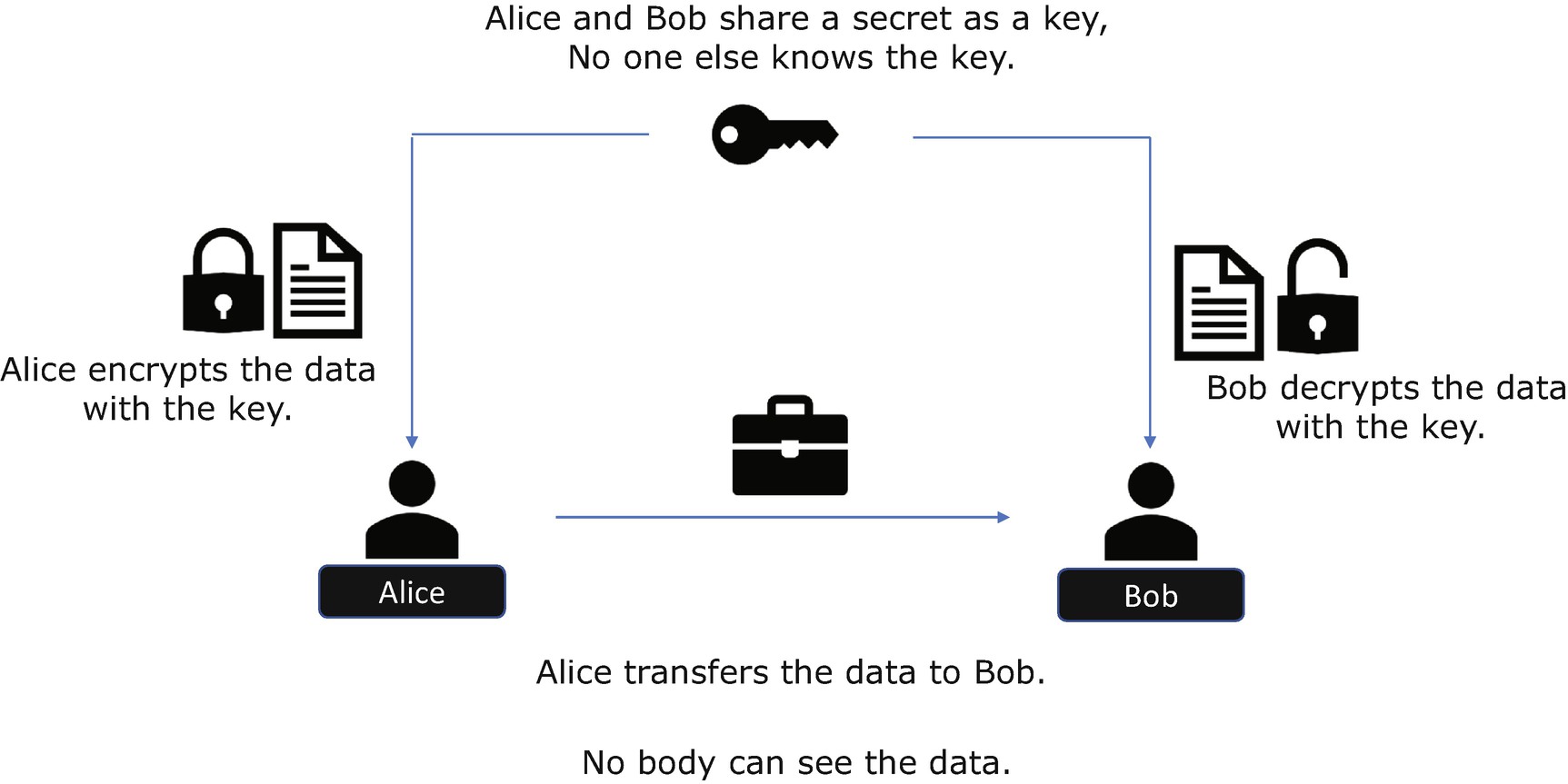

Asymmetric Cryptography – Data Encryption

Asymmetric Cryptography – Digital Signature

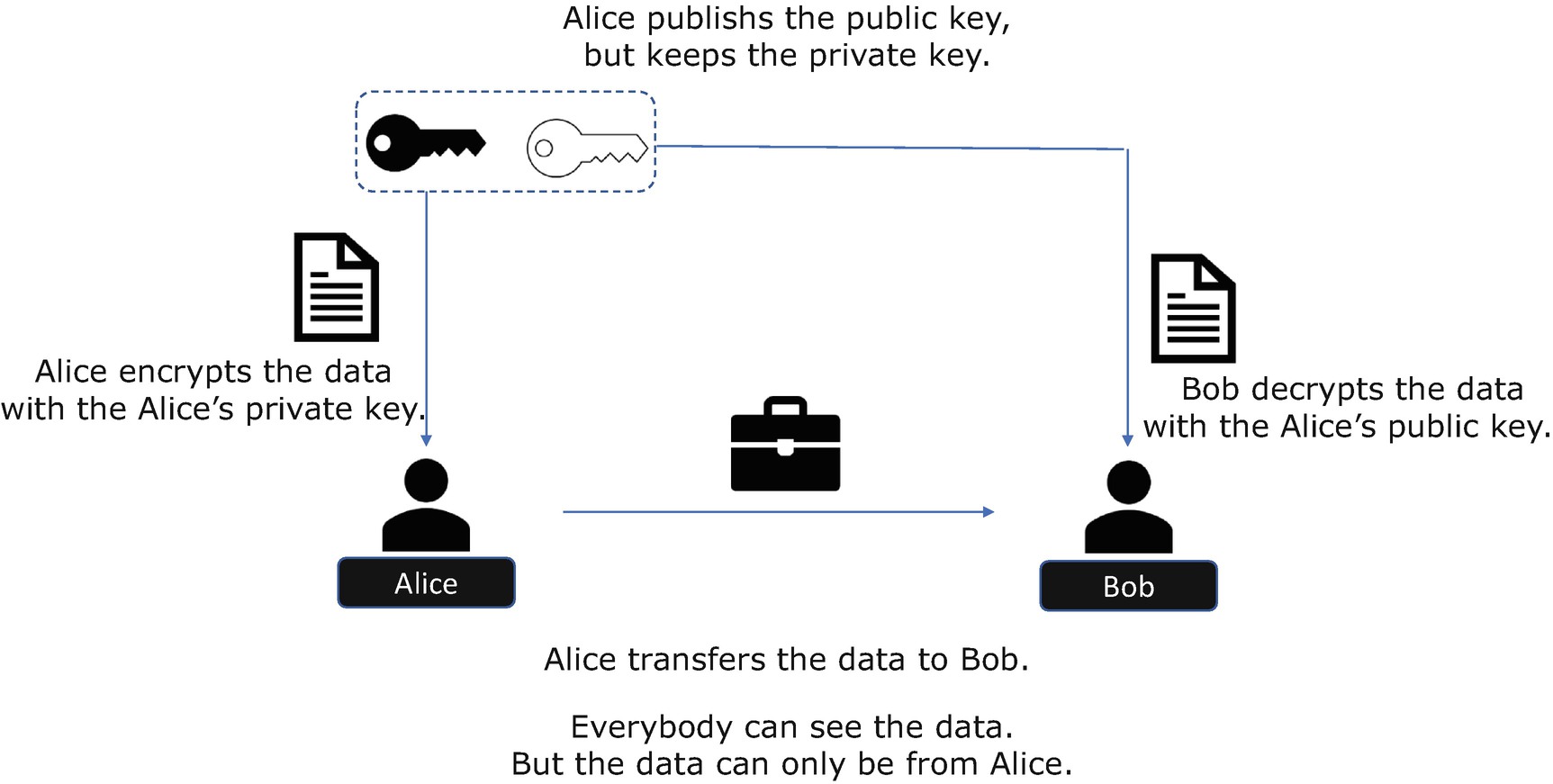

Asymmetric Cryptography – Key Establishment

Cryptography Usage in the Firmware

Cryptographic Algorithm Usage in Firmware

Function | Symmetric Algorithm | Asymmetric Algorithm | |||||

|---|---|---|---|---|---|---|---|

Block Cipher | Stream Cipher | Hash | Message Authentication Code | Digital Signing | Digital Signature Verification | Key Exchange | |

Secure boot (image verification) | V | V | |||||

Firmware update | V | V | |||||

Firmware recovery | V | V | |||||

Image encryption | V | ||||||

Trusted boot (measurement) | V | ||||||

SPDM | V | V | V | (V) | V | V | |

User authentication | V | ||||||

HDD password | V | ||||||

Authenticated Variable update | V | V | |||||

Variable encryption and rollback prevention | V | V | |||||

iSCSI boot | V | ||||||

HTTPs boot | V | V | V | (V) | V | V | |

In Chapter 8, we have discussed the host-device firmware interaction with the Secure Protocol and Data Model (SPDM). SPDM 1.0 measurement and authentication requires the hash function and digital signature verification. SPDM 1.1 includes the key exchange capability with the message authentication code (MAC) to create a session. Then both sides can use the block cipher to encrypt the communication data.

In Chapter 10, we have discussed the user authentication feature and hard disk drive (HDD) password feature. In order to support the password verification, we need to save the hash value with the salt data into a UEFI variable. In Chapter 11, we have discussed the configuration protection and used the UEFI variable as an example. UEFI authentication variable update requires the signature verification to maintain the integrity of the variable region. UEFI variables can also use a block cipher to encrypt the data and use the message authentication code (MAC) to prevent a rollback attack.

Finally, the firmware may include a network stack. The iSCSI boot mechanism uses the hash algorithm in the Challenge-Handshake Authentication Protocol (CHAP). The HTTPs boot requires the Transport Layer Security (TLS) support. The TLS handshake includes the digital signature verification, key exchange, and MAC. Once the session key is created, the TLS driver uses the block cipher or stream cipher to encrypt the communication.

Algorithm Recommendation

Cryptographic Algorithm Recommendation

Function | Algorithm | NIST Recommendation (NIST SP800-131A) | NSA Recommendation (NSA CNSA) | |

|---|---|---|---|---|

Symmetric algorithm | Block cipher | AES | AES-128 | AES-256 |

Hash | SHA | SHA256 | SHA384 | |

Asymmetric algorithm | Digital signature | RSA | RSA-2048 | RSA-3072 |

ECDSA | P-256 | P-384 | ||

Key establishment | RSA | RSA-2048 | RSA-3072 | |

DH | DH 2048bit | DH 3072bit | ||

EC-DH | P-256 | P-384 | ||

Some Concepts

Besides choosing the right algorithm, we also need to understand some basic concepts in cryptography to design a proper solution for production.

Kerckhoffs’s Principle

In 1883, Kerckhoffs published a paper and stated the security of a system should be based upon the key instead of the algorithm. The algorithm should be public. The key should be the only secret.

Today, most cryptographers agree with Kerckhoffs’s principle. They believe that we should publish the algorithm and open source the implementation so that more people can have the chance to review and find the vulnerability earlier.

On the other hand, hiding the algorithm may have some advantages. Because the enemy does not know the design, they need to spend more time and more resources to break the system.

Taking firmware as an example, most of the firmware binaries are write protected but not encrypted. Once the attacker dumps the binary, they can do reverse engineering. We also observed some firmware binaries are encrypted. Even if an attacker gets the binary, the binary analysis is not feasible. Maybe this is valuable for intellectual property protection, but it also raises the bar of attacking the system.

Random Number and One-Time Pad

A random number may be required in the firmware, such as a salt for the password hash or a random number in the TLS handshake. Fundamentally, there are two ways to generate a random number:

1) To generate a random number based upon an unpredictable physical source, such as noise: This is named as a non-deterministic random number generator (NDRNG) or true random number generator (TRNG).

2) To generate a random number based upon a predefined algorithm: This is called a deterministic random number generator (DRNG) or pseudo random number generator (PRNG).

Random Number Generator

The random number generator can be supported by software or hardware. NIST SP800-90 provides the detailed requirements on the random number generation. The software implementation for the pseudo random generation shall follow NIST SP800-90 recommendations, such as hash-based PRNG, HMAC-based PRNG, or block cipher counter (CTR) mode–based RRNG. Currently the Intel X86 architecture defines two random number instructions: RDSEED and RDRNG. RDSEED returns random numbers that are supplied by a cryptographically secure, enhanced non-deterministic random bit generator (NDRBG). RDRAND returns random numbers that are supplied by a cryptographically secure, deterministic random bit generator (DRBG). ARMv8.5 also defines the RNDR and RNDRRS instruction. If possible, the software needs to use the hardware instructions as the TRNG.

The random number may be used in a one-time pad (OTP). The one-time pad is an example of perfect security or unconditional security. It cannot be broken if it is implemented correctly. The gist of OTP is 1) the key is generated with TRNG, 2) the key is only known by the sender and receiver, 3) the key must be only used once, and 4) the key must be as long as the message.

However, it is impractical in most situations because of those hard requirements. The one-time key distribution and storage is a big problem for the firmware usage.

Block Cipher and Modes of Operation

Block cipher may be used in firmware to encrypt and decrypt the messages between two entities. It is required in secure communication protocols such as Transport Layer Security (TLS) in the network stack or Secure Protocol and Data Model (SPDM) in device communication.

Block Cipher Modes of Operation

Mode of Operation | Description | Attribute |

|---|---|---|

Deterministic encryption | ||

Electronic Code Book (ECB) mode | Each block is encrypted separately. Those identical plain text blocks result in identical ciphertext blocks. | It can be parallelized. No error propagation. |

Probabilistic encryption | ||

Cipher Block Chaining (CBC) mode | The encryption of all blocks is chained together. The encryption is randomized by using an initialization vector (IV). | It supports parallelized decryption. Error propagation. |

Cipher Feedback (CFB) mode | It is used to build a stream cipher encryption scheme. | It supports parallelized decryption. Error propagation. |

Output Feedback (OFB) mode | It is used to build a stream cipher encryption scheme. | It cannot be parallelized. No error propagation. |

Counter (CTR) mode | It is used to build a stream cipher encryption scheme. IV + counter as the initialized input. | It can be parallelized. No error propagation. |

Authenticated Encryption with Associated Data (AEAD) | ||

Galois Counter Mode (GCM) | It provides the confidentiality and authenticity of data. IV and additional associated data (ADD) as input. | - |

Counter with CBC-MAC (CCM) | It provides the confidentiality and authenticity of data. Nonce and additional associated data (ADD) as input. | - |

NIST SP800-38 provides the detailed recommendations for each mode. The deterministic encryption has a weakness that the identical plain text results in identical ciphertext block. Also, the reordering of a valid plain text cannot be detected.

In general, if there is only a confidentiality requirement, a probabilistic encryption method should be used. The randomness in the probabilistic encryption is based upon the initialization vector (IV). They must be random at each encryption.

If there is a requirement for both data confidentiality and data authenticity, we need to include both encryption and message authentication code (MAC). Because we have seen issues in Encrypt-and-MAC (E&M), Encrypt-then-MAC (EtM), and MAC-then-Encrypt (MtE), the general recommendation is to use the existing standard Authenticated Encryption with Associated Data (AEAD) algorithm, such as GCM or ChaCha20Poly1305.

The different modes may require different input. The IV, which stands for initialization vector, must be uniformly generated in a random fashion. The nonce, which means “number used once,” must be unrepeatable and unpredictable. The counter is incremented in each message, and it is predictable. Semantically, an IV is different from a nonce. If an IV is not uniformly random, the solution may have a vulnerability.

Digital Signature and Signature Scheme

The digital signature is probably the most popular usage of cryptography in the firmware. The NIST SP800-193 standard requires firmware resiliency. Both update protection and detection rely upon the digital signature.

NIST FIPS-186 describes the approved digital signature standards, including RSA (Rivest-Shamir-Adleman), Elliptic Curve Digital Signature Algorithm (ECDSA), and Edwards-Curve Digital Signature Algorithm (EdDSA).

Digital Signature Scheme

Signature Scheme | Description | Attribute |

|---|---|---|

Deterministic signature | ||

RSASSA-PKCS1-v1_5 | The signature is the encoding message with hash and algorithm ID. | Fast. |

Deterministic ECDSA | Weierstrass Curve. The per-message secret number (k) is derived from the message to be signed and the private key. k is involved in the signature generation. | Small key size. |

EdDSA | Twisted Edwards curve. A different model. A unique value computed from the hash of the private key and the message is used in the signature generation process. | Simpler and faster than ECDSA. |

Probabilistic signature | ||

RSASSA Probabilistic Signature Scheme (RSASSA-PSS) | A random salt is involved. The signature is the encoding message with hash and salt. | Fast. |

ECDSA | Weierstrass Curve. The per-message secret number (k) is random. k is involved in the signature generation. | Small key size. |

In general, the RSA signature method is faster than the ECDSA. For RSA, the signing scheme includes a padding for the message. With the padding, the final signature might be deterministic or non-deterministic. The RSASSA-PKCS1-v1_5 is deterministic, where the identical plain text results in the identical signature. The RSASSA Probabilistic Signature Scheme (RSASSA-PSS) adds a random salt value in the signature. As such, the identical plain text results in a different signature. Currently RSASSA-PSS is recommended. In RSASSA-PSS, the salt length is between 0 and the hash length. In practice, the salt length should be same as the hash length.

The ECDSA is used by the device usually because the key size is smaller. If the device has the capability to generate the random numbers for the per-message secret number, the ECDSA should be used. The use of deterministic ECDSA may be desirable for devices that do not have a good source of the random numbers. The per-message secret number is derived from the message and private key. As such, the final signature result is deterministic.

The Edwards curves offer high performance for elliptic curve calculations and protection against side channel attacks, because it is easier to write branch-free, constant time code to implement Edwards curves. However, care must be taken to implement the deterministic signatures. The secrecy of the private key in the EdDSA calculation is critical.

Asymmetric Encryption Scheme

Encryption Scheme | Description | Attribute |

|---|---|---|

Probabilistic Scheme | ||

RSAES-PKCS1-v1_5 | Random octets are included in the encrypted message. | Known weakness. |

RSAES Optimal Asymmetric Encryption Padding (RSAES-OAEP) | Random octets are involved in the encrypted message. An optional label (L) is associated, which is required for both encryption and decryption. | Recommended. |

Similar to symmetric authenticated encryption, some solution may require asymmetric encryption and signing. A naïve asymmetric sign-and-encrypt has different security properties from symmetric encryption. From the sender perspective, they are the same. The sender is sure that 1) the recipient knows who wrote the message and 2) only the recipient can decrypt the message. From the recipient perspective, they are different. The recipient of the symmetric encrypted message knows who sent it to them. The recipient of the naïve asymmetric signed-and-encrypted message only knows who wrote the message but does not know who encrypted it. This naïve sign-and-encrypt vulnerability is known as “surreptitious forwarding.” The solution for this problem includes 1) adding the sender and/or recipient name, 2) using sign-encrypt-sign, and 3) using encrypt-sign-encrypt.

RFC 8017 defines the encryption scheme (RSAES-OAEP and RSAES-PKCS1-v1_5) and signature scheme (RSASSA-PSS and RSASSA-PKCS1-v1_5).

Key Establishment and Forward Secrecy

Key exchange may be used in firmware to exchange a pre-master key or shared secret between two entities. It is required in the secure communication handshake, such Transport Layer Security (TLS) in the network stack or Secure Protocol and Data Model (SPDM) in the device communication.

From the cryptography perspective, the key establishment can use the symmetric algorithm. For example, a trusted key distribution center (KDC) can be set up to share the secret key with each user. The key is named as the key encryption key (KEK) and used to encrypt the session key. In practice, there are lots of problems in the solution, such as establishing a secure channel during initialization, a single point of failure, and no forward security. As such, the practical key establishment usages employ the asymmetric algorithm.

NIST SP800-56 provides the recommendations for the discrete logarithm-based key establishment, such as Diffie-Hellman (DH), Elliptic Curve Diffie-Hellman (ECDH), and so on, and integer factorization–based key establishment such as RSA.

Key Establishment

Key Establishment | Description | Attribute |

|---|---|---|

Without forward secrecy | ||

RSA | Client generates random (S) and encrypts it with server public key as C. Server decrypts the C with server private key. Both client and server get S as secret. | Very fast. |

With forward secrecy | ||

DH Ephemeral (DHE) | Client generates random (a) and server generates random (b) as a and b are used as exponents to generate A and B. A and B are published. Client generates secret (S) based upon a and B, and server generates same secret (S) based upon b and A. | Slow. |

ECDH Ephemeral (ECDHE) | Similar to DHE, the only difference is that the random a and b are used for elliptic curve calculation. | Faster than DHE. |

If a long-term public/private key pair is involved in the pre-master secret generation, this is not a forward secrecy solution because once the long-term key is compromised, the attacker can calculate the secret (S).

In forward secrecy, an ephemeral key (EK) pair is generated as a random number, and the only usage of this ephemeral key pair is to establish a secret (S). The long-term public/private key pairs should never be involved in the secret calculation.

The client/server long-term public/private key pairs are still important for user identity and authentication purposes. Without the authentication step, the communication channel may be vulnerable to a man-in-the-middle attack.

If forward secrecy is required, please make sure you choose the right cipher suites to support generation of the ephemeral key. The ECDHE is much faster than DHE. But just in case the elliptic curve cryptography (ECC) is not well supported on the other side, then DHE can be used instead.

Hash, Message Authentication Code, and Key Deviation

Hash is another popular usage in the firmware. The trusted boot process needs to measure the hash of a firmware image and data into the Trusted Platform Module (TPM) Platform Configuration Register (PCR) to establish the static root-of-trust for measurement (SRTM).

- 1)

Preimage resistance (or one-wayness): Given X, it is easy to calculate Hash(X). But given Hash(X), it is hard to calculate X.

- 2)

Second preimage resistance (or weak collision resistance): Given Hash(X), it is hard to find Y, where Hash(Y) == Hash(X).

- 3)

Collision resistance (or strong collision resistance): It is hard to find both X and Y, where Hash(X) == Hash(Y).

Hash Function

Hash Function | Description | Attribute |

|---|---|---|

SHA2 family | ||

SHA224, SHA256 | 512-bit block size, 32-bit word size | Used today |

SHA384, SHA512 | 1024-bit block size, 64-bit word size | More security strength |

SHA3 family | ||

SHA3224, SHA3256, SHA3384, SHA3512 | Based upon the KECCAK algorithm | - |

SHAKE128, SHAKE256 | Extendable-output function (XOF) | - |

SHA2 is the classic hash algorithm and widely used today. The core of the SHA3 family is the new KECCAK algorithm. The SHA3 also supports the extendable-output function (XOF), which means the hash output can be extended to any desired length. For the XOF function, the suffix 128 or 256 means the security strengths, instead of digest length.

Two typical usages of the hash function are digital signature and message authentication code. The message authentication code (MAC) is also known as a cryptography checksum. Similar to the hash, the MAC function accepts an arbitrary-length message and creates a fixed-length authentication tag. MAC can provide the message integrity and authentication. The fundamental difference between MAC and digital signature is that MAC does not provide non-repudiation. The reason is that the key used in the MAC function is a symmetric key shared between two entities, whereas the key used in the digital signing function is a private key and only known by the sender.

Message Authentication Code

MAC Function | Description | Attribute |

|---|---|---|

Hash-based MAC | ||

Hash-based MAC (HMAC) | It is based upon approved hash. | Fast. |

KECCAK MAC (KMAC) | It is based upon SHA3 KECCAK. | - |

Block cipher–based MAC | ||

Cipher Block Chaining MAC (CBC-MAC) | It is based upon approved block cipher. | Known deficiency. |

CMAC | It is the enhancement of CBC-MAC. It is one-key CBC-MAC1 (OMAC1). | Replaces CBC-MAC. |

Galois Counter MAC (GMAC) | It is a variant of the Galois Counter Mode (GCM), where the input data is not encrypted. | - |

NIST FIPS-198 defines the HMAC, and NIST SP800-38B and SP800-38D provide recommendations for CMAC and GMAC.

If there is a requirement for an authentication code, we need to use the MAC function. Because we have seen issues in secret-prefix MAC or secret-suffix MAC, the general recommendation is to use the existing standard MAC function, such as HMAC, KMAC, or CMAC.

Beyond message integrity and authentication, one important usage of HMAC is to help derive the key. This might be a use case in the firmware. The firmware may include the user authentication with the password and use the password to derive the key to protect the user data. Or the firmware may get a master secret from a hardware engine and derive the key to protect the platform data.

RFC 8018 PKCS#5 defines the password-based key derivation functions (PBKDFs). Usually the password is chosen from a relatively small space. It cannot be used directly as a key for encryption. We need a key derivation function to convert the password into a key. First, we need to add a random salt to resist the password rainbow table attack. As such, the pre-build hash table by the attacker is useless. The length of the salt should be the same as the digest length chosen in the KDF. Second, we need to include an iteration count of processing to increase time of brute force attack. The minimal iteration count is 1000.

RFC 5869 defines a HMAC-Based Extract-and-Expand Key Derivation Function (HKDF). The typical usage of HKDF is to derive a session key from a master shared secret from the key establishment or derive a subkey from a root key. HKDF includes two phases: HKDF_Extract and HKDF_Expand. HKDF_Extract adds a random salt to derive a pseudorandom key (PRK). HKDF_Expand adds a fixed context info to derive the final output key. A solution may choose to include both phases or only include the HKDF_Expand to derive a key deterministically.

Key Derivation Function

Key Derivation Function | Description | Attribute |

|---|---|---|

PKCS5 Password-Based Key Derivation Function 2 (PBKDF2) | Input: password, a random salt, iteration count Output: key | Includes iteration count. Slow. |

HMAC-Based Extract-and-Expand Key Derivation Function (HKDF) | Two phases: 1) HKDF_Extract Input: input key material (IKM), random salt Output: pseudorandom key (PRK) 2) HKDF_Expand Input: PRK, context info Output: output key material (OKM) | No iteration. Fast. |

Digital Certificate and Certificate Chain

In the previous section, we introduced the digital signature. An entity may generate a public and private key pair, sign the message with the private key, and publish the public key to let other entities verify the signature. In practice, a single public key is not enough to describe the identity of the entity. We need more information such as the key owner’s information. To combine the information together, we can generate a certificate.

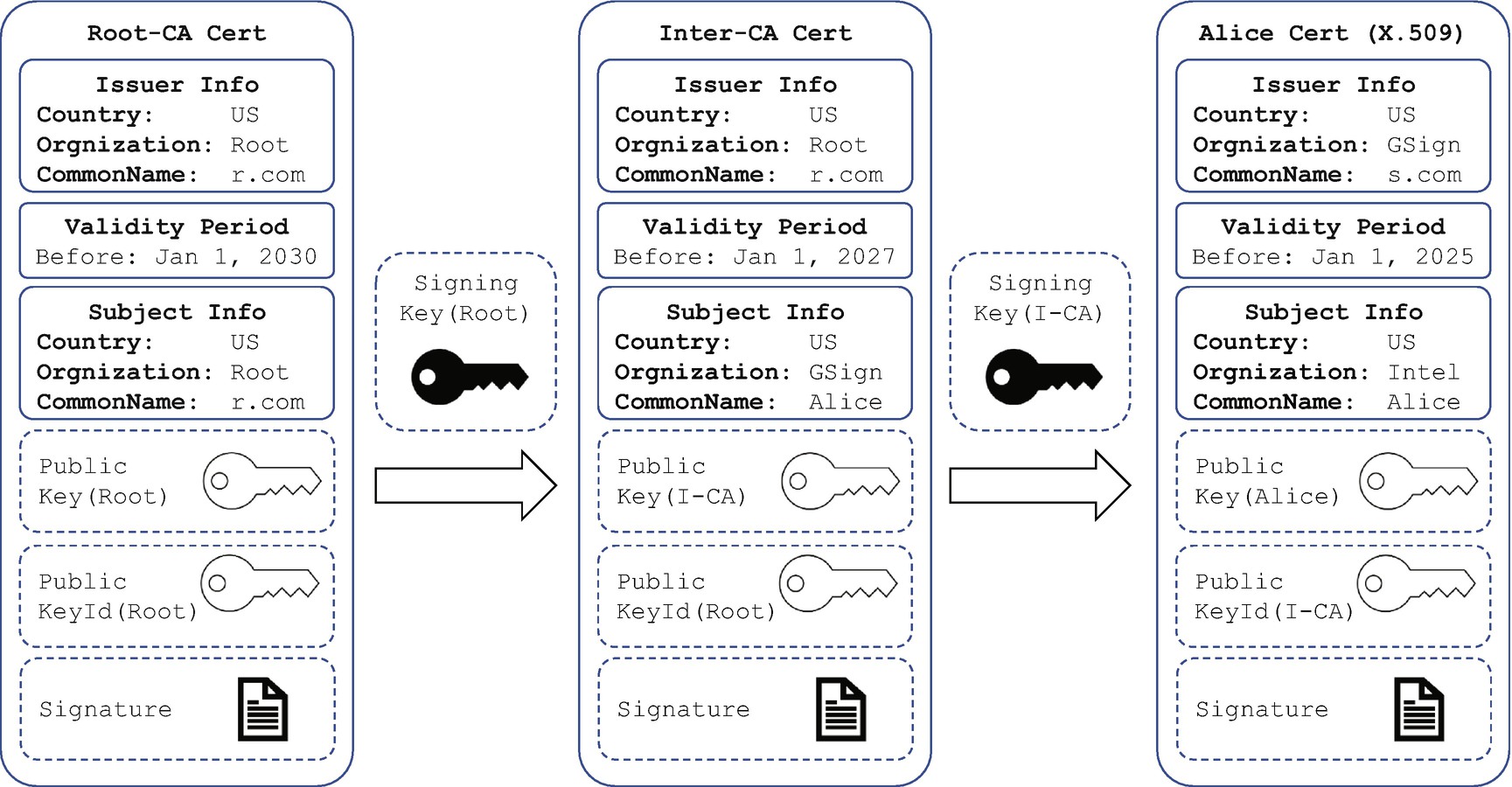

X.509 Certificate Generation

X.509 Certificate Chain

Once the certificate chain is created, the verification process needs additional steps. The verifier needs to discover the issuer of the certificate – the intermediate CA – and verify if the certificate of the subject is valid based upon the certificate of the intermediate CA. Then the verifier needs to find out the issuer of the intermediate CA’s certificate – the root CA – and verify if the certificate of the intermediate CA is valid based upon the certificate of the root CA.

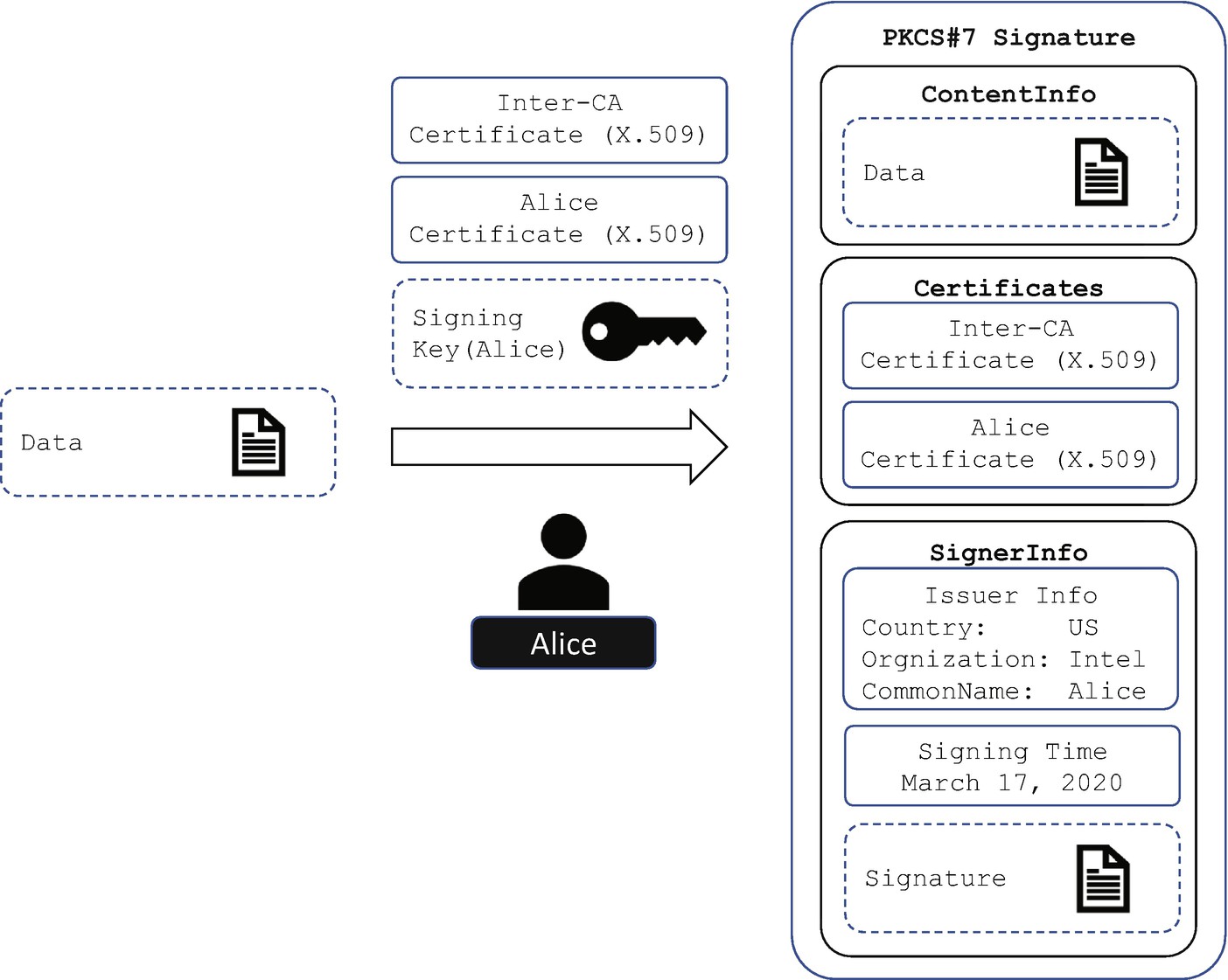

PKCS#7 Digital Signature Generation

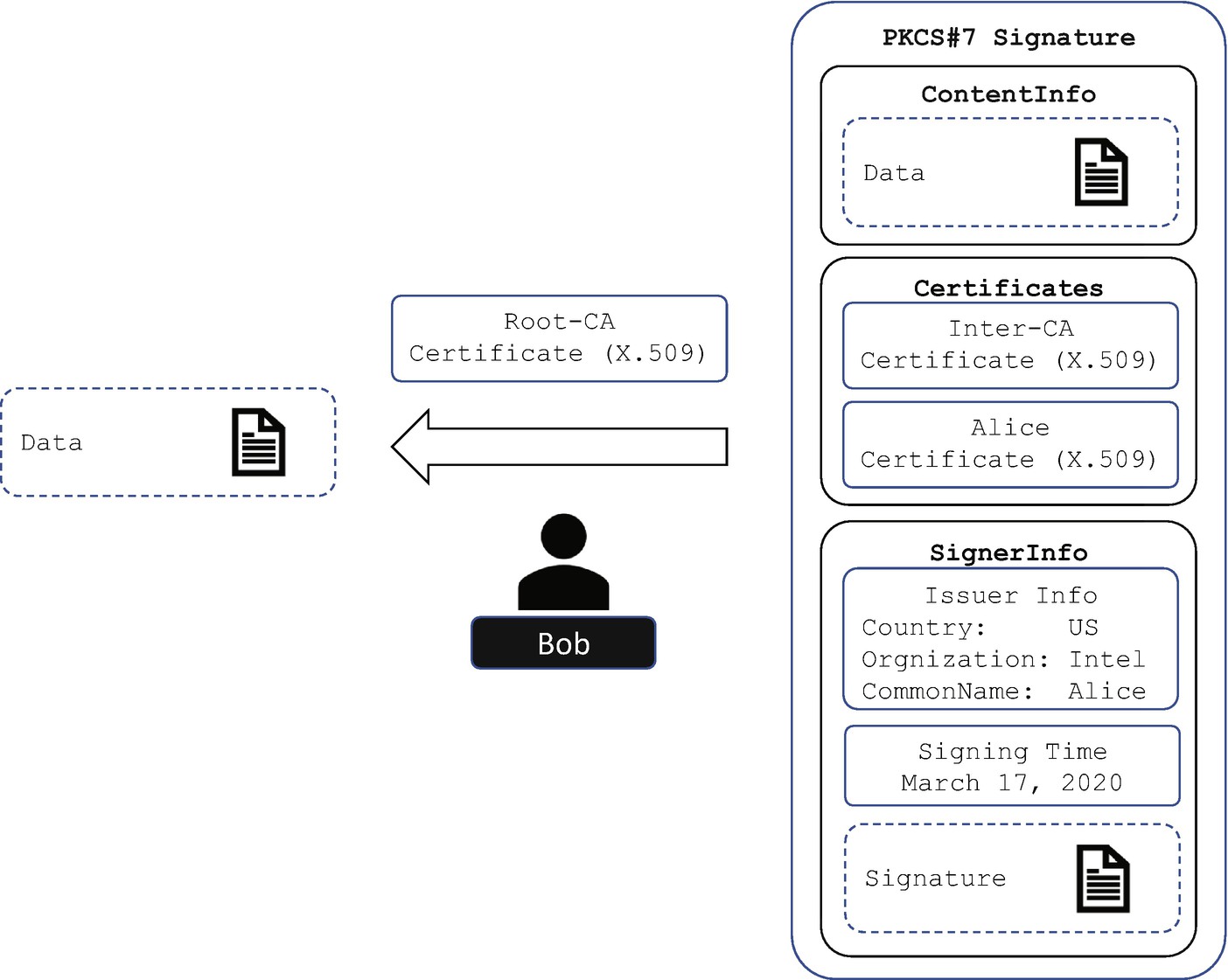

PKCS#7 Digital Signature Verification

Today the X.509 public certificate format is widely used in the industry, such as by network Transport Layer Security (TLS). PKCS#7 is also widely used as the cryptographic message syntax for the signature, such as the executable image signing. But they are not the only one. For example, in the Internet of Things (IoT) environment, people are considering using Concise Binary Object Representation (CBOR) Object Signing and Encryption (COSE) syntax to replace the complicated ASN.1 format used in X.509 and PKCS#7.

Challenge in the Firmware

Now let’s see what the challenges are that we may have to implement the cryptography algorithms in the firmware.

Certificate Management

Saving a public certificate in the firmware requires the non-volatile (NV) storage. The typical firmware only has limited NV storage, such as an 8M-byte Serial Peripheral Interface (SPI) NOR flash. The NAND flash Replay Protected Memory Block (RPMB) area is bigger, but not all platforms include the RPMB flash. System partition of an operating system (OS) disk is another option available for non-volatile storage for the firmware. Unfortunately, it is unprotected. The attack may update the content there directly.

The certificate revocation is also a challenge. For the OS that has network capability, the Online Certificate Status Protocol (OCSP) can be used. However, it is not practical for the standalone firmware solution if the firmware does not have network support. The firmware may expose an interface to update the certificate with the help of another authorized entity. The UEFI specification defines UEFI authenticated variables as the UEFI image signature database. Any user may read it, but only an authenticated user can write it. The OS can update the allowed certificate or add the revoked certificate to the forbidden signature database if it has an entry in the KEK. The Cerberus specification defines a set of root-of-trust (RoT) commands to update the platform manifest and the component manifest. Some of them became part of the Secure Protocol and Data Model (SPDM) specification for the device management.

One reminder is that when the final production image is generated, the pre-production certificate or test certificate must be removed from the production image. Otherwise, any developer can generate a new signed image. The firmware may implement a built-in self-detection mechanism. We can standardize the pre-production certificate and test certificate and let firmware display a warning message to tell people that this firmware is only for test purpose and should not be released, in hopes that the validation engineers will report issues in case that they see this message.

Not all firmware has confidentiality support. In that case, the firmware may not include a private certificate for the data signing purpose. This might become a limitation for the mutual authentication use case, such as the handshake phase of the Transport Layer Security (TLS) or Secure Protocol and Data Model (SPDM). In order to achieve that, we can use some other mechanisms. For example, we can let the firmware get the private certificate from another security coprocessor such as the management engine (ME) and use it once and discard it afterward. With this solution, the security coprocessor should implement an interface to lock down the private certificate retrieving. Another solution is to leverage the hardware to generate the certificate such as Software Guard Extension (SGX).

Cryptographic Algorithm Agility

There are lots of cryptographic algorithms in the world, and there is no need to support all of them in a single firmware. Taking the Trusted Platform Module (TPM) as an example, a TPM chip may support three different hash algorithms – SHA1 for the legacy system, SHA256 for the current system, and SM3 for the Chinese market. The final platform may just choose one of them. On the one hand, the system firmware should only enable one hash algorithm for the firmware hash calculation to save the system boot time. On the other hand, the system firmware should be designed with multiple hash capabilities. This is important because it will help to migrate from one hash algorithm to the other. For example, SHA1 was used in the old system. After it has been proved to be insecure, we can migrate to SHA256. The current system configurations use the RSA2048 and SHA256. NSA gives the recommendation that we should move to RSA3072 and SHA384 for future production.

Chinese Cryptographic Algorithms

Function | Algorithm | Parameter | |

|---|---|---|---|

Symmetric algorithm | Block cipher | SM4 | 128 bits |

Stream cipher | ZUC | - | |

Hash | SM3 | 256 bits | |

Asymmetric algorithm | Digital signature | SM2 | 256-bit ECC |

Key establishment | SM2 | 256-bit ECC | |

Performance and Size

Most cryptographic algorithms might be complicated and need lots of calculation. It may bring firmware code size impacts and runtime performance impacts. For example, the OpenSSL is a good choice for the cryptographic algorithm implementation, but it is big because it is designed for OS applications. For the embedded system, we need to find a smaller one, such as mbed TLS or wolfSSL.

The hardware may also add instructions to support the algorithms. Besides true random number generation, the Intel X86 architecture defines Advanced Encryption Standard new instructions (AES-NIs) such as AESENC, AESDEC, AESIMC, AESKEYGEN, and so on and SHA extensions such as SHA256MSG1, SHA256MSG2, and so on. The ARMv8.0 architecture also defines cryptographic extension for AES such as AESD, AESE, AESIMC, AESMC, and so on and SHA such as SHA256H, SHA256H2, and so on. The firmware may leverage these hardware capabilities.

A Hardware Security Module (HSM) is another possible implementation for the cryptographic algorithms. It might be hard for the firmware to leverage because it is a vendor-specific solution.

Attack and Mitigation

The attacks against the cryptographic algorithms can be separated into two parts: cryptanalysis and implementation attack.

Cryptanalysis

- 1)

To keep the product aligned with the latest approved, recommended algorithm and its parameters. The deprecated algorithms must not be used, such as SHA1 or MD5.

- 2)

To ensure that the algorithm is used in the right place. For example, using HKDF on the user password is a bad choice. Instead, the PBKDF should be used.

- 3)

To follow the industry best practice. For example, the ephemeral key (EK) should be used in the key exchange for the forward secrecy consideration.

Last but not least of importance, please do not try to invent a cryptographic algorithm or protocol without a review with a cryptography expert. For example, if there is encryption and authentication requirement, the standard AEAD should be used instead of a customized E&M, EtM, or MtE.

Implementation Attack

Implementation attack means there is no problem with the cryptographic algorithm and the usage, but a coding error caused the security claims to be broken.

Untrusted External Input

Untrusted external input is still the most potent attack in practice because of the memory safety issue in the software. Take OpenSSL heartbleed as an example, wherein an unchecked memcpy() exposes the memory which may contain the secret. A similar case can be found in the AMD PSP fTPM wherein a stack overflow in EkCheckCurrentCert may cause remote code execution. Any external input must be validated.

Data Used Before Verified

The cryptographic algorithm may be used for data verification. In such case, the data cannot be used before it is verified. Violations may cause a security issue. Take FPGA starbleed as an example. The Xilinx FPGA bitstream is interpreted before HMAC validation, so it causes the whole decrypted bitstream data being exposed.

Key Included in Data

The second issue of FPGA starbleed is that the HMAC key is stored inside of the bitstream data. Once the bitstream data is exposed, the key is exposed as well. Then the attacker may construct a valid bitstream to pass the HMAC verification.

Downgrade Attack

The industry standards are moving forward. An old implementation might be insecure and deprecated. However, an implementation may support the ability to download an old version for the compatibility considerations. Take SSL Padding Oracle on Downgraded Legacy Encryption (POODLE) as an example. If the solution supports to downgrade from TLS1.0 to SSL3.0, then the POODLE attack may steal “secure” HTTP cookies. Qualcomm DSP Achilles is another example of downgrade vulnerability. The signature of binary is verified but not the version of the binary. The insecure downgrade must be prohibited.

Technologies such as security version numbers (SVNs) or lowest support version number (LSN) can be used to note which successive versions are security related so that we can create “fences” that allow downgrades of versions without security fixes but prohibit a downgrade that crosses an SVN or LSN.

Security Policy

Security policy is the enforcement to ensure that the security check must happen and includes all necessary steps, such as public key comparison, version comparison, and signature comparison. We have seen that UEFI secure boot is bypassed because of bad policy control. Another example is the HP iLO BMC secure boot. It is broken because the attacker finds a path to bypass the signature check by triggering a failure on load_legacy_key(). If the verifier fails to get enough information or policy such as public key or hash for the verification, the result must be failure instead of success.

Quantum Safe Cryptography

Today’s computers are built based upon classical physics. However, at the nanoscopic level, everything follows quantum physics instead of classical physics. More and more researchers have figured out it is possible to build a machine based upon quantum mechanics, which is known as the quantum computer.

In classical computing, the fundamental unit of information storage is the bit. A bit can be either 0 or 1, and it can only be 0 or 1. However, in quantum computing, the fundamental unit can hold both 0 and 1 at the same time. This unit is called “qubit.” The state is named as “superposition.” Once we measure the qubit state, the action will disturb the superposition and cause the qubit to collapse into either 0 or 1. These characteristics of the qubit change the way of computing. Multiple dimensions of processing can occur in 1 qubit. The computing power can grow exponentially.

Recently, more and more companies have started building quantum computers. For supercomputing, D-Wave released the D-Wave 2000Q computer with 2048 qubits in 2017 and announced a next-generation Pegasus which will have 5640 qubits. For smaller computers, IBM prototyped a 50-qubit system. Intel delivered the Tangle Lake prototype in 2018, which has 49 qubits. Google announced a 72-qubit system Bristlecone in 2018.

Security Challenge

Unconditional security: It means that the cryptosystem cannot be broken, even if the attacker has unlimited computational resources. This is the strongest cryptographic algorithm. One example is the one-time pad (OTP) cipher, also known as Vernam’s cipher.

Computational security: It means that the cryptosystem can be broken with a fixed number of operations. However, because the number is very large, this cannot be achieved with current computational resources. For example, the brute force attack may take a long time. In reality, it is hard to prove that we only need a fixed number of operations to break the system. Maybe it is not broken just because we have not figured out a good algorithm.

Provable security: It means that the cryptosystem is built based upon well-studied hard mathematical problems, such as large integer factorization, discrete logarithm, elliptic curve discrete logarithm, and so on. Again, if someone can figure out a way to resolve the mathematical problem, then this cryptosystem can be broken. Today, most pervasive asymmetric cryptographies are built upon this, such as Rivest-Shamir-Adleman (RSA), elliptic curve cryptography (ECC), and Diffie-Hellman (DH).

Now let’s see how the quantum algorithm can break the security.

Shor’s Algorithm

Cryptographic Algorithm Impacted by Shor’s Algorithm

Function | Algorithm | Hard Problem |

|---|---|---|

Digital signature | RSA | Integer factorization |

DSA | Finite field cryptography | |

ECDSA | Elliptic curve discrete logarithm | |

Key establishment | RSA | Integer factorization |

DH | Discrete logarithm | |

ECDH | Elliptic curve discrete logarithm |

Grover’s Algorithm

Cryptographic Algorithm Impacted by Grover’s Algorithm

Function | Algorithm | Effective Key Strength (Current) | Effective Key Strength (Quantum Computing) |

|---|---|---|---|

Block cipher | AES-128 | 128 | 64 |

AES-256 | 256 | 128 | |

Hash | SHA256 | 256 | 128 |

SHA3256 | 256 | 128 |

Quantum Safe Algorithm

With quantum computing, some cryptographic algorithms are proved to be vulnerable. Some other algorithms are believed to be quantum safe because we have not figured out a way to attack based upon current knowledge. These algorithms are presumed to be quantum safe at this moment.

Please be aware that a quantum safe algorithm today might be vulnerable tomorrow if we can find a new algorithm to break the cryptographic primitive. The only unimpacted algorithm is the one-time pad (OTP), which is proved to be unconditionally secure.

Symmetric Algorithm

The symmetric cryptography is not impacted too much. AES is presumed to be quantum safe because Grover’s algorithm just aids the brute force search. What we need to do is to double the key size from AES-128 to AES-256.

Asymmetric Algorithm

The asymmetric cryptography is heavily impacted, though. RSA/ECC/DH and their derivatives are not quantum safe because of Shor’s algorithm. As such, we have to figure out the replacement. Fortunately, there are some other asymmetric algorithms that are presumed to be quantum safe, such as hash-based digital signature, lattice-based cryptography, code-based cryptography, Multivariate Public Key Cryptography, and so on. Before a new asymmetric algorithm is used, the RSA and DH are preferred than the ECDSA and ECDH, because the ECC uses a smaller key size and is considered to be an easier target for quantum computers.

Because the majority of cryptographic algorithm usage in firmware is the digital signature, it is important to know the basic principle of the quantum safe algorithms. In the following sections, we will give a brief introduction to the hash-based signature.

Hash-Based Signature

In 1979, Lamport published “Constructing digital signatures from a one-way function” and invented the hash-based signature. The gist of the digital signature is to provide evidence that the message data is created by the known sender and the message is not modified in transit. Because the hash is a one-way function, we can create the digital signature only with the hash function. The security of the hash-based signature is based upon the collision resistance of the hash function.

Lamport One-Time Signature Scheme

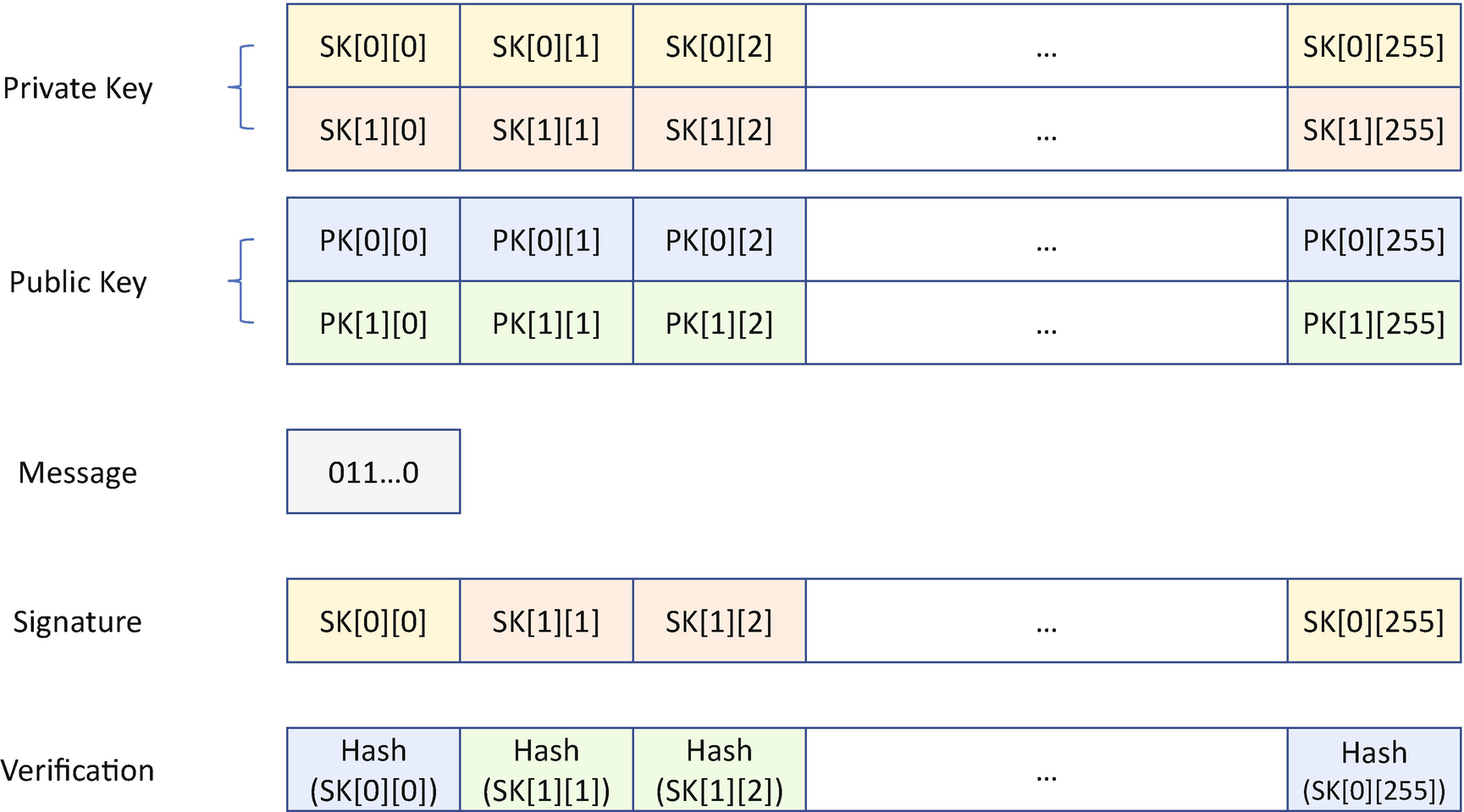

Lamport One-Time Signature Scheme

- 1)First, we need to generate the key. In order to sign the 256-bit message, we need to generate 512 bit strings. Each bit string has 256 bits. The private key is SK whereSK = SK[0] || SK[1]SK[0] = SK[0][0] || SK[0][1] || SK[0][2] || ... || SK[0][255]SK[1] = SK[1][0] || SK[0][1] || SK[0][2] || ... || SK[0][255]SK[x][y] = Random, (x=0,1; y=0,1,2,...,255)

Each SK[x][y] is a 256-bit random data. The total private key length is 512*256 bits =128K bits = 16K bytes.

The public key is PK wherePK = PK[0] || PK[1]PK[0] = PK[0][0] || PK[0][1] || PK[0][2] || ... || PK[0][255]PK[1] = PK[1][0] || PK[0][1] || PK[0][2] || ... || PK[0][255]PK[x][y] = HASH (SK[x][y]), (x=0,1; y=0,1,2,...,255)Each PK[x][y] is the hash of the SK[x][y]. Assuming we are using SHA256 as the hash function, then each PK[x][y] is 256 bits. The total public key size is also 16K bytes.

- 2)Second, we need to sign the message. Assuming the 256-bit message is M whereM=m[0] || m[1] || m[2] || ... || m[255]m[i] (i=0,1,2,...,255) = 0 or 1.then the message signature is Sig(M) whereSig(M) = s[0] || s[1] || s[2] || ... || s[255]s[i] = SK[m[i]][i], (i=0,1,2,...,255)

Each s[i] is SK[0][i] or SK[1][i] based upon the m[i] value 0 or 1. As such, the total signature length is 256 * 256 bits = 64K bits = 8K bytes.

- 1)First, the receiver needs to generate the verification data Ver(M) with the message Sig(M):Sig(M) = s[0] || s[1] || s[2] || ... || s[255]Ver(M) = v[0] || v[1] || v[2] || ... || v[255]v[i] = HASH(s[i]), (i=0,1,2,...,255)

Each v[i] is the hash of the s[i]. The verification data is also 256 * 256 bits = 8K bytes.

- 2)Second, the receiver can verify the message M with PK:M=m[0] || m[1] || m[2] || ... || m[255]PK = PK[0] || PK[1]PK[0] = PK[0][0] || PK[0][1] || PK[0][2] || ... || PK[0][255]PK[1] = PK[1][0] || PK[0][1] || PK[0][2] || ... || PK[0][255]VerificationResult = (v[i] == PK[m[i]][i]), (i=0,1,2,...,255)

Each v[i] should be checked with PK[0][i] or PK[1][i] based upon the m[i] value 0 or 1. If all v[i] are the same as the corresponding PK[][i] value, then the verification passes. Otherwise, the verification fails.

The security of this hash-based signature is based upon the hash function. Because of preimage resistance and collision resistance properties, the attacker cannot calculate the SK and cannot calculate the Sig(M).

- 1)

The key size and signature size are big. In the preceding example, the private key and public key size are 16K bytes. The signature is 8K bytes. Comparing to RSA2048, the signature is 2048 bits = 256 bytes.

- 2)

The signature can only be used once. Once you sign a message, you expose half of the private key. If you sign the second message, you may expose the other half of the private key based upon how many bits are the same as the first message. In the worst case, if the bits in the two messages are totally different, then you expose the whole private key.

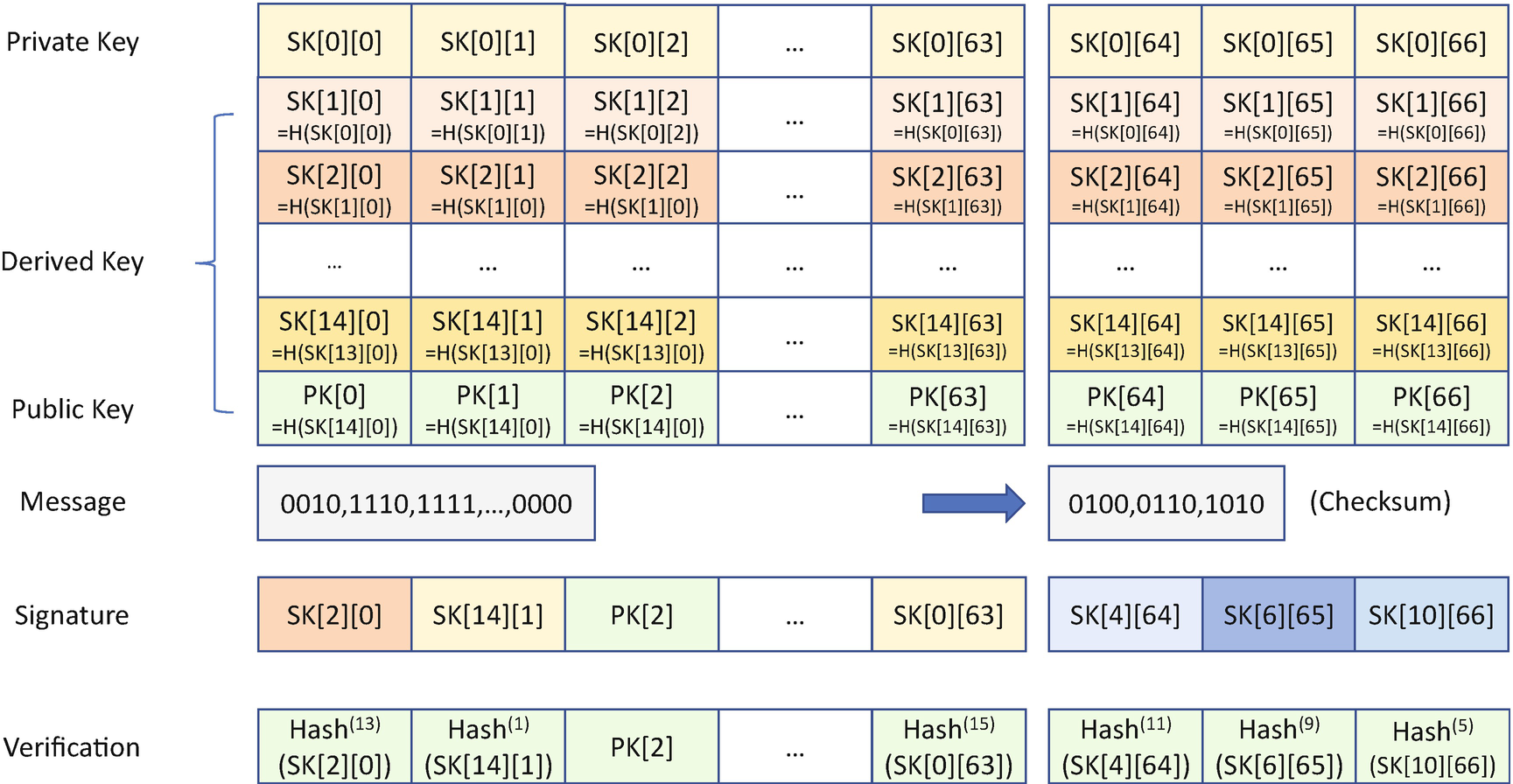

Winternitz One-Time Signature Scheme

In order to resolve the Lamport OTS limitation on large signature sizes, Winternitz proposed an idea based upon time-space trade-off. The signature size can be reduced at the cost of more hash operations. The gist of the Winternitz one-time signature (WOTS) scheme is to sign multiple bits at one time, instead of 1 bit at one time. This number of bits that can be signed at one time is named as the Winternitz parameter (w).

Winternitz One-Time Signature Scheme

- 1)First, we need to generate the key. If we divide the 256-bit message by 4 bits, we can get 64 chunk messages. In order to sign the 64 chunk messages, we need to generate 64 bit strings. Each bit string has 256 bits. The private key is SK whereSK = SK[0]SK[0] = SK[0][0] || SK[0][1] || SK[0][2] || ... || SK[0][63]SK[0][y] = Random, (y=0,1,2,...,63)

Each SK[0][y] is a 256-bit random data. The total key size is reduced to 64*256 bits =16K bits = 2K bytes.

Because each chunk message is 4 bits, we need 2^4 = 16 possible signing keys. Instead of generating other random bit strings, we can hash the SK[0] and use the result as SK[1]. Then we can hash the SK[1] and use the result as SK[2]. Finally we can hash SK[14] and use the result as SK[15]. The public key PK is SK[15]:SK[x] = SK[x][0] || SK[x][1] || SK[x][2] || ... || SK[x][63], (x=1,2,...,15)SK[x][y] = HASH (SK[x-1][y]), (x=1,2,...,15; y=0,1,2,...,63)PK = SK[15]PK[y] = SK[15][y], (y=0,1,2,...,63)With this method, all the signing keys can be derived from the original SK.

- 2)Second, we need to sign the message. Assuming the 256-bit message is M whereM=m[0] || m[1] || m[2] || ... || m[63]m[i] (i=0,1,2,...,63) = 0,1,2,...15.then the message signature is Sig(M) whereSig(M) = s[0] || s[1] || s[2] || ... || s[63]s[i] = SK[m[i]][i], (i=0,1,2,...,63)

Each s[i] is SK[x][i] based upon the m[i] value. As such, the total signature length is 2K bytes.

- 1)First, the receiver needs to generate the verification data Ver(M) with the message Sig(M):M=m[0] || m[1] || m[2] || ... || m[63]Sig(M) = s[0] || s[1] || s[2] || ... || s[63]Ver(M) = v[0] || v[1] || v[2] || ... || v[63]v[i] = HASH (2^w – 1 – m[i], s[i]), (i=0,1,2,...,63)

Each v[i] is the hash of the s[i]. Given a message chunk m[i], the hash operation should be repeated with (2^w – 1 – m[i]) times. For example, if m[i] is 1, then we need to call the hash function 14 times. If the m[i] is 10, then we need to call the hash function 5 times. If m[i] is 15, then we don’t need to call the hash function because the SK[15] is PK itself.

- 2)Second, the receiver can verify the message M with PK:PK = PK[0] || PK[1] || PK[2] || ... || PK[63]VerificationResult = (v[i] == PK[i]), (i=0,1,2,...,63)

Each v[i] should be checked with PK[i]. If all v[i] are the same as the corresponding PK[][i] value, then the verification passes. Otherwise, the verification fails.

Then we need to add an additional SK for the checksum – SK[64], SK[65], SK[66]. In the verification phase, the receiver needs to reconstruct the checksum and verify the signature of the checksum. The right side of Figure 19-6 shows the checksum signing and verification. Even with the additional signing key for the checksum, the final key size in Winternitz OTS is still much smaller than the key size in Lamport OTS.

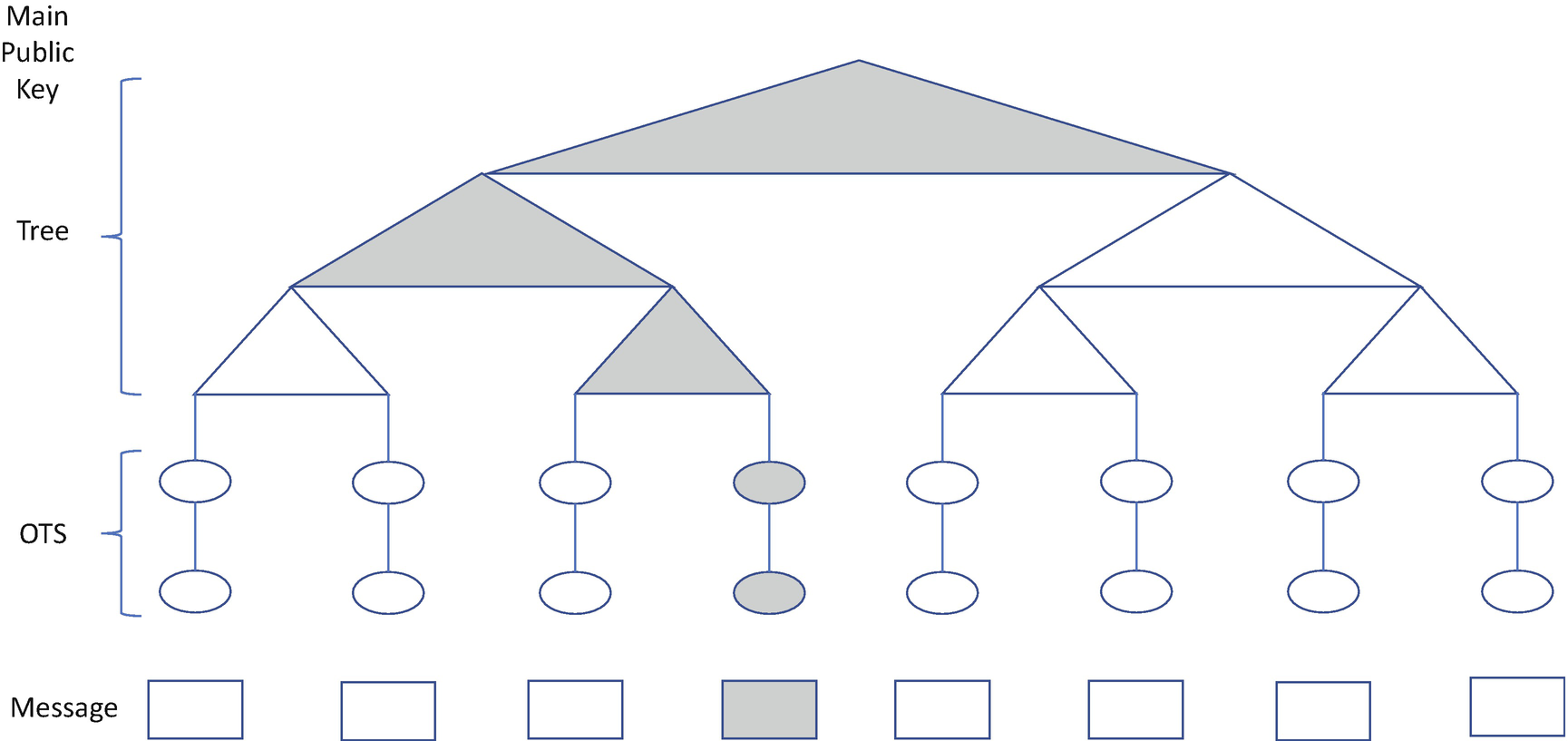

Merkle Signature Scheme

The second limitation of Lamport OTS is the one-time usage. This is an even bigger problem because of the key management requirements. Exchanging a public key is complicated. If a new public key is always required for a new signature, the OTS scheme is hard to be used in practice. In order to make OTS feasible, Merkle introduced the Merkle Signature Scheme (MSS). With MSS, one public key can be used to verify many messages signed by the private key. The user may choose a height value h, and the MSS can help to generate the private key to sign N = 2^h messages.

- 1)

First, we need to generate the keys – a Merkle tree. See Figure 19-12.

Merkle Hash Tree

- 2)

Second, we need to sign the message. We choose the SK[s] to sign the message M and generate Sig(M). This is same as OTS. Then we need to calculate a Path(s), which is the sequence of nodes in the Merkle tree, from the leaf node to the root node. From the Path(s) we can get an authentication path – AuthPath(s) – which is the sequence of nodes in the Merkle tree to help the node in Path(s) to create the main public key.

Path in the Merkle Hash Tree

The final signature is SigMerkle (M, s) = (s, Sig(M), PK[s], AuthPath[s]). AuthPath adds the additional size of the final signature, and it highly depends upon the h value. Here the h is 3, and then the AuthPath size is 3 * 256 bits = 768 bits. In practice, the h will be much bigger to support signing more messages with one main public key.

- 1)

First, the receiver uses PK[s] in the SigMerkle to verify the Sig(M). This step is the same as OTS.

- 2)

Second, the receiver needs to verify the PK[s] with the main public key. This can be achieved to reconstruct the Path[s] with the help of AuthPath[s]. Here, the verifier needs to calculate the following:

If the final calculated v[3][0] is same as the published main public key, then the verification passes. Otherwise, the verification fails.

Once s = 3 is selected, then Path[3] cannot be used anymore. In the next time, we need to choose another path.

Current Status

Hash-Based Signature Scheme (OTS + Merkle Tree)

Today, we have other OTS variants, such as Winternitz OTS+ (WOTS+), Hash to Obtain Random Subset (HORS), Forest of Random Subsets (FORS), and so on, with the purpose to shorten the signature size and accelerate signing and verification. Some of them can be used for more than one time. They are called few-times signature (FTS) schemes. People build other hash-based signature (HBS) schemes, such eXtended Merkle Signature Scheme (XMSS)/Multi-tree XMSS (XMSS-MT) and Leighton-Micali Signatures (LMS). Take XMSS as an example. The big difference is the leaf node. It is not a hash of the OTS public key, but the root node of another tree called L-tree. The L-tree is similar to the Merkle tree. The WOTS+ public key is stored in the leaf node of the L-tree.

Unfortunately, stateful HBS schemes are easy to be misused. Because they are one-time signatures, any private key cannot be used on two or more messages. The implementation must maintain the state to keep track of the one-time private key usage in order to prevent the reuse. The stateful HBS may be used for image signing in the firmware security area. Assuming we choose h to be 10 in a Merkle tree, we can sign 1024 images with one main public key. If the firmware image is released every month, this main public key will cover 85 years.

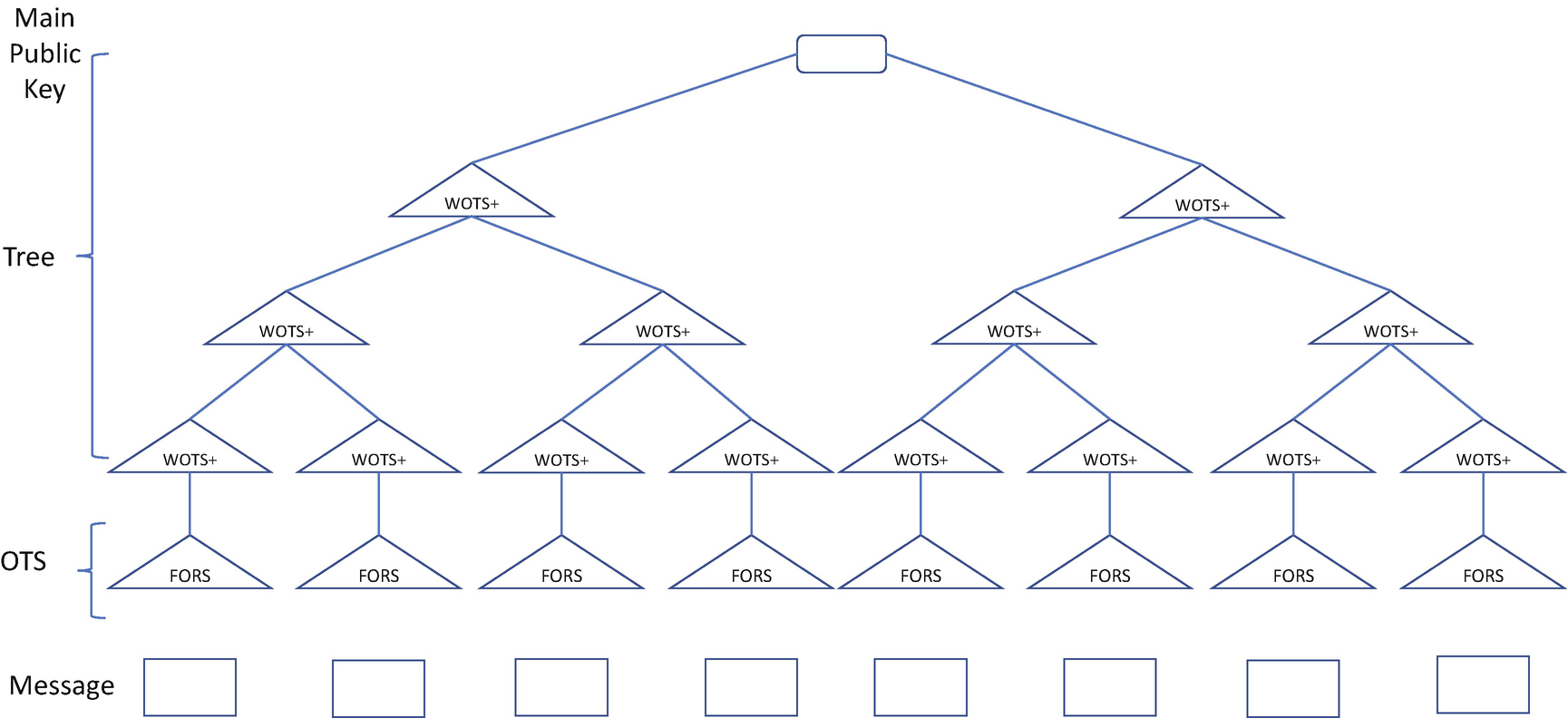

SPHINCS+ Hyper Tree

The benefit of stateless HBS is to eliminate the state maintenance requirement. Since it is also hash based, the security is based upon the hash algorithm. However, the disadvantage of SPHINCS+ is the signature size and speed, because it eliminates the state by using the components from XMSS and working with larger keys and signatures.

For more details of XMSS, LMS, and SPHINCS+, please refer to the RFC 8391, RFC 8554, and the SPHINCS specifications.

Quantum Cryptography

In the previous section, we introduced some cryptographic algorithms which are presumed to be quantum safe. But they are still modern cryptographic algorithms and do not use any quantum mechanism. In this section, we will introduce some cryptographic algorithms which use quantum mechanics as a cryptographic solution.

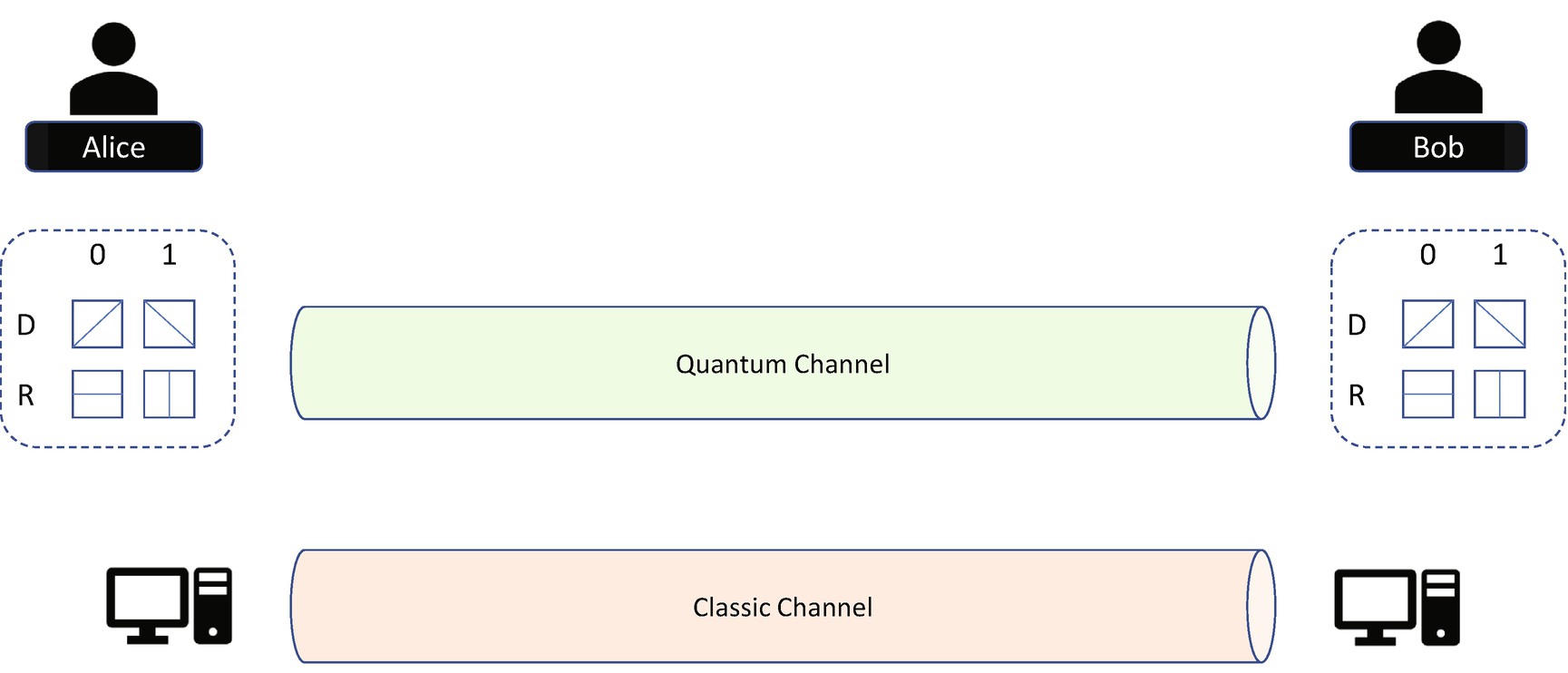

Quantum Key Distribution (QKD)

Quantum key distribution (QKD) is an advanced secure communication method for key exchange. With QKD, two parties can produce and share a common secret for encryption and decryption. QKD is tamper-proof naturally because it is based upon the properties of quantum mechanics. If there is a third party trying to eavesdrop upon the communication, this action will disturb the system. If the modification is detected, then the key distribution can be aborted.

In 1984, Bennet and Brassard published “Quantum cryptography: Public key distribution and coin tossing” to describe a quantum key distribution mechanism. This is the first quantum cryptography protocol, and it is provably secure based upon the quantum property. It is also named as the BB84 protocol.

BB84 Communication Channel

Alice can send photon from either the R or D polarizer. The photon sent from R could be vertical (bit 1) or horizontal (bit 0). The photon sent from D could be 135 degrees (bit 1) or 45 degrees (bit 0). Bob can choose either the R or D beam splitter and check the photon detector. If Bob chooses the same R or D with Alice, the final result will be correct. If Bob choses the different R or D with Alice, the result is unknown – 50% correct and 50% wrong. Realistically, some photons may be lost in transition because of the limitation of the detector.

BB84 Communication Protocol

- 1)

Alice generates a random bit string.

- 2)

Alice chooses a random sequence of sending base – D or R.

- 3)

Alice encodes each bit 0 or 1 in the bit string and sends the photons in the quantum channel. There are four types of photons in the quantum channel.

- 4)

Bob chooses a random sequence of receiving base – D or R.

- 5)

Bob receives the photon sent from Alice and decodes it to 0 or 1. Because some photons might be lost in transition, some bits might be missing in the final result. Because Bob may choose a different receiving base, some bits might be incorrect.

- 6)

Bob reports the sequence of bases which receive the bit. This step filters the bits lost in transition.

- 7)

Alice checks the sequence and tells Bob which one is correct. This step filters the bits which are decoded with a different base D or R. At this time, both Alice and Bob know the correct bits. This correct bit sequence can be treated as a presumably shared secret if there is eavesdropping on the quantum channel. We call it pre-shared secret.

- 8)

Bob reveals some random bits in the pre-shared secret and sends to Alice. This step is to test for eavesdrops. If it happens, the photon state will be disturbed. The bit value might be incorrect.

- 9)

Alice checks the random bits from Bob by comparing them with the original random bits generated. If they are different, that means eavesdrops happened and the communication must be aborted. If they are the same, Alice sends the confirmation to Bob.

Step 3: Shared secret generation phase

- 10)

Alice and Bob remove the revealed bits from the pre-shared secret and use the rest of the bits as the shared secret. Now Alice and Bob finish the negotiation and have a common secret.

The QKD process can help to generate the one-time pad (OTP) password. It must be used one time, and its length must be as long as the message itself.

Quantum Random Number Generation (QRNG)

- 1)

We initialize the qubit to state 0.

- 2)

We put the qubit in the superposition in which the probabilities for 0 and 1 are the same.

- 3)

We measure the value and record the output. Because the output is random – 50% 0 and 50% 1 – we get a random bit.

- 4)

We can repeat steps 1–3 and get a random bit stream.

Quantum Random Bit Generation

Algorithm Recommendation

Similar to modern cryptography, different organizations have started giving recommendations for the quantum safe cryptographic algorithms, also known as post-quantum cryptographic algorithms.

PQCRYPTO Initial Recommendation

Function | Algorithm | Parameter | |

|---|---|---|---|

Symmetric algorithm | Block cipher | AES-256 | 256-bit key |

Salsa20 | 256-bit key | ||

MAC | GCM | 96-bit nonce and 128-bit authenticator | |

Poly1305 | 96-bit nonce and 128-bit authenticator | ||

Asymmetric algorithm | Public key encryption | McEliece with binary Goppa codes | length n = 6960, dimension k = 5413 andadding t = 119 errors |

Digital signature | XMSS (stateful) | ||

SPHINCS-256 (stateless) | |||

NIST Post-Quantum Algorithm Candidates

Usage | Algorithm | Hard Problem |

|---|---|---|

Public key encryption and key establishment | BIKE, Classic McEliece, HQC, LEDAcrypt, NTS-KEM, ROLLO, RQC | Code |

CRYSTALS-KYBER, FrodoKEM, LAC, NewHope, NTRU, NTRU Prime, Round5, SABER | Lattice | |

SIKE | Isogeny | |

Three Bears | Integer ring | |

Digital signature | SPHINCS+, Picnic | Hash |

CRYSTALS-DILITHIUM, FALCON, qTESLA | Lattice | |

GeMSS, LUOV, MQDSS, Rainbow | Multivariate |

NIST Recommendation for Stateful Hash-Based Signatures

Function | Algorithm | Parameter | |

|---|---|---|---|

Asymmetric algorithm | Digital signature | LMS | LMOTS_[SHA256|SHAKE]_[N32|N24]_[W1|W2|W4|W8] |

LMS_[SHA256|SHAKE]_[M32|M24]_[H5|H10|H15|H20|H25] | |||

XMSS | WOTPS-[SHA2|SHAKE256]_[256|192] | ||

XMSS-[SHA2|SHAKE256]_[10|16|20]_[256|192] | |||

XMSSMT-[SHA2|SHAKE256]_ [20/2|20/4|40/2|40/4|40/8|60/3|60/6|60/12]_[256|192] | |||

As we mentioned in the previous section, the stateful hash-based signature has limited use cases, such as image signing, because of the state management requirement. The stateless hash-based signature and other algorithms are still under evaluation.

Image signing is an important use case in the firmware, such as UEFI secure boot feature. Fortunately, the PKCS#7 standards allow for multiple signatures in a SignedData content type. An image may consider including dual signatures. One signature uses the existing asymmetric cryptographic algorithm such as RSA or ECDSA. The other uses the quantum safe algorithm such as XMSS or LMS. The existing system can verify the RSA or ECDSA signature, while the HBS capable system can verify the XMSS or LMS signature.

For the key establishment, using quantum safe modern cryptography could still be the first choice, such as McEliece and other candidates, unless they are proven to be insecure. Quantum cryptography such as QKD might be still a long way from broad usage.

Preparation for the Future

Since we know the quantum computers and quantum computing age are coming, is there anything we need to worry about now?

Mosca’s Theorem

X = the number of years you need your public key cryptography to remain unbroken.

Y = the number of years it will take to replace the current system with one that is quantum safe.

Z = the number of years it will take to break the current tools, using quantum computers or other means.

Mosca’s Theorem

The X factor is determined by the product in the organization. The organization needs to decide the update plan and the end-of-life plan for the product carefully. If X is much small, such as two years, that means your product will have its end of life before the quantum age. Then you don’t need to worry. But if the X is big in the Internet of Things (IoT) environment, such as ten years from now, then you probably need think about the transition plan.

The Y factor is the migration time from the existing infrastructure to the quantum safe infrastructure. It is determined by the readiness of the industry development environment. Currently, there are lots of activities on Y. For example, NIST started the call for proposal for the post-quantum algorithm and hopefully will provide recommendations after two or three years.

The Z factor is determined by the companies which are building the quantum computers. According to research, the large-scale quantum computers that can break 2000-bit RSA are likely to be built in 2030.

Post-Quantum Cryptography Project

Currently, there is an open quantum safe (OQS) project created on GitHub. The goal is to provide an open source library for the quantum safe cryptographic algorithms. It includes a set of key establishment algorithms and digital signature algorithms. liboqs is the open source version quantum safe API. Open quantum safe OpenSSL (OQS-OpenSSL) is a fork of the OpenSSL and adds quantum safe key exchange and authentication algorithms using liboqs for the network Transport Layer Security (TLS) protocol. This prototype can help people understand the quantum safe cryptography and prepare for the migration.

Care must be taken when we include a quantum safe algorithm implementation in the firmware. Most firmware only has limited stack and heap, while we observed that some quantum safe implementations use a large stack during runtime, which may cause the program crash. It is recommended to enable the stack guard feature to detect such stack overrun issue.

Quantum Cryptography Project

At the same time, the industry companies are creating quantum programming projects. For example, Microsoft introduced a Q# programming language and Microsoft Quantum Development Kit. People can write quantum programming code and run on a quantum platform simulation, such as quantum random number generation, BB84 protocol, Grover search, and so on. ProjectQ is an open source software framework for quantum computing with the Python language. It includes examples such as Shor’s algorithm, Grover’s algorithm, and so on. Qiskit is a rich open source framework for working with noisy quantum computers at the level of pulses, circuits, and algorithms. It includes four elements: Aer, a high-performance simulator for quantum circuit with noise; Terra, a set of tools to compose a quantum program at the level of circuits and pulses; Ignis, a framework to understand and mitigate noise in quantum circuits and systems; and Aqua, quantum algorithms and applications.

Summary

Progress in Cryptography

References

Book

[B-1] Ross J. Anderson, Security Engineering: A Guide to Building Dependable Distributed Systems, 2nd edition, Wiley, 2008

[B-2] Bruce Schneier, Applied Cryptography: Protocols, Algorithms and Source Code in C, 2nd edition, Wiley, 1996

[B-3] Bruce Schneier, Secrets and Lies: Digital Security in a Networked World, Wiley, 2004

[B-4] Niels Ferguson, Bruce Schneier, Tadayoshi Kohno, Cryptography Engineering: Design Principles and Practical Applications, Wiley 2010

[B-5] Oded Goldreich, Foundations of Cryptography, volume I & volume II, Cambridge University Press, 2001

[B-6] Jean-Philippe Aumasson, Serious Cryptography: A Practical Introduction to Modern Encryption, No Starch Press, 2017

[B-7] David Johnston, Random Number Generators – Principles and Practices, DeG Press, 2018

[B-8] David Kleidermacher, Embedded System Security – Practical Methods for Safe and Secure Software and Systems Development, Newnes, 2012.

[B-9] Daniel J. Bernstein, Johannes Buchmann, Erik Dahmen, Post-Quantum Cryptography, Springer, 2009

[B-10] Michael A. Nielsen, Isaac L. Chuang, Quantum Computation and Quantum Information, Cambridge University Press, 2011

[B-11] Scott Aaronson, Quantum Computing since Democritus, Cambridge University Press, 2013

[B-12] Eleanor G. Rieffel, Wolfgang Polak, Quantum Computing: A Gentle Introduction, MIT Press, 2014

Conference, Journal, and Paper

[P-1] Auguste Kerckhoffs, “La cryptographie militaire,” Journal des sciences militaires, vol. IX, 1883, www.petitcolas.net/kerckhoffs/index.html

[P-2] Claude Shannon. “Communication Theory of Secrecy Systems,” Bell System Technical Journal, 1949. http://netlab.cs.ucla.edu/wiki/files/shannon1949.pdf

[P-3] Whitfield Diffie, Martin E Hellman, “New Directions in Cryptography,” in IEEE Transactions on Information Theory, 1976, available at https://ee.stanford.edu/~hellman/publications/24.pdf

[P-5] Mihir Bellare, Chanathip Namprempre, “Authenticated Encryption: Relations among notions and analysis of the generic composition paradigm,” in Extended Abstract in Advances in Cryptology: Asiacrypt 2007 Proceedings, http://cseweb.ucsd.edu/~mihir/papers/oem.pdf

[P-6] Hugo Krawczyk, “The Order of Encryption and Authentication for Protecting Communications (Or: How Secure is SSL?),” in Crypto 2001, available at www.iacr.org/archive/crypto2001/21390309.pdf

[P-7] Don Davis, “Defective Sign & Encrypt in S/MIME, PKCS#7, MOSS, PEM, PGP, and XML,” 2001 http://world.std.com/~dtd/sign_encrypt/sign_encrypt7.html

[P-8] Phillip Rogaway, “Evaluation of Some Blockcipher Modes of Operation,” in Cryptography Research and Evaluation Committees (CRYPTREC), 2011, available at https://web.cs.ucdavis.edu/~rogaway/papers/modes.pdf

[P-9] Chanathip Namprempre, Phillip Rogaway, Thomas Shrimpton, “Reconsidering Generic Composition,” in EUROCRYPT 2014, available at https://eprint.iacr.org/2014/206.pdf

[P-10] Pierre L’Ecuyer, “Random Number Generators: Design Principles and Statistical Testing,” Mixmax Workshop CERN, 2016, available at www.iro.umontreal.ca/~lecuyer/myftp/slides/mixmax-cern16.pdf

[P-11] FailOverflow, “Console Hacking – PS3 Epic Fail,” in 27C3 2010, available at www.cs.cmu.edu/~dst/GeoHot/1780_27c3_console_hacking_2010.pdf

[P-12] Bodo Möller, Thai Duong, Krzysztof Kotowicz, “This POODLE Bites: Exploiting The SSL 3.0 Fallback,” google 2014, www.openssl.org/~bodo/ssl-poodle.pdf

[P-13] David Adrian, Karthikeyan Bhargavan, Zakir Durumeric, Pierrick Gaudry, Matthew Green, J. Alex Halderman, Nadia Heninger, Drew Springall, Emmanuel Thomé, Luke Valenta, Benjamin VanderSloot, Eric Wustrow, Santiago Zanella-Béguelin, and Paul Zimmermann, “Imperfect Forward Secrecy: How Diffie-Hellman Fails in Practice,” in 22nd ACM Conference on Computer and Communications Security (CCS ’15), https://weakdh.org/imperfect-forward-secrecy-ccs15.pdf

[P-14] Fabien Perigaud, Alexandre Gazet, Joffrey Czarny, “Turning your BMC into a revolving door,” in ZeroNight 2018, https://airbus-seclab.github.io/ilo/ZERONIGHTS2018-Slides-EN-Turning_your_BMC_into_a_revolving_door-perigaud-gazet-czarny.pdf

[P-15] Maik Ender, Amir Moradi, Christof Paar, “The Unpatchable Silicon: A Full Break of the Bitstream Encryption of Xilinx 7-Series FPGAs,” in USENIX 2020, available at www.usenix.org/system/files/sec20fall_ender_prepub.pdf, https://bit.ly/Starbleed

[P-16] Vincent Lee, “Dealing With Encrypted Router Firmware,” 2020, www.thezdi.com/blog/2020/2/6/mindshare-dealing-with-encrypted-router-firmware

[P-17] “AMD PSP: fTPM Remote Code Execution via crafted EK certificate,” 2018, https://seclists.org/fulldisclosure/2018/Jan/12

[P-18] “AMD-SEV: Platform DH key recovery via invalid curve attack,” 2019, https://seclists.org/fulldisclosure/2019/Jun/46

[P-19] Daniel Moghimi, Berk Sunar, Thomas Eisenbarth, Nadia Heninger, “TPM-FAIL: TPM meets Timing and Lattice Attacks,” in 29th USENIX Security Symposium 2020, available at https://arxiv.org/abs/1911.05673

[P-20] Matus Nemec, Marek Sys, Petr Svenda, Dusan Klinec, Vashek Matyas, “The Return of Coppersmith’s Attack: Practical Factorization of Widely Used RSA Moduli,” in ACM CCS 2017, available at https://crocs.fi.muni.cz/_media/public/papers/nemec_roca_ccs17_preprint.pdf, https://crocs.fi.muni.cz/_media/public/papers/ccs-nemec-handout.pdf

[P-21] Leslie B. Lamport, “Constructing digital signatures from a one-way function,” in Technical Report SRI-CSL-98, SRI International Computer Science Laboratory, 1979. available at www.microsoft.com/en-us/research/uploads/prod/2016/12/Constructing-Digital-Signatures-from-a-One-Way-Function.pdf

[P-22] Charles H. Bennett and Gilles Brassard, “Quantum cryptography: Public key distribution and coin tossing,” in Proceedings of IEEE International Conference on Computers, Systems and Signal Processing, 1984, available at http://researcher.watson.ibm.com/researcher/files/us-bennetc/BB84highest.pdf

[P-23] Ralph C. Merkle, “A Digital Signature Based on a Conventional Encryption Function,” in Proceeding of CRYPTO '87, 1987, available at https://people.eecs.berkeley.edu/~raluca/cs261-f15/readings/merkle.pdf

[P-24] Peter W. Shor, “Algorithms for quantum computation: discrete logarithms and factoring,” in Proceedings 35th Annual Symposium on Foundations of Computer Science, 1994, available at https://pdfs.semanticscholar.org/6902/cb196ec032852ff31cc178ca822a5f67b2f2.pdf

[P-25] Peter. W. Shor, “Polynomial-time algorithms for prime factorization and discrete logarithms on a quantum computer,” in SIAM Journal of Computing 1997, available at https://arxiv.org/pdf/quant-ph/9508027

[P-26] Lov K. Grover, “A fast quantum mechanical algorithm for database search,” in Proceedings 28th Annual ACM Symposium on the Theory of Computing, 1996, available at https://arxiv.org/pdf/quant-ph/9605043.pdf

[P-27] John Proos, Christof Zalka, “Shor’s discrete logarithm quantum algorithm for elliptic curves,” in Quantum Information & Computation 2003, available at https://arxiv.org/pdf/quant-ph/0301141.pdf

[P-28] Martin Roetteler, Michael Naehrig, Krysta M. Svore, Kristin Lauter, “Quantum resource estimates for computing elliptic curve discrete logarithms,” in ASIACRYPT 2017, available at https://arxiv.org/pdf/1706.06752

[P-29] Georg Becker, “Merkle Signature Schemes, Merkle Trees and Their Cryptanalysis,” Seminararbeit Ruhr-Universität Bochum, 2008, available at www.emsec.ruhr-uni-bochum.de/media/crypto/attachments/files/2011/04/becker_1.pdf

[P-30] Mosca M, “Setting the Scene for the ETSI Quantum-safe Cryptography Workshop,” in e-proceedings of 1st Quantum-Safe-Crypto Workshop, 2013, available at http://docbox.etsi.org/Workshop/2013/201309_CRYPTO/e-proceedings_Crypto_2013.pdf

[P-31] European Telecommunications Standards Institute, “Quantum Safe Cryptography and Security: An Introduction, Benefits, Enablers and Challenges,” ETSI Whitepaper 2015, available at https://portal.etsi.org/Portals/0/TBpages/QSC/Docs/Quantum_Safe_Whitepaper_1_0_0.pdf

[P-32] NIST NISTIR-8105, “Report on Post-Quantum Cryptography,” 2016, available at https://csrc.nist.gov/publications/nistir

[P-33] Daniel J. Bernstein, Tanja Lange, “Post-quantum cryptography – dealing with the fallout of physics success,” in Nature 2017, available at https://cr.yp.to/papers/fallout-20170409.pdf

[P-34] Konstantinos Karagiannis, “Quantum Computing Is Here, Powered by Open Source,” 2018, www.rsaconference.com/industry-topics/presentation/quantum-computing-is-here-powered-by-open-source

[P-35] Panos Kampanakis, Dimitrios Sikeridis, “Two Post-Quantum Signature Use-cases: Non-issues, Challenges and Potential Solutions,” in 7th ETSI/IQC Quantum Safe Cryptography Workshop, 2019, available at https://eprint.iacr.org/2019/1276.pdf

[P-36] Leon Groot Bruinderink, Andreas Hulsing, “Oops, I did it again – Security of One-Time Signatures under Two-Message Attacks,” in Selected Areas in Cryptography – SAC 2017, https://leongb.nl/wp-content/uploads/2016/03/SAC2017.pdf

Specification and Guideline

[S-1] IETF, “RFC 8708 – Use of the HSS/LMS Hash-Based Signature Algorithm in the Cryptographic Message Syntax (CMS),” 2020, available at https://tools.ietf.org/html/rfc8708

[S-2] IETF, “RFC 8554 – Leighton-Micali Hash-Based Signatures,” 2019, available at https://tools.ietf.org/html/rfc8554

[S-3] IETF, “RFC 8512 – CBOR Object Signing and Encryption (COSE),” 2017, available at https://tools.ietf.org/html/rfc8512

[S-4] IETF, “RFC 8439 – ChaCha20 and Poly1305 for IETF Protocols,” 2018, available at https://tools.ietf.org/html/rfc8439

[S-5] IETF, “RFC 8422 – Elliptic Curve Cryptography (ECC) Cipher Suites for Transport Layer Security (TLS) Versions 1.2 and Earlier,” 2018, available at https://tools.ietf.org/html/rfc8422

[S-6] IETF, “RFC 8391 – XMSS eXtended Merkle Signature Scheme,” 2018, available at https://tools.ietf.org/html/rfc8391

[S-7] IETF, “RFC 8018: PKCS #5: Password-Based Cryptography Specification Version 2.1,” 2017, available at https://tools.ietf.org/html/rfc8018

[S-8] IETF, “RFC 8017 – PKCS #1: RSA Cryptography Specifications Version 2.2,” 2016, available at https://tools.ietf.org/html/rfc8017

[S-9] IETF, “RFC 7919 – Negotiated Finite Field Diffie-Hellman Ephemeral (FFDHE) Parameters for Transport Layer Security (TLS),” 2016, available at https://tools.ietf.org/html/rfc7919

[S-10] IETF, “RFC 7049 – Concise Binary Object Representation (CBOR),” 2013, available at https://tools.ietf.org/html/rfc7049

[S-11] IETF, “RFC 6979: Deterministic Usage of the Digital Signature Algorithm (DSA) and Elliptic Curve Digital Signature Algorithm (ECDSA),” 2013, available at https://tools.ietf.org/html/rfc6979

[S-12] IETF, “RFC 5869: HMAC-based Extract-and-Expand Key Derivation Function (HKDF),” 2010, available at https://tools.ietf.org/html/rfc5869

[S-13] IETF, “RFC 5116 – An Interface and Algorithms for Authenticated Encryption,” 2008, available at https://tools.ietf.org/html/rfc5116

[S-14] IETF, “RFC 3526 – More Modular Exponential (MODP) Diffie-Hellman groups for Internet Key Exchange (IKE),” 2003, available at https://tools.ietf.org/html/rfc3526

[S-15] IETF, “RFC 2315 – PKCS #7: Cryptographic Message Syntax Version 1.5,” 1998, available at https://tools.ietf.org/html/rfc2315

[S-16] ITU, “X.509 : Information technology – Open Systems Interconnection – The Directory: Public-key and attribute certificate frameworks,” 2019, www.itu.int/rec/T-REC-X.509

[S-17] ITU, “X.680 : Information technology – Abstract Syntax Notation One (ASN.1): Specification of basic notation,” 2015, www.itu.int/rec/T-REC-X.680-201508-I/

[S-18] NIST SP800-208, “Recommendation for Stateful Hash-Based Signature Schemes,” 2015, available at https://csrc.nist.gov/publications/sp800

[S-19] NIST SP800-186, “Recommendations for Discrete Logarithm-Based Cryptography: Elliptic Curve Domain Parameters,” 2019, available at https://csrc.nist.gov/publications/sp800

[S-20] NIST SP800-185, “SHA-3 Derived Functions: cSHAKE, KMAC, TupleHash, and ParallelHash,” 2016, available at https://csrc.nist.gov/publications/sp800

[S-21] NIST SP800-132, “Recommendation for Password-Based Key Derivation,” 2010, available at https://csrc.nist.gov/publications/sp800

[S-22] NIST SP800-131A, “Transitioning the Use of Cryptographic Algorithms and Key Lengths,” 2019, available at https://csrc.nist.gov/publications/sp800

[S-23] NIST SP800-108, “Recommendation for Key Derivation Using Pseudorandom Functions,” 2009, available at https://csrc.nist.gov/publications/sp800

[S-24] NIST SP800-90C, “Recommendation for Random Bit Generator (RBG) Constructions,” 2016, available at https://csrc.nist.gov/publications/sp800

[S-25] NIST SP800-90B, “Recommendation for the Entropy Sources Used for Random Bit Generation,” 2018, available at https://csrc.nist.gov/publications/sp800

[S-26] NIST SP800-90A, “Recommendation for Random Number Generation Using Deterministic Random Bit Generators,” 2015, available at https://csrc.nist.gov/publications/sp800

[S-27] NIST SP800-67, “Recommendation for the Triple Data Encryption Algorithm (TDEA) Block Cipher,” 2017, available at https://csrc.nist.gov/publications/sp800

[S-28] NIST SP800-56C, “Recommendation for Key-Derivation Methods in Key-Establishment Schemes,” 2018, available at https://csrc.nist.gov/publications/sp800

[S-29] NIST SP800-56B, “Recommendation for Pair-Wise Key-Establishment Using Integer Factorization Cryptography,” 2019, available at https://csrc.nist.gov/publications/sp800

[S-30] NIST SP800-56A, “Recommendation for Pair-Wise Key-Establishment Schemes Using Discrete Logarithm Cryptography,” 2018, available at https://csrc.nist.gov/publications/sp800

[S-31] NIST SP800-38G, “Recommendation for Block Cipher Modes of Operation: Methods for Format-Preserving Encryption,” 2016, available at https://csrc.nist.gov/publications/sp800

[S-32] NIST SP800-38F, “Recommendation for Block Cipher Modes of Operation: Methods for Key Wrapping,” 2012, available at https://csrc.nist.gov/publications/sp800

[S-33] NIST SP800-38E, “Recommendation for Block Cipher Modes of Operation: the XTS-AES Mode for Confidentiality on Storage Devices,” 2010, available at https://csrc.nist.gov/publications/sp800

[S-34] NIST SP800-38D, “Cipher Modes of Operation: Galois / Counter Mode (GCM) and GMAC,” 2007, available at https://csrc.nist.gov/publications/sp800

[S-35] NIST SP800-38C, “Cipher Modes of Operation: The CCM Mode for Authentication and Confidentiality,” 2007, available at https://csrc.nist.gov/publications/sp800

[S-36] NIST SP800-38B, “Recommendation for Block Cipher Modes of Operation: the CMAC Mode for Authentication,” 2016, available at https://csrc.nist.gov/publications/sp800

[S-37] NIST SP800-38A Addendum, “Recommendation for Block Cipher Modes of Operation: Three Variants of Ciphertext Stealing for CBC Mode,” 2010, available at https://csrc.nist.gov/publications/sp800

[S-38] NIST SP800-38A, “Recommendation for Block Cipher Modes of Operation: Methods and Techniques,” 2001, available at https://csrc.nist.gov/publications/sp800

[S-39] NIST FIPS 202, “SHA-3 Standard: Permutation-Based Hash and Extendable-Output Functions,” 2015, https://csrc.nist.gov/publications/fips

[S-40] NIST FIPS 198-1, “The Keyed-Hash Message Authentication Code (HMAC),” 2008, https://csrc.nist.gov/publications/fips

[S-41] NIST FIPS 197, “Advanced Encryption Standard (AES),” 2001, https://csrc.nist.gov/publications/fips

[S-42] NIST FIPS 186-5, “Digital Signature Standard (DSS),” 2019, https://csrc.nist.gov/publications/fips

[S-43] NIST FIPS 180-4, “Secure Hash Standard (SHS),” 2015, https://csrc.nist.gov/publications/fips

[S-44] NIST FIPS 140-3, “Security Requirements for Cryptographic Modules,” 2019, https://csrc.nist.gov/publications/fips

[S-45] SPHINCS org, “SPHINCS+ specification,” 2019, available at https://sphincs.org/resources.html

[S-46] GB/T 35276, “SM2 cryptographic algorithm usage specification,” 2017, available at http://openstd.samr.gov.cn

[S-47] GB/T 35275, “SM2 cryptographic algorithm encrypted signature message syntax specification,” 2017, available at http://openstd.samr.gov.cn

[S-48] GB/T 33133, “ZUC stream cipher algorithm,” 2016, available at http://openstd.samr.gov.cn

[S-49] GB/T 32918, “Public key cryptographic algorithm SM2 based on elliptic curves,” 2016, available at http://openstd.samr.gov.cn

[S-50] GB/T 32907, “SM4 block cipher algorithm,” 2016, available at http://openstd.samr.gov.cn

[S-51] GB/T 32905, “SM3 cryptographic hash algorithm,” 2016, available at http://openstd.samr.gov.cn

[S-52] Intel, “Intel 64 and IA-32 Architecture Software Developer Manuals,” 2019, available at https://software.intel.com/en-us/articles/intel-sdm

[S-53] ARM, “ARM TrustZone True Random Number Generator Technical Reference Manual,” 2017, available at http://infocenter.arm.com/help/topic/com.arm.doc.100976_0000_00_en/trustzone_true_random_number_generator_technical_reference_manual_100976_0000_00_en.pdf

Web

[W-1] NSA Commercial National Security Algorithm Suite, https://apps.nsa.gov/iaarchive/programs/iad-initiatives/cnsa-suite.cfm

[W-2] NIST Random Bit Generation, https://csrc.nist.gov/Projects/Random-Bit-Generation

[W-3] NIST Block Cipher Techniques, https://csrc.nist.gov/Projects/block-cipher-techniques

[W-4] NIST Elliptic Curve Cryptography, https://csrc.nist.gov/Projects/elliptic-curve-cryptography

[W-5] NIST Digital Signatures, https://csrc.nist.gov/Projects/digital-signatures

[W-6] NIST Key Establishment, https://csrc.nist.gov/Projects/Key-Management/key-establishment

[W-7] NIST Hash Functions, https://csrc.nist.gov/Projects/Hash-Functions

[W-8] NIST Message Authentication Codes, https://csrc.nist.gov/Projects/Message-Authentication-Codes

[W-9] Cryptographic Key Length Recommendation, https://www.keylength.com/en/4/

[W-10] OpenSSL vulnerabilities, www.openssl.org/news/vulnerabilities.html

[W-11] OpenSSL heartbleed, https://heartbleed.com/

[W-12] ROCA, https://crocs.fi.muni.cz/public/papers/rsa_ccs17, https://github.com/crocs-muni/roca

[W-13] NIST Post Quantum Cryptography, https://csrc.nist.gov/Projects/Post-Quantum-Cryptography