CHAPTER 6

Security Controls for Host Devices

This chapter presents the following topics:

• Trusted operating system

• Endpoint security software

• Host hardening

• Boot loader protections

• Vulnerabilities associated with hardware

• Terminal Services/application delivery services

It’s not inline network encryptors, proxy servers, and load balancers that users directly interface with each day but rather the host devices such as desktops and laptops. Given the users’ laser focus on these device types, hackers will be equally focused on attacking them. Naturally, we must match the attacker’s effort with a myriad of security controls that specifically secure host devices.

In this chapter, we take a look at trusted operating systems to serve as a starting point for a secure computer. Next, we dive into endpoint security software, which is composed of a variety of security tools designed to secure the local computer. We follow this up with host-hardening techniques, which involve various configurations and changes to default settings to lock down a host device. After that we look at boot loader protections to ensure that a computer boots up securely. The last two sections tackle hardware vulnerabilities as well as Terminal Services and application delivery services. By locking down host devices, we can assure users of a secure and productive working environment that helps achieve company objectives.

Trusted Operating System

The concept of a trusted operating system has been around since even before the days of the DoD Trusted Computer System Evaluation Criteria (TCSEC), known as the “Orange Book.” In the early days of computer security, it was believed that if a trusted computing base (TCB) could be built, it would be able to prevent all security issues from occurring. In other words, if we could just build a truly secure computer system, we could eliminate the issue of security problems. Although this is a laudable goal, the reality of the situation quickly asserted itself: no matter how secure we think we have made the system, something always seems to happen that allows a security event to occur. The discussion therefore turned from attempting to create a completely secure computer system to creating a system in which we can place a certain level of trust. The Orange Book was developed to define a series of levels, each reflecting the degree of trust that could be placed in systems certified at that level.

The Orange Book, although containing many interesting concepts that are as valid today as they were when the document was created, was replaced by the Common Criteria (CC), which is a multinational program in which evaluations conducted in one country are accepted by others that also subscribe to the tenets of the CC. At the core of both the Orange Book and the CC is this concept of building a computer system or device in which we can place a certain amount of trust and thus feel more secure about the protection of our systems and the data they process. The problem with this concept is that common operating systems have evolved over the years to maximize ease of use, performance, and reliability. The desire for a general-purpose platform on which to install and run any number of other applications does not lend itself to a trusted environment in which high assurance often equates to a more restrictive environment. This leads to a generalization that if you have an environment requiring maximum flexibility, a trusted platform is not the way to go.

In general, somebody wanting to utilize a trusted operating system probably has a requirement for a multilevel environment. Multilevel security is just what its name implies. On the same system you might, for example, have users who have Secret clearance as well as others who have Top-Secret clearance. You will also have information that is labeled as Secret and other information that is Top-Secret stored on the system. The operating system must provide assurances that individuals who have only a Secret clearance are never exposed to information classified as Top-Secret, and so forth.

Implementation of such a system requires a method to provide a label on all files (and a similar mechanism for all users) that declares the security level of the data. The trusted operating system will have to make sure that information is never copied from a document labeled Top-Secret to a document labeled Secret because of the potential for disclosing information. In the Common Criteria, the requirements for implementing such a system are described in the Labeled Security Protection Profile. In the older Orange Book, this level of security was enabled through the implementation of mandatory access control (MAC). Some vendors have gone through the process of obtaining a certification verifying compliance with the requirements for multilevel security, resulting in products such as Trusted Solaris. Other products have not gone through the certification, but may still provide an environment of trust allowing for this level of separation. An example of this is Security-Enhanced Linux (SELinux).

Microsoft, which has seen many vulnerabilities discovered in its Windows operating systems, attempted to address this issue of trust with its Next-Generation Secure Computing Base (NGSCB) effort. This effort illustrated the trade-off described earlier for trusted platforms: it offered users a more secure computing environment, but at the cost of giving up some control over which applications and files could be run on an NGSCB PC. The Microsoft initiative was announced in 2002 under the code name Palladium. It would have resulted in a secure “fortress” designed to provide enhanced data protection while ensuring digital rights management and content control. Two years later, Microsoft announced it was shelving the project because of waning interest from consumers, but then reversed course and said the project wasn’t completely dead, just no longer going to take as prominent a role.

A little clarification is important at this point. Another term that is often heard related to the subject of trusted computing is “trustworthy computing.” The two are not the same. A trusted system is one in which a failure of the system will break the security policy upon which the system was designed. A trustworthy system, on the other hand, is one that will not fail. Trustworthy computing is not a new concept, but it has taken on a larger presence due to the Microsoft initiative by the same name. This initiative is designed to help instill more public trust in the company’s software by focusing on the four key pillars of security, privacy, reliability, and business integrity.

The CC implemented Evaluation Assurance Levels (EALs) to rate operating systems according to their level of security testing and design. Although the CC has deprecated EALs, they still might appear on the exam due to historical relevance. Here is a breakdown of the different EAL levels:

• EAL1: Functionally Tested

• EAL2: Structurally Tested

• EAL3: Methodically Tested and Checked

• EAL4: Methodically Designed, Tested, and Reviewed

• EAL5: Semi-formally Designed and Tested

• EAL6: Semi-formally Verified Design and Tested

• EAL7: Formally Verified Design and Tested

Although most users won’t have a need for a highly trusted operating system, you’ll find these systems in various high-security government and military environments. What they lack in functionality and ease of use they make up for with security. Such an operating system will have a steeper learning curve, but this is a necessary sacrifice for the furtherance of national security. What follows in the next few topics are examples of trusted operating systems.

SELinux

A project of the National Security Agency (NSA) and the Security-Enhanced Linux (SELinux) community, SELinux is a group of security extensions that can be added to Linux to provide additional security enhancements to the kernel. SELinux provides a mandatory access control (MAC) system that restricts users to policies and rules set by the administrator. It also defines access and rights for all users, applications, processes, and files on the OS. Unlike many OSs, SELinux operates on the principle of default denial, where anything not explicitly allowed is implicitly denied. SELinux is commonly implemented on Android distributions, Red Hat Enterprise Linux, CentOS, Debian, and Ubuntu, among many others. SELinux can operate in one of three modes:

• Disabled SELinux does not load a security policy.

• Permissive SELinux logs warnings about policy violations but does not enforce security policy.

• Enforcing SELinux enforces security policy.
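To make the three modes concrete, here is a minimal Python sketch that reports which mode the running kernel is in. It assumes selinuxfs is mounted at /sys/fs/selinux (the usual location on current distributions; some older systems used /selinux) and that the enforce node there holds 1 for enforcing and 0 for permissive. The function name selinux_mode is hypothetical; on a real system the getenforce command reports the same information.

```python
from pathlib import Path


def selinux_mode(selinuxfs: str = "/sys/fs/selinux") -> str:
    """Report the current SELinux mode: 'disabled', 'permissive', or 'enforcing'.

    Assumption: selinuxfs is mounted at `selinuxfs`. Its `enforce` node
    contains "1" in enforcing mode and "0" in permissive mode. If the node
    is missing, no policy is loaded, so we treat SELinux as disabled.
    """
    enforce = Path(selinuxfs) / "enforce"
    if not enforce.exists():
        return "disabled"
    return "enforcing" if enforce.read_text().strip() == "1" else "permissive"


if __name__ == "__main__":
    print(f"SELinux mode: {selinux_mode()}")
```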

SEAndroid

As stated previously, SELinux commonly runs on Android, hence the adapted version called SEAndroid. As of Android version 4.4 (KitKat), Android supports SEAndroid with the “enforcing” mode, which means that permission denials are not only logged but also enforced by a security policy. This helps limit malicious or corrupt applications from causing damage to the OS. The benefits described previously for SELinux have been grafted onto the Android OS.

Trusted Solaris

Although deprecated now in favor of Solaris Trusted Extensions, Trusted Solaris was a group of security-evaluated OSs based on earlier versions of Solaris. The Solaris Trusted Extensions added enhancements to Trusted Solaris, including accounting, auditing, device allocation, mandatory access control labeling, and role-based access control.

Least Functionality

The principle of least privilege (or functionality) is a requirement that users are granted only the privileges necessary to access the resources they need, nothing more and nothing less. If a task is not explicitly part of a user’s job description, the user should not be able to perform it. This limits users’ permissions and rights, preventing unauthorized behavior as well as accidental damage to their own systems. It also makes it harder for any malware running on their systems to escalate privileges. As a result, least functionality helps achieve the goals of a trusted operating system.
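The deny-by-default idea behind least privilege can be sketched in a few lines of Python. The roles, permission names, and is_allowed function below are hypothetical. The point is that a request succeeds only when it has been explicitly granted, and everything else, including requests from unknown roles, is refused.

```python
# Hypothetical role-to-permission mapping; role and permission names are invented.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "clerk": frozenset({"read_invoice", "create_invoice"}),
    "auditor": frozenset({"read_invoice", "read_audit_log"}),
}


def is_allowed(role: str, action: str) -> bool:
    """Grant an action only if the role explicitly lists it (default deny).

    Unknown roles and unlisted actions both fall through to False, so
    anything outside a user's job description is refused.
    """
    return action in ROLE_PERMISSIONS.get(role, frozenset())


if __name__ == "__main__":
    for role, action in [("clerk", "create_invoice"),
                         ("clerk", "read_audit_log"),
                         ("intern", "read_invoice")]:
        verdict = "allow" if is_allowed(role, action) else "deny"
        print(f"{role:8} {action:15} -> {verdict}")
```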

Endpoint Security Software

Endpoint security refers to a security approach in which each device (that is, endpoint) is responsible for its own security. That is not to say that other layers of security aren’t required, but rather that the endpoint must also contribute directly to its own security. We have already discussed an example of endpoint security: the host-based firewall. Instead of relying solely on physical network firewalls to filter traffic for all hosts on the network, the host-based firewall implements filtering specifically at the host endpoint. Often, other security mechanisms such as virtual private networks (VPNs) will make it harder for network security devices (such as intrusion detection systems) to do their job because the contents of packets will be encrypted. Therefore, if examination of the contents of a packet is important, it will need to be done at the endpoint. A number of very common software packages are also designed to push protection to the devices, including antimalware, antivirus, anti-spyware, and spam-filtering software, to name a few. We will discuss each of these, and more, in this section.

Antimalware

Antimalware software is a general-purpose security tool designed to prevent, detect, and eradicate multiple forms of malware such as viruses, worms, Trojan horses, spyware, and more. The term “malware” is short for malicious software and encompasses a number of different pieces of programming designed to inflict damage on a computer system or its data, to deny use of the system or its resources to authorized users, or to steal sensitive information that may be stored on the computer.

For malware to be effective, its malicious intent generally must be concealed from the user. This can be done by attaching the malware to another program, or making it part of the program itself—while still remaining hidden. One of the things that malware will often do is to attempt to replicate itself (in the case of worms and viruses, for example), and often the nefarious purpose may not immediately manifest itself so that the malware can accomplish maximum penetration before it performs its destructive purpose.

Although most of us are guilty of calling malware a “virus,” we need to be much more specific for the exam, as shown in the following list:

• Virus Malicious code that replicates after attaching itself to files on a victim’s device. When the victim’s files run, the virus is able to execute its payload. In other words, viruses cannot replicate on their own.

• Worm Self-replicating malicious code that can execute and spread independently of the victim’s applications or files. Unlike viruses, worms replicate on their own with zero human intervention.

• Trojan horse Malicious code disguised as seemingly harmless or friendly code.

• Spyware Malware that collects sensitive information about infected victims.

• Rootkit A stealth-like group of files that seek administrator or root privileges for total and near-invisible control of a device. Some rootkits can obtain kernel-level privileges on the device, which makes them difficult to detect and eradicate.

• Ransomware Malicious software that encrypts the victim’s files or threatens to publish them unless the victim pays a timely ransom—usually in the form of cryptocurrency for untraceability.

• Keylogger Software (or hardware) that captures a victim’s physical keystrokes on the keyboard. Although not necessarily illegal, many keyloggers are used for capturing passwords and other sensitive information.

• Grayware Software that behaves in an irritating or abnormal way, but isn’t classified with the more destructive forms of malware like viruses, worms, and Trojan horses. For example, grayware might change your home page, rearrange your desktop icons, or perform other annoying actions.

• Adware Applications that generate unwanted pop-ups or advertisements. Like grayware, adware generally isn’t considered a “major league” form of malware, but that doesn’t mean it can’t be.

• Logic bomb A form of malware that only runs after certain conditions are met, such as a specific date/time or when the Calculator application has been launched 15 times.

Antimalware vendors create applications designed to prevent, detect, and remove malware infections. The preferred route is to prevent infection in the first place. Antimalware packages that are designed to prevent installation of malware on a system provide what is known as real-time protection, because in order to prevent infection, the package must spot an attempt to infect a system and stop it from succeeding. Antimalware packages designed to detect and remove malware perform scheduled or manual scans of the computer system, including all files, programs, and the operating system. Real-time protection requires the antimalware package to run continuously, whereas detect-and-remove packages can be run on an occasional basis.

Antivirus

Although most of today’s antimalware tools target multiple forms of malware, many businesses still use tools that are more limited in scope, such as antivirus software. Antivirus software is designed specifically to remediate viruses, worms, and Trojan horses—and that’s about it. Such tools can accomplish their goal in a couple of ways:

• Signature-based detection A detection method that looks for patterns of data known to be part of a virus. This works well for viruses that are already known and are not known to evolve or change—as opposed to polymorphic viruses that can modify themselves in order to avoid signature-based detection. Another method to detect such self-modifying viruses is to analyze code in order to allow for slight variations of known viruses. This will generally allow the antivirus software to detect viruses that are a variant of an older virus, which is quite common.

• Heuristic-based detection A detection method based on analysis of code in order to detect previously unknown or new variants of existing viruses. The new viruses that have not been seen before are often referred to as zero-day (or 0-day) viruses or threats.
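A minimal Python sketch of signature-based detection follows. The signature names and byte patterns are invented and match no real malware; production scanners use far larger databases and faster multi-pattern matching. The second input in the usage example shows why polymorphic viruses are a problem for this method: changing a single byte is enough to slip past an exact-match signature.

```python
# Hypothetical signature database: the names and byte patterns are invented
# for illustration and do not correspond to real malware.
SIGNATURES: dict[str, bytes] = {
    "Demo.Virus.A": bytes.fromhex("deadbeef0badf00d"),
    "Demo.Worm.B": b"\x90\x90\xcc\xcc\x31\xc0",
}


def scan(data: bytes, signatures: dict[str, bytes] = SIGNATURES) -> list[str]:
    """Signature-based detection: report every known pattern found in data."""
    return [name for name, pattern in signatures.items() if pattern in data]


if __name__ == "__main__":
    infected = b"header" + bytes.fromhex("deadbeef0badf00d") + b"trailer"
    # A polymorphic variant that changed a single byte of its body.
    variant = b"header" + bytes.fromhex("deadbeef0badf00e") + b"trailer"
    print(scan(infected))  # ['Demo.Virus.A']
    print(scan(variant))   # []: the exact-match signature misses the mutation
```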

Because virus detection is an inexact science, two possible types of errors can occur. A false-positive error is one in which the antivirus program decides that a certain file is malicious when, in fact, it is not. A false-negative error occurs when the antivirus software decides that a file is safe when it actually does contain malicious content. Obviously, the desire is to limit both of these errors. The challenge is to “tighten” the system to a point so that it catches all (or most) viruses while rejecting as few benign programs as possible.
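The trade-off between the two error types can be illustrated by sweeping a detection threshold over a handful of scored samples. The scores, labels, and error_counts function are hypothetical; a real product’s scoring is far more involved.

```python
# Hypothetical detector scores (0.0 = surely benign, 1.0 = surely malicious)
# paired with the ground truth for each sample. The numbers are invented.
SAMPLES = [(0.95, True), (0.80, True), (0.60, False),
           (0.55, True), (0.40, False), (0.20, False)]


def error_counts(samples, threshold):
    """Return (false_positives, false_negatives) when flagging score >= threshold."""
    fp = sum(1 for score, malicious in samples if score >= threshold and not malicious)
    fn = sum(1 for score, malicious in samples if score < threshold and malicious)
    return fp, fn


if __name__ == "__main__":
    for t in (0.9, 0.5, 0.3):
        fp, fn = error_counts(SAMPLES, t)
        print(f"threshold={t}: false positives={fp}, false negatives={fn}")
```

With these numbers, a threshold of 0.9 yields no false positives but misses two malicious samples. Lowering it to 0.5 catches every malicious sample at the cost of one false positive, and dropping it further to 0.3 only adds a second false positive.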

When you’re selecting an antivirus vendor, one important factor to consider is how frequently the database of virus signatures is updated. With variations of viruses and new viruses occurring on a daily basis, it is important that your antivirus software uses the most current list of virus signatures in order to stand a chance of protecting your systems. Most antivirus vendors offer signature updating for some specified initial period of time—for example, one or two years. At the conclusion of this period, a subscription renewal will be required in order to continue to obtain information on new threats. Although it is tempting to let this ongoing expense lapse, this is not generally a good idea because your system would then only be protected against viruses that are known up to the point when you quit receiving updates.

Anti-Spyware

Spyware is a special breed of malicious software. It is designed to collect information about the users of the system without their knowledge. The type of information that may be targeted by spyware includes Internet surfing habits (sites that have been visited or queries that have been made), user IDs and passwords for other systems, and personal information that might be usable in an identity theft attempt.

Anti-spyware is designed to perform a similar function to antivirus software, except its purpose is to prevent, detect, and remove spyware infections. Windows Defender was originally just an anti-spyware tool but has since incorporated multiple forms of malware eradication into its purview. Anti-spyware software can be employed in a real-time mode to prevent infection by scanning all incoming data and files, attempting to identify spyware before it can be activated on the system. Alternatively, anti-spyware software can be run periodically to scan all files on your system in order to determine if it has already been installed. It will concentrate on operating system files and installed programs. Similar to antivirus software, anti-spyware software looks for known patterns of existing spyware. As a result, anti-spyware software also relies on a database of known threats and requires frequent updates to this database in order to be most effective. Some anti-spyware software does not rely on a database of signatures but instead scans certain areas of an operating system where spyware often resides.

Writers of spyware have gotten clever in their attempts to evade anti-spyware detection. Some now have a pair of programs that run (if you were not able to prevent the initial infection) and monitor each other so that if one of the programs is killed, the other part of the pair will immediately respawn it. Some spyware also watches special operating system files (such as the Windows registry), and if the user attempts to restore certain keys the spyware has modified, or attempts to remove registry items it has added, it will quickly set them back again. One trick that may help in removing persistent spyware is to reboot the computer in safe mode and then run the anti-spyware package to allow it to remove the installed spyware.

Spam Filters

Spam is the term used to describe unsolicited bulk e-mail messages. It is also often used to refer to unsolicited bulk messages sent via instant messaging, newsgroups, blogs, or any other method that can be used to send a large number of messages. E-mail spam is also sometimes referred to as unsolicited bulk e-mail (UBE). It frequently contains commercial content, and this is the reason for sending it in the first place: quick, easy mass marketing. Increasingly today, e-mail spam is sent using botnets, which are networks of compromised computers that are referred to as bots or zombies. The bots on the compromised systems will stay inactive until they receive a message that activates them, at which time they can be used for mass mailing of spam or other nefarious purposes such as a denial-of-service attack on a system or network. Botnets usually number in the thousands of systems but can grow, without exaggeration, to tens of millions, as with the Conficker, Bredolab, and other botnets.

Spam is generally not malicious but rather is simply annoying, especially when numerous spam e-mail messages are received daily. The goal of spam filters is to keep spam from reaching your inbox so that you don’t have to deal with it. Spam filters are basically special versions of the more generic e-mail filters. Spam filtering can be accomplished in several different ways. One simple way is to look at the content of the e-mail and search for keywords that often appear in spam (for example, the names of drugs such as Cialis that are commonly advertised in mass mailings). The problem with keyword searches is the issue of false positives discussed earlier. Filtering on the characters “cialis” would also cause an e-mail containing the word “specialist” to be filtered, because those letters appear within it. Users are generally much more forgiving of an occasional spam message slipping through the filter than of having valid e-mail filtered, so this is a critical issue. Usually, when an e-mail has been identified as spam, it is sent to a special “quarantine” folder. The user can then periodically check the folder to ensure that legitimate e-mail has not been inadvertently filtered.
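The “specialist” problem is easy to reproduce. This Python sketch (the keyword list and function names are hypothetical) compares naive substring matching with whole-word matching and routes suspected spam to a quarantine folder rather than deleting it. Whole-word matching avoids this particular false positive, though spammers can still evade it with deliberate misspellings.

```python
import re

# Hypothetical keyword list; real filters combine many more signals.
SPAM_KEYWORDS = ["cialis"]


def naive_is_spam(body: str) -> bool:
    """Substring match: flags any message containing the letters of a keyword."""
    text = body.lower()
    return any(keyword in text for keyword in SPAM_KEYWORDS)


def word_is_spam(body: str) -> bool:
    """Whole-word match: the keyword must be bounded by non-word characters."""
    return any(re.search(rf"\b{re.escape(k)}\b", body, re.IGNORECASE)
               for k in SPAM_KEYWORDS)


def route(body: str) -> str:
    """Send suspected spam to quarantine so the user can review it later."""
    return "quarantine" if word_is_spam(body) else "inbox"


if __name__ == "__main__":
    legit = "Your appointment with the specialist is at 3 P.M."
    spam = "Cheap Cialis, no prescription needed!"
    print(naive_is_spam(legit), word_is_spam(legit))  # True False
    print(route(legit), route(spam))                   # inbox quarantine
```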

Another method for filtering spam is to keep a “blacklist” of sites that are known to be friendly to spammers. If e-mail is received from an IP address for one of these sites, it will be filtered. The lists may also contain known addresses for botnets that have been active in the past. An interesting way to populate these blacklists is through the use of spamtraps. These are e-mail addresses that are not real in the sense that they are not assigned to a real person or entity. They are seeded on the Internet so that when spammers attempt to collect lists of e-mail addresses by searching through websites and other locations on the Internet for e-mail addresses, these bogus e-mail addresses are picked up. Any time somebody sends an e-mail to them, because they are not legitimate addresses, it is highly likely that the e-mail is coming from a spammer and the IP address from which the e-mail was generated can be placed on the blacklist.
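A minimal Python sketch of how a blacklist and spamtraps work together: mail from a listed IP address is rejected, and any IP address that sends to a spamtrap is added to the list. The class name, trap addresses, and in-memory set are assumptions for illustration; real deployments typically share such lists across many mail servers. The IP addresses in the example come from ranges reserved for documentation.

```python
import ipaddress

# Hypothetical spamtrap addresses seeded on public web pages; no person uses them.
SPAMTRAPS = {"trap-7f3a@example.com", "sales-archive@example.com"}


class SpamBlacklist:
    """Rejects mail from listed IPs and lists any IP that mails a spamtrap."""

    def __init__(self) -> None:
        # Normalized IP address strings; a real list would be shared and persisted.
        self.blocked: set[str] = set()

    def check(self, sender_ip: str, recipient: str) -> str:
        # Normalize the address; raises ValueError on malformed input.
        ip = str(ipaddress.ip_address(sender_ip))
        if ip in self.blocked:
            return "reject"
        if recipient.lower() in SPAMTRAPS:
            # Nobody legitimately writes to a spamtrap, so blacklist the sender.
            self.blocked.add(ip)
            return "reject"
        return "deliver"


if __name__ == "__main__":
    bl = SpamBlacklist()
    print(bl.check("203.0.113.9", "alice@example.org"))      # deliver
    print(bl.check("203.0.113.9", "trap-7f3a@example.com"))  # reject, IP now listed
    print(bl.check("203.0.113.9", "alice@example.org"))      # reject
```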

Individuals who want to avoid having their e-mail addresses harvested from websites can modify how the address is written so that human readers will quickly recognize it as an e-mail address but automated programs may not. For example, a user with the e-mail address john@abcxyzcorp.com might write it as john(at)abcxyzcorp(dot)com.
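The obfuscation technique can be sketched in Python alongside a simple harvesting pattern of the kind a crawler might use. Both the regular expression and the obfuscate function are hypothetical. Determined harvesters can decode common patterns such as (at) and (dot), so this only defeats unsophisticated tools.

```python
import re

# A simplistic harvesting pattern of the kind a crawler might use (assumption).
HARVEST_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def obfuscate(address: str) -> str:
    """Rewrite an address so people can read it but simple harvesters miss it."""
    return address.replace("@", "(at)").replace(".", "(dot)")


if __name__ == "__main__":
    page = "Contact " + obfuscate("john@abcxyzcorp.com")
    print(page)                      # Contact john(at)abcxyzcorp(dot)com
    print(HARVEST_RE.findall(page))  # []
```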

The more generic e-mail filters can be used to block spam but also other incoming or outgoing e-mails. They may block e-mail from sites known to send malicious content, may block based on keywords that might indicate the system is being used for other-than-official purposes, or could filter outgoing traffic based on an analysis of the content to ensure that sensitive company data is not sent (or at least not sent in an unencrypted manner).

Patch Management

Managing an organization’s software updates is a classic case of picking your poison. If you patch systems too quickly, you risk breaking your stuff. If you patch systems too slowly due to testing, you risk others breaking your stuff. Although patch management cannot completely solve these challenges, it helps balance the competing desires of testing patches while not waiting too long to deploy them. Shown here are the common types of software updates:

• Security patch Software updates that fix application vulnerabilities

• Hotfix Critical updates for various software issues that should not be delayed

• Service pack A large collection of updates for a particular product, released as one installable package

• Rollup A smaller collection of updates for a particular product

Patching is necessary because software that is actively supported by vendors, or internal developer teams, is never truly “finished.” There’s always room for improvement, whether the goals are to enhance the software’s reliability, functionality, performance, or, most commonly, security. Software patches are developed by the application vendor, or in-house developer, to fix bugs discovered during in-house code testing, during public beta testing, or by white hat and black hat hackers alike. Unless software is developed in-house, updates typically come from the software vendor’s website. Since updates are published for various operating systems, applications, and even firmware, organizations can easily be overwhelmed. The larger the organization, the more unique products it has that require patching. Certain vendors release updates constantly because of their products’ popularity (and the resulting attention those products receive from hackers).

Although most products try to help by downloading updates automatically, letting them do so effectively bypasses your patch management solution, because the updates skip testing, bandwidth control, compliance monitoring, and so forth. Testing updates is key because, as with medications in the pharmaceutical industry, updates should be thoroughly tested after development to ensure that they cause no adverse side effects on production systems. A proper patch management solution involves detecting, assessing, acquiring, testing, deploying, and maintaining software updates. This helps ensure that all operating systems, applications, and firmware continue to receive the latest updates with minimal security risk. Given the strong security focus of software updates, it is critical that organizations take patch management seriously. The patch management steps are as follows:

• Detect Discover missing updates.

• Assess Determine issues and resulting mitigations expected from the patch.

• Acquire Download the patch.

• Test Install and assess the patch on quality assurance systems or virtual machines.

• Deploy Distribute the patch to production systems.

• Maintain Monitor systems for any negative effects from the updates, and determine whether additional security patches are needed.
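The steps above can be sketched as a single patch cycle in Python. The Patch record, the severity ranking, the patch IDs, and the passes_qa callback (standing in for testing on quality assurance systems or virtual machines) are all hypothetical. Acquisition is left as a comment, and a patch that fails testing is held back for follow-up rather than deployed.

```python
from dataclasses import dataclass
from typing import Callable

SEVERITY_ORDER = ("critical", "important", "low")


@dataclass(frozen=True)
class Patch:
    patch_id: str  # hypothetical identifiers, e.g. "PATCH-3"
    product: str
    severity: str  # one of SEVERITY_ORDER


def detect(installed: set[str], available: list[Patch]) -> list[Patch]:
    """Detect: find published patches that are not installed yet."""
    return [p for p in available if p.patch_id not in installed]


def assess(missing: list[Patch]) -> list[Patch]:
    """Assess: handle the most severe issues first."""
    rank = {s: i for i, s in enumerate(SEVERITY_ORDER)}
    return sorted(missing, key=lambda p: rank.get(p.severity, len(rank)))


def patch_cycle(installed: set[str], available: list[Patch],
                passes_qa: Callable[[Patch], bool]) -> tuple[list[str], list[str]]:
    """Run one detect/assess/test/deploy pass; return (deployed, held_back)."""
    deployed, held_back = [], []
    for patch in assess(detect(installed, available)):
        # Acquire: a real system would download the package here (omitted).
        if passes_qa(patch):                # Test on QA systems or VMs first
            installed.add(patch.patch_id)   # Deploy to production
            deployed.append(patch.patch_id)
        else:
            held_back.append(patch.patch_id)  # Maintain: investigate before retrying
    return deployed, held_back


if __name__ == "__main__":
    installed = {"PATCH-1"}
    available = [Patch("PATCH-1", "os", "critical"), Patch("PATCH-2", "os", "low"),
                 Patch("PATCH-3", "browser", "critical"),
                 Patch("PATCH-4", "database", "important")]
    # Pretend PATCH-4 broke something during QA testing.
    print(patch_cycle(installed, available, lambda p: p.patch_id != "PATCH-4"))
    # (['PATCH-3', 'PATCH-2'], ['PATCH-4'])
```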

HIPS/HIDS

Earlier we discussed the use of firewalls to block or filter network traffic to a system. Although this is an important security step to take, it is not sufficient to cover all situations. Some traffic may be totally legitimate based on the firewall rules you set, but may result in an individual being able to exploit a vulnerability within the operating system or an application program. Firewalls are prevention technology; they are designed to prevent a security incident from occurring. Intrusion detection systems (IDSs) were initially designed to detect when your prevention technologies failed, allowing an attacker to gain unauthorized access to your system. Later, as IDS technology evolved, these systems became more sophisticated and were placed in-line so that they did not simply detect when an intrusion occurred but rather could prevent it as well. This led to the development of intrusion prevention systems (IPSs).

Early IDS implementations were designed to monitor network or system activity, looking for signs that an intrusion had occurred. If one was detected, the IDS would notify administrators of the intrusion, who could then respond to it. Two basic methods were used to detect intrusive activity. The first, anomaly-based detection, is based on statistical analysis of current network or system activity versus historical norms. Anomaly-based systems build a profile of what is normal for a user, system, or network, and any time current activity falls outside of the norm, an alert is generated. The types of things that might be monitored include the times and specific days a user may log into the system (for example, do they ever access the system on weekends or late at night?), the type of programs a user normally runs, the amount of network traffic that occurs, specific protocols that are frequently (or never) used, and what connections generally occur. If, for example, a session is attempted at midnight on a Saturday, when the user has never accessed the system on a weekend or after 6:00 P.M., this might very well indicate that somebody else is attempting to penetrate the system.
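Anomaly-based detection can be illustrated with a simple statistical profile. This Python sketch assumes a hypothetical baseline of daily network traffic for one workstation and flags any observation more than three standard deviations from the historical mean. The function name, data, and threshold are assumptions; real products build much richer profiles (login times, programs run, protocols used) and adjust them over time.

```python
from statistics import mean, pstdev

# Hypothetical baseline: megabytes a user's workstation sent per day last week.
BASELINE_MB = [120, 135, 128, 140, 122, 131, 127]


def is_anomalous(observed: float, history: list[float], threshold: float = 3.0) -> bool:
    """Flag activity more than `threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Any change from a perfectly flat baseline stands out.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


if __name__ == "__main__":
    print(is_anomalous(135, BASELINE_MB))  # False: within the normal range
    print(is_anomalous(410, BASELINE_MB))  # True: far outside the profile
```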

The second method to accomplish intrusion detection is based on attack signatures. A signature-based system relies on known attack patterns and monitors for them. Certain commands or sequences of commands may have been identified as methods to gain unauthorized access to a system. If these are ever spotted, it indicates that somebody is attempting to gain unauthorized access to the system or network. These attack patterns are known as signatures, and signature-based systems have long lists of known attack signatures they monitor for. The list will occasionally need to be updated to ensure that the system is using the most current and complete set of signatures.

Advantages and disadvantages are associated with both types of systems, and some implementations actually combine both methods in order to try and cover all possible avenues of attack. Signature-based systems suffer from the tremendous disadvantage that they, by definition, must rely on a list of known attack signatures in order to work. If a new vulnerability is discovered (a zero-day exploit), there will not be a signature for it and therefore signature-based systems will not be able to spot it. There will always be a lag between the time a new vulnerability is discovered and the time when vendors are able to create a signature for it and push it out to their customers. During this period of time, the system will be at risk from an exploit taking advantage of this new vulnerability. This is one of the key points to consider when evaluating different vendor IDS and IPS products: how long does it take them to push out new signatures?

Because anomaly-based systems do not rely on a signature, they have a better chance of detecting previously unknown attacks—as long as the activity falls outside of the norm for the network, system, or user. One of the problems with systems that strictly use anomalous activity detection is that they need to constantly adapt the profiles used because user, system, and network activity changes over time. What may be normal for you today may no longer be normal if you suddenly change work assignments. Another issue with strictly profile-based systems is that a number of attacks may not appear to be abnormal in terms of the type of traffic they generate and therefore may not be noticed. As a result, many systems combine both types so that all the aforementioned advantages can be used to create the best-possible monitoring situation.

IDS and IPS also have the same issues with false-positive and false-negative errors as was discussed before. Tightening an IDS/IPS to spot all intrusive activity so that no false negatives occur (that is, so no intrusion attempts go unnoticed) means that the number of false positives (that is, activity identified as intrusive that in actuality is not) will more than likely increase dramatically. Because an IDS generates an alert when intrusive activity is suspected and because an IPS will block the activity, falsely identifying valid traffic as intrusive will cause either legitimate work to be blocked or an inordinate number of alert notifications that administrators will have to respond to. Frequently, when the number of alerts generated is high, and most turn out to be false positives, administrators will get into the extremely poor habit of simply ignoring the alerts. Tuning your IDS/IPS for your specific environment is therefore an extremely important activity.

Data Loss Prevention

Data loss prevention (DLP) is, in a way, the opposite of intrusion prevention systems. Think about what intrusion prevention systems do: they detect, notify, and mitigate inbound attacks. They help stop the bad stuff from being brought into the company. What solution, then, could we use to detect, report on, and prevent the good stuff from getting out of the company? In other words, how do we prevent data from being leaked or otherwise falling into unauthorized hands? That’s where data loss prevention comes in.

DLP involves the technology, processes, and procedures designed to detect the unauthorized removal of data from a system. Like host-based firewalls, DLP solutions are often implemented at the endpoint (host level) so that the host itself can determine whether unauthorized attempts to destroy, move, or copy data are taking place. DLP solutions respond by blocking the transfer or dropping the connection entirely. Administrators create DLP policies that identify sensitive content based on its classification and then specify the action to take when a given unauthorized behavior is performed on that content. This guards against both malicious attacks and accidents.
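A common building block of DLP content inspection is pattern matching for sensitive data types. The Python sketch below assumes a hypothetical policy that blocks any transfer to an external destination if the content contains something that looks like a payment card number. It combines a regular expression with the Luhn checksum to weed out random digit strings. Real DLP products use far richer classifiers, and the policy and function names here are illustrative only.

```python
import re

# Candidate card numbers: 13 to 16 digits, optionally separated by spaces or dashes.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")

def luhn_valid(candidate: str) -> bool:
    """Apply the Luhn checksum to the digits in `candidate`."""
    digits = [int(ch) for ch in candidate if ch.isdigit()]
    total = 0
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0

def contains_card_number(text: str) -> bool:
    return any(luhn_valid(m.group()) for m in CARD_PATTERN.finditer(text))

def dlp_decision(text: str, destination_is_external: bool) -> str:
    """Hypothetical policy: block card data headed outside the organization."""
    if destination_is_external and contains_card_number(text):
        return "block"
    return "allow"

print(dlp_decision("Card: 4111 1111 1111 1111", destination_is_external=True))
print(dlp_decision("Order ref 1234 5678 9012 3456", destination_is_external=True))
```

The first call is blocked because 4111 1111 1111 1111 (a widely used test card number) passes the Luhn check. The second is allowed because its digits fail the checksum.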

Host-Based Firewalls

In your car, the firewall sits between the passenger compartment and the engine. It is a fireproof barrier that protects the passengers within the car from the dangerous environment under the hood. A computer firewall serves a similar purpose—it is a protective barrier that is designed to shield the computer (the system, user, and data) from the “dangerous” environment it is connected to. This dangerous and hostile environment is the network, which in turn is most likely connected to the Internet. A firewall can reside in different locations. A network firewall will normally sit between the Internet connection and the network, monitoring all traffic that is attempting to flow from one side to the other. A host-based firewall serves a similar purpose, but instead of protecting the entire network, and instead of sitting on the network, it resides on the host itself and only protects the host. Whereas a network firewall will generally be a hardware device running very specific software, a host-based firewall is a piece of software running on the host.

Firewalls examine each packet sent or received to determine whether or not to allow it to pass. The decision is based on the rules the administrator of the firewall has set. These rules, in turn, should be based on the security policy for the organization. For example, if certain websites are prohibited based on the organization’s Internet Usage Policy (or the desires of the individual who owns the system), sites such as those containing adult materials or online gambling can be blocked. Typical firewall rules specify any combination of the following: the source and/or destination IP address, the source and/or destination port (which in turn often identifies a specific service such as e-mail), a specific protocol (such as TCP or UDP), and the action the firewall is to take if a packet matches the criteria laid out in the rule.

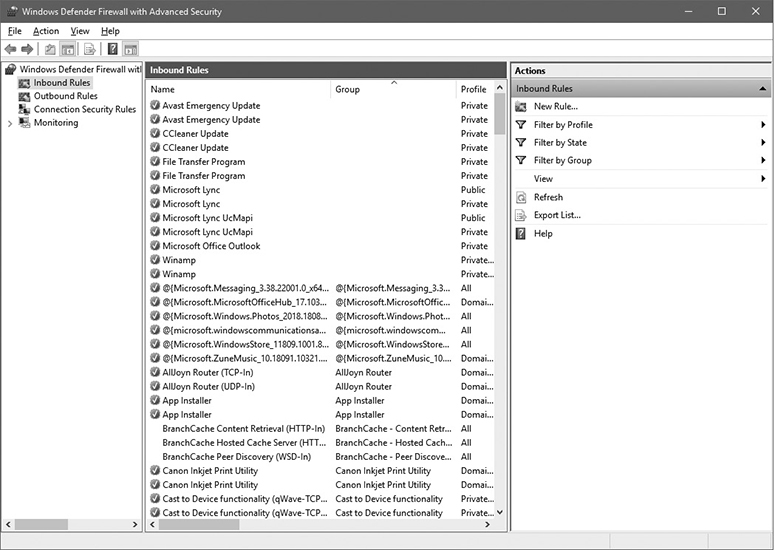

A screen capture of the simple firewall supplied by Microsoft for its Windows 10 operating system is shown in Figure 6-1. As can be seen, the program provides some simple options to choose from in order to establish the level of filtering desired. A finer level of detail can be obtained by going into the Advanced settings, but most users never look beyond this initial screen. In newer Windows operating systems, the firewall is based on the Windows Filtering Platform, which allows applications to tie into the operating system’s packet processing in order to filter packets that match specific criteria. The firewall can be controlled through a management console found in Control Panel under Windows Defender Firewall. The console lets the user select from a series of basic settings but also allows advanced control, giving the user the option to identify actions to take for specific services, protocols, ports, users, and addresses.

Figure 6-1 Windows Defender Firewall with Advanced Security (Windows 10)

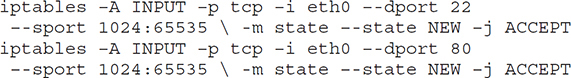

For Linux-based systems, a number of firewalls are available. The most commonly used is iptables, which replaced its predecessor, ipchains. Iptables provides the same basic functions found in commercial network-based firewalls, including the ability to accept or drop packets, to log packets for future examination, and to reject a packet while returning an error message to the host that sent it. As an example of the format and construction of firewall rules used by iptables, the rules that would allow WWW (port 80) and SSH (port 22) traffic would look like the following:

The specifics of these commands are as follows: -A tells iptables to append the rule to the end of the chain; -p identifies the protocol to match (in this case, TCP); -i identifies the input interface; --dport and --sport specify the destination and source ports, respectively; -m state --state NEW specifies that the packet should be the start of a new connection; and -j ACCEPT tells iptables to stop processing further rules in the chain and accept the packet, handing it over to the application.
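The rule-processing logic just described can be modeled in a few lines. The Python sketch below is a simplified model, not iptables itself. It assumes a chain whose default policy is DROP, an interface named eth0, and packets represented as plain dictionaries. As with -A, rules are appended in order, and the first matching rule decides the packet's fate.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Rule:
    """One rule, loosely mirroring iptables options; None acts as a wildcard."""
    target: str                         # like -j ACCEPT / DROP / REJECT
    protocol: Optional[str] = None      # like -p tcp
    in_interface: Optional[str] = None  # like -i eth0
    dport: Optional[int] = None         # like --dport 80
    state: Optional[str] = None         # like -m state --state NEW

    def matches(self, pkt: dict) -> bool:
        checks = [
            (self.protocol, pkt["protocol"]),
            (self.in_interface, pkt["interface"]),
            (self.dport, pkt["dport"]),
            (self.state, pkt["state"]),
        ]
        return all(want is None or want == have for want, have in checks)

class Chain:
    def __init__(self, policy: str = "DROP"):
        self.policy = policy  # applied when no rule matches
        self.rules = []

    def append(self, rule: Rule) -> None:  # like -A
        self.rules.append(rule)

    def evaluate(self, pkt: dict) -> str:
        # Rules are checked in order; the first match ends processing.
        for rule in self.rules:
            if rule.matches(pkt):
                return rule.target
        return self.policy

inbound = Chain(policy="DROP")
for port in (80, 22):  # WWW and SSH, as in the rules above
    inbound.append(Rule("ACCEPT", protocol="tcp", in_interface="eth0",
                        dport=port, state="NEW"))

web = {"protocol": "tcp", "interface": "eth0", "dport": 80, "state": "NEW"}
ssh = {"protocol": "tcp", "interface": "eth0", "dport": 22, "state": "NEW"}
telnet = {"protocol": "tcp", "interface": "eth0", "dport": 23, "state": "NEW"}
print(inbound.evaluate(web), inbound.evaluate(ssh), inbound.evaluate(telnet))
```

A real ruleset with a DROP policy would also need a rule accepting ESTABLISHED traffic. Real iptables also supports non-terminating targets such as LOG, which let processing continue to the next rule. Both are omitted here for brevity.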

An important point to note that is not always considered is that a firewall can filter packets that are either entering or leaving the host or network. This allows an individual or organization the opportunity to monitor and control what leaves the host or network, not just what enters it. This is related to, but is not entirely the same as, data exfiltration, which we will discuss later in this chapter.

Another consideration from the organization’s point of view is the ability to centrally manage the organization’s host firewalls if they are used. The issue here is whether you really want your users to have the ability to set their own rules on their hosts or whether it is better to have one policy that governs all user machines. If you let the users set their own rules, not only can they allow access to sites that might be prohibited based on the organization’s usage policy, but they might also inadvertently block important sites that could impact the functionality of the system.

Special-purpose host-based firewalls, such as host-based application firewalls, are also available for use. The purpose of an application firewall is to monitor traffic originating from or traveling to a specific application. Application firewalls can look beyond source/destination IP addresses, ports, and protocols. They understand something about the type of information generated by or sent to the specific application and can, in fact, decide whether to allow or deny information based on the contents of the connection.

Log Monitoring

Today’s security professionals should operate under the assumption that host devices have already been compromised. Regardless of how probable such a compromise might be at the moment, operating from the assumption of “implicit compromise” makes sense. A certain amount of nervousness is actually healthy for us as security practitioners because it sharpens our senses to perform at their highest level. A heightened sense of urgency is needed when we’re competing against a faceless enemy of indeterminate skill, size, motivation, and location.

The unavoidable fact is that we cannot prevent all attacks; therefore, we turn to detection, which is epitomized by the discovery and analysis of malicious activities through log monitoring. We have a ton of logs to help us discover potential abuses, yet having so many logs also complicates our ability to detect, analyze, and respond to security breaches in a timely fashion. The Windows Event Viewer alone may hold over 100,000 records.

To tame this beast, security professionals should implement a log-monitoring tool that can automate the collection and analysis of various log types. With all the logs under the same roof, malicious event detection becomes much easier; plus, it’ll help us verify the effectiveness of our security controls.
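As a small example of the automated analysis such a tool performs, the Python sketch below flags possible password-guessing attacks. It counts failed logons (event ID 4625 in the Windows Security log) per account within a sliding time window. It assumes the events have already been collected and parsed into dictionaries with hypothetical time, event_id, and account fields. The ten-minute window and the limit of five failures are arbitrary illustrative values.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

FAILED_LOGON = 4625  # Security log event ID: an account failed to log on

def brute_force_suspects(events, window=timedelta(minutes=10), limit=5):
    """Return accounts with more than `limit` failed logons within `window`."""
    recent = defaultdict(deque)  # account -> timestamps of recent failures
    suspects = set()
    for event in sorted(events, key=lambda e: e["time"]):
        if event["event_id"] != FAILED_LOGON:
            continue
        times = recent[event["account"]]
        times.append(event["time"])
        # Discard failures that have slid out of the time window.
        while event["time"] - times[0] > window:
            times.popleft()
        if len(times) > limit:
            suspects.add(event["account"])
    return suspects

# Hypothetical data: six rapid failures for one account, six hourly for another.
start = datetime(2024, 1, 1, 8, 0)
events = [{"time": start + timedelta(seconds=30 * i), "event_id": FAILED_LOGON,
           "account": "jsmith"} for i in range(6)]
events += [{"time": start + timedelta(hours=h), "event_id": FAILED_LOGON,
            "account": "alee"} for h in range(6)]
print(brute_force_suspects(events))
```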

Since Windows is the most popular desktop operating system, it’s important to understand both the Event Viewer and the Windows Audit Policies.

Windows Event Viewer

The Windows Event Viewer is a logging tool that records various operating system, security, and application events, assigning each a level such as “Information,” “Warning,” “Error,” or “Critical.” These events are categorized into separate logs, as shown here:

• Application Contains events generated by applications. Useful for troubleshooting application issues.

• Security Contains audited events for account logins, resource access, and so on. Useful for auditing and determining accountability of human activities.

• Setup Contains setup events such as Windows Update installations. Useful for troubleshooting setup failures.

• System Contains events for operating system and hardware activities. Useful for troubleshooting driver, service, operating system, and hardware issues. This is arguably the most important log in Event Viewer.

• Forwarded Events Contains events forwarded from other systems. Useful for aggregating events from servers onto your IT workstations for centralized log monitoring.

Windows Audit Policies

As with most operating systems, Windows has built-in auditing capabilities to help us determine accountability of outcomes—as in who committed the desirable or undesirable actions. Such outcomes may be in reference to successful or failed login attempts, file access, password changes, and so forth. However, many individuals think that auditing is just another word for logging. Is there a difference between the two? In certain contexts, no—but in light of information security, an important distinction does exist. Think of auditing as a specialized type of logging. Logging, in itself, is just an automated collection of records, whereas auditing is more fact-finding in nature.

Let’s take a look at three sequential log entries for a Sales employee named John Smith:

1. John Smith successfully logged into the Sales-1 workstation at 7:30 A.M.

2. John Smith successfully used the “Read” permission on the Sales shared folder located on the file server at 7:35 A.M.

3. John Smith failed to access the Human Resources shared folder located on FileServer1 at 7:45 A.M.

Logging simply records these three activities in a log file. Auditing, however, digs deeper. Auditing is a more analytical, security-focused, and human-focused form of logging in that it helps us piece together a trail of evidence to determine whether users are conducting authorized or unauthorized actions. In other words, auditing involves not only the generation but also the examination of logs to identify signs of security breaches. In the preceding example, John Smith failed to access the Human Resources share. This raises a few questions:

• Why would John Smith, a Sales user, attempt to access a Human Resources directory?

• Was this a deliberate malicious act or an accident?

• Is the individual logged in as John Smith actually John Smith or someone else?

Through additional generation and review of such records, we’ll be able to reasonably determine if this was an attempted security breach or a false alarm.
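Part of this fact-finding can be automated. The Python sketch below models the John Smith records as hypothetical dictionaries, together with hypothetical lookup tables for each user's department and each share's owning department. It then flags failed access attempts against another department's share. It only surfaces candidates for review. Deciding whether an event was malicious or accidental still requires a human investigator.

```python
# Hypothetical audit records modeled on the John Smith example.
records = [
    {"user": "jsmith", "action": "logon", "target": "Sales-1", "success": True},
    {"user": "jsmith", "action": "read", "target": "Sales", "success": True},
    {"user": "jsmith", "action": "read", "target": "Human Resources", "success": False},
]

# Hypothetical directory data: each user's department and each share's owner.
user_department = {"jsmith": "Sales"}
share_owner = {"Sales": "Sales", "Human Resources": "Human Resources"}

def questionable_access(records):
    """Return failed share accesses against a department other than the user's."""
    flagged = []
    for record in records:
        if record["action"] == "logon" or record["success"]:
            continue  # only failed resource access attempts matter here
        owner = share_owner.get(record["target"])
        if owner is not None and owner != user_department.get(record["user"]):
            flagged.append(record)
    return flagged

for record in questionable_access(records):
    print(f"Review: {record['user']} failed to {record['action']} {record['target']}")
```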

Let’s take a look at Windows Group Policy auditing from the perspective of Windows Server Domain Controllers. Using the Group Policy Management tool, navigate to Computer Configuration\Windows Settings\Security Settings\Local Policies\Audit Policy for configuration. After configuration, review the resulting entries in the Security log in Event Viewer. Here are some examples of auditing policies:

• Audit account logon events Audits all attempts to log on with a domain user account, regardless of which domain computer the logon attempt originated from. This policy is preferred over the “Audit logon events” policy below due to its broader scope.

• Audit account management Audits account activities such as the creation, modification, and deletion of user accounts, group accounts, and passwords.

• Audit directory service access Audits access to Active Directory objects such as OUs and Group Policies, in addition to users, groups, and computers. Think of this as a deeper version of “Audit account management.”

• Audit logon events Tracks all attempts to log on to the local computer (say, a Domain Controller), regardless of whether a domain account or a local account was used.

• Audit object access Audits access to non–Active Directory objects such as files, folders, registry keys, printers, and services. This is a big one for determining if users are trying to access files/folders from which they are prohibited.

• Audit policy change Audits attempts to change user rights assignment policies, audit policies, account policies, or trust policies (in the case of Domain Controllers).

• Audit privilege use Audits the exercise of user rights, such as adding workstations to a domain, changing the system time, backing up files and directories, and so on. Often considered a messy and “too much information” policy and therefore not generally recommended.

• Audit process tracking Audits the execution and termination of programs, also known as processes.

• Audit system events Audits events such as a user restarting or shutting down the computer, or when activities affect the system or security logs in Event Viewer.

Endpoint Detection and Response

Traditional antimalware, HIDS/HIPS, and DLP solutions are known for taking immediate eradication and recovery actions upon discovery of malicious code or activities. Although this is a good thing, such quick reactions may deprive us of a full understanding of the threat’s scope. In other words, we don’t want to win the battle at the expense of losing the war. We must gather greater threat intelligence, including the threat’s level of sophistication and whether the threat is capable of using infected endpoints to attack other endpoints.

Endpoint detection and response (EDR) solutions will attempt to answer these concerns by initially monitoring the threat—collecting event information from memory, processes, the registry, users, files, and networking—and then uploading this data to a local or centralized database. The EDR solution will then correlate the uploaded information with other information already present in the database in order to re-analyze and, potentially, mitigate the previously detected threat from a position of increased strength. Other endpoints should be examined by EDR solutions to ensure similar threats are understood and eradicated in a timely fashion.
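The correlation step can be pictured as a lookup across telemetry from many endpoints. The Python sketch below assumes a hypothetical central store that records which file hashes each endpoint has reported. Once a malicious hash is found on one machine, the store reveals which other endpoints have seen it. Real EDR platforms correlate far more data types (processes, registry, network activity), and the class and method names here are invented for illustration.

```python
from collections import defaultdict

class TelemetryStore:
    """Hypothetical central database of file hashes reported by endpoints."""

    def __init__(self):
        self.hosts_by_hash = defaultdict(set)

    def upload(self, host, file_hashes):
        """Record the file hashes an endpoint observed."""
        for file_hash in file_hashes:
            self.hosts_by_hash[file_hash].add(host)

    def scope_of(self, indicator, first_seen_on):
        """Return the other endpoints that have reported the same indicator."""
        return self.hosts_by_hash.get(indicator, set()) - {first_seen_on}

store = TelemetryStore()
store.upload("ws-01", ["hash-a", "hash-b"])  # placeholder hash values
store.upload("ws-02", ["hash-b"])
store.upload("ws-03", ["hash-c"])

# hash-b was detected on ws-01; which other machines need examining?
print(store.scope_of("hash-b", "ws-01"))
```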

Host Hardening

Implementing a series of endpoint security mechanisms as described in the previous section is one approach to securing a computer system. Another, more basic approach is to conduct host-hardening tasks designed to make it harder for attackers to successfully penetrate the system. Often this starts with the basic patching of software, but before attempting to harden the host, the first step should be to identify the purpose of the system—what function does this system provide? Whether the system is a PC for an employee or a network server of some sort, before you can adequately harden the system, you need to know what its intended purpose is. There is a constant struggle between usability and security. In order to determine what steps to take, you have to know what the system will be used for—and possibly of equal importance, what it is not intended to be used for.

Defining the standard operating environment for your organization’s systems is your first step in host hardening. This allows you to determine what services and applications are unnecessary and can thus be removed. In addition to removing unnecessary services and applications, you should identify unnecessary accounts and change the names and passwords for default accounts. Shared accounts should be discouraged, and two-factor authentication should be used where possible. An important point to remember is to always use encrypted authentication mechanisms. Access to resources should also be carefully considered in order to protect confidentiality and integrity. Deciding who needs what permissions is an important part of system hardening. This extends to limiting privileges, including restricting who has root or administrator privileges and, more simply, who has write permissions to various files and directories.

Standard Operating Environment/Configuration Baselining

It is generally true that the more secure a system is, the less usable it becomes. This is true if for no other reason than that hardening your system should include removing applications that are not needed for the system’s intended purpose, which by definition makes the system less usable (because you will have removed an application).

If you’ve done a good job in determining the purpose for the system, you should be able to identify what applications and which users need access to the system. Your first hardening step will then be to remove all users and services (programs/applications) not needed for this system. An important aspect of this is identifying the standard environment for employees’ systems. If an organization does not have an identified standard operating environment (SOE), administrators will be hard pressed to maintain the security of systems because there will be so many different existing configurations. If a problem occurs requiring massive reimaging of systems (as sometimes occurs in larger security incidents), organizations without an identified SOE will spend an inordinate amount of time trying to restore the organization’s systems and will most likely not totally succeed. This highlights another advantage of having an SOE—it greatly facilitates restoration or reimaging procedures.

A standard operating environment will generally be implemented as a disk image consisting of the operating system (including appropriate service packs and patches) and required applications (also including appropriate patches). The operating system and applications should include their desired configuration. For Windows-based operating systems, the Microsoft Deployment Toolkit (MDT) can be used to create deployment packages that can be used for this purpose.
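Once an SOE is defined, checking a host against it is essentially a set comparison. The Python sketch below assumes you already have inventories of installed software (or enabled services) as sets of names. The baseline contents shown are hypothetical.

```python
def compare_to_baseline(baseline, installed):
    """Report how a host's software inventory drifts from the SOE baseline."""
    return {
        "unexpected": installed - baseline,  # candidates for removal
        "missing": baseline - installed,     # required items not present
    }

# Hypothetical SOE baseline and one host's inventory.
soe_baseline = {"OfficeSuite", "EDRAgent", "VPNClient"}
host_inventory = {"OfficeSuite", "EDRAgent", "TelnetServer"}

drift = compare_to_baseline(soe_baseline, host_inventory)
print("Remove:", sorted(drift["unexpected"]))
print("Install:", sorted(drift["missing"]))
```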

Application Whitelisting and Blacklisting

An important part of host hardening is ensuring that only authorized applications are allowed to be installed and run. There are two basic approaches to achieving this goal:

• Application whitelisting This is a list of applications that should be permitted for installation and execution. Any applications not on the list are implicitly denied. Firewalls typically adopt this approach by implicitly denying all traffic while creating exceptions for the traffic you wish to allow. The downside to this method is that if you forget to put certain desired applications on the list, they will be prohibited.

• Application blacklisting This is a list of applications that should be denied installation and execution. Any applications not on the list are implicitly allowed. This method is frequently used by antimalware tools via definition databases. The advantage of blacklisting is that it’s less likely to block desirable software than whitelisting.
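The difference between the two approaches comes down to the default decision for anything not on the list. The Python sketch below identifies applications by the SHA-256 hash of their contents. AppLocker supports file hash rules alongside publisher and path rules, but this code is only a model, not AppLocker. The function names and sample byte strings are hypothetical.

```python
import hashlib

def file_hash(contents: bytes) -> str:
    return hashlib.sha256(contents).hexdigest()

def may_run(contents: bytes, mode: str, listed_hashes: set) -> bool:
    """Decide execution under a whitelist (default deny) or blacklist (default allow)."""
    digest = file_hash(contents)
    if mode == "whitelist":
        return digest in listed_hashes      # anything unlisted is implicitly denied
    if mode == "blacklist":
        return digest not in listed_hashes  # anything unlisted is implicitly allowed
    raise ValueError(f"unknown mode: {mode}")

approved, unknown, known_bad = b"approved tool", b"new utility", b"known malware"
print(may_run(unknown, "whitelist", {file_hash(approved)}))
print(may_run(unknown, "blacklist", {file_hash(known_bad)}))
```

The same unknown program is denied under whitelisting and allowed under blacklisting, which is exactly the trade-off between blocking desirable software and letting new threats through.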

Prior to Windows 7, we implemented Software Restriction Policies via Group Policy to identify software for whitelisting or blacklisting purposes. This feature was superseded by AppLocker, a Group Policy tool introduced with Windows 7 Enterprise/Ultimate. AppLocker provides additional whitelisting and blacklisting capabilities for the following software scenarios:

• Software that can be executed

• Software that can be installed

• Scripts that can run

• Microsoft Store apps that can be executed

Security/Group Policy Implementation

Group Policy is a feature of Windows-based operating systems dating back to Windows 2000. It is a set of rules that provides for centralized management and configuration of the operating system, user configurations, and applications in an Active Directory environment. The result of a Group Policy is to control what users are allowed to do on the system. From a security standpoint, Group Policy can be used to restrict activities that could pose possible security risks, limit access to certain folders, and disable the ability for users to download executable files, thus protecting the system from one avenue through which malware can attack. The Windows 10 operating system has several thousand Group Policy settings, including settings for User Experience Virtualization, Windows Update for Business, and Microsoft’s Edge browser.

Depending on the Windows version, the security settings include several important areas, such as Account Policies, Local Policies, Windows Defender Firewall with Advanced Security, Public Key Policies, Application Control Policies, and Advanced Audit Policy Configuration.

Command Shell Restrictions

Restricting the ability of users to perform certain functions can help ensure that they don’t deliberately or inadvertently cause a breach in system security. This is especially true for operating systems that are more complex and provide greater opportunities for users to make a mistake. One very simple example of restrictions placed on users is the set of file permissions in Unix-based operating systems. Users can be restricted so that they can only perform certain operations on files, thus preventing them from modifying or deleting files that they should not be tampering with. A more robust mechanism used to restrict the activities of users is to place them in a restricted command shell.

A command shell is nothing more than an interface between the user and the operating system providing access to the resources of the kernel. A command-line shell provides a command-line interface to the operating system, requiring users to type the commands they want to execute. A graphical shell will provide a graphical user interface for users to interact with the system. Common Unix command-line shells include the Bourne shell (sh), Bourne-Again shell (bash), C shell (csh), and Korn shell (ksh).

A restricted command shell will have a more limited functionality than a regular command-line shell. For example, the restricted shell might prevent users from running commands with absolute pathnames, keep them from changing environment variables, and not allow them to redirect output. If the bash shell is started with the name rbash or if it is supplied with the --restricted or -r option when run, the shell will become restricted—specifically restricting the user’s ability to set or reset certain path and environment variables, to redirect output using “>” and similar operators, to specify command names containing slashes, and to supply filenames with slashes to various commands.
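To illustrate what those restrictions catch, the Python sketch below screens a command line against a simplified subset of the rules bash enforces in restricted mode: no command names containing slashes, no cd, no output redirection, and no setting or unsetting of PATH, SHELL, ENV, or BASH_ENV. It is a teaching model, not how bash implements restricted mode, and it should not be relied on as a security control.

```python
import re
import shlex
from typing import Optional

# A simplified subset of what bash refuses in restricted mode (rbash / bash -r).
PROTECTED_VARS = {"SHELL", "PATH", "ENV", "BASH_ENV"}
OUTPUT_REDIRECTIONS = {">", ">>", ">|", "<>", ">&", "&>"}
ASSIGNMENT = re.compile(r"^([A-Za-z_][A-Za-z0-9_]*)=")

def restricted_violation(command_line: str) -> Optional[str]:
    """Return why the command would be refused, or None if it looks allowed."""
    # punctuation_chars=True splits operators such as '>' into separate tokens
    # while keeping quoted text (e.g. "a > b") together as one word.
    tokens = list(shlex.shlex(command_line, posix=True, punctuation_chars=True))
    if not tokens:
        return None
    if "/" in tokens[0]:
        return "command name contains a slash"
    if tokens[0] == "cd":
        return "changing directories"
    for i, token in enumerate(tokens):
        if token in OUTPUT_REDIRECTIONS:
            return "output redirection"
        match = ASSIGNMENT.match(token)
        if match and match.group(1) in PROTECTED_VARS:
            return f"setting {match.group(1)}"
        if token == "unset" and any(t in PROTECTED_VARS for t in tokens[i + 1:]):
            return "unsetting a protected variable"
    return None

for cmd in ["ls -l", "/bin/ls", "ls > out.txt", "export PATH=/tmp"]:
    print(f"{cmd!r}: {restricted_violation(cmd)}")
```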

Patch Management

Many of the fundamentals of patch management were discussed earlier in this chapter. What we haven’t quite looked at yet are the methods of patch deployment. This section covers manual and automated methods of deploying patches to an organization’s infrastructure.

Manual

Sacrificing speed for control, organizations sometimes manually deploy patches to their host devices. Manual patching benefits us in a few different ways:

• It places greater emphasis on patch testing in quality assurance labs or virtual machines.

• Patches can be staggered to individual groups or departments as opposed to wide-scale rollouts. This limits how many systems a faulty patch can affect.

• Rollbacks from failed patch deployments are easier as a result of staggered rollouts. This speeds up the recovery from issues.

The downside to manual deployment methods is the increased administrative effort involved in manual approval processes.

Automated

Manual patching is fine for smaller environments, but it doesn’t bode well for large ones. Imagine manually approving patches for thousands or tens of thousands of devices. Automated patching provides a centralized solution in which local or cloud-based servers automatically deploy patches to devices. As you would expect, automated patching is considerably faster than the manual approach.

Frequently used automated patching solutions include Microsoft System Center Configuration Manager and Windows Server Update Services (WSUS). WSUS is popular, effective, and free, since it is an included role in most Windows Server operating systems. Organizations set up a local WSUS server—or a parent-child series of WSUS servers at headquarters and various branch offices—and then configure the Windows devices to connect to the WSUS server via Group Policy configurations. For non-domain-joined devices, consider using Microsoft Intune for a cloud-based solution that offers over-the-Internet patching and a variety of other exciting Mobile Device Management features.

The downside to automated patching is that it increases both the number of systems that receive a patch and the speed at which they receive it. This leaves little time to stop a faulty patch before it spreads. In other words, we may not realize that a patch is causing problems until every system has received it, which leads to a nasty rollback process afterward.

Scripting and Replication Scripting is becoming increasingly common for automating administrative tasks. It combines the speed benefits of automation with some of the control benefits of manual patching. Scripting also lets us automate tasks in ways that a centralized patching solution could not achieve on its own, because we design the administrative code ourselves rather than relying solely on a third-party tool’s feature set.
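As an example of combining automation with control, the Python sketch below deploys a patch in staggered rings (for example, IT first, then individual departments). It halts before the next ring if too many installs fail. The install callback is a hypothetical stand-in for whatever mechanism actually pushes the patch, and the 10 percent failure threshold is an arbitrary assumption.

```python
from typing import Callable

def staged_rollout(rings: list, install: Callable[[str], bool],
                   max_failure_rate: float = 0.1) -> dict:
    """Patch hosts ring by ring; stop before the next ring if too many fail."""
    patched, failed = [], []
    for number, ring in enumerate(rings, start=1):
        ring_failures = []
        for host in ring:
            if install(host):
                patched.append(host)
            else:
                ring_failures.append(host)
        failed.extend(ring_failures)
        if ring and len(ring_failures) / len(ring) > max_failure_rate:
            return {"halted_at_ring": number, "patched": patched, "failed": failed}
    return {"halted_at_ring": None, "patched": patched, "failed": failed}

# Hypothetical rings and a fake installer that fails on the Sales machines.
rings = [["it-01", "it-02"], ["sales-01", "sales-02", "sales-03"], ["hr-01", "hr-02"]]
result = staged_rollout(rings, install=lambda host: not host.startswith("sales"))
print(result)
```

Because the Sales ring fails, the HR machines are never touched. This is the containment benefit that a single wide-scale push lacks.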

Regardless of the nature of automated patching or scripting, if you use multiple patching servers to source your patches, be sure the servers converge their patches through an effective replication topology. Patching is too urgent a security control to delay through lack of server synchronization.

Configuring Dedicated Interfaces

Certain hosts, such as a server or a technician’s computer, are likely to have multiple network interface cards. One interface is likely provisioned for everyday LAN communications like Internet, e-mail, instant messaging, and the like. Meanwhile, the other interface is used to isolate the critical, behind-the-scenes management and monitoring traffic from the rest of the network. This second interface is referred to as a dedicated interface since it is dedicated to several key administrative functions. The details of these functions will be outlined throughout the next few topics, including out-of-band management, ACLs, and management and data interfaces.

Out-of-Band Management

An out-of-band interface is an example of a dedicated interface whose network traffic is isolated from both the LAN and Internet channels. This is because the out-of-band NIC is designed to carry critical (and sometimes sensitive) administrative traffic that needs a dedicated link for maximum performance, reliability, and security. Shown here are some features of out-of-band management:

• Reboot hosts.

• Turn on hosts via Wake on LAN.

• Install an OS or reimage a host.

• Mount optical media.

• Access a host’s firmware.

• Monitor the host and network status.

There’s not much point to out-of-band management if it is bottlenecked by other areas of the network; therefore, be sure to provide it with adequate performance and reliability via quality-of-service policies. Ensure that its traffic is sufficiently isolated from the regular network through subnetting or virtual local area network (VLAN) configurations. Also, when purchasing host devices, consider those with motherboards and NICs that have native support for out-of-band management to enhance your administrative flexibility.

ACLs

The exact context of access control lists (ACLs) can vary, whether discussing things like file permissions or rules on a router, switch, or firewall. From a file system context, an ACL is a list of privileges that a subject (user) has to an object (resource). From a networking perspective, ACLs are a list of rules regarding traffic flows through an interface based on certain IP addresses and port numbers.

For dedicated interfaces, ACLs will need to be carefully configured to ensure that only approved traffic flows to and from the interface, to the exclusion of all other traffic. This will help secure the source and destination nodes in the network communications.

Management Interface

Management interfaces are designed to remotely manage devices through a dedicated physical port on a router, switch, or firewall, or a port logically defined via a switch’s VLAN. In contrast to out-of-band management, management interfaces connect to an internal in-band network to facilitate monitoring and management of devices via a normal communications channel. Typically, these management interfaces are controlled through a command-line interface (CLI); therefore, you’ll likely use a terminal emulation protocol such as Telnet (insecure) or SSH (secure). It is common practice to use SSH for all management interface communications given its reliance on cryptographic security, including public and private keys, session keys, certificates, and hashing.

Data Interface

Unlike the out-of-band and in-band management topics just discussed, data interfaces carry everyday network traffic. A traditional switch forwards this traffic based on Ethernet frame headers, whereas a router forwards it based on IP packet headers. However, let’s not mistake everyday hosts and network traffic for being unimportant. A bevy of attack vectors exist on switches, and we’re going to focus our security considerations on switch ports since those are what host devices typically connect to. Here are some examples of security techniques that can be implemented on switch ports:

• Port security Permits traffic to switch ports from predefined MAC addresses only. This guards against unauthorized devices but is easily circumvented by MAC spoofing.

• DHCP snooping Restricts DHCP traffic to trusted switch ports only. This guards against rogue DHCP servers.

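• Dynamic ARP inspection Drops ARP packets received on untrusted switch ports when their IP-to-MAC mapping does not match the switch’s trusted bindings (typically those learned through DHCP snooping). Guards against ARP spoofing.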

• IP source guard Drops IP packets if the packet’s source IP doesn’t mesh with the switch’s IP-to-MAC bindings. Guards against IP spoofing.
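Dynamic ARP inspection and IP source guard both compare traffic against a table of trusted IP-to-MAC bindings, which on many switches is built by DHCP snooping. The Python sketch below models that check. The port names, addresses, and trusted-port set are hypothetical, and real switches implement these features in their own firmware and configuration syntax.

```python
# Hypothetical DHCP snooping binding table: switch port -> (MAC, leased IP).
bindings = {
    "Gi1/0/5": ("00:1a:2b:3c:4d:5e", "10.0.10.25"),
    "Gi1/0/6": ("00:1a:2b:3c:4d:5f", "10.0.10.26"),
}
TRUSTED_PORTS = {"Gi1/0/1"}  # e.g., the uplink toward the legitimate DHCP server

def inspect_arp(port, sender_mac, sender_ip):
    """Dynamic ARP inspection: forward only ARP that matches the port's binding."""
    if port in TRUSTED_PORTS:
        return "forward"
    return "forward" if bindings.get(port) == (sender_mac, sender_ip) else "drop"

def ip_source_guard(port, source_ip):
    """IP source guard: drop IP packets whose source IP is not bound to the port."""
    if port in TRUSTED_PORTS:
        return "forward"
    binding = bindings.get(port)
    return "forward" if binding and binding[1] == source_ip else "drop"

# A host on Gi1/0/5 claiming to be the gateway (10.0.10.1) is an ARP spoof.
print(inspect_arp("Gi1/0/5", "00:1a:2b:3c:4d:5e", "10.0.10.1"))
# A host on Gi1/0/6 sending with its neighbor's IP is an IP spoof.
print(ip_source_guard("Gi1/0/6", "10.0.10.25"))
```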

External I/O Restrictions

To the untrained eye, it appears that organizations are unnecessarily paranoid about workers bringing external devices or peripherals to work. After all, how much harm can a tiny little flash drive, smartphone, or Bluetooth headset possibly cause? Answer: a lot. Although these devices can potentially carry many threats, they all originate from two primary directions:

• Ingress Bad stuff coming in (malware, password crackers, sniffers, keyloggers)

• Egress Good stuff going out (company, medical, and personal data)

Security professionals need to be fully aware of the different external devices that people may bring in, plus the risk factors and threats presented by each. In addition, we may need to look into outright preventing such devices from entering the workplace, and denying the devices that slip through from initializing upon attachment to a host device. This section takes a look at a variety of external devices, including USB, wireless, and audio and video components, plus the mitigations for the threats they introduce.

USB

Since 1996, the Universal Serial Bus (USB) data transfer and power capabilities have permitted the connectivity of virtually every device imaginable to a computer. The ubiquity of USB gives it the rare distinction of being not only the most popular standard for external device connections but also the source of the most device-based threats. Plugging in external devices containing storage such as flash drives, external hard drives, flash cards, smartphones, and tablets makes it easy for both innocent and not-so-innocent users to install malicious code onto a host. This includes malware, keyloggers, password crackers, and packet sniffers. Other USB attacks may seek to steal sensitive materials from the organization. Since most of these devices are small, they can easily be concealed in a pocket, backpack, purse, or box—and thus escape the notice of security staff or surveillance cameras.

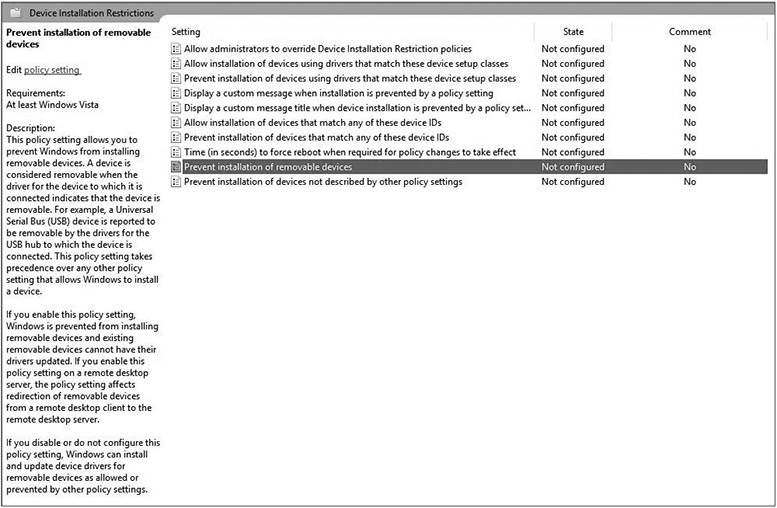

To combat the USB threats, organizations often use technological means to block or strictly limit the use of USB devices. Figure 6-2 shows an example of restricting removable devices via Windows Group Policy.

Figure 6-2 Group Policy restricting removable devices
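Group Policy is one way to enforce the restriction shown in Figure 6-2. The following Python sketch expresses the same kind of decision in platform-neutral form: deny storage-capable and wireless USB device classes unless the specific device is on an approved list. The class codes are standard USB-IF base class values, but the allowlist IDs and the `allow_device` function are hypothetical; a real deployment would enforce this through the operating system or an endpoint agent.

```python
from dataclasses import dataclass

# USB base class codes as assigned by the USB Implementers Forum (subset).
USB_CLASS_AUDIO = 0x01
USB_CLASS_HID = 0x03           # keyboards, mice
USB_CLASS_STILL_IMAGE = 0x06   # cameras and other PTP devices
USB_CLASS_MASS_STORAGE = 0x08  # flash drives, external hard drives
USB_CLASS_VIDEO = 0x0E
USB_CLASS_WIRELESS = 0xE0      # e.g., Bluetooth adapters

# Classes denied unless the specific device is approved.
BLOCKED_CLASSES = {USB_CLASS_STILL_IMAGE, USB_CLASS_MASS_STORAGE, USB_CLASS_WIRELESS}

# Hypothetical allowlist of company-issued devices as (vendor ID, product ID).
APPROVED_DEVICES = {(0x1234, 0x5678)}


@dataclass(frozen=True)
class UsbDevice:
    vendor_id: int
    product_id: int
    interface_class: int


def allow_device(dev: UsbDevice) -> bool:
    """Allow approved devices; otherwise deny any blocked device class."""
    if (dev.vendor_id, dev.product_id) in APPROVED_DEVICES:
        return True
    return dev.interface_class not in BLOCKED_CLASSES


print(allow_device(UsbDevice(0xABCD, 0x0001, USB_CLASS_MASS_STORAGE)))  # False
print(allow_device(UsbDevice(0x1234, 0x5678, USB_CLASS_MASS_STORAGE)))  # True
print(allow_device(UsbDevice(0xABCD, 0x0002, USB_CLASS_HID)))           # True
```

Note that the device class is self-reported, so a malicious device that presents itself as a keyboard (HID) would pass this check; class-based blocking works best alongside other endpoint controls.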

Wireless

Like USB, wireless technologies bring convenience, practicality, and numerous attack vectors to an organization. Unlike their cabled brethren, wireless devices are susceptible to various over-the-air communication attacks, which may result in malware infection, device hijacking, denial-of-service (DoS) attacks, data leakage, and unauthorized network access.

The nature of the threats can vary based on whether the device uses radio frequencies, such as Bluetooth, near field communication (NFC), radio-frequency identification (RFID), and 802.11 Wi-Fi, or the infrared signals used by the Infrared Data Association (IrDA) protocols. This section covers the threats introduced by these wireless technologies and their mitigations.

Bluetooth Bluetooth is a wireless technology standard designed for exchanging information between devices such as mice, keyboards, headsets, smartphones, smart watches, and gaming controllers—at relatively short distances and slow speeds. With various Bluetooth versions out there, devices may range widely in terms of signal range and bandwidth speeds. You may see ranges between 10 and 1,000 feet with speeds between 768 Kbps and 24 Mbps. Keep in mind that 1,000-foot Bluetooth distances are rare and typically achieved by Bluetooth hackers using amplifiers to perform their exploits from well out of sight.

Like any wireless technology, Bluetooth is subject to various attack vectors:

• Bluesmacking DoS attack against Bluetooth devices

• Bluejacking Delivery of unsolicited messages over Bluetooth to devices in order to install contact information on the victim’s device

• Bluesnarfing Theft of information from a Bluetooth device

• Bluesniffing Seeking out Bluetooth devices

• Bluebugging Remote controlling other Bluetooth devices

• Blueprinting Collecting device information such as vendor, model, and firmware info

Despite the litany of attack vectors, several countermeasures exist to reduce the threats against Bluetooth devices. One of the best things you can do is keep devices in a non-discoverable mode to minimize their visibility. Another idea is to change the default PIN code used when pairing Bluetooth devices. Also, disregard any pairing requests from unauthorized devices. If one is available, you should also consider installing a Bluetooth firewall application on your device. Enabling Bluetooth encryption between your device and the computer will help prevent eavesdropping. If possible, ensure the device has antimalware software to guard against various Bluetooth hacking tools.
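The countermeasures above lend themselves to a simple configuration audit. The Python sketch below assumes a hypothetical `BluetoothConfig` snapshot (how those settings are collected varies by platform and is not shown) and reports any setting that contradicts the guidance: discoverable mode, a default PIN, disabled encryption, or pairings with unauthorized devices.

```python
from dataclasses import dataclass, field

# Commonly shipped default pairing PINs (illustrative, not exhaustive).
DEFAULT_PINS = {"0000", "1111", "1234"}


@dataclass
class BluetoothConfig:
    """Hypothetical snapshot of a host's Bluetooth settings."""
    discoverable: bool
    pin: str
    encryption_enabled: bool
    paired_devices: set = field(default_factory=set)


def audit(cfg: BluetoothConfig, authorized: set) -> list:
    """Return one finding per setting that contradicts the recommended countermeasures."""
    findings = []
    if cfg.discoverable:
        findings.append("device is discoverable")
    if cfg.pin in DEFAULT_PINS:
        findings.append("default pairing PIN in use")
    if not cfg.encryption_enabled:
        findings.append("link encryption disabled")
    for addr in sorted(cfg.paired_devices - authorized):
        findings.append(f"unauthorized pairing: {addr}")
    return findings


risky = BluetoothConfig(True, "0000", False, {"AA:BB:CC:DD:EE:FF"})
for finding in audit(risky, authorized=set()):
    print(finding)
```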

NFC Near field communication (NFC) is a group of communication protocols that permit devices such as smartphones to communicate when they are within 1.6 inches of each other. If you’re ever at a Starbucks drive-thru, you’ll frequently see customers paying for products by holding their smartphone up to the NFC payment reader. NFC payments are catching on due to their convenience, their versatility, and some security enhancements over traditional credit card payments. Security benefits include an extremely small signal range (which makes compromise more difficult), PIN/password protection, remote wiping of lost smartphones to guard against credit card number theft, contactless or “bump” payment, credit card numbers being kept invisible to outsiders, and no need for a credit card magnetic stripe. Plus, the owner of the NFC card reader does not have access to the customer’s credit card information.

Although NFC is generally considered to be more secure than typical credit card payments, there are some downsides, including the following:

• Cost prohibitive, particularly for small businesses, which reduces their competitive edge

• Lack of support from many businesses

• Hidden security vulnerabilities subjecting NFC to radio frequency interception and DoS attacks

There are various mitigations for NFC, including the following:

• Encrypting the channel between the NFC device and the payment machine

• Implementing data validation controls to guard against integrity-based attacks

• End-user awareness training for NFC risks and best practices

• Disabling NFC entirely, or at least whenever it is not in use

• Only tapping tags that are physically secured, such as being located behind glass

• Use of NFC-supported software with password protection
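As an illustration of the data validation mitigation, the Python sketch below decodes a single NFC Data Exchange Format (NDEF) URI record read from a tag and accepts it only if it points to an approved HTTPS host, so a tampered tag that redirects users to a look-alike site is rejected. The header flags and URI prefix codes follow the NFC Forum record format, but only the simplest short-record case is handled, and the allowed host `example.com` is a placeholder.

```python
from urllib.parse import urlsplit

# URI identifier codes from the NFC Forum URI record type definition (subset).
URI_PREFIXES = {
    0x00: "",
    0x01: "http://www.",
    0x02: "https://www.",
    0x03: "http://",
    0x04: "https://",
}


def parse_short_uri_record(record: bytes) -> str:
    """Decode one short-format NDEF URI record; reject anything else."""
    header = record[0]
    if (header & 0x10) == 0:       # SR (short record) flag must be set
        raise ValueError("only short records are handled in this sketch")
    if header & 0x08:              # IL flag would add an ID field
        raise ValueError("records with an ID field are not handled")
    if (header & 0x07) != 0x01:    # TNF must be the NFC Forum well-known type
        raise ValueError("not a well-known record")
    type_len, payload_len = record[1], record[2]
    if record[3:3 + type_len] != b"U":
        raise ValueError("not a URI record")
    payload = record[3 + type_len:3 + type_len + payload_len]
    if payload_len == 0 or len(payload) != payload_len:
        raise ValueError("empty or truncated payload")
    prefix = URI_PREFIXES.get(payload[0])
    if prefix is None:
        raise ValueError("unsupported URI identifier code")
    return prefix + payload[1:].decode("utf-8")


def is_trusted_tag(record: bytes, allowed_hosts: set) -> bool:
    """Accept only well-formed HTTPS URIs that point to an approved host."""
    try:
        parts = urlsplit(parse_short_uri_record(record))
        return parts.scheme == "https" and parts.hostname in allowed_hosts
    except (ValueError, IndexError):  # UnicodeDecodeError is a ValueError
        return False


good = bytes([0xD1, 0x01, 0x0C, 0x55, 0x04]) + b"example.com"
bad = bytes([0xD1, 0x01, 0x0C, 0x55, 0x03]) + b"example.com"
print(is_trusted_tag(good, {"example.com"}))  # True  (https://example.com)
print(is_trusted_tag(bad, {"example.com"}))   # False (plain http://)
```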

IrDA The Infrared Data Association (IrDA) created a set of protocols permitting communications between devices using infrared wireless signals. Unlike most wireless communications, which use radio waves, infrared is a near-visible form of light. IrDA is generally considered accurate, relatively secure (primarily due to its line-of-sight requirement), and resilient to interference, and it can serve as a limited alternative to Bluetooth/Wi-Fi in environments where radio frequency devices or radio interference pose challenges.

Although sometimes used as a communications method between laptops and printers, IrDA doesn’t see much use due to its limited speed (16 Mbps), range (2 meters), and line-of-sight requirements. IrDA doesn’t implement authentication, authorization, or cryptographic support. Plus, it is possible (although not easy) to eavesdrop on IrDA communication. The best mitigation is to be mindful of device position in relation to other untrusted users or devices to prevent eavesdropping, or switch to another wireless technology such as Bluetooth or Wi-Fi if possible.

802.11 Dating back to 1997, the 802.11 specification has been managed by the Institute of Electrical and Electronics Engineers (IEEE), which helps globally standardize wireless local area network communications. The frequencies used in the various 802.11 standards are commonly 2.4 GHz and 5 GHz; meanwhile, a newer 60 GHz frequency band has emerged. Although 802.11 forms the foundation for Wi-Fi technologies, they are not interchangeable terms. Given the large scope of 802.11 topics and standards, we flesh these out individually in the topics that follow.

Wireless Access Point (WAP) Wireless access points (WAPs) are devices that connect a wireless network to a wired network—which creates a type of wireless network called “infrastructure mode.” If you build a wireless network without a WAP, this is known as an ad-hoc network. In most cases, wireless access points are incorporated into wireless broadband routers.

Hotspots Hotspots are wireless networks that are available for public use. These are frequently found at bookstores, coffee shops, hotels, and airports. They are notorious for having little to no wireless security. It is recommended that you establish a VPN connection to secure yourself on hotspots.

SSID The service set identifier (SSID) is an identifier of up to 32 characters (technically, 32 octets) used to name a wireless network. This name is broadcast in a special type of frame called a “beacon frame” that announces the presence of the wireless network. The default SSID should be renamed, and SSID broadcasting should be disabled, to decrease the visibility of the wireless network.
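To see where the SSID lives on the air, the Python sketch below walks the information elements of a beacon frame body (the part after the 24-byte MAC header) and extracts element ID 0, the SSID. It also shows why a “hidden” network is only partly hidden: disabling broadcast typically blanks the SSID in beacons, but clients still send the real name in their probe requests. The function names are illustrative, and the example frame is synthetic.

```python
FIXED_FIELDS_LEN = 12   # timestamp (8) + beacon interval (2) + capability info (2)
SSID_ELEMENT_ID = 0
MAX_SSID_LEN = 32


def extract_ssid(beacon_body: bytes):
    """Return the raw SSID from a beacon frame body (MAC header already removed)."""
    pos = FIXED_FIELDS_LEN
    while pos + 2 <= len(beacon_body):
        elem_id, length = beacon_body[pos], beacon_body[pos + 1]
        value = beacon_body[pos + 2:pos + 2 + length]
        if len(value) != length:
            raise ValueError("truncated information element")
        if elem_id == SSID_ELEMENT_ID:
            if length > MAX_SSID_LEN:
                raise ValueError("SSID exceeds 32 octets")
            return value
        pos += 2 + length  # skip this element's ID, length, and value
    return None  # no SSID element present


def is_hidden(ssid: bytes) -> bool:
    """Hidden networks usually send an empty SSID or one made of null bytes."""
    return all(b == 0 for b in ssid)


# Example body: zeroed fixed fields, SSID "CorpNet", then a Supported Rates element.
body = bytes(12) + bytes([0, 7]) + b"CorpNet" + bytes([1, 1, 0x82])
print(extract_ssid(body))        # b'CorpNet'
print(is_hidden(bytes([0, 0])))  # True
```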

802.11a The first revision to the 802.11 standard, 802.11a was released in 1999. It uses the orthogonal frequency-division multiplexing (OFDM) modulation method in the 5 GHz band with a maximum speed of 54 Mbps. This standard saw limited adoption, largely due to its limited indoor range.

802.11b Also released in 1999, 802.11b uses the direct sequence spread spectrum (DSSS) method in the 2.4 GHz band at a top speed of 11 Mbps. Despite its slower speeds, it has excellent indoor range. This standard became the baseline for technologies that would eventually be called “Wi-Fi certified.”

802.11g Released in 2003, 802.11g uses the 2.4 GHz band of 802.11b, but has the 54 Mbps speed of 802.11a. Like 802.11a, it uses the OFDM modulation technique. Given its excellent indoor range, this was a huge hit for many years and is still in use today.

802.11i Released in 2004, 802.11i is a security standard calling for wireless networks to incorporate the security mechanisms now known as Wi-Fi Protected Access II (WPA2). More to follow on WPA2 later in this section.

802.11n Although sold on the market since the mid-2000s as a draft standard, 802.11n was formally released in 2009 and supports OFDM in both the 2.4 GHz and 5 GHz bands. Supporting both bands is useful because if the 2.4 GHz band has too much interference, we can switch to the less-crowded 5 GHz band. This standard’s speed can scale up to 600 Mbps if all four multiple-input multiple-output (MIMO) spatial streams are in use. Plus, it has nearly double the indoor range of the previous standards.
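The headline 802.11 speeds follow from a simple OFDM calculation: data subcarriers × bits per subcarrier × coding rate × spatial streams, divided by the symbol duration. The Python sketch below reproduces two figures from this section, 54 Mbps for 802.11a/g and 600 Mbps for 802.11n. The 802.11n maximum assumes a 40 MHz channel, the short guard interval, and four spatial streams (the most 802.11n defines). These are theoretical PHY rates; real-world throughput is considerably lower.

```python
from fractions import Fraction


def ofdm_rate_mbps(data_subcarriers, bits_per_subcarrier, coding_rate,
                   spatial_streams, symbol_time_us):
    """PHY data rate = data bits carried per OFDM symbol / symbol duration."""
    bits_per_symbol = (data_subcarriers * bits_per_subcarrier
                       * coding_rate * spatial_streams)
    return bits_per_symbol / symbol_time_us  # bits per microsecond == Mbps


# 802.11a/g: 20 MHz channel, 48 data subcarriers, 64-QAM (6 bits),
# rate-3/4 coding, 4.0 us symbols (including guard interval), one stream.
rate_ag = ofdm_rate_mbps(48, 6, Fraction(3, 4), 1, Fraction(4))

# 802.11n: 40 MHz channel, 108 data subcarriers, 64-QAM, rate-5/6 coding,
# short guard interval (3.6 us symbols), four spatial streams.
rate_n = ofdm_rate_mbps(108, 6, Fraction(5, 6), 4, Fraction(36, 10))

print(rate_ag, rate_n)  # 54 600
```

Exact fractions are used so the results come out as whole numbers rather than floating-point approximations; each 802.11n stream contributes 150 Mbps.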

802.11ac Released in 2013, 802.11ac uses the 5 GHz band and the OFDM modulation technique. Its reliance on 5 GHz helps it to avoid interference in the “chatty” 2.4 GHz band. It supports some of the fastest speeds on the market at 3+ Gbps and has good indoor range.

WEP Wired Equivalent Privacy (WEP) was the original pre-shared key security method for 802.11 networks in the late 1990s and early 2000s. Prior to the pre-shared key method, wireless networks used open system authentication, in which no password was needed to connect. As its name suggests, WEP was intended to give wireless traffic a level of privacy equivalent to that of a traditional wired connection, which itself typically carries no encryption; in other words, the goal was to make the two methods “equivalent.” WEP uses RC4, a fast symmetric stream cipher that was considered reasonably strong at the time; however, WEP manages RC4 poorly by forcing it to use static encryption keys. In addition, WEP uses small 24-bit initialization vectors (IVs), which are input values used to add randomization to encrypted data. As a result, an attacker can easily crack the WEP key after capturing about 50,000 IVs. WEP should be avoided on wireless networks unless no alternatives exist.
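A quick birthday-bound calculation shows why a 24-bit IV is too small. Reusing an IV with the same static key reuses the RC4 keystream, and with only 2^24 (about 16.8 million) possible IVs, repeats arrive quickly. This is separate from the statistical key-recovery attacks behind the 50,000-IV figure, and the Python sketch assumes IVs are chosen at random (a sequential counter simply wraps after 2^24 frames).

```python
import math


def iv_collision_probability(n_frames: int, iv_bits: int = 24) -> float:
    """Birthday-bound estimate that at least one IV repeats among n_frames frames."""
    space = 2 ** iv_bits
    return 1.0 - math.exp(-n_frames * (n_frames - 1) / (2 * space))


def frames_for_even_odds(iv_bits: int = 24) -> int:
    """Approximate frame count at which an IV repeat becomes more likely than not."""
    return math.ceil(math.sqrt(2 * (2 ** iv_bits) * math.log(2)))


print(frames_for_even_odds(24))                  # a few thousand frames
print(frames_for_even_odds(48))                  # roughly 20 million frames
print(iv_collision_probability(50_000) > 0.999)  # True
```

Running the same function with `iv_bits=48` hints at why WPA's larger IVs helped: even odds of a repeat move from thousands of frames to tens of millions.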

WPA Wi-Fi Protected Access (WPA) was an interim upgrade over WEP that did away with static RC4 encryption keys and expanded the IVs to 48 bits. Although WPA still uses RC4, it adds the Temporal Key Integrity Protocol (TKIP), which provides frequently changing encryption keys, message integrity checks, and the larger IVs. Despite its vast improvements over WEP, WPA can be exploited via de-authentication attacks, offline attacks, or brute-force attacks.

WPA2 Wi-Fi Protected Access II (WPA2) is the complete implementation of the 802.11i wireless security recommendations. Unlike WPA, it mandates the Counter Mode Cipher Block Chaining Message Authentication Code Protocol (CCMP), which replaces RC4 with the globally renowned Advanced Encryption Standard (AES) cipher to provide both confidentiality and integrity. As with WPA, WPA2 supports either Personal or Enterprise mode implementations. WPA2 Personal mode uses a pre-shared key, which is shared across all devices in the entire wireless LAN, whereas WPA2 Enterprise mode uses IEEE 802.1X with the Extensible Authentication Protocol (EAP), typically backed by a Remote Authentication Dial-In User Service (RADIUS) server, for centralized client authentication using methods such as Kerberos, token cards, and so on. Enterprise mode is designed for larger organizations with AAA (authentication, authorization, and accounting) servers managing the wireless network, whereas Personal mode is more common in small office/home office (SOHO) environments in which the WAP controls the wireless security for the network.
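In WPA2 Personal mode, the 256-bit pre-shared key is derived from the passphrase with PBKDF2 (HMAC-SHA1, 4,096 iterations), using the SSID as the salt. The Python sketch below relies only on the standard library's `hashlib.pbkdf2_hmac`; the `wpa2_psk` name is illustrative. Because anyone who captures a handshake can repeat this derivation for candidate passphrases offline, passphrase strength is the main defense in Personal mode, and because the SSID is the salt, a common default SSID makes precomputed attacks easier.

```python
import hashlib


def wpa2_psk(passphrase: str, ssid: str) -> bytes:
    """Derive the 256-bit WPA2-Personal pre-shared key from a passphrase and SSID."""
    if not 8 <= len(passphrase) <= 63:
        raise ValueError("passphrase must be 8 to 63 characters")
    return hashlib.pbkdf2_hmac(
        "sha1",                      # PBKDF2 with HMAC-SHA1
        passphrase.encode("ascii"),  # passphrases are printable ASCII
        ssid.encode("utf-8"),        # the SSID acts as the salt
        4096,                        # iteration count
        32,                          # 32 bytes = 256-bit key
    )


print(wpa2_psk("password", "IEEE").hex())
```

The output can be checked against the passphrase-to-PSK test vectors published in the IEEE 802.11 standard.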

MAC Filter This is a simple feature on WAPs where we whitelist the “good” wireless MAC addresses or blacklist the “bad” MAC addresses on the wireless network. Although this provides a basic level of protection, hackers can fairly easily circumvent it with MAC spoofing. It is best to supplement this security feature with others.
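The Python sketch below models a WAP's MAC filter, normalizing common address notations before comparing them. It also makes the weakness concrete: the filter can only compare the address a client claims, so an attacker who has sniffed an allowed MAC and spoofed it is admitted like any legitimate client. The `MacFilter` class is illustrative, not any vendor's configuration interface.

```python
import re

_HEX12 = re.compile(r"[0-9a-f]{12}")


def normalize_mac(mac: str) -> str:
    """Accept 00:11:22:33:44:55, 00-11-22-33-44-55, or 0011.2233.4455 forms."""
    digits = re.sub(r"[:\-.]", "", mac).lower()
    if not _HEX12.fullmatch(digits):
        raise ValueError(f"invalid MAC address: {mac!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


class MacFilter:
    """Whitelist ("allow") or blacklist ("deny") of client MAC addresses."""

    def __init__(self, mode: str, addresses):
        if mode not in ("allow", "deny"):
            raise ValueError("mode must be 'allow' or 'deny'")
        self.mode = mode
        self.addresses = {normalize_mac(a) for a in addresses}

    def admits(self, client_mac: str) -> bool:
        listed = normalize_mac(client_mac) in self.addresses
        return listed if self.mode == "allow" else not listed


wap_filter = MacFilter("allow", ["00-11-22-33-44-55"])
print(wap_filter.admits("00:11:22:33:44:55"))  # True
print(wap_filter.admits("66:77:88:99:aa:bb"))  # False
# A spoofed client that copies the allowed address is admitted just the same:
print(wap_filter.admits("0011.2233.4455"))     # True
```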

RFID Radio frequency identification (RFID) uses antennas, radio frequencies, and chips (tags) to keep track of an object or person’s location. RFID has many applications, including inventory tracking, access control, asset tracking (such as laptops and smartphones), pet tracking, and people tracking (such as patients in hospitals and inmates in jails).

RFID uses a scanning antenna (also known as the reader or interrogator) and a transponder (the RFID tag), which stores the information. For example, a warehouse may require RFID tags to be placed on staff smartphones, as per a mobile device security policy. A warehouse manager can then use an RFID scanner to remotely monitor the smartphones.

RFID does introduce some interference and eavesdropping risks, because other readers are capable of picking up a tag’s transmission. In addition, a tag’s limited broadcast range means it does little to help track a stolen device once the device is out of range. Some mitigations for RFID security threats include blocker tags, which flood unauthorized readers with bogus tag responses to deny them service, and kill switches, which disable tags once they are no longer required. Also, RFID encryption and authentication are supported on some newer RFID devices.
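Where tags do support authentication, a common pattern is challenge-response: the reader sends a fresh random nonce, and the tag proves it holds a shared key by returning a keyed hash of that nonce. The Python sketch below assumes hypothetical `Tag` and `Reader` classes and uses HMAC-SHA256 for clarity; low-cost tags often use lighter-weight primitives, and this is not the protocol of any particular RFID standard.

```python
import hashlib
import hmac
import secrets


class Tag:
    """Hypothetical tag that shares a secret key with authorized readers."""

    def __init__(self, tag_id: bytes, key: bytes):
        self.tag_id = tag_id
        self._key = key

    def respond(self, challenge: bytes) -> bytes:
        # Prove knowledge of the key without ever transmitting it.
        return hmac.new(self._key, self.tag_id + challenge, hashlib.sha256).digest()


class Reader:
    """Authorized reader holding the keys of the tags it is allowed to read."""

    def __init__(self, keys: dict):
        self._keys = keys  # tag_id -> shared key

    def new_challenge(self) -> bytes:
        return secrets.token_bytes(16)  # fresh random nonce per read

    def verify(self, tag_id: bytes, challenge: bytes, response: bytes) -> bool:
        key = self._keys.get(tag_id)
        if key is None:
            return False
        expected = hmac.new(key, tag_id + challenge, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)  # constant-time comparison


key = secrets.token_bytes(32)
tag = Tag(b"asset-0042", key)
reader = Reader({b"asset-0042": key})

challenge = reader.new_challenge()
old_response = tag.respond(challenge)
print(reader.verify(tag.tag_id, challenge, old_response))                # True
print(reader.verify(tag.tag_id, reader.new_challenge(), old_response))  # False (replayed)
```

Because each read uses a new challenge, a response captured by an eavesdropper cannot simply be replayed. This sketch only authenticates the tag to the reader; keeping unauthorized readers from interrogating a tag would require running the exchange in the other direction as well (mutual authentication).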

Drive Mounting