CHAPTER 13

Cloud and Virtualization

This chapter presents the following topics:

• Cloud computing basics

• Virtualization basics

• Technical deployment models (outsourcing/insourcing/managed services/partnerships)

• Security advantages and disadvantages of virtualization

• Cloud-augmented security services

• Vulnerabilities associated with the commingling of hosts with different security requirements

• Data security considerations

• Resources provisioning and deprovisioning

Cloud computing and virtualization are textbook examples of how the more things change, the more they stay the same. For IT folks who have been around a while, this new paradigm of centralized computing might seem familiar. A few decades ago, the computing landscape was ruled by centralized mainframe computers. As shown in Figure 13-1, these large and powerful machines hoarded all of the processing, storage, and memory operations to themselves. The user’s computer terminal that sent input to and received output from the mainframe was little more than just a monitor and keyboard. Such minimization of the end-user device gave rise to the term “dumb terminal.” However, living by the mainframe also meant dying by the mainframe. The terminals couldn’t perform any tasks if the single mainframe failed. This dependence on a centralized endpoint became an unsustainable single point of failure.

Figure 13-1 Centralized mainframe model

To reduce the risks of this early form of centralized computing, a more decentralized client/server model allowed for a balanced distribution of the computing workloads across clients and servers. As depicted in Figure 13-2, these computers could perform some tasks independently of one another, albeit not nearly as well as when taken together. Although this shifted the focus away from the hierarchical computing model, computing operations were localized to the organizational boundary. While the client/server model is still popular today, the organizational boundary is increasingly viewed as an obstacle in the areas of worker productivity, cost-effectiveness, and team collaboration. To that end, this past decade has seen a groundswell of technological and workplace productivity trends including high-speed Internet, personal and mobile devices, teleworking, and telecommuting. These technologies have extended the organizational boundary to wherever the user goes.

Figure 13-2 Client/server architecture

Many of today’s businesses have a patchwork quilt of desires, including cost cutting, remote worker productivity, and ubiquitous tool access, while maintaining strict control over company assets. Cloud computing and virtualization are the strongest candidates to make all of these goals a reality. They are today’s expression of the centralized ideals that originated from mainframes—only without most of the hassles. Figure 13-3 depicts cloud computing.

Figure 13-3 Cloud computing

Although virtualization is not exclusive to cloud computing, its inherent cost savings and administrative flexibilities are the perfect antidote to the (arguably) radical methodologies of cloud computing. Once again, computing workloads are being aggregated onto central powerful servers with our web browsers presiding over dumb terminals. Unlike mainframes, which limit productivity to a single work area, today’s centralized server and Internet solutions shift the scope of business and worker productivity onto a global stage. Yet, they reduce the single-point-of-failure concerns of mainframes while also dissolving the organizational perimeter to one without boundaries.

Cloud computing and virtualization—particularly cloud computing—are polarizing concepts that people either love or hate. To the skeptic, they represent everything that is evil about the world: a conspiracy bringing a mass of wealth, control, data aggregation, data selling, and surveillance to a global cartel of computing superpowers. Not that these concerns are entirely unfounded, but many of our enlightened population are harnessing these computing forces for good—while also securing their organizations from threats in the process. These technologies are not intrinsically evil and, more importantly, they’ve come far enough along in the past decade or so that they are capable of providing a wealth of solutions that positively affect our pocketbook, technology portfolio, personnel options, and, most importantly, business objectives.

Like anything else, there are risks, threats, and vulnerabilities—but that is why having comprehensive knowledge of our choices will allow us to tailor-fit the right cloud and virtualization features to the unique security requirements of our organization.

This chapter focuses on the integration of cloud and virtualization technologies into a secure enterprise environment. It covers some of the basics of these technologies while also diving into technical deployment models, security advantages and disadvantages of virtualization, cloud-augmented security services, vulnerabilities, data security considerations, as well as resource provisioning and deprovisioning.

Cloud Computing Basics

NIST Special Publication 800-145 defines cloud computing as “a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction.” With that being about as official a definition as you can get, let’s unpack the key aspects of it:

• Ubiquitous Applications and data are accessible from anywhere.

• On-demand Applications and data are accessible at any time.

• Shared pool Resources are allocated or deallocated from a dynamic and large pool that is shared by multiple subscribers.

• Rapid provisioning Resources are provided in a timely fashion to maximize performance and cost-effectiveness.

• Minimal management effort Many cloud vendors provide a comprehensive “managed service” or “managed security service” to reduce the management responsibilities of the cloud subscribers.

Cloud computing delivers capabilities and computing resources without the end user having any idea where the resources being used to deliver those services are located or how those resources are configured. Naturally, this makes most security and IT personnel extremely nervous. How do you know those resources are secure? Who is watching them? How secure are they? Where is my data being processed and stored? Who else has access to it? Let’s examine some of the advantages and possible issues of cloud computing.

Advantages Associated with Cloud Computing

In theory, cloud computing should save your organization time, manpower, and money. Although these should be carefully weighed against the potential risks and lack of control, there are some advantages to consider when examining cloud services:

• Availability Cloud providers invest heavily into their infrastructures to ensure maximum service uptime and speedy recoverability during outages. With cloud services, you often have the ability to recover services quickly and provision instances in multiple data centers to maximize availability. This can usually be done far cheaper (because it’s virtual) than if your organization were deploying physical servers in multiple locations. Look for availability expectations and requirements in the SLA.

• Dispersal and replication of data Cloud services can be designed to disperse and replicate data over a range of virtual instances. Although this may increase the risk of losing some data or having chunks of data compromised, it can help to ensure the majority of your data is always available and accessible to your organization.

• DDoS protection By design, cloud services should be more resistant to DDoS attacks. If one part of the cloud is under fire, resources can be shifted to service requests from a different part of the cloud—provided the equipment exists and is configured to do so.

• Better visibility into threat profiles When configured to do so, a cloud environment can provide a great deal of threat intelligence. A cloud is essentially a big group of servers, and at any given moment some of those servers are being scanned, probed, or even attacked. By monitoring attack traffic carefully, a cloud service provider is able to gain significant visibility into current and rising attack trends. If the cloud provider has a well-trained and competent security staff, they may even be able to better protect your servers in the cloud than you would at your own organization. A cloud provider may be able to identify a rising attack trend and neutralize it before it can reach your virtual servers, whereas your organization may only discover the attack trend when it hits your servers for the first time.

• Use of private clouds If your organization is overly sensitive to sharing resources, you may wish to consider the use of a private cloud. Private clouds are essentially reserved resources used only for your organization—your own little cloud. This will be considerably more expensive but should also carry less exposure and should enable your organization to better define the security, processing, handling of data, and so on that occurs within your cloud.
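When reviewing the availability expectations in an SLA, it can help to translate an uptime percentage into the downtime it permits. The following is a minimal sketch of that arithmetic; the function name and the sample targets are illustrative assumptions, not figures from any particular provider’s SLA.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def allowed_downtime_hours(availability_percent: float) -> float:
    """Return the yearly downtime (in hours) permitted by an uptime target."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


# Hypothetical SLA targets, chosen only for illustration.
for target in (99.0, 99.9, 99.99):
    hours = allowed_downtime_hours(target)
    print(f"{target}% uptime allows about {hours:.2f} hours of downtime per year")
```

Under these assumptions, a 99.9 percent target still permits roughly 8.76 hours of outage per year, which is worth comparing against your own recovery requirements before signing.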

Issues Associated with Cloud Computing

Here are some of the more common issues and potential disadvantages associated with cloud computing solutions:

• Loss of physical control With a cloud solution, your services and data are figuratively in “the clouds” as far as you are concerned. Although you can control which geographical region hosts your cloud services, you may not be able to control exactly how those services are provided. You also may have no way to know if weather, war, or natural disasters will affect the data center supporting you because you likely won’t know which data center it is.

• Must trust the vendor’s security model We must ask ourselves and the cloud provider how its cloud is secured, what technologies it is using, and how the cloud is monitored. Increasingly, infrastructure as a service models are becoming black boxes to the customer. Subscribers are, to some degree, being forced by vendors to accept that the cloud is being secured and being watched over by competent personnel. However, the onus is on us to verify the cloud provider’s compliance with any regulations such as HIPAA, FIPS, PCI DSS, GLBA, and so forth.

• Proprietary models Proprietary technologies will likely come up during your discussions with a cloud service provider. Yes, the provider has a right to protect its technology and its operations, but if a large number of your questions and concerns are answered with, “Don’t worry, we have that covered, but it’s all proprietary so we can’t talk about it,” then consider another provider. Make sure your vendor isn’t using “proprietary” excuses to cover up negligence and incompetence.

• Support for investigations If you’re unable to obtain all the answers you need yourself, you may have difficulty getting support from your cloud provider for investigations that require manpower-intense activities such as forensic examination. If your service gets compromised, your data gets corrupted, or you suspect foul play, what support can your cloud provider offer? What is it required to offer? And what will it provide “for an additional fee”? Not everyone considers the “what if” scenarios critically enough when examining service contracts—but we all should.

• Inability to respond to audit findings Any time you outsource a critical service, you give up a degree of access and control. Give up too much, and you may find that you’re unable to adequately address or remediate findings from an audit, such as a PCI DSS compliance audit.

• What happens to your data Although major cloud products like Amazon AWS and Microsoft Azure give us increased control over data security and management, don’t assume they all do. You must inquire about the nature of the controls and the responsibilities that lie with the provider and subscriber respectively. You must also inquire about encryption of data in use, in transit, and in storage. How long is the data archived? How is it backed up? What happens to your data when you delete it—is it really gone? What happens to your data if you cancel the contract? Data has value—in some cases a great deal of value. Knowing exactly how your data is handled in a cloud service environment is important. If you have specific concerns, be sure they are addressed ahead of time and make sure your service agreement covers any concerns or special requirements you may have.

• Deprovisioning When you’ve finished with a project and decide to remove a server from the cloud, migrate from one cloud provider to another, or even cancel a line of service: How is the virtual server deprovisioned? What steps are taken to ensure those virtual machines are not reused? Are backups purged as well? How does the provider make sure your presence is well and truly “gone” from that cloud?

• Data remnants What happens when that server, or your data, is deleted in the cloud? Are files securely deleted with contents overwritten? Or does your provider just perform a simple delete that may delete the file record but leaves partial file contents on the disk? Chances are your servers and your data resided on the same physical drives as another organization’s servers and data; therefore, the data destruction mechanisms may not be super aggressive. Also, the provider is unlikely to be degaussing drives after you cancel your service and delete your servers. So how can you make sure your data is truly gone and that nothing remains behind when you leave?
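To make the difference between a simple delete and an overwrite-then-delete concrete, here is a minimal sketch. The function name `overwrite_and_delete` and the demo file are illustrative assumptions. Note the important caveat: on SSDs, copy-on-write or journaling file systems, and provider-managed cloud storage, an in-place overwrite may never reach the original physical blocks, so this shows the idea rather than a guarantee of sanitization.

```python
import os
import tempfile


def overwrite_and_delete(path: str, passes: int = 1) -> None:
    """Overwrite a file's contents with random bytes, then remove it."""
    size = os.path.getsize(path)
    with open(path, "r+b") as handle:
        for _ in range(passes):
            handle.seek(0)
            remaining = size
            while remaining > 0:
                chunk = min(remaining, 1024 * 1024)  # write in 1 MiB pieces
                handle.write(os.urandom(chunk))
                remaining -= chunk
            handle.flush()
            os.fsync(handle.fileno())  # ask the OS to push the data to disk
    os.remove(path)  # a simple delete alone would only drop the file record


# Demo on a throwaway file (hypothetical contents).
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"sensitive record")
    demo_path = tmp.name

overwrite_and_delete(demo_path)
print(os.path.exists(demo_path))  # False
```

Because subscribers rarely control the physical media in a shared cloud, questions about how the provider itself sanitizes storage still belong in the service agreement.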

Virtualization Basics

In many respects, virtualization is the other side of the coin shared by cloud computing. Virtualization is the act of creating a virtual or simulated version of real things like computers, devices, operating systems, or applications. For example, a hypervisor program can virtualize hardware into software versions of CPUs, RAM, hard drives, and NICs so that we can install and run multiple isolated operating systems instances on the same set of physical hardware. As shown in Figure 13-4, these virtual machines (VMs) behave like separate physical computers; therefore, each VM can contain its own operating system.

Figure 13-4 Virtual machines

Virtualization has come a long way since its days spent in test beds and development labs. Organizations that used to swear by racks and racks of dedicated iron are increasingly turning to virtualization to save money, reduce server count, and maximize utilization of hardware. Sounds great, right? Well, that depends on how you look at it. Virtual environments, like any other environment, have their own risks, security concerns, and special considerations. The upcoming sections discuss cloud computing and virtualization in more detail.

Technical Deployment Models (Outsourcing/Insourcing/Managed Services/Partnership)

This section focuses primarily on the security considerations of cloud computing technical deployment models, while deferring most of the virtualization considerations for later sections. Cloud computing includes a variety of deployment models that permit organizations to strike the best balance between cost, control, responsibility, security, and features. Virtualization can be thought of as a feature of deployment models, particularly in the case of private cloud networks. Organizations will also need to factor in the benefits and security considerations of outsourcing cloud-based and/or virtualization services to a third party, insourcing the security benefits of cloud and virtualization internally, utilizing a managed service provider for security services, or creating a joint cloud venture with partners.

Cloud and Virtualization Considerations and Hosting Options

Most cloud computing solutions are Internet based, yet virtualization equally permeates the Internet and on-premises infrastructures of organizations. It can almost be said that all cloud computing involves virtualization, but not all virtualization involves cloud computing. There are many cloud computing hosting options to choose from—some of which may or may not involve virtualization. These options differ in several ways, including cost, configuration controls, resource isolation, security features, and locations of data, servers, and applications.

Although it is easier and, perhaps, more cost-effective for an outsourced Internet provider to deliver these options to an organization, many businesses successfully implement these capabilities on-premises. As you will see, cloud computing is a patchwork of computer networking features as opposed to being a single and entirely new capability in itself. What follows are some of the technical deployment models that security practitioners must choose from to balance the cost, productivity, and security requirements of the organization.

Public

One of the more common reasons to utilize public cloud computing services is the combination of cost benefits and simplicity. The public cloud computing model involves a public organization providing cloud services to paying customers (either pay-as-you-go or subscription-based customers) or non-paying customers. Whereas paying customers typically enjoy more features and security, customers using free services may lose important features like encryption, access control, compliance, and auditing.

The public cloud provider will generally host all of the services itself, while occasionally offloading some of these services to other providers. Organizations and people gravitate toward public cloud solutions due to the transference of most responsibilities to the cloud provider. These organizations must also exercise caution with regard to public cloud computing due to the inherent security risks attributed to a solely Internet-based solution.

Examples of public cloud products include Amazon Web Services (AWS), Google Cloud Platform, and IBM Cloud. At the time of this writing, AWS is the cloud computing market leader, but the Microsoft Cloud products, including Azure and Office 365, are fast on its heels.

Pros and Cons of Public Cloud Computing As with any form of cloud computing, a public cloud has well-known strengths and weaknesses. Here’s a breakdown of pros and cons of public cloud computing:

Pros

• Accessibility The infrastructure is immediately available and accessible from clients anywhere at any time.

• Scalability Scaling up and out provisions resources in larger and more cost-effective amounts to the customer. This can help the customer meet its own performance and availability requirements; availability is an important tenet of the CIA triad.

• Capital expenses The cloud subscriber utilizes the provider’s back-end hardware and thus reduces the need for local server purchases.

• Pay per use Like for electricity and water, the customer is typically billed based on resource usage, not necessarily resource availability (the former being more cost-effective).

• Economies of scale Large-scale providers can generally produce more output at less cost; therefore, they’re better positioned to secure their infrastructure than private organizations. This, in turn, enhances their capability to comply with numerous security accreditations like HIPAA, FIPS, PCI DSS, SOX, and so on.

Cons

• Security and privacy Hackers may target the cloud; customers lose some control over data; and there are inherent vulnerabilities associated with resource sharing.

• Performance Fluctuations of Internet connectivity, and the demands of other cloud tenants, can negatively affect performance.

• Configuration Some public cloud solutions significantly limit the configuration options available to the tenants.

• Reliability Hackers sometimes target cloud networks, thus potentially reducing their reliability.
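The scalability and pay-per-use items in the list above can be illustrated with a small calculation that compares billing for the instances actually used each hour against provisioning for peak demand the whole time. This is only a sketch: the demand profile, per-instance capacity, and hourly rate are hypothetical values, not real provider pricing.

```python
import math


def instances_needed(requests_per_second: float, capacity_per_instance: float) -> int:
    """Scale out: return how many instances cover the load (at least one)."""
    return max(1, math.ceil(requests_per_second / capacity_per_instance))


# All values below are hypothetical, chosen only for illustration.
hourly_demand = [120, 80, 60, 400, 950, 300]  # requests per second, one entry per hour
CAPACITY = 100  # requests per second that a single instance can serve
RATE = 0.10     # dollars per instance-hour

scaled = [instances_needed(demand, CAPACITY) for demand in hourly_demand]

# Pay per use: billed only for the instance-hours actually consumed.
pay_per_use = sum(scaled) * RATE

# Billing by availability: peak capacity is paid for in every hour.
peak_provisioned = max(scaled) * len(hourly_demand) * RATE

print(f"instances per hour: {scaled}")
print(f"pay per use:        ${pay_per_use:.2f}")
print(f"provisioned for peak: ${peak_provisioned:.2f}")
```

With this made-up profile, usage-based billing covers 21 instance-hours while peak provisioning pays for 60, which is the gap the pay-per-use item refers to.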

Private

Whether it’s skepticism of the public cloud’s inherent security challenges or pressure from laws and regulatory requirements, organizations might feel compelled to adopt an internal private cloud. This model allows the local organization to be the sole beneficiary of an infrastructure that duplicates many of the public cloud benefits like on-demand self-servicing, ubiquitous network access, resource pooling, rapid elasticity, agility, and service measuring. The local organization, or a third party, can maintain the on-premises cloud infrastructure. The main component of private cloud computing is that the local organization does not share the benefits of this cloud network with other organizations—hence the term “private.”

Many leading technology companies sell private cloud solutions, including Amazon, Cisco, Dell, HP, IBM, Microsoft, NetApp, Oracle, Red Hat, and VMware. For example, a Microsoft private cloud might be composed of a combination of Microsoft Remote Desktop Services (RDS) and Microsoft Hyper-V Server.

Pros and Cons of Private Cloud Computing Unlike a public cloud, a private cloud enjoys many of the benefits inherent with on-premises computing environments, but it also has some of its negatives as well. Here’s a list of pros and cons of private cloud computing:

Pros

• Control A locally focused cloud infrastructure allows many of the control benefits of an on-premises infrastructure.

• Security With in-house equipment, focus, and control, security postures resemble most other on-premises infrastructures.

• Reliability Internet connectivity, or lack thereof, won’t have the same impact to a private cloud as it would public and hybrid cloud configurations.

Cons

• Private Since the cloud is not hosted on the Internet, the ubiquitous access benefits typified by public clouds can be more difficult to provide to remote workers.

• Capital expense The private cloud is composed of locally owned equipment, thus increasing costs to the organization.

• Operational expenses The local organization owns, operates, and maintains the equipment and therefore incurs all of the day-to-day costs of business.

• Scalability Organizations might lack sufficient hardware to deal with sudden spikes in resource demand.

• Disaster recovery If a data center lacks suitable replication—or an alternate hot site to replicate to—a large-scale disaster event can render that site inoperable.

Hybrid

As the name implies, hybrid cloud computing is a combination of multiple cloud models such as the public, private, and community cloud models. An organization might utilize a local server solution in addition to outsourcing other aspects of that solution to a cloud provider. This allows organizations to experience the best of both worlds, whereby the most critical data is kept on the premises to meet organizational security requirements, while still enjoying the various benefits offered by public cloud computing.

Pros and Cons of Hybrid Cloud Computing By not going “all in” with public or private cloud computing, a hybrid cloud shares the strengths and weaknesses of both methods. Here’s a list of pros and cons of hybrid clouds:

Pros

• Balance Organizations can use a private cloud for stricter security requirements and a public cloud for less-strict security requirements.

• Cloud bursting As demands for private cloud resources exceed the supply, the organization can redistribute or “burst” the excess demand onto a public cloud to stabilize performance.

• Accessibility When users are working remotely, they can easily reach the public cloud as needed.

Cons

• Cost The expenses incurred from the private cloud setup offset some of the cost-savings of the public cloud.

• Complexity With multiple cloud models requiring a synergistic setup, more knowledge and skills are required for proper configuration, maintenance, and recovery.

• Security Although many public cloud computing vendors provide considerable behind-the-scenes security benefits, not all of them do. Plus, not all private organizations are masters of securing private cloud infrastructures.
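The cloud bursting item above comes down to a simple rule: serve demand from the private cloud until its capacity is exhausted, then send the excess to a public cloud. A minimal sketch follows; the function name and the unit counts are illustrative assumptions, and a real deployment would also weigh which workloads are permitted to leave the private environment.

```python
def split_demand(demand: int, private_capacity: int) -> tuple[int, int]:
    """Return (units served on the private cloud, units burst to the public cloud)."""
    if demand < 0 or private_capacity < 0:
        raise ValueError("demand and capacity must be non-negative")
    on_private = min(demand, private_capacity)
    burst = demand - on_private
    return on_private, burst


# Hypothetical workload units against a private cloud that can hold 100.
for demand in (60, 100, 150):
    private_units, public_units = split_demand(demand, private_capacity=100)
    print(f"demand={demand}: private={private_units}, burst to public={public_units}")
```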

Community

Community cloud computing is a model that involves a group of organizations that collectively own, share, or consume a common cloud computing infrastructure as a result of mutual interests like software interfaces and security features. For example, a broad network of doctors and hospitals might consume a healthcare-specific cloud computing network that aggregates the input and sharing of electronic health records, data analysis, and HIPAA/HITECH compliance requirements. Due to the depth of interactions between the community of organizations, security policies, controls, and responsibilities need to be established up front to avoid future issues.

Pros and Cons of Community Cloud Computing Community clouds have some pros and cons that security professionals need to be aware of to help organizations make informed decisions. Refer to the following list for the pros and cons of community clouds:

Pros

• Cost More cost-effective than a private cloud.

• Outsourced Management can be delegated to a third party.

• Universal tools The tools are accessible by both suppliers and consumers of the cloud infrastructure.

Cons

• Cost More expensive than a public cloud.

• Sharing Infrastructure elements including bandwidth and storage are potentially shared across a pool of organizations.

Multitenancy

If you consider the fact that millions of times a day, all around the world, people line up at shared ATMs to deposit or withdraw money, then multitenancy isn’t much of a stretch. Multitenancy involves cloud organizations making a shared set of resources available to multiple organizations and customers. The cloud servers will share out a common virtualized environment to multiple tenants while also providing the logical isolation and control set needed by customers. The primary motivation behind multitenancy is the cost benefits to the cloud provider in the form of automated software provisioning and shared resources. When cloud providers save money through these conservation efforts, they pass on those savings to the customers.

It is important that the cloud provider allocate adequate resources for the shared spaces of multiple tenants. Resource demands will only go up in the future, and it’s important that individual tenants are properly isolated and provisioned with enough resources to prevent one tenant from hoarding resources at another’s expense.
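One common way to keep a single tenant from hoarding a shared pool is a per-tenant cap enforced at allocation time. The sketch below illustrates that idea only; the `SharedPool` class, its method names, and the numbers are hypothetical and do not represent any provider’s actual scheduler.

```python
class SharedPool:
    """A shared resource pool with a per-tenant cap (illustrative only)."""

    def __init__(self, total_units: int, per_tenant_cap: int) -> None:
        self.total_units = total_units
        self.per_tenant_cap = per_tenant_cap
        self.allocated: dict[str, int] = {}

    def allocate(self, tenant: str, units: int) -> bool:
        """Grant the request only if both the tenant cap and the pool allow it."""
        used_by_tenant = self.allocated.get(tenant, 0)
        used_overall = sum(self.allocated.values())
        if used_by_tenant + units > self.per_tenant_cap:
            return False  # tenant would exceed its own quota
        if used_overall + units > self.total_units:
            return False  # pool is exhausted
        self.allocated[tenant] = used_by_tenant + units
        return True

    def release(self, tenant: str, units: int) -> None:
        """Return units to the pool, never dropping below zero."""
        self.allocated[tenant] = max(0, self.allocated.get(tenant, 0) - units)


pool = SharedPool(total_units=100, per_tenant_cap=40)
print(pool.allocate("tenant_a", 40))  # True: within the cap
print(pool.allocate("tenant_a", 1))   # False: tenant_a is at its cap
print(pool.allocate("tenant_b", 40))  # True
print(pool.allocate("tenant_c", 30))  # False: only 20 units remain in the pool
print(pool.allocate("tenant_c", 20))  # True
```

The last two calls also show why the provider must size the pool adequately: a cap protects tenants from each other, but it cannot create capacity that was never provisioned.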

Single Tenancy

If security requirements dictate increased privacy and isolation of cloud-based resources, single tenancy is the way to go. The cloud provider will grant each customer its own virtualized software environment to ensure more privacy, performance, and control requirements are upheld to a greater standard. This is similar to renting an office building to one company as opposed to multiple organizations occupying separate suites within a building. The prioritization and allocation of resources to a single tenant will increase the cloud provider’s costs; therefore, the customer can expect costs to be passed on to them.

On-premises vs. Hosted

We have been using on-premises IT infrastructures for decades. For many IT and security personnel, the inherent control and privacy benefits provided by on-premises solutions outweigh the flashier but riskier benefits of hosted cloud computing. Although hosted cloud computing typically provides greater scalability, availability, elasticity, accessibility, and cost-effective benefits, an on-premises (or private) cloud is essentially a modernized version of on-premises computing. An on-premises cloud provides some of the benefits of a hosted cloud, but without some risks. A private cloud might be all the “cloud” such on-premises loyalists can stomach.

On the other hand, the people cutting the checks probably won’t care about the practicalities of a Linux server hosted on-premises versus in a hosted cloud. What decision makers want is for the organization to achieve its business objectives in the most cost-effective, simple, efficient, and risk-averse ways possible. More often than not, hosted cloud environments will provide for all of those deliverables. Whether justified or not, the managerial viewpoint on IT personnel, infrastructures, power and A/C requirements, data centers, and so forth has become strained at best. A growing number of businesses would rather transfer some of those “costs” to a third party and let them worry about it all.

There’s no arguing that on-premises computing comes with the greatest level of control and insight over our digital assets. For many organizations with strict compliance requirements, this might be not only the best option but the only option. Yet, with organizational assets increasingly being outsourced to a hosted cloud environment, organizations are benefitting from the increased focus on business objectives as opposed to sharing that focus with an on-premises infrastructure.

Okay, truth time: there’s an elephant in the room, and it’s about time we address it. For the local technical staff, the prospect of outsourced IT and security solutions is generating some anxiety. Many feel outright disdain toward outsourced cloud computing solutions, and who can blame them? Nobody wants to potentially lose their job to someone else—particularly at little to no fault of their own.

However, it’s not all doom and gloom because you probably already know exactly what to do—add cloud computing and virtualization to your skillset! Become the provider of the outsourced IT solutions as opposed to the victim of it. Local IT jobs aren’t so much “disappearing” as they are being converted into something new and, possibly, transferred to someone else. Be that someone else! There are more employment opportunities for cloud and virtualization positions than qualified applicants to fill them. We’re living in a new generation of technologies and opportunities. Your preexisting knowledge, skills, and abilities put you in prime position to capitalize on this movement—but only if you are willing to adapt to the new environment.

Cloud Service Models

In the previous section we discussed cloud deployment models such as public, private, hybrid, and community clouds. The next step is to go over cloud service models. These are the particular services being offered to us by the deployment models. These services are what the customer directly interacts with and benefits from. A public cloud in itself is really just someone else’s data center. What we’re looking for are the particular software, platform, and infrastructure services being provided to us by that data center.

Software as a Service (SaaS)

When cloud computing providers offer applications to customers to use, they are providing software as a service (SaaS). Common features of SaaS include web-based e-mail, file storage and sharing, video conferencing, learning management systems (LMS), and others. This is not only the most common cloud computing service model but also the one that is designed for end users. The cloud provider has all management responsibilities of SaaS, whereas our job is to simply use the software.

Platform as a Service (PaaS)

Whereas SaaS requires the mere use of the cloud provider’s software within a limited set of confines, platform as a service (PaaS) gives us a lower-level virtual environment—or platform—so we can host software of our choosing, including locally developed or cloud-developed applications, guest operating systems, web services, databases, and directory services. Although we don’t have direct control over the host operating system, or its hardware, we do have responsibility over the applications and data contained within.

Infrastructure as a Service (IaaS)

Infrastructure as a service (IaaS) provides customers with direct access to the cloud provider’s infrastructure. This includes resources like processing, memory, storage, load balancers, firewalls, and VLANs. Put another way, IaaS is almost like an outsourced data center. Although we don’t have direct control over the underlying cloud infrastructure, we do have control over the operating systems we deploy, storage, and, to a limited degree, select networking components.
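The division of responsibility across the three service models can be summarized as a small lookup table. The sketch below is deliberately simplified to match the descriptions in this section; the layer names are illustrative assumptions, treating applications and data as customer-managed under IaaS is also an assumption, and real shared-responsibility agreements vary by provider.

```python
# Layers the customer manages under each model, per the simplified
# descriptions in this section. Anything not listed falls to the provider.
CUSTOMER_MANAGED = {
    "SaaS": frozenset(),  # the customer simply uses the software
    "PaaS": frozenset({"applications", "data"}),
    "IaaS": frozenset({
        "applications",        # assumed: not stated explicitly in the text
        "data",                # assumed: not stated explicitly in the text
        "operating systems",
        "storage",
        "networking components",
    }),
}


def responsible_party(model: str, layer: str) -> str:
    """Return 'customer' or 'provider' for a given service model and layer."""
    if model not in CUSTOMER_MANAGED:
        raise KeyError(f"unknown service model: {model}")
    return "customer" if layer in CUSTOMER_MANAGED[model] else "provider"


for model in ("SaaS", "PaaS", "IaaS"):
    print(model, "-> operating systems managed by", responsible_party(model, "operating systems"))
```

A table like this is a useful starting point when mapping security controls to a contract, since every layer marked "customer" is one your own staff must patch, monitor, and audit.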

Security Advantages and Disadvantages of Virtualization

Virtualization is a complex topic with security as one of its many components. This section introduces the general benefits and disadvantages of virtualization and ties in the security advantages and disadvantages inherent in its use.

Advantages of Virtualizing

Although most IT departments look at virtualization as primarily a money-saving technology, virtualization does bring some unique capabilities that enhance security, recovery, and survivability. Let’s examine the following advantages of virtualization:

• Cost reduction

• Server consolidation

• Utilization of resources

• Security

• Disaster recovery

• Server provisioning

• Application isolation

• Extended support for legacy applications

Although some of these seem like considerable advantages, we’ll see later how some of these same capabilities could be disadvantages in the wrong situation.

Cost Reduction

Cost reduction is often the overwhelming driver behind virtualization. In theory, a virtualized infrastructure consumes less power, can be managed by a smaller workforce, is easier to manage and maintain, and is more “efficient” in serving your enterprise. Of the factors to consider in cost reduction, the two most often examined and quoted to justify return on investment (ROI) are reduced power consumption and reduced headcount. Most virtualization projects make the assumption that 100 lightly used physical servers will consume more power than 20 larger, heavily utilized servers. For example, if we assume 100 physical servers drawing an average of 750 watts each, a consolidation ratio of 5:1, and a cost per kilowatt hour of around 10 cents, retiring 80 servers saves roughly 525,000 kilowatt hours, or a little over $50,000, a year in direct power costs, with further savings from reduced cooling. (The 20 remaining servers will likely draw more power each, which offsets part of that figure.) Some utility companies even offer incentives and rebates for virtualization, which can add to potential cost savings.

By contrast, many organizations make the assumption that with fewer physical servers and an “easier” to manage infrastructure they will be able to reduce headcount. This is not always the case—especially if your current staff is not familiar with virtualization. In most cases, you still have the same number of “servers” to manage—they’re just not running on their own dedicated platforms anymore.
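Returning to the power estimate, the arithmetic is easy to check. The sketch below assumes the figures used in this section (100 servers, 750 watts each, 5:1 consolidation, 10 cents per kilowatt hour) and, as a simplifying assumption, that each consolidated server draws the same 750 watts. Cooling overhead is left out, so real savings could be higher or lower.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_power_cost(servers: int, watts_each: float, dollars_per_kwh: float) -> float:
    """Yearly electricity cost for a group of servers running around the clock."""
    kilowatts = servers * watts_each / 1000
    return kilowatts * HOURS_PER_YEAR * dollars_per_kwh


before = annual_power_cost(servers=100, watts_each=750, dollars_per_kwh=0.10)
# Assumption: the 20 consolidated hosts draw the same 750 W each.
after = annual_power_cost(servers=20, watts_each=750, dollars_per_kwh=0.10)
savings = before - after

print(f"before: ${before:,.0f}  after: ${after:,.0f}  saved: ${savings:,.0f}")
```

Changing `watts_each` for the consolidated hosts shows how quickly "beefier" hardware eats into the estimate.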

Server Consolidation

Server consolidation is a typically undisputed benefit of virtualization. The main point of virtualization is to take server instances with 10 to 15 percent utilization off of dedicated hardware platforms and move them to an environment where they share resources. Condensing 100 physical servers down to 20 larger servers reduces the amount of physical space needed to support business functions, which can be a direct cost savings in overhead if you are leasing data center space. Server consolidation does come at a cost, though: the more you want to consolidate your environment, the “beefier” your virtualization servers will need to be in terms of CPU and memory. Chances are these servers will run at higher utilization and likely produce more heat, which may change the spot cooling requirements for your equipment racks. Organizations will often closely examine virtualization before a major equipment refresh because it can make a great deal of sense to replace 100 old physical servers with a virtualized environment supported by 20 new, more capable servers.

Utilization of Resources

A primary goal of any virtualization project is to increase the utilization of resources. Prime candidates for virtualization include any dedicated server running at 15 percent or less average utilization. A well-designed virtualization solution takes a whole series of underutilized servers and places them in an environment where CPU and memory can be shared between them. In theory, when we convert these physical servers using only 15 percent of their available CPU resources, we can have four of these virtual instances sharing the same CPU, for a 60 percent utilization average (assuming the servers are not always tasked at the same level at the exact same time). In a similar fashion, RAM can be shared among the same servers. If each physical machine contained 8GB and only used 2GB or less consistently, we could potentially have four of the virtual conversions sharing 12GB, which allows us to dedicate 2GB to each virtual machine and leave 4GB for dynamic allocation.
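The CPU and RAM figures above reduce to two small calculations. The sketch below uses the numbers from this paragraph; the function names are illustrative, and the CPU figure carries the same caveat that the servers are not all busy at the same moment.

```python
def combined_cpu_utilization(per_vm_percent: int, vm_count: int) -> int:
    """Average utilization of a shared CPU if each VM brings its own load."""
    return per_vm_percent * vm_count


def ram_left_for_dynamic_allocation(host_gb: int, dedicated_gb_per_vm: int, vm_count: int) -> int:
    """RAM remaining on the host after each VM gets its dedicated share."""
    return host_gb - dedicated_gb_per_vm * vm_count


cpu = combined_cpu_utilization(per_vm_percent=15, vm_count=4)
spare_ram = ram_left_for_dynamic_allocation(host_gb=12, dedicated_gb_per_vm=2, vm_count=4)

print(f"shared CPU utilization: {cpu}%")
print(f"RAM left for dynamic allocation: {spare_ram}GB")
```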

Security

Virtualization can also have some significant benefits to security. Virtualization makes it far easier to develop production and development baselines because virtual machines tend to use the same “generic hardware.” Production servers can be easily copied or cloned for use in testing of patches, fixes, and so on. Those same virtual servers can be quickly rolled back to a known good configuration in the event of corruption or compromise. Testing of “what if” scenarios no longer requires the purchase of additional racks of identical hardware. Production environments can be cloned and then safely scanned for vulnerabilities without impacting customers. Instant snapshots of a system’s running state can be taken for forensics or incident response. Virtual machines can be configured to revert to a known good state on reboot. Although some of these seem like considerable advantages, we’ll see later how some of these same capabilities could be disadvantages in the wrong situation.

Data centralization is another potential security advantage associated with virtualization. As you move to virtualize servers, the need to store data on specific hardware sets or endpoints can decrease as you migrate toward centralized storage. Centralizing data provides a much smaller attack surface—the fewer places data is stored, the fewer places you have to worry about securing and protecting. If your security staff can focus on a few central data stores, it should have more time to ensure they are patched, secured, monitored, backed up, and so on. Of course, consolidation can also bring risks such as a greater impact when a failure occurs, more users accessing the same resources, and the attractiveness of a target with more eggs in one basket. But these disadvantages can usually be overcome with careful planning and good policies, processes, and procedures.

Another security benefit is the hardware abstraction that virtualization provides. Virtual machines have limited direct access to the actual hardware they are running on. The hardware abstraction offered by hypervisors provides each virtual machine with a more “generic” set of “hardware”—a virtual network interface card (NIC) instead of a physical one, filtered (or no) access to peripherals, limited (or no) direct access to disks, and so on. From a security perspective, this means fewer drivers to patch and maintain, less chance of a rogue or infected driver being installed, less chance of certain types of attacks being successful, and less chance of a hardware failure forcing you to modify the configuration of all the virtual machines running on that hardware. If the platform running your hypervisor fails or is compromised, you can easily migrate your virtual machines (VMs) over to a different platform. The hardware abstraction is taken care of at the hypervisor level and should have little to no impact on your VMs.

Disaster Recovery

Disaster recovery can be greatly enhanced by a virtualized environment. In the right environment, virtual machines can be migrated from one platform to another while they are still running (transferring from one data center to another in anticipation of a hurricane, for example). Virtual machines can be cloned, transferred, and redeployed far more easily than physical machines because you don’t need identical hardware to stand up a cloned virtual machine, just compatible hardware and the correct version of your virtualization software. Recovery times with virtualized environments can be measured in minutes, versus hours or even days when restoring an entire server from a tape archive. Virtualization is not a silver bullet, and you still must plan for redundancy, migration, failover, failback, restoration, and so on. However, virtualization, when properly implemented, can help speed up the recovery process.

Server Provisioning

Virtualization provides some significant advantages when it comes to server provisioning. Need to add an additional server? With a virtual environment, you can deploy one from a template or clone an existing virtual server in a matter of minutes. This is particularly convenient when you have a set of “master” or “golden” server images in your inventory—you can have a fully patched, production-ready server in a fraction of the time it would take to deploy a new server running on physical hardware. This rapid deployment capability is particularly useful in development environments or for addressing a specific need for additional capacity on a temporary basis.

Application Isolation

Best practices will tell you an ideal scenario is to run one critical service on one server—this decreases the attack footprint of that server, reduces the chance of service A getting compromised through an attack on service B, and so on. With a physical server environment, this is rarely possible because the additional expense is too prohibitive, particularly for smaller organizations. With virtualization and server consolidation, the concept of dedicating a server to a specific critical service becomes a possible reality. Separating critical functions such as web servers, mail servers, and DNS servers onto separate virtual systems allows administrators and security personnel to deploy and configure those virtual servers to support a specific service rather than having to compromise and configure the server to run multiple critical services. You can also isolate sensitive applications to their own servers and tightly restrict access to those servers.

Extended Support for Legacy Applications

Still running some obscure, custom application on Windows NT and praying the hardware it’s on doesn’t die? You can migrate that NT server over to a virtual environment and potentially extend its use. Although it may take some tweaking with certain hypervisors, older operating systems and applications can often be migrated to a virtual environment far more easily than to new physical hardware. In an ideal world you would be able to ditch ancient operating systems and applications, but in reality, there are cases where you simply have to maintain something well beyond its projected “useful” life. In many cases, virtualization can help you do just that.

Disadvantages of Virtualizing

Although it may seem like a very attractive solution, organizations need to carefully consider virtualization and weigh the true costs and potential risks. Let’s examine the following disadvantages of virtualization:

• Hidden costs

• Personnel

• Server consolidation

• Virtual server sprawl

• Security configuration

Hidden Costs

When considering the cost benefits of virtualization, organizations sometimes fail to factor in all the upfront costs associated with virtualization. There are licenses for virtualization platforms to buy, new management tools, training costs to get personnel up to speed, a familiarization period in which the organization will likely see decreased performance, and so on. When consolidating, you may need a much “larger” and more powerful server platform than you are used to ordering. Although there will be fewer servers, they will be far more expensive on a per-unit basis. There’s even a chance your preferred hardware vendor doesn’t have the hardware you’ll need or the hardware isn’t supported by the hypervisor you’ve chosen. When examining the cost-benefit or ROI from virtualization, it is critical that companies carefully consider all the costs that will go into the project.

Personnel

Great IT people can do anything—after all, it’s just a server, right? Far too many organizations leap into virtualization without first making sure their existing staff is ready for such a dramatic shift in operations. Virtualization requires a new knowledge set. Not only does your IT staff now need to know how to manage your existing server base (which you just converted to virtual machines), but now they need to learn new management tools, resource planning, management of shared resources such as CPU, disk, and memory, new monitoring tools, and so on. Chances are your organization will need to either bring in new personnel or spend a fair amount of time and money training your existing staff. While your existing staff is adjusting to the migration, they will likely be operating in a less efficient manner until they become more familiar with the new environment.

Virtualization also introduces a new layer of complexity with troubleshooting issues or performance problems. The e-mail server is running slowly. Is it the virtual machine, or is the host server overloaded? Network connections are being terminated prematurely on web servers. Is it the virtual machine, or is the physical network interface going bad? Is the cable loose? Is some other virtual machine flooding the interface? This additional layer can make root-cause analysis much more difficult and could even slow down problem resolution.

Server Consolidation

Wasn’t reducing the number of servers a good thing? It can be, but it can also be a significant negative. Hardware failures can have a much larger impact when multiple virtual servers are running on a single physical platform. This can be somewhat mitigated through the use of failover technologies, RAID, redundant power supplies, and so on, but it is still a serious risk that grows as more critical business functions come to rely on the same physical hardware.

Virtual Server Sprawl

Standing up a new physical server is a fairly significant task, whereas standing up a new virtual server is a few mouse clicks. Need a new development server? Click. Need to set up a web server for a marketing campaign? Click. This ease of creation has led to server sprawl in many virtual environments and presents the very real possibility that your virtual environment can quickly outgrow your organization’s ability to manage it. Fortunately, this is somewhat mitigated by available resources: when you run out of memory and disk space, you can’t create any more virtual servers.
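The resource ceiling on sprawl can be modeled in a few lines. This is a toy sketch, not a real hypervisor API: the `Host` class, its fields, and the sizes are all hypothetical, and real platforms add features such as memory overcommitment that blur the limit.

```python
class Host:
    """Toy model of a virtualization host with finite memory and disk."""

    def __init__(self, memory_gb: int, disk_gb: int) -> None:
        self.free_memory_gb = memory_gb
        self.free_disk_gb = disk_gb
        self.vms: list[str] = []

    def create_vm(self, name: str, memory_gb: int, disk_gb: int) -> bool:
        """Create a VM if resources allow; return False when the host is full."""
        if memory_gb > self.free_memory_gb or disk_gb > self.free_disk_gb:
            return False
        self.free_memory_gb -= memory_gb
        self.free_disk_gb -= disk_gb
        self.vms.append(name)
        return True


host = Host(memory_gb=16, disk_gb=200)
results = [host.create_vm(f"dev-{i}", memory_gb=4, disk_gb=40) for i in range(6)]
print(results)          # each "click" succeeds until memory runs out
print(len(host.vms))    # number of VMs actually created
```

The ceiling only stops new VMs once resources run out; it does nothing to identify which existing VMs are still needed, so inventory reviews remain necessary.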

Security Configuration

Does your organization’s security staff know how to secure and monitor a virtual environment? How will they monitor traffic passing from one virtual machine to another inside the same physical platform? Are you separating virtual machines that process and store sensitive data from Internet-facing virtual machines? Can you maintain separation of duties between network and security controls in a virtualized environment? Is there a risk that someone will compromise the virtualization platform itself? And what will happen if that occurs? Will your virtualized environment be compatible with your existing security controls (such as IDS/IPS)? What sort of changes will you need to make to your security strategy?

As virtualization continues to grow in popularity, we’ll continue to see a growth in the risks and attacks targeted specifically at virtual environments, including the hypervisor itself. Virtualization carries a whole new set of risks—make sure your organization weighs them carefully and addresses them before setting up a new virtual environment.
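One of the questions above, whether sensitive virtual machines share hardware with Internet-facing ones, lends itself to an automated check. The sketch below assumes a hypothetical placement inventory of (host, VM, label) tuples; the host names, VM names, and labels are invented for illustration and would come from your virtualization management tool in practice.

```python
from collections import defaultdict

# Hypothetical inventory: (physical host, virtual machine, security label).
placements = [
    ("host-a", "web-01", "internet-facing"),
    ("host-a", "hr-db", "sensitive"),
    ("host-b", "web-02", "internet-facing"),
    ("host-c", "finance-db", "sensitive"),
]


def commingled_hosts(inventory: list[tuple[str, str, str]]) -> list[str]:
    """Return hosts that run both sensitive and Internet-facing VMs."""
    labels_by_host: dict[str, set[str]] = defaultdict(set)
    for host, _vm, label in inventory:
        labels_by_host[host].add(label)
    conflicting = {"internet-facing", "sensitive"}
    return sorted(h for h, labels in labels_by_host.items() if conflicting <= labels)


print(commingled_hosts(placements))
```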

Type 1 vs. Type 2 Hypervisors

Now that we’ve discussed some of the generalized advantages, disadvantages, and security considerations brought about by virtualization, we’re going to move on to more specific security objectives. Hypervisors are a critical component of virtualization and carry their own security considerations. Simply stated, hypervisors are thin layers of software that imitate hardware. For example, hypervisor software can mimic the behavior of CPUs so that the “virtual CPU” behaves like a real CPU. The same goes for virtual RAM, hard drives, network interface cards, optical drives, and BIOS/UEFI firmware. As VMs interact with hypervisors, they believe they’re dealing with actual hardware. This illusion created by hypervisors makes it possible to host multiple VMs, each containing a guest operating system, simultaneously on the same hardware. The ability to run multiple OSs simultaneously on the same hardware has spawned entire industries, careers, and numerous practical benefits for organizations and customers alike.

Hypervisors vary by type in that they can act as an intermediary between the VMs and the host operating system, or between the VMs and the actual hardware. From the VM’s perspective, there’s no difference. Yet, from our perspective the choice between hypervisor types will influence security and performance outcomes. It is important to understand the pros and cons of the different hypervisor types, which we’ll cover next.

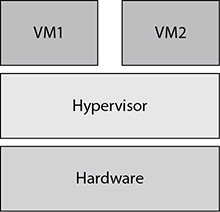

Type 1 Hypervisor

This server-based hypervisor sits between the VMs and the hardware. Type 1 hypervisors are also known as “bare-metal” hypervisors since they directly interact with hardware. Because this hypervisor does not have to communicate through a thick host operating system to reach the hardware, the physical server will have reduced hardware requirements, faster performance, and the increased capacity to run more VMs. The server’s smaller footprint significantly reduces its attack surface; therefore, security is markedly improved. Figure 13-5 shows an example of a Type 1 hypervisor.

Figure 13-5 Type 1 hypervisor

This is the primary hypervisor running on servers in data centers, forming the nexus of private cloud computing networks for countless organizations. Frequently, these data centers have excess or underused servers that undergo physical-to-virtual conversion, with the resulting VMs absorbed by the remaining servers running Type 1 hypervisors. This process is typically known as “data center consolidation.”

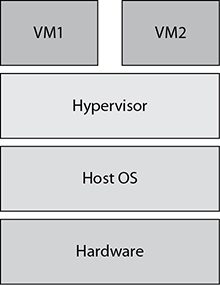

Type 2 Hypervisor

Although this type of hypervisor can run on servers, it is more appropriate for clients. Type 2 hypervisors are different from Type 1 in that they communicate with a host operating system, which in turn communicates with the hardware. As a result, the software’s larger footprint will hurt performance and increase the attack surface. Although attackers can inject malware into the VMs of both Type 1 and Type 2 hypervisors, Type 2, as shown in Figure 13-6, has greater malware potential due to it having both the VM and the host operating system at the hacker’s disposal. The extra options leave the computer vulnerable to a total takeover by the attacker. Servers should not tolerate such risks; yet, the more rudimentary needs of clients, such as hardware testing, software testing, training, and application compatibility, make it a suitable fit.

Figure 13-6 Type 2 hypervisor

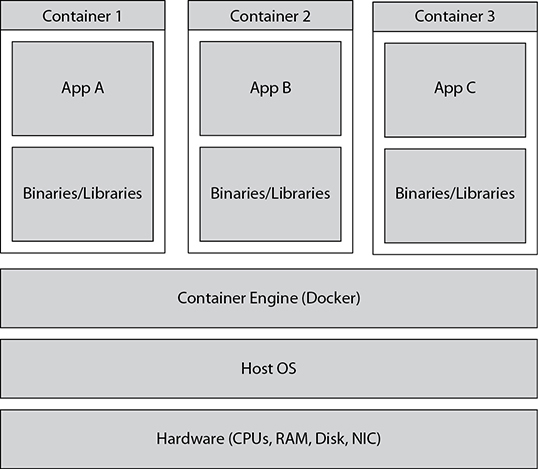

Containers

Among other things, containers do for operating systems what VMs do for computers: reduce the number of them. Rather than being an outright replacement for virtualization, containers are a different form of virtualization in which the OS itself (not the hardware) is virtualized into multiple independent OS slices. Containers store application binaries and configuration files, along with dependent software components. In other words, containers isolate apps from one another yet share the same overall operating system. This provides both isolation and performance benefits.

Microsoft recently debuted its version of the popular and vendor-neutral Docker container engine with Windows 10 and Windows Server 2016. Containers are shown in Figure 13-7.

Figure 13-7 Containers

As with any form of virtualization, the goal is typically to provide good things in small packages—only in this case good things in even smaller packages. In addition to container virtualization (also known as operating system virtualization), there are other virtualization types, including the following:

• Hardware virtualization

• Desktop virtualization

• Application virtualization

Unlike hardware virtualization, which uses a hypervisor to virtualize a physical computer into one or more virtual machines, containers typically virtualize a shared OS kernel into multiple virtualized kernel slices. Think of each slice as a mini operating system being provided to an application. These slices are presented to users and applications as if they were separate isolated operating systems—when in fact they are only portions of a single OS.

Since having multiple VMs means having multiple OSs, and containers typically share only one OS kernel, containers provide organizations with many benefits, including the following:

• They can squeeze more apps onto servers due to containers sharing an OS versus VMs requiring their own OS.

• They can significantly reduce a host’s footprint due to containers sharing one OS.

• Containers can be exported to other systems for immediate adoption.

• They can reduce host total cost of ownership due to having a smaller footprint to maintain.

Such a windfall of capabilities will extend the productivity, life, and usefulness of computers. Diminishing the footprint of the computer also adds the bonus of reducing a host’s attack surface, which will prove to be an attractive option for security professionals and systems administrators.
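The footprint argument can be made concrete with a little arithmetic. The sketch below compares the memory needed to run the same applications as VMs (one OS each) and as containers (one shared OS). The sizes are purely hypothetical round numbers chosen for illustration, and real container runtimes add some per-container overhead that is ignored here.

```python
def vm_footprint_mb(app_count: int, os_mb: int, app_mb: int) -> int:
    """Every VM carries its own guest OS in addition to the application."""
    return app_count * (os_mb + app_mb)


def container_footprint_mb(app_count: int, os_mb: int, app_mb: int) -> int:
    """Containers share one OS kernel; only the applications are duplicated."""
    return os_mb + app_count * app_mb


# Hypothetical sizes: a 2,048MB operating system and 512MB per application.
as_vms = vm_footprint_mb(app_count=10, os_mb=2048, app_mb=512)
as_containers = container_footprint_mb(app_count=10, os_mb=2048, app_mb=512)

print(f"10 apps as VMs:        {as_vms}MB")
print(f"10 apps as containers: {as_containers}MB")
```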

Another type of containerization, rather than sharing a kernel, provides a separate kernel instance to a container residing inside of a VM. This form of containerization commingles the best of hardware-based virtualization with operating system virtualization.

vTPM

A virtual Trusted Platform Module (vTPM) is a piece of software that simulates the capabilities of a physical TPM chip. This is important for VMs because, just like their physical TPM counterparts, vTPMs allow VMs to attest to their state; generate and store cryptographic keys, passwords, and certificates; and provide platform authentication to establish the VM’s overall trustworthiness. Each VM could have its own vTPM even if the host computer doesn’t have an actual TPM chip.
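Attestation of state rests on a hash-chaining idea that is easy to demonstrate. The sketch below imitates, in simplified form, how a TPM-style measurement register is "extended" with each boot component so that any change in any component changes the final value. It is not a vTPM API: the function names and component strings are hypothetical, and a real TPM also signs the register values before reporting them, which is omitted here.

```python
import hashlib


def extend(register: bytes, component: bytes) -> bytes:
    """Fold a component's hash into the register: new = H(old || H(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(register + measurement).digest()


def measure_boot(components: list[bytes]) -> bytes:
    """Start from an all-zero register and extend it with each component in order."""
    register = bytes(32)
    for component in components:
        register = extend(register, component)
    return register


# Hypothetical boot chains: one expected, one with a modified bootloader.
known_good = measure_boot([b"firmware v1", b"bootloader v1", b"kernel v1"])
tampered = measure_boot([b"firmware v1", b"bootloader MODIFIED", b"kernel v1"])

print("matches known-good state:", tampered == known_good)
```

Because each value depends on everything measured before it, a verifier only needs to compare the final register against the expected value to detect a change anywhere in the chain.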

Hyper-Converged Infrastructure (HCI)

A hyper-converged infrastructure (HCI) takes converged infrastructures (CIs) to another level. Whereas a CI aggregates vendor-specific compute, network, and storage resources into a single box or appliance, HCI essentially virtualizes CIs into a software-defined solution. Similar to using hypervisor tools to convert physical hardware into virtual instances, an HCI can use a commercial off-the-shelf (COTS) management tool to virtualize and manage these all-in-one CIs in a more flexible, agile, vendor-neutral, and efficient data center. This helps to simplify the infrastructure as well as reduce rack space, power consumption, and total cost of ownership.

The aggregation that makes HCI attractive also brings the usual security ramifications of centralization. Because HCI is an all-in-one management capability, an attacker only has to compromise the top management layer in order to breach the entire HCI. With the HCI hardware being managed as one big software whole, it becomes more difficult to individually secure each of its layers. There are several recommendations for securing an HCI, including the following:

• Implement delegation and strong access control for each HCI administrative interface to adhere to the principle of least privilege.

• Use a balanced mix of hardware and software vendor products wherever possible to avoid vendor-specific threats, exploits, and vulnerabilities compromising the entire HCI.

• Utilize a layered security approach to the HCI to protect it from malware, eavesdropping, unauthorized access, modification, and disclosure of transmitted data.

Virtual Desktop Infrastructure (VDI)

Virtual Desktop Infrastructure is the practice of hosting a desktop OS within a virtual environment on a centralized server (see Figure 13-8). Using VDI, administrators are able to migrate a user’s entire desktop, including operating system, applications, data, settings, and preferences, to a virtual machine. Although similar to other client/server computing models, VDI goes a step further in that it can often be implemented so that remote access is technology independent and, in some cases, allows access from mobile or low-power devices.

Figure 13-8 VDI

Three Models of VDI

The three main models of VDI operate as follows:

• Centralized virtual desktops: In this VDI model, all desktop instances are stored on one or more central servers. Data is typically stored on attached storage systems such as SANs or RAID subsystems. This model requires a fair amount of resources on the central servers, depending on how many virtual desktops are being supported.

• Hosted virtual desktops: In this VDI model, the virtual desktops are maintained by a service provider (usually in a subscription model). A primary goal of this model is to shift capital expenses to operating expenses and hopefully reduce overall costs at the same time.

• Remote virtual desktops: In a remote VDI environment, an image is copied to the local system and run without the need for a constant network connection to the hosting server. The local system will typically run an operating system of some sort and a hypervisor capable of supporting the downloaded image. This requires more CPU, memory, and storage on the local system because it must support the virtual desktop and the underlying support system. Remote VDI is portable and allows users to operate without constant network connectivity. In most cases, the local image must reconnect periodically to be either refreshed or replaced.

Housing the desktop environment on central servers reduces the number of assets that need securing, compared with securing assets spread across an entire organization’s computers. However, the reliance on a network or Internet connection to the VDI server can be problematic if bandwidth or connectivity should become impaired.

Terminal Services

Terminal Services (or Remote Desktop Services, as Microsoft now calls it) is quite simply a method for allowing users to access applications on a remote system across the network. Depending on the implementation, one application, multiple applications, or even the entire user interface is made available to a remote user through the network connection. Input is taken from the user at the client side (which can be a full-featured desktop system or a thin client of some sort) and processing takes place on the server side (or system hosting the application).

As with any network service, the security of a Terminal Services implementation depends on how restricted access is to the listening service and how well secured and maintained the hosting server is. A Terminal Server by its very nature needs to allow remote access to be useful. When the service is visible to the Internet and untrusted IP addresses (as it usually is), it is crucial to monitor incoming connections with intrusion detection and prevention systems (IDS/IPS) and ensure connections to the service are filtered as much as possible.

Secure Enclaves and Volumes

Whether we’re talking data or volumes that contain data, many security requirements call for the encryption of data in use. Since data-in-use encryption solutions are beginning to catch up with encryption solutions for data in transit and data in storage, organizations are increasingly able to ensure that data spends little to no time in an unencrypted state.

Much of data-in-use security focuses on protecting a system’s data from that very system. To guard against a compromised OS, secure enclaves use a separate coprocessor (also known as a secure enclave processor) from a device’s main processor to prevent the main processor from having direct or unauthenticated access to sensitive content such as cryptographic keys and biometric information. This coprocessor or secure enclave implements various cryptographic and authentication functions to ensure the user and OS are given authorized access to data.

In a sense, secure volumes are the opposite of secure enclaves, yet they serve a similar goal: securing data. Secure volumes are encrypted and hidden when not in use, and then decrypted when in use. In the end, the volume is in its most secure state when it needs to be, much like what secure enclaves do for data.

Cloud-Augmented Security Services

All over the world, organizations and customers are placing their data inside cloud computing data centers. Whether the needs include file storage, learning management systems (LMS), online meeting rooms, or virtual machine lab environments, cloud computing has a solution. Considering that such solutions are typically Internet based, what control do we have over the protection of that data? Do we have control or must we rely solely on the cloud provider’s due diligence?

Today’s cloud computing market is rife with tools that provide subscribers with various security services and controls like role-based access control for applications and data, auditing of access, strong identification and authentication services, antimalware scanning—essentially all the benefits that stem from on-premises security tools. Not to mention, many cloud providers offer security services that specifically cater to the subscriber’s cloud-hosted data.

Antimalware

Similar to using local antimalware tools for on-premises malware scans, cloud-based antimalware tools exist for the detection and eradication of malware in the cloud. Using cloud tools puts little to no burden on the client due to the tools being hosted on the cloud. For this same reason, the cloud antimalware company is also responsible for all application patching, upgrading, and maintenance requirements.

Yet, the diversity of vendors and tools will yield varying degrees of control and insight into antimalware operations. Although cloud computing tools have evolved in terms of subscriber controls and capabilities, on-premises tools are likely to yield more control and insight. There’s also the Internet connectivity, or lack thereof, which can be the difference between accessing the antimalware tools or not. Finally, cloud providers continue to attract more malware attention from hackers due to the astronomical amount of data they possess.

Vulnerability Scanning

Cloud computing environments are just as vulnerable to attack as anything else. In fact, an argument could be made that they are more vulnerable than on-premises environments due to the volume and richness of their data. Take burglary as an example. If the chances of success were equal—would a robber prefer to rob a local convenience store or a bank? They both have valuables, but banks are likely to have even more. It doesn’t necessarily make the bank more “vulnerable,” but the frequency of attacks will affect the frequency of successes just as much as the bank’s relative vulnerability to those attacks.

Organizations need to get ahead of the game with vulnerability assessments. Rather than wait for black hat hackers to perform vulnerability scans on the cloud network, cloud providers and subscribers should perform this vitally important task early on. The vulnerability scans will proactively reveal many of the vulnerabilities that hackers might exploit, giving us the chance to mitigate them beforehand. That is the primary advantage of performing vulnerability scans.

Cloud-based vulnerability scans work like traditional antimalware scans in that the scanning engine will enumerate an operating system, service, or program and then compare what it sees to a database of approved or unapproved signatures, anomalies, and heuristic-like behaviors. These vulnerability lists stem not only from the cloud vendor’s own sources but also from well-known vulnerability databases such as the Common Vulnerabilities and Exposures (CVE) list and the National Vulnerability Database (NVD).
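The enumerate-then-compare idea can be sketched in a few lines of Python. This is a simplified illustration, not how any particular scanner works: the product names, advisory identifiers, and the `Advisory` structure are all hypothetical, and real tools match on far richer data (version ranges, configuration, and behavior) drawn from feeds such as the NVD.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    identifier: str   # placeholder ID, not a real CVE number
    product: str
    fixed_in: tuple   # first version that is no longer affected


def parse_version(text: str) -> tuple:
    """Turn '2.4.10' into (2, 4, 10) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def scan(inventory: dict, advisories: list) -> list:
    """Return (product, installed version, advisory ID) for each match."""
    findings = []
    for advisory in advisories:
        installed = inventory.get(advisory.product)
        if installed is not None and parse_version(installed) < advisory.fixed_in:
            findings.append((advisory.product, installed, advisory.identifier))
    return findings


# Hypothetical data: what the scanner enumerated on one host...
inventory = {"examplehttpd": "2.4.10", "exampledb": "5.7.30"}
# ...and a tiny stand-in for a vulnerability feed.
advisories = [
    Advisory("EXAMPLE-0001", "examplehttpd", parse_version("2.4.12")),
    Advisory("EXAMPLE-0002", "exampledb", parse_version("5.7.30")),
    Advisory("EXAMPLE-0003", "examplemail", parse_version("1.0.0")),
]

findings = scan(inventory, advisories)
for product, version, identifier in findings:
    print(f"{product} {version} is affected by {identifier}")
```

Versions are parsed into tuples of integers because plain string comparison would rank "2.4.9" above "2.4.10".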

The downside to cloud-based vulnerability scanning is the reliance on the vendor to produce a tool that integrates well with other cloud-based products. Does the tool have access to a reputable vulnerability database like the CVE and the NVD? Does the tool provide strong reporting capabilities? Can it integrate with patch management solutions?

Sandboxing

Sandboxing allows for the separation of programs or files from a more generalized computing environment for testing and verification purposes. A good example of this is a disconnected sheep dip computer, which is used in highly secure environments for testing of suspicious or malicious files from external media like floppies, CDs, and flash drives before the files can be introduced to the production network. Another more common example involves the usage of virtual machines to provide isolation of files and programs from the host operating system’s point of view.

As with antimalware, vulnerability scanning, and so on, many organizations are utilizing cloud-based sandboxes for application- and file-testing purposes. The benefits of cloud sandboxing include the following:

• Ubiquitous access and availability

• Improved scalability

• SSL/TLS inspection services

• Dynamic back-end reputation database

• Cost-effective

Downsides to cloud-based sandboxing include possible restrictions within the sandbox, plus incompatibilities with other cloud or on-premises tools. Do your homework before committing to a provider.

Content Filtering

Rather than utilizing a local server for filtering web-based content, organizations can leverage the content-filtering services of a cloud provider. The rules governing the content filtering can be applied across the entire organization or controlled on a case-by-case basis. For example, a rule might block social media platforms like Facebook and Twitter while permitting LinkedIn for customer outreach purposes. In other cases, restrictions may be reduced or lifted entirely if appropriate credentials are supplied.
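As a rough illustration of how such rules might be evaluated, the Python sketch below blocks a category of sites, carves out an exception for one domain, and lifts the restriction for users in an exempt role. The category table, the role names, and the `is_allowed` function are assumptions made for this example; a real cloud filtering service would expose its own policy model.

```python
from urllib.parse import urlsplit

# Hypothetical policy data: categories are assigned by domain for simplicity.
DOMAIN_CATEGORIES = {
    "facebook.com": "social-media",
    "twitter.com": "social-media",
    "linkedin.com": "social-media",
}
BLOCKED_CATEGORIES = {"social-media"}
ALLOWED_DOMAINS = {"linkedin.com"}   # explicit exception to the category block
EXEMPT_ROLES = {"marketing"}         # stands in for "appropriate credentials"


def registered_domain(url: str) -> str:
    """Crude extraction: keep the last two labels of the hostname.

    This is wrong for suffixes such as .co.uk and is for illustration only.
    """
    host = urlsplit(url).hostname or ""
    return ".".join(host.lower().split(".")[-2:])


def is_allowed(url: str, role: str = "staff") -> bool:
    """Apply the exception first, then the role exemption, then the block list."""
    domain = registered_domain(url)
    if domain in ALLOWED_DOMAINS:
        return True
    if role in EXEMPT_ROLES:
        return True
    return DOMAIN_CATEGORIES.get(domain) not in BLOCKED_CATEGORIES


print(is_allowed("https://www.facebook.com/"))                    # blocked for staff
print(is_allowed("https://www.linkedin.com/company/example"))     # permitted exception
print(is_allowed("https://www.facebook.com/", role="marketing"))  # restriction lifted
```

The order of the checks is the design choice that matters here: the explicit exception and the role exemption are evaluated before the category block, so they take precedence over it.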

The key to this service is not just the content filtering but the fact that it is being outsourced to an Internet-based cloud computing provider. As a result, organizations will enjoy the customary cloud benefits, including scalability, ubiquitous access, patching, upgrades, and maintenance. The downside stems from the relative lack of control in securing the cloud-based application and/or the OS providing the content filtering services. Also, if there are issues with the service, organizations will have to rely on the cloud provider’s knowledge, skills, and abilities to resolve the matter. Such lack of control can prove to be difficult for IT and security professionals.

Cloud Security Broker

According to the research firm Gartner, by 2020, 60 percent of large enterprises will use a cloud access security broker. Typically, this is a cloud-based security policy environment that resides between an organization’s on-premises network and some other cloud provider’s network. It helps organizations with cloud policy enforcement, malware protection, DLP services, compliance alignment, and measuring service usage. This is particularly helpful for organizations that are uncomfortable or unfamiliar with navigating the multifaceted landscape of cloud computing. In a sense, cloud security brokers are like lawyers or investment brokers helping customers out with the complexities of legal or financial systems, respectively.

Security as a Service (SECaaS)

As organizations build up trust in cloud providers, they start relying on them for more services, in this case security services. As the name implies, SECaaS is a series of security services provided to consumers by a cloud provider. Many organizations don’t have full-time security professionals; therefore, they often place their security faith in network or systems administrators. Although highly skilled, such administrators are unlikely to be security experts. Such organizations may be better served by outsourcing security responsibilities to a managed security service provider.

Organizations can derive several benefits from SECaaS, including the following:

• Cost-effective

• Dedicated security experts

• Reduced in-house management

• Malware reputation databases

• Faster provisioning

For more information about managed security service providers, see Chapter 1.

Vulnerabilities Associated with the Commingling of Hosts with Different Security Requirements

Chances are, if you’re operating in a virtual or cloud-based environment, you’re going to have services operating at different trust levels on the same physical resources—for example, an e-mail service running alongside a blog website, which is running on the same physical platform as an e-commerce site. Each of these has a different threat profile and each will have different security requirements. If you are considering operating in this type of environment (or already are), then here are some significant potential vulnerabilities you should address:

• Resource sharing You definitely need to understand how resource usage and resource sharing are addressed by your provider. If you require a significant burst in resources at the same time other clients also need a significant burst, you may end up overloading the cloud and reducing availability for all the applications, unless your provider has taken the necessary precautions.

• Data commingling You must understand how your data is stored. Is your CRM data stored in the same database as the content of the chinchilla farmers’ forum? Are they separate databases but still in the same instance of MySQL? What steps has your provider taken to ensure data from other clients does not mingle with yours or that a breach in another client’s data store does not affect yours as well? Be sure to consider where the data is stored physically: Are your VMs using the same storage devices as other clients? Are you sharing logical unit numbers (LUNs)?

• Live VM migration Sometimes a running VM, or its storage, needs to be migrated to a different host. This can occur because of host failures, storage failures, or maintenance and upgrade work. Regardless of the reason, the VM is vulnerable during this transitory period; therefore, confidentiality, integrity, and availability controls should be implemented for the VM’s protection. If not, migrated VMs can be hijacked and redirected to attacker-controlled or other victim machines.

• Data remnants What happens when that server or your data is deleted? Are files securely deleted with contents overwritten? Does your provider or on-premises administrator just use a simple delete operation that may delete the file record but leaves partial file contents on the disk? In the case of cloud computing, chances are your servers and your data resided on the same physical drives as another organization’s servers and data. You can pretty much guarantee your provider is not degaussing drives after you cancel your service and delete your servers. So how can you make sure your data is really, really gone and that nothing remains behind when you leave?

• Network separation Another thing to closely consider is the network configuration in use at your provider. Ideally you would not be sharing a physical NIC with VMs operating in a less secure state than your VMs, but this may not be the case. You should ensure your provider is taking adequate steps to separate your network traffic from other user traffic.

• Development vs. production In a physical environment that you control, you can take great efforts to ensure that your development and production environments are not connected. How can you get this same level of assurance in a cloud environment? How can you ensure your production systems are not running alongside another organization’s development systems on the same physical resources?

• Use of encryption Encrypting data is good—as long as it’s done properly. You need to know how your provider manages encryption in a multitenant situation. Do all customers share the same encryption keys? Can you get your own unique key? How does the provider handle key storage and recovery? If your data is more sensitive than other tenants, can your provider offer a greater level of encryption and protection for your data?

• VM escape Ideally the hypervisor keeps all virtual machines separate; in other words, system A can’t talk to or interact with system B unless you want it to. As with any new technology, new attack techniques are developed, and within virtual environments “VM escape” attacks are designed to allow an attacker to break out of a virtual machine and interact with the hypervisor itself. If an attacker is successful and can interact directly with the hypervisor, they have the potential to interact with and control any of the virtual machines running on that hypervisor. Gaining access to the hypervisor puts the attacker in a unique position: because the hypervisor sits between the physical hardware and the virtual machines, the attacker could potentially bypass most (if not all) of the security controls implemented on the virtual machines.

• Privilege elevation Virtual environments do not remove all risk of privilege escalation; unfortunately, they actually create additional risk in some cases. The hypervisor sits between the physical hardware and the guest operating system. As it does so, the calls it makes between the guest OS and hardware could contain flaws that allow attackers to escalate privileges on the guest OS. For example, an older version of VMware did not correctly handle the “Trap flag” for virtual CPUs. This specific type of privilege escalation, which is tied to the virtual hardware and the hypervisor, would not be present if you were running an operating system directly on physical hardware.
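To make the data remnants question from the list above more concrete, the following Python sketch contrasts a simple delete with an overwrite-then-delete routine. The function names are invented for this example, and the approach is best effort only: on SSDs, copy-on-write file systems, snapshots, and provider-managed storage, an overwrite may never touch the physical blocks that held the original data, which is exactly why the question needs to be put to the provider.

```python
import os
import tempfile


def overwrite_in_place(path: str, chunk_size: int = 64 * 1024) -> None:
    """Overwrite every byte of the file with zeros and ask the OS to flush it."""
    remaining = os.path.getsize(path)
    with open(path, "r+b") as handle:
        while remaining > 0:
            step = min(chunk_size, remaining)
            handle.write(b"\x00" * step)
            remaining -= step
        handle.flush()
        os.fsync(handle.fileno())


def simple_delete(path: str) -> None:
    """Remove the directory entry only; the old contents may remain on disk."""
    os.remove(path)


def overwrite_then_delete(path: str) -> None:
    """Best-effort sanitization: overwrite the contents, then remove the file."""
    overwrite_in_place(path)
    os.remove(path)


# Demonstration on a throwaway file.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"customer records")
    demo_path = tmp.name

overwrite_in_place(demo_path)
with open(demo_path, "rb") as handle:
    leftover = handle.read()   # zero bytes, same length as the original data

overwrite_then_delete(demo_path)
print(leftover, os.path.exists(demo_path))
```

In a multitenant environment you rarely get this level of access to the underlying storage, so contractual assurances about sanitization, or encrypting data with a key you can later destroy, are the more realistic controls.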

Data Security Considerations

If you ask a group of cloud cynics what they don’t like about cloud computing, one of the things they’ll point out is the lack of data security. Repeat the same question to a group of cloud evangelists and they’ll likely point to the abundance of data security. So, who’s right?

The irony of cloud computing data security is that, in many ways, it is simultaneously more and less secure than on-premises computing. Whereas most organizations implement technology and security in support of their data, the cloud organization’s technology and security are the product itself. As a result, you can expect considerably more investment into these areas than from a non-cloud organization. Since the world is their customer, cloud computing providers often have several or even dozens of compliance accreditations and more servers, engineers, physical security, logical security, environmental controls, failover systems, generators, redundant ISPs, and even redundant data centers. Such investment should improve resistance toward threats. Yet, as we’ll see in the next section, you can be more resistant to threats, but that does not necessarily equate to being hacked less.

Despite cloud computing and virtualization infrastructures being some of the largest and most secure in the world, they’re victims of their own success. Many cloud computing vulnerabilities are uncovered because of the cloud’s skyrocketing popularity. The sheer abundance of data housed in cloud computing data centers makes them irresistible to attackers. Hackers are no different from fishermen: they go where the action is.

It is wise to consider the popularity of a cloud and virtualization solution because more popular products are likely to be compromised more often. As a result, you’ll have more security bulletins, websites, articles, message boards, and a larger customer base available to you to enhance your knowledge of a provider’s vulnerabilities and exploits. This is crucial for helping security practitioners decide which cloud computing provider is the best fit for an organization’s data security needs.

A common source of cloud computing and virtualization vulnerabilities is the resource-sharing model they both rely on. For the sake of cost-effectiveness, cloud providers frequently share physical and virtual resources among their customers. The cost savings get passed on to the customers, but at the expense of security and isolation. In the following sections, we discuss a few vulnerabilities common to the single-server and single-platform hosting methods.

Vulnerabilities Associated with a Single Server Hosting Multiple Data Types

You’ve just completed a major hosting effort and migrated your organization’s website, mail service, and external DNS services to a large, commercial hosting environment. Taking advantage of this outsourced cloud infrastructure should reduce costs, reduce the burden on the IT staff at your location, and free up some resources to focus on your core business activities, right? It just may, but where did your organization’s virtual machines really end up? Unless you negotiated for your own private cloud, chances are your organization’s virtual instances are running on the same physical equipment as some other organization’s virtual instances. What are those other organizations? How secure are their systems? What type of traffic will they attract? Unfortunately, outsourcing your virtual environment can carry some significant risks, and impacts to another organization’s instances may very well end up impacting yours as well. Here is a sampling of risks and impacts to consider: