CHAPTER 18

Technology Life Cycles and Security Activities

This chapter presents the following topics:

• Systems development life cycle

• Software development life cycle

• Adapting solutions

• Asset management (inventory control)

Like all things in the universe, hardware and software systems have a life cycle—a beginning, middle, and end. Considering that customers, competitors, industries, and regulations are in a constant state of change, organizations must quickly adapt to their new surroundings. Typically, new technological systems are developed or acquired as part of this adaptation. The technology life cycle refers to new technologies entering the enterprise on a regular basis—in limited use at first—followed by widespread adoption when it makes business sense. Eventually, technologies are retired as new ones take their place. Across this life cycle, security functionality must be maintained, and changes in the technology environment force potential changes in the security environment.

Software development life cycles are increasingly important and mandate the incorporation of security into all phases of development. We’ve seen a significant uptick in software life cycle documentation requirements, code testing, and the need for software to adapt to the ever-changing threat landscape. This chapter covers the systems development life cycle, software development life cycle, various testing methods and documentation sources, and the adaptation of solutions to keep our systems and software safe.

Systems Development Life Cycle

The systems development life cycle (SDLC) is the more general of the two life cycles covered in this chapter. It refers to the process of planning, creating, testing, and deploying hardware or software systems. A single project may involve hardware only, software only, or both.

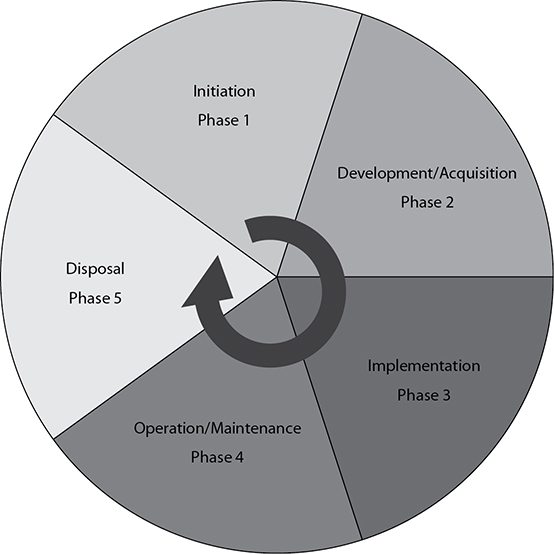

The National Institute of Standards and Technology (NIST) has published a special publication on the topic of systems development life cycles: NIST SP 800-64, “Security Considerations in the Information Systems Development Life Cycle.” This document is designed to assist implementers in the integration of security steps into their existing development processes. The NIST process consists of five phases: initiation, development/acquisition, implementation, operation/maintenance, and disposal (see Table 18-1).

Table 18-1 Information Systems Development Life Cycle Phases

As indicated in the table, the initiation phase is where systems begin. This is where needs and requirements are determined and where system planning and feasibility studies are conducted. Before a system can be architected and designed, a full set of requirements needs to be developed, both technical and operational. In addition to those functionality requirements, security requirements must also be defined here.

The development/acquisition phase is when the requirements from the initiation phase are turned into functional designs. Whether a system is built or purchased, there is a need to determine what security controls are required and how they should be employed to ensure the system meets the requirements.

In the implementation phase, these requirements are met with actual security controls once executive management has given its approval.

Once a system has been implemented, the operation/maintenance phase periodically verifies that the system’s security posture continues to comply with the initial security requirements. This is done through a series of security audits. During the operation of a system, it will be upgraded and changed as software gets updated and hardware capabilities improve. These incremental changes, governed by the Change Control Board (CCB) process, keep the system aligned with ever-changing business requirements as well.

The disposal phase involves the removal of the system after it loses its value to the organization. It ensures the secure destruction of information, including data and cryptographic keys, and possibly the physical hardware and storage devices on which the information was saved. See Figure 18-1 for a visual depiction of the systems development life cycle.

Figure 18-1 Systems development life cycle

Requirements

It’s important to know in advance which business needs the proposed system must fulfill in order to rationalize implementation. To formalize this understanding, a useful SDLC step is the creation of a requirements definition document. This document will outline all system requirements and why they are needed. It discusses the needed features, functions, and any customizations that should be implemented. Certain requirements will be prioritized over others in order to better align the proposed system with business objectives. The requirements help us determine the overall capabilities that the system must have to meet the organization’s needs.

Acquisition

Ironically, not all systems development life cycles involve development. In many instances, organizations will elect to purchase systems or applications from a vendor as opposed to developing an in-house solution. Other than this switching of the second phase of the SDLC from development to acquisition, the process remains much the same. Implementers must still evaluate the acquired solution’s ability to fulfill the organization’s security requirements prior to purchasing, which will be discussed in the next section.

Test and Evaluation

Regardless of whether the new technology was developed or acquired, organizations must test and evaluate the technology to identify any shortcomings or vulnerabilities in its functionality, security, and performance. This will help determine if the product conforms to organizational expectations. Such tests may include black-box and white-box testing that attempt to exploit vulnerabilities, demonstrating how attackers might do the same and, more importantly, allowing the organization to discover and mitigate the vulnerabilities before an attack occurs.

Commissioning/Decommissioning

Commissioning a new, modified, or upgraded system marks the implementation of the technology into the production environment. Conversely, decommissioning is the retirement of technology from the environment. At no point in the system’s life cycle is a technology more vulnerable than when it is first implemented. Security controls must be implemented promptly, yet the nature of those controls will depend on whether the newly integrated system is hardware or software based, a developed system versus an acquired one, or a network-based system as opposed to a host-based system.

On the flip side, systems will be periodically decommissioned or disposed of as part of normal operations. Such decommissioning of systems comes with legal and security implications. If the device stores data, the following questions are in order:

• Has all the data been removed from the system?

• Is the decommissioning of the system documented?

• If a vendor is given access to repair a system, how is the vendor access managed with respect to data security?

• If the vendor has administrative permissions on a system, how is their activity logged, managed, and regulated?

Operational Activities

Systems operate within the context of other systems. Whether you’re implementing something as simple as new functionality in an existing system or putting in place a completely new system, security issues arise from the interaction of the system with the rest of the enterprise systems. Sometimes the risk is in the new system only, and sometimes a new system can introduce risk elsewhere in the enterprise. To ensure that the desired risk profiles of the enterprise are maintained, it is important to perform specific security-related activities associated with determining and managing the risk.

The introduction of new systems or components can affect the security policy of an organization. Periodic analysis and maintenance of the security policy are necessary to ensure that the organization is cognizant of the desired risk profiles associated with operations. To understand the impact of a system on the risk profile, a security impact analysis can be performed. This examines the impact on the desired levels of security attributes—typically confidentiality, integrity, and availability—due to the system’s implementation. The security impact analysis can also examine the issues of configuration management and patch management activities and their impact on risk profiles. A privacy impact analysis can perform the same function toward privacy impacts.

Threat modeling and vulnerability analysis can provide information as to the levels and causes of risk associated with a system. These tools provide the means to examine, characterize, and communicate the elements of risk in a system. Security awareness and training assist in enabling the people component of security, communicating to users the security expectations, risks, and implications that can result from their specific interactions.

Monitoring

The systems development life cycle doesn’t end once a product is operational. We must continuously monitor the system’s operational state in order to identify performance and usage patterns, in addition to any signs of malicious activities. An important part of this monitoring is the capturing of a baseline, especially given the pristine condition of a system that was just freshly integrated into the environment. The baseline will serve as the measuring stick for future performance comparisons: when performance drifts from the baseline, the cause can be investigated and addressed to bring the system back into alignment.
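The baseline comparison described above can be sketched in a few lines. This is a minimal illustration, not a monitoring product: the function names, the sample CPU readings, and the three-standard-deviation tolerance are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def build_baseline(samples):
    """Summarize baseline measurements as (mean, standard deviation)."""
    return mean(samples), stdev(samples)

def deviates_from_baseline(value, baseline, tolerance=3.0):
    """Return True when value is more than `tolerance` standard deviations from the mean."""
    average, spread = baseline
    if spread == 0:
        return value != average
    return abs(value - average) > tolerance * spread

# Hypothetical CPU utilization percentages captured right after commissioning.
baseline_cpu = build_baseline([21.0, 19.5, 22.3, 20.1, 20.8])

# Later readings are compared against the stored baseline.
for reading in (21.4, 58.9):
    status = "investigate" if deviates_from_baseline(reading, baseline_cpu) else "normal"
    print(f"cpu={reading:.1f}% -> {status}")
```

In practice the tolerance and the metrics tracked would be set by the organization's monitoring policy rather than hard-coded.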

Intentional changes to the system are inevitable; therefore, a change management process may be invoked to ensure proposed changes are formally requested, approved, and scheduled for implementation. A security assessment may also be necessary during this stage to identify and mitigate any vulnerabilities that were missed during earlier life cycle phases.

Maintenance

Every system requires maintenance as part of its life cycle. Both hardware and software require maintenance, and these activities can have an impact on security. Maintenance activities can be both on a specific system and on the environment where the system resides. The maintenance of antivirus and antimalware programs on a server may be considered separate from the systems running on the server, yet this is an important security activity. There have been cases of antivirus/antimalware updates resulting in the disabling of critical system components because of false positive issues. There have been cases of operating system patches that have resulted in the disabling of system functionality that a specific system was counting on to properly function.

The policy should not be to skip these critical maintenance functions, but rather to perform them in a documented manner that permits recovery from unknown effects. The policy should specify timeframes for applying updates, both in the daily operational cycle and with respect to time, from release to implementation. These are important not just for antivirus and antimalware updates, but also operating system and application updates and patches. When new hardware is employed, or existing hardware is replaced as a form of maintenance, a security analysis of the changes needs to be performed to understand the risks and the plan to recover from maintenance issues.

A solid maintenance program will completely document maintenance activities, both completed and scheduled, as well as who performed the maintenance, the results of the maintenance, and any noted concerns during the maintenance process. This documented log will assist in future planning activities as well as diagnosing problems associated with the actual maintenance.

Configuration and Change Management

Configuration management is the methodical process of managing configuration changes to a system throughout its life cycle. Carefully managing configurations reduces the chance that the system’s security posture will be accidentally diminished. Sustaining these configurations is important; therefore, detailed records are kept to permit validation that the system’s trusted state hasn’t been altered, or was only altered under authorized conditions.
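One way to validate that a trusted state has not been altered is to compare a fingerprint of the current configuration against the approved record. The sketch below assumes the configuration can be represented as a simple dictionary; the setting names and values are hypothetical.

```python
import hashlib
import json

def fingerprint(config: dict) -> str:
    """Hash a configuration in canonical form so key order does not matter."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def changed_keys(approved: dict, current: dict) -> list:
    """List the settings whose values differ from the approved record."""
    keys = set(approved) | set(current)
    return sorted(k for k in keys if approved.get(k) != current.get(k))

# Hypothetical approved settings and the settings found during an audit.
approved = {"ssh_root_login": "no", "tls_min_version": "1.2", "audit_log": "on"}
current = {"ssh_root_login": "yes", "tls_min_version": "1.2", "audit_log": "on"}

if fingerprint(current) != fingerprint(approved):
    print("Configuration drift detected:", changed_keys(approved, current))
```

Storing only the fingerprint keeps the audit record small, while the key-by-key comparison shows what actually drifted.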

If the system in question is a server, configuration management will result in the following outcomes:

• Enhanced provisioning Server deployments can be automated via a configuration management tool such as Microsoft System Center Configuration Manager. This deployment will include a fully configured OS, applications, drivers, and updates without user intervention.

• Reduced outages Using intelligent configuration management tools will help sustain configuration integrity and instill positive changes while preventing negative changes, which will result in fewer outages and security breaches.

• Cost-effective With IT automation resulting in faster deployments, greater consistency of configurations, increased reliability, and more efficient recoveries from failures, cost reductions are achieved at multiple levels.

Change management is a formalized process by which all changes to a system are planned, tested, documented, and managed in a positive manner. A key element of each change plan is a back-out process that restores a system to its previous operating condition in the event of an issue. Change management is an enterprise-wide function; therefore, changes are not performed in a vacuum, but under the guidance of all stakeholders.

Change management activities are frequently managed through a CCB process, where a representative group of stakeholders approves change management plans. The CCB ensures that proposed changes have the necessary plans drafted before implementation, that the changes scheduled do not conflict with each other, and that the change process itself is managed, including testing and post-change back-out if needed.

As an example, the IT department installs a new network printer at a branch office. The printer is statically configured with an IP address that was not already assigned by the DHCP server. Moments later, a branch office manager attending a video conference session is suddenly disconnected. It is soon determined that the IT technician configured the printer with the same IP address as the video conferencing equipment. Had the network printer implementation gone through a change management process, it is very likely that the proposed IP address for the printer would have been denied due to the conflict it would have caused with the video conferencing equipment.
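The kind of check a change review could apply to the printer request can be sketched as follows. The address inventory, device names, and DHCP pool boundaries are hypothetical values invented for illustration.

```python
import ipaddress

# Hypothetical inventory of statically assigned addresses at the branch office.
STATIC_ASSIGNMENTS = {
    "video-conf-01": ipaddress.ip_address("10.20.30.50"),
    "badge-reader-01": ipaddress.ip_address("10.20.30.51"),
}

# Hypothetical first and last addresses handed out by the DHCP server.
DHCP_POOL = (ipaddress.ip_address("10.20.30.100"), ipaddress.ip_address("10.20.30.200"))

def review_static_ip(requested: str) -> list:
    """Return the reasons a requested static address should be rejected (empty if none)."""
    address = ipaddress.ip_address(requested)
    problems = []
    for device, assigned in STATIC_ASSIGNMENTS.items():
        if assigned == address:
            problems.append(f"already assigned to {device}")
    if DHCP_POOL[0] <= address <= DHCP_POOL[1]:
        problems.append("falls inside the DHCP pool")
    return problems

print(review_static_ip("10.20.30.50"))  # conflicts with the video conferencing unit
print(review_static_ip("10.20.30.60"))  # no conflict found in this inventory
```

A check like this is only as good as the inventory behind it, which is one reason asset records and change management are usually maintained together.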

Change management is an enterprise-level process designed to control changes and preserve system stability. It should be governed by a change management policy and implemented via a series of change management procedures. Anyone desiring a change should have a checklist to complete to ensure that the proper approvals, tests, and operational conditions exist prior to implementing a change. The process is driven by the CCB, and the typical set of change management procedures will include the following:

• Change management process

• Documentation required for a change request

• Documentation required for actual change, including back-out

• Training requirements and documentation associated with a proposed change
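The checklist idea can be illustrated with a small record that reports what is still missing before a change may proceed. The field names below are illustrative assumptions, not a standard change request format.

```python
from dataclasses import dataclass

@dataclass
class ChangeRequest:
    """Hypothetical change request record; field names are illustrative."""
    summary: str
    ccb_approved: bool = False
    tested_in_staging: bool = False
    backout_plan: str = ""
    training_notes: str = ""

def missing_items(request: ChangeRequest) -> list:
    """Return the checklist items that are not yet satisfied."""
    checks = [
        (request.ccb_approved, "CCB approval"),
        (request.tested_in_staging, "pre-change testing"),
        (bool(request.backout_plan.strip()), "back-out plan"),
        (bool(request.training_notes.strip()), "training documentation"),
    ]
    return [label for satisfied, label in checks if not satisfied]

request = ChangeRequest(summary="Install network printer at branch office", ccb_approved=True)
print(missing_items(request))
```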

Asset Disposal

Asset disposal refers to the organizational process of discarding assets when they are no longer needed. All system assets, whether software based or hardware based, eventually reach the end of their usefulness to the organization. If the asset to be discarded is an acquired or developed application, the organization will likely eradicate the data from the storage device. There are several ways to sanitize storage media:

• Erasing A software technique that overwrites every addressable area of the storage media. NIST SP 800-88 indicates that a single overwrite pass is typically sufficient for modern magnetic media, yet some government or organizational policies call for three or more passes. Overwriting balances strong sanitization against media destruction. This permits the recirculation of the drive back into the organization, or its donation to another company or group. Companies frequently donate computers and storage media to schools or libraries.

• Degaussing Involves demagnetizing the drive media, which is generally considered one of the most powerful sanitization methods. However, it will likely result in destroying the drive media as well. This is a desired outcome for drives that should be thrown out anyway.

• Shredding Uses a hard drive shredder that shreds the drive into many pieces. Shredded drives may undergo a second pass to reduce the fragment size further.

• Disintegrating A disintegrator uses knives to disintegrate drives into fragments even smaller than those from a shredder.

• Melting Completely eradicates a drive through exposure to a molten vat, battery acids, or another type of acid.
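To make the overwriting approach concrete, the following sketch overwrites a single file in place with random bytes. It is an illustration only: the function name and parameters are assumptions, and file-level overwriting does not guarantee sanitization on SSDs, journaling file systems, or media with remapped sectors, where dedicated sanitization tools or device-level commands are generally needed.

```python
import os
import tempfile

def overwrite_file(path: str, passes: int = 1, chunk_size: int = 1024 * 1024) -> int:
    """Overwrite a file's contents in place with random bytes; return the file size."""
    size = os.path.getsize(path)
    with open(path, "r+b") as handle:
        for _ in range(passes):
            handle.seek(0)
            remaining = size
            while remaining > 0:
                block = os.urandom(min(chunk_size, remaining))
                handle.write(block)
                remaining -= len(block)
            handle.flush()
            os.fsync(handle.fileno())  # ask the OS to push the data to the device
    return size

# Demonstration on a throwaway file.
with tempfile.NamedTemporaryFile(delete=False) as scratch:
    scratch.write(b"confidential payroll data")
    scratch_path = scratch.name

overwrite_file(scratch_path, passes=1)
os.remove(scratch_path)
```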

Asset/Object Reuse

Eventually, all assets will require disposal, yet some are still viable for recirculation back into the organization’s inventory. Before reintegrating the asset, we should first identify the asset’s current purpose and information content. If the asset will be provided to someone with a similar role or usage requirement, the asset may require little to no data wiping or restructuring. If the asset contains sensitive materials, we’ll likely have to erase or overwrite the drive prior to reprovisioning it to the user from a disk image or factory reset.

Software Development Life Cycle

Considered a subset of the systems development life cycle—as well as used interchangeably with it on occasion—the software development life cycle (SDLC) represents the various processes and procedures employed to develop software. It encompasses a detailed plan for not only development but also the replacement or improvement of the software. Software is complicated to develop; therefore, a series of steps are needed to guide the development through various milestones. Since SDLC focuses on software development as opposed to the more generalized systems development, the SDLC’s steps will guide the development of the software from planning and designing to testing, maintenance, and even disposal.

Given the variety of popular SDLC models such as Waterfall, Agile, and Spiral, the SDLC steps can vary a bit. One thing all of these development strategies have in common is the goal of developing great software in an affordable and efficient way. Shown next is the order of steps that roughly captures the essence of any SDLC model. Mind you, the exact process will vary based on the software development methodology chosen:

1. Requirements gathering

2. Design

3. Development

4. Testing

5. Operations and maintenance

Requirements Gathering Phase

The requirements gathering phase of a software development life cycle is basically the brainstorming of the who, the what, and the why of the project. Why are we developing the software? What will the software do? Who will use the software? In addition to answering these questions, software engineers and business stakeholders will define the scope of the project. They will consider expected costs, project scheduling, required human resources, hardware and software requirements, and capacity planning. Change management should also weigh in to formalize the request and proposal for the software.

Security requirements must be defined for the software—for example, the exact confidentiality, integrity, and availability requirements as per the CIA scoring system talked about in Chapter 3. Since software development is a major change, a risk assessment should be conducted to identify any risks, threats, or vulnerabilities that could derail the feasibility and cost/benefits of the project. Also, consider performing a privacy risk assessment to determine data sensitivity levels for the data being generated or consumed. Compliance laws may impart specific security and privacy requirements on the software and its data. Such requirements may involve certain physical security, authentication, encryption, logging, and data disposal processes.

Design Phase

The requirements defined in the previous phase will be used as input for the design phase. Although we’re still in the “theoretical” stage of software development, the design phase shifts the focus from one largely about brainstorming both the organizational needs and their obstacles, to a phase focused on addressing said needs and obstacles. In other words, what design elements and protections must the software include to address the requirements? A good design should “connect the dots” by mapping the core behavior of the software directly to the software goals, security, and privacy requirements.

As with the requirements gathering phase, the information gleaned from these models will further shape the design of the software.

In addition to software functional requirements, security and privacy requirements must also be addressed during the design phase. We’ll first want to determine the software’s attack surface to identify the areas that can be attacked or damaged, in addition to performing threat modeling, which examines the threats and their various methods of attacking the software. It should be hoped that the methods of addressing these various risk factors are not overly costly; otherwise, the software may be deemed impractical to implement.

Development Phase

The development phase transitions the SDLC away from the theoretical phases to actual development of the proposed software. This development will utilize all the information gathered from the previous phases to ensure the requirements guide each phase of development. The design phase saw us mapping the software’s behaviors to requirements; therefore, each of those mapped behaviors will serve as independent development milestones or “deliverables” that steer the development piece-by-piece. Increasingly important today is the implementation of secure coding practices, which include manual and automated code review processes, vulnerability assessments from scanning tools, secure coding standards from groups like OWASP and CERT, plus input validation and bounds checking.

Testing Phase

After the software is developed, the testing phase begins with a series of testing processes in order to identify, resolve, and reassess software until it is fit for use. There are numerous testing approaches, testing levels, and testing types that can be employed on software. Examples of testing approaches include static and dynamic testing. Static testing takes place at various points during development, before the code actually runs, whereas dynamic testing takes place while the code is running. Testing levels include unit testing, where individual components are tested, and integration testing, which tests how components work together. Testing types include acceptance testing, where code is verified to meet requirements, and regression testing, which retests software after modifications are made.
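The difference between unit, integration, and regression tests can be shown with a small example. The two functions under test are hypothetical, and the "regression" case assumes a past defect in which blank usernames were accepted.

```python
import unittest

def normalize_username(raw: str) -> str:
    """Hypothetical component: trim whitespace and lowercase a username."""
    cleaned = raw.strip().lower()
    if not cleaned:
        raise ValueError("username must not be empty")
    return cleaned

def register_user(store: dict, raw: str) -> str:
    """Hypothetical component that depends on normalize_username."""
    name = normalize_username(raw)
    if name in store:
        raise KeyError(f"{name} already registered")
    store[name] = {"active": True}
    return name

class UsernameTests(unittest.TestCase):
    def test_unit_normalizes_case_and_whitespace(self):
        # Unit test: one component in isolation.
        self.assertEqual(normalize_username("  Alice "), "alice")

    def test_integration_registration_uses_normalized_name(self):
        # Integration test: two components working together.
        store = {}
        register_user(store, "Bob")
        with self.assertRaises(KeyError):
            register_user(store, " bob ")

    def test_regression_blank_input_is_rejected(self):
        # Regression test: rerun after every change to confirm an old defect stays fixed.
        with self.assertRaises(ValueError):
            normalize_username("   ")

suite = unittest.defaultTestLoader.loadTestsFromTestCase(UsernameTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```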

Operations and Maintenance Phase

The final stage of the SDLC sees the software deployed into the production environment. From here on out we’ll be performing continuous monitoring to see how it performs under daily workloads, identify any functional and security issues, and either reconfigure the software or develop software patches to address the discovered issues.

Application Security Frameworks

Secure application development is not an easy task. There are too many issues across too many platforms to expect a person to get it all correct every time. The solution is to adopt an application-level security framework and let the designers and developers spend their resources on designing and developing the desired functionality.

There are several approaches to implementing an application security framework—from homegrown to industry supported to commercial solutions. Numerous resources are available from standard libraries to complete frameworks available for integration into an enterprise solution. The key element is to decide on a path, document the path, train the development team as to the path, and then hold the design and development team’s feet to the fire with respect to staying on a specific path.

Application developers rely on software development frameworks to solve a lot of fundamental problems in their application development efforts. To reduce risk via the development process, we need to use application frameworks and libraries that solve their problems in a secure way. The application framework should protect developers from SQL injection and other injection attacks; it should provide strong session management, including cross-site request forgery protection and auto-escaping protection against cross-site scripting. Through the use of the application framework, developers would be protected from making mistakes that are prevalent in web applications.
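As an illustration of the injection protection described above, the sketch below contrasts a query built by string concatenation with a parameterized query, using Python's standard `sqlite3` module. The table, rows, and function names are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "user")])

def find_user_unsafe(name):
    # Vulnerable: attacker-controlled text becomes part of the SQL statement.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(name):
    # The driver binds the value as data, so it cannot change the query structure.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()

payload = "nobody' OR '1'='1"
print("unsafe rows returned:", len(find_user_unsafe(payload)))
print("safe rows returned:", len(find_user_safe(payload)))
```

With the injected payload, the concatenated query matches every row in the table, while the parameterized query treats the payload as an ordinary (nonexistent) username.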

Software Assurance

Software assurance is a process of providing guarantees that acquired or developed software is fit for use and meets prescribed security requirements. In doing so, we can be confident that the software will be relatively devoid of vulnerabilities at launch and throughout its life cycle. Software assurance processes are frequently implemented in mission-critical industries, including national security, financial, healthcare, aviation, and more. There are various ways to implement software assurance, including the following:

• Auditing and logging of the software

• Standard libraries such as cryptographic ciphers

• Industry-accepted approaches such as ISO 27000 series, ITIL, PCI DSS, and SAFECode

The next several topics will go into more detail on software assurance methods.

Standard Libraries

Modern web applications are complex programs with many difficult challenges related to security. Ensuring technically challenging functionality such as authentication, authorization, and encryption can take a toll on designers, and these have been areas that are prime for errors. One method of reducing development time and improving code quality and security is through the use of vetted library functions for these complex areas. A long-standing policy shared among security professionals is, “Thou shall not roll your own crypto,” which speaks to the difficulty in properly implementing cryptographic routines in a secure and correct fashion. Standard libraries with vetted calls to handle these complex functions exist and should be employed as part of a secure development process.
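As a brief illustration of relying on vetted library routines, the sketch below stores and verifies a password using only functions from Python's standard library (`hashlib.pbkdf2_hmac`, `secrets.token_bytes`, and `hmac.compare_digest`). The iteration count is an assumed value for the example and should be set according to current guidance.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 200_000  # Assumed work factor for illustration; tune to current guidance.

def hash_password(password: str):
    """Derive a salted hash with the library's PBKDF2 implementation."""
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest

def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)

salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))
print(verify_password("wrong guess", salt, stored))
```

Nothing here implements a cipher or hash by hand; the salt generation, key derivation, and timing-safe comparison are all delegated to library code.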

Standard libraries can perform numerous functions. For instance, in the C language, there are libraries full of functions to enable more efficient coding. Understanding the security implications of libraries and functions is essential because they are incorporated into the code when compiled. In languages such as C, certain functions can be used in an unsafe fashion. An example is the strcpy() function, which copies a string without checking to see if the size of the source fits in the destination, thus creating a potential buffer-overflow condition. The rationale is that this is done for speed, because not all string copies need to be length verified, and the function strncpy() and other custom functions should be used where length is not guaranteed. The challenge is in getting coders to use the correct functions in the correct place, recognizing the security issues that might occur.

Industry-Accepted Approaches

The information security industry is full of accepted approaches to securing information in the enterprise. From standards such as the ISO 27000 series, to approaches such as IT Infrastructure Library (ITIL), to contractual elements such as PCI DSS, each of these brings various constructs to the enterprise. Also, each has an impact on application development because it frames a set of security requirements.

A more direct approach to an industry-accepted best practices application can be found through the industry group Software Assurance Forum for Excellence in Code (SAFECode, www.safecode.org). SAFECode is an industry consortium with a mission to share best practices that have worked in major organizations.

Web Services Security (WS-Security)

SOAP is a protocol that employs XML, allowing web services to send and receive structured information. SOAP by itself provides no security features. Web Services Security (WS-Security) can provide authentication, integrity, confidentiality, and nonrepudiation for web services using SOAP. However, WS-Security is just a collection of security mechanisms for signing, encrypting, and authenticating SOAP messages. Merely using WS-Security does not guarantee security.

Authentication is critical and should be performed in almost all circumstances. Simple authentication can be achieved with a UsernameToken carrying a username and password. Passwords and usernames should always be encrypted in transit. Furthermore, always use PasswordDigest in preference to PasswordText to avoid cleartext passwords. Authentication of one or both parties can be achieved by using X.509 certificates. Authentication is accomplished by including an X.509 certificate and a piece of information signed with the private key that corresponds to the certificate.
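For reference, the WS-Security UsernameToken Profile defines the PasswordDigest value as Base64(SHA-1(nonce + created + password)). The sketch below computes that value; the function name and the sample password are assumptions, and a real client would also place the nonce and creation timestamp in the token alongside the digest.

```python
import base64
import hashlib
import os
from datetime import datetime, timezone

def password_digest(nonce: bytes, created: str, password: str) -> str:
    """Compute Base64(SHA-1(nonce + created + password))."""
    material = nonce + created.encode("utf-8") + password.encode("utf-8")
    return base64.b64encode(hashlib.sha1(material).digest()).decode("ascii")

# A fresh nonce and timestamp for each message help the receiver detect replays.
nonce = os.urandom(16)
created = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
digest = password_digest(nonce, created, "example-password")

print("Nonce:   ", base64.b64encode(nonce).decode("ascii"))
print("Created: ", created)
print("Digest:  ", digest)
```

The digest keeps the cleartext password off the wire, but it is not a substitute for transport or message encryption.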

WS-Security is very flexible and allows the encryption and signing of the underlying XML document, or only parts of it. This is useful because it allows the generation of a single message with different portions readable by different parties. If possible, always sign and encrypt the entire underlying XML document. Encryption should not be applied without a signature, if possible, because encrypted information can still be modified or replayed. The order of signing and encrypting is also important. Generally, the best protection is achieved by signing the message and then encrypting the message and the signature. Stronger encryption algorithms such as AES should be used instead of older algorithms such as 3DES. Do not transmit symmetric keys over the network, if possible. If transmission of symmetric keys is required, always encrypt the symmetric key with the recipient’s public key and be sure to sign the message.

Forbidden Coding Techniques

CASP+ practitioners are not likely to be expert programmers, yet they’re expected to be capable of warning software developers about poor coding techniques. This section lists some of the forbidden coding techniques regarding unacceptable developer and application behaviors.

• Integrating plaintext passwords or keys into source code

• Using absolute values in file paths, which reduces code flexibility

• Lack of cryptography at rest, in transit, and in use

• Error messages that are too verbose or useless

• Single-factor authentication and plaintext delivery of credentials

• Applications requiring administrative privileges and generous access to the Windows Registry

• Utilizing “self-made” cryptographic ciphers

• Code typos

• Long code with many possible functions

• Unnecessary time spent optimizing less-important code

• Applications making unnecessary connections on unnecessary ports

• Lack of proper bounds checking and input validation

• Absence of parameterized APIs

• Code that doesn’t incorporate the principle of least privilege
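Two of the items above, hard-coded secrets and missing input validation, have straightforward alternatives that can be sketched briefly. The environment variable name `APP_API_KEY` and both function names are hypothetical.

```python
import os

def load_api_key(env_name: str = "APP_API_KEY") -> str:
    """Read a secret from the environment instead of embedding it in source code."""
    key = os.environ.get(env_name)
    if not key:
        raise RuntimeError(f"{env_name} is not set")
    return key

def parse_port(text: str) -> int:
    """Validate untrusted input: digits only, then a bounds check."""
    if not (text.isascii() and text.isdigit()):
        raise ValueError("port must contain only digits")
    port = int(text)
    if not 1 <= port <= 65535:
        raise ValueError("port must be between 1 and 65535")
    return port

print(parse_port("443"))
for bad in ("70000", "22; rm -rf /", ""):
    try:
        parse_port(bad)
    except ValueError as error:
        print(f"rejected {bad!r}: {error}")
```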

NX/XN Bit Use

The NX (no-execute) bit allows the CPU to mark certain areas of memory, such as the stack and heap, as data-only so that any code placed there cannot be executed. If an attacker injects malicious code into one of these regions, for example through a buffer overflow, the processor refuses to run it. This will help protect the computer from certain malicious code attacks. XN (execute never) is the equivalent feature on ARM CPUs.

ASLR Use

Another memory security technique available to us is called address space layout randomization (ASLR). As the name implies, ASLR involves the operating system randomizing the memory locations of various portions of an application (such as the executable, libraries, stack, and heap) in order to frustrate an attacker’s attempt to predict the target address for a buffer overflow exploit. Most modern operating systems support ASLR, including Windows Vista+, macOS 10.5+, Linux kernel version 2.6.12+, iOS 4.3+, Android 4+, plus a few other Unix varieties.
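On Linux, the current ASLR mode is exposed through the `/proc/sys/kernel/randomize_va_space` setting, where 0 means disabled, 1 randomizes the stack, mmap base, and VDSO, and 2 additionally randomizes the heap. The sketch below reads and interprets that value; the function names are assumptions, and on other operating systems the file is absent and the function simply returns `None`.

```python
from pathlib import Path

ASLR_MODES = {
    0: "disabled",
    1: "partial (stack, mmap base, VDSO)",
    2: "full (also randomizes the heap)",
}

def describe_aslr(raw: str) -> str:
    """Translate the raw setting into a readable description."""
    return ASLR_MODES.get(int(raw.strip()), "unknown")

def current_aslr_mode(path: str = "/proc/sys/kernel/randomize_va_space"):
    """Return the description for this host, or None if the setting is unavailable."""
    setting = Path(path)
    if not setting.exists():
        return None
    return describe_aslr(setting.read_text())

print("ASLR mode on this host:", current_aslr_mode())
```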

Code Quality

Code quality refers to the implementation of numerous coding best practices that are not officially defined, yet are generally accepted by the coding community. Given its subjectivity, here are characteristics generally associated with code quality:

• Clarity Simply written and easy to comprehend.

• Consistency Closely aligns with functional and security requirements.

• Dependencies Limits the number of outside dependencies.

• Efficient Requires minimal resources and time for task completion.

• Extensibility Accommodates future changes and growth.

• Maintainable Easy to make changes.

• Standardized Uses well-known and standardized techniques.

• Well-documented Documented for current and future developers to understand components.

• Well-tested Constantly subjected to testing to ensure bugs are identified early and often to ensure the product is fit for use.

Code Analyzers

Code analysis involves the various techniques of reviewing and analyzing code. Automated and manual code analysis methods can be employed on static and dynamic code types—in addition to the use of fuzzing tools, which “poke” at code to see how it responds. By better understanding our code, we can discover and mitigate functional and security issues, hopefully before hackers exploit them. The next few topics provide deeper coverage of static, dynamic, and fuzzing analysis techniques.

Fuzzer Previously discussed at length in Chapters 8 and 10, fuzzers take the approach of analyzing code in a more offensive manner by injecting unusual or malicious input into an application to see how it responds. The response generated by the application is then analyzed for signs of vulnerabilities. Fuzzing is commonly implemented via tools such as OWASP’s JBroFuzz and the WSFuzzer tools, in addition to other industry regulars such as Peach Fuzzer, w3af, skipfish, and wfuzz. Given their accessibility and content value, web applications are more frequently targeted for fuzzing than other Internet systems.

Static Static code analysis is the process of reviewing code when it’s not running—hence, the term “static.” Since the code is in a non-executed and motionless state, it provides enhanced predictability. This code can be analyzed via manual review processes that are formal (line by line reviewing by multiple developers) or informal (lightweight and brief) in nature, in addition to automated methods to expedite both the speed and uniformity of code analysis. In either case, the goal is to identify any security or functionality issues.
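To make the idea of automated static analysis concrete, here is a minimal sketch that inspects Python source without ever running it. It uses the standard ast module to flag string literals assigned to variables whose names suggest a credential. The list of suspicious names and the sample source are assumptions for illustration; commercial and open source analyzers apply far more rules than this.

```python
import ast

# Hypothetical naming hints that suggest a variable holds a credential.
SUSPICIOUS_NAMES = ("password", "passwd", "secret", "api_key")


def find_hardcoded_secrets(source: str):
    """Return sorted (line_number, variable_name) pairs for string literals
    assigned to variables whose names look like credentials."""
    findings = []
    tree = ast.parse(source)  # parsing only; the code is never executed
    for node in ast.walk(tree):
        if not isinstance(node, ast.Assign):
            continue
        value = node.value
        if not (isinstance(value, ast.Constant) and isinstance(value.value, str)):
            continue
        for target in node.targets:
            if isinstance(target, ast.Name) and any(
                hint in target.id.lower() for hint in SUSPICIOUS_NAMES
            ):
                findings.append((node.lineno, target.id))
    return sorted(findings)


SAMPLE = '''
import os
db_password = "hunter2"
timeout = 30
api_key = os.environ.get("API_KEY")
'''

print(find_hardcoded_secrets(SAMPLE))
```

Only the db_password assignment is reported; api_key is read from the environment at run time, so no literal is embedded in the source.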

Dynamic In contrast, dynamic code analysis is the process of reviewing code while it is running. Since the code is running, it’s in a constant state of flux; hence, the term “dynamic.” Since code is meant to be run, dynamic analysis is positioned to identify issues that appear only at run time, in the code’s natural state, and such issues are difficult to identify via static analysis methods. A good example of dynamic code analysis is the actions performed by fuzzers.
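The fuzzing approach can be sketched in a few lines. The example below is a toy, not a substitute for the tools named earlier: parse_record is a hypothetical parser with a deliberate bounds-checking bug, and fuzz feeds it random byte strings from a seeded generator, recording every input that triggers an unexpected exception.

```python
import random


def parse_record(data: bytes) -> int:
    """Hypothetical parser: the first byte is an offset into the body."""
    if not data:
        raise ValueError("empty input")  # a graceful, expected rejection
    offset = data[0]
    body = data[1:]
    # Deliberate bug: the offset is trusted without a bounds check,
    # so an offset past the end of the body raises IndexError.
    return body[offset]


def fuzz(target, iterations=500, max_len=8, seed=1234):
    """Run target on random inputs and collect unexpected failures."""
    rng = random.Random(seed)  # seeded so a finding can be reproduced
    crashes = []
    for _ in range(iterations):
        length = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(length))
        try:
            target(data)
        except ValueError:
            pass  # the target rejected the input cleanly
        except Exception as exc:
            crashes.append((data, type(exc).__name__))
    return crashes


crashes = fuzz(parse_record)
print(f"{len(crashes)} crashing inputs found")
```

Each recorded input is a reproducible test case that the developer can replay while fixing the missing bounds check.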

Development Approaches

Software development has changed a lot over the years. There are more applications, and devices capable of running applications, than ever before. Just Google Play and the Apple App Store alone have several million applications each. Today’s programmers have more defined specialties in specific disciplines, such as web applications, mobile applications, IoT, video games, and so on, than in years past—while also collaborating in increasingly larger teams and departments that are full-to-bursting with specialists in other areas. Whether it’s software development or life in general, today’s professionals tend to specialize in a given area as opposed to being well-rounded. Think about it, wouldn’t you rather visit a dentist for a root canal as opposed to a primary care physician? Better yet, wouldn’t you rather visit an endodontist, who specializes in root canals, as opposed to a general dentist? With the talent of today’s specialized developer, and their enhanced collaboration with other specialists, today’s software typically has the makings to be truly remarkable.

On the flip side, the greater complexity of today’s software also increases its attack surface. More software features means more can be hacked, and more needs securing. Plus, today’s users are paradoxically making demands for “idiot-proof” and intuitive software that is also very secure and feature-rich. Needless to say, developers have a lot of checkboxes to consider.

To address such issues, while developing software in the most economical and efficient manner possible, developers rely upon various development approaches based on the type of software and project they’re working on. Each of these approaches employs different step-by-step procedures for producing high-quality software. As always, the goal is to make sure that software fulfills its stated promises. This section covers various development approaches and how they all uniquely attempt to achieve those goals. It also discusses DevOps, and how it can significantly improve software development timelines and quality.

DevOps

Typically, the software development group develops software, the quality assurance group tests and validates the software, and the operations group deploys it to the production environment. Having three separate teams inevitably creates an “us and them” dynamic of decreased communication, blame games, inefficiencies, and delayed or even cancelled projects.

DevOps addresses these concerns by combining the software development, quality assurance, and operations teams into a single working unit to expedite and improve the quality of the development, testing, and deployment of software. DevOps units will benefit from less time spent on development, QA, and deployment; enhanced collaboration and creativity; a reduction in application maintenance and repairs; new and improved services; and quicker time to market.

Security Implications of Agile, Waterfall, and Spiral Software Development Methodologies

Given the complexities of software deployment, developers often choose from multiple software development methods, including Agile, Waterfall, and Spiral. As a security practitioner, you can help educate the developers regarding some of the security considerations inherent in these models.

Agile The traditional approach to software development is akin to a parade in that everything is planned out in advance and the marching band cannot simply walk from point A to point B. There are many rules to follow, including walking in a certain formation, wearing a uniform, playing an instrument, smiling, and, most importantly, sticking to the script. Although that’s great for entertainment, many software projects can ill-afford to waste time jumping through hoops. These developers are often given a carefully laid-out plan with numerous processes to follow, and predetermined phases and milestones to achieve. There’s a lot of red tape, and they have to, again, stick to the script. As you can imagine, such over-thoroughness can drag a project out—as in sometimes not finish. Plus, it doesn’t encourage innovation or permit abrupt changes in strategy or tactics to take place if something goes wrong.

Given the name, you might think that Agile software development has a need for speed—and you would be correct. Unlike other development methods, and by no means perfect, Agile focuses on efficiency rather than slow and steady. Agile prefers a less-regulated journey with smaller and adaptable milestones so that developers may quickly react to changes in requirements. When an “adaptive” model like this is used, changes are welcomed; plus it invites better collaboration with team members, and even may solicit feedback from customers. Projects are more likely to finish before deadlines as a result.

On the downside, accelerated approaches may diminish security. Faster coding with smaller-sized milestones, and reduced structure, may lead to less code testing and security implementations. Developers must be made aware to continue incorporating security best practices into their project despite its accelerated pace.

Waterfall When you look at a waterfall, you see it following the same pattern and outcome each time. Water speeds off a cliff and hits the bottom. You’ll never see water changing its mind halfway down and flying back up to the top with a better idea. Such is the nature of the Waterfall development technique.

In many ways the opposite of Agile, the Waterfall approach follows a strict sequential life-cycle approach where each development phase must be finished before beginning the next. The key is that developers cannot revisit previous phases once they’re complete; therefore, you must make absolutely sure you’re done before moving on. Although changes can be made prior to completing a phase, they cannot be added after phase completion. Instead, you’d have to wait until the entire project is finished before revisiting earlier phases. In other words, you have to solve issues earlier than you can anticipate, or much later when the change is long overdue.

There are five steps in the Waterfall process:

• Requirements

• Design

• Implementation

• Verification

• Maintenance

The best thing about the Waterfall approach is its predictability, since projects must be conducted in a very specific and predetermined way. This can work with easier development projects or those without a particular time constraint. Unfortunately, security can fall by the wayside due to the Waterfall’s inability to permit security changes once phases have completed. This can cause significant delay or prevent much-needed security changes.

Spiral Although the Agile and Waterfall approaches have their benefits, there’s no middle ground. Either an approach is accelerated and relatively unplanned or slow and overly planned. Spiral comes to the rescue by borrowing some of the aspects of the other two approaches to make a balanced development solution. It utilizes the incremental progress and revisitation rights of Agile, but within the relative confines of the Waterfall approach. Given the balance of planning and efficiency, this is a good approach to use for large-scale projects.

Security issues can arise from lack of security foresight from the beginning. Since Spiral takes a long-view perspective like Waterfall, failure to incorporate secure code into the project early on may delay its incorporation until after the project has finished. Plus, because of the more accelerated aspects of Spiral due to its Agile-like incremental milestones, the coding might be rushed at certain portions, which leaves little time for security.

Continuous Integration

Continuous integration (CI) is the process of repeatedly incorporating code from various developers into a single code structure. It is performed multiple times throughout the day in an automated fashion. This rapid-fire repetition of integration is the benefit because delayed integration leads to developer code “sprawl,” which makes integration more difficult. By developers continuously performing the integration, code drift is less likely to occur; in addition, this permits functional and security code repairs while the issues are still small, which makes for higher-quality and more secure software.

Versioning

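Versioning is the process of marking important code milestones or changes with a version number, usually recorded alongside a timestamp. Since code changes take place frequently, version numbers progress through a series of major and minor number increments. Based on the significance of the code changes, a version number may change in a small increment, from 1.0 to 1.0.1 for a minor fix, for example, or in a larger one, such as 1.0 to 1.1 for a more significant change. Under the widely used semantic versioning convention (MAJOR.MINOR.PATCH), these two steps are called patch and minor releases, respectively, and a major release (1.1 to 2.0, for example) is reserved for changes that break compatibility.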

Whenever code changes occur, it can have a positive or negative effect. To ensure that we’re always taking the right path forward, we may stick with the versions of code that produce the best outcomes. If we hit a snag or dead end, we can roll back to previous versions of code. This will allow us to systematically trace our successes and failures to certain versions of code. By utilizing versioning, we’ll eventually discard the bad code and continue onward with the better code. The end result will be a better and more secure final product.
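The increment and rollback ideas above can be sketched as follows. The example assumes the MAJOR.MINOR.PATCH numbering convention; the Version class, the rollback_target helper, and the sample history are hypothetical and exist only to illustrate the logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class Version:
    """A MAJOR.MINOR.PATCH version number that compares in release order."""
    major: int
    minor: int
    patch: int = 0

    @classmethod
    def parse(cls, text: str) -> "Version":
        parts = [int(piece) for piece in text.split(".")]
        if not 2 <= len(parts) <= 3:
            raise ValueError(f"expected MAJOR.MINOR[.PATCH], got {text!r}")
        return cls(*parts)

    def bump(self, level: str) -> "Version":
        # Bumping a level resets every less significant level to zero.
        if level == "major":
            return Version(self.major + 1, 0, 0)
        if level == "minor":
            return Version(self.major, self.minor + 1, 0)
        if level == "patch":
            return Version(self.major, self.minor, self.patch + 1)
        raise ValueError(f"unknown level: {level!r}")

    def __str__(self) -> str:
        return f"{self.major}.{self.minor}.{self.patch}"


def rollback_target(history, known_good):
    """Return the newest version in history that is marked known good."""
    candidates = [version for version in history if version in known_good]
    return max(candidates) if candidates else None


history = [Version.parse(v) for v in ("1.0", "1.0.1", "1.1", "1.1.1")]
known_good = {Version(1, 0, 0), Version(1, 0, 1)}
print(rollback_target(history, known_good))  # prints 1.0.1
```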

Secure Coding Standards

Secure coding standards are language-specific rules and recommended practices that provide for secure programming. It is one thing to describe sources of vulnerabilities and errors in programs; it is another matter to prescribe forms that, when implemented, will preclude the specific sets of vulnerabilities and exploitable conditions found in typical code.

Application programming can be considered to be a form of manufacturing. Requirements are turned into value-added product at the end of a series of business processes. Controlling these processes and making them repeatable is one of the objectives of a secure development life cycle. One of the ways an organization can achieve this objective is to adopt an enterprise-specific set of secure coding standards.

Organizations should adopt the use of a secure application development framework as part of their software development life cycle process. Because secure coding guidelines have been published for most common languages, adoption of these practices is an important part of secure coding standards in an enterprise. Adapting and adopting industry best practices is also an important element in the software development life cycle.

Documentation

Documentation is crucial to SDLC since each phase has many requirements that need to be met. Developers look to documentation to guide them through the numerous processes and procedures necessary to succeed at each phase of the SDLC. Documentation not only helps plot a course forward but also gives you a historical account of successes and failures that you can refer to for guidance on a current situation or problem.

Various documentation types exist for these purposes, including a security requirements traceability matrix, a requirements definition, system design documents, and testing plans. The next few topics dive deeper into these forms of documentation.

Security Requirements Traceability Matrix (SRTM)

The security requirements traceability matrix (SRTM) is a grid that allows users to cross-reference requirements and implementation details. The SRTM assists in the documentation of relationships between security requirements, controls, and test/verification efforts. In the case of development efforts, the SRTM provides information as to which tests/use cases are employed to determine security requirements have been met. Each row in the SRTM is for a new requirement, making the matrix an easy way to view and compare the various requirements and the tests associated with each requirement.

This matrix allows developers, testers, managers, and others to map requirements against use cases to ensure coverage by testing. In Table 18-2, Requirement 1.1.2 can be tested using Use Cases 1.2 and 1.5. If changes are made to Use Case 1.5, this potentially affects the ability of the use case to test Requirements 1.1.2 and 6.8.1.

Table 18-2 Sample SRTM for Development
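The cross-referencing described above can also be expressed as a small data structure. The sketch below uses only the mappings named in the text (Requirement 1.1.2 is tested by Use Cases 1.2 and 1.5, and Use Case 1.5 also tests Requirement 6.8.1); any other use cases for Requirement 6.8.1 are not stated, so none are assumed. The function names are hypothetical.

```python
from collections import defaultdict

# Requirement -> use cases that test it (only mappings named in the text).
SRTM = {
    "1.1.2": ["1.2", "1.5"],
    "6.8.1": ["1.5"],
}


def requirements_by_use_case(srtm):
    """Invert the matrix: which requirements does each use case cover?"""
    index = defaultdict(list)
    for requirement, use_cases in srtm.items():
        for use_case in use_cases:
            index[use_case].append(requirement)
    return {use_case: sorted(reqs) for use_case, reqs in index.items()}


def untested_requirements(srtm):
    """Requirements with no use case assigned, which are coverage gaps."""
    return sorted(req for req, use_cases in srtm.items() if not use_cases)


# A change to Use Case 1.5 touches every requirement it helps verify.
print(requirements_by_use_case(SRTM)["1.5"])
print(untested_requirements(SRTM))
```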

Requirements Definition

As discussed earlier in this chapter, the requirements definition is a document that outlines all system requirements and the reasons they are needed. It lists the needed capabilities, functions, and any modifications that should be implemented. Certain requirements will be given higher priority over others to cater to certain business objectives. The requirements help us determine the overall capabilities that the system must have to meet the needs of the business.

System Design Document

Whereas the requirements document outlines the reasons for software development, the system design document focuses on what will be developed and how it will be developed. This document describes the software at the architecture level and lists out various elements:

• Subsystems/services Outlines which software subsystems will be in use and what their responsibilities are.

• System hardware architecture Describes hardware in use and connectivity between different hardware devices.

• System software architecture Lists out all software components, including languages, tools, functions, subroutines, and classes.

• Data management Includes descriptions of data schemes and the database selection.

• Access control and security Describes access control method for data, including authentication, encryption, and key management capabilities.

• Boundary conditions Discusses system startup/shutdown as well as error/exception handling.

• Interface design Documents internal/external interface architecture and design elements in addition to how the software interfaces with the user.

It should be noted that these elements include security design requirements. These requirements should be taken seriously by the developing team to ensure both functional and secure software.

Test Plans

According to the International Software Testing Qualifications Board (ISTQB) website at www.istqb.org, test plans are “documentation describing the test objectives to be achieved and the means and the schedule for achieving them, organized to coordinate testing activities.” Here are some examples of test plans:

• Master test plan A top-level test plan that unifies all other test plans

• Testing level-specific test plan A plan that describes how testing will work at different testing levels, such as unit testing and integration testing

• Testing type-specific test plan A plan for a specific type of testing, such as performance testing or security testing

Validation of the System Design

Verification and validation are crucial steps in any system development process. Verification is a form of answering the question, Are you building it correctly? It is aimed at examination of whether or not the correct processes are being performed properly. Validation asks a different question: Are you building the right thing, or does the output match the requirements? Both of these are important questions, but after some simple thought it can be seen that verification can be viewed from an operational efficiency perspective and validation from a more important operational sufficiency perspective. Even if you get verification correct, if the product does not meet the requirements, it is not suitable for use.

Testing can go a long way toward meeting system validation requirements. When requirements are created early in the system development process, the development of use cases for testing to validate the requirements can assist greatly in ensuring suitability at the end of the process. Testing in this regard tends to be aimed at seeing whether the system does what it is supposed to do; however, you must also ensure it does not do other things that it is not supposed to do. This leads to a more difficult question: How do you prove something is bounded by a set of desired states and does not perform other actions or have other states that would not be permissible? A branch of computer science called formal methods, which relies on mathematical proofs of system behavior, is dedicated to examining this particular problem. The challenge is in finding a manner of modeling a system so that formal methods can be employed.

In this section, we’re going to look at a few different testing methods: regression testing, user acceptance testing, unit testing, integration testing, and peer review.

Regression Testing

Regression testing determines if changes to software have resulted in unintended losses of functionality and security. In other words, has the software regressed since a recent change was made? This type of test will help ensure that only acceptable changes are committed and that any negative outcomes are rolled back to a trusted starting point until changes no longer result in regression. The extra care taken will delay completion but will also increase software quality and security.
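One simple way to automate this check is to record the outputs of a trusted version and compare every later version against them. The following is a minimal sketch under that assumption; normalize_username, its changed variant, and the baseline values are hypothetical.

```python
def normalize_username(raw: str) -> str:
    """Trusted version of a hypothetical function."""
    return raw.strip().lower()


def normalize_username_v2(raw: str) -> str:
    """Changed version: the strip() call was dropped by mistake."""
    return raw.lower()


# Input -> output pairs recorded from the last trusted version.
BASELINE = {
    "  Alice ": "alice",
    "BOB": "bob",
    "carol": "carol",
}


def find_regressions(func, baseline):
    """Return (input, expected, actual) for every behavior that changed."""
    regressions = []
    for given, expected in baseline.items():
        actual = func(given)
        if actual != expected:
            regressions.append((given, expected, actual))
    return regressions


print(find_regressions(normalize_username, BASELINE))     # no regressions
print(find_regressions(normalize_username_v2, BASELINE))  # one regression
```

A non-empty result is the signal to roll the change back to the trusted starting point before it is committed.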

User Acceptance Testing

User acceptance testing solicits feedback from the software’s target audience to gauge their level of acceptance. Do they like the software? Does the software work? Is the software intuitive? Does the software secure and protect the privacy of their data? If the users reject the software, this may result in discarding the project entirely.

Unit Testing

When airline customers enter an airport, the security team will isolate each person who wishes to board a plane and examine them individually to ensure they don’t pose any threat. Unit testing performs a similar function in that it isolates the smallest testable pieces of an application, such as individual functions or methods, and tests each one on its own. This ensures each unit is functional on its own and behaves in the exact manner it should. Although this slows down development efforts a bit, it results in fewer software bugs.
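As an illustration, the sketch below uses Python’s standard unittest module to test one small function in isolation. The is_strong_password function and its rules (at least 12 characters, with a letter and a digit) are hypothetical and are not a recommended password policy.

```python
import unittest


def is_strong_password(candidate: str) -> bool:
    """Hypothetical unit under test: 12+ characters with a letter and a digit."""
    return (
        len(candidate) >= 12
        and any(ch.isalpha() for ch in candidate)
        and any(ch.isdigit() for ch in candidate)
    )


class IsStrongPasswordTest(unittest.TestCase):
    """Each test exercises one behavior of the unit, with no other code involved."""

    def test_accepts_long_mixed_password(self):
        self.assertTrue(is_strong_password("correct7horse"))

    def test_rejects_short_password(self):
        self.assertFalse(is_strong_password("short1"))

    def test_rejects_password_without_digit(self):
        self.assertFalse(is_strong_password("onlylettershere"))

    def test_rejects_password_without_letter(self):
        self.assertFalse(is_strong_password("123456789012"))


def run_tests():
    """Load and run the test case, returning the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(IsStrongPasswordTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_tests()
```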

Integration Testing

In a way the opposite of unit testing, integration testing seeks to combine each developer’s code into an aggregated test to see how all that code meshes together. Since developers often work in silos, it’s important to determine if their code can be integrated with others’ code to create an application that meets functionality and security requirements. Since continuous integration is probably already automating the integration aspects, this would be a perfect time to perform the integration test. The biggest challenge to security is that it may be removed when poor integration results are blamed on the security controls. If security is the “obstacle” to integration, developers will probably discard the security element with a promise to revisit it later, only to never do so.

Peer Review

Peer review involves programmers on a team analyzing one another’s code to lend a different perspective. Analyzing our own code is a good idea, but it is difficult for us to spot all of the functional issues, both directly in front of us and buried in the details. Whereas functional issues are more easily detected through our own static, dynamic, and automated code analysis techniques, security issues are more difficult to spot and would greatly benefit from the fresh perspective offered by a peer. Anything that helps us detect and mitigate security issues is time well spent.

Adapting Solutions

The security landscape is changing, and we need to change with it. The global proliferation of ransomware (such as WannaCry) by itself has caused billions of dollars of damage to businesses and people. Microsoft smartly adapted its Windows 10 operating system to include ransomware protection via its Windows Defender Antivirus tool.

That said, what about us security practitioners? Are we preparing ourselves for the onslaught of emerging threats, security trends, and disruptive technologies that are presently reinventing how hackers select, profile, and attack their victims? The intelligent among us will use our platform to compel organizations into increasing cybersecurity investments, implementations, and education in order to counter the next generation of cybersecurity threats and attackers. This section discusses the adaptation of security solutions based on the changing cybersecurity landscape.

Emerging Threats and Security Trends

The threat environment faced today is different from the one faced last year—and will be different again next year. This is a function of two separate changing factors—one in the business environment and the other in the threat environment. The business environment is always evolving; it changes as markets change, technologies change, and priorities change. All this change leads to both opportunities and challenges with respect to securing the enterprise. But the bottom line from a security perspective remains the same: What is the risk profile, and how can it be improved using the available resources and controls? Will this risk profile meet the requirements of the business?

The changes in the threat environment comprise a second, more pressing issue. What began as simple “Click here to…” spam has morphed into spear phishing threats, where clicking on what you expect to be a PDF report is instead a PDF with malware attached. Most threat vectors have shifted toward more dangerous and costly attacks. Nation-states have begun corporate espionage against literally thousands of firms, not to steal funds directly, but to gain intellectual property that saves them development costs. Organized crime is still mainly a “target of convenience” threat, but the tools being employed are becoming sophisticated and the costs are increasing.

The challenge for the security professional is to determine what the correct controls are to reduce risk to a manageable level and then employ those controls. Then, as the operational situation changes over time, the security professional must reevaluate the tools being employed, reconsider the level of risk, and make adjustments. This is not a casual exercise of “Yep, it’s still all working.” Rather, it needs to be a serious vulnerability-assessment-based examination into the details of the levels of exposed risk.

As in all systems, people play an integral role. The people side of the system also needs a periodic examination and refresh. This is where training and awareness campaigns come into play. However, just as a casual look at security controls doesn’t work, neither does running the same training over and over again. People are adaptive, and if they perceive they know the answers, they will not commit any time to the issue. Training and awareness needs to be fresh, informative, and interesting if you are going to capture the energy necessary to result in changed behaviors.

Disruptive Technologies

Throughout history, disruptive technologies have emerged that were so groundbreaking they changed everything about how people completed tasks. Cars reduced horseback riding, e-mail reduced mailing letters, and arguably the biggest disruptive technology of the past century was the invention of the refrigerator, which changed cooking and food preservation techniques. The “disruption” is very much a good thing, yet great technological strides are known for creating some “casualties” along the way. Current technologies will be displaced by the new disruptive technologies, which will lead to technologies being retired, entire industries disappearing, new industries being created, and, most loathsome of all, job loss. After all, the postal service isn’t thrilled about the effect e-mail has had on the mail delivery industry.

Today, many disruptive technologies are poised to change the world for the better, while also introducing entirely new forms of security threats and vulnerabilities. Couple this with many organizations and people urgently wanting to get the latest and greatest technology, and we might be opening ourselves up to considerable risk. Before we worried about our computers being hacked; now we’re worried about our homes and cars being hacked. Let’s take a look at the current crop of disruptive technologies:

• Artificial intelligence (AI) will improve information gathering and security, in addition to hacker attack methods.

• Internet of Things (IoT) will flood our society with tens of billions of devices by 2020.

• Blockchain will change how financial transactions are conducted around the world.

• Advanced robotics will affect construction, retail, food and hospitality, manufacturing, and many more physical tasks.

• Cloud computing will outsource IT, applications, data, and jobs to large Internet companies.

• Self-driving cars will change how people travel, in addition to what people do while they’re traveling.

• Virtual/augmented reality will vastly affect entertainment, education, and communication.

• 5G wireless will increase cellular bandwidth to between 1 and 10 Gbps.

Although these are some of the more substantial disruptive technologies, there are many more out there and new ones lurking just over the horizon. Security professionals will need to get educated and update their skills on how to secure the next generation of cybersecurity hardware and software. They’ll need to learn how to adapt risk management, vulnerability assessments, incident response, and various other security controls to the current and future crop of security threats. After all, organizational and personal security hang in the balance.

Asset Management (Inventory Control)

Asset management encompasses the business processes that allow an organization to support the entire life cycle of the organization’s assets (such as the IT and security systems). This covers a system’s entire life, from acquisition to disposal, and acquisition covers not just the actual purchase of the item, but the request, justification, and approval process as well. For software assets, this also includes software license management and version controls (to include updates and patches for security purposes).

An obvious reason for implementing some sort of asset management system is to gain control of the inventory of systems and software. There are other reasons as well, including increasing accountability to ensure compliance (with such things as software licenses, which may limit the number of copies of a piece of software the organization can be using) and security (consider the management controls needed to ensure that a critical new security patch is installed). From a security standpoint, knowing what hardware the organization owns and what operating systems and applications are running on them is critical to being able to adequately protect the network and systems. Imagine that a new vulnerability report is released, providing details on a new exploit for a previously unknown vulnerability. A software fix that can mitigate the impact has been developed. How do you know whether this report is of concern to you if you have no idea what software you are running and where in the organization it resides?
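With an accurate inventory, the question posed above becomes a simple lookup. The sketch below assumes the inventory records each host, product, and installed version, and that the advisory names the version in which the fix first appears. All host names, product names, and version numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstalledSoftware:
    host: str
    product: str
    version: tuple  # for example (2, 4, 1) for version 2.4.1


# Hypothetical inventory records.
INVENTORY = [
    InstalledSoftware("web-01", "examplehttpd", (2, 4, 1)),
    InstalledSoftware("web-02", "examplehttpd", (2, 4, 9)),
    InstalledSoftware("db-01", "exampledb", (11, 2, 0)),
]


def affected_hosts(inventory, product, fixed_in):
    """Hosts running the named product at a version older than the fix."""
    return sorted(
        item.host
        for item in inventory
        if item.product == product and item.version < fixed_in
    )


# Hypothetical advisory: examplehttpd is fixed in version 2.4.5.
print(affected_hosts(INVENTORY, "examplehttpd", (2, 4, 5)))  # prints ['web-01']
```

Without the inventory there is nothing to query, which is exactly the situation the paragraph above warns about.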

The components of an inventory control or asset management system include the software to keep track of the inventory items, possibly wireless devices to record transactions at the moment and location at which they occur, and a mechanism to tag and track individual items. Common methods of tagging today include barcodes and radio-frequency identification (RFID) tags. Such tags have gone a long way toward increasing the speed and accuracy of physical inventory audits. RFID tags are more expensive than barcodes but have the advantage of not needing a line of sight to be able to read them (in other words, a reader doesn’t have to actually see the RFID tag in order to be able to read it, so it can be placed in out-of-the-way locations on assets).

As a final note, the database of your software and hardware inventory is going to be critical for you to manage the security of your systems, but it could also be an extremely valuable piece of information for others as well. Obviously, vendors would love to know what you have in order to try and sell you other, additional products, but more importantly, attackers would love to have this information to make their lives easier because your database would give them a good start on identifying possible vulnerabilities to attempt to exploit. Because of this, you should be careful to protect your inventory list. For those who need or are interested in assistance with their inventory management, there are software products on the market that assist in automating the management of your hardware and software inventory.

Chapter Review

In this chapter, we covered the implementation of security activities across the technology life cycle. The first section went over the systems development life cycle (SDLC), which covers the five phases of systems development: initiation, development/acquisition, implementation, operation/maintenance, and disposal. We then covered other life cycle components, including determining requirements, acquisition of a system as opposed to development, the testing and evaluation of the developed/acquired system, and then the eventual decommissioning of the system from the organization’s infrastructure. We also talked about operational activities, which focus on the ongoing use of the deployed system, including monitoring, maintenance of the system, configuration management, plus change management. Lastly, we talked about the life cycle ending by disposing of the asset or reusing it by providing it to another individual.

The next section was on the software development life cycle (also called SDLC), which could be considered a subset of the systems development life cycle that focuses on software development. In this SDLC process, we once again covered phases of development, beginning with requirements gathering and then design, development, testing, and operations/maintenance. We also talked about application security frameworks to assist developers with incorporating security into their applications. The next topic was software assurance, which provides guarantees that software will meet company objectives. Implementing standard libraries such as cryptographic libraries will help provide software assurance. Adopting industry-accepted approaches to software development, in addition to utilizing the web service security stack, will further provide software assurance to the organization. On the flip side, it’s important to also know what not to do when it comes to software development; therefore, security professionals must warn software developers about forbidden coding techniques. We talked about NX/XN bit use, which lets CPUs mark certain portions of RAM as non-executable so that anything stored there, injected malware being the prime example, cannot run as code. This helps protect our systems from memory-resident malware. ASLR adds to this by randomizing the memory locations of an application’s code and data, which makes it harder for attackers to aim buffer overflow attacks. We ended the software assurance topic with coverage of code quality requirements and the implementation of code analysis techniques such as fuzzers as well as static and dynamic code analysis.
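As an illustration of the fuzzing technique mentioned above, here is a minimal sketch of a mutation-based fuzzer. Everything in it is an assumption made for the example: `parse_record` is a hypothetical target with a deliberately planted flaw, and the mutation strategy is far simpler than what dedicated fuzzing tools use.

```python
import random


def parse_record(data: bytes) -> tuple:
    """Hypothetical target: parse b'<length>:<payload>'.

    Contains a deliberate flaw for the fuzzer to find: it reads the first
    payload byte before checking that the payload is non-empty.
    """
    head, sep, payload = data.partition(b":")
    if not sep:
        raise ValueError("missing separator")
    record_type = payload[0]            # flaw: IndexError on an empty payload
    length = int(head.decode("ascii"))  # bad digits raise ValueError
    if length != len(payload):
        raise ValueError("length mismatch")
    return record_type, payload


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Return a copy of the seed with one random change applied."""
    data = bytearray(seed)
    choice = rng.randrange(3)
    if choice == 0 and data:
        data[rng.randrange(len(data))] = rng.randrange(256)            # overwrite a byte
    elif choice == 1:
        data.insert(rng.randrange(len(data) + 1), rng.randrange(256))  # insert a byte
    else:
        del data[rng.randrange(len(data) + 1):]                        # truncate
    return bytes(data)


def fuzz(target, seed_input, iterations=1000, rng=None):
    """Feed mutated inputs to the target and collect unexpected exceptions."""
    rng = rng or random.Random()
    crashes = []
    for _ in range(iterations):
        data = mutate(seed_input, rng)
        try:
            target(data)
        except ValueError:
            continue                      # expected rejection of malformed input
        except Exception as exc:          # anything else is a finding
            crashes.append((data, exc))
    return crashes


crashes = fuzz(parse_record, b"5:hello", iterations=500, rng=random.Random(1))
```

The design choice worth noting is the split between expected and unexpected failures: a clean rejection of bad input is correct behavior, whereas any other exception suggests the application did not anticipate that input.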

Continuing with the topic of the software development life cycle, we talked about development approaches to SDLC, beginning with the unification of the development, quality assurance, and operations teams, known collectively as DevOps. This unification improves both the quality and the speed of software delivery. Although there are many development approaches, we focused on the popular ones: Agile, Waterfall, and Spiral. Agile isn’t bogged down with excessive processes and restrictions; it focuses on accelerated development of minor milestones, with the opportunity to revisit previous sections and make design changes as requirements dictate. Waterfall goes the opposite route, preplanning the long- and short-term milestones and completing each phase in its entirety before moving on to the next. It doesn’t permit revisiting previous sections until the project has been completed. The Spiral approach combines the long-term rigidity of Waterfall with some of the short-term flexibility and acceleration of Agile.

The next topic was continuous integration, which repeatedly incorporates the code of various developers into a single code structure to ensure it all fits together. Another important approach is versioning the software to keep track of code changes, both successful and unsuccessful. Also, rather than reinvent the wheel, developers can use secure coding standards, which help them incorporate security into their code from the ground up. Given the formalities involved with SDLC, there are various documentation needs. For example, the security requirements traceability matrix (SRTM) document allows cross-referencing of requirements with implementation details. Other useful documents include the requirements definition, system design, and testing plans documents. The last part of this topic involved validation of the system design. We can perform this validation through regression testing, user acceptance testing, unit testing, integration testing, and peer review.
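The cross-referencing role of the traceability matrix can be sketched as a small data structure. The requirement IDs, file names, and test case IDs below are hypothetical, and real matrices typically carry more columns (source of the requirement, verification method, status, and so on); this is only an illustration of the idea.

```python
# Each row ties one security requirement to where it is implemented
# and which test case verifies it. All values are hypothetical.
srtm = [
    {"req_id": "SR-1", "requirement": "Passwords are stored hashed and salted",
     "implementation": "auth/passwords.py", "test_case": "TC-101"},
    {"req_id": "SR-2", "requirement": "Sessions expire after 15 minutes idle",
     "implementation": "web/session.py", "test_case": None},
    {"req_id": "SR-3", "requirement": "Administrative actions are logged",
     "implementation": None, "test_case": None},
]


def untraced(matrix):
    """Return the IDs of requirements lacking an implementation or a test reference."""
    return [row["req_id"] for row in matrix
            if not row["implementation"] or not row["test_case"]]


gaps = untraced(srtm)
```

A check like this shows why the matrix is useful: any requirement without a matching implementation or test stands out immediately instead of being discovered late in the project.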

The next topic focused on adapting solutions to address emerging threats and security trends, in addition to disruptive technologies such as cloud computing, blockchain, artificial intelligence, 5G wireless, self-driving cars, advanced robotics, and virtual/augmented reality.

The final topic in the chapter discussed asset management for the purpose of inventory control. This helps business processes to support the entire life cycle of the organization’s assets, including IT and security systems.

Quick Tips

The following tips should serve as a brief review of the topics covered in more detail throughout the chapter.

Systems Development Life Cycle

• Systems development life cycle is a process for the initiating, developing/acquiring, implementing, operating/maintaining, and disposing of systems.

• Requirements define the business needs the proposed system must fulfill in order to justify its implementation.

• Organizations often acquire systems from third parties as opposed to developing one in-house.

• Organizations must test and evaluate systems to identify any shortcomings or vulnerabilities in their functionality, security, and performance.

• Commissioning a new, modified, or upgraded system marks the implementation of the technology into the production environment.

• Systems will be periodically decommissioned or disposed of as part of normal operations.

• Operational activities involve the actions employed in the daily usage of systems.

• It’s important to monitor the system’s operational state in order to identify performance and usage patterns, in addition to any signs of malicious activities.

• Maintenance activities can be both on a specific system and on the environment where the system resides.

• Configuration management is the methodical process of managing configuration changes to a system throughout its life cycle.

• Change management is a formalized process by which all changes to a system are planned, tested, documented, and managed in a controlled manner.

• Asset disposal refers to the organizational process of discarding assets when they are no longer needed.

• Eventually, all assets will require disposal, yet some are still viable for recirculation back into the organization’s inventory.

Software Development Life Cycle

• Software development life cycle (SDLC) represents the various processes and procedures employed to develop software.

• The requirements gathering phase involves the brainstorming of the who, what, and why of the project.

• The design phase shifts the focus from brainstorming the organizational needs and their obstacles to addressing those needs and obstacles.

• The core behavior of software is typically designed using the guidance of three well-known models: the informational, functional, and behavioral models.

• The development phase transitions the SDLC away from the theoretical phases to the actual development of the proposed software.

• The testing phase begins with a series of testing processes in order to identify, resolve, and reassess software until it is fit for use.

• The operations and maintenance phase involves monitoring the ongoing usage and upkeep of the software. We monitor it to see how it performs under daily workloads, identify any functional and security issues, and either reconfigure the software or develop software patches to address the discovered issues.

• Application security frameworks ensure that developers employ consistent and secure development practices.

• Software assurance is the process of providing guarantees that any acquired or developed software is fit for use and meets prescribed security requirements.

• Standard libraries reduce development time and improve code quality and security through the use of vetted library functions.

• Industry-accepted approaches guide programmers into using well-known standards such as ISO 27000 series, ITIL, PCI, and even SAFECode.

• Web Services Security (WS-Security) provides authentication, integrity, confidentiality, and nonrepudiation for web services using SOAP.

• Forbidden coding techniques must be known to developers so they know what coding practices to avoid.

• NX (no-execute) bit use refers to CPUs marking certain areas of memory as non-executable so that anything stored there cannot be run as code.

• ASLR involves the operating system randomizing the operating locations of various portions of an application (such as the application executable, APIs, libraries, and heap memory) in order to confuse a hacker’s attempt at predicting a buffer overflow target.

• Code quality refers to the implementation of numerous coding best practices that are not officially defined, yet are generally accepted by the coding community.

• Code analysis involves the various techniques of reviewing and analyzing code.

• Fuzzers inject unusual or malicious input into an application to see how it responds.

• Static code analysis is the process of reviewing code when it’s not running.

• Dynamic code analysis is the process of reviewing code that is running.

• DevOps combines the software development, quality assurance, and operations teams into a single working unit to expedite and improve the quality of the development, testing, and deployment of software.

• Agile is an accelerated development approach that favors smaller milestones, reduced long-term planning, and the ability to revisit previous phases without restriction.

• Waterfall follows a strict sequential life-cycle approach, where each development phase must be finished before beginning the next. It does not permit revisiting previous phases until the completion of the project.

• Spiral utilizes the incremental progress and revisitation rights of Agile, but within the relative confines of the Waterfall approach.

• Continuous integration is the process of repeatedly incorporating code from various developers into a single code structure.

• Versioning is the process of marking important code milestones or changes with a timestamped version number.

• Secure coding standards are language-specific rules and recommended practices that provide for secure programming.

• Documentation guides developers through the numerous processes and procedures necessary to succeed at each phase of the SDLC.

• The security requirements traceability matrix (SRTM) is a grid that allows users to cross-reference requirements and implementation details.

• The requirements definition document outlines all system requirements and the reasons they are needed.

• The system design document focuses on what will be developed and how it will be developed.

• According to the International Software Testing Qualifications Board (ISTQB), test plans are “documentation describing the test objectives to be achieved and the means and the schedule for achieving them, organized to coordinate testing activities.”

• Verification determines whether or not the correct processes are being performed properly.

• Validation determines whether or not the right thing is being built or if the output matches the requirements.

• Regression testing determines if changes to software have resulted in unintended losses of functionality and security.

• User acceptance testing solicits feedback from the software’s target audience to gauge their level of acceptance.

• Unit testing isolates the smallest testable pieces of an application’s code (such as individual functions or methods) and tests each piece on its own.

• Integration testing combines each developer’s code into an aggregated test to see how the code meshes together.

• Peer review involves programmers on a team analyzing one another’s code to lend a different perspective.
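The unit testing tip above can be sketched with Python’s built-in `unittest` module. The function under test, `sanitize_username`, is a hypothetical example and not something defined in this chapter; the point is only that one small unit is exercised in isolation.

```python
import unittest


def sanitize_username(raw: str) -> str:
    """Hypothetical unit under test: trim, lowercase, and reject non-alphanumerics."""
    name = raw.strip().lower()
    if not name.isalnum():
        raise ValueError("username must be alphanumeric")
    return name


class SanitizeUsernameTests(unittest.TestCase):
    def test_normalizes_case_and_whitespace(self):
        self.assertEqual(sanitize_username("  Alice01 "), "alice01")

    def test_rejects_injection_characters(self):
        with self.assertRaises(ValueError):
            sanitize_username("alice'; DROP TABLE users;--")


def run_suite():
    """Load and run the test case, returning the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SanitizeUsernameTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_suite()
```

Rerunning the same suite after every code change is also a simple form of regression testing, because a previously passing test that now fails points to an unintended loss of functionality or security.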

Adapting Solutions

• Adapting solutions involves making preventative, detective, and corrective adjustments to our risk management and security controls based on changes in the security landscape.

• Disruptive technologies are ground-breaking advancements that fundamentally change how people perform tasks.

• Key disruptive technologies include AI, IoT, blockchain, advanced robotics, cloud computing, self-driving cars, virtual and augmented reality, and 5G wireless networking.

Asset Management (Inventory Control)

• Asset management encompasses the business processes that allow an organization to support the entire life cycle of the organization’s assets (such as the IT and security systems).

• The components of an inventory control or asset management system include the software to keep track of the inventory items, possibly wireless devices to record transactions at the moment and location at which they occur, and a mechanism to tag and track individual items.

• Common methods of tagging today include barcodes and radio-frequency identification (RFID) tags.

• RFID tags are more expensive than barcodes but have the advantage of not needing a line of sight to be able to read them.

Questions

The following questions will help you measure your understanding of the material presented in this chapter. Read all the choices carefully because there might be more than one correct answer. Choose all correct answers for each question.

1. SDLC phases include a minimum set of security tasks required to effectively incorporate security in the system development process. Which of the following is one of the key security activities for the initiation phase?

A. Determine CIA requirements.

B. Define the security architecture.

C. Conduct a PIA.

D. Analyze security requirements.

2. The process of creating or altering systems, and the models and methodologies that people use to develop these systems, is referred to as what?

A. Systems Development Life Cycle

B. Agile methods

C. Security requirements traceability matrix

D. EAL level

3. Which of the following statements are true about a security requirements traceability matrix (SRTM)? (Choose all that apply.)