Chapter 1

Cybersecurity Fundamentals

This chapter covers the following topics:

Introduction to Cybersecurity: Cybersecurity programs recognize that organizations must be vigilant, resilient, and ready to protect and defend every ingress and egress connection as well as organizational data wherever it is stored, transmitted, or processed. In this chapter, you will learn concepts of cybersecurity and information security.

Defining What Are Threats, Vulnerabilities, and Exploits: Describe the difference between cybersecurity threats, vulnerabilities, and exploits.

Exploring Common Threats: Describe and understand the most common cybersecurity threats.

Common Software and Hardware Vulnerabilities: Describe and understand the most common software and hardware vulnerabilities.

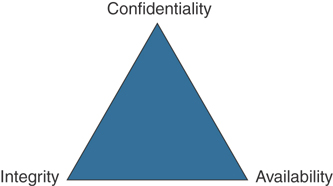

Confidentiality, Integrity, and Availability: The CIA triad is a concept that was created to define security policies to protect assets. The idea is that confidentiality, integrity, and availability should be guaranteed in any system that is considered secure.

Cloud Security Threats: Learn about different cloud security threats and how cloud computing has changed traditional IT and is introducing several security challenges and benefits at the same time.

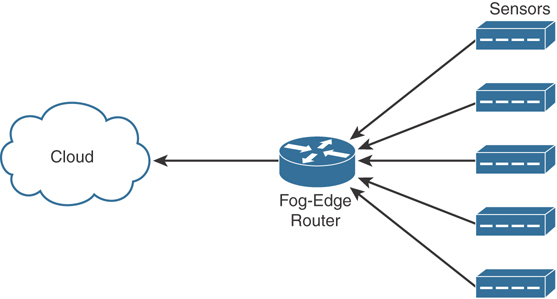

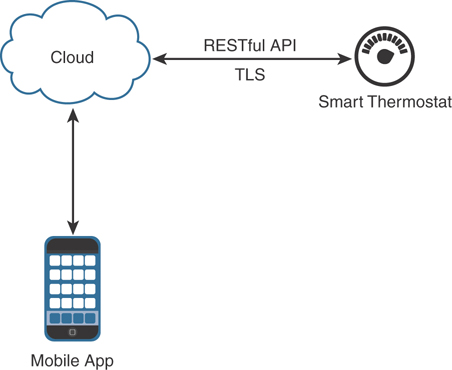

IoT Security Threats: The proliferation of connected devices is introducing major cybersecurity risks in today’s environment.

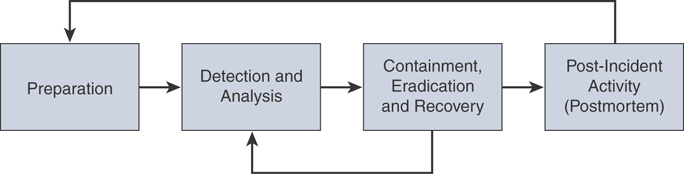

An Introduction to Digital Forensics and Incident Response: You will learn the concepts of digital forensics and incident response (DFIR) and cybersecurity operations.

This chapter starts by introducing you to different cybersecurity concepts that are foundational for any individual starting a career in cybersecurity or network security. You will learn the difference between cybersecurity threats, vulnerabilities, and exploits. You will also explore the most common cybersecurity threats, as well as common software and hardware vulnerabilities. You will learn the details about the CIA triad—confidentiality, integrity, and availability. In this chapter, you will learn about different cloud security and IoT security threats. This chapter concludes with an introduction to DFIR and security operations.

The following SCOR 350-701 exam objectives are covered in this chapter:

1.1 Explain common threats against on-premises and cloud environments

1.1.a On-premises: viruses, trojans, DoS/DDoS attacks, phishing, rootkits, man-in-the-middle attacks, SQL injection, cross-site scripting, malware

1.1.b Cloud: data breaches, insecure APIs, DoS/DDoS, compromised credentials

1.2 Compare common security vulnerabilities such as software bugs, weak and/or hardcoded passwords, SQL injection, missing encryption, buffer overflow, path traversal, cross-site scripting/forgery

1.5 Describe security intelligence authoring, sharing, and consumption

1.6 Explain the role of the endpoint in protecting humans from phishing and social engineering attacks

“Do I Know This Already?” Quiz

The “Do I Know This Already?” quiz allows you to assess whether you should read this entire chapter thoroughly or jump to the “Exam Preparation Tasks” section. If you are in doubt about your answers to these questions or your own assessment of your knowledge of the topics, read the entire chapter. Table 1-1 lists the major headings in this chapter and their corresponding “Do I Know This Already?” quiz questions. You can find the answers in Appendix A, “Answers to the ‘Do I Know This Already?’ Quizzes and Q&A Sections.”

Table 1-1 “Do I Know This Already?” Section-to-Question Mapping

| Foundation Topics Section | Questions |
| --- | --- |
| Introduction to Cybersecurity | 1 |
| Defining What Are Threats, Vulnerabilities, and Exploits | 2–6 |
| Common Software and Hardware Vulnerabilities | 7–10 |
| Confidentiality, Integrity, and Availability | 11–13 |
| Cloud Security Threats | 14–15 |
| IoT Security Threats | 16–17 |
| An Introduction to Digital Forensics and Incident Response | 18 |

1. Which of the following is a collection of industry standards and best practices to help organizations manage cybersecurity risks?

MITRE

NIST Cybersecurity Framework

ISO Cybersecurity Framework

CERT/CC

2. _________ is any potential danger to an asset.

Vulnerability

Threat

Exploit

None of these answers is correct.

3. A ___________ is a weakness in the system design, implementation, software, or code, or the lack of a mechanism.

Vulnerability

Threat

Exploit

None of these answers is correct.

4. Which of the following is a piece of software, a tool, a technique, or a process that takes advantage of a vulnerability that leads to access, privilege escalation, loss of integrity, or denial of service on a computer system?

Exploit

Reverse shell

Searchsploit

None of these answers is correct.

5. Which of the following is referred to as the knowledge about an existing or emerging threat to assets, including networks and systems?

Exploits

Vulnerabilities

Threat assessment

Threat intelligence

6. Which of the following are examples of malware attack and propagation mechanisms?

Master boot record infection

File infector

Macro infector

All of these answers are correct.

7. Vulnerabilities are typically identified by a ___________.

CVE

CVSS

PSIRT

None of these answers is correct.

8. SQL injection attacks can be divided into which of the following categories?

Blind SQL injection

Out-of-band SQL injection

In-band SQL injection

None of these answers is correct.

All of these answers are correct.

9. Which of the following is a type of vulnerability where the flaw is in a web application but the attack is against an end user (client)?

XXE

HTML injection

SQL injection

XSS

10. Which of the following is a way for an attacker to perform a session hijack attack?

Predicting session tokens

Session sniffing

Man-in-the-middle attack

Man-in-the-browser attack

All of these answers are correct.

11. A denial-of-service attack impacts which of the following?

Integrity

Availability

Confidentiality

None of these answers is correct.

12. Which of the following are examples of security mechanisms designed to preserve confidentiality?

Logical and physical access controls

Encryption

Controlled traffic routing

All of these answers are correct.

13. An attacker is able to manipulate the configuration of a router by stealing the administrator credential. This attack impacts which of the following?

Integrity

Session keys

Encryption

None of these answers is correct.

14. Which of the following is a cloud deployment model?

Public cloud

Community cloud

Private cloud

All of these answers are correct.

15. Which of the following cloud models includes all phases of the system development life cycle (SDLC) and can use application programming interfaces (APIs), website portals, or gateway software?

SaaS

PaaS

SDLC containers

None of these answers is correct.

16. Which of the following is not a communications protocol used in IoT environments?

Zigbee

INSTEON

LoRaWAN

802.1X

17. Which of the following is an example of tools and methods to hack IoT devices?

UART debuggers

JTAG analyzers

IDA

Ghidra

All of these answers are correct.

18. Which of the following is an adverse event that threatens business security and/or disrupts service?

An incident

An IPS alert

A DLP alert

A SIEM alert

Foundation Topics

Introduction to Cybersecurity

We live in an interconnected world where both individual and collective actions have the potential to result in inspiring goodness or tragic harm. The objective of cybersecurity is to protect each of us, our economy, our critical infrastructure, and our country from the harm that can result from inadvertent or intentional misuse, compromise, or destruction of information and information systems.

Cybersecurity risk includes not only the risk of a data breach but also the risk of the entire organization being undermined via business activities that rely on digitization and accessibility. As a result, learning how to develop an adequate cybersecurity program is crucial for any organization. Cybersecurity can no longer be something that you delegate to the information technology (IT) team. Everyone needs to be involved, including the board of directors.

Cybersecurity vs. Information Security (InfoSec)

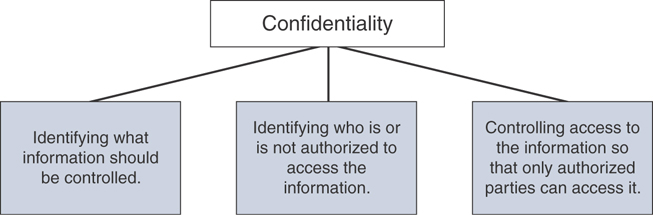

Many individuals confuse traditional information security with cybersecurity. In the past, information security programs and policies were designed to protect the confidentiality, integrity, and availability of data within the confines of an organization. Unfortunately, this is no longer sufficient. Organizations are rarely self-contained, and the price of interconnectivity is exposure to attack. Every organization, regardless of size or geographic location, is a potential target. Cybersecurity is the process of protecting information by preventing, detecting, and responding to attacks.

Cybersecurity programs recognize that organizations must be vigilant, resilient, and ready to protect and defend every ingress and egress connection as well as organizational data wherever it is stored, transmitted, or processed. Cybersecurity programs and policies expand and build upon traditional information security programs, but also include the following:

Cyber risk management and oversight

Threat intelligence and information sharing

Third-party organization, software, and hardware dependency management

Incident response and resiliency

The NIST Cybersecurity Framework

The National Institute of Standards and Technology (NIST) is a well-known nonregulatory federal agency within the U.S. Department of Commerce. NIST’s mission is to develop and promote measurement, standards, and technology to enhance productivity, facilitate trade, and improve quality of life. The Computer Security Division (CSD) is one of seven divisions within NIST’s Information Technology Laboratory. NIST’s Cybersecurity Framework is a collection of industry standards and best practices to help organizations manage cybersecurity risks. This framework was created through collaboration among the United States government, corporations, and individuals. The NIST Cybersecurity Framework can be accessed at https://www.nist.gov/cyberframework.

The NIST Cybersecurity Framework is developed with a common taxonomy, and one of the main goals is to address and manage cybersecurity risk in a cost-effective way to protect critical infrastructure. Although designed for a specific constituency, the requirements can serve as a security blueprint for any organization.

Additional NIST Guidance and Documents

Currently, there are more than 500 NIST information security–related documents. This number includes FIPS, the SP 800 and SP 1800 series, Information Technology Laboratory (ITL) bulletins, and NIST interagency reports (NISTIR):

Federal Information Processing Standards (FIPS): This is the official publication series for standards and guidelines.

Special Publication (SP) 800 series: This series reports on ITL research, guidelines, and outreach efforts in information system security and its collaborative activities with industry, government, and academic organizations. SP 800 series documents can be downloaded from https://csrc.nist.gov/publications/sp800.

Special Publication (SP) 1800 series: This series focuses on cybersecurity practices and guidelines. SP 1800 series documents can be downloaded from https://csrc.nist.gov/publications/sp1800.

NIST Internal or Interagency Reports (NISTIR): These reports focus on research findings, including background information for FIPS and SPs.

ITL bulletins: Each bulletin presents an in-depth discussion of a single topic of significant interest to the information systems community. Bulletins are issued on an as-needed basis.

From access controls to wireless security, the NIST publications are truly a treasure trove of valuable and practical guidance.

The International Organization for Standardization (ISO)

ISO is a network of the national standards institutes of more than 160 countries. ISO has developed more than 13,000 international standards on a variety of subjects, ranging from country codes to passenger safety.

The ISO/IEC 27000 series (also known as the ISMS Family of Standards, or ISO27k for short) comprises information security standards published jointly by the ISO and the International Electrotechnical Commission (IEC).

The first six documents in the ISO/IEC 27000 series provide recommendations for “establishing, implementing, operating, monitoring, reviewing, maintaining, and improving an Information Security Management System”:

ISO 27001 is the specification for an Information Security Management System (ISMS).

ISO 27002 describes the Code of Practice for information security management.

ISO 27003 provides detailed implementation guidance.

ISO 27004 outlines how an organization can monitor and measure security using metrics.

ISO 27005 defines the high-level risk management approach recommended by ISO.

ISO 27006 outlines the requirements for organizations that will measure ISO 27000 compliance for certification.

In all, there are more than 20 documents in the series, and several more are still under development. The framework is applicable to public and private organizations of all sizes. According to the ISO website, “the ISO standard gives recommendations for information security management for use by those who are responsible for initiating, implementing or maintaining security in their organization. It is intended to provide a common basis for developing organizational security standards and effective security management practice and to provide confidence in inter-organizational dealings.”

Defining What Are Threats, Vulnerabilities, and Exploits

In the following sections you will learn about the characteristics of threats, vulnerabilities, and exploits.

What Is a Threat?

A threat is any potential danger to an asset. If a vulnerability exists but has not yet been exploited (or, more importantly, is not yet publicly known), the threat is latent and not yet realized. If someone is actively launching an attack against your system and successfully accesses something or compromises the security of an asset, the threat is realized. The entity that takes advantage of the vulnerability is known as the malicious actor, and the path used by this actor to perform the attack is known as the threat agent or threat vector.

What Is a Vulnerability?

A vulnerability is a weakness in the system design, implementation, software, or code, or the lack of a mechanism. A specific vulnerability might manifest as anything from a weakness in system design to the implementation of an operational procedure. The correct implementation of safeguards and security countermeasures could mitigate a vulnerability and reduce the risk of exploitation.

Vulnerabilities and weaknesses are common, mainly because there isn’t any perfect software or code in existence. Some vulnerabilities have limited impact and are easily mitigated; however, many have broader implications.

Vulnerabilities can be found in each of the following:

Applications: Software and applications come with tons of functionality. Applications might be configured for usability rather than for security. Applications might be in need of a patch or update that may or may not be available. Attackers targeting applications have a target-rich environment to examine. Just think of all the applications running on your home or work computer.

Operating systems: Operating system software is loaded on workstations and servers. Attackers can search for vulnerabilities in operating systems that have not been patched or updated.

Hardware: Vulnerabilities can also be found in hardware. Mitigation of a hardware vulnerability might require patches to microcode (firmware) as well as the operating system or other system software. Good examples of well-known hardware-based vulnerabilities are Spectre and Meltdown. These vulnerabilities take advantage of a feature called “speculative execution” common to most modern processor architectures.

Misconfiguration: The configuration file and configuration setup for the device or software may be misconfigured or may be deployed in an unsecure state. This might be open ports, vulnerable services, or misconfigured network devices. Just consider wireless networking. Can you detect any wireless devices in your neighborhood that have encryption turned off?

Shrinkwrap software: This is the application or executable file that is run on a workstation or server. When installed on a device, it can have tons of functionality or sample scripts or code available.

Vendors, security researchers, and vulnerability coordination centers typically assign vulnerabilities an identifier that’s disclosed to the public. This identifier is known as a Common Vulnerabilities and Exposures (CVE) identifier. CVE is an industry-wide standard. CVE is sponsored by US-CERT, the Office of Cybersecurity and Communications at the U.S. Department of Homeland Security. Operating as DHS’s Federally Funded Research and Development Center (FFRDC), MITRE has copyrighted the CVE list for the benefit of the community in order to ensure it remains a free and open standard, as well as to legally protect the ongoing use of it and any resulting content by government, vendors, and/or users. MITRE maintains the CVE list and its public website, manages the CVE Compatibility Program, oversees the CVE Numbering Authorities (CNAs), and provides impartial technical guidance to the CVE Editorial Board throughout the process to ensure CVE serves the public interest.

The goal of CVE is to make it easier to share data across tools, vulnerability repositories, and security services.

More information about CVE is available at http://cve.mitre.org.
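Because CVE identifiers follow a fixed syntax (the prefix CVE, a four-digit year, and a sequence number of four or more digits), tools can pull them out of advisories and scanner reports automatically. The following Python snippet is a minimal sketch of that idea; the function name extract_cve_ids and the sample advisory text are illustrative assumptions, not part of any CVE tooling.

```python
import re

# CVE IDs have the form CVE-<4-digit year>-<4 or more digits>.
CVE_ID_PATTERN = re.compile(r"\bCVE-(\d{4})-(\d{4,})\b")


def extract_cve_ids(text: str) -> list[str]:
    """Return the unique CVE IDs found in text, in order of first appearance."""
    seen: dict[str, None] = {}
    for match in CVE_ID_PATTERN.finditer(text):
        seen.setdefault(match.group(0), None)
    return list(seen)


# Sample text written for this illustration only.
advisory = (
    "Spectre is tracked as CVE-2017-5753 and CVE-2017-5715; "
    "Meltdown is tracked as CVE-2017-5754. See CVE-2017-5753 for details."
)
print(extract_cve_ids(advisory))
```

Using the identifier as the common key is what makes it possible to correlate the same vulnerability across different tools and repositories.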

What Is an Exploit?

An exploit refers to a piece of software, a tool, a technique, or a process that takes advantage of a vulnerability that leads to access, privilege escalation, loss of integrity, or denial of service on a computer system. Exploits are dangerous because all software has vulnerabilities; hackers and perpetrators know that there are vulnerabilities and seek to take advantage of them. Although most organizations attempt to find and fix vulnerabilities, some organizations lack sufficient funds for securing their networks. Sometimes a vulnerability is exploited before anyone even knows it exists. That is known as a zero-day exploit. Even when you do know there is a problem, you are burdened with the fact that a window exists between when a vulnerability is disclosed and when a patch is available to prevent the exploit. The more critical the server, the more slowly it is usually patched. Management might be afraid of interrupting the server or afraid that the patch might affect stability or performance. Finally, the time required to deploy and install the software patch on production servers and workstations exposes an organization’s IT infrastructure to an additional period of risk.

There are several places where people trade exploits for malicious intent. The most prevalent is the “dark web.” The dark web (or darknet) is an overlay of networks and systems that use the Internet but require specific software and configurations to access. The dark web is just a small part of the “deep web.” The deep web is a collection of information and systems on the Internet that is not indexed by web search engines. People often confuse the term deep web with dark web.

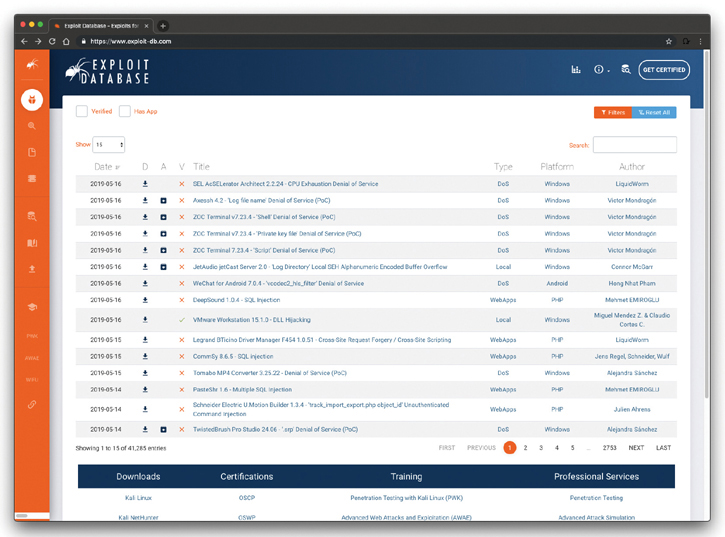

Not all exploits are shared for malicious intent. For example, many security researchers share proof-of-concept (POC) exploits in public sites such as The Exploit Database (or Exploit-DB) and GitHub. The Exploit Database is a site maintained by Offensive Security where security researchers and other individuals post exploits for known vulnerabilities. The Exploit Database can be accessed at https://www.exploit-db.com. Figure 1-1 shows different publicly available exploits in the Exploit Database.

There is a command-line tool called searchsploit that allows you to download a copy of the Exploit Database so that you can use it on the go. Figure 1-2 shows an example of how you can use searchsploit to search for specific exploits. In the example illustrated in Figure 1-2, searchsploit is used to search for exploits related to SMB vulnerabilities.

Figure 1-1 The Exploit Database (Exploit-DB)

Figure 1-2 Using Searchsploit

Risk, Assets, Threats, and Vulnerabilities

As with any new technology topic, to better understand the security field, you must learn the terminology that is used. To be a security professional, you need to understand the relationship between risk, threats, assets, and vulnerabilities.

Risk is the probability or likelihood of the occurrence or realization of a threat. There are three basic elements of risk: assets, threats, and vulnerabilities. To deal with risk, the U.S. federal government has adopted a risk management framework (RMF). The RMF process is based on the key concepts of mission- and risk-based, cost-effective, and enterprise information system security. NIST Special Publication 800-37, “Guide for Applying the Risk Management Framework to Federal Information Systems,” transforms the traditional Certification and Accreditation (C&A) process into the six-step Risk Management Framework (RMF). Let’s look at the various components associated with risk, which include assets, threats, and vulnerabilities.

An asset is any item of economic value owned by an individual or corporation. Assets can be real—such as routers, servers, hard drives, and laptops—or assets can be virtual, such as formulas, databases, spreadsheets, trade secrets, and processing time. Regardless of the type of asset discussed, if the asset is lost, damaged, or compromised, there can be an economic cost to the organization.

A threat sets the stage for risk and is any agent, condition, or circumstance that could potentially cause harm, loss, or damage, or compromise an IT asset or data asset. From a security professional’s perspective, threats can be categorized as events that can affect the confidentiality, integrity, or availability of the organization’s assets. These threats can result in destruction, disclosure, modification, corruption of data, or denial of service. Examples of the types of threats an organization can face include the following:

Natural disasters, weather, and catastrophic damage: Hurricanes, storms, weather outages, fire, flood, earthquakes, and other natural events compose an ongoing threat.

Hacker attacks: Attacks by an insider or outsider who is unauthorized and purposely targets an organization’s infrastructure, components, systems, or data.

Cyberattack: Attacks that target critical national infrastructures such as water plants, electric plants, gas plants, oil refineries, gasoline refineries, nuclear power plants, waste management plants, and so on. Stuxnet is an example of one such tool designed for just such a purpose.

Viruses and malware: An entire category of software tools that are malicious and are designed to damage or destroy a system or data.

Disclosure of confidential information: Anytime a disclosure of confidential information occurs, it can be a critical threat to an organization if such disclosure causes loss of revenue, causes potential liabilities, or provides a competitive advantage to an adversary. For instance, if your organization experiences a breach and detailed customer information is exposed (for example, personally identifiable information [PII]), such a breach could create liabilities and cost you the trust of your customers. Another example is when a threat actor steals source code or design documents and sells them to your competitors.

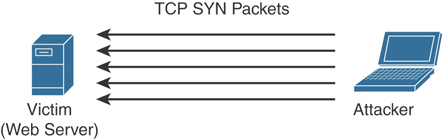

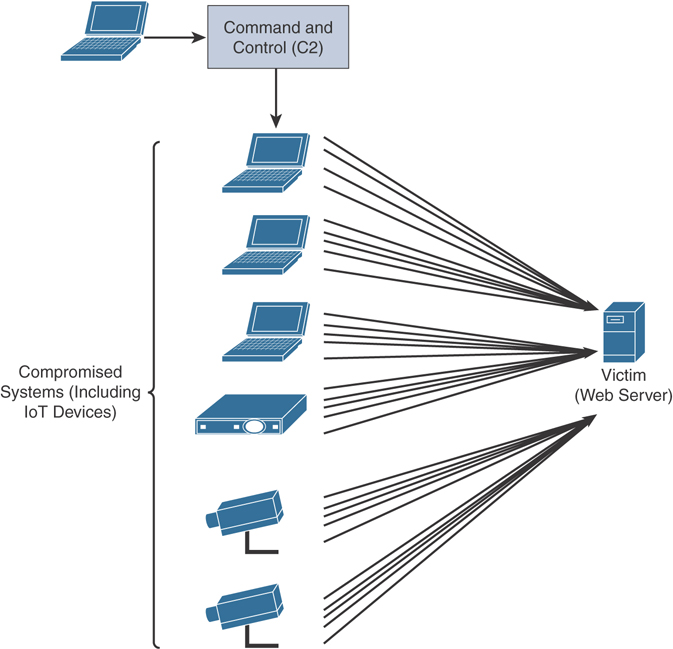

Denial of service (DoS) or distributed DoS (DDoS) attacks: An attack against availability that is designed to bring the network, or access to a particular TCP/IP host/server, to its knees by flooding it with useless traffic. Today, most DoS attacks are launched via botnets, whereas in the past tools such as the Ping of Death or Teardrop may have been used. As with malware, hackers constantly develop new tools, so botnets such as Storm and Mariposa are replaced with other, more current threats.

Defining Threat Actors

Threat actors are the individuals (or groups of individuals) who perform an attack or are responsible for a security incident that impacts or has the potential of impacting an organization or individual. There are several types of threat actors:

Script kiddies: People who use existing “scripts” or tools to hack into computers and networks. They lack the expertise to write their own scripts.

Organized crime groups: Their main purpose is to steal information, scam people, and make money.

State sponsors and governments: These agents are interested in stealing data, including intellectual property and research-and-development data from major manufacturers, government agencies, and defense contractors.

Hacktivists: People who carry out cybersecurity attacks aimed at promoting a social or political cause.

Terrorist groups: These groups are motivated by political or religious beliefs.

Originally, the term hacker was used for a computer enthusiast. A hacker was a person who enjoyed understanding the internal workings of a system, computer, and computer network and who would continue to hack until they understood everything about the system. Over time, the popular press began to describe hackers as individuals who broke into computers with malicious intent. The industry responded by developing the word cracker, which is short for criminal hacker. The term cracker was developed to describe individuals who seek to compromise the security of a system without permission from an authorized party. With all this confusion over how to distinguish the good guys from the bad guys, the term ethical hacker was coined. An ethical hacker is an individual who performs security tests and other vulnerability-assessment activities to help organizations secure their infrastructures. Sometimes ethical hackers are referred to as white hat hackers.

Hacker motives and intentions vary. Some hackers are strictly legitimate, whereas others routinely break the law. Let’s look at some common categories:

White hat hackers: These individuals perform ethical hacking to help secure companies and organizations. Their belief is that you must examine your network in the same manner as a criminal hacker to better understand its vulnerabilities.

Black hat hackers: These individuals perform illegal activities, such as organized crime.

Gray hat hackers: These individuals usually follow the law but sometimes venture over to the darker side of black hat hacking. It would be unethical to employ these individuals to perform security duties for your organization because you are never quite clear where they stand.

Understanding What Threat Intelligence Is

Threat intelligence is the knowledge about an existing or emerging threat to assets, including networks and systems. Threat intelligence includes context, mechanisms, indicators of compromise (IoCs), implications, and actionable advice. It is also described as the information about the observables, IoCs, intent, and capabilities of internal and external threat actors and their attacks. Threat intelligence includes specifics on the tactics, techniques, and procedures of these adversaries. Threat intelligence’s primary purpose is to inform business decisions regarding the risks and implications associated with threats.

Converting these definitions into common language could translate to threat intelligence being evidence-based knowledge of the capabilities of internal and external threat actors. This type of data can be beneficial for the security operations center (SOC) of any organization. Threat intelligence extends cybersecurity awareness beyond the internal network by consuming intelligence from sources across the Internet about possible threats to you or your organization. For instance, you can learn about threats that have impacted different external organizations. Subsequently, you can proactively prepare rather than react once the threat is seen against your network. Providing an enrichment data feed is one service that threat intelligence platforms would typically provide.

Figure 1-3 shows a five-step threat intelligence process for evaluating threat intelligence sources and information.

Figure 1-3 The Threat Intelligence Process

Many different threat intelligence platforms and services are available in the market nowadays. Cyber threat intelligence focuses on providing actionable information on adversaries, including IoCs. Threat intelligence feeds help you prioritize signals from internal systems against unknown threats. Cyber threat intelligence allows you to bring more focus to cybersecurity investigation because instead of blindly looking for “new” and “abnormal” events, you can search for specific IoCs, IP addresses, URLs, or exploit patterns.
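To make the idea of searching for specific IoCs concrete, the following Python snippet is a minimal sketch that checks log lines against a small set of indicators. Everything in it is an assumption made for illustration: the IoC values (taken from documentation-only address ranges and the reserved .example domain), the log format, and the function name find_ioc_hits. A real deployment would pull indicators from a threat intelligence feed and query a SIEM rather than scan a list of strings.

```python
import re

# Hypothetical IoCs, as they might arrive from a threat intelligence feed.
IOC_IPS = {"203.0.113.10", "198.51.100.77"}
IOC_DOMAINS = {"malicious.example"}

# Loose patterns that are good enough to pick candidates out of a log line.
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
DOMAIN = re.compile(r"\b(?:[a-z0-9-]+\.)+[a-z]{2,}\b", re.IGNORECASE)


def find_ioc_hits(log_lines):
    """Return (line_number, matched_iocs) for every line containing a known IoC."""
    hits = []
    for number, line in enumerate(log_lines, start=1):
        matched = {ip for ip in IPV4.findall(line) if ip in IOC_IPS}
        matched |= {d.lower() for d in DOMAIN.findall(line) if d.lower() in IOC_DOMAINS}
        if matched:
            hits.append((number, sorted(matched)))
    return hits


# Invented log lines in an invented format.
SAMPLE_LOG = [
    "2024-01-01T10:00:00 ALLOW src=10.0.0.5 dst=192.0.2.8 url=intranet.example",
    "2024-01-01T10:00:03 ALLOW src=10.0.0.9 dst=203.0.113.10 url=malicious.example",
    "2024-01-01T10:00:07 DENY src=198.51.100.77 dst=10.0.0.5",
]

for line_number, iocs in find_ioc_hits(SAMPLE_LOG):
    print(line_number, iocs)
```

The point of the sketch is the workflow: instead of reviewing every event, you filter for the handful of lines that touch a known indicator and investigate those first.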

A number of standards are being developed for disseminating threat intelligence information. The following are a few examples:

Structured Threat Information eXpression (STIX): An expression language designed for sharing cyber-attack information. STIX details can contain data such as the IP addresses or domain names of command-and-control servers (often referred to as C2 or CnC), malware hashes, and so on. STIX was originally developed by MITRE and is now maintained by OASIS. You can obtain more information at http://stixproject.github.io.

Trusted Automated eXchange of Indicator Information (TAXII): An open transport mechanism that standardizes the automated exchange of cyber-threat information. TAXII was originally developed by MITRE and is now maintained by OASIS. You can obtain more information at http://taxiiproject.github.io.

Cyber Observable eXpression (CybOX): A free standardized schema for the specification, capture, characterization, and communication of events or stateful properties that are observable in the operational domain. CybOX was originally developed by MITRE and is now maintained by OASIS. You can obtain more information at https://cyboxproject.github.io.

Open Indicators of Compromise (OpenIOC): An open framework for sharing threat intelligence in a machine-digestible format. Learn more at http://www.openioc.org.

Open Command and Control (OpenC2): A language for the command and control of cyber-defense technologies. OpenC2 Forum was a community of cybersecurity stakeholders that was facilitated by the U.S. National Security Agency. OpenC2 is now an OASIS technical committee (TC) and specification. You can obtain more information at https://www.oasis-open.org/committees/tc_home.php?wg_abbrev=openc2.
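To give a feel for what a shared indicator looks like, the following Python snippet builds a JSON object shaped like a STIX 2.1 indicator for a command-and-control IP address. Treat it as a sketch under stated assumptions: the property names follow the STIX 2.1 indicator object as commonly documented, but you should verify them against the OASIS specification before relying on them. The function name make_indicator, the IP address, and the indicator name are made up for this example.

```python
import json
import uuid
from datetime import datetime, timezone


def make_indicator(ip_address: str, name: str) -> dict:
    """Build a dictionary shaped like a STIX 2.1 indicator for one IPv4 address."""
    # STIX timestamps are UTC; this keeps millisecond precision and a trailing Z.
    now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": name,
        # STIX patterning expression that matches a single IPv4 address.
        "pattern": f"[ipv4-addr:value = '{ip_address}']",
        "pattern_type": "stix",
        "valid_from": now,
    }


# Hypothetical values; 203.0.113.10 is a documentation-only address.
indicator = make_indicator("203.0.113.10", "Hypothetical C2 server")
print(json.dumps(indicator, indent=2))
```

In practice, an object like this would be exchanged over a transport such as TAXII rather than printed to the screen.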

It should be noted that many open source and non-security-focused sources can be leveraged for threat intelligence as well. Some examples of these sources are social media, forums, blogs, and vendor websites.

Viruses and Worms

One thing that makes viruses unique is that a virus typically needs a host program or file to infect. Viruses require some type of human interaction. A worm can travel from system to system without human interaction. When a worm executes, it can replicate again and infect even more systems. For example, a worm can email itself to everyone in your address book and then repeat this process again and again from each user’s computer it infects. That massive amount of traffic can lead to a denial of service very quickly.
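A quick back-of-the-envelope calculation shows why worm traffic becomes a denial of service so quickly. The following Python snippet is a deliberately simplified model, not a description of any real worm: it assumes every infected system emails the same number of contacts, every recipient becomes infected, and no two address books overlap. The function name and the figure of 50 contacts are illustrative assumptions.

```python
def messages_per_round(contacts_per_host: int, rounds: int) -> list[int]:
    """Emails sent in each round if every recipient becomes a new sender."""
    return [contacts_per_host ** r for r in range(1, rounds + 1)]


# One infected system with 50 contacts, followed for four rounds.
traffic = messages_per_round(contacts_per_host=50, rounds=4)
print(traffic)       # [50, 2500, 125000, 6250000]
print(sum(traffic))  # 6377550
```

Even under these crude assumptions, a single infection produces millions of messages within four rounds, which is the geometric growth that overwhelms mail servers and networks.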

Spyware is closely related to viruses and worms. Spyware is considered another type of malicious software. In many ways, spyware is similar to a Trojan because most users don’t know that the program has been installed, and the program hides itself in an obscure location. Spyware steals information from the user and also eats up bandwidth. If that’s not enough, spyware can also redirect your web traffic and flood you with annoying pop-ups. Many users view spyware as another type of virus.

This section covers a brief history of computer viruses, common types of viruses, and some of the most well-known virus attacks. Also, some tools used to create viruses and the best methods of prevention are discussed.

Types and Transmission Methods

Although viruses have a history that dates back to the 1980s, their means of infection has changed over the years. Viruses depend on people to spread them. Viruses require human activity, such as booting a computer, executing an autorun on digital media (for example, CD, DVD, USB sticks, external hard drives, and so on), or opening an email attachment. Malware propagates through the computer world in several basic ways:

Master boot record infection: This is the original method of attack. It works by attacking the master boot record of the hard drive.

BIOS infection: This could make the system completely inoperable, or the device could hang before passing the power-on self-test (POST).

File infection: This includes malware that relies on the user to execute the file. Extensions such as .com and .exe are usually used. Some form of social engineering is normally used to get the user to execute the program. Techniques include renaming the program or trying to mask the .exe extension and make it appear as a graphic (.jpg, .bmp, .png, .svg, and the like).

Macro infection: Macro viruses exploit scripting services installed on your computer. Manipulating and using macros in Microsoft Excel, Microsoft Word, and Microsoft PowerPoint documents has been very popular in the past.

Cluster: This type of virus can modify directory table entries so that they point a user or system process to the malware and not the actual program.

Multipartite: This style of virus can use more than one propagation method and targets both the boot sector and program files. One example is the NATAS (Satan spelled backward) virus.
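The extension-masking trick described under file infection can be illustrated from the defender's side. The following is a minimal sketch, assuming you only want to flag filenames in which a harmless-looking extension is followed by an executable one. The two extension sets and the looks_masked function are hypothetical names chosen for this example and are far from a complete policy.

```python
from pathlib import PurePath

# Illustrative lists only; a real policy would be much broader.
EXECUTABLE_EXTS = {".exe", ".com", ".scr", ".bat"}
DECOY_EXTS = {".jpg", ".bmp", ".png", ".svg", ".pdf", ".doc"}


def looks_masked(filename: str) -> bool:
    """Return True if an executable extension directly follows a decoy one."""
    suffixes = [s.lower() for s in PurePath(filename).suffixes]
    if len(suffixes) < 2:
        return False
    return suffixes[-1] in EXECUTABLE_EXTS and suffixes[-2] in DECOY_EXTS


for name in ["holiday.jpg", "holiday.jpg.exe", "setup.exe", "report.final.pdf"]:
    print(name, looks_masked(name))
```

Only holiday.jpg.exe is flagged here. A check like this catches the simplest form of the trick; it does nothing against renamed files or icons chosen to look like documents.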

After your computer is infected, the malware can do any number of things. Some spread quickly. This type of virus is known as a fast infection. Fast-infection viruses infect any file that they are capable of infecting. Others limit the rate of infection. This type of activity is known as sparse infection. Sparse infection means that the virus takes its time in infecting other files or spreading its damage. This technique is used to try to help the virus avoid detection. Some viruses forgo a life of living exclusively in files and load themselves into RAM, which is the only way that boot sector viruses can spread.

As the antivirus and security companies have developed better ways to detect malware, malware authors have fought back by trying to develop malware that is harder to detect. For example, in 2012, Flame was believed to be the most sophisticated malware to date. Flame has the ability to spread to other systems over a local network. It can record audio, screenshots, and keyboard activity, and it can turn infected computers into Bluetooth beacons that attempt to download contact information from nearby Bluetooth-enabled devices. Another technique that malware developers have attempted is polymorphism. A polymorphic virus can change its signature every time it replicates and infects a new file. This technique makes it much harder for the antivirus program to detect it. One of the biggest changes is that malware creators don’t massively spread viruses and other malware the way they used to. Much of the malware today is written for a specific target. By limiting the spread of the malware and targeting only a few victims, malware developers make finding out about the malware and creating a signature to detect it much harder for antivirus companies.
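To see why a changing signature defeats simple detection, consider the most basic kind of signature: a hash of the file contents. The following sketch uses harmless placeholder bytes and assumes a scanner that only compares SHA-256 digests against a known list. Real antivirus engines use far richer signatures and heuristics, so treat this as an illustration of the idea only.

```python
import hashlib


def signature(data: bytes) -> str:
    """A naive 'signature': the SHA-256 digest of the whole file."""
    return hashlib.sha256(data).hexdigest()


# Harmless placeholder content standing in for a known sample.
original = b"HARMLESS-SAMPLE-BODY" + b"\x00"
known_signatures = {signature(original)}

# A copy whose final padding byte differs, as a stand-in for a mutated replica.
mutated = b"HARMLESS-SAMPLE-BODY" + b"\x01"

print(signature(original) in known_signatures)  # True
print(signature(mutated) in known_signatures)   # False
```

A single changed byte produces a completely different digest, so every replica that alters itself would need its own entry in the signature list.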

When is a virus not a virus? When is the virus just a hoax? A virus hoax is nothing more than a chain letter, meme, or email that encourages you to forward it to your friends to warn them of impending doom or some other notable event. To convince readers to forward the hoax, the email will contain some official-sounding information that could be mistaken as valid.

Malware Payloads

Malware must place its payload somewhere. It can always overwrite a portion of the infected file, but to do so would destroy it. Most malware writers want to avoid detection for as long as possible and might not have written the program to immediately destroy files. One way the malware writer can accomplish this is to place the malware code either at the beginning or the end of the infected file. Malware known as a prepender infects programs by placing its viral code at the beginning of the infected file, whereas an appender places its code at the end of the infected file. Both techniques leave the file intact, with the malicious code added to the beginning or the end of the file.
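The difference between a prepender and an appender can be modeled with plain byte strings. The sketch below uses harmless placeholder values (HOST and ADDED are made-up names, not real program code) and shows what an integrity checker would notice: the original bytes are still present, but the file size and hash no longer match the baseline.

```python
import hashlib

HOST = b"ORIGINAL-PROGRAM-BYTES"   # stand-in for a legitimate file
ADDED = b"[ADDED-CODE]"            # harmless placeholder for injected code


def prepend(host: bytes, added: bytes) -> bytes:
    """Model a prepender: added bytes come first, host follows untouched."""
    return added + host


def append(host: bytes, added: bytes) -> bytes:
    """Model an appender: host comes first, added bytes follow."""
    return host + added


baseline = hashlib.sha256(HOST).hexdigest()

for label, result in [("prepender", prepend(HOST, ADDED)),
                      ("appender", append(HOST, ADDED))]:
    intact = HOST in result                      # original content survives
    grew_by = len(result) - len(HOST)            # size change in bytes
    changed = hashlib.sha256(result).hexdigest() != baseline
    print(label, intact, grew_by, changed)
```

In both cases the host content survives, which is why the infected program can still run normally, while the changed size and hash are what file-integrity monitoring relies on.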

No matter the infection technique, all viruses have some basic common components, as detailed in the following list. For example, all viruses have a search routine and an infection routine.

Search routine: The search routine is responsible for locating new files, disk space, or RAM to infect. The search routine could include “profiling.” Profiling could be used to identify the environment and morph the malware to be more effective and potentially bypass detection.

Infection routine: The search routine is useless if the virus doesn’t have a way to take advantage of these findings. Therefore, the second component of a virus is an infection routine. This portion of the virus is responsible for copying the virus and attaching it to a suitable host. Malware could also use a re-infect/restart routine to further compromise the affected system.

Payload: Most viruses don’t stop here and also contain a payload. The purpose of the payload routine might be to erase the hard drive, display a message to the monitor, or possibly send the virus to 50 people in your address book. Payloads are not required, and without one, many people might never know that the virus even existed.

Antidetection routine: Many viruses might also have an antidetection routine. Its goal is to help make the virus more stealth-like and avoid detection.

Trigger routine: The goal of the trigger routine is to launch the payload at a given date and time. The trigger can be set to perform a given action at a given time.

Trojans

Trojans are programs that pretend to do one thing but, when loaded, actually perform another, more malicious act. Trojans take their name from the Greek legend of the Trojan horse, which is recounted in Homer’s Odyssey and Virgil’s Aeneid. To defeat their enemy, the Greeks built a giant wooden horse with a trapdoor in its belly. The Greeks tricked the Trojans into bringing the large wooden horse into the fortified city of Troy. However, unknown to the Trojans and under cover of darkness, the Greeks crawled out of the wooden horse, opened the city’s gate, and allowed the waiting soldiers into the city.

A software Trojan horse is based on this same concept. A user might think that a file looks harmless and is safe to run, but after the file is executed, it delivers a malicious payload. Trojans work because they typically present themselves as something you want, such as an email with a PDF, a Word document, or an Excel spreadsheet. Trojans work hard to hide their true purposes. The spoofed email might look like it’s from HR, and the attached file might purport to be a list of pending layoffs. The payload is executed if the attacker can get the victim to open the file or click the attachment. That payload might allow a hacker remote access to your system, start a keystroke logger to record your every keystroke, plant a backdoor on your system, cause a denial of service (DoS), or even disable your antivirus protection or software firewall.

Unlike a virus or worm, Trojans cannot spread themselves. They rely on the uninformed user.

Trojan Types

A few Trojan categories are command-shell Trojans, graphical user interface (GUI) Trojans, HTTP/HTTPS Trojans, document Trojans, defacement Trojans, botnet Trojans, Virtual Network Computing (VNC) Trojans, remote-access Trojans, data-hiding Trojans, banking Trojans, DoS Trojans, FTP Trojans, software-disabling Trojans, and covert-channel Trojans. In reality, it’s hard to place some Trojans into a single type because many have more than one function. To better understand what Trojans can do, refer to the following list, which outlines a few of these types:

Remote access: Remote-access Trojans (RATs) allow the attacker full control over the system. Poison Ivy is an example of this type of Trojan. Remote-access Trojans are usually set up as client/server programs so that the attacker can connect to the infected system and control it remotely.

Data hiding: The idea behind this type of Trojan is to hide a user’s data. This type of malware is also sometimes known as ransomware. This type of Trojan restricts access to the computer system that it infects, and it demands a ransom paid to the creator of the malware for the restriction to be removed.

E-banking: These Trojans (Zeus is one such example) intercept and use a victim’s banking information for financial gain. Usually, they function as a transaction authorization number (TAN) grabber, use HTML injection, or act as a form grabber. The sole purpose of these types of programs is financial gain.

Denial of service (DoS): These Trojans are designed to cause a DoS. They can be designed to knock out a specific service or to bring an entire system offline.

Proxy: These Trojans are designed to work as proxy programs that help a hacker hide and allow him to perform activities from the victim’s computer, not his own. After all, the farther away the hacker is from the crime, the harder it becomes to trace him.

FTP: These Trojans are specifically designed to work on port 21. They allow the hacker or others to upload, download, or move files at will on the victim’s machine.

Security-software disablers: These Trojans are designed to attack and kill antivirus or software firewalls. The goal of disabling these programs is to make it easier for the hacker to control the system.

Trojan Ports and Communication Methods

Trojans can communicate in several ways. Some use overt communications. These programs make no attempt to hide the transmission of data as it is moved on to or off of the victim’s computer. Most use covert communication channels. This means that the hacker goes to lengths to hide the transmission of data to and from the victim. Many Trojans that open covert channels also function as backdoors. A backdoor is any type of program that will allow a hacker to connect to a computer without going through the normal authentication process. If a hacker can get a backdoor program loaded on an internal device, the hacker can then come and go at will. Some of the programs spawn a connection on the victim’s computer connecting out to the hacker. The danger of this type of attack is the traffic moving from the inside out, which means from inside the organization to the outside Internet. Outbound traffic is usually the least restricted because companies are usually more concerned about what comes into the network than they are about what leaves the network.

Trojan Goals

Not all Trojans were designed for the same purpose. Some are destructive and can destroy computer systems, whereas others seek only to steal specific pieces of information. Although not all of them make their presence known, Trojans are still dangerous because they represent a loss of confidentiality, integrity, and availability. Common targets of Trojans include the following:

Credit card data: Credit card data and banking information have become huge targets. After the hacker has this information, he can go on an online shopping spree or use the card to purchase services, such as domain name registration.

Electronic or digital wallets: Individuals can use an electronic device or online service that allows them to make electronic transactions. This includes buying goods online or using a smartphone to purchase something at a store. A digital wallet can also be a cryptocurrency wallet (such as Bitcoin, Ethereum, Litecoin, Ripple, and so on).

Passwords: Passwords are always a big target. Many of us are guilty of password reuse. Even if we are not, there is always the danger that a hacker can extract email passwords or other online account passwords.

Insider information: We have all had those moments in which we have said, “If only I had known this beforehand.” That’s what insider information is about. It can give the hacker critical information before it is made public or released.

Data storage: The goal of the Trojan might be nothing more than to use your system for storage space. That data could be movies, music, illegal software (warez), or even pornography.

Advanced persistent threat (APT): It could be that the hacker has targeted you as part of a nation-state attack or your company has been targeted because of its sensitive data. Two examples include Stuxnet and the APT attack against RSA in 2011. These attackers might spend significant time and expense to gain access to critical and sensitive resources.

Trojan Infection Mechanisms

After a hacker has written a Trojan, he will still need to spread it. The Internet has made this much easier than it used to be. There are a variety of ways to spread malware, including the following:

Peer-to-peer networks (P2P): Although users might think that they are getting the latest copy of a computer game or the Microsoft Office package, in reality, they might be getting much more. P2P networks and file-sharing sites such as The Pirate Bay are generally unmonitored and allow anyone to spread any programs they want, legitimate or not.

Instant messaging (IM): IM was not built with security controls. So, you never know the real contents of a file or program that someone has sent you. IM users are at great risk of becoming targets for Trojans and other types of malware.

Internet Relay Chat (IRC): IRC is full of individuals ready to attack the newbies who are enticed into downloading a free program or application.

Email attachments: Attachments are another common way to spread a Trojan. To get you to open them, these hackers might disguise the message to appear to be from a legitimate organization. The message might also offer you a valuable prize, a desired piece of software, or similar enticement to pique your interest. If you feel that you must investigate these attachments, save them first and then run an antivirus on them. Email attachments are the number-one means of malware propagation. You might investigate them as part of your information security job to protect network users.

Physical access: If a hacker has physical access to a victim’s system, he can just copy the Trojan horse to the hard drive (via a thumb drive). The hacker can even take the attack to the next level by creating a Trojan that is unique to the system or network. It might be a fake login screen that looks like the real one or even a fake database.

Browser and browser extension vulnerabilities: Many users don’t update their browsers as soon as updates are released. Web browsers often treat the content they receive as trusted. The truth is that nothing in a web page can be trusted to follow any guidelines. A website can send to your browser data that exploits a bug in a browser, violates computer security, and might load a Trojan.

SMS messages: SMS messages have been used by attackers to propagate malware to mobile devices and to perform other scams.

Impersonated mobile apps: Attackers can impersonate apps in mobile stores (for example, Google Play or the Apple App Store) to infect users. Attackers can perform visual impersonation to intentionally misrepresent apps in the eyes of the user. Attackers can do this to repackage the application and republish the app to the marketplace under a different author. This tactic has been used by attackers to take a paid app and republish it to the marketplace for less than its original price. However, in the context of mobile malware, the attacker uses similar tactics to distribute a malicious app to a wide user audience while minimizing the invested effort. If the attacker repackages a popular app and appends malware to it, the attacker can leverage the user’s trust of their favorite apps and successfully compromise the mobile device.

Watering hole: The idea is to infect a website the attacker knows the victim will visit. Then the attacker simply waits for the victim to visit the watering hole site so the system can become infected.

Freeware: Nothing in life is free, and that includes most software. Users are taking a big risk when they download freeware from an unknown source. Not only might the freeware contain a Trojan, but freeware also has become a favorite target for adware and spyware.

Effects of Trojans

The effects of Trojans can range from the benign to the extreme. Individuals whose systems become infected might never even know; most of the creators of this category of malware don’t want to be detected, so they go to great lengths to hide their activity and keep their actions hidden. After all, their goal is typically to “own the box.” If the victim becomes aware of the Trojan’s presence, the victim will take countermeasures that threaten the attacker’s ability to keep control of the computer. In some cases, programs seemingly open by themselves or the web browser opens pages the user didn’t request. However, because the hacker is in control of the computer, he can change its background, reboot the systems, or capture everything the victim types on the keyboard.

Distributing Malware

Technology changes, and that includes malware distribution. The fact is that malware detection is much more difficult today than in the past. Today, it is not uncommon for attackers to use multiple layers of techniques to obfuscate code, make malicious code undetectable by antivirus, and employ encryption to prevent others from examining malware. The result is that modern malware improves the attackers’ chances of compromising a computer without being detected. These techniques include wrappers, packers, droppers, and crypters.

Wrappers offer hackers a method to slip past a user’s normal defenses. A wrapper is a program used to combine two or more executables into a single packaged program. Wrappers are also referred to as binders, packagers, and EXE binders because they are the functional equivalent of binders for Windows Portable Executable files. Some wrappers allow only two programs to be joined; others allow the binding together of three, four, five, or more programs. Basically, these programs perform like installation builders and setup programs. Besides allowing you to bind a program, wrappers add additional layers of obfuscation and encryption around the target file, essentially creating a new executable file.

Packers are similar to programs such as WinZip, Rar, and Tar because they compress files. However, whereas compression programs compress files to save space, packers do this to obfuscate the activity of the malware. The idea is to prevent anyone from viewing the malware’s code until it is placed in memory. Packers serve a second valuable goal to the attacker in that they work to bypass network security protection mechanisms, such as host- and network-based intrusion detection systems. The malware packer will decompress the program only when in memory, revealing the program’s original code only when executed. This is yet another attempt to bypass antimalware detection.
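The effect of packing on a simple content scan can be demonstrated with ordinary compression. This is a minimal sketch, assuming a scanner that looks for a fixed byte marker; it uses Python's zlib module as a stand-in for a packer, and MARKER and scan are hypothetical names for this example. Real packers also add an unpacking stub, which this sketch does not model.

```python
import zlib

MARKER = b"EXAMPLE-MARKER-1234"   # stand-in for a byte pattern a scanner knows
original = (b"harmless demo data with " + MARKER + b" inside. ") * 20

# "Pack" the content with ordinary compression.
packed = zlib.compress(original)


def scan(blob: bytes) -> bool:
    """Naive content scan: is the known marker present in the raw bytes?"""
    return MARKER in blob


print(scan(original))                 # marker visible in the original
print(scan(packed))                   # marker hidden in the packed form
print(scan(zlib.decompress(packed)))  # marker visible again once unpacked
```

The marker is only visible before packing and after unpacking, which is why scanning content after it has been unpacked in memory is more effective than scanning the file on disk.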

Droppers are software designed to install malware payloads on the victim’s system. Droppers try to avoid detection and evade security controls by using several methods to spread and install the malware payload.

Crypters function to encrypt or obscure the code. Some crypters obscure the contents of the Trojan by applying an encryption algorithm. Crypters can use anything from AES and RSA to Blowfish, or they might use more basic obfuscation techniques such as XOR, Base64 encoding, or even ROT13. Again, these techniques are used to conceal the contents of the executable program, making it harder for antivirus to detect and more resistant to reverse-engineering efforts.
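The basic obfuscation techniques named above are easy to try on a harmless string. The following sketch applies single-byte XOR, Base64, and ROT13 to a sentence that contains a made-up marker and then checks whether a naive byte search still finds it. The XOR key 0x5A is an arbitrary choice for illustration.

```python
import base64
import codecs

MARKER = "EXAMPLE-MARKER"
plain = f"harmless text containing {MARKER}"


def xor_bytes(data: bytes, key: int) -> bytes:
    """XOR every byte with a one-byte key; applying it twice restores the data."""
    return bytes(b ^ key for b in data)


encoded = {
    "xor": xor_bytes(plain.encode(), 0x5A),
    "base64": base64.b64encode(plain.encode()),
    "rot13": codecs.encode(plain, "rot13").encode(),
}

for name, blob in encoded.items():
    # A naive scanner searching for the raw marker finds nothing.
    print(name, MARKER.encode() in blob)

# Each transformation is trivially reversible once you know which one was used.
print(xor_bytes(encoded["xor"], 0x5A).decode() == plain)
print(base64.b64decode(encoded["base64"]).decode() == plain)
print(codecs.decode(encoded["rot13"].decode(), "rot13") == plain)
```

None of these three methods provides real confidentiality. They only defeat scanners that look for literal byte patterns, which is why analysts routinely try them when examining suspicious content.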

Ransomware

Over the past few years, ransomware has been used by criminals making money out of their victims and by hacktivists and nation-state attackers causing disruption. Ransomware can propagate like a worm or a virus but is designed to encrypt personal files on the victim’s hard drive until a ransom is paid to the attacker. Ransomware has been around for many years but made a comeback in recent years. The following are several examples of popular ransomware:

WannaCry

Petya

Nyetya

Sodinokibi

Bad Rabbit

GandCrab

SamSam

CryptoLocker

CryptoDefense

CryptoWall

Spora

Ransomware can encrypt specific files in your system or all your files, in some cases including the master boot record of your hard disk drive.

Covert Communication

Distributing malware is just half the battle for the attacker. The attacker will need to have some way to exfiltrate data and to do so in a way that is not detected. If you look at the history of covert communications, you will see that the Trusted Computer System Evaluation Criteria (TCSEC) was one of the first documents to fully examine the concept of covert communications and attacks. TCSEC divides covert channel attacks into two broad categories:

Covert timing channel attacks: Timing attacks are difficult to detect because they are based on system times and function by altering a component or by modifying resource timing.

Covert storage channel attacks: Use one process to write data to a storage area and another process to read the data.
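A covert timing channel can be modeled without sending any traffic. The following is a simplified simulation, assuming the sender signals a 0 with a short gap between events and a 1 with a long gap, and the receiver decodes by comparing each gap to a threshold. The gap values and function names are hypothetical and chosen only to make the idea concrete.

```python
SHORT_GAP = 0.1   # seconds between events that signal a 0 (assumed value)
LONG_GAP = 0.3    # seconds between events that signal a 1 (assumed value)
THRESHOLD = 0.2   # receiver treats any gap above this as a 1


def text_to_bits(text: str) -> str:
    return "".join(f"{byte:08b}" for byte in text.encode())


def bits_to_text(bits: str) -> str:
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8)).decode()


def encode_gaps(bits: str) -> list[float]:
    """Sender side: turn each bit into a delay before the next event."""
    return [LONG_GAP if bit == "1" else SHORT_GAP for bit in bits]


def decode_gaps(gaps: list[float]) -> str:
    """Receiver side: recover bits purely from observed timing."""
    return "".join("1" if gap > THRESHOLD else "0" for gap in gaps)


gaps = encode_gaps(text_to_bits("hi"))
observed = [gap + 0.02 for gap in gaps]   # small delay added in transit
print(bits_to_text(decode_gaps(observed)))  # hi
```

No data field carries the message, which is what makes timing channels hard to detect: the content of each event can be entirely legitimate.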

It is important to examine covert communication on a more focused scale because it will be examined here as a means of secretly passing information or data. For example, most everyone has seen a movie in which an informant signals the police that it’s time to bust the criminals. It could be that the informant lights a cigarette or simply tilts his hat. These small signals are meaningless to the average person who might be nearby, but for those who know what to look for, they are recognized as a legitimate signal.

In the world of hacking, covert communication is accomplished through a covert channel. A covert channel is a way of moving information through a communication channel or protocol in a manner in which it was not intended to be used. Covert channels are important for security professionals to understand. For the ethical hacker who performs attack and penetration assessments, such tools are important because hackers can use them to obtain an initial foothold into an otherwise secure network. For the network administrator, understanding how these tools work and their fingerprints can help her recognize potential entry points into the network. For the hacker, these are powerful tools that can potentially allow him control and access.

How do covert communications work? Well, the design of TCP/IP offers many opportunities for misuse. The primary protocols for covert communications include Internet Protocol (IP), Transmission Control Protocol (TCP), User Datagram Protocol (UDP), Internet Control Message Protocol (ICMP), and Domain Name System (DNS).

The Internet layer offers several opportunities for hackers to tunnel traffic. Two commonly tunneled protocols are IPv6 and ICMP.

IPv6 is like all protocols in that it can be abused or manipulated to act as a covert channel. This is primarily possible because edge devices might not be configured to recognize IPv6 traffic even though most operating systems have support for IPv6 turned on. According to US-CERT, Windows misuse relies on several factors:

Incomplete or inconsistent support for IPv6

The IPv6 autoconfiguration capability

Malware designed to enable IPv6 support on susceptible hosts

Malicious application of traffic “tunneling,” a method of Internet data transmission in which the public Internet is used to relay private network data

There are plenty of tools to tunnel over IPv6, including 6tunnel, socat, nt6tunnel, and relay6. The best way to maintain security with IPv6 is to recognize that even devices supporting IPv6 may not be able to correctly analyze the IPv6 encapsulation of IPv4 packets.
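One practical way to spot IPv6 carried inside IPv4 is to look at the Protocol field of the IPv4 header, where the value 41 indicates an encapsulated IPv6 packet. The sketch below builds sample 20-byte headers by hand and checks that field. The build_header helper is hypothetical, leaves the header checksum at zero, and uses documentation-range addresses, so it is suitable only for exercising the parser.

```python
import struct

IPPROTO_IPV6 = 41   # IPv4 Protocol value for encapsulated IPv6
IPPROTO_TCP = 6


def ipv4_protocol(header: bytes) -> int:
    """Return the Protocol field (byte offset 9) of an IPv4 header."""
    if len(header) < 20:
        raise ValueError("an IPv4 header is at least 20 bytes")
    if header[0] >> 4 != 4:
        raise ValueError("not an IPv4 header")
    return header[9]


def carries_ipv6(header: bytes) -> bool:
    return ipv4_protocol(header) == IPPROTO_IPV6


def build_header(protocol: int) -> bytes:
    """Build a sample header for testing; the checksum is left as zero."""
    return struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 20, 0, 0,        # version/IHL, TOS, total length, ID, flags
        64, protocol, 0,          # TTL, protocol, checksum (not computed)
        bytes([192, 0, 2, 1]),    # source: documentation address
        bytes([198, 51, 100, 1]), # destination: documentation address
    )


print(carries_ipv6(build_header(IPPROTO_IPV6)))  # True
print(carries_ipv6(build_header(IPPROTO_TCP)))   # False
```

A check like this finds only direct IPv6-in-IPv4 encapsulation. Tunnels that wrap IPv6 inside UDP or another protocol need deeper inspection.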

The second protocol that might be tunneled at the Internet layer is Internet Control Message Protocol (ICMP). ICMP is specified by RFC 792 and is designed to provide error messaging, best path information, and diagnostic messages. One example of this is the ping command. It uses ICMP to test an Internet connection.
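To make the structure of a ping concrete, the following sketch builds an ICMP echo request (type 8, code 0) in memory and computes its checksum. It does not send anything on the network. The function names are chosen for this example. Note that the payload after the 8-byte header is arbitrary data, which is the part of the message that tunneling tools can abuse.

```python
import struct


def internet_checksum(data: bytes) -> int:
    """One's-complement sum of 16-bit words, as used by ICMP."""
    if len(data) % 2:
        data += b"\x00"                      # pad to a whole number of words
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:                       # fold carries back into 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_echo_request(ident: int, seq: int, payload: bytes) -> bytes:
    """Build an ICMP echo request: type 8, code 0, checksum, id, sequence."""
    header = struct.pack("!BBHHH", 8, 0, 0, ident, seq)
    checksum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", 8, 0, checksum, ident, seq) + payload


packet = build_echo_request(0x1234, 1, b"abcdefgh")
print(len(packet))                 # 16: 8-byte header plus 8-byte payload
print(internet_checksum(packet))   # 0: a valid message sums to zero
```

Because a receiver only validates the checksum and header fields, unusually large or non-repeating echo payloads are one of the few visible signs of ICMP being used as a channel.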

The transport layer offers attackers two protocols to use: TCP and UDP. TCP offers several fields that can be manipulated by an attacker, including the TCP Options field in the TCP header and the TCP Flag field. By design, TCP is a connection-oriented protocol that provides robust communication. The following steps outline the normal TCP process:

A three-step handshake: This ensures that both systems are ready to communicate.

Exchange of control information: During the setup, information is exchanged that specifies maximum segment size.

Sequence numbers: This indicates the amount and position of data being sent.

Acknowledgments: This indicates the next byte of data that is expected.

Four-step shutdown: This is a formal process of ending the session that allows for an orderly shutdown.

Although SYN packets occur only at the beginning of the session, ACKs may occur thousands of times. They confirm that data was received. That is why packet-filtering devices build their rules on SYN segments. It is an assumption on the firewall administrator’s part that ACKs occur only as part of an established session. It is much easier to configure, and it reduces workload. To bypass the SYN blocking rule, a hacker may attempt to use TCP ACK packets as a covert communication channel. Tools such as AckCmd serve this exact purpose.
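The weakness of filtering only on SYN segments can be shown with a small model. The sketch below compares a stateless rule that drops inbound connection attempts (SYN without ACK) with a simplified stateful filter that allows inbound segments only for connections it saw being opened from the inside. The class and function names are hypothetical, and the connection tracking is reduced to address pairs for brevity.

```python
from dataclasses import dataclass

SYN, ACK = 0x02, 0x10   # TCP flag bits


@dataclass(frozen=True)
class Segment:
    src: str
    dst: str
    flags: int


def syn_only_filter(seg: Segment) -> bool:
    """Stateless rule: drop inbound bare SYNs, allow everything else."""
    is_new_connection = bool(seg.flags & SYN) and not (seg.flags & ACK)
    return not is_new_connection


class StatefulFilter:
    """Simplified tracking: remember which outside hosts were contacted."""

    def __init__(self) -> None:
        self.established: set[tuple[str, str]] = set()

    def note_outbound_syn(self, seg: Segment) -> None:
        self.established.add((seg.dst, seg.src))

    def allow_inbound(self, seg: Segment) -> bool:
        return (seg.src, seg.dst) in self.established


inbound_syn = Segment("203.0.113.5", "192.0.2.10", SYN)
unsolicited_ack = Segment("203.0.113.5", "192.0.2.10", ACK)
stateful = StatefulFilter()

print(syn_only_filter(inbound_syn))              # False: blocked
print(syn_only_filter(unsolicited_ack))          # True: slips through
print(stateful.allow_inbound(unsolicited_ack))   # False: no known session
```

The unsolicited ACK passes the stateless rule because the rule assumes every ACK belongs to an existing session. The stateful filter rejects it because it actually checks.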

UDP is stateless and, as such, may not be logged in firewall connections; some UDP-based applications such as DNS are typically allowed through the firewall and might not be watched closely by network and firewall administrators. UDP tunneling applications typically act in a client/server configuration. Also, some ports like UDP 53 are most likely open. This means it’s also open for attackers to use as a potential means to exfiltrate data. There are several UDP tunnel tools that you should check out, including the following:

UDP Tunnel: Designed to tunnel TCP traffic over a UDP connection. You can find UDP Tunnel at https://code.google.com/p/udptunnel/.

dnscat: Another option for tunneling data over an open DNS connection. You can download the current version, dnscat2, at https://github.com/iagox86/dnscat2.

Application layer tunneling uses common applications that send data on allowed ports. For example, a hacker might tunnel a web session, port 80, through SSH port 22 or even through port 443. Because ports 22 and 443 both use encryption, it can be difficult to monitor the difference between a legitimate session and a covert channel.

HTTP might also be used. Netcat is one tool that can be used to set up a tunnel to exfiltrate data over HTTP. If HTTPS is the transport, it is difficult for the network administrator to inspect the outbound data. Cryptcat (http://cryptcat.sourceforge.net) can be used to send data over HTTPS.

Finally, even Domain Name System (DNS) can be used for application layer tunneling. DNS is a request/reply protocol. Its queries consist of a 12-byte fixed-size header followed by one or more questions. A DNS response is formatted in much the same way in that it has a header, followed by the original question, and then typically a single-answer resource record. The most straightforward way to manipulate DNS is by means of these request/replies. You can easily detect a spike in DNS traffic; however, many times attackers move data using DNS without being detected for days, weeks, or months. They schedule the DNS exfiltration packets in a way that makes it harder for a security analyst or automated tools to detect.
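The layout of a DNS query described above can be reproduced in a few lines. The following sketch builds a query for an A record in memory, showing the 12-byte header followed by one question, and adds a very simple heuristic that reports the longest label in a name. The function names and the idea of using label length as a warning sign are assumptions for this example; real detection also looks at query volume, timing, and how random the names appear.

```python
import struct


def build_dns_query(name: str, query_id: int = 0x1234) -> bytes:
    """Build a DNS query: 12-byte header plus one question (type A, class IN)."""
    # Header fields: ID, flags (recursion desired), QDCOUNT, ANCOUNT, NSCOUNT, ARCOUNT
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    labels = name.rstrip(".").split(".")
    # Each label is prefixed with its length; a zero byte ends the name.
    qname = b"".join(bytes([len(label)]) + label.encode("ascii") for label in labels)
    question = qname + b"\x00" + struct.pack("!HH", 1, 1)
    return header + question


def longest_label(name: str) -> int:
    """Length of the longest label; data hidden in names tends to inflate this."""
    return max(len(label) for label in name.rstrip(".").split("."))


query = build_dns_query("www.example.com")
print(len(query[:12]), len(query))   # 12 33
print(longest_label("www.example.com"))
print(longest_label("6d7973656372657464617461313233343536373839.example.com"))
```

Ordinary hostnames have short labels, whereas data smuggled out in query names has to be packed into the labels themselves, so long or random-looking labels are worth a closer look.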

Keyloggers

Keystroke loggers (keyloggers) are software or hardware devices used to record everything a person types. Some of these programs can record every time a mouse is clicked, a website is visited, and a program is opened. Although not truly a covert communication tool, these devices do enable a hacker to covertly monitor everything a user does. Some of these devices secretly email all the amassed information to a predefined email address set up by the hacker.

The software version of this device is basically a shim, as it sits between the operating system and the keyboard. The hacker might send a victim a keystroke-logging program wrapped up in much the same way as a Trojan would be delivered. Once installed, the logger can operate in stealth mode, which means that it is hard to detect unless you know what you are looking for.

There are ways to make keyloggers completely invisible to the OS and to those examining the file system. To accomplish this, all the hacker has to do is use a hardware keylogger. These devices are usually installed while the user is away from his desk. Hardware keyloggers are completely undetectable except for their physical presence. Even then, they might be overlooked because they resemble a keyboard cable extension or adapter. Not many people pay close attention to the plugs on the back of their computer.

To stay on the right side of the law, employers who plan to use keyloggers should make sure that company policy outlines their use and how employees are to be informed. The CERT Division of the Software Engineering Institute (SEI) recommends a warning banner similar to the following: “This system is for the use of authorized personnel only. If you continue to access this system, you are explicitly consenting to monitoring.”

Keystroke recorders have been around for years. Hardware keyloggers can be wireless or wired. Wireless keyloggers can communicate via 802.11 or Bluetooth, and wired keyloggers must be retrieved to access the stored data. One such example of a wired keylogger is KeyGhost, a commercial device that is openly available worldwide from a New Zealand firm that goes by the name of KeyGhost Ltd (http://www.keyghost.com). The device looks like a small adapter on the cable connecting one’s keyboard to the computer. This device requires no external power, lasts indefinitely, and cannot be detected by any software.

Numerous software products that record all keystrokes are openly available on the Internet. You have to pay for some products, but others are free.

Spyware

Spyware is another form of malicious code that is similar to a Trojan. It is installed without your consent or knowledge, hidden from view, monitors your computer and Internet usage, and is configured to run in the background each time the computer starts. Spyware has grown to be a big problem. It is usually used for one of two purposes:

Surveillance: Used to determine your buying habits, discover your likes and dislikes, and report this demographic information to paying marketers.

Advertising: You’re targeted for advertising that the spyware vendor has been paid to deliver. For example, the maker of a rhinestone cell phone case might have paid the spyware vendor for 100,000 pop-up ads. If you have been infected, expect to receive more than your share of these unwanted pop-up ads.

Many times, spyware sites and vendors use droppers to covertly drop their spyware components to the victim’s computer. Basically, a dropper is just another name for a wrapper, because a dropper is a standalone program that drops different types of standalone malware to a system.

Spyware programs are similar to Trojans in that there are many ways to become infected. To force the spyware to restart each time the system boots, code is usually hidden in the Registry run keys, the Windows Startup folder, the Windows load= or run= lines found in the Win.ini file, or the Shell= line found in the Windows System.ini file. Spyware, like other malware, may also make changes to the hosts file. This is done to block traffic to the download or update servers of well-known security vendors, or to redirect traffic bound for advertisement servers to servers of the attacker’s choice so that the original advertisements are replaced with the attacker’s own.

If you are dealing with systems that have had spyware installed, start by looking at the hosts file and the other locations discussed previously or use a spyware removal program. It’s good practice to use more than one antispyware program to find and remove as much spyware as possible.
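The hosts-file review described above can be partly automated. The following minimal Python sketch parses hosts-file content and reports entries that mention security-vendor domains. The function name, the WATCHED_DOMAINS list, and the sample entries are illustrative assumptions, not a vetted list of indicators.

```python
# Hypothetical watch list: substitute the update/download domains of the
# security products actually deployed in your environment.
WATCHED_DOMAINS = ("update.example-av.test", "download.example-av.test")


def find_suspicious_hosts_entries(hosts_text, watched=WATCHED_DOMAINS):
    """Return (ip, hostname) pairs in hosts-file text that match a watched domain."""
    findings = []
    for raw_line in hosts_text.splitlines():
        # Drop comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if not line:
            continue
        fields = line.split()
        ip, hostnames = fields[0], fields[1:]
        for name in hostnames:
            lowered = name.lower()
            if any(lowered == d or lowered.endswith("." + d) for d in watched):
                findings.append((ip, name))
    return findings


# Sample content standing in for a real hosts file.
sample = """
127.0.0.1   localhost
# entries below were not added by the administrator
127.0.0.1   update.example-av.test
203.0.113.7 download.example-av.test   # redirected
"""

for ip, name in find_suspicious_hosts_entries(sample):
    print(f"{name} is mapped to {ip}")
```

On Windows the hosts file is normally found at %SystemRoot%\System32\drivers\etc\hosts, and on Linux and macOS at /etc/hosts. Any entry that maps a security vendor domain to a loopback or unfamiliar address deserves a closer look.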

Analyzing Malware

Malware analysis can be extremely complex. Although an in-depth look at this area of cybersecurity is beyond the scope of this book, you should have a basic understanding of how analysis is performed. There are two basic methods to analyze viruses and other malware:

Static analysis

Dynamic analysis

Static Analysis

Static analysis is concerned with the decompiling, reverse engineering, and analysis of malicious software. The field is an outgrowth of the field of computer virus research and malware intent determination. Consider examples such as Conficker, Stuxnet, Aurora, and the Black Hole Exploit Kit. Static analysis makes use of disassemblers and decompilers to format the data into a human-readable format. Several useful tools are listed here:

IDA Pro: An interactive disassembler that you can use for decompiling code. It’s particularly useful in situations in which the source code is not available, such as with malware. IDA Pro lets the user view a disassembled representation of the program and review the instructions that are executed by the processor. IDA Pro uses advanced techniques to make that code more readable. You can download and obtain additional information about IDA Pro at https://www.hex-rays.com/products/ida/.

Evan’s Debugger (edb): A cross-platform AArch32/x86/x86-64 debugger that is used primarily on Linux. You can download and obtain additional information about Evan’s Debugger at https://github.com/eteran/edb-debugger.

BinText: Another tool that is useful to the malware analyst. BinText is a text extractor that will be of particular interest to programmers. It can extract text from any kind of file and includes the ability to find plain ASCII text, Unicode (double-byte ANSI) text, and resource strings, providing useful information for each item in the optional “advanced” view mode. You can download and obtain additional information about BinText from the following URL: https://www.aldeid.com/wiki/BinText.

UPX: A packer, compression, and decompression tool. You can download and obtain additional information about UPX at https://upx.github.io.

OllyDbg: A debugger that allows for the analysis of binary code where source is unavailable. You can download and obtain additional information about OllyDbg at http://www.ollydbg.de.

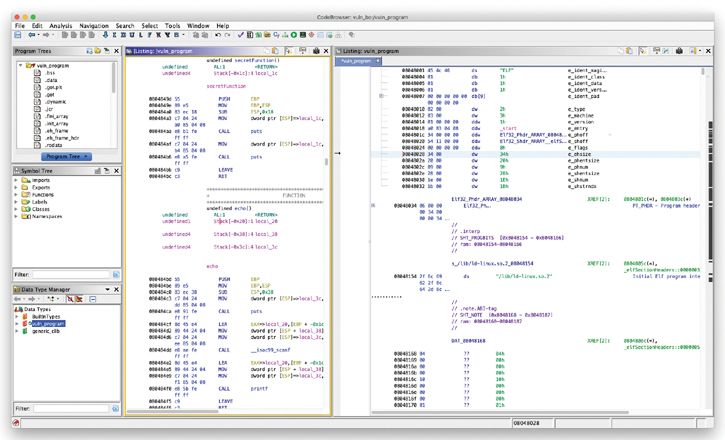

Ghidra: A software reverse engineering tool developed by the U.S. National Security Agency (NSA) Research Directorate. Figure 1-4 shows an example of a file being reverse engineered using Ghidra. You can download and obtain additional information about Ghidra at https://ghidra-sre.org.

Figure 1-4 The Ghidra Reverse Engineering Toolkit
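The kind of text extraction that a tool such as BinText performs can be approximated in a few lines. The following Python sketch is a simplified illustration, not BinText’s actual algorithm: it scans a byte string for runs of printable ASCII characters and for the same characters stored as UTF-16LE. The function name, the minimum length of four characters, and the sample bytes are assumptions chosen for the example.

```python
import re


def extract_strings(data, min_len=4):
    """Return printable ASCII and UTF-16LE strings of at least min_len characters."""
    # Runs of printable ASCII bytes (space through tilde).
    ascii_pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    # The same characters stored as UTF-16LE: each one followed by a zero byte.
    wide_pattern = re.compile(rb"(?:[\x20-\x7e]\x00){%d,}" % min_len)

    found = [m.group().decode("ascii") for m in ascii_pattern.finditer(data)]
    found += [m.group().decode("utf-16-le") for m in wide_pattern.finditer(data)]
    return found


# Made-up bytes standing in for the contents of a suspect file.
blob = (
    b"\x00\x01MZ\x90\x00http://example.test/payload\x00\xff"
    + "cmd.exe".encode("utf-16-le")
    + b"\x00\x00"
)

for text in extract_strings(blob):
    print(text)
```

Strings recovered this way (URLs, file names, Registry paths) often give an early hint about what a sample does before any disassembly is attempted.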

Dynamic Analysis

Dynamic analysis of malware and viruses is the second method that may be used. Dynamic analysis relates to the monitoring and analysis of computer activity and network traffic. This requires the ability to configure the network device for monitoring, look for unusual or suspicious activity, and try not to alert attackers. This approach requires the preparation of a testbed. Before you begin setting up a dynamic analysis lab, remember that the number-one goal is to keep the malware contained. If you allow the host system to become compromised, you have defeated the entire purpose of the exercise. Virtual systems share many resources with the host system and can quickly become compromised if the configuration is not handled correctly. Here are a few pointers for preventing malware from escaping the isolated environment to which it should be confined:

Install a virtual machine (VM).

Install a guest operating system on the VM.

Isolate the host system from the guest VM.

Verify that all sharing and transfer of data is blocked between the host operating system and the virtual system.

Copy the malware over to the guest operating system and prepare for analysis.

Malware authors sometimes use anti-VM techniques to thwart attempts at analysis. If you try to run the malware in a VM, it might be designed not to execute. For example, one simple way is to get the MAC address; if the Organizationally Unique Identifier (OUI) matches a VM vendor, the malware will not execute.
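To make the OUI check concrete, the following Python sketch compares the first three octets of a MAC address against a short table of prefixes commonly associated with virtualization vendors. The table is illustrative and far from exhaustive, and the function name is an assumption for this example.

```python
# OUI prefixes commonly associated with virtualization vendors.
# This short list is illustrative, not exhaustive.
VM_OUIS = {
    "00:05:69": "VMware",
    "00:0C:29": "VMware",
    "00:1C:14": "VMware",
    "00:50:56": "VMware",
    "08:00:27": "VirtualBox",
    "00:15:5D": "Microsoft Hyper-V",
    "00:16:3E": "Xen",
}


def vm_vendor_for_mac(mac):
    """Return the VM vendor whose OUI matches the MAC address, or None."""
    # Normalize separators and case, then keep the first three octets (the OUI).
    octets = mac.replace("-", ":").upper().split(":")
    if len(octets) != 6:
        raise ValueError(f"not a MAC address: {mac!r}")
    return VM_OUIS.get(":".join(octets[:3]))


print(vm_vendor_for_mac("00:0c:29:ab:cd:ef"))
print(vm_vendor_for_mac("3C:22:FB:12:34:56"))
```

An analyst can run the same kind of check against the adapter of a lab guest and, if the prefix gives the VM away, assign a different MAC address before executing the sample.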

The malware may also look to see whether there is an active network connection. If not, it may refuse to run. One tool to help overcome this barrier is FakeNet. FakeNet simulates a network connection so that malware interacting with a remote host continues to run. If you are forced to detect the malware by discovering where it has installed itself on the local system, there are some known areas to review:

Running processes

Device drivers

Windows services

Startup programs

Operating system files

Malware has to install itself somewhere, and by a careful analysis of the system, files, memory, and folders, you should be able to find it.

Several sites are available that can help analyze suspect malware. These online tools can provide a quick and easy analysis of files when reverse engineering and decompiling are not possible. Most of these sites are easy to use and offer a straightforward point-and-click interface. These sites generally operate as a sandbox. A sandbox is simply a standalone environment that allows you to safely view or execute the program while keeping it contained. A good example of a sandbox service is Cisco ThreatGrid, a tool and service that tracks changes made to the file system, Registry, memory, and network.

During a network security assessment, you may discover malware or other suspected code. You should have an incident response plan that addresses how to handle these situations. If you’re using only one antivirus product to scan for malware, you may be missing a lot. As you learned in the previous section, websites such as the Cisco Talos File Reputation Lookup site (https://www.talosintelligence.com/reputation) and VirusTotal (https://virustotal.com) allow you to upload files to verify if they may be known malware.
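Many file reputation services also accept a file hash as a search term, which lets you check a sample without uploading it. The following Python sketch computes the SHA-256 digest of a file in chunks so that large samples do not have to fit in memory. The function name and chunk size are assumptions, and the temporary file simply stands in for a suspect sample.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path, chunk_size=65536):
    """Return the hex SHA-256 digest of the file at path, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Demonstration on a throwaway file standing in for a suspect sample.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"abc")
sample_path = tmp.name
sample_hash = sha256_of_file(sample_path)
os.remove(sample_path)
print(sample_hash)
```

The resulting hexadecimal string can be pasted into the search field of a reputation service. A lookup that returns no result does not prove that the file is benign; it only means that the service has not seen that exact file.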

The tools and techniques listed here offer some insight into how static malware analysis is performed, but don’t expect malware writers to make the analysis of their code easy. Many techniques can be used to make disassembly challenging:

Encryption

Obfuscation

Encoding

Anti-VM

Anti-debugger
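To see why even simple encoding frustrates static analysis, consider the following Python sketch. It applies a single-byte XOR, one of the simplest encoding schemes, to a made-up indicator string. The key 0x5A and the URL are arbitrary values chosen for illustration; real samples often layer several such techniques.

```python
def xor_bytes(data, key):
    """XOR every byte of data with a single-byte key (the same call decodes)."""
    return bytes(b ^ key for b in data)


plain = b"http://c2.example.test/beacon"
encoded = xor_bytes(plain, 0x5A)

# The readable indicator no longer appears in the encoded form...
print(b"http" in encoded)
# ...but applying the same key again restores it.
print(xor_bytes(encoded, 0x5A) == plain)
```

A plain string search over the encoded bytes finds nothing useful, so the analyst has to recover the key or observe the decoded value at run time.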

Common Software and Hardware Vulnerabilities

The number of disclosed vulnerabilities continues to rise. You can keep up with vulnerability disclosures by subscribing to vulnerability feeds and searching public repositories such as the National Vulnerability Database (NVD). The NVD can be accessed at https://nvd.nist.gov.

There are many different software and hardware vulnerabilities and related categories. The sections that follow include a few examples.

Injection Vulnerabilities

The following are examples of injection-based vulnerabilities:

SQL injection vulnerabilities

HTML injection vulnerabilities

Command injection vulnerabilities

Code injection vulnerabilities are exploited by forcing an application or a system to process invalid data. An attacker takes advantage of this type of vulnerability to inject code into a vulnerable system and change the course of execution. Successful exploitation can lead to the disclosure of sensitive information, manipulation of data, denial-of-service conditions, and more. Examples of code injection vulnerabilities include the following:

SQL injection

HTML script injection

Dynamic code evaluation

Object injection

Remote file inclusion

Uncontrolled format string

Shell injection

SQL Injection

SQL injection (SQLi) vulnerabilities can be catastrophic because they can allow an attacker to view, insert, delete, or modify records in a database. In an SQL injection attack, the attacker inserts, or injects, partial or complete SQL queries via the web application. The attacker injects SQL commands into input fields in an application or a URL in order to execute predefined SQL commands.

Web applications construct SQL statements involving SQL syntax invoked by the application mixed with user-supplied data, as shown in Figure 1-5.

Figure 1-5 An Explanation of an SQL Statement

The first portion of the SQL statement shown in Figure 1-5 is not shown to the user. Typically, the application sends this portion to the database behind the scenes. The second portion of the SQL statement is typically user input in a web form.

If an application does not sanitize user input, an attacker can supply crafted input in an attempt to make the original SQL statement execute further actions in the database. SQL injections can be done using user-supplied strings or numeric input. Figure 1-6 shows an example of a basic SQL injection attack.

Figure 1-6 Example of an SQL Injection Vulnerability

Figure 1-6 shows an intentionally vulnerable application (WebGoat) being used to demonstrate the effects of an SQL injection attack. When the string Snow' OR 1='1 is entered in the web form, it causes the application to display all records in the database table to the attacker.
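The same effect can be reproduced locally with an in-memory SQLite database. The following Python sketch is a minimal illustration, not the WebGoat implementation: the table name, column names, sample rows, and function names are all assumptions. It uses the closely related payload Snow' OR '1'='1, because how a comparison such as 1='1 is evaluated depends on the type-comparison rules of the database engine.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE user_data (first_name TEXT, last_name TEXT)")
conn.executemany(
    "INSERT INTO user_data VALUES (?, ?)",
    [("Jon", "Snow"), ("Ann", "Smith"), ("Lee", "Jones")],
)


def lookup_vulnerable(last_name):
    # UNSAFE: user input is concatenated directly into the SQL text.
    query = (
        "SELECT first_name, last_name FROM user_data "
        "WHERE last_name = '" + last_name + "'"
    )
    return conn.execute(query).fetchall()


def lookup_parameterized(last_name):
    # Safer: the driver passes the input as data, never as SQL syntax.
    query = "SELECT first_name, last_name FROM user_data WHERE last_name = ?"
    return conn.execute(query, (last_name,)).fetchall()


payload = "Snow' OR '1'='1"
print(len(lookup_vulnerable("Snow")))      # legitimate lookup
print(len(lookup_vulnerable(payload)))     # injected OR clause matches every row
print(len(lookup_parameterized(payload)))  # payload treated as a literal last name
```

In the vulnerable function, the quote in the payload closes the string literal early, and the appended OR condition is true for every row. In the parameterized version, the whole payload is compared against the last_name column as ordinary text, so nothing matches.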

One of the first steps when finding SQL injection vulnerabilities is to understand when the application interacts with a database. This is typically done with web authentication forms, search engines, and interactive sites such as e-commerce sites.

SQL injection attacks can be divided into the following categories:

In-band SQL injection: With this type of injection, the attacker obtains the data by using the same channel that is used to inject the SQL code. This is the most basic form of an SQL injection attack, where the data is dumped directly in a web application (or web page).

Out-of-band SQL injection: With this type of injection, the attacker retrieves data using a different channel. For example, an email, a text, or an instant message could be sent to the attacker with the results of the query. Alternatively, the attacker might be able to send the compromised data to another system.

Blind (or inferential) SQL injection: With this type of injection, the attacker does not make the application display or transfer any data; rather, the attacker is able to reconstruct the information by sending specific statements and discerning the behavior of the application and database.

To perform an SQL injection attack, an attacker must craft a syntactically correct SQL statement (query). The attacker may also take advantage of error messages coming back from the application and might be able to reconstruct the logic of the original query to understand how to execute the attack correctly. If the application hides the error details, the attacker might need to reverse engineer the logic of the original query.

HTML Injection

An HTML injection is a vulnerability that occurs when an unauthorized user is able to control an input point and inject arbitrary HTML code into a web application. Successful exploitation could lead to disclosure of a user’s session cookies, which an attacker could use to impersonate the victim, or it could allow the attacker to modify the web page or application content seen by victims.

HTML injection vulnerabilities can lead to cross-site scripting (XSS). You will learn details about the different types of XSS vulnerabilities and attacks later in this chapter.
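The following Python sketch illustrates the difference between placing user input into a page as-is and encoding it first. The two rendering functions and the sample comment are hypothetical; html.escape is part of the Python standard library. Output encoding is only one of several defenses, and this is a simplified illustration rather than a complete one.

```python
import html


def render_comment_unsafe(comment):
    # UNSAFE: user input is placed into the page as-is.
    return "<p>" + comment + "</p>"


def render_comment_escaped(comment):
    # html.escape converts &, <, >, and quotes into HTML entities.
    return "<p>" + html.escape(comment) + "</p>"


attack = '<script>alert("x")</script>'
print(render_comment_unsafe(attack))
print(render_comment_escaped(attack))
```

In the first case, a browser would interpret the injected markup as part of the page. In the second, the browser displays the characters as text because the angle brackets and quotes have been replaced with entities.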

Command Injection

A command injection is an attack in which an attacker tries to execute commands that he or she is not supposed to be able to execute on a system via a vulnerable application. Command injection attacks are possible when an application does not validate data supplied by the user (for example, data entered in web forms, cookies, HTTP headers, and other elements). The vulnerable system passes that data into a system shell.

With command injection, an attacker tries to send operating system commands so that the application can execute them with the privileges of the vulnerable application. Command injection is not the same as code execution and code injection, which involve exploiting a buffer overflow or similar vulnerability.
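As a hedged illustration of the validation step that vulnerable applications skip, the following Python sketch builds the argument list for a ping command only after confirming that the user-supplied value is a literal IP address. The function names are hypothetical, and the -c flag assumes a Unix-like ping (Windows uses -n instead). The sketch does not execute anything; it only shows how the input is checked and kept separate from the command.

```python
import ipaddress

# A vulnerable application might instead build one string for a shell, such as
# "ping -c 1 " + host, where input like "127.0.0.1; cat /etc/passwd" would
# cause the shell to run a second command.


def build_ping_argv(host):
    """Validate host as an IP address and return an argument list for ping."""
    # ip_address() raises ValueError for anything that is not a literal
    # IPv4/IPv6 address, which rejects shell metacharacters such as ; | &.
    address = ipaddress.ip_address(host)
    # An argument list (not a single string) lets a caller run the command
    # without a shell, for example subprocess.run(argv) with shell=False.
    return ["ping", "-c", "1", str(address)]


def is_rejected(host):
    """Return True if the input fails validation."""
    try:
        build_ping_argv(host)
    except ValueError:
        return True
    return False


print(build_ping_argv("127.0.0.1"))
print(is_rejected("127.0.0.1; cat /etc/passwd"))
```

Two ideas are combined here: strict validation of the input against the expected format, and passing arguments as a list so that no shell ever interprets the user-supplied data.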

Authentication-based Vulnerabilities

An attacker can bypass authentication in vulnerable systems by using several methods.

The following are the most common ways to take advantage of authentication-based vulnerabilities in an affected system:

Credential brute forcing

Session hijacking

Redirecting

Exploiting default credentials

Exploiting weak credentials

Exploiting Kerberos vulnerabilities

Credential Brute Force Attacks and Password Cracking

In a credential brute-force attack, the attacker attempts to log in to an application or a system by trying different usernames and passwords. There are two major categories of brute-force attacks:

Online brute-force attacks: In this type of attack, the attacker actively tries to log in to the application directly by using many different combinations of credentials. Online brute-force attacks are easy to detect because you can easily inspect for large numbers of attempts by an attacker.