CHAPTER 19

Protecting Your Network

The CompTIA Network+ certification exam expects you to know how to

• 1.8 Summarize cloud concepts and connectivity options

• 2.1 Compare and contrast various devices, their features, and their appropriate placement on the network

• 3.2 Explain the purpose of organizational documents and policies

• 4.1 Explain common security concepts

• 4.2 Compare and contrast common types of attacks

• 4.3 Given a scenario, apply network hardening techniques

• 4.5 Explain the importance of physical security

• 5.5 Given a scenario, troubleshoot general networking issues

To achieve these goals, you must be able to

• Explain concepts of network security

• Discuss common security threats in network computing

• Discuss common vulnerabilities inherent in networking

• Describe methods for hardening a network against attacks

• Explain how firewalls protect a network from threats

The very nature of networking makes networks vulnerable. A network must allow multiple users to access serving systems. At the same time, the network must be protected from harm. Doing so is a big business and part of the whole risk management issue touched on in Chapter 18. This chapter concentrates on threats, vulnerabilities, network hardening, and firewalls.

Test Specific

Security Concepts

IT security is a huge topic and the Network+ exam objectives go into a fair amount of detail on IT security concepts and practices. Before we get into the nitty-gritty, let’s break down a few critical concepts that will help us in this chapter.

CIA

Three goals are widely considered the foundations of the IT security trade: confidentiality, integrity, and availability (CIA). Security professionals work to achieve these goals in every security program and technology. The goals apply to all data and to the systems that process it. Together they are called the CIA triad, illustrated in Figure 19-1.

Figure 19-1 The CIA triad

Confidentiality

Confidentiality is the goal of keeping unauthorized people from accessing, seeing, reading, or interacting with systems and data. Confidentiality is a characteristic met by keeping data secret from people who aren’t allowed to have it or interact with it in any way, while making sure that only those people who do have the right to access it can do so. Systems achieve confidentiality through various means, including the use of permissions to data, encryption, and so on.

Integrity

Meeting the goal of integrity requires maintaining data and systems in a pristine, unaltered state when they are stored, transmitted, processed, and received, unless the alteration is intended due to normal processing. In other words, there should be no unauthorized modification, alteration, creation, or deletion of data. Any changes to data must be done only as part of authorized transformations in normal use and processing. Integrity can be maintained by the use of a variety of checks and other mechanisms, including hashing, data checksums, comparison with known or computed data values, and cryptographic means.
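To make the hashing idea concrete, here is a minimal sketch that uses Python's standard hashlib module. The helper names (sha256_hex, is_unaltered) and the sample messages are made up for illustration; the point is only that any change to the data produces a different digest.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_unaltered(data: bytes, recorded_digest: str) -> bool:
    """True if data still hashes to the digest recorded earlier."""
    return sha256_hex(data) == recorded_digest


original = b"Pay Alice $100"      # hypothetical message
recorded = sha256_hex(original)   # digest stored when the data was known good
tampered = b"Pay Mallory $100"    # same message after an unauthorized change

print(is_unaltered(original, recorded))  # True
print(is_unaltered(tampered, recorded))  # False
```

A bare hash like this only helps if the recorded digest is kept somewhere an attacker can't also change it, which is where the cryptographic means mentioned above come in.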

Availability

Maintaining availability means ensuring that systems and data are available for authorized users to perform authorized tasks, whenever they need them. Availability is, to some degree, a trade-off between security and ease of use. An extremely secure system that’s not functional is not available in practice. Availability is ensured in various ways, including system redundancy, data backups, business continuity, and other means—but it also means not letting security goals render the system useless to the humans who need to use it.

During the course of your study, keep in mind the overall goals in IT security. First, balance three critical elements: functionality, security, and the resources available to ensure both. Second, focus on the goals of the CIA triad—confidentiality, integrity, and availability—when implementing, reviewing, managing, or troubleshooting network and system security. The book returns to these themes many times, tying new pieces of knowledge to this framework.

Zero Trust

Trust is a big deal when it comes to IT security. Who do you trust? How do you establish trust relationships between systems, organizations, and people? This is a massive conversation. In the traditional network security model, we trusted everyone who was already connected to the network, and focused our energy on protecting our sites and networks from everyone and everything outside them.

A better model for today’s world starts with no automatic trust at all, a concept called zero trust. Quoting NIST Special Publication (SP) 800-207, Zero Trust Architecture: “Zero trust is a cybersecurity paradigm focused on resource protection and the premise that trust is never granted implicitly but must be continually evaluated.”

In this model, there is no “trusted” network where you assume everyone connected is supposed to be connected, every device is malware free, and every resource is accessible. With a zero-trust architecture, you treat all traffic a device encounters as if it’s hostile—like there’s no difference between your office LAN and public Wi-Fi at the airport. In practice, this means that any user, device, or application that accesses a resource on your network should be explicitly authenticated and authorized to do so.

This is a major shift in thinking about how to design networks, but it has advantages. In particular, it reduces the risk that attackers can use one compromised device to attack other systems on the network. This doesn’t mean you can completely prevent lateral movement, but hosts in a proper zero-trust environment will always have their guard up.

Defense in Depth

Zero trust is one instance of a whole philosophy of security-centric thinking. The traditional approach built networks with a crunchy perimeter and an easy-to-abuse interior; this philosophy focuses on security at every node and layer instead. As a philosophy, defense in depth acknowledges that you can’t build a completely secure perimeter, so you should design your security posture with the assumption that every single defense can be beaten.

Because defense in depth is a philosophy and not a package deal you can buy from Microsoft, understanding what counts toward supporting that philosophy can be a little tricky. The fact of the matter is that almost everything counts. That said, when you see specific things all working together in a single organization—things like strong physical security, network segmentation, separation of duties, strong passwords, great password hygiene, and rigorous patch management—you can be pretty sure somebody in charge understands the value of defense in depth.

Separation of Duties

Much as a defense-in-depth approach acknowledges that all defenses can be beaten, it’s also important to acknowledge that people are flawed—there’s a very real risk employees will make a mistake or be tempted to abuse their power. Separation of duties is all about trying to manage this risk by identifying how people could abuse or misuse a system, determining what access they’d need to do so, and then splitting up that access so that no individual has the ability to do it alone.

If you’ve ever seen a scene in a movie where access to a secure area or weapon requires two keys or access cards, you’ve seen a simple kind of separation of duties. In the real world, separation of duties is usually nowhere near this exciting. For example, the person responsible for designing or implementing your organization’s IT security shouldn’t also be responsible for performing a security audit on it.

Network Threats

A network threat is any form of potential attack against your network. Don’t think only about Internet attacks here. Sure, hacker-style threats are real, but there are so many others. A threat can be a person sneaking into your offices and stealing passwords, or an ignorant employee deleting files they should not have access to in the first place. Traditionally, most of the threats we focus on are external threats posed by people and systems outside of our organizations—but a strong security posture also means being prepared for internal threats posed by members of your organization.

Just by reading the word “potential” you should know that this list could go on for pages. This section includes a list of common network threats. CompTIA does not include all of these in the Network+ exam objectives (because they’re covered in CompTIA A+ or Security+), but I’ve included them here to give a real-world sense of scope:

• Spoofing

• Packet/protocol abuse

• Zero-day attacks

• Rogue devices

• ARP cache poisoning

• Denial of service (with a lot of variations on a theme)

• On-path attack/man-in-the-middle

• Session hijacking

• Password attacks (brute force and dictionary)

• Compromised system

• Insider threat/malicious employee

• VLAN hopping

• Administrative access control

• Malware

• Social engineering

• And more!

It’s quite a list, but before we dive in, I want to nail down some general terms that come up a lot when we discuss threats.

Threat Terminology

In a very general sense, every threat pairs up with one or more vulnerabilities—weaknesses that the threat takes advantage of to work. That said, most of the time a vulnerability refers to an IT-specific weakness, like a problem with hardware, software, or configuration.

We can fix some vulnerabilities by just correcting our configuration or updating software as soon as a patch is available. But sometimes the best we can do is mitigate a vulnerability by taking other steps to minimize the risk—this is especially common when the vulnerability is really a design problem with the hardware or protocols we’re using.

An exploit is an actual procedure for taking advantage of a vulnerability. When a vulnerability is widespread, well known, and easy to take advantage of, working exploits often turn up in hacking and penetration-testing tools that make it easy for people who can’t even spell exploit to abuse one. Other vulnerabilities require the stars to align for anyone to exploit them, and might even lurk undiscovered for decades.

Finally, let’s circle back to talk about a term that has been flying under the radar. A simple definition of an attack is when someone tries to compromise your organization or its systems (especially their confidentiality, integrity, or availability). But the word attack also gets thrown around a lot to categorize different tactics, threats, and exploits. I’ll use it both ways in this chapter, but don’t let it mislead you—unless your organization is neglecting security, most serious efforts to compromise it will string together multiple tactics and exploits that target more than one vulnerability.

Spoofing

Spoofing is the process of pretending to be someone or something you are not by placing false information into your packets. Any data sent on a network can be spoofed. Here are a few quick examples of commonly spoofed data:

• Source MAC address (MAC spoofing) or IP address (IP spoofing), to make you think a packet came from somewhere else

• Address Resolution Protocol (ARP) message (ARP spoofing) that links the attacker’s MAC address to the IP address of a legitimate network computer, client, or server, to make you think that the message is from a trusted source. (See “ARP Cache Poisoning” later in the chapter for the gory details.)

• E-mail address, to make you think an e-mail came from somewhere else

• Web address, to make you think you are on a Web page you are not on

• Username, to make you think a certain user is contacting you when in reality it’s someone completely different

Generally, spoofing isn’t so much a threat as it is a tool to make threats. If you spoof my e-mail address, for example, that by itself isn’t a threat. If you use my e-mail address to pretend to be me, however, and to ask my employees to send to you their usernames and passwords for network login? That’s clearly a threat. (And also a waste of time; my employees would never trust me with their usernames and passwords.)

One of the nastier spoofing attacks targets DNS servers, the backbone of naming on all networks today. In DNS cache poisoning, an attacker poisons a DNS server’s cache to point clients to an evil Web server instead of the correct one.

To prevent DNS cache poisoning, the typical approach is to add Domain Name System Security Extensions (DNSSEC) for domain name resolution. The DNS root zone and nearly all top-level domains (plus hundreds of thousands of other DNS servers) use DNSSEC.

Packet/Protocol Abuse

No matter how hard the Internet’s designers try, it seems there is always a way to take advantage of a protocol by using it in ways it was never meant to be used. Anytime someone uses a protocol in a way it wasn’t meant to be used, and that use ends up creating a threat, we call it protocol abuse. A classic example involves the Network Time Protocol (NTP).

The Internet keeps time by using NTP servers. Without NTP providing accurate time for everything that happens on the Internet, anything that’s time sensitive would be in big trouble.

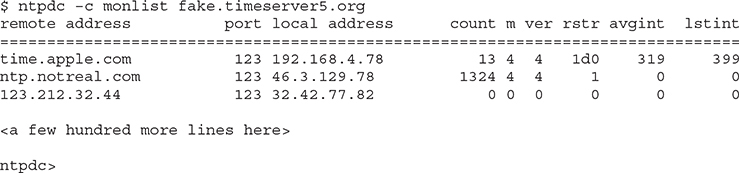

No computer’s clock is perfect, so NTP is designed for each NTP server to have a number of peers. Peers are other NTP servers that one NTP server can compare its own time against to make sure its clock is accurate. Occasionally a person running an NTP server might want to query the server to determine what peers it uses. The command used on just about every NTP server to submit queries is called ntpdc. The ntpdc command can run in interactive mode so that you can then make queries to the NTP server. One of these queries is called monlist. The monlist query asks the NTP server about the traffic going on between itself and peers. If you query a public NTP server with monlist, it generates a lot of output:

A bad guy can hit multiple NTP servers with the same little command—with a spoofed source IP address—and generate a ton of responses from the NTP server to that source IP address. Enough of these requests will bring the spoofed source computer—now called the target or victim—to its knees. We call this a denial of service attack (covered a bit later), and it’s a form of protocol abuse.

If that’s not sinister enough, hackers can also use evil programs that inject unwanted information into packets in an attempt to break another system. We call these malformed packets. Programs such as Scapy let you generate malformed packets and send them to anyone. You can use this to exploit a vulnerable server. What will happen if you broadcast a DHCP request with corrupt or incorrect data in the Options field? Well, if your DHCP server happens to have an unpatched vulnerability and reads the malformed request, it will break in some way: crashing the server, corrupting data, or giving an attacker remote access! This is an exploit created by packet abuse.

Zero-Day Attacks

As I mentioned earlier, some vulnerabilities are known and others lurk undiscovered. If that sounds a little sinister, the reality is actually a lot worse. There are plenty of unreported, unfixed vulnerabilities that someone knows about—and there’s a whole black-market trade where nefarious characters sell and buy them for their own purposes.

When we’re lucky, new vulnerabilities come to light due to the tireless efforts of security researchers who discover these problems and try to report them in a responsible way that gives the developer time to come up with a patch or workaround. If we’re a little less lucky, the developer dawdles, prompting the researcher to publicly disclose the vulnerability so that users, at least, can start taking the problem seriously.

What about when we aren’t so lucky? Someone launches a zero-day attack—an attack that leverages a previously unknown vulnerability that we’ve had zero days to fix or mitigate.

Rogue Devices

Some network devices—especially routers, switches, access points, firewalls, and DHCP servers—have a lot of power and require trust. Attackers love to usurp this trust and power by tricking your clients into believing rogue devices under the attackers’ control are legitimate.

DHCP Snooping

In order to defang rogue DHCP servers, DHCP snooping creates a database (called the DHCP snooping binding database) of MAC addresses for all of a network’s known DHCP servers (connected to trusted ports) and clients (connected to untrusted ports). If a system connected to an untrusted port starts sending DHCP server messages, the DHCP snoop–capable switch will block that system, stopping all unauthorized DHCP traffic and sending some form of alarm to the appropriate person.
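The core decision a DHCP snooping switch makes can be sketched in a few lines. This is a simplified model, not any vendor's implementation: the DhcpMessage class, the snoop function, and the port numbers are hypothetical, and the sketch leaves out building the binding database and raising the alarm.

```python
from dataclasses import dataclass

# DHCP message types that only a DHCP server should ever send.
SERVER_MESSAGES = {"OFFER", "ACK", "NAK"}


@dataclass(frozen=True)
class DhcpMessage:
    port: int       # switch port the message arrived on
    src_mac: str    # sender's MAC address
    msg_type: str   # "DISCOVER", "REQUEST", "OFFER", "ACK", or "NAK"


def snoop(message: DhcpMessage, trusted_ports: set) -> bool:
    """Return True to forward the message, False to drop it."""
    from_server = message.msg_type in SERVER_MESSAGES
    if from_server and message.port not in trusted_ports:
        return False  # server traffic from an untrusted port: rogue DHCP server
    return True


TRUSTED = {1}  # assumption: the real DHCP server hangs off port 1

print(snoop(DhcpMessage(1, "00:00:5e:00:53:01", "OFFER"), TRUSTED))     # True
print(snoop(DhcpMessage(7, "00:00:5e:00:53:66", "OFFER"), TRUSTED))     # False
print(snoop(DhcpMessage(7, "00:00:5e:00:53:0a", "DISCOVER"), TRUSTED))  # True
```

Client messages such as DISCOVER pass from any port; only server-style messages are restricted to trusted ports.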

RA-Guard

DHCP snooping does a great job of protecting IPv4 networks, but DHCP is much less important in IPv6 networks. How do we protect against rogue router advertisements on our IPv6 networks? That’s where Router Advertisement Guard (RA-Guard) comes in. Similar to DHCP snooping, RA-Guard enables the switch to block router advertisements and router redirect messages that are not sent from trusted ports or don’t match a policy. The ability to define a policy for valid RA messages enables administrators to validate that a router advertisement contains what it should—such as only using prefixes from a set list.
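As a rough illustration of the prefix policy idea, the sketch below checks a router advertisement against a trusted-port list and a set of allowed prefixes using Python's standard ipaddress module. The names ra_permitted, TRUSTED_PORTS, and ALLOWED_PREFIXES are hypothetical, and the prefixes come from the 2001:db8::/32 documentation range.

```python
import ipaddress

TRUSTED_PORTS = {1}                                            # assumed uplink to the real router
ALLOWED_PREFIXES = [ipaddress.ip_network("2001:db8:1::/64")]   # hypothetical policy


def ra_permitted(port: int, advertised_prefixes: list) -> bool:
    """Forward a router advertisement only if it arrived on a trusted port
    and every prefix it advertises is on the allowed list."""
    if port not in TRUSTED_PORTS:
        return False
    return all(
        ipaddress.ip_network(prefix) in ALLOWED_PREFIXES
        for prefix in advertised_prefixes
    )


print(ra_permitted(1, ["2001:db8:1::/64"]))    # True
print(ra_permitted(5, ["2001:db8:1::/64"]))    # False: untrusted port
print(ra_permitted(1, ["2001:db8:bad::/64"]))  # False: prefix not in policy
```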

ARP Cache Poisoning

ARP cache poisoning attacks target ARP caches on hosts and MAC address tables on switches. As we saw back in Chapter 6, the process and protocol used in resolving an IP address to an Ethernet MAC address is called Address Resolution Protocol (ARP).

Every node on a TCP/IP network has an ARP cache that stores a list of known IP addresses and their associated MAC addresses. On a Windows system you can see the ARP cache using the arp -a command. Here’s part of the result of typing arp -a on my system:

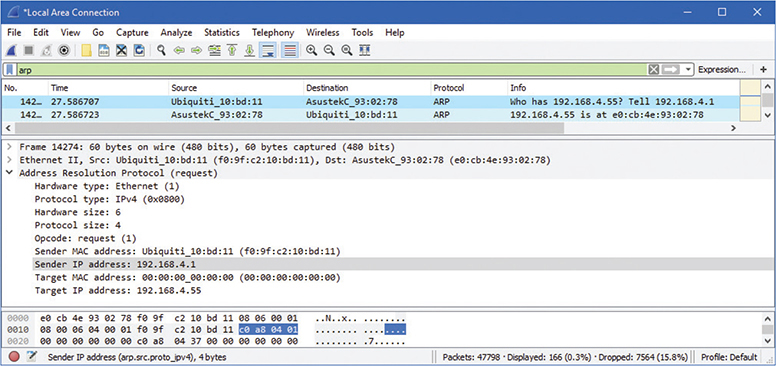

If a device wants to send an IP packet to another device on a wired LAN, it must encapsulate the IP packet in an Ethernet frame. If the sending device doesn’t know the destination device’s MAC address, it sends a special broadcast called an ARP request. In turn, the device with that IP address responds with a unicast ARP reply to the requesting device. Figure 19-2 shows a Wireshark capture of an ARP request and response.

Figure 19-2 ARP request and response

The problem with ARP is that it has no security. Any device that can get on a LAN can wreak havoc with ARP requests and responses. For example, ARP enables any device at any time to announce its MAC address without first getting a request. Additionally, ARP has a number of very detailed but relatively unused specifications. A device can just declare itself to be a “router.” How that information is used is up to the writer of the software used by the device that hears this announcement. More than a decade ago, ARP poisoning caused a tremendous amount of trouble.

Poisoning in Action

Here’s how an ARP cache poisoning attack works. Figure 19-3 shows a typical tiny network with a gateway, a switch, a DHCP server, and two clients. Assuming nothing has recently changed with the computers’ IP addresses, each system’s ARP cache should look something like Figure 19-4. (ARP caches don’t store computer names, but I’ve added them for clarity.)

Figure 19-3 Our happy network

Figure 19-4 Each computer’s ARP cache should look about the same.

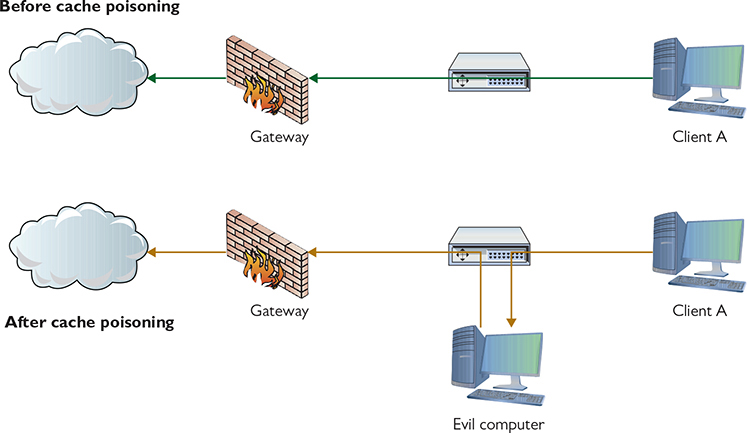

If a bad actor can get inside the network (by plugging into an unused Ethernet port, for example), he can use the proper tools to send false ARP frames that each computer reads, placing evil data into their ARP caches (which is why this is called ARP cache poisoning). See Figure 19-5.

Figure 19-5 Every system’s ARP cache is now poisoned.

Once the poisoning starts, the evil computer can perform an on-path attack (aka man-in-the-middle attack), reading every packet going through it, as shown in Figure 19-6.

Figure 19-6 ARP cache poisoning enables an on-path attack.
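The weakness is easy to see in a toy model. The sketch below is a deliberately simplified ARP cache that, like ARP itself, believes whatever the most recent message says. The ArpCache class, the IP address, and the MAC addresses are all made up for illustration, and no real packets are involved.

```python
class ArpCache:
    """Toy ARP cache: maps IP addresses to MAC addresses with no verification."""

    def __init__(self) -> None:
        self.entries = {}

    def handle_arp(self, sender_ip: str, sender_mac: str) -> None:
        # ARP has no authentication, so the newest claim simply wins,
        # even if nobody asked for it.
        self.entries[sender_ip] = sender_mac


GATEWAY_IP = "192.168.4.1"           # hypothetical addresses
GATEWAY_MAC = "00:00:5e:00:53:01"
ATTACKER_MAC = "00:00:5e:00:53:66"

cache = ArpCache()
cache.handle_arp(GATEWAY_IP, GATEWAY_MAC)    # legitimate reply from the gateway
cache.handle_arp(GATEWAY_IP, ATTACKER_MAC)   # unsolicited, forged announcement

# Frames meant for the gateway will now be addressed to the attacker's MAC.
print(cache.entries[GATEWAY_IP])
```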

Dynamic ARP Inspection

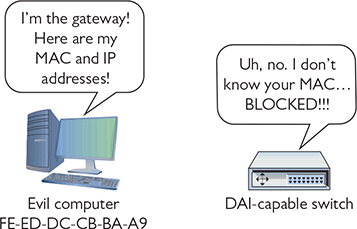

Clearly, we’d like to avoid ARP cache poisoning attacks. Fortunately, help is available. Dynamic ARP Inspection (DAI) technology in switches relies on ARP information that DHCP snooping collects in the DHCP snooping binding database—it’s essentially a list of known-good IP and MAC addresses (Figure 19-7).

Figure 19-7 DAI consulting the DHCP snooping binding database

Now if an ARP poisoner suddenly decides to attack this network, the DAI-capable switch notices the unknown MAC address and blocks it (Figure 19-8).

Figure 19-8 DAI in action
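In code, the check DAI performs boils down to a table lookup. This is a sketch under stated assumptions: the BINDINGS table stands in for the DHCP snooping binding database, the dai_permits function name is hypothetical, and real switches track more than an IP-to-MAC pair.

```python
# Hypothetical stand-in for the DHCP snooping binding database:
# IP address -> MAC address learned from legitimate DHCP exchanges.
BINDINGS = {
    "192.168.4.1": "00:00:5e:00:53:01",
    "192.168.4.10": "00:00:5e:00:53:0a",
}


def dai_permits(sender_ip: str, sender_mac: str, bindings: dict) -> bool:
    """Forward an ARP message only if its IP/MAC pair matches a known binding."""
    known_mac = bindings.get(sender_ip)
    return known_mac is not None and known_mac.lower() == sender_mac.lower()


# The real gateway announcing itself passes inspection.
print(dai_permits("192.168.4.1", "00:00:5e:00:53:01", BINDINGS))  # True

# A poisoner claiming the gateway's IP with its own MAC is blocked.
print(dai_permits("192.168.4.1", "00:00:5e:00:53:66", BINDINGS))  # False
```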

Denial of Service

Hundreds of millions of servers on the Internet provide a multitude of different services. Given the amount of security now built in at so many different levels, it’s more difficult than ever for a bad guy to cripple any one particular service by exploiting a weakness in the servers themselves. So what’s a bad guy (or gal, group, or government) to do to shut down a service he doesn’t like, even if he is unaware of any exploits on the target servers? Why, denial of service, of course!

A denial of service (DoS) attack is a targeted attack on a server (or servers) that provides some form of service on the Internet (such as a Web site), with the goal of making that service unable to process any incoming requests. DoS attacks come in many different forms. The simplest example is a physical attack, where a person physically attacks the servers by going to where the servers are located and shutting them down or disconnecting their Internet connections, in some cases permanently. Physical DoS attacks are good to know for the exam, but they aren’t very common unless the service is very small and served in only a single location.

The most common form of DoS is when a bad guy uses his computer to flood a targeted server with so many requests that the service is overwhelmed and ceases functioning. These attacks are most commonly performed on Web and e-mail servers, but any Internet service’s servers can be attacked via some DoS method.

The secret to a successful DoS attack is to use up so much of a victim’s resources that they can’t serve legitimate requests. The important thing to understand about DoS attacks is that there are a million and one ways to waste resources—and they can be combined in some really creative ways—so it may help to distinguish between tactics that focus on wasting resources with an overwhelming volume of requests and tactics that waste resources in much more targeted ways.

Internet-service servers are robust devices, designed to handle a massive number of requests per second. These robust servers make it tricky for a single bad guy at a single computer to send enough requests to slow them down. The main way to send enough traffic to swamp a server is to get help. In theory this might mean a bad guy and a million of his friends all sign up to spray their target with packets—a distributed denial of service (DDoS) attack. In reality, DDoS operators usually don’t own these computers, but instead use malware (discussed later) to take control of computers. A single computer under the control of an operator is called a zombie or bot. A group of computers under the control of one operator is called a botnet. Various command and control (C2) protocols are used to automate server controls over botnets, thus limiting the need for people once the initial zombification happens.

A botnet isn’t the only way for an attacker to get help, though. Another tactic is to send requests that spoof the target server’s IP address as the source IP address to otherwise normally operating servers, such as DNS or NTP servers, using reflection to aim their resources at your target. Reflection is often combined with amplification—a tactic that focuses on sending small requests that trigger large responses reflected at your target—because it helps the attacker use their own limited resources efficiently to deliver a much larger volume to the target.
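A little arithmetic shows why amplification is so attractive to an attacker. The request size, response size, and attacker bandwidth below are hypothetical numbers chosen only to make the math easy to follow; real values vary by protocol and server.

```python
def amplification_factor(request_bytes: int, response_bytes: int) -> float:
    """How many bytes reach the target for each byte the attacker sends."""
    if request_bytes <= 0:
        raise ValueError("request_bytes must be positive")
    return response_bytes / request_bytes


def traffic_at_target(attacker_bits_per_second: float, factor: float) -> float:
    """Reflected traffic arriving at the target, in bits per second."""
    return attacker_bits_per_second * factor


# Assumed sizes: a 60-byte spoofed request that triggers a 3000-byte response.
factor = amplification_factor(request_bytes=60, response_bytes=3000)

# Assumed attacker uplink of 10 Mbps.
flood = traffic_at_target(10_000_000, factor)

print(f"{factor:.0f}x amplification, {flood / 1e6:.0f} Mbps at the target")
```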

Sometimes it’s best to work smart instead of hard—and that’s the bread and butter of low-and-slow DoS tactics. With a low-and-slow attack, the bad guys send a small number of cleverly crafted packets to the victim that keep the target busy for as long as possible. These come in all kinds of shapes and sizes because they generally take advantage of some characteristic of the service they attack.

For example, Web servers can be vulnerable to a R.U.D.Y (R U Dead Yet) attack where the attacker fills out a Web form with a ton of content and opens a connection to submit it. Instead of being polite, the attacker takes their sweet time trickling a few bytes at a time to the server, tying up a connection it needs to serve legitimate traffic. If the attacker opens enough of these requests, they can deny access to the service to everyone else.

Deauthentication Attack

A deauthentication (deauth) attack—a form of DoS attack—targets 802.11 Wi-Fi networks specifically by sending out a frame that kicks a wireless client off its current WAP connection. A rogue WAP nearby presents a great and often automatic alternative option for connection. The rogue WAP connects the client to the Internet and then proceeds to collect data from that client.

The deauth attack abuses a specific Wi-Fi frame called a deauthentication frame, normally used by a WAP to kick an unauthorized client off its network. The attacker flips this narrative on its head, using the good disconnect frame for evil purposes. (And here you thought only wired networks got all the love from DoS attacks.) Refer to Chapter 14 to refresh your memory on Wi-Fi security.

DHCP Starvation Attack

Deauth attacks aren’t the only way attackers can use DoS to shift legitimate clients over to rogue devices. DHCP is vulnerable to something very similar, even though it looks a little different in practice. Because DHCP servers hand out IP address leases for a set amount of time, and have a limited number of leases to give out, they’re vulnerable to DHCP scope exhaustion: they just plain run out of open addresses.

An attacker can use this limitation to their advantage by spoofing packets to the DHCP server, tricking it into giving away all of its leases—a DHCP starvation attack. Much like a deauth attack, DHCP starvation is usually not the end objective, but just a technique used to encourage clients to switch to a rogue DHCP server that the attacker controls.
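Scope exhaustion is simple enough to simulate. The DhcpScope class below is a toy model with a five-address pool and no lease expiry, and every address in it is made up; it shows only how a stream of spoofed MAC addresses leaves nothing for a legitimate client.

```python
from typing import Optional


class DhcpScope:
    """Toy DHCP scope with a fixed pool of addresses (lease expiry not modeled)."""

    def __init__(self, addresses: list) -> None:
        self.free = list(addresses)
        self.leases = {}   # client MAC -> leased IP

    def request(self, mac: str) -> Optional[str]:
        if mac in self.leases:          # a returning client keeps its lease
            return self.leases[mac]
        if not self.free:               # scope exhausted
            return None
        ip = self.free.pop(0)
        self.leases[mac] = ip
        return ip


scope = DhcpScope([f"192.168.4.{host}" for host in range(100, 105)])

# The attacker requests leases from a stream of spoofed MAC addresses.
for i in range(5):
    scope.request(f"00:00:5e:00:53:{i:02x}")

# A legitimate client now gets nothing.
legit = scope.request("00:00:5e:00:53:aa")
print(legit)  # None
```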

On-Path Attack

In an on-path attack—traditionally called a man-in-the-middle attack—an attacker taps into communications between two systems, covertly intercepting traffic thought to be only between those systems, reading or in some cases even changing the data and then sending the data on. Man-in-the-middle attacks are commonly perpetrated using ARP poisoning. But a classic man-in-the-middle attack would be to spoof the SSID and let people connect to the rogue WAP controlled by the attacker. The attacker could then listen in on that wireless network, gathering up all the conversations and gaining access to passwords, shared keys, or other sensitive information. Though heavily mitigated today by TLS and certificate pinning, attacks like this show why many organizations are moving to a zero-trust model of network security.

Session Hijacking

Somewhat similarly to man-in-the-middle attacks, session hijacking tries to intercept a valid computer session to get authentication information. Unlike man-in-the-middle attacks, session hijacking only tries to grab authentication information, not necessarily listen in for additional information.

Password Attacks

In a password attack, a bad actor uses various methods to discover a password, often hashing candidate passwords and comparing the results against known password hashes. The methods vary from the simplest brute-force approach to more sophisticated approaches like dictionary attacks.

Brute Force

Brute force is an attack where a threat agent guesses every permutation of some part of data. Most of the time the term “brute force” refers to an attempt to crack a password, but the term applies to other attacks. You can brute force a search for open ports, network IDs, usernames, and so on. Pretty much any attempt to guess the contents of some kind of data field that isn’t obvious (or is hidden) is considered a brute-force attack.
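To get a feel for what "every permutation" means, it helps to count. The sketch below computes the number of possible values for a field and a worst-case time to try them all. The guess rate is an assumption picked for illustration, not a measurement of any real tool.

```python
def keyspace(alphabet_size: int, length: int) -> int:
    """Number of possible values for a field of the given length."""
    return alphabet_size ** length


def worst_case_seconds(alphabet_size: int, length: int,
                       guesses_per_second: float) -> float:
    """Time to try every possible value at a fixed guess rate."""
    return keyspace(alphabet_size, length) / guesses_per_second


GUESSES_PER_SECOND = 1_000_000_000  # assumed rate, for illustration only

# Lowercase-only passwords (26 letters) of increasing length.
for length in (4, 8, 12):
    total = keyspace(26, length)
    seconds = worst_case_seconds(26, length, GUESSES_PER_SECOND)
    print(f"length {length}: {total} candidates, {seconds:.1f} s worst case")
```

Each extra character multiplies the work by the size of the alphabet, which is why length matters so much for resisting brute force.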

Dictionary

A dictionary attack uses a list of known words and partial words as the starting point for cracking passwords. People tend to create passwords they can remember. Eduardo’s password is 3L!t3juaN, which looks pretty good at first blush. But a typical dictionary attack can be set up to do all kinds of substitution checks automatically, such as the number 3 for the letter e or ! for the letter i. Running a scan that does all the permutations for “elite one” would crack Eduardo’s password pretty quickly with the power of modern computers.
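The same substitution trick works in reverse as a defensive check. The sketch below undoes common character swaps and then looks for dictionary words inside the result. Only the 3-for-e and !-for-i swaps come from the text; the other substitutions, the tiny word list, and the function names are assumptions for illustration.

```python
# Map common substitutions back to the letters they stand in for.
SUBSTITUTIONS = str.maketrans({
    "3": "e", "!": "i",                       # from the example above
    "0": "o", "1": "l", "@": "a", "$": "s",   # assumed extras
})


def normalize(password: str) -> str:
    """Lowercase the password and undo the substitutions."""
    return password.lower().translate(SUBSTITUTIONS)


def dictionary_hits(password: str, words: set) -> list:
    """Return the dictionary words hiding inside the normalized password."""
    plain = normalize(password)
    return sorted(word for word in words if word in plain)


WORDS = {"elite", "juan", "password", "dragon"}  # hypothetical word list

print(normalize("3L!t3juaN"))               # elitejuan
print(dictionary_hits("3L!t3juaN", WORDS))  # ['elite', 'juan']
```

A password that collapses into one or two dictionary words this easily offers far less protection than its odd-looking characters suggest.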

Physical/Local Access

Not all threats to your network originate from faraway bad guys. There are many threats that lurk right in your LAN. This is a particularly dangerous place as these threats don’t need to worry about getting past your network edge defenses such as firewalls or WAPs. You need to watch out for problems with hardware, software, and, worst of all, the people who are on your LAN.

Insider Threats

The greatest hackers in the world will all agree that being inside an organization, either physically or by access permissions, makes evildoing much easier. Malicious employees are a huge threat because of their ability to directly destroy data, inject malware, and initiate attacks. These are collectively called insider threats.

Trusted and Untrusted Users

A worst-case scenario from the perspective of security is unsecured access to private resources. A couple of terms come into play here. There are trusted users and untrusted users. A trusted user is an account that has been granted specific authority to perform certain or all administrative tasks. An untrusted user is just the opposite, an account that has been granted no administrative powers.

Trusted users with poor password protection or other security leakages can be compromised. Untrusted users can be upgraded “temporarily” to accomplish a particular task and then forgotten. Consider this situation: A user accidentally copied a bunch of files to several shared network repositories. The administrator does not have time to search for and delete all of the files. The user is granted deletion capability and told to remove the unneeded files. Do you feel a disaster coming? The newly created trusted user could easily remove the wrong files. Careful management of trusted users is the simple solution to these types of threats.

Every configurable device, like a managed switch, has a default password and default settings, all of which can create an inadvertent insider threat if not addressed. People sometimes can’t help but be curious. A user might note the IP address of a switch on his network, for example, and try to connect with Secure Shell (SSH) “just to see.” Because it’s so easy to get the default passwords/settings for devices with a simple Google search, that information is available to the user. One change on that switch might mean a whole lot of pain for the network tech or administrator who has to fix things.

Dealing with such authentication issues is straightforward. Before bringing any system online, change any default accounts and passwords. This is particularly true for administrative accounts. Also, disable or delete any “guest” accounts (make sure you have another account created first!). Finally, apply the principle of least privilege—always assign the most-limited privileges that will be sufficient.

Malicious Users

Much more worrisome than accidental accesses to unauthorized resources are malicious users who consciously attempt to access, steal, or damage resources. Malicious users or actors may represent an external or internal threat.

What does a malicious user want to do? If they are intent on stealing data or gaining further access, they may try packet sniffing. This is difficult to detect, but as you know from previous chapters, encryption is a strong defense against sniffing.

One of the first techniques that malicious users try is to probe hosts to identify any open ports. There are many tools available to poll all stations on a network for their up/down status and for a list of any open ports (and, by inference, all closed ports too). Nmap is the de facto standard tool for this kind of host troubleshooting, but it can just as easily be used for malevolent activities.

Having found an open port, a malicious user can gain information and additional access by probing that port to learn details about the running service. This is known as banner grabbing. For instance, a host may have an exposed SSH server running. Using a utility like Nmap or Netcat, a malicious user can send a request to port 22. The server may respond with a message indicating the type and version of SSH server software that is running.

With that information, the malicious actor can then learn about vulnerabilities of that product and continue their pursuit. The obvious solution to port scanning and banner grabbing is to not run unnecessary services (resulting in an open port) on a host and to make sure that running processes have current security patches installed.
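To see how little effort banner grabbing takes, here is a minimal Python sketch. It assumes a service that speaks first once the TCP connection opens, the way SSH servers do when they announce an identification string of the form SSH-protoversion-softwareversion. The function names, the loopback demo server, and the ExampleSSH_1.0 banner are all made up for illustration. Only probe hosts you are authorized to test.

```python
import socket
import threading


def grab_banner(host: str, port: int, timeout: float = 3.0) -> str:
    """Connect to host:port and return whatever the service sends first."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        data = conn.recv(256)  # read up to 256 bytes of greeting
    return data.decode("ascii", errors="replace").strip()


def start_demo_server(banner: bytes) -> int:
    """Hypothetical stand-in for a real service: send one banner, then close.

    Returns the loopback port the demo server is listening on.
    """
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    listener.listen(1)
    port = listener.getsockname()[1]

    def serve_once() -> None:
        client, _ = listener.accept()
        with client:
            client.sendall(banner)
        listener.close()

    threading.Thread(target=serve_once, daemon=True).start()
    return port


if __name__ == "__main__":
    demo_port = start_demo_server(b"SSH-2.0-ExampleSSH_1.0\r\n")
    print(grab_banner("127.0.0.1", demo_port))
```

The point of the sketch is that the client never authenticates. Simply connecting is enough to learn the software name and version, which is why patching and trimming exposed services matter.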

In the same vein, a malicious user may attempt to exploit known vulnerabilities of certain devices attached to the network. MAC addresses of Ethernet NICs have their first 24 bits assigned by the IEEE. This is a unique number assigned to a specific manufacturer and is known as the organizationally unique identifier (OUI), sometimes called the vendor ID. By issuing certain messages such as broadcast ARP requests, a malicious user can collect the OUI numbers of the wired and wireless nodes attached to a network or subnetwork. Using common lookup tools, the malicious user can then match those OUI numbers to particular manufacturers and single out devices with known weaknesses. The past few years have seen numerous DDoS attacks using zombified Internet of Things (IoT) devices, such as security cameras.
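Pulling the OUI out of a MAC address is simple string handling, as this minimal sketch suggests. The vendor table is hypothetical: its prefixes have the locally administered bit set, so they are deliberately not real IEEE assignments, and the company names are invented. Real lookup tools consult the IEEE registry instead.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

# Hypothetical vendor table for illustration only (not real IEEE data).
EXAMPLE_VENDORS: Dict[str, str] = {
    "02:00:00": "Example Camera Co.",
    "02:00:01": "Example Printer Inc.",
}


def oui_of(mac: str) -> str:
    """Return the first 24 bits (three octets) of a MAC address as 'AA:BB:CC'."""
    digits = "".join(ch for ch in mac if ch not in ":-.").upper()
    if len(digits) != 12 or any(ch not in "0123456789ABCDEF" for ch in digits):
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 6, 2))


def group_by_vendor(macs: Iterable[str]) -> Dict[str, List[str]]:
    """Group MAC addresses by the vendor name found in the example table."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for mac in macs:
        vendor = EXAMPLE_VENDORS.get(oui_of(mac), "unknown")
        groups[vendor].append(mac)
    return dict(groups)
```

The same grouping is just as useful to a defender, who can run it against an inventory to spot devices that need patching or isolation.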

VLAN Hopping

An older form of attack that still comes up from time to time, called VLAN hopping, enables an attacker to access a VLAN they’d otherwise have no access to. The mechanism behind VLAN hopping is to take a system that’s connected to one VLAN and, by abusing VLAN commands to the switch, convince the switch to change that system’s switch port connection to a trunk link. Because a trunk link carries traffic for multiple VLANs, the attacker’s system can then reach VLANs beyond its own.

Administrative Access Control

All operating systems and many switches and routers come with some form of access control list (ACL) that defines what users can do with a device’s shared resources. An access control might be a file server giving a user read-only privileges to a particular folder, or a firewall only allowing certain internal IP addresses to access the Internet. ACLs are everywhere in a network. In fact, you’ll see more of them from the standpoint of a firewall later in this chapter.
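The firewall flavor of an ACL can be sketched in a few lines of Python. This is an illustration under stated assumptions, not any vendor's implementation: it assumes rules are checked top to bottom, that the first match wins, and that anything unmatched is denied, which is a common convention. The rule list and addresses are made up.

```python
import ipaddress
from typing import List, Tuple

# Each rule is (action, source network). Hypothetical private ranges.
EXAMPLE_ACL: List[Tuple[str, str]] = [
    ("deny", "192.168.10.66/32"),   # one blocked workstation
    ("permit", "192.168.10.0/24"),  # the rest of its subnet
]


def evaluate(acl: List[Tuple[str, str]], source_ip: str) -> str:
    """Return the action of the first rule matching source_ip."""
    address = ipaddress.ip_address(source_ip)
    for action, network in acl:
        if address in ipaddress.ip_network(network):
            return action
    return "deny"  # assumed implicit deny when no rule matches
```

Rule order matters in a first-match design. If the two example rules were swapped, the blocked workstation would match the broader permit rule first and get through.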

Every operating system (and many Internet applications) is packed with administrative tools and functionality. You need these tools to get all kinds of work done, but by the same token, you need to work hard to keep these capabilities out of the reach of those who don’t need them.

Make sure you know the administrative accounts native to Windows (administrator), Linux (root), and macOS (root). You must carefully control these accounts. Clearly, giving regular users administrator/root access is a bad idea, but far more subtle problems can arise. I once gave a user the Manage Documents permission for a busy laser printer in a Windows network. She quickly realized she could pause other users’ print jobs and send her print jobs to the beginning of the print queue—nice for her but not so nice for her co-workers. Protecting administrative programs and functions from access and abuse by users is a real challenge and one that requires an extensive knowledge of the operating system and of users’ motivations.

Unused Components and Devices

In many organizations, unused components and devices can be an easily overlooked risk. Your old laptops, desktops, hard drives, printers, and network hardware can easily have sensitive data sitting there for the taking—or they could be just the thing an attacker needs to access your network without arousing suspicion. Every computing device and IT system has a system life cycle, from shiny and new, to patched and secure, to “you’re still using that old junk?”, to safely decommissioned.

Organizations that are serious about archiving or destroying sensitive data as needed typically have system life cycle policies that cover everything from how to plan and provision new IT systems to asset disposal. These policies might cover where and how to archive important data before decommissioning components, how to ensure no one else can recover sensitive data from your old devices, and whether you should donate old devices to a worthy nonprofit organization or send them through a shredder.

The big thing to keep in mind here is that there are all kinds of devices and systems out in the world, and they have all kinds of different components and wiping procedures. You don’t necessarily have to send your devices through a shredder, but a lot of people default to physical destruction as a surefire way to sanitize devices for disposal instead of effectively leaving all of your HR department’s files out by the curb for anyone who’s curious. In many cases, performing a factory reset/wipe configuration is sufficient—especially when it comes to networking gear and devices that use full-disk encryption. In every case, you should follow your organization’s policy!

Malware

The term malware describes any program or code (macro, script, and so on) that’s designed to do something on a system or network that you don’t want to have happen. Malware comes in many forms, such as viruses, worms, macros, Trojan horses, rootkits, adware, and spyware. We’ll examine all these malware flavors in this section. Stopping malware, by far the number one security problem for just about everyone, is so important that we’ll address that topic in its own section later in this chapter, “Anti-Malware Programs.”

Crypto-malware/Ransomware

Crypto-malware uses some form of encryption to lock a user out of a system. Once the crypto-malware encrypts the computer (usually the boot drive), the malware in most cases forces the user to pay money to get the system decrypted. When any form of malware makes you pay to get the malware to go away, we call that malware ransomware. If crypto-malware demands a ransom, we commonly call it crypto-ransomware.

Crypto-ransomware is one of the most troublesome types of malware today, first appearing around 2012 and still going strong. Zero-day variations of crypto-malware, with names such as CryptoWall or WannaCry, are often impossible to clean.

Virus

A virus is a program that has two jobs: to replicate and to activate. Replication means it makes copies of itself, often as code stored in boot sectors or as extra code added to the end of executable programs. A virus is not a stand-alone program, but rather something attached to a host file, kind of like a human virus. Activation is when a virus does something like erase the boot sector of a drive. A virus only replicates to other applications on a drive or to other drives, such as flash drives or optical media. It does not replicate across networks. Plus, a virus needs human action to spread.

Worm

A worm functions similarly to a virus, though it replicates exclusively through networks. A worm, unlike a virus, doesn’t have to wait for someone to use a removable drive to replicate. If the infected computer is on a network, a worm immediately starts sending copies of itself to any other computers it can locate on the network. Worms can exploit inherent vulnerabilities in program code, attacking programs, operating systems, protocols, and more. Worms, unlike viruses, do not need host files to infect.

Macro

A macro virus is any type of virus that exploits application macros to replicate and activate. A macro itself is programming within an application that enables you to control aspects of the application. Macros exist in any application that has a built-in macro language, such as Microsoft Excel, that users can program to handle repetitive tasks (among other things).

Logic Bomb

A logic bomb is code written to execute when certain conditions are met, usually with malicious intent. A logic bomb could be added to a company database, for example, to start deleting files if the database author loses her job. Or, the programming could be added to another program, such as a Trojan horse.

Trojan Horse

A Trojan horse is a piece of malware that looks or pretends to do one thing while, at the same time, doing something evil. A Trojan horse may be a game, like poker, or a free screensaver. The sky is the limit. The more “popular” Trojan horses turn an infected computer into a server and then open TCP or UDP ports so a remote user can control the infected computer. They can be used to capture keystrokes, passwords, files, credit card information, and more. Trojan horses do not replicate.

Rootkit

It’s easier for malware to succeed if it has a good way to hide itself. As awareness of malware has grown, anti-malware programs make it harder to find new hiding spots. A rootkit takes advantage of very low-level system functions to both gain privileged access and hide from all but the most aggressive of anti-malware tools. Rootkits make their happy little homes deep in operating systems, hypervisors, and even firmware. At this level, they can evade or even actively undermine malware scanners that need to execute on the infected system.

Adware/Spyware

There are two types of programs that are similar to malware in that they try to hide themselves to an extent. Adware is a program that monitors the types of Web sites you frequent and uses that information to generate targeted advertisements, usually pop-up windows. Adware isn’t, by definition, evil, but many adware makers use sneaky methods to get you to use adware, such as using deceptive-looking Web pages (“Your computer is infected with a virus: click here to scan NOW!”). As a result, adware is often considered malware. Some of these “your computer is infected” ads actually install a virus when you click them, so avoid these things like the plague.

Spyware is a function of any program that sends information about your system or your actions over the Internet. The type of information sent depends on the program. A typical spyware program will send your browsing history. A more aggressive form of spyware may send keystrokes or all of the contacts in your e-mail. Some spyware makers bundle their product with ads to make them look innocuous. Adware, therefore, can contain spyware.

Social Engineering

A considerable percentage of attacks against your network fall under the heading of social engineering—the process of using or manipulating people inside the networking environment to gain access to that network from the outside. The term “social engineering” covers the many ways humans can use other humans to gain unauthorized information. This unauthorized information may be a network login, a credit card number, company customer data—almost anything you might imagine that one person or organization may not want a person outside of that organization to access.

Social engineering attacks aren’t considered hacking—at least in the classic sense of the word—although the goals are the same. Social engineering is where people attack an organization through the people in the organization or physically access the organization to get the information they need.

The most classic form of social engineering is the telephone scam in which someone calls a person and tries to get him or her to reveal his or her username/password combination. In the same vein, someone may physically enter your building under the guise of having a legitimate reason for being there, such as a cleaning person, repair technician, or messenger. The attacker then snoops around desks, looking for whatever he or she has come to find (one of many good reasons not to put passwords on your desk or monitor). The attacker might talk with people inside the organization, gathering names, office numbers, or department names—little things in and of themselves, but powerful tools when combined later with other social engineering attacks.

These old-school social engineering tactics are taking a backseat to a far more nefarious form of social engineering: phishing.

Phishing

In a phishing attack, the attacker poses as some sort of trusted site, like an online version of your bank or credit card company, and solicits you to update your financial information, such as a credit card number. You might get an e-mail message, for example, that purports to be from PayPal telling you that your account needs to be updated and provides a link that looks like it goes to https://www.paypal.com. Upon clicking the link, however, you end up at a site that claims to list a legitimate phone number for PayPal support, but is actually https://paypal-customer-service.example.com, a phishing site. Or the e-mail might have fabricated documents attached—like a speeding ticket or an invoice—designed to spur you into taking action.
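One quick check against this kind of lookalike link is to compare the host name in the URL with the domain you expect. The sketch below is only a rough illustration: the function name is made up, and a match does not prove a site is safe. It simply shows why paypal-customer-service.example.com is not part of paypal.com.

```python
from urllib.parse import urlsplit


def belongs_to(url: str, trusted_domain: str) -> bool:
    """Return True if the URL's host is trusted_domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    trusted = trusted_domain.lower()
    # Require an exact match or a match on a dot boundary, so that
    # "paypal.com.example.com" does not count as "paypal.com".
    return host == trusted or host.endswith("." + trusted)


if __name__ == "__main__":
    for link in (
        "https://www.paypal.com/signin",
        "https://paypal-customer-service.example.com",
    ):
        print(link, belongs_to(link, "paypal.com"))
```

What matters is the right-hand end of the host name. Anything an attacker puts to the left of their own domain, including a familiar brand name, changes nothing about who controls the site.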

Shoulder Surfing

Shoulder surfing is the process of surreptitiously monitoring people when they are accessing any kind of system, trying to ascertain passwords, PIN codes, or personal information. The term shoulder surfing comes from the classic “looking over someone’s shoulder” as the bad guy tries to get your password or PIN by watching which keys you press. Shoulder surfing is an old but still very common method of social engineering.

Physical Intrusion

You can’t consider a network secure unless you provide some physical protection to your network. I separate physical protection into two different areas: protection of servers and protection of clients.

Server protection is easy. Lock up your servers to prevent physical access by any unauthorized person. Large organizations have special server rooms, complete with card-key locks and tracking of anyone who enters or exits. Smaller organizations should at least have a locked closet. While you’re locking up your servers, don’t forget about any network switches! Hackers can access networks by plugging into a switch, so don’t leave any switches available to them.

Physical server protection doesn’t stop with a locked door. One of the most common mistakes made by techs is to walk away from a server while still logged in. Always log off from your server when you’re not actively managing the server. As a backup, add a password-protected screensaver (Figure 19-9).

Figure 19-9 Applying a password-protected screensaver to a server

Locking up all of your client systems is difficult, but your users should be required to perform some physical security. First, all users should lock their computers when they step away from their desks. Instruct them to press the WINDOWS KEY-L combination to perform the lock. Hackers take advantage of unattended systems to get access to networks.

Second, make users aware of the potential for dumpster diving and make paper shredders available. Last, tell users to mind their work areas. It’s amazing how many users leave passwords readily available. I can go into any office, open a few desk drawers, and invariably find little yellow sticky notes with usernames and passwords. If users must write down passwords, tell them to put them in locked drawers!

Common Vulnerabilities

If a threat is an action that threat agents do to try to compromise our networks, then a vulnerability is a potential weakness in our infrastructure that a threat might exploit. Note that I didn’t say that a threat will take advantage of the vulnerability: only that the vulnerability is a weak place that needs to be addressed.

Some vulnerabilities are obvious, such as connecting to the Internet without an edge firewall or not using any form of account control for user files. Other vulnerabilities are unknown or missed, and that makes the study of vulnerabilities very important for a network tech. This section explores a few common vulnerabilities.

Unnecessary Running Services

A typical system running any OS is going to have a large number of important programs running in the background, called services. Services do the behind-the-scenes grunt work that users don’t need to see, such as wireless network clients and DHCP clients. There are client services and server services.

As a Windows user, I’ve gotten used to seeing zillions of services running on my system, and in most cases I can recognize only about 50 percent of them—and I’m good at this! In a typical system, not all these services are necessary, so you should disable unneeded network services.

From a security standpoint, there are two reasons it’s important not to run any unnecessary services. First, most OSs use services to listen on open TCP or UDP ports, potentially leaving systems open to attack. Second, bad guys often use services as a tool for the use and propagation of malware.

The problem with trying not to run unnecessary services is the fact that there are just so many of them. It’s up to you to research services running on a particular machine to determine if they’re needed or not. It’s a rite of passage for any tech to review the services running on a system, going through them one at a time. Over time you will become familiar with many of the built-in services and get an eye for spotting the ones that just don’t look right. There are tools available to do the job for you, but this is one place where you need skill and practice.

Closing unnecessary services closes TCP/UDP ports. Every operating system has some tool for you to see exactly what ports are open. Figure 19-10 shows an example of the netstat command in macOS.

Figure 19-10 The netstat command in action
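If you want a quick look from a script rather than from netstat, a TCP connection attempt tells you whether something is listening. This is a minimal sketch, not a replacement for netstat or Nmap. The function names are made up, it only tests TCP, and you should point it only at hosts you are responsible for.

```python
import socket
from typing import Iterable, List


def is_port_open(host: str, port: int, timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        probe.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise
        return probe.connect_ex((host, port)) == 0


def open_ports(host: str, ports: Iterable[int]) -> List[int]:
    """Return the subset of ports on host that accept TCP connections."""
    return [port for port in ports if is_port_open(host, port)]


if __name__ == "__main__":
    # Check a few well-known ports on the local machine.
    print(open_ports("127.0.0.1", [22, 23, 80, 443]))
```

Run it before and after disabling a service, and the port that service was listening on should drop out of the list.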

Unpatched/Legacy Systems

Unpatched systems (including operating systems and firmware) and legacy systems present a glaring security threat. You need to deal with such problems on live systems on your network. When it comes to unpatched OSs, well, patch or isolate them! There are a number of areas in the book that touch on proper patching, especially Chapter 18, so we won’t go into more detail here.

Firmware updates enable programming upgrades that make network devices more efficient, more secure, and more robust, as you read in Chapter 18. Follow the procedures listed there to update firmware when necessary.

Legacy systems are a different issue altogether. Legacy means systems that are no longer supported by the OS maker and are no longer patched. In that case you need to consider the function of the system and either update if possible or, if not possible, isolate the legacy system on a locked-down network segment with robust firewall rules that give the system the support it needs (and protect the rest of the network if the system does get compromised). Equally, you need to be extremely careful about adding any software or hardware to a legacy system, as doing so might create even more vulnerabilities.

Unencrypted Channels

The open nature of the Internet has made it fairly common for us to use secure protocols or channels such as VPNs, SSL/TLS, and SSH. It never ceases to amaze me, however, how often people use unencrypted channels—especially in the most unlikely places. It was only a few years ago I stumbled upon a tech using Telnet to do remote logins into a very critical router for an ISP.

In general, look for the following insecure protocols and unencrypted channels:

• Using Telnet instead of SSH for remote terminal connections.

• Using HTTP instead of HTTPS on Web sites.

• Using insecure remote desktops like VNC.

• Using any insecure protocol in the clear. Run them through a VPN!
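A list like this can be turned into a rough audit aid. The sketch below assumes each service is running on its customary default port (Telnet on 23, HTTP on 80, VNC on 5900), which will not always be true, and the table and function name are made up for illustration.

```python
from typing import Dict, Iterable

# Default port -> advice drawn from the list above.
INSECURE_DEFAULT_PORTS: Dict[int, str] = {
    23: "Telnet: use SSH for remote terminal connections",
    80: "HTTP: use HTTPS on Web sites",
    5900: "VNC: run it through a VPN",
}


def flag_insecure(open_ports: Iterable[int]) -> Dict[int, str]:
    """Return advice for each open port that matches an insecure default."""
    return {
        port: INSECURE_DEFAULT_PORTS[port]
        for port in sorted(set(open_ports))
        if port in INSECURE_DEFAULT_PORTS
    }
```

Feeding it the open ports found on a host gives a short to-do list, though a service moved to a nonstandard port would slip past a check this simple.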

Cleartext Credentials

Older protocols offer a modicum of security—you often need a valid username and password, for example, when connecting to a File Transfer Protocol (FTP) server. The problem with such protocols (FTP, Telnet, POP3) is that they aren’t encrypted, and clients send cleartext credentials (usernames and passwords) to the server.

Let’s get one thing straight. If anyone’s listening, they’ll know your username and password. Unless you absolutely cannot avoid it, you shouldn’t be depending on the security of any application or protocol that stores or sends credentials in the clear. If you ignore this advice and the bad guys intercept your credentials, expect to get mocked on Twitter and Reddit.
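To make the point concrete, here is a minimal sketch of what an eavesdropper has to do with captured FTP control traffic. FTP clients log in by sending USER and PASS commands as plain text lines, so recovering the credentials is nothing more than string splitting. The function name and the sample capture are made up.

```python
from typing import Optional, Tuple


def credentials_in_capture(payload: bytes) -> Tuple[Optional[str], Optional[str]]:
    """Pull the username and password out of captured FTP control bytes."""
    username: Optional[str] = None
    password: Optional[str] = None
    for line in payload.split(b"\r\n"):
        command, _, argument = line.partition(b" ")
        if command.upper() == b"USER":
            username = argument.decode("utf-8", errors="replace")
        elif command.upper() == b"PASS":
            password = argument.decode("utf-8", errors="replace")
    return username, password


if __name__ == "__main__":
    # Hypothetical bytes as they would appear on the wire.
    captured = b"USER alice\r\nPASS hunter2\r\nSYST\r\n"
    print(credentials_in_capture(captured))
```

No decryption step appears anywhere in the sketch because there is nothing to decrypt, which is the whole problem with cleartext protocols.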

Another place where cleartext credentials can pop up is poor configuration of applications that would otherwise be well protected. Almost any remote control program has some “no security” setting. This might be as obvious as a “turn off security” option or it could be a setting such as Password Authentication Protocol (PAP) (which, if you recall, means cleartext passwords). The answer here is understanding your applications and knowing ahead of time how to configure them to ensure good encryption of credentials.

RF Emanation

Radio waves can penetrate walls, to a certain extent, and accidental spill, called RF emanation, can lead to a security vulnerability. Avoid this by placing some form of filtering between your systems and the place where the bad guys are going to be using their super high-tech Bourne Identity spy tools to pick up on the emanations.

To combat these emanations, the U.S. National Security Agency (NSA) developed a series of standards called TEMPEST. TEMPEST defines how to shield systems and manifests in a number of different products, such as coverings for individual systems, wall coverings, and special window coatings. Unless you work for a U.S. government agency, the chance of you seeing TEMPEST technologies is pretty small.

Hardening Your Network

Once you’ve recognized threats and vulnerabilities, it’s time to start applying security hardware, software, and processes to your network to prevent bad things from happening. This is called hardening your network. Let’s look at three aspects of network hardening: physical security, network security, and host security.

Physical Security

There’s an old saying: “The finest swordsman in all of France has nothing to fear from the second finest swordsman in all of France.” It means that they do the same things and know the same techniques. The only difference between the two is that one is a little better than the other. There’s a more modern extension of the old saying that says: “On the other hand, the finest swordsman in all of France can be defeated by a kid with a rocket launcher!” Which is to say that the inexperienced, when properly equipped, can and will often do something totally unexpected.

Proper security must address threats from the second finest swordsman as well as the kid. We can leave no stone unturned when it comes to hardening the network, and this begins with physical security. Physical threats manifest themselves in many forms, including property theft, data loss due to natural damage such as fire or natural disaster, data loss due to physical access, and property destruction resulting from accident or sabotage.

Let’s look at physical security as a two-step process of prevention methods and detection methods. First, prevent unauthorized access by limiting IT resources to appropriate personnel. Second, track the actions of those authorized (and sometimes unauthorized) personnel.

Prevention and Control

The first thing we have to do when it comes to protecting the network is to make the network resources accessible only to personnel who have a legitimate need to fiddle with them. You need to use access control hardware. Start with the simplest approach: a lock. Locking the door to the network closet or equipment room that holds servers, switches, routers, and other network gear goes a long way in protecting the network. Key control is critical here and includes assigning keys to appropriate staff, tracking key assignments, and collecting the keys when they are no longer needed by individuals who move on. This type of access must be guarded against circumvention by ensuring policies are followed regarding who may have or use the keys. The administrator who assigns keys should never give one to an unauthorized person without completing the appropriate procedures and paperwork.

Locking down servers within the server room with unique keys adds another layer of physical security to essential devices. Additionally, all modern server chassis come with tamper detection features that will log in the motherboard’s nonvolatile RAM (NVRAM) if the chassis has been opened. The log will show chassis intrusion with a date and time.

And it’s not just the server room (and the resources within it) that we need to lock up. How about the front door? There are a zillion stories of thieves and saboteurs coming in through the front (or sometimes back) door and making their way straight to the corporate treasure chest. A locked front door can be opened by an authorized person, and an unauthorized person can attempt to enter through that already opened door, a technique called tailgating. While it is possible to prevent tailgating with policies, it is only human nature to “hold the door” for that person coming in behind you. Tailgating is especially easy to do when dealing with large organizations in which people don’t know everyone else. If the tailgater dresses like everyone else and maybe has a badge that looks right, he or she probably won’t be challenged. Add an armload of gear, and who could blame you for helping that person by holding the door?

There are a couple of techniques available to foil a tailgater. The first is a security guard. Guards are great. They get to know everyone’s faces. They are there to protect assets and can lend a helping hand to the overloaded, but authorized, person who needs in. They are multipurpose in that they can secure building access, secure individual room and office access, and perform facility patrols. The guard station can serve as central control of security systems such as video surveillance and key control. Like all humans, security guards are subject to attacks such as social engineering, but for flexibility, common sense, and a way to take the edge off of high security, you can’t beat a professional security guard or two.

For areas where an entry guard is not practical, there is another way to prevent tailgating. An access control vestibule—traditionally called a mantrap—is an entryway with two successive locked doors and a small space between them providing one-way entry or exit. After entering the first door, the second door cannot be unlocked until the first door is closed and secured. Access to the second door may be a simple key or may require approval by someone else who watches the trap space on video. Unauthorized persons remain trapped until they are approved for entry, let out the first door, or held for the appropriate authorities.
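The interlock rule at the heart of an access control vestibule can be modeled as a tiny state machine. This is a sketch under assumptions: the class and method names are made up, and the rule that the outer door stays shut while the inner door is open is my own symmetric addition rather than something stated above.

```python
class AccessVestibule:
    """Two doors that are never allowed to be open at the same time."""

    def __init__(self) -> None:
        self.outer_open = False
        self.inner_open = False

    def open_outer(self) -> bool:
        # Assumed symmetric rule: outer door stays shut while inner is open.
        if self.inner_open:
            return False
        self.outer_open = True
        return True

    def close_outer(self) -> None:
        self.outer_open = False

    def open_inner(self, authorized: bool) -> bool:
        # The inner door unlocks only when the outer door is closed
        # and the person in the vestibule has been approved.
        if self.outer_open or not authorized:
            return False
        self.inner_open = True
        return True

    def close_inner(self) -> None:
        self.inner_open = False
```

A tailgater who slips through the outer door still faces the second check alone, because the inner door will not open until the outer one is closed behind them.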

Brass keys aren’t the only way to unlock a door. This is the 21st century, after all. Twenty-five years ago, I worked in a campus facility with a lot of interconnected buildings. Initial access to buildings was through a security guard and then we traveled between the buildings with connecting tunnels. Each end of the tunnels had a set of sliding glass doors that kind of worked like the doors on the starship Enterprise. We were assigned badges with built-in radio frequency ID (RFID) chips. As we neared a door, the RFID chip was queried by circuitry in the door frame called a proximity reader, checked against a database for authorization, and then the door slid open electromechanically.

It was so cool and so fast that people would jog the hallways during lunch hours and not even slow down for any of the doors. A quarter century later, the technology has only gotten better. The badges in the old days were a little larger than a credit card and about three times as thick. Today, the RFID chip can be implanted in a small, unobtrusive key fob, like the kind you use to unlock your car.

If there is a single drawback to all of the physical door access controls mentioned so far, it is that access is generally governed by something that is in the possession of someone who has authorization to enter a locked place. That something may be a key, a badge, a key fob with a chip, or some other physical token. The problem here, of course, is that these items can be given or taken away. If not reported in a timely fashion, a huge security gap exists.

To move beyond the physical possession problem of entry access, physical security can be governed by something that is known only to authorized persons. A code or password that is assigned to a specific individual for a particular asset can be entered on an alphanumeric keypad that controls an electric or electromechanical door lock. There is a similar door lock mechanism called a cipher lock. A cipher lock is a door unlocking system that uses a door handle, a latch, and a sequence of mechanical push buttons. When the buttons are pressed in the correct order, the door unlocks and the door handle works. Turning the handle opens the latch or, if you pressed the buttons in the wrong order, clears the unlocking mechanism so you can try again. Care must be taken by staff who are assigned a code to protect that code.

This knowledge-based approach to access control may be a little better than a possession-based system because information is more difficult to steal than a physical token. However, poor management of information can leave an asset vulnerable. Poor management includes writing codes down and leaving the notes easily accessible. Good password/code control means memorizing information where possible or securing written notes about codes and passwords.

Well-controlled information is difficult to steal, but it’s not perfect because sharing information is so easy. Someone can loan out his or her password to a seemingly trustworthy friend or co-worker. While most times this is probably not a real security risk, there is always a chance that there could be disastrous results. Social engineering or over-trusting can cause someone to share a private code or password. Systems should be established to reassign codes and passwords regularly to deal with the natural leakage that can occur with this type of security.

The best way to prevent loss of access control is to build physical security around a key that cannot be shared or lost. Biometric access calls for using a unique physical characteristic of a person to permit access to a controlled IT resource. Doorways can be triggered to unlock using fingerprint readers, facial recognition cameras, voice analyzers, retinal blood vessel scanners, or other, more exotic characteristics. While not perfect, biometrics represent a giant leap in secure access. For even more effective access control, multifactor authentication can be used, where access is granted based on more than one access technique. For instance, in order to gain access to a secure server room, a user might have to pass a fingerprint scan (inherence factor) and have an approved security fob (possession factor).
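The fingerprint-plus-fob example can be expressed as a simple rule: the credentials presented must cover at least two different kinds of factor. The sketch below is illustrative only. The credential names and the table mapping them to factor kinds are made up, and a real access system would also verify each credential rather than just classify it.

```python
from typing import Dict, Iterable

# Hypothetical mapping of credential types to factor kinds.
FACTOR_KINDS: Dict[str, str] = {
    "fingerprint": "inherence",
    "retina": "inherence",
    "fob": "possession",
    "badge": "possession",
    "keypad_code": "knowledge",
}


def is_multifactor(presented: Iterable[str]) -> bool:
    """Return True if the presented credentials span two or more factor kinds."""
    kinds = {FACTOR_KINDS[item] for item in presented if item in FACTOR_KINDS}
    return len(kinds) >= 2
```

Under this rule a badge plus a fob does not qualify, since both are things you possess and both can be lost or loaned out together.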

Smart Lockers

A smart locker is a locker that an organization can control via wireless or wired networking to allow temporary access to a locker so users can access items (Figure 19-11). First popularized by Amazon as a delivery tool, smart lockers are common anywhere an organization needs to give users access to…whatever they can fit into a locker!

Figure 19-11 Typical smart locker

Let me point out something related to all of this door locking and unlocking technology. Physical asset security is important, but generally not as important as the safety of people. Designers of these door-locking systems must take into account safety features such as what happens to the state of a lock in an emergency like a power failure or fire. Doors with electromechanical locking controls can respond to an emergency condition and lock or unlock automatically, respectively called fail secure or fail safe. Users and occupants of facilities should be informed about what to expect in these types of events.
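The difference between fail secure and fail safe comes down to one decision: what the lock does when power is lost. Here is a minimal sketch of that decision. The enum, function, and parameter names are made up, and a real door controller would weigh more inputs, such as fire alarm signals.

```python
from enum import Enum


class FailMode(Enum):
    FAIL_SAFE = "fail safe"      # unlocks in an emergency
    FAIL_SECURE = "fail secure"  # locks in an emergency


def door_locked(mode: FailMode, power_ok: bool, normally_locked: bool = True) -> bool:
    """Return True if the door is locked under the given conditions."""
    if power_ok:
        return normally_locked
    # On power loss, the configured fail mode decides the lock state.
    return mode is FailMode.FAIL_SECURE
```

Which mode a given door should use is a policy decision that weighs the assets behind the door against the safety of the people who may need to get through it.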

Monitoring

Okay, the physical assets of the network have been secured. It took guards, locks, passwords, eyeballs, and a pile of technology. Now, the only people who have access to IT resources are those who have been carefully selected, screened, trained, and authorized. The network is safe, right? Maybe not. You see, here comes the old problem again: people are human. Humans make mistakes, humans can become disgruntled, and humans can be tempted. The only real solution is heavily armored robots with artificial intelligence and bad attitudes. But until that becomes practical, maybe what we need to do next is to ensure that those authorized people can be held accountable for what they do with the physical resources of the network.

Enter video surveillance. With video surveillance of facilities and assets, authorized staff can be monitored for mistakes or something more nefarious. Better still, our kid with a rocket launcher (remember him?) can be tracked and caught after he sneaks into the building.

Let’s look at two video surveillance concepts. Video monitoring entails using remotely monitored visual systems. IP cameras and closed-circuit televisions (CCTVs) are specific implementations of video monitoring. CCTV is a self-contained, closed system in which video cameras feed their signal to specific, dedicated monitors and storage devices. CCTV cameras can be monitored in real time by security staff, but the monitoring location is limited to wherever the video monitors are placed. If real-time monitoring is not required or viewing is delayed, stored video can be reviewed later as needed.

IP cameras have the benefit of being a more open system than CCTV. IP video streams can be monitored by anyone who is authorized to do so and can access the network on which the cameras are installed. The stream can be saved to a hard drive or network storage device. Multiple workstations can simultaneously monitor video streams and multiple cameras with ease.

Network Security

Protecting network assets is more than a physical exercise. Physically speaking, we can harden a network by preventing and controlling access to tangible network resources through things like locking doors and video monitoring. Next we will want to protect our network from malicious, suspicious, or potential threats that might connect to or access the network. This is called access control and it encompasses both physical security and network security. In this section we look at some technologies and techniques to implement network access control, including user account control, edge devices, posture assessment, persistent and non-persistent agents, guest networks, and quarantine networks.

Controlling User Accounts

A user account is just information: nothing more than a combination of a username and password. Like any important information, it’s critical to control who has a user account and to track what these accounts can do. Access to user accounts should be restricted to the assigned individuals (no sharing, no stealing), and permissions for those accounts should follow the principle of least privilege—access to only the resources those individuals need, no more.

Tight control of user accounts helps prevent unauthorized access or improper access. Unauthorized access means a person does something that exceeds his or her authority. Improper access occurs when a user who shouldn’t have access gains it through some means. Often improper access happens when a network tech or administrator makes a mistake.

Disabling unused accounts is an important first step in addressing these problems, but good user account control goes far deeper than that. One of your best tools for user account control is to implement groups. Instead of giving permissions to individual user accounts, give them to groups; this makes keeping track of the permissions assigned to individual user accounts much easier.

Figure 19-12 shows an example of giving permissions to a group for a folder in Windows Server. Once a group is created and its permissions are set, you can then add user accounts to that group as needed. Any user account that becomes a member of a group automatically gets the permissions assigned to that group.

Figure 19-12 Giving a group permissions for a folder in Windows

Figure 19-13 shows an example of adding a user to a newly created group in the same Windows Server system.

Figure 19-13 Adding a user to a newly created group

You should always put user accounts into groups to enhance network security. This applies to simple networks, which get local groups, and to domain-based networks, which get domain groups. Do not underestimate the importance of properly configuring both local groups and domain groups.

Groups are a great way to handle increased complexity without increasing the administrative burden on network administrators because all network operating systems combine permissions. When a user is a member of more than one group, which permissions does he or she have with respect to any particular resource?

In all network operating systems, the permissions of the groups are combined, and the result is what is called the effective permissions the user has to access a given resource. Let’s use an example from Windows Server. If Timmy is a member of the Sales group, which has List Folder Contents permission to a folder, and he is also a member of the Managers group, which has Read and Execute permissions to the same folder, Timmy will have List Folder Contents and Read and Execute permissions to that folder.

Combined permissions can also lead to conflicting permissions, where a user does not get access to a needed resource because one of his or her groups has a Deny permission for that resource while another allows it. Deny generally trumps any Allow permission, whether the Allow is granted to another group or directly to the user account.
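Combining group permissions, with Deny winning any conflict, can be sketched in Python as follows. This is a deliberately simplified model, not how Windows Server actually stores or evaluates permissions: the effective_permissions function, the dictionary layout, and the Contractors group are all hypothetical, and inheritance is ignored entirely.

```python
def effective_permissions(user_groups, group_acl):
    """Combine group permissions on one resource; Deny trumps Allow.

    group_acl maps a group name to {"allow": set, "deny": set}.
    """
    allowed, denied = set(), set()
    for group in user_groups:
        entry = group_acl.get(group, {})
        allowed |= entry.get("allow", set())
        denied |= entry.get("deny", set())
    # Anything explicitly denied is removed from the combined result.
    return allowed - denied


folder_acl = {
    "Sales": {"allow": {"List Folder Contents"}},
    "Managers": {"allow": {"Read and Execute"}},
    "Contractors": {"deny": {"Read and Execute"}},  # hypothetical group
}

timmy = effective_permissions(["Sales", "Managers"], folder_acl)
print(sorted(timmy))  # ['List Folder Contents', 'Read and Execute']

# If Timmy were also placed in a group with a Deny, the Deny would win.
conflicted = effective_permissions(["Sales", "Managers", "Contractors"], folder_acl)
print(sorted(conflicted))  # ['List Folder Contents']
```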

Watch out for default user accounts and groups—they can grant improper access or secret backdoor access to your network! All network operating systems have a default Everyone group, and it can easily be used to sneak into shared resources. This Everyone group, as its name implies, literally includes anyone who connects to that resource. Some versions of Windows give full control to the Everyone group by default. All of the default groups—Everyone, Guest, Users—define broad groups of users. Never use them unless you intend to permit all those folks to access a resource. If you use one of the default groups, remember to configure it with the proper permissions to prevent users from doing things you don’t want them to do with a shared resource!

All of these groups only do one thing for you: they enable you to keep track of your user accounts. That way you know resources are only available for users who need those resources, and users only access the resources you want them to use.

Before I move on, let me add one more tool to your kit: diligence. Managing user accounts is a thankless and difficult task, but one that you must stay on top of if you want to keep your network secure. Most organizations integrate the creating, disabling/enabling, and deleting of user accounts with the work of their human resources folks. Whenever a person joins, quits, or moves, the network admin is always one of the first to know!

The administration of permissions can become incredibly complex—even with judicious use of groups. You now know what happens when a user account has multiple sets of permissions to the same resource, but what happens if the user has one set of permissions to a folder and a different set of permissions to one of its subfolders? This brings up a phenomenon called inheritance. I won’t get into the many ways different network operating systems handle inherited permissions. Luckily for you, the CompTIA Network+ exam doesn’t test you on all the nuances of combined or inherited permissions—just be aware they exist. Those who go on to get more advanced certifications, on the other hand, must become extremely familiar with the many complex permutations of permissions.

Edge

Access control can be broadly defined as exactly what it sounds like: one or more methods to govern or limit entry to a particular environment. Historically, this was accomplished and enforced with simply communicated rules and policies and human oversight. As systems grew in size and sophistication, it became possible to enforce the governing rules using automated technology, relieving managers to focus on other tasks. These control technologies began their developmental life as a central control system with peripheral actuators.

Let me show you what I mean. Take the example of the Star Trek–like security door system I talked about in the “Physical Security” section a little while ago. That system worked by having a computer with a database of doors, staff, and a decision matrix. Because it controlled many doors, it was centrally located and had wires running to and from it to every controlled door on the campus. Each door had two peripherals installed: a proximity reader with a status indicator, and a door open/close actuator. The proximity reader would read the data from the RFID chip carried by someone and send the data over a sometimes very long data cable to the control computer.

The computer would take the data and the door identifier and check to see if the data was valid, current, and authorized to pass through the door. If it did not meet authorization criteria, a data signal was sent back down the data line to cause a red LED to blink on the proximity reader. Of course, the door would not open. If all of the criteria were met for authorization, a good signal was sent down the data line to make a green LED glow, and power was sent down the line to operate the door actuator.
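The decision the central computer makes can be sketched in Python. Everything named here is an assumption for illustration: the BADGES table, the badge and door identifiers, and the check_badge function stand in for the proprietary database and decision matrix, whose real format the text does not describe.

```python
from datetime import date

# Hypothetical badge database held by the central controller.
BADGES = {
    "badge-1001": {"expires": date(2030, 1, 1), "doors": {"lobby", "server-room"}},
    "badge-1002": {"expires": date(2020, 1, 1), "doors": {"lobby"}},
}


def check_badge(badge_id, door_id, today):
    """Return (led_color, unlock) for one badge read at one door."""
    record = BADGES.get(badge_id)
    valid = record is not None                           # badge is known
    current = valid and today <= record["expires"]       # badge not expired
    authorized = current and door_id in record["doors"]  # allowed at this door
    if authorized:
        return ("green", True)   # green LED, power the door actuator
    return ("red", False)        # red LED, door stays shut


today = date(2025, 1, 1)
print(check_badge("badge-1001", "server-room", today))  # ('green', True)
print(check_badge("badge-1002", "lobby", today))        # ('red', False)
```

The three checks mirror the three criteria in the text: the data must be valid, current, and authorized for that particular door.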

We’ve talked about the benefits of this system, so let’s look at a few drawbacks. First, the system was proprietary. As systems like these were introduced, competition stymied any effort to create industry standards. Central control meant that large, powerful boxes had to be developed as central controllers. Expandability became an issue as controllers maxed out the number of security doors they could support. Finally, the biggest problem was the large amount of cabling needed to support large numbers of doors and potentially great distances from the central controller. The problem was made worse when facilities had to retrofit nonsecure doors for secure ones.

A lot of time and technology has passed since those days. Today’s automated secure entry systems take advantage of newer technologies by leveraging existing network wiring. By using IP traffic and Power over Ethernet (PoE), the entire system can usually run over the existing wiring. Applications and protocols have been standardized so they can run on existing server hardware.

Also contributing to the simplification and standardization of these security systems are edge devices. An edge device is a piece of hardware that has been optimized to perform a task. Edge devices work in coordination with other edge devices and controllers.

The primary defining characteristic of an edge device is that it is installed closer to a client device, such as a workstation or a security door, than to the core or backbone of a network. In this instance, a control program that tracks entries, distributes and synchronizes copies of databases, and tracks door status can be run on a central server. In turn, it communicates with edge devices. The edge devices keep a local copy of the database and make their own decisions about whether or not a door should be opened.

Posture Assessment

Network access control (NAC) is a standardized approach to verify that a node meets certain criteria before it is allowed to connect to a network. Many product vendors implement NAC in different ways. Network Admission Control (also known as NAC) is Cisco’s version of network access control.

Cisco’s NAC can dictate that specific criteria must be met before allowing a node to connect to a secure network. Devices that do not meet the required criteria can be shunted with no connection or made to connect to another network. The types of criteria that can be checked are broad ranging and can be tested for in a number of ways. For the purposes of this text, we are mostly concerned about verifying that a device attempting to connect is not a threat to network security.

Cisco uses posture assessment as one of the tools to implement NAC. Posture assessment, as you’ll recall from Chapter 18, is a way to expose or catalog all the threats and risks facing an organization. In the Cisco implementation, a switch or router that has posture assessment enabled and configured will query network devices to confirm that they meet minimum security standards before being permitted to connect to the production network.

Posture assessment includes checking things like type and version of anti-malware, level of QoS, and type/version of operating system. Posture assessment can perform different checks at succeeding stages of connection. Certain tests can be applied at the initial physical connection. After that, more checks can be conducted prior to logging in. Prelogin assessment may look at the type and version of operating system, detect whether keystroke loggers are present, and check whether the station is real or a virtual machine. The host may also be queried for digital certificates, the version and currency of its anti-malware, and a long list of other checks.

If everything checks out, the host will be granted a connection to the production network. If posture assessment finds a deficiency or potential threat, the host can be denied a connection or connected to a non-production network until it has been sufficiently upgraded.
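The outcome of a posture assessment can be sketched as a simple decision function in Python. This is not Cisco's implementation: the HostReport fields, the minimum version, the approved operating system list, and the choice of which failures lead to a denial versus a non-production (quarantine) network are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical policy values; a real deployment would define its own.
MIN_ANTIMALWARE_VERSION = (4, 2)
APPROVED_OS = {"Windows 11", "Ubuntu 24.04"}


@dataclass
class HostReport:
    os_name: str
    antimalware_version: tuple   # e.g. (4, 2) for version 4.2
    has_valid_certificate: bool
    keylogger_detected: bool


def assess_posture(report):
    """Return 'production', 'quarantine', or 'deny' for a connecting host."""
    # Assumed policy: an active threat or missing certificate means no connection.
    if report.keylogger_detected or not report.has_valid_certificate:
        return "deny"
    # Assumed policy: fixable deficiencies send the host to a non-production
    # network until it has been upgraded.
    if report.os_name not in APPROVED_OS:
        return "quarantine"
    if report.antimalware_version < MIN_ANTIMALWARE_VERSION:
        return "quarantine"
    return "production"


healthy = HostReport("Windows 11", (4, 3), True, False)
outdated = HostReport("Windows 11", (4, 1), True, False)
infected = HostReport("Windows 11", (4, 3), True, True)

print(assess_posture(healthy))   # production
print(assess_posture(outdated))  # quarantine
print(assess_posture(infected))  # deny
```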

Persistent and Non-persistent Agents