Chapter 10

Summarizing Virtualization and Cloud Computing Concepts

This chapter covers the following topics related to Objective 2.2 (Summarize virtualization and cloud computing concepts) of the CompTIA Security+ SY0-601 certification exam:

Cloud Models

Infrastructure as a service (IaaS)

Platform as a service (PaaS)

Software as a service (SaaS)

Anything as a service (XaaS)

Public

Community

Private

Hybrid

Cloud service providers

Managed service provider (MSP)/managed security service provider (MSSP)

On-premises vs. off-premises

Fog computing

Edge computing

Thin client

Containers

Microservices/API

Infrastructure as code

Software-defined networking (SDN)

Software-defined visibility (SDV)

Serverless architecture

Services integration

Resource policies

Transit gateway

Virtualization

Virtual machine (VM) sprawl avoidance

VM escape protection

These days, more and more organizations are transferring some or all of their server and network resources to the cloud. This effort creates many potential hazards and vulnerabilities that you, as the security administrator, and the cloud provider must address. Top among these concerns are the servers, where all data is stored and accessed. Servers of all types should be hardened and protected from a variety of attacks to keep the integrity of data from being compromised. However, data must also be available. Therefore, you must strike a balance of security and availability. This chapter provides details about several virtualization and cloud computing concepts.

“Do I Know This Already?” Quiz

The “Do I Know This Already?” quiz enables you to assess whether you should read this entire chapter thoroughly or jump to the “Chapter Review Activities” section. If you are in doubt about your answers to these questions or your own assessment of your knowledge of the topics, read the entire chapter. Table 10-1 lists the major headings in this chapter and their corresponding “Do I Know This Already?” quiz questions. You can find the answers in Appendix A, “Answers to the ‘Do I Know This Already?’ Quizzes and Review Questions.”

Table 10-1 “Do I Know This Already?” Section-to-Question Mapping

| Foundation Topics Section | Questions |
|---|---|
| Cloud Models | 1–3 |
| Cloud Service Providers | 4–5 |
| Cloud Architecture Components | 6–8 |
| Virtual Machine (VM) Sprawl Avoidance and VM Escape Protection | 9–10 |

Caution

The goal of self-assessment is to gauge your mastery of the topics in this chapter. If you do not know the answer to a question or are only partially sure of the answer, you should mark that question as wrong for purposes of the self-assessment. Giving yourself credit for an answer you correctly guess skews your self-assessment results and might provide you with a false sense of security.

1. Which of the following cloud service models will you use if you want to host applications on virtual machines, deploy load balancers, and use storage buckets?

IaaS

PaaS

SaaS

None of these answers are correct.

2. Which of the following cloud deployments is a mix of public and private cloud solutions where multiple organizations can share the public cloud portion?

Community cloud

PaaS

SaaS

MSSP cloud

3. Google Drive, Office 365, and Dropbox are examples of which of the following types of cloud service?

SaaS

IaaS

PaaS

All of these answers are correct.

4. What type of company or organization provides services to manage your security devices and can also help monitor and respond to security incidents?

SaaS provider

PaaS provider

MSSP

Serverless provider

5. Which of the following organizations delivers network, application, system, and management services using a pay-as-you-go model?

SaaS provider

PaaS provider

XaaS provider

Managed service provider (MSP)

6. Which term is used to describe an ecosystem of resources and applications in new network services (including 5G and IoT)?

SaaS

VPC

Edge computing

None of these answers are correct.

7. Which of the following are computer systems that run from resources stored on a central server or from the cloud instead of a local (on-premises) system?

Thin clients

Fog edge devices

VPCs

Containers

8. Which of the following are technologies and solutions to manage, deploy, and orchestrate containers?

Docker Swarm

Apache Mesos

Kubernetes

All of these answers are correct.

9. What condition could occur when an organization can no longer effectively control and manage all the VMs on a network or in the cloud?

VM sprawl

VM escape

Hypervisor escape

Hypervisor sprawl

10. Which condition occurs when an attacker or malware compromises one VM and then attacks the hypervisor?

VM escape

Hypervisor escape

VM sprawl

Hypervisor sprawl

Foundation Topics

Cloud Models

Cloud computing can be defined as a way of offering on-demand services that extend the capabilities of a person’s computer or an organization’s network. These might be free services, such as personal browser-based email from various providers, or they could be offered on a pay-per-use basis, such as services that offer data access, data storage, infrastructure, and online gaming. A network connection of some sort is required to reach the cloud and gain access to these services in real time.

Some of the benefits to an organization using cloud-based services include lowered cost, less administration and maintenance, more reliability, increased scalability, and possible increased performance. A basic example of a cloud-based service would be browser-based email. A small business with few employees needs email but might not be able to afford an email server, and it might not want its own hosted domain and the costs and work that go along with it. By connecting to a free browser-based service, the small business can obtain nearly unlimited email, contacts, and calendar solutions. However, the business has no administrative control over the service, and there are some security concerns, which are discussed later in this chapter.

Cloud computing services are generally broken down into several categories of services:

Software as a service (SaaS): The most commonly used and recognized of the cloud service categories, SaaS offers users access to applications that are provided by a third party over the Internet. The applications need not be installed on the local computer. In many cases these applications are run within a web browser; in other cases the user connects with screen-sharing programs or remote desktop programs. A common example is webmail.

Note

Often compared to SaaS is the application service provider (ASP) model. SaaS typically offers a generalized service to many users. However, an ASP typically delivers a service (perhaps a single application) to a small number of users.

Infrastructure as a service (IaaS): This service offers computer networking, storage, load balancing, routing, and VM hosting. More and more organizations are seeing the benefits of offloading some of their networking infrastructure to the cloud.

Platform as a service (PaaS): This service provides various software solutions to organizations, especially the ability to develop applications in a virtual environment without the cost or administration of a physical platform. PaaS is used for easy-to-configure operating systems and on-demand computing. It often relies on IaaS for the infrastructure underlying the platform. Cloud-based virtual desktop environments (VDEs) and virtual desktop infrastructures (VDIs) are often considered to be part of this service but can be part of IaaS as well.

Anything as a service (XaaS): There are many types of cloud-based services. If they don’t fall into the previous categories, they often fall under the category “anything as a service,” or XaaS. For instance, a service in which a large service provider integrates its security services into the company’s/customer’s existing infrastructure is often referred to as security as a service (SECaaS). The concept is that the service provider can provide the security more efficiently and more cost effectively than a company can, especially if it has a limited IT staff or budget. The Cloud Security Alliance (CSA) defines various categories to help businesses implement and understand SECaaS, including encryption, data loss prevention (DLP), continuous monitoring, business continuity and disaster recovery (BCDR), vulnerability scanning, and much more. Periodically, new services will arrive, such as monitoring as a service (MaaS)—a framework that facilitates the deployment of monitoring within the cloud in a continuous fashion.

Tip

NIST’s special publication (SP) 800-145 provides a great overview of the definitions of cloud computing concepts. You can access this document at https://csrc.nist.gov/publications/detail/sp/800-145/final.

Public, Private, Hybrid, and Community Clouds

Organizations use different types of clouds: public, private, hybrid, and community.

Public cloud: In this type of cloud, a service provider offers applications and storage space to the general public over the Internet. Examples include free, web-based email services and pay-as-you-go business-class services. The main benefits of this type of service include low (or zero) cost and scalability. Providers of public cloud space include Google, Rackspace, and Amazon.

Private cloud: This type of cloud is designed with a particular organization in mind. In it, you, as security administrator, have more control over the data and infrastructure. A limited number of people have access to the private cloud, and they usually reach it from behind a firewall of some sort. Resources might be provided by a third party or could come from your server room or data center. In other words, the private cloud could be deployed using resources in an on-premises data center or dedicated resources from a third-party cloud provider. Examples are Amazon Virtual Private Cloud (https://aws.amazon.com/vpc) and the Microsoft Azure private cloud offering (https://azure.microsoft.com/en-us/overview/what-is-a-private-cloud).

Hybrid cloud: This type is a mixture of public and private clouds. Dedicated servers located within the organization and cloud servers from a third party are used together to form the collective network. In these hybrid scenarios, confidential data is usually kept in-house.

Community cloud: This is another mix of public and private cloud deployments where multiple organizations can share the public portion. Community clouds appeal to organizations that usually have a common form of computing and storing of data.

The type of cloud an organization uses is dictated by its budget, the level of security it requires, and the number of staff (or lack thereof) it has to administer its resources. Although a private cloud can be very appealing, it is often beyond the ability of an organization, forcing that organization to seek a public or community-based cloud. Regardless of the type of cloud used, resources still have to be secured by someone, and you’ll have a hand in that security one way or the other.

Cloud Service Providers

A cloud service provider (CSP), such as Amazon Web Services (AWS), Microsoft Azure, or Google Cloud Platform (GCP), might offer one or more of the aforementioned services. CSPs have no choice but to take their security and compliance responsibilities very seriously. For instance, Amazon created a Shared Responsibility Model to define in detail the responsibilities of AWS customers as well as those of Amazon. You can access the Amazon Shared Responsibility Model at https://aws.amazon.com/compliance/shared-responsibility-model.

Note

The division of responsibility between the provider and the customer depends on the type of cloud service model, whether SaaS, PaaS, or IaaS.

Similar to the CSP is the managed service provider (MSP), which can deliver network, application, system, and management services using a pay-as-you-go model. An MSP is an organization that can manage your network infrastructure, servers, and in some cases your security devices. Those companies that provide services to manage your security devices and can also help monitor and respond to security incidents are called managed security service providers (MSSPs).

Tip

Another type of security service provided by vendors is managed detection and response (MDR). MDR evolved from MSSPs to help organizations that lack the resources to establish robust security operations programs to detect and respond to threats. MDR is different from traditional MSSP services because it is more focused on threat detection rather than compliance and traditional network security device configuration services. Most of the services offered by MDR providers are delivered using their own set of tools and technologies but are deployed on the customer’s premises. The techniques MDR providers use may vary. A few MDR providers rely solely on security logs, whereas others use network security monitoring or endpoint activity to secure the network. MDR solutions rely heavily on security event management; Security Orchestration, Automation, and Response (SOAR); and advanced analytics.

Cloud Architecture Components

Cloud computing allows you to store and access your data, create applications, and interact with thousands of other services over the Internet (off-premises) instead of on your local network and computers (on-premises). With a good Internet connection, you can reach cloud services anytime, anywhere. Several cloud-related architecture components are described next, including the following:

Fog and edge computing

Thin clients

Containers

Microservices and related APIs

Fog and Edge Computing

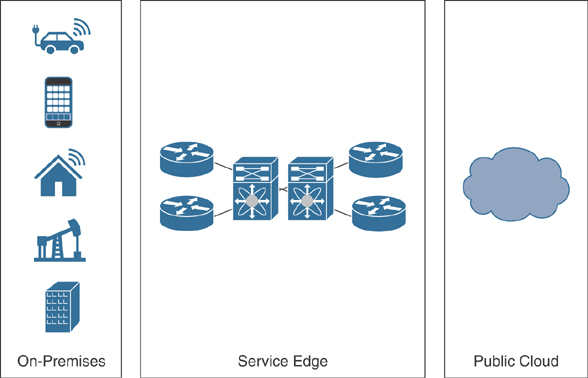

The two architectural concepts called fog computing and edge computing are often used interchangeably. However, there are a few differences. The term edge computing describes an ecosystem of resources and applications in new network services (including 5G and IoT). Its main benefits are greater network speeds, low latency, and computational power near the user.

Figure 10-1 illustrates the concept of edge computing.

FIGURE 10-1 Edge Computing

The “edge” in edge computing is the location nearer the consumer (otherwise known as the subscriber) and where data is processed or stored without being backhauled to a central location. This service edge is optimized to provide a low-cost solution, increase performance, and provide a better user experience.

The term fog computing was originally created by Cisco in the early 2010s. It describes the decentralization of computing infrastructure by “bringing the cloud to the ground” (thus the term fog). This architecture enables components of the edge computing concept to easily push compute power away from the public cloud to improve scalability and performance. Fog computing accomplishes this by putting data, compute, storage, and applications near the end user or IoT device.

Other similar and related concepts have been adopted throughout the years. For example, consider the multi-access edge computing (MEC) concept created by the European Telecommunications Standards Institute (ETSI), designed to benefit application developers and content providers. Another term used by some organizations is cloudlets, which are micro data centers that can be deployed locally near the data source (in some cases temporarily for emergency response units and other use cases).

Thin Clients

Thin clients are computer systems that run from resources stored on a central server or from the cloud instead of a local (on-premises) system. When you use a thin client, you connect remotely to a server-based computing environment where the applications, sensitive data, and memory are stored.

Thin clients can be used in several ways:

Shared terminal services: Users at thin client stations share a server-based operating system and applications.

Desktop virtualization: A full desktop machine (including the operating system and applications) lives in a virtual machine in an on-premises server or in the cloud (off-premises).

Browser-based thin clients: All applications are accessed within a web browser.

Figure 10-2 shows a user accessing a virtual desktop running Microsoft Windows and custom applications running in the cloud.

FIGURE 10-2 Thin Client Accessing a Virtualized Desktop

Containers

Before you can even think of building a distributed system, you must first understand how the container images that contain your applications make up all the underlying pieces of such a distributed system. Applications are normally composed of a language runtime, libraries, and source code. For instance, your application may depend on third-party or open-source components such as the Linux kernel (www.kernel.org), nginx (https://nginx.org), or OpenSSL (www.openssl.org). Shared libraries are typically shipped as shared components of the operating system that you installed on a system. The dependency on these libraries introduces difficulties when an application developed on your desktop, laptop, or any other development machine (dev system) depends on a shared library that isn’t available when the program is deployed to the production system. Even when the dev and production systems share the exact same version of the operating system, bugs can occur when programmers forget to include dependent asset files inside a package that they deploy to production.

The good news is that you can package applications in a way that makes it easy to share them with others. In this case, containers become very useful. Docker, one of the most popular container runtime engines, makes it easy to package an executable and push it to a remote registry where it can later be pulled by others.

Container registries are available in all of the major public cloud providers (for example, AWS, Google Cloud Platform, and Microsoft Azure) as well as services to build images. You can also run your own registry using open-source or commercial systems. These registries make it easy for developers to manage and deploy private images, while image-builder services provide easy integration with continuous delivery systems.

Container images bundle a program and its dependencies into a single artifact under a root file system. Container images are made up of a series of file system layers. Each layer adds, removes, or modifies files from the preceding layer in the file system. The overlay system is used both when packaging up the image and when the image is actually being used. At runtime, there are several concrete implementations of such file systems, including aufs, overlay, and overlay2.

Let’s look at an example of how container images work. Figure 10-3 shows three container images: A, B, and C. Container Image B is “forked” from Container Image A. Then, in Container Image B, Python version 3 is added. Furthermore, Container Image C is built on Container Image B, and the programmer adds OpenSSL and nginx to develop a web server and enable TLS.

FIGURE 10-3 How Container Images Work

Abstractly, each container image layer builds on the previous one. Each parent reference is a pointer. The example in Figure 10-3 includes a simple set of containers; in many environments, you will encounter a much larger directed acyclic graph.
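To make the layering idea concrete, the following is a minimal Python sketch and not how Docker or any overlay file system is actually implemented. It assumes a layer can be modeled as a dictionary of file paths, where a value of None marks a file that the layer removes. The file paths and the names image_a, image_b, and image_c are hypothetical and only loosely follow Figure 10-3.

```python
from typing import Dict, List, Optional

# A layer maps a file path to its content, or to None to mark a removal.
Layer = Dict[str, Optional[str]]


def flatten(layers: List[Layer]) -> Dict[str, str]:
    """Apply layers oldest first and return the file system a container would see."""
    view: Dict[str, str] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                view.pop(path, None)  # this layer removes the file
            else:
                view[path] = content  # this layer adds or modifies the file
    return view


# Hypothetical contents: B builds on A, and C builds on B.
image_a: List[Layer] = [{"/etc/os-release": "base OS", "/bin/sh": "shell"}]
image_b: List[Layer] = image_a + [{"/usr/bin/python3": "Python 3"}]
image_c: List[Layer] = image_b + [
    {"/usr/sbin/nginx": "nginx", "/usr/lib/libssl.so": "OpenSSL"}
]

print(sorted(flatten(image_c)))
```

Because each image is its parent's list of layers plus one more, Image A is never modified when Image B or Image C is built, which mirrors the parent-pointer relationship described above.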

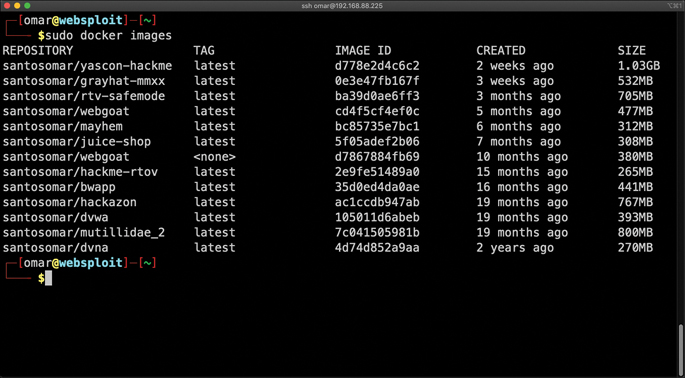

Even though the Security+ exam does not cover Docker in detail, it is still good to see a few examples of Docker containers, images, and related commands. Figure 10-4 shows the output of the docker images command.

FIGURE 10-4 Displaying the Docker Images

The Docker images shown in Figure 10-4 are intentionally vulnerable applications that you can also use to practice your skills. These Docker images and containers are included in a VM called WebSploit (websploit.org) by Omar Santos. The VM is built on top of Kali Linux and includes several additional tools, along with the aforementioned Docker containers. This can be a good tool, not only to become familiar with Docker, but also to learn and practice offensive and defensive security skills.

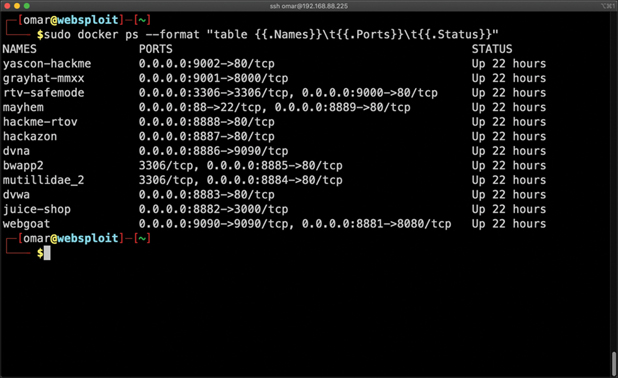

Figure 10-5 shows the output of the docker ps command used to see all the running Docker containers in a system.

FIGURE 10-5 Output of the docker ps Command

You can use a public repository, a cloud provider’s repository, or a private Docker image repository. Docker’s public image repository is called Docker Hub (https://hub.docker.com). You can find images by going to the Docker Hub website or by using the docker search command, as demonstrated in Figure 10-6.

FIGURE 10-6 Output of the docker search Command

In Figure 10-6, the user searches for a container image that matches the ubuntu keyword.

Tip

You can practice and deploy your first container by using Katacoda (an interactive system that allows you to learn many different technologies, including Docker, Kubernetes, Git, TensorFlow, and many others). You can access Katacoda at www.katacoda.com. There are numerous interactive scenarios provided by Katacoda. For instance, you can use the “Deploying Your First Docker Container” scenario to learn (hands-on) Docker: www.katacoda.com/courses/docker/deploying-first-container.

In larger environments, you will not deploy and orchestrate Docker containers in a manual way. You will want to automate as much as possible. This is where Kubernetes comes into play. Kubernetes (often referred to as k8s) automates the distribution, scheduling, and orchestration of application containers across a cluster.

The following are the Kubernetes components:

Control Plane: This component coordinates all the activities in your cluster (scheduling, scaling, and deploying applications).

Node: This VM or physical server acts as a worker machine in a Kubernetes cluster.

Pod: This group of one or more containers provides shared storage and networking, including a specification for how to run the containers. Each pod has an IP address, and it is expected to be able to reach all other pods within the environment.
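The relationship among these three components can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the Kubernetes scheduler or its API: the Pod and Node classes, the cpu_request field, and the "most free CPU" placement rule are all hypothetical simplifications chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Pod:
    name: str
    containers: List[str]  # one or more containers that run together
    cpu_request: int       # hypothetical unit: whole CPU cores


@dataclass
class Node:
    name: str
    cpu_capacity: int
    pods: List[Pod] = field(default_factory=list)

    def free_cpu(self) -> int:
        return self.cpu_capacity - sum(p.cpu_request for p in self.pods)


def schedule(pods: List[Pod], nodes: List[Node]) -> Dict[str, str]:
    """Toy control plane: place each pod on the node with the most free CPU."""
    placement: Dict[str, str] = {}
    for pod in pods:
        candidates = [n for n in nodes if n.free_cpu() >= pod.cpu_request]
        if not candidates:
            raise RuntimeError(f"no node can fit pod {pod.name}")
        target = max(candidates, key=lambda n: n.free_cpu())
        target.pods.append(pod)
        placement[pod.name] = target.name
    return placement


nodes = [Node("node-1", cpu_capacity=4), Node("node-2", cpu_capacity=2)]
pods = [
    Pod("web", ["nginx"], cpu_request=2),
    Pod("api", ["app", "sidecar"], cpu_request=2),
    Pod("db", ["postgres"], cpu_request=2),
]
placement = schedule(pods, nodes)
print(placement)
```

The real scheduler weighs many more factors, but the division of labor is the same: the control plane makes placement decisions, nodes supply capacity, and pods are the unit being placed.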

Multiple technologies and solutions are available in the industry to manage, deploy, and orchestrate containers. The following are the most popular:

Kubernetes: One of the most popular container orchestration and management frameworks, originally developed by Google, Kubernetes is a platform for creating, deploying, and managing distributed applications. You can download Kubernetes and access its documentation at https://kubernetes.io.

Nomad: This container management and orchestration platform is by HashiCorp. You can download and obtain detailed information about it at www.nomadproject.io.

Apache Mesos: This distributed systems kernel provides native support for launching containers with Docker and AppC images. You can download Apache Mesos and access its documentation at https://mesos.apache.org.

Docker Swarm: This container cluster management and orchestration system is integrated with the Docker Engine. You can access the Docker Swarm documentation at https://docs.docker.com/engine/swarm.

Microservices and APIs

The term microservices describes how applications can be deployed as a collection of services that are highly maintainable and testable and independently deployable. Microservices are organized around business capabilities and enable the rapid, frequent, and reliable delivery of large, complex applications. Most microservices developed and deployed by organizations are based on containers.

Application programming interfaces (APIs), such as RESTful APIs, are the interfaces through which developers and other software can interact with an application. Microservices use APIs to communicate among themselves. These APIs can be used for inter-microservice communication, and they can also be used to expose data and application functionality to third-party systems.

Microservices and APIs need to be secured. Traditional segmentation strategies do not work well in these virtual and containerized environments. The ability to enforce network segmentation in container and VM environments is what people call micro-segmentation. Micro-segmentation is enforced at the VM level or between containers, regardless of VLAN or subnet boundaries. Micro-segmentation solutions need to be “application aware.” This means that the segmentation process starts and ends with the application itself.

Most micro-segmentation environments apply a zero-trust model. This model dictates that users cannot talk to applications and that applications cannot talk to other applications unless a defined set of policies permits them to do so.
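The default-deny behavior of a zero-trust model can be sketched in Python as follows. This is an illustrative model only, not the policy format of any particular micro-segmentation product. The workload names, ports, and the is_permitted function are hypothetical.

```python
from typing import NamedTuple, Set


class Rule(NamedTuple):
    source: str
    destination: str
    port: int


# Hypothetical policy: only these application-to-application flows are allowed.
ALLOWED: Set[Rule] = {
    Rule("web-frontend", "orders-api", 443),
    Rule("orders-api", "orders-db", 5432),
}


def is_permitted(source: str, destination: str, port: int) -> bool:
    """Default deny: traffic passes only if an explicit rule permits it."""
    return Rule(source, destination, port) in ALLOWED


print(is_permitted("web-frontend", "orders-api", 443))
print(is_permitted("web-frontend", "orders-db", 5432))
```

Notice that the rules name applications rather than VLANs or subnets, and that the front end cannot reach the database directly because no rule says it can.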

Infrastructure as Code

Modern applications and deployments (on-premises and in the cloud) require scalability and integration with numerous systems, APIs, and network components. This complexity introduces risks, including network configuration errors that can cause significant downtime and network security challenges. Consequently, networking functions such as routing, optimization, and security have also changed. The next generation of hardware and software components in enterprise networks must support both the rapid introduction and rapid evolution of new technologies and solutions. Network infrastructure solutions must keep pace with the business environment and support modern capabilities that help drive simplification within the network.

These elements have fueled the creation of software-defined networking (SDN). SDN was originally created to decouple control from the forwarding functions in networking equipment. This decoupling allows software to centrally manage and “program” the hardware and virtual networking appliances that perform forwarding. Organizations are adopting a framework often referred to as infrastructure as code, which is the process of managing and provisioning computer data centers through machine-readable definition files rather than physical hardware configuration or interactive configuration tools.

In traditional networking, three different “planes,” or elements, allow network devices to operate: the management, control, and data planes. The control plane has always been logically separate from the data plane, but each device ran its own control plane. There was no central brain (or controller) that controlled the configuration and forwarding. Routers, switches, and firewalls were managed by the command-line interface (CLI), graphical user interfaces (GUIs), and custom Tcl scripts.
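One way to picture infrastructure as code is as a comparison between a definition file and what is actually running. The following Python sketch assumes the desired and actual states can each be represented as a dictionary keyed by resource name. The resource names, attributes, and the plan function are hypothetical and do not reflect the behavior of any specific tool.

```python
from typing import Dict, List, Tuple

Resource = Dict[str, object]


def plan(desired: Dict[str, Resource],
         actual: Dict[str, Resource]) -> List[Tuple[str, str]]:
    """Compare the definition with reality and list the changes needed."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
    return actions


# Hypothetical definition, as it might be loaded from a file in version control.
desired = {
    "web-vm": {"size": "small", "count": 2},
    "db-vm": {"size": "large", "count": 1},
}
# Hypothetical state reported by the environment.
actual = {
    "web-vm": {"size": "small", "count": 1},
    "old-vm": {"size": "small", "count": 1},
}

print(plan(desired, actual))
```

Because the definition is plain data, it can be reviewed, versioned, and reapplied, which is what helps reduce the configuration errors mentioned earlier in this section.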

Note

Tcl is a high-level, general-purpose, interpreted, dynamic programming language. It was designed with the goal of being very simple but powerful. Tcl casts everything into the mold of a command, even programming constructs like variable assignment and procedure definition.

For instance, the firewalls were managed by standalone web portals, while the routers were managed by the CLI. SDN introduced the notion of a centralized controller. The SDN controller has a global view of the network, and it uses a common management protocol to configure the network infrastructure devices.

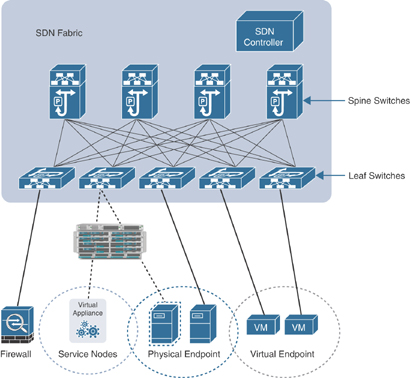

In the topology shown in Figure 10-7, an SDN controller is used to manage a network infrastructure in a data center.

FIGURE 10-7 A High-Level SDN Implementation

The SDN controller can also calculate reachability information from many systems in the network, and it pushes a set of flows inside the switches. The hardware uses the flows to do the forwarding. Here, you can see a clear transition from a distributed “semi-intelligent brain” approach to a “central and intelligent brain” approach.
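The “central brain” idea can be illustrated with a short Python sketch. It assumes the controller knows the whole topology as an adjacency list and uses a breadth-first search to pick the path with the fewest hops. The switch names and the functions shortest_path and build_flow_tables are hypothetical, and real controllers use protocols such as OpenFlow rather than Python dictionaries to install flows.

```python
from collections import deque
from typing import Dict, List, Optional

Topology = Dict[str, List[str]]


def shortest_path(topology: Topology, src: str, dst: str) -> List[str]:
    """Breadth-first search over the controller's global view of the network."""
    previous: Dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for neighbor in topology[node]:
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    if dst not in previous:
        return []  # destination is unreachable
    path: List[str] = []
    step: Optional[str] = dst
    while step is not None:
        path.append(step)
        step = previous[step]
    return path[::-1]


def build_flow_tables(topology: Topology) -> Dict[str, Dict[str, str]]:
    """For every switch, compute a destination-to-next-hop table to push down."""
    tables: Dict[str, Dict[str, str]] = {switch: {} for switch in topology}
    for src in topology:
        for dst in topology:
            if src != dst:
                path = shortest_path(topology, src, dst)
                if len(path) > 1:
                    tables[src][dst] = path[1]
    return tables


# Hypothetical four-switch topology.
topology: Topology = {
    "s1": ["s2", "s3"],
    "s2": ["s1", "s4"],
    "s3": ["s1", "s4"],
    "s4": ["s2", "s3"],
}
flow_tables = build_flow_tables(topology)
print(flow_tables["s1"])
```

Each switch receives only its own table and forwards accordingly; the path computation happens once, in the controller.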

Tip

An example of an open-source implementation of SDN controllers is the Open vSwitch (OVS) project using the OVS Database (OVSDB) management protocol and the OpenFlow protocol. Another example is the Cisco Application Policy Infrastructure Controller (Cisco APIC). Cisco APIC is the main architectural component and the brain of the Cisco Application Centric Infrastructure (ACI) solution. OpenDaylight (ODL) is another popular open-source project that is focused on the enhancement of SDN controllers to provide network services across multiple vendors. OpenDaylight participants also interact with the OpenStack Neutron project and attempt to solve the existing inefficiencies. OpenDaylight interacts with Neutron via a northbound interface and manages multiple interfaces southbound, including the OVSDB and OpenFlow.

SDN changed a few things in the management, control, and data planes. However, the big change was in the control and data planes in software-based switches and routers (including virtual switches inside hypervisors). For instance, the Open vSwitch project started some of these changes across the industry.

SDN provides numerous benefits in the area of the management plane. These benefits are in both physical switches and virtual switches. SDN is now widely adopted in data centers.

Another concept introduced a few years ago is software-defined visibility (SDV). SDV is a concept similar to SDN but is designed and optimized to provide intelligent visibility of what’s happening across an organization’s network or in the cloud. Typically, RESTful APIs are used in an SDV environment to orchestrate and automate security operations and monitoring tasks across the organization.

Serverless Architecture

Another popular architecture is the serverless architecture. Be aware that serverless does not mean that no server is involved. Instead, it means that you use cloud platforms to host and/or develop your code, and the servers are someone else’s responsibility. For example, you might have a serverless app that is distributed in a cloud provider like AWS, Azure, or Google Cloud Platform.

Serverless is a cloud computing execution model where the cloud provider (AWS, Azure, Google Cloud, and so on) dynamically manages the allocation and provisioning of servers. Serverless applications run in stateless containers that are ephemeral and event-triggered (fully managed by the cloud provider). AWS Lambda is one of the most popular serverless platforms in the industry. Figure 10-8 shows a “function” or application in AWS Lambda.

FIGURE 10-8 AWS Lambda

In AWS Lambda, you run code without provisioning or managing servers, and you pay only for the compute time you consume. When you upload your code, Lambda takes care of everything required to run and scale your application (offering high availability and redundancy).
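A serverless function is typically just a handler that receives an event and returns a result. The following minimal Python sketch uses the handler(event, context) signature that AWS Lambda expects for Python functions. The name field in the event and the shape of the response are assumptions made for illustration, and the last two lines simulate an invocation locally rather than showing how the platform calls the function.

```python
import json
from typing import Any, Dict


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Stateless handler: everything it needs arrives in the event."""
    name = event.get("name", "world")  # hypothetical event field
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local simulation of a single invocation; no context object is needed here.
response = lambda_handler({"name": "Security+"}, None)
print(response["body"])
```

The handler keeps no state between calls, which is what lets the provider start and stop the underlying containers as demand changes.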

Figure 10-9 summarizes the evolution of computing from physical servers to virtual machines, containers, and serverless solutions. Virtual machines and containers could be deployed on-premises or in the cloud.

FIGURE 10-9 The Evolution of Computing from Physical Servers to Serverless

Services Integration

Cloud services integration is a series of tools and technologies used to connect different systems, applications, code repositories, and physical or virtual network infrastructure to allow real-time exchange of data and processes. Once integrated, cloud services can be accessed by multiple systems over the Internet or, in the case of a private cloud, over a private network.

One of the main goals of cloud services integration is to break down data silos, increase visibility, and improve business processes. These services integrations are crucial for most organizations needing to share data among cloud-based applications. Cloud services integration has grown in popularity over the years as the use of IaaS, PaaS, and SaaS solutions continues to increase.

Resource Policies

Resource policies involve creating, assigning, and managing rules over the cloud resources that systems (virtual machines, containers, and so on) or applications use. Resource policies are also often created to make sure that resources remain compliant with corporate standards and service-level agreements (SLAs). Cloud service providers offer different solutions (such as Microsoft Azure Policy) that help you maintain good cloud usage governance by evaluating deployed resources and scanning for those not compliant with the policies that have been defined.

Let’s look at a resource policy example. You could create a policy to allow only a certain size of virtual machines in the cloud environment. Once that policy is implemented, it would be enforced when you’re creating and updating resources as well as over existing resources. Another example is a policy that can contain conditions and rules that determine if a storage account being deployed is within a certain set of criteria (size, how it is shared, and so on). Such a policy could deny all storage accounts that do not adhere to the defined set of rules.
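The two examples above can be sketched in a few lines of Python. This is only an illustration of the evaluate-and-deny idea, not the syntax of Azure Policy or any other provider's policy engine; the `Resource` fields, the allowed sizes, and the 500 GB limit are all hypothetical values.

```python
from dataclasses import dataclass

# Hypothetical corporate standards, chosen only for illustration.
ALLOWED_VM_SIZES = {"small", "medium"}
MAX_STORAGE_GB = 500


@dataclass
class Resource:
    name: str
    kind: str                     # "vm" or "storage"
    size: str = ""                # VM size label (used for VMs)
    capacity_gb: int = 0          # requested capacity (used for storage accounts)
    publicly_shared: bool = False


def violations(resource):
    """Return the list of rules the resource breaks (empty if compliant)."""
    found = []
    if resource.kind == "vm" and resource.size not in ALLOWED_VM_SIZES:
        found.append(f"VM size '{resource.size}' is not allowed")
    if resource.kind == "storage":
        if resource.capacity_gb > MAX_STORAGE_GB:
            found.append(f"storage exceeds {MAX_STORAGE_GB} GB")
        if resource.publicly_shared:
            found.append("public sharing is not allowed")
    return found


def is_deployment_allowed(resource):
    """Enforcement at create/update time: deny anything with a violation."""
    return not violations(resource)


def scan_existing(resources):
    """Compliance scan: map each noncompliant resource name to its violations."""
    report = {}
    for resource in resources:
        found = violations(resource)
        if found:
            report[resource.name] = found
    return report
```

The same `violations` function backs both the deployment-time check and the scan of existing resources, which mirrors how a single policy definition is applied in both situations.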

Transit Gateway

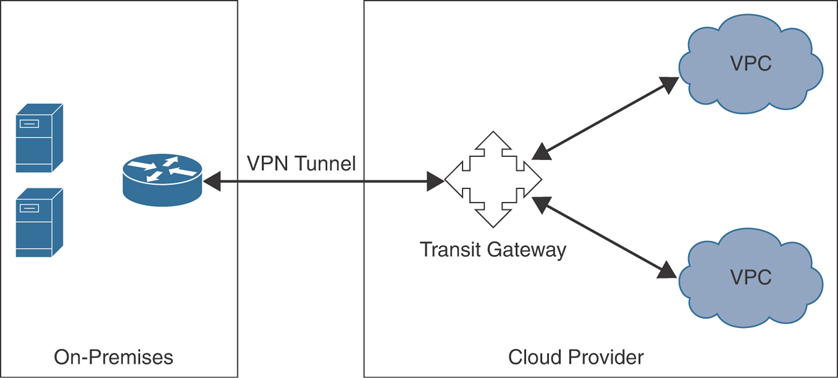

A transit gateway is a system that can be used to interconnect a virtual private cloud (VPC) and on-premises networks. Transit gateways can also be used to connect cloud-hosted applications to software-defined wide-area network (SD-WAN) devices.

Note

SD-WAN is a solution that simplifies the management of a wide-area network (WAN) implementation by using software to decouple the control mechanism from the underlying network infrastructure devices.

Figure 10-10 illustrates a transit gateway implementation. On-premises network infrastructure and systems are connected to the transit gateway using a VPN tunnel (typically using IPsec). The transit gateway then connects those resources to different VPCs.

FIGURE 10-10 A Transit Gateway
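One way to picture the hub role of a transit gateway is as a route table that maps destination address ranges to attachments. The following minimal sketch uses Python's standard `ipaddress` module to choose the most specific matching route. It is a conceptual model only, not any cloud provider's API; the CIDR blocks and attachment names (`vpc-a`, `vpc-b`, `vpn-onprem`) are hypothetical.

```python
import ipaddress

# Hypothetical attachments: two VPCs and one IPsec VPN to the on-premises site.
ROUTES = {
    "10.1.0.0/16": "vpc-a",
    "10.2.0.0/16": "vpc-b",
    "192.168.0.0/16": "vpn-onprem",
}


def next_attachment(destination_ip, routes=ROUTES):
    """Return the attachment whose CIDR block most specifically matches the address."""
    address = ipaddress.ip_address(destination_ip)
    best = None  # (network, attachment) of the longest matching prefix so far
    for cidr, attachment in routes.items():
        network = ipaddress.ip_network(cidr)
        if address in network:
            if best is None or network.prefixlen > best[0].prefixlen:
                best = (network, attachment)
    # No matching route means the traffic is not forwarded in this model.
    return best[1] if best else None
```

In this model, a packet from the on-premises network to `10.2.5.9` would arrive over the VPN attachment and be handed to `vpc-b`, so neither VPC needs its own direct connection to the on-premises site.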

Virtual Machine (VM) Sprawl Avoidance and VM Escape Protection

Virtualization is a solution that allows you to create IT services using hardware resources that can be shared among different installations of operating systems and applications. The individual virtual systems that run different operating systems and applications are called virtual machines (VMs). The hypervisor is the computer software that is used to create and run VMs. Organizations have used virtualization technologies for decades. There have been a few challenges in virtualized environments, however. One of those issues is VM sprawl. In the following sections, you learn the challenges of VM sprawl and how to potentially avoid it. You also learn about VM escape attacks and how to protect your organization against them.

Understanding and Avoiding VM Sprawl

Regardless of the size of your organization, you may find that too many virtual machines (or physical servers, for that matter) have been deployed. The result is unnecessary complexity and inefficiency when those resources aren’t used appropriately or are no longer needed. This unnecessary complexity also introduces security risks. VM sprawl (otherwise known as virtualization sprawl) occurs when an organization can no longer effectively control and manage all the VMs on a network or in the cloud.

VM sprawl can happen in rapidly growing networks when numerous VMs are set up by different business units for dispersed applications, especially when temporary VMs are created and never purged. For instance, developers or a department might set up VMs as part of a testing environment; however, if the VMs aren’t disposed of properly when they are no longer needed, your IT department can end up with too much to manage.

VM sprawl can impact your organization in a few different ways:

Cost

Management complexity and overhead

Introduction of additional security risks

Unnecessary disk space, CPU, and memory consumption

Potential data protection challenges

One of the best ways to achieve VM sprawl avoidance is to use a robust, automated solution that audits your VMs. This automated audit allows you to identify VMs that are no longer needed and delete them. Additionally, you should have a good process to clean up orphaned VM snapshots and to end unnecessary backups or replication of VMs that are no longer needed. It is also very important to use a consistent naming convention so that you can keep track of these VMs in your infrastructure or in the cloud.
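As a rough illustration of such an automated audit, the following Python sketch flags VMs that break a naming convention, have no recorded owner, or have been idle for too long. The inventory fields, the naming pattern, and the 30-day threshold are assumptions made for this example; a real audit would pull its inventory from your hypervisor or cloud provider.

```python
import re
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical naming convention: <team>-<env>-<purpose>-<nn>, e.g. "fin-prod-db-01".
NAME_PATTERN = re.compile(r"[a-z]+-(dev|test|prod)-[a-z]+-\d{2}")
IDLE_LIMIT = timedelta(days=30)  # assumed threshold for "no longer needed"


@dataclass
class VM:
    name: str
    owner: str        # empty string means nobody claims the VM
    last_used: date


def audit(vms, today):
    """Map each suspicious VM name to the reasons it was flagged."""
    findings = {}
    for vm in vms:
        reasons = []
        if not NAME_PATTERN.fullmatch(vm.name):
            reasons.append("name breaks convention")
        if not vm.owner:
            reasons.append("no owner")
        if today - vm.last_used > IDLE_LIMIT:
            reasons.append(f"idle for more than {IDLE_LIMIT.days} days")
        if reasons:
            findings[vm.name] = reasons
    return findings
```

The sketch only reports candidates. Deleting a flagged VM should remain a reviewed step, because an idle or oddly named VM may still be needed.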

Protecting Against VM Escape Attacks

In a virtualized environment, the hypervisor controls all aspects of VM operation. Securing a hypervisor is crucial but also fairly complex. One attack against the hypervisor is known as hyperjacking. In this type of attack, an attacker or malware compromises one VM and then attacks the hypervisor. When a guest VM performs this type of attack, it is often called a VM escape attack. In a VM escape attack, the guest VM breaks out of its isolated environment and attacks the hypervisor or compromises other VMs hosted and controlled by the hypervisor. Figure 10-11 illustrates a VM escape attack.

FIGURE 10-11 VM Escape Attack

Consider the following to mitigate the risk of VM escape attacks:

Use a Type 1 hypervisor rather than a Type 2 hypervisor to reduce the attack surface. A Type 1 hypervisor runs directly on the physical server (“bare metal”), whereas a Type 2 hypervisor runs on top of a host operating system. Type 1 hypervisors have a smaller footprint and reduced complexity.

Harden the hypervisor’s configuration according to the vendor’s recommendations. For example, you can disable memory sharing between VMs running on the same hypervisor host.

Disconnect unused external physical hardware devices (such as USB drives).

Disable clipboard or file-sharing services.

Perform self-integrity checks at boot-up using technologies like a Trusted Platform Module (TPM) and Intel Trusted Execution Technology (TXT) to verify whether the hypervisor has been compromised.

Monitor and analyze hypervisor logs on an ongoing basis and look for indicators of compromise.

Keep up with your hypervisor vendor’s security advisories and apply security updates as soon as they are released.

Implement identity and access control across all tools, automated scripts, and applications using the hypervisor APIs.
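Several of the configuration items in the preceding list can be checked automatically. The following Python sketch compares a hypervisor's settings against a hardening baseline and reports any deviation. The setting names are hypothetical placeholders; real hypervisors expose vendor-specific keys, so treat this as an outline of the approach rather than a working integration.

```python
# Hypothetical setting names; real hypervisors use vendor-specific keys.
BASELINE = {
    "memory_sharing_between_vms": False,
    "clipboard_sharing": False,
    "file_sharing": False,
    "usb_passthrough": False,
    "measured_boot": True,
}


def hardening_gaps(config, baseline=BASELINE):
    """Return {setting: (expected, actual)} for every deviation from the baseline."""
    gaps = {}
    for setting, expected in baseline.items():
        actual = config.get(setting)  # a missing setting is reported as None
        if actual != expected:
            gaps[setting] = (expected, actual)
    return gaps
```

Running a check like this on a schedule, alongside log monitoring, helps you notice when a host drifts away from the hardened configuration.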

Chapter Review Activities

Use the features in this section to study and review the topics in this chapter.

Review Key Topics

Review the most important topics in the chapter, noted with the Key Topic icon in the outer margin of the page. Table 10-2 lists a reference of these key topics and the page number on which each is found.

Table 10-2 Key Topics for Chapter 10

| Key Topic Element | Description | Page Number |
|---|---|---|
| List | Listing the cloud computing service categories | 231 |
| Tip | Surveying NIST’s special publication (SP) 800-145 | 232 |
| List | Listing the different types of clouds used by organizations | 232 |
| Paragraph | Defining edge computing | 234 |
| Figure | Edge Computing | 235 |
| Paragraph | Defining fog computing | 235 |
| Paragraph | Understanding what thin clients are | 235 |
| Figure | Thin Client Accessing a Virtualized Desktop | 236 |
| Paragraph | Understanding what containers are | 236 |
| Figure | How Container Images Work | 237 |
| Paragraph | Defining microservices | 240 |
| Figure | A High-Level SDN Implementation | 242 |
| Paragraph | Understanding cloud services integration | 246 |
| Paragraph | Defining resource policies | 246 |
| Paragraph | Understanding what a transit gateway is | 246 |
| Figure 10-10 | A Transit Gateway | 247 |
| Section | Understanding and Avoiding VM Sprawl | 247 |
| Paragraph | Understanding what a VM escape attack is | 248 |
| Figure 10-11 | VM Escape Attack | 249 |

Define Key Terms

Define the following key terms from this chapter, and check your answers in the glossary:

infrastructure as a service (IaaS)

managed service provider (MSP)

managed security service providers (MSSPs)

application programming interfaces (APIs)

software-defined networking (SDN)

Review Questions

Answer the following review questions. Check your answers with the answer key in Appendix A.

1. What system can be used to interconnect a virtual private cloud (VPC) and on-premises networks?

2. ___________ are used in the process of creating, assigning, and managing rules over the cloud resources that systems (virtual machines, containers, and so on) or applications use.

3. What is a series of tools and technologies used to connect different systems, applications, code repositories, and physical or virtual network infrastructure to allow the real-time exchange of data and processes?

4. AWS Lambda is an example of which type of cloud service architecture?

5. OpenDaylight (ODL) is an example of a(n) _________ controller.