Chapter 22

Applying Cybersecurity Solutions to the Cloud

This chapter covers the following topics related to Objective 3.6 (Given a scenario, apply cybersecurity solutions to the cloud) of the CompTIA Security+ SY0-601 certification exam:

Cloud security controls

High availability across zones

Resource policies

Secrets management

Integration and auditing

Storage

Permissions

Encryption

Replication

High availability

Network

Virtual networks

Public and private subnets

Segmentation

API inspection and integration

Compute

Security groups

Dynamic resource allocation

Instance awareness

Virtual private cloud (VPC) endpoint

Container security

Solutions

CASB

Application security

Next-generation secure web gateway (SWG)

Firewall consideration in a cloud environment

Cost

Need for segmentation

Open Systems Interconnection (OSI) layers

Cloud native controls vs. third-party solutions

In this chapter you learn how to apply cybersecurity solutions to the cloud. We start with an overview of cloud security controls, digging deeper into high availability across zones, resource policies, and secrets management. You also gain an understanding of integration and auditing in the cloud, as well as storage permissions, encryption, replication, and high availability. We then dig in to network-specific cloud security controls such as virtual networks, public and private subnets, segmentation, and API inspection and integration. Additionally, you learn the compute side of cloud security controls, including security groups, dynamic resource allocation, instance awareness, virtual private cloud (VPC) endpoints, and container security. The chapter then discusses solutions such as CASB, application security, next-generation secure web gateway (SWG), and firewall considerations in a cloud environment, such as cost, need for segmentation, and Open Systems Interconnection (OSI) layers. Finally, we briefly discuss cloud native controls vs. third-party solutions.

“Do I Know This Already?” Quiz

The “Do I Know This Already?” quiz enables you to assess whether you should read this entire chapter thoroughly or jump to the “Chapter Review Activities” section. If you are in doubt about your answers to these questions or your own assessment of your knowledge of the topics, read the entire chapter. Table 22-1 lists the major headings in this chapter and their corresponding “Do I Know This Already?” quiz questions. You can find the answers in Appendix A, “Answers to the ‘Do I Know This Already?’ Quizzes and Review Questions.”

Table 22-1 “Do I Know This Already?” Section-to-Question Mapping

| Foundation Topics Section | Questions |
|---|---|
| Cloud Security Controls | 1–4 |
| Solutions | 5–7 |
| Cloud Native Controls vs. Third-Party Solutions | 8 |

Caution

The goal of self-assessment is to gauge your mastery of the topics in this chapter. If you do not know the answer to a question or are only partially sure of the answer, you should mark that question as wrong for purposes of the self-assessment. Giving yourself credit for an answer you correctly guess skews your self-assessment results and might provide you with a false sense of security.

1. In cloud computing environments, which type of policy would be used to control access to CPU and memory allocation and the like?

Resource policies

Key policies

Wireless policies

None of these answers are correct.

2. Which of the following is utilized by a cloud computing environment to handle API keys?

Private key

Secrets management tool

Web key

All of these answers are correct.

3. In cloud computing environments, what term is used for storage instances?

Buckets

Servers

Instances

Containers

4. In cloud computing environments, which type of subnet would have a route to the Internet?

Private subnet

Internet subnet

Cloud subnet

Public subnet

5. Which of the following is a tool used to control access to cloud-based environments?

SCOR

IAM

VPC

CASB

6. Which of the following solutions would help enable remote worker access more efficiently?

IAM

SWG

CASB

None of these answers are correct.

7. Which Open Systems Interconnection (OSI) layer do many cloud-based firewalls focus on?

Presentation

Application

Network

Session

8. Which type of cloud control is typically provided by the actual cloud-computing environment vendor?

Third-party

Cloud native

Commercial

Retail

Foundation Topics

Cloud Security Controls

Almost everyone these days is using the cloud or deploying hybrid solutions to host applications. The reason is that many organizations are looking to transition from capital expenditure (CapEx) to operational expenditure (OpEx). The majority of today’s enterprises operate in a multicloud environment. It is obvious, therefore, that cloud computing security is more important than ever.

A multicloud environment is one in which an enterprise uses more than one cloud platform (including at least two public clouds), and each delivers a specific application or service. A multicloud can be composed of public, private, and edge clouds to achieve the enterprise’s end goals.

Note

Cloud computing security includes many of the same functionalities as traditional IT security. This includes protecting critical information from theft, data exfiltration, and deletion, as well as privacy.

Security Assessment in the Cloud

When performing penetration testing in the cloud, you must first understand what you can and cannot do. Most cloud service providers (CSPs) have detailed guidelines on how to perform security assessments and penetration testing in the cloud. Regardless, organizations face many potential threats when moving to a cloud model. For example, although your data is in the cloud, it must reside in a physical location somewhere. Your cloud provider should agree in writing to provide the level of security required for your customers.

Understanding the Different Cloud Security Threats

The following are questions to ask a cloud service provider before signing a contract for services:

Who has access? Access control is a key concern because insider attacks are a huge risk. Anyone who has been approved to access the cloud is a potential hacker, so you want to know who has access and how they were screened. Even without malice, problems can arise: an employee can leave and you then discover that you don’t have the password, or the cloud service gets canceled because the bill didn’t get paid.

What are your regulatory requirements? Organizations operating in the United States, Canada, or the European Union (EU) have many regulatory requirements that they must abide by (for example, ISO/IEC 27002, EU-U.S. Privacy Shield Framework, ITIL, FedRAMP, and COBIT). You must ensure that your cloud provider can meet these requirements and is willing to undergo certification, accreditation, and review.

Note

Federal Risk and Authorization Management Program (FedRAMP) is a United States government program and certification that provides a standardized approach to security assessment, authorization, and continuous monitoring for cloud products and services. FedRAMP is mandatory for U.S. federal agency cloud deployments and service models at the low-, moderate-, and high-risk impact levels. Cloud offerings such as Cisco Webex, Duo Security, Cisco Cloudlock, and others are FedRAMP certified. You can obtain additional information about FedRAMP from www.fedramp.gov and www.cisco.com/c/en/us/solutions/industries/government/federal-government-solutions/fedramp.html.

Do you have the right to audit? This particular item is no small matter in that the cloud provider should agree in writing to the terms of the audit. With cloud computing, maintaining compliance could become more difficult to achieve and even harder to demonstrate to auditors and assessors. Of the many regulations touching on information technology, few were written with cloud computing in mind. Auditors and assessors might not be familiar with cloud computing generally or with a given cloud service in particular.

Note

Division of compliance responsibilities between cloud provider and cloud customer must be determined before any contracts are signed or service is started.

What type of training does the provider offer its employees? This is a rather important item to consider because people will always be the weakest link in security. Knowing how your provider trains its employees is an important item to review.

What type of data classification system does the provider use? Questions you should be concerned with here include what data classification standard is being used and whether the provider even uses data classification.

How is your data separated from other users’ data? Is the data on a shared server or a dedicated system? A dedicated server means that your information is the only thing on the server. With a shared server, the amount of disk space, processing power, bandwidth, and so on is limited because others are sharing this device. If it is shared, the data could potentially become comingled in some way.

Is encryption being used? Encryption should be discussed. Is it being used while the data is at rest and in transit/motion? You will also want to know what type of encryption is being used. For example, there are big technical differences between DES (no longer considered secure) and AES (secure). For both of these algorithms, however, the basic questions are the same: Who maintains control of the encryption keys? Is the data encrypted at rest in the cloud, in transit/motion, or both?

What are the service-level agreement (SLA) terms? The SLA serves as a contracted level of guaranteed service between the cloud provider and the customer that specifies what level of services will be provided.

What is the long-term viability of the provider? How long has the cloud provider been in business, and what is its track record? If it goes out of business, what happens to your data? Will your data be returned and, if so, in what format?

Will the provider assume liability in the case of a breach? If a security incident occurs, what support will you receive from the cloud provider? While many providers promote their services as being unhackable, cloud-based services are an attractive target to hackers.

What is the disaster recovery/business continuity plan (DR/BCP)? Although you might not know the physical location of your services, it is physically located somewhere. All physical locations face threats such as fire, storms, natural disasters, and loss of power. In case of any of these events, how will the cloud provider respond, and what guarantee of continued services is it promising?

Finally, you must ask what happens to your information after your contract with the cloud service provider ends.

Note

Insufficient due diligence is one of the biggest issues when moving to the cloud. Security professionals must verify that issues such as encryption, compliance, incident response, and so forth are all worked out before a contract is signed.

Cloud Computing Attacks

Because cloud-based services are accessible via the Internet, they are open to any number of attacks. As more companies move to cloud computing, look for hackers to follow. Some of the potential attack vectors that criminals might attempt include the following:

Denial of service (DoS): DoS and distributed denial-of-service (DDoS) attacks remain a threat.

Distributed denial-of-service (DDoS) attacks: Some security professionals have argued that the cloud is more vulnerable to DDoS attacks because it is shared by many users and organizations, which also makes any DDoS attack much more damaging than a DoS attack.

Session hijacking: This attack occurs when the attacker can sniff traffic and intercept traffic to take over a legitimate connection to a cloud service.

DNS attacks: These attacks are made against the DNS infrastructure and include DNS poisoning attacks and DNS zone transfer attacks.

Cross-site scripting (XSS): This input validation attack has been used by adversaries to steal user cookies that can be exploited to gain access as an authenticated user to a cloud-based service. Attackers also have used these vulnerabilities to redirect users to malicious sites.

Shared technology and multitenancy concerns: Cloud providers typically support a large number of tenants (their customers) by leveraging a common and shared underlying infrastructure. This requires a specific level of diligence with configuration management, patching, and auditing (especially with technologies such as virtual machine hypervisors, container management, and orchestration).

Hypervisor attacks: If the hypervisor is compromised, all hosted virtual machines could also potentially be compromised. This type of attack could also compromise systems and likely multiple cloud consumers (tenants).

Virtual machine (VM) attacks: Virtual machines are susceptible to several of the same traditional security attacks as a physical server. However, if a virtual machine is susceptible to a VM escape attack, this raises the possibility of attacks across the virtual machines. A VM escape attack occurs when the attacker can manipulate the guest-level VM to attack its underlying hypervisor, other VMs, and/or the physical host.

Cross-site request forgery (CSRF): This attack is in the category of web-application vulnerability and related attacks that have also been used to steal cookies and for user redirection. CSRF leverages the trust that the application has in the user. For instance, if an attacker can leverage this type of vulnerability to manipulate an administrator or a privileged user, this attack could be more severe than an XSS attack.

SQL injection: This attack exploits vulnerable cloud-based applications that allow attackers to pass SQL commands to a database for execution.

Session riding: Many organizations use this term to describe a cross-site request forgery attack. Attackers use this technique to transmit unauthorized commands by riding an active session using an email or malicious link to trick users while they are currently logged in to a cloud service.

On-path (formerly known as man-in-the-middle) cryptographic attacks: This attack is carried out when attackers place themselves in the communication path between two users. Any time attackers can do this, there is the possibility that they can intercept and modify communications.

Side-channel attacks: Attackers could attempt to compromise the cloud system by placing a malicious virtual machine in close proximity to a target cloud server and then launching a side-channel attack.

Authentication attacks (insufficient identity, credentials, and access management): Authentication is a weak point in hosted and virtual services and is frequently targeted. There are many ways to authenticate users, such as factors based on what a person knows, has, or is. The mechanisms used to secure the authentication process and the method of authentication used are frequent targets of attackers.

API attacks: Often application programming interfaces (APIs) are configured insecurely. An attacker can take advantage of API misconfigurations to modify, delete, or append data in applications or systems in cloud environments.

Known exploits leveraging vulnerabilities against infrastructure components: As you already know, no software or hardware is immune to vulnerabilities. Attackers can leverage known vulnerabilities against virtualization environments, Kubernetes, containers, authentication methods, and so on.
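To make the SQL injection item in the preceding list more concrete, the following is a minimal sketch using Python's built-in sqlite3 module and an in-memory database. The table layout and the function names (find_user_unsafe, find_user_safe, demo_connection) are assumptions made for illustration only; they do not come from any particular cloud application.

```python
import sqlite3


def find_user_unsafe(conn, username):
    # Vulnerable: user input is concatenated directly into the SQL text.
    query = "SELECT id, name FROM users WHERE name = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Safer: the driver binds the value as data, never as SQL syntax.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


def demo_connection():
    # Hypothetical two-row table used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)",
                     [("alice",), ("bob",)])
    return conn
```

With an input such as x' OR '1'='1, the concatenated query returns every row in the table, whereas the parameterized query treats the whole string as a name and returns nothing.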

Tip

The Cloud Security Alliance (CSA) has a working group tasked to define the top cloud security threats. You can find details at https://cloudsecurityalliance.org/research/working-groups/top-threats. The CSA’s Top Threats Deep Dive whitepaper is posted at the following GitHub Repository: https://github.com/The-Art-of-Hacking/h4cker/blob/master/SCOR/top-threats-to-cloud-computing-deep-dive.pdf. You also can find additional best practices and cloud security research articles at https://cloudsecurityalliance.org/research/artifacts/.

High Availability Across Zones

In cloud computing environments, high availability is addressed using the concept of regions or zones. Deploying the components of a high-availability environment across multiple zones greatly reduces the risk of an outage. Each cloud service vendor has its own way of achieving high availability across zones. For instance, Amazon Web Services (AWS) can use Elastic Load Balancing to distribute traffic across multiple availability zones, and Microsoft Azure likewise uses the concept of availability zones that are configured in different locations within the Azure infrastructure. There is also the concept of applying high availability across regions in each of the various cloud infrastructures.

Note

Regions are separate geographic locations where infrastructure as a service (IaaS) cloud service providers maintain their infrastructure. Within regions are availability zones, which are isolated locations in which cloud resources can be deployed. Resources are typically not replicated across regions by default, but they can be replicated across availability zones. This setup provides load-balancing capabilities as well as high availability.
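The idea of spreading a workload across zones can be sketched in a few lines of Python. The following is a simplified illustration, not any vendor's load balancer: the ZoneBalancer class, its method names, and the zone and instance names are all hypothetical. It rotates requests across instances in every zone and skips any zone that has been marked as down.

```python
from itertools import cycle


class ZoneBalancer:
    """Round-robin over instances, skipping zones marked unhealthy."""

    def __init__(self, instances_by_zone):
        # Example input: {"zone-a": ["web-1"], "zone-b": ["web-2"]}
        self.unhealthy_zones = set()
        self._order = cycle(
            (zone, inst)
            for zone, insts in instances_by_zone.items()
            for inst in insts
        )
        self._total = sum(len(v) for v in instances_by_zone.values())

    def mark_zone_down(self, zone):
        self.unhealthy_zones.add(zone)

    def mark_zone_up(self, zone):
        self.unhealthy_zones.discard(zone)

    def next_instance(self):
        # Look at each instance at most once per call.
        for _ in range(self._total):
            zone, inst = next(self._order)
            if zone not in self.unhealthy_zones:
                return inst
        raise RuntimeError("no healthy zone available")
```

If zone-a fails, every request is served from zone-b until zone-a is marked healthy again. An outage occurs only when all zones are down, which is why deploying across multiple zones reduces risk.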

Resource Policies

Resource policies in cloud computing environments are meant to control access to a set of resources. A policy is deployed to manage the access to the resource itself. An example of this in Google Cloud is the use of constraints. These constraints are essentially a set of restrictions that outline the actions that can be controlled within the cloud environment. Often they are defined in the form of an organization policy. Policies are associated with an identity or a resource, and the policy itself defines the permissions that are applied to that identity or resource. An identity-based policy would be utilized for a user, group, or role. This policy would define what that specific identity has the permissions to do. Resource-based policies, as the name suggests, are associated with a specific resource available in the environment. The resource policy itself would define who has access to the resource and what they can do with it.
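The difference between identity-based and resource-based policies can be illustrated with a short Python sketch. The policy format, the principal names, and the is_allowed function are hypothetical, and the rule that a grant from either policy type is sufficient is a simplifying assumption; real cloud providers apply more detailed evaluation logic.

```python
# Identity-based policies: attached to a user, group, or role.
IDENTITY_POLICIES = {
    "alice": [{"action": "storage.read", "resource": "reports-bucket"}],
}

# Resource-based policies: attached to the resource itself.
RESOURCE_POLICIES = {
    "reports-bucket": [{"principal": "bob", "action": "storage.read"}],
}


def is_allowed(principal, action, resource):
    """Return True if either policy type grants the request (default deny)."""
    for stmt in IDENTITY_POLICIES.get(principal, []):
        if stmt["action"] == action and stmt["resource"] == resource:
            return True
    for stmt in RESOURCE_POLICIES.get(resource, []):
        if stmt["principal"] == principal and stmt["action"] == action:
            return True
    return False
```

Here alice can read the bucket because of a policy attached to her identity, bob can read it because of a policy attached to the bucket, and any request that matches neither policy is denied.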

Integration and Auditing

The process of cloud integration essentially means “moving to the cloud.” It is the migration of computing services from a traditional on-premises deployment to a cloud services deployment. Of course, there are many different approaches to cloud integration. Part of this integration process should include an auditing phase. This is where the organization that is “moving to the cloud” would evaluate the impact that this move will have on its current security controls as well as the privacy of data being integrated into the cloud. Each of the concerns that is highlighted from this audit phase should be addressed before moving forward with the integration process.

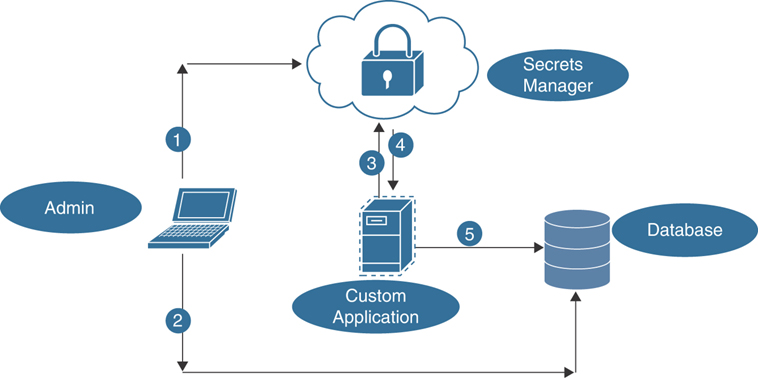

Secrets Management

In cloud computing environments, the management of things such as application programming interface (API) keys, passwords, and certificates is typically handled by some kind of secrets management tool. It provides a mechanism for managing the access and auditing the secrets used in the cloud environment. Each cloud service environment has its version of a secrets manager. In fact, the one used in Google Cloud Service is called Secret Manager. You can find additional information about the specific features of Google’s Secret Manager at https://cloud.google.com/secret-manager. To access the database in Figure 22-1, the custom application will make a call to the secrets manager for the named secret. The secrets manager can manage the task of rotating the secrets and programmatically managing retrieval based on API calls. The secrets management system decrypts the secret and securely transmits the credentials to the custom application (not the database) using transport layer encryption. The application uses the credential received to access the database.

FIGURE 22-1 A Secrets Manager
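The flow in Figure 22-1 can be sketched as a small in-memory model. The SecretsManager class and its put, rotate, and get methods are hypothetical and are not the API of Google's Secret Manager or any other product. The sketch also leaves out the encryption at rest and transport layer encryption that a real service provides.

```python
import secrets


class SecretsManager:
    """In-memory stand-in for a cloud secrets manager (hypothetical API)."""

    def __init__(self):
        self._versions = {}   # secret name -> list of values, newest last
        self.audit_log = []   # (caller, secret name, version) tuples

    def put(self, name, value):
        self._versions.setdefault(name, []).append(value)

    def rotate(self, name):
        # Generate a new random value and make it the current version.
        self.put(name, secrets.token_urlsafe(32))

    def get(self, name, caller):
        versions = self._versions[name]
        # Record who retrieved which version, for later auditing.
        self.audit_log.append((caller, name, len(versions)))
        return versions[-1]


def connect_to_database(manager):
    # The application requests the secret by name at connect time, so a
    # rotated credential is picked up without changing application code.
    password = manager.get("db-password", caller="custom-app")
    return {"user": "app", "password": password}
```

Because the application never stores the password itself, rotating the secret in the manager is enough to change the credential it uses, and the audit log shows each retrieval.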

Storage

The following sections cover secure storage in a cloud computing environment and how it is implemented. We provide an overview of how permissions are handled, as well as the importance of encryption, replication, and high availability when it comes to cloud storage security.

Permissions

In a cloud computing environment, object storage containers are referred to as buckets. The access to these buckets is controlled by an Identity and Access Management (IAM) policy. Storage is offered as a service on the various cloud storage platforms. This service gives customers the capability to manage things like permissions and user access. Typically, the IAM service has predefined roles configured that can be applied to the resources. An example of a storage role in Google Cloud Storage is the Storage Object Creator. This role provides the user with permissions to create objects; however, it does not allow the user to view, delete, or replace the objects. Another example is the Storage Object Admin role. This role provides the user it is assigned to with full control over the objects.
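The way predefined roles translate into permissions can be sketched as follows. The role names come from the preceding paragraph, but the permission strings, the bucket and member names, and the can function are simplified assumptions; the actual predefined roles contain more permissions than shown here.

```python
# Simplified permission sets; real predefined roles contain more entries.
ROLE_PERMISSIONS = {
    "Storage Object Creator": {"objects.create"},
    "Storage Object Admin": {
        "objects.create", "objects.get", "objects.list", "objects.delete",
    },
}

# Hypothetical bindings: bucket name -> {member: role}
BUCKET_BINDINGS = {
    "uploads": {
        "ingest-service": "Storage Object Creator",
        "ops-admin": "Storage Object Admin",
    },
}


def can(member, permission, bucket):
    """Check whether the member's role on the bucket includes the permission."""
    role = BUCKET_BINDINGS.get(bucket, {}).get(member)
    return permission in ROLE_PERMISSIONS.get(role, set())
```

In this model, ingest-service can create objects in the uploads bucket but cannot view or delete them, whereas ops-admin can do all of these things. A member with no binding gets nothing.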

Encryption

Cloud storage buckets provide various options for encryption of the data stored. Typically, cloud computing environments encrypt data stored by default; however, it is a good idea to verify this and explore the options such as user-provided keys. Cloud encryption is the process of encoding and transforming data before it is transferred to the cloud. On the cloud storage provider side, the process encrypts the data, and the encryption keys are passed to the users for use in decryption. A sample method of storage using multiple layers of keys is Google’s envelope encryption technique. This process involves encrypting a key with another key.
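The structure of envelope encryption (encrypting a key with another key) can be shown with a short sketch. Note the major assumption: because the Python standard library has no AES implementation, the _keystream_xor helper below is a toy cipher built from SHA-256 and is NOT secure. It stands in for a vetted cipher such as AES-GCM from a real cryptographic library. The function names and the dictionary layout are also hypothetical and do not reflect Google's implementation.

```python
import hashlib
import secrets


def _keystream_xor(key, nonce, data):
    # Toy stream cipher for illustration ONLY; not secure. Use a vetted
    # authenticated cipher from a real cryptographic library in practice.
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        block = hashlib.sha256(key + nonce + counter.to_bytes(8, "big"))
        stream += block.digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


def envelope_encrypt(kek, plaintext):
    dek = secrets.token_bytes(32)  # data encryption key, one per object
    data_nonce = secrets.token_bytes(16)
    key_nonce = secrets.token_bytes(16)
    return {
        "ciphertext": _keystream_xor(dek, data_nonce, plaintext),
        "data_nonce": data_nonce,
        # The DEK is itself encrypted with the key encryption key (KEK).
        "wrapped_dek": _keystream_xor(kek, key_nonce, dek),
        "key_nonce": key_nonce,
    }


def envelope_decrypt(kek, envelope):
    # Unwrap the DEK with the KEK, then decrypt the data with the DEK.
    dek = _keystream_xor(kek, envelope["key_nonce"], envelope["wrapped_dek"])
    return _keystream_xor(dek, envelope["data_nonce"], envelope["ciphertext"])
```

Only the wrapped data key is stored alongside the data. Whoever controls the key encryption key controls access to every object, which is why the question of who maintains the keys matters so much.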

Replication

Some cloud computing environments provide a mechanism to replicate stored data between regions/zones. This provides for higher levels of redundancy and lower risk of outage or data loss. This increases the availability that we have discussed throughout the book and ties directly back to the CIA triad mentioned early on. In some cases you are actually able to specify which geographic locations you would like your data stored and replicated to. Each cloud service vendor has a different offering when it comes to options for replication of data. The main point to keep in mind when developing a replication strategy is how it will increase the durability of the data you are replicating and your overall availability.

High Availability

In cloud computing environments, the level of high availability is based on the storage class you choose and pay for. Each vendor has different specifications for the classes of storage it offers. This should be determined based on an analysis of the risk while in the integration process. This is an area where you do not want to compromise. For instance, hosting a mission-critical application that is supported by a back-end database would warrant paying for a higher level of storage availability. On the other hand, you might just be hosting some user data that is not as critical to your overall business continuity. This is an area where you might allow for a lower level of availability controls.

Network

Cloud computing environments have many options for network configuration. Each vendor has various options for segmentation and security of networks. This section begins with a look at virtual networks as well as public and private subnets. The section concludes with an overview of segmentation and API inspection and integration.

Virtual Networks

The use of the various virtual network solutions depends on how you intend to segment and/or connect the various networks. There are many use cases for virtual networks. They could be used to connect virtual machines that are dispersed between physical locations. They could also be used to set aside a segmented network for the purposes of containing specific traffic inside an enclave.

Public and Private Subnets

In cloud computing environments, the concept of a public subnet is one that has a route to the Internet. A private subnet would be a subnet in the cloud environment that does not have a route to the Internet. Obviously, a private subnet that cannot reach the Internet is limited in that way; however, many times this is the actual use case that is intended—containing traffic on a specific virtual network. On the other hand, a public subnet that is able to connect to the Internet provides additional capabilities; however, it also has a much higher risk based on the additional attack surface and exposure. A private subnet will typically be used in a situation where there is no need for the devices on that subnet to access the Internet. This makes it possible to minimize the risk of compromise by isolating these network devices. For instance, say you have a public-facing application that utilizes a back-end database. The front-end application server needs access to the Internet, but the back-end database server does not. So it’s best to segment it off to a private subnet.
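The public/private distinction comes down to the subnet's routes, which can be checked programmatically. The following sketch assumes a simplified route table format and assumes that Internet gateway targets are identified by an igw- prefix, a naming convention borrowed from AWS. The route entries and the is_public_subnet function are hypothetical.

```python
import ipaddress


def is_public_subnet(route_table):
    """Treat a subnet as public if its default route targets an Internet gateway."""
    for route in route_table:
        dest = ipaddress.ip_network(route["destination"])
        # A prefix length of 0 (0.0.0.0/0 or ::/0) is the default route.
        if dest.prefixlen == 0 and route["target"].startswith("igw-"):
            return True
    return False


# Front-end application subnet: local traffic plus a route to the Internet.
FRONT_END_ROUTES = [
    {"destination": "10.0.0.0/16", "target": "local"},
    {"destination": "0.0.0.0/0", "target": "igw-example"},
]

# Back-end database subnet: local traffic only.
BACK_END_ROUTES = [
    {"destination": "10.0.0.0/16", "target": "local"},
]
```

A check like this can be useful during an audit to confirm that subnets holding back-end databases have not accidentally been given a route to the Internet.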

Segmentation

In cloud computing environments, segmentation can mean many things. There are also many ways to go about segmenting in these environments, based on the particular environment that you’re working with. Some examples are the use of virtual private clouds (VPCs), which are discussed shortly, and microsegmentation of applications. As mentioned, segmentation can be used to protect devices on the network that do not need access to other parts of the network, such as the application server and back-end database previously discussed. This is an example of segmentation and how it can be implemented.

API Inspection and Integration

Most cloud computing environments are able to provide API inspection and integration. This allows for better automation of workflow deployment. These integrations typically need to be enabled in the environment to utilize them.

Compute

The following sections cover the concepts of compute. Some of the related concepts include security groups as well as dynamic resource allocation. We also cover instance awareness and finish up with an overview of virtual private cloud endpoint and container security.

Security Groups

Cloud-based security groups provide a mechanism for specifying separate areas where different security controls can be applied. This is similar to the use of security zones on a firewall. Security groups can be used to provide defense-in-depth to cloud-based environments. The primary function of a security group is to control the inbound and outbound traffic on the various interfaces. Typically, these interfaces would be public or private. On the public side, it is especially important to have a stricter policy for allowing inbound traffic. Most cloud providers have prebuilt security groups that can be applied. One example would be a security group that allows SSH access on port 22/TCP. Often, this is required for management purposes.
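The way a security group filters inbound traffic can be sketched with Python's ipaddress module. The Rule class, the inbound_allowed function, and the MGMT_GROUP rule set are hypothetical, and the addresses come from ranges reserved for documentation. The sketch assumes a default-deny model in which traffic is allowed only if it matches a rule.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    protocol: str      # "tcp" or "udp"
    port: int
    source_cidr: str   # network allowed to connect


def inbound_allowed(rules, protocol, port, source_ip):
    """Allow only traffic that matches a rule; everything else is denied."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        r.protocol == protocol
        and r.port == port
        and addr in ipaddress.ip_network(r.source_cidr)
        for r in rules
    )


# Management access: SSH (22/TCP) from an administrative network only.
MGMT_GROUP = [Rule("tcp", 22, "203.0.113.0/24")]
```

Restricting the SSH rule to a known administrative network, rather than allowing it from any address, is one way to apply the stricter inbound policy that public interfaces call for.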

Dynamic Resource Allocation

What makes a cloud computing environment efficient is the way it is able to allocate resources based on demand. Without this capability, a cloud-based computing environment would not be feasible. Dynamic resource allocation is the concept of providing resources to tenants based on the demand. This approach provides customers with the ability to scale resources up and down more efficiently. This allows for better resource utilization. One downside to this, however, is that there can be latency in the dynamic allocation process. This is typically not a problem unless the specific application or platform that is running on these resources is sensitive to dynamic resource allocation latency. It is best to consult the application or platform vendor to verify that this is supported before deploying in a cloud environment. A failure due to unsupported cloud deployment could result in increased downtime, which affects the availability of your systems.
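One common way to scale on demand is to size the number of instances so that average utilization stays near a target. The following sketch shows that calculation. The desired_instances function, the 60 percent target, and the instance limits are assumptions chosen for illustration rather than any provider's defaults.

```python
import math


def desired_instances(current, cpu_percent, target_percent=60,
                      min_instances=1, max_instances=10):
    """Size the fleet so that average CPU utilization moves toward the target."""
    if current < 1:
        raise ValueError("current must be at least 1")
    # Scale the current count by the ratio of observed to target utilization.
    wanted = math.ceil(current * cpu_percent / target_percent)
    # Keep the result within the configured limits.
    return max(min_instances, min(max_instances, wanted))
```

For example, four instances running at 90 percent CPU would scale out to six, while four instances at 15 percent would scale in to one. The new capacity is not available instantly, which is the allocation latency that some applications are sensitive to.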

Instance Awareness

Instance awareness is a concept used by cloud access security broker (CASB) solutions to enforce policies on specific parts of an application or instance. To properly enforce policy, a CASB needs to distinguish between instances of the same cloud service, such as corporate use of Dropbox and a home user’s Dropbox access.
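An instance-aware policy can be pictured as a lookup keyed on both the service and the instance. In the following sketch, the POLICY table, the tenant names, and the casb_decision function are hypothetical. How a real CASB identifies the instance (for example, from the account or tenant in the traffic) is product specific and is not modeled here.

```python
# Hypothetical policy table keyed by (service, instance). The instance is the
# tenant or account the traffic belongs to, not just the application name.
POLICY = {
    ("dropbox", "corp-tenant"): {"upload": True, "download": True},
    ("dropbox", "*"): {"upload": False, "download": True},
}


def casb_decision(service, instance, action):
    """Prefer an instance-specific rule, then the service-wide default."""
    rules = POLICY.get((service, instance)) or POLICY.get((service, "*"), {})
    # Anything without an explicit allow is blocked.
    return "allow" if rules.get(action, False) else "block"
```

With this table, uploads to the corporate Dropbox tenant are allowed, whereas uploads to any other Dropbox instance are blocked even though the application is the same.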

Virtual Private Cloud Endpoint

A virtual private cloud endpoint is a virtual endpoint device that is able to access VPC services in your cloud computing environment. Various types of VPC endpoint devices can be utilized in your environment based on the vendor. Utilizing a VPC endpoint enables you to connect to other supported services without the requirement of using a virtual private network (VPN) or Internet gateway. Consider the following best practices when using a VPC:

Utilize availability zones to provide for high availability.

Utilize policies to control VPC access.

Implement ACLs and security groups where possible.

Enable logging of API activity as well as flow logs for traffic information.

Container Security

Various tools are available for securing containerized cloud computing environments today. Some are native to the actual cloud computing environment, and some are third-party solutions that run on those environments. In today’s computing environment, it is necessary to have a good handle on container security. But what does that mean? First of all, visibility of what containers are running in your environment is essential. From there, you need visibility into what is actually running inside the container and what packages that container is made up of. Additionally, you need to be able to easily identify when a package inside of a container is out of date or vulnerable and then mitigate that vulnerability.

As mentioned, each vendor has a different mechanism or manager for performing these functions. If containers are going to be used in your cloud environment (you should assume they are), then you must plan for a full audit during integration. Typically, the tools for managing container security are a part of the container orchestrator tool. For instance, Google Kubernetes Engine utilizes the Google Cloud IAM for controlling and managing access.

It is also important to capture the audit logs from the orchestration engine. This is something that is done by default in Google Kubernetes Engine. Additionally, it allows you to apply network control policy for managing containers and pods. These are just some of the considerations you should keep in mind when utilizing containers in your cloud environment.
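Identifying out-of-date packages inside a container amounts to comparing an inventory of installed versions against known fixed versions. The following is a minimal sketch of that comparison. The package names, version numbers, and the FIXED_IN advisory table are invented for illustration, and the simple dotted-number version parsing is an assumption that real scanners do not rely on.

```python
def parse_version(text):
    # Assumes purely numeric dotted versions such as "1.4.2".
    return tuple(int(part) for part in text.split("."))


# Hypothetical advisory feed: package name -> first fixed version.
FIXED_IN = {"libexample": "1.5.0", "webframework": "2.0.3"}


def vulnerable_packages(image_packages):
    """Return packages in an image that are older than the first fixed version."""
    findings = []
    for name, version in sorted(image_packages.items()):
        fixed = FIXED_IN.get(name)
        if fixed and parse_version(version) < parse_version(fixed):
            findings.append((name, version, fixed))
    return findings
```

Running a check like this against every image in the environment gives you the visibility described above: which containers include a vulnerable package and which version would mitigate it.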

Summary of Cloud Security Controls

Table 22-2 provides a summary of the key cloud security controls covered in the preceding sections.

Table 22-2 Key Cloud Security Controls

| Cloud Security Control | Description |
|---|---|
| High availability across zones | In cloud computing environments, high availability is addressed using the concept of regions or zones. Deploying the components of a high availability environment across multiple zones greatly reduces the risk of an outage. |
| Resource policies | Resource policies in cloud computing environments are meant to control access to a set of resources. A policy is deployed that will manage the access to the resource itself. |
| Integration and auditing | The process of cloud integration essentially means “moving to the cloud.” It is the migration of computing services from a traditional on-premises deployment to a cloud services deployment. Part of this integration process should include an auditing phase. This is where the organization that is “moving to the cloud” would evaluate the impact that this move will have on its current security controls as well as the privacy of data being integrated into the cloud. |
| Secrets management | In cloud computing environments, the management of API keys, passwords, and certificates is typically handled by some kind of secrets management tool. It provides a mechanism for managing the access and auditing the secrets used in the cloud environment. |
| Storage | Permissions: In a cloud computing environment, storage is referred to as buckets. The access to these buckets is controlled by an Identity and Access Management (IAM) policy. Encryption: Cloud storage buckets provide various options for encryption of the data stored. Typically, cloud computing environments encrypt data stored by default. However, it is a good idea to verify this and explore the options such as user-provided keys. Replication: Some cloud computing environments provide a mechanism to replicate your stored data between regions/zones. This provides for higher levels of redundancy and lower risk of outage or data loss. In some cases, you are actually able to specify which geographic locations you would like your data stored and replicated to. High availability: In cloud computing environments, the level of high availability is based on the storage class you choose and pay for. Each vendor has different specifications for the classes of storage it offers. |
| Network | Virtual networks: In cloud computing environments, there are many options for network configuration. Each vendor has various options for segmentation and security of networks. Public and private subnets: In cloud computing environments, the concept of a public subnet is one that has a route to the Internet. A private subnet would be a subnet in the cloud environment that does not have a route to the Internet. Segmentation: In cloud computing environments, segmentation can mean many things. There are also many ways to go about segmenting in these environments, based on the particular environment that you are working with. Some examples are the use of virtual private clouds and microsegmentation of applications. API inspection and integration: Most cloud computing environments are able to provide API inspection and integration. This allows for better automation of workflow deployment. These integrations typically need to be enabled in the environment to utilize them. |
| Compute | Security groups: Cloud-based security groups provide a mechanism for specifying separate areas where different security controls can be applied, similar to the use of security zones on a firewall. Security groups can be used to provide defense-in-depth to cloud-based environments. Dynamic resource allocation: What makes a cloud computing environment efficient is the way it is able to allocate resources based on demand. Without this ability, a cloud-based computing environment would not be feasible. Dynamic resource allocation is the concept of providing resources to tenants based on the demand. Instance awareness: Instance awareness is a concept used by cloud access security broker solutions to enforce policies on specific parts of an application or instance. Virtual private cloud endpoint: A VPC endpoint is a virtual endpoint device that is able to access VPC services in your cloud computing environment. Various types of VPC endpoint devices can be utilized in your environment based on the vendor. Container security: Various tools are available for securing containerized cloud computing environments today. Some are native to the actual cloud computing environment, and some are third-party solutions and run on those environments. |

Solutions

The following sections cover cloud security solutions, including CASB, application security, and next-generation Secure Web Gateway (SWG). Additionally, we dive into firewall considerations in a cloud environment, such as cost, need for segmentation, and Open Systems Interconnection (OSI) layers.

CASB

A cloud access security broker (CASB) is a tool that organizations utilize to control access to and use of cloud-based computing environments. Many cloud-based tools are available for corporate and personal use. The flexible access nature of these tools makes them a threat to data leak prevention and the like. It is very easy to make the mistake of copying a file that contains sensitive data into the wrong folder and making it available to the world. This is the type of scenario that CASB solutions help to mitigate. Of course, a number of CASB vendors are out there. Each has its own way of doing things. When securing your environment, you should first evaluate what your use cases will be for a CASB. For instance, how many cloud applications are you utilizing in your corporate environment? Make a list of each, how they are used, and by which part of the organization. You most likely will find that there are many more than you expected. When you have that information available, you can continue your journey to find a CASB vendor that will work best for your environment.
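The inventory exercise described above (which cloud applications are in use, how, and by which part of the organization) can be captured in a simple data structure before you talk to any vendor. The following is a minimal sketch. The `CloudAppUse` record, its fields, and the sample entries are hypothetical names chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class CloudAppUse:
    """One observed use of a cloud application (hypothetical record layout)."""
    app: str
    department: str
    purpose: str
    handles_sensitive_data: bool


def summarize_by_department(inventory: List[CloudAppUse]) -> Dict[str, List[str]]:
    """Map each department to the sorted list of cloud apps it uses."""
    summary = defaultdict(set)
    for entry in inventory:
        summary[entry.department].add(entry.app)
    return {dept: sorted(apps) for dept, apps in sorted(summary.items())}


def sensitive_apps(inventory: List[CloudAppUse]) -> List[str]:
    """List the apps that touch sensitive data in at least one department."""
    return sorted({entry.app for entry in inventory if entry.handles_sensitive_data})


# Made-up sample entries for illustration.
inventory = [
    CloudAppUse("file-sharing", "Finance", "quarterly reports", True),
    CloudAppUse("chat", "Engineering", "team messaging", False),
    CloudAppUse("file-sharing", "Engineering", "design documents", True),
    CloudAppUse("crm", "Sales", "customer records", True),
]

print(summarize_by_department(inventory))
print(sensitive_apps(inventory))
```

A list like this gives you concrete use cases to bring to a CASB evaluation, such as which applications carry sensitive data and therefore need the tightest policy.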

Note

Cloud access security brokers help organizations extend on-premises security solutions to the cloud. They are solutions that act as an intermediary between users and cloud service providers.

Application Security

The move to cloud-based applications has happened or is happening in almost every organization these days. It provides many advantages, such as flexibility, ease of access, and more efficient use of resources. The downside is that it also poses some significant security concerns for these organizations. The traditional way of managing applications no longer applies. SecOps organizations need to find a way to allow access to users yet provide them with the security that they would expect from an on-prem application. The term on-prem (or on-premises) refers to any application or service that is hosted in the organization’s physical facilities, not in the cloud. For some organizations, keeping applications on-premises is a strict requirement. Most organizations, however, are looking for ways to implement proper application security in the cloud. This is where solutions like a cloud access security broker come into play.

Aside from utilizing solutions like CASB, companies should follow additional best practices when it comes to application security. First is visibility. You need to know what applications are being accessed and used in your environment by your user base. You can check by simply monitoring your user Internet traffic to see which cloud-based applications they are using. You then need to make known and implement policies regarding what applications are allowed by your organization and how best practices on handling sensitive data can be applied. Additionally, traditional security controls such as malware protection, intrusion prevention, configuration management, and so on should be extended to your cloud-based environment. Many organizations are required to follow specific compliance when it comes to the security of their computing environment. This also applies to any cloud-based environment that they are using and should not be forgotten about. Any time you are implementing a security control on-premises, an overarching best practice is to always ask yourself “How are we doing this in the cloud?”
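The visibility step described above, monitoring user Internet traffic and comparing it with the applications your policy allows, might look like the following minimal sketch. It assumes you can export the requested URLs from a proxy or gateway log. The hostnames and the allowlist are placeholders, not real services.

```python
from collections import Counter
from typing import Iterable, List, Set
from urllib.parse import urlparse


def hosts_seen(urls: Iterable[str]) -> Counter:
    """Count requests per hostname found in a list of requested URLs."""
    counts = Counter()
    for url in urls:
        host = urlparse(url).hostname
        if host:  # skip entries that carry no hostname
            counts[host] += 1
    return counts


def unsanctioned_hosts(urls: Iterable[str], allowed: Set[str]) -> List[str]:
    """Return hostnames that appear in traffic but not in the allowlist."""
    return sorted(host for host in hosts_seen(urls) if host not in allowed)


# Placeholder traffic sample and policy for illustration.
observed_urls = [
    "https://storage.example.com/upload",
    "https://storage.example.com/list",
    "https://chat.example.net/rooms/42",
]
allowed_apps = {"storage.example.com"}

print(hosts_seen(observed_urls).most_common())
print(unsanctioned_hosts(observed_urls, allowed_apps))
```

The request counts show which applications are used most heavily, and the unsanctioned list is a starting point for either approving an application or communicating that it is not allowed.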

Next-Generation Secure Web Gateway

The concept of a next-generation Secure Web Gateway (SWG) is top of mind these days. At the time of this writing, we are in a global pandemic that has forced millions to work from home. For many years, the solution for providing employees a way to work from home was simply a remote-access VPN back into the office. This solution sent all of the traffic from the employees’ computers back through the corporate network, where it traversed the same security controls that would be in place if the employees were plugged into the corporate network. With the advent of cloud-based applications and storage, this solution is no longer the most efficient way of securing remote workers. This is where the SWG comes into play. A Secure Web Gateway enables you to secure your remote workers’ Internet access while not overloading the corporate Internet pipe. This approach is sometimes thought of as a cloud firewall. However, an SWG typically has many other protection mechanisms in place, including things like a CASB. One example of an SWG is Cisco Umbrella.

Firewall Considerations in a Cloud Environment

The following sections provide an overview of firewall considerations in a cloud environment. The topics we cover are cost, need for segmentation, and OSI layers.

Cost

The cost of implementing a cloud-based firewall is typically based on the amount of data processed. So, as you can imagine, this solution can become very expensive if not used efficiently. The first thing to determine is what kind of throughput you need. That starts with looking at your existing environment to determine what is actually being used. From there, you should also look at what kind of traffic makes up that bandwidth requirement. Many cloud-based firewall solutions allow you to send specific traffic through the firewall, whereas all other traffic goes directly to the Internet or takes an alternate route. This can help with reducing cost. Of course, the trade-off for this approach is that your implementation will be a bit more complex and might require more upfront cost. In the long run, however, it should save you money when it comes to cloud resources used.
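To make the trade-off concrete, the following sketch compares sending all traffic through a cloud-based firewall with sending only a selected fraction of it. The per-gigabyte rate, the traffic volume, and the function names are assumptions for illustration. Actual pricing models vary by vendor and often include other charges.

```python
def monthly_firewall_cost(
    gb_processed: float,
    rate_per_gb: float,
    fixed_monthly_fee: float = 0.0,
) -> float:
    """Estimate monthly cost as data processed times rate, plus any fixed fee."""
    return round(gb_processed * rate_per_gb + fixed_monthly_fee, 2)


def selective_inspection_cost(
    total_gb: float,
    inspected_fraction: float,
    rate_per_gb: float,
    fixed_monthly_fee: float = 0.0,
) -> float:
    """Estimate cost when only a fraction of traffic is steered to the firewall."""
    if not 0.0 <= inspected_fraction <= 1.0:
        raise ValueError("inspected_fraction must be between 0 and 1")
    return monthly_firewall_cost(
        total_gb * inspected_fraction, rate_per_gb, fixed_monthly_fee
    )


# Hypothetical numbers: 50,000 GB per month at 0.02 per GB processed.
all_traffic = monthly_firewall_cost(50_000, 0.02)
selected_only = selective_inspection_cost(50_000, 0.30, 0.02)

print(f"All traffic inspected: {all_traffic:.2f}")
print(f"30% of traffic inspected: {selected_only:.2f}")
```

With these made-up figures, inspecting only 30 percent of the traffic cuts the data-processing charge to 30 percent of the original. That saving has to be weighed against the extra design and routing work the selective approach requires.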

Need for Segmentation

Segmentation in a cloud environment is very important for the purposes of high availability, load balancing, and disaster recovery as well as containment of security breaches. By segmenting your cloud environment, you are able to better contain a breach and more quickly mitigate it. As mentioned in previous sections, there are many use cases for network segmentation. Understanding what is on your network is the first step in determining the need for segmentation. This requires visibility into physical and virtual devices as well as containers. Depending on what you find, network-level segmentation might be enough, or you might also need microsegmentation.
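One way to picture segmentation is as a set of named address ranges plus an explicit list of which segments may talk to each other. The sketch below uses Python's standard `ipaddress` module. The segment names, CIDR ranges, and allowed flows are assumptions for illustration, as is the choice to permit traffic within a single segment.

```python
import ipaddress
from typing import Optional

# Hypothetical segments and their address ranges.
SEGMENTS = {
    "web": ipaddress.ip_network("10.0.1.0/24"),
    "app": ipaddress.ip_network("10.0.2.0/24"),
    "db": ipaddress.ip_network("10.0.3.0/24"),
}

# Hypothetical policy: web may reach app, and app may reach db.
ALLOWED_FLOWS = {("web", "app"), ("app", "db")}


def segment_of(ip: str) -> Optional[str]:
    """Return the name of the segment containing this address, if any."""
    address = ipaddress.ip_address(ip)
    for name, network in SEGMENTS.items():
        if address in network:
            return name
    return None


def is_flow_allowed(source_ip: str, destination_ip: str) -> bool:
    """Allow traffic within a segment or along an explicitly allowed pair."""
    source = segment_of(source_ip)
    destination = segment_of(destination_ip)
    if source is None or destination is None:
        return False  # unknown addresses are denied by default
    return source == destination or (source, destination) in ALLOWED_FLOWS


print(is_flow_allowed("10.0.1.5", "10.0.2.9"))  # web to app
print(is_flow_allowed("10.0.1.5", "10.0.3.7"))  # web straight to db
```

Because the web segment has no allowed flow to the database segment, a compromised web server cannot reach the database directly, which is the containment benefit described above. A microsegmentation policy would typically go further and restrict traffic within a segment as well.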

Open Systems Interconnection Layers

Cloud-based firewalls can work at all layers of the OSI model; however, in many cloud computing environments, the firewall is used at the application layer to control access and mitigate threats to applications being hosted by the cloud environment. For instance, with web applications being hosted in a cloud environment, there is a need for a Layer 7 firewall, otherwise known as a web application firewall (WAF). This type of firewall is able to identify and mitigate specific web application type attacks. A WAF can also be implemented to protect a container or as a container to protect a web application in the container environment. As you can see, there are many use cases for a firewall at various layers in the cloud environment.
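To illustrate what inspecting traffic at Layer 7 means, the following sketch checks a request's path and query string against a few signature patterns. It is a deliberately simplified illustration: the three patterns and the `inspect_request` function are assumptions made for this example and are not the rule set of any real WAF, which would use far more extensive and carefully tuned rules.

```python
import re
from typing import List
from urllib.parse import unquote_plus

# Simplified, illustrative signatures only.
SUSPICIOUS_PATTERNS = {
    "sql_injection": re.compile(
        r"(\bunion\b.+\bselect\b|\bor\b\s+1\s*=\s*1)", re.IGNORECASE
    ),
    "cross_site_scripting": re.compile(r"<\s*script", re.IGNORECASE),
    "path_traversal": re.compile(r"\.\./"),
}


def inspect_request(path_and_query: str) -> List[str]:
    """Return the names of the signatures matched by a request path and query."""
    # Decode URL encoding first so that encoded payloads are still visible.
    decoded = unquote_plus(path_and_query)
    return sorted(
        name
        for name, pattern in SUSPICIOUS_PATTERNS.items()
        if pattern.search(decoded)
    )


for request in (
    "/search?q=shoes",
    "/item?id=1%20OR%201=1",
    "/files?name=../../etc/passwd",
):
    matches = inspect_request(request)
    print(request, "->", matches if matches else "no match")
```

A firewall operating only at Layers 3 and 4 sees all three of these requests as ordinary traffic to the same web port. It takes application-layer inspection to tell them apart.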

Summary of Cybersecurity Solutions to the Cloud

Table 22-3 provides a summary of the key cloud security solutions covered in the preceding sections.

Table 22-3 Key Cloud Security Solutions

| Cloud Security Solution | Description |
|---|---|
| CASB | A cloud access security broker is a tool that is utilized in organizations to control access to and use of cloud-based computing environments. Many cloud-based tools are available for corporate and personal use. The flexible access nature of these tools makes them a threat to data leak prevention and the like. It is very easy to make the mistake of copying a file that contains sensitive data into the wrong folder and making it available to the world. This is the type of scenario that CASB solutions help to mitigate. |
| Next-generation Secure Web Gateway (SWG) | With the advent of cloud-based applications and storage, a VPN is no longer the most efficient way of securing remote workers. This is where the Secure Web Gateway comes into play. An SWG enables you to secure your remote workers’ Internet access while not overloading the corporate Internet pipe. It is sometimes thought of as a cloud firewall. However, an SWG typically has many other protection mechanisms in place, including things like CASB. |
| Firewall considerations in a cloud environment | Cost: The cost of implementing a cloud-based firewall is typically based on the amount of data processed. So, as you can imagine, this solution can become very expensive if not used efficiently. Need for segmentation: Segmentation in a cloud environment is very important for the purposes of high availability/disaster recovery as well as containment of security breaches. By segmenting your cloud environment, you are able to better contain a breach and more quickly mitigate it. Open Systems Interconnection (OSI) layers: Cloud-based firewalls can work at all layers of the OSI model; however, in many cloud computing environments, the firewall is used at the application layer to control access and mitigate threats to applications being hosted by the cloud environment. |

Cloud Native Controls vs. Third-Party Solutions

To put it simply, a cloud native control is typically provided by the actual cloud computing environment vendor. A non-cloud native control is provided by a third-party vendor. For instance, each cloud computing environment has security controls built into its platform. However, these controls might not be sufficient for all use cases. That is where third-party solutions come into play. Many companies today provide third-party solutions to supplement areas where cloud native controls are lacking. Most virtual machine–based controls can be deployed in any cloud computing environment; however, for a control to be considered native, it must go through various integration, testing, and certification efforts. Because each cloud computing environment is built differently and on different platforms, the controls must be adapted to work as efficiently as possible with the specifications of the environment. Often the decision between cloud native and third-party solutions comes down to the actual requirements of the use case and the availability of a solution that fits those requirements.

Chapter Review Activities

Use the features in this section to study and review the topics in this chapter.

Review Key Topics

Review the most important topics in the chapter, noted with the Key Topic icon in the outer margin of the page. Table 22-4 lists a reference of these key topics and the page number on which each is found.

Table 22-4 Key Topics for Chapter 22

| Key Topic Element | Description | Page Number |
|---|---|---|
| Section | High Availability Across Zones | 603 |
| Section | Resource Policies | 603 |
| Section | Integration and Auditing | 604 |
| Section | Secrets Management | 604 |
| Table 22-2 | Key Cloud Security Controls | 609 |
| Table 22-3 | Key Cloud Security Solutions | 614 |

Define Key Terms

Define the following key terms from this chapter, and check your answers in the glossary:

high availability across zones

API inspection and integration

virtual private cloud (VPC) endpoint

Review Questions

Answer the following review questions. Check your answers with the answer key in Appendix A.

1. In cloud computing environments, which type of policy would be used to control access to things like CPU and memory allocation?

2. What cloud security control is utilized by a cloud computing environment to handle API keys?

3. In cloud computing environments, what is a term used for storage instances?

4. Which type of subnet would have a route to the Internet?

5. What tool is used to control access to cloud-based environments?

6. What cloud security solution would help to enable remote worker access more efficiently?

7. Which Open Systems Interconnection (OSI) layer do cloud-based firewalls focus on?

8. Which type of cloud control is typically provided by the actual cloud computing environment vendor?