Chapter 9

Signal Processing on Complex Networks

Previous chapters discussed various complex network models and different methods to explore the structure of complex networks. This chapter discusses analysis and processing of data defined on complex networks. A huge amount of data is generated by each individual node in a large complex network. Data defined on a complex network can be visualized as a set of scalar values, known as a graph signal, supported by the structure of the network. Graph signals can arise from various scenarios such as information diffusion in social networks, functional activities in the brain, vehicular traffic in road networks, and temperature or pressure in sensor networks. In addition, in computer graphics, data defined on any geometrical shape described by polygon meshes can be formulated as a graph signal. Unlike time series or images, these signals have complex and irregular structure that requires novel processing techniques leading to the emerging field of graph signal processing.

The complex and irregular structures of the underlying graphs, as opposed to the regular structures in case of time series and image signals dealt with in classical signal processing, impose a great challenge in analysis and processing of graph signals. Fortunately, recent work toward the development of important concepts and tools extending classical signal processing theory, including sampling and interpolation on graphs, graph-based transforms, and graph filters, have enriched the field of graph signal processing. These tools have been utilized in solving a variety of problems such as signal recovery on graphs, clustering and community detection, graph signal denoising, and semi-supervised classification on graphs. This chapter gives an overview of concepts and tools that have been developed in the field of graph signal processing. The chapter concludes with a brief list of open research problem in the graph signal processing area.

9.1 Introduction to Graph Signal Processing

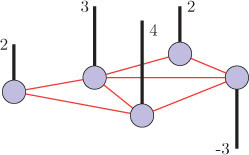

Graph signals are data values lying on the vertices of arbitrary graphs. An example graph signal is shown in Figure 9.1, where the vertical black lines going upward represent positive values and the black line going downward represents a negative value. Vertices of a graph represent entities, and the pairwise relationship between any two vertices is represented as an edge. A graph signal assigns a scalar value to each vertex based on some observations associated with the entities. For example, graph signals can be defined for temperatures within a geographical area, traffic capacities at hubs in a transportation network, or human behaviors in a social network. Graph signal processing (GSP) is concerned with modeling, representation, and processing of signals defined on graphs. Graph signal processing extends the concepts and tools that have been well developed in classical signal processing.

Figure 9.1. An example graph signal

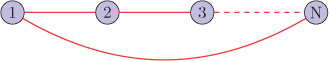

In classical signal processing [159][160], we deal with discrete-time signals or image signals. An example of a discrete-time signal is shown in Figure 9.2(a). The vertical black lines represent corresponding strength of the signal samples at regular time instants t1, t2,... , tN. A discrete-time signal can also be viewed as a signal lying on a regular structure that is a 1-D line graph, as shown in Figure 9.2(b). In this line graph, the nodes correspond to the time instants, and edges represent the time adjacency. In other words, the support of discrete-time signals is a regular line graph. Another class of signals that is considered in classical signal processing is image signals. A digital image contains rows and columns of pixels. Each pixel is assigned an intensity value to form an image signal. The image pixel lattice can be represented as an undirected graph that is a rectangular grid. Figure 9.3(a) shows an example image signal whose support is a 2-D rectangular grid, as shown in Figure 9.3(b). In this rectangular grid nodes correspond to the image pixels and the edges correspond to the pixel adjacency; that is, the pixels that are adjacent in space are connected by an edge. All the edge weights are assumed to be unity. Therefore, an image signal can be viewed as intensity values lying on a rectangular grid.

Figure 9.2. A discrete-time signal and its support

Figure 9.3. An example image signal and its support. Source: hannamariah/123RF.com

The support of discrete-time signals or image signals is unique and regular in nature: 1-D line graph for discrete-time signals and 2-D rectangular grid for image signals. Imagine signals supported on the structures shown in Figure 9.4, which are highly irregular in nature. For such signals, the classical signal processing techniques such as Fourier transform and wavelets cannot be applied because of the irregular nature of the underlying structure. Signals supported by these irregular structures, or graphs, are known as graph signals. The irregular support of graph signals is the main challenge for developing graph signal processing concepts. Many simple yet fundamental operations become extremely challenging when dealing with graph signals. The following difficulties may arise in the graph settings:

Figure 9.4. Example of supports of graph signals

The difficulty can be visualized from a very simple operation of translation. Translation operation is quite simple in classical settings. For example, to translate a signal left by 5 units, all the samples of the signal need to be advanced by 5 units. This operation is illustrated in Figure 9.5(a). In the figure, the solid curve represents the original signal, and the dashed curve represents the translated signal. However, if one wants to translate the graph signal shown in Figure 9.5(b) by, say, 1 unit, it is not straightforward. Since a node is connected to multiple nodes, it is not clear in which direction the sample value at the node should be moved. For example, node 5 is connected to nodes 2 and 4. To translate the sample value at node 5, should one move toward node 2 or node 4?

Figure 9.5. Illustration of translation

To downsample a discrete-time signal, alternate samples of the signal are discarded. However, to downsample the graph signal shown in Figure 9.1, which samples should be discarded?

Graph signal processing addresses these challenges by merging spectral graph theoretical concepts with computational harmonic analysis. The basic analogy between classical and graph signal processing is established through the eigenvalues and eigenvectors of graph Laplacian matrix, which carries a notion of frequency for graph signals and leads to the generalization of traditional signal processing techniques to the graph settings. For frequency analysis of graph signal analogous to classical Fourier transform, graph Fourier transform has been defined. Graph Fourier transform is not only useful in frequency analysis of graph signals, but has also been proved to be central in developing multiple concepts. For example, graph Fourier transform is used in constructing spectral graph wavelets that allow multiscale analysis of graph signals. Also, various operators such as convolution, translation, and modulation have been defined through graph Fourier transform. Throughout this chapter, we assume that the underlying graphs are undirected and have positive edge weights. The directed graphs will be considered in Chapter 10.

9.1.1 Mathematical Representation of Graph Signals

A graph is represented as ?=(?,?)

9.2 Comparison between Classical and Graph Signal Processing

Classical signal processing is well equipped with a number of powerful tools and concepts that have been developed over several decades. Some of them include Fourier transform, filtering, convolution, translation, modulation, dilation, and windowed Fourier transform. Graph signal processing aims to extend these powerful concepts to data residing on general graphs.

The important tools and operators for classical and graph signal processing are summarized in Table 9.1. The concept of frequency analysis of the classical case is extended to the graph setting using eigendecomposition of the graph Laplacian matrix. Complex exponentials are used as expansion basis in classical Fourier transform, whereas in graph settings, eigenvectors of the graph Laplacian are used as expansion basis for graph signals. This equivalent transform in graph settings is termed as graph Fourier transform (GFT).

Table 9.1. Classical vs. graph signal processing

Operator/Transform |

Classical Signal Processing |

Graph Signal Processing |

Fourier Transform |

• • Frequency: ω can take any real value • Fourier basis: Complex exponentials ejωt |

• • Frequency: Eigenvalues of the graph Laplacian (λℓ) • Fourier basis: Eigenvectors of the graph Laplacian (uℓ) |

Convolution |

• In time domain: x(t)*y(t)=∫∞−∞x(τ)y(t−τ)dτ • In frequency domain: ˆx(t)*y(t)=ˆx(ω)ˆy(ω) |

• Defined through graph Fourier transform • |

Translation |

• Can be defined using convolution • Tτ x(t) = x(t − τ) = x(t) ∗ δτ(t) |

• Defined through graph convolution • |

Modulation |

• Multiplication with the complex exponential • Mω x(t) = ejωt x(t) |

• Multiplication with the eigenvector of the graph Laplacian • |

Windowed Fourier Transform |

• Defined through translation and modulation of a window |

• Generalized translation and modulation operators are used |

The convolution product of two graph signals is defined through GFT. This analogy is drawn from the well-known classical convolution theorem according to which convolution in the time domain is equivalent to multiplication in the frequency domain.

Translating a classical signal is equivalent to convoluting the signal with an impulse. Similarly, a translation operator in graph settings has been defined through convolution product with an impulse.

In classical signal processing, modulation of a signal to a certain frequency is nothing but multiplication of the signal with the complex exponential at that frequency. Similarly, the modulation operator in a graph setting is achieved through multiplication of the eigenvector of the graph Laplacian.

9.2.1 Relationship between GFT and Classical DFT

Discrete Fourier transform (DFT) of a discrete-time signal ?∈ℂN

(9.2.1) ?=???N=[1111…11e−2πjNe−2πjN2e−2πjN3…e−2πjN(N−1)1e−2πjN2e−2πjN4e−2πjN6e−2πjN2(N−1)1e−2πjN3e−2πjN6e−2πjN9e−2πjN3(N−1)⋮⋮⋱⋮1e−2πjN(N−1)e−2πjN2(N−1)e−2πjN3(N−1)…e−2πjN(N−1)(N−1)].

A classical discrete-time periodic signal can be viewed as a graph signal living on a ring graph, as shown in Figure 9.6. The Laplacian matrix of a ring graph is a circulant matrix (see Appendix A.2.4 for details) and can be written as and one possible choice of the corresponding (complex) eigenvectors is1

Figure 9.6. A ring graph: support of a discrete-time signal

(9.2.2) ?=[2−10…0−1−12−1000−1200⋮⋱⋮0002−1−100−12].

The eigenvalues of the graph Laplacian of an N-node ring graph are

λℓ=2−2cos(2πℓN),∀ℓ={0,1,…,N−1}

?ℓ=1√N[1e2πjNℓe2πjN2ℓ⋮e2πjN(N−1)ℓ],∀ℓ={0,1,…,N−1}.

These eigenvectors are nothing but the columns of the DFT matrix given by Equation 9.2.1. Therefore, classical discrete-time Fourier basis are eigenvectors of the Laplacian of the undirected ring graph.

9.3 The Graph Laplacian as an Operator

As discussed in Chapter 2, the Laplacian matrix of a graph is defined as

where D is the degree matrix and W is the weight matrix of the graph. For a graph signal ?∈ℝN

where ?i

9.3.1 Properties of the Graph Laplacian

The graph Laplacian constitutes some importantpropertiesthatmakeitextremely useful in the analysis of graph signals. Properties of the Laplacian matrix that are utilized in graph signal processing are listed below. Note that the underlying graph is assumed to be undirected with positive edge weights.

The Laplacian matrix is symmetric.

The eigenvalues and eigenvectors of the Laplacian matrix are real. The symmetric nature of the Laplacian results in this property.

The Laplacian matrix is positive semidefinite; that is, all the eigenvalues of the Laplacian are greater than or equal to zero. It holds true only for graphs with positive edge weights.

The Laplacian matrix always has at least one zero eigenvalue. Moreover, for connected graphs it has only one zero eigenvalue. This property results from the fact that all the rows of the Laplacian matrix sum to zero, thereby ensuring at least one zero eigenvalue.

It has a complete set of orthonormal eigenvectors; that is, it forms a complete orthonormal basis.

The eigenvalues and eigenvectors of the graph Laplacian provide means for analyzing graph signals in the frequency domain, and thus make the graph Laplacian the basic building block of graph signal processing. Although the properties of eigenvalues and eigenvectors of the graph Laplacian have been extensively studied for the analysis of graph structure [161], [162], graph signal processing utilizes the oscillations of eigenvectors of the Laplacian matrix for analyzing signals defined on graphs.

Use of the graph Laplacian for frequency analysis of graph signals is limited to signals residing on undirected graphs. The primary advantage of using graph Laplacian for frequency analysis is that it results in simple analysis and gives precise analogy to classical signal processing. Another framework that uses directed Laplacian for analyzing data residing on directed graphs is discussed in Chapter 10.

9.3.2 Graph Spectrum

The set of eigenvalues of the graph Laplacian matrix is known as graph spectrum or Laplacian spectrum (of graphs). Graph Laplacian L is a real symmetric matrix, and it has a complete set of orthonormal eigenvectors [163]. L is also a positive semidefinite matrix; therefore, it has nonnegative eigenvalues. Moreover, for connected graphs, the multiplicity of zero eigenvalue is one. We denote the system of eigenvalues and eigenvectors of L as L as {λℓ,?ℓ}N−1ℓ=0, where λℓ is the eigenvalue of L and uℓ is the corresponding eigenvector. The graph spectrum of an N-node graph ? is represented as σ(?)={λ0,λ1,⋯,λN−1}, where 0 = λ0 ≤ λ1 ≤ λ2 ··· ≤ λN−1.

9.4 Quantifying Variations in Graph Signals

In classical signal processing, smoothness means adjacent signal coefficients have similar values. The concept of smoothness is useful in numerous applications. A widely used application is denoising; to eliminate noise from a corrupted a signal, the noisy signal is smoothened by using an averaging filter to remove noise. Gradient measures and total variation are some of the popular measures of smoothness (or variation) in classical signal processing. These concepts can easily be extended to graph settings.

Smoothness of a graph signal is defined with respect to the intrinsic structure of the weighted graph on which signal values lie. Smoothness of a graph signal with respect to the structure of the underlying graph is quantified using some discrete differential operators such as edge derivative and gradient.

The edge derivative of a graph signal f with respect to edge eij (which connects the vertices i and j) at vertex i is defined as

and the graph gradient of the signal f at vertex i is the N-dimensional vector containing all the edge derivatives at vertex i:

Note that the number of non-zero entries in the gradient vector at node i is the number of nodes connected to the node i.

The local variation of the signal f at vertex i is expressed as the ℓ2-norm of the gradient vector at node i:

where ?i is the set of vertices that are connected to the vertex i. Local variation provides a measure of local smoothness of f around vertex i, as it is small when the function f has similar values at i and all neighboring vertices of i.

For measuring global smoothness, the discrete p-Dirichlet form (Sp(f)) of f can be used, which is defined as

For p = 1, S1(f) is known as total variation of the graph signal with respect to the graph. Therefore, total variation can be written as

For p = 2, we have

Therefore, S2(f) is also known as the graph Laplacian quadratic form. When the signal f has similar values at neighboring vertices connected by an edge with a large weight—that is, when it is smooth—then S2(f) has a small value. Thus, the smoothness not only depends on the signal values but also depends on the underlying graph structure. The Laplacian quadratic form or 2-Dirichlet form can be used to order the graph frequencies (Section 9.5.1), interpolation on graphs,2 and denoising of noisy graphs signals. The values of graph Laplacian quadratic form of the Laplacian eigenvectors associated with lower eigenvalues are small as compared to the Laplacian eigenvectors associated with higher eigenvalues.

9.5 Graph Fourier Transform

Consider a graph signal f = [2, 3, 2, −3, 4]T shown in Figure 9.1. This signal can be alternatively represented as a linear combination of certain graph signals as

?=[232−34]=(?.??)[0.450.450.450.450.45]+(?.??)[0.600.370−0.37−0.60]+(?.??)[0.51−0.20−0.63−0.200.51]+(-?.??)[0.37−0.6000.60−0.37]+(?.??)[0.20−0.510.63−0.510.20].

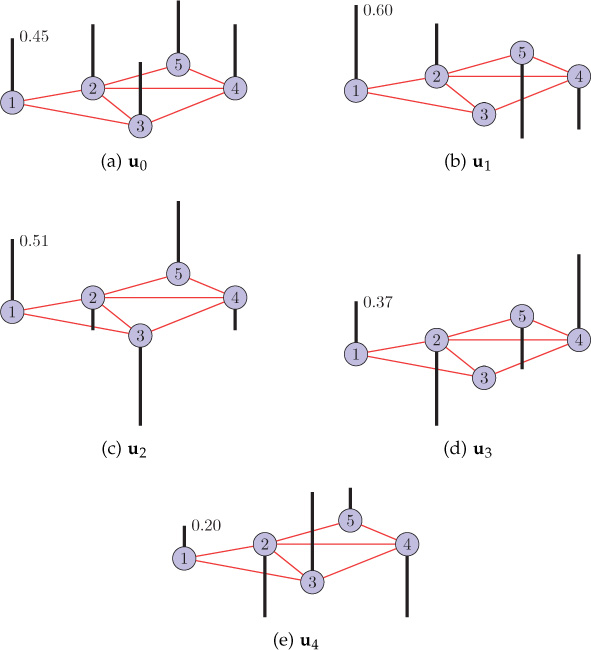

The constituent graph signals in the above sum are nothing but the eigenvectors of the graph Laplacian. These graph signals are known as the graph harmonics. What are the advantages in such a representation? What interesting information do we get from the coefficients of the graph harmonics in the sum? The eigenvectors of the graph Laplacian have a notion of frequency, and the coefficients in the above sum are known as GFT coefficients. The GFT is analogous to the classical Fourier transform and is extremely useful in analyzing graph signals.

The classical Fourier transform allows frequency analysis of discrete-time and image signals, and it lies at the heart of classical signal processing. It decomposes a signal as a linear combination of complex exponentials, which provides notion of frequency. Analogous to the classical Fourier transform, the GFT extends the notion of frequency to irregular graph settings. It provides us a means to extract the structural properties of a graph signal that are not visible in the vertex domain and become evident in the transform (frequency) domain. In graph settings, the notion of frequency is derived from the eigendecomposition of the graph Laplacian matrix. The eigenvalues of the graph Laplacian act as graph frequencies, and the eigenvectors of the graph Laplacian act as graph Fourier basis. It is important to note that the classical Fourier bases are fixed, however, graph Fourier bases depend on the network topology and change with the network structure.

Classical Fourier transform decomposes a signal as a linear combination of complex exponentials known as the Fourier basis. The classical Fourier transform of a function x(t) is given by

where ω is the angular frequency (in radians per second). For large values of ω, the oscillations of complex exponentials ejωt with respect to time t are rapid, and vice versa. Therefore, large values of ω correspond to high frequencies and small values of ω correspond to low frequencies. Furthermore, these complex oscillations are also the eigenfunctions of 1-D Laplace operator Δ, because

Drawing the analogy from the fact that the complex exponentials are the eigenfunctions of the 1-D Laplace operator, the eigenvectors of the graph Laplacian can be used as a graph Fourier basis. Therefore, the GFT ^? of a graph signal f can be defined as the expansion of f in terms of the eigenvectors of the graph Laplacian:

where ˆf(λℓ) is the GFT coefficient corresponding to eigenvalue λℓ and u*ℓ(n) is the complex conjugate of uℓ(n). 〈f1, f2〉 denotes the inner product of vectors f1 and f2. The eigenvectors of the graph Laplacian act as graph harmonics similar to complex exponentials in the classical Fourier transform. The inverse graph Fourier transform (IGFT) is given by

The set of GFT coefficients of a graph signal is called the spectrum of the graph signal. By using GFT in Equation (9.5.3) and IGFT in Equation (9.5.4) a graph signal is represented equivalently in two different domains: the vertex domain and the graph spectral domain. GFT is an energy preserving transform, and a signal can be considered as low-pass (or high-pass) if the energy of the GFT coefficients of that signal are mostly concentrated on the low-pass (or high-pass) eigenvectors.

To define GFT and IGFT in matrix form, consider U = [u0|u1| ... |uN−1] to be the matrix whose columns are the eigenvectors of L. Then the GFT of a graph signal f can be expressed as

In the transformed vector ^?=[ˆf(λ0)ˆf(λ1)…ˆf(λN−1)]T, ˆf(λℓ)=⟨?,?ℓ⟩ is the GFT coefficient corresponding to eigenvalue λℓ. Moreover, IGFT can be calculated as

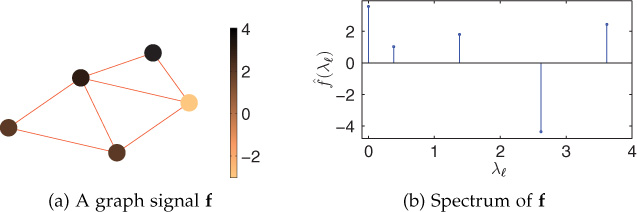

As an example to illustrate GFT, consider the graph signal shown in Figure 9.7(a). The corresponding GFT coefficients with respect to the graph frequencies are plotted in Figure 9.7(b), where the horizontal axis represents the graph frequencies (the eigenvalues of the graph Laplacian) and the vertical axis represents the magnitude of the GFT coefficients. As another example, consider a heat kernel defined on the Minnesota road network. In the graph spectral domain, the heat kernel is represented as ˆg(λℓ)=e−2λℓ, as shown in Figure 9.8(a). The vertex domain representation of the heat kernel is depicted in Figure 9.8(b).

Figure 9.7. Example of graph Fourier transform: a graph signal in vertex and frequency domains

Figure 9.8. Representation of the kernel in spectral and vertex domains

GFT gives frequency content present in a signal defined on a graph. However, it does not reveal local properties of a graph signal. It tells us what frequency components are present in a graph signal, but it does not tell us where in space these frequency components are present. To find out where in space the frequency content are present, one can use windowed graph Fourier transform (WGFT), discussed in Section 9.8. Moreover, to localize graph signal content in the vertex and spectral domains simultaneously, spectral graph wavelet transform (SGWT), discussed in Chapter 11, can be used.

The GFT defined above is applicable to an undirected graph with nonnegative edge weights. There exist other definitions of GFT that are applicable to directed graphs with negative or complex weights as well. These approaches are described in Chapter 10.

9.5.1 Notion of Frequency and Frequency Ordering

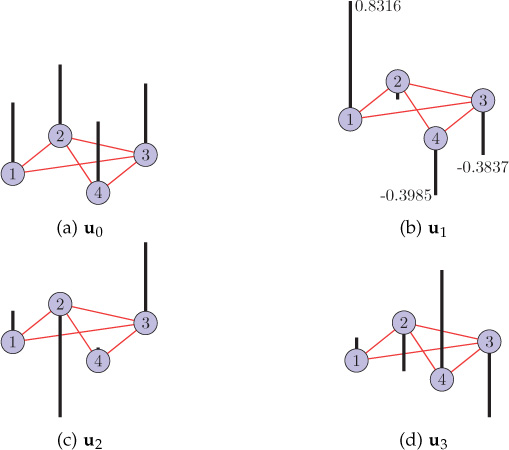

The eigenvalues and eigenvectors of the graph Laplacian provide a notion of frequency. The eigenvectors act as natural vibration modes of the graph, and the corresponding eigenvalues act as associated graph frequencies. Eigenvalue 0 corresponds to zero frequency, and the associated eigenvector u0 is constant and has a value of 1/√N at each node [163]. The eigenvalues of the graph shown in Figure 9.7(a) are {0, 0.38, 1.38, 2.62, 3.62} and the corresponding eigenvector matrix is

(9.5.7) ?=[?0…?4]=[0.450.60−0.510.370.200.450.37−0.20−0.60−0.510.450−0.6300.630.45−0.37−0.200.60−0.510.45−0.600.51−0.370.20].

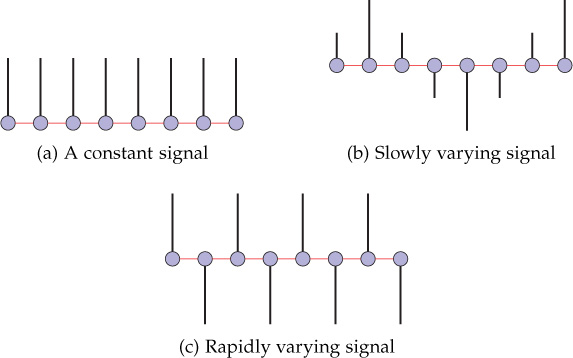

The notion of frequency in the eigenvectors of the graph Laplacian can be visualized through the relation between zero crossings and frequency. Consider the discrete-time signals shown in Figure 9.9. What can be concluded about the frequency content of each of these signals? The first signal does not change with time, or in other words, it has no zero crossings. There is one zero crossing if adjacent signal sample values change their sign, that is, if the signal values go positive from negative or negative from positive. The signal shown in Figure 9.9(b) is a slowly varying signal. There are a few zero crossings; that is, the signal has dominating low frequencies. However, the signal shown in Figure 9.9(c) changes rapidly and has a large number of zero crossings. It suggests that the signal contains significant high frequencies. Therefore, it can be argued that more zero crossings correspond to high frequencies. This relation between zero crossings and frequency can be extended to graph signals too. The eigenvectors of the Laplacian matrix of the graph are plotted in Figure 9.10. Figure 9.10(a) shows the eigenvector corresponding to zero eigenvalue. It has a constant value at each node of the graph, suggesting zero frequency. With the increase in eigenvalue, the zero crossings in the corresponding eigenvector increase, which indicates increase in frequency too. However, by observing zero crossings in a graph signal, only a vague idea regarding its frequency contents can be made. Therefore, some parameter is needed to quantify the oscillations in graph signals and subsequently to order the graph frequencies accurately. Various parameters, such as total variation and p-Dirichlet defined in the previous section, can be used to quantify the oscillations or variations in the graph Fourier basis for the purpose of frequency ordering.

Figure 9.9. Discrete-time signals with various frequencies

Figure 9.10. Eigenvectors of the graph Laplacian

Generally, 2-Dirichlet form or graph Laplacian quadratic form is used for the purpose of frequency ordering. The graph Laplacian quadratic form of the Laplacian eigenvectors is shown in Table 9.2. The graph Laplacian quadratic form of an eigenvector is equal to the corresponding eigenvalue, since ?Tℓ??ℓ=?Tℓλℓ?ℓ=λℓ. Therefore, the eigenvector corresponding to a small eigenvalue has low value of graph Laplacian quadratic form, and vice versa, and thus results in the natural order of frequency–small eigenvalues correspond to low frequency, and vice versa. Frequency ordering is visualized in Figure 9.11.

Table 9.2. Laplacian quadratic forms of the Laplacian eigenvectors of the graph shown in Figure 9.7

Eigenvectors |

Eigenvalues |

Graph Laplacian Quadratic Form |

u0 = [0.45 0.45 0.45 0.45 0.45]T |

0 |

0 |

u1 = [0.60 0.37 0 − 0.37 − 0.60]T |

0.38 |

0.38 |

u2 = [−0.51 − 0.20 − 0.63 − 0.20 0.51]T |

1.38 |

1.38 |

u3 = [0.37 − 0.60 0 0.60 − 0.37]T |

2.62 |

2.62 |

u4 = [0.20 − 0.51 0.63 − 0.51 0.20]T |

3.62 |

3.62 |

Figure 9.11. Frequency ordering from low frequencies to high frequencies. As one moves away from the zero eigenvalue to higher eigenvalues, the eigenvalues correspond to higher frequencies.

From the above discussion, it can be concluded that the eigenvectors of the Laplacian matrix corresponding to low frequencies are smooth with respect to the graph—they vary slowly across the graph. That is, if an edge with a large weight connects two vertices, the values of the eigenvector at those nodes are likely to be similar. The eigenvectors corresponding to larger eigenvalues vary more rapidly as we move from one node to other adjacent node connected by an edge with large weight. Therefore, the eigenvalues of graph Laplacian matrix carry the notion of frequency, where smaller eigenvalues correspond to low frequencies and larger eigenvalues correspond to high frequencies. It should be noted that the graph frequencies are specific to a particular graph. The frequency values cannot be compared between different graphs. For example, consider a graph ?1 having frequencies 0, 0.1, 0.2, 0.3, and 1.2, and a graph ?2 having frequencies 0, 1.2, 2.6, 2.8, and 3. Here, the frequency value of 1.2 corresponds to high frequency for ?1 but corresponds to low frequency for ?2. Indeed, a small change in the topology of a network, resulting from perturbations such as removal or addition of a few edges, changes the graph spectrum completely.

Example

This example illustrates the GFT of an impulse defined on a graph. An impulse δi is a graph signal having all zero values except unit value at node i. In other words,

A graph signal can be represented as a linear combination of impulses. Let ?′i=?T(:,i):

Therefore, GFT of an impulse at node i is

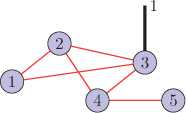

Figure 9.12 shows an impulse at node 3 of the graph. The eigenvector matrix of the graph is

Figure 9.12. Impulse on a graph (δ3)

?=[?0?1?2?3]=[0.44720.43750.703100.33800.44720.2560−0.24220.7071−0.41930.44720.2560−0.2422−0.7071−0.41930.4472−0.1380−0.536200.70240.4472−0.81150.31750−0.2018].

The GFT of the impulse δ3 is

9.5.2 Bandlimited Graph Signals

A graph signal is said to be bandlimited to a graph frequency ω∈ℝ, if the GFT coefficients of the signal are zero outside the graph frequency band [0, ω]. The graph frequency ω is known as bandwidth of the signal. Therefore, for an ω-bandlimited signal f,

The space of ω-bandlimited signals is known as Paley-Wiener space [164]. The Paley-Wiener space is a subspace of ℝN and is denoted by PVω(?).

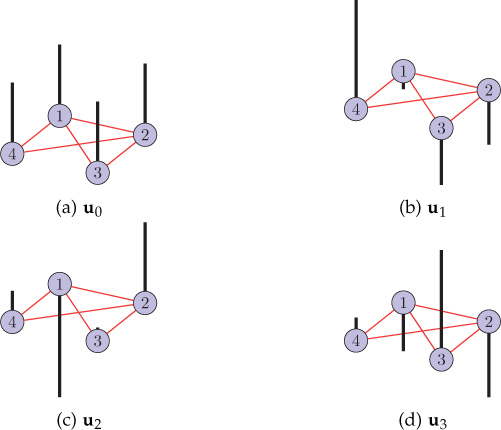

9.5.3 Effect of Vertex Indexing

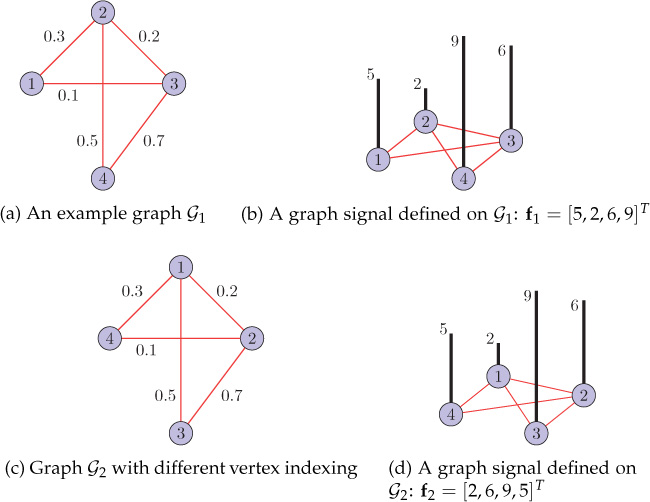

Suppose we are given the values of a graph signal attached to every vertex of the graph. What will happen if only vertex indexing is changed (while keeping all other parameters unchanged)? Figure 9.13(a) shows a weighted graph, and Figure 9.13(b) shows a graph signal defined on the graph of 9.13(a). If only the vertex indexing is changed, as in Figure 9.13(c), how will the signal representation change in the vertex and frequency domains?

Figure 9.13. Graphs with different vertex indexing

First consider the graph ?1 shown in Figure 9.13(a). Its Laplacian and the corresponding eigenvector matrix are

(9.5.13) ?1=[0.4−0.3−0.10−0.31−0.2−0.5−0.1−0.21−0.70−0.5−0.71.2]?1=[0.50.83160.21850.10340.5−0.0494−0.7942−0.34170.5−0.38370.5669−0.53050.5−0.39850.00880.7689].

The graph signal f1 = [5, 2, 6, 9]T (Figure 9.13(b)) can be represented as the linear combination of eigenvectors of the graph Laplacian:

?1=[5269]=(11)[0.50.50.50.5]+(−1.83)[0.8316−0.0494−0.3837−0.3985]+(2.98)[0.2185−0.79420.56690.0088]+(3.57)[0.1034−0.3417−0.53050.7689].

Therefore, ^?1=[11,−1.83,2.98,3.57]T.

Now consider the graph ?2 in Figure 9.13(c), which is the same as the original graph (Figure 9.13(a)) except for a different vertex indexing. The Laplacian and the eigenvector matrix of this graph are

(9.5.14) ?2=[1−0.2−0.5−0.3−0.21−0.7−0.1−0.5−0.71.20−0.3−0.100.4]?2=[0.5−0.0494−0.7942−0.34170.5−0.38370.5669−0.53050.5−0.39850.00880.76890.50.83160.21850.1034]

Altering just the vertex indexing in the graph signal f1 results in a corresponding indexing change in the signal. The resulting graph signal in the vertex domain is represented as f2 = [2, 6, 9, 5]T which is shown in Figure 9.13(d). It can be written as the linear combination of eigenvectors of the graph Laplacian:

?2=[2695]=(11)[0.50.50.50.5]+(−1.83)[−0.0494−0.3837−0.39850.8316]+(2.98)[−0.79420.56690.00880.2185]+(3.57)[−0.3417−0.53050.76890.1034].

Therefore, ^?2=[11,−1.83,2.98,3.57]T. Note that ^?1=^?2, that is, the signal representation does not change in the frequency domain even if vertex indexing is altered. Thus, we can conclude that vertex indexing in a graph does not affect the representation of a graph signal in the frequency domain; it only results in a corresponding change in the vertex domain representation of the signal.

The eigenvectors of the graphs with different vertex labeling are plotted in Figures 9.14 and 9.15. Observe that values of an eigenvector remain unchanged with respect to the graph topology, although the order of indexing changes according to vertex labeling.

Figure 9.14. Eigenvectors of the graph shown in Figure 9.13(a)

Figure 9.15. Eigenvectors of the graph shown in Figure 9.13(c)

9.6 Generalized Operators for Graph Signals

In classical signal processing, operators such as filtering, convolution, translation, and modulation are frequently utilized in various signal processing tasks. These fundamental operators have also been extended to graph settings [165] through GFT. In this section, we discuss the definitions of these fundamental operators in graph settings. For each of the operators, first its classical definition is presented and subsequently analogy is made to extend the definitions to graph settings.

9.6.1 Filtering

Filtering operation, when viewed in the frequency domain, refers to amplifying some frequencies or attenuating some frequencies to get a new signal. A graph filter can be represented as a matrix, as shown in Figure 9.16, where signal f is the input to the filter H and fout = Hf is the output of the filter.

Figure 9.16. A graph filter

Spectral Domain Filters

Consider a graph signal f that can be written in terms of the graph harmonics as

where ˆf(λ0),ˆf(λ1),…,ˆf(λN−1) are GFT coefficients. A graph filter modifies (attenuates or amplifies) these GFT coefficients and results an output graph signal fout.

Here, ˆh(λ0),ˆh(λ1),…,ˆh(λN−1) are filter coefficients in the frequency domain and are also known as the frequency response of the filter. Thus, each frequency component of the output signal is modified according to the filter coefficient corresponding to that frequency:

Equivalently, the above equation can be written in the vertex domain as

In matrix form, the filtering operation provided by Equation (9.6.2) can be expressed as fout = Hf where

From Equation (9.6.5), it is clear that the eigenvectors of H are the same as of L. However, the eigenvalues of H change to ˆh(λℓ). The filter H has N number of parameters: ˆh(λ0),ˆh(λ1),…,ˆh(λN−1). Using the spectral domain approach to filtering, one can design a filter with an arbitrary frequency response by choosing the N filter parameters accordingly. One important point to note here is that the filter H designed in the spectral domain is not localized; that is, to compute the filtered output at node i (in the spatial domain), one may need the signal values that are not within a fixed number of hops from node i. However, with polynomial filters in the spatial domain, one can design localized filters.

Polynomial Filters in the Spatial Domain

A filter H, given by Equation 9.6.5, is designed in the spectral domain, has N number of parameters, and is not localized in space. However, if we use a filter which is a polynomial in the Laplacian matrix L, localization in space can be achieved; that is to compute the filtered output at node i, we will require the signal values that are within K-hops from node i, where K is the order of the polynomial. Consider a polynomial filter of order K (< N):

where h0,h1,…,hK∈ℝ are called filter taps or filter parameters. Since (LK)ij = 0 for d?(i,j)>K (see Problem 16), where d?(i,j) is the hop distance between nodes i and j, the filter H given by Equation 9.6.6 is K-localized at every node.

We can also represent the polynomial filter in the spectral domain. Since the eigendecomposition of Kth power of matrix L is given by LK = UΛKUT, the filter H given by Equation 9.6.6 can be written as

where

and

is a polynomial of order K in eigenvalue λℓ.

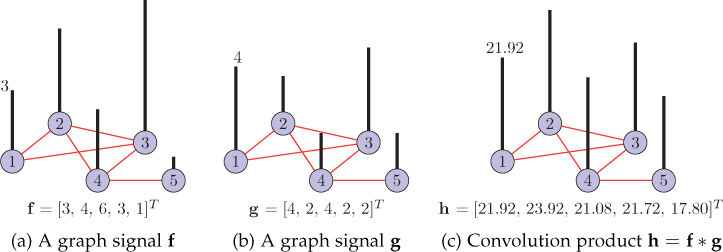

9.6.2 Convolution

The classical convolution product of two signals x(t) and h(t) is defined as

The above definition of convolution product requires translation of one of the signals. If one tries to extend the above definition to graph signals, it will require translating the graph signal, which is difficult to define in the vertex domain. In such a case, one can utilize frequency domain representation.

The classical convolution theorem states that the convolution product in the time domain is equivalent to multiplication in the frequency domain. Therefore, the convolution product of two signals is equivalent to the inverse Fourier transform of the multiplication of the Fourier transforms of the two signals, that is,

In the same way, by utilizing GFT, one can define convolution of two graph signals. Analogous to Equation (9.6.11), the convolution of two graph signals f, g ∈ ℝN is defined as

where ^? and ^? are GFTs of f and g, respectively. It is easy to note that the graph convolution also follows classical convolution theorem; that is, the convolution in the vertex domain is equivalent to element-wise multiplication in the graph spectral domain. In matrix form, the convolution product of two graph signal can be written as

where U is the matrix whose columns are the eigenvectors of the graph Laplacian and represents element-wise Hadamard product. As an example, consider two graph signals f and g shown in Figures 9.17(a) and (b). Their convolution product is plotted in Figure 9.17(c), which can be calculated as

Figure 9.17. Example of graph convolution

?=?(^?⊙^?)=?((?T?)⊙(?T?))=?[7.602.65−1.60−1.41−1.27]⊙[6.261.390.92−1.41−0.16]=?[47.603.67−1.482.000.21]=[21.9223.9221.0821.7217.80].

The convolution of two graph signals follows the properties of commutativity, distributivity, and associativity (see Exercise Problem 15). The definition graph convolution is utilized in defining graph translation operator.

9.6.3 Translation

In Section 9.1, we saw that translating a graph signal is not as straightforward as translating a 1-D time series. However, the translation operator for graph settings can be defined using the convolution operator defined above.

Classical translation of a signal x(t) by some time τ can be represented using impulse at τ:

where δτ(t) is an impulse at τ and, using Equation (9.5.1), its Fourier transform is ^δτ(ω)=e−jωτ.

Analogously, the generalized translation operator Ti can be defined via generalized convolution with an impulse centered at vertex i:

where

δi(n)={1ifn=i0otherwise.

In Equation 9.6.15, √N is a normalizing constant to ensure that the translation operator preserves the mean of a graph signal. In matrix form, the translated vector (to node i) can be written as

where ?′i=?T(:,i) is the ith column of UT and the operator ℓ represents element-wise Hadamard operation.

An example of translation is shown in Figure 9.18. Figure 9.18(a) shows a graph signal f, and Figures 9.18(b) and 9.18(c) show the translated version of f to nodes 1 and 4, respectively. Another example of the translation operation is shown in Figure 9.19. A graph signal on a sensor network is shown in Figure 9.19(a), and the graph signal when translated to the circled node is shown in Figure 9.19(b).

Figure 9.18. Example of graph translation

Figure 9.19. Example of translation operator

The translation operator is usually viewed as a kernelized operator acting in the graph spectral domain. The kernel ˆf(.) can be used to define the translation of a graph signal f around the graph. Translation of a graph signal to vertex i can be achieved by multiplying the ℓth component of the kernel by u*ℓ(i), and then taking the IGFT.

The generalized translation operator is utilized to define the WGFT discussed in Section 9.8.

9.6.4 Modulation

Classical modulation of a signal x(t) by a frequency ω is just multiplication of the signal and the complex exponential at frequency ω; that is, the modulated signal is ejωtx(t). Analogously, in graph settings, the generalized modulation operator Mk is defined as

where the operator ℓ represents element-wise Hadamard operation.

The classical modulation in the time domain is equivalent to translation in the frequency domain, that is, ˆMω0x(ω)=ˆx(ω−ω0),∀ω∈ℝ. On the other hand, because of the irregular nature of the underlying graphs, the generalized modulation defined by Equation 9.6.17 does not represent translation in the graph spectral domain. However, if ^? is localized around zero frequency, then ˆMk? is localized around λk. Localization around zero frequency means that most of the non-zero elements are in the vicinity of λ0 = 0.

9.7 Applications

The ability of the GFT to capture the changes in a graph signal makes it very useful for a number of applications. A few applications are discussed here. First, we present how GFT can be used to explore the structure of various complex network models via performing spectral analysis of node centralities [166]. As a second example, we discuss graph Fourier transform centrality (GFT-C) [167] that utilizes GFT to quantify the importance of a node in a complex network. Finally, we discuss application of GFT in detecting a corrupted sensor in a sensor network just from observing the spectrum of the data.

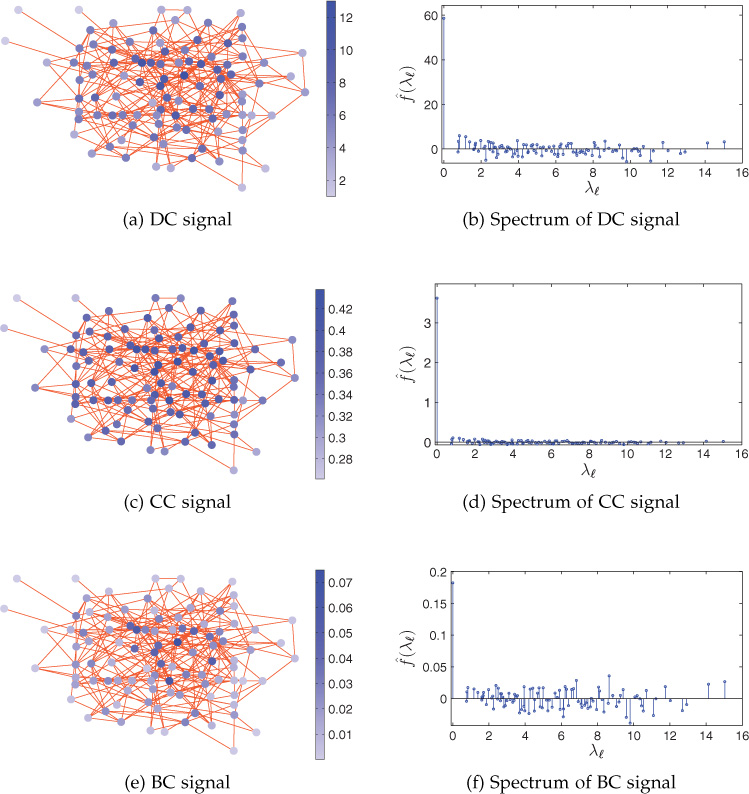

9.7.1 Spectral Analysis of Node Centralities

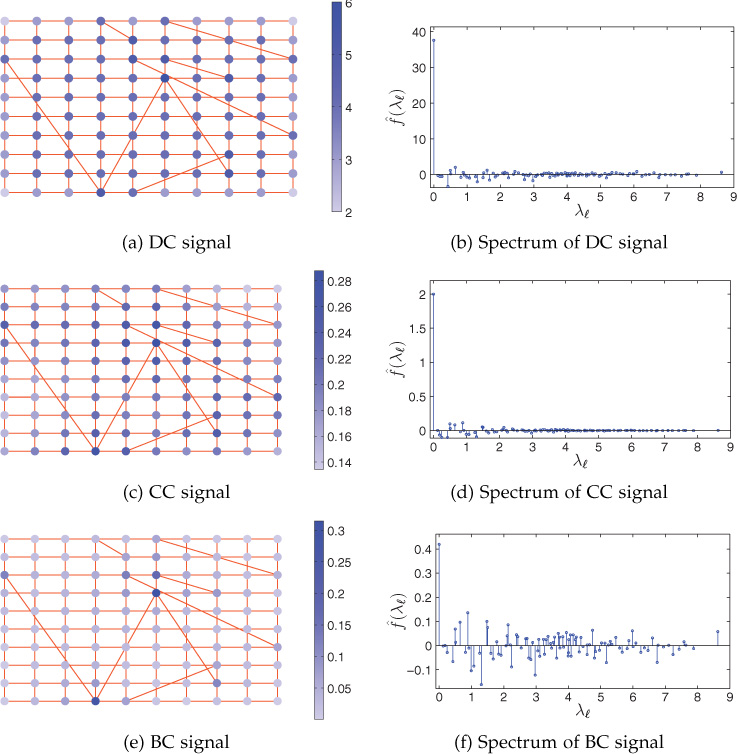

Studying the spectral properties of different node centralities allows one to understand the centrality patterns for various networks. The centrality patterns become very informative in the spectral domain. For illustration, different network models such as regular, Erdös-Rényi (ER), small-world, and scale-free networks are considered here. The networks considered here are undirected and unweighted. Different centralities such as degree centrality (DC), closeness centrality (CC), and betweenness centrality (BC) are defined as signals on the networks and subsequently the GFT coefficients (spectrum) of each signal can be observed.

Regular Network

Consider a 4-regular graph generated by taking 100 nodes randomly. The detailed discussion on r-regular graphs can be found in Chapter 2. The DC signal on the graph is plotted in Figure 9.20(a). The vertical bars indicate the signal values at the nodes. DC signal is constant with a value of 4 at all of the nodes. The spectrum of DC signal has only one non-zero frequency component, which is at zero frequency, as shown in Figure 9.20(b).

Figure 9.20. Centralities as signals on a 4-regular graph and their spectra © [2016] IEEE. Reprinted, with permission, from R. Singh, A. Chakraborty, and B. S. Manoj, “On Spectral Analysis of Node Centralities,” in Proceedings of IEEE ANTS 2016, December 2016.

The CC signal on the graph is plotted in Figure 9.20(c) and corresponding spectrum is shown in Figure 9.20(d). The CC signal varies in the range [0.2626, 0.2964]; however, the variations in the CC signal are very small as we go from one node to an adjacent node connected by an edge. CC values of the two adjacent nodes do not differ by a large amount because the closeness of the two adjacent nodes to the rest of the network is almost same. This similarity in closeness is the reason for the absence of significant high-frequency components in the spectrum of CC signal.

The BC signal is plotted in Figure 9.20(e) and has a range in the interval [0.0142, 0.0358]. The BC signal on the regular graph undergoes a very small change as we move from one node to an adjacent node connected by an edge. Observe from Figure 9.20(f) that the spectrum of BC signal also has only a zero frequency component except for a few very small high-frequency components.

ER Network

Consider an ER random graph3 [62] with the probability of 0.06. DC, CC, and BC signals defined on the ER graph are plotted in Figures 9.21(a), (c), and (e), respectively. DC signal values lie in the interval [1, 13] and are randomly distributed over the graph because in an ER graph, an edge exists between every pair of the graph nodes with certain probability (here the probability is 0.06), which contributes to DC signal value at both the corresponding nodes.

Figure 9.21. Centralities as signals on an ER graph and their spectra © [2016] IEEE. Reprinted, with permission, from R. Singh, A. Chakraborty, and B. S. Manoj, “On Spectral Analysis of Node Centralities,” in Proceedings of IEEE ANTS 2016, December 2016.

The CC signal varies in the interval [0.2612, 0.4381] which is plotted in Figure 9.21(c). It has low value at distant nodes and has high value at the nodes that are central to the network. CC signal variations are small when we move from one node to the adjacent nodes. The BC signal takes a value in the interval [0, 0.0749] and is plotted in Figure 9.21(e). It has more spectral variations as compared to the DC signal.

The spectra of DC, CC, and BC signals are shown in Figures 9.21(b), (d), and (f), respectively. It can be observed from Figure 9.21(b) that the spectrum of DC signal on the ER graph has significant GFT coefficients at low as well as high frequencies. The spectrum of CC signal does not contain high-frequency components, as shown in Figure 9.21(d). The BC signal has stronger frequency components than those of the DC signal, as shown in Figure 9.21(f).

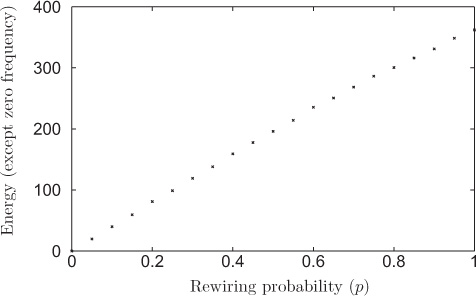

ER Graph with Varying Edge Probability

The above discussion was for an ER graph with a certain fixed edge probability. What about the spectra of DC signal with varying edge probability? Figure 9.22 shows the energy content, except zero frequency, in the spectrum of DC signal with respect to the varying probabilities of edge connection. Observe that the energy increases with increasing probability and becomes maximum for p = 0.5, and then it starts decreasing. For p = 0, the energy is zero because the graph has no edges present in this case; that is, DC signal is a zero vector. Also for p = 1, the energy is zero because the graph becomes regular, where each node is connected to every other nodes in the network. Because the plot is symmetric about p = 0.5, one can also argue that the amount of randomness for probability p is same as for probability (1 − p), and it is maximum for p = 0.5.

Figure 9.22. Energy in DC spectrum Versus edge probability for ER networks. Each value is averaged over 1000 experiments. © [2016] IEEE. Reprinted, with permission, from R. Singh, A. Chakraborty, and B. S. Manoj, “On Spectral Analysis of Node Centralities,” in Proceedings of IEEE ANTS 2016, December 2016.

Small-World Network

Consider a small-world network4 generated by adding a few LLs (5%–8% of the total nodes) in a regular rectangular grid network. DC, CC, and BC signals on a 100 node network are plotted in Figures 9.23(a), (c), and (e), respectively. DC signal lies in the interval [2, 6] as shown in Figure 9.23(a). The CC signal varies in the range of [0.1343, 0.2878], which is shown in Figure 9.23(c). The CC signal has minimal variation as one moves from one node to the other connected by an edge because closeness of any two adjacent nodes, to the rest of the network, does not have a large difference. The CC signal has peaks at the nodes, which are the extremes of LLs; however, the signal value decreases slowly as we move away from these nodes. The range of BC signal is in the interval [0, 0.3148] as shown in Figure 9.23(e). The LLs in a small-world network are present in most of the shortest paths between any two distant nodes; therefore, the nodes corresponding to LLs have high BC value.

Spectra of DC, CC, and BC signals are plotted in Figures 9.23(b), (d), and (f), respectively. The spectrum of DC signal has small values of GFT coefficients at low frequencies, as shown in Figure 9.23(b). High-frequency components are very small in the spectrum. The spectrum of CC signal on the small-world graph is also localized around zero frequency; that is, it has only mild low frequency components (see Figure 9.23(d)). However, one can observe from Figure 9.23(f) that the BC signal on the small-world graph contains some strong low-frequency as well as high-frequency components. As we move from the nodes which have high BC value to any other adjacent node except the node connected via LLs, the BC value changes significantly. These changes in BC value results in the presence of significant high-frequency components in the spectrum of BC signal. Comparing Figures 9.23(f) and 9.23(b), it can be observed that there are relatively stronger high-frequency components present in the spectrum of BC signal than in that of the DC signal, because the BC value at the nodes corresponding to LLs has relatively larger value than the DC value at the corresponding nodes.

Figure 9.23. Centralities as signals on a small-world network and their spectra © [2016] IEEE. Reprinted, with permission, from R. Singh, A. Chakraborty, and B. S. Manoj, “On Spectral Analysis of Node Centralities,” in Proceedings of IEEE ANTS 2016, December 2016.

Watts-Strogatz Network Model with Varying Rewiring Probability

Consider a small-word network generated by the Watts-Strogatz model [6] and then compute the energy of the DC signal lying in non-zero frequency components of the spectrum. Repeat the experiment with varying rewiring probability and compute the corresponding energies, the plot of which is shown in Figure 9.24. Observe the increase in energy with the rewiring probability, which shows the increase in randomness of the network with increasing rewiring probability.

Figure 9.24. Energy in DC spectrum versus rewiring probability for Watts-Strogatz small-world networks. Each value is averaged over 1000 experiments. © [2016] IEEE. Reprinted, with permission, from R. Singh, A. Chakraborty, and B. S. Manoj, “On Spectral Analysis of Node Centralities,” in Proceedings of IEEE ANTS 2016, December 2016.

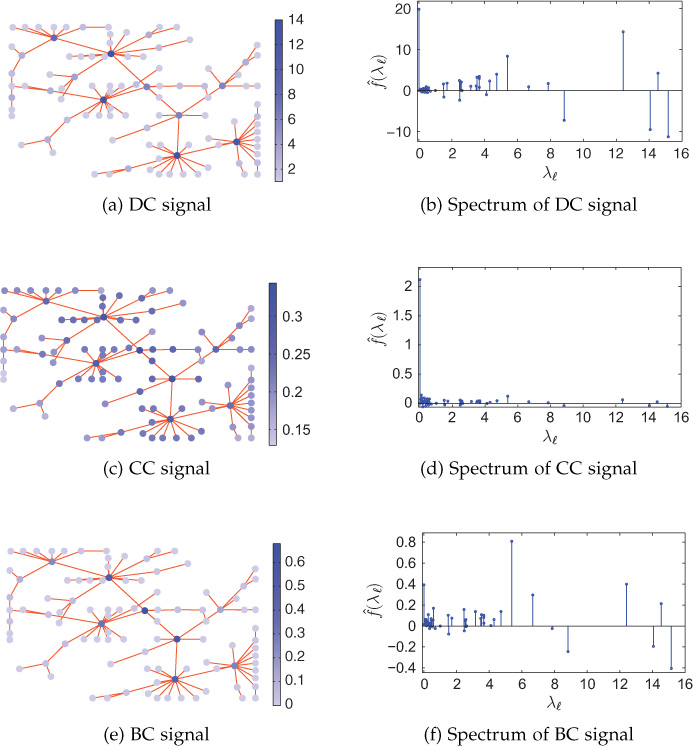

Scale-Free Network

Consider the Barabási-Albert model [39] for the creation of a scale-free network5 with γ = 3. DC, CC, and BC signals on a 100-node scale-free network are shown in Figures 9.25(a), (c), and (e), respectively. The DC signal on the network varies in the interval [1, 14], as shown in Figure 9.25(a). It can be observed that only a few nodes (known as hub nodes) in the graph have large DC values, and a large number of nodes have small DC values. As we move from the hub node to any other adjacent node, the DC signal value changes drastically. The range of the BC signal is [0, 0.6778], as plotted in Figure 9.25(e). The value of the BC signal is also very large at the hub nodes, and it undergoes large variations when we move to other adjacent nodes. The large value of BC at hub nodes is because the hub nodes are present in most of the shortest paths in the graph. The CC signal varies in the interval [0.1292, 0.3438], which is plotted in Figure 9.25(c). It has small values at distant nodes and large values at the nodes that are more central to the network. Although the CC signal has peaks at hub nodes too, it does not change rapidly as we move from a hub node to any other adjacent node, because the closeness of the node to the entire graph does not change significantly.

Figure 9.25. Centralities as signals on a scale-free network and their spectra © [2016] IEEE. Reprinted, with permission, from R. Singh, A. Chakraborty, and B. S. Manoj, “On Spectral Analysis of Node Centralities,” in Proceedings of IEEE ANTS 2016, December 2016.

The spectra of the DC, CC, and BC signals on the scale-free network are plotted in Figures 9.25(b), (d), and (f), respectively. One can observe from Figure 9.25(b) that the DC signal on scale-free graph has very strong high-frequency components, as there is a drastic change in signal value from hub node to neighbor nodes. The BC signal also contains very strong high-frequency components, as shown in Figure 9.25(f). However, the CC signal has very small high-frequency components, as shown in Figure 9.25(d), because of minimal variation of the signal as one moves from one node to another. Compared to all other graphs, the spectra of DC and BC signals on scale-free graphs have much stronger high-frequency components, which shows the presence of hub nodes in scale-free networks.

Network Classification from the Spectra of Centrality Signals

We have seen the spectral patterns of centrality measures on various networks. Given the spectra of node centralities as signals on a network, the type of the network can be identified. If the spectrum of DC signal on a network has only non-zero GFT coefficient at zero frequency, then the underlying network is an r-regular network with r=ˆf(λ0)√N where ^? is GFT of DC signal f and N is the total number of nodes in the network.

Presence of stronger high-frequency components than low-frequency components in the spectrum of DC or BC signal on the network indicates that the underlying network is scale-free. The identification of scale-free network and regular network from the spectrum of DC signal can be done accurately.

If the spectrum of DC signal has significant frequency components over all the frequencies, then the underlying network can be a small-world or an ER network. Picking one of these two networks accurately is difficult. However, from analysis done previously, it can be argued that as the small-worldness of the network increases, more and more high-frequency components exist in the spectrum of DC. Also, even for a random network with a high degree of randomness (edge probability of 0.5 in ER model), high-frequency content in the spectrum of the network is not as strong as in case of scale-free networks.

9.7.2 Graph Fourier Transform Centrality

GFT-C is a spectral approach for assessing the importance of each node in a complex network [167]. GFT-C uses GFT coefficients of an importance signal corresponding to the reference node. This method relies on the global smoothness (or variations) of the carefully defined importance signal corresponding to a reference node. The importance signal for a reference node is the indicator of how remaining nodes in a network are seeing the reference node individually. The importance signal is defined in such a way that the importance information is captured in the global smoothness (or variations) of the signal. Further, GFT coefficients of the importance signal are used to obtain the global view of the reference node.

GFT-C of ith node is the weighted sum of the GFT coefficients of importance signal corresponding to node i. The importance signal for a reference node i is a graph signal which gives the individual view of the rest of the nodes about reference node i in terms of the minimum cost to reach i. Smoothness of this importance signal is the key in quantifying the importance of the corresponding node. GFT is used to capture the variations in the importance signal globally, which in turn is utilized to define GFT-C. Thus, GFT-C utilizes not only the local properties, but also the global properties of a network topology.

The Importance Signal

The importance signal describes the relation of a reference node to the rest of the nodes individually. It is characterized by the inverse of the cost to reach from an individual node to the reference node. The higher the cost to reach the reference node, the lower is the importance of the reference node, and vice versa.

Let the importance signal corresponding to reference node n on a connected weighted network be fn = [ fn(1) fn(2) ... fn(N)]T, where fn(i) is the inverse of the sum of weights in the shortest path from node i to node n. Normalize the signal such that the sum of signal values, except at the reference node, is unity, that is ∑i≠n fn(i) = 1. Also, consider the signal value at the reference node as unity (fn(n) = 1).

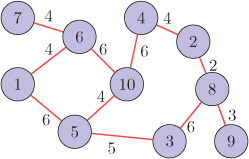

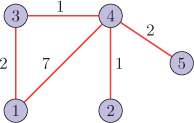

Figure 9.26 shows a sample weighted graph having 10 nodes. The importance signal along with the intermediate parameters corresponding to node 1 of Figure 9.26 are listed in Table 9.3. It is evident that as the cost to reach the reference node decreases, the value of the importance signal increases.

Figure 9.26. An example weighted graph

Table 9.3. Importance signal for node 1 of graph shown in Figure 9.26 as reference. Reprinted from Elsevier Physica A: Statistical Mechanics and Its Applications, Vol. 487, R. Singh, A. Chakraborty, and B. S. Manoj, GFT centrality: A new node importance measure for complex networks, Pages 185–195, December 2017, Copyright (2017), with permission from Elsevier.

Node |

Shortest Path |

Cost |

(Cost)−1 |

Importance Signal |

1 |

- |

0 |

- |

1 |

2 |

2-8-3-5-1 |

19 |

1/19 |

0.0550 |

3 |

3-5-1 |

11 |

1/11 |

0.0950 |

4 |

4-10-6-1 |

16 |

1/16 |

0.0653 |

5 |

5-1 |

6 |

1/6 |

0.1742 |

6 |

6-1 |

4 |

1/4 |

0.2614 |

7 |

7-6-1 |

8 |

1/8 |

0.1307 |

8 |

8-3-5-1 |

17 |

1/17 |

0.0615 |

9 |

9-8-3-5-1 |

20 |

1/20 |

0.0523 |

10 |

10-5-1 |

10 |

1/10 |

0.1045 |

The variations in the importance signal defined above are utilized to score the importance of a reference node. Also note that for a reference node with high global importance, the importance signal values in the neighborhood of the node will be smaller as compared to a reference node with low global importance but having the same value of DC. The reason behind this is that the sum of the importance signal values, except at the reference node, is unity and the importance signal value is inversely related to the cost to reach the reference node. Therefore, for a reference node with high global importance, from which the rest of the nodes are not very distant, the distribution of importance signal values is such that the neighboring nodes of the reference node receive small values. On the other hand, when the reference node is of low global importance, the neighboring nodes receive high importance signal values because there will be some nodes in the network that are very far from the reference node. These variations in the importance signal, which are captured using GFT, are utilized to quantify the importance of the reference node.

Graph Fourier Transform Centrality

GFT-C measures the importance of a reference node for rest of the network nodes collectively. To define GFT-C for a reference node, GFT coefficients of importance signal are used.

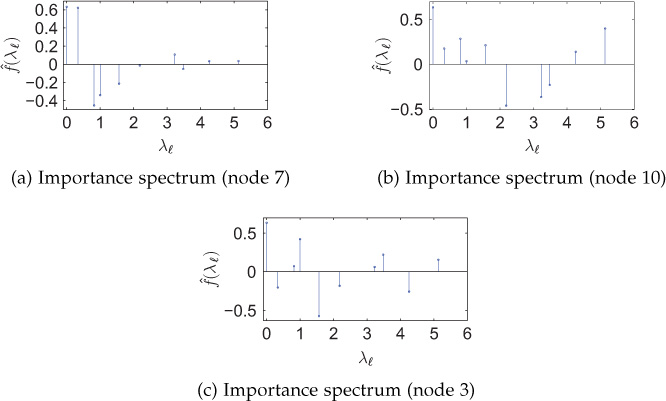

GFT of importance signal is known as importance spectrum. In Figure 9.27, the importance spectra for a few nodes in the network shown in Figure 9.26 are presented. The importance spectra corresponding to node 7 has nominal high-frequency components (Figure 9.27(a)), whereas large high-frequency components are present in the importance spectra of node 10 (Figure 9.27(b)). The importance spectra corresponding to node 3 has moderate GFT coefficients corresponding to high-frequencies (Figure 9.27(c)). From these observations, it can be argued that the importance information is encoded in the high-frequency components of the importance spectrum. The observations from Figure 9.27 are quite intuitive and follow from the fact that if a node is central to the network, then the importance signal is non-smooth, that is, variations in the importance signal are high. Based on these observations, the importance of a reference node can be quantified using the importance spectrum.

Figure 9.27. Importance spectra for the network topology shown in Figure 9.26. Reprinted from Elsevier Physica A: Statistical Mechanics and Its Applications, Vol. 487, R. Singh, A. Chakraborty, and B. S. Manoj, GFT centrality: A new node importance measure for complex networks, Pages 185–195, December 2017, Copyright (2017), with permission from Elsevier.

Let the GFT of importance signal corresponding to reference node n be ^?n=[ˆfn(λ0)ˆfn(λ1)…ˆfn(λN−1)]T, which can be calculated as

where fn(i) is the importance of the reference node n with respect to node i and uℓ is the eigenvector of the graph Laplacian at the node corresponding to the eigenvalue λℓ. Let the GFT-C of node n be In, then

where w(λℓ) is the weight assigned to the GFT coefficient corresponding to frequency λℓ. The choice of weights is made by a function which increases exponentially with the frequency (eigenvalues of L), that is w(λℓ) = e(kλℓ) − 1, where k > 0. Choosing such weights ensures that (i) larger weights are assigned to high-frequency components of the importance spectrum and smaller weights for the frequency components corresponding to lower frequencies and (ii) zero weight is assigned to zero frequency component. It is found experimentally that k = 0.1 shows good results. Table 9.4 shows GFT-C of the nodes, as found by using Equation (9.7.2), in the network shown in Figure 9.26. It can be observed that node 10 has the highest score and node 9 has the lowest score.

Table 9.4. GFT-C for nodes in the network shown in Figure 9.26. Reprinted from Elsevier Physica A: Statistical Mechanics and Its Applications, Vol. 487, R. Singh, A. Chakraborty, and B. S. Manoj, GFT centrality: A new node importance measure for complex networks, Pages 185–195, December 2017, Copyright (2017), with permission from Elsevier.

Node |

1 |

2 |

3 |

4 |

5 |

6 |

7 |

8 |

9 |

10 |

GFT-C |

0.099 |

0.093 |

0.103 |

0.106 |

0.121 |

0.135 |

0.044 |

0.118 |

0.040 |

0.141 |

GFT-C Behavior on a String Topology Network

First, consider a network with string topology (path graph) which is shown in Figure 9.28. Various centrality scores are listed in Table 9.5, from which we observe that GFT-C scores for end nodes are low, and as we go toward middle nodes, the value of GFT-C increases. It shows superiority over DC as all the nodes except two end nodes have same DC scores of 2, which is not desirable. BC, CC, and eigenvector centrality (EC) also follow the same pattern as GFT-C.

Figure 9.28. A path graph

Table 9.5. Various centrality scores for nodes in the network shown in Figure 9.28. Reprinted from Elsevier Physica A: Statistical Mechanics and Its Applications, Vol. 487, R. Singh, A. Chakraborty, and B. S. Manoj, GFT centrality: A new node importance measure for complex networks, Pages 185–195, December 2017, Copyright (2017), with permission from Elsevier.

Node |

A |

B |

C |

D |

E |

F |

DC |

1 |

2 |

2 |

2 |

2 |

1 |

BC |

0 |

0.4 |

0.6 |

0.6 |

0.4 |

0 |

CC |

0.333 |

0.454 |

0.555 |

0.555 |

0.454 |

0.333 |

EC |

0.099 |

0.178 |

0.223 |

0.223 |

0.178 |

0.099 |

GFT-C |

0.0936 |

0.1979 |

0.2085 |

0.2085 |

0.1979 |

0.0936 |

GFT-C Behavior on an Unweighted Arbitrary Network

Consider an unweighted graph which contains more than just one neighborhood of nodes with high influence, as in Figure 9.29. Since CC and BC measure global importance, they find nodes t and s as the most important nodes, which can be found from Figures 9.29(a) and 9.29(b). On the other hand, though the nodes p, q, and u are influential in their respective neighborhoods, their CC and BC scores are small, implying the inability of CC and BC to capture local influence. Figure 9.29(c) shows the EC scores of nodes in the network. We observe that EC scores are high in the neighborhood of nodes p and q, while the rest of the network nodes receive very small EC scores. This is due to the nature of EC, which tends to focus on a set of influential nodes that are all within the same region (or community) of a graph and particularly focuses on the largest community [168]. DC scores of nodes are shown in Figure 9.29(d), from which we observe that nodes t and υ receive the same DC score of 3. However, this may not be desirable, as node t is more influential than node υ globally. The difference between DC and GFT-C can also be observed in other nodes of the network with the same DC scores. In comparison to DC, CC, BC, and EC, GFT-C takes local as well as global properties of the network into account and results in high importance for the nodes p, q, t, and u, as can be found in Figure 9.29(e).

Figure 9.29. Various centrality measures for an unweighted graph. Reprinted from Elsevier Physica A: Statistical Mechanics and Its Applications, Vol. 487, R. Singh, A. Chakraborty, and B. S. Manoj, GFT centrality: A new node importance measure for complex networks, Pages 185–195, December 2017, Copyright (2017), with permission from Elsevier.

9.7.3 Malfunction Detection in Sensor Networks

Another example application of GFT and graph filtering can be found in sensor networks where any malfunctions of sensors can be detected from the data generated by the sensors in the network. Consider a temperature sensor network in which nodes represent the weather stations collecting the temperature. Assume that the cities (weather stations) are located close to each other and, therefore, have almost similar temperatures. The temperature measurements can be treated as a graph signal, and observing the GFT coefficients of this graph signal, any sensor malfunction can be detected. If one observes the spectrum of the temperature snapshot (temperature graph signal), most of the energy will be concentrated in the low frequencies given that all the sensor measurements are correct. However, the measurements from a defected sensor may differ from the adjacent sensors drastically, and this drastic change will appear as significant high-frequency components in the spectrum of the temperature snapshot. Thus, if the spectrum of the temperature graph signal constitutes significant high-frequency components, one can conclude that there is at least one sensor with corrupted measurement. Note that, however, the locations of the corrupted sensors by observing the spectrum cannot be found.

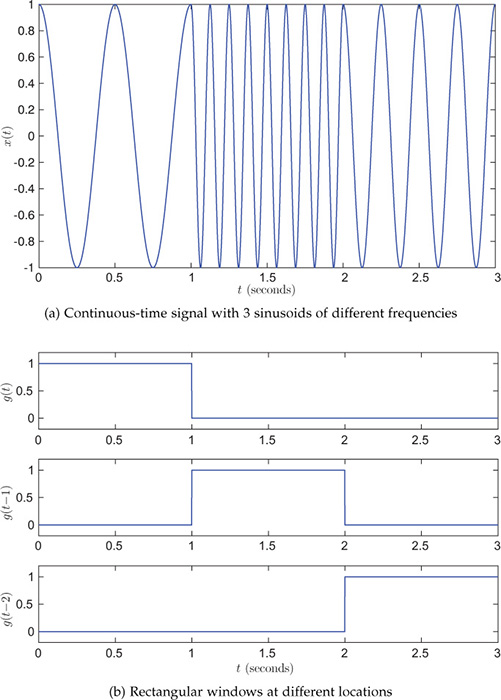

9.8 Windowed Graph Fourier Transform

The windowed Fourier transform (WFT), or short-time Fourier transform, is another important tool for time-frequency analysis in classical signal processing. It is particularly useful in extracting information from signals with frequencies that are localized in time. For example, consider a continuous-time signal x(t), shown in Figure 9.30, which contains sinusoids of three different frequencies: 3 Hz from 0 to 1 second, 8 Hz from 1 to 2 seconds, and 4 Hz from 2 to 3 seconds. The frequency information in this signal is local. If we use Fourier transform of this signal, we will not get any information of this time localization of frequencies. Such signals appear frequently in applications such as audio and speech processing, vibration analysis, and radar detection. Localization in time is achieved using an appropriate window function centered around a location of interest. Now consider a rectangular window g(t) of duration 1 second, as shown in Figure 9.30(b). The figure also shows translated versions of window g(t). Multiplying signal x(t) with window g(t), results in a part of signal x(t) from 0 to 1 second, and then Fourier transform of this windowed signal can be used to extract the frequency information in this time duration. Similarly, signal x(t) is multiplied with the translated versions of the window g(t), and then Fourier transform is used to get time-localized frequency information in the signal. Thus, short-time Fourier transform provides a means for time-frequency representation of a signal, through which both time and frequency information can be extracted.

Figure 9.30. A continuous-time signal with 3 sinusoids of different frequencies and window signals

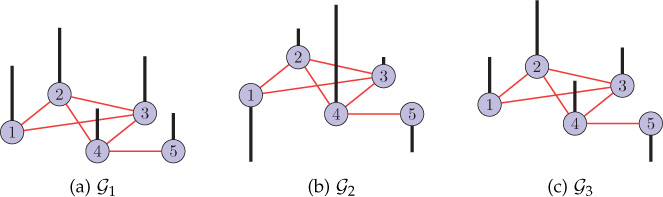

Windowed Fourier analysis has been generalized to the graph settings, and analogously, WGFT has been defined. As mentioned in Section 9.5, GFT is a global transform—it does not convey any information regarding where in a graph the frequency contents are present. Through WGFT, one can find the local information of frequency content in a graph signal.

Classical windowed Fourier transform is defined through two operators: translation and modulation. For a signal x(t) and t0∈ℝ, the translation operator Tt0 is defined as

The translation operator is used to move a window along the time axis. Moreover, for any ω∈ℝ, the modulation operator Mω is defined as

Now let g(t) be a window with ||g||2 = 1. Then a windowed Fourier atom is given by

WFT of a classical signal x(t) is defined as

Alternatively, WFT of a signal x(t) can also be interpreted as the Fourier transform of the windowed signal. The windowed signal localizes the signal to a specific time. Thus, WFT of a signal is an expansion in terms of two variables: frequency and time-shift.

To define WGFT, generalized translation and modulation operators defined in the Section 9.6 are used. The generalized translation operator is used to move a window around the graph, and then it is multiplied with a graph signal to localize the signal to a specific region of the graph. Analogous to Equation 9.8.3, for a window ?∈ℝN, windowed graph Fourier atom can be defined as

Subsequently, the WGFT coefficient, corresponding to frequency λk and centered at node i, of a graph signal ?∈ℝN is defined as

Using WGFT coefficients, one can identify the frequency contents of a graph signal at various vertex locations of the graph. As opposed to the GFT (which is a global transform), the WGFT provides us means to analyze a graph signal locally in the graph.

9.8.1 Example of WGFT

Consider a random sensor network with 48 nodes, as shown in Figure 9.31(a). The network is generated by randomly placing 48 nodes in [0, 1] × [0, 1] plane, and the edge weights are assigned with a threshold Gaussian kernel weighting function based on the Euclidean distance between nodes:

Figure 9.31. Windowed graph Fourier transform example

where d(i, j) is the Euclidean distance between nodes i and j, σ1 = 0.074, and σ2 = 0.075. Using a heat kernel shown in Figure 9.31(b), graph Fourier atoms corresponding to a graph frequency can be computed. A windowed graph Fourier atom g34,15 corresponding to frequency λ15 = 4.94 and centered at vertex 34 is shown in Figure 9.31(c). The corresponding WGFT coefficient of the graph signal shown in Figure 9.31(d) is S34,15f = 〈f, g34,15〉 = −0.7101.

9.9 Open Research Issues

The field of graph signal processing is an emerging research area. A number of major open research issues in this field are listed below.

Relationships between graph Fourier transform and traditional graph structure parameters such as centrality measures, path lengths, and clustering coefficients have not been studied yet. Establishing a link between structural properties of graphs and properties of the generalized operators as well as transform coefficients remains a major issue in graph signal processing.

The theory of statistical graph signal processing is not well developed. Recent efforts to develop the theory of stationary graph signal processing can be found in [169], where the traditional concept of wide sense station-arity has been extended to graph settings.

The concepts and techniques presented in this chapter assume that the graph signal to be analyzed is readily available. However, this is not the case all the time. One may need to model the graph signal from available data. Very little has been studied for modeling of graph signals. Some efforts can be found in [170], which estimates the adjacency matrix of the graph from a set of measured networked data.

One may have different models for constructing graph signals from available data. Whether GFT has a relation with the method used for modeling of the graph signal. Analysis of how the construction of the graph affects the properties of various transforms for signals on graphs is yet to be discovered.

Computation of GFT requires full eigendecomposition of the graph Laplacian. For a network with thousands of nodes, it becomes computationally inefficient. Therefore, development of fast GFT algorithms is required for analysis of large graphs. Some recent efforts made in this direction can be found in [171], [172].

Developement of sampling theory for graph signal is a major topic of research. Different theories have been presented for sampling of graph signals in [164], [173], [174], [175]. However, there is a need for a simpler and generalized sampling theory.

Developement of various tools and concepts for handling dynamic graphs is an open area of research. When the structure of a graph changes with time, the graph Fourier bases also change and require full eigendecomposition of many graphs over time. Finding new transforms for signals on dynamic graphs is a challenging research area. In [176], the authors have proposed a method for designing autoregressive moving average (ARMA) graph filters.

9.10 Summary

This chapter presented a review of concepts and techniques for analysis and processing of graph signals. The GFT and the WGFT were defined with the help of eigendecomposition of the graph Laplacian. The notion of frequency and the ability to capture changes in a graph signal makes GFT a very powerful tool for the analysis of data defined on complex networks. Various operators such as convolution, translation, and modulation were also generalized to graph settings. Some applications of the spectral representation of graph signals were also discussed in the chapter. The approach for graph signal processing discussed in this chapter is based on the graph Laplacian and is limited to undirected graphs with positive real weights. However, there exist other methods to handle directed graphs as discussed in the next chapter.

Exercises

Consider a graph ? shown in Figure 9.32.

Figure 9.32. Graph G The non-zero eigenvalues of the graph Laplacian are 1.1464, 2.1337, 5.4424, 17.2775. The eigenvector matrix of the Laplacian is

?=[0.44720.18400.21890.54770.64670.4472−0.8860−0.0467−0.10360.04630.44720.26850.5663−0.6370−0.03780.44720.12970.05290.4604−0.75400.44720.3039−0.7914−0.26750.0987]

1. Consider the graph shown in Figure 9.32.

(a) Calculate Laplacian quadratic forms of all the eigenvectors of the graph Laplacian.

(b) Calculate total variations of all the eigenvectors of the graph Laplacian.

(c) Compare results of parts (a) and (b).

2. For the three graph signals shown in Figure 9.33, which graph signal is likely to have the largest high-frequency components? Why? (All the graph signals are drawn at the same scale).

Figure 9.33. Graphs signals (Problem 2)

3. Represent the Laplacian matrix of an undirected graph in terms of impulses defined on the graph.

4. Represent a signal f = [3, 1, 5, − 2, 1]T defined on graph ?, shown in Figure 9.32, as a linear combination of the eigenvectors of the graph Laplacian.

![]() 5. The oscillations in a signal lying on a network can also be quantified by the number of zero crossings in the signal values. If there is an edge between nodes i and j and there is a change in the sign of the signal values at nodes i and j, then it is counted as one zero crossing. Create an undirected random network with 300 nodes by adding an edge between any two nodes with a probability of 0.15.

5. The oscillations in a signal lying on a network can also be quantified by the number of zero crossings in the signal values. If there is an edge between nodes i and j and there is a change in the sign of the signal values at nodes i and j, then it is counted as one zero crossing. Create an undirected random network with 300 nodes by adding an edge between any two nodes with a probability of 0.15.

(a) Plot the number of zero crossings in the eigenvectors of the Laplacian matrix with respect to the corresponding eigenvalues. Comment on your observations and explain the frequency ordering.

(b) Repeat part (a) for the normalized Laplacian matrix of the network.

6. Consider two graphs ?1 and ?2 having 20 nodes each. Both of the graphs have a common Laplacian eigenvalue 4.5, which also is the largest Laplacian eigenvalue of ?2. Is it possible to find a signal defined on graph ?2 that has a value greater than 2-Dirichlet form of the eigenvector corresponding to the eigenvalue 4.5? Is it possible to find such signal defined on graph ?2 ?

7. If all the edge weights of graph ? shown in Figure 9.32 are doubled, what effect will it have on the following?

(a) The graph frequencies.

(b) The graph harmonics.

(c) The GFT coefficients of a graph signal.

What if all the edge weights are halved?

8. Represent the graph signal f shown in Figure 9.34 as a linear combination of impulses, and subsequently find the GFT vector of the signal. The Laplacian eigenvector matrix of the graph is

?=[?0?1?2?3]=[0.44720.43750.703100.33800.44720.2560−0.24220.7071−0.41930.44720.2560−0.2422−0.7071−0.41930.4472−0.1380−0.536200.70240.4472−0.81150.31750−0.2018].

Figure 9.34. Graph G (Problems 8 and 9)

9. For the graph signal shown in Figure 9.34, compute the gradient vectors at each node. What is the total variation of the graph signal?

10. Consider graph ? shown in Figure 9.32. Draw a graph ?′ by interchanging the vertex labels of nodes 2 and 4. Calculate and plot the following for new graph ?′ :

(a) Harmonics of the graph.

(b) GFT vector of graph signal f = [3, 1, 5, − 2, 1]T.

Compare the results with that of graph ?.

11. For an undirected graph with the frequencies λ = {0, 1, 2, 4, 5} and the corresponding eigenvector matrix

?=[0.44720.288700.81650.22360.44720.2887−0.7071−0.40820.22360.44720.28870.7071−0.40820.22360.4472000−0.89440.4472−0.8660000.2236],

calculate the resulting vector when the graph signal f = [0, 1, −2, 0, 1]T is operated on by the graph Laplacian; that is, find Lf.

Hint: Represent f as linear combination of the Laplacian eigenvectors.

12. Consider a continuous kernel 2e−5λ in graph spectral domain. Corresponding to this kernel, plot the graph signal with its structure being the graph shown in Figure 9.32. For the obtained graph signal, what is the total variation?

Repeat the same for a kernel 2e2λ. What is the total variation for this graph signal? Compare it with the previous result.

13. For the graph shown in Figure 9.32, find a low-pass linear graph filter matrix H that passes frequencies up to 3 and suppresses all the frequency components above 3. For an input graph signal f = [1 , −4, − 6, 2, − 1]T to the filter, draw the output in the graph spectral domain.

14. Compute and plot the convolution product of two graph signals f = [1, 3, −3, 0, 8]T and g = [0, 1, −2, 4, 2]T defined on graph G shown in Figure 9.32.

15. Show that the graph convolution product satisfies the following properties:

(a) It is commutative, that is, f ∗ g = g ∗ f.

(b) It is distributive, that is, f ∗ (g + h) = f ∗ g + f ∗ h.

(c) It is associative, that is, f ∗ (g ∗ h) = (f ∗ g) ∗ h.

(d) L(f ∗ g) = f ∗ (Lg) = (Lf) ∗ g.

16. (a) Prove that the operator LK is localized within K hops at each node, that is, (LK)ij = 0for dG (i, j) > K, where L is the Laplacian matrix of a graph ? and dG (i, j) is the hop distance between nodes i and j. Consequently, show that a polynomial of order K in the Laplacian matrix L is also localized within K hops at each node.

(b) A signal f = [3, 0, 2, 4, 0, 1, 2, 3, 5]T is defined on the graph ? as shown in Figure 9.35. The edge weights a and b are unknown. Consider two polynomial filters

Figure 9.35. Graph G for Problem 16(b)

and

(i) What is the sum of the elements of each row of H1 and H2?