Chapter 10

Graph Signal Processing Approaches

The last chapter presented extension of classical signal processing operations such as convolution, translation, filtering, and modulation to the signals supported by complex networks. These operators were defined through graph Fourier transform (GFT). The GFT was defined by considering the eigenvectors of the (sym-metric) graph Laplacian matrix as the graph harmonics. In this approach to graph signal processing (GSP), the graph Laplacian played a fundamental role. However, GSP is not limited to the approach based on the graph Laplacian. There exist various approaches for GSP that define the GFT differently. For example, discrete signal processing on graphs (DSPG) approach is equipped with the theory of linear shift invariant (LSI) graph filters and defines the GFT such that the graph Fourier bases are the eigenfunctions of LSI graph filters. The GFT aimed at frequency analysis of graph signals facilitates efficient handling of the data and remains at the heart of every GSP approach. In this chapter, we discuss some of the existing approaches for GSP.

10.1 Introduction

There exist two major approaches for GSP: (i) GSP based on Laplacian and (ii) the DSPG framework.

One of the approaches for GSP is the Laplacian-based approach, which was discussed in Chapter 9. In this approach, the Laplacian matrix of the graph plays a fundamental role. The eigendecomposition of the (undirected) graph Laplacian was used to define the GFT, and Laplacian quadratic form was used to identify low and high frequencies. Basic operators such as convolution, translation, modulation, and dilation were defined through GFT. Moreover, windowed graph Fourier transform (WGFT) was also defined for analyzing local frequency components in a graph signal. WGFT was defined through generalized translation and modulation operators. The drawback of this approach is that it is limited to undirected graphs with positive edge weights.

The second approach, the DSPG framework [177][178] is rooted in the algebraic signal processing theory. In this framework, a shift operator is defined on the graph and plays a fundamental role. Based on the shift invariance, the concept of LSI filtering has been developed. A linear graph filter, which is a matrix operator, is called shift invariant if shifting and filtering operations can be done in any order without altering the filter output. Furthermore, to define the GFT, the eigenvectors of a graph structure matrix are used as the graph harmonics, and the corresponding eigenvalues act as the graph frequencies. The high and low frequencies of the graph are identified through the graph total variation of the eigenvectors. The total variation is defined with the help of the shift operator. This approach is applicable to undirected as well as directed graphs with real or complex edge weights.

10.2 Graph Signal Processing Based on Laplacian Matrix

The Laplacian-based approach is limited to the analysis of graph signals lying on undirected graphs with real nonnegative weights. In this framework, various transforms including GFT, WGFT, spectral graph wavelet transform (SGWT), and wavelet filter banks have been introduced. Among these transforms, GFT remains central because it provides frequency interpretation of a graph signal and, therefore, other transforms have been derived from it. In GFT, a graph signal is represented as a linear combination of the eigenvectors of the graph Laplacian matrix L that is assumed to be symmetric and thus constitutes a complete set of orthonormal real eigenvectors. The eigenvectors act as the graph harmonics, the corresponding (real) eigenvalues of L act as the graph frequencies. Mathematically, GFT of a graph signal f is defined as ^?=?T?

10.3 DSPG Framework

The roots of the DSPG approach lie in the algebraic signal processing (ASP) theory [179], [180]. The ASP theory is a formal, algebraic approach to analyze data indexed by special types of line graphs and lattices. The theory uses an algebraic representation of signals and filters as polynomials to derive fundamental signal processing concepts such as shifting, filters, z-transform, spectrum, and Fourier transform. ASP theory was also extended to multidimensional signals and nearest neighbor graphs and was applied in signal compression. The DSPG framework is a generalization of ASP to signals on arbitrary graphs. In this chapter, the ASP theory is not discussed in detail; the DSPG framework is self-contained and can be understood without the details of ASP theory. Also, to keep the explanation simple, we have avoided the algebraic model in our presentation of the framework. Interested readers can refer to the research papers.

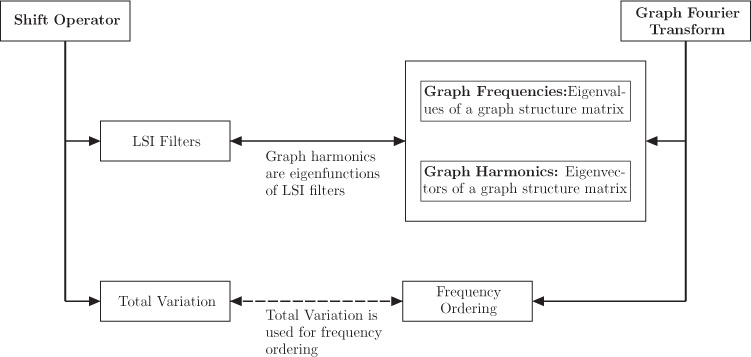

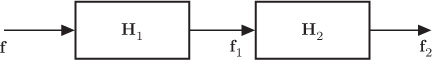

The DSPG framework has been built on a graph shift operator. The relationship between the concepts developed under DSPG is shown in Figure 10.1. The concept of LSI filters is extended to graph settings through the shift operator. Further to define the GFT, the graph Fourier bases are selected such that they are eigenfunctions of LSI filters. Based on the selection of shift operator, one can choose a graph representation (structure) matrix whose eigendecomposition is used to define the GFT. The eigenvalues of the graph representation matrix are treated as graph frequencies and the corresponding eigenvectors as graph harmonics. To identify the low and high frequencies, a quantity called total variation (TV) is defined that quantifies variations in a graph signal. The shift operator is used to define TV on graphs, which is subsequently utilized for ordering of graph frequencies. Based on the TV values of the eigenvectors of the graph representation matrix, the low and high frequencies are identified. Eigenvectors with low TV value correspond to low frequencies, and vice versa.

Figure 10.1. Relationship between different concepts under the DSPG framework

10.3.1 Linear Graph Filters and Shift Invariance

Filtering is a fundamental operation for processing of graph signals. A linear graph filter is characterized by a matrix ?∈ℂN×N

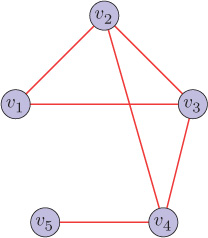

for some scalars a and b. Figure 10.2 shows a graph filter, where fin is the input to the filter H and fout is the filtered output.

Figure 10.2. A graph filter

In DSPG, the shift operator S is an elementary linear filter. Using the shift operator, the classical theory of LSI filters can be extended to graph signals. A linear graph filter is called shift invariant if shifting and filtering operations can be done in any order without changing the filter output. In other words, a filter H is shift invariant if the output to the shifted input is equal to the shifted output. That is, the filter H is shift invariant if it satisfies

Therefore, for a shift invariant filter, the shift operator and the filter matrix should commute, that is,

Note that not every linear filter can be shift invariant.

Eigenfunctions of LSI Filters

If the output of an LSI graph filter is only a scaled version of the input, the input is called an eigenfunction of the LSI filter. Consider a graph signal fin as input to an LSI filter H. If output of the filter is only a scaled version of the input, that is, fout = Hfin = αfin for some scalar α, then the input is an eigenfunction of the filter.

If the input to an LSI filter is represented as a linear combination of its eigenfunctions, then the output can also be represented as a linear combination of the same eigenfunctions. Therefore, representing a graph signal as a linear combination of eigenfunctions of an LSI filter facilitates processing tasks to a great extent. The GFT is defined by using a basis such that they are eigenfunctions of LSI graph filters.

The shift operator is the basic building block of DSPG. There are two popular choices of shift operator. One choice is the weight matrix W of the graph. Having the weight matrix as a shift operator, polynomials in the weight matrix result in LSI graph filters. As a result, the eigenvectors of the weight matrix become eigenfunctions of LSI filters and can be used as graph Fourier basis. Therefore, the weight matrix itself is used as the graph representation matrix. The second choice involves the directed Laplacian matrix L (see Section 10.5.1), and consequently, LSI graph filters turns out to be polynomials in the directed Laplacian matrix. Therefore, the directed Laplacian matrix is used as the graph representation matrix whose eigendecomposition is used for defining the GFT. Based on these two choices of the shift operator, the DSPG frameworks are presented below.

10.4 DSPG Framework Based on Weight Matrix

As discussed earlier, the DSPG framework is built on a shift operator. Now we elaborate the concepts of LSI filtering, GFT, and total variation when weight matrix W of the graph is chosen as a shift operator.

10.4.1 Shift Operator

In classical DSP, shift is an operator that delays a discrete-time signal by one sample. Consider a discrete-time finite duration periodic signal (with period of five samples) x = [ 9 7 5 0 6]T shown in Figure 10.3(a). The corresponding graph, that is, the support of the signal, is shown in Figure 10.3(b). The weight matrix of this five-node graph1 can be found as follows:

Figure 10.3. Illustration of shifting in discrete-time signals

Shifting the signal x by one unit to the right results in the signal ̃?=[69750]T

̃?=??=??=[0000110000010000010000010][97506]=[69750],

where S = W is the shift operator (matrix).

The notion of shift in a finite, periodic time series can be extended to arbitrary graphs, and analogously, the weight matrix of the graph can be used as a shift operator for a graph signal. Therefore, the shifted version of a graph signal f can be calculated as

where W is the weight matrix of the graph. The shift operator is an elementary graph filter, whose output value at vertex i is the linear combination of the input signal values at the neighboring nodes of i. Moreover, we shall see next that LSI filters are polynomials in the shift operator, or in this case, polynomials in the weight matrix.

10.4.2 Linear Shift Invariant Graph Filters

A linear graph filter is called shift invariant if shifting and filtering operations can be done in any order without changing the filter output. Having the weight matrix as a shift operator, a linear filter H will be shift invariant if it satisfies

The condition for a linear filter to be shift invariant is given by Theorem 10.1.

Multiplying W by a nonnegative constant does not change the properties of graph filters. Therefore, one can normalize the graph shift without any complications. The normalized graph shift matrix can be defined as

where σmax is the eigenvalue of W with largest amplitude.

10.4.3 Total Variation

The TV of a graph signal is a measure of total amplitude oscillations in the signal values with respect to the graph. In DSPG, TV of a signal with respect to a graph is defined for the purpose of frequency ordering.

In classical signal processing, TV has been utilized in solving a number of problems, particularly in regularization techniques for solving inverse problems. TV-based regularization techniques have been extremely useful in image processing applications such as image denoising, restoration, and deconvolution [181], [182], [183].

For time series and image signals, TV is defined as ℓ1-norm2 of the derivative. Extending this definition to graph settings, TV of a graph signal f with respect to the graph ?

where ∇i(f) is the derivative of the graph signal f at vertex i. Analogous to classical signal processing, where the derivative of a discrete-time signal x at time m is defined as ∇m(x) = x(m) − x(m − 1), the derivative of a graph signal f at vertex i can be defined as the difference between the values of the original graph signal f and its shifted version at vertex i:

where ̃?=??

(10.4.8) TV?(f)=NΣi=1|f(i)−˜f(i)|=||˜f−˜f||1=||f−Wnormf||1,

where Wnorm is the normalized shift operator. Note that a high TV value indicates that the oscillations in the signal values are large with respect to the underlying graph, and vice versa.

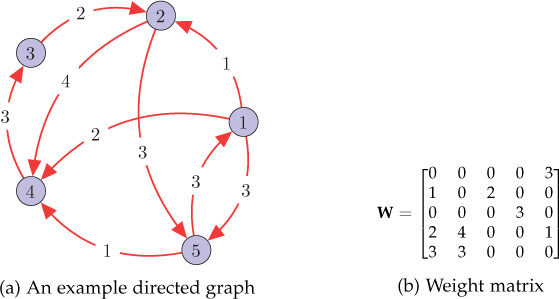

Example

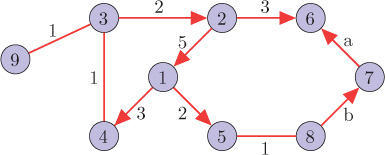

Consider the directed graph shown in Figure 10.4(a) supporting a graph signal f = [ 1 0 3 1 −2]T. The shifted graph signal is

Figure 10.4. Total variation example: a directed graph and its weight matrix

̃?=?norm?=14.07[0000310200000302400133000][1031−2]=[−1.471.720.7400.74],

and the total variation of graph signal f with respect to the underlying graph can be calculated as

TV?(?)=||?−̃?||1=10.19.

10.4.4 Graph Fourier Transform

In the Laplacian-based approach, the GFT was defined through the eigendecom-position of the (symmetric) Laplacian matrix. The eigenvalues of the Laplacian matrix were treated as the graph frequencies, and the eigenvectors of the Laplacian were used as the graph Fourier basis. The analogy was derived from the classical Fourier transform through the fact that the complex exponentials act as the eigenfunctions to the 1-D Laplacian operator. Subsequently, the eigenvectors of the Laplacian, or in other words, the eigenfunctions of the graph Laplacian, were treated as the graph Fourier basis. Furthermore, the graph frequencies were ordered based on the quadratic form of the Laplacian, which resulted in a natural frequency order, that is, small eigenvalues as low frequencies, and vice versa. However, in the Laplacian-based approach, the GFT was limited to the undirected graphs with positive edge weights.

In DSPG, the GFT is defined through the concept of LSI filtering. It makes analogy from the classical Fourier transform from the fact that the classical Fourier bases are invariant to LSI filtering. Thus, to define the GFT, we can use such bases that are invariant to LSI graph filters. From Theorem 10.1, it can be noted that the LSI filters are polynomials in the shift operator, which is the weight matrix of the graph. Therefore, the eigenvectors of the weight matrix of the graph are eigenfunctions of LSI filters and can be used as a graph Fourier basis. Moreover, the corresponding eigenvalues act as graph frequencies.

Assume that the weight matrix of the graph W is diagonalizable.3 Let ?=[?0…?N−1]∈ℂN×N

The graph transform decomposes a graph signal in terms of the graph Fourier basis, that is, the eigenvectors of W. Hence the GFT of a graph signal f is defined as

The value ˆf(n)

The inverse graph Fourier transform (IGFT), which reconstructs the signal back from its graph Fourier transform coefficients, is given by

The GFT defined under DSPG is an invertible transform, but it does not follow the Parseval’s theorem, which states that the signal energy in the spatial domain is equal to the energy in the frequency domain.

Frequency Ordering

Having defined the GFT, one needs to order the graph frequencies to identify the low and high frequencies of a graph. For this purpose, TV, defined in Section 10.4.3, is utilized. The graph frequencies are ordered based on the TV values of the eigenvectors of W. The eigenvalues corresponding to the eigenvectors with small TV are called low frequencies, and vice versa. Frequency ordering is established by Theorem 10.2.

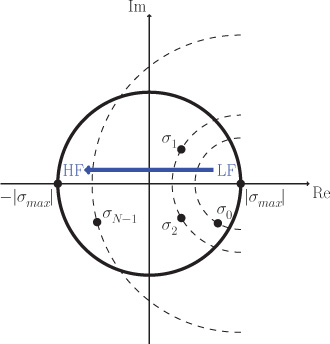

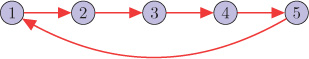

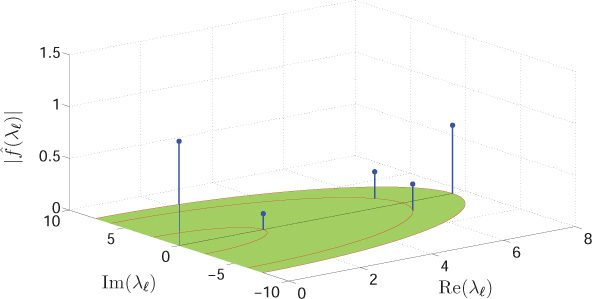

The frequency ordering for weight matrices with complex spectra is visualized in Figure 10.5. In the complex frequency plane, as one moves away from the eigenvalue with maximum absolute value, the eigenvalues correspond to higher frequencies.

Figure 10.5. Frequency ordering from low frequencies to high frequencies. As one moves away from the eigenvalue with maximum absolute value in the complex frequency plane, the eigenvalues correspond to higher frequencies because the TVs of the corresponding eigenvectors increase.

Example

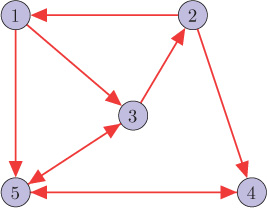

Consider the directed graph shown in Figure 10.6(a). The eigendecomposition of its weight matrix is W = VΣV−1 , where V and Σ are found to be

Figure 10.6. A directed graph and its weight matrix

(10.4.13) ?=[0.36−0.560.26+0.18j0.26−0.18j−0.750.300.44−0.21−0.45j−0.21+0.45j0.250.440.590.550.550.060.590.27−0.28+0.43j−0.28−0.43j0.050.49−0.260.27+0.11j0.27−0.11j0.6]

(10.4.14) ?=[4.07000001.3900000−1.51+2.35j00000−1.51−2.35j00000−2.44].

Observe the presence of two complex frequencies in the graph spectrum: −1.51 ± 2.35j, where j is the unit imaginary number. From frequency ordering theorem, it is clear that σmax = 4.07 is the lowest graph frequency, while σ = −2.44 is the highest graph frequency. In the matrix V, the graph Fourier bases are ordered from low to high frequencies where the first column corresponds to the lowest frequency.

Consider a graph signal f = [ 1 0 3 1 −2]T supported by the graph shown in Figure 10.6(a). Its GFT can be computed as

(10.4.15) ^?=?−1?=[0.752.601.15−0.45j1.15+0.45j−1.93].

Although DSPG based on a weight matrix is applicable to directed graphs with negative or complex edge weights as well, it does not provide natural intuition of frequency. The eigenvalue with maximum absolute value σmax acts as the lowest frequency, and as one moves away from this eigenvalue in the complex frequency plane, the frequency increases. Thus, frequency order is not natural and there is an overhead of frequency ordering as well. Also, the interpretation of frequency is not intuitive—for example, in general, a constant graph signal results in lowas well as high-frequency components in the spectral domain.

10.4.5 Frequency Response of LSI Graph Filters

In Section 10.4.2, we discussed LSI graph filters, which are polynomials in the weight matrix W. However, the filters were discussed only in the vertex domain. In this section, frequency domain representation of LSI graph filters is discussed.

From Equations (10.4.4) and (10.4.9), the output of an LSI graph filter H can be written as

where

is known as the frequency response of the filter at frequency σℓ. Given the frequency response of a filter, finding the filter coefficients is a graph filter design problem.

10.5 DSPG Framework Based on Directed Laplacian

As discussed earlier, selection of a shift operator is key to the DSPG framework. The directed Laplacian can also be used to derive a shift operator, and as a result, LSI graph filters become polynomials in the directed Laplacian [184]. Subsequently, the directed Laplacian is used as the graph structure matrix whose eigendecomposition is considered as the graph Fourier basis. In contrast to weight matrix–based DSPG, the difference in frequency ordering and interpretation becomes eventual.

10.5.1 Directed Laplacian

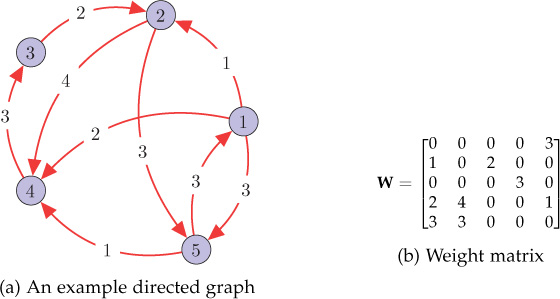

Directed Laplacian is the building block of the DSPG framework. The definition of graph Laplacian for undirected graphs, which is a difference operator L = D − W, can easily be extended to directed graphs. However, in the case of directed graphs (or digraphs), the weight matrix W of a graph is not symmetric. In addition, the degree of a vertex can be defined in two ways—in-degree and out-degree. In-degree of a node i is estimated as dini=∑Nj=1wij

Figure 10.7. A directed graph and the corresponding matrices

The directed Laplacian matrix L of a directed graph is defined as

where ?in=diag({dini}Ni=1)

There also exist a few other definitions of graph Laplacian (normalized as well as combinatorial) for directed graphs. However, selection of the directed Laplacian, as described above, results in a simple and straightforward analysis.

10.5.2 Shift Operator

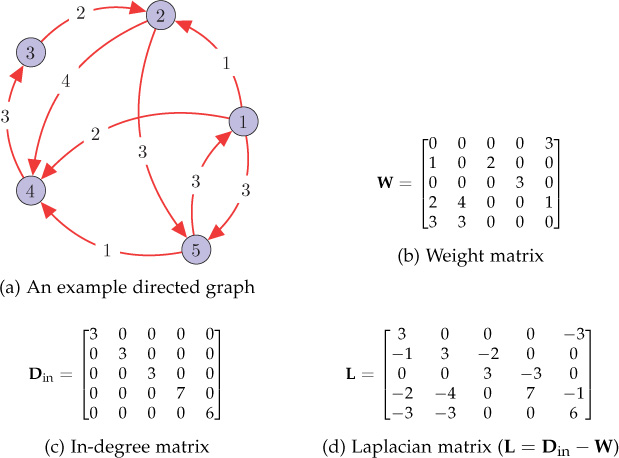

The directed Laplacian matrix can also be used to derive the shift operator. Similar to the discussion in Section 10.4.1, the shift operator is identified from the graph structure corresponding to a discrete-time periodic signal and then extended to arbitrary graphs.

A finite duration periodic discrete-time signal can be thought of as a graph signal lying on a directed cyclic graph shown in Figure 10.8. Indeed, Figure 10.8 is the support of a periodic time series having a period of five samples. The directed Laplacian matrix of the graph is

Figure 10.8. A directed cyclic (ring) graph of five nodes. This graph is the underlying structure of a finite duration periodic (with period five) discrete-time signal. All the edges have unit weights.

Consider a discrete-time finite duration periodic signal x = [ 9 7 1 0 6]T defined on the graph shown in Figure 10.8. Shifting the signal x by one unit to the right results in the signal ̃?=[69710]T

̃?=??=??=[0000110000010000010000010][97506]=[69750],

where S = (I − L) is treated as the shift operator (matrix).

This notion of shift can be extended to arbitrary graphs and consequently can be used S = (I − L) as the shift operator for graph signals. Hence, the shifted version of a graph signal f can be calculated as

Recall that shift operation can also be achieved by using the weight matrix (Section 10.4). However, selecting S = (I − L) as the shift operator results in a better and simpler frequency analysis of graph signals, which will become evident in the following sections.

10.5.3 Linear Shift Invariant Graph Filters

It was proved in the previous section that when weight matrix W is chosen as the shift operator, LSI graph filters are polynomials in W. However, when I − L is chosen as the shift operator, an LSI graph filter turns out to be a polynomial in the directed Laplacian L instead of W. It is proved by Theorem 10.3.

Considering a 2-tap graph filter and substituting h0 = 1 and h1 = −1 in Equation (10.5.4), we get H = S = I − L, which is the shift operator discussed in Section 10.5.2. Thus, the shift operator is a first-order LSI filter. Also, the graph Fourier bases, which are the Jordan eigenvectors of L, are the eigenfunctions of an LSI filter described by Equation (10.5.4).

10.5.4 Total Variation

As discussed earlier, the TV of a graph signal is a measure of total amplitude oscillations in the signal values with respect to the graph. For time series and image signals, TV is defined as ℓ1-norm of the signal derivative. This definition is extended to graph settings, and subsequently, the TV of a graph signal f with respect to the graph ?

where ∇i(f) is the derivative of the graph signal f at vertex i. Analogous to classical signal processing, where the derivative of a discrete-time signal x at time m is defined as ∇m(x) = x(m) − x(m − 1), the derivative of a graph signal f at vertex i can be defined as the difference between the values of the original graph signal f and its shifted version at vertex i:

From Equation (10.5.3), (10.5.5), and (10.5.6), we have

Observe that the quantity Lf at node i is the sum of the weighted differences (weighted by corresponding edge weights) between the value of f at node i and values at the neighboring nodes. In other words, (Lf)(i) = ∇i(f) provides a measure of variations in the signal values as one moves from node i to its adjacent nodes. Thus, ℓ1-norm of the quantity Lf can be interpreted as the absolute sum of the local variations in f. This definition of TV is intuitive, as opposed to the one defined in DSPG which results in a non-zero TV value for a constant graph signal. TV given by Equation (10.5.7) will be utilized to estimate variations of the graph Fourier basis and subsequently to identify low and high frequencies of a graph.

10.5.5 Graph Fourier Transform Based on Directed Laplacian

Fourier-like analysis of graph signals provides a means to analyze structured data in the frequency (spectral) domain. In the classical Fourier transform, the complex exponentials are treated as the Fourier basis. The existing Laplacian-based method defines the GFT by making analogy from the classical Fourier transform in a sense that the complex exponentials are the eigenfunctions of the 1-D Laplace operator. Therefore, analogously, the eigenvectors of the graph Laplacian (symmetric) are used as the graph harmonics. On the other hand, DSPG derives analogy from the perspective of LSI filtering. In DSPG, LSI graph filters are polynomials in the graph weight matrix W, and as a result, the eigenvectors of W become the eigenfunctions of LSI graph filters. Analogous to the fact that the complex exponentials are invariant to LSI filtering in classical signal processing, the eigenvectors of W are utilized as the graph Fourier bases.

As discussed earlier, DSPG derives analogy from the perspective of LSI filtering in the sense that complex exponentials (classical Fourier basis) are eigenfunctions of linear time invariant (LTI) filters. Now that LSI graph filters are polynomials in the directed Laplacian matrix L (Theorem 10.3), the eigenvectors of L can be used as graph Fourier bases because they are eigenfunctions of LSI graph filters. Using Jordan decomposition, the graph Laplacian is decomposed as

where J, known as the Jordan matrix, is a block diagonal matrix similar to L and the Jordan eigenvectors of L constitute the columns of V. Now, GFT of a graph signal f can be defined as

Here, V is treated as the graph Fourier matrix whose columns constitute the graph Fourier basis. IGFT can be calculated as

In this definition of GFT, the eigenvalues of the graph Laplacian act as the graph frequencies and the corresponding Jordan eigenvectors act as the graph harmonics. The eigenvalues with small absolute value correspond to low frequencies, and vice versa. Proof of this is provided later in this section (see Theorem 10.4). Thus, the frequency order turns out to be natural.

Now, we discuss two special cases: (i) when the directed Laplacian matrix is diagonalizable and (ii) undirected graphs.

Diagonalizable Laplacian Matrix

When the directed Laplacian is diagonalizable, Equation (10.5.8) is reduced to

Here, ?∈ℂN×N

Undirected Graphs

For an undirected graph with real weights, the directed Laplacian matrix L is real and symmetric. As a result, the eigenvalues of L turn out to be real and L constitutes an orthonormal set of eigenvectors. Hence, the Jordan form of the Laplacian matrix for undirected graphs can be written as

where VT = V−1 , because the eigenvectors of L are orthogonal in the undirected case. Consequently, the GFT of a signal f can be given as ^?=?T?

Frequency Ordering

Having defined the GFT based on directed Laplacian, one needs to identify which eigenvalues correspond to low or high frequencies. The definition of TV given by Equation (10.5.7) is utilized to quantify oscillations in the graph harmonics and subsequently to order the frequencies. Analogous to classical signal processing, the eigenvalues are labeled as low frequencies for which the corresponding proper eigenvectors have small variations, and vice versa. The frequency ordering is established by Theorem 10.4.

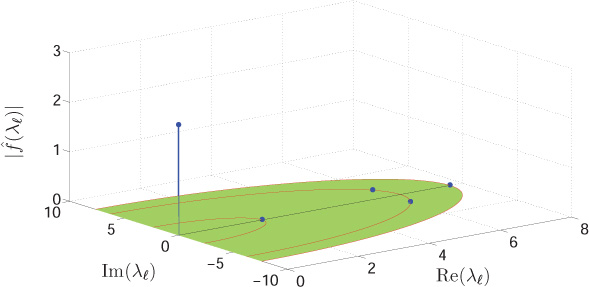

Figure 10.9 shows the visualization of frequency ordering in the complex frequency plane, where λ0 = 0 corresponds to zero frequency and, as one moves away from the origin, the eigenvalues correspond to higher frequencies. Note that the TV of a proper eigenvector is directly proportional to the absolute value of the corresponding eigenvalue. Therefore, all the proper eigenvectors corresponding to the eigenvalues having equal absolute value will have the same TV. For example, in Figure 10.9(a) and (b), the TV values of eigenvectors corresponding to eigenvalues λ1, λ2, and λ3 are equal. As a result, distinct eigenvalues may sometimes yield exactly same TV. For this reason, sometimes frequency ordering is not unique. However, if all the eigenvalues are real, the frequency ordering is guaranteed to be unique. Figure 10.9(c) shows the ordering in the case of an undirected graph with real edge weights. In this case, the directed Laplacian is symmetric and, therefore, the graph frequencies are real. Moreover, if an undirected graph has real and nonnegative edge weights, the directed Laplacian will be a symmetric and positive semidefinite matrix. Frequency ordering visualization for this case is shown in Figure 10.9(d).

Figure 10.9. Frequency ordering from low frequencies to high frequencies. As one moves away from the origin (zero frequency) in the complex frequency plane, the eigenvalues correspond to higher frequencies because the TVs of the corresponding eigenvectors increase.

Example

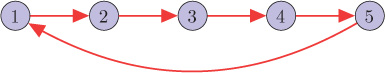

Let us consider a directed graph, shown in Figure 10.10(a). Performing Jordan decomposition of the Laplacian matrix, the Fourier matrix V and Jordan matrix J are as follows:

Figure 10.10. A directed graph and its directed Laplacian matrix

?=[0.4470.680−0.232−0.134j−0.232+0.134j−0.5350.447−0.5020.232+0.312j0.232−0.312j0.0800.447−0.502−0.502−0.201j−0.502+0.201j0.0800.447−0.1080.618−0.089j0.618+0.089j−0.1250.4470.1460.3090.3090.828]

?=[0000002.354000006.000−1.732j000006.000+1.732j000007.646]

The diagonal entries in J are the eigenvalues of the Laplacian matrix and constitute the graph spectrum. Eigenvalue λ = 7.646 corresponds to the highest frequency of the graph. Also note that the TVs of the eigenvectors corresponding to the frequencies λ = 6 − 1.732j and λ = 6 + 1.732j are equal because both the frequencies have the same absolute value. The magnitudes of the GFT coefficients of the graph signal f = [ 0.12 0.38 0.81 0.24 0.88]T are plotted in Figure 10.11.

Figure 10.11. Magnitude spectrum of the graph signal f = [ 0.12 0.38 0.81 0.24 0.88]T defined on the graph shown in Figure 10.10(a)

Concept of Zero Frequency

The Jordan eigenvector corresponding to the zero eigenvalue is given as ?0=1√N[11…1]T

Figure 10.12. Magnitude spectrum of a constant signal f = [ 1 1 1 1 1]T defined on the graph shown in Figure 10.10(a)

One can observe the presence of only a zero frequency component in the spectrum of a constant graph signal. This is in agreement with the intuition that the variation in a constant graph signal is zero as one travels from one node to another node connected by a directed edge. In contrast, the GFT defined in DSPG fails to give this basic intuition.

An important point worth mentioning here is that same order of frequency can be achieved, as given by Theorem 10.4, even if quadratic form (2-Dirichlet form) is used in place of TV. p-Dirichlet form of a signal f is defined as

Putting p = 2 in the above equation, we get 2-Dirichlet form:

Substituting ∇i(f) from Equation (10.5.6), we have

(10.5.17) S2(f)=12NΣi=1|f(i)−˜f(i)|2=12||f−˜f||22=12||Lf||22.

Now, using the above equation, quadratic form of a proper eigenvector vr corresponding to frequency λr can be calculated as S2(?r)=12||??r||22=12||λr?r||22=12|λr|2||?r||22

In other words, 2-Dirichlet form of a graph harmonic is proportional to the square of the corresponding frequency amplitude, and hence, the frequency ordering based on the quadratic form will be same as given by Theorem 10.4.

From the above discussion, it can be argued that GFT based on directed Laplacian provides natural intuition of frequency. Therefore, it allows extension of SGWT to directed graphs. SGWT is discussed in Chapter 11.

10.5.6 Frequency Response of LSI Graph Filters

Filtering can be characterized in the frequency domain using GFT. Assume that L is diagonalizable as in Equation (10.5.11). From Equation (10.5.4), the output fout of an LSI graph filter to an input graph signal fin is

where h(?)=diag{h(λ0),h(λ1),…,h(λN−1)}

To put Equation (10.5.20) in other words, the filter output in the frequency domain is equivalent to the element-wise multiplication of the input signal spectrum and the diagonal entries of h(Λ). Here, h(Λ) is known as the frequency response of the LSI graph filter. This multiplication in the graph spectral domain is a generalization of the classical convolution theorem4 to graph settings.

10.6 Comparison of Graph Signal Processing Approaches

Table 10.1 compares various features of the two approaches of GSP. The Laplacian-based approach is limited to undirected graphs, whereas DSPG is applicable to directed graphs. Moreover, DSPG provides the theory of LSI similar to the one existing in classical signal processing. The key to the DSPG frameworks is the shift operator. Depending on the choice of the shift operator, the structure matrix of the graph is either the weight matrix or the directed Laplacian matrix. Frequency interpretation and ordering is natural if a directed Laplacian matrix is used as the graph representation matrix under the DSPG framework.

Table 10.1. Comparison of the graph signal processing frameworks

GSP Based on Laplacian |

DSPG Framework |

||

Based on Weight Matrix |

Based on Directed Laplacian |

||

Applicability |

Undirected graphs |

Directed as well as undirected graphs |

Directed as well as undirected graphs |

Frequencies |

Eigenvalues of the Laplacian matrix (real) |

Eigenvalues of the weight matrix (complex) |

Eigenvalues of the directed Laplacian matrix (complex) |

Harmonics |

Eigenvectors of the Laplacian matrix (real) |

Jordan eigenvectors of the weight matrix (complex) |

Jordan eigenvectors of the directed Laplacian matrix (complex) |

Frequency Ordering |

Laplacian quadratic form (natural) |

Total variation (not natural) |

Total variation (natural) |

Shift Operator |

Not defined |

The weight matrix W |

Derived from the directed Laplacian matrix (I − L) |

LSI Filters |

Not applicable |

Applicable |

Applicable |

10.7 Open Research Issues

Designing dictionaries for sparsely represented graph signals is an active area of research. Dictionary learning has been widely studied in image processing, computer vision, and machine learning. The task of dictionary learning is to find a sparse representation of the training data as a linear combination of basic building blocks or atoms. In graph settings, the challenges in dictionary learning are that the dictionary should have the ability to adapt to specific signal data, it should be implementable in a computationally efficient manner, and it should incorporate the irregular graph structure into the atoms of the dictionary. Some efforts in this direction can be found in [185][186].

The Fourier transforms defined in all the above discussed approaches require full eigendecomposition of the weight matrix or the directed Laplacian matrix, computation of which is extremely costly. Development of fast algorithms for computing the transforms will help faster and efficient computation of the transforms. Some recent efforts made in this direction can be found in [171] and [172].

The eigendecomposition of the weight matrix or the directed Laplacian matrix does not form an orthogonal basis and, therefore, the signal energy is not preserved in the graph Fourier domain. Developement of orthogonal graph Fourier basis for directed graphs is an interesting area to explore. Recent efforts in this direction can be found in [187] and [188].

Development of various techniques for designing graph filters is an active area of research. The task of graph filter design is to find the coefficinets of matrix polynomials to acheive the desired graph frequency response. However, perfect implementation is not always possible. Designing more and more accurate graph filters is an open problem. Works in this direction include [189], [176], and [190].

Deriving new shift operators that are closer to the classical shift is an interesting field of research. In [191], authors proposed a set shift operators that satisfy the energy conservation property similar to its counterpart in classical signal processing. Problem 20 in the end-of-chapter exercises is based on their proposed shift operator. Moreover, in [192], the authors introduce an isometric shift operator, a matrix whose eigenvalues are derived from the graph Laplacian matrix, that also satisfies the energy conservation property.

Different approaches for sampling theory for graph signals have been proposed in [164], [173], [174], and [175]. However, the area is open for development of a simple and generalized sampling theory for data defined on complex networks.

Development of multirate signal processing tools such as filter banks and wavelets under the DSPG framework is an active area of research. Perfect reconstruction two-channel wavelet filter banks were developed in [193]. However, this approach is based on undirected graph Laplacian and is limited to undirected graphs. Some recent efforts in the direction of development of M-channel filter banks can be found in [194] and [195].

The directed Laplacian used in the existing theory is derived from the in-degree matrix. However, the directed Laplacian can also be defined as L = Dout − W, and based on that, an equivalent theory can be developed.

Data generated by complex networks is very huge. Techniques for compression of data lying on such huge graphs is an open area of research. Also, development of application-specific transforms is an active area.

10.8 Summary

This chapter presented existing approaches for graph signal processing: (1) the Laplacian-based approach and (2) the DSPG framework. The Laplacian-based framework is limited to undirected graphs with only positive edge weights. On the other hand, DSPG is applicable to directed graphs with complex edge weights as well. The DSPG framework relies on a shift operator based on which concepts, such as shift invariance, total variation, and GFT, are defined. Two popular choices of the shift operator, derived from the weight matrix and the directed Laplacian, were presented. Based on these shift operators, the DSPG framework was discussed in detail. However, one can derive other suitable shift operators and accordingly choose graph structure matrices.

Exercises

Consider the graph ?

Figure 10.13. Graph ?

1. For the graph ?

(a) Shifted version of a graph signal f = [ 2, −3, 1, 8, 0]T.

(b) Total variations of all the eigenvectors.

(c) GFT coefficients ordered from low to high frequency.

2. Considering eigenvalues of weight matrix as graph frequencies, order the following eigenvalues from low to high frequencies.

(a) 1.0264, 3.5663, −2.3504, −1.4289, −0.8134

(b) 0, 0.1668, −1.4685 − 0.0883j, −1.4685 + 0.0883j, 2.7703

(c) −0.3138 − 0.2196j, 0.6180, −1.3662 − 0.0847j, −1.6180, 2.6801 + 0.3042j

Also comment on the directivity and nature of edge weight (positive, real, or complex) of the graphs.

3. Consider a five-node directed ring graph, as shown in Figure 10.14. Identify a matrix operator using (a) the weight matrix, (b) the in-degree directed Laplacian, and (c) the out-degree directed Laplacian of the graph that shifts a signal one step left. That is, for a signal [ 1, 2, 3, 4, 5]T, the shifted version should be [ 2, 3, 4, 5, 1]T.

Figure 10.14. A directed ring graph with five nodes

4. Repeat the Problem 1 using the directed Laplacian-based approach.

5. Repeat Problem 2 if the given eigenvalues are of the directed Laplacian matrix.

![]() 6. The oscillations in a signal lying on a network can also be quantified by the number of zero crossings in the signal values. If there is a directed edge from node i to node j and there is a change in the sign of the signal values at nodes i and j, then it is counted as one zero crossing. Create a directed random network with 300 nodes by adding a directed edge between any two nodes with a probability of 0.15.

6. The oscillations in a signal lying on a network can also be quantified by the number of zero crossings in the signal values. If there is a directed edge from node i to node j and there is a change in the sign of the signal values at nodes i and j, then it is counted as one zero crossing. Create a directed random network with 300 nodes by adding a directed edge between any two nodes with a probability of 0.15.

(a) Plot the number of zero crossings separately in real and imaginary parts of the eigenvectors of the in-degree Laplacian matrix with respect to the magnitudes of the corresponding eigenvalues. Comment on your observations and explain the frequency ordering.

(b) Repeat part (a) for the weight matrix of the network.

7. Derive a shift operator by using the normalized weight matrix P = D−1/2WD−1/2 of a graph (analogy can be made from the case of discrete-time signals). Subsequently, redefine total variation and GFT.

8. For a graph signal lying on an N-node directed ring graph, considering the weight matrix as the shift operator, answer the following:

(a) Write down the TV expression for the graph Fourier basis.

(b) Identify and order the graph frequencies.

(c) Compare your results with the conventional frequencies in the DFT.

9. Prove that the GFT associated with a Cartesian product graph is given by the matrix Kronecker product of the GFTs for its factor graphs (use weight matrix as shift operator). Also comment on the spectrum of the Cartesian product graph.

Verify your results for the Cartesian product of the two graphs given in Figure 10.15. You can choose any values for the signals defined on the graphs.

Figure 10.15. Graphs for Problem 9

Hint: Refer to Section 2.5.9 for the definitions of product graphs.

10. Consider a graph signal f = [ 3, − 2, 0, 1, 6]T defined on the graph shown in Figure 10.13. Compute the outputs f1 and f2 of LSI graph filters in cascade, as shown in Figure 10.16, for the following cases:

Figure 10.16. Filters in cascade (Problem 10)

(a) H1 = 3I − 2W + W2 and H2 = I + 4W.

(b) H1 = 3I − 2L + L2 and H2 = I + 4L.

Use the vertex domain as well as the frequency domain methods to find the output graph signals and verify your results. Also, draw frequency responses of the filters. Can you find an equivalent filter H for the two filters H1 and H2 in cascade?

11. This problem is about designing LSI graph filters using least squares. An LSI graph filter can be defined through its frequency response h(σℓ) at its distinct frequencies σℓ , for ℓ = 0, 1, . . . , N − 1. In the weight matrix based DSPG framework, an LSI graph filter is a polynomial (in the weight matrix) of degree M. To construct an LSI filter with frequency response h(σℓ) = bℓ , one need to solve a system of N linear equations with M + 1 unknowns h0, h1,... , hM:

(10.8.1) h0+h1σ0+…+hLσM0=b0,h0+h1σ1+…+hLσM1=b1,⋮h0+h1σN−1+…+hLσMN−1=bN−1.

In general, N > M + 1, and therefore, the system of linear equations is overdetermined and does not have a solution. In this case, an approximate solution can be found in the least-squares sense.

Design the filters with the following specifications using least squares for the graph shown in Figure 10.17.

Figure 10.17. Graph for Problem 11

(a) A low-pass filter of order two that passes only the smallest two frequencies.

(b) A high-pass filter of order two that passes only the largest two frequencies.

(c) A band-pass filter of order two that passes only two frequency components λ2 and λ3.

![]() 12. This problem illustrates utilization of GFT in sensor malfunction detection. You will first create anomalous data and then validate the anomaly in the data by utilizing GFT. Using GSPBox (see Appendix G for additional information on GSPBox), create a 50-node random sensor network and define a signal f on the graph as

12. This problem illustrates utilization of GFT in sensor malfunction detection. You will first create anomalous data and then validate the anomaly in the data by utilizing GFT. Using GSPBox (see Appendix G for additional information on GSPBox), create a 50-node random sensor network and define a signal f on the graph as

where c is a constant and d(i, j) is the distance between nodes i and j (can be found using Dijkstra’s shortest path algorithm). This signal can be thought of as temperature values in a geographical area, since the variations in temperature values are not high if the sensors are placed densely.

(a) Plot the graph signal and its spectrum for c = 0.1. Now assume that sensors 31 and 42 are anomalous and show temperature values of zero.

(b) Plot the spectrum of this anomalous data. Can we conclude that the data generated by the sensor network is anomalous?

(c) Can we find the location of anomalous sensors using GFT? Explain why or why not.

13. (a) Prove that the operator LK is localized within K hops at each node, that is, (LK)ij = 0 for dG(i, j) > K, where L is the directed Laplacian matrix (in-degree) of a graph ?

(b) A signal f = [ 3, 0, 2, 4, 0, 1, 2, 3, 5]T is defined on the graph ?

Figure 10.18. Graph ?

and

where L is the directed Laplacian matrix (in-degree).

(i) What is the sum of the elements of each row of H1 and H2?

(ii) For filter H1, compute the filtered output at nodes 1 and 2. You should not compute the full filter matrix for finding output at a single node. Find only the corresponding row of the filter.

(iii) For filter H2, compute the filtered output at nodes 1 and 3. You should not compute the full filter matrix for finding output at a single node. Find only the corresponding row of the filter.

(iv) For filter H1, if the values of the filtered output at nodes 6 and 7 are 5 and 18, respectively, then find the edge weights a and b.

(v) Write down the spectral representations of the filters H1 and H2. How many parameters are required to characterize the filters?

14. This problem is about graph signal denoising via regularization. Usually the measurements are corrupted by noise, and the task of graph signal denoising is to recover the true graph signal from noisy measurements. Consider a noisy graph signal measurement

y = f + n,

where f is the true graph signal and n is the noise signal. The goal is to recover original signal f from noisy measurements y. In the classical signal processing, discrete-time signals and digital images are denoised via regularization, where the regularization term usually enforces smoothness. Similarly, a graph signal denoising task can be formulated as the following optimization problem:

where S2(f) is 2-Dirichlet form as given by Equation (10.5.16) and γ is the regularization constant. In the objective function, the first term minimizes the error between the true signal and the measurements, and the second term is a regularization term that enforces the smoothness. The regularization parameter γ can be tuned to control the trade-off between two terms of the objective function.

Find the closed-form solution for the denoised signal ̃?

Hint: Take the derivative with respect to f and equate to zero.

![]() 15. Consider the signal generated by Equation (10.8.2) in Problem 12 with c = 0.15. Now create noisy measurements y = f + n by considering zero mean Gaussian noise with standard deviation σ. Now use the results of Problem 14 to find the denoised signal and compute the root mean square error (RMSE) between the noiseless signal and the denoised signal for the following cases:

15. Consider the signal generated by Equation (10.8.2) in Problem 12 with c = 0.15. Now create noisy measurements y = f + n by considering zero mean Gaussian noise with standard deviation σ. Now use the results of Problem 14 to find the denoised signal and compute the root mean square error (RMSE) between the noiseless signal and the denoised signal for the following cases:

(a) σ = 1, (b) σ = 5, and (c) σ = 10.

Solve parts (a), (b), and (c) for γ = 0.01, 0.1, 1, and 10. Comment on your results for various values of noise variance σ2 and regularization constant γ.

16. This problem is about graph signal inpainting via total variation regularization. Signal inpainting is the process of estimating missing signal values from available noisy measurements. Assume that the observed graph signal is y = [y1 y2]T, where ?1∈ℝM×1

Consider y1 is noisy, that is, y1 = f1 + n1, where f1 is the true signal and n1 is noise. The inpainting problem is to find the original signal f = [f1 f2]T from the available noisy measurements y1. The true signal can be recovered by solving the following unconstrained minimization problem.

where S2(f) is 2-Dirichlet form as given by Equation (10.5.16) and γ is the regularization constant. In the objective function, the first term minimizes the error between the true signal and the measurements at known nodes, and the second term is a regularization term that enforces the smoothness.

Find the closed-form solution for the inpainted signal ̃?

(b) Considering clean (non-noisy) measurements y1, the inpainting problem can be written as

Find the closed-form solution for the inpainted signal ̃?

Hint: Use the method of Lagrange multipliers.

![]() 17. Consider the signal generated by Equation (10.8.2) in Problem 12 with c = 0.1 and denote the signal as f. Create noisy measurements y = f + n by considering zero mean Gaussian noise with standard deviation σ. Retain the signal values of y at nodes 1 to 44 and make signal values at nodes 45 to 50 to zero. Now the goal is to recover the true signal f from y, where five signal values are missing. Using the results of Problem 16, find the inpainted signal and compute the RMSE for the following cases:

17. Consider the signal generated by Equation (10.8.2) in Problem 12 with c = 0.1 and denote the signal as f. Create noisy measurements y = f + n by considering zero mean Gaussian noise with standard deviation σ. Retain the signal values of y at nodes 1 to 44 and make signal values at nodes 45 to 50 to zero. Now the goal is to recover the true signal f from y, where five signal values are missing. Using the results of Problem 16, find the inpainted signal and compute the RMSE for the following cases:

(a) σ = 0 (noiseless case), (b) σ = 1, and (c) σ = 5.

Solve parts (a), (b), and (c) for γ = 0.01, 0.1, 1, and 10. Comment on your results for various values of noise variance σ2 and regularization constant γ.

18. A graph signal is called K-bandlimited (K < N) if the first K number of GFT coefficients are non-zero and the rest are zero. Generate 2-bandlimited and 3-bandlimited signals defined on the graph shown in Figure 10.13.

![]() 19. This problem illustrates compression of graph signals using GFT. Assuming that the signal is smooth, we can store only the first K number of GFT coefficients (K < N), thus compressing the signal in frequency domain. For recovery of the signal from stored GFT coefficients, we take inverse GFT by considering the last (N − K) GFT coefficients as zero. Thus, the signal is approximated by a K-bandlimited signal while recovery. For a sufficiently smooth signal, we can achieve a good degree of compression.

19. This problem illustrates compression of graph signals using GFT. Assuming that the signal is smooth, we can store only the first K number of GFT coefficients (K < N), thus compressing the signal in frequency domain. For recovery of the signal from stored GFT coefficients, we take inverse GFT by considering the last (N − K) GFT coefficients as zero. Thus, the signal is approximated by a K-bandlimited signal while recovery. For a sufficiently smooth signal, we can achieve a good degree of compression.

Consider the signal generated by Equation (10.8.2) in Problem 12 with c = 0.05 and denote the signal as f. Now recover the signal by taking the first K number of GFT coefficients and compute the RMSE for (a) K = 20, (b) K = 30, (b) K = 40, and (d) K = 45.

Repeat (a) to (d) for signals with c = 0.1, 0.2, 1, and 5. Comment on your results for various values of K and c.

20. There can be multiple choices of shift operators other than those presented in this chapter. Unlike the classical shift, the shift operators W and (I − L) do not preserve energy of the graph signal. There also exists a shift operator [191] that preserves energy in the frequency domain. Note that energy of a signal f is ?

Assume that the weight matrix of a graph is diagonalizable and, therefore, can be decomposed as in Equation 10.4.9. Now consider a matrix Wθ = VΣθV−1 to be a shift operator. Here, Σθ = diag {σθ0, σθ1,... , σθN−1}, where σθk = ejθk and θk ∈ [ 0, 2π] is an arbitrary phase.

Express W in terms of Wθ.

Prove that the shift operator preserves energy in the frequency domain, that is, ||ˆ?nθ?||22=||^?||22

If σθk = ej2πk/N, then prove that ?Nθ?=?

21. Under the DSPG framework, the gradient of a signal at a node is a scalar value. However, let us define a vector gradient ∇if at a node i such that the jth element of the gradient vector is (∇i?)(j)=√wij(f(i)−f(j))

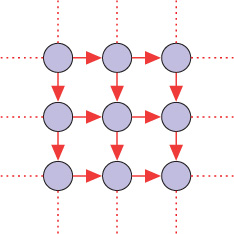

(a) An image signal can also be considered as a graph signal lying on the graph shown in Figure 10.19, where the edges are pointing rightward and downward. For an arbitrary node, what is the vector gradient at the node?

(b) Describe under what conditions on a graph signal f the relation ||∇if||1 = |(Linf)(i)| will hold? Here Lin is the directed Laplacian (in-degree) of the graph.

(c) Do you think that defining a vector gradient might be a better choice for quantifying the local variations in a graph signal and subsequently for quantifying global variation as well? Why?

Figure 10.19. Graph ?

22. Considering the gradient definition as in Problem 21, the p-Dirichlet form can be defined as

Sp(?)=1pN∑i=1||∇i(?)||p2.

Prove or disprove

S2(?)=12(?T?in?+?T?out?),

where Lin is the in-degree Laplacian matrix and Lout is the out-degree Laplacian matrix of the graph.

23. Repeat Problem 11 for the directed Laplacian based DSPG framework.

(a) Consider the filter in the frequency domain h(λℓ) = a + mλℓ, where λℓ is an eigenvalue of the (in-degree) directed Laplacian of a graph. Can you represent the filter matrix in terms of the directed Laplacian matrix? If yes, find the filter matrix H for the case of (i) a = 0.5 and m = 1, and (ii) a = 2 and m = −1. Also plot the frequency responses of the filters for the graph shown in Figure 10.13.

(b) Consider a graph filter H = 0.4I + L + 3L2. Determine if the filter H is LSI? Plot the frequency response of the filter for the graph shown in Figure 10.13.

1. For a directed graph, element wij of the weight matrix represents the edge weight directed from node j to node i (see Section 2.3.1 for details).

2. See Appendix A for deatils on norms.

3. When W is not diagonalizable, its Jordan decomposition can be used.

4. The classical convolution theorem states that convolution in the time domain is equivalent to multiplication in the frequency domain.