7

Stacks and Queues

Objectives

An introduction to the concept of a stack and to at least two ways in which

a stack can be implemented, including the Stack structure in the JCF

An introduction to the concept of a queue and to at least two ways in which

a queue can be implemented, including the Queue structure in the JCF

An introduction to the use of a stack for processing such things as HTML

or XML data that use explicit open–close tags for labeling data

An introduction to the utility of the stack structure for implementing some-

thing like reverse Polish notation, in which the stack itself replaces explicit

tags like HTML or XML or arithmetic operations and parentheses

An introduction to the use of a queue for representing first-in-first-out pro-

cessing structures that resemble checkout lines

An introduction to the heap and the priority queue as mechanisms for main-

taining a dynamic “next most important” data structure without requiring

complete sorting of the data

Key Terms

arc

binary tree

child node

complete binary tree

deque

heap

incomplete binary tree

max-heap

min-heap

node

priority queue

push-down stack

queue

reverse Polish notation

root

total order

tree

151

152 CHAPTER 7STACKS AND QUEUES

Introduction

The basic notion of a linked list uses the idea of a data item together with a link to

“the next item.” Two very useful data structures that are built on similar concepts

are the stack and the queue. In both cases, although we could use an array or an

ArrayList to implement the data structure, we will insist that the insertion and

retrieval of data follow the “next item” protocol of a linked list rather than the

random access by subscript of an array or ArrayList. At the end of this chapter,

though, we will go back to the notion of an ArrayList-based structure to implement

a priority queue.

It is important to think for a moment about the layers of concept and of imple-

mentation. In Chapter 4 we described a linked list data structure. We showed that

the concept of a linked list, in and of itself, is a useful concept for a data structure,

but we also showed how to implement a linked list using instances of nodes with

the DLL class. In Chapter 3 we described the concept of a flat file, implemented it

using an ArrayList, and mentioned that we could instead have used an array for

the implementation.

Likewise in this and subsequent chapters, you should be careful to separate the

concept of a given data structure from the means by which it is implemented. In the

ancient days of computing, using programming languages whose most sophisticated

inherent structure was an array of numbers, all data structure concepts had to be

implemented as clever uses of arrays. Although this can in principle still be done, you

should realize that this kind of programming can be error prone and tedious because

the programmer’s time is spent in details of subscripting, not in dealing with the

bigger picture. Indeed, many of the features of modern programming languages,

and much of the purpose of packages like the JCF, are specifically to eliminate

dealing repeatedly with messy details, usually subscripting details, that are easy to

think about but also easy to get wrong.

7.1 Stacks

A stack, sometimes also called a push-down stack, is a last-in-first-out (LIFO) data

structure. Many napkin dispensers in restaurants or tray dispensers in cafeterias

work as stacks. Napkins or trays are pushed into a spring-loaded dispenser through

the same slot from which they are extracted. A Pez candy dispenser

1

functions in

thesameway.

The standard stack data structure is characterized by its two required methods,

push and pop, and its one instance variable that is an attribute of the data structure,

1

These were popular in the 1950s, then disappeared, and are now once again available.

7.1 Stacks 153

a pointer called top.Thepush operation simply adds another item before the

location pointed to by top, just like putting a tray at the top of the dispenser,

and then adjusts the value of top so it now points to the new top item. The pop

operation is the reverse; one removes the item pointed to by top and adjusts the

variable to point to the new top item.

It is almost trivial, once one has written the code for a linked list, to implement

the concept of a stack using that linked list, viewing the stack data structure as

a linked list with the additional restriction that the additions and deletions are

permitted only at the head node. This is done as follows.

We already have an instance variable that “is” the top, but we called it head

in the case of a linked list. The push operation is exactly the same as the linked

list’s addAtHead method we previously implemented, so we need do no more than

create a wrapper method called push that is a pass-through to addAtHead.The

only slightly new method is pop, which we implement as a new method. We could

have done this already as a linked list method and called it deleteAtHead, but

we didn’t need a method at that time that specifically deleted from a predefined

location (namely, the head). We need that method now, and we will call it pop.

There are two auxiliary methods that we probably also ought to implement

because we are virtually certain to find them convenient if we are going to write

significant useful code using a stack. We should implement an isEmpty method

that will tell us (surprise, surprise) if the stack is empty. This could be either a

standalone method or a pass-through to an already-implemented isEmpty for the

linked list. The other method is a peek at the top entry of the stack. The peek

method would return the data item at the top of the stack but would not actually

delete it. We don’t actually have to have this. However, if our computation requires

us first to look at the top data item and then maybe but not always pop it off and

consume it, then not having a peek means that we have the extra hassle of popping

the item, finding that we don’t want to consume that data, and putting it back.

We can avoid the put-back step by implementing peek.

The UML diagram for a Stack might look as simple as Figure 7.1.

Stack

+top:DLLNode

+Stack():void

+isEmpty():boolean

+peek():DLLNode

+pop():DLLNode

+push(DLLNode):void

FIGURE 7.1 A UML diagram for a Stack class.

154 CHAPTER 7STACKS AND QUEUES

public boolean isEmpty()

{

return 'isEmpty' of the underlying linked list

}

public Record peek()

{

if(this.isEmpty)

{

return null;

}

else

{

Record rec = the 'head' record of the underlying linked list

return rec;

}

}

public Record pop(Record)

{

if(this.isEmpty)

{

throw new StackPopException("tried to pop an empty stack");

}

else

{

Record rec = the 'head' record of the underlying linked list

unlink the head record

return rec;

}

}

// CAVEAT: Note that we always assume we have enough memory to

// add to the underlying linked list. Runaway programs

// will crash ungracefully.

public void push(Record)

{

addAtHead(Record);

}

FIGURE 7.2 Code stubs for a Stack class.

Stubs of code for the necessary methods with the Record class as the data

payload would then look something like Figure 7.2, and with this as the conceptual

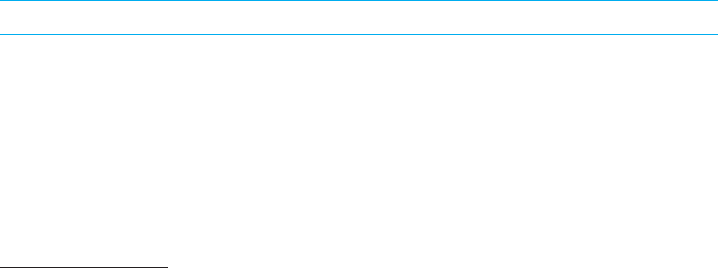

framework, Figure 7.3 shows a stack in operation.

7.1.1 Exception Handling

Just as with linked lists, we need to deal properly with the exceptions that can occur

with the methods in Figure 7.1. The isEmpty cannot generate an exception because

7.1 Stacks 155

Incoming data: 5, 2, 3, 4

Actions to be performed: push, push, pop, push

Step 1: An empty stack

top = null

Step 4: pop returns 2, and then we have

5

top

Step 2: push(5), and then we have

5

top

Step 5: push(3), and then we have

3

5

top

Step 3: push(2), and then we have

2

5

top

Step 6: push(4), and then we have

4

3

5

top

FIGURE 7.3 Inserting elements into a stack.

it returns an isEmpty from the underlying linked list. Although in a truly sophisti-

cated implementation one ought to check that sufficient memory exists to execute a

push, we generally will not assume that we are running out of memory in this text.

The peek and the push, however, could cause problems. If we try to execute a

peek on an empty list, we probably ought to return a null value and then require

the user to check for that.

Most complicated is the pop; unlike a peek issued in error, which can be writ-

ten just to return null data, the pop method is supposed to change the underlying

data structure as well as return a data value. To ensure that we crash the pro-

gram appropriately, we will issue an exception if we try to pop a stack that is

already empty.

There are two schools of thought regarding exceptions like trying to pop from an

already-empty stack. Viewing software as existing in hierarchical layers, the heart of

the religious issue here is the question of which layer will handle the exceptional case.

156 CHAPTER 7STACKS AND QUEUES

What we have provided code for in Figure 7.2 is a data structure that generates an

exception if an erroneous stack pop is issued. This will force programs to be written

so as to execute correctly under all circumstances, and a program that issued a pop

should never do so without first testing isEmpty to ensure that the pop won’t crash

the program. The test of isEmpty is a natural test in the layer of software that uses

the stack data structure, and the exception is dealt with in the next layer down

that implements the stack.

As an alternative, the layer that implements the stack could simply return a null

value from an erroneous pop and require the programmer using the stack structure

to check for nonnull data every time the pop is issued. If such a mechanism were

used, the test for a valid pop would come after the method call to pop and would

use a test for null instead of coming before the method call to pop and using a call

to isEmpty. The test would be whether or not valid data had been returned. This

would be advantangeous in one sense, in that the stack data structure was robust

under erroneous use, but it can also be considered a dangerous practice. After all,

why does the isEmpty method exist except to test for the presence of data in the

stack? From a philosophical viewpoint, the call to isEmpty is a natural call regarding

the state of the idealized version of a stack. The test for null, on the other hand,

represents a test that involves the implementation characteristics of the stack.

Regardless of which religious tenets are adhered to, they should be adhered to; in

a given program, one of the two versions should be used consistently and exclusively.

Mistakes often happen when one does a mix and match of such styles, or when one

person uses one version and a second person uses the other and they either don’t

communicate properly with each other or one has to rework the other’s code.

7.1.2 Stacks Using Nodes

A stack implemented using a linked list with nodes needs no further code to be

developed except for the new push and pop methods. The push canbedonesim-

ply as a method with a one-line body that wraps and thus renames the earlier

addAtHead method. The pop requires a slight bit of new code; our remove methods

for the linked list passed in the data payload to be searched for in a node and whose

node was to be removed. In the case of the pop, we are specifying that the node

(or rather, the data payload) first in position in the stack be removed, so there is

no contextual search through the data structure.

For completeness, we must also include the isEmpty method and a peek method

that returns the data at the top of the stack without actually popping the stack.

Done in this way, the linked list is more general than the stack because additions

to and deletions from a linked list can nominally be made anywhere in the list, but

the stack in contrast only permits changes at the top of a stack.

We emphasize yet again, because it is a very important point, that the concept of

the stack should be separated from the implementation of the stack. As a concept,

7.1 Stacks 157

the stack exists as defined, say, in the UML diagram in Figure 7.1, and a user

of the stack should be allowed to be blissfully ignorant of what lies beneath. The

implementation of a stack is what we take up next.

7.1.3 Stacks Using Arrays

Implementing a stack using an array is straightforward, and indeed was the only

method for implementing stacks using antique programming languages. There is

the usual complication, in Java and in other languages, that an array must be

preallocated with a fixed number of locations, so one does run the risk of overfilling

the stack unless error-checking code is written.

More significantly, and contrary to what might seem the initial way in which a

stack ought to be implemented, when using an array (or an ArrayList)onewants

the top variable to point to the last array location that has been filled with data,

not to the zeroth location. If one treats the zeroth location as the bottom of the

stack, then no data needs to be moved when items are popped off. If one has a

stack with subscripts 0 through 15 already used and top is 15, then one merely

increments top to 16 and pushes the item onto the stack by storing it in location

16. When this item is popped off the stack, the entry at location 16 is accessed and

top is decremented back to 15. It isn’t even necessary to get rid of the data in the

location that is now one beyond top. Done the other way around, with top always

pointing to location 0 in what might seem as the more natural way, then all data

would have to be moved with every push and pop because all the rest of the data

would have to be shifted down and back.

The same logic applies if one is using an ArrayList insteadofanarray.The

code for the push method might well look as simple as the following, assuming

that instance variables were properly declared in the class. This code checks for

an out-of-space condition; if one were using an ArrayList, this checking would be

done by the code at the next level down that implemented the ArrayList,andthe

programmer would not have to check this.

public void push(int newElement)

{

if(top >= array.length)

{

throw an exception because we have no more space

}

++top;

array[top] = newElement;

}

A stack implemented with an array is shown in Figure 7.4.

158 CHAPTER 7STACKS AND QUEUES

Step 1: Empty stack

top = *

subscript 0 1 2 3 4 5 6 7 8 9

data * * * * * * * * * *

Step 2: push(5), and then we have

top = 0

subscript 0 1 2 3 4 5 6 7 8 9

data 5 * * * * * * * * *

Step 3: push(2), and then we have

top = 1

subscript 0 1 2 3 4 5 6 7 8 9

data 5 2 * * * * * * * *

Step 4: pop returns 2, and then we have

top = 0

subscript 0 1 2 3 4 5 6 7 8 9

data 5 2 * * * * * * * *

Step 5: push(3), and then we have

top = 1

subscript 0 1 2 3 4 5 6 7 8 9

data 5 3 * * * * * * * *

Step 6: push(4), and then we have

top = 2

subscript 0 1 2 3 4 5 6 7 8 9

data 5 3 4 * * * * * * *

FIGURE 7.4 A stack implemented with an array.

7.1.4 Reverse Polish Arithmetic

One of the by-now classic uses for a stack is in the implementation of what is

called reverse Polish notation (RPN) for evaluating algebraic expressions.

2

Unlike

the standard notation for an expression, perhaps

4+

a

(2 ×

b

3) −

c

((5 +

d

7) ×

e

8)

2

It is incumbent on anyone who writes a general-purpose book that uses this expression to

point out that this has nothing to do with ethnic jokes. In fact, just the opposite is true; this

notation is a tribute to the Polish mathematician Jan Lukasiewicz who pioneered the use of this

representation.

7.1 Stacks 159

that uses nested parentheses to group subexpressions, one can write the equivalent

expression in RPN without needing parentheses. (We have labeled the operators

with subscripts so we can distinguish, for example, the first plus sign subscripted a

from the second plus sign subscripted d.) The preceding expression would become

423 ×

b

+

a

57 +

d

8 ×

e

−

c

.

This expression can be processed in the following way. We read the RPN expres-

sion left to right. If we have an operand, we push the operand onto the stack. If

we have an operation, then (in our simplified version) we know we have a binary

operation; we apply that operation to the first two operands popped off the stack

and we push the result back onto the stack.

For our example, the processing is as follows.

1. Read the 4. Since it is an operand, we push it onto the stack, which now holds

top → 4, NULL.

2. Read the 2. Since it is an operand, we push it onto the stack, which now holds

top → 2, 4, NULL.

3. Read the 3, and since it is an operand, push it onto the stack, which now holds

top → 3, 2, 4, NULL.

4. Read the ×

b

. Since this is an operation, we pop two operands (3 and 2, in that

order) off the stack, perform the multiplication to produce the product 6, and

push the result onto the stack, which now holds

top → 6, 4, NULL.

5. Read the +

a

. Since this is an operation, we pop two operands (6 and 4, in that

order) off the stack, perform the addition to produce the sum 10, and push the

result onto the stack, which now holds

top → 10 NULL.

6. Read the operand 5 and push it onto the stack, which now holds

top → 5, 10, NULL.

7. Read the operand 7 and push it onto the stack, which now holds

top → 7, 5, 10, NULL.

160 CHAPTER 7STACKS AND QUEUES

8. Read the operation +

d

, pop two operands (7 and 5) off the stack, perform the

addition, and push the result 12 onto the stack, which now holds

top → 12, 10 NULL.

9. Read the operand 8 and push it onto the stack, which now holds

top → 8, 12, 10, NULL.

10. Read the operation ×

e

, pop two operands (8 and 12) off the stack, perform the

multiplication, and push the result 96 onto the stack, which now holds

top → 96, 10 NULL.

11. Read the operation −

c

, pop two operands (96 and 10) off the stack, perform

the subtraction (and in this case we make sure to do the operation in the right

order because subtraction is not commutative), and push the result −86 onto

the stack, which now holds

top →−86 NULL.

The utility of RPN lies in the ability (which we have not shown completely) for

expressions to be written unambiguously without parentheses, and for all arithmetic

operations to be computable using a stack and operating on the two most recently

pushed operands. With RPN, all references are to data that is only a constant dis-

tance away from “here,” unlike with ordinary arithmetic expressions, in which one

might have to parse a string of unknown length in order to find a closing parenthesis.

7.2 HTML/XML and Stacks

The use of a stack for handling RPN arithmetic is in essence an implied insertion

of opening and closing parentheses to make well-formed algebraic expressions.

A very similar but more modern use for a stack would be to parse XML

(eXtensible Markup Language) or similar input and to verify that the XML expres-

sions are well formed. This has become an important part of handling documents

on the Web and elsewhere because the use of XML has standardized the form in

which documents are to be electronically stored.

An XML document might look like the text in Figure 7.5. An opening XML

token in this simplified version consists of a single tag within angle brackets and

has no spaces. A closing token has the same structure except that the character

immediately following the opening angle bracket is a forward slash. (Those who have

looked at real XML or HTML will note that other things are legal inside the angle

7.2 HTML/XML and Stacks 161

<book>

<title> Data Structures Using Java </title>

<bookinfo>

<author>

<forename> Duncan </forename>

<surname> Buell </surname>

</author>

<author>

<forename> Charles </forename>

<surname> Dickens </surname>

</author>

</bookinfo>

[MORE INPUT FOLLOWS]

</book>

FIGURE 7.5

A sample XML-like document.

brackets as modifiers to the initial tag. The attributes add complexity to the parsing

of tags but don’t really affect the idea of using a stack for this kind of processing,

so we will concentrate only on the tag and ignore the possibility of attributes.)

To be valid under modern rules it is necessary that such a document have prop-

erly nested opening and closing tags, just exactly as one would nest parentheses in

an algebraic expression. Thus, the opening <book> tag must eventually be closed

with a </book> tag, and the two <author> </author> tags enclose author infor-

mation for two authors inside the <bookinfo> </bookinfo> open–close structure.

In general, to test that an XML expression is well formed is to test that at any

given level of nesting, all tags that open a context at that level are closed at that

level before the level itself is closed. For example, the display in Figure 7.6 is well

formed, because name, number,andoffice are opened and then closed inside the

phonebook level before another opening tag is encountered.

<phonebook>

<name>

John Smith

</name>

<number>

555.1212

</number>

<office>

3A73

</office>

</phonebook>

FIGURE 7.6

A well-formed XML document.

162 CHAPTER 7STACKS AND QUEUES

<phonebook>

<name>

John Smith

<number>

555.1212

</name>

</number>

<office>

3A73

</office>

</phonebook>

FIGURE 7.7

A badly formed XML document.

However, the display in Figure 7.7 is not well formed because number is opened

inside the name level but not closed until after the name level has been closed.

Although indentation is cosmetic for XML and not functional (white space is

ignored), we have indented for readability, and one can easily see the nesting prob-

lem in the irregularity of the levels of indentation.

XML is in fact both more restrictive and less restrictive than are standard arith-

metic expressions. One can write, as we did earlier,

4+

a

(2 ×

b

3) −

c

((5 +

d

7) ×

e

8)

using the same open and close parentheses symbols for grouping all expressions. This

can lead to expressions that are syntactically correct but don’t parse as intended

if, for example, the writer has confused multiple nestings of “(” and “)”. For this

reason, we might see the preceding expression written as

4+

a

(2 ×

b

3) −

c

([5 +

d

7] ×

e

8),

which is easier for humans to read. XML is less restrictive than algebraic expressions

in the sense that the writer can invent whatever <thisTag> </thisTag> pairs are

desired, rather than using only the customary parentheses, brackets, and braces:

“(),” “[ ],” and “{}.” On the other hand, by requiring the open–close nesting to

be well defined with the unique open–close tags, XML is more restrictive than

the conventions of algebra, which usually only counts the number of opening and

closing parentheses. If you write an expression using only the standard parenthe-

ses, the rules of algebra say that a closing parenthesis closes whatever was most

recently opened. One does not need a stack, then, to determine that an expression

is well formed. A counter will do just fine. One increments the counter for an open

parenthesis and decrements for a closing parenthesis, and need only check that the

counter never goes below zero (which would indicate a close without a correspond-

ing open) and that it does drop to zero at the end of the expression (indicating

that the numbers of opening and closing parentheses are equal).

7.2 HTML/XML and Stacks 163

Just as with algebraic expressions, we can use a stack to handle the parsing

of XML and to verify that the nesting of tags is correct. When we encounter an

opening tag (that is, one with no slash), we push the tag onto the stack. When

we encounter data with no angle brackets (and here we need to appeal to the fact

that we are dealing with a simplified version of XML because in a real application

there would have to be some sort of protocol for handling data that began with an

open angle bracket), we also push that data onto the stack. When we encounter

a closing tag, however (defined as beginning with “</” and with the appropriate

protocol for trying to deal with data that might have these characters), we begin

to pop the stack, treating all entries that are popped as data. If the first popped

entry that is not data is a closing tag that matches up with an opening tag just

popped, the XML is properly nested. If we encounter some other nondata tag first

or if we never encounter the closing tag, then we have a case of improperly nested

tags and invalid XML.

The processing of the XML in Figure 7.6 might go as follows.

1. Begin with an empty stack with the top at the top. We will be storing

(type, contents) pairs and will load an initial (NULL, NULL) pair, so our stack

begins as

(NULL, NULL)

2. Read the first line and push onto the stack a (type, contents)pairthatinthis

case is (tag, phonebook). The stack is now

(tag, phonebook)

(NULL, NULL)

3. Read the next line and push onto the stack a pair (tag, name). The stack is now

(tag, name)

(tag, phonebook)

(NULL, NULL)

4. Read the next line and push onto the stack a pair (data, John Smith). The

stack is now

(data, John Smith)

(tag, name)

(tag, phonebook)

(NULL, NULL)

5. Read the next line. Because this is a closing tag, we pop the stack and acquire

the pair (data, John Smith). Because this is data, we consume the data and

continue, which means that we pop the stack and acquire the pair (tag, name).

This is the first nondata item we have popped, so we compare the contents

164 CHAPTER 7STACKS AND QUEUES

name against the closing tag contents that we read. This is also name,sothe

two match and we continue. The stack is now

(tag, phonebook)

(NULL, NULL)

6. We repeat steps 2 through 5 for the three lines of number and then for the three

lines of office.

7. We read the closing tag </phonebook>. We pop the stack to get a tag with

contents phonebook that matches the contents we just read. At this point we

are out of both data and entries on the stack, so the XML expression is well

formed.

Compare this with the processing of the XML in Figure 7.7 that might go as

follows.

1. Read the first line and push onto the stack a (type, contents)pairthatinthis

case is (tag, phonebook). The stack is now

(tag, phonebook)

(NULL, NULL)

2. Read the next line and push onto the stack a pair (tag, name). The stack is now

(tag, name)

(tag, phonebook)

(NULL, NULL)

3. Read the next line and push onto the stack a pair (data, John Smith). The

stack is now

(data, John Smith)

(tag, name)

(tag, phonebook)

(NULL, NULL)

4. Read the next line, which is <number>, and the following line, which is

<555.1212>, and push them onto the stack. The stack is now

(data, 555.1212)

(tag, number)

(data, John Smith)

(tag, name)

(tag, phonebook)

(NULL, NULL)

5. Read the next line, which is </name>. Since this is a closing tag, we pop the

stack and acquire the pair (data, 555.1212). Since this is data, we consume the

7.3 Queues 165

data and continue, which means that we pop the stack and acquire the pair

(tag, number). This is the first nondata item we have popped, so we compare

the contents number against the closing tag contents that we read. This is now

comparing number against name, so we do not have a match. We know that the

XML is not well formed, so we bail out with an appropriate exception message

to the user.

The use of XML has become ubiquitous, so it is worthwhile for the reader to think

about this example and to search the Web for further information. Common usage is

for data to be stored as tagged XML, like the bibliographic data entry in Figure 7.5,

with meaningful tag names. To make use of this data, the tagged XML data file

is paired with a Document Type Definition (DTD) that “explains” which tags are

legal and how to make use of them. There are many open-source and commercial

packages that will read the data files and the corresponding DTD in order, for

example, to present the data in a browser with formatting (type font and size, italics

for book titles, etc.). For many years the standard example in a text for the use of

a stack was reverse Polish notation, which was interesting but somewhat abstract.

XML, in contrast, is very real throughout the computing industry, and versions

or extensions of XML are found in many disciplines. Geographic information is

encoded, for example, in KML, and a version of XML has been developed for text

as part of the Text Encoding Initiative.

7.3 Queues

The stack is a LIFO data structure, with entries added and removed at one end of

the stack. In contrast, a queue is a first-in-first-out (FIFO) structure that is similar

to the way in which orders are managed in a fast-food restaurant or the way that

normal checkout lines are managed in a retail store. Entries are added only at one

end, called the tail, and are deleted only at the other end, called the head.

7.3.1 Queues Using Nodes

The simplest implementation (at least the conceptually simplest) of a queue is with

a linked list in which data items are added to the structure only at the tail and

removed from the list only at the head. Earlier in this chapter, we converted a linked

list into a stack by wrapping the addAtHead method with code to create a push

method, writing a specific method to unlink at the head and thus to serve as the

pop, and adding (if they were not already there) the isEmpty and peek methods

to simplify later programming tasks using the stack. Implementing a queue with a

linked list underneath is an equally straightforward process, and the UML diagram

for a Queue class could be as simple as that shown in Figure 7.8.

166 CHAPTER 7STACKS AND QUEUES

Queue

+head:DLLNode

+tail:DLLNode

+Queue():void

+dequeue():DLLNode

+enqueue(DLLNode):void

+isEmpty():boolean

+peek():DLLNode

FIGURE 7.8 The UML diagram for a Queue class.

Again, we encourage the reader to separate the traditional concept of a queue

data structure from the implementation of a queue data structure. The concept of

a queue is simple:

• Data items are added only at the tail.

• Data items are removed only at the head.

• The user is entitled to peek at the data item before removing it.

• A function exists to tell the user if there is nothing in the queue.

The UML diagram shows the queue as a concept. In implementation, we see that

we can reuse code that has already been written, and nothing except a little wrapper

code to rename methods is necessary. The head and the tail variables are identical

to the variables of the same name in the underlying DLL class. Similar to the push

for a stack, the enqueue method is just a wrapper that renames the method and

passes through to what could be an addBeforeTail method. The dequeue can be

code identical (again, except for wrapping a method to rename it) to the pop code

of a stack and that is a specific version of an existing unlink method. The isEmpty

and peek helper methods are the same as for the stack. If we treat an underlying

linked list as the code that actually handles the data, the implementation for a

queue is just another light layer of code that sits above the linked list.

As with linked lists in general and with stacks, in the case of a queue implemented

using freestanding nodes, the issue of allocated space does not arise. We must check

that we do not dequeue more nodes than we have enqueued (and we check this by

calling isEmpty), but we can enqueue nodes essentially without worrying about

space. If a program is overwhelmed by adding entries to a queue, then there will

come a time when we run out of space and the system throws an out of space

error, but this is a different kind of error. It would likely be caused in “finished”

code by doing a supercomputer-sized application on a desktop or in the process of

a programmer’s testing because of a runaway loop. Or, perhaps, if one is using a

queue to service Internet packet requests, then a denial-of-service attack will flood

7.3 Queues 167

the server with packets and perhaps overload the available memory as the attacker

probably intended. More “routine” use of memory for a queue, however, is unlikely

to crash the system.

7.3.2 Queues Using Arrays

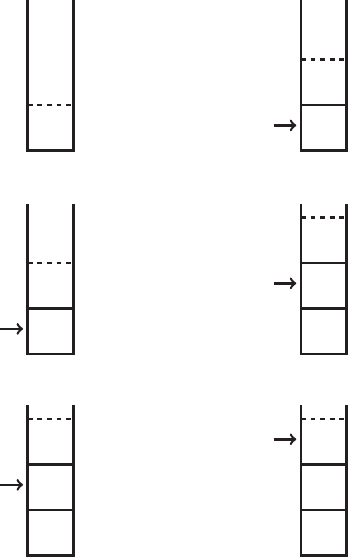

A conceptual example of a queue in operation, using a linked list as the underlying

structure, is shown in Figure 7.9.

Another conceptual view of a queue is as an array of potentially infinite length

in one direction. Entries are added at the “right,” and are removed from the “left,”

with the space between the head and tail pointers being the active window of the

queue. A queue viewed as an array of infinite length, whose window of actively used

Incoming data: 5, 2, 3, 4

Actions to be performed: enqueue, enqueue, dequeue, enqueue, enqueue

Step 1: An empty queue

head = null tail = null

Step 2: enqueue(5), and then we have

5

head

tail

Step 3: enqueue(2), and then we have

52

head

tail

Step 4: dequeue returns 5, and then we have

2

head

tail

Step 5: enqueue(3), and then we have

23

head

tail

Step 6: enqueue(4), and then we have

234

head

tail

FIGURE 7.9 A queue in operation.

168 CHAPTER 7STACKS AND QUEUES

Incoming data: 5, 2, 3, 4

Actions to be performed: enqueue, enqueue, dequeue, enqueue, enqueue

Step 1: An empty queue

...

head = null tail = null

Step 2: enqueue(5), and then we have

...

5

...

head tail

Step 3: enqueue(2), and then we have

...

52

...

head tail

Step 4: dequeue returns 5, and then we have

...

5 2

...

head tail

Step 5: enqueue(3), and then we have

...

5 23

...

head tail

Step 6: enqueue(4), and then we have

...

5 234

...

head tail

FIGURE 7.10 A queue viewed as an array of infinite length.

locations shifts to the right, is shown in Figure 7.10. The in-use portion of the array

simply moves off infinitely to the right as execution progresses.

Since objects of infinite size cannot be implemented in any computer, queues

implemented using arrays have often used circular arrays that resemble the order

ticket carousels in a restaurant. We preallocate an array of some fixed size, larger

7.3 Queues 169

than we ever expect to need, and with head and tail pointers initially assigned as

they would be if the array were of infinite length. Items are added to the queue at

the tail, which “walks” around the circle, and are taken from the queue at the head.

Since an array in a computer cannot actually be set up as a circle, we must

implement code to create the illusion of circularly organized space. Instead of using

just the naive incrementing of the top pointer,

top = top + 1;

we make the subscripts wrap around using code similar to

top = (top + 1) % array.length;

As with any queue implementation, we must check that we do not remove any more

elements than are in the queue (that is, that the head pointer does not walk beyond

the tail pointer) and that we do not exceed the allocated space (that is, that the

tail pointer does not walk beyond the head pointer).

A queue implemented as a circular array is shown in Figure 7.11. Except for the

fact that we must test the size to ensure we have not run out of memory, and the

5 “deleted”

2

3

4

not yet used

head

tail

head and tail move counterclockwise

FIGURE 7.11 A queue implemented as a circular array.

170 CHAPTER 7STACKS AND QUEUES

fact that our increment of head and tail subscripts works modulo array.length,

this is really just the same thing as the (impossible) implementation that would

use an infinite array.

7.4 The JCF Classes

7.4.1 The JCF Stack

We discussed in Chapter 5 the Java Collections Framework class LinkedList.Many

of the data structures we discuss in this text are implemented in the JCF, and Stack

and Queue are among them. It is worth looking at the differences between the JCF

version of a stack and that shown in Figure 7.1. The JCF class has methods empty,

peek, pop, push,andsearch.

The JCF empty method differs from our isEmpty only in the name. There is one

school of thought in computing that argues that all boolean functions should begin

with “is” to emphasize that their purpose is to answer a question. We have followed

this style in this text, so reading a code fragment using our isEmpty method might

look like the programmer is asking a question and the code resembles standard

English, as shown in Figure 7.12. Although there may be no reason to believe that

a casual reader would misunderstand the purpose of a method named empty,with

the method name read as a verb and the purpose of the method being to empty

the queue, we will stick with the “isX” convention for naming boolean methods like

this one. All too often code is written that looks like that shown in Figure 7.13 and

the myQ.empty() method was indeed a method that actually emptied a queue and

returned a boolean value to say that the queue had been emptied. This kind of

ambiguity should be avoided.

The peek, pop,andpush methods have the same purpose as our methods of

the same names. For completeness, we mention finally the search method, which

returns the 1-based position of the object on the stack. This no doubt derives from

the implementation heritage of the Java Stack as a subclass of Vector,anearly

version of ArrayList.

if(myQ.isEmpty())

{

DO SOMETHING

}

else

{

DO SOMETHING ELSE

}

FIGURE 7.12

Code that reads like English.

7.4 The JCF Classes 171

if(myQ.empty())

{

DO SOMETHING

}

else

{

DO SOMETHING ELSE

}

FIGURE 7.13

Code that could be ambiguous when read.

The primary difference between the JCF Stack and the stack as we have imple-

mented it is that our methods explicitly refer to a stack of nodes, which we know con-

tain data payloads. When our pop method executes, it returns a node, and we must

issue yet one more method in order to return the data payload inside that node.

In contrast, the JCF versions of the structures are implemented with gener-

ics and iterators; the JCF Stack is constructed with a generic E item where we

have used our DLLNode node. One could, of course, build a JCF-based stack by

Stack<DLLNode>, and that would provide a structure that the programmer would

view very much like that we have outlined with Figure 7.1. By building a JCF-based

stack with Stack<Record>, the JCF is providing yet another layer of insulation

between the concept of a stack and the implementation of a stack. The program-

mer sees a stack of data items, not a stack of nodes that each contain a data item.

We discussed this earlier in Section 5.3.

On the other hand, if one wanted to implement a Stack<DLLNode> structure,

the JCF stack would also work just fine, since it is implemented using generics and

DLLNode is just as good an object as is Record. There are times when more than

one access method is needed, so one can envision a situation in which nodes are

pushed onto the stack but the nodes also permit some sort of “sideways” pointer to

other nodes. (This kind of structure—a primary data structure with shortcuts or

other pointers added that are not usually included in the primary structure—will

show up later when we discuss trees.)

7.4.2 The JCF Queue

The JCF LinkedList and Stack classes are easily recognizable as the same struc-

tures we have described in detail. What is likely harder for the inexperienced student

to understand is the nature of the JCF Queue.

First off, we notice that Queue, per se, is not a class like LinkedList and Stack

but instead is a Java interface. Unlike LinkedList and Stack, then, this requires

a user-written class that implements Queue by including the methods required by

Queue. These are at least moderately familiar, although they are different from what

172 CHAPTER 7STACKS AND QUEUES

we have presented in Figure 7.8. Some of the reasons will be discussed momentarily;

in brief, since the JCF Queue is not a finished product and a class by itself, but

an interface intended to permit more general use of queue-like structures, there are

more options required of the code that implements Queue.

Our class had methods dequeue, enqueue, isEmpty,andpeek.TheQueue inter-

face requires that the programmer implement add, element, offer, peek, poll,and

remove. All these methods have generic parameters similar to those of the Stack

class. Since the parameters are defined by generics, it could be possible to pass a

parameter of an invalid type or to pass a NULL parameter to a queue in which NULL

wasnotavalidentry.AlltheQueue methods should throw exceptions for these

kinds of actions.

In all instances, it is understood that the underlying structure that is imple-

menting the Queue interface might be of fixed size (like a preallocated array), and

thus all the Queue methods permit throwing exceptions or returning false if one

is trying to add to an underlying structure that is already full.

The isEmpty method for Queue is present because it is inherited from the

java.util.Collection interface. (Note that this method is named isEmpty and

not just empty.)

Instead of an enqueue method, the Queue interface has two methods for adding

data to the queue: add and offer. Both return a boolean. The difference between

the two is that add returns true if the element can be added without overflow-

ing the underlying storage but must throw an exception if the storage structure

is already full. The offer method, in contrast, is preferred because although

it will return true if the element is added to the queue, it will return false

rather than throwing an exception if the element cannot be added due to capacity

restrictions.

Instead of our dequeue method, the Queue interface has methods poll and

remove. Both methods remove the element at the head of the queue and return the

element. The poll and remove methods function analogously to

add and offer

in that poll returns null if the queue is empty, but the remove method simply

throws an exception when the queue is empty.

Finally, instead of our single peek method, the Queue interface has methods

element and peek that function in an analogous way to poll and remove.Both

return the head element without deleting it if there is a valid head element; if the

queue is empty and there is no element to return, peek returns null and element

throws an exception.

Thus, add, remove,andelement will throw exceptions if the storage limit is

exceeded or the queue is empty, while offer, poll,andpeek return either a

boolean or null.

Another difference between using the interface and using the class is that type

checking is done differently. When the Stack class is used in a program, an instance

7.4 The JCF Classes 173

of a Stack is created with a line of code like Stack<Record> myStack,andall

references to the myStack variable or to the Stack methods must conform to the

requirement for parameters and returned values to be of type Record. This is some-

thing the compiler can check. There are no issues with space because the Stack

class is assumed to take care of such things.

In contrast, the programmer must implement the Queue interface using some

underlying data structure for storage. Since the interface is silent on the underlying

storage structure, the programmer must be prepared to include code that checks

for size constraints.

7.4.3 Stacks, Queues, and Deques

Perhaps the major difference between the JCF Stack class and the Queue interface is

that the Stack is old, by Java standards, and adheres to traditional notions of what

a stack ought to be, while the JCF Queue interface is actually much more general

than what we have described as a queue in Section 7.3. The Java documentation

describes a queue as “a collection designed for holding elements prior to processing”

that typically but not necessarily orders elements in a FIFO fashion. The closest

implemented JCF class to the traditional queue that we have described is the Java

ArrayDeque class.

A deque, pronounced “deck,” is a “double-ended queue,” a list structure in which

insertions and deletions can be made at either end but only at the ends. The JCF

ArrayDeque class implements both the Queue interface and the Deque subinterface

of Queue. As a JCF class, it subsumes LinkedList and Stack as well as provides a

class that can be used as a queue. The penalty paid for using ArrayDeque is com-

plexity; because the structure can be used as a double-ended queue, the ArrayDeque

class has, for example, addFirst and addLast methods, as well as a queue add

method that does the same thing as addLast. Similarly, the offer, peek, poll,

and remove methods each occur in triplicate. The addFirst and addLast meth-

ods are required by the Deque interface, and the add method is required by the

Queue interface.

Consistent with the Java documentation suggesting that ArrayDeque should be

used instead of the older Stack class, the ArrayDeque class has the stack methods

pop and push. Finally, the ArrayDeque class has methods for traversing the struc-

ture and dealing with entries that are not at the head or the tail. Taken together,

the ArrayDeque is extremely useful, but the programmer who uses this class should

probably exercise some self-discipline when using the methods. If it is intended

that a stack be implemented, then only the base methods for a stack should be

used. If a queue is intended, then only the base queue methods should be used.

Otherwise, the resulting code could easily be misunderstood by a subsequent reader

of the code.

174 CHAPTER 7STACKS AND QUEUES

7.5 Priority Queues

One of the major features of linked lists, stacks, and queues is that they accommo-

date dynamic data; that is, they provide structures that grow and shrink as data

is added to them and taken from them.

The basic queue structure allows one to add data items onto the back end of

a queue and to take them off the front, providing a first-in-first-out, or FIFO,

processing order. Let us add another constraint: we want to be able to add items

dynamically and to keep them ordered according to some priority value so that

when we remove an item, the item we remove is always the item of highest priority

in the current list. This scenario is similar to what needs to happen in an operating

system as entries are added to the list of tasks to be performed and some new

entries might have a higher priority than those already being executed.

A structure like this is called a priority queue. In this sort of queue, entries would

always be added at the tail and always removed at the head, but the additional

constraint is that some kind of ordering process occurs to ensure that the entry at

the head is the entry of highest priority.

This structure can certainly be achieved with an ordinary queue with an inser-

tionsort when items are added; when an item is added, we pull it forward in the

queue until it is in its proper place in the sorted list. This method will work, but it

will inherently take on average O(N/2) steps, or linear time, to add an item and to

recreate the state of being sorted. For a queue of active processes in an operating

system, this would be excessively slow because a queue with an insertionsort does

more work than is really needed.

The queue with an insertionsort keeps all the records in sorted order all the time.

We have not asked for all the items to be sorted, only that the largest, or highest

priority, item is at the head. What we would like to have is a mechanism that will

• efficiently (that is, faster than the linear time of a naive insertionsort

3

) rebuild

the structure after the head is removed so that the next-highest-priority item

is located at the head for the next removal; and

• efficiently (again, faster than linear time) insert a new entry into the structure

so that the new structure, one larger than before, still has the property that

the head is the item of highest priority.

7.5.1 The Heap

The structure that is commonly used for this purpose—that has as its head the

item of highest priority and that allows the structure to be rebuilt in O(lg N) time

3

You should remember that early on in this book we said that we would be dealing with ideas

that would allow you to implement more sophisticated algorithms to replace the naive ones and

to get improved performance as a result. This is one of those ideas.

7.5 Priority Queues 175

and not O(N) time—is the heap. The heap is more efficient than an insertionsort in

large part because it does less work than an insertionsort. When an item is added

in a list and an insertionsort is used to insert the item in its correct order, a total

order is produced, with every element larger than all those farther down in the list.

If our goal is only to be able to pull off the item with highest priority, we don’t

need a total order. Instead, we need only ensure that the item of highest priority is

at the head. The priority queue maintains only this weaker property, and thus can

be maintained with less work.

Consider an array of numbers array[*]. For convenience, because the algorithm

is simpler to state, we shall consider the array to be indexed 1-up instead of the

usual 0-up numbering of Java.

4

The array is said to satisfy the max-heap property

andthustobeamax-heap if for all items array[i] indexed 1 to N we have

array[i] >= array[2*i]

and

array[i] >= array[2*i+1],

for all subscripts i for which the subscripts on the right-hand sides are valid for the

given array length. (We could define a min-heap in the obvious analogous way.)

An example of an array with the max-heap property is given in Figure 7.14.

Since it is not totally obvious that this is a heap just from glancing at subscripts

and values, we also present, in Figure 7.15, a pictorial view of the same heap as a tree

structure. In the tree representation, we have put the subscripts in brackets. (We

will have a great deal more to say about trees later, including an entire chapter.)

This picture of the heap as a tree is clearly easier to understand than is the array

in subscript order. We are going to be using tree structures extensively because

they provide useful ways to visualize some important data structures. All trees are

formed from nodes (the circles) and arcs (the lines). (An arc is also called an edge

and we will use the two terms interchangeably.) Contrary to the usual way in which

a tree is viewed, computer scientists usually write trees as in Figure 7.15 with the

root node at the top (in this case, the node subscripted 1 and with value 104) and

the rest of the tree hanging down from the root instead of growing up from the

root. Nodes may have one or more children; these are the nodes immediately below

a given node and connected to the given node by an arc. A tree for which no node

hasmorethantwochildreniscalledabinary tree. The tree in Figure 7.15 is a

4

It can be argued that most of the time things are easier to express if one does indexing 0-up.

In this case, however, indexing 1-up makes the subscript calculation much simpler. Indeed, one

can probably justify just not using the subscript-0 data location and wasting that small amount

of space in order to gain the benefits of the simpler arithmetic in the code.

176 CHAPTER 7STACKS AND QUEUES

Subscript Value

1 104

2 93

3 76

4 17

5 82

6 65

7 71

8 13

9 2

10 71

11 69

12 63

13 61

14 70

FIGURE 7.14 An example of an array that is a max-heap.

[1]104

[2]93

[4]17

[8]13 [9]2

[5]82

[10]71 [11]69

[3]76

[6]65

[12]63 [13]61

[7]71

[14]70

FIGURE 7.15 A tree structure for a heap, with [node]value entries.

binary tree. If the missing entry (that would be subscript 15) were there, it would

be a complete binary tree;asitis,thetreeisanincomplete binary tree.

Given the picture of the tree in Figure 7.15, and with the subscript labeling as

indicated, the criterion for a tree to represent a max-heap is that the value at any

given node is greater than or equal to the values at the child nodes.

In this case, 104 for subscript 1 is larger than the values 93 and 76 for subscripts

2 and 3, 93 for subscript 2 is larger than 17 or 82 at subscripts 4 and 5, and so

7.5 Priority Queues 177

forth. Since the value at each node in the tree is larger than the values of any of

the children, the tree satisfies the heap property.

By the transitivity of “greater-than-or-equal to,” and since the tree is a heap,

we also know that 104 is the largest value in the entire structure, even though we

do not know what the total sorted order of the array is.

Clearly, then, if we have an array that is organized as a max-heap, the largest

value in the array is at subscript 1 in the root node of the tree.

To show that the heap provides a better structure for maintaining a prior-

ity queue than a queue with insertionsort, we must show that a priority queue

can be created and maintained faster than by using an insertionsort. First we

must know what we are comparing against. Given N records, an insertionsort will

require O(N

2

) comparisons in order to create a sorted list. Although each entry

could now be removed in sorted order, we want to consider a priority queue as

a dynamic structure with new entries coming in as time progresses. Think of the

queue of ready processes in an operating system; with every mouse click the user

could be spawning new tasks to be added to the list of processes to be executed.

Inserting these new processes would require on average N/2 further comparisons.

A priority queue implemented as a sorted list with an insertionsort thus has a

linear cost.

Since what we are doing is essentially a type of sorting, the unit of computation

that we must count is the comparison of two entries. Instead of the linear time cost

of an insertionsort-based method, we can prove two features of the priority queue

implemented using a heap:

1. A priority queue of N items can be created from scratch in O(N lg N)

comparisons.

2. Inserting one more item into a priority queue of N items can be done with

O(lg N) comparisons in steady state.

There does exist an O(N) algorithm for creating a heap (to be used in a priority

queue or in some other application) from N data items. We will present instead

an O(N lg N) algorithm that is simpler. Although it is nice to know that a faster

algorithm exists for creating the heap, most uses of a priority queue will eventually

require O(N lg N) comparisons anyway, so the benefit of the faster creation is lost

in a real application. What is critically important for our use of a heap as a priority

queue is that we have an algorithm to rebuild the heap in O(lg N)timeafterwe

extract the maximal element from the heap.

If we count the building of the heap as one-time work, as would be the case for

an operating system’s creating at boot time a queue of processes capable of being

executed, then the priority queue implemented with a heap is a structure with an

acceptable running time for maintaining a priority queue of data items.

178 CHAPTER 7STACKS AND QUEUES

7.5.2 Creating a Heap

Theorem 7.1

An array recs[i] of N numerical values can be converted into a heap in O(N lg N)

time.

Proof Our proof is constructive: we present an algorithm that will create a heap

from N data items and require O(N lg N) comparisons between values stored in

nodes.

Given that we have loaded instances of data of Record type into an array of data

records recs[*] indexed 1-up, we run the following loop

for(inti=1;i<this.getNumElts(); ++i)

{

this.fixHeapUp(i);

}

with the fixHeapUp method shown in Figure 7.16. If the length of the loop

this.getNumElts() is N,andfixHeapUp runs (as we will claim) in O(lg N) time

on an array of N items, then the running time of this code fragment is O(N lg N).

public void fixHeapUp(int insertSub)

{

int parentSub;

ProcessRecord insertRec;

insertRec = this.theData.get(insertSub);

parentSub = insertSub/2;

while(1 < insertSub)

{

if(this.theData.get(parentSub).compareTo(insertRec) < 0)

{

this.swap(insertSub, parentSub);

insertSub = parentSub;

insertRec = this.theData.get(insertSub);

parentSub = insertSub/2;

}

else

break;

}

} // public void fixHeapUp(int insertSub)

FIGURE 7.16

The fixHeapUp code.

7.5 Priority Queues 179

We must now show that in any call to the fixHeapUp method in Figure 7.16,

we make at most lg N comparisons. If there are N items in the list, then the value

of insertSub is at most N in any given call. In the first comparison we compute

parentSub as insertSub/2, and in the second comparison we assign that as the

new running value of insertSub, and continue. With each comparison, then, we cut

the value of insertSub in half until eventually we have 1 > insertSub in the test

in the while statement and we break out of the loop. Since we start with insertSub

no larger than N, and we divide by 2 with each iteration and comparison, then in

the worst case of any call to the fixHeapUp method we require at most O(lg N)

comparisons. What the fixHeapUp method does is to bubble the entry at the “end”

of the array (or ArrayList) into its proper position in a heap.

We note that this method requires a comparison method compareTo that returns

−1, 0, or 1 depending on whether the data in the this record is less than, equal to,

or greater than the data value passed in as an argument. But we are accustomed

to this as a standard method for nearly any data payload.

The reason for our original decision to subscript 1-up should be clear now from

the tree representation of the heap and from the code in Figure 7.16. By subscripting

1-up we take advantage of the nature of integer division in Java (and most other

languages) to get simpler, cleaner code. Certainly one could rewrite the property

and the algorithm to run 0-up, but the convenience of integer division to get the

subscript of the parent item would be lost.

We also note that this same fixHeapUp method easily allows for dynamic addi-

tions to the heap. If we add an item to the heap at the end of the array and then

call fixHeapUp, the element will percolate up to its proper location in the heap,

taking at most O(lg N) comparisons in the process.

An example of converting into a heap an initially random array, viewed as a

binary tree structure, is presented in Figures 7.17–7.19. In each step the colored

values are compared and then swapped if they are out of order and the nodes in

the array that are included in the current heap are outlined in bold. Our outer loop

runs from array subscript 1 up through subscript N.

7.5.3 Maintaining the Heap

The fixHeapUp method takes lg N time, and when applied to an array of N items,

converts in O(N lg N) time an array into an array that can also be read as a heap

using the subscripting in Figure 7.14. This in itself would not be all that useful.

What is useful is the following little bit of almost-magic that allows us to use the

heap as a priority queue as a dynamic data structure. It takes O(N lg N) time to

create the heap ab initio for N nodes or data items; after that, in steady state,

we can remove the maximum value (the value of highest priority), add a new data

item, and recreate the heap in only O(lg N) comparisons.

180 CHAPTER 7STACKS AND QUEUES

76

82

71 17

93

104 65

76

82

71 17

93

104 65

82

76

71 17

93

104 65

93

76

71 17

82

104 65

FIGURE 7.17 Building a heap from an array, part 1.

Theorem 7.2

The root node of the heap contains the element whose data payload has the maximal

value of the entire array, and we can remove that element and recreate the heap

structure in O(lg N) time.

Proof Let us assume that we have first created a heap. We now extract the maximal

value in the entire array. This leaves a vacancy at the root, and our array ought to

be one shorter than the array we started with. We accommodate this by moving the

last element, originally stored at location N but in steady state stored at location

array.length-1, into the root node. This element is probably out of place, but we

7.5 Priority Queues 181

93

76

71 17

82

104 65

93

76

71 17

82

104 65

93

76

71 17

104

82 65

FIGURE 7.18 Building a heap from an array, part 2.

104

76

71 17

93

82 65

104

76

71 17

93

82 65

FIGURE 7.19 Building a heap from an array, part 3.

can now run fixHeapDown (Figure 7.20) to push that element down into its proper

place in the new array. The fixHeapDown method also takes the same O(lg N)

time as does fixHeapUp and differs from fixHeapUp mostly in that it takes two

comparisons at each level and not one. In fixHeapUp, if the left child, say, is larger

182 CHAPTER 7STACKS AND QUEUES

public void fixHeapDown(int insertSub)

{

int largerChild, leftChild, rightChild;

leftChild = 2*insertSub;

rightChild = 2*insertSub + 1;

while(this.getSize() > leftChild)

{

largerChild = leftChild;

if(this.getSize() > rightChild)

{

if(this.theData.get(leftChild).

compareTo(this.theData.get(rightChild)) < 0)

{

largerChild = rightChild;

}

}

if(this.theData.get(insertSub).

compareTo(this.theData.get(largerChild)) < 0)

{

this.swap(insertSub, largerChild);

insertSub = largerChild;

leftChild = 2*insertSub;

rightChild = 2*insertSub + 1;

}

else

break;

}

} // public void fixHeapDown(int insertSub)

FIGURE 7.20

The fixHeapDown code.

than the parent, then it must also be larger than its sibling the right child because

we had started with a heap to begin with. In fixHeapDown, we must make sure

to put the largest of the three elements (parent and two children) in the parent

location in order to maintain the heap property.

7.6 Summary

We have in this chapter introduced the concept of a stack and presented multiple

ways in which a stack can be implemented. This includes the use of the built-in

Java Stack construct. We have similarly introduced the concept of a queue

7.7 Exercises 183

together with ways in which a queue can be implemented. This includes the Java

Queue construct, which implements much more than the basic version of a queue

as we have presented it.

As examples, we have looked at the processing of HTML or XML data, which

has explicit open–close tags that label the data lying between the tags. With the

explosion of data and applications on the Web, stack processing of data tagged

with a markup language has become a significant basic feature of much of modern

computing.

We also looked at reverse Polish notation. In processing RPN, the stack structure

makes the explicit tags of a markup language unnecessary. Although not so common

any more, many computers were originally built physically as stack-based machines.

Finally we presented the priority queue, used everywhere for keeping track of

dynamic task data for which “the most important” task must be processed next.

The priority queue, implemented with a heap, is one of the key data structures due

to its simplicity and efficiency for handling such computational problems.

7.7 Exercises

1. Finish the implementation of a stack by packaging up the existing linked list

code and finishing off the methods from the UML diagram in Figure 7.1 and

the code stubs in Figure 7.2.

2. Implement a queue, following the lead of Figure 7.8 and the code of the previous

exercise.

3. Improve the code of one or both of the previous exercises to use generics instead

of the Record class as the data payload.

4. Implement a stack or a queue using the LinkedList structure from the Java

Collections Framework.

5. Use your stack code from the first exercise and write a class that will parse an

XML file to determine whether or not it is well formed.

6. Use the JCF Stack class and write a class that will parse an XML file to

determine whether or not it is well formed.

7. Rewrite either of your XML-parsing programs to process the XML tag–data

pairs as a separate class.

8. Use any stack code and write a class that will parse arithmetic expressions in

RPN and evaluate them.

9. Use the JCF ArrayDeque class and write a class that implements a stack to

parse an XML file to determine whether or not it is well formed. You should

use only the methods in Figure 7.1.

184 CHAPTER 7STACKS AND QUEUES

10. Implement a myQueue class as described in the text by wrapping the

ArrayDeque class and its methods so that the public methods of myQueue are

named as in Figure 7.8 but call the methods from ArrayDeque.

11. Finish off the implementation of a priority queue. Read in a collection of random

numbers (25 or so, which will be enough for you to notice patterns and will

also guarantee that you get the not-power-of-two fencepost problems handled

properly). Then extract the elements in priority order, rebuilding the heap each

time. What have you been able to do with this? (We will do more on this in

Chapter 11.)

..................Content has been hidden....................

You can't read the all page of ebook, please click here login for view all page.