2

Hardware Elements

2.1 TRANSISTORS, GATES AND FLIP-FLOPS

2.1.1 Implementing Gates with Switches

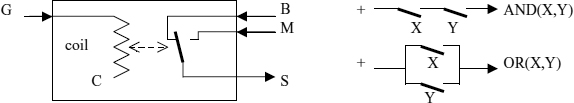

Elementary building blocks implementing the Boolean operations AND, OR, NOT or SEL from which all Boolean functions can in turn be constructed (see section 1.3.1) are realized quite easily by electronic means. A simple solution that was actually applied in the early days of computing is the use of electrically controlled, mechanical switches. The single basic component is the controlled switch with a control input G, a coil connected between G and a ground reference C, and the poles S, B, M of the mechanical switch (Figure 2.1). If a sufficiently high voltage is applied to G w. r. t. C, the magnetic force of the coil breaks the connection from S to B and makes the one from S to M, and hence performs a selection between the voltage levels applied to B and M, depending on the control input. An interval of voltage levels that cause the switch to be actuated is used to present the Boolean 1 while the voltages near zero represent the 0, all voltages being referenced to C. This SEL building block fulfills the requirement that the input and output signals be compatible. It can thus be composed with others. The high voltage level can be selected from a power supply (denoted ‘+’ in Figure 2.1). To output a zero voltage to another coil, the corresponding select switch input can be left open, as the unconnected coil will assume the zero level by itself. Thus, the switch can be simplified to a break or a make switch actuated by the field of the coil. A break switch connected to ‘+’ realizes the NOT operation.

The parallel and serial compositions of make switches shown in Figure 2.1 implement the OR and AND functions. In the serial composition the switches controlled by X and Y must both close to output the ‘+’ level. The parallel and serial compositions generalize to networks of switches with two dedicated nodes. The network is in the state f(a1,…,an) depending on the state ai of the switches it is composed of, the possible states being 1 (‘closed’) and 0 (‘open’). If a second network of switches is in the state g(b1,…,bm), then their serial and parallel compositions are switch networks with two dedicated nodes with the state functions AND(f(a1,…,an), g(b1,…,bm)) and OR(f(a1,…,an), g(b1,…,bm)).

Figure 2.1 Switch-based SEL building block, and AND and OR switch circuits

Figure 2.2 N-channel transistor switch and equivalent circuit

Unfortunately electromechanical switches are slow, consume much space and power and suffer from a limited lifetime. Modern electronic computers use networks of transistors instead which behave like electronic switches and are used in a similar fashion to the electromechanical switches, but are cheap and fast solid state devices with a low power consumption and almost unlimited life that, moreover, have microscopic dimensions and can be integrated in their thousands into silicon chips. For an overview of the various classes of transistors and circuits implementing the gate functions, we refer to [2] and concentrate on the NMOS technology and on the most important and elegant one, the CMOS technology invented as long ago as 1963.

The common CMOS (complementary metal oxide semiconductor) technology uses two kinds of insulated gate field effect transistors, the n-channel and the p-channel devices. The transistor symbols in the figures are denoted accordingly. These transistors have three terminals, the source and drain terminals (S, D) and the gate (G) which is the control input (for the sake of simplicity, the influence of the potential of the silicon substrate beneath the gate is ignored). For the n-channel transistor (Figure 2.2) with the source S near the ground reference (the negative supply), the gate input G at the H level causes a low resistance connection from S to the drain D whereas an L level disconnects S and D. The device is voltage controlled; no current flows into the gate once the tiny input capacitance Cin has been charged, as shown in the simplified equivalent circuit in Figure 2.2. The transistor, switch is modeled as an ideal switch put in series with a resistor, which is valid for small output voltages only. For the complementary p-channel transistor, the S terminal is at the level of the positive supply (0.6…18V depending on the technology; the most common for external interfacing are 5V, 3.3V). For an H input to G the S−D switch becomes disconnected while for an L input it becomes low resistance. The n-channel transistor is a make switch to L, and the p-channel transistor is a break switch to H.

This behavior of the transistors results from the VGS − ISD and VSD − ISD characteristics shown in Figure 2.3. For voltages VSD well below VGS, ISD grows linearly with VGS and the transistor behaves like a resistance that is inversely proportional to VGS − VT, VT being a constant of a few 100 mV that depends on the dimensions of the device and slightly decreases with temperature by about 3 mV/°C. For a manufacturing process with 0.8 μm feature sizes (e.g. the gate length) VT is about 0.8V [3] and the supply voltage is 5V; for a finer process of 0.1 μm VT is below 0.2V, and the supply voltage is reduced to about 1V [4]. A simple approximation to the current ISD valid for VSD < VGS − VT is:

Figure 2.3 Characteristics of the n-channel transistor

Figure 2.4 Simple inverter circuit and its transfer characteristic

For output voltages VSD beyond VGS − VT the current through the transistor becomes saturated to

and from (1) one concludes that:

A more accurate description reveals ISD will still grow slowly with VSD for VSD > VGS − VT and will not vanish but decay exponentially as a function of VGS for VGS < VT [4, 5]. The transistor is actually a symmetric device; source and drain can be interchanged and used as the poles of an electrically controlled, bi-directional switch (the role of the source is played by the more negative terminal).

The simplest way to implement the Boolean NOT function with transistor switches is by connecting a properly chosen ‘pull-up’ resistor between the drain terminal of an n-channel transistor and the positive supply. Figure 2.4 shows the circuit and its VG − VD characteristic. The L interval is mapped into the H interval, and H into L as required. A second transistor switch connected in parallel to the other leads to an implementation of the NOR function while a serial composition of the switches implements the NAND, similarly to the circuits shown in Figure 2.1. These circuits were the basis of the NMOS integrated circuits used before CMOS became dominant. Their disadvantage is the power consumption through the resistor if the output is L, and the slow L-to-H transition after the switch opens which is due to having to load the Cout capacitance and other load capacitors connected to the output through the resistor. The H-to-L transition is faster as the transistor discharges the capacitor with a much higher current. These disadvantages are avoided in CMOS technology by replacing the resistors by the complementary p-channel transistors.

Figure 2.5 CMOS inverter, equivalent circuit and characteristic

Figure 2.6 Inverter output current characteristics for different VG (VT = 0.8V)

The n- and p-channel transistors combine with the CMOS inverter shown in Figure 2.5 with a corresponding equivalent circuit and the typical VG − VD characteristic over the whole supply range. The CMOS inverter also implements the Boolean NOT operation. The equivalent circuit assumes that both transistors charge the output capacitor as fast as the same resistor R would do which is the case if the transistors are sized appropriately. Typical values for the capacitors reported in [3] for a 0.8 μm process are Cin = 8 fF and Cout = 10 fF (1fF = 10−15F = 10−3pF). The characteristic is similar to the curve in Figure 2.4 but much steeper as the p-channel becomes high-impedance when the n-channel one becomes low-impedance and vice versa. The inverter circuit can actually be used as a high gain amplifier if it operates near the midpoint of the characteristic where small changes of VG cause large changes of VD. The dotted curve in Figure 2.5 plots the current through the transistors as a function of VD, which is seen to be near zero for output voltages in L or H.

When the input level to the CMOS inverter is switched between L and H the output capacitance C is charged by the limited currents through the output transistors. Therefore, the digital signals must be expected to take a non-zero amount of time for their L-to-H and H-to-L transitions, called the rise and fall times respectively. The characteristic in Figure 2.6 shows that for input voltages in the middle third of the input range (0…4.8V) the currents supplied to charge the load capacitance are reduced by more than a factor of 2, and an input signal making a slow transition will have the effect of a slower output transition. There is hardly any effect on the output before the input reaches the midpoint (2.4V), and at the midpoint where the VG – VD characteristic is the steepest, the output becomes high impedance and does not deliver current at all at the medium output voltages.

Figure 2.7 Timing of the inverter signals

Figure 2.8 CMOS circuits for the NAND and NOR functions

The worst case processing time t of the inverter computing the NOT function may be defined as the time to load the output capacitance from the low end of the L interval (the negative supply) to the lower end of H for an input at the upper end of L (which is supposed to be the same as the time needed for the opposite transition). It is proportional to the capacitance,

where R depends on the definition of the H and L intervals and is a small multiple of the ‘on’ resistance of the transistors. Moreover, the output rise time that may be defined as the time from leaving L to entering H is also proportional to C (Figure 2.7). It is part of the processing time. In the data sheets of semiconductor products one mostly finds the related propagation delay which is the time from the midpoint of an input transition to the midpoint of the output transition for specific input rise and fall times and a given load capacitance.

Figure 2.8 shows how more transistor switches combine to realize the NAND and NOR operations. A single transistor is no longer enough to substitute the pull-up resistor in the corresponding, unipolar NMOS circuit. CMOS gates turn out to be more complex than their NMOS counterparts. Inputs and outputs are compatible and hence allow arbitrary compositions, starting with AND and OR composed from NAND and NOR and a NOT circuit. Putting switches in series or in parallel as in the NAND and NOR gates can be extended to three levels and even more (yet not many). The degradation from also having their on resistances in series can be compensated for by adjusting the dimensions of the transistors. Another potential problem is that the output resistance in a particular state (L or H) may now depend on the input data that for some patterns close a single switch and for others several switches in parallel. This can only be handled by adding more switches to a given arrangement so that in a parallel composition the branches can no longer be on simultaneously.

Figure 2.9 Inverter tree to drive high loads

Figure 2.10 Structure of a complex CMOS gate

The timing of CMOS gates with multiple switches is similar to that of the inverter, i.e. depends essentially on the load capacitances, the ‘on’ resistances and the transition times of the inputs. For a gate with several input signals that transition simultaneously, some switches may partially conduct during the transition time. For short rise and fall times it can be expected that the gate output makes just a single transition to the new output value within its processing time. During the signal rise and fall times invalid data are presented to the inputs, and the gates cannot be used to compute. The transition times hence limit the possible throughput. Therefore, short transition times (fast signal edges) are desirable, and large capacitive loads must be avoided. The load capacitance Co at the output of a CMOS gate is the sum of the local output capacitance, the input capacitances of the k gate inputs driven by the output, and the capacitance of the wiring. The processing time and the output rise and fall times are proportional to Co and hence to k (the ‘fan-out’). Figure 2.9 shows how a binary tree of h levels of inverters can be used to drive up to k = 2h+1 gate inputs with the fan-out limited to at most 2 at every inverter output. The tree has a processing time proportional to h = ld(k) which is superior to the linear time for the direct output. For an even h, the transfer function from the input of the tree to any output is the identity mapping. All outputs transition synchronously.

The general form of a complex CMOS gate is shown in Figure 2.10. If n-channel switch network driving the output to L has the state function f− and the p-channel network driving to H has the state function f+, then the Boolean function f computed by the gate is:

Usually, f+ and f− are complementary and f = f+. The switch networks must not conduct simultaneously for some input, i.e. f+, f− satisfy the equation f+ f− = 0. For an NMOS gate there is only the n-channel network with the state function f− = f°; the p-channel network is replaced by a resistor.

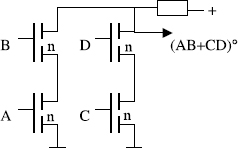

CMOS or NMOS circuits can be designed systematically for a given Boolean expression. One method is to construct the switch networks from sub-networks put in series or in parallel. Not every network can be obtained this way, the most general network of switches being an undirected graph (the edges representing the switches) with two special nodes ‘i’ and ‘o’ so that every edge is on a simple path from ‘i’ to ‘o’ (other edges are no good for making a connection from ‘i’ to ‘o’). This uses less switches than any network constructed by means of serial and parallel compositions that are controlled to perform the same function. Another method is to set up a selector switch tree and only maintain those branches on which the network is to conduct, and eliminate unnecessary switches. This also does not always yield a network with the minimum number of switches.

To derive a construction of the n-channel switch network using serial and parallel compositions to implement a given function f, the state function f− = f° of the network needs to be given as an AND/OR expression in the variables and their complements yet without further NOT operations (every expression in the AND, OR and NOT operations can be transformed this way using the de Morgan laws). For every negated variable an inverted version of the corresponding input signal must be provided by means of an inverter circuit to drive the switch. To every variable site in the expression an n-channel transistor switch is assigned that is controlled by the corresponding signal. AND and OR of sub-expressions are translated into the serial and parallel compositions of the corresponding switch networks, respectively. For the NMOS design, a single pull-up resistor is used to produce the H output when the switch arrangement is open. A CMOS circuit for the desired function requires a p-channel network with the state function f+ = f that is obtained in a similar fashion, e.g. by transforming the negated expression into the above kind of expression. The required number of transistor switches for the NMOS circuit is the number c of variable sites in the expression (the leaves in the expression tree) plus the number of transistors in the inverters required for the variables (the AND and OR operations that usually account for the complexity of a Boolean expression do not cost anything but add up to c-1). The CMOS circuit uses twice this number of transistors if the complementary switch arrangement is chosen to drive to the H level.

Forming circuits by this method leads to less complex and faster circuits than those obtained by composing the elementary NAND, NOR and NOT CMOS circuits. The XOR function would e.g. be computed as:

by means of two inverters and 8 transistor switches whereas otherwise one would use two inverters and 12 transistors (and more time). In the special case of expressions representing f− without negated variables, no inverters are required at all. The expression XY + UV for f− yields the so-called and-or-invert gate with just 8 transistors for the CMOS circuit or 4 transistors for the NMOS circuit (Figure 2.11). Another example of this kind is the three input operation O(X, Y, Z) = XY + YZ + ZX = X(Y + Z) + YZ which requires 10 transistors for the CMOS gate and an inverter for the output. Due to the availability of such complex gate functions the construction of compute circuits can be based on more complex building blocks than just the AND, OR and NOT operations.

Figure 2.11 4-transistor and-or-invert gate

Figure 2.12 CMOS gate using complementary n-channel networks

The p-networks in CMOS gates require a similar number of transistors as the n-networks but require more space. The circuit structure shown in Figure 2.12 uses two complementary n-channel networks instead, and two p-channel transistors to drive the outputs at the n-channel networks to the H level. This structure also delivers the inverted output. If the inputs are taken from gates of this type, too, then all inverters can be eliminated. For simple gates like AND and OR this technique involves some overhead while for complex gates the transistor count can even be reduced as the n-channel networks may be designed to share transistor switches. The XOR gate built this way also requires just 8 transistors plus two input inverters (which may not be required) and also provides the inverted output.

The n- and p-channel transistors not only can be used to switch on low resistance paths to the supply rails but also as ‘pass’ transistors to connect to other sources outputting intermediate voltages. The n-channel pass transistor, however, cannot make a low-impedance connection to a source outputting an H level close to the supply voltage U (above U − UT), and the p-channel pass transistor cannot do so to a source outputting a voltage below UT. If an n-channel and a p-channel transistor switch are connected in parallel and have their gates at opposite levels through an inverter, one obtains a bi-directional switch (the ‘transmission gate’) that passes signals with a low resistance over the full supply range in its on state. The controlled switch is also useful for switching non-digital signals ranging continuously from the ground reference to the positive supply. Transmission gates can be combined in the same fashion as the n-channel and p-channel single-transistor switches are in the networks driving L and H to perform Boolean functions but are no longer restricted to operate near the L or H level (if they do, they can be replaced by a single transistor). The output of a transmission gate will be within a logic level L or H if the input is. The transmission gate does not amplify. The output load needs to be driven by the input through the on resistance of the switch.

Figure 2.13 SEL based on transmission gates

Figure 2.13 shows an implementation of SEL with bi-directional transistor switches which requires less transistors than its implementation as a complex gate, namely just 6 instead of 12. If an inverter is added at the output to decouple the load from the inputs, two more transistors are needed. The multiplexer/selector can be further simplified by using n-channel pass transistors only. Then for H level inputs the output suffers from the voltage drop by UT. The full H level can be restored by an output inverter circuit.

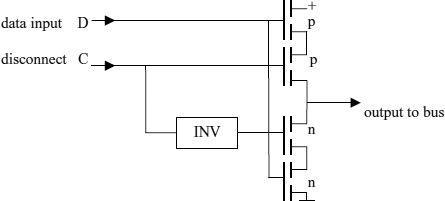

Besides driving an output to H or L there is the option not to drive it at all for some input patterns (it is not recommended to drive an output to H and L simultaneously). Every output connects to some wire used to route it to the input of other circuits or out of the system that constitutes the interconnection media used by the architecture of directly wired CMOS gates and constitutes a hardware resource. The idea to sequentially use the same system component for different purposes also applies to the interconnection media. Therefore it can be useful not to drive a wire continuously from the same output but to be able to disconnect and use the same wire for another data transfer. Then the wire becomes a ‘bus’ to which several outputs can connect. An output that assumes a high-impedance state in response to some input signal patterns is called ‘tri-state’, the third state besides the ordinary H and L output states being the high impedance ‘off’ state (sometimes denoted as ‘Z’ in function tables).

A simple method to switch a CMOS gate output to a high-impedance state in response to an extra control signal is to connect a transmission gate to the output of the gate. If several outputs extended this way are connected to a bus line, one obtains a distributed select circuit similar to the circuit in Figure 2.13 yet not requiring all selector inputs to be routed to the site of a localized circuit. Another implementation of an additional high-impedance state for some gate output is to connect an inverting or non-inverting buffer circuit (one with an identity transfer function) to it with extra transistor switches to disconnect the output that are actuated by the control signal (Figure 2.14). The switches controlled by the disconnect signal can also be put in series with the n- and p-channel networks of a CMOS gate (see Figure 2.10), or the ‘off’ state can be integrated into the definitions of the n- and p-channel networks by defining the input patterns yielding the ‘closed’ states for them not to be complementary (just disjoint).

Banks of several tri-state buffers are a common component in digital systems and are available as integrated components to select a set of input signals to drive a number of bus lines. The circuit in Figure 2.14 can be considered as part of an 8 + 2 transistor inverting selector circuit that uses another chain of 4 transistors for the second data input to which the disconnect signal is applied in the complementary sense.

Figure 2.14 Tri-state output circuit

A simplified version of the tri-state output circuit connected to a bus line is the ‘open-drain’ output that results from replacing the p-channel transistors driving the output to the H level by a single pull-up resistor for the bus line. Several open-drain outputs may be connected to the same bus line. Several outputs may be on and drive the bus to the L level simultaneously. The level on the bus is the result of an AND applied to the individual outputs as in Figure 2.14 within a gate. The AND performed by wiring to a common pull-up resistor is called the ‘wired AND’. An open drain output can be simulated by a tri-state output buffer that uses the same input for data and to disconnect.

The CMOS building blocks explained so far are reactive in the sense of shown in section 1.4.3 After the processing time they keep their output if the inputs do not change. Complex circuits composed from the basic CMOS gates are also reactive. They are usually applied so that their input remains unchanged within the processing time, i.e. without attempting to exploit their throughput that may be higher. Circuits suitable for raising the throughput via pipelining must be given a layered structure (see Figure 1.12) by adding buffers if necessary. Then they also have the advantage that they do not go through multiple intermediate output changes (hazards) that otherwise can arise from operands to a gate having different delays w.r.t. the input.

2.1.2 Registers and Synchronization Signals

Besides the computational elements which can now be constructed from the CMOS gates according to appropriate algorithms (further discussed in Chapter 4), storage elements (registers) have been identified in section 1.4 as an essential prerequisite to building efficient digital systems using pipelining and serial processing.

A simple circuit to store a result value for a few ms from a starting event is the tri-state output (Figure 2.14) or the output of a transmission gate driving a load capacitance (attached gate inputs). Once the output is switched to the high-impedance state, the load capacitor keeps its voltage due to the high impedance of the gate inputs and the output transistors in their ‘off’ state. Due to small residual currents, the output voltage slowly changes and needs to be refreshed by driving the output again at a minimum rate of a few 100 Hz if a longer storage time is required. This kind of storage element is called ‘dynamic’. If the inverter inside the tri-state output circuit can be shared between several storage elements (e.g., in a pipeline), only two transistors are required for this function.

Figure 2.15 Pipelining with tri-state buffers or pass gates used as dynamic D latches

Figure 2.16 Dynamic master-slave D flip-flop

Figure 2.15 shows how a pipeline can be set up using dynamic storage and a periodic clock as in Figure 1.16. The required tri-state outputs can be incorporated into the compute circuits or realized as separate circuits (called dynamic ‘D latches’). The input to the compute circuits is stable during the ‘off’ phase of the clock signal when the transmission gates are high-impedance. In the ‘on’ phase they change, and the compute circuit must not follow these changes before the next ‘off’ time but output the result of the previous input just before the next ‘off’ time at the latest (the clock period must be larger than the processing time). This would hold if the compute circuit has a layered structure operated in a non-pipelined fashion.

If the output follows the input changes too fast, one can resort to using two non-overlapping clocks, one to change the input and one to sample the output. Then the input data are still unchanged when the output gets stored. The output latch is the input latch of the next stage of the pipeline. A simple scheme is to connect two complementary clocks to every second set of output latches which, however, implies that the input ‘on’ phase cannot be used for processing (the dotted clock in Figure 2.15 is the complementary one).

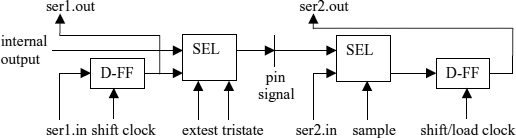

Alternatively, the input to the next pipeline stage can be stored in a second storage element during the time the output of the previous one changes, which is the master–slave storage element shown in Figure 2.16 that provides input and output events at the L-to-H clock edges only as discussed in Section 1.4.3. The clock signal for the second (slave) storage element is the inverse of the first and generated by the inverter needed for the first. While the first (master) storage element can be a transmission gate or the tri-state function within the data source, the second cannot be realized as a transmission gate as this would discharge the storage capacitor but needs an additional inverter or buffer stage (see Figure 2.14). Then a total of 8 transistors are needed to provide the (inverting) register function. From the inverter the dynamic D flip-flop also has a non-zero propagation delay or processing time from the data input immediately before the L-to-H clock edge to the data appearing at the output.

With the master-slave elements the input data are stable during the full clock period and the compute circuit can use all of the period for its processing except for the processing time of the flip-flop without special requirements on its structure. If the circuit in the pipeline is a single gate, the flip-flop delay would still inhibit its efficient usage as in the case of the two-phase sampling scheme.

Figure 2.17 Pipeline stage using dynamic logic

The tricky variant shown in Figure 2.17 (called ‘dynamic logic’) implements a single gate plus D flip-flop pipeline stage with just 5 transistors for the flip-flop function and further reduces the hardware complexity of the gate by eliminating the pull-up network. When the clock is L, the inner capacitor is charged high but the output capacitor holds its previous value. In the H phase the data input to the gates of the switch network must be stable to discharge the inner capacitor again to L if the network conducts, and the output capacitor is set to the resulting value. The processing by the gate and the loading of the output occur during the H phase of the clock, while the n-channel switch network is idle during the L phase. The next stage of the pipeline can use an inverted clock to continue to operate during the L phase when stable data are output. Alternatively, the next stage can use the same clock but a complementary structure using a p-channel network. The simplest case for the n- or p-channel network is a single transistor. Then the stage can be used to add delay elements into a complex circuit (called shimming delays) to give it a layered structure to enable pipelining.

One can hardly do better with such little hardware. If the n-channel network were to be operated at the double rate, the input would have to change very fast. The idle phase is actually used to let the inputs transition (charge the input capacitance to the new value). Dynamic logic is sometimes used in conjunction with static CMOS circuits to realize complex functions with a lower transistor count.

Static storage elements that hold their output without having to refresh it are realized by CMOS circuits using feedback and require some more effort. Boolean algorithms correspond to feed forward networks of gates that do not contain cycles (feedback of an output). If feedback is used for the CMOS inverter by wiring its output to the input, it cannot output an H or L level as none of them is a solution to the equation

![]()

In this case the output goes between the L and H levels and the inverter input (and output) is forced to the steep region of the VG − VD characteristic (see Figure 2.5) where it behaves like an analogue amplifier. For the non-inverting driver built from two inverters put in series, the feedback equation

![]()

has the two solutions L and H. The circuit composed of two CMOS inverters remains infinitely in each of these output states as the flipping to the other state would require energy to charge the output capacitance until it leaves the initial interval, overcoming the discharge current of the active output transistor that does not switch off before the double inverter delay. This 4-transistor feedback circuit is thus a storage element keeping its output value through time. If the energy is applied by briefly connecting a low impedance source to one of the outputs (e.g., from a tri-state output), the feedback circuit can be set into any desired state that remains stored afterwards. Actually, the needed amount of energy can be made very small by applying the feedback from the second inverter through a high resistor or equivalently by using transistors with a high on resistance for it (Figure 2.18) which is sufficient to keep the input capacitance to the first inverter continuously charged to H or L (there is no resistive load otherwise). An immediate application is to keep the last value driven onto a bus line to avoid the line being slowly discharged to levels outside L and H where gates inputting from the bus might start to draw current (see Figure 2.5).

Figure 2.18 Simple static storage element

Figure 2.19 The RS flip-flop (NOR version) and the MRS gate

The combination of Figures 2.14 and 2.18 (the dynamic D latch and the bus keeper circuit) is the so-called D latch (usually, an inverter is added at the output). To set the output value Q from the first inverter in Figure 2.18 to the value presented at the data input D, one needs to apply the L level to the disconnect input C for a short time. Thereafter Q does not change. During the time when the disconnect input is L, the D latch is ‘transparent’. The data input value is propagated to the output and the output follows all changes at the input. This may be tolerable if the input data do not change during this time which may be kept very short and may even be required in some applications.

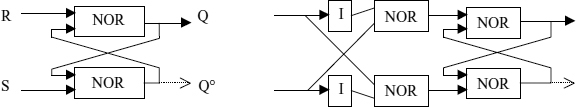

There are many other ways to implement storage elements with feedback circuits. The feedback circuit in Figure 2.19 built from 2 NOR gates (8 transistors) allows the setting of the output to H or L by applying H to the S or R input. It is called the RS flip-flop. It performs its output changes in response to the L-to-H transitions on R or S. A similar behavior results if NAND gates are used instead of the NOT gates (L and H become interchanged). A similar circuit having three stable output states and three inputs to set it into each of these can be built by cross-connecting three 3-input NOR gates instead of the two 2-input gates.

An RS flip-flop can be set and reset by separate signals but requires them not to become active simultaneously. A similar function often used to generate handshaking signals is the so-called Muller C gate with one input inverted (called MRS below) which differs from the RS flip-flop by also allowing the H-H input and not changing the output in that case. It can be derived from the RS flip-flop by using two extra NOR gates and two inverters to suppress the H-H input combination.

The register storing input data at the positive edges of a control signal (see Figure 1.15) without any assumptions about their frequency, and holding the output data for an unlimited time, can be derived from the static D latch. To pass and hold the input data present at the positive clock edge but not change the output before is done by cascading two D latches into the master–slave D flip-flop and using a complementary clock for the second as already shown in Figure 2.16 for the dynamic circuit. While the first stage opens to let the input data pass, the second stage still holds the previous output. At the positive edge the first stage keeps its output which is passed by the second. The inverted clock signal is already generated in the first D latch. Thus 18 transistors do the job, or 14 if pass gates are used instead of the tri-state circuits.

The (static) D flip-flop is the standard circuit implementing the sampling of digital signals at discrete times (the clock events). Banks of D flip-flops are offered as integrated components to sample and store several signals in parallel, also in combination with tri-state outputs. The timing of a static D flip-flop is similar to that of the dynamic flip-flop, i.e. a small processing time is required to pass the input data to the output after the clock edge. For commercial components the timing is referenced to the positive clock edge (for which a maximum rise time is specified) so that input data must be stable in between the set-up time before the edge and the hold time after the edge. The new output appears after a propagation delay from the clock edge.

Apart from these basic storage circuits feedback is not used within Boolean circuits. Feedback is, however, possible and actually resolved into operations performed at subsequent time steps if a register is within the feedback path (Figure 2.20). If the consecutive clock edges are indexed, xi is the input to the Boolean circuit from the register output between the edges i, i + 1, and ei is the remaining input during this time the output f(xi, ei) of the Boolean circuit to the register input is not constrained to equal xi but will be the register output after the next clock edge only, i.e.:

Circuits of this kind (also called automata) have many applications and will be further discussed in Chapter 5. If e.g. the xi are number codes and xi+1 = xi + 1, then the register outputs consecutive numbers (functions as a clock edge counter). The simplest special case are single bit numbers stored in a single D flip-flop and using an inverter to generate xi+1 = xi + 1 = (xi)° (Figure 2.21). After every L-to-H clock edge the output transitions from H to L or from L to H and toggles at half the clock frequency.

Figure 2.20 Feedback via a register

Figure 2.21 Single bit counter

Figure 2.22 Shift register

Figure 2.23 D flip-flop clocked at both edges

Another example of an automaton of this kind is the n-bit shift register built from n D flip-flops put in series (Figure 2.22). At the clock edge the data values move forward by one position so that if ei is the input to the first flip-flop after the ith edge, the n-tuple output by the shift register is (ei − 1,ei − 2,…,ei − n+1). The shift register is a versatile storage structure for multiple, subsequent input values that does not need extra circuits to direct the input data to different flip-flops or to select from their outputs. If the shift register is clocked continuously, it can be built using dynamic D flip-flops of 8 transistors each (6 if dynamic logic is employed).

If instead of just the L-to-H transitions of a ‘unipolar’ clock, both transitions are used, then the clock signal does not need not return to L before the next event, and this ‘bipolar’ clock can run at a lower frequency. Also, two sequences of events signaled by the bipolar clock sources c, c′ can be merged by forming the combined bipolar clock XOR(c, c′) (nearly simultaneous transitions would then be suppressed, however). An L-to-H only unipolar clock signal is converted into an equivalent bipolar one using both transitions with the 1-bit counter (Figure 2.21), and conversely by forming the XOR of the bipolar clock and a delayed version of it. A general method for building circuits that respond to the L-to-H edges of several unipolar clock signals is to first transform the clocks into bipolar ones signaling at both transitions and then merging them into a single bipolar clock.

Figure 2.23 shows a variant of the D flip-flop that samples the input data on both clock edges. The D latches are not put in series as in the master–slave configuration, but in parallel to receive the same input. The inverter is shared by the latches and the select gate.

The auxiliary circuits needed to provide handshaking signals (see Figure 1.18) to a compute building block can be synthesized in various ways from the components discussed so far [7, 39]. In order not to delay the input handshake until the output is no longer needed, and to implement pipelining or the ability to use the circuit several times during an algorithm, a register also taking part in the handshaking is common for the input or output. If a certain minimum rate can be guaranteed for the application of the building block, dynamic storage can be used. A building block that can be used at arbitrary rates requires static storage elements. The handshaking signals can be generated by a circuit that runs through their protocol in several sequential steps synchronized to some clock, but at the level of hardware building blocks simpler solutions exist. Due to the effort needed to generate the handshake signals, handshaking is not applied to individual gates but to more complex functions.

Figure 2.24 Handshake generation

Handshaking begins with the event of new input data that is signaled by letting IR perform its L-to-H transition. After this event the IR signal remains active using some storage element to keep its value. It is reset to the inactive state in response to another event, namely the IA signal transition, and hence requires a storage circuit that responds to two clock inputs. If IR and IA were defined to signal new data by switching to the opposite level (i.e., using both transitions), they would not have to be reset at all and could be generated by separately clocked flip-flops. This definition of the handshaking signals is suitable for pipelining but causes difficulties when handshaking signals need to be combined or selected from different sources.

The generic circuit in Figure 2.24 uses two MRS flip-flops to generate IA and OR. It is combined with an arbitrary compute function and a storage element for its data output (a latch freezing the output data as long as OR is H, maybe just by tri-stating the output of the compute circuit). The OR signal also indicates valid data being stored in the data register. The rising edge of the IR signal is delayed corresponding to its worst case processing delay of the compute circuit by a delay generator circuit while the falling edge is supposed to be passed immediately. A handshaking cycle begins with IA and IR being L. IR goes H, and valid data are presented at the input at the same time. After the processing delay the rising edge of IR is passed to the input of the upper MRS gate. It responds by setting IA to the H level as soon as the OR signal output by the lower MRS gate has been reset by an OA pulse. The setting of IA causes OR to be set again once OA is L, and thereby latches the output data of the compute that have become valid at that time. IA is reset to L when the falling edge of IR is passed to the upper MRS gate. Alternatively, the compute and delay circuits may be placed to the right of the MRS gates and the data register which then becomes an input register.

To generate the delay for a compute circuit that is a network of elementary gates, one can employ a chain of inverters or AND gates (then the delay will automatically adjust to changes of the temperature or the supply voltage). If the circuit is realized by means of dynamic logic or otherwise synchronized to a periodic clock signal, the delay can be generated by a shift register or by counting up to the number of clock cycles needed to perform the computation (an unrelated fast clock could also serve as a time base). Some algorithms may allow the delayed request to be derived from signals within the circuit.

2.1.3 Power Consumption and Related Design Rules

A CMOS circuit does not consume power once the output capacitance has been loaded and all digital signals have attained a steady state close to the ground level or the power supply level and transistor switches in the ‘open’ state really do not conduct. Actually a small quiescent current remains, but less than 1% of the power consumption of a system based on CMOS technology is due to it typically at the current supply voltage levels.

Another part of the total power consumption, typically about 10%, is due to the fact that for gate inputs in the intermediate region between L and H both the n-channel and p-channel transistors conduct to some degree (Figure 2.5). Inputs from a high impedance source (e.g., a bus line) may be kept from discharging into the intermediate region by using hold circuits (Figure 2.18) but every transition from L to H or vice versa needs to pass this intermediate region. The transition times of the digital signals determine how fast this intermediate region is passed and how much power is dissipated during the transitions. Using equation (4) in section 2.1.1, they are proportional to the capacitance driven by the signal source. If f are the frequency of L-H transitions at the inverter input, t the time to pass between L to H and j the mean ‘cross-current’ in that region, then the mean current drawn from the supply is:

To keep this current low, load capacitances must be kept low, and high fan-outs must be avoided. If N inverter inputs need to be driven by a signal, the load capacitance is proportional to N and the cross-current through the N inverters becomes proportional to N2. If a driver tree is implemented (Figure 2.9), about 2N inverter inputs need to be driven, but the rise time is constant and the cross-current is just proportional to N.

The major part of the power consumption is dissipated during the changes of the signals between the logic levels to charge or discharge the input and output capacitances of the gates. To charge a capacitor with the capacitance C from zero to the supply voltage U, the applied charge and energy are:

Half of this energy remains stored in the capacitor while the other half is dissipated as heat when the capacitor is charged via a transistor (or a resistor) from the supply voltage U. If the capacitor is charged and discharged with a mean frequency f, the resulting current and power dissipation are:

This power dissipation may set a limit to the operating frequency of an integrated circuit; if all gates were used at the highest possible frequency, the chip might be heated up too much even if extensive cooling is applied. Semiconductor junctions must stay below 150°C. The junction temperature is warmer than the surface of the chip package by the dissipated power times the thermal resistance of the package.

Equations (7) and (8) also apply if the capacitor is not discharged or charged to the supply voltage but charged by an amount U w.r.t. to an arbitrary initial voltage and then discharged again to this initial voltage through resistors or transistors connected to the final voltage levels to supply the charge or discharge currents. U cannot be reduced arbitrarily for the sake of a reduced power consumption as some noise margin is needed between the H and L levels. The voltage swing can be lowered to levels to a few 100 mV if two-line differential encoding is used for the bits (i.e. a pair of signals driven to complementary levels) by exploiting the common mode noise immunity of a differential signal. If the inputs to a Boolean circuit implementing an algorithm for some function on the basis of gate operations are changed to a new bit pattern, after the processing time of the circuit, all gate outputs will have attained steady values. If k gate inputs and outputs have changed from L to H, the energy for the computation of the new output is at least

if the capacitances at all gate inputs and outputs are assumed to be equal to C and the actual values within the L and H intervals are zero and U. It becomes higher if there occur intermediate changes to invalid levels due to gate delays. These may be determined through an analysis or a simulation of the circuit and are only avoided in a layered circuit design with identical, data-independent gate delays. If the computation is repeated with a frequency f, and k is the mean number of bit changes for the applied input data, then the power dissipation is P = E*f. The power dissipation depends both on the choice of the algorithm and the applied data. Different algorithms for the same function may require different amounts of energy. The number k of level changes does not depend on whether the computation is performed by a parallel circuit or serially. As a partially serial computation needs auxiliary control and storage circuits, it will consume more energy than a parallel one.

Equation (8) depends on the fact that during the charging of the capacitor a large voltage (up to U) develops across the resistor. If during the loading process the voltage across the resistor is limited to a small value by loading from a ramp or sine waveform instead of the fixed level U, the energy dissipated in the resistor of transistor can be arbitrarily low. The capacitor can be charged by the constant current I to the level of U in a time of T = UC/I. During this time the power dissipated by the resistor is N = RI2 and the energy dissipated during T becomes:

If before and after a computation the same number of signal nodes with capacitances C w. r. t. to the ground level are at the H level, then theoretically the new state could be reached without extra energy as the charges in the capacitors are just redistributed at the same level of potential energy. This would always be the case if input and output codes are extended by their complements and the Boolean circuit is duplicated in negative logic or implemented from building blocks as shown in Figure 2.12 (then NOT operations can be eliminated, too, that otherwise introduce data-dependent processing delays). ‘Adiabatic’ computation through state changes at a constant energy level also plays a role in the recent development of quantum computing [8].

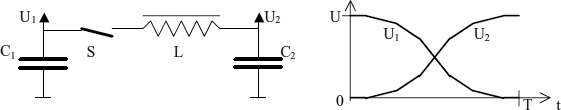

Figure 2.25 shows a hypothetical ‘machine’ exchanging the charges of two capacitors (hence performing the NOT function if one holds the bit and the other its complement) without consuming energy. Both capacitors are assumed to have the capacitance C, the capacitors and the inductance are ideal, and the switch is ideal and can be operated without consuming energy. At the start of the operation C1 is supposed to be charged to the voltage U while C2 is discharged. To perform the computation, the switch is closed exactly for the time of T = 2−1/2π(LC)1/2. At the end C2 is charged to U and C1 is discharged. After another time of T the NOT computation would be undone. In practical CMOS circuits, the energy stored in the individual load capacitors cannot be recovered this way (unless a big bus capacitance were to be driven), but a slightly different approach can be taken to significantly reduce the power consumption.

Figure 2.25 Zero-energy reversible NOT operation

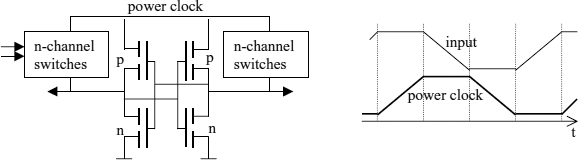

Figure 2.26 Adiabatic CMOS gate

The option to move charges between large capacitors, without a loss of energy, can be exploited by using the sine waveform developing during the charge transfer to smoothly load and discharge sets of input and output capacitors with a small voltage drop across the charging resistors or transistors, as explained above. Thus, the DC power supply is substituted by a signal varying between zero and a maximum value U (a ‘power clock’). Circuits relying on smoothly charging or discharging from or to a power clock are called adiabatic. Various adiabatic circuit schemes have been implemented [37, 38]. A simplified, possible structure of an adiabatic CMOS gate with two complementary n-channel switch networks and complementary outputs is shown in Figure 2.26. During the charging of the output capacitors the logic levels at the transistor gates are assumed to be constant. This can be achieved in a pipelined arrangement where one stage outputs constant output data using a constant supply voltage while the next one gets charged by smoothly driving up its supply. Once charged, the gate keeps its state even while its input gets discharged due to the feedback within the gate circuit. Using equation 10, the energy dissipated by an adiabatic computation can be expected to be inversely proportional to the execution time T (~ I−1), and the current consumption to decrease such as T−2 instead of just T−1 as for standard CMOS circuits clocked at a reduced rate. Practically, only a fraction of these savings can be realized, but enough to make it an interesting design option. The charge trapped in the intermediate nodes of the switch networks cannot be recycled unless all inputs are maintained during the discharging, and the discharging through the p-channel transistors only works until the threshold voltage is reached. Low capacitance registers can be added at the outputs as in Figure 1.16 to avoid the extensive input hold times.

Figure 2.27 Ripple-carry counter

Storage elements are built from CMOS gates and also dissipate power for the output transitions of each of them. A latch uses a smaller number of gates and hence consumes less power than a flip-flop. In a master-slave flip-flop the clock is inverted so that every clock edge leads to charging some internal capacitance C′ even if the data input and output do not change. Thus just the clocking of an n-bit data register at a frequency f continuously dissipates the power of

Registered circuits implemented with dynamic logic (see Figure 2.17) consume less power than conventional CMOS gates combined with latches or master–slave registers. If the clock is held at the L level, then there are no cross-currents even if the inputs discharge to intermediate levels.

In order to estimate the continuous power consumption of a subsystem operating in a repetitive fashion one needs to take into account that the transition frequencies at the different gate inputs and outputs are not the same. The circuit shown in Figure 2.27 is a cascade of single bit counters as shown in Figure 2.21 obtained by using the output of every stage as the clock input of the next. This is called the ripple counter and serves to derive a clock signal with the frequency f/2n from the input clock with the frequency f. Each stage divides the frequency by 2. If I0 is the current consumed by the first stage clocked with f, then the second stage runs at half this frequency and hence consumes I0/2, the third I0/4 etc. The total current consumption of the n-stage counter becomes:

![]()

The technique of using a reactive Boolean circuit with input and output registers clocked at a rate higher than the processing time of the circuit (see section 1.4.3) in order to arrive at a well-defined timing behavior thus leads to continuous power consumption proportional to the clock rate. Some techniques can be used to reduce this power consumption:

- Avoid early, invalid signal transitions and the secondary transitions that may result from them by using layered circuits.

- Use data latches instead of master–slave registers, maybe using an asymmetric clock with a short low time.

- Suppress the clock by means of a gate if a register is not to change, e.g. for extended storage or if the input is known to be unchanged.

- Use low level differential signals for data transfers suffering from capacitive loads.

The gating of a clock is achieved by passing it through an OR (or an AND) gate. If the second input is H (L for the AND gate), H (L) is selected for the gate output. The control signal applied to the second input must not change when the clock signal is L (H).

If the power consumption is to be reduced, the frequency of applying the components (the clock frequency for the registers) must be reduced and thereby the processing speed, the throughput and the efficiency (the fraction of time in which the compute circuits are actually active). The energy needed for an individual computation does not change and is proportional to the supply voltage U. The energy can only be reduced and the efficiency can be maintained by also lowering U. Then the transistor switches get a higher ‘on’ resistance and the processing time of the gate components increases. The ‘on’ resistance is, in fact, inversely proportional to U−UT where U denotes the supply voltage and UT is the threshold voltage (see section 2.1.1). Then the power consumption for a repeated computation becomes roughly proportional to the square of the clock frequency. If the required rate of operations of a subsystem significantly varies with time, this can be used to dynamically adjust its clock rate and the supply voltage so that its efficiency is maintained. The signals at the interface of the subsystem would still use some standard voltage levels. This technique is common for battery-powered computers, but can be systematically used whenever a part of a system cannot be used efficiently otherwise. A special case is the powering down of subsystems that are not used at all for some time.

The use of handshaking between the building blocks of a system can also serve to reduce the power consumption. Instead of a global clock, individual clocks are used (the handshake signals) that are only activated at the data rate really used for them. A handshaking building block may use a local clock but can gate it off as long as there are no valid data. This is similar to automatically reducing the power consumption of unused parts of the system (not trying to using them efficiently). If the processing delay for a building block is generated by a chain of inverters, the estimating delay adapts to voltage and temperature in the same way as the actual processing time. It then suffices to vary the voltage to adjust the power dissipation, and the handshake signals (the individual clocks) adjust automatically. A control flow is easily exploited by suppressing input handshake to unused sub-circuits. Similar power-saving effects (without the automatic generation and adjustment of delays) can, however, also be obtained with clocked logic by using clock gating.

2.1.4 Pulse Generation and Interfacing

Besides the computational building blocks and their control, a digital system needs some auxiliary signals like a power-on reset signal and a clock source that must be generated by appropriate circuits, and needs to be interfaced to the outside world, reading switches and driving loads. In this section, some basic circuits are presented that provide these functions. Interfaces to input and output analogue signals will follow in Chapter 8. For more details on circuit design we refer to [19].

The most basic signal needed to run a digital system (and most other electronic circuits) is a stable DC power supply delivering the required current, typical supply voltages being 5.0V, 3.3V for the gates driving the signals external to the chips and additionally lower voltages like 2.5V, 1.8 V, 1.5V, and 1.2V for memory interfaces and circuits within the more recent chips. In many applications, several of these voltages need to be supplied for the different functions. To achieve a low impedance at high frequencies the power supply signals need to be connected to grounded capacitors close to the load sites all over the system.

A typical power supply design is to first provide an unregulated DC voltage from a battery or one derived from an AC outlet and pass it through a linear or a switching regulator circuit. Regulators outputting e.g. a smooth and precise 5V DC from an input ranging between 7-20V with an overlaid AC ripple are available as standard integrated 3-terminal circuits. The current supplied at the output is passed to it from the input through a power transistor within the regulator. For an input voltage above 10V, more power is dissipated by this transistor than by the digital circuits fed by it. A switching regulator uses an inductance that is switched at a high frequency (ranging from 100 kHz to several MHz) to first store energy from the input and then to deliver it at the desired voltage level to the output. It achieves a higher efficiency (about 90%, i.e. consumes only a small fraction of the total power by itself) and a large input range. Switching regulators can also be used to convert from a low battery voltage to a higher one (Figure 2.28). The switches are implemented with n-channel and p-channel power MOS transistors having very low resistances (some 0.1Ω). The transistor switches are controlled by digital signals. Single and multiple regulators are available as integrated circuits including the power transistors.

Figure 2.28 Switching down and up regulator configurations

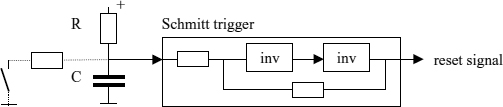

Figure 2.29 Reset signal generation using a Schmitt trigger circuit

A high efficiency voltage converter deriving the voltage Θ/2 from a supply voltage Θ can be built by using a switched capacitor only that is connected between the input and the output terminals to get charged by the output current, or alternatively between the ground reference and the output terminal to get discharged by the load current. The two connections are made by low resistance transistor switches and alternate at a high frequency so that a small voltage change U develops and the power dissipation is low due to equations (7) and (8) in the previous section. The input delivers the load current only at half time.

After power-up, some of the storage elements in a digital system must usually be set to specific initial values which is performed in response to a specific input signal called a reset signal. It is defined to stay at a specific level, say L, for a few ms after applying the power and then changes to H. An easy way to generate a signal of this kind is by means of a capacitor that is slowly charged to H via a resistor. In order to derive from a digital signal that makes a fast transition from L to H, the voltage across the capacitor can be passed through a CMOS inverter that is used here as a high gain amplifier. If feedback is implemented as in Figure 2.29 a single transition results even if the input signal or the power supply is overlaid with some electrical noise. The reset circuit outputs the L level after power-up that holds for some time after the power has reached its full level depending on the values for C and the resistors (usually its duration does not need be precise). The switch shown as an option permits a manual reset by discharging the capacitor.

Figure 2.30 Crystal oscillator circuit

The buffer circuit with a little amount of feedback to the input is a standard circuit known as the Schmitt trigger that is used to transform a slow, monotonic waveform into a digital signal. Its Vin − Vout characteristic displays a hysteresis. The L-H transition occurs at a higher input level than the H-L transition. The actual implementation would realize the feedback resistor from the output by simply using transistors with a high on resistance. The other one can be substituted by a two transistor non-inverting input stage (similar to Figure 2.5 but with the n- and p-channel transistors interchanged).

A periodic clock signal as needed for clocking the registers and as the timing reference within a digital system is easily generated using the CMOS inverter circuit as a high gain amplifier again and using a resonator for a selective feedback at the desired frequency. The circuit in Figure 2.30. uses a piezoelectric crystal for this purpose and generates a full swing periodic signal at its mechanical resonance frequency which is very stable (exhibits relative frequency deviations of less than 10−7 only) and may be selected in the rage of 30 kHz…60 MHz through the mechanical parameters of the crystal. The resistor serves to let the amplifier operate at the midpoint of its characteristic (Figure 2.5), and the capacitors serve as a voltage divider to provide the phase shift needed for feedback. The second inverter simply amplifies the oscillator output to a square waveform with fast transitions between L and H. Crystals are offered commercially at any required frequencies, and complete clock generator circuits including the inverters are offered as integrated components as well.

The frequency of a crystal oscillator cannot be changed but other clock signals can be derived from it by means of frequency divider circuits. A frequency divider by two is provided by the circuit shown in Figure 2.21 using a D flip-flop and feeding back its inverted output to its data input. Then the output becomes inverted after every clock edge (plus the processing delay of the flip-flop), and the resulting signal is a square wave of half the clock frequency h and a 50% duty cycle, i.e. the property that the L and H times are identical (this is not guaranteed for the crystal oscillator output). If several frequency dividers of this kind are cascaded so that the output of a divider becomes the clock input for the next stage, one obtains a frequency divider by 2n, the ripple-carry counter already shown in Figure 2.27. As each of the flip-flops has its own clock their clock edges do not occur simultaneously.

To divide the input frequency h by some integer k in the range 2n−1 < k ≤ 2n, a modified edge counter circuit can be used, i.e. an n-bit register with a feedback function f that performs the n-bit binary increment operation f(x) = x + 1 as proposed in section 2.1.2 (also called a synchronous counter as all flip-flops of the register here use the same clock signal), but only for x < k − 1, whereas f(k − 1) = 0. Then the register cycles through the sequence of binary codes of 0,1,2,…,k-1 and the highest code bit is a periodic signal with the frequency h/k.

Figure 2.31 Fractional frequency divider

Figure 2.32 PLL clock generator

Another variant is the fractional counter that generates the multiple h*k/2n for a non-negative integer k < 2n−1 (Figure 2.31). This time the feedback function is f(x) = x + k (algorithms for the binary add operation follow in section 4.2). The output from the highest code bit is not strictly periodic at the prescribed frequency (for odd k, the true repetition rate is h/2n). The transitions between L and H remain synchronized with the input clock and occur with a delay of at most one input period.

The frequency dividers discussed so far generate frequencies below ½h only. It is also useful to be able to generate a periodic clock at a precise integer multiple k of the given reference h. The crystal oscillators do not cover clock frequencies of the several 100 MHz needed for high speed processors but their frequencies might be multiplied to the desired range. It is quite easy to build high frequency voltage-controlled oscillators (VCO), the frequencies of which can be varied over some range by means of control voltages moving continuously over a corresponding range. The idea is to control the frequency q of a VCO so that q / k = h (a signal with the frequency q/k is obtained from a frequency divider). The deviation is detected by a so-called phase comparator circuit and used to generate the control voltage, setting up a phase-locked loop (PLL, Figure 2.32). If the VCO output is divided by m, then the resulting output frequency becomes k/m* h.

The phase comparator (PC in Figure 2.32) can be implemented as a digital circuit that stores two bits encoding the numbers 0, 1, 2, 3 and responds to the L-to-H transitions at two separate clock inputs. The one denoted ‘+’ counts up to 3, and the one denoted ‘−’ counts down to 0. The phase comparator outputs the upper code bit, i.e. zero for 0, 1 and the supply voltage for 2, 3. If the frequency of the VCO is higher than k*h, there are more edges counting down and PC is in one of the states 0, 1 and outputs the zero level which drives the VCO frequency down. If the reference clock is higher, it is driven up. If both frequencies have become equal, the state alternates between 1, 2 and the mean value of the output voltage depends on their relative phase which becomes locked at some specific value. The R-R′-C integrator circuit needs to be carefully designed in order to achieve a fast and stable control loop [40]. The VCO output can then be passed through a divide by m counter to obtain the rational multiple of the reference clock frequency by k/m.

Input data to a digital system must be converted to the H and L levels required by the CMOS circuits. The easiest way to input a bit is by means of a mechanical switch shorting a H level generated via a resistor to ground. Mechanical make switches generate unwanted pulses before closing due to the jumping of the contact, which are recognized as separate edges if the input is used as a clock. Then some pre-processing is necessary to ‘debounce’ the input. The circuit in Figure 2.29 can be used, or a feedback circuit like the RS flip-flop or the hold circuit in Figure 2.18 that keeps the changed input value from the first pulse (but needs a separate switch or a select switch to be reset).

Data input from other machines is usually by means of electrical signals. If long cabling distances are involved, the L and H levels used within the digital circuits do not provide enough noise margin and are converted to higher voltage levels (e.g. [3, 12]V to represent 0 and [−12, −3]V to represent 1) or to differential signals by means of input and output amplifiers that are available as integrated standard components. For differential signals the H and L levels can be reduced to a few 100 mV. At the same time the bit rates can be raised. The LVDS signaling standard (‘low voltage differential signaling’) e.g. achieves bit rates of 655 M bit/s and, due to its low differential voltages of ±350 mV, operates from low power supply voltages [21]. LVDS uses current drivers to develop these voltages levels across 100Ω termination resistors. Variants of LVDS support buses and achieve bit rates beyond 1 Gbit/s. An LVDS bus line is terminated at both ends and therefore needs twice the drive current.

If systems operating at different ground levels need to be interfaced, the signals are transferred optically by converting a source signal by means of a light emitting diode that is mounted close to a photo transistor converting back to an electrical signal. Such optoelectronic couplers are offered as integrated standard components as well (alternatively, the converters are linked by a glass fiber replacing the cable).

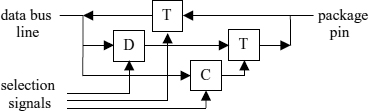

The switches, converters, cables, wires and even the input pins to the integrated circuits needed to enter data into a system are costly and consume space. The idea of reusing them in a time-serial fashion for several data transfers is applied in the same way as it was to the compute circuits. Again, this involves auxiliary circuits to select, distribute and store data. A common structure performing some of these auxiliary functions for the transfer of an n-bit code using a single-bit interface in n time steps is the shift register (Figure 2.22). After n time steps the code stands in the flip-flops of the register and can be applied in parallel as an input to the compute circuits. Figure 2.33 shows the resulting interface structure. The clock signal defines the input events for the individual bits and must be input along with the data (or generated from the transitions of the data input). If both clock edges are used, the interface is said to be a double data rate interface (DDR). No further handshaking is needed for the individual bits, but it is needed to define the start positions of multi-bit code words and must be input or be generated as well (at least, the clock edges must be counted to determine when the receiving shift register has been filled with new bits). The serial interface is reused as a whole to transfer multiple code words in sequence. The register, the generation of the clock and the handshake signals add up to a complex digital circuit that does not directly contribute to the data processing but can be much cheaper than the interface hardware needed for the parallel code transfer.

Figure 2.33 Serial interface structure (G: bit and word clock generator, C: signal converter)

The output from a digital system (or subsystem) to another one is by means of electrical signals converted to appropriate levels, as explained before. A serial output interface requires a slightly more complex register including input selectors to its flip-flops so that it can also be loaded in parallel in response to a word clock (Figure 2.33). If the data rate achieved with the bit-serial transfer is not high enough, two or four data lines and shift registers can be operated in parallel. Another option is to convert the interface signals into differential ones using LVDS buffers. Then much higher data rates can be achieved that compensate for the serialization of the transfer.

To further reduce the cables and wires the same can be used to transfer data words in both directions between the systems (yet at different times using some extra control). Finally, the clock lines can be eliminated. For an asynchronous serial interface each word transmission starts by a specific signal transition (e.g. L -> H) and the data bits follow this event with a prescribed timing that must be applied by the receiver to sample the data line. Another common method is to share a single line operating at the double bit rate for both the clock and the data by transmitting every ‘0’ bit as a 0-1 code and every ‘1’ as a 1-0 code (Manchester encoding), starting each transmission by an appropriate synchronization sequence. Then for every bit pattern the transmitted sequence makes many 0-1 transitions which can be used to regenerate the clock using a PLL circuit at the receive site. The effort to do this is paid for by the simplified wiring.

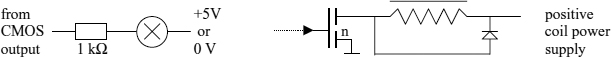

The CMOS outputs can directly drive light emitting diodes (LED) through a resistor that give a visible output at as little as 2mA of current (Figure 2.34). To interface to the coil of an electromechanical switch or a motor one would use a power transistor to provide the required current and voltage levels. When the transistor switches off, the clamp diode limits the induced voltage to slightly above the coil power supply voltage and thereby protects the transistor from excessive voltages. The same circuit can be used to apply any voltage between the coil power supply and zero by applying a high frequency, periodic, pulse width modulated (PWM) digital input signal to the gate of the transistor. To output a bipolar signal, ‘H’ bridge arrangements of power transistors are used. Integrated LED arrays or power bridges to drive loads in both polarities are common output devices.

Figure 2.34 Interfacing to LED lamps and coils

2.2 CHIP TECHNOLOGY

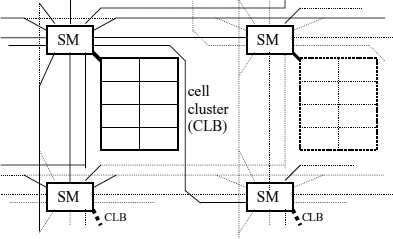

Since the late 1960s, composite circuits with several interconnected transistors have been integrated onto a silicon ‘chip’ and been packed into appropriate carriers supplying leads to the inputs and outputs of the circuit (and to the power supply). Since then the transistor count per chip has raised almost exponentially. At the same time, the dimensions of the individual transistors were reduced by more than two orders of magnitude. For the gate lengths the values decreased from 10 μm in 1971 to 0.1 μm in 2001. The first families of bipolar and CMOS integrated logic functions used supply voltages of 5V and above. A 100 mm2 processor chip filled with a mix of random networks of gates and registers and some memory can hold up to 5*107 transistors in 0.1 μ CMOS technology. For dedicated memory chips the densities are much higher (see section 2.2.2).

The technology used for a chip and characterized by the above feature size parameter s determines the performance level of a chip to a high degree. If a single-chip digital system or a component such as a processor is reimplemented in a smaller feature size technology, it becomes cheaper, faster, consumes less power, and may outperform a more efficient design still manufactured using the previous technology. Roughly, the thickness of the gate insulators is proportional to s. The supply voltage and the logic levels need to be scaled proportional to s in order to maintain the same levels for the electrical fields. For a given chip area, the total capacitance is proportional to s−1, the power dissipation P = U2Cf (formula (8) in 2.1.3) for an operating frequency f hence proportional to s, and f can be raised proportional to s−1 for a fixed power level. At the same time, the gate density grows with s−2.

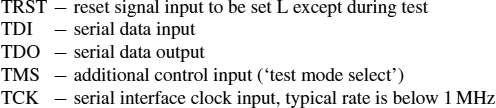

A problem encountered with highly integrated chips is the limitation of the number of i/o leads to a chip package. Whereas early small-scale integrated circuits had pin counts of 8–16, pin counts can now range up to about 1000, but at considerable costs for the packages and the circuit boards. For chips with up to 240 leads surface-mount quadratic flat packages (QFP) are common from which the leads extend from the borders with spacing as low as ½ mm. To reduce the package sizes and to also support higher i/o counts, ball grid array (BGA) packages have become common where the leads (tiny solder balls) are arranged in a quadratic grid at the bottom side of the package and thus can fill out the entire area of the package. While a 240 pin QFP has a size of 32 × 32 mm, a BGA package with the same lead count only requires about 16 × 16 mm. For the sake of reduced package and circuit board costs, chips with moderate pin counts are desirable. Chips are complex hardware modules within a digital system. Generally, the module interfaces within a system should be as simple as possible. The data codes exchanged between the chips may be much wider than the number of signal lines between them anyhow by using serial data transfers in multiple time steps.

For large chips, testing is an issue and must be supported by their logic design. Although the manufacturing techniques have improved, isolated faulty transistors or gates can render a chip unusable unless the logic design provides some capabilities to replace them by spare operational ones (this is common for chips which contain arrays of similar substructures). Otherwise the ‘yield’ for large chips becomes low and lets the cost of operational ones increase. Chips are produced side by side on large silicon wafers (with diameters of 20 cm and above) from which they are cut to be packaged individually. The level of integration has been raised further in special applications by connecting the operational chips on a wafer without cutting it (wafer-scale integration). The array of interconnected chips on a wafer must support the existence of faulty elements.

The achievable complexity of integrated circuits is high enough to allow a large range of applications to be implemented on single chip digital processors, at least in principle. The high design and manufacturing costs of large-scale integrated circuits, however, prohibit single chip ASIC implementations except for very high volume products. Otherwise the digital system would be built from several standard or application specific chip components mounted and connected on one or several circuit boards. The standard chips and ASIC devices are the building blocks for the board level design, and the implementation of multi-chip systems on circuit boards provides the scalability required to cover both high performance or low volume applications. Chips always have a fixed, invariable structure. They can, however, be designed to offer some configurability to support more than one application or some design optimizations without having to redesign the hardware (by implementing combined functions in the sense discussed in Section 1.3.3).

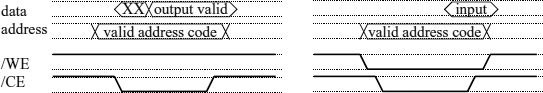

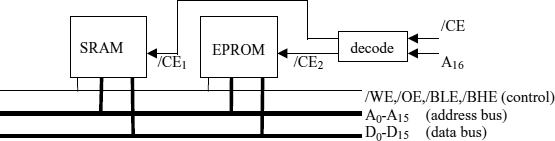

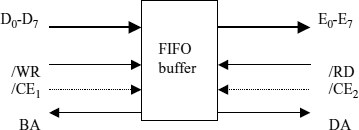

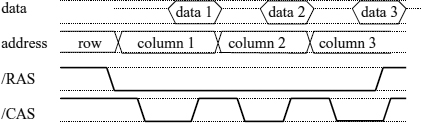

The component chips can only be cost effective if they are produced in large volumes themselves which is the case if their respective functions are required in several applications, or if they can be programmed or configured for different applications. At the board level, reusable ‘standard’ subsystems are attractive, too, and the cost for board level system integration must be considered to compare different design options. Chips to be used as components on circuit boards benefit from integrating as many functions as possible and from having a small number of easy-to-use interface signals with respect to their timing and handshaking. In general, the interfacing of chips on a board requires pin drivers for higher signal levels than those inside the chips involving extra delays and power consumption related to their higher capacitive loads. If there is a choice of using a chip integrating the functions of two other ones, it will provide more performance and lower power consumption yet less modularity for the board level design.