CHAPTER 10

Low Bit-Rate Coding: Theory and Evaluation

In a world of limitless storage capacity and infinite bandwidth, digital signals could be coded without regard to file size, or the number of bits needed for transmission. While such a world may some day be approximated, today there is a cost for storage and bandwidth. Thus, for many applications, it is either advantageous or mandated that audio signals be coded as bit-efficient as possible. Accomplishing this task while preserving audio fidelity is the domain of low bit-rate coding. Two approaches are available. One approach uses perceptual coding to reduce file size while avoiding significant audible loss and degradation. The art and science of lossy coding combines the perceptual qualities of the human ear with the engineering realities of signal processing. A second approach uses lossless coding in which file size is compressed, but upon playback the original uncompressed file is restored. Because the restored file is bit-for-bit identical to the original, there is no change in audio fidelity. However, lossless compression cannot achieve the same amount of data reduction as lossy methods. Both approaches can offer distinct advantages over traditional PCM coding.

This chapter examines the theory of perceptual (lossy) coding, as well as the theory of lossless data compression. In addition, ways to evaluate the audible quality of perceptual codecs are presented. Chapter 11 more fully explores the details of particular codecs, both lossy and lossless. The more specialized nature of speech coding is described in Chap. 12.

Perceptual Coding

Edison cylinders, like all analog formats, store acoustical waveforms with a mimicking pattern—an analog—of the original sonic waveform. Some digital media, such as the Compact Disc, do essentially the same thing, but replace the continuous mechanical pattern with a discrete series of numbers that represents the waveform’s sampled amplitude. In both cases, the goal is to reconstruct a waveform that is physically identical to the original within the audio band. With perceptual (lossy) coding, physical identity is waived in favor of perceptual identity. Using a psychoacoustic model of the human auditory system, the codec (encoder-decoder) identifies imperceptible signal content (to remove irrelevancy) as bits are allocated. The signal is then coded efficiently (to avoid redundancy) in the final bitstream. These steps reduce the quantity of data needed to represent an audio signal but also increase quantization noise. However, much of the quantization noise can be shaped and hidden below signal-dependent thresholds of hearing. The method of lossy coding asks the conceptual question—how much noise can be introduced to the signal without becoming audible?

Through psychoacoustics, we can understand how the ear perceives auditory information. A perceptual coding system strives to deliver all of perceived information, but no more. A perceptual coding system recognizes that sounds that are reproduced have the human ear as the intended receiver. A perceptual codec thus strives to match the sound to the receiver. Logically, the first step in designing such a codec is to understand how the human ear works.

Psychoacoustics

When you hear a plucked string, can you distinguish the fifth harmonic from the fundamental? How about the seventh harmonic? Can you tell the difference between a 1000-Hz and a 1002-Hz tone? You are probably adept at detecting this 0.2% difference. Have you ever heard “low pitch” in which complex tones seem to have a slightly lower subjective pitch than pure tones of the same frequency? All this and more is the realm of psychoacoustics, the study of human auditory perception, ranging from the biological design of the ear to the psychological interpretation of aural information. Sound is only an academic concept without our perception of it. Psychoacoustics explains the subjective response to everything we hear. It is the ultimate arbitrator in acoustic concerns because it is only our response to sound that fundamentally matters. Psychoacoustics seeks to reconcile acoustic stimuli and all the scientific, objective, and physical properties that surround them, with the physiological and psychological responses evoked by them.

The ear and its associated nervous system is an enormously complex, interactive system with incredible powers of perception. At the same time, even given its complexity, it has real limitations. The ear is astonishingly acute in its ability to detect a nuance or defect in a signal, but it is also surprisingly casual with some aspects of the signal. Thus the accuracy of many aspects of a coded signal can be very low, but the allowed degree of diminished accuracy is very frequency- and time-dependent.

Arguably, our hearing is our most highly developed sense; in contrast, for example, the eye can only perceive frequencies over one octave. As with every sense, the ear is useful only when coupled to the interpretative powers of the brain. Those mental judgments form the basis for everything we experience from sound and music. The left and right ears do not differ physiologically in their capacity for detecting sound, but their respective right- and left-brain halves do. The two halves loosely divide the brain’s functions. There is some overlap, but the primary connections from the ears to the brain halves are crossed; the right ear is wired to the left-brain half and the left ear to the right-brain half. The left cerebral hemisphere processes most speech (verbal) information. Thus, theoretically the right ear is perceptually superior for spoken words. On the other hand, it is mainly the right temporal lobe that processes melodic (nonverbal) information. Therefore, we may be better at perceiving melodies heard by the left ear.

Engineers are familiar with the physical measurements of an audio event, but psychoacoustics must also consider the perceptual measurements. Intensity is an objective physical measurement of magnitude. Loudness, first introduced by physicist Georg Heinrich Barkhausen, is the perceptual description of magnitude that depends on both intensity and frequency. Loudness cannot be empirically measured and instead is determined by listeners’ judgments. Loudness can be expressed in loudness levels called phons. A phon is the intensity of an equally loud 1-kHz tone, expressed in dB SPL. Loudness can also be expressed in sones, which describe loudness ratios. One sone corresponds to the loudness of a 40 dB SPL sine tone at 1 kHz. A loudness of 2 sones corresponds to 50 dB SPL. Similarly, any doubling of loudness in sones results in a 10-dB increase in SPL. For example, a loudness ratio of 64 sones corresponds to 100 dB SPL.

The ear can accommodate a very wide dynamic range. The threshold of feeling at 120 dB SPL has a sound intensity that is 1,000,000,000,000 times greater than that of the threshold of hearing at 0 dB SPL. The ear’s sensitivity is remarkable; at 3 kHz, a threshold sound displaces the eardrum by a distance that is about one-tenth the diameter of a hydrogen atom. For convenience of expression, it is clear why the logarithmic decibel is used when dealing with the ear’s extreme dynamic range. The ear is also fast; within 500 ms of hearing a maximum-loudness sound, the ear is sensitive to a threshold sound. Thus, whereas the eye only slowly adjusts its gain for different lighting levels and operates over a limited range at any time, the ear operates almost instantaneously over its full range. Moreover, whereas the eye can perceive an interruption to light that is 1/60 second, the ear may detect an interruption of 1/500 second.

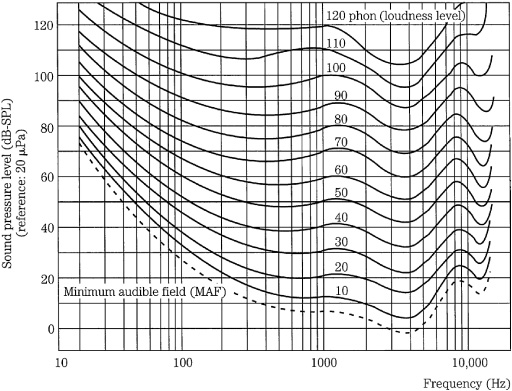

Although the ear’s dynamic range is vast, its sensitivity is frequency-dependent. Maximum sensitivity occurs at 1 kHz to 5 kHz, with relative insensitivity at low and high frequencies. This is because of the pressure transfer function that is an intrinsic part of the design of the middle ear. Through testing, equal-loudness contours such as the Robinson–Dadson curves have been derived, as shown in Fig. 10.1. Each contour describes a range of frequencies that are perceived to be equally loud. The lowest contour describes the minimum audible field, the minimum sound pressure level across the audible frequency band that a person with normal hearing can perceive. For example, a barely audible 30-Hz tone would be 60 dB louder than a barely audible 4-kHz tone. The response varies with respect to level; the louder the sounds, the flatter our loudness response. The contours are rated in phons, measuring the SPL of a contour at 1 kHz.

FIGURE 10.1 The Robinson–Dadson equal-loudness contours show that the ear is nonlinear with respect to frequency and level. These contours are based on psychoacoustic studies, using sine tones. (Robinson and Dadson, 1956)

Frequency is a literal measurement. Pitch is a subjective, perceptual measure. Pitch is a complex characteristic based on frequency, as well as other physical quantities such as waveform and intensity. For example, if a 200-Hz sine wave is sounded at a soft then louder level, most listeners will agree that the louder sound has a lower pitch. In fact, a 10% increase in frequency might be necessary to maintain a listener’s subjective evaluation of a constant pitch at low frequencies. On the other hand, in the ear’s most sensitive region, 1 kHz to 5 kHz, there is almost no change in pitch with loudness. Also, with musical tones, the effect is much less. Looked at in another way, pitch, quite unlike frequency, is purely a musical characteristic that places sounds on a musical scale.

The ear’s response to frequency is logarithmic; this can be demonstrated through its perception of musical intervals. For example, the interval between 100 Hz and 200 Hz is perceived as an octave, as is the interval between 1000 Hz and 2000 Hz. In linear terms, the second octave is much larger, yet the ear hears it as the same interval. For this reason, musical notation uses a logarithmic measuring scale. Each four and one-half spaces or lines on the musical staff represent an octave, which might be only a few tens of Hertz apart, or a few thousands, depending on the clef and ledger lines used.

Beat frequencies occur when two nearly equal frequencies are sounded together. The beat frequency is not present in the audio signal, but is an artifact of the ear’s limited frequency resolution. When the difference in frequency between tones is itself an audible frequency, a difference tone can be heard. The effect is especially audible when the frequencies are high, the tones fairly loud, and separated by not much more than a fifth. Although debatable, some listeners claim to hear sum tones. An inter-tone can also occur, especially below 200 Hz where the ear’s ability to discriminate between simultaneous tones diminishes. For example, simultaneous tones of 65 Hz and 98 Hz will be heard not as a perfect fifth, but as an 82-Hz tone. On the other hand, when tones below 500 Hz are heard one after the other, the ear can differentiate between pitches only 2 Hz apart.

The ear-brain is adept at determining the spatial location of sound sources, using a variety of techniques. When sound originates from the side, the ear-brain uses cues such as intensity differences, waveform complexity, and time delays to determine the direction of origin. When equal sound is produced from two loudspeakers, instead of localizing sound from the left and right sources, the ear-brain interprets sound coming from a space between the sources. Because each ear receives the same information, the sound is stubbornly decoded as coming from straight ahead. Similarly, stereo is nothing more than two different monaural channels. The rest is simply illusion.

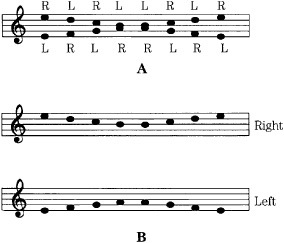

There is probably no limit to the complexity of psychoacoustics. For example, consider the musical tones in Fig. 10.2A. A scale is played through headphones to the right and left ears. Most listeners hear the pattern in Fig. 10.2B, where the sequence of pitches is correct, but heard as two different melodies in contrary motion. The high tones appear to come from the right ear, and the lower tones from the left. When the headphones are reversed, the headphone formerly playing low tones now appears to play high tones, and vice versa. Other listeners might hear low tones to the right and high tones to the left, no matter which way the headphones are placed. Curiously, right-handed listeners tend to hear high tones on the right and lows on the left; not so with lefties. Still other listeners might perceive only high tones and little or nothing of the low tones. In this case, most right-handed listeners perceive all the tones, but only half of the lefties do so.

FIGURE 10.2 When a sequence of two-channel tones is presented to a listener, perception might depend on handedness. A. Tones presented to listener. B. Illusion most commonly perceived. (Deutsch, 1983)

The ear perceives only a portion of the information in an audio signal; that perceived portion is the perceptual entropy—estimated to be as low as 1.5 bits/sample. Small entropy signals can be efficiently reduced; large entropy signals cannot. For this reason, a codec might output a variable bit rate that is low when information is poor, and high when information is rich. The output is variable because although the sampling rate of the signal is constant, the entropy in its waveform is not. Using psychoacoustics, irrelevant portions of a signal can be removed; this is known as data reduction. The original signal cannot be reconstructed exactly. A data reduction system reduces entropy; by modeling the perceptual entropy, only irrelevant information is removed, hence the reduction can be inaudible. A perceptual music codec does not attempt to model the music source (a difficult or impossible task for music coding); instead, the music signal is tailored according to the receiver, the human ear, using a psychoacoustic model to identify irrelevant and redundant content in the audio signal. In contrast, some speech codecs use a model of the source, the vocal tract, to estimate speech characteristics, as described in Chap. 12.

Traditionally, audio system designers have used objective parameters as their design goals—flat frequency response, minimal measured noise, and so on. Designers of perceptual codecs recognize that the final receiver is the human auditory system. Following the lead of psychoacoustics, they use the ear’s own performance as the design criterion. After all, any musical experience—whether created, conveyed, and reproduced via analog or digital means—is purely subjective.

Physiology of the Human Ear and Critical Bands

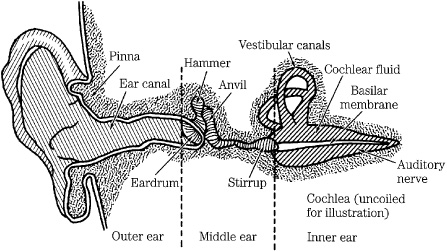

The ear uses a complex combination of mechanical and neurological processes to accomplish its task. In particular, the ear performs the transformation from acoustical energy to mechanical energy and ultimately to the electrical impulses sent to the brain, where information contained in sound is perceived. A simplified look at the human ear’s physiological design is shown in Fig. 10.3. The outer ear collects sound, and its intricate folds help us to assess directionality. The ear canal resonates at around 3 kHz to 4 kHz, providing extra sensitivity in the frequency range that is critical for speech intelligibility. The eardrum transduces acoustical energy into mechanical energy; it reaches maximum excursion at about 120-dB SPL, above which it begins to distort the waveform. The three bones in the middle ear, colloquially known as the hammer, anvil, and stirrup (the three smallest bones in the body) provide impedance matching to efficiently convey sounds in air to the fluid-filled inner ear. The vestibular canals do not affect hearing, but instead are part of a motion detection system providing a sense of balance. The coiled basilar membrane detects the amplitude and frequency of sound; those vibrations are converted to electrical impulses and sent to the brain as neural information along a bundle of nerve fibers. The brain decodes the period of the stimulus and point of maximum stimulation along the basilar membrane to determine frequency; activity in local regions surrounding the stimulus is ignored.

FIGURE 10.3 A simplified look at the physiology of the human ear. The coiled cochlea and basilar membrane are straightened for clarity of illustration.

Examination of the basilar membrane shows that the ear contains roughly 30,000 hair cells arranged in multiple rows along the basilar membrane, roughly 32 mm long; this is the Organ of Corti. The cells detect local vibrations of the basilar membrane and convey audio information to the brain via electrical impulses. The decomposition of complex sounds into constituent components is analogous to Fourier analysis and is known as tonotopicity. Frequency discrimination dictates that at low frequencies, tones a few Hertz apart can be distinguished; however, at high frequencies, tones must differ by hundreds of Hertz. In any case, hair cells respond to the strongest stimulation in their local region; this region is called a critical band, a concept introduced by Harvey Fletcher in 1940.

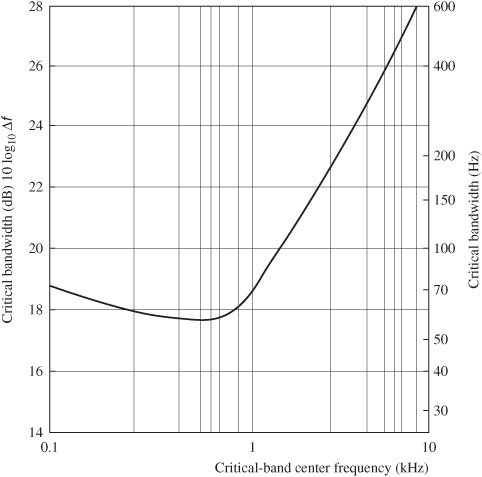

Fletcher’s experiments showed that, for example, when noise masks a pure tone, only frequency components of the noise that are near the frequency of the tone are relevant in masking the tone. Energy outside the band is inconsequential. This frequency range of relevancy is the critical band. Critical bands are much narrower at low frequencies than at high frequencies; three-fourths of the critical bands are below 5 kHz; in terms of masking, the ear receives more information from low frequencies and less from high frequencies. When critical bandwidths are plotted with respect to critical-band center frequency, critical bandwidths are approximately constant from 0 Hz to 500 Hz, and then approximately proportional to frequency from about 500 Hz upward, as shown in Fig. 10.4. In other words, at higher frequencies, critical bandwidth increases approximately linearly as the center frequency increases logarithmically.

FIGURE 10.4 A plot showing critical bandwidths for monaural listening. (Goldberg and Riek, 2000)

Critical bands are approximately 100 Hz wide for frequencies from 20 Hz to 500 Hz and approximately 1.5 octaves in width for frequencies from 1 kHz to 7 kHz. Alternatively, bands can be assumed to be 1.3 octaves wide for frequencies from 300 Hz to 20 kHz; an error of less than 1.5 dB will occur. Other research shows that critical bandwidth can be approximated with the equation:

Critical bandwidth = 25 + 75[1 + 1.4(f /1000)2]0.69 Hz

where f = center frequency in Hz.

The ear was modeled by Eberhard Zwicker with 24 arbitrary critical bands for frequencies below 15 kHz; a 25th band occupies the region from 15 kHz to 20 kHz. An example of critical band placement and width is listed in Table 10.1. Physiologically, each critical band occupies a length of about 1.3 mm, with 1300 primary hair cells. The critical band for a 1-kHz sine tone is about 160 Hz in width. Thus, a noise or error signal that is 160 Hz wide and centered at 1 kHz is audible only if it is greater than the same level of a 1-kHz sine tone. Critical bands describe a filtering process in the ear; they describe a system that is analogous to a spectrum analyzer showing the response patterns of overlapping bandpass filters with variable center frequencies. Importantly, critical bands are not fixed; they are continuously variable in frequency, and any audible tone will create a critical band centered on it. The critical band concept is an empirical phenomenon. Looked at in another way, a critical band is the bandwidth at which subjective responses change. For example, if a band of noise is played at a constant sound-pressure level, its loudness will be constant as its bandwidth is increased. But as its bandwidth exceeds that of a critical band, the loudness increases.

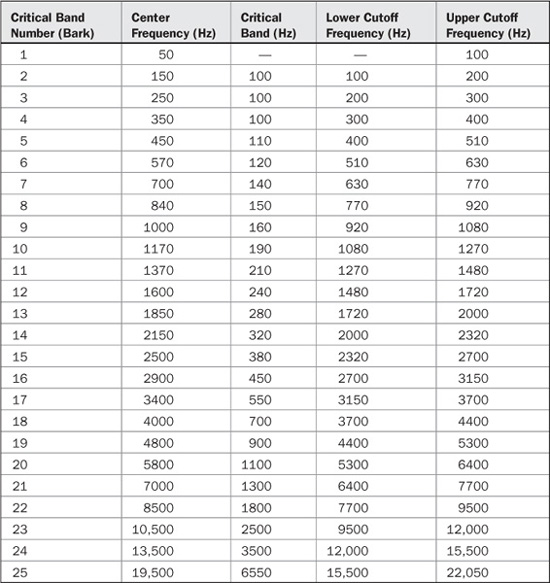

TABLE 10.1 An example of critical bands in the human hearing range showing an increase in bandwidth with absolute frequency. A critical band will arise at an audible sound at any frequency. (after Tobias, 1970)

Most perceptual codecs rely on amplitude masking within critical bands to reduce quantized word lengths. Masking is the essential trick used to perceptually hide coding noise. Indeed, in the same way that Nyquist is honored for his famous sampling frequency relationship, modern perceptual codecs could be called “Fletcher codecs.”

Interestingly, critical bands have also been used to explain consonance and dissonance. Tone intervals with a frequency difference greater than a critical band are generally more consonant; intervals less than a critical band tend to be dissonant with intervals of about 0.2 critical bandwidth being most dissonant. Dissonance tends to increase at low frequencies; for example, musicians tend to avoid thirds at low frequencies. Psychoacousticians also note that critical bands play a role in the perception of pitch, loudness, phase, speech intelligibility, and other perceptual matters.

The Bark (named after Barkhausen) is a unit of perceptual frequency. Specifically, a Bark measures the critical-band rate. A critical band has a width of 1 Bark; 1/100 of a Bark equals 1 mel. The Bark scale relates absolute frequency (in Hertz) to perceptually measured frequencies such as pitch or critical bands (in Bark). Conversion from frequency to Bark can be accomplished with:

z(f) = 13arctan(0.00076f) + 3.5arctan[(f/7500)2] Bark

where f = frequency in Hz.

Using a Bark scale, the physical spectrum can be converted to a psychological spectrum along the basilar membrane. In this way, a pure tone (a single spectral line) can be represented as a psychological-masking curve. When critical bands are plotted using a Bark scale, they are relatively consistent with frequency, verifying that the Bark is a “natural” unit that presents the ear’s response more accurately than linear or logarithmic plots. However, the shape of masking curves still varies with respect to level, showing more asymmetric slopes at louder levels.

Some researchers prefer to characterize auditory filter shapes in terms of an equivalent rectangular bandwidth (ERB) scale. The ERB represents the bandwidth of a rectangular function that conveys the same power as a critical band. The ERB scale portrays auditory filters somewhat differently than the critical bandwidth representation. For example, ERB argues that auditory filter bandwidths do not remain constant below 500 Hz, but instead decrease at lower frequencies; this would require greater low-frequency resolution in a codec. In one experiment, the ERB was modeled as:

ERB = 24.7[4.37(f/1000)+ 1] Hz

where f = center frequency in Hz.

The pitch place theory further explains the action of the basilar membrane in terms of a frequency-to-place transformation. Carried by the surrounding fluid, a sound wave travels the length of the membrane and creates peak vibration at particular places along the length of the membrane. The collective stimulation of the membrane is analyzed by the brain, and frequency content is perceived. High frequencies cause peak response at the membrane near the middle ear, while low frequencies cause peak response at the far end. For example, a 500-Hz tone would create a peak response at about three-fourths of the distance along the membrane. Because hair cells tend to vibrate at the frequency of the strongest stimulation, they will convey that frequency in a critical band, ignoring lesser stimulation. This excitation curve is described by the cochlear spreading function, an asymmetrical contour. This explains, for example, why broadband measurements cannot describe threshold phenomena, which are based on local frequency conditions. There are about 620 degrees of differentiable frequencies equally distributed along the basilar membrane; thus, a resolution of 1.25 Bark is reasonable. Summarizing, critical bands are important in perceptual coding because they show that the ear discriminates between energy in the band, and energy outside the band. In particular, this promotes masking.

Threshold of Hearing and Masking

Two fundamental phenomena that govern human hearing are the minimum-hearing threshold and amplitude masking, as shown in Fig. 10.5. The threshold of hearing curve describes the minimum level (0 sone) at which the ear can detect a tone at a given frequency. The threshold is referenced to 0 dB at 1 kHz. The ear is most sensitive in the 1-kHz to 5-kHz range, where we can hear signals several decibels below the 0-dB reference. Generally, two tones of equal power and different frequency will not sound equally loud. Similarly, the audibility of noise and distortion varies according to frequency. Sensitivity decreases at high and low frequencies. For example, a 20-Hz tone would have to be approximately 70 dB louder than a 1-kHz tone to be barely audible. A perceptual codec compares the input signal to the minimum threshold, and discards signals that fall below the threshold; the signals are irrelevant because the ear cannot hear them. Likewise, a codec can safely place quantization noise under the threshold because it will not be heard. The absolute threshold of hearing is determined by human testing, and describes the energy in a pure tone needed for audibility in a noiseless environment. The contour can be approximated by the equation:

T(f) = 3.64(f/1000)−0.8 − 6.5e−0.6[(f/1000)−3.3]2 + 10−3(f/1000)4 dB SPL

where f = frequency in Hz.

This threshold is absolute, but a music recording can be played at loud or soft levels—a variable not known at the time of encoding. To account for this variation, many codecs conservatively equate the decoder’s lowest output level to a 0-dB level or alternatively to the −4-dB minimum point of the threshold curve, near 4 kHz. In other words, the ideal (lowest) quantization error level is calibrated to the lowest audible level. Conversely, this corresponds to a maximum value of about 96 dB SPL for a 16-bit PCM signal. Some standards refer to the curve as the threshold of quiet.

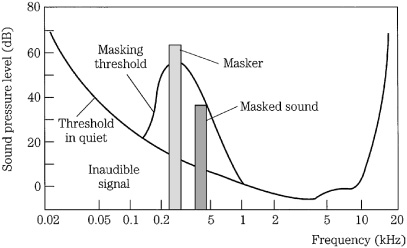

FIGURE 10.5 The threshold of hearing describes the softest sounds audible across the human hearing range. A masker tone or noise will raise the threshold of hearing in a local region, creating a masking curve. Masked tones or noise, perhaps otherwise audible, that fall below the masking curve during that time will not be audible.

When tones are sounded simultaneously, amplitude masking occurs in which louder tones can completely obscure softer tones. For example, it is difficult to carry on a conversation in a nightclub; the loud music masks the sound of speech. More analytically, for example, a loud 800-Hz tone can mask softer tones of 700 Hz and 900 Hz. Amplitude masking shifts the threshold curve upward in a frequency region surrounding the tone. The masking threshold describes the level where a tone is barely audible. In other words, the physical presence of sound certainly does not ensure audibility and conversely can ensure inaudibility of other sound. The strong sound is called the masker and the softer sound is called the maskee. Masking theory argues that the softer tone is just detectable when its energy equals the energy of the part of the louder masking signal in the critical band; this is a linear relationship with respect to amplitude. Generally, depending on relative amplitude, soft (but otherwise audible) audio tones are masked by louder tones at a similar frequency (within 100 Hz at low frequencies). A perceptual codec can take advantage of masking; the music signal to be coded can mask a relatively high level of quantization noise, provided that the noise falls within the same critical band as the masking music signal and occurs at the same time.

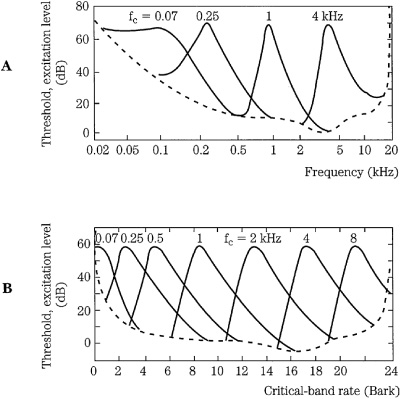

The mechanics of the basilar membrane explain the phenomenon of amplitude masking. A loud response at one place on the membrane will mask softer responses in the critical band around it. Unless the activity from another tone rises above the masking threshold, it will be swamped by the masker. Figure 10.6A shows four masking curves (tones masked by narrow-band noise) at 60 dB SPL, on a logarithmic scale in Hertz. Figure 10.6B shows seven masking curves on a Bark scale; using this natural scale, the consistency of the critical-band rate is apparent. Moreover, this plot illustrates the position of critical bands along the basilar membrane.

FIGURE 10.6 Masking curves describe the threshold where a tone or noise is just audible in the presence of a masker. Threshold width varies with frequency when plotted logarithmically. When plotted on a Bark scale, the widths and slopes are similar, reflecting response along the basilar membrane. A. Masking thresholds plotted with logarithmic frequency. B. Masking thresholds plotted with critical-band rate. (Zwicker and Zwicker, 1991)

Masking thresholds are sometimes expressed as an excitation level; this is obtained by adding a 2-dB to 6-dB masking index to the sound pressure level of the just-audible tone. Low frequencies can interfere with the perception of higher frequencies. Masking can overlap adjacent critical bands when a signal is loud, or contains harmonics; for example, a complex 1-kHz signal can mask a simple 2-kHz signal. Low amplitude signals provide little masking. Narrow-band tones such as sine tones also provide relatively little masking. Likewise, louder, more complex tones provide greater masking with masking curves that are broadened, and with a greater high-frequency extension.

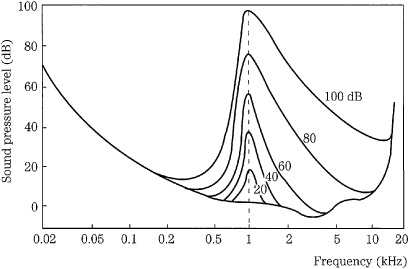

Amplitude-masking curves are asymmetrical. The slope of the threshold curve is less steep on the high-frequency side. Thus it is relatively easy for a low tone to mask a higher tone, but the reverse is more difficult. Specifically, in a simple approximation, the lower slope is about 27 dB/Bark; the upper slope varies from −20 dB/Bark to −5 dB/Bark depending on the amplitude of the masker. More detailed approximations use spreading functions as described in the discussion of psychoacoustic models below. Low-level maskers influence a relatively narrow band of masked frequencies. However, as the sound level of the masker increases, the threshold curve broadens, and in particular its upper slope decreases; its lower slope remains relatively unaffected. Figure 10.7 shows a series of masking curves produced by a narrow band of noise centered at 1 kHz, sounded at different amplitudes. Clearly, the ear is most discriminating with low-amplitude signals.

Many masking curves have been derived from studies in which either single tones or narrow bands of noise are used as the masker stimulus. Generally, single-tone maskers produce dips in the masking curve near the tone due to beat interference between the masker and maskee tones. Narrow noise bands do not show this effect. In addition, tone maskers seem to extend high-frequency masking thresholds more readily than noise maskers. It is generally agreed that these differences are artifacts of the test itself. Tests with wideband noise show that only the frequency components of the masker that lie in the critical band of the maskee are effective at masking.

FIGURE 10.7 Masking thresholds vary with respect to sound pressure level. This test uses a narrow-band masker noise centered at 1 kHz. The lower slope remains essentially unchanged.

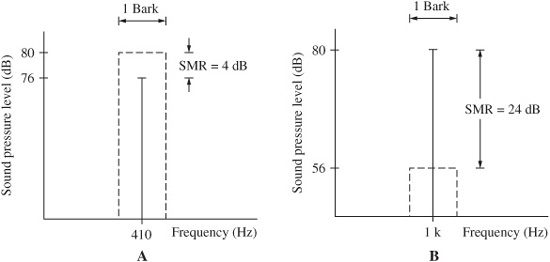

FIGURE 10.8 Noise maskers have more masking power than tonal maskers. A. In NMT, a noise masker can mask a centered tone with an SMR of only 4 dB. B. In TMN, a tonal masker can mask centered noise with an SMR of 24 dB. The SMR increases as the tone or noise moves off the center frequency. In each case, the width of the noise band is one critical bandwidth.

As noted, many masking studies use noise to mask a tone to study the condition called noise-masking-tone (NMT). In perceptual coding, we are often more concerned with quantization noise that must be masked by either a tonal or nontonal (noise-like) audio signal. The conditions of tone-masking-noise (TMN) and noise-masking-noise (NMN) are thus more pertinent. Generally, in noise-masking-tone studies, when the masker and maskee are centered, a tone is inaudible when it is about 4 dB below a 1/3-octave masking noise in a critical band. Conversely, in tone-masking-noise studies, when a 1/3-octave band of noise is masked by a pure tone, the noise must be 21 dB to 28 dB below the tone. This suggests that it is 17 dB to 24 dB harder to mask noise. The two cases are illustrated in Fig. 10.8. NMN generally follows TMN conditions; in one NMN study, the maskee was found to be about 26 dB below the masker. The difference between the level of the masking signal and the level of the masked signal is called the signal-to-mask ratio (SMR); for example, in NMT studies, the SMR is about 4 dB. Higher values for SMR denote less masking. SMR is discussed in more detail below.

Relatively little scientific study has been done with music as the masking stimulus. However, it is generally agreed that music can be considered as relatively tonal or nontonal (noise-like) and these characterizations are used in psychoacoustic models for music coding. The determination of tonal and nontonal components is important because, as noted above, the masking abilities are quite different and this greatly affects coding. In addition, sine-tone masking data is generally used in masking models because it provides the least (worst case) masking of noise; complex tones provide greater masking. Clearly, one musical sound can mask another, but future work in the mechanics of music masking will result in better masking algorithms.

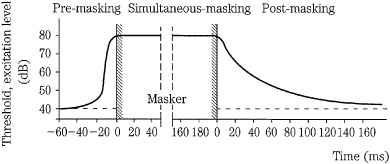

Temporal Masking

Amplitude masking assumes that tones are sounded simultaneously. Temporal masking occurs when tones are sounded close in time, but not simultaneously. A signal can be masked by a noise (or another signal) that occurs later. This is pre-masking (sometimes called backward masking). In addition, a signal can be masked by a noise (or another signal) that ends before the signal begins. This is post-masking (sometimes called forward masking). In other words, a louder masker tone appearing just after (pre-masking), or before (post-masking) a softer tone overcomes the softer tone. Just as simultaneous amplitude masking increases as frequency differences are reduced, temporal masking increases as time differences are reduced. Given an 80-dB tone, there may be 40 dB of post-masking within 20 ms and 0 dB of masking at 200 ms. Pre-masking can provide 60 dB of masking for 1 ms and 0 dB at 25 ms. This is shown in Fig. 10.9. The duration of pre-masking has not been shown to be affected by the duration of the masker. The envelope of post-masking decays more quickly as the duration of the masker decreases or as its intensity decreases. In addition, a tone is better post-masked by an earlier tone when they are close in frequency or when the earlier tone is lower in frequency; post-masking is slight when the masker has a higher frequency. Logically, simultaneous amplitude masking is stronger than either temporal pre- or post-masking because the sounds occur at the same time.

FIGURE 10.9 Temporal masking occurs before and, in particular, after a masker sounds; the threshold decreases with time. The dashed line indicates the threshold for a test-tone impulse without a masker signal. (Zwicker and Zwicker, 1991)

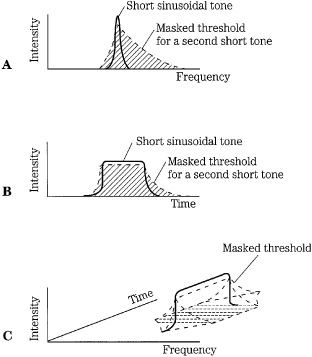

Temporal masking suggests that the brain integrates the perception of sound over a period of time (perhaps 200 ms) and processes the information in bursts at the auditory cortex. Alternatively, perhaps the brain prioritizes loud sounds over soft sounds, or perhaps loud sounds require longer integration times. Whatever the mechanism, temporal masking is important in frequency domain coding. These codecs have limited time resolution because they operate on blocks of samples, thus spreading quantization error over time. Temporal masking can help overcome audibility of the artifact (called pre-echo) caused by a transient signal that lasts a short time while the quantization noise may occupy an entire coding block. Ideally, filter banks should provide a time resolution of 2 ms to 4 ms. Acting together, amplitude and temporal masking form a contour that can be mapped in the time-frequency domain, as shown in Fig. 10.10. Sounds falling under that contour will be masked. It is the obligation of perceptual codecs to identify this contour for changing signal conditions and code the signal appropriately.

Although a maskee signal exists acoustically, it does not exist perceptually. It might seem quite radical, but aural masking is as real as visual masking. Lay your hand over this page. Can you see the page through your hand? Aural masking is just as effective.

FIGURE 10.10 When simultaneous and temporal masking are combined, a time-frequency contour results. A perceptual codec must place quantization noise and other artifacts within this contour to ensure inaudibility. A. Simultaneous masking. B. Temporal masking. C. Combined masking effect in time and frequency. (Beerends and Stemerdink, 1992)

Psychoacoustic Models

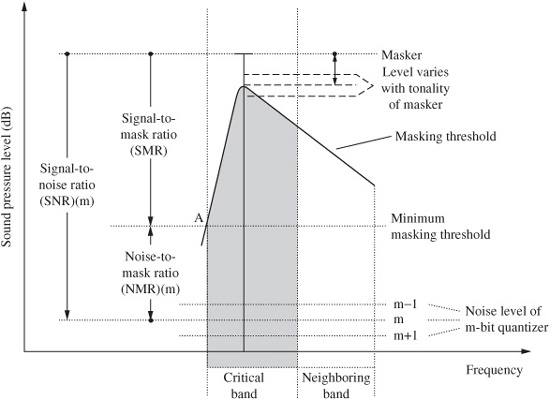

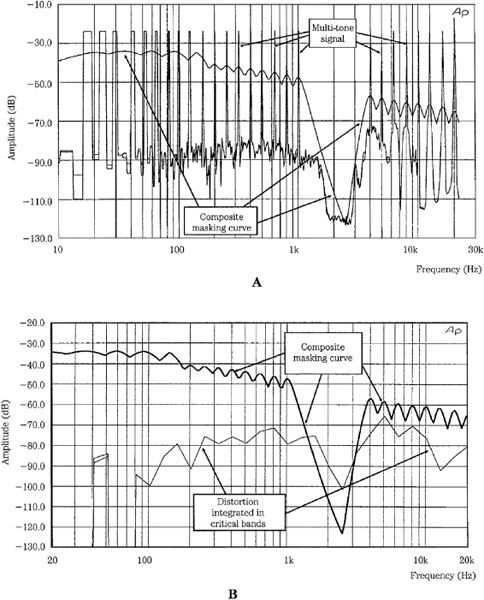

Psychoacoustic models emulate the human hearing system and analyze spectral data to determine how the audio signal can be coded to render quantization noise as inaudible as possible. Most models calculate the masking thresholds for critical bands to determine this just-noticeable noise level. In other words, the model determines how much coding noise is allowed in every critical band, performing one such analysis on each frame of data. The difference between the maximum signal level and the minimum masking threshold (the signal-to-mask ratio) thus determines bit allocation for each band. An important element in modeling masking curves is determining the relative tonality of signals, because this affects the character of the masking curve they project. Any model must be time-aligned so that its results coincide with the correct frame of audio data. This accounts for the filter delay and need to center the analysis output in the current data block.

In most codecs, the goal of bit allocation is to minimize the total noise-to-mask ratio over the entire frame. The number of bits allocated cannot exceed the number of bits available for the frame at a given bit rate. The noise-to-mask ratio for each subband is calculated as:

NMR = SMR − SNR dB

The SNR is the difference between the masker and the noise floor established by a quantization level; the more bits used for quantization, the larger the value of SNR. The SMR is the difference between the masker and the minimum value of the masking threshold within a critical band. More specifically, the pertinent masking threshold is the global masking threshold (also known as the just-noticeable distortion or JND) within a critical band. The SMR determines the number of bits needed for quantization. If a signal is below the threshold, then the signal is not coded. The NMR is the difference between the quantization noise level, and the level where noise reaches audibility. The relationship is shown in Fig. 10.11

FIGURE 10.11 The NMR is the difference between the SMR and SNR, expressed in dB. The masking threshold varies according to the tonality of the signal. (Noll, 1997)

Within a critical band, the larger the SNR is compared to the SMR, the less audible the quantization noise. If the SNR is less than the SMR, then the noise is audible. A codec thus strives to minimize the value of the NMR in subbands by increasing the accuracy of the quantization. This figure gauges the perceptual quality of the coding. For example, NMR values of less than 0 may indicate transparent coding, while values above 0 may indicate audible degradation.

Referring again to Fig. 10.11, we can also note that the masking threshold is shifted downward from the masking peak by some amount that depends most significantly on whether the masker is tonal or nontonal. Generally, these expressions can be applied:

ΔTMN = 14.5 + z dB

ΔNMT = S dB

where z is the frequency in Bark and S can be assumed to lie between 3 and 6 but can be frequency-dependent.

Alternatively, James Johnston has suggested these expressions for the tonalnontonal shift:

ΔTMN = 19.5 + z(18.0/26.0) dB

ΔNMT = 6.56 − z(3.06/26.0) dB

where z is the frequency in Bark.

The codec must place noise below the JND, or more specifically, taking into account the absolute threshold of hearing, the codec must place noise below the higher of JND or the threshold of hearing. For example, the SNR may be estimated from table data specified according to the number of quantizing levels, and the SMR is output by the psychoacoustic model. In an iterative process, the bit allocator determines the NMR for all subbands. The subband with the highest NMR value is allocated bits, and a new NMR is calculated based on the SNR value. The process is repeated until all the available bits are allocated.

The validity of the psychoacoustic model is crucial to the success of any perceptual codec, but it is the utilization of the model’s output in the bit allocation and quantization process that ultimately determines the audibility of noise. In that respect, the interrelationship of the model and the quantizer is the most proprietary part of any codec. Many companies have developed proprietary psychoacoustic models and bit-allocation methods that are held in secret; however, their coding is compatible with standards-compliant decoders.

Spreading Function

Many psychoacoustic models use a spreading function to compute an auditory spectrum. It is straightforward to estimate masking levels within a critical band by using a component in the critical band. However, masking is usually not limited to a single critical band; its effect spreads to other bands. The spreading function represents the masking response of the entire basilar membrane and describes masking across several critical bands, that is, how masking can occur several Bark away from a masking signal. In crude models (and the most conservative) the spreading function is an asymmetrical triangle. As noted, the lower slope is about 27 dB/Bark; the upper slope may vary from −20 to −5 dB/Bark. The masking contour of a pure tone can be approximated as two slopes where S1 is the lower slope and S2 is the upper slope, plotted as SPL per critical-band rate. The contour is independent of masker frequency:

S1 = 27 dB / Bark

S2 = [24 + 0.23(fv/1000)−1 − 0.2Lv/dB] dB / Bark

where fv is the frequency of the masking tone in Hz, and Lv is the level of the masking tone in dB.

The slope of S2 becomes steeper at low frequencies by the 0.23(fv/1000)−1 term because of the threshold of hearing, while at masking frequencies above 100 Hz, the slope is almost independent of frequency. S2 also depends on SPL.

A more sophisticated spreading function, but one that does not account for the masker level, is given by the expression:

10 log10 SF(dz) = 15.81 + 7.5(dz + 0.474) − 17.5[1 + (dz + 0.474)2]1/2 dB

where dz is the distance in Bark between the maskee and masker frequency.

To use a spreading function, the audio spectrum is divided into critical bands and the energy in each band is computed. These values are convolved with the spreading function to yield the auditory spectrum. When offsets and the absolute threshold of hearing are considered, the final masking thresholds are produced. When calculating a global masking threshold, the effects of multiple maskers must be considered. For example, a model could use the higher of two thresholds, or add together the masking threshold intensities of different components. Alternatively, a value averaged between the values of the two methods could be used, or another nonlinear approach could be taken. For example, in the MPEG-1 psychoacoustic model 1, intensities are summed. However, in MPEG-1 model 2, the higher value of the global masking threshold and the absolute threshold is selected. These models are discussed in Chap. 11.

Tonality

Distinguishing between tonal and nontonal components is an important feature of most psychoacoustic models because tonal and nontonal components demand different masking emulation. For example, as noted, noise is a better masker than a tone. Many methods have been devised to detect and characterize tonality in audio signals. For example, in MPEG-1 model 1, tonality is determined by detecting local maxima in the audio spectrum. All nontonal components in a critical band are represented with one value at one frequency. In MPEG-1 model 2, a spectral flatness measure is used to measure the average or global tonality. These models are discussed in Chap. 11.

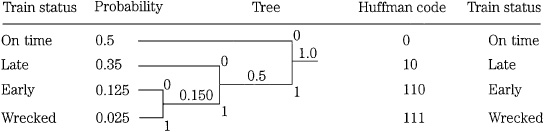

In some tonality models, when a signal has strong local maxima tonal components, they are detected and withdrawn and coded separately. This flattens the overall spectrum and increases the efficiency of the subsequent Huffman coding because the average number of bits needed in a codebook increases according to the magnitude of the maximum value. The increase in efficiency depends on the nature of the audio signal. Some models further distinguish the harmonic structure of multitonal maskers. With two multitonal maskers of the same power, the one with a strong harmonic structure yields a lower masking threshold.

Identification of tonal and nontonal components can also be important in the decoder when data is conveyed across an error-prone transmission channel and error concealment is applied before the output synthesis filter bank. Missing tonal components can be replaced by predicted values. For example, predictions can be made using an FIR filter for all-pole modeling of the signal, and using an autocorrelation function, coefficients can be generated with the Levinson–Durbin algorithm. Studies indicate that concealment in the lower subbands is more important than in the upper subbands. Noise properly shaped by a spectral envelope can be successfully substituted for missing nontonal sections.

Rationale for Perceptual Coding

The purpose of any low bit-rate coding system is to decrease the data rate, the product of the sampling frequency, and the word length. This can be accomplished by decreasing the sampling frequency; however, the Nyquist theorem dictates a corresponding decrease in high-frequency audio bandwidth. Another approach uniformly decreases the word length; however, this reduces the dynamic range of the audio signal by 6 dB per bit, thus increasing broadband quantization noise. As we have seen, a more enlightened approach uses psychoacoustics. Perceptual codecs maintain sampling frequency, but selectively decrease word length. The word-length reduction is done dynamically based on signal conditions. Specifically, masking and other factors are considered so that the resulting increase in quantization noise is rendered as inaudible as possible. The level of quantization error, and its associated distortion from truncating the word length, can be allowed to rise, so long as it is masked by the audio signal. For example, a codec might convey an audio signal with an average bit rate of 2 bits/sample; with PCM encoding, this would correspond to a signal-to-noise ratio of 12 dB—a very poor result. But by exploiting psychoacoustics, the codec can render the noise floor nearly inaudible.

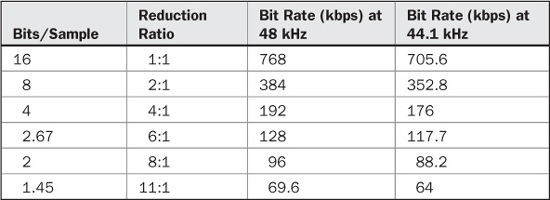

Perceptual codecs analyze the frequency and amplitude content of the input signal. The encoder removes the irrelevancy and statistical redundancy of the audio signal. In theory, although the method is lossy, the human perceiver will not hear degradation in the decoded signal. Considerable data reduction is possible. For example, a perceptual codec might reduce a channel’s bit rate from 768 kbps to 128 kbps; a word length of 16 bits/sample is reduced to an average of 2.67 bits/sample, and data quantity is reduced by about 83%. Table 10.2 lists various reduction ratios and resulting bit rates for 48-kHz and 44.1-kHz monaural signals. A perceptually coded recording, with a conservative level of reduction, can rival the sound quality of a conventional recording because the data is coded in a much more intelligent fashion, and quite simply, because we do not hear all of what is recorded anyway. In other words, perceptual codecs are efficient because they can convey much of the perceived information in an audio signal, while requiring only a fraction of the data needed by a conventional system.

Part of this efficiency stems from the adaptive quantization used by most perceptual codecs. With PCM, all signals are given equal word lengths. Perceptual codecs assign bits according to audibility. A prominent tone is given a large number of bits to ensure audible integrity. Conversely, fewer bits are used to code soft tones. Inaudible tones are not coded at all. Together, bit rate reduction is achieved. A codec’s reduction ratio (or coding gain) is the ratio of input bit rate to output bit rate. Reduction ratios of 4:1, 6:1, or 12:1 are common. Perceptual codecs have achieved remarkable transparency, so that in many applications reduced data is audibly indistinguishable from linearly represented data. Tests show that reduction ratios of 4:1 or 6:1 can be transparent.

TABLE 10.2 Bit-rate reduction for 48-kHz and 44.1-kHz sampling frequencies.

The heart of a perceptual codec is the bit-allocation algorithm; this is where the bit rate is reduced. For example, a 16-bit monaural signal sampled at 48 kHz that is coded at a bit rate of 96 kbps must be requantized with an average of 2 bits/sample. Moreover, at that bit rate, the bit budget might be 1024 bits per block of analyzed data. The bit-allocation algorithm must determine how best to distribute the bits across the signal’s spectrum and requantize samples to minimize audibility of quantization noise while meeting its overall bit budget for that block.

Generally, two kinds of bit-allocation strategies can be used in perceptual codecs. In forward adaptive allocation, all allocation is performed in the encoder and this encoding information is contained in the bitstream. Very accurate allocation is permitted, provided the encoder is sufficiently sophisticated. An important advantage of forward adaptive coding is that the psychoacoustic model is located in the encoder; the decoder does not need a psychoacoustic model because it uses the encoded data to completely reconstruct the signal. Thus as psychoacoustic models in encoders are improved, the increased sonic quality can be conveyed through existing decoders. A disadvantage is that a portion of the available bit rate is needed to convey the allocation information to the decoder. In backward adaptive allocation, bit-allocation information is derived from the coded audio data itself without explicit information from the encoder. The bit rate is not partly consumed by allocation information. However, because bit allocation in the decoder is calculated from limited information, accuracy may be reduced. In addition, the decoder is more complex, and the psychoacoustic model cannot be easily improved following the introduction of new codecs.

Perceptual coding is generally tolerant of errors. With PCM, an error introduces a broadband noise. However, with most perceptual codecs, the error is limited to a narrow band corresponding to the bandwidth of the coded critical band, thus limiting its loudness. Instead of a click, an error might be perceived as a burst of low-level noise. Perceptual coding systems also permit targeted error correction. For example, particularly vulnerable sounds (such as pianissimo passages) may be given greater protection than less vulnerable sounds (such as forte passages). As with any coded data, perceptually coded data requires error correction appropriate to the storage or transmission medium.

Because perceptual codecs tailor the coding to the ear’s acuity, they may similarly decrease the required response of the playback system itself. Live acoustic music does not pass through amplifiers and loudspeakers—it goes directly to the ear. But recorded music must pass through the playback signal chain. Arguably, some of the original signal present in a recording could degrade the playback system’s ability to reproduce the audible signal. Because a perceptual codec removes inaudible signal content, the playback system’s ability to convey audible music may improve. In short, a perceptual codec may more properly code an audio signal for passage through an audio system.

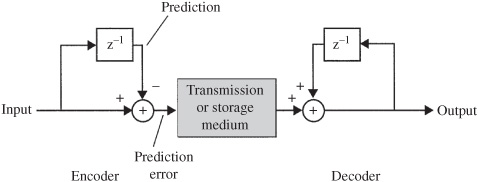

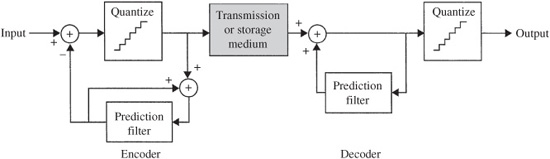

Perceptual Coding in Time and Frequency

Low bit-rate lossy codecs, whether designed for music or speech coding, attempt to represent the audio signal at a reduced bit rate while minimizing the associated increase in quantization error. Time-domain coding methods such as delta modulation can be considered to be data-reduction codecs (other time-domain methods such as PCM do not provide reduction). They use prediction methods on samples representing the full bandwidth of the audio signal and yield a quantization error spectrum that spans the audio band. Although the audibility of the error depends on the amplitude and spectrum of the signal, the quantization error generally is not masked by the signal. However, time-domain codecs operating across the full bandwidth of the time-domain signal can achieve reduction ratios of up to 2.5. For example, Near Instantaneously Companded Audio Multiplex (NICAM) codecs reduce blocks of 32 samples from 14 bits to 10 bits using a sliding window to determine which 10 of the 14 bits can be transmitted with minimal audible degradation. With this method, coding is lossless with low-level signals, with increasing loss at high levels. Although data reduction is achieved, the bit rate is too high for many applications; primarily, reduction is limited because masking is not fully exploited.

Frequency-domain codecs take a different approach. The signal is analyzed in the frequency domain, and only the perceptually significant parts of the signal are quantized, on the basis of psychoacoustic characteristics of the ear. Other parts of the signal that are below the minimum threshold, or masked by more significant signals, may be judged to be inaudible and are not coded. In addition, quantization resolution is dynamically adapted so that error is allowed to rise near significant parts of the signal with the expectation that when the signal is reconstructed, the error will be masked by the signal. This approach can yield significant data reduction. However, codec complexity is greatly increased.

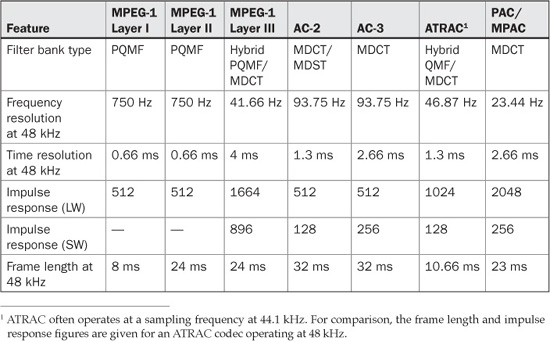

Conceptually, there are two types of frequency-domain codecs: subband and transform codecs. Generally, subband codecs use a low number of subbands and process samples adjacent in time, and transform codecs use a high number of subbands and process samples adjacent in frequency. Generally, subband codecs provide good time resolution and poor frequency resolution, and transform codecs provide good frequency resolution and poor time resolution.

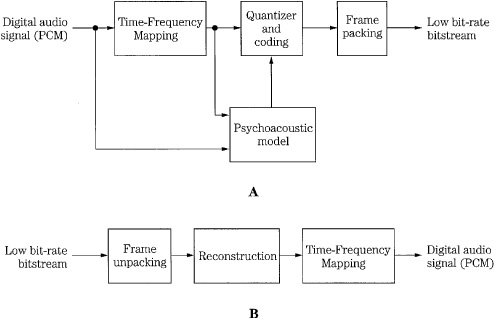

However, the distinction between subband and transform codecs is primarily based on their separate historical development. Mathematically, all transforms used in codecs can be viewed as filter banks. Perhaps the most practical difference between subband and transform codecs is the number of bands they process. Thus, both subband and transform codecs follow the architecture shown in Fig. 10.12; either time-domain samples or frequency-domain coefficients are quantized according to a psychoacoustic model contained in the encoder.

In subband coding, a hybrid of time- and frequency-domain techniques is used. A short block of time-based broadband input samples is divided into a number of frequency subbands using a filter bank of bandpass filters; this allows determination of the energy in each subband. Using a side-chain transform frequency analysis, the samples in each subband are analyzed for energy content and coded according to a psychoacoustic model.

In transform coding, a block of input samples is directly applied to a transform to obtain the block’s spectrum in the frequency domain. These transform coefficients are then quantized and coded according to a psychoacoustic model. Problematically, a relatively long block of data is required to obtain a high-resolution spectral representation. Transform codecs achieve greater reduction than subband codecs; ratios of 4:1 to 12:1 are typical. Transform codecs incur a longer processing delay than subband codecs.

FIGURE 10.12 The basic structure of a time-frequency-domain encoder and decoder (A and B, respectively). Subband (time) codecs quantize time-based samples, and transform (frequency) codecs quantize frequency-based coefficients.

As noted, most low bit-rate lossy codecs use psychoacoustic models to analyze the input signal in the frequency domain. To accomplish this, the time-domain input signal is often applied to a transform prior to analysis in the model. Any periodic signal can be represented as amplitude variations in time, or as a set of frequency coefficients describing amplitude and phase. Jean Baptiste Joseph Fourier first established this relationship between time and frequency. Changes in a time-domain signal also appear as changes in its frequency-domain spectrum. For example, a slowly changing signal would be represented by a low-frequency spectral content. If a sequence of time-based samples are thus transformed, the signal’s spectral content can be determined over that period of time. Likewise, the time-based samples can be recovered by inverse transforming the spectral representation back into the time domain. A variety of mathematical transforms can be used to transform a time-domain signal into the frequency domain and back again. For example, the fast Fourier transform (FFT) gives a spectrum with half as many frequency points as there are time samples. For example, assume that 480 samples are taken at a 48-kHz sampling frequency. In this 10-ms interval, 240 frequency points are obtained over a spectrum from the highest frequency of 24 kHz to the lowest of 100 Hz, which is the period of 10 ms, with frequency points placed 100 Hz apart. In addition, a dc point is generated.

Subband Coding

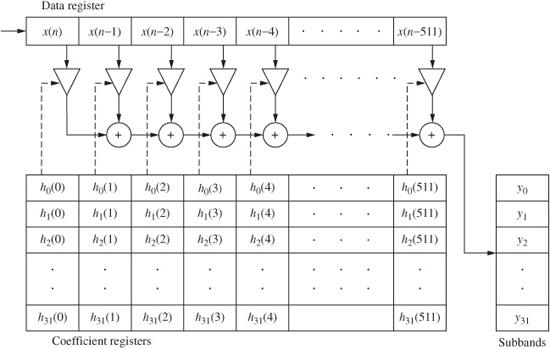

Subband coding was first developed at Bell Labs in the early 1980s, and much subsequent work was done in Europe later in the decade. Blocks of consecutive time-domain samples representing the broadband signal are collected over a short period and applied to a digital filter bank. This analysis filter bank divides the signal into multiple (perhaps up to 32) bandlimited channels to approximate the critical band response of the human ear. The filter bank must provide a very sharp cutoff (perhaps 100 dB/octave) to emulate critical band response and limit quantization noise within that bandwidth. Only digital filters can accomplish this result. In addition, the processing block length (ideally less than 2 ms to 4 ms) must be small so that quantization error does not exceed the temporal masking limits of the ear. The samples in each subband are analyzed and compared to a psychoacoustic model. The codec adaptively quantizes the samples in each subband based on the masking threshold in that subband. Ideally, the filter bank should yield subbands with a width that corresponds to the width of the narrowest critical band. This would allow precise psychoacoustic modeling. However, most filter banks producing uniformly spaced subbands cannot meet this goal; this points out the difficulties posed by the great difference in bandwidth between the narrowest critical band and the widest.

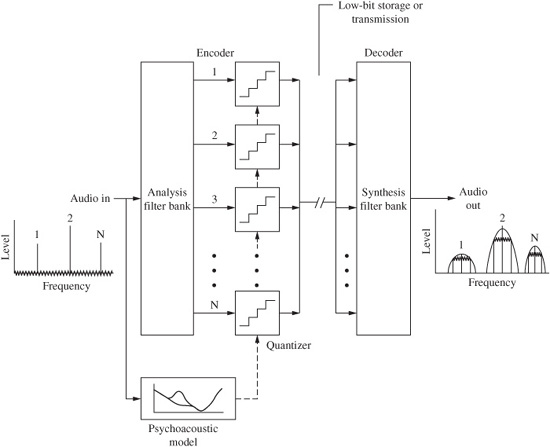

Each subband is coded independently with greater or fewer bits allocated to the samples in the subband. Quantization noise may be increased in a subband. However, when the signal is reconstructed, the quantization noise in a subband will be limited to that subband, where it is ideally masked by the audio signal in that subband, as shown in Fig. 10.13. Quantization noise levels that are otherwise intrusive can be tolerated in a subband with a signal contained in it because noise will be masked by the signal. Subbands that do not contain an audible signal are quantized to zero. Bit allocation is determined by a psychoacoustic model and analysis of the signal itself; these operations are recalculated for every subband in every new block of data. Samples are dynamically quantized according to audibility of signals and noise. There is great flexibility in the design of psycho-acoustic models and bit-allocation algorithms used in codecs that are otherwise compatible. The decoder uses the quantized data to re-form the samples in each block; a synthesis filter bank sums the subband signals to reconstruct the output broadband signal.

FIGURE 10.13 A subband encoder analyzes the broadband audio signal in narrow subbands. Using masking information from a psychoacoustic model, samples in subbands are coarsely quantized, raising the noise floor. When the samples are reconstructed in the decoder, the synthesis filter constrains the quantization noise floor within each subband, where it is masked by the audio signal.

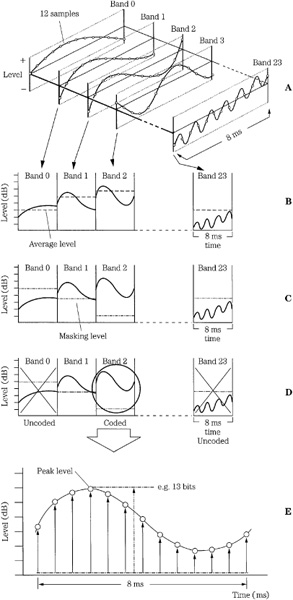

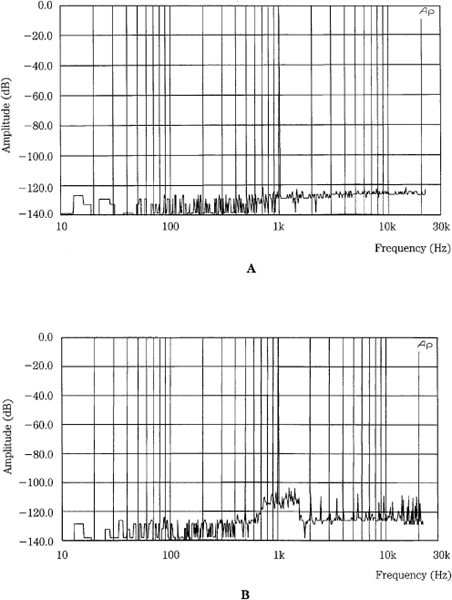

A subband perceptual codec uses a filter bank to split a short duration of the audio signal into multiple bands, as depicted in Fig. 10.14. In some designs, a side-chain processor applies the signal to a transform such as an FFT to analyze the energy in each subband. These values are applied to a psychoacoustic model to determine the combined masking curve that applies to the signals in that block. This permits more optimal coding of the time-domain samples. Specifically, the encoder analyzes the energy in each subband to determine which subbands contain audible information. A calculation is made to determine the average power level of each subband over the block. This average level is used to calculate the masking level due to masking of signals in each subband, as well as masking from signals in adjacent subbands. Finally, minimum hearing threshold values are applied to each subband to derive its final masking level. Peak power levels present in each subband are calculated, and compared to the masking level. Subbands that do not contain audible information are not coded. Similarly, tones in a subband that are masked by louder nearby tones are not coded, and in some cases entire subbands can mask nearby subbands, which thus need not be coded.

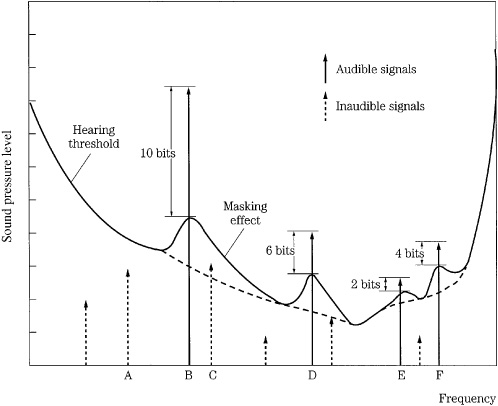

Calculations determine the ratio of peak power to masking level in each subband. Quantization bits are assigned to audible program material with a priority schedule that allocates bits to each subband according to signal strength above the audibility curve. For example, Fig. 10.15 shows vertical lines representing peak power levels, and minimum and masking thresholds.

The signals below the minimum or masking curves are not coded, and the quantization noise floor is allowed to rise to those levels. For example, in the figure, signal A is below the minimum curve and would not be coded in any event. Signal C is also irrelevant in this frame because signal B has dynamically shifted the hearing threshold upward.

Signal B must be coded; however, its presence has created a masking curve, decreasing the relative amplitude above the minimum threshold curve. The portion of signal B between the minimum curve and the masking curve represents the fewer bits that are needed to code the signal when the masking effect is taken into account. In other words, rather than using a signal-to-noise ratio, a signal-to-mask ratio (SMR) is used. The SMR is the difference between the maximum signal and the masking threshold and is used to determine the number of bits assigned to a subband. The SMR is calculated for each subband.

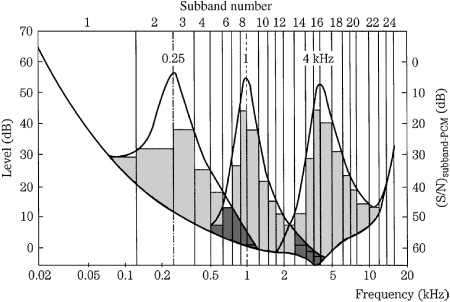

The number of bits allocated to any subband must be sufficient to yield a requantizing noise level that is below the masking level. The number of bits depends on the SMR value, with the goal of maintaining the quantization noise level below the calculated masking level for each subband. In fixed-rate codecs, a bit-pool approach can be taken. A large number of subbands requiring coding and signals with large SMR values might empty the pool, resulting in less than optimal coding. On the other hand, if the pool is not empty after initial allocation, the process is repeated until all bits in the codec’s data capacity have been used. Typically, the iterative process continues, allocating more bits where required, with signals with the highest SMR requirements always receiving the most bits; this increases the coding margin. In some cases, subbands previously classified as inaudible might receive coding from these extra bits. Thus, signals below the masking threshold can in practice be coded, but only on a secondary priority basis. Summarizing the concept of subband coding, Fig. 10.16 shows how a 24-subband codec might code three tones at 250 Hz, 1 kHz, and 4 kHz; note that in each case the quantization noise level is below the combined masking and threshold curve.

FIGURE 10.14 A subband codec divides the signal into narrow subbands, calculates average signal level, and masking level; and then quantizes the samples in each subband accordingly. A. Output of 24-band subband filter. B. Calculation of average level in each subband. C. Calculation of masking level in each subband. D. Subbands below audibility are not coded; bands above audibility are coded. E. Bits are allocated according to peak level above the masking threshold. Subbands with peak levels above the masking level contain audible signals that must be coded.

FIGURE 10.15 The bit-allocation algorithm assigns bits according to audibility of subband signals. Bits may not be assigned to masked or inaudible tones.

Transform Coding

In transform coding, the audio signal is viewed as a quasi-stationary signal that changes relatively little over short time intervals. For efficient coding, blocks of time-domain audio samples are transformed to the frequency domain. Frequency coefficients, rather than amplitude samples, are quantized to achieve data reduction. For playback, the coefficients are inverse-transformed back to the time domain.

The operation of the transform approximates how the basilar membrane analyzes the frequency content of vibrations along its length. The spectral coefficients output by the transform are quantized according to a psychoacoustic model; masked components are eliminated, and quantization decisions are made based on audibility. In contrast to a subband codec, which uses frequency analysis to code time-based samples, a transform codec codes frequency coefficients. From an information theory standpoint, the transform reduces the entropy of the signal, permitting efficient coding. Longer transform blocks provide greater spectral resolution, but lose temporal resolution; for example, a long block might result in a pre-echo before a transient. In many codecs, block length is adapted according to audio signal conditions. Short blocks are used for transient signals, while long blocks are used for continuous signals.

FIGURE 10.16 In this 24-band subband codec, three tones are coded so that the quantization noise in each subband falls below the calculated composite masking curves. (Thiele, Link, and Stoll, 1987)

Time-domain samples are transformed to the frequency domain, yielding spectral coefficients. The coefficient numbers are sometimes called frequency bin numbers; for example, a 512-point transform can produce 256 frequency coefficients or frequency bins. The coefficients, which might number 512, 1024, or more, are grouped into about 32 bands that emulate critical-band analysis. This spectrum represents the block of time-based input samples. The frequency coefficients in each band are quantized according to the codec’s psychoacoustic model; quantization can be uniform, nonuniform, fixed, or adaptive in each band.

Transform codecs may use a discrete cosine transform (DCT) or modified discrete cosine transform (MDCT) for transform coding because of low computational complexity, and because they can critically sample (sample at twice the bandwidth of the bandpass filter) the signal to yield an appropriate number of coefficients. Most codecs overlap successive blocks in time by about 50%, so that each sample appears in two different transform blocks. For example, the samples in the first half of a current block are repeated from the second half of the previous block. This reduces changes in spectra from block to block and improves temporal resolution. The DCT and MDCT can yield the same number of coefficients as with non-overlapping blocks. As noted, an FFT may be used in the codec’s side chain to yield coefficients for perceptual modeling.

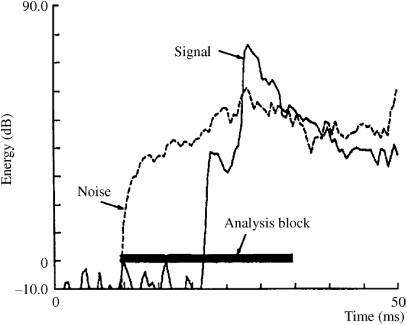

All low bit-rate codecs operate over a block of samples. This block must be kept short to stay within the temporal masking limits of the ear. During decoding, quantization noise will be spread over the frequency of the band, and over the duration of the block. If the block is longer than temporal backward masking allows, the noise will be heard prior to the onset of the sound, in a phenomenon known as pre-echo. (The term pre-echo is misleading.) Pre-echo is particularly problematic in the case of a silence followed by a time-domain transient within the analysis window. The energy in the transient portion causes the encoder to allocate relatively few bits, thus raising the eventual quantization noise level. Pre-echoes are created in the decoder when frequency coefficients are inverse-transformed prior to the reconstruction of subband samples in the synthesis filter bank. The duration of the quantization noise equals that of the synthesis window, so the elevated noise extends over the duration of the window, while the transient only occurs briefly. In other words, encoding dictates that a transient in the audio signal will be accompanied by an increase in quantization noise but a brief transient may not fully mask the quantization noise surrounding it, as shown in Fig. 10.17. In this example, the attack of a triangle occurs as a transient signal. The analysis window of a transform codec operates over a relatively long time period. Quantization noise is spread over the time of the window and precedes the music signal; thus it may be audible as a pre-echo.

FIGURE 10.17 An example of a pre-echo. On reconstruction, quantization noise falls within the analysis block, where the leading edge is not masked by the signal. (Herre and Johnston, 1996)

Transform codecs are particularly affected by the problem of pre-echo because they require long blocks for greater frequency accuracy. Short block length limits frequency resolution (and also relatively increases the amount of overhead side information). In essence, transform codecs sacrifice temporal resolution for spectral resolution. Long blocks are suitable for slowly changing or tonal signals; the frequency resolution allows the codec to identify spectral peaks and use their masking properties in bit allocation. For example, a clarinet note and its harmonics would require fine frequency resolution but only coarse time resolution. However, transient signals require a short block length; the signals have a flatter spectrum. For example, the fast transient of a castanet click would require fine time resolution but only coarse frequency resolution.

In most transform codecs, to provide the resolution demanded by particular signal conditions, and to avoid pre-echo, block length dynamically adapts to signal conditions. Referring again to Fig. 10.17, a shorter analysis block would constrain the quantization noise to a shorter duration, where it will be masked by the signal. A short block is also advantageous because it limits the duration of high bit rates demanded by transient encoding. Alternatively, a variable bit rate encoder can minimize pre-echo by briefly increasing the bit rate to decrease the noise level. Some codecs use temporal noise shaping (TNS) to minimize pre-echo by manipulating the nature of the quantization noise within a filter bank window. When a transient signal is detected, TNS uses a predictive coding method to shape the quantization noise to follow the transient’s envelope. In this way, the quantization error is more effectively concealed by the transient. However, no matter what approach is taken, difficulty arises because most music simultaneously places contradictory demands on the codec.

In adaptive transform codecs, a model is applied to uniformly and adaptively quantize each individual band, but coefficient values within a band are quantized with the same number of bits. The bit-allocation algorithm calculates the optimal quantization noise in each subband to achieve a desired signal-to-noise ratio that will promote masking. Iterative allocation is used to supply additional bits as available to increase the coding margin, yet maintain limited bit rate. In some cases, the output bit rate can be fixed or variable for each block. Before transmission, the reduced data is often compressed with entropy coding such as Huffman coding and run-length coding to perform lossless compression. The decoder inversely quantizes the coefficients and performs an inverse transform to reconstruct the signal in the time domain.

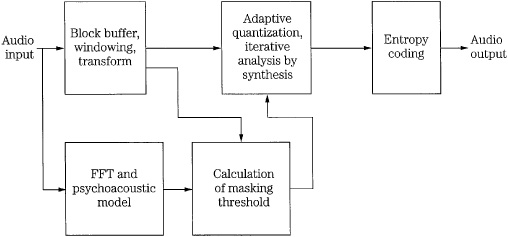

An example of an adaptive transform codec proposed by Karlheinz Brandenburg is shown in Fig. 10.18. An MDCT transforms the signal to the frequency domain. Signal energy in each critical band is calculated using the spectral coefficients. This is used to determine the masking threshold for each critical band. Two iterative loops perform quantization and coding using an analysis-by-synthesis technique. Coefficients are initially assigned a quantizer step size and the algorithm calculates the resulting number of bits needed to code the signal in the block. If the count exceeds the bit rate allowed for the block, the loop reassigns a larger quantizer step size and the count is recalculated until the target bit rate is achieved. An outer loop calculates the quantization error as it will appear in the reconstructed signal. If the error in a band exceeds the error allowed by the masking model, the quantizer step size in the band is decreased. Iterations continue in both loops until optimal coding is achieved. Codecs such as this can operate at low bit rates (for example, 2.5 bits/sample).

FIGURE 10.18 Adaptive transform codec using an FFT side-chain and iterative quantization to achieve optimal reduction. Entropy coding is additionally used for data compression.

Filter Banks

Low bit-rate codecs often use an analysis filter bank to partition the wide audio band into smaller subbands; the decoder uses a synthesis filter bank to restore the subbands to a wide audio band. Uniformly spaced filter banks downconvert an audio band into a baseband, then lowpass filter and subsample the data. Transform-based filter banks multiply overlapping blocks of audio samples with a window function to reduce edge effects, and then perform a discrete transform such as DCT. Mathematically, the transforms used in codecs can be seen as filter banks, and subband filter banks can be seen as transforms.

Time-domain filter banks should provide ideal lowpass and highpass characteristics with a cutoff of fs/2, where fs is the sampling frequency; however, real filters have overlapping bands. For example, in a two-band system, the subband sampling rates must be decreased (2:1) to maintain the overall bit rate. This decimation introduces aliasing in the subbands. In the lower band, signals above fs/4 will alias to 0 to fs/4. In the upper band, signals below fs/4 alias up to fs/4 to fs/2. In the decoder, the sampling rate is restored (1:2) by adding zeros. Because of interpolation, in the lower band, signals from 0 to fs/4 will image around fs/4 into the upper band. Similarly, in the upper band, signals from fs/4 to fs/2 will image to the lower band.

Quadrature Mirror Filters

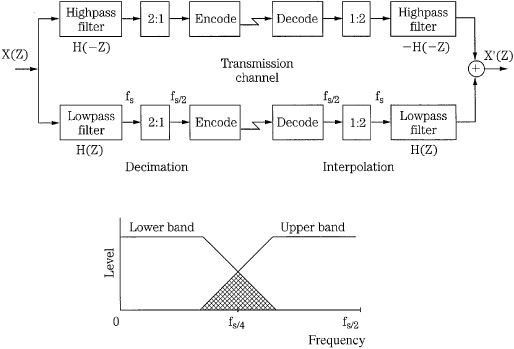

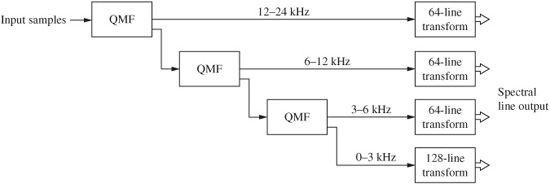

Generally, when N subbands are created, each subband is sub-sampled at 1/N to maintain an overall sampling limit. We recall from Chap. 2, that the sampling frequency must be at least twice the bandwidth of a sampled signal. As noted, most filter banks do not provide ideal performance because of the finite width of their transition bands; the bands overlap, and the 1/N sub-sampling causes aliasing. Clearly, bands that are spaced apart can avoid this, but will leave gaps in the signal’s spectrum. Quadrature mirror filter (QMF) banks have the property of reconstructing the original signal from N overlapping subbands without aliasing, regardless of the order of the bandpass filters. The aliasing components are exactly canceled, in the frequency domain, during reconstruction and the subbands are output in their proper place in frequency. The attenuation slopes of adjacent subband filters are mirror images of each other. Ideally, alias cancellation is perfect only if there is no requantization of the subband signals. A QMF is shown in Fig. 10.19. Intermediate samples can be critically sub-sampled without loss; if the input signal is split into N equal subbands, each subband can be sampled at 1/N; the sampling frequency for each subband filter is exactly twice the bandwidth of the filter. Generally, cascades of QMF banks may be used to create 4 to 24 subbands. By cascading some subbands unequally, relative bandwidths can be manipulated; delays are introduced to maintain time parity between subbands.

QMF banks can be implemented as symmetrical finite impulse-response filters with an even number of taps; the use of a reconstruction highpass filter with a z-transform of −H(−z) instead of H(−z) eliminates alias terms (when there is uniform quantizing in each subband). However, perfect reconstruction is generally limited to the case when N = 2, creating two equal-width subbands from one. These fs/2 subbands can be further divided by repeating the QMF process, and splitting each subband into two more subbands, each with a fs/4 sampling frequency. This can be accomplished with a tree structure; however, this adds delay to the processing. Other QMF architectures can be used to create multiple subbands with less delay. However, cascaded QMF banks suitable for multi-band computation needed for codec design suffer from long delay and high complexity. For that reason, many codecs use a pseudo-QMF, also known as a polyphase filter, which offers a faster parallel approach that approximates the QMF. The QMF method is similar in concept to wavelet techniques.

FIGURE 10.19 A quadrature mirror filter forms two equal subbands. Alias components introduced during decimation are exactly canceled during reconstruction. Multiple QMF stages can be cascaded to form additional subbands.

Hybrid Filters