Transaction Processing Monitors

6.2. Transactional Remote Procedure Calls

6.2.1. The Resource Manager Interface

6.2.2. What the Resource Manager Has to Do in Support of Transactions

6.2.3. Interfaces between Resource Managers and the TP Monitor

6.2.4. Resource Manager Calls versus Resource Manager Sessions

6.3. Functional Principles of the TP Monitor

6.3.1. The Central Data Structures of the TPOS

6.3.2. Data Structures Owned by the TP Monitor

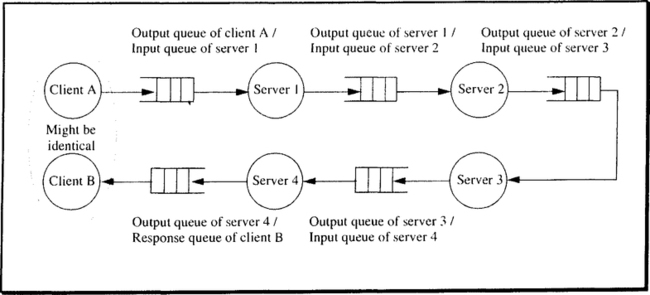

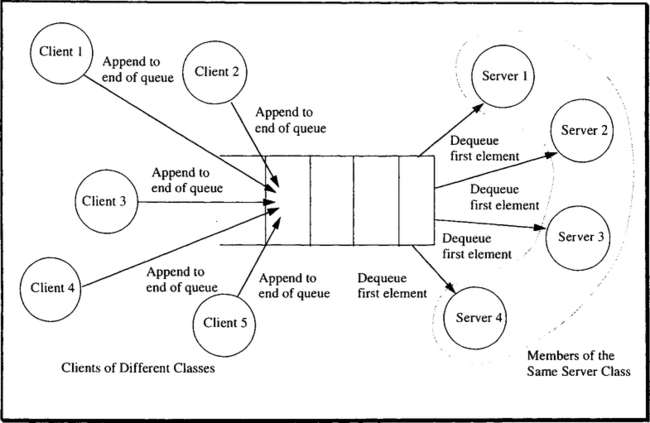

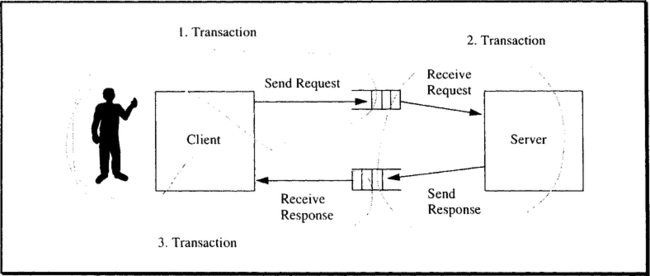

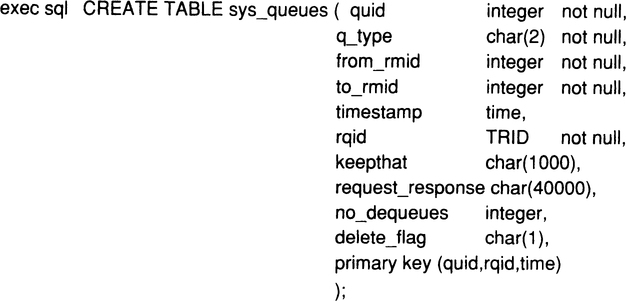

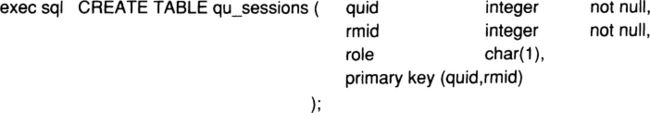

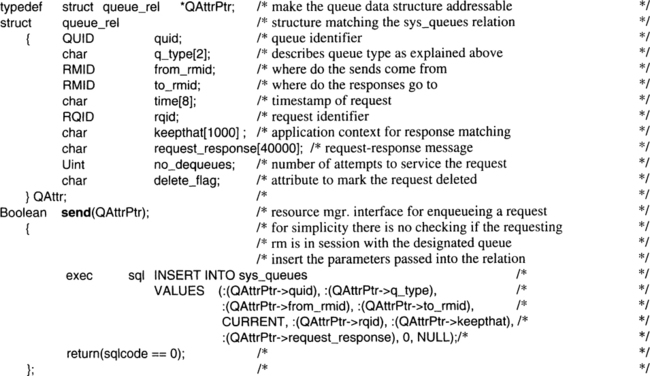

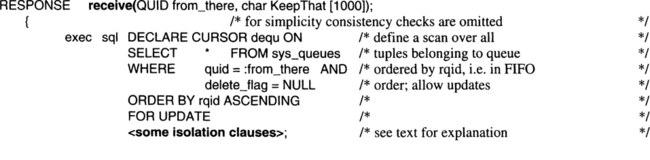

6.4. Managing Request and Response Queues

6.4.1. Short-Term Queues for Mapping Resource Manager Invocations

6.4.2. Durable Request Queues for Asynchronous Transaction Processing

6.1 Introduction

Beginning with this chapter, and continuing up to Chapter 12, we look at the topics presented in Chapter 5, but through a magnifying lens. This close-up will reveal which concepts, algorithms, and techniques are used to provide the application with a transaction-oriented execution environment. The component structure and the sample implementations are based on the functions of the basic operating system introduced in Chapter 2; that is, on processes, address spaces, messages, and sessions. The description of how it works is focused on the core mechanisms for implementing simple ACID transactions and phoenix transactions. Due to the limited space, the implementation of the large number of other transaction types (see Chapter 4) cannot be covered.

Chapter 5 has already mentioned the variety of meanings attributed to the term TP monitor. Having defined the role of a TP monitor in a transaction-oriented system by enumerating the services it provides, we will be equally careful in specifying the interfaces used for implementing a TP monitor. A good way to structure interfaces is by layers of abstraction. Let us therefore start by considering those layers in a transaction processing system.

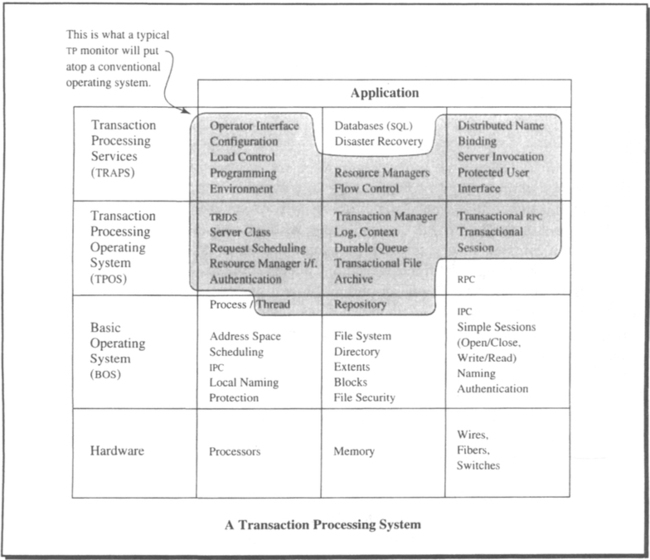

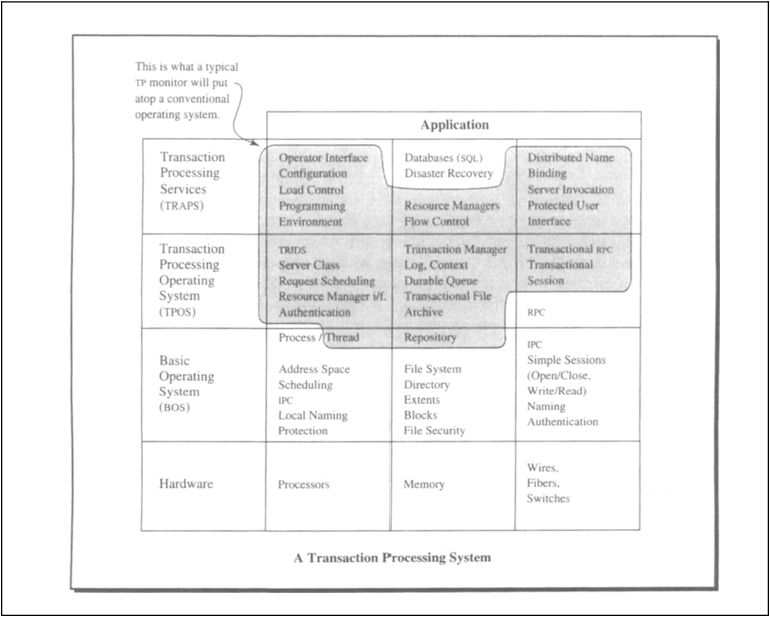

Table 6.1 organizes the terms in the way they are used throughout this book. It contains three central layers. First, there is the basic operating system (BOS), which is assumed to know little or nothing about transactions. Next comes the transaction processing operating system (TPOS), the main task of which is to render the objects and services of the BOS in a transactional style. For example, the basic OS provides processes; in order to exercise transaction-oriented (commitment) control, each process executing code on behalf of an application must be bound to the transaction that surrounds this execution. The basic OS also provides messages: when transaction programs send protected output messages, they must not be delivered until the transaction has committed. Therefore, messages must be bound to transactions, too. The same argument applies to interprocess communication, units of durable storage, and—in some cases—physical devices.

Table 6.1

Layering of services in a transaction processing system. The description of functions and services throughout this book assumes five interface layers. The basic operating system uses the hardware directly. Its interfaces are used by the transaction processing operating system to provide a transactional programming environment. Sophisticated servers like databases, configuration management, window management, and so forth, use both the basic operating system and the transaction processing operating system to create a transaction-oriented programming environment. The application typically uses the transaction processing services and the interfaces to the transaction processing operating system, depending on the application’s sophistication.

|

Finally, there are transaction oriented services, which make the TPOS manageable and usable for developing transaction-oriented applications. Table 6.1 also relates the services to the types of objects and functions they provide. For completeness, the table includes the hardware underneath and the application on top of the transaction processing services.

The following chapters are largely concerned with the middle layer of Table 6.1, the transaction processing operating system (TPOS), and with showing how its main functions are implemented. Discussion of functions that are attributed to other layers is restricted to the degree that the functions contribute to the main purpose of the TPOS. Chapters 13–15 then use the mechanisms of the TPOS to outline the implementation of a transaction-oriented file system. The file system has the features needed as a platform for SQL. It serves as an example for a resource manager in the sense described in Chapter 5. A precise definition of the term resource manager is given in Section 6.2.

Bear in mind that the layering in Table 6.1 does not reflect a strict implementation hierarchy. Rather, it describes a separation of concerns among different system components. It is therefore perfectly reasonable to assume that the TPOS implements some of its data structures (repositories, queues) using SQL, although SQL is shown to sit on top of the TPOS. This situation is analogous to the assumption made in Chapter 3, Section 3.7, where the operating system uses SQL to keep track of its ticket numbers.

Table 6.1 tries to relate the strongly used and weakly defined term TP monitor to the service categories used in this book, by way of the services a typical TP monitor provides (according to Chapter 5). Given the overview of terms in Table 6.1, we use TPOS and TP monitor interchangeably, whenever there is no risk of confusion.

To describe the implementation of a complete TP monitor in full detail is far beyond the scope of this book. Rather, we focus on the key services provided by a TP monitor, which encircle the transactional remote procedure call (TRPC), as was sketched in Chapter 5. TRPCs are used to invoke services from resource managers under transaction protection.

The organization of this chapter follows generally the flow of a TRPC. Section 6.2 gives a detailed description of what a transactional RPC looks like and the consequences of its use on the structure of resource managers. In particular, it discusses the interfaces of a resource manager, both in its role as a server to applications and other resource managers, and as a client invoking services via TRPC. The implementation of transactional RPCs is then described in Section 6.3. It is the longest section in this chapter and covers all related aspects, such as server classes, name binding, and transaction management.

Queue management is the topic of Section 6.4. All the miscellaneous topics that have not been given their own sections, such as load balancing, authentication and authorization, and restart processing, are briefly discussed in Section 6.5.

The discussion makes use of a number of concepts in operating systems and programming languages; we will not explain these. To avoid any confusion, here are the topics that the reader is assumed to be familiar with (for the reader unfamiliar with these topics, textbooks on operating systems are a particularly good source of information):

Linking and loading. The TP monitor is responsible for creating processes and making sure the code for the resource manager requested can be executed in them. For this, it needs to link and load the resource manager’s object modules together with the stub modules of the TPOS. There is no explanation in this chapter about how exactly this is done.

Library management. The object modules of the resource managers and application programs are stored in libraries. These can be libraries maintained by the operating system, or the TP monitor can keep it all in its own repository. There are no specific assumptions made here.

In addition to these basic techniques, we will also gloss over some TP-specific items, most notably the administrative interface. These important issues are not addressed in this book.

6.2 Transactional Remote Procedure Calls

Having introduced the notion of remote procedure call (RPC) in Chapter 5, we now proceed to explain what exactly a transactional remote procedure call (TRPC) is. It is much more than just an RPC used within a transaction. To get into the right mind set, think of a TRPC being as different from a standard RPC as a subroutine call is from an ACID transaction. For an illustration, go back to Figure 5.9. There, the request coming in at the upper left-hand corner is a TRPC from some other node, aimed at the application represented in the center of the figure. The application begins to work and calls various system resource managers, the database, and so on. Each of these invocations is a TRPC of its own, handled in the way just described. The database, in turn, calls system resource managers, some of which have also been called by the application, again using TRPCs.

The distinguishing feature is that all resource managers, by having been called through a transactional remote procedure call, become part of the surrounding transaction. Remember that in a standard RPC environment, the RPC and the operating system have only to make sure that once a call has arrived at the local node, there is a process running the server’s code. When the call returns, the server is free again, and that is all there is to it.

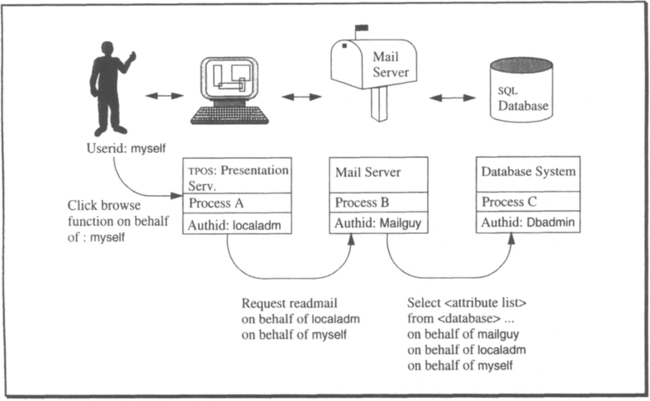

A TP monitor must manage a pool of server processes, and in addition, each TRPC has to be tagged with the identifier of the transaction within which the call was issued. In particular, TRPC messages going across the network have to carry the transaction identifier with them. When scheduling a process for a request, the TPOS must note that this process now works “for” that transaction. After the call returns, the process is detached from the transaction, but the response message has to be tagged with the transaction identifier.

If that were all that distinguished a TRPC from an RPC, it would not require a section to describe it; but the point is that TRPC is not just transaction control over one resource manager invocation. Rather, transaction control must be exercised over all resource manager interactions within one transaction. Keeping all resource managers together as part of the same transaction requires more than just appending a transaction identifier to each request. The TP monitor also needs to enforce certain conventions with respect to the behavior of the resource managers issuing and receiving TRPCs, and with respect to the way transactions are administered by the nodes constituting the transaction system. The following are required for the “web” of a transaction’s calls to hang together:

Control of participants. Someone must track which resource managers have been called during the execution of one transaction in order to manage commit and rollback. As part of the two-phase commit protocol, all resource managers participating in the transaction must, in the end, agree to successfully terminate that transaction; otherwise, it will be rolled back. That means somebody must go out and ask each resource manager involved whether it is okay to commit the work of the transaction. The component responsible is called the transaction manager (TM), which is explained in detail in Chapter 11. It is instructive to contemplate for a moment that a resource manager does not necessarily remember that it has been called at all. Assume a simple COBOL server that gets invoked as part of transaction T1, reads some tuple from a database, performs some computations, inserts a tuple, and returns. Each time the server is called, it starts in its initial state; that is, all the data for doing its work must be in the parameters passed. After the server is done, it forgets everything (frees its dynamic storage); thus, there is no point in asking the server later on whether transaction T1 should commit; it has no information about the transaction.

Preserving transaction-related information. As Figure 5.9 shows, the same resource manager can be invoked more than once during the same transaction—by the same or by different clients. In the process environment that we assume, each invocation can, in principle, be handled by a different process (remember the concept of server classes outlined in Chapter 5). Since each RPC finally ends up in a process, at commit each process running the same resource manager code is asked individually about its commit decision. For some types of resource managers, this may be sufficient. Assume that, upon each invocation, 10 tuples were inserted into a database; the transaction will commit if each of the 10-tuple-insert requests was successful. In other situations, however, the resource manager can only vote on commit when all the information pertaining to that transaction is available in one place. If the different invocations left their traces in different servers, this will be hard to do. The TRPC mechanism, then, must provide a means to relate multiple invocations of the same resource manager to each other.1

Support of the transaction protocol. The resource managers must stick to the rules imposed by the ACID paradigm. This must be supervised by the TRPC mechanism; in case of a violation, the transaction must abort.

The long and short of all this is that the concept of transactional remote procedure calls has two complementary aspects. One is the association of requests, messages, and processes with a transaction identifier. The other aspect is the coordination of resource managers in order to implement the transaction protection around RPCs. If this sounds recursive again, bear with us until Section 6.3, where the dynamic interaction among the TPOS components is explained in some detail.

All TRPC-related issues are handled by the TPOS, though not by the TP monitor alone. As the description progresses, it will turn out that the TP monitor acts as a kind of switchboard that receives requests and passes them on, without actually acting on most of them. However, the TP monitor makes sure that these are valid transactional RPCs and that they get routed to the proper components of the TPOS or the application. The explanation of the way TRPCs are handled is divided into two parts. First, we explain the interfaces through which transactional resource managers interact with their environment—the TPOS in particular. Second, the problem of preserving transaction-related information is discussed.

6.2.1 The Resource Manager Interface

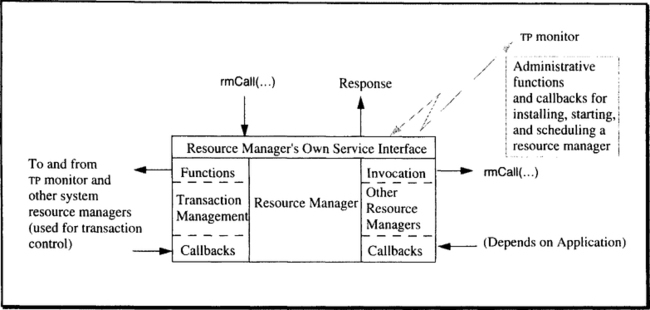

A resource manager qualifies as such by exhibiting a special type of behavior. More specifically, a resource manager uses and provides a set of interfaces that, in turn, are used according to the protocols of transaction-oriented processing. Depending on how many of the interfaces a resource manager uses (and provides), it has more or less influence on the way a transaction gets executed in the system. This is discussed extensively in this chapter, as well as in Chapter 10. Figure 6.2 gives an overview of the types of interfaces a resource manager has to deal with.

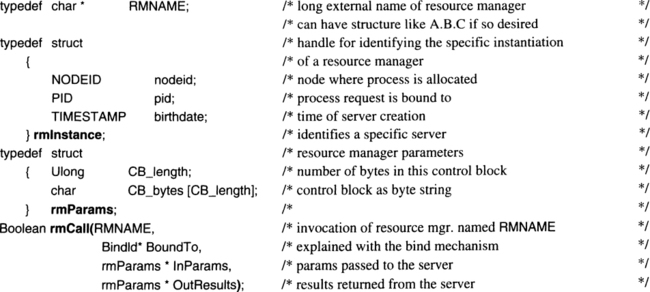

On top of Figure 6.2, there is the rmCall interface, used for invoking the resource manager’s services; the results are returned as parameters to this call.2 The function prototype declare follows. One entry in the parameter list that has not been mentioned so far, Bindld, is explained in detail in Section 6.2.4, but here is the idea: since there can be many processes executing the same resource manager code, it may be necessary in some cases to know exactly which one has serviced a request within a transaction. The parameter Bindld is a handle for such an interaction between a client and a specific server that has to be used repeatedly.

This is about all there is to say about the service interface of a transactional resource manager. Whenever such an rmCall is issued from somewhere, it gets the TRPC treatment we have outlined. In particular, the TP monitor assures that the execution of the resource manager becomes part of the transaction in which the caller is running—provided there is an ongoing transaction. If there is none, then whatever has happened so far has no ACID properties.

Some of the interactions of a resource manager or an application program with the transaction manager, which are alluded to on the left-hand side of Figure 6.2, have already been discussed with the programming examples in Chapter 5. But as the figure indicates, a resource manager generally can not only call the transaction manager, it can also be called upon by the transaction manager. This feature, which is required for the implementation of general resource managers that keep their own durable state, is discussed in the next subsection.

6.2.2 What the Resource Manager Has to Do in Support of Transactions

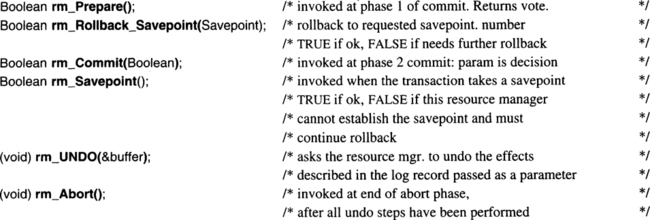

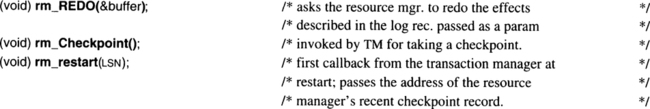

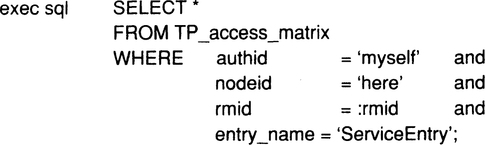

The transactional remote procedure calls described up to this point cover the normal business of invoking the services of resource managers from application programs or other resource managers. It has also been pointed out that the verbs for structuring programs in a transactional fashion result in calls to system resource managers, most notably to the transaction manager. But sophisticated resource managers, especially those maintaining durable data, must also be able to respond to requests from the TPOS. For example, they must vote during two-phase commit, they must be able to recover their part of a transaction (either roll it back or redo it after a crash), they must be able to take a savepoint, and so forth. There are two ways to invoke the resource managers at these special service entries:

Single entry. The server has only one service entry point (message buffer) through which it receives all its service requests. So before acting on a request, it must determine what kind of request it is and then branch to the appropriate subroutine.

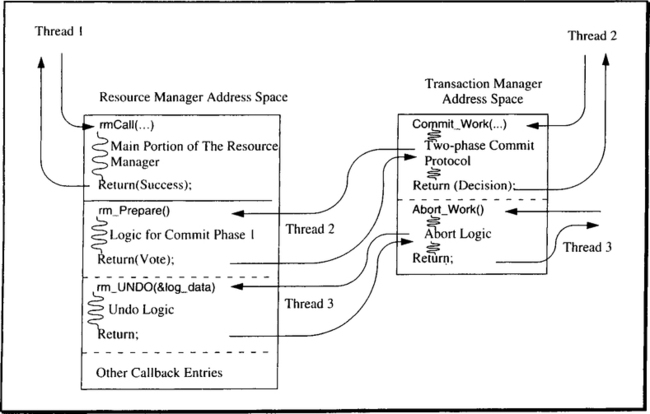

Service entry plus callback entries. Interfacing the resource managers to the transaction manager via a set of entry points is the approach taken in this book (and by X/Open DTP). As Figure 6.2 indicates, each resource manager has a service interface that defines what the resource manager does for a living. Furthermore, it declares a number of callback entries. In the resource manager’s code portion these are entry points, which can be called when certain events happen. The principle is illustrated in Figure 6.3.

The function prototype declares are shown below. Note that the invocation of a resource manager by the transaction manager at such a callback entry is just another transactional remote procedure call.

For example, the rm_Commit entry of a resource manager is called by the transaction manager when a transaction is in the prepared state and the two-phase commit protocol has made the decision to commit. The resource manager can then do whatever is required to commit its portion of the transaction, such as releasing locks or sending output messages. Details of what resource managers are expected to do at each of these entry points are explained in Chapters 10 and 11.

As mentioned, the number of callback entries provided by a resource manager depends on its level of sophistication. The types of servers discussed in the previous subsection need not provide any callback entries. They do not write log data, so they have nothing to undo or to redo. They also do not keep other context data in their own domain, which means their vote on commit is implicitly Yes when they return to the caller. This can be put in a simple rule of thumb: if the resource manager maintains any durable objects, it must be able to accept rollback and prepare/commit callbacks from the transaction manager. It must write log records and, consequently, must be able to act on undo/redo callbacks. If it has all of its state maintained by other resource managers (e.g., SQL servers), then it becomes their problem to maintain the ACID properties of the state. There is a slight deviation from this rule in case of resource managers that do not maintain durable objects but do have to remember all invocations by the client within one transaction; for example, to check deferred integrity constraints. This is discussed in Subsection 6.2.4. The rm_Checkpoint callback is listed for completeness only; its meaning is described in Chapter 11.

The resource managers, on one hand, and the transaction manager, on the other, usually run in different address spaces, all of which may be different from the address space the TP monitor uses. All addressability issues with respect to resource managers are handled by the TP monitor. Most of the callbacks, however, are needed by the transaction manager; thus, there must be a way for the transaction manager to point to the right entry points without actually knowing the addresses. This issue is discussed in the next subsection.

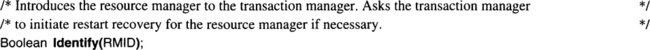

There is one last call from the resource manager to the transaction manager that is needed for start-up, and especially for restart after a failure. This call, Identify, informs the transaction manager that the resource manager is in the process of opening for business and wants to do any recovery that might be necessary. The transaction manager responds by providing the resource manager with all the recovery data that might be relevant; to do that, it uses the rm_restart, rm_UNDO, and rm_REDO callbacks. Details are presented in Section 6.5. The point is that after the Identify call returns, the resource manager has caught up with the most recent transaction-consistent state and from now on can accept service requests. The function prototype declaration looks as follows:

The resource manager just passes its RMID and gets a boolean back; it is TRUE if the transaction manager has gone successfully through the restart logic for that resource manager. If FALSE is returned, something serious went wrong, and the resource manager cannot be declared up.

Since Identify is needed only to start up those resource managers that have to recover durable state (sophisticated resource managers), simple resource managers and application programs do not have to call Identify at all.

The right-hand side of Figure 6.2 shows the interaction between the resource manager and servers other than the system resource managers. These could be other low-level resource managers, such as the log manager or the context manager, or any other server in the system. With each of them, the resource manager could agree on additional callback entries. The invocations of these “other” resource managers need no further explanation; they are simply TRPCs.

6.2.3 Interfaces between Resource Managers and the TP Monitor

At this point, it is necessary to remember that TRPCs never go directly from the client to the server; rather, they are forwarded by the TRPC stub, which is linked to each resource manager’s address space (see Figure 5.11). From a logical perspective, then, clients, servers, and system components are invoking their respective interfaces, but the TRPC stub intercepts the requests and thus enables the TP monitor to act upon them as necessary. This is to say, the TP monitor plays a crucial role in resource manager invocation. The TP monitor does, however, have some other roles: it is the component responsible for starting up the transaction system (bringing up the TPOS), for closing it down again, and for administering all the transaction-related resources. Startup and shutdown are described in some detail in Section 6.5; here we only introduce the TRPCs needed after startup. The administrative functions are not explained in this book; they only require updating the repository database and, as such, are part of the general operations interface, which is not described here.

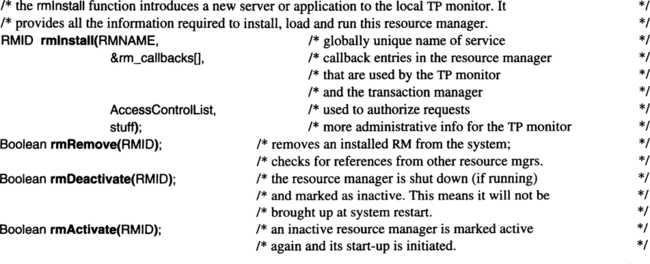

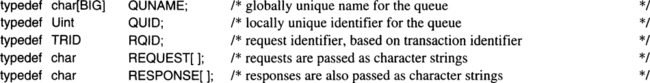

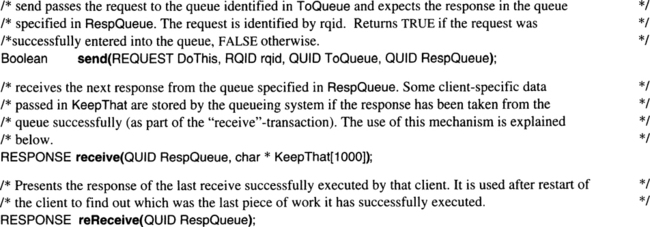

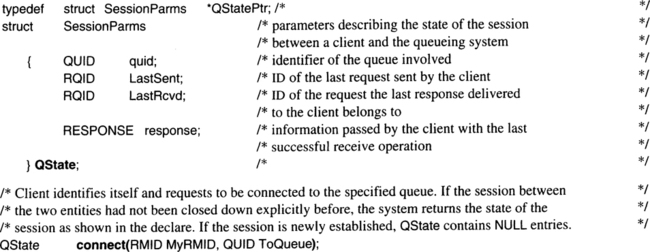

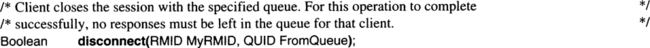

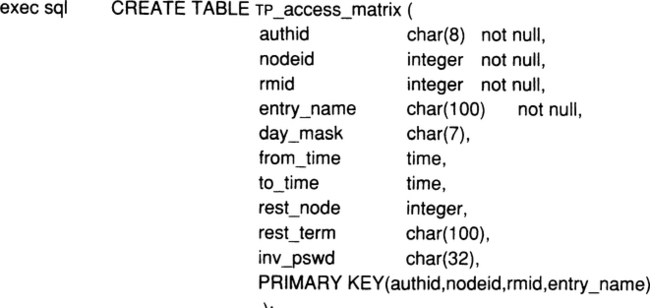

The procedure calls required for system startup and shutdown are exchanged directly among the resource managers and the TP monitor. The administrative TRPCs are exchanged among the TP monitor and the repository resource manager. All this is depicted by the “lightning” in Figure 6.2. Let us start with the function calls used to install a new resource manager or to remove an existing one:

The parameter list of rmlnstall is very rudimentary. The first three parameters are spelled out. RMNAME is the user-supplied global external identifier for the new service; its actual uniqueness will be checked against the repository when the resource manager is installed.

The next parameter is an array of pointers to the callback entries in the resource manager. These callback entries are specified as relative addresses in the load module for that resource manager; as a result, invoking that callback entry means that the TRPC stub branches to the respective address. There is a convention saying that, for example, the first element of the array is the address of the rm_Startup entry, the next element is the address of the rm_Prepare entry, and so on. The TP monitor keeps this array in the TRPC stub of the respective resource manager’s address space. If the transaction manager issues a TRPC to such a callback entry, it has to specify three things: first, the name of the entry to be called; second, the RMID of the resource manager the call is directed to; and third, the parameters that go with the call. The TRPC stub then takes the entry name and associates it with the index in the rm_callbacks array. The copy of that array for the resource manager identified by RMID then contains the actual address to branch to.

As with the callbacks for the transaction manager, these entries need not be provided by simple resource managers; such resource managers can just be loaded or canceled. Sophisticated resource managers, however, may have files to open (close) or other initial (terminal) work to do. Note that in a real system there is more to say about callbacks than just an address in the event the resource manager needs to be called back for the respective event. Especially in cases where no address is provided, additional information is required by the transaction manager. For example, if a resource manager specifies no rm_Prepare entry, this indicates that under all circumstances its vote on commit is Yes. But this convention of “no entry = blind agreement” is not generally applicable. For example, specifying no rm_Rollback_Savepoint entry might indicate that the resource manager has no durable state and therefore does not object to a rollback request. It might, on the other hand, say that the resource manager is able to abort the transaction but cannot return to any local savepoint. Thus, for each missing callback address, the transaction manager must be told the default result of that function for the respective resource manager.

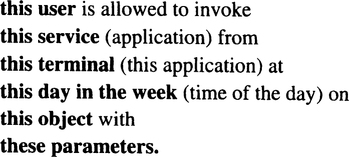

The remaining parameters are left vague. They merely indicate that more information is needed when installing a new resource manager. There must, for example, be an access control list that allows the TP monitor to check whether an incoming request for that resource manager is acceptable from a security point of view. Other necessary declarations include the location from which the code for the resource manager can be loaded, which other resource managers need to be available before this one can start, what its priority should be, and what the resource consumption is likely to be (processor load and I/O per invocation). Some of these issues are discussed in Section 6.5.

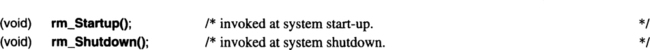

The second group of functions is very straightforward; they were mentioned in the description of Identify. The transaction manager invokes the callbacks for driving the transaction protocols, and the TP monitor brings a resource manager to life by calling rm_Startup. When this TRPC returns, the TP monitor knows that this resource manager is open for service (see Section 6.5). Conversely, when rmDeactivate has been called from the administrator, the TP monitor invokes rm_Shutdown and from now on rejects all further calls to that server class.

No program can participate as a resource manager in a transaction unless it has properly registered with the TP monitor. The TP monitor enters the new service to the name server, which is part of the repository, then checks its resource requirements and its dependencies on other resource managers. Note that this attitude is different from the somewhat anarchic way distributed computing is done in PC or workstation networks. There a server can come up and announce itself to the network by entering its name and address into the name server, and from then on everybody who is interested can send requests to that server directly via RPC. The scheme used by TP monitors is more controlled in the sense that there is always a TPOS, especially a TP monitor, in the invocation path. The major advantage of this approach over the anarchic one is obvious: Going through the TPOS layer allows system-wide end-to-end control, authentication, and load balancing. One can argue that the same control would be possible in the anarchic scheme by proper cooperation among the RPC mechanism and the name server. While this is true, it means that that scheme would effectively evolve into a TPOS.

6.2.4 Resource Manager Calls versus Resource Manager Sessions

Thus far, we have seen the problems of invoking different types of resource managers via transactional remote procedure calls. As mentioned at the outset, however, there is a second aspect to TRPCs that goes beyond the scope of one invocation: context. This is a loaded topic—and an important one—so we will try to give it careful treatment.

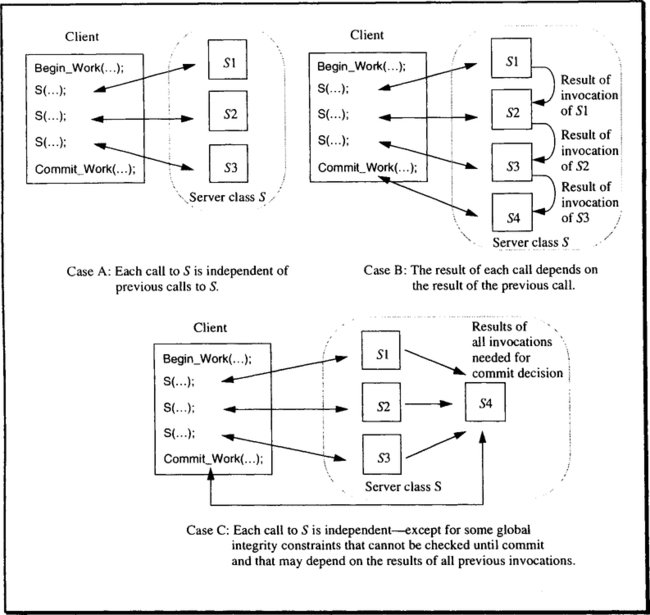

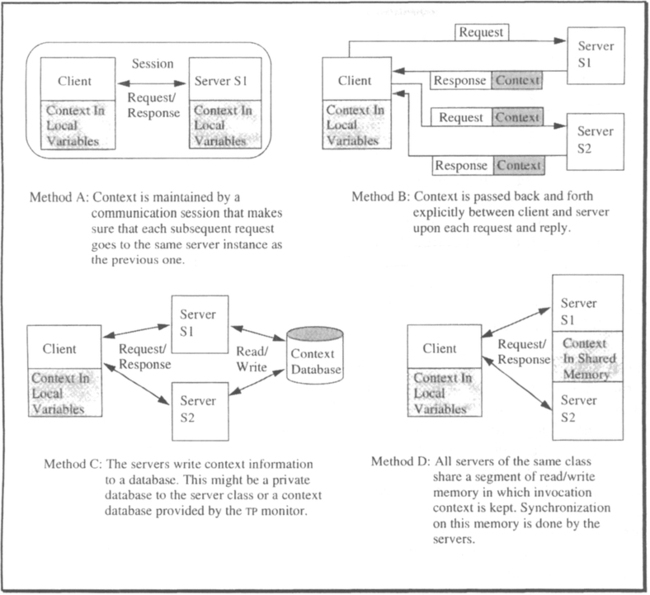

The fundamental question can be put like this: If, in the course of one transaction, a client C repeatedly invokes server class S, how should that be handled? To appreciate that there is a problem at all, note that S denotes a server class, and each invocation can go to a different process belonging to that class. Since these processes have separate address spaces, process Si has no information about what process Sk has done on behalf of C. With these constraints in mind, consider the following scenarios, which are illustrated in Figure 6.4:

Independent invocations. A server of class S can be called arbitrarily often, and the outcome of each call is independent of whether or not the server has been called before. Moreover, each server instance can forget about the transaction by the time it returns the result. Since the server keeps no state about the call, there is nothing in the future fate of the transaction that could influence the server’s commit decision. Upon return, the server declares its consent to the transaction’s commit, should that point ever be reached. An example for this is a server that reads records from a database, performs some statistical computations on them, and returns the result.

Invocation sequence. The client wants to issue service requests that explicitly refer to earlier service requests; for example, “Give me the next 10 records.” The requirement for such service requests arises with SQL cursors. First, there is the OPEN CURSOR call, which causes the SELECT statement to be executed and all the context information in the database system to be built up. As was shown, this results in an rmCall to the SQL server. After that, the FETCH CURSOR statement can be called arbitrarily often, until the result set is exhausted. If it was an update cursor, then the server cannot vote on commit before the last operation in the sequence has been executed; that is, the server must be called at its rm_Prepare() callback entry, and the outcome of this depends on the result of the previous service call.

Complex interaction. This is the general case, of which only a simple version is shown in Figure 6.4 (Case C). The server class must remember the results of all invocations by client C until commit, because only then can it be decided whether certain deferred consistency constraints are fulfilled. Think of a mail server with which the client can interact. The server creates the mail header during the first interaction, the mail body during the next interaction, and so on. The mail server stores the various parts of a message in a database. In principle, then, all these interactions are independent; the client might as well create the body first, and the header next. However, the mail server maintains a consistency constraint that says that no mail must be accepted without at least a header, a body, and a trailer. Since this constraint cannot be determined until commit, there must be some way of relating all the updates done on behalf of the client when the server is called (back) at rm_Prepare—even if this call goes to a process that has not been called upon before. Note that all sophisticated resource managers, such as SQL database systems, entertain such complex interactions with their clients.

This whole issue of relating multiple calls of one client to the same server class is one facet of an almost religious debate that has been going on in the field of distributed computing for many years. It has to do with the question of whether computations should be session oriented or service-call oriented. This is not the place to resolve this question, but let us use the previous example to consider the fundamental issue.

This whole debate has some of the flavor of the data modeling discussions circling around the question of whether all information must be expressed by values (the relational camp), or whether positional information should be allowed, too (the hierarchical, network-and object-oriented camp). Common terms for this controversy are connection-less versus connection-oriented, context-free versus context-sensitive, stateless versus stateful, datagram versus session.

A client and a server are said to be in session if they have agreed to cooperate for some time, and if both are keeping state information about that cooperation. Thus, the server knows that it is currently servicing that client, what that client is allowed to do, which results have been produced for it so far, and so forth. If the session covers an invocation sequence, the server knows which records the client refers to and has context information pointing to the last record delivered to the client. Consider the request for “the next 10 records” issued by the client to a server it contacts for the first time—this obviously would not make any sense.

The advocates of statelessness hold that nonsensical service requests like these should not be issued in the first place. Put the other way around: each remote procedure call must contain enough information to describe completely what the service request is about, without alluding to some previous request, earlier agreement, or anything of that sort.

There are, however, many situations in which it is just very convenient to have some agreed-upon state, if only for the sake of performance. Consider the example of the cursor from which the next n tuples are read. One could think of an implementation that passes with each new request the identifier of the last record read with the previous request; that way, the server knows where to continue. But that would solve only part of the problem. Without context, each new request would have to be authenticated and authorized; that is, the server would have to decide over and again whether the client is allowed to read these records. If there is state, then the result of the security check done upon the first request is kept in the context on the server’s side.

As will become obvious in the following chapters, there is even more context that needs to be preserved in order to achieve the ACID properties. For example, guaranteeing atomicity and isolation requires the server to remember all the records a transaction has touched so far—and not just the most recent one, which would be enough to support the “fetch next” semantics. Furthermore, a transaction in itself establishes a frame of reference that can (and must) be referred to by all instances that have participated in it. Just to make the decision whether “this transaction” should be committed or aborted requires some context from which to derive which state the transaction is in (from each client’s or server’s perspective). Of course, since a transaction can involve an arbitrary number of agents, it is by nature more general than a session, which is always peer-to-peer. But the important similarity at this point is the necessity of context that can be referred to by all instances involved. The following discussion is focused on the issue of how to achieve shared context.

The problem of “sessions” versus independent rmCalls will come up over and again during this chapter. The position taken here is that the difference between the two at the level of the resource manager interface is not that dramatic and, therefore, the issue should not be overrated (and overheated). There are situations where maintaining context on both sides lends itself naturally toward supporting certain types of (important) resource manager request patterns. In other cases, keeping the context on one side is the more economical solution. Let us briefly sketch the different methods for keeping client-server context; it turns out that client-server association via context is a fairly general phenomenon and allows for many different implementations. Four structurally different approaches are sketched in Figure 6.5.

It is easy to see that each of these techniques can, in principle, be used to maintain the relationships among server invocations as they are illustrated in Figure 6.4. This is not to say, though, that the techniques are arbitrarily interchangeable; they are quite different with respect to the burden they put on both the client’s and the server’s implementation in terms of cost for maintaining the processing context.

Having the communication system manage the context is the simplest approach for both sides. There is some magic around them, called session, that makes sure all invocations from the same client go to the same instance of the server. Thus, the server behaves like a statically linked subroutine, which means it can keep its context in local program variables. This applies not only to the normal service invocations, but also to any callbacks the server may get from the transaction manager. Such a session has to be managed by the TPOS; all the client or the server has to do is declare that it needs one. The price for this is considerable overhead in the TPOS for maintaining and recovering sessions; see Chapter 10, Section 10.3. Note that only this method is a session according to the established terminology. The other three techniques implement a stateful relationship between a client and a server class, but most people familiar with communication protocol stacks would not call them sessions. This text takes a somewhat less rigid perspective: whenever there is relationship between a client and server for which context is kept somewhere, it is referred to it as a session, provided there is no risk of confusion.

Context passing relieves the TPOS of the problem of context management. Both the client and the server side cooperate in order not to get out of sync. The server decides what context it needs to carry on in case of a subsequent call. The client stores the context in between server calls and passes it along with the next invocation. There is a subtle problem when using Method B (shown in Figure 6.5) in a scenario of the type depicted in Case C of Figure 6.4. The server instance that gets invoked via rm_Prepare needs the context for making its decision. But, because this call comes from the transaction manager, there will be no context in the parameter list. The only solution is for the client to issue some “final” service call (with context), which tells the server that the client is about to call commit. If the server does not like the situation, it calls Abort_Work. If, on the other hand, the server returns normally, the client can call Commit_Work, and the server will not be called back by the transaction manager.

Keeping the context in the database gives the responsibility of maintaining context to the server. It has to write the state that might be required to act on future invocations into a file, database, or whatever. The state information must be supplied with the necessary key attributes to uniquely identify which thread of control it belongs to. The key attributes include the TRID, client RMID, and the sequence number of the invocation. Some of the work involved in maintaining the context can be offloaded to either an SQL database system or to the context management service of the TP monitor (see Section 6.5).

The important point is that with this method, an arbitrary instance of the server can be invoked to vote on commit. Using the MyTrid call, it can find out what the current transaction is; through the TRPC mechanism, it also knows which RMID it is working for, so that it can pick up the right context from the database.

The shared memory approach is similar to the solution using a context database, except that now the whole responsibility is with the server (class). Context has to be kept in shared memory, access to which must be carefully synchronized. This solution is used by very sophisticated resource managers, such as SQL database systems, and is only applicable when all instances of the server class are guaranteed to run at the same node. Chapters 13–15 discuss some of the problems related to that type of context management.

So far, we have ignored a very important distinction with respect to the type of context that needs to be maintained from a server’s perspective. Considering our various examples, one can easily see that there are two types:

Client-oriented context. This reflects the state of an interaction between a client and a server. The solutions presented in Figure 6.5 implicitly assume that this is the type of context to be managed. Typical examples are cursor positions, authenticated userids, and so on.

Transaction-oriented context. This type of context is bound to the sphere of control established by a transaction rather than to an isolated client-server interaction. Consider the following example: Client C invokes server S1, which in turn invokes server S3—all within T. After return from the service call, C invokes S2 (a different server class), which also invokes S3, but needs the context established by the earlier call to S3 from S1. Case C) in Figure 6.4 describes a similar situation. The point here is that the context needed by S3 is not bound to any of the previous client-server interactions, but it is bound to the transaction as such.3 This leads back to the argument about the similarities between sessions and transactions in terms of context management. Examples of transaction-oriented context are deferred consistency constraints and locks (see Chapter 7).

A general context management scheme must be able to cope with both types of state information; that is, it must distinguish whether a piece of context is identified by the client-server interaction or by the TRID. Note that communication sessions can only be used to support client-oriented context. Exercises 4 and 5 are concerned with ways to maintain both types of context based on the mechanisms introduced in Figure 6.5.

Now let us return to the example of the SQL cursor; the only way to avoid any kind of session-like notion at the TRPC level is to use Method B. In this case, all the cursor management is done by some piece of code in the client’s process. The server delivers all the result tuples to that local cursor manager and can then forget about the query. Assuming that the cursor navigates over a large relation, this is an expensive solution.

To get reasonable performance, then, the server must be involved somehow. It has to maintain the result produced by the SELECT of the cursor definition and must record the current cursor position in that result set. In other words, it must be in a position to make sense of a FETCH NEXT service request. The client and server share responsibilities for maintaining context. They must decide what context information about their interaction is to be kept from now on. Having both maintain the same context would be overkill. For example, if the client issues a SELECT, which he knows returns at most one tuple, then no client context is needed; only if he wants to process a cursor must context be maintained for the client.

Transaction-oriented context must be maintained by each server that manages persistent state, no matter what the invocation patterns with its clients look like. This aspect is elaborated over the course of the next five chapters.

The ground rule is this: Each server that manages persistent objects must be implemented such that it can keep transaction-oriented context. The TRPC mechanism must provide means for servers of any type to establish a stateful interaction with a client in case this should be needed (client-oriented context). Of course, both types of context must be relinquished after they have become obsolete, either after commit/abort or after an explicit request to terminate a particular client-server interaction. The TPOS provides two mechanisms to implement client-oriented context:

Session management. If context maintenance is handled through communication sessions, then the TP monitor is responsible for binding a server process to one client for the duration of a stateful invocation.

Process management. Even if the TP monitor has no active responsibility for context management, it may use information about the number of existing sessions per server for load balancing. The rationale is that an established session is an indicator for more work in the future.

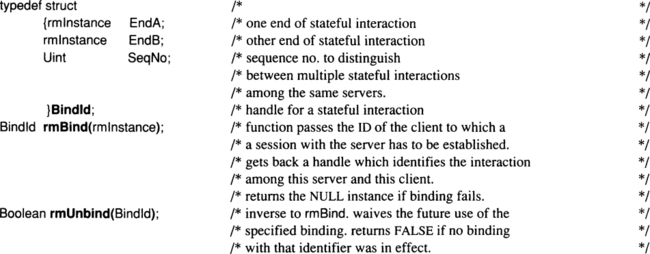

For the purpose of our presentation, we use two TRPC calls to establish and relinquish a session among a client and a server; they assume that the request for a session is always issued by the server. Here are the function prototype declares:

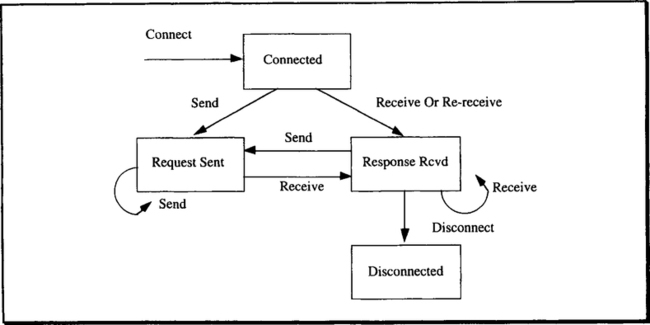

It is important to understand that a Bindld uniquely identifies an association between a client instance and a server instance. Therefore, each Bindld points to one rmlnstance on the client’s side and one rmlnstance on the server’s side. Consider the use of these functions for handling SQL cursors. The sequence of SQL statements reads like this:

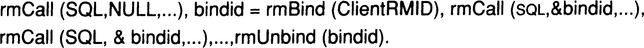

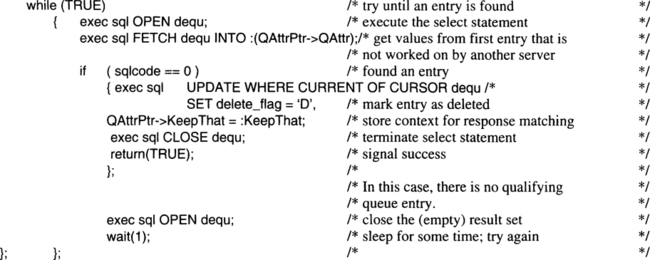

Using the ideas just introduced, this would be mapped onto the following sequence of TRPCs:4

The imbedded SQL calls on the client’s side are turned into rmCalls to the SQL server; the first one requests the open function, the next one the fetch operations, and so forth. Upon the first request, the server issues an rmBind request to the TP monitor, because the open establishes context to which the client subsequently is likely to refer. The server instance therefore asks to be bound to that client instance to make sure future client requests are mapped correctly. After this, the server acts on the function requests. The CLOSE CURSOR request is essentially a signal to the server to destroy the context; therefore—after its local clean-up work—it invokes rmUnbind. Of course, the SQL connection module on the client’s side must be able to associate cursor names with the Bindlds that are returned with the rmBind requesting to open the cursor. Since this has nothing to do with TP monitors in particular, it is not discussed here.

If rmCalls are to be issued without a preference for a particular instance of the server, rmlnstance is set to NULL; otherwise, the value for it must be determined by a preceding rmBind. Note that a client can entertain an arbitrary number of simultaneous bindings with the same server class, though of course, not with the same instance. The pair of rmBind/rmUnbind operations establishes a session between the client and the server.

There is one more interesting thing to note about the binding between client and server, especially in case of an SQL server. The example makes it look as though binding was meant only to protect cursors or the work within one transaction. However, sessions are generally used in a much wider scope. Imagine that an application program starts up, and the first thing it has to do is to find its proper database. For example, it could have to run with a test database or with the production database; it could have to use the order entry database of region A or of region B, and so on. In short, all its schema names need to be bound to the right schema description. This requires control blocks to be set up on both sides—again, a session between the client and the server. Once this is established during the startup of the application, it can be used for all subsequent interactions that refer to this schema. Cursors will, of course, work correctly, because the initial SELECT, as well as the corresponding FETCH NEXT, will all be sent to the same rmlnstance. Thus, the duration of an rmBind can be longer than one transaction; a client and a server “in session” can execute an arbitrary number of transactions across that session. But assuming that there is no parallelism within a transaction, each such session will, at any point in time, be used by at most one transaction. If this transaction aborts, the state on both sides will be rolled back, as atomicity demands. Since the session is essentially defined by the state kept on both ends, this implies that the session is also recovered to the beginning of the transaction. This is explained in more detail in Chapter 10.

It is interesting to consider who (i.e., at which level of programming) actually uses the rmBind/rmUnbind mechanism. A full-blown resource manager, designed to service many clients simultaneously, must be able to bind many server instances of itself—for load distribution, for throughput, or for other reasons. In that case the the resource manager’s program has to use the binding mechanism explicitly.

If, on the other hand, the resource manager is just a COBOL application, then no one can expect its programmer to use such tricks. It is therefore up to, say, the SQL pre-compiler or the TP monitor to handle properly the sessions established on behalf of the SQL server in order to let the COBOL program run correctly. You can consider some of the consequences of this as an exercise.

The foregoing explicitly assumes that sessions are established by servers only. This is a simplification for the purpose of keeping the presentation free of too many extras. Depending on the type of services provided and on the level of sophistication in the implementation of the clients and servers, it might well be the client asking to be bound to a particular server. Think, for example, of a piece of software that acts as a server to one side, but is a client to other servers. If it receives a request that requires binding, then it will have to go into session with its servers to be able to service the request. The discussion of which way to set up a session under which circumstances is beyond the scope of this book. In many of today’s systems, however, there is a clear distinction between clients (application programs) and servers (TP services) in terms of sophistication and complexity: applications do not want to be bothered with any aspects of concurrency, error handling, context maintenance, and so on, leaving the servers to take care of the context.

6.2.5 Summary

The TP monitor’s main task at run time is to implement transactional remote procedure calls (TRPCs). TRPCs look very much like standard RPCs in that a service can be invoked via its external name (RMNAME), irrespective of which node in the network actually runs the code for if. However, TRPCs come with a much more sophisticated infrastructure, which is embodied by the TPOS.

Apart from the automatic process scheduling and load balancing that is discussed in the following sections, the TP monitor makes sure that all resource manager invocations within a transaction are part of that transaction. Thus, even a simple program that uses none of the transactional verbs becomes attached to a transaction when invoked through a TRPC. That does not mean that updates it makes to an arbitrary resource become magically recoverable, but if that simple program in turn invokes recoverable resource managers, such as SQL databases, the TP monitor tags the invocation with the transaction ID of the simple program and thus keeps the whole execution within one sphere of control.

The actual management of the transactional protocols is done by the transaction manager. The TP monitor forwards TRPCs and guarantees that they carry the right TRID and go to the right process, depending on the TRID.

Sophisticated resource managers maintain durable objects, or at least state information, about the interaction with a client for the duration of a transaction, or longer. It has been demonstrated that the TP monitor needs to support these associations among clients and servers spanning multiple TRPCs by providing session-like concepts. In some implementations, the TP monitor is responsible for keeping state that belongs to such a client-server session.

This section has taken the perspective that resource managers and applications are structurally indistinguishable. In general, an application is simpler than a full-blown resource manager—say, an SQL database system—in that it does not actively participate in most of the transaction protocols, does not have any changes to durable storage that need to be undone, and so on; in principle, however, it could do all that if the application required that level of sophistication. The TP monitor treats resource managers and applications as the same type of objects.

6.3 Functional Principles of the TP Monitor

The crucial thing about resource managers and transactional applications is that all invocations of services other than calls to linked-in subroutines have to use the TRPC mechanism. In current operating systems, there is no way to effectively enforce these conditions; yet, we must appreciate that all bypasses and shortcuts will cause part of the work to be unprotected, resulting in dire consequences for the global state of the system. Ignoring the issue of side doors, we will assume a well-designed system according to Table 6.1, where the TPOS is in full control of all its resource managers.

As is apparent in the previous section, the TP monitor’s premier function is to handle TRPCs and all the resources pertaining to them. This section, therefore, focuses exclusively on this aspect. Some other services provided by the TP monitor—managing queues, authenticating users, and bringing up the system—are covered in the later sections of this chapter.

This section contains a large amount of technical detail. It begins by sketching the address space and process structure the TP monitor requires for managing its applications and resource managers. Based on that, the core data structures of the TP monitor and the transaction manager are declared. Then the logic for handling a TRPC is presented in some detail—first for a local invocation, and then for a remote invocation. This analysis shows that there are (at least) two topics that need further exploration: the binding of names of resource managers, and the dynamic interaction between the TP monitor and the transaction manager. These topics are covered in the next subsections. Finally, some subtleties omitted during the main discussion are explained. They illustrate a phenomenon that occurs with increasing frequency the closer one gets to the implementation details of a TP system: whenever things look nicely controlled and strictly synchronous, parallelism rears its head and calls for another round of complication. This is but one example of the complexities a TP system hides from the application.

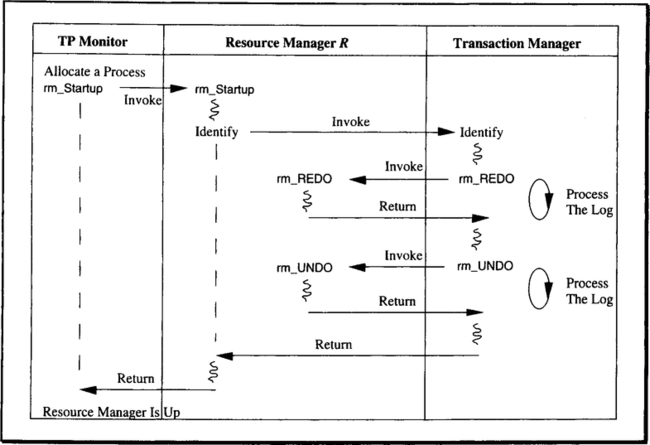

6.3.1 The Central Data Structures of the TPOS

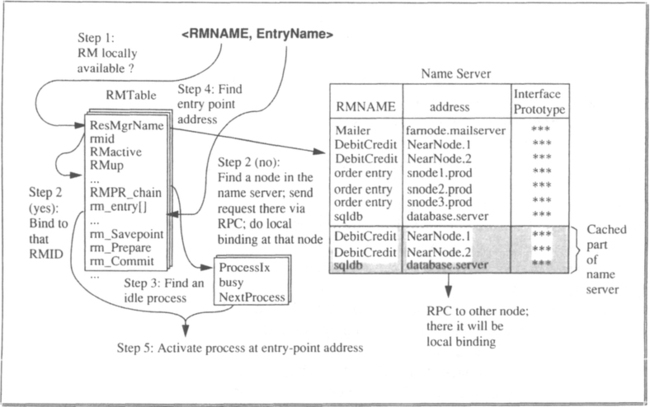

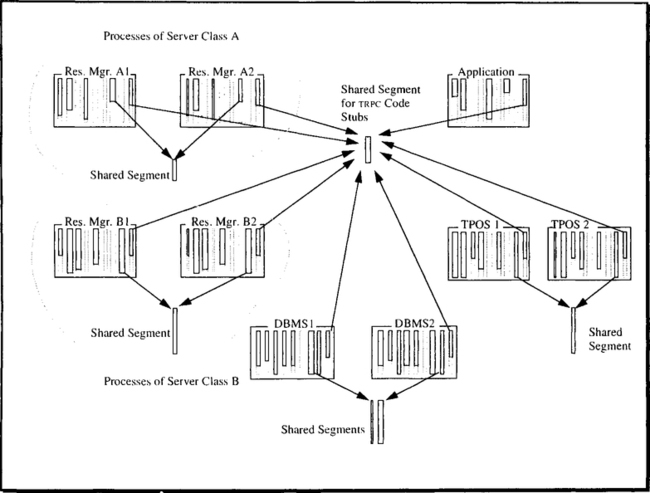

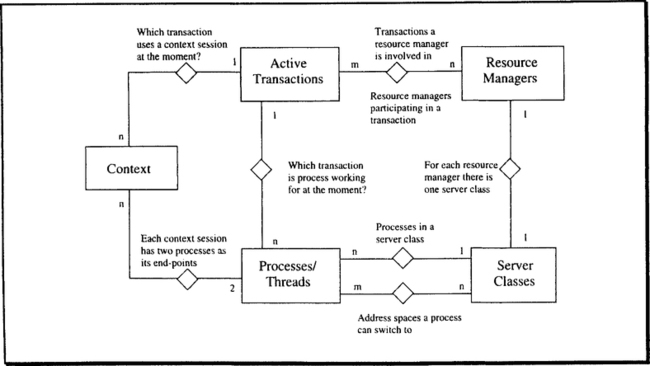

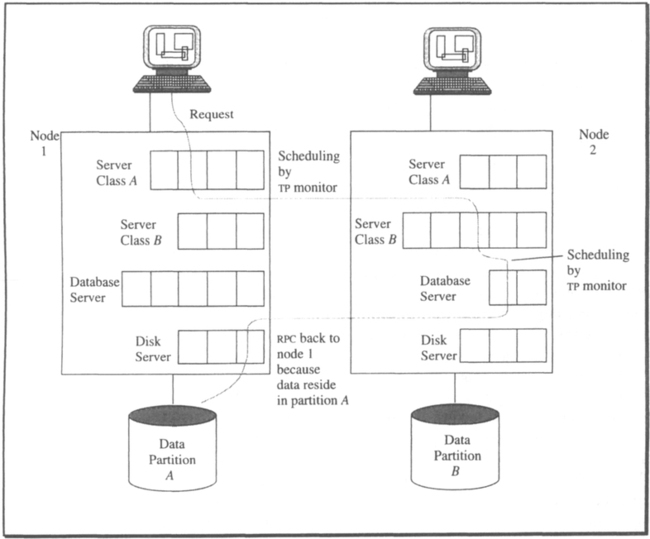

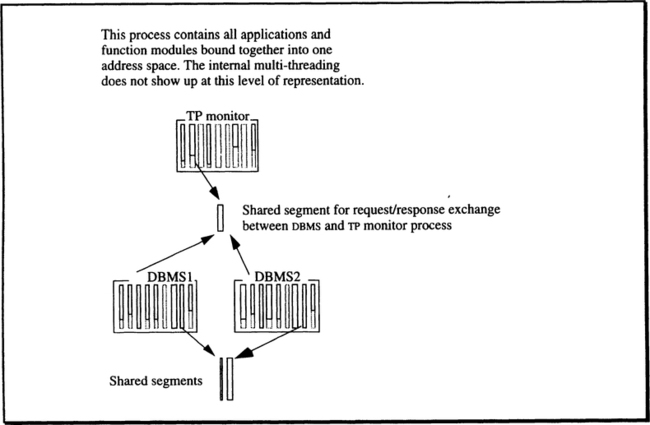

The TP monitor manages resources in terms of server classes (or resource manager types). The definition of these terms in Chapters 2 and 5 are briefly repeated here. The idea is illustrated in Figure 6.6, which is a refined version of Figure 5.11.

Remember that an RMNAME is a globally unique identifier for a service that is available in the system. At the moment the resource manager is installed at a node (function rmlnstall), it gets a locally unique RMID. At run time the TP monitor maintains a server class for each active RMID. The server class consists of a group of processes with identical address space structures; this is to say that each member of a server class runs exactly the same code. Processes in a server class are functionally indistinguishable.

For each TRPC, the TP monitor has to find the RMID for the requested RMNAME and then the server class for that RMID. If there is no unused process in that server class, the TP monitor has to create one. Otherwise, it has to select from the server class a process to receive the request. A third option is to defer execution of the TRPC for load balancing reasons; this, however, may cause deadlocks (can you see why?). All this happens within the TRPC stub.

The reason for having multiple processes running on behalf of the same resource manager is load balancing. As the load for a certain resource manager increases, the TP monitor creates more processes for it. The considerations determining how many processes should be in a given server class are outlined in Section 6.5. For the moment, let us concentrate on the address space structure required for making server class management efficient—which means low pathlength for a TRPC.

As described in Chapter 5, for all processes using TRPCs, there is one piece of shared memory, which holds the TRPC stub. This portion is displayed in the center of Figure 6.6. Each resource manager invocation via TRPC results in a branch to that stub (within the process issuing the call), and then the processing we have already sketched begins. Of course, the TRPC-stub code is re-entrant; that is, it can be executed by many processes simultaneously, as is the case for compilers, sort routines, and editors.

The remarks about what happens when a TRPC is mapped to a process do indicate that the TRPC stub needs information about the local server class configuration, as well as about remote resource managers that might be targets of TRPCs issued by local clients. For fast access, this information is kept in shared global data structures, for which the TP monitor is responsible. The segment(s) for these shared data structures is shown as the common segment for the two TPOS address spaces in Figure 6.6.

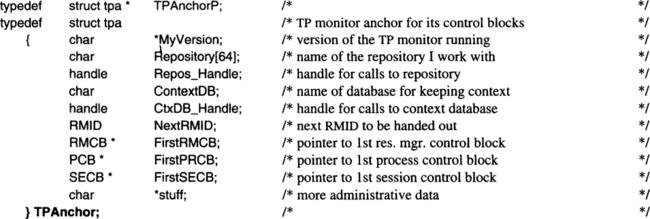

To give an idea of how the TP monitor and the other components of the TPOS cooperate, we now describe a global view of what the core data structures maintained by the TPOS are, how they hang together, and what access functions are available for them. Note that this section only presents the “grand design” plus a more detailed discussion of the TP monitor’s data structures.

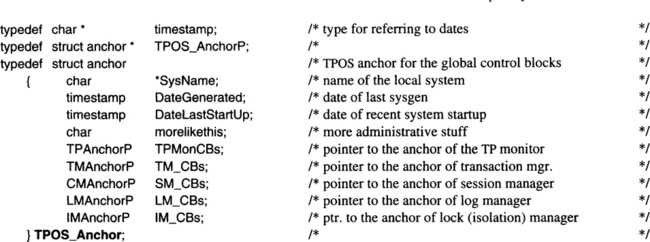

6.3.1.1 Defining the System Control Blocks

To keep things manageable, it helps to have one starting point from which all data structures can be tracked down, rather than a bundle of unrelated structures that can be located only via their names in a program. Thus, above all there is an anchor data structure providing access to the other global data structures. Its declare looks like this:

Of course, a real system anchor contains more than what is shown in the example. The TPOS_Anchor is one well-defined point in the TPOS, from which all the system data structures can be reached (provided the requestor has the required access privileges). Each resource manager is assumed to have its own local anchor, where this resource manager’s data structures are rooted. Keeping the pointers to these anchors in the TPOS_Anchor ensures that addressability can be established in an orderly manner upon system startup; this is necessary, because the TPOS comes up before any of the resource managers is activated. Note that although all the data structures to be introduced are global TPOS data structures (in that they are required for all activities of the TPOS), each one is “owned” by one of the system resource managers, which tries to hide them (that is, their physical organization) from the other components of the TPOS. The degree to which this can be done depends on the flexibility of domain protection offered by the underlying operating system.

The declaration in our example assumes five system resource managers: the TP monitor, the transaction manager, the log manager, the lock manager, and the communication manager. This is a basic set; real systems might have more.

The anchor of a system resource manager has no fixed layout; it is declared by each resource manager for itself. The TP monitor’s anchor might have the following structure:

The resource manager control blocks and the others rooted at TPAnchor need more than just declarative treatment; accordingly, they are introduced immediately following the description of the global data structures. Figure 6.7 gives an overview of which data structures the TPOS keeps in its different components and how they are related. To be precise: only the global data structures entertained by the TP monitor and the transaction manager are fully shown, because these are the ones needed for handling TRPCs. For the remaining system resource managers, Figure 6.7 shows the anchor structure but none of the global data structures managed by these components.

The flags attached to the control blocks in Figure 6.7 serve as metaphors for a special data type that has not yet been introduced: the semaphore. As explained in Chapter 8, semaphores are used to control the access of concurrent processes to data structures in shared memory. It is important to understand that the control blocks shown in Figure 6.7 will be heavily accessed, because the TPOS executes on behalf of virtually every process in the system. Since the control blocks are threaded together through different types of linked lists, it is important that update operations on these link structures be consistent. Consistency can be lost if different processes access the same control block simultaneously, applying contradictory updates to it. Again, exactly how this can happen is a subject of Chapter 8; for the the moment, just accept that the little flags called semaphores help to make sure that a process can operate on these central data structures without being disturbed by other processes.

The small control blocks in the lower part of Figure 6.7 are used to establish fast cross-references from one type of control block to the other; they are explained in Subsection 6.3.2. Before we come to that, let us introduce the access functions to the TPOS’s major control blocks.

6.3.1.2 Accessing the Central Data Structures of the TPOS

There is one system resource manager that is responsible for and encapsulates each type of control block in the central data structures. For example, the TP monitor is responsible for the resource manager control blocks, the process control blocks, and the session control blocks. The transaction manager is responsible for the transaction control blocks, and so on. The cross-reference control blocks are maintained by the resource manager at whose control block they are rooted.

Whenever a component of the TPOS executes one of its functions, the characteristics of transactional remote procedure calls require the TPOS to know:

In many cases, it also important to get the resource manager ID of the client that called upon the system resource manager. Based on such an identifier, the routine might request detailed information about the process, transaction, or resource manager. Of course, such data could be passed along with each TRPC, as is the case with remote invocations. But for local invocations, it is much more efficient to use the central control blocks where all this environmental information is kept.

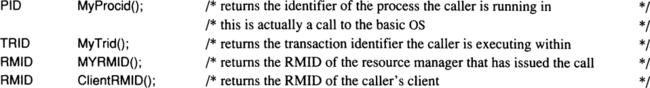

For the purposes of this and the following chapters, we define a set of functions that allow controlled access of the TPOS’s data structures. Here is the list of function prototype definitions:

These functions can be called from any process running in the transaction system. The three types of identifiers returned are accessible to anybody involved in the processing and thus need no additional privilege for reading them. The next group of functions is not as public; their use is restricted to system resource managers that form core parts of the TPOS. The ways of enforcing these access restrictions are not discussed here; just keep in mind that an arbitrary application is not allowed to call the functions declared in the following example code.

The data structures that are returned by these functions will be explained as we go along. Processes that are entitled to call, say, MyTrans will get a copy of all descriptive data for their current transaction, but no linkage information from there to other control blocks. Access to that kind of data is reserved to those who are allowed to invoke MyTransP.

6.3.2 Data Structures Owned by the TP Monitor

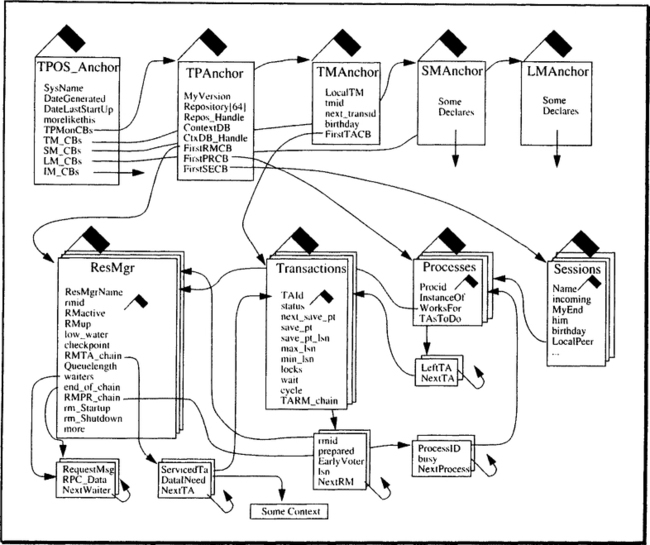

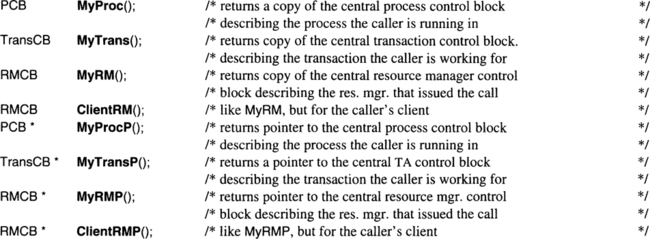

If Figure 6.7 left the reader a bit puzzled, that is because it focuses on the organizational aspects of the central data structures; that is, the addressing hierarchy and the cross-referencing among the control blocks. Let us leave this to the side for a moment and consider what has to be described in the control blocks in order to handle TRPCs as laid out in the previous section. Figure 6.8 shows an entity relationship diagram with the key entities the TP monitor has to deal with, and their relationships. Don’t worry about the fact that the data structures of some of these entities are not owned by the TP monitor; for the moment, we view them as logical data objects and try to come up with a data model reflecting the TP monitor’s perspective of the world. Mapping this view to some kind of data structure is the next step. Now consider each of the entity types and their relationships.

First, there are resource managers, the descriptions of which are stored in the repository. For each resource manager, there is a server class (and vice versa), which is the run-time environment for that resource manager. For mapping TRPCs, these two categories can be joined into one data structure that holds the static information from the repository as well as the dynamic data, such as request rates and queue lengths. This structure, called RMCB, is introduced later in this subsection.

Server classes are associated with processes in two ways. First, there is a 1:n relationship that describes which processes have been allocated for that server class. If a process is allocated for a server class, then its own, private address space contains the code of the resource manager corresponding to the server class. In other words, the process always starts processing in the code of the server class to which it has been assigned. Assigning processes to server classes is done by the TP monitor. Once such an assignment has been established, it can only be changed by killing the process.

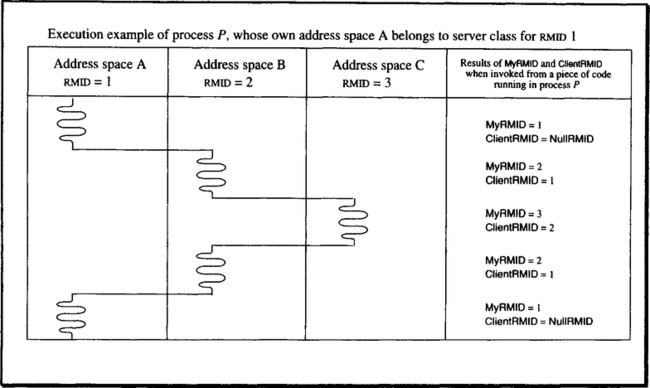

Despite the fixed assignment of processes to server classes, processes can switch address spaces during execution; that is, they can execute code of other resource managers. This is an immediate consequence of the orthogonal process/address space design laid out in Chapter 2. Of course, any process cannot switch to any address space; that has to be restricted for both scheduling and protection reasons. Thus, we need a second relationship among processes and server classes describing which processes can “domain-switch” to which address spaces. It is this ability of a process to execute in different spaces that requires the system calls MyRMID and ClientRMID. Consider the scenario shown in Figure 6.9.

Of course, there is the static association of a process to its own resource manager, that is, to the server class running for that resource manager. Because the association is static, we have not defined a separate system call for it. Rather, it is stored at a fixed entry in the process control block.

Except for a few special situations, all execution in the system is protected by a transaction. Thus, at any point in time, a process is running code on behalf of a transaction. Over time, each process will service many transactions, but if we take a snapshot of the system each process is either idle, or it works for one transaction. Conversely, each transaction can, at one instance, have many processes working for it.

The complex m:n relationship between transactions and resource managers follows immediately from that. A transaction can invoke the services of many resource managers, and each resource manager, having many processes in its server class, can at any instant be involved in many transactions.

The last aspect is context sessions in the sense of application-level stateful interactions among clients and servers. For the implementation discussion in this chapter, we assume the session technique depicted in Figure 6.5, Method A; this means reserving a server process for a client process for the duration of the stateful interaction. Therefore, each context session is associated with exactly two processes, one for the client and one for the server. Each process, on the other hand, can entertain multiple sessions at the same time. Similar arguments hold with respect to the relationship between sessions and transactions. A transaction can have many sessions, but a session, although it may be open for a long time, is associated with only one transaction at any instant.

The perspective taken for designing the central data structures of the TPOS, therefore, is to look at the relationships that can exist at any point in time between the various entity types. Some of the relationships are static, while others can vary at a high frequency. Maintaining these is largely the job of the TP monitor.

6.3.2.1 Declaring the Control Blocks

To start with, there must be a data structure for each of the entity types shown in Figure 6.8, and there must be an efficient way of maintaining the relationships among them. The following declarations are not meant to represent an actual implementation; rather, they contain a number of simplifications that keep the descriptions of the algorithms simple.

The first simplification is to assume a linked list of control blocks for the instances of each entity type, as is shown in the declares that follow. We could have as easily declared them as relations and used SQL to get to the required entries—this would have gone well with the explanations of the principles underlying the implementation of a TP monitor.

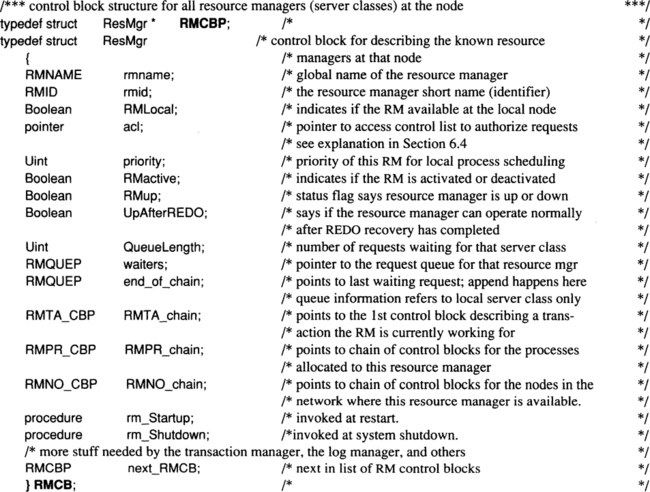

The first group of declares contains everything the TP monitor needs to know at run time about the resource managers installed at that node. The declares for the semaphores in Figure 6.7 have been left out to avoid confusion; but, of course, in a real system they have to be there. Note there is no control block for server classes. But, as Figure 6.8 indicates, at each node there is a 1:1 relationship between resource managers and server classes, so both entity types are represented by just one type of control block.

This is but the skeleton of a resource manager control block; for a complete system, more entries would be needed. However, the ones shown here are sufficient for the explanations of the TP monitor algorithms in the next sections. Note also that in a system that makes use of the address space/protection domain facility described in Chapter 2, there would not be just one central resource manager control block. Rather, the TP monitor would have one control block with the entries it needs, the transaction manager would have another one, the log manager would keep resource manager control blocks, and so on. (This aspect is ignored during the following presentations.)

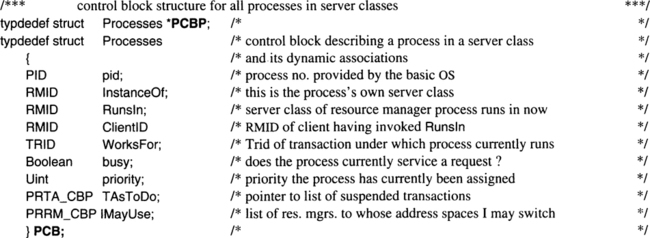

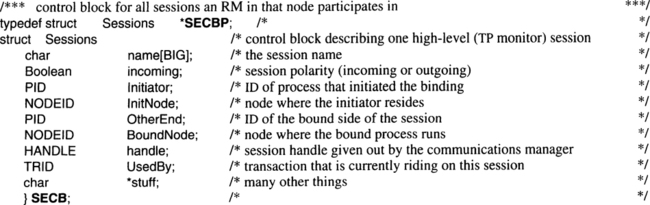

The process control block is simple in comparison to the resource manager control block:

The third group of objects for which the TP monitor is responsible is the sessions among clients and servers. There is not very much to remember about them from the TP monitor’s point of view. Note, however, that in case one end of the session is at a remote site, communication will travel along a communication session, for which the communication manager is responsible. These sessions typically are not established and released on a pertransaction basis; the cost for that would be prohibitively high. Rather, sessions are maintained between processes in different nodes over longer periods of time (in much the same spirit as the Bind/Unbind mechanism); but since they can at any point in time be used by only one transaction, it is possible to recover the state of each session in accord with the ACID paradigm. The communications manager must, of course, behave like a transactional resource manager and keep enough information about the activities of each session to able to roll back in case of a transaction failure. This is described in some detail in Chapter 12. The communications manager must also keep track of which transactions are associated with which sessions (via their processes), since it might receive messages from the network saying that a certain session is broken because of a link failure, or because the other node crashed. Those messages must then be translated into an abort message for the affected local transaction.

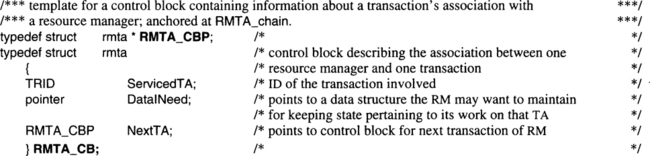

Now that the data structures for the entity types have been established, we need the control blocks for the cross-references among the entity types. Let us start with the control block for the m:n relationship between resource managers and transactions. Since this chapter is on the TP monitor, these control blocks are rooted in the RMCB; they might as well be rooted in the TACB, or in both.

The interesting thing about this data structure is the variable DatalNeed. It allows a resource manager (not a particular process from its server class) to maintain context for a transaction. This is a simple version of the context management techniques depicted in Figure 6.5. The way it is declared here is a mixture of keeping context data in the database and of putting it into shared memory.

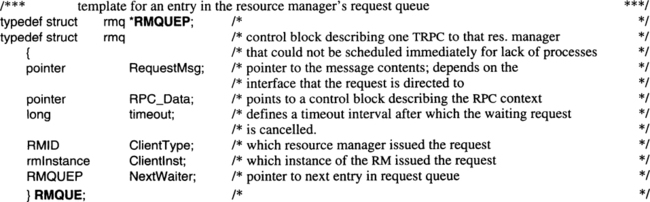

Another type of control block rooted at RMCB is needed to implement volatile queues. These queues have to be kept in cases where there are more TRPCs for a resource manager than the server class has processes—and where the TP monitor for some reason decides not to create new processes. (See Section 6.4 for details.)

The entries ClientType and Clientlnst are provided to support detection of deadlocks that are caused by suspended TRPCs.

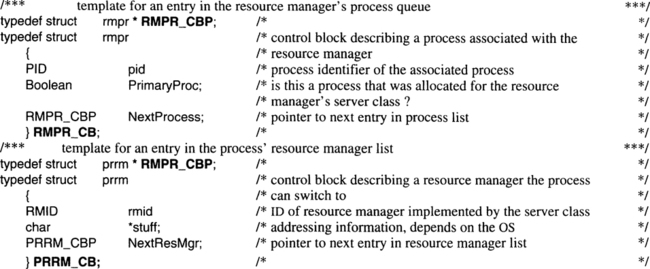

Two lists relate processes to server classes, as shown in Figure 6.8. The first one lists all the processes that may switch to a server class (i.e., to its address space), and it holds a flag saying if this is one of the processes that has been allocated for that server class. Note that the same information is also kept in the PCB entry InstanceOf.

The second list starting at PCB contains the RMIDs whose code a process is allowed to execute. That is, it can switch to the address space of the corresponding server class.

The other reference data structures mentioned in Figure 6.7 are organized in exactly the same way and therefore need not be spelled out explicitly. The transaction control block and the data structures that go with it are introduced in Chapter 11, Section 11.2.

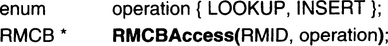

When describing the algorithms for handling TRPCs, it will be convenient to have routines that access the control blocks declared above (such as RMCB, PCB) via their primary keys. Of course, these routines can be used only inside the components owning the respective control block. From the outside, the more restricted retrieval operations introduced at the beginning of this subsection are available.

The ancillary routines perform the following function: given the identifier of the object type, they return the pointer to the corresponding control block upon lookup, or they return the pointer to a newly allocated control block upon insert. The following prototype declaration illustrates this for the RMCB:

The operation is INSERT if a new control block is required for the given RMID, in which case there must be no existing entry with that RMID. The function returns the pointer to the control block that has been allocated for the new resource manager. If the operation is LOOKUP, the function returns the pointer to the control block belonging to the given RMID. In either case, the function returns NULL if something goes wrong. Note that access is defined via the RMID; one can easily imagine an equivalent function for accessing RMCB via RMNAME. Similar functions are assumed for the process and the session control block.

6.3.3 A Guided Tour Along the TRPC Path

This subsection takes you through a complete TRPC thread. We start at the moment the TRPC is issued by some resource manager, follow it through the TRPC stub, see how control is transferred to the callee, and then, upon return, trace the way back to the caller. To avoid unnecessary repetition, we will divide the path into a number of steps:

Local call processing. This is what happens in the caller’s TRPC stub to find out what kind of call is being made, where it is directed, and so on.

Preparing outgoing calls. In case the call is not local—that is, the server invoked runs in a different node—the communication manager has to get involved to send the message off to the other side.

Incoming call processing. When a TRPC from another node has arrived, it must be prepared so that it can be passed on to a local process.

Call delivery. After the recipient of a TRPC (be it local or remote) has been determined, it must be invoked at the proper address.

Call return. This can be described as one step, because the return path is completely set up during the call phase, no matter if it is a local or a remote call.

Let us now describe each of these steps in some detail. To do so, we rely on a mixture of explanations in plain English and portions of C code, in a style similar to that used in Chapter 3. Note, though, that what looks like C code is often just pseudo-code in C syntax. Spelling out the complete code for transactional remote procedure calls, including process scheduling and the other services of a TP monitor, would require a separate, quite sizeable book. The reader is therefore cautioned not to take the code examples as something one would want to compile and run; rather, they are a slightly stricter way of writing explanations.

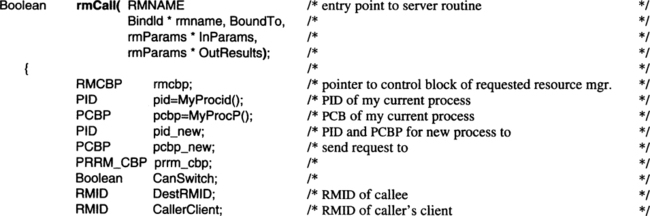

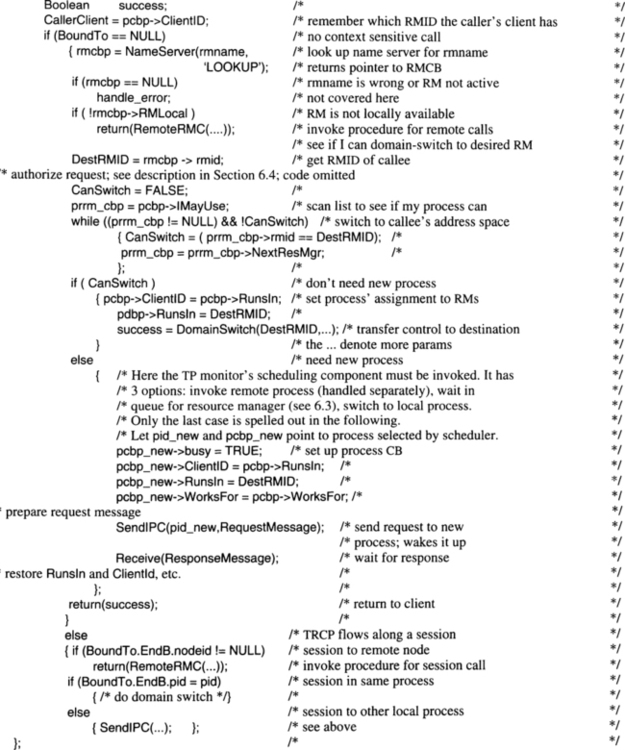

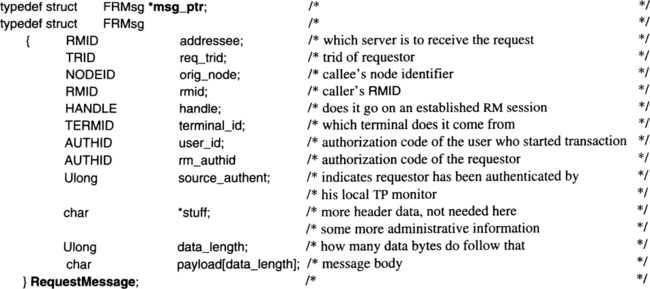

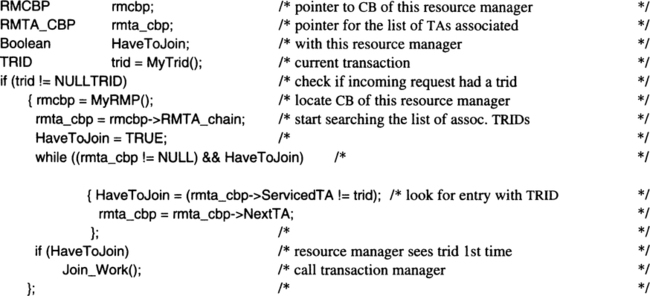

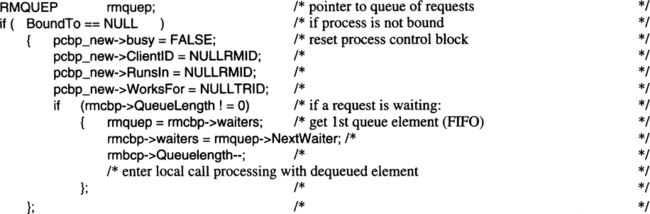

6.3.3.1 Local Call Processing

A TRPC comes from an application or resource manager running in some process. Whether it is the process’s own resource manager calling or not makes no difference at all. We assume the do-it-yourself format of the call introduced in Subsection 6.2.1, and now look at the corresponding entry in the TRPC stub. As pointed out repeatedly, this is part of each address space, so it is just a subroutine call to rmCall. This routine starts out checking what kind of request has been issued. It should be easy to get this information by following the (pseudo-) code for the first part of rmCall.