Basic Computer System Terms

Humpty Dumpty: “When I use a word, it means exactly what I chose it to mean; nothing more nor less.”

Alice: “The question is, whether you can make words mean so many different things.”

Humpty Dumpty: “The question is, which is to be master, that’s all.”

2.1 Introduction

Texts on computer systems introduce many more terms and concepts per page than do texts in other disciplines. This is a fundamental aspect of the field: Its goal is to recognize and define new computational structures and to refine those concepts. Besides the proliferation of terms, there is also widespread use of different terms to mean the same thing. Here are some synonyms one often encounters:

record = tuple = object = entity = …

block = page = frame = slot = …

file = data set = table = collection = relation = relationship =…

Of course, each author will maintain that a thread is not exactly the same as a task or a process—there are subtle differences. Yet the reader is often left to guess what these differences are.

In writing this book, we have tried to use terms consistently while mentioning synonyms along the way. In doing so, we have had to assume a basic set of terms. This chapter reviews those terms and defines them. All the terms presented here are repeated in the Glossary in a more abbreviated form.

This chapter also conveys our view that processors and communications are undergoing unprecedented changes in price and performance. These changes imply the transition to distributed client-server computer architectures. In our view, the transaction concept is essential to structuring such distributed computations.

The chapter assumes that the reader has encountered most of these ideas before, but may not be familiar with the terminology or taxonomy implicit in this book. Sophisticated readers may want to just browse this chapter, or come back to it as a reference for concepts used later.

2.1.1 Units

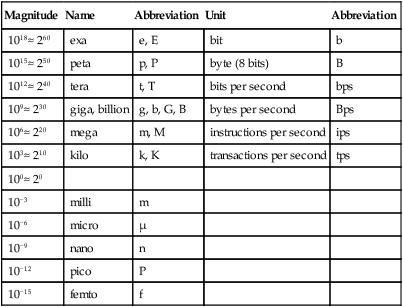

It happens that 210 ≈ 103; thus, computer scientists use the term kilo ambiguously for the two numbers. When being careful, however, they use k (lowercase) for the small kilo (103) and K for the big kilo (210). Similar ambiguities and conventions apply to mega and giga. Table 2.1 defines the standard units and their abbreviations and gives the standard names and symbols for orders of magnitude.

Table 2.1

The standard units and their abbreviations.

| Magnitude | Name | Abbreviation | Unit | Abbreviation |

| 1018≈ 260 | exa | e, E | bit | b |

| 1015≈ 250 | peta | p, P | byte (8 bits) | B |

| 1012≈ 240 | tera | t, T | bits per second | bps |

| 109≈ 230 | giga, billion | g, b, G, B | bytes per second | Bps |

| 106≈ 220 | mega | m, M | instructions per second | ips |

| 103≈ 210 | kilo | k, K | transactions per second | tps |

| 100≈ 20 | ||||

| 10−3 | milli | m | ||

| 10−6 | micro | μ | ||

| 10−9 | nano | n | ||

| 10−12 | pico | P | ||

| 10−15 | femto | f |

2.2 Basic Hardware

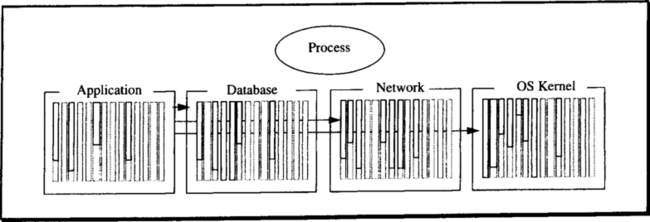

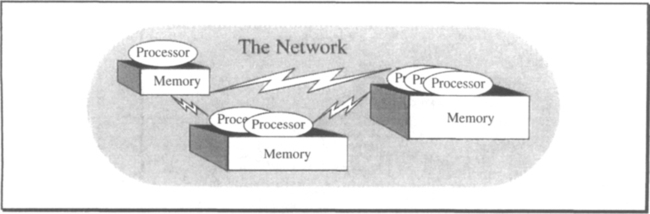

In Bell and Newell’s classic taxonomy [1971], hardware consists of three types of modules: processors, memory, and communications (switches or wires). Processors execute instructions from a program, read and write memory, and send data via communications lines. Figure 2.2 shows the overall structure of a computer system.

Computers are generally classified as supercomputers, mainframes, minicomputers, workstations, and personal computers. However, these distinctions are becoming fuzzy with current shifts in technology. Today’s workstation has the power of yesterday’s mainframe. Similarly, today’s WAN (wide area network) has the communications bandwidth of yesterday’s LAN (local area network). In addition, electronic memories are growing in size to include much of the data formerly stored on magnetic disk.

These technology trends have deep implications for transaction processing. They imply the following:

Distributed processing. Processing is moving closer to the producers and consumers of the data (workstations, intelligent sensors, robots, and so on).

Client-server. These computers interact with each other via request-reply protocols. One machine, called the client, makes requests of another, called the server. Of course, the server may in turn be a client to other machines.

Clusters. Powerful servers consist of clusters of many processors and memories, cooperating in parallel to perform common tasks.

Why are these computer architecture trends relevant to transaction processing? We believe that to be programmable and manageable, distributed computations require the ACID transaction properties. Thus, we believe that the transaction techniques described in this book are an enabling technology for distributed systems.

To argue by analogy, engineers have been building highly parallel computers since the 1960s. However, there has been little progress in parallel programming techniques. Consequently, programming parallel computers is still an art, and few parallel machines are in actual use. Engineers can build distributed systems, but few users know how to program them or have algorithms that use them. Without techniques to structure distributed and clustered computations, distributed systems will face the same fate that parallel computers do today.

Transaction processing provides some techniques for structuring distributed computations. Before getting into these techniques, let us first look at processor, memory, and communications hardware, and sketch their technology trends.

2.2.1 Memories

Memories store data at addresses and allow processors to read and write the data. Given a memory address and some data, a memory write copies the data to that memory address. Given a memory address, a memory read returns the data most recently written to that address.

At a low level of abstraction, the memory looks like an array of bytes to the processor; but at the processor instruction set level, there is already a memory-mapping mechanism that translates logical addresses (virtual memory addresses) to physical addresses. This mapping hardware is manipulated by the operating system software to give the processor a virtual address space at any instant. The processor executes instructions from virtual memory, and it reads and alters bytes from the virtual memory.

Memory performance is measured by its access time: Given an address, the memory presents the data at some later time. The delay is called the memory access time. Access time is a combination of latency (the time to deliver the first byte) and transfer time (the time to move the data). Transfer time, in turn, is determined by the transfer size and the transfer rate. This produces the following overall equation:

![]()

Memory price-performance is measured in one of two ways:

Cost/byte. The cost of storing a byte of data in that media.

Cost/access. The cost of reading a block of data from that media. This is computed by dividing the device cost by the number of accesses per second that the device can perform. The actual units are cost/access/second, but the time unit is implicit in the metric’s name.

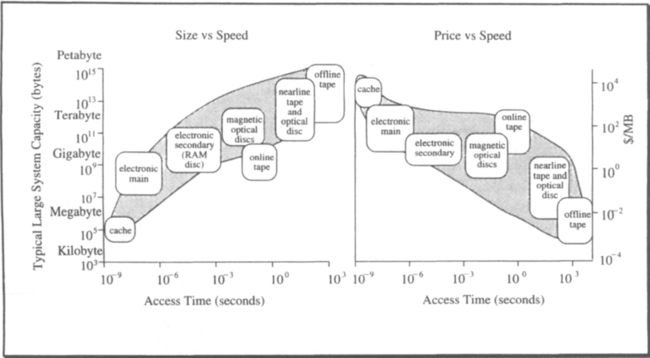

These two cost measures reflect the two different views of a memory’s purpose: (1) it stores data, and (2) it receives and retrieves data. These two functions are different. A device that does one cheaply probably is expensive for doing the other. Figure 2.3 shows the spectrum of devices and their price-performance as of 1990. Notice that the cost/byte and cost/access of these devices span more than ten orders of magnitude in capacities, access times, and cost/access.

Two generic forms of memory are in common use today: electronic and magnetic. Electronic memories represent information as charges or currents passing through integrated circuits. Magnetic memories represent information as polarized magnetic domains on a magnetic media.

Magnetic memories are non-volatile; they do not lose data if the power is turned off. Most electronic memory needs a power source to maintain the data. Increasingly, batteries are being added to electronic memories to make them non-volatile. The more detailed properties of these two memory technologies are discussed in the following two subsections. Discussion then turns to the way electronic and magnetic storage are combined into a memory hierarchy.

2.2.1.1 Electronic Memory

Byte-addressable electronic memory is generically called main memory, while block-addressable bulk electronic memory is variously called secondary storage, extended memory, or RAM disk. Usually, the processor cannot directly manipulate secondary memory. Rather, a secondary memory block (say, 10 KB) must be copied to main memory, where it is read and updated; the changed result is then copied back to secondary memory.

Electronic memories are getting much bigger. Gordon Moore observed that integrated circuit memory chips started out at 1 Kb/chip in 1970, and that since then their per-chip capacity has been increasing by a factor of four about every three years. This observation is now called Moore’s Law:

![]()

Moore’s Law implies that the 16 Mb memory chip will appear in 1991, and that the 1 Gb (gigabit) chip will appear in the year 2000. These trends have interesting implications for disks, for databases, and for transaction processing systems, but let us stick to processors and memories for a moment longer.

Memories are getting much bigger, while processors are getting faster. Meanwhile, inexpensive electronic memories are not getting much faster. Their access times are measured in tens of nanoseconds. Thus, a fast processor (say, a 1 bips machine) might spend most of its time waiting for instructions and data from memory. To mask this problem, each processor is given a relatively small, high-speed cache memory. This cache memory is private to the processor (increasingly, it is on the processor chip). The processor cache holds data and programs recently accessed by the processor. If most memory accesses “hit” the cache, then the processor will rarely wait for data from main memory.

The cache concept recurs again and again. Main memory is a cache for disk memories. Disk memories, in turn, may serve as a cache for tape archives.

2.2.1.2 Magnetic Memory

Magnetic memory devices represent information as polarized magnetic domains on a magnetic (or magneto-optical) storage media (see Figure 2.4). Some form of mechanical transport, called the drive, moves these domains past an electronic (or magneto-optical) read-write station that can sense and change the magnetic domains. This movement trades time for capacity. Doubling the area of the storage media doubles the capacity, but it also doubles the latency. Since magnetic media is very inexpensive, this time-space trade-off provides a huge spectrum of access time versus cost/byte choices (see Figure 2.3).

Two basic topologies are used to exploit magnetic media: the line (a tape) and the circle (a disk). The line has the virtue that it goes on forever (according to Euclid). The circle has the virtue that, as it rotates, the same point returns again and again. If the circle is rotated 100 times a second, the maximum latency for a piece of data to pass by the read station is 10 milliseconds. For a tape, the maximum read time depends on the tape length (≈1 km) and the tape transport speed. A maximum read time of one minute is typical for a tape. In general, tapes have excellent cost/byte but poor access times, while disks have higher cost/byte but better access times. In addition, magnetic tapes and floppy disks have become the standard media for interchanging data among computers.

Disks

Disk devices usually stack several (say, ten) platters together on a single spindle and then rotate them as a unit (see Figure 2.4). For each platter, the disk arm assembly has one read-write head, mounted on a fixed structure. The circle of data on a particular surface is called a disk track. As the disk platters rotate, the disk arm sees a cylinder’s worth of data from the many read-write heads. To facilitate reading and rewriting the data, each track is formatted as fixed-size sectors of data (typically about 1 KB). Sectors are the smallest read/write unit of a disk. Each sector stores self-correcting error codes. If the sector fails (that is, has an uncorrectable defect), a spare sector in the cylinder is used to replace it. The arm normally can move in and out across the platters, thereby creating hundreds or thousands of cylinders. This lateral movement is called seeking.

Disks have a three-dimensional address space: a sector address consists of (1) the cylinder number, (2) the track number within that cylinder, and (3) the sector number on that track. Disk controllers are getting more and more intelligent; they remap defects, cache recently read or written pages, and even multiplex an array of disks into a single logical disk to provide high transfer rates or high availability.

Modem disk controllers give a geometry-independent view of the disk. Such controllers have only two parameters: (1) the sector size and (2) the number of sectors. But the underlying three-dimensional geometry and performance of the disk has not changed. Consequently, disk access times are determined by three parameters:

![]()

High disk transfer rates come from disk striping, which partitions the parts of a large object among n disks. When the object is read or written, the pieces of it are moved in parallel to or from the n disks, yielding an effective transfer rate of n times the transfer rate of individual disks.

Today, average seek and rotation times are on the order of 10 milliseconds, rotation times are on the same order, and transfer rates are between 1 MBps and 10 MBps. These times are unlikely to change dramatically in the next decade. Consequently, access patterns to disks will remain a critical performance issue. To picture this, consider two access patterns to a megabyte of data stored in 1000 sectors of a disk:

Sequential access. Read or write sectors [x, x + 1, …, x + 999] in ascending order. This requires one seek (10 ms) and half a rotation (5 ms) before the data in the cylinder begins transfering the megabyte at 10 MBps (the transfer takes 100 ms, ignoring one-cylinder seeks). The total access time is 115 ms.

Random access. Read the thousand sectors [x, …, x + 999] in random order. In this case, each read requires a seek (10 ms), half a rotation (5 ms), and then the 1 KB transfer (.1 ms). Since there are 1000 of these events, the total access time is 15.1 seconds.

This 100:1 time ratio between sequential and random access to the same data demonstrates the basic principle: sequential access to magnetic media is tens or thousands of times faster than random access to the same data. Furthermore, transfer rates are increasing faster than access rates, so the speed differential between sequential and random access to magnetic media is increasing with time. This has two implications:

Big blocks. Transfer units for random accesses (block sizes) should be large so that transfer time compares to access time.

Data clustering. Great care should be taken by data management systems to recognize and preserve data clusters on magnetic media, so that the big blocks will move useful data (by prefetching it or postwriting it).

Chapter 13 explains how database systems use large electronic memories to cache most of the active data. While reads to cached data do not generate disk activity, writes generate a sequence of log records describing the updates. The log records of all updates form a single sequential stream of writes to magnetic storage. Periodically, the cached data is written to secondary storage (disk or nonvolatile electronic memory) as large sequential writes that preserve the original data clustering. This logging technique is now being applied to other areas, notably directory management of file servers.

A more radical approach keeps recently used data in electronic memory and writes changed blocks to magnetic storage in a sequential stream. This strategy, called a log-structured file system, converts all writes to sequential writes. Unfortunately, for many applications a log-structured file system seems to make subsequent reads of the archived data more random. There will be many innovations in this area as the gap between sequential and random access widens.

Our ability to read and write magnetic domains per unit area has been growing by a factor of ten every decade. This observation, originally made by Al Hoagland, is expressed in the formula:

![]()

Hoagland’s Law suggests that the capacity of individual disks and tapes will continue to increase. Of course, the exponential growth predicted by Moore’s Law, Hoagland’s Law, and Joy’s Law (Equations 2.3, 2.4, 2.8) must come to an end someday.

Tapes

Magnetic tape memories do not have the same high densities that magnetic disks have, because the media is flexible and not matched to the read-write head.1 Tapes, however, do have much greater area. In addition, this area can be rolled into a reel to yield spectacular volumetric memory densities (terabytes/meter3). In 1990, a typical tape fit in the palm of your hand and stored about a gigabyte.

Mounted tapes have latencies of just a few milliseconds to read or write data at the current position, in part because they use a processor to buffer the reads and writes. But the latency for a random read or write to a tape drive is measured in tens of seconds. Once a transfer starts, tape transfer rates are comparable to disk transfer rates (say, 10 MBps). Tapes, then, are the ultimate sequential devices. Tapes are typically formatted in blocks for error control and to allow rewriting.

Because a tape drive costs about as much as a disk drive (around $10/MB), tapes mounted on a tape drive have a relatively high cost/byte (see Figure 2.3). But when the tape is offline—removed from the reader—the cost/byte is reduced by a factor of a thousand, to just the cost of the media (around $10/GB). There is an intermediate possibility: nearline tape robots, which move a few thousand tapes between a storage rack and a few tape readers. The robot typically has between 2 and 10 tape drives and can dismount one tape and mount another in 40 seconds.

Tape robots are another example of trading time for space. The cost of the tape readers and the tape robot is amortized across many tapes (say, 10,000). If the robot costs $500,000, the unit cost of each tape is increased by a factor of six—from, say, $10 to $60 per gigabyte. The offline figure ignores the price of the reader needed for the offline tapes, as well as the cost of the four shifts of human operators needed to feed the reader. When those considerations are added, tape robots are very attractive.

2.2.1.3 Memory Hierarchies

The simple memory boxes shown in Figure 2.2 are implemented as a memory hierarchy (see Figure 2.5). Small, fast, expensive memories at the top act as caches for the larger, slower, cheaper memories used at lower levels of the hierarchy. Processor registers are at the top of the hierarchy, and offline tapes are at the bottom. In between are various memory devices, that are progressively slower, but that also store data less expensively.

A perfect memory would always know what data the processor needed next and would have moved exactly that data into cache just before the processor asked for it. Such a memory would be as fast as the cache. Memories cannot predict future references, but they can guess future accesses by using the principle of locality: data that was used recently is likely to soon be used again. By using the principle of locality, memories cache as much recently used data, along with its neighboring data, as possible. The success of this strategy is measured by the cache hit ratio:

![]()

When a reference misses the cache, it must be satisfied by accessing the next level in the memory hierarchy. That memory is just a cache for the next-lower level of memory; thus it, too, can get a miss, and so on.

Unless cache hit rates are very high (say, .99), the cache memory has approximately the same access time as the secondary memory. To understand this, suppose a cache memory with access time C has hit rate H, and suppose that on a miss the secondary memory access time is S. Further, suppose that C ≈ .01 • S, as is typical in Figure 2.3. The effective access time of the cache will be as follows:

Therefore, unless H is very close to unity, the effective access time is much closer to S than to C. For example, a 50% hit ratio attains an effective memory 50 times slower than cache; a 90% hit ratio attains an effective memory 11 times slower than cache. If the hit ratio is 99%, the effective memory is half as fast as cache. To achieve an effective memory speed within 10% of the cache speed, the hit rate must be 99.98%.

This simple computation shows that high hit ratios are critical to good performance. There are two approaches to improving hit ratios:

Clustering. Cluster related data together in one storage block, and cluster data reference patterns and instruction reference patterns to improve locality.

Larger cache. Keep more data in a larger cache, in the hopes that it will be reused.

Moore’s Law (Equation 2.2) says that electronic memory chips grow in capacity by a factor of four every three years. Hence, future transaction systems and database systems will have huge electronic memories. At the same time, the memory requirements of many applications continue to grow, suggesting that the relative portion of data that can be kept close to the processor will stay about the same. It is therefore unlikely that everything will fit in electronic memory.

The Five-Minute Rule

How shall we manage these huge memories? The answers so far have been clustering and sequential access. However, there is one more useful technique for managing caches, called the five-minute rule. Given that we know what the data access patterns are, when should data be kept in main memory and when should it be kept on disk? The simple way of answering this question is, Frequently accessed data should be in main memory, while it is cheaper to store infrequently accessed data on disk. Unfortunately, the statement is a little vague: What does frequently mean? The five-minute rule says frequently means five minutes, but the rule reflects a way of reasoning that also applies to any cache-secondary memory structure. In those cases, depending on relative storage and access costs, frequently may turn out to be milliseconds, or it may turn out to be days (see Equation 2.7).

The logic for the five-minute rule goes as follows: Assume there are no special response time (real-time) requirements; the decision to keep something in cache is, therefore, purely economic. To make the computation simple, suppose that data blocks are 10 KB. At 1990 prices, 10 KB of main memory cost about $1. Thus, we could keep the data in main memory forever if we were willing to spend a dollar. But with 10 KB of disk costing only $.10, we presumably could save $.90 if we kept the 10 KB on disk. In reality, the savings are not so great; if the disk data is accessed, it must be moved to main memory, and that costs something. How much, then, does a disk access cost? A disk, along with all its supporting hardware, costs about $3,000 (in 1990) and delivers about 30 accesses per second; access per second cost, therefore, is about $100. At this rate, if the data is accessed once a second, it costs $100.10 to store it on disk (disk storage and disk access costs). That is considerably more than the $1 to store it in main memory. The break-even point is about one access per 100 seconds, or about every two minutes. At that rate, the main memory cost is about the same as the disk storage cost plus the disk access costs. At a more frequent access rate, disk storage is more expensive. At a less frequent rate, disk storage is cheaper. Anticipating the cheaper main memory that will result from technology changes, this observation is called the five-minute rule rather than the two-minute rule.

The five-minute rule: Keep a data item in electronic memory if its access frequency is five minutes or higher; otherwise keep it in magnetic memory.

Similar arguments apply to objects stored on tape and cached on disk. Given the object size, the cost of cache, the cost of secondary memory, and the cost of accessing the object in secondary memory once per second, frequently is defined as a frequency of access in units of accesses per second (a/s):

![]()

Objects accessed with this frequency or higher should be kept in cache.

Future Memory Hierarchies

There are two contrasting views of how memory hierarchies will evolve. The one proclaims disks forever, while the other maintains that disks are dead. The disks-forever group predicts that there will never be enough electronic memory to hold all the active data; future transaction processing systems will be used in applications such as graphics, CAD, image processing, AI, and so forth, each of which requires much more memory and manipulates much larger objects than do classical database applications. Future databases will consist of images and complex objects that are thousands of times larger than the records and blocks currently moving in the hierarchy. Hence, the memory hierarchy traffic will grow with strict response time constraints and increasing transfer rate requirements. Random requests will require the fast read access times provided by disks, while the memory architecture will remain basically unchanged. Techniques such as parallel transfer (striping) will provide needed transfer rates.

The disks-are-dead view, on the other hand, predicts that future memory hierarchies will consist of an electronic memory cache for tape robots. This view is based on the observation that the price advantage of disks over electronic memory is eroding. If current trends continue, the cost/byte of disk and electronic memory will intersect. Moore’s Law (Equation 2.2) “intersects” Hoagland’s Law (Equation 2.4) sometime between the years 2000 and 2010. Electronic memory is getting 100 times denser each decade, while disks are achieving a relatively modest tenfold density improvement in the same time. When the cost of electronic memory approaches the cost of disk memory, there will be little reason for disks. To deal with this competition, disk designers will have to use more cheap media per expensive read-write head. With more media per head, disks will have slower access times—in short, they will behave like tapes.

The disks-are-dead group believes that electronic memories will be structured as a hierarchy, with block-addressed electronic memories replacing disks. These RAM disks will have battery or tape backup to make them non-volatile and will be large enough to hold all the active data. New information will only arrive via the network or through real-world interfaces such as terminals, sensors, and so forth. Magnetic memory devices will be needed only for logging and archiving. Both operations are sequential and often asynchronous. The primary requirement will be very high transfer rates. Magnetic or magneto-optical tapes would be the ideal devices; they have high capacity, high transfer rates, and low cost/byte. It is possible that these tapes will be removable optical disks, but in this environment they will be treated as fundamentally sequential devices.

The conventional model, disks forever, is used here if only because it subsumes the nodisk case. It asks for fast sequential transfers between main memory and disks and requires fast selective access to single objects on disk.

2.2.2 Processors

As stated earlier, processors execute instructions that read and write memory or send and receive bytes on communications lines. Processor speeds are measured in terms of instructions per second, or ips, and often in units of millions, or mips.

Between 1965 and 1990, processor mips ratings rose by a factor of 70, from about .6 mips to about 40 mips.2 In the decade of the 1980s, however, the performance of microprocessors (one-chip processors) approximately doubled every 18 months, so that today’s microprocessors typically have mips ratings comparable to mainframes (often in the 25 mips range). This rapid performance improvement is projected to continue for the next decade. If that happens, processor speeds will increase more in the next decade than they have in the last three decades. Bill Joy suggested the following “law” to predict the mips rate of Sun Microsystems processors to the year 2000.

![]()

In reality, things are going a little more slowly than this; still, the growth rate is impressive. Billion-instructions-per-second (1 bips) processors are likely to be common by the end of the decade.

These one-chip processors are not only fast, but they are also mass produced and inexpensive. Consequently, future computers will generally have many processors. There is debate about how these many processors will be connected to memory and how they will be interconnected. Before discussing that architectural issue, let us first introduce communications hardware and its performance.

2.2.3 Communications Hardware

Processors and memories (see Figure 2.2) are connected by wires collectively called the network. Regarded in one way, computers are almost entirely wires. But the notion of wire is changing: While some wires are just impurities on a chip, others are fiber-optic cables carrying light signals. The basic property of a wire is that a signal injected at one end eventually arrives at the other end. Some wires do routing—they are switches—while others broadcast to many receivers; here, the simple model of point-to-point communications is considered.

The time to transmit a message of M bits via a wire is determined by two parameters: (1) the wire’s bandwidth—that is, how many bits/second it can carry—and (2) the wire’s length (in meters), which determines how long the first bit will take to arrive. The distance divided by the speed of light in the media (Cm ≈ 200 million meters/s in a solid) determines the transmission latency. The time to transmit Message_Bits bits is approximately

![]()

Transmit times to send a kilobyte within a cluster are in the microsecond range; around a campus, they are in the millisecond range; and across the country, the transmit time for a 1 KB message is hundreds of milliseconds (see Table 2.6).

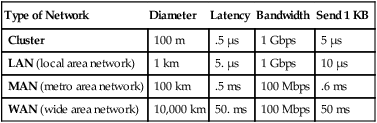

Table 2.6

The definition of the four kinds of networks by their diameters. These diameters imply certain latencies (based on the speed of light). In 1990, Ethernet (at 10 Mbps) was the dominant LAN.3 Metropolitan networks typically are based on 1 Mbps public lines. Such lines are too expensive for transcontinental links at present; most long-distance lines are therefore 50 Kbps or less. As the text notes, things are changing (see Table 2.7).

| Type of Network | Diameter | Latency | Bandwidth | Send 1 KB |

| Cluster | 100 m | .5 μs | 1 Gbps | 10 μs |

| LAN (local area network) | 1 km | 5. μs | 10 Mbps | 1 ms |

| MAN (metro area network) | 100 km | .5 ms | 1 Mbps | 10 ms |

| WAN (wide area network) | 10,000 km | 50. ms | 50 Kbps | 210 ms |

The numbers in Table 2.6 are “old”; they are based on typical technology in 1990. The proliferation of fiber-optic connections, both locally and in long-haul networks, is making 1 Gbps local links and 100 Mbps long-haul links economical. Bandwidth within a cluster can go to a terabit per second, if desired, but it is more likely that multiple links in the 1 Gbps range will be common. Similar estimates can be made for MANs and WANs. The main theme here is that bandwidth among processors should be plentiful and inexpensive in the future. Future system designs should use this bandwidth intelligently. Table 2.7 gives a prediction of typical point-to-point communication bandwidths for the network of the year 2000. These predictions are generally viewed as conservative.

Table 2.7

Point-to-point bandwidth likely to be common among computers by the year 2000.

| Type of Network | Diameter | Latency | Bandwidth | Send 1 KB |

| Cluster | 100 m | .5 μs | 1 Gbps | 5 μs |

| LAN (local area network) | 1 km | 5. μs | 1 Gbps | 10 μs |

| MAN (metro area network) | 100 km | .5 ms | 100 Mbps | .6 ms |

| WAN (wide area network) | 10,000 km | 50. ms | 100 Mbps | 50 ms |

Table 2.7 indicates that cluster and LAN transmit times will be dominated by transfer times (bandwidth), but that MAN and WAN transmit times will be dominated by latency (the speed of light). Parallel links and higher technology can be used to improve bandwidth, but there is little hope of improving latency. As we will see in Section 2.6, cluster, LAN, and MAN times are actually dominated by software costs—the processing time to set up a message and the marginal cost of sending and receiving each message byte.

2.2.4 Hardware Architectures

To summarize the previous two sections, processor and communications performance are expected to improve by a factor of 100 or more in the decade of the 1990s. This is comparable to the improvement in the last three decades. In addition, the unit costs of processors and long-haul communications bandwidth is expected to drop dramatically.

2.2.4.1 Processor-Memory Architecture

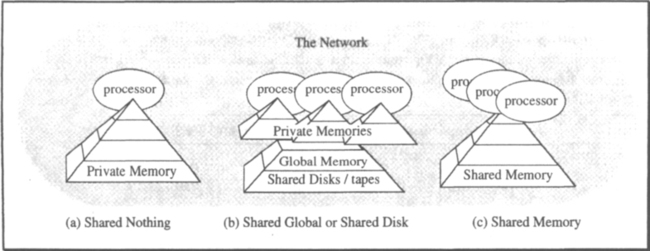

How will these computers be interconnected? Where are processors in the memory hierarchy? How do processors fit into the communications network? The answers to these questions are still quite speculative, but we can make educated guesses. First, let us look at the question of how processors will attach to the memory hierarchy. Figure 2.8 shows three different strategies for this.

Shared nothing. In a shared-nothing design, each memory is dedicated to a single processor. All accesses to that data must pass through that processor.4 Processors communicate by sending messages to each other via the communications network.

Shared global. In a shared-global design, each processor has some private memory not accessible to other processors. There is, however, a pool of global memory shared by the collection of processors. This global memory is usually addressed in blocks (units of a few kilobytes or more) and is RAM disk or disk.

Shared memory. In a shared-memory design, each processor has transparent access to all memory. If multiple processors access the data concurrently, the underlying hardware regulates the access to the shared data and provides each processor a current view of the data.

Pure shared-memory systems will probably not be feasible in the future. Each processor needs a cache, which is inherently private memory. Considerable processor delays and extra circuitry are needed to make these private caches look like a single consistent memory. If, as often happens, some frequently accessed data appears in all the processor caches, then the shared-memory hardware must present a consistent version of it in each cache by broadcasting updates to that data. The relative cost of cache maintenance increases as the processors become faster.

One can picture this by thinking of the processor event horizon—the round-trip distance a processor can send a message in one instruction time. This time/distance determines how much interaction the processor can have with its neighboring processors. For a 1 bips processor, the event horizon is about 10 centimeters (≈4 inches). This is approximately the size of a processor chip or very small circuit board. Since wires are rarely straight, the event horizon of a 1 bips processor is on chip. This puts a real damper on shared memory.

As cache sizes grow, much of the program and data state of a process migrates to the cache where that process is running. When the process runs again, it has a real affinity to that processor and its cache. Because running somewhere else will cause many unneeded cache faults to global memory, processes are increasingly being bound to processors. This effectively partitions global memory, resulting in a software version of the shared-nothing design.

As a related issue, each device will be managed by a free-standing, powerful computer that can execute client requests. Requests will move progressively closer to the devices. Each keyboard and display, each disk, each tape robot, and each communications line will have its own dedicated processor. Consider, for example, the controller for a tape robot. This processor will manage an expensive device. The controller’s job is to optimize access to the device, deal with device failures, and integrate the device into the network. The controller will store a catalog of all the tapes and their contents and will buffer incoming data and prefetch outgoing data to optimize tape performance. Programming this software will require tools to reduce development and maintenance costs. Thus, the tape robot will probably run a standard operating system, include a standard database system, and support standard communications protocols. In other words, the tape robot controller is just a general-purpose computer and its software, along with a standard peripheral. Figure 2.9 diagrams this hardware.

Similar arguments apply to display controllers, disk controllers, and bulk electronic memory controllers (RAM-disks). Each is becoming a general-purpose processor with substantial private memory and a memory device or communications line behind it. Global memory devices are becoming processors with private memory.

2.2.4.2 Processor-Processor Architecture

Based on the previous discussion, shared-nothing and shared-global processors are likely to be grouped to form a single management entity called a cluster. The key properties of these clusters (see Table 2.7) are:

High communication bandwidth. Intra-cluster links can move bulk data quickly.

Low communication latency. Intra-cluster links have latency of less than 1 μs.

Clusters include software that allows a program (process) to access all the devices of the cluster as though they were locally attached. That is, subject to authorization restrictions, any program running in one processor can indirectly read or write any memory or wire in the cluster as if that device were local to the program. This is done by using a client-server mechanism. Clusters are available today from Digital (VAXcluster), IBM (Sysplex), Intel (Hypercube), Tandem (T16), and Teradata (DBC/1012).

Structuring a computation within a cluster poses an interesting trade-off question: Should the program move to the data, or should the data move to the program? The answer is yes. If the computation has a large state, it may be better to move the data than to move the process state; on the other hand, if the data is huge and the client process and the answer are small, then it is probably better to move the program to the data. Remote procedure calls are one way of moving programs so that they can execute remotely.

In summary, the processor-memory architecture is likely to move toward a cluster of shared-nothing machines communicating over a high-speed network. Clusters, in turn, communicate with other clusters and with clients via standard networks. As Table 2.7 suggests, these wires have impressive bandwidth, but they have large latencies because they span long distances. These trends imply that computations will be structured as clients making requests to servers. Remote procedure calls allow such distributed execution, while the transaction mechanism handles exceptions in the execution.

2.3 Basic Software—Address Spaces, Processes, Sessions

Having made a quick tour of hardware concepts, terms, and trends, let us look at the software analogs of the hardware components. Address spaces, processes, and messages are the software analogs of memories, processors, and wires. Following that basic client-server concepts are discussed.

2.3.1 Address Spaces

A process address space is an array of bytes. Each process executes instructions from its address space and can read and write bytes of the address space using processor instructions. The process address space is actually virtual memory; that is, the addresses go through at least one level of translation to compute the physical memory address.

Address spaces are usually composed of memory segments. Segments are units of memory sharing, protection, and allocation. As one example, program and library segments in one address space can be shared with many other address spaces. These programs are read-only in the sense that no process can modify them. As another example, two processes may want to cooperate on a task and, in doing so, may want to share some data. In that case, they may attach a common segment to each of their address spaces. Figure 2.10 shows these two forms of sharing. To simplify memory addressing, the virtual address space is divided into fixed-size segment slots, and each segment partially fills a slot. Typical slot sizes range from 224 to 232 bytes. This gives a two-dimensional address space, where addresses are (segment_number, byte). Again, segments are often partitioned into virtual memory pages, which are the unit of transfer between main and secondary memory. In these cases, virtual memory addresses are actually (segment, page, byte). If an object is bigger than a segment, it can be mapped into consecutive segments of the address space.

Paging and segmentation are not visible to the executing process. The address space looks like a sequence of bytes with invalid pages in the empty segment slots and at the end of partially filled segment slots.

Address spaces cannot usually span network nodes: that is, address spaces cannot span private memories (see Figure 2.8). Global read-only and execute-only segments can be shared among address spaces in a cluster. However, since keeping multiple consistent copies of a read-write shared segments is difficult, this is not usually supported.

2.3.2 Processes, Protection Domains, and Threads

Processes are the actors in computation; they are the software analog of hardware processors. They execute programs, send messages, read and write data, and paint pictures on your display.

2.3.2.1 Processes

A process is a virtual processor. It has an address space that contains the program the process is executing and the memory the process reads and writes. One can imagine a process executing C programs statement by statement, with each statement reading and writing bytes in the address space or sending messages to other processes.

Why have processes? Well, processes provide an ability to execute programs in parallel; they provide a protection entity; and they provide a way of structuring computations into independent execution streams. So they provide a form of fault containment in case a program fails.

Processes are building blocks for transactions, but the two concepts are orthogonal. A process can execute many different transactions over time, and parts of a single transaction may be executed by many processes.

Each process executes on behalf of some user, or authority, and with some priority. The authority determines what the process can do: which other processes, devices, and files the process can address and communicate with. The process priority determines how quickly the process’s demand for resources (processor, memory, and bandwidth) will be serviced if other processes make competing demands. Short tasks typically run with high priority, while large tasks are usually given lower priority and run in the background.

2.3.2.2 Protection Domains

As a process executes, it calls on services from other subsystems. For example, it asks the network software to read and write communications devices, it asks the database software to read and write the shared database, and it asks the user interface to read and write widgets (graphical objects). These subsystems want some protection from faults in the application program. They want to encapsulate their data so that only they can access it directly. Such an encapsulated environment is called a protection domain. Many of the resource managers discussed in this book (database manager, recovery manager, log manager, lock manager, buffer manager, and so on) should operate as protection domains. There are two ways to provide protection domains:

Process = protection domain. Each subsystem executes as a separate process with its own private address space. Applications execute subsystem requests by switching processes, that is, by sending a message to a process.

Address space = protection domain. A process has many address spaces: one for each protected subsystem and one for the application (see Figure 2.11). Applications execute subsystem requests by switching address spaces. The address space protection domain of a subsystem is just an address space that contains some of the caller’s segments; in addition, it contains program and data segments belonging to the called subsystem. A process connects to the domain by asking the subsystem or OS kernel to add the segment to the address space. Once connected, the domain is callable from other domains in the process by using a special instruction or kernel call.

The concept of an address space as a protection domain was pioneered by the Burroughs descriptor-based machines. Today, it is well represented by IBM’s AS400 machines, by the cross-memory services of the MVS/XA system, and by the four protection domains of the VAX-VMS process architecture. The concept of a process as a protection domain was pioneered by Per Brinch Hansen [1970] and is well represented by the UNIX operating systems of today.

Most systems use both process domains and address space domains. The process approach is the only solution if the subsystem’s data is remote or if the underlying operating system has a very simple protection model. But even that approach has at least two address spaces for each process: (1) the application domain and (2) the privileged operating system kernel domain that implements address spaces, processes, and interprocess communication. Typically, the hardware implements an instruction to switch from user mode to this privileged mode.

The process protection domain approach is the most general: it works in networks and in shared-memory systems. The drawback of using processes to implement protection domains is that a domain switch requires a process switch, and it may require copying parameters and results between the processes.

Structuring applications as multiple address space protection domains per process has real performance advantages. The multiple-address-space-per-process approach avoids most of these data copies. When the application calls the database system, the process assumes a new address space that shares the caller’s memory segments and the database system’s memory segments. The call begins executing at a standard location in the database system program. The database system can directly address the caller’s data as well as the database code and data. When implemented in hardware, such domain switches can be very rapid (≈100 instructions for a call plus return). This is much faster than the round-trip process switch, which involves several kernel calls and process dispatches. When the additional costs of parameter passing and authority checking are added, the cost of process switching rises substantially.

2.3.2.3 Threads

Address space protection domains show the need for multiple address spaces per process. There is a dual need for multiple processes per address space. Often the simplest way to structure a computation is to have two or more processes independently access a common data structure. For example, to scan through a data stream, one process is appointed the producer, which reads the data from an external source, while the second process processes the data. Further examples of cooperating processes, such as file read-ahead, asynchronous buffer flushing, and other housekeeping chores, are given in later chapters of this book.

Processes can share the same address space simply by having all their address spaces point to the same segments. Most operating systems do not make a clean distinction between address spaces and processes. Thus a new concept, called a thread or a task, is introduced to multiplex one operating system process among many virtual processes. To confuse things, several operating systems do not use the term process at all. For example, in the Mach operating system, thread means process, and task means address space; in MVS, task means process, and so on.

The term thread often implies a second property: inexpensive to create and dispatch. Threads are commonly provided by some software that found the operating system processes to be too expensive to create or dispatch. The thread software multiplexes one big operating system process among many threads, which can be created and dispatched hundreds of times faster than a process.

The term thread is used in this book to connote these light-weight processes. Unless this light-weight property is intended, process is used. Several threads usually share a common address space. Typically, all the threads have the same authorization identifier, since they are part of the same address space domain, but they may have different scheduling priorities.

2.3.3 Messages and Sessions

Sessions are the software analog of communication wires, and messages are the software analog of signals on wires. One process can communicate with another by sending it a message and having the second process receive the message.

Shared memory can be used to communicate among processes within a processor, but messages are used to communicate among processors in a network. Even within a processor, messages can be used among subsystems if the underlying operating system kernel does not support shared-memory segments (see Subsection 2.3.2.2).

Most systems allow a process to send messages to others. The send routine looks up the recipient’s process name to find its address—more on that below—and then constructs an envelope containing the sender’s name, the recipient’s name, and the message. The resulting envelope is given to the network to deliver, and the send routine returns to the caller. Such simple messages are called datagrams. The recipient process can ask the network if any datagrams have recently arrived for the recipient, and it can wait for a datagram to arrive.

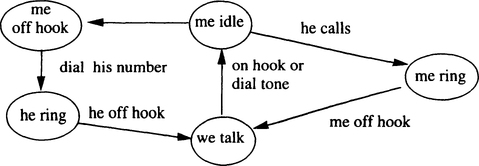

More often, the communication between two processes is via a pre-established bidirectional message pipe called a session. The basic session operations are open_session(), send_msg(), receive_msg(), and close_session(). The naming and authentication issues of sessions are described in Section 2.4, but the basic functions deserve some comment here.

There is a curious asymmetry in starting a session. Once started, however, sessions are completely symmetric in that each side can send and receive messages via the session. The asymmetry in session setup is similar to the asymmetry in establishing a telephone conversation: one person dials a number, the other person hears the ring and picks up the phone. Once this asymmetric call setup is done and the two people have identified each other, the telephone conversation itself is completely symmetric. Similarly, in starting the session, one process, typically a server, listens for an open_session datagram from the network. Another process, call it the client, decides it wants to talk to the server; hence, the client sends an open_session datagram to the server and waits for a response. The server examines the client’s open_session request and either accepts or rejects it. Once the server has accepted the open request, and the client has been so informed, the client and server have a symmetric relationship. Either side can send at any time, and either side can unilaterally close the session at any time. The client-server distinction provides a convenient naming scheme, but the session endpoints are peers. The term peer-to-peer communication is often used to denote this equality.

Since datagrams seem adequate for all functions, why have sessions at all? The answer is that sessions provide several benefits over datagrams:

Shared state. A session represents shared state between the client and the server. The next datagram might go to any process with the designated name, but a session goes to a particular instance of that name. That particular process can remember the state of the client’s request. When the client is done conversing with the server, it closes the session, and the server waits for a new client’s open_session() request.

Authorization-encryption. As explained in the next section, clients and servers do not always trust each other completely. The server often checks the client’s credentials to see that the client is allowed (authorized) to perform the requested function. This authorization step requires that the server establish the client’s identity, that is, authenticate the client. The client may also want to authenticate the server. Once they have authenticated each other, they can use encryption to protect the contents and sequence of the messages they exchange from disclosure or alteration. The authentication and encryption protocols require multi-message exchanges. Once the session encryption key is established, it becomes shared state.

Error detection and correction. Messages flowing in each session direction are numbered sequentially. These sequence numbers can detect lost messages (a missing sequence number) and duplicate messages (a repeated sequence number). In addition, since the underlying network knows that the client and server are in session, it can inform the endpoints if the other endpoint fails or if the link between them fails. In this way, sessions provide simple failure semantics for messages. This topic is elaborated in the next chapter.

Performance and resource scheduling. Resolving the server names to an address, authenticating the client, and authorizing the client are fairly costly operations. Each of these steps often involves several messages. By establishing a session, this information is cached. If the client and server have only one message to exchange, there is no benefit. But if they exchange many messages, the cost of the session setup functions is paid once and amortized across many messages.

2.4 Generic System Issues

With the basic software concepts of process, address space, session, and message now introduced, the generic client-server topics of communication structure, naming, authentication, authorization, and scheduling can be discussed. Then particular topics of a file system and a communications system are covered in slightly more detail.

2.4.1 Clients and Servers

As mentioned earlier, computations and systems are structured as independently executing processes, either to provide protection and fault containment, or to reflect the geographic dispersion of the data and devices, or to structure independent and parallel computations.

How should a computation consisting of multiple interacting processes be structured? This simple question has no simple answer. Many approaches have been tried in the past, and more will be tried in the future.

One of the fundamental issues has been whether two interacting processes should behave as peers or as clients and servers. These two structures are contrasted as follows:

Peer-to-peer. The two processes are independent peers, each executing its computation and occasionally exchanging data with the other.

Client-server. The two processes interact via request-reply exchanges in which one process, the client, makes a request to a second process, the server, which performs this request and replies to the client.

The debate over this structuring issue has been heated and confused. The controversy centers around the point that peer-to-peer is general; it subsumes client-server as a special case. Client-server, on the other hand, is easy to program and to understand; it is fine for most applications.

To understand the simplicity of the client-server model, imagine that a program wants to read a record of a file. It can issue a read subroutine call (a procedure call) to the local file system to get the record’s value from the file. Virtually all our programming languages and programming styles encourage this operation-on-object approach to computing, in this case,

read(file, record_id) returns (record_value).

This programming model is at the core of object-oriented programming, which applies methods to objects. In client-server computing, the server implements the methods on the object. When the client invokes the method (procedure, subroutine), the invocation parameters are sent to the server as a message, then the server performs the operation and returns the response as a message to the client process. The invocation software copies the response message into the client’s address space and returns to the client as though the method had been executed by a local procedure.

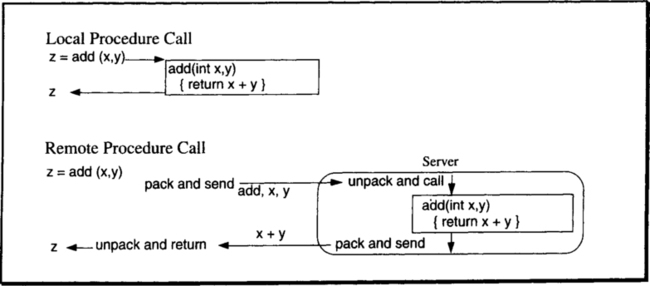

The transparent invocation of server procedures is called remote procedure call (RPC). To the client programmer (program), remote procedure calls look just like local procedure calls. The client program stops executing, and a message is sent to the server, which executes and replies to the client. On the server side, the invocation also looks just like a local procedure call. That is, the server thinks it was called by a local client. The mechanisms that achieve this are elaborated in Chapter 6, but the basic idea is diagrammed in Figure 2.12.

The RPC model seems wonderful. It fits our programing model, it is simple, and many standard versions of it are emerging. What, then, is the controversy about? Why do some prefer the peer-to-peer approach? The answer is complex. The client-server model postulates a one-to-one relationship between requests and replies. While this is fine for most computations, there are cases in which one request generates thousands of replies, or where thousands of requests generate one reply. Operations that have this property include transferring a file between the client and server or bulk reading and writing of databases. In other situations, a client request generates a request to a second server, which, in turn, replies to the client. Parallelism is a third area where simple RPC is inappropriate. Because the client-server model postulates synchronous remote procedure calls, the computation uses one processor at a time. However, there is growing interest in schemes that allow many processes to work on problems in parallel. The RPC model in its simplest form does not allow any parallelism. To achieve parallelism, the client does not block waiting for the server response; rather, the client issues several requests and then waits for the answers.

It appears that the debate between the remote procedure call and peer-to-peer models will be resolved by constructing the underlying mechanisms—the messages and sessions—on the peer-to-peer model, while basing most programming interfaces on the client-server model. Applications needing a non-standard model would use the underlying mechanisms directly to get the more general model.

One other generic issue concerning clients and servers deserves discussion here. Where do the servers come from? Here, there are two fundamentally different models, push and pull. In the push model, typical of transaction processing systems, the servers are created by a controlling process, the transaction processing monitor (TP monitor). As client requests arrive, the TP monitor allocates servers to clients, pushing work onto the servers. In the pull style, typical of local network file servers, an ad hoc mechanism creates the servers, which offer their services to the world via a public name server. Clients looking for a particular service call the name server. The name server returns the names of one or more servers that have advertised the desired service. The client then contacts the server directly. In this model, the servers manage themselves, and they pull work into the system. Pull results in higher server utilization since idle servers advertize for work, rather than having busy clients search for idle servers.

To summarize, the client-server structure lends itself to a request-reply programming style that dovetails with procedural programming languages. The server implements operations (methods, procedures) on objects, much in the style of object-oriented programming. The client invokes these procedures as though the objects were local, and the remote procedure call mechanism orchestrates the execution of the procedure by a remote server.

2.4.2 Naming

Every object, whether a process, a file, or a device, has a name, an address, and a location. The name is an abstract identifier for the object, the address is the path to the object, and the location is where the object is. As an example, the telephone named Bruce Lindsay’s Office has the address 408-927-1747 and is located at some electronic address inside IBM’s phone network. The name never changes, but Bruce may change locations, moving his telephone to a new office with the same phone number, or he may change addresses by going to MIT for a year.

An object can have several names. Some of these names may be synonyms, called aliases. Let us say that Bruce and Lindsay are two aliases for Bruce Lindsay. For this to be explicit, all names, addresses, and locations must be interpreted in some context, called a directory. For example, in our RPC context, Bruce means Bruce Nelson, and in our publishing context, Bruce means Bruce Spatz. Within the 408 telephone area, Bruce Lindsay’s address is 927-1747, and outside the United States it is +1-408-927-1747 (where the + stands for the international access code used to get to the root of the international telephone address space in the caller’s country).

Names are grouped into a hierarchy called the name space. An international commission has defined a universal name space standard, X.500, for computer systems. The commission administers the root of that name space. Each interior node of the hierarchy is a directory. A sequence of names delimited by a period (.) gives a path name from the directory to the object.

No one stores the entire name space—it is too big, and it is changing too rapidly. Certain processes, called name servers, store parts of the name space local to their neighborhood; in addition, they store a directory of more global name servers.

Clients use name servers to resolve server names to addresses. They then present these server addresses, along with requests, to the network. The network transports the requests to the server location, where it presents the request to the server (when the server asks for it). This is the scenario whereby sessions are established between clients and servers, and whereby remote procedure calls are implemented.

There is a startup problem: clients have to find the name and address of the local name server. To facilitate this, name servers generally have well-known names and addresses. A client wanting to resolve a name to an address sends a message to the well-known address or broadcasts the message to a well-known name on the local network. Once the name server responds to the client, the client knows the local name server’s address. The client can then ask the local name server for the addresses of more global name servers.

2.4.3 Authentication

When a client first connects to a server, each may want to establish the identity of the other. Consider the simple case of accessing your bank from your workstation. The bank wants to be sure that the person at the workstation is you, and you want to be sure that you are talking to your bank and not some Trojan horse that will steal your passwords and financial data. In computer terms, each process wants to authenticate the other: to establish the authorization identity, or authid, of the other. Every process executes under some authorization identity. This identity usually can be traced back to some person and, therefore, often is called the userid.

How can the client authenticate itself to a server? How can it convince the server of the client’s authorization identity? Conversely, how can the server convince the client? There are many answers to these questions, and there are many subtleties. Generically, however, there are two solutions: either (1) the client and the server must have a shared secret, or (2) the client and the server must trust some higher authority.

Passwords are the simplest case of shared secrets. The client has a secret password, a string of bytes known only to it and the server. The client sends this password to the server to prove the client’s identity. A second secret password is then needed to authenticate the server to the client. Thus, two passwords are required.

Another shared secret approach, called challenge-response,5 uses only one password or key. In this scheme, the client and the server share a secret encryption key. The server picks a random number, N, and encrypts it with the key as EN. The server sends the encrypted number, EN, to the client and challenges the client to decrypt it using the secret key, that is, to compute N from EN. If the client responds with N, the server believes the client knows the secret encryption key. The client can also authenticate the server by challenging it to decrypt a second random number. This protocol is especially secure because it sends only random numbers between the client and the server, no third party can see the shared secret. It may seem to introduce extra messages, but it turns out that the challenges and responses can piggyback on session-open messages, so that no extra message flows are needed.

A third scheme uses no shared secrets at all. Each authid has a pair of keys—a public encryption key, EK, and a private decryption key, DK. The keys are chosen so that DK(EK(X)) = X, but knowing only EK and EK(X) it is hard to compute X. Thus, a process’s ability to compute X from EK(X) is proof that the process knows the secret DK. Each authid publishes its public key to the world. Anyone wanting to authenticate the process as that authid goes through the challenge protocol: The challenger picks a random number X, encrypts it with the authid’s public key EK, and challenges the process to compute X from EK(X).

All these schemes rest on some form of key, but where do the keys come from? How do the client and the server get them securely? This issue, called key distribution, immediately brings some higher authority into the picture: someone or something that can make keys and securely distribute them. Such entities are called authentication servers. There are many different ways to structure authentication servers, but a fairly simple scheme goes as follows: The network is partitioned into authentication domains. Authorization identifiers become local to a domain in that an authorization identifier has a two-part name: (1) the authentication domain and (2) the authorization id within that domain. For example, if Sam is working in the government authentication domain called Gov, then Gov. Sam is Sam’s authid in the government authentication domain. All requests by Sam will be authorized against this authid. Authentication servers have a trusted way of securely communicating with each other so that they can certify these authids and their public keys. This communication mechanism is itself set up by some higher (human) authority.

A process acquires its authid by connecting to a local authentication server and authenticating itself. This authentication can be done via passwords, by challenge-response, or by some other scheme. Suppose a client in one authentication domain wants to talk to a server at a remote node and, at a remote authentication domain. Consider how the server authenticates the client: The server asks its authentication server for the client’s public key. The server’s authentication server asks the authentication server “owning” the client for the client’s public key. The authentication servers return the client’s public key to the server, which can then perform the challenge-response protocol. The main issue is trust—who trusts whom about what. In the scenario described above, trust takes two forms: (1) a process trusts its local authentication server to provide correct public keys, and (2) each authentication server trusts other authentication servers to provide the public keys of authids in their domains.

2.4.4 Authorization

What happens once a process has established an authid and a server has authenticated a client as having that authid? The server now knows who the client is and what the client wants to do. The question then becomes, Is the client’s authid allowed to perform that operation on that object? This decision, called authorization, can be expressed as the following simple predicate:

boolean = Authorize(object, authid, operation);

The Authorize() predicate can be viewed as a three-dimensional boolean array or as an SQL table. The array, called the access control matrix, is quite sparse and stores only the TRUE entries. Usually, a column of the array is stored with the object. That is, the authorization logic keeps a list of (authid, operation) pairs for an object, called the object’s access control list. Alternatively, SQL systems routinely keep a three-column privileges table indexed by (object, authid). Either scheme quickly answers the authorization question.

The object owner often wants to grant access to everybody, or to all bank tellers, or to everybody in his group. Thus far, we have not encountered the concept of an authority group—a list of authids, or a predicate on authids. The group concept eases administration of the access control matrix and collapses its size. For example, to grant everybody in group G read authority on object O, the (O, G, read) element would be added to the privileges table. When a member of the group wanted to read the object, the authorization test would check the requestor’s membership in group G.

Some systems, especially governmental ones, want to know each time an authentication or authorization step happens. At such points, a record or message about the step must be sent to a security auditing server, which collects this information for later analysis.

Once the authorization check has been passed, the requestor is given a capability to operate on the object. This capability is often just a control block stored in the client’s context at the server. For example, if the client opens a file, the server allocates a file control block, which indicates the read-write privileges of the client. Thereafter, the client refers to the control block by a token, and the server uses the contents of the control block to avoid retesting the client’s authority on each call. Occasionally, the client must be given the capability directly; an example is if the server does not maintain client state. In this case, the server generally encrypts the control block and sends it to the client as a capability. When the client later presents the encrypted control block, the server decrypts it, decides that it is still valid, and then acts on it.

When a client calls a server, the server may, in turn, have to call a second server to perform the client’s request. For example, when your client calls a mail server and asks it to send a file, the server has to get the file either indirectly from your client or directly from the file server. When the mail system server goes to the file server, the mail system wants to use your (the client’s) authority to access the file. A mechanism that allows a server to act with the authority of the client is called amplification. Amplification is difficult to control because of the question of how and when the server’s amplified authority gets revoked. However, some form of amplification is essential to structuring client-server computations.

2.4.5 Scheduling and Performance

Once a server starts executing a request, the performance of the server becomes an issue. From the client’s perspective, performance is measured by the server’s response time: the time between the submission of the request and the receipt of the response. Response time has two components: (1) wait time—the time spent waiting for the server or, once the server is executing, the time spent waiting for resources, and (2) service time—the time needed to process the request when no waiting is involved. As the utilization of a server or resource increases, the wait time rises dramatically. In many situations,6 the average response time can be predicted from the server utilization, p, by the formula,

![]()

Equation 2.10 is graphed in Figure 2.13. Notice that for low utilization—say, less than 50%—the response time is close to the service time, but then it quickly rises. At 75% utilization it is four times the service time, and at 90% utilization, it is ten times the service time.

There are two ways to improve response time: reduce service time or reduce wait time. This subsection deals primarily with ways to reduce wait time of critical jobs by scheduling them in preference to less-critical jobs. Each process has an integer priority, that determines how quickly the process’s resource requests will be granted. In general, a high-priority process should be scheduled in preference to a low-priority process, and processes with the same priority should be scheduled so that they all make approximately the same rate of progress. A typical scheme is for high-priority requests to preempt low-priority requests and for requests of the same priority to be scheduled using a round-robin mechanism. Batch jobs get low priority and run in the background, while interactive jobs get high priority and are serviced as quickly as possible.

Servers generally service clients in priority order. To do this, the server must have a queue of requests that it can sort in priority order. This, in turn, suggests that many clients are waiting for the server. Such waiting is exactly what priority scheduling tries to avoid. Rather than having a single server process, then, each request should have its own server process. This concept, called a server class, is discussed in Chapter 6. Server classes reduce queueing for servers.

What should the server priority be, once the server starts executing a client request? In many systems, the server has its own priority, independent of the client. Often, server priorities are high compared to any other priorities, so that services have short wait times. However, this approach—fixed server priorities—breaks down in a client-server environment. A low-priority client running on a workstation can bombard a high-priority server with long-running requests, creating a priority inversion problem: a server performing low-priority requests at high priority, thereby causing truly high-priority work to wait for the low-priority task to complete. The server should run requests at the client’s priority, or perhaps at the client’s priority plus 1, so that client requests are executed in priority order.

2.4.6 Summary

This section introduced several client-server issues. First, RPCs were defined and the general peer-to-peer versus RPC issue was reviewed. Then, the issues of naming, authentication, and authorization were sketched. Finally, the issue of scheduling clients and servers in a network was reviewed. The discussion now turns briefly to file systems in a client-server environment.

2.5 Files

Files represent external storage devices to the application program in a device-independent way. A file system abstracts physical memory devices as files, which are large arrays of bytes. Chapters 13–15 describe a transactional file system in detail. This section gives a brief background on standard file systems.

2.5.1 File Operations

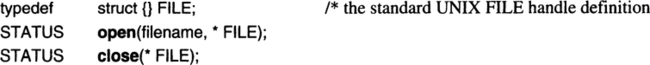

Files are created and deleted by the create() and delete() operations. In reality, the create operation has a vast number of parameters, but for present purposes a file name will suffice. As discussed in the previous section, names are merely strings of characters that are resolved to an address by looking in some name server. The file system usually acts as a name server, implementing a hierarchical name space. A file server with the name “net.node.server” might implement a file named “a.b.c” with the resulting file name “net.node.server.a.b.c”. The local file system and remote file servers accept file creation operations in a manner similar to this:

Once created, a file can be accessed in one of two ways. The first approach is to map the file to a slot of a process address space, so that ordinary machine instructions can read and write the file’s bytes. Address spaces share memory by mapping the same file. For example, processes running a certain program map the binary version of that program to their address spaces. The operations for such memory-mapped files are something like this:

A second approach, and by far the most common, is to explicitly copy data between files and memory. This style first opens the file and then issues read and write file actions to copy the data between the file and memory. These are the approximate operations for such explicit file actions:

The execution of the open() routine gives a good example of the concepts of the previous section. When a client invokes the open() routine, code in the client’s library goes through the following logic: First, it must look up the name to find the file server owning that file. Often, the name is cached by the client library, but let us suppose it is not. In that case, the client asks the name server for the file server address. The client then sends the open request to the file server at that address. The file server authenticates the client and then looks for the file in its name space. If the file is found, the file server looks at the file descriptor, which, among other things, contains an access control list. The file server examines the file’s access control list to see if the client is authorized to open the file. If so, the file server creates a capability for the file (a control block that allows quick access to the file) and returns this capability to the client.

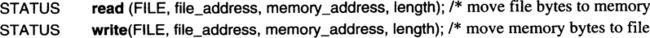

Given one of these file capabilities, the client can read and write bytes from the file using the following routines: