Chapter 12. MDM and the Functional Services Layer

One of the main objectives of a master data management program is to support the enterprise application use of a synchronized shared repository of core information objects relevant to business application success. There are two focuses here: sharing and coordination/synchronization. To maintain the master data environment, proper data governance policies and procedures at the corporate or organizational level oversee the quality of the data as the contributing data sets are consolidated.

However, the process does not end when the data sets are first consolidated; the objectives of MDM are not accomplished through the initial integration. Quite the contrary, the value is achieved when the consolidated master data are published back for operational and analytical use by the participating applications to truly provide a single, synchronized view of the customer, product, or other master data entity. This means that integration and consolidation must be performed on a continuous basis, and as applications embrace the use of MDM, it is necessary to support how application-level life cycle events impact the master data system.

This suggests that the data integration layer should be abstracted as it relates to business application development, which exposes two ways that master data are integrated into a services-based framework. Tactically, a services layer can be introduced to facilitate the transition of applications to the use of the master repository, perhaps through the use of message-based interfacing. Strategically, the abstraction of the core master entities at a data integration layer provides the foundation for establishing a hierarchical set of information services to support the rapid and efficient development of business applications in an autonomous fashion. Both of these imperatives are satisfied by a services-oriented architecture (SOA).

In essence, there is a symbiotic relationship between MDM and SOA: a services-oriented approach to business solution development relies on the existence of a master data repository, and the success of an MDM program depends on the ability to deploy and use master object access and manipulation services.

12.1. Collecting and Using Master Data

An MDM program that only collects data into a consolidated repository without allowing for the use of that data is essentially worthless, considering that the driving factor for establishing a single point of truth is providing a high-quality asset that can be shared across the enterprise. This means that information must easily be brought into the master repository and in turn must also be easily accessible by enterprise applications from the repository.

The first part is actually straightforward, as it mimics the typical extraction, transformation, and loading (ETL) techniques used within the data warehouse environment. However, exposing the master data for use across the different applications poses more of a challenge, for a number of reasons. To enable the consistent sharing of master data, there are a number of issues to consider:

▪ The insufficiency of extraction/transformation/loading tools

▪ The replication of functionality across the de facto application framework

▪ The need for applications to adjust the way they access relevant data

▪ The need for a level of maturity to be reached for enterprise information models and associated architectures

12.1.1. Insufficiency of ETL

Traditional ETL is insufficient for continuous synchronization. Although the master repository may have initially been populated through an extraction, transformation, and loading process, there is a need to maintain a state of consistency between the master repository and each participating application's views of the master data. Although ETL can support the flow of data out of application data sets into the master repository, its fundamentally batch approach to consolidation is insufficient for flowing data back out from the repository to the applications. This may become less of an issue as real-time warehousing and change data capture facilities mature.
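The contrast between batch consolidation and continuous flow back to the applications can be illustrated with a minimal sketch. It assumes an in-process publish/subscribe mechanism standing in for whatever messaging or change data capture facility an organization actually uses; the class name `MasterChangeBus` and the two application caches are hypothetical and serve only as illustration.

```python
from collections import defaultdict

class MasterChangeBus:
    """Hypothetical in-process stand-in for a message-based interface."""

    def __init__(self):
        # master object type -> callbacks registered by participating applications
        self._subscribers = defaultdict(list)

    def subscribe(self, object_type, callback):
        self._subscribers[object_type].append(callback)

    def publish(self, object_type, master_id, changes):
        # Push each change out as it happens instead of waiting for a batch run.
        for callback in self._subscribers[object_type]:
            callback(master_id, changes)

# Two applications keep their own local view of customer data (assumed here).
branch_cache, web_cache = {}, {}
bus = MasterChangeBus()
bus.subscribe("customer", lambda mid, ch: branch_cache.setdefault(mid, {}).update(ch))
bus.subscribe("customer", lambda mid, ch: web_cache.setdefault(mid, {}).update(ch))

# A change accepted by the master repository is propagated to every subscriber.
bus.publish("customer", "C1", {"phone": "555-0199"})
```

After the single `publish` call, both application views hold the same phone number for customer `C1`, which is the state of consistency that a periodic batch load cannot guarantee between runs.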

12.1.2. Replication of Functionality

There will be slight variances in the data model and component functionality associated with each application. Yet the inclusion of application data as part of a master data object type within the master repository implies that there are similarities in data entity format and use. Clearly, the corresponding data life cycle service functionality (creation, access, modification/update, retirement) is bound to be replicated across different applications as well. Minor differences in the ways these functions are implemented may affect the meaning and use of what will end up as shared data, and those variations expose potential risks to consistency.

12.1.3. Adjusting Application Dependencies

The value proposition for application participation in the MDM program includes the benefit of accessing and using the higher quality consolidated data managed via the MDM repository. However, each application may have been originally designed with a specific data model and corresponding functionality, both of which must be adjusted to target the data managed in the core master model.

12.1.4. Need for Architectural Maturation

Applications must echo architectural maturation. Architectural approaches to MDM may vary in complexity, and the repository will become more sophisticated, adopt different implementation styles (e.g., registry versus transaction hub), and grow in functionality as the program matures. This introduces a significant system development risk if all the applications must be modified continually in order to use the master data system.

The natural process for application migration is that production applications will be expected to be modified to access the master data repository at the same time as the application's data sets are consolidated within the master. But trying to recode production applications to support a new underlying information infrastructure is both invasive and risky. Therefore, part of the MDM implementation process must accommodate existing application infrastructures in ways that are minimally disruptive yet provide a standardized path for transitioning to the synchronized master.

12.1.5. Similarity of Functionality

During the early phases of the MDM program, the similarities of data objects were evaluated to identify candidate master data entities. To support application integration, the analysts must also review the similarities in application use of those master data entities as part of a functional resolution for determining core master data services. In essence, not only must we consolidate the data, we must also consider the consolidation of the functionality as well.

In addition, as the MDM program matures, new applications can increasingly rely on the abstraction of the conceptual master data objects (and corresponding functionality) to support newer business architecture designs. The use of the standard representations for master data objects reduces the need for application architects to focus on data access and manipulation, security and access control, or policy management; instead they can use the abstracted functionality to develop master data object services.

The ability to consolidate application functionality (e.g., creating a new customer or listing a new product) using a services layer that supplements multiple application approaches will add value to existing and future applications. In turn, MDM services become established as a layer of abstraction that supports an organization's transition to SOA. MDM allows us to create master data objects (MDOs) that embody behaviors that correctly govern the creation, identification, uniqueness, completeness, relationships among, and propagation of these MDOs throughout an ecosystem using defined policies. This goes above and beyond the duplication of functionality present in existing systems. Taking the opportunity to define an “object model” for capturing policy and behavior as part of the representation and service layer enables the creation of reusable master data objects that endure over generations of environmental changes.

12.2. Concepts of the Services-Based Approach

The market defines SOA in many ways, but two descriptions best capture the concepts that solidify the value of an MDM initiative. According to Eric Marks and Michael Bell:

SOA is a conceptual business architecture where business functionality, or application logic, is made available to SOA users, or consumers, as shared, reusable services on an IT network.1

The Organization for the Advancement of Structured Information Standards (OASIS) provides this definition:

A paradigm for organizing and utilizing distributed capabilities that may be under the control of different ownership domains.2

2. OASIS Reference Model for Service Oriented Architecture, http://www.oasis-open.org/committees/download.php/19679/soa-rm-cs.pdf

To paraphrase these descriptions, a major aspect of the services-based approach is that business application requirements drive the need to identify and consolidate the use of capabilities managed across an enterprise in a way that can be shared and reused within the boundaries of a governed environment. From the business perspective, SOA abstracts the layers associated with the ways that business processes are deployed in applications. The collection of services that comprises the business functions and processes that can be accessed, executed, and delivered is essentially a catalog of capabilities from which any business solution would want to draw.

This addresses the fundamental opportunity for SOA: The SOA-enabled organization is one that is transitioning from delivering applications to delivering business solutions. Application development traditionally documents the functional requirements from a vertical support point of view; the workflow is documented, procedures are defined, data objects are modeled to support those procedures, and code is written to execute those procedures within the context of the data model. The resulting product operates sufficiently—it does what it needs to do and nothing more.

Alternatively, the services-based approach looks at the problem from the top down:

▪ Identifying the business objectives to be achieved

▪ Determining how those objectives relate to the existing enterprise business architecture

▪ Defining the service components that are necessary to achieve those goals

▪ Cataloging the services that are already available within the enterprise

▪ Designing and implementing those services that are not

Master data lends itself nicely to a services-based approach for a number of reasons (see the following sidebar).

▪ The inheritance model for core master object types captures common operational methods at the lowest level of use.

▪ MDM provides the standardized services and data objects that a services-oriented architecture needs in order to achieve consistency and effective reuse.

▪ A commonality of purpose of functionality is associated with master objects at the application level (e.g., different applications might have reasons for modifying aspects of a product description, but it is critical that any modifications be seen by all applications).

▪ Similarity of application functionality suggests opportunities for reuse.

▪ The services model reflects the master data object life cycle in a way that is independent of any specific implementation decisions.

12.3. Identifying Master Data Services

Before designing and assembling the service layer for master data, it is a good idea to evaluate how each participating application touches master data objects in order to identify abstract actions that can be conveyed as services at either the core component level or the business level. Of course, this process should be done in concert with a top-down evaluation of the business processes as described in the business process models (see Chapter 2) to validate that what is learned from an assessment is congruent with what the business clients expect from their applications.

12.3.1. Master Data Object Life Cycle

To understand which service components are needed for master data management, it is worthwhile to review the conceptual life cycle of a master data object in relation to the context of the business applications that use it. For example, consider the context of a bank and its customer data objects. A number of applications deal directly with customer data, including branch banking, ATM, Internet banking, telephone banking, wireless banking, mortgages, and so on. Not only that, but the customer service department also requires access to customers' data, as do other collaborative divisions including credit, consumer loans, investments, and even real estate. Each of these applications must deal with some of the following aspects of the data's life cycle.

Distribution. This aspect includes the ability to make the data available in a way that allows applications to use it as well as managing that data within the constraints of any imposed information policies derived from business policies (such as security and privacy restrictions).

Access and use. This aspect includes the ability to locate the (hopefully unique) master record associated with the real-world entity and to qualify that record's accuracy and completeness for the specified application usage.

Deactivate and retire. This aspect incorporates the protocols for ensuring that the records are current while they are still active, as well as for properly documenting when master records are deactivated and are to be considered no longer in use.

Maintain and update. This aspect involves identifying issues that may have been introduced into the master repository (including duplicate data and data element errors), modifying or correcting the data, and ensuring that the proper amount of identifying information is maintained to be able to distinguish any pair of entities.

In other words, every application that deals with customer data is bound to have some functionality associated with the creation, distribution and sharing, accessing, modification, updating, and retirement of customer records. In typical environments where the applications have developed organically, each application will have dealt with this functionality in slightly different ways. In the master data environment, however, we must look at these life cycle functions in terms of their business requirements and how those requirements are mapped to those functions.

In turn, as the business uses of the customer data change over time, we must also look at the contexts in which each customer object is created and used over the customer lifetime. These considerations remain true whether we examine customer, product, or any other generic master data set. As we navigate the process of consolidating multiple data sets into a single master repository, we are necessarily faced not just with the consolidation of life cycle functionality but also with the prospect of maintaining the contexts associated with the master data items over their lifetime as a support for historical assessment and rollback. This necessity suggests the need for a process to assess both the operational service components and the analytical service components.

12.3.2. MDM Service Components

The service components necessary to support an MDM program directly rely on both the common capabilities expected for object management and the primary business functions associated with each of the participating applications. The service identification process is composed of two aspects: the core services and the business services. Identifying application functionality and categorizing the way to abstract that functionality within a service layer exposes how the applications touch data objects that seem to be related to master object types. This reverse engineering process is used to resolve and ultimately standardize that functionality for simplification and reuse.

The first part of the process relies on the mapping from data object to its application references, which one hopes will have been captured within the metadata repository. Each application reference can be annotated to classify the type of access (create, use, modify, retire). In turn, the different references can be evaluated to ascertain the similarity of access. For example, the contact information associated with a vendor might be modified by two different applications, each of which is updating a specific set of attributes. The analysts can flag the similarity between those actions for more precise review to determine if they actually represent the same (or similar) actions and whether the action sequence is of common enough use for abstraction. By examining how each application reflects the different aspects of the data life cycle, analysts begin to detect patterns that enable them to construct the catalog of service components. The process is to then isolate and characterize the routine services necessary to perform core master data functions.
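The annotation and comparison step described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Reference` structure, the application names, and the rule that "more than one application performing the same type of access on the same object" flags a candidate are all hypothetical, and a real metadata repository would carry far more detail.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Reference:
    """Hypothetical record of one application's reference to a master object."""
    application: str
    master_object: str
    access: str            # one of: create, use, modify, retire
    attributes: frozenset  # attributes touched by this reference

def candidate_services(references):
    """Group references by (master object, access type) and keep only the
    groups touched by more than one application, for closer analyst review."""
    groups = defaultdict(list)
    for ref in references:
        groups[(ref.master_object, ref.access)].append(ref)
    return {
        key: sorted({r.application for r in refs})
        for key, refs in groups.items()
        if len({r.application for r in refs}) > 1
    }

# The vendor contact example: two applications modify overlapping attributes.
refs = [
    Reference("accounts_payable", "vendor", "modify", frozenset({"phone", "email"})),
    Reference("procurement", "vendor", "modify", frozenset({"address", "phone"})),
    Reference("procurement", "vendor", "create", frozenset({"name", "address"})),
]
shared = candidate_services(refs)
```

Here `shared` contains a single entry, for modifying a vendor, naming both applications. The flag is only a prompt for review; whether the two updates really represent the same action remains an analyst's judgment.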

The next step is to seek out the higher levels of functionality associated with the business applications to understand how the master objects are manipulated to support the applications' primary business functions. The candidate services will focus specifically on business functionality, which will depend on the lower-level life cycle actions already associated with the master data objects.

12.3.3. More on the Banking Example

Let's return to our bank example. Whenever an individual attempts to access account features through any of the numerous customer-facing interfaces, there are routine activities (some business-oriented, others purely operational) that any of the applications are expected to perform before any application-specific services are provided.

Whether the individual is banking through the Internet, checking credit card balances, or calling the customer support center, at the operational level the application must be able to determine who the individual is, establish that the individual is a customer, and access that customer's electronic profile. As core customer information changes (contact mechanisms, marital status, etc.), the records must be updated, and profiles must be marked as “retired” as customers eliminate their interaction. At the business level, each application must verify roles and permissions, validate “Know Your Customer” (KYC) characteristics, and monitor for suspicious behavior.

When reviewing these actions, the distinctions become clear. Collecting identifying information, uniquely identifying the customer, and retrieving the customer information are core master data services that any of the applications would use. Verifying roles and access rights for security purposes, validating against Office of Foreign Assets Control (OFAC) lists, and monitoring for suspicious behavior are business application-level services.

Most application architects will have engineered functions performing these services into each application, especially when each application originally maintains an underlying data store for customer information. Consolidating the data into a single repository enables the enterprise architect to establish corresponding services to support both the operational and the business-oriented capabilities. The applications share these services, as Figure 12.1 illustrates.

Figure 12.1 Business and technical services for banking.

12.3.4. Identifying Capabilities

In practice, the applications are making use of the same set of core capabilities, except that each capability has been implemented multiple times in slightly variant ways. Our goal is to identify these capabilities in relation to how they make use of master data objects and to consolidate them into a set of services. So if every application must collect individual identifying information, uniquely resolve the customer identity, and retrieve customer records, then it is recommended to assemble a set of generic services that collect identifying information, resolve customer identity, and retrieve customer records and profiles.

In retrospect, then, the analyst can review the component services and recast them in terms of the business needs. For example, collecting identifying information and resolving identity are components of the service to access a customer record. On the other hand, when a new customer establishes a relationship with the organization through one of the applications, there will not be an existing customer record in the master repository. At that point, the customer's identifying information must be collected so that a new customer record can be created. This new record is then provided back to the application in a seamless manner. Whether or not the customer record already exists, the result of the search is the materialization of the requested record!

In both of these cases, the core business service is accessing the unique customer record. Alternate business scenarios may require the invocation of different services to supply that record; in the first case, the identifying information is used to find an existing record, whereas in the second case, the absence of an existing record triggers its creation. But the end result is the same—the requesting application gains access to the master customer record.
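The two scenarios, one business service with two underlying paths, can be sketched as follows. This is a minimal illustration, not a prescribed design: the class and method names are hypothetical, the repository is an in-memory dictionary, and identity is resolved by an exact match on a normalized name and birth date, which is a much cruder rule than a real identity resolution service would apply.

```python
from dataclasses import dataclass
from itertools import count

@dataclass
class CustomerRecord:
    master_id: int
    name: str
    birth_date: str

class CustomerAccessService:
    """Hypothetical business service: access the unique customer record."""

    def __init__(self):
        self._records = {}      # identifying key -> CustomerRecord
        self._ids = count(1)    # source of new master identifiers

    def _resolve_key(self, name, birth_date):
        # Assumed identity rule: case-insensitive name plus birth date.
        return (name.strip().lower(), birth_date)

    def access_customer(self, name, birth_date):
        key = self._resolve_key(name, birth_date)
        record = self._records.get(key)
        if record is None:
            # No existing record: the absence triggers creation.
            record = CustomerRecord(next(self._ids), name.strip(), birth_date)
            self._records[key] = record
        # Either way, the caller receives the master customer record.
        return record
```

A requesting application calls `access_customer` in both scenarios and never needs to know which path was taken, which is the point of placing the business service above the core find and create services.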

Building business services on top of core technical services provides flexibility in implementation, yet the shared aspect of the service layer allows the application architect to engineer the necessary master data synchronization constraints into the system while abstracting its complexity from the business applications themselves. Because every application does pretty much the same thing, consolidating those components into services simplifies the application architecture. In essence, considering the business needs enables a collection of individual operational capabilities to be consolidated into a layer of shared services supporting the business objectives.

To isolate the service components, the process of reverse engineering is initiated, as described in Section 12.3.2. This process exposes the capabilities embedded within each application and documents how those capabilities use the master data objects. Each proposed service can be registered within a metadata repository and subjected to the same governance and standards processes we have used for identifying candidate master data objects. By iterating across the applications that are participating in the MDM program, analysts can assemble a catalog of capabilities. Reviewing and resolving the similarities between the documented capabilities enables the analysts to determine business services and their corresponding technical components.

In turn, these capabilities can be abstracted to support each application's specific needs across the data life cycle. Component services are assembled for the establishment of a master record, distribution of master data in a synchronized manner, access and use of master objects, and validation of role-based access control, as well as to ensure the currency and accuracy of active records and proper process for deactivation. At the same time, service components are introduced to support the consistency and reasonableness of master objects and to identify and remediate issues that may have been introduced into the master repository. Business services can be designed using these core technical component services.

12.4. Transitioning to MDM

Providing the component service layer addresses two of the four issues introduced in Section 12.1. Regarding the fact that traditional ETL is insufficient for continuous synchronization, the challenge changes from enforcing strict synchronization to evaluating business requirements for synchronicity and then developing a service level agreement (SLA) to ensure conformance to those business requirements. The transaction semantics necessary to maintain consistency can be embedded within the service components that support master data object creation and access, eliminating the concern that using ETL impedes consistency. The consolidation of application capabilities within a component service catalog through the resolution of functionality eliminates functional redundancy, thereby reducing the risks of inconsistency caused by replication.

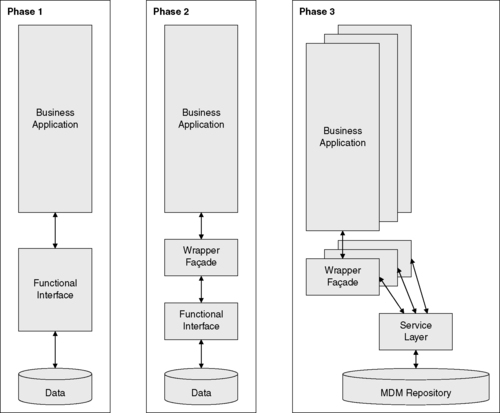

Yet the challenge of applications transitioning to using the master repository still exists, requiring a migration strategy that minimizes invasiveness, and a reasonable migration strategy will address the other concerns from Section 12.1. To transition to using the master repository, applications must be adjusted to target the master repository instead of their own local data store. This transition can be simplified if an interface to the master repository can be created that mimics each application's own existing interface. Provided that core service components for the master data have been implemented, one method for this transition is to employ the conceptual service layer as a stepping-stone, using a design pattern known as “wrapper façade.”

12.4.1. Transition via Wrappers

The idea behind using this design pattern is to encapsulate functionality associated with master data objects into a concise, robust, and portable high-level interface. It essentially builds on the use of the developed service layer supporting core functionality for data life cycle actions. After the necessary service components have been identified and isolated, the application architects devise and design the component services. The programmers also create a set of application interfaces that reflect the new services and then wrap these interfaces around the calls to the existing application capabilities, as shown in Figure 12.2.

Figure 12.2 Transitioning via the wrapper façade.

At the same time, the MDM service layer is constructed by developing the components using the same interface as the one used for the wrapper façade. Once the new service layer has been tested and validated, the applications are prepared to migrate by switching from the use of the wrapper façade to the actual MDM service components.
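The migration path through the wrapper façade can be sketched as follows. Everything here is illustrative and assumed: the interface `CustomerService`, the legacy call `fetch_cust_row`, and the shape of the returned dictionary are hypothetical names chosen for the example, not part of any particular product.

```python
from typing import Protocol

class CustomerService(Protocol):
    """The new service interface that applications are coded against."""
    def get_customer(self, customer_id: str) -> dict: ...

class LegacyBranchSystem:
    """Stand-in for an existing application with its own local data store."""
    def __init__(self):
        self._rows = {"42": ("LOVELACE, ADA", "555-0100")}

    def fetch_cust_row(self, cust_no):
        return self._rows[cust_no]

class LegacyWrapperFacade:
    """Step 1: the new interface wrapped around the existing capability."""
    def __init__(self, legacy):
        self._legacy = legacy

    def get_customer(self, customer_id):
        name, phone = self._legacy.fetch_cust_row(customer_id)
        return {"id": customer_id, "name": name, "phone": phone}

class MdmCustomerService:
    """Step 2: the same interface implemented against the master repository."""
    def __init__(self, master_records):
        self._master = master_records

    def get_customer(self, customer_id):
        return dict(self._master[customer_id], id=customer_id)

def greeting(service: CustomerService, customer_id: str) -> str:
    # Application code depends only on the interface, not on what is behind it.
    return f"Customer on file: {service.get_customer(customer_id)['name']}"

before = LegacyWrapperFacade(LegacyBranchSystem())
after = MdmCustomerService({"42": {"name": "Ada Lovelace", "phone": "555-0100"}})
```

The function `greeting` runs unchanged against `before` and `after`. Once the MDM implementation has been tested and validated, the switch amounts to constructing a different object behind the same interface.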

12.4.2. Maturation via Services

This philosophy also addresses the fourth concern regarding the growing MDM sophistication as the program matures. MDM projects may initially rely on the simplest registry models for consolidation and data management, but over time the business needs may suggest moving to a more complex central repository or transaction hub. The complexity of the underlying model and framework has its corresponding needs at the application level, which introduces a significant system development risk if all the applications must be continually modified to use the master data system. In addition, there may be autonomous applications that are tightly coupled with their own data stores and will not be amenable to modification to be dependent on an external data set, which may preclude ever evolving to a full-scale hub deployment.

The services-based approach addresses this concern by encapsulating functionality at the technical layer. The business capabilities remain the same and will continue to be supported by the underlying technical layer. That underlying technical layer, though, can easily be adjusted to support any of the core master data implementation frameworks, with little or no impact at the application level.
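As a rough sketch of this encapsulation, consider two interchangeable technical backends behind one business-facing service. The class names and the `load` method are hypothetical, and the registry shown is deliberately simplified to an index that points at records held in source systems.

```python
class RegistryBackend:
    """Registry style (simplified): the master keeps an index into the sources."""
    def __init__(self, index, sources):
        self._index = index      # master_id -> (source name, local key)
        self._sources = sources  # source name -> {local key: record}

    def load(self, master_id):
        source, local_key = self._index[master_id]
        return self._sources[source][local_key]

class HubBackend:
    """Transaction hub style (simplified): the master holds the full record."""
    def __init__(self, records):
        self._records = records

    def load(self, master_id):
        return self._records[master_id]

class CustomerProfileService:
    """Business-facing service, unaware of which backend is in use."""
    def __init__(self, backend):
        self._backend = backend

    def contact_email(self, master_id):
        return self._backend.load(master_id)["email"]

registry = RegistryBackend(
    index={"M1": ("branch", "42")},
    sources={"branch": {"42": {"email": "ada@example.com"}}},
)
hub = HubBackend({"M1": {"email": "ada@example.com"}})
```

`CustomerProfileService(registry)` and `CustomerProfileService(hub)` expose the same business capability, so moving from one implementation style to the other changes only the technical layer.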

12.5. Supporting Application Services

A services-based approach supports the migration to an MDM environment. The more important concept, though, is how the capability of a centralized view of enterprise master data more fully supports solving business problems through the services-based approach. Once the data and the functional capabilities have been consolidated and the technical service components established, the process of supporting business applications changes from a procedural, functional architecture to a business-directed one. In other words, abstracting out the details associated with common actions related to access, maintenance, or security enables the developers to focus on supporting the business needs.

New services can be built based on business requirements and engineered from the existing service components. Returning to our banking example, consider that regulations change over time, and new business opportunities continue to emerge as technology and lifestyles change. In addition, we may want to adjust certain customer-facing applications by introducing predictive analytics driven off the master customer profiles to help agents identify business opportunities in real time. The master repository makes this possible, but it is SOA that brings it into practice.

With a services-based approach, there is greater nimbleness and flexibility in being able to react to changes in the business environment. Integrating new capabilities at the business service layer enables all applications to take advantage of them, reducing development costs while speeding new capabilities into production.

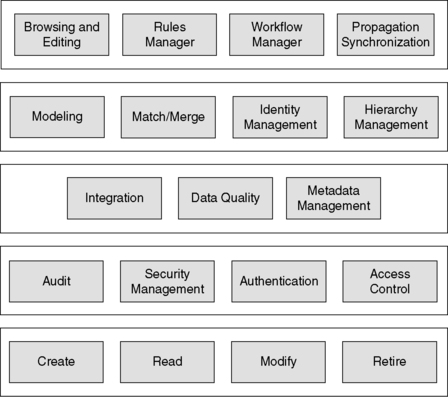

12.5.1. Master Data Services

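What are the master data services? Implementations may vary, but in general, we can characterize services grouped within three layers:3

▪ Core services, which focus on the foundational capabilities applied to master data in general (such as life cycle actions and access control)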

▪ Object services, which focus on actions related to the master data object type or classification (such as customer or product)

▪ Business application services, which focus on functionality necessary at the business level

In Figure 12.3, we show a sampling of core services, which include life cycle services, access control, integration, consolidation, and workflow/rules management. Of course, every organization is slightly different and each catalog of services may reflect these differences, but this framework is a generic starting point for evaluation and design.

Figure 12.3 Some examples of core master data services.

12.5.2. Life Cycle Services

At the lowest level, simple services are needed for basic master data actions, providing a “master data service bus” to stream data in from, and out to, the applications.

Read. The ability to make the data available within the context of any application; this level of service presumes that the desired master entity has been established and the corresponding record can be located and accessed.

Modify. This level of service addresses any maintenance issues that may have been introduced into the master repository, such as eliminating duplicate data, correcting data element errors, modifying the data, and ensuring that the proper amount of identifying information is available.

Retire. This process ensures the currency of active records and documents those master records that are deactivated and no longer in use.
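The read, modify, and retire services listed above might be sketched as follows. The names are hypothetical, the repository is an in-memory dictionary, and two design choices are assumptions made for the example rather than requirements stated in the text: retirement is recorded as a date on the record instead of deleting it, and retired records reject further modification.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass
class MasterRecord:
    master_id: str
    attributes: dict
    retired_on: Optional[date] = None  # None while the record is active

    @property
    def active(self):
        return self.retired_on is None

class LifeCycleService:
    def __init__(self):
        self._records = {}

    def load(self, record):
        # Hypothetical seeding helper standing in for record establishment.
        self._records[record.master_id] = record

    def read(self, master_id):
        # Presumes the master entity has been established and can be located.
        return self._records[master_id]

    def modify(self, master_id, **changes):
        record = self.read(master_id)
        if not record.active:
            raise ValueError(f"{master_id} is retired and cannot be modified")
        record.attributes.update(changes)
        return record

    def retire(self, master_id, on):
        # Document the deactivation rather than removing the record.
        record = self.read(master_id)
        record.retired_on = on
        return record
```

Keeping the retired record in place, with its retirement date, preserves the history that later assessment may need.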

12.5.3. Access Control

As part of the governance structure that supplements the application need to access master data, access control and security management services “guard the gateway.”

Access control. Actors in different roles are granted different levels of access to specific master data objects. These services manage access control based on the classification of roles assigned to each actor.

Authentication. These services qualify requestors to ensure they are who they say they are.

Security management. These services oversee the management of access control and authentication information, including the management of data for actors (people or applications) authorized to access the master repository.

Audit. Audit services manage access logging as well as analysis and presentation of access history.
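A minimal sketch of role-based access control with audit logging appears below. The role names, the permission table, and the log entry layout are hypothetical, and authentication is assumed to have happened before this service is called.

```python
from datetime import datetime, timezone

# Hypothetical classification of roles and the actions each may perform.
ROLE_PERMISSIONS = {
    "teller": {"read"},
    "data_steward": {"read", "modify", "retire"},
}

class AccessControlService:
    def __init__(self, actor_roles):
        self._actor_roles = actor_roles  # actor (person or application) -> role
        self.audit_log = []              # one entry per access decision

    def is_allowed(self, actor, action, object_type):
        role = self._actor_roles.get(actor)
        # Unknown actors and unknown roles get no permissions.
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "at": datetime.now(timezone.utc),
            "actor": actor,
            "action": action,
            "object_type": object_type,
            "allowed": allowed,
        })
        return allowed
```

Logging denied requests as well as granted ones gives the audit service the full access history to analyze and present.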

12.5.4. Integration

The integration layer is where the mechanics of data consolidation are provided.

Metadata management. Metadata management services are consulted within the context of any data element and data entity that would qualify for inclusion in the master repository. The details of what identifying information is necessary for each data entity are managed within the metadata management component.

Data quality. This set of services provides a number of capabilities, including parsing data values, standardizing them in preparation for matching, identity resolution, and integration, and profiling for analysis and monitoring.
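As a rough illustration of what parsing, standardization, and profiling might look like for a single attribute, consider the sketch below. The abbreviation table and the function names `standardize_name` and `profile` are assumptions made for this example; real data quality services rely on far richer rule sets.

```python
import re
from typing import Dict, Iterable

# Hypothetical abbreviation table used during standardization.
ABBREVIATIONS = {"corp": "corporation", "inc": "incorporated", "co": "company"}


def standardize_name(raw: str) -> str:
    """Parse a raw name into tokens and map each to a standard form."""
    # Parsing: lowercase the value and keep only alphanumeric tokens.
    tokens = re.findall(r"[a-z0-9]+", raw.lower())
    # Standardization: expand known abbreviations, keep other tokens as-is.
    return " ".join(ABBREVIATIONS.get(token, token) for token in tokens)


def profile(values: Iterable[str]) -> Dict[str, int]:
    """Compute a few simple profiling measures for monitoring."""
    items = list(values)
    non_empty = [v for v in items if v.strip()]
    return {
        "count": len(items),
        "empty": len(items) - len(non_empty),
        "distinct_standardized": len({standardize_name(v) for v in non_empty}),
    }
```

With this table, the values "ACME Corp." and "Acme, Corporation" both standardize to the same string, which is what allows the matching services at the next layer to compare like with like.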

12.5.5. Consolidation

At a higher level, multiple records may be consolidated from different sources, and there must be a service layer to support the consolidation process. This layer relies on the services in the integration layer (particularly the data quality services).

Modeling. A set of modeling and analysis services handles both the analysis of how the structure of incoming data compares to the master model and revisions to the rules for matching and identity resolution.

Identity management. For each master object type, a registry of identified entities will be established and managed through the identity management services.

Match/merge. As the core component of the identity resolution process, match and merge services compare supplied identifying information against the registry index to find exact or similar matches to help determine when the candidate information already exists within the registry.

Hierarchy management. These services support two aspects of hierarchy management. The first deals with lineage: tracking the historical decisions made in establishing or breaking connectivity between records drawn from different sources, so that adjustments can be made when false positives or false negatives are discovered. The second tracks relationships among entities within one master data set (such as “parent-child relationships”), as well as relationships between entities across different master data sets (such as “customer A prefers product X”).
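The relationship between the identity registry, match/merge, and lineage tracking can be sketched in a few lines. This is a simplified sketch, not the method of any particular product: the `IdentityRegistry` class, the identifier format, and the 0.85 similarity threshold are assumptions, and the standard library's `difflib.SequenceMatcher` stands in for a real approximate-matching algorithm. Identifying values are assumed to have been standardized already.

```python
from dataclasses import dataclass, field
from difflib import SequenceMatcher
from typing import Dict, List, Optional, Tuple


@dataclass
class IdentityRegistry:
    threshold: float = 0.85  # assumed similarity cutoff for a match
    # Registry index: identifying value -> master identifier.
    index: Dict[str, str] = field(default_factory=dict)
    # Lineage: (candidate, master id, score) for every linking decision.
    lineage: List[Tuple[str, str, float]] = field(default_factory=list)

    def match(self, candidate: str) -> Optional[Tuple[str, float]]:
        """Return the best exact or similar match at or above the threshold."""
        best: Optional[Tuple[str, float]] = None
        for known, master_id in self.index.items():
            score = SequenceMatcher(None, candidate, known).ratio()
            if score >= self.threshold and (best is None or score > best[1]):
                best = (master_id, score)
        return best

    def resolve(self, candidate: str) -> str:
        """Link the candidate to an existing identity or register a new one."""
        hit = self.match(candidate)
        if hit is not None:
            master_id, score = hit
        else:
            master_id, score = f"M-{len(self.index) + 1}", 1.0
            self.index[candidate] = master_id
        # Record the decision so a false positive can be reviewed later.
        self.lineage.append((candidate, master_id, score))
        return master_id
```

Because each decision is stored with its score, a data steward who later discovers a false positive has the information needed to find and break the link.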

12.5.6. Workflow/Rules

At the level closest to the applications, there are services to support the policies and tasks/actions associated with user or application use of master data.

Browsing and editing. A service for direct access into the master repository enables critical data stewardship actions to be performed, such as administering master metadata, editing master data to correct discovered data element value errors, and repairing false positive and false negative links.

Rules manager. Business rules that drive the workflow engine for supporting application use of master data are managed within a repository; services are defined to enable the creation, review, and modification of these rules as external drivers warrant. Conflict resolution directives to differentiate identities can be managed through this set of services, as well as the business classification typing applied to core master objects as needed by the applications.

Workflow manager. Similar to the way that application rules are managed through a service, the work streams, data exchanges, and application workflows are managed through a service as well. The workflows are specifically configured to integrate the master data life cycle events into the corresponding applications within the specific business contexts.

Service level agreements. By formulating SLAs as combinations of rules dictated by management policies and workflows that drive the adherence to an agreed-to level of service, the automation of conformance to information policy can be integrated as an embedded service within the applications themselves.

Propagation and synchronization. As changes are made to master data, dependent applications must be notified based on the synchronization objectives specified through defined SLAs. Depending on the architectural configuration, this may imply pushing data out from the master repository, or simply notifying embedded application agents that local copies are no longer in sync with the master while allowing each application to refresh itself as needed.
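The two propagation styles, pushing data out versus notifying an embedded agent that its copy is stale, can be contrasted in a small sketch. Everything here is hypothetical: the `Subscriber` and `MasterPublisher` classes, and the idea that a per-application `mode` value is derived from its SLA, are assumptions made for illustration only.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Subscriber:
    """Hypothetical embedded application agent holding a local copy."""
    name: str
    mode: str  # "push" or "notify"; assumed to be derived from the SLA
    local_copy: Dict[str, dict] = field(default_factory=dict)
    stale: Set[str] = field(default_factory=set)


class MasterPublisher:
    """Hypothetical publisher sitting in front of the master repository."""

    def __init__(self) -> None:
        self.records: Dict[str, dict] = {}
        self.subscribers: List[Subscriber] = []

    def update(self, master_id: str, data: dict) -> None:
        self.records[master_id] = dict(data)
        for sub in self.subscribers:
            if sub.mode == "push":
                # Push: the new data is sent to the application immediately.
                sub.local_copy[master_id] = dict(data)
            else:
                # Notify: only flag the local copy as out of sync.
                sub.stale.add(master_id)

    def refresh(self, sub: Subscriber) -> None:
        # Called by a notified application whenever it chooses to catch up.
        for master_id in sorted(sub.stale):
            sub.local_copy[master_id] = dict(self.records[master_id])
        sub.stale.clear()
```

The push style keeps local copies current at the cost of more traffic from the master repository, whereas the notify style lets each application decide when freshness matters enough to refresh.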

12.6. Summary

There is a symbiotic relationship between MDM and SOA: a services-oriented approach to business solution development relies on the existence of a master data repository, and the success of an MDM program depends on the ability to deploy and use master object access and manipulation services. Employing a services-based approach also serves multiple purposes within a master data management program. As the program is launched and developed, the use of multiple service layers simplifies both the implementation of the capabilities supporting the data object life cycle and the transition from the diffused, distributed application architecture into the consolidated master application infrastructure. More important, the flexibility provided by a services-oriented architecture triggers the fundamental organizational changes necessary to use the shared information asset in its most effective manner. By driving application development from the perspective of the business instead of as a sequence of procedural operations, the organization can exploit the master data object set in relation to all business operations and to the ways that those objects drive business performance.
